Abstract

Large datasets are required to develop Artificial Intelligence (AI) models in AI powered smart farming for reducing farmers’ routine workload, this paper contributes the first large lion-head goose dataset GooseDetectlion, which consists of 2,660 images and 98,111 bounding box annotations. The dataset was collected with 6 cameras deployed in a goose farm in Chenghai district of Shantou city, Guangdong province, China. Images sampled from videos collected during July 9 -10 in 2022 were fully annotated by a team of fifty volunteers. Compared with another 6 well known animal datasets in literature, our dataset has higher capacity and density, which provides a challenging detection benchmark for main stream object detectors. Six state-of-the-art object detectors have been selected to be evaluated on the GooseDetectlion, which includes one two-stage anchor-based detector, three one-stage anchor-based detectors, as well as two one-stage anchor-free detectors. The results suggest that the one-stage anchor-based detector You Only Look Once version 5 (YOLO v5) achieves the best overall performance in terms of detection precision, model size and inference efficiency.

Similar content being viewed by others

Background & Summary

Recent years have witnessed the rapid advances in technologies such as Internet of Things (IoTs), Cloud Computing, and Artificial Intelligence (AI), which can be integrated to develop AI-empowered smart poultry or stock farming systems1. These systems offer farmers with real-time monitoring services like counting2,3, abnormality warning4,5, and disease warning6, etc. Such services significantly improve the quantity and quality of farmed poultry or stocks, and reduce farmers’ routine workload dramatically.

Video surveillance is one of real-time monitoring technologies, where video imagery based object detection is a key technology for camera-based object analysis tasks such as object identification, tracking, and behavior analysis. This has been widely used in farming systems2,3 and other varied applications such as intelligent transportation systems7, autonomous driving8, animal behavior understanding9, and detection of infectious disease to prevent the spread of the epidemic10, etc. Actually, most existing object detection technologies primarily rely on deep neural network learning methods, which require a large amount of data to train the network models.

As a result, many researchers have built a number of public animal datasets, e.g., UAVCattle11, aerial elephant dataset12 and multi-Pig13 etc. for developing intelligent animal behavior analysis systems. As a pasture aerial image dataset, UAV-Cattle contains two subsets for cattle detection and counting. While one of the subsets contains 1886 annotations of 212 cattle, the other one contains 62 annotations of 6 cattle. Aerial elephant dataset consists of 2,101 images of 15,511 African bush elephants in natural habitats, which can be used for elephant detection in aerial surveys. Multi-Pig is the first public dataset for pig detection in group-housing environments, which contains 2,000 images of 24,842 annotated pigs located at 17 different sites. In addition to publicly available datasets, other datasets, e.g., individual pig14, shed sheep15 and preening hens16 etc. are private for own use. Individual pig contains 4019 images with 13,996 annotated pigs, shed sheep contains 108 images with 2,317 annotated sheep, and preening hens contains 1700 images with 12,014 annotated preening hens. To the best of our knowledge, no lion-head goose dataset is publicly available yet, in literature.

Lion-head goose industry is one of the pillar industries in Shantou City, Guangdong Province, China. Isolated cage breeding methods cannot be applied to Lion-head geese since they are waterfowl. Till now, most farmers use traditional methods to breed lion-head geese. As the number of geese per farmer household is usually 103 or more, it is a typical dense farming environment. When a goose is infected by diseases like paramyxovirus, it should be immediately isolated and treated on time. Meanwhile, the healthy geese flock also need urgent treatment like taking vaccine and interferon, so as to prevent the large-scale disease outbreak. Otherwise, the fast infection will kill most of the geese in short time and cause huge loses to the farmers. The dense farming environment requires huge monitoring workload of the farmers to promptly detect sick geese.

AI-empowered smart farming systems help improve the efficiency of lion-head goose farmers, where video imagery based goose detection is an indispensable task. In farming environments, geese are often densely packed, which makes them prone to mutual occlusion, background clutter and pose diversity. In addition, geese present small individual differences. These introduce great challenges to goose detection. Several methods e.g., encoding long-term temporal dependencies across multiple cues17, incorporating a relation-aware global attention module18, etc have been developed to address such visual challenges. Such deep learning based methods have demonstrated remarkable object detection performance in dense environments. When they are applied to lion-head goose detection, a large-scale dataset is needed to train the model. However, no such dataset is publicly available yet.

This paper releases the first publicly available dataset, i.e., the GooseDetectlion, for lion-head goose detection. The data were collected by 6 cameras deployed in a lion-head goose farm. The dataset contains 2 categories of geese defined chronologically: big geese and small geese. The big geese are older than 9 weeks, and the small ones are between 5 and 8 weeks. The GooseDetectlion is a collection of 2,660 images, captured by 6 cameras from 6 different views over big geese and small geese for 2 days and 5 days respectively. It is fully and manually annotated at the frame level, with following labels.

-

Bounding boxes: The position of each goose in an image is given in the form of a bounding box.

-

Goose category tags: Each goose is labelled by a goose category tag, i.e., “big goose” or “small goose”.

The statistics of the GooseDetectlion are detailed in the paper, and an experimental study has been carried out to benchmark 6 representative deep learning based object detection methods. Our experimental results show that the YOLO v5 (You Only Look Once version 5) achieves the best performance on the GooseDetectlion.

Methods

The GooseDetectlion is an original fully annotated dataset specifically constructed for lion-head goose detection. In this section, we describe the information of the GooseDetectlion, including the details of its data collection, selection, annotation, and statistics.

Data collection and selection

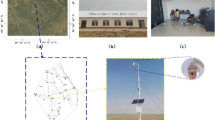

The objective of data collection and selection is to collect the videos of geese’ daily activities via cameras, and then select images with the number of geese larger than a given threshold at the frame level. Figure 1a depicts the aerial view of the farm, whose area is around 1,200–1,300 square meters. Figure 1b illustrates the farm’s functional zones, and depicts the distribution of the 6 deployed Hikvision DS-2CD3T46WDV3-I3 cameras with frame rate 25fps and resolution 1920 × 1080, where 5 cameras monitor the big geese and 1 monitors the small geese. Here the subzones within the two dotted lines connecting the same camera represent actual surveillance scope of the camera. Table 1 further outlines the detailed settings of each camera, including its height (meter) and horizontal rotation (degree). The distribution and settings of the 6 cameras enable a multi-angle and cross-time surveillance over the lion-head geese in different zones. Figure 2 demonstrates the sample images captured by different cameras.

As shown in Fig. 1b, big geese and small geese are bred in separate zones, and they will not appear in the same video. Therefore, images in the GooseDetectlion are naturally divided into 2 subsets, the one containing only big geese, i.e., \({{\rm{GooseDetect}}}_{b}^{lion}\), and the one containing only small geese, i.e., \({{\rm{GooseDetect}}}_{s}^{lion}\). Data of \({{\rm{GooseDetect}}}_{b}^{lion}\) and \({{\rm{GooseDetect}}}_{s}^{lion}\) are collected separately. For big geese, 20 videos were collected during July 09–10 in 2022 (2 days in total), and the average duration of each video is 1 hour. For small geese, 30 videos were collected during November 03–07 in 2022 (5 days in total), and the average duration of each video is 0.5 hour. Images are randomly selected from each video as long as they meet the following condition: the number of the big geese on each image was larger than 30, or that of the small geese on each image was larger than 50. In addition, the final number of images from each camera is lager than 200, which guarantees the diversity. Specifically, the main steps of the image selection are described as follows.

Step 1: Extract video segments with sufficient number of geese from each video. There exist video segments without any geese or with insufficient number of geese, since the surveillance area of each camera is a sub-zone, and geese are usually active and move around frequently. Such video segments will be excluded.

Step 2: Construct image sequences by sampling frames from each video segment at regular intervals, between 10 and 30 seconds.

Finally, we obtain a collection with 2000 images of big geese and 660 images of small geese. In all, the dataset is characteristic of good generalization since it contain images under different weather conditions and different illuminations:

-

Have different illuminations since they are sampled from whole day videos, including morning, noon, afternoon and evening.

-

Cover different seasons since images of big geese are sampled from videos collected in summer, i.e., July, and small ones are sampled from videos collected in winter, i.e., November.

-

Cover varied weather conditions, including thunderstorm, sunny and cloudy. For example, we choose images of big geese sampled from videos collected on July 9 and July 10, since it was thunderstorm in July 9 and cloudy in July 10.

Data annotation

The GooseDetectlion is a hand-annotated dataset. Around fifty volunteers participated in the labelling under the guidance of an experienced mentor throughout the whole annotation process. The contributors are under-graduate or graduate students from the college of computer science and software engineering, Shenzhen University. Specifically, the workflow for annotation of the selected images includes 3 steps: pre-processing, annotation, and refinement.

First, in pre-processing, following geese are identified and removed:

-

1)

Geese far away from the cameras, e.g. those in the dotted area in Fig. 2b, which are too vague, due to the luminance and cameras’ resolution;

-

2)

Geese with obscuration rate larger than 70%, where obscuration rate refers to the proportion of visible body over entire body. As aforementioned, the goose farm is a dense farming environment, lots of geese are obscurated by others.

Second, the annotation is conducted on the pre-processed images by the 50 volunteers to label bounding boxes and categories, i.e., big goose or small goose. We follow the annotation rule given in the VOC2011 Annotation Guidelines19, i.e., a bounding box is drawn on a goose, either fully-visible or partially-visible, together with a corresponding category tag. In Fig. 3, the left image is a sample annotated image with big geese and the right one is for the small geese.

Third, refinement is conducted to review the annotations and ensure their quality. Images with inappropriate annotations, such as wrong position and inappropriate size of a bounding box, or a wrong tag, are recorded. During the refinement, each annotated image is marked as either correct or problematic by three experienced annotators, and images with inappropriate labels will be re-annotated.

Table 2 summarizes the details of the GooseDetectlion. In total, the GooseDetectlion consists of 2660 images and 98,111 tagged bounding boxes. In addition, Table 3 summarizes the characteristics and size distribution of its subsets. First, the \({{\rm{GooseDetect}}}_{b}^{lion}\) consists of 2,000 big goose images with 80,550 tagged bounding boxes, and the \({{\rm{GooseDetect}}}_{s}^{lion}\) consists of 660 small goose images with 17,561 tagged bounding boxes. The ratio of the training set, the validation set, and the testing set for each category is 8:1:1.

Data Records

The GooseDetectlion is free and available at https://doi.org/10.57760/sciencedb.1438220. The file structure, scale, and location of the GooseDetectlion dataset is to be presented in detail in this section, serving as a foundation for establishing real-world smart lion-head goose farming systems.

Folder structure

The dataset contains 2660 image files in JPG format, corresponding 2660 annotation files in XML format. The files are organized using 2 ZIP archives, one is named as “Big_Goose.zip” and the other one is “Small_Goose.zip”. The “Big_Goose.zip” archive contains 2000 images and corresponding 2000 annotation files, while the “Small_Goose.zip” archive contains 660 images and corresponding annotation files. Each zip archive consists of two folders, the one with the name “JPEGImages” stores the image files, and the other one with the name “Annotations” stores the annotation files.

Scale

To analyze the size of geese in GooseDetectlion, the geese are grouped into bins according to their image size (the area of bounding boxes in pixels), and Fig. 4a depicts the scale distribution of the GooseDetectlion: 1) Most of the big geese have image size between 0 to 20,000 pixels, with an average of 14,270.67 pixels; 2) Most of the small geese have image size between 0 to 10,000 pixels, with an average of 9,334.22 pixels. One can observe from the figure that for either big geese or small geese, the number of geese with small image size is much larger than that with large image size.

Location

Figure 4b,c depict the location heat maps of geese in GooseDetectlion, where Fig. 4b is the location distribution of big geese and Fig. 4c is that of small geese. Particularly, all images here have been resized to the same resolution (1400 × 800) and centers of each bounding box are used to represent the location. From Fig. 4b,c, we can see that the geese’ locations are relatively uniform, i.e. they mainly stay on the left side of the images.

Technical Validation

Deep learning based object detection is an active research topic, many methods have been proposed to improve the detection precision and efficiency. According to whether object detection is carried out on the original images or not, existing deep learning based object detection methods are usually classified into two categories: two-stage methods (also known as region proposal-based methods), and one-stage methods (also known as regression-based methods). For two-stage methods, region proposals are first extracted from the original images. The early one-stage methods were carried out on the original images directly, achieving better efficiency. Recent years have witness the rapid progress of one-stage methods and their precision is getting better. According to whether anchors are used or not, existing deep learning based object detection methods can also be classified into anchor-based and anchor-free.

Faster R-CNN (Faster Regions with Convolutional Neural Network features)21, SSD (Single Shot MultiBox Detector)22, RetinaNet23, CenterNet24, YOLO (You Only Look Once) version 1 (v1)25 and its variants, i.e., YOLO v2-v7, are representative deep learning based object detection benchmarks26,27. As Faster R-CNN is a typical two-stage anchor-based method, SSD, Retinanet and YOLO v5 are classical one-stage anchor-based methods, while CenterNet and YOLO v728 are recent one-stage anchor-free methods, Faster R-CNN, SSD, RetinaNet, CenterNet, YOLO v5, and YOLO v7 are selected as 6 representative methods for benchmarking goose detection performance using our GooseDetectlion.

Following codes used in the experiments are publicly available: the 6 data augmentation methods in python (https://github.com/srp-31/Data-Augmentation-for-Object-Detection-YOLO-), and the 6 deep learning based object detection methods, including Faster R-CNN (https://github.com/bubbliiiing/faster-rcnn-pytorch), RetinaNet (https://github.com/bubbliiiing/retinanet-pytorch), CenterNet (https://github.com/bubbliiiing/centernet-pytorch), SSD (https://github.com/bubbliiiing/ssd-pytorch), YOLO v5 (https://github.com/bubbliiiing/yolov5-v6.1-pytorch) and YOLO v7 (https://github.com/bubbliiiing/yolov7-pytorch).

In our empirical experimental study, all models are trained and tested using PyTorch 1.2.0 on 1 GeForce GTX TITAN X GPU card. In this paper, we follow the settings of original papers proposing corresponding detectors and use the same backbone networks as the papers. Particularly, ResNet5029 is used as the backbone network for Faster R-CNN, RetinaNet, and CenterNet, and the models were trained using stochastic gradient descent (SGD) for 150 epochs, with learning rate of 0.01. The VGG1630 is used as the backbone of SSD, and the modes were trained using SGD for 200 epochs, with learning rate of 0.01. Finally, CSP-DarkNet31 is used as the backbone of YOLO v5 and YOLO v7, and the models were trained using SGD for 300 epochs, with learning rate of 0.01.

Six data augmentation methods in python are exploited to augment the training set of the GooseDetectlion, including rotate, pan, mirror, random noise, random crop, and mosaic. After data augmentation, for the big goose subset \({{\rm{GooseDetect}}}_{b}^{lion}\), the number of augmented images of the training set is 8000 and corresponding number of bounding boxes is 293,559. For the small goose subset \({{\rm{GooseDetect}}}_{s}^{lion}\), augmented image number and bounding box number is 5470 and 148,102 respectively.

The performance of object detection is usually evaluated in terms of detection precision, model size and inference efficiency. In literature, part of or all of the 12 COCO metrics32 (which are summarized in Table 4), or Pascal VOC metric are used to evaluate the precision of an object detector, where Pascal VOC metric is actually one of the 12 COCO metrics. The model size can be measured by the number of parameters, the larger the size is, the more memory it will require. Finally, the model’s inference efficiency can be measured by the average inference time on an image (inference time for short in the rest of the paper). 12 COCO metrics, the model size and inference efficiency of the 6 representative methods on the GooseDetectlion will be detailed in this subsection.

Detection precision

Table 5 reports the 12 COCO metrics of the 6 representative methods on each subset of the GooseDetectlion, i.e., big goose subset \({{\rm{GooseDetect}}}_{b}^{lion}\) and small goose subset \({{\rm{GooseDetect}}}_{s}^{lion}\), based on which we can draw the following conclusions:

-

YOLO v7 obtains the best detection precision among all methods, with the mAP of 0.549 on big goose subset and 0.432 on the small goose subset;

-

The recent one-stage methods, i.e., YOLO v5 (anchor-based) and YOLO v7 (anchor-free) outperform the other 4 methods, including the two-stage method and early one-stage methods.

-

The two-stage method, i.e., Faster R-CNN, achieves better detection precision than the other two early one-stage anchor-based methods, i.e., SSD and Retinanet;

-

All methods have better detection precision on the big goose dataset than on the small goose dataset, as most of the big geese have larger bounding boxes (see Fig. 4(a)).

Figure 5 depicts the P-R (precision-recall) and F1 score curves for both subsets and the 6 detection methods at the IoU threshold of 0.5. First, for a given recall, the larger area under a P-R curve is, the better performance the model achieves. From the P-R curves in Fig. 5a,b, we can draw the following conclusions:

-

YOLO v7 achieves the most stable and the highest precision on both the big goose and small goose subset. To be specific, for the big goose dataset, it obtains stable high precision when the recall is less than 0.85. Though its precision drops dramatically when the recall is larger than 0.85, it still achieves relative higher precision than the other 5 methods. The Retinanet and SSD demonstrate relatively poor performance for both subsets.

-

All methods obtain better performance on big goose subset than small goose subset.

The P-R (precision-recall) and F1 score curves of the 6 representative methods (IoU = 0.5), where the data labels in (b), (c) and (d) are the same as those in (a): (a) P-R curves (\({{\rm{GooseDetect}}}_{b}^{lion}\)), (b) P-R curves (\({{\rm{GooseDetect}}}_{s}^{lion}\)), (c) F1 curves (\({{\rm{GooseDetect}}}_{b}^{lion}\)), (d) F1 curves (\({{\rm{GooseDetect}}}_{s}^{lion}\)).

For a given confidence, the higher F1 score is, the better mean of precision and recall the method achieves. From the F1 score curves in Fig. 5c,d, we can see that YOLO v5 and YOLO v7 achieve higher F1 scores than the other 4 methods for both subsets, and still, YOLO v7 demonstrates the best performance. To be specific, for the big goose subset, YOLO v7 achieves the most stable and best F1 scores when confidence is larger than 0.02 and less than 0.7. That is, they achieve higher detection precision as well as higher recall. For the small goose subset, similar results can be observed when confidence is larger than 0.018 and less than 0.69 respectively.

Faster R-CNN has the highest F1 score when the confidence is higher than 0.7 and 0.69 for the big goose subset and small goose subset respectively. As a two-stage method, the region proposals extracted from the original images help improve the fitness of the detected bounding box against ground truth. A higher confidence means higher precision and lower recall. But normally, object detection applications require not only high precision but also high recall. When confidence is higher than 0.7 or 0.69, corresponding recall may fail to satisfy such a requirement. Therefore, the F1 score curves in Fig. 5c,d suggest that YOLO v7 demonstrates the best performance.

Figure 6 illustrates example results of the 6 representative methods for the small goose image shown in Fig. 3b. Here we can see that YOLO v7, YOLO v5, CenterNet, and Faster R-CNN can detect most of the geese, while Retinanet and SSD miss some geese on the upper right corner. Especially, the Retinanet misses the most number of geese in this case. This is consistent with the aforementioned discussion regarding the precision of the 6 methods.

Model size and inference efficiency

Table 6 reports the model size and inference time of the 6 representative methods on the GooseDetectlion. Here, we can see that the model of YOLO v5 has the least number of parameters, i.e., 7,063,542 and relatively faster inference time, i.e., 0.0179 second per image. While YOLO v7 has the largest number of parameters, i.e., 36,479,926, its inference time is 0.0407 second per image, slower than YOLO v5 and SSD, but faster than the other 3 methods. Particularly, SSD has the shortest single image inference time.

In summary, YOLO v5 has the best overall performance on the GooseDetectlion when detection precision, model size and inference efficiency are considered. As discussed in data collection and selection section, our dataset contains images of geese in summer and winter, in thunderstorm, cloudy and sunny weather conditions, and from morning to evening. It covers most scenes, but not all scenes. In order to improve the goose detection accuracy in more diverse environments, more research work can be done on optimizing the goose detection algorithms, or collecting more diversified training data in extreme conditions, e.g., infrared goose images at night.

Code availability

No custom code was used to generate or process the data described in the manuscript.

References

Collins, L. & Smith, L. Review: Smart agri-systems for the pig industry. animal 16, 100518, https://doi.org/10.1016/j.animal.2022.100518 (2022). Manipulating Pig Production XVIII: Proceedings of the Eighteenth Biennial Conference of the Australasian Pig Science Association (APSA), 15–18 November 2021, Brisbane, Australia.

Shang, H., Li, R., He, X., Wang, J. & Peng, X. Real-time accurate object counting for smart farms. In 2019 International Joint Conference on Neural Networks (IJCNN), 1–8 (IEEE, 2019).

Čakić, S., Popović, T., Krčo, S., Nedić, D. & Babić, D. Developing object detection models for camera applications in smart poultry farms. In 2022 IEEE International Conference on Omni-layer Intelligent Systems (COINS), 1–5, https://doi.org/10.1109/COINS54846.2022.9854975 (2022).

Budiarto, R., Gunawan, N. K. & Nugroho, B. A. Smart chicken farming: Monitoring system for temperature, ammonia levels, feed in chicken farms. IOP Conference Series: Materials Science and Engineering 852, 012175, https://doi.org/10.1088/1757-899X/852/1/012175 (2020).

Akbar, M. O., Khan, M., Ali, M. J., Hussain, A. & Akhtar, N. Iot for development of smart dairy farming. Journal of Food Quality 2020, 1–8 (2020).

Kim, S. J., Yoe, H. & Lee, M. H. Design of poultry farm disease detection system based on k-nearest neighbor algorithm. In 2023 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), 762–766 (IEEE, 2023).

Tian, B. et al. Hierarchical and networked vehicle surveillance in its: A survey. IEEE Transactions on Intelligent Transportation Systems 16, 557–580 (2015).

Yurtsever, E., Lambert, J., Carballo, A. & Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE access 8, 58443–58469 (2020).

Khan, M. H. et al. Animalweb: A large-scale hierarchical dataset of annotated animal faces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6939–6948 (2020).

Kattirnani, S. L., Maidargi, P., Bajantri, S. C., Jahagirdar, M. W. M. & Bidari, V. “an automated social distance monitoring & alarm system based on human structure using video surveillance in covid-19 pandemic by ai techniques, a review”. In 2021 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), 1–5 (2021).

Shao, W. et al. Cattle detection and counting in uav images based on convolutional neural networks. International Journal of Remote Sensing 41, 31–52 (2020).

Naude, J. & Joubert, D. The aerial elephant dataset: A new public benchmark for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 48–55 (2019).

Psota, E. T., Mittek, M., Pérez, L. C., Schmidt, T. & Mote, B. Multi-pig part detection and association with a fully-convolutional network. Sensors 19, 852 (2019).

Hao, W., Han, W., Han, M. & Li, F. A novel improved yolov3-sc model for individual pig detection. Sensors 22, 8792 (2022).

Xu, J., Wu, Q., Zhang, J. & Tait, A. Automatic sheep behaviour analysis using mask r-cnn. In 2021 Digital Image Computing: Techniques and Applications (DICTA), 01–06 (IEEE, 2021).

Li, G., Hui, X., Lin, F. & Zhao, Y. Developing and evaluating poultry preening behavior detectors via mask region-based convolutional neural network. Animals 10, 1762 (2020).

Sadeghian, A., Alahi, A. & Savarese, S. Tracking the untrackable: Learning to track multiple cues with long-term dependencies. In Proceedings of the IEEE international conference on computer vision, 300–311 (2017).

Zhang, Z., Lan, C., Zeng, W., Jin, X. & Chen, Z. Relation-aware global attention for person re-identification. In Proceedings of the ieee/cvf conference on computer vision and pattern recognition, 3186–3195 (2020).

Everingham, M. & Winn, J. The pascal visual object classes challenge 2011 (voc2011) development kit. Pattern Analysis, Statistical Modelling and Computational Learning, Tech. Rep 8 (2011).

Feng, Y. et al. GooseDetectlion[DS/OL]. Science Data Bank, V3, https://doi.org/10.57760/sciencedb.14382 (2024).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Advances in neural information processing systems 28 (2015).

Liu, W. et al. Ssd: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14, 21–37 (Springer, 2016).

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE international conference on computer vision, 2980–2988 (2017).

Zhou, X., Wang, D. & Krähenbühl, P. Objects as points. arXiv preprint arXiv:1904.07850 (2019).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, 779–788 (2016).

Li, K., Wan, G., Cheng, G., Meng, L. & Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS Journal of Photogrammetry and Remote Sensing 159, 296–307 (2020).

Wang, Y. et al. Remote sensing image super-resolution and object detection: Benchmark and state of the art. Expert Systems with Applications197 (2022).

Wang, C.-Y., Bochkovskiy, A. & Liao, H.-Y. M. Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7464–7475 (2023).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778 (2016).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. Computer Science (2014).

Bochkovskiy, A., Wang, C.-Y. & Liao, H.-Y. M. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934 (2020).

Hosang, J., Benenson, R., Dollár, P. & Schiele, B. What makes for effective detection proposals? IEEE transactions on pattern analysis and machine intelligence 38, 814–830 (2015).

Acknowledgements

Here, we would like to thank Dr. Joanna Siebert for her valued comments on improving the paper. This work is supported by the National Natural Science Foundation of China under Grant 82261138629; Guangdong Basic and Applied Basic Research Foundation under Grant 2023A1515010688 and Shenzhen Municipal Science and Technology Innovation Council under Grant JCYJ20210324093212034 and JCYJ20220531101412030, 2022 Guangdong Province Undergraduate University Quality Engineering Project (Shenzhen University Academic Affairs [2022] No. 7), and 2022 Shenzhen University Teaching Reform Research Project (Free Application, JG2022038).

Author information

Authors and Affiliations

Contributions

Y. Feng and L. Shen conceived dataset and experiments. W. Li and Y. Guo conducted the experiments. Y. Wang and Y. Yuan deployed the cameras, collected and selected the data, and organized the data annotation. Y. Feng and S. Tang analysed the results. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Feng, Y., Li, W., Guo, Y. et al. GooseDetectlion: A Fully Annotated Dataset for Lion-head Goose Detection in Smart Farms. Sci Data 11, 980 (2024). https://doi.org/10.1038/s41597-024-03776-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-024-03776-1