Abstract

Due to their high resolution, anonymized CT scans can be reidentified using face recognition tools. However, little is known about PET deanonymization because of the modality’s lower resolution. In this study, we analysed PET/CT scans of 853 patients from a TCIA-restricted dataset (AutoPET). First, we built denoised 2D morphological reconstructions of both PET and CT scans. We then determined how frequently a PET reconstruction could be matched to the correct CT reconstruction with no other metadata. Using the CT morphological reconstructions as ground truth allows us to frame the problem as a face recognition problem and to quantify our performance using traditional metrics (top-k accuracies) without any use of patient pictures. Using our denoised PET 2D reconstructions, we achieved 72% top-10 accuracy after the realignment of all CTs in the same reference frame. We achieved 71% top-10 accuracy after realignment and mixing within a larger face dataset of 10,168 pictures. This highlights the need to consider face identification issues when dealing with PET imaging data.


Introduction

Positron emission tomography (PET) has become a key imaging modality for cancer care worldwide. It is now widely used in daily practice for numerous oncological, inflammatory, and infectious conditions. Because of its unique foundations – radiolabelled molecular targeting and highly sensitive quantitative imaging – PET imaging has been increasingly used as a biomarker in clinical research on national or international cohorts of patients with diseases of major public health importance. Examples include the Alzheimer’s Disease Neuroimaging Initiative (ADNI), a North American longitudinal multicentre study devoted to developing clinical, imaging, genetic, and biochemical biomarkers for the early detection and tracking of Alzheimer’s disease (AD)1. Another example is the Cancer Imaging Archive (TCIA)2, an open-access database of medical images created to enhance research in oncology and funded by the National Cancer Institute’s Cancer Imaging Program. Beyond healthcare issues, two further factors have paved the way for extensive prospective research and development in automated image processing: the emergence of artificial intelligence in medicine and the growing quantity of data generated by new-generation PET/CT systems. This work also relies heavily on data sharing, through research consortia such as the Quantitative Imaging and Medical Physics (QIMP) initiative (MOOSE project)3 or through data challenges such as HECKTOR4 or AutoPET5.

With increasing quantities of sensitive medical data being shared to accelerate research, questions naturally arise around appropriate regulations and pseudonymization. Although methods to pseudonymize patient metadata exist, sensitive information is often contained within the medical imaging data itself. This problem is well documented for high-resolution imaging modalities such as CT6, where defacing and blurring methods alleviate the risks posed by facial recognition software applied to such images. By contrast, little is known about the possibilities of such face recognition applied to lower-resolution imagery such as PET data. Indeed, to the best of our knowledge, only one study has evaluated the possibility of reidentifying patients’ faces from their PET scans7. Its authors conducted the analysis on 182 patients, using Microsoft Azure face recognition software to match the face reconstructions to patients’ pictures.

Data sharing increasingly comes with anonymization requirements. In this context, we assessed the capabilities of automated facial recognition on a whole-body PET dataset of 853 patients. We evaluated the risk of deanonymization from PET images by using 2D faces reconstructed from CT images as proxies8 for “patient faces”. We propose an end-to-end semi-supervised framework with three steps:
(i) it builds a 2D morphological representation of a patient’s face from the PET signal;
(ii) it denoises this representation using deep learning methods;
(iii) it performs face recognition by comparing landmark placement between a query (PET) and all targets (CTs).
This landmark comparison is the best proxy available in the absence of patient pictures and is inspired by modern facial recognition software9.

Results

Dataset

We used a whole-body FDG-PET/CT dataset5 subject to a TCIA licence agreement, as described in Methods. A basic exploratory analysis of our thresholded data shows the distributions of mean SUV counts and mean HU values across the original and selected patient sets (Fig. 1). Excluding some patients did not noticeably shift the SUV or HU distributions. This indicates that we have not biased the dataset in an obvious way.

Deep Learning-based Facial Recognition

We built a 2D morphological reconstruction pipeline using a semi-supervised approach, defined in Methods and depicted in Fig. 2. We further defined three main objectives to assess the capabilities of automated facial recognition using a deep learning-based approach, as described in Methods. Briefly, Objective 1 evaluates model confidence when placing facial key points (landmarks). Objective 2 compares facial landmark placement between the 2D PET facial reconstructions and the corresponding 2D CT facial reconstructions. Finally, Objective 3 evaluates facial landmark placement in a matching task, using landmark set matching to retrieve the correct CT scan for each PET facial reconstruction.

Overview of the three consecutive modules for facial recognition. The first module (unsupervised morphological reconstruction) reconstructs a 2D face from a PET volume, building on an approach previously developed to reconstruct a 3D mesh. For this purpose, all PET data are binarized and spatially isolated regions are removed. For each patient, a facial isosurface is reconstructed to deduce a 3D mesh. This mesh is finally converted to a 2D representation of the patient’s face using raycasting methods. The second module denoises this 2D representation, using either standard or deep learning-based approaches. The third module performs face recognition through landmark placement. To ensure the robustness of our analysis, we performed 5-fold cross-validation for the denoising and landmark detection analysis.

Objective 1

Across the five folds, we were able to place the facial landmarks with high confidence on 88.0% ± 1.6% of the faces reconstructed from CT scans. Without denoising, the landmark placement success rate for PET morphological reconstructions was 44.8% ± 4.0%. Traditional non-deep denoising methods yielded only a minor improvement (46.8% ± 3.8% across folds; Fig. 3). After deep denoising, we reached an 81.6% ± 3.1% landmark placement success rate. For each fold, we retained only the PET and CT pairs for which landmarks were successfully placed on both modalities. This led to final test sets of the following sizes: [99, 103, 96, 107, 101] out of 128 initial pairs. These test sets remain balanced and are used in the rest of the analysis.

Experiment results. (a) Training/validation loss across all folds. (b) Percentage of landmarks placed across all patients for each fold on CT and PET modalities, as well as the percentage of well-placed PET landmarks that are congruent with CT placement. Error bars indicate the variability among folds. (c) Distributions of the mean absolute distance normalized by interocular distance across all folds, for denoised and nondenoised PET images. The median is systematically lower for the denoised PET images.

Objective 2

Well-placed landmarks were observed for only 27.2% ± 3.0% of the PET samples when no denoising was applied. In the “non-deep” denoising configuration, we noted minor improvements that are unlikely to be statistically significant (Fig. 3). In the “deep” denoising configuration, the median normalized mean absolute distance (MAD) falls to 9% on average across folds, compared with 12% in the “original” configuration. More importantly, the average standard deviation falls from 27% (“original”) to 9% (“deep”). This allows accurate placement of 59.5% ± 3.4% of the landmarks in the “deep” configuration (Fig. 3).

Objective 3

Across all folds, landmark set matching identified the matching CT scan for 78.4% ± 5.3% of the PET scans in the test sets (top-1 accuracy). Relaxing the criterion to top-5 accuracy, we reached 92.5% ± 2.7% for our patient face-matching task. We then applied a distance minimization-based transformation so that every comparison was made within a common reference frame. With this step, similar accuracies were reached after relaxing to top-10 accuracy (72.1% ± 7.4%; Fig. 4). Finally, we kept the common reference frame alignment and compared against CT landmarks mixed with landmarks derived from a large facial database. In this setting, top-10 accuracy reached 71.2% ± 6.3%.

Discussion

In this work, we assessed the capabilities of automated facial recognition applied to PET data. We developed a partially supervised face reconstruction and detection method comprising three encapsulated modules (morphology reconstruction, denoising, and landmark detection). The method was applied to a large dataset of 853 PET/CT patient scans from the Cancer Imaging Archive (TCIA-restricted data5). We assessed three capabilities of the method:
- confidently placing a set of landmarks on the face in PET data, with and without denoising;
- limiting the quantitative bias between the PET-based landmarks and the corresponding CT-based landmarks;
- matching the PET landmarks with the right CT, both at the whole-dataset level and when the dataset is mixed within a much larger dataset of landmarks obtained from facial pictures.

The method we developed achieved high PET/CT matching accuracy on a large dataset from the secured TCIA database. Top-10 accuracy was 72% after patient realignment in a common reference frame and 71% after realignment with the database enlarged by more than 10,000 additional face photographs. This demonstrates that facial recognition methods can recognize patients’ faces from PET imaging data, despite its notoriously lower spatial resolution compared with CT or MRI. To our knowledge, this study is the first to investigate face recognition on standard whole-body PET/CT without a specific face-centred acquisition. It is also the first to investigate real-world PET re-identification risk by matching against a database of more than 10,000 samples. Previous investigations on face-centred PET/CT scans have shown similar face identification performance with real patient pictures. For example, Schwarz et al.7 report an accuracy of 34% when matching 129 PET reconstructions to face pictures. This is comparable to what we observe for each fold (32% mean top-1 accuracy). Nearest-neighbour matching was the closest proxy to a natural face identification task. This task carries a natural bias: matching PET and CT samples are acquired simultaneously, with the patient in the same position. We corrected for it by applying a rotation/translation to all CT landmark sets to bring them into the same reference frame as the query PET.

As expected, the original PET imaging data yielded quite limited performance. Applying a supervised denoising step to the morphological reconstructions substantially improved landmark placement. It nearly doubled both the placement success rate and the proportion of well-placed landmarks on the test sets. Deep learning-based methods with loss functions incorporating structural information performed best and outperformed standard denoising methods in our study. The gain in landmark placement success was about 35 percentage points over standard denoising. This shows that, despite the lower spatial resolution, accurate landmark placement on morphological reconstructions from PET imaging can be achieved by leveraging deep learning methods. Moreover, as newer-generation PET scanners achieve higher resolutions, the threat posed by automated face recognition approaches increases accordingly.

It should be noted that this study was carried out without personal patient pictures, so the face recognition task as defined previously could not be addressed directly. We could not compare embeddings of PET face reconstructions with face embeddings calculated from real patient pictures. We therefore focused on the most accurate proxies available without ground-truth annotations, which are heavily inspired by the workings of modern facial recognition software9. Specifically, we used face landmark placements as the compressed facial representation. Face landmark comparisons therefore served as a proxy for the one-to-one face comparison task.

The findings of this study have important implications for the medical community. They highlight the potential for using facial recognition combined with ML-based denoising on whole-body PET data at scale. They also raise concerns about its potential use to deanonymize data from public datasets. Given these possibilities, it may be necessary to implement defacing methods when publicly sharing PET data. In the case of hybrid PET/CT or PET/MR acquisitions, defacing methods should be applied to all image modalities. Assessing the efficacy of such defacing methods is beyond the scope of the present study. Further studies are needed to explore the emerging critical issue highlighted by our study, in particular in the context of emerging total-body PET systems8. In accordance with human rights and privacy laws, this issue should be considered in the anonymization process for international multicentre PET data sharing.

Methods

Data description

We used a whole-body FDG-PET/CT dataset10 subject to a TCIA licence agreement5. In keeping with the Data Usage Agreement, the dataset cannot be shared here. It was initially provided for deep learning-based PET/CT data analysis to detect tumour lesions in the context of the AutoPET challenge11. It includes 1,014 whole-body standard FDG-PET/CT scans of 900 patients. The scans were acquired over four to eight PET bed positions, mostly from the skull base to the mid-thigh. For all scans, only the voxel size was used from the metadata.

The PET images were originally reconstructed using a 3D ordered-subset expectation maximization algorithm with the following parameters:
- two iterations and 21 subsets;
- a 2.0 mm Gaussian filter;
- matrix size 400 × 400 and slice thickness 3.0 mm;
- voxel size 2.04 × 2.04 × 3 mm³.

The CT images were originally reconstructed with the following parameters:
- a reference dose intensity of 200 mAs;
- a tube voltage of 120 kV;
- iterative reconstruction with a slice thickness of 2–3 mm.

For the purposes of this study, all CT images were resampled to the PET image space (CTres.nii.gz).

Proposed approach

The overall process is shown in Fig. 2. Briefly, we used a semi-supervised approach based on the following three consecutive modules to perform facial recognition from PET imaging data:

Morphology reconstruction module

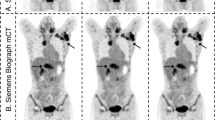

We first binarized the PET images by thresholding the standardized uptake values (SUVs) at the 95th percentile. This removes structural noise while keeping enough signal to distinguish the skin, on which we expect nonzero voxel values. As Fig. 5A shows, percentile-based thresholding is necessary to remove the very low SUV values that represent noise. It also accounts for the specificities of SUV count distributions, which make mean-based thresholding (Otsu) inadequate. Indeed, outlier voxels with very large SUV counts bias any threshold based on mean calculations and cause the skin voxels to be treated as noise.

For the CT scans, we identified two peaks in the histograms (Hounsfield units, HU). The first peak, at very low HU, represents air and water. The second, at very high HU, represents denser tissues such as bone. To exclude low-density tissues, we applied an Otsu threshold to the voxel histograms. Unlike PET imaging data, CT intensities follow a bimodal distribution, which makes the Otsu threshold better suited for binarization. An example is shown in Fig. 5B. For both PET and CT modalities, removing only zero- or negative-valued voxels (0 SUV and –1024 HU) does not adequately remove structural noise, which warrants the use of these different thresholds.

For each patient, we then selected the largest connected component to remove spatially isolated regions. We used the marching cubes algorithm12 implemented in scikit-image13 to form iso-surfaces and deduce 3D meshes. As we are interested here in 2D images exclusively for demonstration purposes, we projected our 3D meshes to 2D space with raycasting14 using the Open3D15 library. To isolate the upper part of the body while capturing the face at the best angle, we placed the camera centre at 1/8 from the top of the 3D mesh.
We then cropped the centred 2D projection to keep only the top quarter of the image, a rule of thumb for retaining the reconstructed facial morphology. Finally, we manually excluded two groups of patients: those whose arms were in front of their faces relative to the raycasting viewpoint, and those for whom no full-body PET/CT scan was available. This left 853 effective patients for downstream analysis.
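The thresholding and cleanup steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors’ implementation. It uses NumPy and `scipy.ndimage.label` instead of scikit-image. It re-implements Otsu’s method directly, following the same histogram recipe as `skimage.filters.threshold_otsu`. It assumes the volume is ordered (z, y, x), with the head at z = 0 and the face towards low y indices. It also replaces the Open3D mesh raycasting with a simple orthographic projection of the binary mask. All helper names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def otsu_threshold(values, nbins=256):
    """Otsu threshold from a histogram (same recipe as skimage.filters.threshold_otsu)."""
    hist, edges = np.histogram(np.ravel(values), bins=nbins)
    hist = hist.astype(float)
    centers = (edges[:-1] + edges[1:]) / 2
    w_low = np.cumsum(hist)                    # voxel count in bins 0..i
    w_high = np.cumsum(hist[::-1])[::-1]       # voxel count in bins i..end
    m_low = np.cumsum(hist * centers) / np.maximum(w_low, 1e-12)
    m_high = (np.cumsum((hist * centers)[::-1]) / np.maximum(w_high[::-1], 1e-12))[::-1]
    # Between-class variance when splitting after bin i; Otsu picks the maximum.
    between = w_low[:-1] * w_high[1:] * (m_low[:-1] - m_high[1:]) ** 2
    return float(centers[np.argmax(between)])


def binarize_pet(suv, percentile=95.0):
    """Keep voxels whose SUV exceeds the given percentile of the whole volume."""
    return suv > np.percentile(suv, percentile)


def binarize_ct(hu):
    """Keep voxels above the Otsu threshold of the HU histogram."""
    return hu > otsu_threshold(hu)


def largest_component(mask):
    """Remove spatially isolated regions by keeping the largest connected component."""
    labels, n = ndimage.label(mask)
    if n == 0:
        return np.zeros_like(mask, dtype=bool)
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0  # label 0 is the background
    return labels == np.argmax(sizes)


def frontal_projection(mask, axis=1):
    """Orthographic stand-in for raycasting: brighter where the first hit is closer."""
    depth_len = mask.shape[axis]
    hit = mask.any(axis=axis)
    first = np.argmax(mask, axis=axis)  # index of the first foreground voxel along each ray
    return np.where(hit, 1.0 - first / depth_len, 0.0)


def crop_top_quarter(image):
    """Keep the top quarter of rows (head end, assuming row 0 is superior)."""
    return image[: max(1, image.shape[0] // 4)]
```

On a real volume, one would chain `largest_component(binarize_pet(suv))` and then run `skimage.measure.marching_cubes` and Open3D raycasting, as described above, rather than using the orthographic shortcut. The shortcut only keeps the sketch dependency-light.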

(a) Distributions of mean SUV counts (PET, left panel) and mean HU values (CT, right panel) before and after thresholding to remove noisy voxels. Mean SUV counts were calculated over all voxels in two ways: after removing voxels exactly at zero, and after removing all voxels falling below a threshold. Mean HU values were calculated over all voxels in three ways: after removing voxels at –1024 HU (referred to as zero thresholding in the figure), after Otsu thresholding, and after applying the thresholding mask calculated from the PET modality of the same patient. (b) An example of CT binarization with Otsu thresholding. (c) An example of PET binarization with 95th percentile thresholding (same patient).

Denoising module

Three different configurations were assessed:

- A basic configuration without any denoising, called “original”;
- An unsupervised denoising configuration based on standard non-local means16 and wavelet transformations using scikit-image Python functions, which we call “non-deep”;
- A deep learning-based method named “deep”, for which we trained a U-Net17 architecture with 4 levels of 16, 32, 64, and 128 filters. The input image is our reconstructed 2D morphology from the PET scan, and the target output is a “denoised” face image. Our ground truth is the 2D morphology obtained from the corresponding CT scan. For regularization purposes, we added batch normalization18 layers during training and removed them at inference time. Our loss was a modified version of the structural similarity index (SSIM)19. Unlike traditional losses such as the mean squared error (MSE) or the L1 norm, structural similarity better accounts for structural information. It does so by aggregating three main image features: luminance \(l\), contrast \(c\), and structure \(s\). We performed 5-fold cross-validation to test the denoising and downstream landmark detection analysis. For each fold, we split the training data into training and validation subsets to check for overfitting. We trained our model for 30 epochs with a batch size of 32 samples, using the Adam optimizer20 with an initial learning rate of 0.01. We saved model checkpoints and, for each fold, kept the model weights that achieved the lowest validation loss during training as the final model.
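The paper does not specify how its SSIM loss was modified. The following is therefore only a sketch of the standard SSIM written as the product of the luminance \(l\), contrast \(c\), and structure \(s\) terms, turned into a loss as 1 − SSIM. As a simplifying assumption, it computes the statistics over the whole image (a single global window) rather than with the Gaussian sliding window of Wang et al. The constants K1 = 0.01, K2 = 0.03 and C3 = C2/2 are the usual defaults from that paper, not values reported here.

```python
import numpy as np


def ssim_components(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Luminance l, contrast c and structure s of SSIM, computed over the whole image."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    c3 = c2 / 2  # with this choice, l * c * s reduces to the usual two-term SSIM formula
    mu_x, mu_y = x.mean(), y.mean()
    sd_x, sd_y = x.std(), y.std()
    cov = ((x - mu_x) * (y - mu_y)).mean()
    l = (2 * mu_x * mu_y + c1) / (mu_x**2 + mu_y**2 + c1)
    c = (2 * sd_x * sd_y + c2) / (sd_x**2 + sd_y**2 + c2)
    s = (cov + c3) / (sd_x * sd_y + c3)
    return l, c, s


def ssim_loss(pred, target, data_range=1.0):
    """1 - SSIM: zero for identical images, larger as structure diverges."""
    l, c, s = ssim_components(pred, target, data_range)
    return 1.0 - l * c * s
```

For training, the same expression would be written with the deep learning framework’s tensor operations so that gradients flow through it. A windowed variant would average these per-window terms over the image.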

Landmark detection module

Landmarks were placed using a partially accessible end-to-end neural network model (MediaPipe library, Python API21). The model takes images as inputs and outputs a dense mesh model of 468 vertices placed on the image. The machine learning pipeline includes two deep neural network models. The first operates on the full image and computes face locations. The second, based on a residual neural network architecture, predicts the 3D landmarks via regression.

Statistical analysis

To determine whether we can accurately ‘recognize’ a patient’s face directly from PET data, we defined three evaluation objectives:

- Objective 1: The capacity of our semi-supervised approach to confidently place a set of landmarks on the corresponding face, without and with denoising. The MediaPipe model outputs a confidence score along with the corresponding landmarks. Following the recommendations of the MediaPipe library, any model output with a confidence score below 0.5 was considered of low quality and was therefore disregarded. The MediaPipe model is trained on photographic face images. Its ability to confidently place landmarks on faces derived from PET reconstructions therefore hints at the generalization capabilities of the facial recognition approach detailed here.
- Objective 2: Whether the positions of these landmarks were similar to the positions placed on the corresponding CT scan of the same patient, as CT scans have higher resolution and notoriously good face recognition potential. We used the mean absolute distance between the landmarks placed on the 2D PET facial reconstructions and the corresponding landmarks on the CT, normalized by the interocular distance21. We considered the landmarks to be well placed on the patient’s face if this normalized distance was below 15%. This threshold was chosen qualitatively to reflect human perception of the similarity between point clouds. The same analysis was conducted with a threshold of 10%, and similar conclusions were drawn.
- Objective 3: Whether our model retrieves the correct patient when comparing the landmarks placed on a PET image with the landmarks placed on all CT morphological reconstructions from the entire dataset. We computed the mean Euclidean distance between each pair of CT/PET landmark point clouds, without and with realignment between the two modalities. Realignment used the iterative closest point (ICP) algorithm22 (N = 10 iterations).

  Realignment is necessary so that the correct CT is matched to a PET because the facial landmarks closely resemble one another, not merely because the patient lies in a similar position in both scans. Results without realignment provide an upper bound on retrieval performance. They serve as a “best-case scenario” in which only corresponding PET and CT pairs share the same reference frame, owing to the position of the patient during signal acquisition.

  For the realignment, we chose feature-based registration using the facial landmarks and ICP rather than intensity-based alignment algorithms. The alignment must remain applicable when PET reconstructions are compared with photographic face images captured in a different setting. Real pictures have intensity profiles that differ radically from those of PET-derived images, so they would be much harder to realign into a common reference frame with intensity-based methods. Using facial landmarks as alignment features further ensures that our method generalizes to comparisons between photographic images and our PET facial reconstructions, because the features are directly linked to facial anatomy. Descriptors from a method such as SIFT23 would yield unreliable key points, potentially unrelated to facial features.

  We considered top-n accuracies in the comparison task (n in [1, 15]). Top-1 accuracy is the fraction of PET images for which the CT with the lowest distance is the corresponding one. Top-n accuracy is the fraction for which the correct CT is among the n CT scans with the lowest distances. We further computed these top-n accuracies, with realignment, after mixing the CT target landmarks with landmarks obtained from a larger database of 10,168 adult face pictures24. Using the Euclidean distance on facial landmarks as our comparison metric also ensures that the proposed pipeline generalizes to comparisons with photographic face images that have different intensity profiles.
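The Objective 2 metric and the Objective 3 retrieval protocol can be sketched in Python as follows. This is an illustrative sketch under several assumptions, not the authors’ code.

- The indices of the two eye landmarks are parameters, because the paper does not say which MediaPipe vertices define the interocular distance. We also assume the CT interocular distance is used for normalization.
- Following the Discussion, each CT landmark set is moved onto the PET query.
- The ICP shown is a plain point-to-point variant with brute-force nearest-neighbour search and SVD (Kabsch) updates, which may differ from the variant actually used.
- In the gallery, entry i is taken to be the CT of PET query i, and any extra entries act as distractors, for example landmarks from a face-photo database.

```python
import numpy as np

WELL_PLACED_THRESHOLD = 0.15  # Objective 2: normalized distance below 15% counts as well placed


def normalized_mad(pet_pts, ct_pts, left_eye, right_eye):
    """Mean distance between corresponding landmarks divided by the CT interocular distance."""
    pet_pts, ct_pts = np.asarray(pet_pts, float), np.asarray(ct_pts, float)
    iod = np.linalg.norm(ct_pts[left_eye] - ct_pts[right_eye])
    return float(np.linalg.norm(pet_pts - ct_pts, axis=1).mean() / iod)


def rigid_fit(src, dst):
    """Least-squares rotation r and translation t with dst ~ src @ r.T + t (Kabsch/SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    h = (src - mu_s).T @ (dst - mu_d)
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # guard against reflections
    fix = np.diag([1.0] * (src.shape[1] - 1) + [d])
    r = vt.T @ fix @ u.T
    return r, mu_d - r @ mu_s


def icp(src, dst, n_iter=10):
    """Point-to-point ICP: move src onto dst using nearest-neighbour correspondences."""
    moved = np.array(src, dtype=float)
    for _ in range(n_iter):
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        nearest = dst[np.argmin(d2, axis=1)]
        r, t = rigid_fit(moved, nearest)
        moved = moved @ r.T + t
    return moved


def mean_landmark_distance(a, b):
    """Mean Euclidean distance between landmarks with the same vertex index."""
    return float(np.linalg.norm(a - b, axis=1).mean())


def top_k_accuracy(pet_sets, gallery, k, realign=True):
    """Fraction of PET queries whose own CT (gallery[i]) is among the k closest entries."""
    hits = 0
    for i, pet in enumerate(pet_sets):
        dists = [
            mean_landmark_distance(pet, icp(target, pet) if realign else target)
            for target in gallery
        ]
        hits += int(i in np.argsort(dists)[:k])
    return hits / len(pet_sets)
```

Note that ICP uses nearest-neighbour correspondences only to estimate the rigid transform. The ranking distance is then computed between landmarks that share a vertex index, which MediaPipe’s fixed 468-vertex topology makes meaningful.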

Data availability

In keeping with the Data Usage Agreement of the original dataset10, we cannot share any of the raw CT/PET files. We do, however, provide the data necessary to reproduce all our figures that do not rely directly on patient data (Figs. 3 and 4). These data are available on figshare25 and include top-level statistics on model training and on final performance in the landmark placement and matching tasks. For the performance results, we also provide the raw landmark point clouds themselves, so that all metrics can be recalculated from scratch. Finally, we provide the specific Python notebooks used to produce the figures from the shared data25.

Code availability

The full Python code is openly available on GitHub (https://github.com/SebastianPartarrieu/pet-deanonymization).

References

Petersen, R. C. et al. Alzheimer’s Disease Neuroimaging Initiative (ADNI): clinical characterization. Neurology 74, 201–209 (2010).

Clark, K. et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 26, 1045–1057 (2013).

Shiyam Sundar, L. K. et al. Fully Automated, Semantic Segmentation of Whole-Body 18F-FDG PET/CT Images Based on Data-Centric Artificial Intelligence. J. Nucl. Med. Off. Publ. Soc. Nucl. Med. 63, 1941–1948 (2022).

Andrearczyk, V. et al. Overview of the HECKTOR Challenge at MICCAI 2021: Automatic Head and Neck Tumor Segmentation and Outcome Prediction in PET/CT Images. https://doi.org/10.48550/arXiv.2201.04138 (2022).

Gatidis, S. et al. A whole-body FDG-PET/CT Dataset with manually annotated Tumor Lesions. Sci. Data 9, 601 (2022).

Parks, C. L. & Monson, K. L. Automated Facial Recognition of Computed Tomography-Derived Facial Images: Patient Privacy Implications. J. Digit. Imaging 30, 204–214 (2017).

Schwarz, C. G. et al. Face recognition from research brain PET: An unexpected PET problem. NeuroImage 258, 119357 (2022).

Selfridge, A. R. et al. Facial anonymization and privacy concerns in total-body PET/CT. J. Nucl. Med. 64, 1304–1309. https://doi.org/10.2967/jnumed.122.265280 (2023).

Ouanan, H., Ouanan, M. & Aksasse, B. Facial landmark localization: Past, present and future. in 2016 4th IEEE International Colloquium on Information Science and Technology (CiSt) 487–493, https://doi.org/10.1109/CIST.2016.7805097 (2016).

Gatidis, S. & Kuestner, T. A whole-body FDG-PET/CT dataset with manually annotated tumor lesions (FDG-PET-CT-Lesions) [dataset]. The Cancer Imaging Archive https://doi.org/10.7937/gkr0-xv29 (2022).

Gatidis, S., Küstner, T., Ingrisch, M., Fabritius, M. & Cyran, C. Automated Lesion Segmentation in Whole-Body FDG-PET/CT. Zenodo https://doi.org/10.5281/zenodo.6362493 (2022).

Lorensen, W. E. & Cline, H. E. Marching cubes: A high resolution 3D surface construction algorithm. ACM SIGGRAPH Comput. Graph. 21, 163–169 (1987).

van der Walt, S. et al. scikit-image: Image processing in Python. PeerJ 2, e453 (2014).

Roth, S. D. Ray casting for modeling solids. Comput. Graph. Image Process. 18, 109–144 (1982).

Zhou, Q.-Y., Park, J. & Koltun, V. Open3D: A Modern Library for 3D Data Processing. https://doi.org/10.48550/arXiv.1801.09847 (2018).

Buades, A., Coll, B. & Morel, J.-M. Non-Local Means Denoising. Image Process. Line 1, 208–212 (2011).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. https://doi.org/10.48550/arXiv.1505.04597 (2015).

Ioffe, S. & Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. https://doi.org/10.48550/arXiv.1502.03167 (2015).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. https://doi.org/10.1109/TIP.2003.819861 (2004).

Kingma, D. P. & Ba, J. Adam: A Method for Stochastic Optimization. https://doi.org/10.48550/arXiv.1412.6980 (2017).

Kartynnik, Y., Ablavatski, A., Grishchenko, I. & Grundmann, M. Real-time Facial Surface Geometry from Monocular Video on Mobile GPUs. https://doi.org/10.48550/arXiv.1907.06724 (2019).

Rusinkiewicz, S. & Levoy, M. Efficient variants of the ICP algorithm. in Proceedings Third International Conference on 3-D Digital Imaging and Modeling 145–152, https://doi.org/10.1109/IM.2001.924423 (2001).

Lowe, D. G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 60, 91–110 (2004).

Bainbridge, W. A., Isola, P. & Oliva, A. The intrinsic memorability of face photographs. J. Exp. Psychol. Gen. 142, 1323–1334 (2013).

Bou Hanna, E., Partarrieu, S., Berenbaum, A., Allassonnière, S. & Besson, F. Datasets and notebooks for reproducibility of manuscript ‘Exploring de-anonymization risks in PET imaging: Insights from a comprehensive analysis of 853 patient scans’. figshare https://doi.org/10.6084/m9.figshare.25909150.v1 (2024).

Author information


Contributions

E.B.H., S.P., A.B. and F.B. conceived and designed the study. E.B.H. and S.P. implemented the code, conducted the data analysis, and produced the figures. E.B.H., S.P., A.B. and F.B. wrote the manuscript. S.A. reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Bou Hanna, E., Partarrieu, S., Berenbaum, A. et al. Exploring de-anonymization risks in PET imaging: Insights from a comprehensive analysis of 853 patient scans. Sci Data 11, 932 (2024). https://doi.org/10.1038/s41597-024-03800-4


DOI: https://doi.org/10.1038/s41597-024-03800-4