Abstract

Facial expression is among the most natural methods for human beings to convey their emotional information in daily life. Although the neural mechanisms of facial expression have been extensively studied employing lab-controlled images and a small number of lab-controlled video stimuli, how the human brain processes natural dynamic facial expression videos still needs to be investigated. To our knowledge, this type of data specifically on large-scale natural facial expression videos is currently missing. We describe here the natural Facial Expressions Dataset (NFED), an fMRI dataset including responses to 1,320 short (3-second) natural facial expression video clips. These video clips are annotated with three types of labels: emotion, gender, and ethnicity, along with accompanying metadata. We validate that the dataset has good quality within and across participants and, notably, can capture temporal and spatial stimuli features. NFED provides researchers with fMRI data for understanding of the visual processing of large number of natural facial expression videos.

Similar content being viewed by others

Background & Summary

Facial expressions are essential to nonverbal communication1, and how they are represented in the human brain is a core question in affective science as important affective information is carried out this way2,3,4. Affective computing is also one of the crucial research areas in contemporary human-computer interaction5.

The research on neural mechanisms underlying the processing of facial expressions has made significant progress in the past couple of decades. Researchers have systematically investigated the neural mechanisms involved in facial expression processing by using images or a small number of video stimuli. Numerous brain regions have been determined as involved in processing emotional information conveyed by observed facial expressions. These regions include the posterior superior temporal sulcus (pSTS)6,7,8,9,10 and the temporoparietal junction (TPJ)11,12,13, responsible for processing dynamic faces; the fusiform face area (FFA)14,15,16,17,18,19 and the occipital face area (OFA)20,21,22, which characterize faces’ static features; and the inferior parietal (IP) region, which processes facial expression and face recognition21. Large-scale functional magnetic resonance imaging (fMRI) datasets for facial expression image stimuli were employed to represent the relationship between brain responses and perceived stimuli through neural encoding and decoding23,24,25. However, these studies on facial expression processing used lab-controlled images and a limited number of lab-controlled video stimuli. How the human brain processes the complex characterization of natural dynamic facial expression videos still needs to be explored.

The utilization of naturalistic stimuli like films has enhanced our comprehension of the human brain in the field of cognitive neuroscience26. Experiments using lab-controlled stimuli necessarily aim at the brain’s responses within limited ranges. However, the naturalistic stimuli’s the sample space is much broader27,28. Naturalistic stimuli are more suitable for engaging participants and maintaining their attention, thereby evoking rich, real-life neural processes29,30,31,32,33. An fMRI dataset with a continuous movie stimulus has been collected to investigate social cognitive processes and face processing26. However, the lack of appropriate facial expression annotations (e.g., category labels) for these movie stimuli restricts the use of such datasets to test hypotheses regarding facial expressions. Moreover, the extended length of movies brings about ambiguous temporal event boundaries and other complexities34,35. Consequently, short naturalistic videos achieve an optimal balance between ecological validity and experimental control36.

Therefore, we present the Natural Facial Expressions Dataset (NFED), which recorded fMRI responses to 1,320 short (3-second) natural facial expression videos using a rapid event-related paradigm. The videos were sampled from the DFEW37 and CAER38. DFEW and CAER are collections of video clips respectively extracted from over 1,500 movies and 79 television series, encompassing various challenging disruptions (e.g., pose changes and extreme illuminations). These datasets were created for recognizing dynamic facial expressions in uncontrolled real-world environments in the field of computer vision, facilitating collaboration with the most advanced computer vision models. Additionally, to enhance the potential of NFED, we annotated a series of semantic metadata for natural facial expression videos (Fig. 1c).

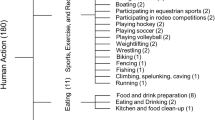

The NFED’s Experimental design. (a) fMRI main experiment: The sessions of main experiment are identical, comprising three field maps runs and six runs (four training runs and two testing runs). The sessions of the main experiment are the same, composed of 3 runs of field maps and 6 runs of video presentation. The 3-second videos were exhibited against a gray background at an 11.3° visual angle covered with a white fixation cross. Following the presentation of stimuli, a 3-second rest comprising a white fixation cross against a gray background was presented. A one-back task was performed by the participants by pressing buttons. (b) Eye-tracking experiment: the EyeLink 1000 Plus system embedded in the scanner is employed to surveil the attention states of the participants. (c) Stimuli and metadata: The 1,320 3-second video stimuli were annotated with semantic labels related to expressions, actions, and the description of sentences.

Below, we validate NFED’s quality through within and across participants analysis and display its suitability for modeling methods. First, we illustrate the hierarchical correspondence between deep convolutional neural networks (DCNNs) and the human brain by constructing encoding models. We then show that NFED can capture temporal and spatial stimulus features through metadata-driven analysis. This dataset provides fMRI data for researchers who are specifically interested in studying the visual processing of natural facial expression videos.

Methods

Participants

The research was approved by the Ethics Committee of the First Affiliated Hospital of Henan University of Chinese Medicine(approval number: AF/SC-08/03.2). To recruit potential participants, flyers were posted on campus. The experiment initially admitted 8 participants. All participants provided informed written consent for the sharing of their anonymized data. In the screening session, 3 participants were not admitted to participate in the NFED experiment because their attention was not focused. Finally, 5 participants (male, mean age ± SD = 28.6 ± 3.83 years) participated in the NFED experiment.

Stimuli

The stimuli for natural facial expression videos were sampled from the DFEW and CAER datasets, which were annotated with the expression categories. The DFEW and CAER datasets were respectively gathered from over 1,500 movies worldwide and 79 TV shows, encompassing various challenging disruptions (e.g., pose changes and extreme illuminations). Both DFEW and CAER are designed to be implemented as benchmarks for assessing the performance of computer vision models in recognizing dynamic facial expressions in real-world environments. Because our goal is to gather fMRI data for understanding the processing of emotional information conveyed by natural dynamic facial expressions, we selected 1,320 video clips extracted from the two datasets as stimuli for the NFED experiment. Each of these video clips is annotated with three types of labels: emotion, gender, and ethnicity (Table 1). Each video was square-cropped, trimmed to 3 seconds (composed of 30 frames per second), resized to 768 × 768 pixels, covered by a circular mask, and situated on a gray backdrop. The video lasts for three seconds, which is roughly equivalent to the length of human working memory39,40, and is considered an optimal duration for capturing brain responses to basic events36. Video durations less than three seconds may fail to capture complete actions, while those that are more than three seconds may introduce unwanted composite expressions (e.g., starting with an expression of happiness and then turning neutral). Compared with lab-controlled images and video stimuli, the naturalistic nature of movies and TV shows guarantees that we can evoke and capture the full extent of brain activity13,41,42.

The 1,320 videos were divided into two sets: “testing” and “training” sets. The testing set included 120 videos, and the training set encompassed the remaining 1,200 videos. In the testing set and training set, the number of repetitions presented to the participants was different. Specifically, the NFED comprised a testing set with a high repetition of 10 times and a training set with a low repetition of 2 times, serving to support analyses that rely on numerous repetitions and stimuli. In addition, the purpose of designing the testing and training sets is to mirror the testing and training sets used in the field of machine learning, indicating their potential application in constructing and assessing models.

Experimental design

A total of 11 separate fMRI sessions were completed by each participant. Session 1 included field maps, the population receptive field (pRF)43 defining retinotopic visual areas, functional localizer (fLoc)44 experiments defining category-selective regions, and structural scans. Sessions 2–11 included field maps and the runs of main functional experiment (during which the participants watched testing and training set videos). For a given participant throughout the entire experiment, each testing set video was presented 10 times, and each training set video was presented 2 times, resulting in 1,200 trials in the testing sets and 2,400 trials in the training sets. We arranged the trials into experimental runs, ensuring that each run exclusively comprised either training set videos (training runs) or testing set videos (test runs). Each of the 10 sessions after session 1 consisted of 4 training runs and 2 test runs. Field maps are located at the beginning, middle, and end of task runs in sessions 1–11.

Main Experiment

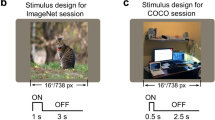

Natural facial expression videos were displayed employing a rapid event-related design with a trial structure of 3-second ON/3-second OFF. The main experiment consisted of 60 runs for each participant. Each run was composed of 30 distinct stimulus trials repeated twice, 4 one-back trials, and 4 blank trials (Fig. 1a). These trials were randomly presented in each run. Blank periods of 12 and 8 seconds were added to each run’s beginning and end to establish a robust baseline. Each run lasted 428 seconds. All stimuli (11.3° × 11.3° visual angle) were exhibited at the gray screen’s center and covered by a central white fixation cross (0.5° × 0.5° visual angle). Participants were requested to concentrate on the fixation cross during MRI scans and to press a button during the one-back task. The average accuracy of all participants for the one-back task was 98.7%.

Natural facial expression videos were presented using a BOLDscreen 32 monitor (resolution: 1920 × 1080, frequency: 120 Hz; size: 89 cm(width) × 50 cm(height); viewing distance: 168 cm) placed at the head of the scanner bed. Subjects watched the monitor through a mirror mounted on the head coil. The visual stimulation program was written using PsychoPy 2022.2.3, and the stimulation playback was remotely and synchronously controlled by a computer. Meanwhile, a dedicated MRI button box was used to record the behavioral data of the participants. Besides, an Eye-Link 1000 Plus system was employed to monitor the attention and sleep states of the participants during MRI scans (Fig. 1b).

Population receptive field (pRF) experiment

The early visual cortex’s retinotopic organization in each participant was mapped employing the pRF experiments obtained from the Human Connectome Project (HCP) 7 T Retinotopy experiment43,45. The stimuli comprised of apertures that moved slowly and were filled with a background of pink noise and dynamic colorful textures. Two different types of runs were utilized: multibar and wedgering. A total of 4 runs (2 multibar and 2 wedgering) were gathered for the NFED experiment. Each run lasted 300 seconds. There was a fixation dot shown at the screen’s center throughout the stimuli’s presentation. The dot randomly changed its color to either red, black, or white. Participants were requested to pay attention to the fixation dot and push a button when the central dot’s color turns red.

Functional localizer (fLoc) experiment

We utilized fLoc (http://vpnl.stanford.edu/fLoc/) to localize category-selective regions44,45. The experiment showed 10 distinct categories of grayscale images, encompassing faces (adult and child), characters (word and number), bodies (body and limb), objects (car and instrument), and places (corridor and house). Eight images from a specific category were presented successively in each trial, with each image lasting 0.5 seconds. For the NFED experiment, a total of 4 runs were gathered in session 1. Each run lasted 300 seconds. There was a small red dot shown at the screen’s center during the presentation of the stimuli. We requested participants to pay attention to the fixation dot and push buttons during the one-back trial.

MRI acquisition

A 3 Tesla Siemens MAGNETOM Prisma scanner was employed to collect MRI data at the Henan Key Laboratory of Imaging and Intelligent Processing, Zhengzhou, China, using a 64-channel head coil. Task-based fMRI data were gathered during all 11 sessions. At the end of session 1, we collected a T1-weighted (T1w) anatomical image for all participants. Three spin-echo field maps were obtained at the beginning, middle, and end of each fMRI session to correct magnetic field distortion. Earplugs were employed to reduce the noise generated by the scanner, and using a sponge cushion to stabilize the head and minimize head movement. In all scan runs, we utilized an EyeLink 1000 Plus system inside the scanner to monitor the participants’ sleep states and attention states. Specifically, we observed the subject’s eyes and the position of a blue dot on the screen (Fig. 1b). The presence of the blue dot within a central box indicated that the subject’s attention was focused, while deviations from this pattern suggested potential distractions or drowsiness.

Functional MRI

The collection of functional data was performed using an interleaved T2*-weighted, single-shot, gradient-echo echo-planar imaging (EPI) sequence: TR = 1000 ms; slices = 56, slice gap = 10%; matrix size = 96 × 96; voxel size = 2 × 2 × 2 mm3; slice thickness = 2 mm; flip angle = 68°; TE = 30 ms; bandwidth = 1748 Hz/Px; echo spacing = 0.68 ms; multi-band acceleration factor = 4; field of view (FOV) = 220 × 220mm2; Phase-encoding direction: anterior to posterior (AP). Prescan Normalize: Off; Slice orientation: axial, coronal, sagittal; Auto-align: Head > Brain; Shim mode: B0 Shim mode Standard, B1 Shim mode TrueForm; Slice acquisition order:Interleaved; Other acceleration: acceleration factor PE = 2.

Field map

The field maps were obtained for correcting EPI spatial distortion using a 2-D spin-echo sequence: TR = 1000 ms; field of view = 220 × 220 mm2; flip angle = 60°; voxel size = 2 × 2 × 2 mm3;TE1/TE2 = 4.76/7.22 ms; slice thickness = 2 mm; slices = 56,slice gap = 10%. Prescan Normalize: Off; Slice orientation: axial, coronal, sagittal; Auto-align: Head > Brain; Shim mode: B0 Shim mode Standard, B1 Shim mode TrueForm; Slice acquisition order: Interleaved.

Structural MRI

We employed a 3D-MPRAGE sequence to acquire structural T1-weighted images: 192 slices; TR = 2300 ms; TE = 2.26 ms; TI = 1100 ms; voxel size = 1 × 1 × 1 mm3; FOV = 256 × 256 mm2; matrix size = 256 × 256; echo spacing = 6.8 ms; slice thickness = 1 mm; flip angle = 8°. Prescan normalization: turned on; Acceleration Factor = 2.

Data analysis

We adopted the data analysis method proposed by Kay et al.46 (http://surfer.nmr.mgh.harvard.edu). The FreeSurfer software was utilized to generate participants’ pial and white surfaces based on the T1 volume. Furthermore, for each participant, we constructed an intermediate gray matter surface between the pial and the white surfaces.

We employed the FSL utility “prelude” to phase-unwrap field maps obtained during each scan session. To regularize the field maps, we then used an Epanechnikov kernel to perform three-dimensional local linear regression. In the regression, we employed the magnitude component values from the field maps as weights, enhancing the field estimates’ robustness. This regularization process eliminates noise in the field maps and imposes spatial smoothness. Ultimately, The field maps underwent linear interpolation over time, resulting in an estimation of the field strength for all functional volumes.

We initially pre-processed the task-based functional data based on volume by executing one spatial resampling and one temporal resampling. For the purpose of EPI distortion and head motion correction, we performed one spatial resampling. The correction for slice timing was achieved through temporal resampling, which involved performing one cubic interpolation on the time series of each voxel. The EPI spatial distortion was corrected by using regularized time-interpolated field maps. The SPM12 utility “spm_realign” was then employed to estimate the parameters of rigid-body motion from the undistorted EPI volumes. Ultimately, we used one cubic interpolation on each volume that had been corrected for slice timing to realize the spatial resampling.

The mean attained by pre-processing functional volumes in a session was co-registered to the T1 volume. A three-dimensional ellipse defined manually was employed to concentrate cost metric on brain areas that were not impacted by gross susceptibility influences during the co-registration alignment estimation. The ultimate outcome of the co-registration process is a transformation that illustrates how to align the EPI data with the native anatomy of the participants.

We used pre-processing based on surface to re-analyze the functional data following the completion of co-registration for the anatomical data. This two-stage method was adopted due to the necessity of volume-based pre-processing to produce undistorted functional volumes of high quality, which is essential for registering the functional data with the anatomical data. Surface-based pre-processing can only begin after obtaining this registration.

During pre-processing based on surface, the processes are identical to those in volume-based pre-processing, with the only difference being that the ultimate spatial interpolation is conducted at the vertices of the intermediate gray matter surfaces. Therefore, the sole distinction lies in whether the data is being prepared on an irregular manifold of densely spaced vertices (surface) or a regular three-dimensional grid (volume). The procedures of surface-based pre-processing can finally be simplified to one spatial resampling (for addressing EPI distortion, head motion, and registration to anatomy) and one temporal resampling (for managing slice acquisition times). The advantage of conducting only two simple pre-processing steps is to avoid unnecessary interpolation and maximize the preservation of spatial resolution46,47,48. The time-series data were ultimately generated for every vertex of the cortical surfaces after completing this pre-processing stage.

GLM analysis of main experiment

We utilized a single-trial General Linear Model (GLMsingle)49 (https://github.com/cvnlab/GLMsingle) approach, an advanced denoising approach implemented in MATLAB R2019a, to model the pre-processed fMRI data from the main experiment. For single trials, the method of GLM was developed to offer estimations of BOLD response magnitudes (‘betas’). In this study, three betas were calculated by analyzing the BOLD response corresponding to individual video onset ranging from 1 to 3 seconds with 1-second intervals. We produced individual GLMsingle models for each session (consisted of 4 training runs and 2 test runs). In general, for each video within the training set, 2 (repetitions) × 3 (seconds) betas were acquired. Similarly, for each video within the testing set, 10 (repetitions) × 3 (seconds) betas were acquired. The utilization of repetitions enabled us to acquire video-evoked responses with a high signal-to-noise ratio (SNR).

GLM analysis of the pRF experiment

We used the Compressive Spatial Summation model50 conducted in analyzePRF (http://cvnlab.net/analyzePRF/) to model the time-series data acquired from the pRF experiment. Initially, the time-series data from the 2 repetitions for each type of run (multibar, wedgering) were averaged. Then, we estimated pRF parameters for each vertex using the analyzePRF. We mapped the outcomes to the cortex surface by executing linear interpolation on the vertices and then computing the average across cortical depth.

GLM analysis of the fLoc experiment

GLMdenoise51,52, a denoising approach based on data-driven, was applied to model the time-series data from the fLoc experiment. We utilized a “condition-split” approach to encode the 10 stimulus categories, dividing the trials linked to each category into distinct conditions within every run. For each category, six condition-splits were employed to generate six betas of response. For the purpose of quantifying the selectivity for various categories and fields, we calculated t-values from the GLM betas following conducting the GLM. We conducted linear interpolation to map the results to the inflated surface of FreeSurfer.

Data Records

The data, which is structured following the BIDS format53, were accessible at https://openneuro.org/datasets/ds00504754. The “sub- < subID > ” folders store the raw data of each participant (Fig. 2c). The pre-processed volume data are saved in the “derivatives/pre-processed_volume_data/sub- < subID > ” folders (Fig. 2d), while the pre-processed surface data are saved in the “derivatives/volumetosurface/sub- < subID > ” folders (Fig. 2e).

The NFED’s folder structure. (a) The NFED’s overall directory structure. (b) The stimulus’s folder structure. (c) The raw data’s folder structure from a participant. (d) The pre-processed volume data’s folder structure. (e) The pre-processed surface data’s folder structure. (f) The folder structure of the results of reconstructing the cortical surface for all participants. (g) The folder structure of the GLM analysis data from the main experiment and the mean of the GLM analysis data from the pRF and fLoc experiments. (h) The folder structure of technical validation.

Stimulus

Distinct folders store the stimuli for distinct fMRI experiments: “stimuli/face-video”, “stimuli/floc”, and “stimuli/prf” (Fig. 2b). The category labels and metadata corresponding to video stimuli are stored in the “videos-stimuli_category_metadata.tsv”. The “videos-stimuli_description.json” file describes category and metadata information of video stimuli (Fig. 2b).

Raw MRI data

Each participant’s folder is comprised of 11 session folders: “sub- < subID > /ses-anat”, “sub- < subID > /ses- < sesID > ” (Fig. 2c). The “ses-anat” folder comprises one folder, named “anat”. The “ses- < sesID > ” folder comprises “fmap”and “func” folders. A “sub- < subID > _ses- < sesID > _scans.tsv” file stores the scan information for a scan session. The task events were saved as a “sub- < subID > /func/sub- < subID > _ses- < sesID > _task- < taskID > _run- < runID > _events.tsv” file for each run. The parameter descriptions in “events.tsv” are saved in”task-face_events.json”.

Volume data from pre-processing

The pre-processed volume-based fMRI data were in the folder named “pre-processed_volume_data/sub- < subID > /ses- < sesID > ” (Fig. 2d). A “sub- < subID > _ses- < sesID > _task- < taskID > _space-individual_desc-volume_run- < runID > .nii” file stores the pre-processed volumes data of a run. A “sub- < subID > _ses- < sesID > _task- < taskID > _space-individual_desc-volume_mean.nii” file stores the mean of the pre-processed volume data of a session. A “sub- < subID > _ses- < sesID > _task- < taskID > _space-individual_desc-volume_run- < runID > _timeseries.tsv” file stores the head motion of participants. The explanation of head motion parameters are in the folder named “sub- < subID > _ses- < sesID > _task- < taskID > _space-individual_desc-volume_run- < runID > _timeseries.json”. The parameters of co-registration alignment for a session were stored in the folder named “sub- < subID > _ses- < sesID > _task- < taskID > _alignment”. The process of the pre-processing based on volume is stored in “pre-processed_volume_data_description.json”.

Surface data from pre-processing

The pre-processed surface-based data were stored in a file named “volumetosurface/sub- < subID > /ses- < sesID > /sub- < subID > _ses- < sesID > _task- < taskID > _run- < index > _hemi-lh/rh.surfacedata.gii” for each run (Fig. 2e). The process of the pre-processing based on surface is stored in “volumetosurface_data_description.json”.

FreeSurfer recon-all

The results of reconstructing the cortical surface are saved as “recon-all-FreeSurfer/sub- < subID > ” folders (Fig. 2f). The process of the recon-all and the information of FreeSurfer are stored in “recon-all-freesurfer_data_description.json”.

Surface-based GLM analysis data

We have conducted GLMsingle on the data of the main experiment. There is a file named “sub– < subID > _ses- < sesID > _task- < taskID > _betas_FITHRF_GLMDENOISE_RR.hdf5”, which contains the betas from a scanning session. A “sub- < subID > _ses- < sesID > _task- < taskID > _FRACvalue.hdf5” file stores the the fractional ridge regression regularization level selected for individual voxels of a scanning session. A “sub- < subID > _ses- < sesID > _task- < taskID > _HRFindex.hdf5” file stores the 1-index of the best Hemodynamic Response Function (HRF) of a scanning session. The file named “sub- < subID > _ses- < sesID > _task- < taskID > _HRFindexrun.hdf5” stores HRF index which are segregated by run. A “sub- < subID > _ses- < sesID > _task- < taskID > _R2.hdf5” file stores the model accuracy. A “sub- < subID > _ses- < sesID > _task- < taskID > _R2run.hdf5” file stores R2 which is segregated by run. A “sub- < subID > _ses- < sesID > _task- < taskID > _mean.mat” file stores the mean of the GLM analysis data for the pRF and fLoc experiments (Fig. 2g). The process of the GLM analysis are stored in “surface-based_GLM_analysis_data_description.json”.

Validation

The code of technical validation was saved in the “derivatives/validation/code” folder. The results of technical validation were saved in the “derivatives/validation/results” folder (Fig. 2h). The “README.md” describes the detailed information of code and results.

Technical Validation

Basic quality control

The fundamental quality control suggests that the data displays good quality. To evaluate the quality of the structural data obtained from NFED, we employed four crucial metrics55 (see Fig. 3a): Coefficient of Joint Variation (CJV), Contrast-to-Noise Ratio (CNR), Signal-to-Noise Ratio in Grey Matter (SNR_GM), and Signal-to-Noise Ratio in White Matter (SNR_WM). Specifically, the CJV is between white matter (WM) and grey matter (GM). The CNR assesses the relationship between the contrast of GM and WM with the noise present in the image. The SNR assesses the connection between the mean signal measurements and the noise present in the image. The SNR assessment is conducted individually for GM and WM.

Basic data quality check of the NFED. (a) The scatter plots display data quality of T1-weighted structural scan. Each point represents the data value from each participant. In the figure, a downward arrow suggests that a smaller value be equivalent to better data quality. Conversely, an upward arrow means that a larger value is indicative of better data quality. μ represents the mean brightness of the image, while σ represents the standard deviation. (b) The violin plots display FD for each participant across all runs. The pink bars, black dots, and error bars lines stand for the range of quartiles, the mean, and minimum and maximum values, respectively. Participant motion was low in the NFED, as shown by a mean FD well below 0.5 mm for all participants. (c) The violin plots of tSNR values across the brain. The mean tSNR across participants was 75.0 ± 5.79(with a minimum mean across participants was 68.16 and a maximum mean across participants was 81.90). (d) The tSNR maps for the NFED experiment in the whole brain.

For quality control of the NFED’s functional scans, we assessed the amount of head motion for each participant and the temporal signal-to-noise ratio (tSNR) of the time-series data, separately.

We used the framewise displacement (FD) metric56 to measure the head motion of each participant. All participants display no volumes with FD exceeding 0.5 mm (Fig. 3a), which is a standard commonly utilized in the literature to identify volumes exhibiting large head motion56. The mean FD across participants was 0.051 mm (with a minimum mean of 0.038 mm and a maximum of 0.069 mm, see Fig. 3a). The results suggest that participants effectively controlled head motion throughout the scan.

The tSNR is a commonly employed measure to evaluate the capacity for detecting brain activation in fMRI data57. To be specific, the tSNR was calculated as the average of the time course of each vertex divided by its standard deviation of the pre-processed data from each run. Individual tSNR maps for the main experiment were then generated by averaging across all runs. The mean tSNR for the whole-brain across participants was 75.0 ± 5.79 (with a minimum mean of 68.16 and a maximum of 81.90, see Fig. 3b), which has comparability with previous datasets26,58,59. As predicted, there was variation in tSNR among different brain regions, with dorsal areas exhibiting higher tSNR levels, while the anterior temporal cortex and orbito-frontal cortex showed lower tSNR levels (Fig. 3c). Overall, these findings suggest that the data exhibit strong tSNR levels in the main experiment.

The visual cortex exhibits reliable BOLD responses to natural facial expression videos stimuli in main experiment

In the main experiment, there were 5 participants who accomplished 10 sessions, each consisting of 4 training runs and 2 test runs, making a total of 60 runs (40 training and 20 test runs) in all. For each run, 30 (videos)*2 (repetitions) × 3 (seconds) betas were acquired. Z-score normalization was performed on the raw betas at each voxel for each run. The betas were then averaged across stimulus repetitions to generate a vector of betas. In general, 44 runs (1320 videos, 40 training runs, and 4 test runs)*90 beta series were acquired. Hence, the test-retest reliability of responses to 1320 videos in main experiment was evaluated through computing the Pearson correlation between the 90 beta series obtained from the even runs and odd runs on each vertex. The occipital cortex exhibits high test-retest reliability, which also extends into the regions of the temporal and parietal cortex (Fig. 4). Analysis results indicate that the fMRI data collected during the main experiment demonstrate reasonable test-retest reliability across natural facial expression videos.

Noise ceiling estimation

To evaluate multivariate reliability of the NFED, noise ceilings were computed for each vertex in the entire brain utilizing the testing set. The noise ceiling refers to the highest level of accuracy that a model is capable of achieving, considering the presence of noise in the data60,61. In our case, the beta values estimated in the GLM are the data of interest. Z-score normalization was performed on the raw beta values at each voxel for each participant individually. The values were then averaged across 3 beta values (beta1, beta2, beta3) and across stimulus repetitions to generate a (n_stimuli × 1) vector of betas.

The Monte Carlo simulations were conducted to assess the noise ceiling following the general framework outlined in prior research60,62. In these simulations, a known signal is produced, along with noisy measurements of this signal, following which the R2 between the measurements and the signal is computed. Specifically, the beta weights and their standard errors were initially gathered through simulations. Since each stimulus was shown only 10 times, the standard errors of the individual beta values are comparatively noisy. Thus, a combined standard error was computed and thought of as the normal distribution’s standard deviation that represents the noise. Subsequently, the mean of the beta values was calculated and considered as the normal distribution’s mean that represents the signal. The amount of variance that can be attributed to noise was subtracted from the total amount of variance in the data to estimate the signal distribution’s standard deviation, and the outcome was forced to be non-negative, and subsequently the square root was calculated. Ultimately, we produced a signal by extracting random values from the signal distribution, created a noisy measurement of this signal by adding random values extracted from the noise distribution to the signal, and computed the R2 between the measurement and the signal. A total of 1000 simulations (100 signals, 10 measurements per signal) were conducted and the average R2 value was taken as the noise ceiling.

According to the approach described above, we estimated the noise ceiling for all participants. Surface mappings of noise ceiling outcomes indicate the areas where responses to the NFED stimuli are reliable. The occipital cortex exhibits high noise ceilings, which also extend into the regions of the temporal and parietal cortex (Fig. 5a). We presented the quantifications of the average noise ceiling level for the whole-brain for each participant (Fig. 5b). The average whole-brain noise ceiling across participants was 34.75% ± 8.16% (with a minimum mean of 25.08 and a maximum of 47.78), which has comparability with previous datasets45.

Manually defined regions of interest (ROIs)

The pRF and fLoc experiments are capable of successfully defining subject-specific functional ROIs. We conducted a pRF experiment and an fLoc experiment during session 1 to facilitate precise-scale subject-specific analyses. To map the retinotopic representation in the low-level visual regions of each participant, we utilized the data obtained from the pRF experiment. The spatial patterns of polar angles through individual data analysis using the pRF model closely resemble those obtained from the Human Connectome Project 7 T Retinotopy Dataset (Fig. 6a). The fLoc experiment was utilized to map high-level regions that show selectivity for categories. The brain areas related to facial and facial semantic recognition can be easily identified using the fLoc experiment (Fig. 6b). In general, these analyses indicate that NFED’s functional localizer effectively delineates subject-specific visual regions with good quality.

NFED provides ROIs. We defined various ROIs through fLoc and pRF experiments. Here, example outcomes for subject 1, subject 4, and subject 5 are displayed. (a) Early visual regions. The outcomes are displayed on the sphere surface of FreeSurfer. (b) Regions selective for faces. We computed t-values by the contrast of faces against all other categories to define areas. The outcomes are displayed on the inflated surface of FreeSurfer.

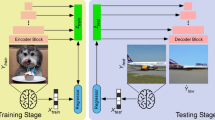

The hierarchical correspondences between the DCNN and the human brain

Many studies have revealed hierarchical correspondences in the characterization between DCNN and the human brain63,64,65 by encoding models. In this study, the data from the main, fLoc, and pRF experiments were combined to construct an encoding model aimed at replicating the hierarchical correspondence between the DCNN and the human brain. We constructed encoding models to establish a mapping between the neural characterizations from the fMRI response at each vertex and the artificial characterizations extracted from different layers of the pre-trained VideoMAEv266. Specifically, a linear regression model was optimized for each vertex to combine the outputs of units from a single layer of the DCNN, resulting in the most accurate prediction of the fMRI response to the training set videos.

The principal components derived from the outputs of the DCNN were recognized and utilized as regressors to account for the signal of the fMRI vertex. Given the training set videos, a much smaller number of components could largely explain the output of each layer in the CNN. For the first through fortieth layers, we performed PCA to extract 60 components for each layer (information loss was negligible (1%)). Although there was a significant reduction in dimension, the loss of information could be neglected, and the overfitting during the training of the encoding model was greatly alleviated by the reduction in feature dimension67.

After training an individual encoding model for each vertex, the fMRI responses to the testing set videos could be predicted utilizing these models. For each vertex, we evaluated the accuracy of prediction by measuring the correlation between the measured and predicted fMRI responses. The encoding models exhibited reasonably high accuracies in predicting cortical responses across almost the entire visual cortex (Fig. 7a). In addition to the ventral visual stream, the regions that could be predicted extended even further to encompass the dorsal visual stream, frontal cortices, temporal, and parietal (Fig. 7a).

Cortical predictability based on encoding models. (a) Model-predicted accuracy in cortical responses to facial expression video stimuli is assessed by measuring the Pearson correlation between the measured and predicted responses during the testing video. (b) The predicted accuracy of encoding models for different ROIs using different layers of CNNs. The accuracy of predictions for each ROI was calculated by averaging across the top 100 vertices within the ROI and across participants. The error bars in the graph represent the standard error, while the curves stand for the average performance.

Example regions in different levels of the visual hierarchy were chosen as ROIs: V1, V2, V3, V4 (ventromedial visual areas), LO (lateral occipital), FFA, OFA, pSTS, TPJ, IPS, MT (middle temporal), V3A, V3B. We selected the top 100 vertices with better encoding performance in each ROI for further analysis. As shown in Fig. 7b, the encoding correlation in low-level visual regions exhibits a gradual decrease as the layers of VideoMAEv2 ascend. Conversely, there is a contrasting trend in the encoding accuracy observed in the high-level visual regions. In summary, NFED validates an early-to-early and late-to-late correspondence pattern between DCNN layers and the human visual cortex.

Metadata-driven analysis

The metadata was leveraged to reveal that spatial and temporal stimulus characteristics were encoded in the brain. In order to perform numerical analyses on text-based data, we feed the text into a video-language model (InternVideo)68 to produce model embeddings. Subsequently, we establish a connection between the model embeddings and brain responses by employing Representational Similarity Analysis (RSA)69, which primarily involves correlating the Representational Dissimilarity Matrix (RDM) at each vertex defined by the brain responses with an RDM defined by the metadata (Fig. 8a).

Semantic analysis of facial expression videos based on metadata encoding. (a) RSA approach based on metadata: Video metadata is provided as input to a video-language model for the purpose of generating a vector embedding. In a similar manner, we extract brain responses evoked by facial expression videos within a searchlight disk, producing a vector embedding at each vertex. The pairwise similarity of the brain embeddings evoked by the video and the metadata embeddings is separately computed to construct an n_stimuli × n_stimuli RDM. Subsequently, we correlate the metadata RDM with the voxel-wise RDMs generated through searchlight analysis. (b) Metadata RDMs: To produce vector embeddings, we fed the metadata for “expressions” and “actions” into a video-language model. We used cosine distance to compute the RDMs, and their visual representation is shown here. (c) Correlation of RDMs at each vertex in the brain with metadata RDMs across the entire brain: We computed the Spearman correlation between the metadata RDM and each RDM at each vertex in the brain for all participants. (d) Correlation based on ROI: The average correlations across participants within the ROI are shown. We conducted permutation tests to detect whether the Pearson correlation of voxel responses in each brain region is significantly higher than the null hypothesis distribution. Voxels with a Pearson correlation higher than 0.0126 are considered accurately predictable, indicating a significant difference from the null hypothesis (p < 0.05). The 95% confidence interval is depicted by the error bars.

For establishing the metadata RDM, the action and expression labels from 120 testing set videos were fed into a video-language model (InternVideo)68. This process generated vector embeddings for each label. A single 120 × 120 RDM for the expression and action metadata was produced by calculating the pairwise cosine distance between the vector embeddings of each video. Figure 8b shows the RDMs for the expression and action, respectively.

A searchlight analysis70 was performed to produce the RDMs at each vertex. Specifically, a searchlight disk of two dimensions, with a radius of 3 mm around the vertex v, was then defined. Subsequently, the betas (3 betas averaged over repetitions and z-scored across conditions) for all conditions in the testing set were extracted at every vertex. Therefore, each facial expression video stimulus possesses a corresponding beta vector, one from each vertex in the disk. To generate an RDM at the central vertex v, the Pearson correlation for all pairs of stimulus vectors was calculated. This process is repeated for all vectors in the entire brain for each participant. Next, we conducted Spearman correlation between the metadata RDM and the RDMs at each vertex for all participants. The results from the whole-brain analysis (Fig. 8c) indicate that the correlations between the two types of metadata are predominantly significant in the ventral visual regions, dorsal visual regions, and face-selective regions. For the ROI analysis (Fig. 8d), we conducted permutation tests to detect whether the Pearson correlation of voxel responses in each brain region is significantly higher than the null hypothesis distribution. Specifically, we randomly shuffle the metadata RDM and perform Pearson correlation with the searchlight-based RDM at each voxel for the ROI analysis (Fig. 8d). Repeat this process 10,000 times to obtain the null hypothesis distribution. From the null distribution, it can be concluded that for all voxel responses, if their Pearson correlation is higher than 0.0126, it indicates that the Pearson correlation of the voxel response is significantly higher than the null hypothesis (p < 0.05). In this paper, such voxels are referred to as accurately predictable.

Usage Notes

The NFED offers researchers a unique resource for understanding the visual processing of a large number of short natural facial expression videos. Initially, given a large number of short natural facial expression videos along with corresponding BOLD responses recordings, NFED has the potential to offer fine-grained investigation into how the brain represents wide-ranging visual characteristics. Specifically, it facilitates studies on neural encoding and decoding based on fMRI. Neural encoding aims at predicting brain responses to perceived natural facial expression videos, while neural decoding aims at classifying, identifying or reconstructing perceived natural facial expression based on brain responses. Secondly, the NFED includes annotated semantic metadata for the facial expression videos. Additionally, to enhance the potential of NFED, we annotated a series of semantic metadata for natural facial expression videos.

Although NFED is considered by us to be a distinctive resource for exploring neural representation of a large number of short natural facial expression videos, the limitations of this dataset also should be acknowledged. Specifically, the NFED experiment includes data from only five subjects, which limits its suitability for making an fMRI group analysis.

Code availability

The entire code for data pre-processing, data organization, GLM, and validation is available at https://zenodo.org/records/13919442 (https://zenodo.org/account/settings/github/repository/chenpanp/NFED_fmri). The entire code, the data required to run the code, and the results are also available available at https://openneuro.org/datasets/ds005047 (derivatives/validation/code).

References

Tracy, J. L., Randles, D. & Steckler, C. M. The nonverbal communication of emotions. Curr. Opin. Behav. Sci. 3, 25–30 (2015).

Ekman, P. Facial Expression and Emotion. American Psychologist. 48(4), 384–392 (1993).

Chen, J., Wang, Z., Li, Z., Peng, D. & Fang, Y. Disturbances of affective cognition in mood disorders. Sci. China Life Sci. 64, 938–941 (2021).

Fang, F. & Hu, H. Recent progress on mechanisms of human cognition and brain disorders. Sci. China Life Sci. 64, 843–846 (2021).

Giordano, B. L. et al. The representational dynamics of perceived voice emotions evolve from categories to dimensions. Nat. Hum. Behav. 5, 1203–1213 (2021).

Pitcher, D. & Ungerleider, L. G. Evidence for a Third Visual Pathway Specialized for Social Perception. Trends Cogn. Sci. 25, 100–110 (2021).

Allison, T., Puce, A. & McCarthy, G. Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278 (2000).

Lee, L. C. et al. Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia 48, 477–490 (2010).

Pelphrey, K. A., Singerman, J. D., Allison, T. & McCarthy, G. Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia 41, 156–170 (2003).

Puce, A., Allison, T., Bentin, S., Gore, J. C. & McCarthy, G. Temporal Cortex Activation in Humans Viewing Eye and Mouth Movements. J. Neurosci. 18, 2188–2199 (1998).

Grèzes, J., Pichon, S. & De Gelder, B. Perceiving fear in dynamic body expressions. NeuroImage 35, 959–967 (2007).

Pichon, S., De Gelder, B. & Grèzes, J. Two different faces of threat. Comparing the neural systems for recognizing fear and anger in dynamic body expressions. NeuroImage 47, 1873–1883 (2009).

Kret, M. E., Pichon, S., Grèzes, J. & De Gelder, B. Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. NeuroImage 54, 1755–1762 (2011).

Grill-Spector, K., Knouf, N. & Kanwisher, N. The fusiform face area subserves face perception, not generic within-category identification. Nat. Neurosci. 7, 555–562 (2004).

Yovel, G. & Kanwisher, N. Face PerceptionDomain Specific, Not Process Specific. Neuron 44, 889–898 (2004).

Kanwisher, N., McDermott, J. & Chun, M. M. The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception. Neuroscience. 17(11), 4302–4311 (1997).

Jacob, H. et al. Cerebral integration of verbal and nonverbal emotional cues: Impact of individual nonverbal dominance. NeuroImage 61, 738–747 (2012).

Bernstein, M., Erez, Y., Blank, I. & Yovel, G. An Integrated Neural Framework for Dynamic and Static Face Processing. Sci. Rep. 8, 7036 (2018).

McCarthy, G. Face-Specific Processing in the Human Fusiforrn Gyms. Neuroscience 9(5), 605–610 (1997).

Rotshtein, P., Henson, R. N. A., Treves, A., Driver, J. & Dolan, R. J. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113 (2005).

Zhang, Z. et al. Decoding the temporal representation of facial expression in face- selective regions. NeuroImage 283, 120442 (2023).

Tsantani, M. et al. FFA and OFA Encode Distinct Types of Face Identity Information. J. Neurosci. 41, 1952–1969 (2021).

VanRullen, R. & Reddy, L. Reconstructing faces from fMRI patterns using deep generative neural networks. Commun. Biol. 2, 193 (2019).

Güçlütürk, Y., Güçlü, U., Seeliger, K. & Bosch, S. Reconstructing perceived faces from brain activations with deep adversarial neural decoding. NIPS. 12, 4249–4260 (2017).

Du, C., Du, C., Huang, L., Wang, H. & He, H. Structured Neural Decoding With Multitask Transfer Learning of Deep Neural Network Representations. IEEE Trans. Neural Netw. Learn. Syst. 33, 600–614 (2022).

Visconti Di Oleggio Castello, M., Chauhan, V., Jiahui, G. & Gobbini, M. I. An fMRI dataset in response to “The Grand Budapest Hotel”, a socially-rich, naturalistic movie. Sci. Data 7, 383 (2020).

Haxby, J. V., Guntupalli, J. S., Nastase, S. A. & Feilong, M. Hyperalignment: Modeling shared information encoded in idiosyncratic cortical topographies. eLife 9, e56601 (2020).

Wu, M. C.-K., David, S. V. & Gallant, J. L. Complete functional characterization of sensory neurons by system identification. Annu. Rev. Neurosci. 29, 477–505 (2006).

Vanderwal, T., Eilbott, J. & Castellanos, F. X. Movies in the magnet: Naturalistic paradigms in developmental functional neuroimaging. Dev. Cogn. Neurosci. 36, 100600 (2019).

Berkes, P., Orbán, G., Lengyel, M. & Fiser, J. Spontaneous Cortical Activity Reveals Hallmarks of an Optimal Internal Model of the Environment. Science 331, 83–87 (2011).

Olshausen, B. A. & Field, D. J. Natural image statistics and efficient coding. Network: Computation in Neural Systems, 7(2), 333–339.

Olshausen, B. A. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381, 607–609 (1996).

Smyth, D., Willmore, B., Baker, G. E., Thompson, I. D. & Tolhurst, D. J. The Receptive- Field Organization of Simple Cells in Primary Visual Cortex of Ferrets under Natural Scene Stimulation. J. Neurosci. 23, 4746–4759 (2003).

Hasson, U., Furman, O., Clark, D., Dudai, Y. & Davachi, L. Enhanced Intersubject Correlations during Movie Viewing Correlate with Successful Episodic Encoding. Neuron 57, 452–462 (2008).

Roberts, J. A. Fixational eye movements during viewing of dynamic natural scenes. Front. Psychol. 4, 797 (2013).

Lahner, B. et al. Modeling short visual events through the BOLD moments video fMRI dataset and metadata. Nat. Commun. 15, 6241 (2024).

Jiang, X. et al. Dfew: A large-scale database for recognizing dynamic facial expressions inthe wild. In Proceedings of the 28th ACM International Conference on Multimedia, 2881–2889(2020).

Lee, J., Kim, S., Kim, S., Park, J. & Sohn, K. Context-Aware Emotion Recognition Networks. in 2019 IEEE/CVF International Conference on Computer Vision (ICCV) 10142–10151, https://doi.org/10.1109/ICCV.2019.01024 (IEEE, Seoul, Korea (South), 2019).

Barrouillet, P., Bernardin, S. & Camos, V. Time Constraints and Resource Sharing in Adults’ Working Memory Spans. J. Exp. Psychol. Gen. 133, 83–100 (2004).

Schneider, W. X. Selective visual processing across competition episodes: a theory of task-driven visual attention and working memory. Philos. Trans. R. Soc. B Biol. Sci. 368, 20130060 (2013).

Schultz, J. & Pilz, K. S. Natural facial motion enhances cortical responses to faces. Exp. Brain Res. 194, 465–475 (2009).

Sonkusare, S., Breakspear, M. & Guo, C. Naturalistic Stimuli in Neuroscience: Critically Acclaimed. Trends Cogn. Sci. 23, 699–714 (2019).

Benson, N. C. et al. The Human Connectome Project 7 Tesla retinotopy dataset: Description and population receptive field analysis. J. Vis. 18, 23 (2018).

Stigliani, A., Weiner, K. S. & Grill-Spector, K. Temporal Processing Capacity in High-Level Visual Cortex Is Domain Specific. J. Neurosci. 35, 12412–12424 (2015).

Allen, E. J. et al. A massive 7T fMRI dataset to bridge cognitive neuroscience and artificial intelligence. Nat. Neurosci. 25, 116–126 (2022).

Kay, K. et al. A critical assessment of data quality and venous effects in sub-millimeter fMRI. NeuroImage 189, 847–869 (2019).

Kang, X., Yund, E. W., Herron, T. J. & Woods, D. L. Improving the resolution of functional brain imaging: analyzing functional data in anatomical space. Magn. Reson. Imaging 25, 1070–1078 (2007).

Kay, K. N. & Yeatman, J. D. Bottom-up and top-down computations in word-and face- selective cortex. eLife 6, e22341 (2017).

Prince, J. S. et al. Improving the accuracy of single-trial fMRI response estimates using GLMsingle. eLife 11, e77599 (2022).

Kay, K. N., Winawer, J., Mezer, A. & Wandell, B. A. Compressive spatial summation in human visual cortex. J. Neurophysiol. 110, 481–494 (2013).

Charest, I., Kriegeskorte, N. & Kay, K. N. GLMdenoise improves multivariate pattern analysis of fMRI data. NeuroImage 183, 606–616 (2018).

Kay, K. N., Rokem, A., Winawer, J., Dougherty, R. F. & Wandell, B. A. GLMdenoise: a fast, automated technique for denoising task-based fMRI data. Front. Neurosci. 7, (2013).

Gorgolewski, K. J. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci. DATA. 3, 160044 (2016).

Pan, C. et al. An fMRI dataset in response to large-scale short natural dynamic facial expression videos. OpenNeuro https://doi.org/10.18112/openneuro.ds005047.v1.0.7 (2024).

Garcia-Dias, R. et al. Neuroharmony: A new tool for harmonizing volumetric MRI data from unseen scanners. NeuroImage 220, 117127 (2020).

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L. & Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. NeuroImage 59, 2142–2154 (2012).

Welvaert, M. & Rosseel, Y. On the Definition of Signal-To-Noise Ratio and Contrast-To- Noise Ratio for fMRI Data. PLoS ONE 8, e77089 (2013).

Nastase, S. A., Halchenko, Y. O., Connolly, A. C., Gobbini, M. I. & Haxby, J. V. Neural Responses to Naturalistic Clips of Behaving Animals in Two Different Task Contexts. Front. Neurosci. 12, 316 (2018).

Sengupta, A. A studyforrest extension, retinotopic mapping and localization of higher visual areas. Sci. DATA. 3, 160093 (2016).

David, S. V. & Gallant, J. L. Predicting neuronal responses during natural vision. Netw. Comput. Neural Syst. 16, 239–260 (2005).

Sahani, M. & Linden, J. F. How Linear are Auditory Cortical Responses? NIPS. 8, 125–132 (2002).

Lage-Castellanos, A., Valente, G., Formisano, E. & De Martino, F. Methods for computing the maximum performance of computational models of fMRI responses. PLOS Comput. Biol. 15, e1006397 (2019).

Yamins, D. L. K. et al. Performance-optimized hierarchical models predict neural responses in higher visual cortex. Proc. Natl. Acad. Sci. 111, 8619–8624 (2014).

Güçlü, U. & van Gerven, M. A. Deep neural networks reveal a gradient in the complexity of neural representations across the ventral stream. J. Neurosci. 35, 10005–10014 (2015).

Lindsay, G. W. Convolutional Neural Networks as a Model of the Visual System: Past, Present, and Future. J. Cogn. Neurosci. 33, 2017–2031 (2021).

Wang, L. et al. VideoMAE V2: Scaling Video Masked Autoencoders with Dual Masking. in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 14549– 14560, https://doi.org/10.1109/CVPR52729.2023.01398 (IEEE, Vancouver, BC, Canada, 2023).

Wen, H. et al. Neural Encoding and Decoding with Deep Learning for Dynamic Natural Vision. Cereb. Cortex 28, 4136–4160 (2018).

Wang, Y. et al. InternVideo: General Video Foundation Models via Generative and Discriminative Learning. Preprint at http://arxiv.org/abs/2212.03191 (2022).

Kriegeskorte, N., Mur, M. & Bandettini, P. Representational similarity analysis – connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2, 1662–5137 (2008).

Chen, Y. et al. Cortical surface-based searchlight decoding. NeuroImage 56, 582–592 (2011).

Acknowledgements

This research was supported by two Key Programs of National Natural Science Foundation of China (62106285,82071884) and Major Projects of Technological Innovation 2030 of China (2022ZD0208500).

Author information

Authors and Affiliations

Contributions

B.Y. conceived of the idea and supervised the research. P.C., C.Z., B.L., L.T., L.W., S.M., L.C., Z.Y. contributed to discussion, experimental design,execution,and manuscript structure. P.C., B.L. visualizes experimental results. P.C., C.Z., B.L., B.Y. wrote the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, P., Zhang, C., Li, B. et al. An fMRI dataset in response to large-scale short natural dynamic facial expression videos. Sci Data 11, 1247 (2024). https://doi.org/10.1038/s41597-024-04088-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-024-04088-0