Abstract

Ultrawidefield fundus (UWF) images have a wide imaging range (200° of the retinal region), which offers the opportunity to show more information for ophthalmic diseases. Image quality assessment (IQA) is a prerequisite for applying UWF imaging and is crucial for developing artificial intelligence-driven diagnosis and screening systems. Most image quality systems have been applied to the assessment of natural images, but whether these systems are suitable for evaluating UWF image quality remains debatable. Additionally, existing IQA datasets only provide photographs of diabetic retinopathy (DR) patients and quality evaluation results applicable to natural images, neglecting patients’ clinical information. To address these issues, we established a real-world clinical practice ultrawidefield fundus image dataset, with 700 high-resolution UWF images and corresponding clinical information from patients with six common fundus diseases and from healthy volunteers. The image quality was annotated by three ophthalmologists based on the field of view, illumination, artifacts, contrast, and overall quality. This dataset illustrates the distribution of UWF image quality across diseases in clinical practice, offering a foundation for developing effective IQA systems.

Background and Summary

Compared with traditional fundus imaging systems, the ultrawidefield fundus (UWF) imaging system, a revolutionary technology that allows visualization of up to 200 degrees of the fundus, captures more of the peripheral retina. This method is a noncontact approach that does not require pupil dilation1. By utilizing the wide field of view of UWF imaging systems, researchers and practitioners can better identify and screen fundus diseases2. Moreover, with the development of artificial intelligence (AI), many deep learning models/algorithms to reduce the burden on clinicians have been proposed3. For example, deep learning models have been successfully applied to the automatic detection and classification of retinal diseases using UWF images4. These models are also capable of performing complex tasks such as segmenting anatomical structures, quantifying disease progression, and predicting treatment outcomes5,6,7. Image quality may affect the accuracy of these models/algorithms, and good image quality is equally important in clinical practice. Poor contrast, poor focus, or modality-specific artifacts such as uneven illumination or shadows can impair clinical interpretation or even make it impossible8. Therefore, evaluating image quality is considered an integral step in the application of these models/algorithms9. However, manual quality evaluation can be time-consuming for ophthalmologists.

Automated image quality assessment (IQA) models/algorithms that can help optimize image acquisition and improve the accuracy of automated computational image analysis have been proposed10,11. However, since most of these models are applied to natural images, whether they are suitable for evaluating the quality of clinical UWF images of fundus diseases remains debatable. Moreover, the development of automated systems, especially UWF IQA systems, faces difficulties. First, medical images are subject to strict privacy protection, making them difficult to obtain. Second, fundus diseases such as retinal vitreous hemorrhage, retinal detachment, vitreous opacity and degeneration can influence image quality, but these images can still be used for clinical diagnosis, a decision that should be made by ophthalmologists.

The training of automated IQA systems on UWF images requires large datasets with IQA results from ophthalmologists. Several researchers have designed automated IQA AI systems specifically for UWF images based on the IQA results from ophthalmologists. However, none of the datasets used have been publicly released. Currently, there are only a few UWF image datasets, including PRIME-FP2012, DeepDRiD13, MSHF14, and the dataset established by Liu et al.15. The details of these datasets are summarized in Table 1. However, these datasets still have limitations. First, these datasets mainly contain UWF images from patients with diabetic retinopathy (DR). Although peripheral lesions on UWF images from DR patients have received considerable attention, DR lesions alone do not reflect the diversity of clinical fundus diseases, so these datasets are not suitable for developing AI systems for clinical practice. Second, most of the datasets have not undergone IQA, or the IQA system used is based on traditional fundus images. The modality of UWF images is slightly different from that of traditional fundus images. Given the ability of UWF imaging to visualize a wide range of the retina, it is important to evaluate the field of view of UWF images. Third, all of the datasets lack clinical information such as age, sex, and diagnosis. These demographic data are vital for analyzing image quality and offer a baseline for building associative models to determine whether image quality is affected by age, sex, or disease.

In this study, we proposed an ultrawidefield fundus image dataset consisting of 700 images of patients with six common fundus diseases (i.e., diabetic retinopathy (DR), age-related macular degeneration (AMD), retinal vein occlusion (RVO), pathological myopia (PM), uveitis, and retinal detachment (RD)) and healthy volunteers, with corresponding clinical data. All the images had a high resolution of 3900 × 3072, and the image quality was evaluated by three ophthalmologists in terms of field of view, illumination, contrast, artifacts, and overall quality. We believe that this multidisease UWF image dataset will support real-world clinical practice by providing an excellent clinical application of image quality assessment in the field of ophthalmology.

Methods

Data collection

Data from patients with six common fundus diseases, namely, diabetic retinopathy (DR), age-related macular degeneration (AMD), retinal vein occlusion (RVO), pathological myopia (PM), uveitis, and retinal detachment (RD), as well as healthy volunteers, were retrospectively collected from medical records, and the corresponding clinical information, including diagnosis, age, sex, and laterality, was extracted. Only patients with a clearly ascertained diagnosis were included. The diagnosis was confirmed by one experienced ophthalmologic specialist, and the criteria complied with the updated American Academy of Ophthalmology Clinical Practice Guidelines16,17,18,19,20. A total of 700 UWF images taken between January 2023 and January 2024 using an Optos 200Tx system (Optos, Dunfermline, United Kingdom) were obtained from Zhejiang Provincial People’s Hospital (Fig. 1). All the images covered a field of 200 degrees of the retina with a high resolution of 3900 × 3072. The details of the image parameters can be found in Table 1. All the images and clinical data were deidentified. This study protocol was reviewed and approved by the Institutional Review Board of Zhejiang Provincial People’s Hospital (KY 2024039). The study adhered to the tenets of the Declaration of Helsinki. Written consent was provided by every participant before the examinations. All participants were informed that the images would be used for research purposes, and they explicitly authorized the publication of any images or data collected during the study.

Workflow for establishing the dataset. (a) Data collection. The medical records of patients with six common ophthalmic diseases and healthy volunteers at Zhejiang Provincial People’s Hospital were retrospectively retrieved, and their clinical information and 700 UWF images were collected. (b) Annotation preparation. Three ophthalmologists were trained in the annotation process and tested. (c) Image quality assessment. Three ophthalmologists independently annotated 700 images on the basis of field of view, illumination, contrast, artifacts, and overall quality. The dataset comprised 100 images of patients who were diagnosed with DR, AMD, RVO, PM, uveitis, or RD and 100 images from healthy volunteers.

Quality evaluation

An IQA annotation team for UWF images was established with three junior ophthalmologists (for annotation) and one expert ophthalmologist (for annotation guidance and summary). Binary IQA annotations were generated: each image was classified as good or poor quality in the five categories (field of view, illumination, contrast, artifacts, and overall quality) (Table 2 and Fig. 2).

Examples of UWF images of low and high quality. (a) An image with a poor field of view from an AMD patient. (b) An image with poor illumination from a PM patient. (c) An image with low contrast and illumination from an RD patient. (d) An image with artifacts of light spot and cloudiness in the vitreous body from a uveitis patient. (e) An image with artifacts of vitreous hemorrhage and proliferation from a DR patient. (f) An image of good overall quality from a healthy volunteer.

The expert ophthalmologist first introduced the IQA standards and provided training to three junior ophthalmologists using twenty UWF images from an educational set. A test including twenty UWF images was subsequently performed to analyze the intra- and interobserver agreement among the three junior ophthalmologists. Each junior ophthalmologist made two annotations of the twenty images. The second annotation took place three days after the first annotation. The annotation results were used to analyze and calculate the kappa coefficients of the five quality categories. This process was repeated until the expert ophthalmologist was satisfied with the results of the annotations. Finally, the three junior ophthalmologists independently annotated the 700 UWF images on the basis of field of view, illumination, contrast, artifacts, and overall quality. All annotations were processed on monitors with the same parameters (a 21.5-inch monitor with a resolution of 3072 × 1920, a pixel pitch of 0.275 mm, and a brightness of 300 cd/m2). The final decision was made by an expert ophthalmologist, who reviewed the annotations made by all three junior ophthalmologists. The details of the workflow of the IQA annotation process are shown in Fig. 1.
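The intraobserver check described above (two annotation rounds of the same twenty images by one annotator) can be illustrated with a kappa computation. The following is a minimal sketch that assumes Cohen's kappa was the statistic used for two rounds of binary labels; the text does not state which kappa variant was applied at this stage, and the label lists below are hypothetical.

```python
from collections import Counter


def cohen_kappa(first_round, second_round):
    """Cohen's kappa for two equally long sequences of categorical labels."""
    if len(first_round) != len(second_round) or not first_round:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(first_round)
    # Observed agreement: share of images given the same label in both rounds.
    p_observed = sum(a == b for a, b in zip(first_round, second_round)) / n
    # Chance agreement, from the marginal label frequencies of each round.
    counts_1 = Counter(first_round)
    counts_2 = Counter(second_round)
    p_chance = sum(counts_1[label] * counts_2[label] for label in counts_1) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both rounds used one identical label throughout
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical labels for twenty test images (1 = good, 0 = poor).
round_1 = [1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1]
round_2 = [1, 1, 0, 1, 1, 1, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1, 1]
print(round(cohen_kappa(round_1, round_2), 3))
```

In practice the same function would be applied once per annotator and per quality category.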

Data Records

The UWF IQA dataset has been deposited as a zipped file on Figshare21. Upon extraction, the contents are organized into one folder (named “Original UWF Images”) and three Microsoft Excel spreadsheets (“Ground Truth.xlsx”, “Demographics of the participants.xlsx”, and “Individual Quality Assessment.xlsx”). The “Original UWF Images” folder contains seven subfolders, each holding the 100 UWF images of one participant type: DR, AMD, RVO, PM, uveitis, RD, or healthy volunteers. The images are named “XXX-n.jpg”, where “XXX” refers to the participant type and “n” is the index of the image within that type. The file “Ground Truth.xlsx” has six columns. The first column shows the image ID. The subsequent columns show the ground truth of the image quality assessment, namely “Field of View”, “Contrast”, “Illumination”, “Artifacts”, and “Overall Quality”. Each IQA result is either 0 (i.e., poor quality) or 1 (i.e., good quality). The file “Demographics of the participants.xlsx” has seven columns: the image ID, the patient ID, the eye ID, the eye category, the age of the patient, the sex of the patient, and the diagnosis of the patient. Finally, the file “Individual Quality Assessment.xlsx”, which holds the scores given to each image by the three annotators, is formatted in the same way as the file “Ground Truth.xlsx”.
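As an illustration of the layout described above, the sketch below parses an image name of the form “XXX-n.jpg” and validates one six-value row of “Ground Truth.xlsx”. It is a hedged example: the names `QualityRecord`, `parse_image_name`, and `make_record` are hypothetical, the exact spelling of the type prefixes and whether image IDs carry the “.jpg” extension are assumptions, and reading the spreadsheet itself (which needs a spreadsheet library) is not shown.

```python
import re
from dataclasses import dataclass

# Column order as listed for "Ground Truth.xlsx" (after the image ID column).
QUALITY_COLUMNS = ("Field of View", "Contrast", "Illumination",
                   "Artifacts", "Overall Quality")

# "XXX-n.jpg": participant type, a hyphen, an image index; extension optional
# here because the exact form of the image ID column is an assumption.
NAME_PATTERN = re.compile(
    r"^(?P<patient_type>.+)-(?P<index>\d+)(?:\.jpg)?$", re.IGNORECASE)


@dataclass
class QualityRecord:
    image_id: str
    patient_type: str
    index: int
    scores: dict  # column name -> 0 (poor) or 1 (good)


def parse_image_name(name):
    """Split an image name into (participant type, image index)."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected image name: {name!r}")
    return match.group("patient_type"), int(match.group("index"))


def make_record(row):
    """Build a record from one row: image ID followed by five 0/1 scores."""
    image_id, *raw_scores = row
    if len(raw_scores) != len(QUALITY_COLUMNS):
        raise ValueError("expected one image ID and five quality scores")
    scores = {}
    for column, value in zip(QUALITY_COLUMNS, raw_scores):
        value = int(value)
        if value not in (0, 1):
            raise ValueError(f"{column} must be 0 or 1, got {value}")
        scores[column] = value
    patient_type, index = parse_image_name(image_id)
    return QualityRecord(image_id, patient_type, index, scores)


# Hypothetical row, not taken from the dataset.
record = make_record(("DR-12.jpg", 1, 0, 1, 1, 0))
print(record.patient_type, record.index, record.scores["Contrast"])
```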

Technical Validation

Dataset characteristics

The dataset contained 700 UWF images and corresponding clinical information from patients with six common fundus diseases and healthy volunteers. All the images had a high resolution of 3900 × 3072 pixels. These images were collected from 689 eyes of 602 patients, including 307 females and 295 males. The average ages were 57.19 ± 11.41 years for the DR patients, 71.63 ± 9.63 years for the AMD patients, 64.01 ± 10.47 years for the RVO patients, 53.59 ± 14.38 years for the PM patients, 44.51 ± 16.35 years for the uveitis patients, 47.15 ± 17.68 years for the RD patients, and 36.45 ± 14.72 years for the healthy volunteers. All participants in the study were of Asian descent. Patients were categorized into distinct age groups according to the age classification criteria outlined by the World Health Organization (WHO). Specifically, the dataset included 19 images depicting teenagers under 18 years of age (2.71%), 192 images depicting adults aged 18 to 44 (27.43%), 190 images depicting middle-aged individuals aged 45 to 59 (27.14%), and 299 images depicting individuals over 60 years of age (42.71%). The distribution of images categorized by sex and age range is presented in Table 3.
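The age-group shares can be recomputed directly from the image counts reported in this section. The snippet below is a small arithmetic check; the dictionary keys and the helper name `percentage_shares` are illustrative labels, not identifiers from the dataset.

```python
# Image counts per age group, as reported in the text.
age_group_counts = {
    "under 18": 19,
    "18 to 44": 192,
    "45 to 59": 190,
    "over 60": 299,
}


def percentage_shares(counts):
    """Return each group's share of the total, in percent with two decimals."""
    total = sum(counts.values())
    return {group: round(100 * n / total, 2) for group, n in counts.items()}


total_images = sum(age_group_counts.values())
shares = percentage_shares(age_group_counts)
for group, share in shares.items():
    print(f"{group}: {age_group_counts[group]} of {total_images} images ({share:.2f}%)")
```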

Image quality distribution

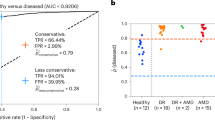

All the images were evaluated by three ophthalmologists according to five image quality perspectives, including field of view, illumination, artifacts, contrast, and overall quality. The details are shown in Table 4. There were 133 UWF images with a field of view of less than 70% of the fundus, accounting for 19% of images, while the proportion rose to 45% in the AMD patients (Fig. 3a). The reason for this distribution might be that AMD patients were generally elderly with eyelid laxity and could not cooperate well during image acquisition. There were 126 UWF images with uneven or insufficient illumination, accounting for 18%, whereas the proportion rose to 43% in the PM patients (Fig. 3b). The eye axial length in PM patients is longer and may cause much greater absorption and divergence of optical energy in the axial pathway. There were 186 UWF images with low contrast, accounting for 26.57%, while the proportion rose to 49% in the RD patients (Fig. 3c). RD patients usually cannot focus well on light, and the unevenness of the retina and vitreous hemorrhage may also explain this observation. There were 251 UWF images with artifacts, accounting for 35.86%, while the proportion rose to 47% in the uveitis patients (Fig. 3d). Uveitis is a general term for inflammation of the iris, ciliary body, and choroid tissue. Uveitis was generally comorbid with vitreous opacities, causing artifacts in the images. In total, 117 images were of poor overall quality. Healthy eyes tended to have better image quality from all five quality perspectives, and 97% of the images had good overall quality (Fig. 3e). This dataset included images from patients with six fundus diseases for which clinical image quality was evaluated from five perspectives that reflect the ophthalmic clinical reality.
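Per-group proportions of this kind can be tabulated from binary ground-truth scores combined with the participant type of each image. The sketch below shows one way to do so; the function name `poor_rate_by_group` and the four sample rows are hypothetical and do not reproduce dataset values.

```python
from collections import defaultdict


def poor_rate_by_group(rows, column):
    """Return {participant type: percentage of images scored 0 in `column`}.

    `rows` is an iterable of (participant_type, scores) pairs, where `scores`
    maps a quality column name to 0 (poor) or 1 (good).
    """
    totals = defaultdict(int)
    poor = defaultdict(int)
    for patient_type, scores in rows:
        totals[patient_type] += 1
        if scores[column] == 0:
            poor[patient_type] += 1
    return {group: 100.0 * poor[group] / totals[group] for group in totals}


# Tiny hypothetical sample for illustration only.
sample_rows = [
    ("AMD", {"Field of View": 0}),
    ("AMD", {"Field of View": 1}),
    ("healthy", {"Field of View": 1}),
    ("healthy", {"Field of View": 1}),
]
print(poor_rate_by_group(sample_rows, "Field of View"))
```

Applied to all 700 rows, the same function would be called once for each of the five quality columns.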

Distribution of image quality across different types of patients. (a) The distribution of “field of view”. The proportion of UWF images with poor fields of view is the largest in AMD patients. (b) The distribution of “illumination”. The proportion of UWF images with poor illumination is the largest in PM patients. (c) The distribution of “contrast”. The proportion of UWF images with poor contrast is the largest in RD patients. (d) The distribution of “artifacts”. The proportion of UWF images with artifacts is the largest in uveitis patients. (e) The distribution of “overall quality”. The proportion of UWF images with good overall quality is the largest among healthy volunteers.

Validation of the annotation

In this study, interannotator consistency for the five quality perspectives was evaluated using the Fleiss kappa coefficient. For field of view and illumination, the kappa coefficients were 0.735 and 0.830, respectively. For contrast and artifacts, the kappa coefficients were 0.828 and 0.761, respectively. Finally, the kappa coefficient was 0.940 for overall quality. These results showed substantial agreement between different annotators, indicating that the ultrawidefield fundus image dataset was reliable for clinical image quality assessment.
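For readers who wish to recompute such coefficients from “Individual Quality Assessment.xlsx”, the following is a minimal sketch of the standard Fleiss kappa formula for a fixed number of raters per image. It is not the authors' code (none was released), and the five example ratings are hypothetical.

```python
def fleiss_kappa(ratings, categories=(0, 1)):
    """Fleiss' kappa for `ratings`, a list of per-image label lists.

    Every inner list must hold one label per rater, with the same number of
    raters (at least two) for every image.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2:
        raise ValueError("at least two raters are required")
    category_totals = {c: 0 for c in categories}
    agreement_sum = 0.0
    for labels in ratings:
        if len(labels) != n_raters:
            raise ValueError("every image needs the same number of ratings")
        counts = {c: list(labels).count(c) for c in categories}
        if sum(counts.values()) != n_raters:
            raise ValueError("found a label outside the given categories")
        for c in categories:
            category_totals[c] += counts[c]
        # Per-image agreement: share of rater pairs that chose the same label.
        agreement_sum += (sum(v * v for v in counts.values()) - n_raters) / (
            n_raters * (n_raters - 1))
    p_observed = agreement_sum / n_items
    # Chance agreement from the overall proportion of each label.
    p_chance = sum((t / (n_items * n_raters)) ** 2
                   for t in category_totals.values())
    if p_chance == 1.0:
        return 1.0  # all raters used one single label for all images
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical scores from three annotators for five images (1 = good, 0 = poor).
example = [[1, 1, 1], [1, 1, 0], [0, 0, 0], [1, 1, 1], [0, 0, 1]]
print(round(fleiss_kappa(example), 3))
```

The function would be called once per quality category, with one inner list of three scores per image.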

Usage Notes

One of the great potential uses of this dataset is that it could be used to develop automatic IQA systems for UWF images suitable for clinical practice. The dataset is accessible at the link mentioned above. The dataset can be split according to the user’s research purpose. We highly encourage users to cite this paper and acknowledge the contribution of this dataset in their studies. We hope that this dataset contributes to the development of automatic IQA systems for UWF images.
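One possible way to split the dataset is sketched below. Because the 700 images come from 602 patients, the sketch keeps all images of a patient on the same side of the split and samples patients separately within each diagnosis. This is only one design choice among many; the function name `split_by_patient`, the 20% test fraction, and the synthetic records are assumptions for illustration.

```python
import random
from collections import defaultdict


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Split (image_id, patient_id, diagnosis) records into train/test lists.

    Patients are sampled within each diagnosis, and every image of a patient
    ends up in the same subset.
    """
    patient_diagnosis = {}
    images_by_patient = defaultdict(list)
    for image_id, patient_id, diagnosis in records:
        # A patient is filed under the first diagnosis seen for them.
        patient_diagnosis.setdefault(patient_id, diagnosis)
        images_by_patient[patient_id].append(image_id)

    patients_by_diagnosis = defaultdict(list)
    for patient_id, diagnosis in patient_diagnosis.items():
        patients_by_diagnosis[diagnosis].append(patient_id)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, test = [], []
    for diagnosis in sorted(patients_by_diagnosis):
        patients = sorted(patients_by_diagnosis[diagnosis])
        rng.shuffle(patients)
        n_test = round(len(patients) * test_fraction)
        for position, patient_id in enumerate(patients):
            target = test if position < n_test else train
            target.extend(images_by_patient[patient_id])
    return train, test


# Synthetic records: ten patients per diagnosis, some with two images.
demo_records = []
for diagnosis in ("DR", "healthy"):
    for number in range(10):
        patient_id = f"{diagnosis}-P{number}"
        demo_records.append((f"{patient_id}-a", patient_id, diagnosis))
        if number % 2 == 0:
            demo_records.append((f"{patient_id}-b", patient_id, diagnosis))

train_ids, test_ids = split_by_patient(demo_records)
print(len(train_ids), len(test_ids))
```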

Code availability

No novel code was used in the construction of the dataset.

References

Cai, S. & Liu, T. Y. A. The Role of Ultra-Widefield Fundus Imaging and Fluorescein Angiography in Diagnosis and Treatment of Diabetic Retinopathy. Curr Diab Rep 21, 30, https://doi.org/10.1007/s11892-021-01398-0 (2021).

Oh, B.-L. et al. Role of Ultra-widefield Imaging in the evaluation of Long-term change of highly myopic fundus. Acta Ophthalmol 100, e977–e985, https://doi.org/10.1111/aos.15009 (2022).

Ting, D. S. W. et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol 103, 167–175, https://doi.org/10.1136/bjophthalmol-2018-313173 (2019).

Sun, G. et al. Deep Learning for the Detection of Multiple Fundus Diseases Using Ultra-widefield Images. Ophthalmol Ther 12, 895–907, https://doi.org/10.1007/s40123-022-00627-3 (2023).

Nunez do Rio, J. M. et al. Deep Learning-Based Segmentation and Quantification of Retinal Capillary Non-Perfusion on Ultra-Wide-Field Retinal Fluorescein Angiography. J Clin Med 9, https://doi.org/10.3390/jcm9082537 (2020).

Wang, Y. et al. Development and validation of a deep learning model to predict axial length from ultra-wide field images. Eye (Lond) 38, 1296–1300, https://doi.org/10.1038/s41433-023-02885-2 (2024).

Zhao, X. et al. Deep-Learning-Based Hemoglobin Concentration Prediction and Anemia Screening Using Ultra-Wide Field Fundus Images. Front Cell Dev Biol 10, 888268, https://doi.org/10.3389/fcell.2022.888268 (2022).

König, M. et al. Quality assessment of colour fundus and fluorescein angiography images using deep learning. Br J Ophthalmol 108, 98–104, https://doi.org/10.1136/bjo-2022-321963 (2023).

Al-Sheikh, M., Ghasemi Falavarjani, K., Akil, H. & Sadda, S. R. Impact of image quality on OCT angiography based quantitative measurements. Int J Retina Vitreous 3, 13, https://doi.org/10.1186/s40942-017-0068-9 (2017).

Madhusudana, P. C., Birkbeck, N., Wang, Y., Adsumilli, B. & Bovik, A. C. Image Quality Assessment Using Contrastive Learning. IEEE Trans Image Process 31, 4149–4161, https://doi.org/10.1109/TIP.2022.3181496 (2022).

Si, J., Huang, B., Yang, H., Lin, W. & Pan, Z. A no-Reference Stereoscopic Image Quality Assessment Network Based on Binocular Interaction and Fusion Mechanisms. IEEE Trans Image Process 31, 3066–3080, https://doi.org/10.1109/TIP.2022.3164537 (2022).

Ding, L., Kuriyan, A. E., Ramchandran, R. S., Wykoff, C. C. & Sharma, G. Weakly-Supervised Vessel Detection in Ultra-Widefield Fundus Photography via Iterative Multi-Modal Registration and Learning. IEEE Trans Med Imaging 40, 2748–2758, https://doi.org/10.1109/TMI.2020.3027665 (2021).

Liu, R. et al. DeepDRiD: Diabetic Retinopathy-Grading and Image Quality Estimation Challenge. Patterns (N Y) 3, 100512, https://doi.org/10.1016/j.patter.2022.100512 (2022).

Jin, K. et al. MSHF: A Multi-Source Heterogeneous Fundus (MSHF) Dataset for Image Quality Assessment. Sci Data 10, 286, https://doi.org/10.1038/s41597-023-02188-x (2023).

Liu, H., Teng, L., Fan, L., Sun, Y. & Li, H. A new ultra-wide-field fundus dataset to diabetic retinopathy grading using hybrid preprocessing methods. Comput Biol Med 157, 106750, https://doi.org/10.1016/j.compbiomed.2023.106750 (2023).

Flaxel, C. J. et al. Retinal Vein Occlusions Preferred Practice Pattern®. Ophthalmology 127, P288–P320, https://doi.org/10.1016/j.ophtha.2019.09.029 (2020).

Jacobs, D. S. et al. Refractive Errors Preferred Practice Pattern®. Ophthalmology 130, https://doi.org/10.1016/j.ophtha.2022.10.031 (2023).

Flaxel, C. J. et al. Posterior Vitreous Detachment, Retinal Breaks, and Lattice Degeneration Preferred Practice Pattern®. Ophthalmology 127, P146–P181, https://doi.org/10.1016/j.ophtha.2019.09.027 (2020).

Miller, K. M. et al. Cataract in the Adult Eye Preferred Practice Pattern. Ophthalmology 129, https://doi.org/10.1016/j.ophtha.2021.10.006 (2022).

Dick, A. D. et al. Guidance on Noncorticosteroid Systemic Immunomodulatory Therapy in Noninfectious Uveitis: Fundamentals Of Care for UveitiS (FOCUS) Initiative. Ophthalmology 125, 757–773, https://doi.org/10.1016/j.ophtha.2017.11.017 (2018).

He, S.-C. et al. Open Ultra-Widefield Fundus Images Dataset with Disease Diagnosis and Clinical Image Quality Assessment. Figshare. https://doi.org/10.6084/m9.figshare.26936446 (2024).

Acknowledgements

The authors acknowledge Science and Technology Fund Project of Guizhou Provincial Health Commission (Research on 3D quantitative evaluation system of artificial intelligence for diabetes macular edema based on OCT), Project on Scientific and Technological Research of Traditional Chinese Medicine and Ethnic Medicine in Guizhou Province (Research on traditional Chinese medicine visual diagnosis assisted by deep learning technology based on anterior segment photography), and Zhejiang Medical and Health Science and Technology Plan Project (2023KY915).

Author information

Contributions

S.L.J. and Y.X. had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. Study concept and design: S.L.J. and Y.X. Acquisition, analysis, or interpretation of data: H.S.C., Z.X.X., Y.S.C., G.H.Y., S.Y.J., Z.X.P., W.J. Drafting the manuscript: H.S.C., Y.X. Critical revision of the manuscript for important intellectual content: S.L.J., X.W.B. Study supervision: S.L.J., Y.X.

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

He, S., Ye, X., Xie, W. et al. Open ultrawidefield fundus image dataset with disease diagnosis and clinical image quality assessment. Sci Data 11, 1251 (2024). https://doi.org/10.1038/s41597-024-04113-2

DOI: https://doi.org/10.1038/s41597-024-04113-2