Abstract

Oral diseases affect nearly 3.5 billion people, and medical resources are limited, which makes access to oral health services nontrivial. Imaging-based machine learning technology is one of the most promising technologies to improve oral medical services and reduce patient costs. The development of machine learning technology requires publicly accessible datasets. However, previous public dental datasets have several limitations: a small volume of computed tomography (CT) images, a lack of multimodal data, and a lack of complexity and diversity of data. These issues are detrimental to the development of the field of dentistry. Thus, to solve these problems, this paper introduces a new dental dataset that contains 169 patients, three commonly used dental image modalities, and images of various health conditions of the oral cavity. The proposed dataset has good potential to facilitate research on oral medical services, such as reconstructing the 3D structure of assisting clinicians in diagnosis and treatment, image translation, and image segmentation.

Similar content being viewed by others

Background & Summary

According to the Global Burden of Disease Study in 2019 (https://ghdx.healthdata.org/gbd-results-tool), oral diseases affect nearly 3.5 billion people, posing a large health burden for society. The World Health Organization (WHO) report also states that oral care is expensive and usually outside the components of universal health coverage. People, particularly in low- and middle-income countries, cannot afford such services (https://www.who.int/team/noncommunicable-diseases/global-status-report-on-oral-health-2022). As there are vast numbers of sufferers and a shortage of medical resources, accurate, inexpensive, and accessible methods for diagnosing and treating disease are highly important for three purposes: (1) to improve the dental health care service; (2) to reduce patient costs; (3) to cover more patients, particularly those in remote areas. However, traditional dental diagnosis and treatment methods (e.g., dentists taking X-rays of patients and then obtaining diagnosis and treatment strategies manually)1 have difficulty meeting the requirements of high efficiency, low cost, and accessibility. As one of the most promising technologies for improving medical services and reducing health burdens, imaging-based machine learning technology has been widely introduced into dentistry for assisting clinicians in diagnosis and treatment, image translation, and image segmentation, etc2,3,4,5,6,7,8,9,10.

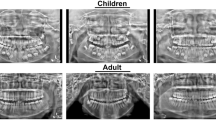

Based on the advantages of radiographs in bone imaging, as shown in Fig. 1, three radiographs have become the most common method to assist dentists in obtaining a diagnosis and developing treatment strategies. First, CT is a 3D image that provides high-resolution anatomical information on the patient’s case11 and is most commonly used for implant planning because it provides accurate information on the height and width of the jaw and the position of important structures12,13. However, the price of CT machines produced in European and American countries is at least 74,350 dollars, and the radiation is 58.9 − 1025.4μSv14. Second, extraoral radiographs (e.g., panoramic radiographs (PaX-ray)) show both the mandible and the surrounding oral and maxillofacial structures, including the temporomandibular joints12, and the radiation is 5.5 − 22.0μSv14. However, because it is a two-dimensional image, it cannot provide accurate measurement information of the jaw. The inherent magnification and overlapping of teeth in the technique varies depending on the machine12. Third, intraoral X-rays such as periapical radiographs (PeX-ray) provide information on the entire tooth from the crown to the root and are often used to rule out lesions at the apex of the tooth, which may occur when the tooth has become nonvital12, and the price of intraoral X-rays is much lower than the above two radiographs, and the radiation is < 5μSv14. However, a PeX-ray can only provide bone information within 2-3 mm around the apex of the tooth and is limited to teeth in one arch12.

The multimodal dental dataset is expected to facilitate advancements in fields such as assisting doctors in diagnosis, image translation, and image segmentation. CBCT images, panoramic radiographs, and periapical radiographs are the three most commonly used types of clinical dental imaging. Researchers can leverage this image data to train models that assist doctors in diagnosis and treatment, including the diagnosis of oral diseases15,16, automatic measurements in orthodontics17,18, and preoperative planning for dental implants etc19,20. The dataset provides a CSV file indicating whether CBCT files contain dental implants, which can be used for implant detection tasks in dentistry. However, it lacks specific disease annotations. Researchers can annotate the dataset according to their tasks, thereby facilitating model training and evaluation.

One of the common tasks in image translation is to convert images from one domain to another. According to the aforementioned report by the WHO, three-quarters of the world’s population threatened by oral diseases reside in low- and middle-income regions. Compared to CBCT, panoramic and periapical radiographs are much cheaper and expose patients to much less radiation. If it were possible to reconstruct CT scans from panoramic or a small number of periapical radiographs, it would significantly reduce patients’ radiation exposure and costs, which is particularly important for low- and middle-income populations. Research on reconstructing CT scans from panoramic images already exists21, and the dataset containing data from different modalities can further advance this task.

Similarly, the multimodal oral dataset supports segmentation tasks for teeth and alveolar bone22,23. While segmentation predominantly occurs on oral CT datasets, publicly available CBCT datasets are scarce. Our dataset consists of 329 CBCT data from 169 patients, addressing this issue and empowering researchers to effectively explore segmentation tasks.

Despite the high potential of imaging-based machine learning in contributing to dentistry research and clinical usage, oral image datasets are limited to machine learning research. We surveyed all studies mentioned in three recent overviews of dentistry, which involve 74 works, and only 2 studies were based on publicly available oral datasets7,24,25. These two datasets are the Tufts Dental Database (Panetta et al., 2022)26 and the Virtual Skeleton Database (Kistler et al., 2013)27. The privateness of datasets prevents third parties from objectively evaluating and exploring a study, which is detrimental to the development of the field of dentistry. Conversely, to our knowledge, there are five publicly available datasets, as shown in Table 1. However, current publicly available oral datasets also have several limitations.

-

First, the limited number of cases in available datasets, especially CT data, poses a challenge to the development of data-driven deep learning. As shown in Table 1, only one CT dataset is available among the majority of public datasets, which mainly consist of PaX-ray. In addition, among the 74 studies we surveyed, only 13 (17.6%) used CT data from private datasets. Furthermore, among those studies that included more than 100 patients, only 5 (6.7%) made use of CT data7,24,25.

-

Second, the absence of paired data for different modalities in these datasets makes it impossible to compare techniques across modalities. Furthermore, these datasets do not support the development of multi-scenario applications, which refer to applications that must be used in various scenarios due to different medical or other conditions, each requiring data from different modalities. As Table 1 shows, no publicly available datasets currently contain data for all the modalities mentioned above.

-

Third, current public datasets lack diversity and complexity and are often biased towards overhealth and overdisease28, which renders them unable to accurately represent the real clinical setting. In addition, models trained on datasets with these flaws suffer from data drift, resulting in a good performance during training but poor performance during a real deployment. Ultimately, techniques developed based on these datasets are difficult to implement in actual clinical settings.

Based on the above considerations, we present a publicly accessible multimodal dental dataset29 that is useful for machine learning research and clinic services. First, the dataset includes 329 CBCT images. All CBCT image data were collected from 169 patients using Smart3D-X (Beijing Langshi Instrument Co., Ltd., Beijing, China) (Fig. 3a). A total of 67 patients had more than one CBCT image taken at different times. Second, this dataset has the three most common modalities of data: CBCT images, panoramic radiographs, and periapical radiographs. The periapical radiograph is generated from the CBCT using cxr-ct (https://github.com/KendallPark/cxr-ct)(Fig. 3a). In this dataset29, 188 CBCT images have paired periapical radiographs. All panoramic radiographs have paired CBCT images and periapical radiographs. Finally, to keep the characteristics of the real clinical setting (such as variety), the dataset contains various types of patients (e.g., the entire upper jaw has no teeth, all dentures, irregular teeth, and implanted teeth), as shown in Fig. 2, encouraging other researchers in the field to use it to develop and test their methods of assisting clinicians in diagnosis and treatment, image translation, and image segmentation, etc.

Methods

Ethics statement

This research has received approval from the Ethics Committee of Guilin Medical University (Approval No: GLMC20230502). The approved content encompasses the collection of imaging data, reconstruction of patients’ oral three-dimensional models, and sharing of imaging data. Within this dataset, all personally identifiable information, except for the patient’s gender and age, has been either removed or regenerated to align with U.S. HIPAA regulations. Moreover, the dataset is exclusively restricted for legitimate scientific research purposes. Additionally, informed consent has been obtained from the patients.

Patient characteristics

Considering the potential hazards of obtaining radiological images, this study did not design a prospective experiment to recruit volunteers for unnecessary radiological imaging examinations to obtain data but used existing patient data. We collected data from all adult patients who visited dental hospitals from 2021 to 2022. After excluding data with quality issues, attempts were made to obtain informed consent from the users, ultimately obtaining informed consent from 169 patients, as shown in Table 2. Eight patients simultaneously had data for three different modalities.

Data collection

The dataset29 contains 329 volumetric oral cavity CBCT scans, encompassing data from 169 patients, along with 8 panoramic radiographs, each corresponding to a different patient. Additionally, there are 16,203 periapical radiographs available, with three different angle views for each tooth, totaling 5,401 teeth, corresponding to 188 CBCT files.

CBCT is a variation of traditional CT that uses a cone-shaped X-ray beam to capture the data of the oral cavity and creates a 3D representation inside the oral cavity30,31. Compared to traditional CT, CBCT has many advantages, such as low cost, easy accessibility, and low radiation exposure, and it has been widely used in the field of dentistry32. All CBCT images in the dataset are from a CBCT machine that uses a two-dimensional flat panel detector to collect object cone beam ray projection data and a large diameter cone X-ray beam for scanning and performs 180°–360° synchronous rotation of the patient’s head on the plane for the acquisition of volumetric image data of the entire scanned area33 (Fig. 3a). All images are reconstructed using the Filted Back-Projection (FBP) reconstruction method, and the T-MAR artifact correction function is used to automatically identify high-density substances in the mouth and remove artifacts by deep learning (Fig. 3b). Among the 329 medical records we collected, the output size of 327 images is set to 640 × 640, these images’ slice thickness is 0.25 mm, and the pixel spacing is 0.25 mm × 0.25mm. The output size of 2 images is set to 550 × 550, the slice thickness of these images is 0.15 mm, and the pixel spacing is 0.15 mm × 0.15 mm. All images are saved in the Digital Imaging and Communications in Medicine (DICOM) format34.

A panoramic radiograph also uses a cone-shaped X-ray beam to capture the data of the oral cavity and creates a single flat 2D image of the curved structure of the entire mouth (Fig. 3a). Compared to traditional CBCT, the panoramic radiograph only generates approximately 1/40 radiation but lacks spatial structure information. All panoramic radiograph images in the dataset are obtained from the CBCT machine using the principles of narrow slot and circular orbital tomography principles. The machine rotates 180° around the patient for data acquisition. The output size of the images is set to 1468 × 2904, the thickness of these images is 0.075 mm, and the pixel spacing is 0.075 mm × 0.075 mm.

A periapical radiograph is typically used by the X-ray beam to capture the data of the oral cavity and creates a 2D image of the teeth. Compared to the other radiographs mentioned above, the periapical radiograph only focuses on a small part of the oral cavity (usually covering 3-4 teeth) through the built-in film or intraoral X-ray sensors, generating little radiation. In the real clinical setting, periapical radiograph images are obtained from a portable handheld X-ray generator and the built-in film (the size of the film usually contains 40 mm × 30 mm). However, it is nontrivial to collect many periapical radiograph images, particularly paired CBCT, panoramic, and periapical radiographs. First, taking radiographs multiple times can cause patients to receive unnecessary radiation doses. Second, although obtaining dental films is simple, to obtain complete oral information, ensure the complexity and diversity of data, and meet the needs of developing machine learning technology, 10-30 data collections are required. Finally, in current dental hospitals, the built-in film of a patient is usually handed over to the patient and is not stored as data in the hospital.

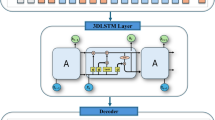

Considering that a CT image is obtained by a rotating X-ray source, the CT image contains all the information of a single X-ray image. Thus, many researchers focus on using CT images to generate the corresponding X-ray image and have achieved good results35,36,37. In this study, to obtain periapical radiographs, we generated them from CBCT images using the Siddon-Jacobs ray-tracing algorithm38,39, which is one of the methods for computing DRR(Digitally Reconstructured Radiograph). The Siddon-Jacobs ray-tracing algorithm simulates the process of X-rays passing through the human body and being attenuated by human tissue to generate radiographic images. Due to its convenience and efficiency, it is the most commonly used method for generating computed DRRs40,41,42. Furthermore, research has shown that the images generated using this algorithm exhibit errors within an acceptable range when compared to real images43. Additionally, the dataset is continuously updated, and in the future, we will integrate emerging technologies to generate periapical radiographs.

Figure 4 shows that periapical radiograph generation consists of four steps. First, a 60 mm × 50 mm × 50 mm cube is cut out from the 3D CBCT image of the patient’s tooth. The midline lm passes through the teeth, and the line ls of the cube is tangent to outsize the face. For a patient, 20-32 cubes are obtained. Second, to apply the Siddon-Jacobs ray-tracing algorithm on the cube, a rotation is applied to the cube to ensure that the cube’s direction is the same. The outside of the face faces the positive direction of the y-axis, and the teeth face the positive direction of the z-axis. Third, the X-ray process is simulated by propagating incident X-ray photons (from a radiation source) through a cube using the Siddon-Jacobs ray-tracing algorithm of the Insight Segmentation and Registration Toolkit (ITK) imaging package. When using this algorithm, we set the distance between the X-ray source and the cube as 1000mm, add a random value of 0-5mm, and set three angles of X-ray incidence, which are 20-25 degrees to the left and 5-10 degrees to the left, and 20-25 degrees to the right, to generate periapical radiographs with different angles. Finally, considering the size of the built-in film of adults, there are usually two sizes of images in real life. Therefore, the periapical radiograph generated above will be cut as 40 mm × 30 mm. In the dataset, there are a total of 329 CBCT files. We attempted to label each tooth in every file, however, severe tooth loss in some CBCT image files hindered accurate annotation of each tooth’s position. Additionally, tooth incompleteness issues emerged after segmentation from annotated files. These data were removed following expert quality control procedures. Nonetheless, even after removal, we still have 188 PeX file data, comprising 16,203 images of 5,401 teeth from three different angles. For machine learning, this still represents a considerable amount of data.

Privacy

To ensure the protection of patient privacy, all demographic-sensitive information of patients, except for gender and age, has been either deleted or replaced with new values. Patient names and IDs have been replaced with randomly generated new IDs. Other IDs in the files, such as StudyInstanceUID, have also been regenerated. The date of birth has been removed, and other dates (e.g., study time, etc.) have been randomly offset to fall between 2200 and 2300. However, the chronological order of timestamps for multiple visits by each patient has been retained. The dataset does not include individuals under the age of 18 or patients aged 89 and above.

Data statistics

The demographics of the patients are summarized in Table 2 and Fig. 5a. As shown in Fig. 5a, the number of patients who chose to have CBCT images taken was far greater than the number of patients who chose to have PaX-rays taken. Possible reasons for this disparity are that PaX-ray is two-dimensional and has significant limitations, including distortion, lack of spatial structure information, etc.; thus, oral surgeons prefer to take CBCT images so that the pathology can be evaluated in 3 dimensions and the pathology of the lesion can be determined12. Second, if the patient has a plan for dental implants, CBCT is the primary choice. In CBCT images, the number of female patients exceeds that of male patients, and this trend is also observed among patients who have had multiple visits Table 3.

The age distribution of the patients is shown in Fig. 5b using a boxplot, which indicates that the patients who underwent panoramic radiographs were younger. Table 4 presents the imaging settings for different types of images, with peak kilovoltage (kVp) and X-ray tube current affecting the radiation exposure dose and slice thickness representing the axial resolution34. As depicted in Table 4, the slice thickness is mostly set to 0.25 mm, accounting for 99.7% of CBCT images. The remaining two were set to 0.15 mm. For all CBCT images, the kVp was 100. The X-ray tube current was typically between 6-8 mA. For panoramic radiographs, the slice thickness was 0.25 mm, kVp was 100, and X-ray Tube Current was set to 10 mA for patients.

Data Records

The multimodal dental dataset29 has been released on PhysioNet for users to download. As illustrated in Fig. 6, the dataset is structured hierarchically. CBCT images and panoramic and periapical radiographs are organized into separate folders at the top-level directory. CBCT images and panoramic radiographs are saved in DICOM format, while periapical radiographs are generated by cutting dental slices from CBCT images and irradiating them from three angles. The resulting periapical radiographs are stored in TIF format under the PeX-ray folder within three subfolders. The files within these three folders are named using the patient’s ID followed by an underscore and a number to indicate the patient’s visit number. For example, ‘0006_0’ denotes data from the first visit of patient 0006. In addition to these three folders, the remaining CSV files are as follows: CBCT_Info.csv, PaX_Info.csv, PeX_Info.csv, Patient_Statistics_Info.csv, and Implant_Marking_Info.csv. CBCT_Info.csv contains patient age and gender information for each CBCT file, along with details about the CBCT file itself, such as tube current and tube voltage used for the CBCT image acquisition, as well as the dimensions of the file, etc. PaX_Info.csv is similar to CBCT_Info.csv but specifically records information related to panoramic radiographs. PeX_Info.csv provides statistics on the number of periapical radiographs from different angles for each patient. Patient_Statistics_Info.csv offers patient-level statistics, indicating whether each patient has data for these three modalities and the corresponding file names. Implant_Marking_Info.csv marks whether patients have dental implants. The specific meaning of each column in every CSV file is detailed in Table 5. All files are sorted in ascending order based on patient ID.

Structure of the data included in the multimodal dental dataset29.

Technical Validation

To obtain high-quality standard data, quality control and calibration of the CBCT scanning device are essential for CBCT and panoramic radiographs. Therefore, an autocalibration procedure is executed daily to ensure calibrated and accurate performance of the CBCT scanner31. Additionally, the manufacturer of the CBCT scanner conducts an annual quality control service to maintain the high quality of the CBCT image.

For periapical radiographs, the performance of cutting CBCT to generate periapical radiographs and the Siddon-Jacobs ray tracing algorithm is key to obtaining standard high-quality data. Therefore, we organized 13 people to label the CBCT images and record the labeled data in the file. One person was selected as the person in charge; the 13 people were divided into groups of two, and the remaining one was in a separate group. Each person was required to label approximately 25 CBCT images. After labeling, the quality of the labels was checked by mutual inspection within each group. The process for checking the labels was as follows: first, we sliced the CBCT images based on these labels and then used 3D Slicer software to inspect each slice to see whether the tooth corresponding to the label was in the middle of the slice and whether the height of the section included the entire portion of the tooth. If there were quality issues with the labeled data, the person who labeled the file had to re-label it. After the checking was completed, we sent all the labeled files to the responsible person, who checked all the labeled files again to ensure the correctness of the labels and the quality of the obtained periapical radiographs. There are currently 188 labeled files that meet the requirements, and the rest will be updated in the future.

Usage Notes

Currently, there is a shortage of publicly available dental datasets, particularly lacking CBCT data. The establishment of the multimodal dental dataset29 aims to provide a broader range of diverse data types to facilitate the advancement of machine learning in dental healthcare services. To access the data, researchers are required to complete the following steps:

-

Become a credentialed user of the PhysioNet platform.

-

Complete the mandatory training.

-

Submit a data access request and await approval.

Once the application is approved, researchers will be granted access to the data.

Code availability

The code developed in this study is publicly available from the github website (https://github.com/wenjing567/dental-cxr-ct.git).

References

Rashid, U. et al. A hybrid mask rcnn-based tool to localize dental cavities from real-time mixed photographic images. PeerJ Computer Science 8, e888 (2022).

Cui, Z., Li, C. & Wang, W. Toothnet: automatic tooth instance segmentation and identification from cone beam ct images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6368–6377 (2019).

Lee, D.-W., Kim, S.-Y., Jeong, S.-N. & Lee, J.-H. Artificial intelligence in fractured dental implant detection and classification: evaluation using dataset from two dental hospitals. Diagnostics 11, 233 (2021).

Zhang, X. et al. Development and evaluation of deep learning for screening dental caries from oral photographs. Oral diseases 28, 173–181 (2022).

Hwang, J.-J., Jung, Y.-H., Cho, B.-H. & Heo, M.-S. An overview of deep learning in the field of dentistry. Imaging science in dentistry 49, 1–7 (2019).

Nguyen, T. T., Larrivée, N., Lee, A., Bilaniuk, O. & Durand, R. Use of artificial intelligence in dentistry: current clinical trends and research advances. J Can Dent Assoc 87, 1488–2159 (2021).

Khanagar, S. B. et al. Developments, application, and performance of artificial intelligence in dentistry–a systematic review. Journal of dental sciences 16, 508–522 (2021).

Carrillo-Perez, F. et al. Applications of artificial intelligence in dentistry: A comprehensive review. Journal of Esthetic and Restorative Dentistry 34, 259–280 (2022).

Paavilainen, P., Akram, S. U. & Kannala, J. Bridging the gap between paired and unpaired medical image translation. In Deep Generative Models, and Data Augmentation, Labelling, and Imperfections, 35–44 (Springer, 2021).

Jang, W. S. et al. Accurate detection for dental implant and peri-implant tissue by transfer learning of faster r-cnn: a diagnostic accuracy study. BMC Oral Health 22, 1–7 (2022).

Dillenseger, J.-L., Laguitton, S. & Delabrousse, E. Fast simulation of ultrasound images from a ct volume. Computers in biology and medicine 39, 180–186 (2009).

Koenig, L. J. Imaging of the jaws. In Seminars in Ultrasound, CT and MRI (Elsevier, 2015).

Abrahams, J. J. Dental ct imaging: a look at the jaw. Radiology 219, 334–345 (2001).

Brooks, S. L. Cbct dosimetry: orthodontic considerations. In Seminars in Orthodontics, vol. 15, 14–18 (Elsevier, 2009).

Zhang, K., Wu, J., Chen, H. & Lyu, P. An effective teeth recognition method using label tree with cascade network structure. Computerized Medical Imaging and Graphics 68, 61–70 (2018).

Lee, J.-H., Kim, D.-H., Jeong, S.-N. & Choi, S.-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. Journal of dentistry 77, 106–111 (2018).

Mamatha, J. et al. Cone beam computed tomography-dawn of a new imaging modality in orthodontics. Journal of International Oral Health: JIOH 7, 96 (2015).

Kapila, S. & Nervina, J. Cbct in orthodontics: assessment of treatment outcomes and indications for its use. Dentomaxillofacial radiology 44, 20140282 (2015).

Bornstein, M. M., Scarfe, W. C., Vaughn, V. M. & Jacobs, R. Cone beam computed tomography in implant dentistry: a systematic review focusing on guidelines, indications, and radiation dose risks. International journal of oral & maxillofacial implants 29 (2014).

Harris, D. et al. Eao guidelines for the use of diagnostic imaging in implant dentistry 2011. a consensus workshop organized by the european association for osseointegration at the medical university of warsaw. Clinical oral implants research 23, 1243–1253 (2012).

Song, W., Liang, Y., Yang, J., Wang, K. & He, L. Oral-3d: reconstructing the 3d structure of oral cavity from panoramic x-ray. In Proceedings of the AAAI conference on artificial intelligence, vol. 35, 566–573 (2021).

Cui, Z. et al. A fully automatic ai system for tooth and alveolar bone segmentation from cone-beam ct images. Nature communications 13, 2096 (2022).

Polizzi, A. et al. Tooth automatic segmentation from cbct images: a systematic review. Clinical Oral Investigations 27, 3363–3378 (2023).

Corbella, S., Srinivas, S. & Cabitza, F. Applications of deep learning in dentistry. Oral Surgery, Oral Medicine, Oral Pathology and Oral Radiology 132, 225–238 (2021).

Ren, R., Luo, H., Su, C., Yao, Y. & Liao, W. Machine learning in dental, oral and craniofacial imaging: a review of recent progress. PeerJ 9, e11451 (2021).

Panetta, K., Rajendran, R., Ramesh, A., Rao, S. P. & Agaian, S. Tufts dental database: a multimodal panoramic x-ray dataset for benchmarking diagnostic systems. IEEE journal of biomedical and health informatics 26, 1650–1659 (2021).

Kistler, M. et al. The virtual skeleton database: an open access repository for biomedical research and collaboration. Journal of medical Internet research 15, e2930 (2013).

Panetta, K., Rajendran, R., Ramesh, A., Rao, S. P. & Agaian, S. Tufts dental database: A multimodal panoramic x-ray dataset for benchmarking diagnostic systems. IEEE Journal of Biomedical and Health Informatics (2021).

Liu, W., Huang, Y. & Tang, S. A multimodal dental dataset facilitating machine learning research and clinic services. physionet https://doi.org/10.13026/h1tt-fc69 (2024).

Alamri, H. M., Sadrameli, M., Alshalhoob, M. A. & Alshehri, M. Applications of cbct in dental practice: a review of the literature. General dentistry 60, 390–400 (2012).

Afshar, P. et al. Covid-ct-md, covid-19 computed tomography scan dataset applicable in machine learning and deep learning. Scientific Data 8, 1–8 (2021).

De Vos, W., Casselman, J. & Swennen, G. Cone-beam computerized tomography (cbct) imaging of the oral and maxillofacial region: a systematic review of the literature. International journal of oral and maxillofacial surgery 38, 609–625 (2009).

Scarfe, W. C., Farman, A. G. & Sukovic, P. Clinical applications of cone-beam computed tomography in dental practice. Journal 72, 75–80 (2006).

Afshar, P. et al. Covid-ct-md, covid-19 computed tomography scan dataset applicable in machine learning and deep learning. Scientific Data 8, 121 (2021).

Moturu, A. & Chang, A. Creation of synthetic x-rays to train a neural network to detect lung cancer. Journal Beyond Sciences Initiative, University of Toronto, in Toronto (2018).

Teixeira, B. et al. Generating synthetic x-ray images of a person from the surface geometry. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 9059–9067 (2018).

Henzler, P., Rasche, V., Ropinski, T. & Ritschel, T. Single-image tomography: 3d volumes from 2d cranial x-rays. In Computer Graphics Forum, vol. 37, 377–388 (Wiley Online Library, 2018).

Jacobs, F., Sundermann, E., De Sutter, B., Christiaens, M. & Lemahieu, I. A fast algorithm to calculate the exact radiological path through a pixel or voxel space. Journal of computing and information technology 6, 89–94 (1998).

Siddon, R. L. Fast calculation of the exact radiological path for a three-dimensional ct array. Medical physics 12, 252–255 (1985).

Liu, S. et al. 2d/3d multimode medical image registration based on normalized cross-correlation. Applied Sciences 12, 2828 (2022).

Quan, T. M. et al. Xpgan: X-ray projected generative adversarial network for improving covid-19 image classification. In 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), 1509–1513 (IEEE, 2021).

Akbari-Shandiz, M. et al. Mri vs ct-based 2d-3d auto-registration accuracy for quantifying shoulder motion using biplane video-radiography. Journal of biomechanics 82, 375–380 (2019).

Bollet, M. A. et al. Can digitally reconstructed radiographs (drrs) replace simulation films in prostate cancer conformal radiotherapy? International Journal of Radiation Oncology* Biology* Physics 57, 1122–1130 (2003).

Abdi, A. H., Kasaei, S. & Mehdizadeh, M. Automatic segmentation of mandible in panoramic x-ray. Journal of Medical Imaging 2, 044003 (2015).

Wang, C.-W. et al. A benchmark for comparison of dental radiography analysis algorithms. Medical image analysis 31, 63–76 (2016).

Acknowledgements

This study was supported by the Project of Guangxi Science and Technology, China (No. GuiKeAD20297004) and the National Natural Science Foundation of China (Grant No. U21A20474, Grant No.61967002).

Author information

Authors and Affiliations

Contributions

Y.H. conceptualized this study, collected and analyzed the data, and wrote the manuscript. W.L. conceptualized this study and revised the manuscript. C.Y. conceptualized this study and analyzed the data. W.L., X.M., X.G., X.L., X.L., and L.M. labeled and processed the data. L.M., S.T., Z.Z., and J.Z. directed the project and revised the manuscript. All authors have read and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Huang, Y., Liu, W., Yao, C. et al. A multimodal dental dataset facilitating machine learning research and clinic services. Sci Data 11, 1291 (2024). https://doi.org/10.1038/s41597-024-04130-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-024-04130-1

This article is cited by

-

BRAR-anchored multimodal dataset of panoramic radiographs for periodontal bone resorption grading

Scientific Data (2025)

-

Localisation and classification of multi-stage caries on CBCT images with a 3D convolutional neural network

Clinical Oral Investigations (2025)

-

HGA-SyTSHH: CBCT Dental Segmentation Despite Metal Artifacts

Journal of Shanghai Jiaotong University (Science) (2025)