Abstract

The Sm-Nd isotopic system, based on the rare earth elements samarium (Sm) and neodymium (Nd), helps address fundamental questions about crustal growth, such as its spatiotemporal evolution and the interplay between orogenesis and crustal accretion. Because Sm and Nd are relatively immobile during high-grade metamorphism, the Sm-Nd isotopic system is crucial for inferring when crust formed. Historically, such data have been published sporadically across the scientific literature because sampling is complicated and costly, which has left a fragmented knowledge base, and compiling critical geoscience data scattered over many publications demands considerable time and human effort. In response, we present an automated method for extracting tabular geoscience data from publications. Using this method, we collected 10,624 Sm-Nd data entries from 9,138 tables in more than 20,000 geoscience publications. From these, we manually selected 2,118 data points to supplement a previously constructed global Sm-Nd dataset, increasing its sample count by over 20%. The automated methodology can improve the efficiency of data acquisition across a range of scientific domains.


Background & Summary

In igneous science, it is critical to collect spatial, temporal, and geochemical data efficiently from large numbers of samples. Such data are central to understanding large-scale geological phenomena, such as reconstructing ancient plate kinematics, delineating processes of continental convergence and dispersal, assessing crustal growth, and investigating the deep material structure of orogens1,2,3,4,5,6,7. Determining the formation time of continental crustal protoliths within metamorphic terrains is a significant challenge, primarily because tectonothermal events affect isotopic systems to varying degrees. Such events can redistribute parent and daughter elements and thereby cause partial or complete re-equilibration of isotopic systems on scales ranging from individual minerals to entire outcrops. Samarium (Sm) and neodymium (Nd), two rare earth elements essential for analyzing crustal evolution and metamorphic processes, are generally regarded as relatively immobile during high-grade metamorphism8,9. As a result, the Sm-Nd isotopic system has been used extensively to date events ranging from the most recent metamorphism to the original formation of the crust10,11,12,13. Influential studies have used Sm-Nd isotopes to investigate the provenance and petrogenesis of igneous rocks14,15, to identify allochthonous terranes within orogens16,17,18, and to examine the dynamics of continental crustal growth3,19,20,21,22.

Despite this potential, synthesizing Sm-Nd isotope data, which span vast spatial and temporal scales and many scientific subfields, is difficult, chiefly because extracting large datasets efficiently from many scientific publications requires complex procedures. Geoscience data collection also faces broader challenges, ranging from non-reproducibility and inherent uncertainties to the complexity of multidimensional data, substantial computational demands, and the need for periodic updates23. In the era of big data, the international geoscience community faces an unprecedented increase in the volume and structural complexity of data24,25. Traditional methods, which rely heavily on manual collection and categorization, must be reconsidered given the volume of information from diverse sources and the demands of database construction26,27,28. Although modern magmatic databases place increasing emphasis on both the volume and the integrity of their samples, the effectiveness of data collection strategies remains underexplored29,30.

Neural network-based techniques have considerably eased the parsing of large collections of Portable Document Format (PDF) documents and the extraction of target elements from them. These techniques fall into two main groups: methods based on visual analysis31,32,33 and methods based on pre-trained language models (PLMs)34,35,36,37,38. Both have proven effective in their respective domains. However, they concentrate either on recognizing visual elements or on natural language processing (NLP) within end-to-end networks, and this specialization does not fully meet the growing demand for fast, exhaustive extraction of data from complex tables. Platforms such as Chronos39, GeoSciNet40, GeoDeepDive41, SciSpace42, and GeoDeepShovel43 have applied neural networks specifically to the analysis of scientific literature. Despite their significant contributions, most of these tools are designed to analyze one document at a time, which limits their usefulness for aggregating data across large collections of documents.

To address this limitation, we present an approach, illustrated in Fig. 1, that divides the process into two core modules: document retrieval and tabular data aggregation. Unlike previous efforts in geoscience data aggregation44,45,46, our approach integrates document retrieval and tabular data collection into a single workflow, which substantially increases the efficiency of data acquisition from geoscience repositories. Using this tool, we obtained 10,624 Sm-Nd data entries from 9,138 tables in more than 20,000 geoscience articles. Curation of this collection yielded 2,118 data points, expanding the global Sm-Nd compilation previously released by Wang et al.6 by over 20%.

An overview of the automatic tabular data searching and collection tool workflow, comprising three main stages: (A) document retrieval, (B) tabular data collection, and (C) data processing. The document retrieval stage begins with precise metadata extraction from a curated set of PDF documents, followed by keyword query optimization through a comprehensive data transformation protocol designed to enhance retrieval accuracy and relevance. A computer vision (CV)-based approach is applied to improve the tabular data collection process, which is structured around four key stages: table region detection, text detection, table structure recognition, and table content reconstruction. After tabular data extraction, the data processing stage is conducted using several methods, including data localization, data augmentation, data standardization, metadata integration, and manual validation.

Methods

Document Retrieval

Figure 1(A) illustrates the core components of our PDF parsing protocol, which combines metadata extraction with keyword querying. The process begins by extracting metadata from a curated set of PDF documents with CERMINE47, an automated tool for extracting structured metadata from scientific publications. This phase captures essential metadata, including, but not limited to, the title, authors, abstract, affiliations, keywords, and bibliographic details such as the journal name, volume, issue, and Digital Object Identifier (DOI). CERMINE also captures the content structure of a document, including body text, sections, figures, tables, and captions, while preserving layout details and element positions. The extracted metadata are then written to Extensible Markup Language (XML) documents, which facilitate subsequent review and organization.
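As an illustration of the XML stage, the sketch below reads the fields needed for keyword filtering from a CERMINE-style XML document using Python's standard `xml.etree.ElementTree`. It assumes NLM JATS-style element names (`article-title`, `abstract`, `journal-title`, and `article-id` with `pub-id-type="doi"`); the sample document, its DOI, and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical CERMINE-style output; element names follow the NLM JATS convention (assumption).
SAMPLE_XML = """<article>
  <front>
    <journal-meta><journal-title-group><journal-title>Example Journal</journal-title></journal-title-group></journal-meta>
    <article-meta>
      <article-id pub-id-type="doi">10.0000/example.123</article-id>
      <title-group><article-title>Nd isotopes of Paleozoic granites</article-title></title-group>
      <abstract><p>Whole-rock Sm-Nd data for granitic plutons.</p></abstract>
    </article-meta>
  </front>
</article>"""


def text_of(element):
    """Concatenate all text inside an element with normalized whitespace, or '' if missing."""
    return " ".join("".join(element.itertext()).split()) if element is not None else ""


def read_metadata(xml_string):
    """Return the fields used later by the keyword filter: title, abstract, journal and DOI."""
    root = ET.fromstring(xml_string)
    doi = ""
    for article_id in root.iter("article-id"):
        if article_id.get("pub-id-type") == "doi":
            doi = text_of(article_id)
    return {
        "title": text_of(root.find(".//article-title")),
        "abstract": text_of(root.find(".//abstract")),
        "journal": text_of(root.find(".//journal-title")),
        "doi": doi,
    }


print(read_metadata(SAMPLE_XML))
```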

The next phase optimizes keyword querying through a data transformation protocol. All tokens are converted to lowercase, non-alphanumeric characters are replaced with spaces, common stop words are removed, and standard abbreviations are expanded. The result is a normalized text representation that improves consistency and matching accuracy in the later retrieval steps. For our study of Sm-Nd isotopes, a keyword matrix (Table 1), combined with the filtering rules below, improves the precision and speed with which target documents are identified and extracted.
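A minimal sketch of these normalization steps, assuming a small hypothetical stop-word list and abbreviation table (the study's actual lists are not given):

```python
import re

# Hypothetical lists; the stop words and abbreviations used in the study are not specified.
STOP_WORDS = {"a", "an", "and", "the", "of", "in", "on", "for", "from", "with", "to"}
ABBREVIATIONS = {"ree": "rare earth element", "hfse": "high field strength element"}


def normalize(text):
    """Lowercase, replace non-alphanumeric runs with a space, drop stop words, expand abbreviations."""
    text = text.lower()
    # \w keeps Unicode letters and digits (e.g. Greek epsilon); underscores are replaced too.
    text = re.sub(r"[^\w]+|_", " ", text)
    tokens = [ABBREVIATIONS.get(tok, tok) for tok in text.split() if tok not in STOP_WORDS]
    return " ".join(tokens)


print(normalize("Sm-Nd isotopes of the Granite"))  # sm nd isotopes granite
print(normalize("REE patterns"))                   # rare earth element patterns
```

Because this normalization also splits terms such as "143Nd/144Nd" into "143nd 144nd", any keyword list would need to be normalized the same way before matching against normalized text.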

1. At least one of the following isotope-related terms must appear in the title or abstract: ϵNd (epsilon neodymium, which denotes the neodymium isotopic composition), Sm-Nd (samarium-neodymium, an isotopic system used to date geological processes), Sm-Nd-Hf (a combination of samarium-neodymium and hafnium isotopes for more comprehensive geochronological studies), 143Nd/144Nd (the neodymium isotope ratio used in isotope geochemistry), Nd isotope (studies of neodymium isotopes), or TDM (the depleted mantle model age, which estimates when a rock was extracted from the mantle).

2. At least one geological or petrological descriptor must also appear in the title or abstract: felsic, granite, granitic, pluton, plutonic, magmatic, magma, diorite, or rhyolite.

We retain the articles that meet both criteria, so that the selected documents match the thematic focus of the study. Combining structured metadata with rule-based filtering in this way improves both the accuracy and the efficiency of identifying relevant literature.
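The two inclusion criteria can be expressed as a simple filter. This sketch assumes case-insensitive substring matching on the raw title and abstract, so that the hyphens and slashes in terms such as "Sm-Nd" and "143Nd/144Nd" are preserved; the term tuples mirror the two lists above, and including both Unicode epsilon characters is an assumption.

```python
# Terms from the two inclusion criteria; both Unicode epsilon forms are included (assumption).
ISOTOPE_TERMS = ("ϵnd", "εnd", "sm-nd", "sm-nd-hf", "143nd/144nd", "nd isotope", "tdm")
ROCK_TERMS = ("felsic", "granite", "granitic", "pluton", "plutonic",
              "magmatic", "magma", "diorite", "rhyolite")


def meets_criteria(title, abstract):
    """True if the title or abstract contains at least one term from each criterion."""
    text = f"{title} {abstract}".lower()
    has_isotope_term = any(term in text for term in ISOTOPE_TERMS)
    has_rock_term = any(term in text for term in ROCK_TERMS)
    return has_isotope_term and has_rock_term


print(meets_criteria("Nd isotopes of Paleozoic granites", "Whole-rock data"))     # True
print(meets_criteria("Sm-Nd dating of eclogites", "Garnet ages from the Alps"))   # False
```

Plain substring matching is deliberately loose ("pluton" also matches "plutonic"); word-boundary matching would be a stricter alternative.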

Tabular Data Collection

Figure 2 presents the pipeline for extracting tabular data from PDF documents, a task complicated by the heterogeneity of these files. The pipeline has four stages: table region detection, text detection, table structure recognition, and table content reconstruction. A major difficulty is that PDF files follow no universal encoding standard: scanned documents and files whose text is not encoded in UTF-8 (8-bit Unicode Transformation Format) often yield garbled text and lose essential layout information. To address this, each page containing tabular data is first converted into an image, and a computer vision (CV)-based approach is then applied to improve the accuracy of table recognition and reconstruction. The process outputs an Excel spreadsheet that combines the recovered table structure with the extracted text content.

Table Region Detection

Our table region detection model is based on the Fast Region-based Convolutional Network (Fast R-CNN)48, an object detection algorithm known for its accuracy and efficiency, which we use to identify and localize table regions in documents. The model was trained on TableBank49, a large dataset built specifically for table detection, and reached 97% accuracy on TableBank during training.

We then applied the trained model to tables embedded in academic PDFs. Using PDF parsing, we first check the document metadata for embedded table tags on each page; pages with such tags are converted to images for detailed analysis. Figure 3 gives an overview of the table region detection process. The input image is fed into a deep convolutional neural network (ConvNet), which produces convolutional feature maps that capture the spatial characteristics of the image. Region of interest (RoI) projection maps candidate table regions onto these feature maps, and an RoI pooling layer resizes each region to a fixed size so that all regions can be processed uniformly. The pooled feature vectors pass through fully connected layers (FCs) that extract high-level features; a Softmax layer then classifies each region, and a bounding box regressor refines the table boundaries for more precise localization. When the network confirms that a table is present, it returns both the page number and the relative position of the table on that page.

An overview of the table region detection workflow. The process starts with a deep convolutional neural network (ConvNet) that extracts feature maps from the input image. Region of Interest (RoI) projection maps the detected table areas onto these feature maps. Next, the RoI pooling layer resizes the mapped regions to a uniform size for further processing. Fully connected layers (FCs) extract high-level features, and a Softmax layer classifies the regions. A bounding box regressor refines the table boundaries, providing both the table’s precise position and its page location.
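The bounding-box refinement and the relative position returned by the detector can be sketched as follows. The code uses the box parameterization of the R-CNN family (centre offsets scaled by box size, width and height scaled in log space); the proposal, offsets, and page size in the example are hypothetical, and the trained Fast R-CNN model itself is not shown.

```python
import math


def decode_box(proposal, deltas):
    """Refine a proposal (x1, y1, x2, y2) with regression offsets (dx, dy, dw, dh).

    Centre shifts are scaled by the proposal size; width and height are multiplied
    by exp(dw) and exp(dh).
    """
    x1, y1, x2, y2 = proposal
    dx, dy, dw, dh = deltas
    w, h = x2 - x1, y2 - y1
    cx, cy = x1 + 0.5 * w, y1 + 0.5 * h
    new_cx, new_cy = cx + dx * w, cy + dy * h
    new_w, new_h = w * math.exp(dw), h * math.exp(dh)
    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)


def to_relative(box, page_width, page_height):
    """Express a pixel box as fractions of the page image size, independent of render resolution."""
    x1, y1, x2, y2 = box
    return (x1 / page_width, y1 / page_height, x2 / page_width, y2 / page_height)


box = decode_box((100, 200, 500, 400), (0.05, 0.0, 0.1, 0.0))  # hypothetical proposal and offsets
print(box, to_relative(box, page_width=1000, page_height=1400))
```

Storing positions as page fractions lets the same coordinates be reused whether the page is re-rendered at a different resolution or mapped back onto the PDF text layer.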

Text Detection

We first check whether each PDF page containing a table has a text layer. If the layer is missing, we use easyOCR50, an Optical Character Recognition (OCR) tool, to add one, with each recognized piece of text placed at its corresponding position in the page image. The resulting text layer is then organized so that its visual and structural arrangement matches the page. Finally, we compile an inventory of text dimensions across the entire PDF document.
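easyOCR's `Reader.readtext` returns a list of (bounding quadrilateral, text, confidence) entries. The sketch below does not call easyOCR itself; it shows one way to turn results of that shape into a text layer of axis-aligned word boxes grouped into lines in reading order. The line tolerance, the confidence cutoff, and the OCR results are assumptions invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    x0: float
    y0: float
    x1: float
    y1: float


def to_text_layer(ocr_results, line_tol=5.0, min_conf=0.3):
    """Convert (quad, text, confidence) OCR results into lines of words in reading order."""
    words = []
    for quad, text, conf in ocr_results:
        if conf < min_conf:  # assumed cutoff for unreliable detections
            continue
        xs = [point[0] for point in quad]
        ys = [point[1] for point in quad]
        words.append(Word(text, min(xs), min(ys), max(xs), max(ys)))
    # Sort by vertical centre; a word joins the current line if its centre is within
    # line_tol pixels of the centre of that line's first word.
    words.sort(key=lambda w: (w.y0 + w.y1) / 2)
    lines = []
    for word in words:
        centre = (word.y0 + word.y1) / 2
        if lines and abs(centre - lines[-1][0]) <= line_tol:
            lines[-1][1].append(word)
        else:
            lines.append((centre, [word]))
    return [sorted(line_words, key=lambda w: w.x0) for _, line_words in lines]


# Invented results in the (quad, text, confidence) shape returned by easyOCR's readtext.
results = [
    ([[60, 10], [90, 10], [90, 22], [60, 22]], "Nd", 0.95),
    ([[5, 11], [45, 11], [45, 23], [5, 23]], "Sample", 0.97),
    ([[5, 40], [40, 40], [40, 52], [5, 52]], "GX-1", 0.90),
    ([[60, 40], [95, 40], [95, 52], [60, 52]], "25.3", 0.10),
]
lines = to_text_layer(results)
print([[w.text for w in line] for line in lines])  # [['Sample', 'Nd'], ['GX-1']]
```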

The average text width derived from this inventory is used to estimate text spacing, a key parameter of the method. The estimated spacing is then used to interpret the structure of the tables embedded in the document, which improves the accuracy and efficiency of table extraction and analysis.
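One plausible way to use the average text width, sketched here as an assumption rather than the authors' exact rule: estimate a per-character width from word boxes, then treat horizontal gaps wider than a multiple of that width as cell boundaries. In practice the width would come from the whole-document inventory; here a single hypothetical line is used, and the `factor` of 2.0 is an assumed tuning value.

```python
def average_char_width(words):
    """Mean width of one character: total word-box width divided by total characters."""
    total_width = sum(x1 - x0 for _, x0, x1 in words)
    total_chars = sum(len(text) for text, _, _ in words)
    return total_width / total_chars


def split_line(words, char_width, factor=2.0):
    """Group a left-to-right line of (text, x0, x1) words into cells.

    A gap wider than `factor` character widths is treated as a cell boundary;
    narrower gaps are ordinary spaces. `factor` is an assumed tuning value.
    """
    cells, current = [], [words[0]]
    for prev, word in zip(words, words[1:]):
        gap = word[1] - prev[2]
        if gap > factor * char_width:
            cells.append(" ".join(text for text, _, _ in current))
            current = [word]
        else:
            current.append(word)
    cells.append(" ".join(text for text, _, _ in current))
    return cells


line = [("Sample", 0, 60), ("GX-1", 66, 106), ("25.3", 150, 190), ("0.5123", 240, 300)]
width = average_char_width(line)  # 200 px / 20 characters = 10.0
print(split_line(line, width))    # ['Sample GX-1', '25.3', '0.5123']
```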

Table Structure Recognition

To delineate cells within tables, we first crop an image of each table using its outer border and its relative position within the PDF page. Each image is then preprocessed: it is converted to grayscale and binarized with adaptive thresholding, after which morphological operations (erosion and dilation) are applied to identify vertical and horizontal lines. This separates text from ruling lines and removes noise. In post-processing, we classify each table according to whether it has internal frame lines, based on the detected vertical and horizontal lines: a line-pixel count above a predetermined threshold indicates that internal frame lines are present. As shown in Fig. 4, we then extract the vertical and horizontal lines and record their intersection points. Redundant intersections are pruned, leaving a clean set of internal-frame intersection coordinates, and for each pair of adjacent points we check whether a line segment connects them. The validated connections form the structural representation of tables with internal frame lines.
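The line-finding step can be sketched without an imaging library. On a binarized table (1 = ink), keeping only pixels that belong to a horizontal run of at least `min_len` pixels is equivalent to a morphological opening with a 1 × `min_len` structuring element (on real images this would typically be done with OpenCV's `cv2.erode` and `cv2.dilate`); applying the same test to the transposed image finds vertical lines. The thresholds are assumptions, and the pruning and connection checks described above are not included.

```python
def line_mask(binary, min_len):
    """Keep foreground pixels that lie on a horizontal run of at least min_len pixels.

    For a 0/1 image this equals a morphological opening (erosion followed by dilation)
    with a 1 x min_len structuring element.
    """
    mask = [[False] * len(row) for row in binary]
    for r, row in enumerate(binary):
        c = 0
        while c < len(row):
            if not row[c]:
                c += 1
                continue
            start = c
            while c < len(row) and row[c]:
                c += 1
            if c - start >= min_len:
                for k in range(start, c):
                    mask[r][k] = True
    return mask


def transpose(grid):
    return [list(column) for column in zip(*grid)]


def detect_frame(binary, min_len, min_pixels):
    """Return horizontal and vertical line masks, their intersections, and a has-lines flag."""
    horizontal = line_mask(binary, min_len)
    vertical = transpose(line_mask(transpose(binary), min_len))
    intersections = [(r, c) for r, row in enumerate(horizontal)
                     for c, on in enumerate(row) if on and vertical[r][c]]
    line_pixels = sum(map(sum, horizontal)) + sum(map(sum, vertical))
    return horizontal, vertical, intersections, line_pixels > min_pixels


cross = [[0, 0, 1, 0, 0],
         [0, 0, 1, 0, 0],
         [1, 1, 1, 1, 1],
         [0, 0, 1, 0, 0],
         [0, 0, 1, 0, 0]]
_, _, points, has_lines = detect_frame(cross, min_len=5, min_pixels=5)
print(points, has_lines)  # [(2, 2)] True
```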

For tables without internal frame lines (Fig. 5), we instead infer the structure from the largest connected blank intervals between text. The procedure consists of the following steps:

- Image Processing. The input image is first converted to grayscale and binarized with adaptive thresholding. Morphological erosion and dilation are then applied to identify vertical and horizontal lines, and lines longer than a predefined threshold are removed. A second thresholding step then normalizes the pixel values, setting blank areas to 0 and text areas to 255, which maximizes the contrast between text and background.

- Horizontal Frame Line Identification. We scan the image row by row from top to bottom. Rows whose pixel values sum to zero are marked as candidate zones for horizontal internal frame lines. Adjacent candidate rows are merged, and the midpoint of each continuous zero-valued band gives the position of an internal frame line separating two table rows.

- Vertical Frame Line Identification. Between each pair of adjacent horizontal internal frame lines, we scan column by column; the sum of pixel values in each column distinguishes text from blank areas. Vertically aligned contiguous blank areas are merged, and those only one text line high are discarded. The vertical lines that pass through the largest cumulative blank areas are designated as the vertical internal frame lines of the table; in each iteration, the intersected areas are marked as traversed, until all blank areas have been accounted for.

- Table Structure Recognition. The table border, together with the horizontal and vertical internal frame lines, delineates the primary cells of the table. The vertical boundary of each cell is then checked for intersections with text-containing zones; segments that cross text are removed, which merges the adjacent cells horizontally. This yields the refined internal frame structure of the table after cell merging.

An overview of structure recognition for tables without internal frame lines. (A) The original table without frame lines. (B) The result of image processing. (C) Construction of vertical and horizontal lines. (D) The table structure after cell merging. (E) The final output of table structure recognition.

By analyzing the blank intervals between text blocks, this procedure identifies and extracts table structures accurately and efficiently, including those of tables without explicit internal boundaries.
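The projection-profile idea behind the frameless procedure is sketched below under a simplifying assumption: row separators follow the description above (midpoints of all-blank horizontal bands), but column separators are taken from columns that are blank across the whole table, rather than from the per-band search over cumulative blank areas. Pixel values follow the 0/255 convention of the image-processing step; the example image is hypothetical.

```python
def blank_run_midpoints(profile):
    """Midpoints of interior runs of zeros in a projection profile.

    Runs touching either end are margins rather than separators and are skipped.
    """
    midpoints, start = [], None
    for i, value in enumerate(profile):
        if value == 0:
            if start is None:
                start = i
        elif start is not None:
            if start > 0:
                midpoints.append((start + i - 1) / 2)
            start = None
    return midpoints


def frameless_separators(binary):
    """Row and column separator positions for a borderless table (0 = blank, 255 = text).

    Rows use the zero row-sum rule; columns use a simplified rule: columns that are
    blank across the whole table.
    """
    row_profile = [sum(row) for row in binary]
    col_profile = [sum(column) for column in zip(*binary)]
    return blank_run_midpoints(row_profile), blank_run_midpoints(col_profile)


table = [[0, 0, 0, 0, 0],
         [255, 255, 0, 255, 255],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0],
         [255, 0, 0, 0, 255],
         [0, 0, 0, 0, 0]]
print(frameless_separators(table))  # ([2.5], [2.0])
```

The simplified column rule fails when a long title or merged header spans a column gap, which is one reason the per-band search and cell-merging steps described above are needed.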

Table Content Reconstruction

From the reconstructed internal frame structure of the table, we compute the rectangular coordinates of each cell in the PDF page and extract the text that falls within each cell from the PDF text layer. After removing or normalizing spaces, we obtain the content of each cell. Combining the internal frame lines with the cell contents, we construct an Excel spreadsheet in which merged cells and their data are preserved.
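Cell assembly can be sketched as assigning each word to the cell that contains its centre. This example assumes the row and column separator coordinates are already known (for instance from the structure recognition steps), that each cell holds a single line of text, and writes CSV with Python's `csv` module as a stand-in for the Excel output; merged cells are not handled. The words and separators are hypothetical.

```python
import bisect
import csv
import io


def build_grid(words, row_seps, col_seps):
    """Place (text, cx, cy) words into cells by their centres; separators must be sorted."""
    grid = [[[] for _ in range(len(col_seps) + 1)] for _ in range(len(row_seps) + 1)]
    for text, cx, cy in words:
        r = bisect.bisect_left(row_seps, cy)
        c = bisect.bisect_left(col_seps, cx)
        grid[r][c].append((cx, text))
    # Single-line cells assumed: join each cell's words left to right with one space.
    return [[" ".join(text for _, text in sorted(cell)) for cell in row] for row in grid]


def to_csv(grid):
    """Serialize the grid as CSV text (a stand-in for the Excel output)."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(grid)
    return buffer.getvalue()


words = [("Sample", 20, 5), ("Nd", 70, 5), ("(ppm)", 85, 5), ("GX-1", 20, 15), ("25.3", 70, 15)]
grid = build_grid(words, row_seps=[10], col_seps=[50])
print(to_csv(grid))
```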

Data Processing

After extracting the tabular data, we processed the Sm-Nd isotope data following the framework described by Wang et al.6, using the methods below:

- Data Localization. We first locate the tables that contain the Sm-Nd ratio field, 147Sm/144Nd, which uniquely identifies Sm-Nd isotope tables. This step extracts the Sm-Nd isotope data from each article together with each sample's unique identifier, the Sample Identifier (ID).

- Data Augmentation. The extracted sample data often lack several key fields, so the initial dataset is very sparse. Using the sample IDs obtained in the previous step, we search the other tables of the same article to fill specific fields, such as the geographic coordinates of each sample. Where fields remain empty, the title and abstract of the article are examined according to predefined rules to retrieve the relevant information.

- Data Standardization. We applied the same standardization process used for the dataset of Wang et al.6 to ensure technical validity and consistency across datasets. Key parameters of the initially extracted data were recalculated with a single set of constants, which makes values from different sources directly comparable and facilitates data categorization, interpretation, and further analysis. The recalculated values were checked for consistency with those reported in the original literature, which supports the credibility of the derived results.

For the εNd and model-age calculations, we adopted (143Nd/144Nd)CHUR = 0.512638 and (147Sm/144Nd)CHUR = 0.196715951 for the chondritic uniform reservoir (CHUR), and (143Nd/144Nd)DM = 0.51315 and (147Sm/144Nd)DM = 0.21372 for the present-day depleted mantle (DM)52,53.

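The analysis centers on three standardized parameters: εNd(t), TDM1, and TDM2. They are derived from the following formulations54:

$$\varepsilon_{\mathrm{Nd}}(t) = \left[\frac{(^{143}\mathrm{Nd}/^{144}\mathrm{Nd})_{s}(t)}{(^{143}\mathrm{Nd}/^{144}\mathrm{Nd})_{\mathrm{CHUR}}(t)} - 1\right] \times 10^{4}, \qquad (^{143}\mathrm{Nd}/^{144}\mathrm{Nd})_{i}(t) = (^{143}\mathrm{Nd}/^{144}\mathrm{Nd})_{i} - (^{147}\mathrm{Sm}/^{144}\mathrm{Nd})_{i}\left(e^{\lambda t} - 1\right)$$

$$T_{\mathrm{DM1}} = \frac{1}{\lambda}\ln\left[1 + \frac{(^{143}\mathrm{Nd}/^{144}\mathrm{Nd})_{s} - (^{143}\mathrm{Nd}/^{144}\mathrm{Nd})_{\mathrm{DM}}}{(^{147}\mathrm{Sm}/^{144}\mathrm{Nd})_{s} - (^{147}\mathrm{Sm}/^{144}\mathrm{Nd})_{\mathrm{DM}}}\right]$$

$$T_{\mathrm{DM2}} = T_{\mathrm{DM1}} - \left(T_{\mathrm{DM1}} - t\right)\frac{f_{cc} - f_{s}}{f_{cc} - f_{dm}}$$

where the subscript s denotes the sample, i ∈ {s, CHUR}, and λ = 6.54 × 10⁻¹² yr⁻¹ is the decay constant of 147Sm.

The parameters fcc, fs, and fdm are the fSm/Nd values of average continental crust, the sample, and the depleted mantle, respectively; fcc is set to −0.4, fdm to 0.08592, and t is the intrusion age of the granite. fSm/Nd is calculated as:

$$f_{\mathrm{Sm/Nd}} = \frac{(^{147}\mathrm{Sm}/^{144}\mathrm{Nd})_{s}}{(^{147}\mathrm{Sm}/^{144}\mathrm{Nd})_{\mathrm{CHUR}}} - 1$$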

The two-stage model ages assume that the protolith of the granitic magma had an fSm/Nd value characteristic of average continental crust. This approach is preferred for felsic igneous rocks because fSm/Nd can be modified by processes such as partial melting, fractional crystallization, magma mixing, and hydrothermal alteration. Our recalculated values agree closely with the originally reported ones. Where a source reports only derived values, such as εNd(t) and TDM, without the underlying measurements, we incorporated those values directly55.

- Metadata Integration. Each extracted record is linked to the metadata of its source article, including author, year, journal, title, volume, page, and DOI. This provides a basis for the subsequent manual review and keeps every data point traceable to its source throughout the augmentation process.

- Manual Validation. Finally, the data are checked manually to confirm their usability and integrity.
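The localization, augmentation, and metadata-integration steps can be sketched with tables represented as lists of row dictionaries. The header spellings accepted as sample-ID columns, the rule that augmentation only fills empty fields, and the example tables and DOI are all assumptions for illustration; the metadata column names follow the Data Records description.

```python
import re

ID_HEADERS = ("sample", "sampleno.", "sampleid")  # assumed spellings, compared after normalization
META_COLUMNS = ("Ref. Author", "Ref. Year", "Ref. Journal", "Title", "Volume", "Page", "DOI")


def norm_header(header):
    """Compare headers ignoring whitespace and case, e.g. '147Sm/ 144Nd' -> '147sm/144nd'."""
    return re.sub(r"\s+", "", header).lower()


def id_column(table):
    """Return the original name of the sample-ID column, or None if the table has none."""
    headers = {norm_header(h): h for h in table[0]} if table else {}
    return next((headers[h] for h in ID_HEADERS if h in headers), None)


def localize(tables, ratio_header="147sm/144nd"):
    """Step 1: keep rows from tables that contain a 147Sm/144Nd column, keyed by sample ID."""
    records = {}
    for table in tables:
        id_col = id_column(table)
        if id_col is None or ratio_header not in {norm_header(h) for h in table[0]}:
            continue
        for row in table:
            records[row[id_col]] = dict(row)
    return records


def augment(records, tables):
    """Step 2: fill empty fields of each record from other tables sharing its sample ID."""
    for table in tables:
        id_col = id_column(table)
        if id_col is None:
            continue
        for row in table:
            target = records.get(row[id_col])
            if target is None:
                continue
            for field, value in row.items():
                if field != id_col and value not in (None, "") and target.get(field) in (None, ""):
                    target[field] = value
    return records


def attach_metadata(records, metadata):
    """Step 3: prefix every record with its source article's metadata for traceability."""
    base = {column: metadata.get(column, "") for column in META_COLUMNS}
    return [{**base, **row} for row in records.values()]


isotope_table = [
    {"Sample": "GX-1", "147Sm/ 144Nd": "0.1200", "143Nd/144Nd": "0.512300", "Longitude": ""},
    {"Sample": "GX-2", "147Sm/ 144Nd": "0.1100", "143Nd/144Nd": "0.512250", "Longitude": ""},
]
location_table = [{"Sample no.": "GX-1", "Longitude": "102.5", "Latitude": "30.1"}]

records = augment(localize([isotope_table, location_table]), [location_table])
rows = attach_metadata(records, {"DOI": "10.0000/example.123"})
print(rows[0])
```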

Data Records

All data collected in this study are available in the Figshare repository56: https://doi.org/10.6084/m9.figshare.24054231.v2. The dataset consists of 12 files, described below.

The “Sm-Nd data collection (Automated)” file contains the data systematically extracted from the geoscientific literature. Each record is linked to its source through the following metadata fields: “Ref. Author” (author of the referenced paper), “Ref. Year” (year of publication), “Ref. Journal” (publishing journal), “Title” (title of the referenced article), “Volume” (volume of the publication), “Page” (page from which the data were extracted), and “DOI” (Digital Object Identifier, which ensures unambiguous source referencing). The automated process also collects the target data: sample identification (“sample”); geographic coordinates and location descriptors (“Nation/Region/GeoTectonic Unit/Groups”, “Tectonic Unit”, “Sub-Tectonic Unit/Sub-Groups”, “Longitude”, “Latitude”); descriptive attributes (“Lithology”, “Pluton”); chronological information (“Age(Ma)”); and isotopic measurements (“Sm”, “Nd”, “147Sm/144Nd”, “143Nd/144Nd”, “2σ”, “fSm/Nd”, “ϵNd(t)”, “TDM1”, “TDM2”). TDM1 and TDM2 are the one-stage and two-stage depleted mantle model ages, respectively, which provide insight into the extraction and processing history of crustal materials. Because the data in this file were collected with an automated extraction tool, they may contain noise, outliers, and unnormalized values; users should clean and process them according to the requirements of their own studies.

The “Sm-Nd data collection (Annotation)” file contains 2,119 manually annotated data points, which were added to the previously established global Sm-Nd dataset. By tracing each data point back to its source, we completed and validated all fields. For uniformity, two field names were revised: “Nation/Region/GeoTectonic unit/Groups” was standardized to “GeoTectonic unit”, and “Subtectonic unit/Sub groups” was renamed “Subtectonic unit”. After recalculation and normalization, we added new parameters: “Calcul. ϵNd(0)” (calculated present-day εNd), “Recal. ϵNd(t)” (recalculated εNd at time t), “Recalcu. TDM1 (Ga)” (recalculated one-stage depleted mantle model age, in billions of years), and “Recalcul. TDM2 (Ga)” (recalculated two-stage depleted mantle model age, in billions of years). These are based on the originally reported values, labeled “Orig. ϵNd(0)” (original present-day εNd), “Origin ϵNd(t)” (original εNd at time t), “Origi. TDM1 (Ma)” (original one-stage depleted mantle model age, in millions of years), and “Origi. TDM2 (Ma)” (original two-stage depleted mantle model age, in millions of years). The final values are given as “Mapped ϵNd(t)” (mapped εNd at time t) and “Mapped TDM(Ga)” (mapped depleted mantle model age, in billions of years), which supports clear interpretation and consistency across datasets.

The remaining files correspond to the data released in our previous work6, including its manually annotated subset. The repository therefore provides the complete dataset, combining manually collected data with data extracted by the automated method described in this paper, and documents the full data-collection workflow to support robust analysis and related research.

Technical Validation

Consistency Validation

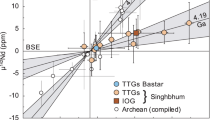

As discussed above, Sm-Nd isotope data from intermediate to acidic igneous rocks are essential for assessing the material composition and crustal development of orogenic belts6. To evaluate the robustness of our data, we compared them with the Sm-Nd isotope dataset manually curated by Wang et al.6. Among the 10,624 data entries, we identified 2,118 new target data points that meet both criteria: an intermediate to acidic igneous rock composition and a location within one of the eight major orogenic belts. These data points had previously remained hidden in the extensive literature, largely because of the constraints of manual collection, and their addition substantially improves the comprehensiveness of the dataset. Verification by geology experts showed that most data fields were accurate, with an overall accuracy of 84.04%, apart from isolated exceptions. This level of consistency supports the validity of the dataset for further analyses.

Our evaluation was based on three primary criteria:

- We recalculated the ϵNd values and Nd model ages using consistent parameters and compared them with the values reported in the original article19. Data points were considered valid if the recalculated results closely matched the original values.

- For samples whose tables include geographic information, we projected the sample locations to verify that they fall within the corresponding orogenic belt. The data were considered valid if the projected location matched the location described in the article.

- Because the samples in this study are predominantly intermediate to acidic (medium-acidic) rocks, we examined the relationship between ϵNd(t) and TDM2: the ϵNd(t) values of the 2,118 felsic and intermediate magmatic rocks show a strong linear association with TDM2. This indicates that the automatically collected data are comparable to manually curated data, particularly for isotope mapping studies.
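The first and third criteria can be sketched as follows: recalculating fSm/Nd, εNd(t), TDM1, and TDM2 with one set of constants, measuring how often recalculated values agree with reported ones, and computing a Pearson correlation coefficient between εNd(t) and TDM2. The sketch uses the conventional formulas, the 147Sm decay constant λ = 6.54 × 10⁻¹² yr⁻¹, (147Sm/144Nd)CHUR = 0.1967 (an assumption), fcc = −0.4, and the other constants given in the Methods; the agreement tolerance and the sample values are hypothetical.

```python
import math

LAMBDA_147SM = 6.54e-12               # decay constant of 147Sm (per year)
ND_CHUR, SM_CHUR = 0.512638, 0.1967   # CHUR 143Nd/144Nd and 147Sm/144Nd (0.1967 is an assumption)
ND_DM, SM_DM = 0.51315, 0.21372       # present-day depleted mantle ratios
F_CC, F_DM = -0.4, 0.08592            # fSm/Nd of average continental crust and depleted mantle


def recalculate(sm_nd, nd_nd, age_ma):
    """Return (fSm/Nd, epsilon-Nd(t), TDM1 in Ga, TDM2 in Ga) for one sample."""
    t = age_ma * 1e6                         # age in years
    growth = math.exp(LAMBDA_147SM * t) - 1  # e^(lambda t) - 1
    f_s = sm_nd / SM_CHUR - 1
    eps_t = ((nd_nd - sm_nd * growth) / (ND_CHUR - SM_CHUR * growth) - 1) * 1e4
    tdm1 = math.log(1 + (nd_nd - ND_DM) / (sm_nd - SM_DM)) / LAMBDA_147SM
    tdm2 = tdm1 - (tdm1 - t) * (F_CC - f_s) / (F_CC - F_DM)
    return f_s, eps_t, tdm1 / 1e9, tdm2 / 1e9


def agreement_rate(pairs, tolerance):
    """Fraction of (recalculated, reported) pairs whose absolute difference is within tolerance."""
    return sum(abs(new - old) <= tolerance for new, old in pairs) / len(pairs)


def pearson(xs, ys):
    """Pearson correlation coefficient, e.g. between epsilon-Nd(t) and TDM2."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


f_s, eps_t, tdm1, tdm2 = recalculate(sm_nd=0.1200, nd_nd=0.512300, age_ma=400)
print(f"fSm/Nd={f_s:.3f} eNd(t)={eps_t:.2f} TDM1={tdm1:.2f} Ga TDM2={tdm2:.2f} Ga")
```

For this hypothetical sample the sketch gives εNd(t) ≈ −2.68, TDM1 ≈ 1.38 Ga, and TDM2 ≈ 1.36 Ga. When fs equals fcc, TDM2 collapses to TDM1, which is a quick check that the two-stage formula is wired correctly.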

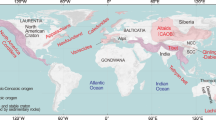

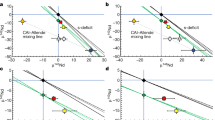

Distribution Validation

Figures 6 and 7 compare the distributions of the newly collected data with those of the dataset of Wang et al.6, focusing on isotopic composition (ϵNd(t)) and two-stage Nd depleted mantle model ages (TDM2). The box plots show that the distribution of the new data is consistent with the patterns observed in the eight orogens identified by Wang et al.6, supporting the effectiveness of our method. The new data increase the density of coverage, most notably in geologically complex regions such as the Cordillera, Appalachians, Tethyan Tibet, Variscides, and Qinling-Dabie orogens, with an average increase in data volume of 22.45%. This expanded Sm-Nd isotope dataset for intermediate to acidic igneous rocks strengthens the basis for addressing scientific questions about these belts; in particular, it provides stronger evidence for classifying orogenic belts by the composition of their crustal material.

Distribution validation of the original dataset and the dataset obtained through our method, showing the frequency distributions of ϵNd(t) values for the eight orogens. Blue diagrams show the ϵNd(t) percentages in the original dataset, and orange diagrams show those in our newly acquired dataset. In the box plots, pink boxes show the ϵNd(t) distribution of the original dataset, and yellow boxes show that of the newly acquired dataset.

Distribution validation of the original dataset and the dataset obtained through our method, showing the frequency distributions of TDM2 values for the eight orogens. Blue diagrams show the TDM2 percentages in the original dataset, and orange diagrams show those in our newly acquired dataset. In the box plots, pink boxes show the TDM2 distribution of the original dataset, and yellow boxes show that of the newly acquired dataset.

Efficiency Evaluation

Fig. 8 compares automatic and manual data collection in terms of time efficiency and data fill rate, evaluated for five categories of information: position, attribute, age, sample, and meta-information. The comparison revealed marked differences between the two approaches. Critical information, particularly age and position, is often scattered across the text and figures of a publication rather than reported together in its tables. This dispersion poses a notable challenge to automatic extraction, which consequently falls short of the precision and coherence of manual extraction for these categories.
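The five information categories can be scored per extraction method. Assuming that a category's fill rate is the share of extracted records in which that category's field is populated (the text does not define the metric), it could be computed as in the sketch below. The field names mirror the five categories, and the treatment of `None` and blank strings as missing is an assumption.

```python
from typing import Dict, Iterable, List, Optional

# Field names mirroring the five information categories (naming assumed).
CATEGORIES = ["position", "attribute", "age", "sample", "meta"]


def is_filled(value: Optional[object]) -> bool:
    """Treat None and blank strings as missing; anything else counts as filled."""
    if value is None:
        return False
    if isinstance(value, str):
        return value.strip() != ""
    return True


def fill_rates(
    records: List[Dict[str, object]], fields: Iterable[str] = CATEGORIES
) -> Dict[str, float]:
    """Percentage of records in which each field holds a non-empty value."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {f: 100.0 * sum(is_filled(r.get(f)) for r in records) / total for f in fields}


# Invented records: here age and position are the fields most often missing.
automatic = [
    {"position": None, "attribute": "granite", "age": "", "sample": "S-01", "meta": "Ref-1"},
    {"position": "32.1N 105.2E", "attribute": "diorite", "age": None, "sample": "S-02", "meta": "Ref-2"},
]
print(fill_rates(automatic))
```

Computing the same function over manually and automatically collected records gives directly comparable per-category percentages.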

For detailed specimen information, particularly sample characteristics such as tectonic units, automated extraction is both more precise and faster than manual collection. A carefully curated keyword dictionary further improves automatic extraction, making it faster and considerably more comprehensive. To quantify the gain in time efficiency, we conducted a comparative experiment. Nine graduate students with relevant academic backgrounds were asked to manually collect 1,000 isotope data entries from a keyword-filtered geoscience literature database, each following their own research approach, and to populate the data using the keywords listed in Table 1. For comparison, we measured the time the automated process required to retrieve 9,000 data entries from the same database. On average, each student needed more than 10 hours to collect 100 data entries manually, making the manual process 27 times more time-consuming than the automated one. This demonstrates the operational efficiency of automation, particularly where both the speed of extraction and the completeness of the information matter. Table 2 gives a detailed comparison of data fill rates.
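Dictionary-based tagging is one way a keyword list can fill sample-level fields such as rock type or tectonic unit from free-text sample descriptions. The sketch below assumes a mapping from each field to canonical values and their synonyms, matched as whole words. The dictionary entries are hypothetical and are not taken from Table 1.

```python
import re
from typing import Dict, List, Optional

# Hypothetical keyword dictionary: field -> canonical value -> synonyms.
KEYWORDS: Dict[str, Dict[str, List[str]]] = {
    "rock_type": {
        "granite": ["granite", "granitic", "monzogranite"],
        "diorite": ["diorite", "dioritic", "quartz diorite"],
    },
    "tectonic_unit": {
        "Qinling-Dabie": ["qinling", "dabie"],
        "Appalachians": ["appalachian", "appalachians"],
    },
}


def tag_text(
    text: str, keywords: Dict[str, Dict[str, List[str]]] = KEYWORDS
) -> Dict[str, Optional[str]]:
    """For each field, return the first canonical value whose synonym occurs
    in the text as a whole word (case-insensitive), or None if none does."""
    lowered = text.lower()
    result: Dict[str, Optional[str]] = {}
    for field, values in keywords.items():
        result[field] = None
        for canonical, synonyms in values.items():
            if any(re.search(rf"\b{re.escape(s)}\b", lowered) for s in synonyms):
                result[field] = canonical
                break
    return result


print(tag_text("Quartz diorite from the South Qinling belt"))
```

Because matching is on whole words, inflected forms such as "granitic" or plural names have to be listed as separate synonyms. This is one reason building such a list requires domain expertise.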

Limitations

We identify three main limitations of the current approach: constraints of the automated tabular extraction method itself, limitations inherent in the resulting dataset, and broader challenges of the overall data collection paradigm.

Automated Tabular Extraction Method

During the automated data collection process, we faced three primary challenges: the diversity of PDF file encoding, the variability in table data definitions, and the complexity of extracting temporal and geographical information by integrating text and images.

Diversity of PDF File Encoding: Many PDF documents are scanned images without an embedded text layer, so their data can only be extracted with Optical Character Recognition (OCR) tools. However, general-purpose OCR software often misreads the special symbols and technical notation found in academic tables, which reduces the accuracy of data capture.
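A common mitigation, not described in the paper, is a post-OCR normalization pass. This pass maps frequent misreadings of domain notation back to canonical forms before values are parsed. The substitution rules below are hypothetical examples of such confusions: Greek epsilon read as Latin e, stray spaces inside isotope ratios, and a letter O read in place of zero. A real rule set would have to be built from observed errors.

```python
import re
from typing import List, Tuple

# Hypothetical correction rules (pattern, replacement), applied in order.
RULES: List[Tuple[re.Pattern, str]] = [
    # Epsilon misread as Latin e/E or spaced out: "eNd (t)" -> "ϵNd(t)".
    (re.compile(r"[eEɛεϵ]\s*Nd\s*\(\s*t\s*\)"), "ϵNd(t)"),
    # Stray spaces in isotope ratios: "143Nd / 144Nd" -> "143Nd/144Nd".
    (re.compile(r"(\d{3})\s*Nd\s*/\s*(\d{3})\s*Nd"), r"\1Nd/\2Nd"),
    # Letter O between digits -> zero: "0.51O63" -> "0.51063".
    (re.compile(r"(?<=\d)[Oo](?=\d)"), "0"),
    # Letter O before a decimal point, not inside a word: "O.5126" -> "0.5126".
    (re.compile(r"(?<![A-Za-z])[Oo](?=\.\d)"), "0"),
]


def normalize_ocr(text: str) -> str:
    """Apply each correction rule in turn to a line of OCR output."""
    for pattern, replacement in RULES:
        text = pattern.sub(replacement, text)
    return text


print(normalize_ocr("eNd (t) = -3.2; 143Nd / 144Nd = O.51O63"))
```

The lookbehind conditions keep the zero-for-O rules from altering ordinary words such as "Ordovician".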

Variability in Table Data Definitions: The absence of standardized data definitions was a significant obstacle to automated extraction. Because the same quantity is labeled differently from paper to paper, we had to manually design and compile a range of header formats to normalize table headers. This compilation cannot cover every possible header format, which limits the efficiency of table data extraction and the generalizability of the workflow.
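A compiled set of header formats amounts to an alias table that maps each observed header variant to a normalized column name. The sketch below assumes that headers are compared after lowercasing and stripping spaces and punctuation. The alias entries and column names are hypothetical. Any header absent from the table is reported rather than guessed, which is exactly where such an approach stops generalizing.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical alias table: normalized column -> header variants seen in papers.
ALIASES: Dict[str, List[str]] = {
    "nd143_nd144": ["143Nd/144Nd", "(143Nd/144Nd)m", "143Nd/144Nd measured"],
    "sm147_nd144": ["147Sm/144Nd", "(147Sm/144Nd)m"],
    "sm_ppm": ["Sm", "Sm (ppm)", "Sm ppm"],
    "nd_ppm": ["Nd", "Nd (ppm)", "Nd ppm"],
    "epsilon_nd_t": ["ϵNd(t)", "εNd(t)", "eNd(t)", "epsilon Nd(t)"],
    "t_dm2": ["TDM2", "T2DM", "TDM2 (Ma)", "T2DM (Ma)"],
}


def key(header: str) -> str:
    """Comparison key: lowercase, keeping only digits, ASCII letters and epsilons."""
    return re.sub(r"[^0-9a-zεϵ]", "", header.lower())


# Reverse index from comparison key to normalized column name.
LOOKUP: Dict[str, str] = {key(v): col for col, variants in ALIASES.items() for v in variants}


def normalize_headers(headers: List[str]) -> Tuple[List[str], List[str]]:
    """Map each header to its normalized name; unmatched headers are kept
    unchanged and also returned separately so an alias can be added by hand."""
    normalized: List[str] = []
    unknown: List[str] = []
    for header in headers:
        column = LOOKUP.get(key(header))
        if column is None:
            unknown.append(header)
            normalized.append(header)
        else:
            normalized.append(column)
    return normalized, unknown


cols, missing = normalize_headers(
    ["Sample", "Sm (ppm)", "147Sm / 144Nd", "(143Nd/144Nd)m", "eNd(t)", "T2DM"]
)
print(cols)
print("needs a new alias:", missing)
```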

Integration of Text and Images for Temporal and Geographical Information: For example, in processing Sm-Nd isotope data, essential information such as geographic coordinates and chronological details is often embedded within the text and images of academic papers, making it difficult to extract directly from tables. Current algorithms, which primarily focus on single-modal table data processing, struggle to address tasks requiring the integration of data from both text and images. Consequently, significant human intervention is still needed to support the extraction of such complex data.
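Coordinates written in the body text, as opposed to those shown only in figures, can at least be located with pattern matching. The sketch below is an illustrative regular expression for degree-minute-second and decimal-degree coordinates such as 35°12′30″N or 35.5°N, converted to signed decimal degrees. The accepted formats are an assumption about typical notation. The pattern ignores other notations and does not address information that appears only in maps or images.

```python
import re
from typing import List, Optional, Tuple

# Degrees (integer or decimal), optional minutes and seconds, then N/S/E/W.
# Both typographic (′ ″) and ASCII (' ") marks are accepted.
COORD = re.compile(
    r"(?<![\d.])(\d{1,3}(?:\.\d+)?)\s*°\s*"
    r"(?:(\d{1,2}(?:\.\d+)?)\s*[′']\s*)?"
    r"(?:(\d{1,2}(?:\.\d+)?)\s*(?:″|\"|′′|'')\s*)?"
    r"([NSEW])"
)


def to_decimal(deg: str, minutes: Optional[str], seconds: Optional[str], hemi: str) -> float:
    """Convert degree-minute-second parts to signed decimal degrees."""
    value = float(deg) + float(minutes or 0) / 60 + float(seconds or 0) / 3600
    return -value if hemi in "SW" else value


def find_coordinates(text: str) -> List[Tuple[str, float]]:
    """Return ('lat' or 'lon', decimal degrees) for each coordinate found."""
    found = []
    for match in COORD.finditer(text):
        deg, minutes, seconds, hemi = match.groups()
        axis = "lat" if hemi in "NS" else "lon"
        found.append((axis, round(to_decimal(deg, minutes, seconds, hemi), 5)))
    return found


print(find_coordinates("Sample site at 35°12′30″N, 103°05′E; a second at 35.5°N."))
```

Pairing each latitude with its longitude and linking the pair to a sample ID still requires context from the surrounding sentence. This is the step that currently needs human intervention.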

The Sm-Nd Isotopic Dataset

This study’s dataset is limited to open-access, English-language literature databases; data published on the official websites of national and regional geological surveys and in non-English journals were not systematically collected. The dataset’s comprehensiveness is also limited by access restrictions, as many studies containing Sm-Nd isotope data are not publicly available. In addition, data extraction is governed by the retrieval rules defined in this paper, which may miss some relevant sources. Nonetheless, these rules are designed to capture the primary sources of Sm-Nd isotope data in the accessible literature.

Data Collection Paradigm

The data processing workflow outlined in this paper is tailored specifically for Sm-Nd isotope data. Extending this approach to other academic fields requires the development of a comprehensive and specialized keyword list, which necessitates substantial input from domain experts to ensure accurate data capture. This reliance on expert knowledge for keyword compilation inherently limits the generalizability and adaptability of the data collection method across diverse research domains.

Usage Notes

The Sm-Nd isotope dataset is a valuable resource for studying orogens, their compositional architecture, and crustal growth patterns3,19,20,21,22, and it supports a nuanced characterization and classification of orogens16,17,18. For example, Nd isotope mapping, which combines Sm-Nd data with age and position information, provides a robust approach to studying crustal growth6,7,14,15. Wang et al.6 presented Nd isotope mapping results from eight representative Phanerozoic orogens. Their work delineates the crustal growth pattern of each orogenic belt, introduces a method that quantitatively characterizes orogens by their compositional architecture through isotopic mapping, and clarifies the relationships between orogenesis and continental growth. Our automated tabular data collection method complements such studies by substantially increasing the efficiency of data collection and yielding a richer, more comprehensive dataset.
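Nd isotope maps are built by interpolating point values of ϵNd(t) or TDM2 between sample locations. As a minimal illustration of that step, the sketch below uses inverse-distance weighting on a regular grid. IDW is an assumption chosen for brevity; it is not necessarily the interpolation used in refs 6 and 7. The sketch also treats longitude and latitude as planar coordinates, and the sample values are invented.

```python
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (longitude, latitude, value, e.g. ϵNd(t))


def idw(x: float, y: float, samples: Sequence[Sample], power: float = 2.0) -> float:
    """Inverse-distance-weighted estimate at (x, y); returns the sample value
    exactly when (x, y) coincides with a sample location."""
    num = den = 0.0
    for sx, sy, value in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return value
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


def grid(samples: Sequence[Sample], lon_range: Tuple[float, float],
         lat_range: Tuple[float, float], step: float) -> List[List[float]]:
    """Interpolated values on a regular grid: one row per latitude, south to north."""
    lon0, lon1 = lon_range
    lat0, lat1 = lat_range
    n_lon = int(round((lon1 - lon0) / step)) + 1
    n_lat = int(round((lat1 - lat0) / step)) + 1
    return [[idw(lon0 + i * step, lat0 + j * step, samples) for i in range(n_lon)]
            for j in range(n_lat)]


# Invented ϵNd(t) values at three sample sites.
points = [(100.0, 30.0, -10.0), (102.0, 30.0, 2.0), (101.0, 32.0, -4.0)]
for row in grid(points, (100.0, 102.0), (30.0, 32.0), 1.0):
    print([round(v, 1) for v in row])
```

Kriging or spline interpolation in a GIS are common alternatives. Whichever method is used, denser sampling, as provided by the expanded dataset, reduces how much each grid cell depends on distant points.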

Code availability

The tabular collection code is available from https://github.com/sjtugzx/tabularDataCollection. Instructions for use are available with the code.

References

Tarney, J. & Jones, C. Trace element geochemistry of orogenic igneous rocks and crustal growth models. Journal of the Geological Society 151, 855–868 (1994).

Condie, K. Growth of continental crust: a balance between preservation and recycling. Mineralogical Magazine 78, 623–637 (2014).

Cawood, P. A. et al. Accretionary orogens through Earth history. Geological Society, London, Special Publications 318, 1–36 (2009).

Carminati, E., Lustrino, M. & Doglioni, C. Geodynamic evolution of the central and western Mediterranean: tectonics vs. igneous petrology constraints. Tectonophysics 579, 173–192 (2012).

Wang, X. et al. Quantifying knowledge from the perspective of information structurization. PLoS ONE 18, e0279314 (2023).

Wang, T. et al. Quantitative characterization of orogens through isotopic mapping. Communications Earth & Environment 4, 110 (2023).

Wang, T. et al. Voluminous continental growth of the Altaids and its control on metallogeny. National Science Review (2023).

Green, T., Brunfelt, A. & Heier, K. Rare earth element distribution in anorthosites and associated high grade metamorphic rocks, Lofoten-Vesteraalen, Norway. Earth and Planetary Science Letters 7, 93–98 (1969).

Pride, C. & Muecke, G. Rare earth element geochemistry of the Scourian complex NW Scotland: evidence for the granite-granulite link. Contributions to Mineralogy and Petrology 73, 403–412 (1980).

Wasserburg, G. Early Archean Sm-Nd model ages from a tonalitic gneiss, northern Michigan. Selected Studies of Archean Gneisses and Lower Proterozoic Rocks, Southern Canadian Shield 182, 135 (1980).

Moorbath, S., Powell, J. L. & Taylor, P. N. Isotopic evidence for the age and origin of the “grey gneiss” complex of the southern Outer Hebrides, Scotland. Journal of the Geological Society 131, 213–222 (1975).

Hamilton, P., Evensen, N., O’Nions, R. & Tarney, J. Sm–Nd systematics of Lewisian gneisses: implications for the origin of granulites. Nature 277, 25–28 (1979).

Jacobsen, S. B. & Wasserburg, G. Interpretation of Nd, Sr and Pb isotope data from Archean migmatites in Lofoten-Vesterålen, Norway. Earth and Planetary Science Letters 41, 245–253 (1978).

Gruau, G., Lecuyer, C., Bernard-Griffiths, J. & Morin, N. Origin and petrogenesis of the Trinity ophiolite complex (California): new constraints from REE and Nd isotope data. Journal of Petrology 229–242 (1991).

Lambert, D. et al. Re–Os and Sm–Nd isotope geochemistry of the Stillwater Complex, Montana: implications for the petrogenesis of the J-M Reef. Journal of Petrology 35, 1717–1753 (1994).

Dickin, A. Nd isotope mapping of a cryptic continental suture, Grenville Province of Ontario. Precambrian Research 91, 433–444 (1998).

Dickin, A. Crustal formation in the Grenville Province: Nd-isotope evidence. Canadian Journal of Earth Sciences 37, 165–181 (2000).

Wang, T. et al. Nd–Sr isotopic mapping of the Chinese Altai and implications for continental growth in the Central Asian Orogenic Belt. Lithos 110, 359–372 (2009).

Goldstein, S., O’Nions, R. & Hamilton, P. A Sm-Nd isotopic study of atmospheric dusts and particulates from major river systems. Earth and Planetary Science Letters 70, 221–236 (1984).

Cawood, P. A., Strachan, R. A., Pisarevsky, S. A., Gladkochub, D. P. & Murphy, J. B. Linking collisional and accretionary orogens during Rodinia assembly and breakup: implications for models of supercontinent cycles. Earth and Planetary Science Letters 449, 118–126 (2016).

Collins, W. J., Belousova, E. A., Kemp, A. I. & Murphy, J. B. Two contrasting Phanerozoic orogenic systems revealed by hafnium isotope data. Nature Geoscience 4, 333–337 (2011).

Collins, W. Hot orogens, tectonic switching, and creation of continental crust. Geology 30, 535–538 (2002).

Sudmanns, M. et al. Big Earth data: disruptive changes in Earth observation data management and analysis? International Journal of Digital Earth 13, 832–850 (2020).

Cai, L. & Zhu, Y. The challenges of data quality and data quality assessment in the big data era. Data Science Journal 14, 2–2 (2015).

Ahmed, E. et al. The role of big data analytics in Internet of Things. Computer Networks 129, 459–471 (2017).

Miller, H. J. & Han, J. Geographic Data Mining and Knowledge Discovery (CRC Press, 2009).

Chen, H. & Xiao, K. The design and implementation of the geological data acquisition system based on mobile GIS. In 2011 19th International Conference on Geoinformatics, 1–6 (IEEE, 2011).

Last, W. M. & Smol, J. P. Tracking Environmental Change Using Lake Sediments, Volume 2: Physical and Geochemical Methods, 2 (Springer Science & Business Media, 2002).

Sarbas, B. The GEOROC database as part of a growing geoinformatics network. In Geoinformatics 2008: Data to Knowledge, 42–43 (USGS, 2008).

Gard, M., Hasterok, D. & Halpin, J. A. Global whole-rock geochemical database compilation. Earth System Science Data 11, 1553–1566 (2019).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems 28 (2015).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, 2961–2969 (2017).

Redmon, J. & Farhadi, A. YOLOv3: an incremental improvement. arXiv preprint arXiv:1804.02767 (2018).

Garncarek, Ł. et al. LAMBERT: layout-aware language modeling for information extraction. In Document Analysis and Recognition–ICDAR 2021: 16th International Conference, Lausanne, Switzerland, September 5–10, 2021, Proceedings, Part I, 532–547 (Springer, 2021).

Yu, W., Lu, N., Qi, X., Gong, P. & Xiao, R. PICK: processing key information extraction from documents using improved graph learning-convolutional networks. In 2020 25th International Conference on Pattern Recognition (ICPR), 4363–4370 (IEEE, 2021).

Xu, Y. et al. LayoutLM: pre-training of text and layout for document image understanding. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 1192–1200 (2020).

Zhang, P. et al. TRIE: end-to-end text reading and information extraction for document understanding. In Proceedings of the 28th ACM International Conference on Multimedia, 1413–1422 (2020).

Rahman, W. et al. Integrating multimodal information in large pretrained transformers. In Proceedings of the conference. Association for Computational Linguistics. Meeting, 2020, 2359 (NIH Public Access, 2020).

Cervato, C. et al. The CHRONOS system: geoinformatics for sedimentary geology and paleobiology. In 2005 IEEE International Symposium on Mass Storage Systems and Technology, 182–186 (IEEE, 2005).

Snyder, W., Lehnert, K., Ito, E., Harms, U. & Klump, J. Geoscinet: building a global geoinformatics partnership. In AGU Fall Meeting Abstracts, 2008, IN31D–03 (2008).

Zhang, C. et al. GeoDeepDive: statistical inference using familiar data-processing languages. In Proceedings of the 2013 ACM SIGMOD International Conference on Management of Data, 993–996 (2013).

Khan, A., Kim, T., Byun, H. & Kim, Y. SciSpace: a scientific collaboration workspace for geo-distributed HPC data centers. Future Generation Computer Systems 101, 398–409 (2019).

Zhang, S. et al. GeoDeepShovel: a platform for building scientific database from geoscience literature with AI assistance. Geoscience Data Journal (2022).

Niu, X. An ontology driven relational geochemical database for the Earth’s critical zone: CZChemDB. Journal of Environmental Informatics 23 (2014).

Boone, S. C. et al. AusGeochem: an open platform for geochemical data preservation, dissemination and synthesis. Geostandards and Geoanalytical Research 46, 245–259 (2022).

Rodriguez-Corcho, A. F. et al. The Colombian geochronological database (CGD). International Geology Review 64, 1635–1669 (2022).

Tkaczyk, D., Szostek, P., Fedoryszak, M., Dendek, P. J. & Bolikowski, Ł. CERMINE: automatic extraction of structured metadata from scientific literature. International Journal on Document Analysis and Recognition (IJDAR) 18, 317–335 (2015).

Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, 1440–1448 (2015).

Li, M. et al. TableBank: table benchmark for image-based table detection and recognition. In Proceedings of the Twelfth Language Resources and Evaluation Conference, 1918–1925 (2020).

Jeeva, C. et al. Intelligent image text reader using Easy OCR, NRCLex & NLTK. In 2022 International Conference on Power, Energy, Control and Transmission Systems (ICPECTS), 1–6 (IEEE, 2022).

Jacobsen, S. & Wasserburg, G. Sm-Nd isotopic evolution of chondrites and achondrites, II. Earth and Planetary Science Letters 67, 137–150 (1984).

White, W. M. & Hofmann, A. W. Sr and Nd isotope geochemistry of oceanic basalts and mantle evolution. Nature 296, 821–825 (1982).

Peucat, J., Vidal, P., Bernard-Griffiths, J. & Condie, K. Sr, Nd, and Pb isotopic systematics in the Archean low- to high-grade transition zone of southern India: syn-accretion vs. post-accretion granulites. The Journal of Geology 97, 537–549 (1989).

DePaolo, D. J. & Wasserburg, G. Nd isotopic variations and petrogenetic models. Geophysical Research Letters 3, 249–252 (1976).

Keto, L. S. & Jacobsen, S. B. Nd and Sr isotopic variations of Early Paleozoic oceans. Earth and Planetary Science Letters 84, 27–41 (1987).

Guo, Z. et al. Sm-Nd isotope data compilation from geoscientific literature. figshare https://doi.org/10.6084/m9.figshare.24054231.v2 (2023).

Author information


Contributions

Zhixin Guo: Conceptualization (equal); methodology (equal); software (equal); validation (lead); writing - original draft (lead). Tao Wang: Conceptualization (equal); data validation (equal). Chaoyang Wang: Conceptualization (equal); data validation (equal). Jianping Zhou: Software (equal); writing - review and editing (equal). Guanjie Zheng: Conceptualization (equal); formal analysis (equal); methodology (equal); project administration (equal); supervision (lead); writing - review and editing (equal). Xinbing Wang: Project administration (equal); supervision (equal). Chenghu Zhou: Project administration (equal).


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Guo, Z., Wang, T., Wang, C. et al. Sm-Nd Isotope Data Compilation from Geoscientific Literature Using an Automated Tabular Extraction Method. Sci Data 12, 203 (2025). https://doi.org/10.1038/s41597-024-04229-5


DOI: https://doi.org/10.1038/s41597-024-04229-5