Abstract

Edible oil may be mixed with tiny solid impurities like raw material fragments, hair, metal fragments and etc. during the production and manufacturing process. For food safety reasons, these tiny impurities need to be detected in the quality control process. As compared with manual detection ways, computer vision-based impurity detection methods can greatly reduce the workload and improve the detection efficiency and accuracy. However, there are currently no datasets for the detection of solid impurities in edible oils. For this reason, a dual-modal edible oil impurity (DMEOI) dataset is developed and made publicly available. The dataset is composed of 14520 event and full-pic images and annotated in two ways. Five common edible oils and four typical impurities are included in the dataset. The DMEOI dataset can be used for liquid impurity detection in either single-modal or dual-modal way. To prove the usability of the dataset, four object detection algorithms are applied and compared.

Similar content being viewed by others

Background & Summary

With the continuous progress of science and technology, the global production of soybean, peanut, rapeseed and other oilseeds is growing. This also makes the corresponding pressing and processing industry develop rapidly. Edible oil producers have become an important part of the food industry. The quality of their products is directly related to people’s health and life safety. According to the current domestic and international vegetable oil standards, the insoluble impurity content in edible oil should not exceed 0.05%1,2,3,4,5. For the above two reasons, the edible oil producers will perform the impurities detection before the sale. With the continuous development of automated production, edible oil production lines are constantly being built. The demand for impurities detection is also increasing. Depending on the type of impurities and quality requirements, the common oil impurity detection methods include the chemical analysis, gas chromatography, liquid chromatography, elemental analysis, particle size analysis, and etc. However, these methods are mainly used to analyze the presence of soluble impurities in liquids. As compared with liquid impurities, solid impurities are more difficult to dissolve and have a well-defined shape and volume. Therefore, the detection of impurities in this state is usually carried out by human visual inspection or some simple physical methods. However, human visual inspection is inefficient and unstable. Besides, continuous operations can lead to mental and visual fatigue6. Computer vision-based inspection technologies can reduce the physical labor and improve the detection efficiency. However, these methods are too complex and have relatively low robustness. The method based on deep learning improves the efficiency and applicability of detection. Thus, the detection of impurities can be accomplished using models trained by deep learning networks.

Existing impurity datasets can be divided into two categories. The first one contains the sand and gravel impurities mixed with materials like the wheat grain and tobacco filaments. The other category is solid impurities contained in liquids, such as the glass fibers or hairs which are contained in liquor and medicines. H. Zhang7 collected 746 images of tea impurities through web search. The impurities include the dead leaves, small stones, hard tea stems, small bugs and so on. And then the images were input into the YOLOv5 network to realize the tea impurity detection. L. Tang et al.8 used GaiaField line scan camera to capture images of tobacco impurities. The impurities were plastic items, cotton and wood products, and plant fragments. Dian Rong et al.9 collected 1264 impurity images using an industrial camera. A two-stage convolutional network was used to accomplish the real-time segmentation and detection of impurities in pecan body images. Junlu Xu et al.10 rotated the medicine bottle to oscillate the impurities to capture the images. Then the impurity objects were obtained using the frame difference method. And a pattern recognition technique was used to detect the foreign matters in the pharmaceutical solution. Yao Kang et al.11 put the impurities such as black dregs, white dregs and fibers into the bottled white wine. And they used an industrial camera to produce a dataset of impurities in white wine.

When the temperature is 22 °C, the viscosity value of the water, soybean oil, rapeseed oil, sesame oil, peanut oil and olive oil is 0.9579, 57.1, 63.5, 63.5, 72.2 and 74.1mPa·s respectively12. Among which, the rapeseed and sesame oils have the same viscosity values, and the peanut and olive oils have the similar viscosity values. The viscosity values of the five oil samples increased gradually at an interval of 5–10 mPa·s. Oil are more viscous than water at the same temperature, so they will exhibit slower fluidity when being shaken. Their bubble formation will also be slower than water. The higher the viscosity value of the oil, the slower the bubbles will dissipate and the less fluid the liquid will be. This characteristic of oil leads to the generation of small bubbles inside the liquid that are not easily dispersed, which has an impact on the accuracy of impurity detection. Moreover, the size of the impurity points inside the oil bottle is small, and it is difficult to extract their texture characteristics. Some of the impurity points have different and irregular shapes, and it is difficult to extract the contour of a single impurity point.

In actual liquid impurity detection process, images captured by visible light cameras are sensitive to environmental lighting or other factors. When the bottle is specular reflective, the camera cannot clearly capture the impurities. And when the color of the liquid is similar to the impurities, the impurities are easy to be confused with the background13. In contrast to visible light cameras that capture the full range of appearance features, event cameras only output localized appearance features with local pixel-level brightness changes14. Event cameras are bio-inspired sensors with high dynamic range, low latency, and high temporal resolution. The portion of the output with brightness change information is white and the rest is black. Each output pixel point is represented as an event and the event is denoted as (t, x, y, p). Where, (x, y) is the pixel coordinates, t is the timestamp and p is the polarity.

The impurities contained within different liquids vary due to differences in product formulation, manufacturing processes and quality control. Moreover, the small size and huge number of the impurity points lead to a labor-intensive and time-consuming labeling process. Image labeling also requires specialized knowledge and experience to ensure the accuracy and reliability of the annotation results. For these reasons, the field of impurities detection still lacks a more comprehensive and larger dataset for impurity species. In this paper, a dual-modal edible oil impurity dataset (DMEOI) was developed by combining the event mode of the event camera with the full-picture mode for image acquisition.

The main contributions of this paper are as follows:

-

(1)

A dual-modal edible oil impurity dataset is developed. The sample images contain five common edible oils. The impurity samples contain the hairs, tin beads, metal fragments and raw material fragments. Different impurity sizes are simulated using the tin beads with the diameter of 0.5 mm, 0.4 mm, 0.3 mm, 0.2 mm respectively.

-

(2)

Two types of high-quality manual annotations are provided according to the type of impurities. The first is a uniform labeling of tin beads of different sizes, metal fragments, raw material fragments and hairs as “impurity”. The second is to label the different impurities according to their respective types.

-

(3)

The effectiveness of the DMEOI dataset is evaluated using various existing object detection algorithms. The usefulness of the dataset for future research on computer vision-based edible oil impurity detection is explored.

Methods

The image acquisition system consists of four parts: the LED light source, the event camera, the transparent conveyor belt and the oil bottle. In this paper, the event camera is fixed on a tripod and faces the oil bottle. It is connected to the computer via a USB cable. The light source is placed under the transparent conveyor belt. The image acquisition device is directly arranged in the production line and has no harsh requirements in the factory production environment. Figure 1 shows the structural layout diagram of the image capturing procedure.

Lighting options

The core of a machine vision system is the image acquisition and processing, and image quality is critical to the entire vision system. By choosing the appropriate light sources and designing a reasonable illumination method, the features of the object can be highlighted, and the background and interference information will be filtered and faded to the maximum extent15. Available illumination devices include the strip light source, ring light source, surface light source, coaxial light source and so on. The ring light source can provide different angles of illumination, highlighting the three-dimensional effective information of the object. It can effectively solve the problem of diagonal shadows in lighting. Coaxial light is mainly used for detecting the flat objects with a strong degree of reflection. It is mainly used to detect bumps, scratches, cracks and foreign objects on the flat and smooth object surfaces. Strip light source is suitable for imaging occasions with large-size features16,17,18.

Compared to several other light sources, surface light sources provide the uniform illumination. It can reduce the influences of shadows and reflections, and decrease the noises and interferences in the image. It is widely used in object detection scenarios19. In this paper, a surface light source is applied in the impurity image acquisition process. The light source is white in color and its input voltage is 24 V. The model is KM-FL240175-W, and the size is 255 mm × 190 mm. It is placed under the conveyor belt and illuminates from the bottom up.

Image acquisition device

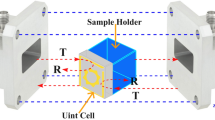

Common event cameras are categorized as the Dynamic Vision Sensors (DVS), Asynchronous Time-Based Image Sensors (ATIS), and Dynamic Active Pixel Vision Sensors (DAVIS), depending on the manufacturer and technical specifications. The DVS is the most basic and first to be developed as an event camera. The ATIS pixel structure contains two sensors capable of providing event information along with some grayscale information. The DAVIS camera combines a DVS camera with a conventional active pixel sensor (APS) camera. It is capable of outputting scene events and grayscale information simultaneously. Considering the performance, the application cost and the technical support from the manufacturer, a CeleX5 event camera from the CelePixel Technology Co. Ltd. is used to collect event-based and visible light images of edible oil impurities. The event camera model is CeleX5_MP with a resolution of 1280 × 800. The lens resolution is 10 mega pixels and the focal length is 16 mm. The power consumptions in the event mode and full-picture mode are 390 mW and 470 mW respectively. Besides, the camera has a dynamic range of 120 dB, a delay of 1μs/min, and an input voltage of 3.3 V. Traditional cameras have a dynamic range of only 80 dB and a delay of 1 ms. In contrast, event camera has a higher dynamic range and lower latency. Each pixel in CeleX5 event camera’s sensor can individually monitor the relative change in light intensity and report an event if it reaches a certain threshold. Row and column arbitration circuits process the pixel excitation signals in real time and ensure that they are processed one by one in an orderly fashion even when multiple requests are received simultaneously. The sensor outputs a continuous stream of asynchronous data based on the events that are excited20,21. Its working principle is shown in Fig. 2.

Basic working principle of the CeleX5 Chipset35.

CeleX5 event camera has two modes: the event mode and the full-frame mode. Event mode includes the event off-pixel timestamp mode, event in-pixel timestamp mode, and event intensity mode. The full-frame mode includes the full-picture mode and optical-flow mode. Event off-pixel timestamp mode detects dynamic information in the field-of-view and outputs only the row/column address of the detected events. Event in-pixel timestamp mode outputs the row/column address of the detected event and outputs the internal timestamp information. However, there is no brightness information in this mode. Event intensity mode outputs the row/column address and luminance information of the detected event, but there is no optical-flow information in this mode. Full-picture mode generates image frames with pixel luminance information, and the luminance information of all the pixels is sampled simultaneously at a certain moment. Optical-flow mode generates image frames with optical-flow information. In this paper, we use the event off-pixel timestamp mode and full-picture mode of the camera to output edible oil impurity images.

Event image preprocessing

Noises are commonly present in images. For event cameras, there are three reasons for generating noises during the imaging process. Firstly, the event camera is sensitive to junction leakage current and photocurrent, which increases the impact of background noises. Secondly, as compared with traditional cameras, the event camera does not have the smoothing function, which amplifies the background noises22. Thirdly, under low contrast conditions, the logarithmic conversion operation further amplifies the noises23. Therefore, it is necessary to remove the noises from the event images before dataset labeling.

The purpose of image filtering is to suppress the noise of the target image whilst preserve detailed features as much as possible. Common filtering algorithms include the median filtering, mean filtering, Gaussian filtering and bilateral filtering24. The median value of a median filter is a robust statistic that is not easily affected by discrete noise points. The background noises in the event image are mostly salt and pepper noises scattering in the image. Therefore, the median filter can effectively remove this noise and keep the object’s edges clear. Besides, when compared with other filtering methods, median filtering is simple and efficient. In this paper, a median filter with size 3-by-3 is used to reduce the noises in event images. The results of the event image before and after filtering are shown in Fig. 3. In the actual application, classical filtering algorithms can be chosen for image preprocessing according to the characteristics of the noises.

Data Records

The dual-modal dataset DMEOI of the event and visible light images is deposited at Figshare repository25. Sample images of different sizes of impurities are shown in Fig. 4. Sample images of different types of impurities are shown in Fig. 5.

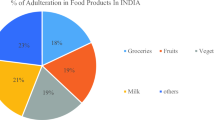

DMEOI is a dual-modal oil impurity dataset collected using a CeleX5 event camera. The five types of oil samples in the dataset are peanut, soybean, olive, sesame, and rapeseed oil. The impurity samples contain the tin beads of different sizes, hairs, metal fragments, and raw material fragments. There are a total of 10,432 images of tin bead impurities, 2,138 images of hair impurities, 570 images of metal fragments, and 1,382 images of raw material fragments. The number of visible light images and event images accounts for half each. The details of the dataset are shown in Table 1 and the percentage of each type of impurity image is shown in Fig. 6.

The DMEOI dataset is a VOC dataset stored separately in different modes. The structural information of the storage folder is shown in Fig. 7. Subfiles are created with the name of the mode and contain a variety of edible oils. Impurities are stored separately according to their respective species names. In event mode, the original images are stored in folders according to the name of impurities. The denoised images are stored in the “denoised” folder under different names. The images of the peanut oil and olive oil are stored in the same folder. Detailed information is stored in the introduction text file. The remaining images of the oil impurities are stored separately. The XML file of the impurity label is stored in the “Annotations” folder. Impurity images are stored in “JPEGImages” folder. Visible light images are stored in the same way as event images. The same images from different modals have the same name in the folder. The raw video files collected are also included in the dataset for subsequent use.

For ease of use, two types of labels are provided in the dataset. The first labels the tin beads of different sizes, metal fragments, raw material fragments and hair as “impurity”. The second labels the 0.2 mm, 0.3 mm, 0.4 mm, and 0.5 mm tin beads as “tin beads”, metal fragments as “metals”, hair as “hairs”, raw material fragments as “raw materials”.

Technical Validation

In this paper, four object detection methods, SSD (Single Shot Multi-Box Detector), RetinaNet, YOLOv5 and YOLOv7, are used to test the validity of the DMEOI dataset and evaluate the usability of the dataset. SSD model is a feed-forward convolutional neural network. It combines the anchor mechanism of Faster-RCNN and the regression idea of YOLO algorithm. Multi-scale regression is performed on every part of the entire input image. Various fixed scale bounding boxes are used to predict the probability of the object being in the image26. This network replaces the last two fully connected layers of the VGG16 network with convolutional layers. Six feature layers with different scales have also been added to Conv4_3 for extracting multi-scale features27. The SSD algorithm not only maintains the fast-computing performance of the YOLO algorithm, but also has an accuracy comparable to that of the Faster-RCNN. The RetinaNet network mainly consists of the ResNet backbone network, the FPN (Feature Pyramid Network) feature pyramid, the classification sub-network, and the edge regression sub-network28. FPN provides top-down paths with lateral connections that up-sample low- resolution feature maps located at higher levels. The lateral connections fuse top-down elements with matching resolution bottom-up elements to generate a multi-scale element pyramid29. Meanwhile, the model integrates the Focal-Loss loss function. It effectively solves the category imbalance problem and difficult sample imbalance problem in object detection. YOLOv5 backbone layer adopts CSP (Cross Stage Partial Network) module as the main backbone network. The features of the input image are extracted five times by a series of standard convolutional layers (Con_BN_Silu)30. After convolution, the C3 module is used for feature stacking. The SPPF (Spatial Pyramid Pooling Fast) module performs pooling on the features and outputs three scales of feature layers. The Con_BN_Silu module enhances the nonlinear fitting ability of the detection model. The C3 module uses the ResNet residual network concept to divide the feature layer into two parts. The SPPF module performs a pooling operation through Con_BN_Silu convolution and three consecutive 5 × 5 pooling layers to extract a new feature layer31. The feature fusion layer consists of two paths top-down and bottom-up to achieve feature fusion at different scales. The detection layer divides the fused feature layer into three sets of grid scales. For each grid, a priori boxes with different aspect ratios are generated based on the location information. The bounding box and category probability of the detected object are then determined by matching the object to the most appropriate prior frame based on its characteristics32. Compared with YOLOv5, YOLOv7 adopts the ELAN (Effective Long-Range Aggregation Network) architecture. By adjusting the gradient routing, the training and inference efficiency of the model is improved. The training method of auxiliary head is also introduced. The auxiliary head improves the detection accuracy without affecting the inference time. In addition, YOLOv7 uses the Silu function as the activation function to enhance the nonlinear fitting ability of the model33.

This experiment is performed on a NVIDIA RTX4090 GPU with CUDA version 11.7, torch version 2.0.1, torchvision version 0.15.2, and python version 3.8. The SSD model training rounds is 12,000 and the learning rate is 0.0001; RetinaNet model training rounds is 100; YOLOv5 model training rounds is 1000, batch size is 16; YOLOv7 model training rounds is 1000, batch size is 16.

Precision is the proportion of true positive cases in the sample of predicted positive cases. Recall is the proportion of predicted positive cases out of all samples that are true positive cases34.

Where TP (True Positive) means actual positive and predicted positive; FN (False Negative) means actual positive and predicted negative; and FP (False Positive) means actual negative and predicted positive.

In this paper, different labeled datasets are fed into the deep learning network for training and detecting. Firstly, the dataset labeled as “impurity” is fed into the network for training. This part of the dataset contains 5,712 images, among which 80% of the images are training set, 10% of the images are validation set and remaining 10% of the images are test set. Various impurities are equally distributed in the three subsets. Event images and visible light images are input into the network for training respectively, and the experimental results are shown in Table 2. In the models trained from the four deep learning networks, the detection accuracy rates for event images are higher than those for visible light images. Among them, the RetinaNet network has the best detection performance for impurities in this dataset. The detection accuracy of both the event and full-pic modal images are higher than 80%. The SSD model has the worst detection performance. The detection accuracy rate of both images is lower than 15%.

Next, the datasets labeled as “tin beads”, “hairs”, “metals”, “raw materials” are input into the deep learning network for training. This part of the dataset contains 8,808 images, among which 80% of the images are training set, 10% of the images are validation set and the remaining 10% of the images are test set. Various impurity labels are distributed equally in the three subsets. Event images and visible light images are fed into the network separately for training. The experimental results are shown in Tables 3, 4.

The model trained from the event images is more effective in detecting hairs, metal fragments and raw material fragments. In terms of detection accuracy, the YOLOv7 network achieves 100% accuracy for metal impurities. The YOLOv5 network has a detection precision of more than 94% for both raw material fragments and hair strands. In terms of detection recall rate, the YOLOv7 network has a recall rate of 81.2% for hairs, and the YOLOv5 network has a recall rate higher than 80% for both hair strands and metals. The RetinaNet network has a 100% detection recall rate for tin bead impurities and raw material fragments, but the precision rate is lower.

The model trained from the visible light images has better detection results for hairs and raw material fragments. Compared with the other networks, the YOLOv5 network had the highest precision for the detection of the four impurities and the highest recall for the detection of hair and metal fragments. The accuracy of detection of hair impurities was 100% and the recall of detection was 93.5%. The YOLOv7 network has the highest detection recall rate of 73.9% for tin beads. The RetinaNet network has 100% detection recall rate for raw material fragments, but the detection precision rate is much lower.

It can be seen from Table 4 that, except for the tin bead impurity, the four models trained based on event images outperforms the models trained based on visible light images in terms of the detection accuracy. As compared with the visible light image, the event image removes the background redundant information. The edge profile information of the impurity object is highlighted. This contributes to the detection accuracy. However, for the detection of tin bead impurities, the model trained on visible light images shows better performance. This may be because the tin bead object in the event image has fewer features to learn. Learning only the contour information of the object may easily lead to false detection. Therefore, the event images can be trained in combination with the visible light images to achieve better detection results.

Usage Notes

DMEOI is a Pascal VOC format dataset. Before training, users need to first create four text files “train”, “val”, “test” and “trainval”. Then they can use the scripting tool to divide the images and XML in the dataset into different folders according to a certain proportion, and store the path in the four text files. This dataset can be flexibly converted from the VOC format to COCO or YOLO format according to specific requirements of various deep learning networks. Users may use the common conversion tools, custom scripts or online conversion platforms to upload the XML files from the dataset, and then configure the output format to COCO standard JSON file or YOLO standard txt file. After the conversion, the desired format of dataset is generated. It can be used for oil impurities detection in either single-modal or dual-modal way. It can also be used to study the performance of deep learning networks for detecting tiny weak impurities. This dataset contains only the images related to edible oil impurities. Industrial oils, e.g., motor oils, lubricants, and etc. may be considered to be added to this dataset in the future. There are two issues that need concerns when users decide to expand this dataset or build a similar dataset. First issue is how to choose a lens with the appropriate focal length. Generally, this can be determined by the following equation, according to the properties of similar triangles in plane analytic geometry.

where f denotes the focal length, h denotes the height of imaging sensor target surface, D and H are the object distance and object height respectively. In this paper, the width ™ are 3.2 cm and 8.9 cm respectively. The object distance is around 15 cm. When referring to the datasheet, it can be seen that CeleX5 image sensor has a resolution of 1280 × 800, with each pixel featuring an area of 9.8 × 9.8 μm. So, the height of sensor target surface is 9.8 × 800 μm. When substituting the values of the variables into the above equation, we get the theoretical focal length f = 13.2 mm. In practice, a lens with the focal length of 16 mm is chosen.

Second issue is that, during the video capturing process, the solid impurities should keep suspended in the liquid so that they are easier to be detected. Shaking the bottle or an emergency stop in the production line can easily meet this requirement.

Code availability

The generation of this data does not involve the use of any code. In addition, no code is required to access or analyze this dataset. However, the DMEOI dataset is available at Figshare repository to reproduce the object detection results.

References

GB/T 1534-2017, Peanut oil (in Chinese) [S], State Administration of Market Supervision and Administration, (2017).

GB/T 1535-2017, Soybean oil (in Chinese) [S], State Administration of Market Supervision and Administration, (2017).

GB/T 1536-2021, Rapeseed oil (in Chinese) [S], State Administration of Market Supervision and Administration, (2021).

GB/T 8233-2018, Sesame oil (in Chinese) [S], State Administration of Market Supervision and Administration, (2018).

GB/T 23347-2021, Olive oil (in Chinese) [S], State Administration of Market Supervision and Administration, (2021).

Shao, Z., Zhang, Y., Zhang, W. & Zhou, J. Detection of impurities in bottled Baijiu based on machine vision (in Chinese) [J]. Journal of Sichuan University (Natural Science Edition) 56(02), 235–240 (2019).

Zhang, H. Design of Tea Impurity Detection System Based on YOLOv5 Deep Learning Algorithm, 2023 International Conference on Network, Multimedia and Information Technology (NMITCON), pp. 1–6, https://doi.org/10.1109/NMITCON58196.2023.10276036 Bengaluru, India, (2023).

Tang, L. et al. Tobacco Impurities Detection with Deep Image Segmentation Method on Hyperspectral Imaging, 2023 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), pp. 1–5, https://doi.org/10.1109/ICSPCC59353.2023.10400280 ZHENGZHOU, China, (2023).

Rong, D., Wang, H., Xie, L., Ying, Y., Zhang, Y. Impurity detection of juglans using deep learning and machine vision, Computers and Electronics in Agriculture, 178, 105764, ISSN 0168-1699 (2020).

Xu, J., Li, X. & Shi, S. Research on the Application of Visual Detection Algorithm for Visible Foreign Bodies in Large Infusion (in Chinese) [J]. Industrial Control Computer 33(06), 19–21 (2020).

Yao, K., Yang, P. & Ma, S. A method for detecting impurities in liquids based on feature classification (in Chinese) [J]. Semiconductor Optoelectronics 40(05), 719–725 (2019).

Sahasrabudhe, S. N., Rodriguez-Martinez, V., O’Meara, M. & Farkas, B. E. Density, viscosity, and surface tension of five vegetable oils at elevated temperatures: Measurement and modeling. International Journal of Food Properties 20(sup2), 1965–1981, https://doi.org/10.1080/10942912.2017.1360905 (2017).

He, W. et al A Trajectory-based Attention Model for Sequential Impurity Detection, Neurocomputing, 410, 271–283, ISSN 0925-2312 (2020).

Ryan, C. et al. Real-Time Multi-Task Facial Analytics with Event Cameras. IEEE Access 11, 76964–76976, https://doi.org/10.1109/ACCESS.2023.3297500 (2023).

Yang, H. and Hui, S., Analysis of Light Source Selection and Lighting Technology in Machine Vision, 2021 International Conference on Computer Engineering and Application (ICCEA), pp. 511–514, https://doi.org/10.1109/ICCEA53728.2021.00106 Kunming, China, (2021).

Chen, Y., Kang, Y., Liu, Y., Liu, L. and Cai, X. Parallel Light Source Based Machine Vision for Length Measurement of Shaft Part Chamfer, 2023 8th International Conference on Communication, Image and Signal Processing (CCISP), pp. 240–244, https://doi.org/10.1109/CCISP59915.2023.10355840 Chengdu, China, (2023).

T. Botterill, S. Mills, R. Green and T. Lotz Optimising Light Source Positions to Minimise Illumination Variation for 3D Vision, 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization & Transmission, pp. 222–229, https://doi.org/10.1109/3DIMPVT.2012.13 Zurich, Switzerland, (2012).

Zhang, Y. & Lu, G. An Imaging Method for Sculptured Surface Defect Detection Based on Compound Lighting and Image Fusion, 2022 Global Reliability and Prognostics and Health Management (PHM-Yantai), pp. 1–4, https://doi.org/10.1109/PHM-Yantai55411.2022.9942136 Yantai, China, (2022).

Liu, H., Yan, X. & Hui, H. Positioning method of hole-type parts with internal ribs based on parallel backlighting (in Chinese) [J]. Journal of Lighting Engineering 32(02), 63–68 (2021).

Zhou, X., Liu, Q., Chan, S. & Chen, S. A review of visual tracking algorithms based on event cameras (in Chinese) [J]. Small Microcomputer Systems 41(11), 2325–2332 (2020).

Ding, S. et al. E-MLB: Multilevel Benchmark for Event-Based Camera Denoising. IEEE Transactions on Multimedia 26, 65–76, https://doi.org/10.1109/TMM.2023.3260638 (2024).

Wu, T., Gong, C., Kong, D., Xu, S. & Liu, Q. A Novel Visual Object Detection and Distance Estimation Method for HDR Scenes based on Event Camera, 2021 7th International Conference on Computer and Communications (ICCC), pp. 636-640 Chengdu, China, (2021).

Yan, C., Wang, X., Zhang, X. & Li, X. Adaptive Event Address Map Denoising for Event Cameras, in IEEE Sensors Journal, vol. 22, no. 4, pp. 3417–3429, 15 Feb.15, https://doi.org/10.1109/JSEN.2021.3139635 (2022).

Zhong, W. & Luo, Q. Iterative bilateral median filtering based on gray scale features and the principle of plurality (in Chinese) [J]. Infrared Technology 45(12), 1330–1336 (2023).

Wang, H. Dual-modal edible oil impurity dataset for weak feature detection. figshare https://doi.org/10.6084/m9.figshare.27321708 (2024).

Zhang, H., Huang, W. & Qi, J. Design and implementation of object image detection interface system based on PyQt5 and improved SSD algorithm, 2022 IEEE 10th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), pp. 2086–2090, https://doi.org/10.1109/ITAIC54216.2022.9836494 Chongqing, China, (2022).

Liu, W. et al. SSD: Single Shot MultiBox Detector, Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science(), 9905, 2016.

M. I. Daoud, A. Al-Ali, M. Z. Ali, I. Hababeh & R. Alazrai Detecting the Regions-of-Interest that Enclose the Tumors in Breast Ultrasound Images Using the Retinanet Model, 2023 10th International Conference on Electrical and Electronics Engineering (ICEEE), pp. 36–40, https://doi.org/10.1109/ICEEE59925.2023.00014 Istanbul, Turkiye, (2023).

Lin, T., P. Goyal, R. Girshick, He, K. & P. Dollár Focal Loss for Dense Object Detection, IEEE Transactions on Pattern Analysis and Machine Intelligence, 42, no. 2, pp. 318–327, Feb (2020).

Sudars, K. et al. YOLOv5 Deep Neural Network for Quince and Raspberry Detection on RGB Images, 2022 Workshop on Microwave Theory and Techniques in Wireless Communications (MTTW), pp. 19–22 Riga, Latvia, (2022).

Lu, Z., Ding, L., Wang, Z., Dong, L. & Guo, Z. Road Condition Detection Based on Deep Learning YOLOv5 Network, 2023 IEEE 3rd International Conference on Electronic Technology, Communication and Information (ICETCI), pp. 497–501, https://doi.org/10.1109/ICETCI57876.2023.10176545 Changchun, China, (2023).

Huang, M., Wang, B., Wan, J. & Zhou, C. Improved blood cell detection method based on YOLOv5 algorithm, 2023 IEEE 6th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), pp. 992–996, https://doi.org/10.1109/ITNEC56291.2023.10082206 Chongqing, China, (2023).

Cao, S., Xiao, J., Meng, X. & Yang, W. MFDF-YOLOv7: YOLOv7-Based Multiscale Feature Dynamic Fusion Small Object Detection Algorithm, 2023 5th International Conference on Frontiers Technology of Information and Computer (ICFTIC), pp. 463–471, https://doi.org/10.1109/ICFTIC59930.2023.10454141 Qiangdao, China, (2023).

Liu, S., Wang, Y., Yu, Q. Liu, H. & Peng, Z. Ceam-yolov7: Improved yolov7 based on channel expansion and attention mechanism for driver distraction behavior detection, IEEE Access, 10, pp. 129 116–129 124, (2022).

CelePixel Technology Co. Ltd. CelePixel CeleX-5 Chipset SDK Reference. China: Shanghai.

Acknowledgements

This work is supported by the Foundation of Scientific and Technological Breakthroughs Project for Social Development under the Shanghai Science and Technology Innovation Action Plan [Grant number 21DZ1205000].

Author information

Authors and Affiliations

Contributions

Huiyu Wang, Qianghua Chen and Ming Li had the initial idea for this study. Huiyu Wang, Qianghua Chen, Jianding Zhao and Liwen Xu established the datasets, i.e., Dual-modal edible oil impurity dataset for weak feature detection. Ying Zhao verifed the data. Huiyu Wang and Jianding Zhao designed and performed the technical validation. Huiyu Wang, Qianghua Chen and Jianding Zhao drafted the paper. Ming Li, Ying Zhao, Qinpei Zhao and Qin Lu jointly supervised the work. All authors participated in verifying the data and revising the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, H., Chen, Q., Zhao, J. et al. Dual-modal edible oil impurity dataset for weak feature detection. Sci Data 11, 1426 (2024). https://doi.org/10.1038/s41597-024-04305-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41597-024-04305-w