Abstract

The combination of unmanned aerial vehicles (UAVs) and deep learning has potential applicability in various complex search and rescue scenes. However, due to the presence of environmental occlusions such as trees, the performance of UAVs mounted with different optical payloads in detecting missing persons is poor. To the best of our knowledge, currently available non-occluded human target datasets are insufficient to address the challenges of automatic recognition for partially occluded human targets. To address this problem, we collected a UAV-based infrared thermal imaging dataset for outdoor, partially occluded person detection (POP). POP is composed of 8768 labeled thermal images collected from different environmental scenes. After training with popular object detection networks, our dataset performed stable average precision for partially occluded person detection and short response time. In addition, high precision of object detection by POP trained networks was not attenuated until the occlusion rate exceeded 70%. We expected POP would extend present methodologies for the search of human objects under complex occluded circumstances.

Similar content being viewed by others

Background & Summary

People frequently get lost or become injured in wilderness due to a variety of factors, including disasters, travels in unfamiliar regions and wars, etc. Methods for accurately and quickly locating missing persons are urgently needed in this field1,2. Traditional search and rescue methods, such as the use of animals and manual searches3, are limited by high costs, low efficiency in large-area, and a high potential of safety hazards. With advantages such as a free-flight view, low costs for maintenance and convenient deployment, unmanned aerial vehicle (UAV) technology has enormous potential in the search for missing persons4,5, making it an ideal platform for data and image collection6,7.

In the field of deep learning, general datasets such as The Pattern Analysis, Statistical Modeling and Computational Learning Visual Object Classes(PASCAL VOC)8,9 and Microsoft Common Objects in Context (MS COCO)10,11 have been employed in the training and evaluation of object detection networks. The development of datasets for specific scenarios has significantly improved the performance of algorithms for tasks such as object detection and object tracking via UAVs/unmanned ground vehicles (UGVs). Table 1 lists the main characteristics of the currently available public person detection datasets. The Campus12,13 and Vision Meets Drones (VisDrone) datasets14,15 consist of visible-light images of pedestrians, cyclists, and vehicles collected and annotated by UAVs and have promoted the performance of small object detection algorithms obtained by UAVs. The Benchmarking IR Dataset for Surveillance with Aerial Intelligence (BIRDSAI)16,17 dataset consists of thermal images of animals and humans collected by a fixed-wing UAV, providing support in curbing illegal animal poaching and trafficking. The Search and Rescue at Universidad de Málaga (UMA-SAR)18,19 dataset is a multi-modal dataset collected by ground vehicles consisting of visible-light images, infrared thermal images, Light-laser Detection and Ranging (LiDAR) data, and inertial measurement unit (IMU) data, and has provided strong data support in the field of post-disaster ground rescue. Wilderness Search and Rescue Dataset (WiSARD)20,21 and a high-altitude infrared thermal dataset for Unmanned Aerial Vehicle-based object detection (HIT-UAV)22,23 are datasets consisting of mid-altitude and high-altitude infrared thermal images of pedestrians and vehicles obtained by UAVs and aiming at large-area object detection in nonoccluded environments. The Airborne Optical Sectioning dataset (AOS dataset)4,24 utilizes drones with infrared cameras to capture images of people lying on the ground in forests without occlusion, for human target identification after the occlude of human body are removed through the AOS algorithm.

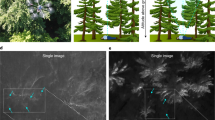

In real wilderness, missing persons are often occluded by objects such as trees. How to precisely locate the occluded human targets is one of the most crucial challenges in the field of search and rescue (Fig. 1). Although the existing general and specialized datasets for human target detection (Table 1) already contain human target labels in visible and/or infrared light channels, they are almost collected in open field without occlusion. Therefore, networks trained with these datasets can identify nonoccluded human targets in the wild very well25,26, but fail to detect the occluded ones (both literatures4,27,28,29,30 and our technical validation (Table 2 and Fig. 2) have verified this problem). By further analyzing our preliminary data, we found that the features of partially occluded targets are severely blurred in visible light images, while incomplete imaging residuals still remain in infrared images (Fig. 1). Based on the above observations and assumptions, we collected a large number of labeled residual images of occluded human targets in infrared images, thus forming the first UAV-based infrared thermal imaging dataset for partially occluded person (POP) detection. This dataset provides strong data support for the large-scale real-time search and rescue of missing persons in complex environments, especially for partially occluded persons.

Visible-light and infrared thermal images of different scenes obtained by a UAV from the same viewing angle. The two-channel images have been aligned. Infrared thermal imaging patterns can clearly identify human objects in partially occluded environments. However, in a visible-light image, if a human object is partially occluded, it can be difficult to distinguish him or her from the surrounding environment, thus making human object detection very difficult. The red arrows indicate the visible-light signal and thermal signal of the partially obscured volunteer.

Sample diagrams of detection performance of the YOLO-COCO-COCO model and YOLO-HIT-HIT model in previous examples the partially occluded persons in Fig. 8. The red box shows the results and scores of the corresponding network in the test set. Dotted blue boxes represent unrecognized objects, and solid blue boxes represent misidentified objects. White insets are partially enlarged images of the results for better visibility. The three columns in the figure represent three groups of images of different scenes and are numbered S1, S2, and S3.

The POP31 dataset includes a total of 8768 images (5548 that can be used for model training, 2320 for model validation and 900 for model testing) collected at several different outdoor scenarioes and annotations in the form of 25811 label boxes. To evaluate this dataset comprehensively, we used it to train and test popular network models, including RTMDet32, PP-YOLOE + s33, YOLOv5s34, YOLOv8s35 and DINO36, all of which are the latest object detection frameworks released after 2020. These models are characterized by their efficiency, high accuracy, and multi-platform compatibility, and they hold a certain level of academic representativeness and widespread usage in the field of object detection. Concurrently, these five models are classified as one-stage object detectors, capable of concurrently predicting object locations and categories in a single forward pass. This attribute endows them with faster inference speeds, making them suitable for real-time applications and aligning with the potential application scenarios of the POP dataset. The POP dataset fed into those object detection networks performs an average precision of more than 0.8 and average response time of lower than 0.04 s, indicating that POP has potential in developing new models for identifying missing persons under partial occlusion in the wilderness.

Methods

A DJI Matrice 30T37 UAV was used to collect the images of the POP dataset. Details on the parameters of the UAV are shown in Table 3. In this section, we will introduce the criterion of the participants recruitment, acquisition of the infrared thermal images, labeling of the objects from the image data, dataset generation and evaluation of the network fed with our dataset.

Human subjects

We recruited nine qualified volunteers who were over 18 year-old and in good health on our campus. These participants were informed about the whole process and their tasks of this study. Especially, they were notified their infrared image data, which did not contain any personal identities, would be shared in the research community as an open accessed dataset. Informed written consents were collected from all those participants. The entire process of the study was approved by the Ethics Committee of the First Affiliated Hospital of the Air Force Medical University (protocol number: KY20242250-C-1).

Data acquisition

As shown in Fig. 3, the UAV is equipped with a wide camera, a zoom camera, a thermal camera and a laser module. The thermal camera can record infrared thermal images (response band: 8–14 μm) with a resolution of 1280 × 1024 (width × height).

The POP dataset is captured with DJI Matrice30T. (a) Structure of the DJI Matrice30T; (b) Four payload modules of the DJI Matrice30T (including the laser module, wide camera, zoom camera and thermal camera); (c-e) Three images captured simultaneously by the three cameras of the DJI Matrice30T: (c) Sample image taken by the DJI Matrice30T thermal camera; (d) Sample image taken by the DJI Matrice30T wide camera; (d) Sample image taken by the DJI Matrice30T zoom camera. The imaging ranges of images (c,e) are shown in the orange and cyan boxes in image (d). The red arrows in images (c,e) indicate the infrared and visible light signals of partially obscured volunteers.

Under different flight altitudes and weather conditions, images of a variety of complex environments that missing persons may encounter were collected to form the POP dataset. The flight altitude of the UAV has a substantial impact on the accuracy of object detection; as the altitude increases, the area covered by a single image enlarges, but the object detection accuracy decreases since fewer pixels are dedicated to the individual human body, especially when the targets were obscured by surrounding objects. Therefore, the relationship between the accuracy of object detection and the flying altitude of the UAV needs to be balanced to achieve optimal detecting performance22. Based on the performance and task requirements of the thermal camera mounted on the DJI Matrice30T UAV, we set the flight altitudes of the UAV to approximately 30 m, 50 m, and 70 m for acquiring images to populate the POP dataset (Fig. 4). Temperature distributions and optical changes under different weather conditions may also affect thermal images of the human body38,39. To improve the robustness of object detection networks in different environments, we chose to acquire images under four weather conditions: cloudy, overcast, foggy and sunny. Figure 5 shows sample images of the dataset under the different weather conditions. To simulate occlusion in a real environment, we further chose different obscuring objects (such as cedars, privets, and weeping willows) at different sites. The duration of a single drone flight is approximately 25 minutes to ensure an optimal battery life and flight safety. During the image collection, we set the pitch angle of the gimbal of the UAV to −90°; that is, the gimbal allowed images to be shot vertically.

Overall, a total of 19 conditions were planned for image acquisition, numbered F1-F16 and T1-T3, accounting for the different imaging recording sites, occluders, weather conditions, and occlusions. Table 4 shows the basic information of the different experimental areas.

Besides the acquisition of the POP dataset, we also conduct another experiment for occlusion rate analysis. Nine trials of experiments were conducted with distinct occlusion modes. In each trial, a participant was asked to lie down randomly in different scenes close to some botanic occluders. The pitch angle of the UAV gimbal was set to −90°. The UAV flight speed was set to approximately 1 m/s, and sampling frequency of the thermal video were 30 frames/second. The thermal images were extracted from the video stream at a sampling frequency of one image/second. Along the flight route we pre-planned, The participant started with his body completely exposed from the perspective of the UAV, with no occlusion; then, he was gradually obscured by the trees until completely occluded. As a result, we could precisely quantify the occlusion rate of each thermal image and generally classify the occlusion mode by the participants’ lying posture. In detail, we defined the occlusion ratio as follows: at each flight altitude, we calculated the ratio of the area of the human body occluded by trees to the total area of the nonoccluded human body. The obscured area is estimated by the corresponding number of pixels; however, because this value cannot be precisely determined, the following equation is used:

By dividing both sides by \({{\rm{n}}}_{{\rm{occlu}}{\rm{ded}}}\), the occlusion rate can be calculated as follows:

where λ represents the occlusion rate, noccluded and nvisible represents the partially occluded and nonoccluded area of the person in the thermal image respectively. ntotal represents the total area of the trapped person in the thermal image without occlusion. Images of the participants completely unobscured by trees were used as the baseline to count the number of pixels they occupied, that is, ntotal. For each occluded participant, the number of pixels nonoccluded was counted to determine nvisible. When the occlusion rate was less than 5%, the object was considered unobscured (the occlusion rate was set to 0%). The data corresponding to a 10% occlusion rate covered the frames of images for occlusion rates between 5% and 15%. Similarly, when the occlusion rate was greater than 95%, the person was considered completely occluded (i.e., the occlusion rate was set to 100%).

Data labeling and dataset generation

We invited three specialized data annotation engineers to label the collected thermal images. We randomly shuffled the images and assigned them randomly to the annotators, who then annotated them according to a fixed annotation process, described as follows:

-

1.

If there is a notable difference between the object and the background, a rectangular bounding box is drawn around the object as tightly as possible;

-

2.

If an object falls on the edge of the image, it is not labeled;

-

3.

If the locations of the objects could not be clearly identified, the images were reinspected with knowledge of the objects’ ground-truth locations. Objects that could be identified with the naked eye were annotated as above, but those that could not be identified with the naked eye were not.All the images were independently annotated by the three engineers and cross-validated to ensure accuracy and consistency. Figure 6 shows sample images with standard labeled boxes. Once the annotation process was complete, we exported all the data in COCO format. Additionally, we developed a dataset format conversion tool that can convert the label boxes of the dataset to VOC format or YOLO format to provide dataset support for subsequent training of the corresponding object detection networks.

Metrics of network performance

We evaluated network performance with a commonly used evaluation indicator, the mean average precision (mAP). Intersection over Union (IoU) is a measure of the overlap between the predicted bounding box and the ground truth bounding box. The metric of mAP@0.5 is employed to assess the precision of an object detection model. mAP@0.5:0.95 represents the average mAP calculated at multiple IoU thresholds ranging from 0.5 to 0.95 with steps of 0.05. Compared to mAP@0.5, mAP@0.5:0.95 offers a more comprehensive assessment of the model’s performance. It not only evaluates the model’s ability to roughly localize objects but also considers its ability to precisely locate them.

Data Records

The POP dataset is available at OSF repository31. Users can download the dataset to train the corresponding object detection network. The dataset is available for unrestricted use, allowing users to freely copy, share, and distribute the data in any format or medium. In addition, users have the flexibility to adjust, remix, convert, and build. We provide annotations in COCO format for easy use by interested scholars.

Dataset structure

The purpose of the original dataset structure is to improve the readability of the dataset. All images are stored in JPG format and divided into a training set (F1-F12), validation set (F13-F16) and test set (T1-T3), according to the characteristics of the scene. Each image uses the same naming format: <scene_ID>_<image_ID>_<height>.JPG. <scene_ID> represents the sequence ID of the shooting site, <image_ID> represents the ID number of the image (starting from 0000 for each scene to improve readability and facilitate data management), and <height> indicates the height at which the image was captured relative to the take-off point. The folder structure of the POP dataset is given in Fig. 7.

Properties

A total of 8768 images were included in POP dataset (5548 in the training set, 2320 in the validation set and 900 in the testing set), and 25811 boxes in total were labeled. Figure 8(a) shows a histogram of the number of labeled boxes in the different object detection scenes. Based on the vegetation coverage of the shooting site, we reasonably planned the number of images to cover the shooting location in each scene. The flying altitudes of the UAV were set to 30 m, 50 m, and 70 m. Figure 8(b) shows the number of images in the dataset acquired at the different flight altitudes. To improve the robustness of the dataset to ensure effective object detection under various weather conditions, we chose typical days with cloudy, overcast, foggy and sunny weather for data acquisition. Figure 8(c) shows the number of images in this dataset under the different weather conditions. From the view of UAV, the missing person may be surrounded by random wild circumstances: partially occluded by trees and vegetation or exposed to an open field without occlusion. To better perform the task of searching for trapped persons in a real wild environment and improve the ability of related models to detect persons trapped in a nonoccluded environment, the POP dataset also includes some thermal imaging images of trapped persons in a nonoccluded environment. Figure 8(d) shows the number of labeling boxes in partial occlusion and no occlusion environments in the dataset.

Technical Validation

In this section, a comprehensive evaluation and validation were made for the usage of our proposed POP dataset. Firstly, we showed the POP dataset fed into popular object detection networks could identified hunman targets in partial occlusion efficiently. Then, we verified that existing datasets for nonoccluded person detection failed to accomplish that task. Furthermore, we demonstrated the intrinsic properties of the POP dataset; especially, under what level of occlusion the POP dataset could properly perform the identification of the human target. All the experiments were performed on an NVIDIA RTX 4090 GPU (NVIDIA Corporation, California, USA).

Performance of POP on occluded human object detection

We used the POP dataset to train five well-developed object detection networks, namely, RTMDet, PP-YOLOE + s, YOLOv5s, YOLOv8s, and DINO (RTMDet and DINO were trained, validated, and tested in the open source toolbox MMDetection40, while PP-YOLOE + s was trained, validated, and tested in the open source toolbox MMYOLO41). The pretrained models for YOLOv5s, YOLOv8s and PP-YOLOE + s were obtained from official sources, while for RTMDet and DINO, CSPNeXt-s and ResNet-50 were used as pre-trained models, respectively. The number of epochs was set to 100, the batch size was 16, and the learning rate was set to 1e-3. During the prediction task in the test set, we set the minimum confidence threshold for detection to 0.5; detection boxes lower than this threshold were ignored. Detailed initial parameters setting for those network models were listed in Table 5.

Table 6 listed the values of the evaluation metrics of the above object detection networks from the POP dataset. The training curve of those network models were shown in Fig. 9. In addition, we also applied those pre-trained networks to perform object detection tasks on the test set and example results were shown in Fig. 10. Our analysis shows that after training with five popular networks, POP dataset performs stable detection accuracy (mAP@0.5 ≥ 0.74, mAP@0.5:0.95 ≥ 0.5, precision ≥ 0.83 and recall ≥ 0.75) and short response time (FPS ≥ 25.5 frames/s, namely response time ≤ 0.039 s) for partially occluded human target in wilderness. It indicates that the POP dataset can quickly and accurately identify occluded human targets and is compatible with common object detection networks.

Sample diagrams of the running results of the five object detection networks in the test set. The red box shows the results and scores of the corresponding network in the test set. Dotted blue boxes represent unrecognized objects, and solid blue boxes represent misidentified objects. White insets are partially enlarged images of the results for better visibility. The three columns in the figure represent three groups of images of different scenes and are numbered S1, S2, and S3.

Furthermore, among the five object detection networks, YOLOv8s had the highest object detection accuracy (mAP@0.5 and mAP@0.5:0.95), the highest FPS and therefore may be more suitable for offline deployment in UAV onboard edge computing devices to achieve real-time object detection in real wild search and rescue missions (Table 6). The remaining analyses on detection tasks in this paper will refer to YOLOv8s as the object detection network in terms of training, validation, and testing.

Existing datasets fail to detect occluded missing person

In order to verify the accuracy and feasibility of the existing general dataset and specialized dataset to perform missing person detection in a partially occluded environment, we compared performances of two YOLOv8s networks, one (YOLO-COCO-POP) was trained by all person categories in COCO training and validation dataset and POP test dataset, the other (YOLO-COCO-COCO) was trained by COCO training and validation dataset and POP test dataset as the baseline. A similar training scheme were used to verify the performance of HIT-UAV on partially occluded person detection. mAP@0.5, mAP@0.5:0.95, precision and recall were used to evaluate performances of those networks. Model parameters remained same as in previous training and validation of YOLOv8s model. The comparison results are shown in Table 2. From Table 2, YOLO-COCO-POP and YOLO-HIT-POP show a significant decline in detection accuracy when performing object detection tasks in a partially occluded environment. mAP@0.5 and mAP@0.5:0.95 declined by an average of 0.695 and 0.46. Precision and recall declined by an average of 0.615 and 0.59.

Meanwhile, we used YOLO-COCO-COCO model and YOLO-HIT-HIT model to verify the detection performance of the previous examples of partially occluded persons in Fig. 10, and the results are shown in Fig. 2. For a total of eight volunteer targets in the three scenes in Fig. 2, the model trained on YOLO-COCO-COCO failed to detect the target, and the model trained on YOLO-HIT-HIT correctly detected only one target, which may be related to the morphology of the person captured by HIT-UAV. Person in HIT-UAV tends to be in a standing or walking state, and there are almost no supine or lateral recumbent targets, so pedestrians in S1 can be recognized very well, but it often faces great difficulties when aiming at supine or lateral recumbent targets, especially under environmental occlusion.

As can be seen from Fig. 2 and Table 2, it is illustrated that current publicly available object detection datasets often face great difficulties in performing the task of partially occluded human object detection in wilderness. However, the specialized dataset POP can achieve better results for that complicated task (as shown in Table 6 and Fig. 10).

Effects of the occlusion rate and flight altitude on the performance of POP

The above results show that our POP dataset can perform object detection in a partially occluded environment with good accuracy, but there is still a substantial question that to what extent of occlusion the POP dataset can maintain good performance. Since the occlusion rate of each person in wilderness is very random and is difficult to control and accurately calculate, we designed an experiment specialized for occlusion rate analysis.

Nine trials of experiments were conducted with distinct occlusion modes. For each image in each trial, we both calculated the occlusion rate of the participant and the corresponding object detection accuracy (represented by AP value) of the POP-trained YOLOv8s network at a minimum confidence threshold of detection of 0.5. If the participant was fail to detect by the network, the corresponding AP value was recorded as 0. Several samples of thermal video stream with different flight altitudes and occlusion modes, as well as corresponding identification results by the POP-trained YOLOv8 network, were uploaded in OSF repository31.

Python342 and SciPy43 were used to perform the further data statistics. To determine the minimum threshold of the performance of the POP-trained YOLOv8s object detection network in terms of the occlusion rate, we performed Kruskal-Wallis test combined with multiple comparisons on the distributions of the AP values of the total images corresponding to the different occlusion rates. Nonparametric Mann‒Whitney U tests44 were used for comparison between two non-normal groups (p value < 0.05 was considered to be statistically significant), and Bonferroni45,46 correction for multiple comparisons was employed. The p value of Kruskal-Wallis test calculated by SciPy is 3.3e-74, which is much less than 0.05, and it is considered to have a significant difference. Comparison between groups with adjacent occlusion rate were executed. The number of the multiple comparisons was n = 10, so the significant p value after correction was < 0.005 (Fig. 11). The results of the statistical analysis revealed that the AP (depicted as the median [first quartile, third quartile]) corresponding to an 80% occlusion rate was significantly lower than that corresponding to an 70% occlusion rate (0.616 [0.737, 0.530] vs. 0.786 [0.911, 0.699], p = 2.9e-5). Similarly, the AP corresponding to a 90% occlusion rate was significantly lower than that corresponding to an 80% occlusion rate (0.523 [0.671, 0.0] vs. 0.616 [0.737, 0.530], p = 4.0e-4). Finally, the AP corresponding to a 100% occlusion rate was significantly lower than that corresponding to a 90% occlusion rate (0.0 [0.0, 0.0] vs. 0.523 [0.671, 0.0], p = 6.8e-12).

When the occlusion rate is less than 70%, we can clearly observe most of the human silhouette and shape, while the median detection accuracy of POP-trained YOLOv8s exceeded 0.78, indicating good object detection performance. However, when the occlusion rate exceeds 70%, there is a considerable loss of the silhouette and morphological features of the human body, and the accuracy of object detection decreased significantly. When the occlusion rate was 90%, the participants could not be detected in one quarter of images (Fig. 11; when the occlusion rate was 90%, the third quartile value was 0), and the average object detection result was close to chance level.

Moreover, we analyzed the relationship between different occlusion modes and the AP of object detection (Fig. 12). Different occlusion modes represent different sequences of occluded human parts and thus have different silhouettes and morphological characteristics. Initially, the human body has a clear contour shape, but as the occlusion rate continuously increases, the body contour gradually disappears, which lead to a significant decrease in feature prominence for the network identification and corresponding rapid AP decline. Different tendency of AP drops could be due to the different feature decay gradients of the three occlusion modes. These findings also confirm that the pose and silhouette of the trapped person may affect the accuracy of object detection.

Relationship between the occlusion rate and recognition accuracy for different occlusion modes (the occlusion rate is shown in the upper left corner of the images). Occlusion mode 1 represents occlusion from head to foot; occlusion mode 2 represents occlusion from right leg to head; and occlusion mode 3 represents occlusion from foot to head.

Usage Notes

Researchers can use POP to modify the structure of the present object detection network, implement model pruning and Distillation47 for the improvement of network model performance and generalization. Furthermore, researchers can use POP to train corresponding object detection networks to explore diverse search and rescue modes in the complex environment. Also, POP can assist manned helicopters or other aircraft with infrared cameras to extend their detection capability in routine search and rescue tasks. Pedestrian detection tasks in partially occluded environments also have a wide range of application prospects in the field of autonomous driving, and POP can also provide potential data support for them. In the future, with the emergence of a fast detection network architecture that is more suitable for detecting small targets under large scales, it may realizes the implementation of on-board edge computing devices (e.g., Jetson Xavier NX48, Raspberry Pi49, etc.)50,51, and real-time casualty search.

Limitations:

-

1.

Limited flight altitude. The current POP dataset collects image at heights ranging from 30 to 70 meters, focusing on the low-altitude region and lacking high-altitude data.This limitation is due to the constraints imposed by the parameter settings of the infrared thermal imaging camera mounted on the DJI Matrice 30 T. When the drone flies further to 90 m, the actual pixels of its target will be further reduced. It is calculated that at a height of 90 m, when the occlusion rate is 70%, the proportion of the number of exposed pixels to the total number of pixels will be less than 0.002%, and the very few pixels will cause the target to be almost unrecognizable, resulting in the low efficient of object detection. As shown in Table 3, the pixel spacing and image size of the infrared thermal imaging camera currently mounted on the DJI Matrice 30 T drone are relatively low, which leads to significant difficulties when conducting search missions in partially occluded environments at higher altitudes.

-

2.

The number of images in the dataset is limited. Compared with other general datasets like MS COCO and VisDrone, the POP dataset contains a relatively smaller number of images. This is due to the constraints on data collection under current environmental conditions (e.g. restrictions on the chosen of occlusion modes and spots of data collection, etc.), and the actual process of data collection is relatively challenging. We plan to employ broader and more efficient data collection techniques in subsequent research to enrich the POP dataset, thereby further enhancing the representativeness of our study.

-

3.

The occlusion scenarios are relatively monotonous. In the wilderness, plants are the primary source of occlusion for missing persons. To address the current issue, we selected tall trees as occluding objects and used the DJI M30T drone equipped with an infrared thermal imaging camera for image acquisition, and creating the POP dataset. Nevertheless, the actual environments encountered by missing persons is typically more complex. We plan to incorporate more sources of occlusion in subsequent research, such as various types of buildings, vehicles, and terrains.

Code availability

The data processing code can be freely found in OSF repository31. The code is written in Python and includes an algorithm for converting the labelled boxes from the COCO format to the VOC format or YOLO format, providing support for subsequent object detection network training.

References

Lyu, M., Zhao, Y., Huang, C. & Huang, H. Unmanned aerial vehicles for search and rescue: a survey. Remote Sens. 15, 3266 (2023).

Li, J., Zhang, G., Jiang, C. & Zhang, W. A survey of maritime unmanned search system: theory, applications and future directions. Ocean Eng. 285, 115359 (2023).

Diverio, S. et al. A simulated avalanche search and rescue mission induces temporary physiological and behavioural changes in military dogs. Physiol. Behav. 163, 193–202 (2016).

Schedl, D. C., Kurmi, I. & Bimber, O. Search and rescue with airborne optical sectioning. Nat. Mach. Intell. 2, 783–790 (2020).

Tian, Y. et al. Search and rescue under the forest canopy using multiple UAVs. Int. J. Robot. Res. 39, 1201–1221 (2020).

Román, A. et al. ShetlandsUAVmetry: Unmanned aerial vehicle-based photogrammetric dataset for Antarctic environmental research. Sci. Data 11, 202 (2024).

Dong, Y. et al. A 30-m annual corn residue coverage dataset from 2013 to 2021 in Northeast China. Sci. Data 11, 216 (2024).

Everingham, M., Van Gool, L., Williams, C. K. I., Winn, J. & Zisserman, A. The Pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 88, 303–338 (2010).

Everingham, M. et al. The PASCAL visual object classes. PASCAL http://host.robots.ox.ac.uk/pascal/VOC/ (2012).

Lin, T.-Y. et al. Microsoft COCO: Common objects in context. in Computer Vision – ECCV 2014 (eds. Fleet, D., Pajdla, T., Schiele, B. & Tuytelaars, T.) 740–755. https://doi.org/10.1007/978-3-319-10602-1_48 (Springer International Publishing, Zurich, Switzerland, 2014).

Lin, T. Y. et al. Microsoft COCO: Common objects in context. Cocodataset https://cocodataset.org/#download (2017).

Robicquet, A., Sadeghian, A., Alahi, A. & Savarese, S. Learning social etiquette: Human trajectory understanding in crowded scenes. in Computer Vision – ECCV 2016 (eds. Leibe, B., Matas, J., Sebe, N. & Welling, M.) vol. 9912 549–565 (Springer International Publishing, Cham, 2016).

Kothari, P., Kreiss, S. & Alahi, A. Human trajectory forecasting in crowds: a deep learning perspective. Zenodo https://doi.org/10.1109/TITS.2021.3069362 (2021).

Cao, Y. et al. VisDrone-DET2021: The vision meets drone object detection challenge results. in 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) 2847–2854. https://doi.org/10.1109/ICCVW54120.2021.00319 (IEEE, Montreal, BC, Canada, 2021).

Zhu, P., Wen, L., Bian, X., Ling, H. & Hu, Q. Vision meets drones: a challenge. Aiskyeye http://aiskyeye.com/submit-2023/object-detection-2/ (2023).

Bondi, E. et al. BIRDSAI: A dataset for detection and tracking in aerial thermal infrared videos. in 2020 IEEE Winter Conference on Applications of Computer Vision (WACV) 1736–1745. https://doi.org/10.1109/WACV45572.2020.9093284 (IEEE, Snowmass Village, CO, USA, 2020).

Bondi, E. et al. BIRDSAI: A dataset for detection and tracking in aerial thermal infrared videos. Elizabeth Bondi-Kelly https://sites.google.com/view/elizabethbondi/dataset (2020).

Morales, J., Vázquez-Martín, R., Mandow, A., Morilla-Cabello, D. & García-Cerezo, A. The UMA-SAR dataset: multimodal data collection from a ground vehicle during outdoor disaster response training exercises. Int. J. Robot. Res. 40, 835–847 (2021).

Morales, J., Vázquez-Martín, R., Mandow, A., Morilla-Cabello, D. & García-Cerezo, A. T. U. M. A.- SAR Dataset: Multimodal data collection from a ground vehicle during outdoor disaster response training exercises. Universidad de Málaga https://www.uma.es/robotics-and-mechatronics/sar-datasets (2019).

Broyles, D., Hayner, C. R. & Leung, K. WiSARD: A labeled visual and thermal image dataset for wilderness search and rescue. in 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 9467–9474. https://doi.org/10.1109/IROS47612.2022.9981298 (IEEE, Kyoto, Japan, 2022).

Broyles, D., Hayner, C. R. & Leung, K. WiSARD: a labeled visual and thermal image dataset for wilderness search and rescue. WiSARD https://sites.google.com/uw.edu/wisard/ (2022).

Suo, J. et al. HIT-UAV: a high-altitude infrared thermal dataset for Unmanned Aerial Vehicle-based object detection. Sci. Data 10, 227 (2023).

Suo, J. HIT-UAV: a high-altitude infrared thermal dataset for unmanned aerial vehicle-based object detection. Zenodo https://doi.org/10.5281/zenodo.7633134 (2023).

Schedl, D. C., Kurmi, I. & Bimber, O. Data: Search and rescue with airborne optical sectioning. Zenodo https://doi.org/10.5281/zenodo.4024677 (2020).

Jain, A. et al. AI-enabled object detection in UAVs: challenges, design choices, and research directions. IEEE Netw. 35, 129–135 (2021).

Mittal, P., Singh, R. & Sharma, A. Deep learning-based object detection in low-altitude UAV datasets: a survey. Image Vis. Comput. 104, 104046 (2020).

Sundaram, N. & Meena, S. D. Integrated animal monitoring system with animal detection and classification capabilities: a review on image modality, techniques, applications, and challenges. Artif. Intell. Rev. 56, 1–51 (2023).

Yeom, S. Thermal image tracking for search and rescue missions with a drone. Drones 8, 53 (2024).

Tang, S., Andriluka, M. & Schiele, B. Detection and tracking of occluded people. Int. J. Comput. Vis. 110, 58–69 (2014).

Li, X., He, M., Liu, Y., Luo, H. & Ju, M. SPCS: a spatial pyramid convolutional shuffle module for YOLO to detect occluded object. Complex Intell. Syst. 9, 301–315 (2023).

Song, Z. et al. An infrared dataset for partially occluded person detection in complex environment for search and rescue. osf https://doi.org/10.17605/OSF.IO/KMCVA (2024).

He, P. et al. The survey of one-stage anchor-free real-time object detection algorithms. in Sixth Conference on Frontiers in Optical Imaging and Technology: Imaging Detection and Target Recognition (eds. Xu, J. & Zuo, C.) 2. https://doi.org/10.1117/12.3012931 (SPIE, Nanjing, China, 2024).

Issaoui, H., ElAdel, A. & Zaied, M. Object detection using convolutional neural networks: A comprehensive review. in 2024 IEEE 27th International Symposium on Real-Time Distributed Computing (ISORC) 1–6. https://doi.org/10.1109/ISORC61049.2024.10551342 (IEEE, Tunis, Tunisia, 2024).

Jocher, G. ultralytics/yolov5:v7.0 - YOLOv5 SOTA realtime instance segmentation. https://github.com/ultralytics/yolov5 (2022).

Jocher, G. ultralytics/ultralytics:v8.2.0 - YOLOv8-world and YOLOv9-C/E models. https://github.com/ultralytics/ultralytics (2023).

Zhang, H. et al. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. in The Eleventh International Conference on Learning Representations, ICLR 2023 (OpenReview.net, Kigali, Rwanda, 2023).

DJI. Matrice 30 series - Industrial grade mapping inspection drones. DJI https://enterprise.dji.com/matrice-30 (2021).

Tian, X., Fang, L. & Liu, W. The influencing factors and an error correction method of the use of infrared thermography in human facial skin temperature. Build. Environ. 244, 110736 (2023).

Schiavon, G. et al. Infrared thermography for the evaluation of inflammatory and degenerative joint diseases: a systematic review. CARTILAGE 13, 1790S–1801S (2021).

Williams, F., Kuncheva, L. I., Rodríguez, J. J. & Hennessey, S. L. Combination of object tracking and object detection for animal recognition. in 2022 IEEE 5th International Conference on Image Processing Applications and Systems (IPAS) 1–6. https://doi.org/10.1109/IPAS55744.2022.10053017 (IEEE, Genova, Italy, 2022).

MMYOLO Contributors. MMYOLO: OpenMMLab YOLO series toolbox and benchmark. OpenMMLab (2024).

Python.Python https://www.python.org/.

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

The Corsini Encyclopedia of Psychology. https://doi.org/10.1002/9780470479216 (Wiley, 2010).

Sedgwick, P. Multiple significance tests: the Bonferroni correction. BMJ 344, e509 (2012).

Cabin, R. J. & Mitchell, R. J. To Bonferroni or not to Bonferroni: when and how are the questions. Bull. Ecol. Soc. Am. 81, 246–248 (2000).

Feng, H., Zhang, L., Yang, X. & Liu, Z. Enhancing class-incremental object detection in remote sensing through instance-aware distillation. Neurocomputing 583, 127552 (2024).

NVIDIA. The world’s smallest AI supercomputer. NVIDIA https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-xavier-nx/ (2019).

Raspberry Pi Foundation. Teach, learn, and make with the Raspberry Pi Foundation. Raspberry Pi Foundation https://www.raspberrypi.org/ (2024).

Wasule, S., Khadatkar, G., Pendke, V. & Rane, P. Xavier vision: Pioneering autonomous vehicle perception with YOLO v8 on Jetson Xavier NX. in 2023 IEEE Pune Section International Conference (PuneCon) 1–6. https://doi.org/10.1109/PuneCon58714.2023.10450077 (IEEE, Pune, India, 2023).

Ma, B. et al. Using an improved lightweight YOLOv8 model for real-time detection of multi-stage apple fruit in complex orchard environments. Artif. Intell. Agric. 11, 70–82 (2024).

Acknowledgements

This work was supported in part by the Key Project of Comprehensive Research under Grant No.KJ2022A000308, in part by the Innovation chain of key industries in Shaanxi province under Grant 2021ZDLGY09-07, in part by the National Natural Science Foundation of China under Grant 62276146.

Author information

Authors and Affiliations

Contributions

Zhuoyuan Song collected the data, annotated the images, and wrote the paper. Yili Yan designed the experiments, reviewed, and edited the paper. Yixin Cao reviewed the paper. Shengzhi Jin and Yu Jing were responsible for flying the drone and taking infrared thermal images. Lei Chen was responsible for processing the infrared thermal images. Fugui Qi, Tao Lei, and Zhao Li were responsible for annotating the images. Juanjuan Xia coded the tools. Xiangyang Liang reviewed the paper and supervised the work. Guohua Lu supervised the work and provided funding. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Song, Z., Yan, Y., Cao, Y. et al. An infrared dataset for partially occluded person detection in complex environment for search and rescue. Sci Data 12, 300 (2025). https://doi.org/10.1038/s41597-025-04600-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41597-025-04600-0