Abstract

Methane decomposition using single-atom alloy (SAA) catalysts, known for their uniform active sites and high selectivity, significantly enhances hydrogen production efficiency without CO2 emissions. This study introduces a comprehensive database of C-H dissociation energy barriers on SAA surfaces, generated through machine learning (ML) and density functional theory (DFT). First-principles DFT calculations were used to determine dissociation energy barriers on various SAA surfaces, and ML models trained on these results were used to predict energy barriers for a wide range of SAA surface compositions. The resulting dataset comprises 10,950 entries containing descriptors and, as the main predicted outcome, energy barriers. It has been validated against existing DFT calculations, confirming the reliability of the ML predictions. This dataset provides valuable insights into the catalytic mechanisms of SAAs and supports the development of efficient, low-emission hydrogen production technologies. All data and computational tools are publicly accessible to support further advances in catalysis and sustainable energy.

Background & Summary

The pursuit of sustainable energy solutions necessitates the development of efficient processes for hydrogen production1,2,3, with methane decomposition emerging as a pivotal pathway4,5,6. However, traditional methane decomposition methods, such as dry reforming that integrates CO2 hydrogenation, face significant challenges including stringent reaction conditions and the complexity of catalytic reactions which often result in undesired byproducts and decreased selectivity7,8,9.

To address these challenges, our research focuses on optimizing methane decomposition using single-atom alloy (SAA) catalysts. SAAs are renowned for their high selectivity and efficiency, attributed to their uniform active sites10,11,12, which offer precise control over the catalytic process and potentially simplify methane decomposition to produce hydrogen without CO2 emissions13,14,15.

Several databases, such as the Open Catalyst Project16 and Catalysis-Hub17, provide substantial data on catalytic reactions, with a particular focus on thermodynamic properties such as adsorption and reaction energies. Because the underlying DFT calculations emphasize these thermodynamic aspects, kinetic parameters, especially reaction energy barriers, remain poorly covered. Data related to methane dry reforming, in particular, are urgently needed to bridge this gap.

To accelerate the development and optimization of SAAs for methane decomposition, machine learning (ML) and density functional theory (DFT) techniques are employed. These widely utilized computational tools predict and validate the dissociation energy barriers on various SAA surfaces, identifying catalysts with optimal performance18,19,20,21,22,23,24. This approach not only enhances the efficiency of catalyst screening but also significantly reduces the time and resources typically required for experimental testing25.

The process of dehydrogenating CHx species (where x ranges from 1 to 4) can be understood as a series of sequential steps, each involving the elimination of one hydrogen atom. Numerous studies have indicated that among the four-step dehydrogenation pathways for CHx, the dissociation barrier of CH is the highest26,27. Additionally, as shown in Supplementary Fig. 1, both the initial and final steps in the dehydrogenation of CH4 exhibit significant energy barriers that are positively correlated.

Based on these findings, a variety of SAAs are constructed, and DFT is used to calculate the energy barrier for C-H bond dissociation. The structures and adsorption sites of the different SAA surfaces are illustrated in Supplementary Figs. 2 and 3. In total, 689 energy barriers are obtained from DFT and split into a training set and a test set. The training set contains 623 C-H dissociation energy barriers; it is built by sampling the transition metals comprehensively and selecting surfaces of several of these metals as substrates. The test set contains 66 energy barriers, concentrated on surfaces that are not included in the training set but do appear in the prediction set. For each metal, the surface with the largest area proportion is chosen, namely Ag(322), Au(332), Cd(001), Hg(101), Mn(110), Mo(110), Nb(321), Pd(111), Rh(111) and Tc(100). The test set is not used in model training and serves only for validation. The distributions of the training, prediction and test sets are discussed below. All SAA surfaces are created by substituting one dopant atom for one host metal atom in the top surface layer. The substitution site is selected automatically: the surface is cleaved with Pymatgen, and a core atom of the surface layer is then identified and replaced.
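The substitution step described above can be illustrated with a dependency-free sketch. It assumes that the "surface core atom" is the top-layer atom closest to the in-plane centre of the cell; that interpretation, the helper names `pick_substitution_site` and `substitute`, and the toy Ni slab are all hypothetical. In Pymatgen itself one would typically cleave the slab with `SlabGenerator` and swap the species with `Structure.replace`; this sketch only mirrors the selection logic on plain coordinate lists.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]


def pick_substitution_site(cart_coords: Sequence[Coord], a_len: float,
                           b_len: float, layer_tol: float = 0.5) -> int:
    """Index of the top-layer atom closest to the in-plane cell centre.

    Hypothetical helper: assumes Cartesian coordinates in angstrom, z normal
    to the surface, and an orthogonal in-plane cell with a along x, b along y.
    """
    z_max = max(c[2] for c in cart_coords)
    # Atoms within layer_tol of the highest z are treated as the top layer.
    top = [i for i, c in enumerate(cart_coords) if z_max - c[2] <= layer_tol]
    cx, cy = a_len / 2.0, b_len / 2.0
    # "Core" atom interpreted here as the one nearest the in-plane centre.
    return min(top, key=lambda i: math.hypot(cart_coords[i][0] - cx,
                                              cart_coords[i][1] - cy))


def substitute(species: List[str], index: int, dopant: str) -> List[str]:
    """Copy of the species list with one host atom replaced by the dopant."""
    new_species = list(species)
    new_species[index] = dopant
    return new_species


# Toy two-layer Ni slab in a 5 x 5 angstrom cell (illustrative coordinates only).
coords = [(1.25, 1.25, 0.0), (3.75, 1.25, 0.0), (1.25, 3.75, 0.0), (3.75, 3.75, 0.0),
          (0.0, 0.0, 2.0), (2.5, 0.0, 2.0), (0.0, 2.5, 2.0), (2.5, 2.5, 2.0)]
site = pick_substitution_site(coords, 5.0, 5.0)
saa_species = substitute(["Ni"] * len(coords), site, "Co")
print(site, saa_species)
```

Restricting the search to a single top layer matches the text's requirement that only the topmost layer is doped; choosing the most central atom keeps the dopant far from the periodic images of itself.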

After training, the ML models are used to make predictions. The dopant and host metals, 30 transition metals in total, are shown in Fig. 1. SAAs with Fe, Co and Ni host metals show high dissociation activity, in good agreement with previous experimental findings28,29,30,31,32,33. Among the models tested, the GBDT model performs best, with r2, RMSE and MAE of 0.921, 0.130 eV and 0.094 eV, respectively, and it is used to predict the C-H dissociation energy barriers. Fig. 1 also shows the distribution of the predicted barriers, comprising 8,638 values for reactions occurring on top sites. Among the dopant atoms loaded on these host metals, Co, Ru, Re, Os and Ir show notable catalytic performance. The feature importance analysis over all descriptors (Supplementary Fig. 4) shows that doped_weighted_surface_energy is the most significant descriptor, accounting for over 40% of the overall feature importance. This descriptor is the weighted surface energy of the doped metal atom and is related to the ability of the dopant atom to gain and lose electrons. Other highly ranked descriptors include com_top_d_e_number, host_molar_volume, com_top_d-band, CN-B3 + 1-top05, com_top_electronegativity, host_surface_energy and host_surface_work_function. A detailed discussion of the ML results can be found in the previous work28; only the parts relevant to the database presented here are outlined.

By leveraging ML models and focusing on SAAs, this database targets the optimization of methane decomposition on single-atom alloy catalysts. The ML models are trained on the DFT results, using optimized descriptors derived from the Materials Project34, Pymatgen35 and expert knowledge. Classifying the reaction active sites improves the accuracy of the ML models. C-H dissociation energy barriers on the top sites of various SAA surfaces are predicted and further tested.

Methods

The methodology of this work consists of two main parts: DFT calculations, which generate the data for training and testing, and ML, which makes the predictions. The overall workflow is shown in Fig. 2, and each step is detailed below.

Workflow for generating the C-H dissociation energy barrier database. We start by searching for the transition state of C-H dissociation on various SAA surfaces with DFT to obtain the energy barriers. These values are then used to train the ML models, with highly relevant descriptors selected through feature engineering. The sources of the original descriptors are explained below. The prediction set is first classified by reaction site, and separate models are then trained for the different reaction sites. The database, containing 8,638 top-site entries, is predicted by the resulting model and shows high accuracy in further tests.

DFT Calculations

First-principles calculations were performed using the Vienna Ab initio Simulation Package (VASP)36,37. Electron-ion interactions were described with the projector augmented-wave (PAW) method38,39. Exchange-correlation effects were treated within the generalized gradient approximation (GGA)40 using the Perdew-Burke-Ernzerhof (PBE) functional. A plane-wave basis set with a cutoff energy of 400 eV was employed, and van der Waals interactions were corrected with Grimme's DFT-D3 method41.

For surface optimization, the energy convergence criterion for the electronic self-consistent loop was set to 1 × 10−5 eV, and the convergence criterion for the Hellmann–Feynman forces on the unconstrained atoms during ionic relaxation was set to 2 × 10−2 eV Å−1. The Brillouin zone was sampled with Monkhorst-Pack grids chosen such that k × a > 30, where k is the number of k-points and a is the lattice constant42. Transition states were located with the climbing-image nudged elastic band (CI-NEB) method43 and the dimer method44, and each was validated by frequency analysis to confirm that it has exactly one imaginary frequency.
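The k-point rule and the transition-state check above can be expressed as small helpers. This is a sketch under stated assumptions: lattice lengths are in Å, a single k-point is used along the surface normal (common for slabs but not stated in the text), the barrier is taken as the standard forward barrier E(TS) − E(IS), and imaginary frequencies are assumed to have already been parsed as negative numbers. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def kpoint_mesh(a: float, b: float, kxa_min: float = 30.0) -> Tuple[int, int, int]:
    """Smallest Monkhorst-Pack mesh with k * length > kxa_min in-plane.

    Assumes slab lattice lengths a, b in angstrom and one k-point along
    the surface normal (an assumption, not stated in the text).
    """
    def k_for(length: float) -> int:
        # floor(kxa_min / length) + 1 is the smallest integer k with k * length > kxa_min
        return math.floor(kxa_min / length) + 1

    return (k_for(a), k_for(b), 1)


def energy_barrier(e_initial: float, e_ts: float) -> float:
    """Forward barrier E_a = E(TS) - E(IS), both total energies in eV."""
    return e_ts - e_initial


def has_single_imaginary_mode(frequencies: Sequence[float]) -> bool:
    """True if exactly one mode is imaginary.

    Assumes imaginary modes were parsed as negative numbers beforehand.
    """
    return sum(1 for f in frequencies if f < 0) == 1


print(kpoint_mesh(7.5, 5.1))                              # (5, 6, 1)
print(has_single_imaginary_mode([-850.0, 120.0, 310.0]))  # True
```

Using `floor(...) + 1` rather than `ceil(...)` enforces the strict inequality: for a = 10 Å, k = 3 gives exactly 30 and is rejected, so the mesh becomes 4.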

Bulk models of the transition metals in their most stable phases were obtained from the Materials Project (MP) database34 and optimized with DFT. Candidate surfaces were generated by cleaving these bulk models and replacing one host metal atom in the topmost layer with a dopant atom. Each surface model included a vacuum layer of 15 Å. Supercell expansion was used to ensure that the in-plane lattice lengths a and b both exceed 6 Å, and enough atomic layers were included to give a slab thickness greater than 5 Å. During surface optimization, the top two layers were fully relaxed, while the remaining lower layers were fixed at their bulk equilibrium positions.
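The slab-size rules above reduce to a few integer computations. The sketch below assumes that slab thickness means the distance between the outermost atomic planes, (layers − 1) × interlayer spacing, which the text does not define; the helper names and the example spacings are hypothetical.

```python
import math
from typing import List


def in_plane_repeats(length: float, min_length: float = 6.0) -> int:
    """Smallest integer n such that n * length > min_length (angstrom)."""
    return math.floor(min_length / length) + 1


def layer_count(spacing: float, min_thickness: float = 5.0) -> int:
    """Smallest number of layers whose thickness exceeds min_thickness.

    Thickness is assumed to be (layers - 1) * spacing, i.e. the distance
    between the outermost atomic planes; the text does not define it.
    """
    return math.floor(min_thickness / spacing) + 2


def relax_flags(n_layers: int, n_free: int = 2) -> List[bool]:
    """Per-layer flags from bottom to top: True = relaxed, False = fixed."""
    return [i >= n_layers - n_free for i in range(n_layers)]


# Illustrative numbers: 2.5 angstrom surface vectors, 2.0 angstrom interlayer spacing.
reps = in_plane_repeats(2.5)   # 3, so 7.5 angstrom > 6 angstrom
layers = layer_count(2.0)      # 4, so 6.0 angstrom > 5 angstrom
print(reps, layers, relax_flags(layers))
```

The per-layer flags correspond to the text's scheme of relaxing the top two layers and fixing the rest; in a VASP workflow they would typically be mapped onto selective-dynamics flags for each atom.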

Machine learning

Feature engineering

An automated methodology was developed to select and refine descriptors for single-atom alloy catalysts. The descriptors fall into three main groups: those characterizing the elemental properties and the substrate surface, those describing the coordination environment of the substrate surface, and new descriptors that combine elemental properties with coordination numbers based on domain expertise. Elemental and surface-property descriptors were extracted from the MP database34 and Pymatgen35. Information on the metal surface structure, including the coordination numbers of single atoms in the uppermost layer, was derived through automated surface analysis with Pymatgen35. These include the coordination number within a top 0.2 radius (CN-B3 + 1-top02), a top 0.5 radius (CN-B3 + 1-top05), a top 1 radius (CN-B3 + 1-top), and the total coordination number (CN-B3 + 1). The BrunnerNN_real and MinimumDistanceNN methods were used to determine coordination numbers, and additional descriptors quantifying the coordination number within various radii above the metal surface were formulated. Because the active center depends not only on the single metal atom but also on the host atoms coordinated to it, the total electronegativity, number of d electrons and d-band center of the single metal atom and its coordinated host atoms were calculated by coordination-number weighting. These new descriptors integrate the attributes of the single-atom metal with those of its host. After descriptors with incomplete data were discarded, a set of 85 feature descriptors was compiled, all generated automatically without further theoretical calculations.
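The text does not give the exact coordination-number weighting, so the following is only one plausible form, labelled hypothetical: a property is averaged over the dopant atom and its CN coordinated host atoms, assuming all neighbours are host atoms of the same element. The function name `cn_weighted` is illustrative; the electronegativities are standard Pauling values and the d-electron counts follow the 3d occupations of Co and Cu.

```python
def cn_weighted(dopant_value: float, host_value: float, cn: int) -> float:
    """Hypothetical combined descriptor for a single-atom site.

    Averages a property over the dopant atom and its `cn` coordinated host
    atoms, assuming every neighbour is a host atom of the same element:
        (x_dopant + cn * x_host) / (cn + 1)
    """
    if cn < 0:
        raise ValueError("coordination number must be non-negative")
    return (dopant_value + cn * host_value) / (cn + 1)


# Co dopant in the top layer of Cu(111): 9 nearest neighbours for an fcc(111) surface atom.
print(cn_weighted(1.88, 1.90, 9))  # Pauling electronegativity, ~1.898
print(cn_weighted(7, 10, 9))       # d-electron count, 9.7
```

A weighting of this kind makes the descriptor sensitive both to the dopant and to how strongly it is embedded in the host lattice, which is the motivation the text gives for combining the two.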

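To evaluate the efficacy of the feature sets, the analysis used the Pearson correlation coefficient (p), calculated as in formula (1):

\[p=\frac{\sum_{i=1}^{n}\left(f_i-\bar{f}\right)\left(F_i-\bar{F}\right)}{\sqrt{\sum_{i=1}^{n}\left(f_i-\bar{f}\right)^{2}}\sqrt{\sum_{i=1}^{n}\left(F_i-\bar{F}\right)^{2}}}\tag{1}\]

Here, \(f_i\) and \(F_i\) are the i-th values of the two features being compared, \(\bar{f}\) and \(\bar{F}\) are their means, and n is the number of samples. The value of p ranges from −1.0 to 1.0, and a larger absolute value indicates a stronger correlation.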

The Pearson correlation analysis of the 85 descriptors revealed strong correlations among some of them, indicating redundant information. To address this, two methods were applied, Mutual Information Regression (MIC) and Recursive Feature Elimination with Cross-Validation (RFECV)45, both implemented in the Scikit-Learn package46. These methods ranked the features by importance, which guided the identification and removal of highly correlated features. Whenever the absolute Pearson correlation coefficient between two features exceeded the chosen threshold, the feature with the lower mutual information was discarded, so that after screening the Pearson correlation coefficient between all retained descriptors stayed below the threshold of 0.8 adopted from ref. 47.
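The correlation screening described above can be sketched as a greedy filter: features are visited in descending order of mutual information, and a feature is kept only if its absolute Pearson coefficient with every already-kept feature is at most 0.8. The greedy ordering is an assumption about how pairwise decisions were combined; in practice the MI scores would come from something like Scikit-Learn's `mutual_info_regression`, whereas here they, the column names and the values are toy placeholders.

```python
import math
from typing import Dict, List, Sequence


def pearson(f: Sequence[float], g: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long feature columns."""
    n = len(f)
    mf, mg = sum(f) / n, sum(g) / n
    cov = sum((a - mf) * (b - mg) for a, b in zip(f, g))
    sf = math.sqrt(sum((a - mf) ** 2 for a in f))
    sg = math.sqrt(sum((b - mg) ** 2 for b in g))
    if sf == 0.0 or sg == 0.0:
        return 0.0  # constant column: treated as uncorrelated
    return cov / (sf * sg)


def filter_correlated(features: Dict[str, Sequence[float]],
                      mi_with_target: Dict[str, float],
                      threshold: float = 0.8) -> List[str]:
    """Greedy redundancy filter.

    Features are visited from highest to lowest mutual information; a feature
    is dropped only when |p| > threshold against an already-kept feature,
    which by construction has higher (or equal) mutual information.
    """
    order = sorted(features, key=lambda name: mi_with_target[name], reverse=True)
    kept: List[str] = []
    for name in order:
        if all(abs(pearson(features[name], features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


# Toy columns and MI scores (illustrative only, not from the dataset).
cols = {"f1": [1, 2, 3, 4, 5], "f2": [2, 4, 6, 8, 10.5], "f3": [5, 1, 4, 2, 3]}
mi = {"f1": 0.9, "f2": 0.5, "f3": 0.3}
print(filter_correlated(cols, mi))  # ['f1', 'f3']
```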

Furthermore, mutual information is defined in Eq. (2):

\[I(X;Y)=D_{\mathrm{KL}}\left(P_{(X,Y)}\,\|\,P_X\otimes P_Y\right)\tag{2}\]

It measures the dependence between a pair of random variables (X, Y) over the space X × Y. Here, \(P_{(X,Y)}\) denotes their joint distribution, \(P_X\) and \(P_Y\) are their marginal distributions, and \(D_{\mathrm{KL}}\) is the Kullback-Leibler divergence, which measures how one probability distribution diverges from a second, reference distribution.

Model selection

In this research, we utilized a diverse array of ML techniques to assess and compare their prediction capabilities. We employed nine different ML classification algorithms: Multilayer Perceptron Classifier (MLPC), Gradient Boosting Classifier (GBC), Random Forest Classifier (RFC), Extra Tree Classifier (ETC), Decision Tree Classifier (DTC), K-Neighbors Classifier (KNC), Linear Support Vector Classifier (LSVC), Ridge Classifier (RidgeC), and ETC + KNC. In addition to these classification methods, we applied fifteen ML regression algorithms: Multilayer Perceptron Regression (MLP), Extreme Gradient Boosting Regression (XGB), Gradient Boosting Regression (GBDT), Random Forest Regression (RF), Extra Tree Regression (ETR), Adaptive Boost Regression (ADAB), Linear Regression (LR), Ridge Regression (RIDGE), Lasso Regression (LAS), Bayesian Ridge Regression (BAY), Bayesian ARD Regression (ARD), Gaussian Process Regression (GPR), Linear Support Vector Regression (LSVR), Support Vector Regression (SVR), and K-Neighbor Regression (KNN). Except for XGB, all other algorithms were implemented using the Scikit-Learn package46.

To optimize the performance of these algorithms, hyperparameters were tuned by grid search on a randomly sampled training set. For each algorithm, combinations of hyperparameters within a predefined search space were evaluated, and the combination that gave the best results on held-out data drawn from the training set was selected. Systematically testing a wide range of configurations in this way improves the predictive accuracy of the models.
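A grid search simply scores every combination in the Cartesian product of the hyperparameter lists and keeps the best one. The sketch below uses a single hold-out split, validation MAE as the score, and a toy one-dimensional k-nearest-neighbour regressor; all of these are illustrative assumptions. With Scikit-Learn, the cross-validated equivalent would typically be `GridSearchCV`.

```python
import itertools
from typing import Dict, List, Sequence, Tuple

Data = Tuple[Sequence[float], Sequence[float]]


def knn_predict(train: Data, x: float, k: int, weights: str) -> float:
    """Toy 1-D k-nearest-neighbour regressor, used only to illustrate the search."""
    nearest = sorted(zip(*train), key=lambda p: abs(p[0] - x))[:k]
    if weights == "uniform":
        return sum(y for _, y in nearest) / len(nearest)
    # Inverse-distance weights; the small epsilon avoids division by zero.
    w = [1.0 / (abs(px - x) + 1e-12) for px, _ in nearest]
    return sum(wi * y for wi, (_, y) in zip(w, nearest)) / sum(w)


def grid_search(param_grid: Dict[str, List], train: Data, valid: Data):
    """Exhaustively score every hyperparameter combination by validation MAE."""
    names = sorted(param_grid)
    best_params, best_mae = None, float("inf")
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        errors = [abs(knn_predict(train, x, **params) - y) for x, y in zip(*valid)]
        mae = sum(errors) / len(errors)
        if mae < best_mae:  # strict '<': the earliest combination wins ties
            best_params, best_mae = params, mae
    return best_params, best_mae


xs = list(range(10))
train = (xs, [2.0 * x for x in xs])   # toy data following y = 2x
valid = ([2.5, 6.5], [5.0, 13.0])
grid = {"k": [1, 3, 5], "weights": ["uniform", "distance"]}
print(grid_search(grid, train, valid))  # best: k=5 with distance weighting
```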

Once the optimal hyperparameters were identified, we assessed the performance of the trained ML models, including their predictive accuracy and generalization. The models were trained on random subsets containing 80% of the dataset (the training set), and the remaining 20% (the test set) was used to validate the predictions. Because prediction accuracy depends on how the data are split into training and test sets, training was repeated 1000 times for each ML regression algorithm to minimize the effect of randomness.

The effectiveness of each ML regression model was quantified using three statistical measures: the coefficient of determination (r²), mean absolute error (MAE), and root mean square error (RMSE). These metrics were calculated to evaluate the models’ prediction errors. Furthermore, the mean, minimum, and maximum values of these indices were employed as benchmarks to assess performance comprehensively. The goal was to identify a model that consistently performs well and exhibits minimal fluctuations across a substantial number of tests, ensuring robustness and reliability in predictive scenarios.
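The repeated random 80/20 splitting and the mean/minimum/maximum summary described above can be sketched as follows. The `fit` callback returns a predictor function; the always-predict-the-mean model and the toy data are placeholders, not the models or data used in this work. Scikit-Learn's `ShuffleSplit` offers a comparable way to generate such repeated random splits.

```python
import random
import statistics
from typing import Callable, List, Sequence, Tuple

Predictor = Callable[[float], float]


def repeated_holdout(xs: Sequence[float], ys: Sequence[float],
                     fit: Callable[[List[float], List[float]], Predictor],
                     metric: Callable[[List[float], List[float]], float],
                     n_repeats: int = 1000, train_frac: float = 0.8,
                     seed: int = 0) -> Tuple[float, float, float]:
    """Repeat random train/test splits; return mean, min and max of the metric."""
    rng = random.Random(seed)
    idx = list(range(len(xs)))
    n_train = round(train_frac * len(xs))
    scores = []
    for _ in range(n_repeats):
        rng.shuffle(idx)  # a fresh random 80/20 partition on each repeat
        train, test = idx[:n_train], idx[n_train:]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        preds = [model(xs[i]) for i in test]
        scores.append(metric([ys[i] for i in test], preds))
    return statistics.mean(scores), min(scores), max(scores)


def mean_baseline(train_x: List[float], train_y: List[float]) -> Predictor:
    """Placeholder model: always predicts the training-set mean."""
    m = sum(train_y) / len(train_y)
    return lambda x: m


def mae(y_true: List[float], y_pred: List[float]) -> float:
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)


xs = [float(i) for i in range(20)]
ys = [0.1 * x for x in xs]
print(repeated_holdout(xs, ys, mean_baseline, mae))
```

Reporting the spread (minimum and maximum) alongside the mean is what lets the comparison favour models that are stable across splits, not just accurate on a lucky one.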

The r2, RMSE and MAE are defined as:

\[r^{2}=1-\frac{\sum_{i=1}^{n}\left(Y_i-y_i\right)^{2}}{\sum_{i=1}^{n}\left(Y_i-\bar{Y}\right)^{2}}\tag{3}\]

\[\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(Y_i-y_i\right)^{2}}\tag{4}\]

\[\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|Y_i-y_i\right|\tag{5}\]

where \(Y_i\) is the value obtained from DFT calculations, \(y_i\) is the corresponding prediction of the ML model, \(\bar{Y}\) is the mean of all DFT values, and n is the number of samples. For an effective model, r2 should approach 1, indicating that the model accounts for most of the variance in the observed data, while lower RMSE and MAE values, ideally approaching zero, reflect higher precision and accuracy of the predictions.
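The three regression metrics can be computed directly from paired DFT and ML values. This sketch uses the same symbols as the definitions above (DFT values as reference, ML values as predictions); the function name and the three-point example are illustrative.

```python
import math
from typing import Sequence, Tuple


def regression_metrics(y_dft: Sequence[float],
                       y_ml: Sequence[float]) -> Tuple[float, float, float]:
    """Return (r2, RMSE, MAE) with DFT values as reference, ML values as predictions."""
    n = len(y_dft)
    mean_dft = sum(y_dft) / n
    ss_res = sum((Y - y) ** 2 for Y, y in zip(y_dft, y_ml))  # residual sum of squares
    ss_tot = sum((Y - mean_dft) ** 2 for Y in y_dft)          # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(Y - y) for Y, y in zip(y_dft, y_ml)) / n
    return r2, rmse, mae


print(regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 4.0]))  # (0.5, 0.577..., 0.333...)
```

This r2 is the coefficient of determination (1 minus the ratio of residual to total sum of squares), so it can become negative for a model worse than predicting the DFT mean.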

For classification, it was essential to account for the possibility of multiple reaction sites on a single SAA surface. A multi-label classification approach was therefore implemented to accommodate multiple potential reactions per SAA. In evaluating the classifiers, recall was prioritized over precision as the primary metric to minimize missed reactions. The Extra Trees Classifier (ETC) and K-Neighbors Classifier (KNC) gave the best recall and were selected for the prediction tasks, and their predictions were combined to further reduce the risk of missing any reactions. The classification results of all nine models on the test set are detailed in Supplementary Table 1.

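The recall score is defined as follows:

\[\mathrm{Recall}=\frac{TP}{TP+FN}\tag{6}\]

where TP (true positives) is the number of correct positive predictions and FN (false negatives) is the number of actual positives incorrectly predicted as negative.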
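Recall, TP / (TP + FN), and the combination of two multi-label classifiers can be sketched with Python sets. Two details are assumptions rather than statements from the text: the ETC and KNC predictions are combined by a label-wise union (a site counts as predicted if either model predicts it), and recall is micro-averaged over all samples and labels, as Scikit-Learn's `recall_score` does with `average="micro"`. The site labels and predictions are hypothetical.

```python
from typing import List, Set


def union_predictions(pred_a: List[Set[str]], pred_b: List[Set[str]]) -> List[Set[str]]:
    """Label-wise OR: a site is predicted if either model predicts it."""
    return [a | b for a, b in zip(pred_a, pred_b)]


def micro_recall(true_sets: List[Set[str]], pred_sets: List[Set[str]]) -> float:
    """Recall = TP / (TP + FN), pooled over all samples and labels."""
    tp = sum(len(t & p) for t, p in zip(true_sets, pred_sets))
    fn = sum(len(t - p) for t, p in zip(true_sets, pred_sets))
    return tp / (tp + fn)


# Hypothetical site labels and predictions for three SAA surfaces.
true = [{"top"}, {"top", "bridge"}, {"hollow"}]
etc_pred = [{"top"}, {"top"}, set()]
knc_pred = [set(), {"bridge"}, {"hollow"}]
print(micro_recall(true, etc_pred), micro_recall(true, knc_pred))  # 0.5 0.5
print(micro_recall(true, union_predictions(etc_pred, knc_pred)))   # 1.0
```

A union can only add predicted labels, so recall never decreases and precision may drop, which fits the stated priority of not missing any reaction.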

Data Records

The structure files obtained from DFT calculations can be accessed via Figshare48 at https://figshare.com/articles/dataset/SAA_C-H_/25836550. The DFT data used as the training set49 can be accessed at https://doi.org/10.6084/m9.figshare.27645831. The descriptors used for ML and the resulting data50 can be downloaded at https://doi.org/10.6084/m9.figshare.26200007. The scripts used to download data from MP and process it with Pymatgen51 are available on Zenodo at https://zenodo.org/records/8133294.

The dataset, organized as a CSV spreadsheet, contains 10,950 entries with descriptors and predicted C-H dissociation energy barriers on SAA surfaces28. Each row represents a different chemical composition, and each column denotes a property of that composition. Of these entries, 8,638 concern top sites, for which the barriers are predicted by ML and tested.

The first five columns of the dataset give each system's base material, reaction surface, surface proportion, dopant atom and the space group of the base material. These fundamental properties, essential for understanding the reaction context, are sourced from the MP database34 and are labelled F (for "fundamental") in Supplementary Table 2. Note that these properties are not used in training but serve to distinguish the reaction systems.

Following these fundamental details, the dataset contains 85 columns describing various properties of the systems, derived from MP or computed with Pymatgen. These properties fall into the three groups described in the Methods section and are labelled D1, D2 and D3 in Supplementary Table 2: D1 comprises the descriptors characterizing the elemental properties and the substrate surface, D2 comprises those describing the coordination environment of the substrate surface, and D3 comprises the new descriptors that combine elemental properties with coordination numbers based on domain expertise. Most descriptor names are self-explanatory, and detailed descriptions are given in Supplementary Table 2.

The dataset also includes columns indicating the adsorption sites obtained from ML predictions of reaction pathways, with '1' marking presence and '0' absence at a given site. Finally, the predicted outcomes, such as energy barriers, are provided. These outcomes and the reaction sites are labelled Outcome (O) in Supplementary Table 2, as they are the results of the machine learning analyses.
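Selecting the top-site entries from the CSV file only requires the standard `csv` module. The column names below (`host`, `surface`, `top`, `energy_barrier`, and so on) are hypothetical placeholders, since the exact headers are documented in Supplementary Table 2 rather than here, and the sample rows contain made-up numbers, not values from the dataset. For the real file, the same function can be called on a handle opened with `open(path, newline="")`.

```python
import csv
import io
from typing import Dict, List, TextIO


def top_site_rows(handle: TextIO, site_column: str = "top") -> List[Dict[str, str]]:
    """Rows whose site flag equals '1'; the column name is a placeholder assumption."""
    return [row for row in csv.DictReader(handle) if row[site_column].strip() == "1"]


# Placeholder rows with hypothetical column names; the numbers are not dataset values.
SAMPLE = """host,surface,surface_proportion,dopant,space_group,top,energy_barrier
Ni,(111),0.5,Co,Fm-3m,1,1.0
Ni,(100),0.2,Co,Fm-3m,0,2.0
Cu,(111),0.6,Rh,Fm-3m,1,3.0
"""

rows = top_site_rows(io.StringIO(SAMPLE))
barriers = [float(r["energy_barrier"]) for r in rows]
print([r["dopant"] for r in rows], barriers)  # ['Co', 'Rh'] [1.0, 3.0]
```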

The DFT test set is listed in Supplementary Table 3, where the host surface and dopant define each catalytic system, and the C-H dissociation energy barriers obtained from DFT and from ML predictions are listed side by side for comparison.

Technical Validation

Comparison to DFT calculations

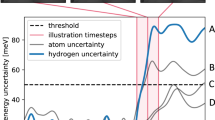

This work reports C-H dissociation energy barriers generated by ML. Because DFT is widely used for transition-state calculations owing to its high accuracy and reproducibility, we calculated the C-H dissociation energy barriers of various SAA surfaces with DFT and used the results as the test set, excluding any data contained in the training set. The composition of the training, prediction and test sets is shown in Fig. 3 and detailed in Supplementary Table 3. Points lying on the reference line (y = x) indicate perfect agreement between the C-H dissociation energy barriers obtained by the two methods. The r2 and RMSE are 0.881 and 0.145 eV, respectively, indicating high consistency.

Fig. 4 compares the C-H dissociation barriers obtained by ML and DFT. DFT results are shown as yellow and green dots, and ML predictions as light blue dots; blue dots indicate where light blue dots accumulate, reflecting predictions concentrated on the same host surface. The training set was obtained by DFT first, and the prediction set was determined subsequently. The test set was randomly selected from SAAs that were predicted but do not appear in the training set; SAAs represented by blue dots far from the yellow dots are more likely to have been tested. The DFT dots cover the whole circular area, showing that the sampling is reasonable and the sample is sufficiently representative.

We also compared our database with previous studies of C-H dissociation barriers, which were mainly first-principles investigations. As shown in Table 1, our predicted values agree well with these studies.

Code availability

The scripts utilized are compatible with Python 3.9. All code and data are released under the MIT License. Detailed usage instructions and dependencies are provided in the README file accompanying the scripts on Zenodo.

References

Hydrogen could help China’s heavy industry to get greener. Nature 610, 234 (2022).

Castelvecchi, D. The hydrogen revolution. Nature 611, 440–443 (2022).

He, M. Y., Sun, Y. H. & Han, B. X. Green Carbon Science: Efficient Carbon Resource Processing, Utilization, and Recycling towards Carbon Neutrality. Angew. Chem. Int. Ed. 61, e202112835 (2022).

Bayat, N., Rezaei, M. & Meshkani, F. Hydrogen and carbon nanofibers synthesis by methane decomposition over Ni-Pd/Al2O3 catalyst. Int. J. Hydrogen Energy 41, 5494–5503 (2016).

Al-Fatesh, A. S. et al. Production of hydrogen by catalytic methane decomposition over alumina supported mono-, bi- and tri-metallic catalysts. Int. J. Hydrogen Energy 41, 22932–22940 (2016).

Pudukudy, M., Yaakob, Z. & Akmal, Z. S. Direct decomposition of methane over SBA-15 supported Ni, Co and Fe based bimetallic catalysts. Appl. Surf. Sci. 330, 418–430 (2015).

Sánchez-Bastardo, N., Schlögl, R. & Ruland, H. Methane Pyrolysis for Zero-Emission Hydrogen Production: A Potential Bridge Technology from Fossil Fuels to a Renewable and Sustainable Hydrogen Economy. Industrial & Engineering Chemistry Research 60, 11855–11881 (2021).

Fulcheri, L., Rohani, V. J., Wyse, E., Hardman, N. & Dames, E. An energy-efficient plasma methane pyrolysis process for high yields of carbon black and hydrogen. Int. J. Hydrogen Energy 48, 2920–2928 (2023).

Kim, J. et al. Catalytic methane pyrolysis for simultaneous production of hydrogen and graphitic carbon using a ceramic sparger in a molten NiSn alloy. Carbon 207, 1–12 (2023).

Wu, X. K. et al. Unraveling the catalytically active phase of carbon dioxide hydrogenation to methanol on Zn/Cu alloy: Single atom versus small cluster. J. Energy Chem. 61, 582–593 (2021).

Tu, R. et al. Single-atom alloy Ir/Ni catalyst boosts CO2 methanation mechanochemistry. Nanoscale Horiz. 8 (2023).

Zhang, T. F. et al. Single-Atom Ru Alloyed with Ni Nanoparticles Boosts CO2 Methanation. Small 20 (2024).

Zhang, S., Wang, R. Y., Zhang, X. & Zhao, H. Recent advances in single-atom alloys: preparation methods and applications in heterogeneous catalysis. RSC Adv. 14, 3936–3951 (2024).

Ren, Y. G., Liu, X. J., Zhang, Z. J. & Shen, X. J. Methane activation on single-atom Ir-doped metal nanoparticles from first principles. Phys. Chem. Chem. Phys. 23, 15564–15573 (2021).

Bhati, M., Dhumal, J. & Joshi, K. Lowering the C-H bond activation barrier of methane by means of SAC@Cu(111): periodic DFT investigations. New J. Chem. 46, 70–74 (2021).

Chanussot, L. et al. Open Catalyst 2020 (OC20) Dataset and Community Challenges. ACS Catal. 11, 6059–6072 (2021).

Winther, K. T. et al. Catalysis-Hub.org, an open electronic structure database for surface reactions. Scientific Data 6 (2019).

Nolen, M. A., Tacey, S. A., Kwon, S. & Farberow, C. A. Theoretical assessments of CO2 activation and hydrogenation pathways on transition-metal surfaces. Appl. Surf. Sci. 637 (2023).

Xu, X. Y., Xu, H. Y., Guo, H. S. & Zhao, C. Y. Mechanism investigations on CO oxidation catalyzed by Fe-doped graphene: A theoretical study. Appl. Surf. Sci. 523 (2020).

Schumann, J., Stamatakis, M., Michaelides, A. & Reocreux, R. Ten-electron count rule for the binding of adsorbates on single-atom alloy catalysts. Nature Chemistry 16, 154–156 (2024).

Chen, A., Zhang, X., Chen, L. T., Yao, S. & Zhou, Z. A Machine Learning Model on Simple Features for CO2 Reduction Electrocatalysts. Journal of Physical Chemistry C 124, 22471–22478 (2020).

Fanourgakis, G. S., Gkagkas, K., Tylianakis, E. & Froudakis, G. E. A Universal Machine Learning Algorithm for Large-Scale Screening of Materials. J. Am. Chem. Soc. 142, 3814–3822 (2020).

Zhang, X. Y., Zhang, K. X. & Lee, Y. J. Machine Learning Enabled Tailor-Made Design of Application-Specific Metal-Organic Frameworks. ACS Appl. Mater. Interfaces 12, 734–743 (2020).

Wan, X. H. et al. Machine-Learning-Accelerated Catalytic Activity Predictions of Transition Metal Phthalocyanine Dual-Metal-Site Catalysts for CO2 Reduction. J. Phys. Chem. Lett. 12, 6111–6118 (2021).

Zhong, M. et al. Accelerated discovery of CO2 electrocatalysts using active machine learning. Nature 581, 178 (2020).

Li, K., Jiao, M. G., Wang, Y. & Wu, Z. J. CH4 dissociation on NiM(111) (M = Co, Rh, Ir) surface: A first-principles study. Surf. Sci. 617, 149–155 (2013).

Zhang, R. G., Duan, T., Ling, L. X. & Wang, B. J. CH4 dehydrogenation on Cu(111), Cu@Cu(111), Rh@Cu(111) and RhCu(111) surfaces: A comparison studies of catalytic activity. Appl. Surf. Sci. 341, 100–108 (2015).

Sun, J. et al. Machine learning aided design of single-atom alloy catalysts for methane cracking. Nat. Commun. 15 (2024).

Ashik, U. P. M., Daud, W. M. A. W. & Abbas, H. F. Production of greenhouse gas free hydrogen by thermocatalytic decomposition of methane - A review. Renew Sust Energ Rev 44, 221–256 (2015).

Alves, L., Pereira, V., Lagarteira, T. & Mendes, A. Catalytic methane decomposition to boost the energy transition: Scientific and technological advancements. Renew Sust Energ Rev 137 (2021).

Pham, C. Q. et al. Production of hydrogen and value-added carbon materials by catalytic methane decomposition: a review. Environ. Chem. Lett. 20, 2339–2359 (2022).

Amin, A. M., Croiset, E. & Epling, W. Review of methane catalytic cracking for hydrogen production. Int. J. Hydrogen Energy 36, 2904–2935 (2011).

Naikoo, G. A. et al. Thermocatalytic Hydrogen Production Through Decomposition of Methane-A Review. Front Chem 9 (2021).

Jain, A. et al. Commentary: The Materials Project: A materials genome approach to accelerating materials innovation. Apl Materials 1 (2013).

Sun, W. H. & Ceder, G. Efficient creation and convergence of surface slabs. Surf. Sci. 617, 53–59 (2013).

Kresse, G. & Furthmüller, J. Efficiency of ab-initio total energy calculations for metals and semiconductors using a plane-wave basis set. Comput. Mater. Sci. 6, 15–50 (1996).

Kresse, G. & Furthmüller, J. Efficient iterative schemes for ab initio total-energy calculations using a plane-wave basis set. Phys. Rev. B 54, 11169–11186 (1996).

Blöchl, P. E. Projector Augmented-Wave Method. Phys. Rev. B 50, 17953–17979 (1994).

Kresse, G. & Joubert, D. From ultrasoft pseudopotentials to the projector augmented-wave method. Phys. Rev. B 59, 1758–1775 (1999).

Perdew, J. P., Burke, K. & Ernzerhof, M. Generalized gradient approximation made simple. Phys. Rev. Lett. 77, 3865–3868 (1996).

Grimme, S., Ehrlich, S. & Goerigk, L. Effect of the Damping Function in Dispersion Corrected Density Functional Theory. J. Comput. Chem. 32, 1456–1465 (2011).

Monkhorst, H. J. & Pack, J. D. Special Points for Brillouin-Zone Integrations. Phys. Rev. B 13, 5188–5192 (1976).

Henkelman, G., Uberuaga, B. P. & Jónsson, H. A climbing image nudged elastic band method for finding saddle points and minimum energy paths. J. Chem. Phys. 113, 9901–9904 (2000).

Henkelman, G. & Jónsson, H. A dimer method for finding saddle points on high dimensional potential surfaces using only first derivatives. J. Chem. Phys. 111, 7010–7022 (1999).

Guyon, I., Weston, J., Barnhill, S. & Vapnik, V. Gene selection for cancer classification using support vector machines. Machine Learning 46, 389–422 (2002).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 12, 2825–2830 (2011).

Profillidis, V. A. & Botzoris, G. N. Modeling of transport demand: analyzing, calculating and forecasting transport demand. Vol. 5.6 (Elsevier, 2019).

Sun, J. & Wang, H. Structure of SAA for C-H dissociation from DFT. figshare https://doi.org/10.6084/m9.figshare.25836550.v5 (2024).

Sun, J. & Wang, H. DFT dataset with descriptors utilized in training. figshare https://doi.org/10.6084/m9.figshare.27645831 (2024).

Sun, J. & Wang, H. Single-Atom Alloy Dataset for Machine Learning. figshare https://doi.org/10.6084/m9.figshare.26200007 (2024).

Sun, J. Surface construction and descriptor acquisitione. zenodo https://doi.org/10.5281/zenodo.8133294 (2023).

Liu, H. Y., Zhang, R. G., Yan, R. X., Wang, B. J. & Xie, K. C. CH4 dissociation on NiCo (111) surface: A first-principles study. Appl. Surf. Sci. 257, 8955–8964 (2011).

Liu, H. Y. et al. Insight into CH4 dissociation on NiCu catalyst: A first-principles study. Appl. Surf. Sci. 258, 8177–8184 (2012).

Qi, Q. H., Wang, X. J., Chen, L. & Li, B. T. Methane dissociation on Pt(111), Ir(111) and PtIr(111) surface: A density functional theory study. Appl. Surf. Sci. 284, 784–791 (2013).

van Grootel, P. W., van Santen, R. A. & Hensen, E. J. M. Methane Dissociation on High and Low Indices Rh Surfaces. Journal of Physical Chemistry C 115, 13027–13034 (2011).

Hao, X. B., Wang, Q., Li, D. B., Zhang, R. G. & Wang, B. J. The adsorption and dissociation of methane on cobalt surfaces: thermochemistry and reaction barriers. RSC Adv. 4, 43004–43011 (2014).

Zhang, R. G., Song, L. Z. & Wang, Y. H. Insight into the adsorption and dissociation of CH4 on Pt(h k l) surfaces: A theoretical study. Appl. Surf. Sci. 258, 7154–7160 (2012).

Acknowledgements

This work was supported by the National Key Research Program of China (Grant No. 2022YFA1503101), Science and Technology Development Fund, Macau SAR (FDCT No. 0030/2022/AGJ), Collaborative Innovation Center of Suzhou Nano Science & Technology, Priority Academic Program Development of Jiangsu Higher Education Institutions (PAPD), 111 Project, and Joint International Research Laboratory of Carbon-Based Functional Materials and Devices, Jiangsu Funding Program for Excellent Postdoctoral Talent.

Author information

Contributions

Youyong Li and Weiqiao Deng conceived and supervised the research. Jikai Sun and Huan Wang performed the DFT calculations. Jikai Sun performed the ML training and prediction. Huan Wang and Jikai Sun tested the database. Huan Wang wrote the paper. All authors discussed the results and commented on the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, H., Sun, J., Li, Y. et al. Machine learning and DFT database for C-H dissociation on single-atom alloy surfaces in methane decomposition. Sci Data 12, 648 (2025). https://doi.org/10.1038/s41597-025-04885-1
