Abstract

The performance and scope of computer vision methods applied to ophthalmic images is highly dependent on the availability of labelled training data. While there are a number of colour fundus photography datasets, FOVEA is to the best of our knowledge the first dataset that matches high-quality annotations in the intraoperative domain with those in the preoperative one. It comprises data from 40 patients collected at Moorfields Eye Hospital (London, UK) and includes preoperative and intraoperative retinal vessel and optic disc annotations performed by two independent clinical research fellows, as well as short video clips showing the retinal fundus though biomicroscopy imaging in the intraoperative setting. The annotations were validated and converted into binary segmentation masks, with the code used available on GitHub. We expect this data to be useful for deep learning applications aimed at supporting surgeons during vitreoretinal surgery procedures e.g. by localising points of interest or registering additional imaging modalities.

Similar content being viewed by others

Background

Preoperative as well as intraoperative imaging in ophthalmology has long been an integral part of the process of identifying, localising, and monitoring disease in advance of any surgical treatment, as well as for orientation during procedures1. Traditionally, assessment of preoperative images relied exclusively on clinicians and grading centres. The emergence of capable deep learning algorithms in recent years, however, has shifted this focus towards the efficiency and accuracy of modern computer vision methods. These have the potential to alleviate workload, improve surgical outcomes, increase patient satisfaction, and leverage data from a multiplicity of sources for tasks intractable for clinical professionals2.

This development both feeds off the availability and in turn necessitates the further collection of clinical data from a variety sources, as the benefit of large manually labelled datasets to the performance of deep learning models has been well established. However, current developments such as extensive and powerful foundation models – a term coined by Stanford University Human-Centered Artificial Intelligence for “models [...] trained on broad data [...] that can be adapted to a wide range of downstream tasks”3 – are making the use of few-shot methods more viable4. Other current approaches include the use of contrastive learning, or even extracting knowledge inherent in a diffusion model trained on unlabelled data5. While not eliminating the need for manual labelling in the target domain, this does underline the importance of high-quality annotations over sheer quantity.

There exist a number of public datasets such as STARE6, DRIVE7, CHASE_DB18, and more recently FIVES9 for retinal vessel segmentation, or ORIGA10, G102011, and PAPILA12 for optic disc segmentation. However, all of these are exclusively based on preoperative colour fundus photography – and the availability of such data has facilitated a large and varied body of work e.g. on preoperative retinal vessel segmentation13 or tasks like the detection and grading of spontaneous retinal venous pulsations14.

Unfortunately, in the much more variable and therefore challenging intraoperative domain much less has been achieved. In tasks such as intraoperatively building a vessel map15,16, noise in the vessel detection obtained from a more traditional exploratory algorithm is explicitly noted as a limiting factor. In-domain labels therefore have great potential to help intraoperative localisation and segmentation by unlocking the use of deep learning methods and serving as a validation benchmark.

It is in this context that we present FOVEA, a dataset for retinal Fundus images with Optic disc and retinal VEssel Annotations, consisting of high-resolution preoperative fundus photography and intraoperative microscopy frames from 40 patients, each annotated by two independent clinical research fellows at Moorfields Eye Hospital (London, UK) and then processed and validated to ensure consistency and quality. To our knowledge, FOVEA is the first dataset to include high-quality annotations of combined preoperative and intraoperative retinal fundus images.

The provision of such labels with the matching annotations of preoperative images from the same patients also provides an opportunity to register retinal features in the preoperative domain with features detected during surgery. This can further improve orientation during procedures, especially relevant in the context of novel robotic tools17 which may partially rely on computer vision for navigation. Additionally, this could allow augmented reality to move past applications in training18 to providing clinicians with an enhanced surgical environment during actual procedures offering up information inherent in additional imaging modalities such as optical coherence tomography.

Methods

The FOVEA dataset19 has been carefully processed to obtain a high-quality, validated benchmark. The overall method can be divided into five steps which will be explained in full detail in the following sections. Figure 1 shows an overview of the process.

Overview of the method. In a first step, data in the preoperative (blue path) and intraoperative (red path) domain was acquired from consenting adult patients at Moorfields Eye Hospital (London, UK). Then, data not satisfying quality conditions or belonging to patients with certain conditions was removed, resulting in a total of 40 patients in the final dataset. In the intraoperative case, a specific frame was selected from the 30 s clips obtained from the beginning of vitreoretinal surgeries. Annotation was performed according to a set protocol, by two independently acting clinical experts. The raw annotations were then processed to obtain clean binary masks. Finally, the data was fully anonymised and provided with a new sequential identifier from 1 to 40, resulting in a final FOVEA dataset containing a total of 601 files.

Acquisition

All data was collected at Moorfields Eye Hospital between 2017 and 2021, from adult patients undergoing pars plana vitrectomy in the course of standard of care treatment. A number of different conditions were present in this initial patient population, most commonly epiretinal membrane and macular hole, but also optic disc pit maculopathy, posterior intraocular lens dislocation, and different types of floaters.

Informed consent for the use of this data in scientific journals as well as its publication was obtained from the patients, with the national data opt-out service being applied. All data was acquired in accordance with the 1964 Helsinki declaration and its later amendments or comparable ethical standards, and the study was approved by the Medical Ethics Committee of Moorfields Eye Hospital (IRAS #261512). The dataset is also fully anonymised. Each record was assigned a sequential identifier – FOVEA001 to FOVEA040 – not linked to the patient’s hospital number. It has been verified that no data contains any burned-in patient identifier, or identifying metadata.

The colour fundus photography (CFP) images were acquired using a Topcon 3D OCT-1000 fundus camera after pupillary pharmacological dilation with 1.0% tropicamide and 2.5% phenylephrine during the preoperative review. Intraoperative footage was acquired using a Zeiss Lumera 700 ophthalmic microscope with an integrated RESIGHT fundus viewing system wide angle aspheric, achromatic 128D lens. Once switched on, the fundus viewing system inverted the image on the X and Y axis, and deactivated the microscope illuminating system. For this reason, videos were recorded with a fibre optic endoilluminator with a xenon straight light source.

Selection

To ensure the quality of the dataset, the imaging data collected at Moorfields Eye Hospital was pared down to 40 records by removing patients whose preoperative or intraoperative fundus images were not in line with the typical image quality, in terms of sharpness and illumination. Additionally, patients with pathologies rendering the fundus images unsuitable for annotation or non-representative for the larger patient population were also removed. Such pathologies include mainly choroideremia, with its characteristic absence of pigmentation and clearly visible choroidal vessels20, and also myopic foveoschisis, similarly altering the appearance of the fundus due to thinning of the retinal pigment epithelium and the separation of retinal layers21.

For the intraoperative vessel annotation, a frame had to be selected from the ophthalmic microscope recordings. To this end, first 750 frames – 30 seconds of video at a frame rate of 25 Hz, included in this dataset – were clipped from the start of the procedure, showing an exploration of the retinal fundus previous to the planned surgical intervention itself taking place. Then, the most suitable frame was selected by visual inspection, with the intent being to maximise the visible retinal fundus area as well as the vessel tree, while minimising any unwanted phenomena such as motion blur, tools or their shadows, and uneven lighting.

Annotation

Two clinical research fellows independently annotated the optic disc and the retinal vessels in the preoperative as well as the intraoperative retinal fundus image. They both followed a simple annotation protocol:

-

Optic disc annotation: The optic disc was identified as the round or ovoid structure nasal to the macula, from which the retinal vessels emerge. The entire surface of the optic disc was annotated as a contiguous segment, starting by delineation of the edges but disregarding any peripapillary features, such as peripapillary atrophy or scleral crescent.

-

Retinal vessel annotation: The standard for retinal vessel annotation was to begin at the vessel emergence from the optic nerve, following the vascular path continuously along the arcades including bifurcations into secondary and tertiary branches. Isolated vessels were excluded unless the discontinuity was clearly caused by the image field of view. The entire calibre of each vessel was annotated following its natural curvature. If there was ambiguity about whether a vessel derived from the retinal or choroidal plexus, it was disregarded.

-

Annotation sessions were not to last more than 1 continuous hour with at least 30 minutes rest afterwards. The maximum duration of annotation per day was 4 hours.

-

Once an annotation had been completed, it was saved and not reviewed again or edited by the annotator.

In order to preserve the independence of their respective work, no communication on specific annotations and no final review or consensus processing took place between the annotators.

A small pilot study was performed to ascertain the best-suited image presentation approach for manual annotations. This trial saw both clinicians annotate a number of frames twice for three image options: full RGB, grayscale, and green channel only. Based on intra-annotator concordance and qualitative feedback, it was decided to move ahead with the use of the green colour image channel. This matches the expectation that red-free images would aid in the task of highlighting retinal but not choroidal vessels, due to green light being absorbed by blood but reflected by the retinal pigment epithelium1.

Both optic disc and vessel annotations were performed on an iPad Pro, using a pressure-sensitive Apple Pencil (2nd gen) and Procreate, a raster graphics editor. This process took an average of one hour per clinician for each of the 80 images annotated.

Processing

The annotations could be exported from Procreate as psd files and were subsequently converted to png files. They are grayscale images representing a drawn annotation of the optic disc and retinal vessels, respectively. However, this is not a binary mask, but contains smooth vessel outlines with a graduation from 0 to 1. This is due to the annotation method and represents the most natural-looking segmentation of the vessels, matching the more gradual vessel edges found in most images. This ‘raw’ annotation is included in the dataset along with the green channel image used for the annotation, but it has two issues:

-

A visual inspection revealed a small number of areas of annotated pixels seemingly uncorrelated to any visible vessel structure. This is most likely the result of accidental pen touches, and should be removed in the interest of a higher quality annotation mask.

-

For most applications such as deep learning segmentation models, a binary mask is required.

The latter can be easily achieved by thresholding the raw annotation – the most balanced choice of threshold value being 0.5. The former is addressed by finding contiguous regions of annotation in the binary mask, establishing their respective pixel count, and then deleting all with surface area less than 20 (empirically set). However, it was found that in some images these two steps also led to the unwanted deletion of small vessel branches. This is due to the light touch required while annotating very thin vessels with the pressure-sensitive pencil, resulting in parts of the annotation falling below the binarisation threshold value. These pixels are then eliminated from the annotation which causes gaps in the vessel tree, and removing contiguous regions below a certain size originally aimed at eliminating accidental pencil touches will ultimately delete such small branches entirely.

Rather manually fine-tuning the threshold values to find the right trade-off, we opted for a solution that does not introduce further bias during data processing: we apply an pixelwise OR operation to the binary mask as derived above, and the skeletonised binary mask obtained by using a threshold value of 0.1:

where:

a = raw annotation with values from 0 to 1

bin (⋅) = binarisation operation outputting 0 or 1

skel (⋅) = skeletonisation operation running on a binary mask

Combining the binarised mask with the skeleton removes any new gaps in the vessel tree, and using the binarisation threshold of 0.1 avoids very light accidental touches close to thin vessels being taken into account during the skeletonisation process. The ultimate result is a contiguous binary mask that does not contain annotation mistakes such as accidental pencil touches.

Data Records

The FOVEA dataset can be downloaded from figshare19. Inside the FOVEA.zip archive there are 601 files: 560 images, 40 video clips, and one json file. More specifically, FOVEA comprises:

-

40 preoperative RGB colour fundus images

-

40 intraoperative RGB colour video clips

-

40 RGB colour fundus images, one from each video clip

-

160 raw annotations, one for each of the two annotators, for each of the 80 colour fundus images in total. It contains, in the order of the RGB channels, the vessel annotation, the optic disc annotation, and the green channel of the image used for annotation

-

320 binary annotation masks, one for the vessel and one for the optic disc segmentation in each raw annotation

-

A metadata file containing information about the video frame rate and resolution, the colour fundus image dimensions, the main condition present in each dataset record, and the frame index (zero indexed) at which the intraoperative colour fundus image was selected from the respective video clips

File names conform to the following scheme: \(\mathrm{FOVEA}\left|\begin{array}{l}{\mathtt{1}}\\ {\mathtt{2}}\\ \vdots \\ {\mathtt{40}}\end{array}\right|\,-\,\left|\begin{array}{l}{\mathtt{p}}\\ {\mathtt{i}}\end{array}\right|\,-\,\left|\begin{array}{l}\mathrm{img}\\ \mathrm{vid}\\ \mathrm{raw}\\ \mathrm{ve}\\ \mathrm{od}\end{array}\right|\,-\,\left|\begin{array}{l}{\mathtt{1}}\\ {\mathtt{2}}\end{array}\right|\), with the four choices corresponding to:

-

–

the sequential identifier: 1 through 40

-

–

the domain: preoperative or intraoperative

-

–

the type: image, video (only intraoperative), raw annotation, vessel map, optical disc map

-

–

the annotator: 1 or 2

Table 1 contains all relevant technical information for each file type, and Fig. 2 shows an example of a partial data record, with corresponding file names.

A partial record from the FOVEA dataset, showing the image, the raw annotation and both binary masks in the preoperative (a) to (d) as well as the intraoperative case (e) to (h), for one out of the two annotators. Note that due to the imaging setup during surgery, the intraoperative microscopy is rotated by 180∘ compared to the preoperative colour fundus photography. Filenames are given in the sub-captions.

Technical Validation

The entire dataset was extensively analysed in order to validate the final output and ensure the consistency and quality of the annotations. This section will justify the choices made in the processing step, analyse the result, and illustrate a use of the dataset in the context of current deep learning methods.

Processing

The approach of combining the skeleton of the vessel annotation to the mask obtained by thresholding the raw annotation can be validated both visually and statistically. Figure 3 illustrates the issue: very fine retinal vessels were annotated with very little pressure on the Apple pencil to obtain a narrow pencil stroke, but this also leads to low values in the raw annotation which can easily fall below the chosen binarisation threshold, fragmenting the vessel.

A crop of the vessel annotation of FOVEA003_p by annotator 1, demonstrating the post-processing of raw annotations. (a) The raw vessel annotation in red, showing different intensities due to the pressure-sensitive nature of the Apple pencil used. (b) The raw annotation thresholded using a value of 0.5. While perfectly viable for the larger vessels, it leaves the thin vessel in the middle fragmented - eliminating small isolated areas of annotation would delete this entire vessel branch. (c) The skeleton of the mask obtained from thresholding the raw annotation with a value of 0.1 overlaid on to the middle image. The skeleton pixels serve to close the gaps and will preserve the continuity of the vessel tree.

Simply reducing the threshold value is an option, but will also widen all vessel segmentations, which may be undesirable. This is avoided by using the skeleton to fill the gaps instead. Figure 3 shows it to be well-suited to reconnecting the full vessel tree. At the same time, this will not affect isolated single areas of annotations caused by accidentally brushing the iPad screen with the pencil: they can still be eliminated by simply removing areas below a size limit.

This is also confirmed by evaluating the masks statistically: across the entire dataset of 160 vessel annotations, combining with the skeleton reduces the count of isolated annotated areas with a size below 100 px from 1093 to 163, and their mean size from 12 px (95th percentile: 51 px) to 3 px (95th percentile: 6 px). This is a very good indication that all remaining unconnected areas smaller than e.g. 20 px are accidental pencil touches and can be removed without losing any part of the actual vessel tree.

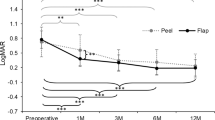

Statistical Analysis

Figure 4 shows the coverage of the annotations as a percentage of the total number of pixels per image across the dataset. It is clear that significantly fewer pixels were annotated for intraoperative data. This is to be expected, as the intraoperative field of view is larger and a lower zoom level is in use - as evidenced by optic disc areas being reduced 7 to 8-fold in images taken during surgery - both reducing the vessel widths as measured in pixels. Additionally, parts of the visible fundus are often occluded, and image clarity is generally much reduced with many smaller vessels not being visible.

Mean values across the dataset can be gleaned from Table 2. Annotator 1 (henceforth “A1”) consistently annotated fewer pixels than annotator 2 (henceforth “A2”) – an observation borne out by the mean across the dataset: 5.04% vs 5.71% for the preoperative and 0.92% vs 1.04% for the intraoperative fundus. A similar trend holds true for the optic disc annotations, with 1.51% vs 1.60% and 0.25% vs 0.29%, respectively.

However, extending the analysis to the total length of the skeleton of the retinal vessel segmentation shows a different picture: in this context, the numbers no longer significantly different: 0.85% for both annotators in the preoperative domain, and 0.37% vs 0.38% in the intraoperative domain. This means that both annotators put the same effort into following the vessel tree - and though finer branches often do not coincide between annotators (see Fig. 5), both end up with a similarly complex structure. However, they do tend to disagree on the width of vessels: A2 appears to be interpreting more of the often blurry vessel edges as belonging to the vessels than A1. Another explanation is a systematic difference in annotation technique between the two annotators, caused for example by the length of individual pencil strokes (see the Methods section).

A comparison of annotations in the preoperative (left, FOVEA006) and intraoperative (right,FOVEA012) domain. (a), (b) Partially transparent overlay of annotation 1 (blue) and annotation 2 (green) over a crop of the original image, highlighting disagreements between the annotators. (c), (d) The same crop, with white pixels as the intersection between the two annotators, and blue and red as the skeletons of the pixels marked by annotators 1 and 2, respectively. (e), (f) Smaller original image patches contained in the above crops, illustrating the difficulties of selecting a definitive vessel path.

In fact, these numbers allow us to calculate a mean annotated retinal vessel width. For preoperative images, the results are 5.9 px and 6.7 px, for intraoperative images 2.5 px and 2.8 px for A1 and A2, respectively. As a rough estimation and neglecting possible differences in vessel width distributions across the vessel tree, this results in an absolute scale difference between preoperative and intraoperative images in the dataset of about 2.4, at the given resolutions. This is a good match with the average ratio of optic disc areas, the square root of which can be interpreted as the scale difference: 2.4 for A1 and 2.5 for A2 – demonstrating the consistency of the annotations across domains and classes.

Table 2 also contains data on the Dice score, formulated as:

where:

A = Annotated by both A1 and A2

B = Annotated by A2 but not A1

C = Annotated by A1 but not A2

D = Annotated by neither A1 nor A2

It is very high for the optic discs, and 0.68 and 0.52 for the preoperative and intraoperative vessels. As a comparison, the retinal vessel annotations in the 28 records of the (preoperative) CHASE_DB1 dataset achieve a value of 0.78. However, it is worth noting that all subjects of the CHASE_DB1 dataset were healthy, and the individual images are at a significantly lower resolution (4 times fewer pixels overall) which hides most of the most ambiguous vessels where the highest inter-annotator disagreement would be expected.

Additionally, we also calculated the extended Cohen’s Kappa coefficient22, a newer attempt at assessing the agreement between two annotators in the field of semantic segmentation:

Despite a different formulation, the values are extremely similar to the Dice score. Values above 0.4 can be seen as moderate agreement, above 0.6 as substantial agreement, and above 0.8 as almost perfect agreement23. Again, the lower value of agreement in the intraoperative domain reflects the much increased difficulty of annotations already mentioned at the start of this section.

Visual Analysis

Figure 5 shows one annotation comparison in each domain. This underscores the difficulty of the task, and illustrates how A1 annotated some vessels that A2 did not, and vice versa. The intersection of their annotations is a viable ground truth for thicker vessels, but becomes quickly segmented when progressing to the thinnest ones, where just two pixels offset in the annotation can mean the binary masks no longer overlap fully. Note that we do not make a qualitative judgement, as both annotations should be seen as individual and independent interpretations of the ground truth made by subject experts.

Usage Notes

Smaller retinal vessel branches are often difficult to distinguish clearly. This is especially true for intraoperative imaging modalities with their increased incidence of blur, inadequate illumination, or artefacts. Different annotators will often disagree, not only on the annotation of thin vessel branches, but also on other points such as the exact interpretation of vessel widths in the presence of blur. Some datasets such as FIVES9 avoid this by using a more complex annotation workflow aimed at finding a common ground truth amongst a group of annotators. In this case, the goal was to provide two independent ground truths annotations by subject experts, each with their own interpretation of the data.

It is therefore up to the dataset user to decide which annotation to use, or how to combine them. To facilitate this, we not only supply the raw annotations along with the binary masks derived from them (see Methods), but also a number of Python methods which will speed up the process. With their help, the dataset can be easily reprocessed e.g. using different threshold values for the conversion into binary masks, or by removing all but the main vessel tree, i.e. the largest contiguous area of annotated pixels.

As with any data, users should be mindful of potential biases and limitations. This dataset originates from a specific hospital and a relatively small patient group. Some degree of variability across institutions is expected due to local demographic factors such as age and gender, differing patient conditions or clinical practices, as well as variations in imaging equipment. This is likely to cause subtle differences in the data distribution which may impact generalisation to that domain.

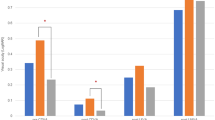

Segmentation Application

To demonstrate the value of the data especially in the intraoperative domain, we fine-tuned a Segment Anything Model (SAM)24 using the Learnable Prompt SAM repository4 on the FOVEA dataset to predict retinal vessel segmentations. With this, we also establish a performance baseline on this new dataset.

We used the ViT-Base version of the SAM and the default SGD optimiser. To determine the effect of the loss function, we tested both the standard cross-entropy as well as Lovász-Softmax25. Data train/test splits are 30/10, our suggested standard for the dataset, and 1/39, with FOVEA011 serving as the single training image. The 10 records used as the test set for the 30/10 split are: 7, 12, 26, 30, 32, 33, 37, 38, 39, and 40. They were manually chosen to balance retinal conditions, fundus appearance, and image quality between the training and test set. Code to automatically split the FOVEA dataset according to our suggestion as well as any other desired split is given in our code repository (see the Code Availability section). The learning rate was set to 0.1 and the batch size to 5. All experiments were run on a single Quadro RTX 8000 GPU with 48 GB of RAM.

An important detail is the way the images are made to fit in the model, which expects input dimensions of 1024 × 1024 px:

-

Intraoperative data (1080 × 1920 px) was cropped randomly during training, and cropped at the centre during testing. Alternatively, five crops – one at each corner and one in the centre – were extracted and run separately, with the resulting 1024 × 1024 px outputs combined to a single 1080 × 1920 px prediction. This was performed by polling patches on each pixel they cover, and setting a “vote” majority threshold above which the pixel would be accepted as containing a vessel segmentation. Setting the threshold at 0 is equivalent to obtaining the union of all five patch outputs, while a value of 0.5 requires e.g. both patches to agree in areas covered by two patches.

-

Preoperative data (1934 × 1960 px) was either cropped to 1024 × 1024 px – randomly during training, from the centre during testing – or resized to fit. While technically disregarding the aspect ratio, the almost square nature of the preoperative images means no noticeable distortion was introduced. This resizing operation also brings the mean size of optic disc and vessel features closer in line with the intraoperative data (see the Statistical Analysis section).

Results are listed in Table 3. Some straight-forward observations can be made: as usual, training and testing in the same domain results in better performance. Intraoperative Dice scores are worse than preoperative ones, matching differences in inter-annotator agreement listed in Table 2. The Lovász-Softmax loss performance confirms previous observations showing it to achieve better results than cross-entropy on some semantic segmentation tasks26.

Statistics calculated for each test frame show the model trained with cross-entropy is more hesitant to predict a vessel, meaning that there are fewer true positives but also fewer false positives – therefore, polling patches with a threshold of 0 improves results noticeably as it does not accumulate as many disparate false positives around the image. Training with Lovász-Softmax increases both true and false positives, therefore majority polling with a threshold of 0.5 is preferred, yielding slight increase in performance. This effect is also visible in Fig. 6.

A comparison of the effect of using cross-entropy. (a) and the Lovász-Softmax loss (b) on a cropped view of the segmentation prediction. The image is FOVEA007_i, and the model was trained with i-30/10-1 (see Table 3). Green are true positives, red false positives, white false negatives, black true negatives.

The best-performing model was also evaluated on four of the videos in the FOVEA dataset, all belonging to the test split and therefore representing unseen data. Results can be viewed on the Robotics and Vision in Medicine YouTube channel as one continuous clip with a black screen inserted for 1 s between the individual videos, showing an overlay of the segmentation prediction on the original image. Notable failure moments include a zoomed-in section at 00:11 as well as a number of strongly blurred frames at 00:40.

In all experiments, testing on resized instead of merely cropped preoperative data leads to better performance, as this matches the scale of features present in the training data better. The only exception is, unsurprisingly, when the training data itself is made from preoperative image crops. While not unexpected, this result stresses the importance of considering relative feature scales when moving across domains.

Interestingly, even training on a single intraoperative frame is sufficient to obtain a useful output on the 39 remaining images, though training for too many steps tends to degrade the result. This demonstrates the capabilities inherent to extensively pre-trained foundation models despite their training images being unrelated to the target domain, and reinforces the point that even a small number of high-quality annotations in a new domain can be powerfully leveraged with modern deep learning methods.

Making use of both annotations available for each image in the dataset by randomly loading either one or the other mask during training increases performance. We presume the increased diversity in the annotation reduces overfitting on the training set, resulting in better generalisation to the test set. This is supported by the following observation: the overfitting when going from 10000 to 30000 steps using the i-1/39-1 split, indicated by a decrease in performance across the board, is absent for i-1/39-12 (Table 3), i.e. when using both annotations. The single additional ground truth, though of the same image, adds enough diversity for the model to generalise better to the unseen test set of 39 images.

It is important to note that these results were obtained without fine-tuning hyperparameters. We also did not develop a more sophisticated data training pipeline, which we would expect to be especially beneficial given the low amount of data. For example, the albumentation27 augmentations (https://albumentations.ai/docs/getting_started/transforms_and_targets) “FancyPCA” would provide more realistic colour variations, while “ElasticTransform” would increase the geometric variety – and applying more scaling transforms and some amount of random blur might well avoid the failure modes observed in the videos. For appropriate applications, the larger amount of labelled data already available in the preoperative domain could be leveraged as an additional pre-training step before fine-tuning on the intraoperative data. Using a larger SAM, e.g. ViT-Huge, is likely to improve performance as well. Finally, it should be noted that techniques such as k-fold cross validation would provide more robust results, though this goes beyond the scope of this work.

Code availability

Code used in the Methods and Technical Validation sections as well as additional functions to process, explore and visualise the dataset are available from https://github.com/rvimlab/FOVEA under the MIT license. It runs in a current Python 3.12 environment, and the dependencies are available in the same repository. The README file contains information on the correct usage, and documentation is present for all code. A second repository, https://github.com/rvimlab/LearnablePromptSAM, was cloned and adapted from https://github.com/Qsingle/LearnablePromptSAM. Again, the README file contains information about the usage of the added files, and the code itself is documented.

References

Bernardes, R., Serranho, P. & Lobo, C. Digital ocular fundus imaging: A review. Ophthalmologica 226, 161–181 (2011).

Tong, Y., Lu, W., Yu, Y. & Shen, Y. Application of machine learning in ophthalmic imaging modalities. Eye and Vision 7, 1–15 (2020).

Bommasani, R. et al. On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258 (2021).

Qiu, Z., Hu, Y., Li, H. & Liu, J. Learnable ophthalmology SAM. arXiv preprint arXiv:2304.13425 (2023).

Baranchuk, D., Voynov, A., Rubachev, I., Khrulkov, V. & Babenko, A. Label-efficient semantic segmentation with diffusion models. In Proceedings of the International Conference on Learning Representations (2022).

Hoover, A., Kouznetsova, V. & Goldbaum, M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Transactions on Medical imaging 19, 203–210 (2000).

Staal, J., Abràmoff, M. D., Niemeijer, M., Viergever, M. A. & Van Ginneken, B. Ridge-based vessel segmentation in color images of the retina. IEEE transactions on medical imaging 23, 501–509 (2004).

Owen, C. G. et al. Measuring retinal vessel tortuosity in 10-year-old children: Validation of the computer-assisted image analysis of the retina (CAIAR) program. Investigative ophthalmology & visual science 50, 2004–2010 (2009).

Jin, K. et al. FIVES: A fundus image dataset for artificial intelligence based vessel segmentation. Scientific Data 9, 475, https://doi.org/10.1038/s41597-022-01564-3 (2022).

Zhang, Z. et al. ORIGA-light: An online retinal fundus image database for glaucoma analysis and research. In 2010 Annual international conference of the IEEE engineering in medicine and biology, 3065–3068 (IEEE, 2010).

Bajwa, M. N. et al. G1020: A benchmark retinal fundus image dataset for computer-aided glaucoma detection. In 2020 International Joint Conference on Neural Networks (IJCNN), 1–7 (IEEE, 2020).

Kovalyk, O. et al. PAPILA: Dataset with fundus images and clinical data of both eyes of the same patient for glaucoma assessment. Scientific Data 9, 291, https://doi.org/10.1038/s41597-022-01388-1 (2022).

Chen, C., Chuah, J. H., Ali, R. & Wang, Y. Retinal vessel segmentation using deep learning: A review. IEEE Access 9, 111985–112004 (2021).

Panahi, A. et al. Autonomous assessment of spontaneous retinal venous pulsations in fundus videos using a deep learning framework. Scientific Reports 13, 14445 (2023).

Braun, D., Yang, S., Martel, J. N., Riviere, C. N. & Becker, B. C. EyeSLAM: Real-time simultaneous localization and mapping of retinal vessels during intraocular microsurgery. The International Journal of Medical Robotics and Computer Assisted Surgery 14, e1848 (2018).

Mukherjee, S., Kaess, M., Martel, J. N. & Riviere, C. N. EyeSAM: Graph-based localization and mapping of retinal vasculature during intraocular microsurgery. International journal of computer assisted radiology and surgery 14, 819–828 (2019).

Vander Poorten, E. et al. Robotic retinal surgery. In Handbook of robotic and image-guided surgery, 627–672 (Elsevier, 2020).

Ropelato, S., Menozzi, M., Michel, D. & Siegrist, M. Augmented reality microsurgery: A tool for training micromanipulations in ophthalmic surgery using augmented reality. Simulation in Healthcare 15, 122–127 (2020).

Ravasio, C. S., Flores-Sanchez, B., Bloch, E., Bergeles, C. & Da Cruz, L. FOVEA: Preoperative and intraoperative fetinal fundus images with optic disc and retinal vessel annotations. figshare https://doi.org/10.6084/m9.figshare.28329338 (2025).

Pennesi, M. E., Birch, D. G., Duncan, J. L., Bennett, J. & Girach, A. Choroideremia: Retinal degeneration with an unmet need. Retina (Philadelphia, Pa.) 39, 2059 (2019).

Ang, M. et al. Imaging in myopia: Potential biomarkers, current challenges and future developments. British Journal of Ophthalmology 103, 855–862 (2019).

Yang, F. et al. Assessing inter-annotator agreement for medical image segmentation. IEEE Access 11, 21300–21312 (2023).

Landis, J. & Koch, G. The measurement of observer agreement for categorical data. Biometrics 33, 159174 (1977).

Kirillov, A. et al. Segment anything. Proceedings of the IEEE/CVF international conference on computer vision (2023).

Berman, M., Triki, A. R. & Blaschko, M. B. The lovász-softmax loss: A tractable surrogate for the optimization of the intersection-over-union measure in neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, 4413–4421 (2018).

Pissas, T., Ravasio, C. S., Da Cruz, L. & Bergeles, C. Effective semantic segmentation in cataract surgery: What matters most? In Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, September 27–October 1, 2021, Proceedings, Part IV 24, 509–518 (Springer, 2021).

Buslaev, A. et al. Albumentations: Fast and flexible image augmentations. Information 11 (2020).

Acknowledgements

This work was supported by The Michael Uren Foundation, and by core funding from the Wellcome/EPSRC Centre for Medical Engineering [WT203148/Z/16/Z]. For the purpose of Open Access, the Author has applied a CC BY public copyright license to any Author Accepted Manuscript version arising from this submission. We would like to thank Dr Sepehr Jalali for advice on the annotation protocol and the pilot annotation study.

Author information

Authors and Affiliations

Contributions

C.S.R. performed the data processing, analysis, and validation, conducted the deep learning experiments, wrote all the code, and wrote the manuscript. B.F.S. annotated the data. EB developed the annotation protocol, carried out the data selection, and annotated the data. L.D.C. and C.B. initiated, guided and funded the research and helped develop the annotation protocol. All authors advised on and reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ravasio, C.S., Flores-Sánchez, B., Bloch, E. et al. FOVEA: Preoperative and intraoperative retinal fundus images with optic disc and retinal vessel annotations. Sci Data 12, 703 (2025). https://doi.org/10.1038/s41597-025-04965-2

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-025-04965-2