Abstract

In everyday environments, partially occluded objects are more common than fully visible ones. Despite their visual incompleteness, the human brain can reconstruct these objects to form coherent perceptual representations, a phenomenon referred to as amodal completion. However, current computer vision systems still struggle to accurately infer the hidden portions of occluded objects. While the neural mechanisms underlying amodal completion have been partially explored, existing findings often lack consistency, likely due to limited sample sizes and varied stimulus materials. To address these gaps, we introduce a novel fMRI dataset, the Occluded Image Interpretation Dataset (OIID), which captures human perception during image interpretation under different levels of occlusion. This dataset includes fMRI responses and behavioral data from 65 participants. The OIID enables researchers to identify the brain regions involved in processing occluded images and to examine individual differences in functional responses. Our work contributes to a deeper understanding of how the human brain interprets incomplete visual information and offers insights for both theoretical research and practical applications of amodal completion.

Background & Summary

In daily environments, partially occluded objects are more prevalent than fully visible ones. Despite their visual incompleteness, the human brain adeptly and spontaneously reconstructs these objects, forming coherent perceptual representations. This phenomenon, known as amodal completion (the unconscious perception of occluded objects as complete mental representations)1,2,3, is crucial in everyday life and also plays a significant role in specialized fields4,5. It exemplifies the predictive nature of visual processing and has important real-world implications: in medical imaging, radiologists routinely diagnose pathologies from partially obscured scans; in AI systems, occlusion handling remains a key challenge for robust object recognition. Interpreting Inverse Synthetic Aperture Radar (ISAR) images is a quintessential occluded-image interpretation task. Investigating the neural mechanisms underlying amodal completion in this context could facilitate the screening and training of professionals in the field and thereby improve the efficiency of ISAR image interpretation.

Current computer vision systems are capable of matching human performance in interpreting the visible aspects of objects; however, they struggle to accurately infer the hidden portions of objects that are partially obscured6,7,8. The objective of image amodal completion is to endow computers with amodal completion capabilities analogous to those of humans, enabling them to comprehend a complete object even when parts of it are occluded9. Nevertheless, the neural mechanisms underlying this complex process of recognizing occluded images and the functional differences observed among individuals with varying levels of proficiency remain poorly understood10. Recent neuroscientific research has begun to elucidate the role of amodal completion in the recognition of occluded objects11,12,13,14. Functional magnetic resonance imaging (fMRI), with its superior spatial resolution, is extensively utilized to locate and analyze dynamic brain responses during this task9. Despite this, conflicting findings persist in the literature. Some studies propose that lower-level visual areas play a pivotal role in this process3,15, whereas others emphasize the significance of higher-level visual regions16,17. According to the review by Thielen et al. on amodal completion10, these discrepancies may primarily arise from variations in stimulus materials. Critically, differing degrees of occlusion—ranging from partial to near-total masking—fundamentally alter the computational demands of perceptual completion18. For instance, lightly occluded objects may engage local feature interpolation mechanisms in early visual areas, whereas heavily occluded stimuli likely recruit higher-order prefrontal and parietal regions to resolve ambiguity through top-down priors.

To address these issues, we designed a study involving three levels of masking in image recognition tasks and collected fMRI data from 65 participants. The aim is to provide a comprehensive dataset that can offer a more accurate identification of the brain regions involved in masked image recognition and explore functional differences among individuals. By doing so, we hope to gain deeper insights into how the brain processes incomplete visual information and contribute to the advancement of both theoretical understanding and practical applications in this field.

The Occluded Image Interpretation Dataset (OIID) is organized by occlusion level and includes comprehensive MRI and behavioral data, enabling researchers to:

1. Identify brain regions specifically involved in processing occluded images, explore individual differences in brain activity related to the interpretation of partially visible objects, and investigate the neural bases of these differences.

2. Study the neural mechanisms underlying the brain’s ability to complete missing visual information, focusing on amodal completion of highly occluded objects.

3. Provide data and insights to enhance and validate biologically inspired computational models of visual perception and object recognition under occlusion.

Methods

Participants

The study included a total of 65 healthy participants, comprising 33 males and 32 females, with an age range of 19–26 years (mean ± SD: 22.4 ± 1.8 years). All participants were right-handed, had normal or corrected-to-normal vision, and reported no history of psychiatric or neurological disorders. Prior to undergoing Magnetic Resonance Imaging (MRI), participants provided written informed consent for study participation, anonymized data analysis, and open sharing of de-identified data. The study was approved by the Ethical Committee of Henan Provincial People’s Hospital (Approval No.: 2022-168), explicitly authorizing open data publication for any purpose. Monetary compensation was provided upon the successful completion of the experiment. The experimental protocol strictly adhered to relevant ethical standards and regulatory requirements.

Stimuli

ISAR imaging is widely employed in the aerospace sector and exemplifies the challenge of interpreting occluded images19. Owing to factors such as target motion, Doppler shifts, misaligned angles, and complex data processing, ISAR images often suffer from low resolution and blurring, so that they closely resemble low-quality, partially obscured images20. ISAR images were therefore selected as the experimental stimuli in this study.

The initial stimuli were sourced from the publicly available SynISAR dataset5 (https://github.com/malenie/Synthetic_ISAR_images_of_aircrafts; GNU GPLv3 license), a collection of ISAR images of several distinct aircraft models. The full stimulus set is available in the OpenNeuro repository under /derivatives/stimuli_dataset/stimuli_original. The original set contains 181 images per aircraft type, covering rotation angles from 0° to 180° in 1° increments. From this complete angular set, we systematically selected 150 images per aircraft model for occlusion processing and distributed them uniformly in angle across the three occlusion levels (50 images per level). Each base image was assigned to exactly one occlusion condition, so no base image was repeated across conditions and the conditions remained strictly independent. Figure 1b (i) shows an example of the two aircraft models used in this study. To simulate varying degrees of image degradation, black square masks of different sizes were placed at random locations within the ISAR images. The occlusion level was defined as the proportion of the total image area covered by the mask. Following a prior study on occluded image recognition13, three occlusion levels were adopted: 10%, 70%, and 90%. Because this dataset focuses on the recognition of highly occluded objects, the two high-occlusion levels (70% and 90%) constitute the main recognition tasks. The 10% level, rather than a fully unmasked condition, was designated as the baseline to avoid potential confounds associated with completely visible images, ensuring that participants were always exposed to some degree of occlusion.
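The text states that 150 of the 181 angles per aircraft were chosen and split evenly across the three occlusion levels with uniform angular coverage, but it does not give the exact selection rule. The sketch below shows one way to satisfy those constraints; the evenly spaced selection via `numpy.linspace` and the round-robin assignment are assumptions for illustration, not the authors' documented procedure (the actual images are in /derivatives/stimuli_dataset).

```python
import numpy as np

LEVELS = (10, 70, 90)  # occlusion levels (%)

def assign_angles(n_available=181, n_selected=150, levels=LEVELS):
    """Hypothetical selection rule: pick n_selected evenly spaced angles
    (degrees) from 0..n_available-1, then deal consecutive angles
    round-robin to the occlusion levels so that every level spans the
    full 0-180 degree range and no angle is shared between levels."""
    angles = np.round(np.linspace(0, n_available - 1, n_selected)).astype(int)
    return {lvl: angles[i::len(levels)] for i, lvl in enumerate(levels)}

split = assign_angles()
for lvl, a in split.items():
    print(f"{lvl}% occlusion: {len(a)} angles, {a.min()}-{a.max()} deg")
```

Because the spacing between selected angles exceeds 1°, rounding never maps two selections onto the same angle, so the three subsets are disjoint by construction.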

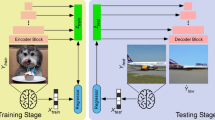

The experimental design of the OIID. (a) Diagram summarizing the MRI data collection procedure. (b) (i) Occluded image generation: aircraft targets were subjected to three levels of occlusion to produce the stimulus set. (ii) Task procedure: the three types of occluded image stimuli were presented in pseudo-random order, in 10 blocks per type in each run. Participants indicated their decision by pressing a button within 4 seconds of stimulus onset.

Ultimately, the stimuli comprised six experimental conditions corresponding to the combinations of two aircraft models and three occlusion levels. For each condition, 50 occluded images were generated by applying random mask placements. This resulted in a total of 300 experimental stimuli for image interpretation.
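The masking step (a black square placed at a random location, with the occlusion level defined as the masked fraction of the image area) can be sketched as follows. This assumes a single square mask per image, sized so that its area matches the target fraction and placed uniformly at random fully inside the image; the original pipeline may differ, and the 128 × 128 random image and the seed are placeholders rather than the real ISAR stimuli.

```python
import numpy as np

def occlude(image, level, rng):
    """Black out one square covering about `level` (0-1) of the image area.
    Assumption: a single square per image, placed uniformly at random
    so that it lies entirely within the image."""
    h, w = image.shape[:2]
    side = int(round(np.sqrt(level * h * w)))
    side = min(side, h, w)  # the square must fit inside the image
    top = rng.integers(0, h - side + 1)
    left = rng.integers(0, w - side + 1)
    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + side, left:left + side] = True
    out = image.copy()
    out[mask] = 0  # black occluder
    return out, mask

rng = np.random.default_rng(2022)
img = rng.uniform(0.2, 1.0, size=(128, 128))  # placeholder for an ISAR image
for level in (0.10, 0.70, 0.90):
    _, m = occlude(img, level, rng)
    print(level, round(float(m.mean()), 3))  # achieved occlusion fraction
```

Because the side length is rounded to whole pixels, the achieved fraction is close to, but not exactly, the nominal level.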

Experimental design

Throughout the experiment, MRI, behavioral, and NASA Task Load Index (NASA-TLX)21 questionnaire data were collected. The MRI data acquisition procedure is illustrated in Fig. 1a. Following the protocol, participants underwent functional scans (task-based and resting-state fMRI) and structural scans. Visual stimuli were displayed on a BOLDscreen 32 monitor (horizontal × vertical resolution: 1920 × 1080 px; refresh rate: 120 Hz; physical dimensions: 89 cm (W) × 50 cm (H)), which participants viewed through an MR-compatible mirror system mounted on the head coil. Stimulus presentation and response recording were controlled with Experiment Builder software (v2.2.299). During the task-based fMRI scans, button presses were recorded to capture participants’ responses and reaction times.

Before the task began, participants were instructed to carefully examine clear images of the two aircraft and to memorize their distinctive features. Each participant completed two task runs separated by a 1-minute rest interval. Each run lasted 1008 seconds (16.8 minutes), beginning with a 12-second initial rest period and ending with an 8-second terminal rest period to allow for hemodynamic stabilization. Both runs used the same fixed pseudo-randomized block order, with conditions counterbalanced within the sequence. Each run consisted of 30 blocks (10 per occlusion condition) separated by 12-second inter-block intervals. Each block contained 5 trials at a single occlusion level, and each trial consisted of a 3-second stimulus presentation followed by a 1-second inter-trial interval.
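The block structure above can be turned into idealized trial onsets, which is convenient for building a design matrix. This is a minimal sketch under stated assumptions: the block order shown is a hypothetical shuffle (10 blocks per level), and the sketch uses only the timing parameters given in the text, so it does not account for the full 1008-s run duration. Treat the onsets in the events.tsv files as authoritative.

```python
import random
from collections import Counter
from dataclasses import dataclass

@dataclass
class Trial:
    block: int
    level: int       # occlusion level (%)
    onset: float     # seconds from run start
    duration: float  # stimulus duration (s)

def build_schedule(block_levels, initial_rest=12.0, trials_per_block=5,
                   stim_dur=3.0, iti=1.0, inter_block=12.0):
    """Idealized onsets from the parameters stated in the text."""
    trials, t = [], initial_rest
    for b, level in enumerate(block_levels):
        for _ in range(trials_per_block):
            trials.append(Trial(b, level, t, stim_dur))
            t += stim_dur + iti
        if b < len(block_levels) - 1:
            t += inter_block  # rest between consecutive blocks
    return trials

# Hypothetical fixed pseudo-random order, reused for both runs.
order = [10, 70, 90] * 10
random.Random(42).shuffle(order)
schedule = build_schedule(order)
print(len(schedule), Counter(tr.level for tr in schedule))
```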

Participants pressed a button with the right index finger to indicate aircraft A and with the right middle finger to indicate aircraft B (see Fig. 1b ii). They were explicitly instructed to prioritize accuracy over speed; the exact wording of the instructions was: “Your primary goal is to make correct identifications. Please respond as accurately as possible, while also trying to complete the selection within 4 seconds without excessive deliberation.” To ensure that participants remained awake, an EyeLink 1000 Plus eye-tracking system was used to verify that their eyes stayed open throughout the scanning sessions.

The NASA-TLX, a validated multidimensional instrument for quantifying subjective workload, was administered after MRI scanning to evaluate participants’ cognitive demands under each occlusion condition. It measures six dimensions: (1) Mental Demand, (2) Physical Demand, (3) Temporal Demand, (4) Performance, (5) Effort, and (6) Frustration. For each occlusion condition, participants rated each dimension on a 10-point scale (1 = extremely low, 10 = extremely high), except that Performance was inversely anchored (1 = perfect success, 10 = complete failure), so that higher ratings consistently indicate greater workload. The composite workload score was calculated as the sum of the six dimension ratings (possible range 6–60), allowing task-induced cognitive strain to be compared systematically across experimental conditions.
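A minimal sketch of the unweighted composite described above. Because Performance is already anchored so that 10 means complete failure, no reverse-scoring is needed before summing. The dimension keys and the example ratings are hypothetical and are not taken from the dataset.

```python
DIMENSIONS = ("mental", "physical", "temporal", "performance", "effort", "frustration")

def tlx_composite(ratings):
    """Unweighted sum of the six NASA-TLX ratings (each 1-10; performance
    already anchored so that 10 = complete failure). Range: 6-60."""
    missing = set(DIMENSIONS) - set(ratings)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    for d in DIMENSIONS:
        if not 1 <= ratings[d] <= 10:
            raise ValueError(f"{d} rating out of range: {ratings[d]}")
    return sum(ratings[d] for d in DIMENSIONS)

# Hypothetical ratings for one participant in one occlusion condition.
example = {"mental": 8, "physical": 2, "temporal": 6,
           "performance": 7, "effort": 8, "frustration": 6}
print(tlx_composite(example))  # 37
```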

MRI acquisition

MRI data were collected at the Henan Key Laboratory of Imaging and Intelligent Processing in Zhengzhou, China, using a 3 T Siemens MAGNETOM Prisma scanner with a 64-channel head coil. Earplugs were used to attenuate scanner noise, and adjustable padded head clamps were applied to limit head motion.

Functional MRI

The study used blood-oxygenation-level-dependent (BOLD) fMRI data acquired with a Siemens multiband, gradient-echo, echo-planar imaging (EPI) T2*-weighted sequence. The imaging parameters included 64 co-planar slices aligned with the AC/PC plane, an in-plane resolution of 2 × 2 mm, 2 mm slice thickness with no interslice gap (0 mm), a multiband acceleration factor of 2, and a field of view of 216 × 216 mm. The repetition time (TR) was 2000 ms, the echo time (TE) 30 ms, the flip angle 78°, and the bandwidth 2204 Hz/Px. The echo spacing was 0.54 ms, and the phase-encoding direction was anterior to posterior (AP). Additional settings were as follows: Prescan Normalize: Off; Slice orientation: axial, coronal, sagittal; Auto-align: Head > Brain; Shim mode: B0 Shim mode Standard, B1 Shim mode TrueForm; Slice acquisition order: Interleaved.
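When reusing the data, it can be worth checking that the BIDS JSON sidecars match the parameters listed above. A minimal sketch, assuming the sidecars carry the standard BIDS fields `RepetitionTime` and `EchoTime` (in seconds) and `FlipAngle` (in degrees); whether every OIID sidecar includes these fields is an assumption. The helper `expected_volumes` simply divides the stated run length by the TR (1008 s / 2 s = 504 volumes), assuming the stated run length equals the acquisition length.

```python
import json
from pathlib import Path

# Values stated in the text, in BIDS units (seconds for times, degrees for angles).
EXPECTED_BOLD = {"RepetitionTime": 2.0, "EchoTime": 0.030, "FlipAngle": 78.0}

def check_metadata(meta, expected=EXPECTED_BOLD, tol=1e-6):
    """Return {field: found_value} for every field that is missing or differs."""
    return {k: meta.get(k) for k, v in expected.items()
            if meta.get(k) is None or abs(meta[k] - v) > tol}

def check_sidecar(json_path, expected=EXPECTED_BOLD):
    """Load a BOLD sidecar (path pattern is up to the user) and compare it."""
    return check_metadata(json.loads(Path(json_path).read_text()), expected)

def expected_volumes(run_seconds=1008.0, tr=2.0):
    return int(round(run_seconds / tr))

print(expected_volumes())  # 504
```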

Structural MRI

Structural T1-weighted (T1w) images for anatomical reference were acquired with a three-dimensional magnetization-prepared rapid acquisition gradient echo (MPRAGE) sequence. A total of 192 sagittal slices were acquired with 1 mm slice thickness, a voxel size of 1 × 1 × 1 mm, and a field of view of 256 × 256 mm. The imaging parameters were TR = 2300 ms, TE = 2.26 ms, TI = 900 ms, and flip angle = 8°, with prescan normalization turned on and an acceleration factor of 2.

MRI Preprocessing

The DICOM images from the Siemens scanner were converted to the Neuroimaging Informatics Technology Initiative (NIfTI) format and organized according to the Brain Imaging Data Structure (BIDS) using HeuDiConv (https://github.com/nipy/heudiconv). The anatomical T1w images were then anonymized with PyDeface (https://github.com/poldracklab/pydeface), which removes facial features. Finally, the data were preprocessed and analyzed to produce the derived data used for sharing and quality validation (Fig. 2).

Summary of the data processing pipeline and shared data. The raw data were preprocessed and then mapped onto the standard fsLR surface using ciftify. A surface-based general linear model (GLM) analysis was then conducted to validate data quality. Both the raw dataset and the derived data from preprocessing and GLM analysis are shared.

The MRI data were preprocessed using fMRIPrep (version 20.2.0)22; detailed documentation of the pipeline is available online (https://fmriprep.org). For the structural data, T1-weighted images underwent intensity non-uniformity correction and skull stripping with ANTs23, brain tissue segmentation with FSL FAST, and cortical surface reconstruction with FreeSurfer24. For the functional data, head motion correction was performed with FSL MCFLIRT, slice-timing correction with AFNI 3dTshift, and susceptibility distortion correction with SDCflows using reversed phase-encoding acquisitions. Finally, the functional data were resampled into four output spaces: T1w (individual volume space), fsnative (individual surface space), MNI152NLin6Asym:res-2 (standard volume space), and fsLR 91k (standard surface space).

Data Records

The data were organized according to the BIDS format25 and have been deposited in the OpenNeuro repository under accession number ds005226 (https://doi.org/10.18112/openneuro.ds005226.v1.0.8)26. Each participant’s raw data are stored in a folder named “sub-<subID>” (Fig. 3c). The preprocessed functional data are stored in the “derivatives/preprocessed_data” folder (Fig. 3d), and the preprocessed structural data in the “derivatives/recon-all-FreeSurfer” folder (Fig. 3e).

The OIID’s folder structure. (a) The overall directory organization of the OIID. (b) The folder structure of the stimulus materials. (c) The folder structure of raw data obtained from a participant. (d) The folder structure of preprocessed functional data. (e) The folder structure of preprocessed structural data. (f) The folder structure of the stimulus materials and associated processed files. (g) The folder structure of technical validation.

Stimulus

The “stimuli” folder contains the 300 stimulus images used in the experiment (Fig. 3b): 150 images of aircraft A and 150 images of aircraft B, each set spanning the 10%, 70%, and 90% occlusion levels. The complete stimulus generation pipeline (original images, masks, and processing code) is available in /derivatives/stimuli_dataset (Fig. 3f).

Raw MRI data

Each participant’s directory contains two subfolders, “sub-<subID>/ses-anat” and “sub-<subID>/ses-01” (Fig. 3c). The “ses-anat” subfolder contains a single folder named “anat”, and the “ses-01” subfolder contains a folder named “func”. Scan details for the functional runs are stored in a file named “sub-<subID>_ses-01_scans.tsv”. Task events for each run are recorded in files named “sub-<subID>/ses-01/func/sub-<subID>_ses-01_task-image_run-<runID>_events.tsv”.
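The events files are tab-separated BIDS tables, so the standard library is enough to read them. A minimal sketch that computes mean response time per condition: BIDS requires `onset` and `duration` columns, but the column names used here (`trial_type`, `response_time`) and the subject/run labels in the usage comment are assumptions, so check the header of the actual files first. Missing responses are assumed to be coded as `n/a`, following the BIDS convention.

```python
import csv
from collections import defaultdict
from statistics import mean

def load_events(path):
    """Read a BIDS events.tsv file into a list of dicts (one per row)."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f, delimiter="\t"))

def mean_rt_by_condition(rows, cond_col="trial_type", rt_col="response_time"):
    """Mean response time per condition, skipping 'n/a' (no response)."""
    rts = defaultdict(list)
    for row in rows:
        rt = row.get(rt_col, "n/a")
        if rt not in ("", "n/a"):
            rts[row[cond_col]].append(float(rt))
    return {cond: mean(vals) for cond, vals in rts.items()}

# Usage (hypothetical subject and run labels; column names are assumptions):
# rows = load_events("sub-01/ses-01/func/sub-01_ses-01_task-image_run-1_events.tsv")
# print(mean_rt_by_condition(rows))
```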

Functional data from preprocessing

The preprocessed functional data are stored in the “derivatives/preprocessed_data/” folder (Fig. 3d), which contains four subfolders, “space-MNI”, “space-T1w”, “space-fsLR”, and “space-fsnative”, holding the functional data resampled into the corresponding output space.

Structural data from preprocessing

The outputs of cortical surface reconstruction are stored in folders named “derivatives/recon-all-FreeSurfer/sub-<subID>” (Fig. 3e).

Validation

The technical validation code was stored in the directory labeled “derivatives/validation/code”. The outcomes of technical validation were saved in the folder named “derivatives/validation/results” (Fig. 3g).

Technical Validation

Stimulus. To quantitatively validate the spatial distribution of occlusions, we generated 2D occlusion histograms for each occlusion level (10%, 70%, 90%). For each level, we aggregated the binary occlusion masks of all images at that level and computed the normalized frequency with which each pixel location was occluded (Fig. 4). The resulting histograms indicated that occlusions were uniformly distributed, without spatial bias.
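The per-pixel occlusion frequency behind these heatmaps is the mean of the stacked binary masks. A minimal sketch; the random placeholder masks below only stand in for the 100 real masks per level (in /derivatives/stimuli_dataset), whose on-disk format is not assumed here.

```python
import numpy as np

def occlusion_frequency(masks):
    """Per-pixel fraction of images in which that pixel is occluded.
    masks: (n_images, H, W) array of booleans or 0/1 values."""
    return np.asarray(masks, dtype=float).mean(axis=0)  # values in [0, 1]

# Placeholder masks standing in for the 100 masks of one occlusion level.
rng = np.random.default_rng(0)
masks = rng.random((100, 64, 64)) < 0.7
freq = occlusion_frequency(masks)
print(freq.shape, freq.min(), freq.max())
```

The resulting array can be displayed directly as a heatmap, with 0 meaning never occluded and 1 meaning always occluded.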

Spatial distribution of occlusion patterns across three coverage levels. (a) 10% occlusion, (b) 70% occlusion, (c) 90% occlusion. Heatmaps display the probability density of occlusion occurrence at each pixel location (n = 100 images per condition), calculated by aggregating binary occlusion masks across all samples within each coverage level. Color bars indicate occlusion probability, ranging from 0 (never occluded) to 1 (always occluded).

Structural image quality and framewise displacement (FD)

To assess the quality of the OIID structural data, four standard metrics27 were used (Fig. 5a): coefficient of joint variation (CJV), contrast-to-noise ratio (CNR), signal-to-noise ratio in gray matter (SNR_GM), and signal-to-noise ratio in white matter (SNR_WM). CJV quantifies the intensity overlap between white matter (WM) and gray matter (GM), with lower values indicating better tissue separation. CNR relates the contrast between GM and WM to the noise in the image. SNR relates the mean signal within a tissue to its noise and is computed separately for GM and WM.
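The four metrics can be sketched from a T1w image and boolean tissue masks. The formulas below are simplified versions of definitions commonly used by image-quality tools such as MRIQC (CJV = (σ_WM + σ_GM) / |μ_WM − μ_GM|; CNR = |μ_GM − μ_WM| / sqrt(σ_bg² + σ_WM² + σ_GM²); SNR = μ_tissue / σ_tissue); the exact implementation behind Fig. 5a, including how noise was estimated, may differ. The masks are assumed to come from a tissue segmentation such as the one produced during preprocessing.

```python
import numpy as np

def tissue_stats(t1, mask):
    vals = t1[mask]
    return vals.mean(), vals.std()

def structural_qc(t1, gm, wm, background):
    """CJV, CNR and tissue SNRs from a T1w array and boolean masks
    (simplified definitions; an assumption, not the authors' code)."""
    mu_gm, sd_gm = tissue_stats(t1, gm)
    mu_wm, sd_wm = tissue_stats(t1, wm)
    _, sd_bg = tissue_stats(t1, background)
    return {
        "CJV": (sd_wm + sd_gm) / abs(mu_wm - mu_gm),  # lower is better
        "CNR": abs(mu_gm - mu_wm) / np.sqrt(sd_bg**2 + sd_wm**2 + sd_gm**2),
        "SNR_GM": mu_gm / sd_gm,  # higher is better
        "SNR_WM": mu_wm / sd_wm,
    }
```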

Fundamental data quality assessment of the OIID. (a) Scatter plots of the quality metrics for the T1-weighted structural scans; each point represents one participant. A downward arrow indicates that lower values correspond to better data quality, and an upward arrow that higher values correspond to better data quality. μ denotes the mean image intensity and σ the standard deviation. (b) Violin plots of framewise displacement (FD) for each participant across all runs. The pink bars, black dots, and error bars depict the interquartile range, the mean, and the minimum and maximum values, respectively. Participant motion in the OIID was generally minimal, with mean FD well below 0.5 mm for all participants.

To assess the quality of the OIID functional scans, we evaluated head motion for each participant using framewise displacement (FD)28. No participant had any volume with FD exceeding 0.5 mm (Fig. 5b), a commonly used threshold for identifying substantial head motion. The mean FD across all participants was 0.15 mm, with individual participant means ranging from 0.02 mm to 0.17 mm (Fig. 5b). These results indicate that head motion was well controlled throughout the scans.
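FD as defined by Power et al.28 sums the absolute frame-to-frame changes of the six rigid-body parameters, converting rotations to millimetres as arc length on a 50 mm sphere. A minimal sketch, assuming the motion parameters are ordered as three translations (mm) followed by three rotations (radians), as in fMRIPrep's `trans_*`/`rot_*` confound columns; fMRIPrep also reports a ready-made `framewise_displacement` column, which is the simpler choice when available.

```python
import numpy as np

def framewise_displacement(motion, radius=50.0):
    """Power-style FD for volumes 2..n.
    motion: (n_volumes, 6) array [tx, ty, tz (mm), rx, ry, rz (radians)].
    Rotations are converted to arc length on a sphere of `radius` mm."""
    params = np.asarray(motion, dtype=float).copy()
    params[:, 3:] *= radius
    return np.abs(np.diff(params, axis=0)).sum(axis=1)

def motion_summary(fd, threshold=0.5):
    return {"mean_fd": float(np.mean(fd)), "max_fd": float(np.max(fd)),
            "n_over_threshold": int(np.sum(fd > threshold))}

# Toy example: three volumes with small translations and one rotation.
motion = [[0, 0, 0, 0, 0, 0],
          [0.1, 0, 0, 0.001, 0, 0],
          [0.1, 0.05, 0, 0.001, 0, 0]]
fd = framewise_displacement(motion)
print(fd, motion_summary(fd))
```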

Temporal signal-to-noise ratio (tSNR)

tSNR, a widely used metric of fMRI sensitivity for detecting brain activation, was computed for each run by dividing the temporal mean of the preprocessed time series at each voxel by its temporal standard deviation29. Individual tSNR maps for the main experiment were obtained by averaging these values across runs, and group-level tSNR maps by averaging across all participants (Fig. 6b). Across participants, the mean whole-brain tSNR was 74.32 ± 4.47, with participant means ranging from 52.76 to 89.66 (Fig. 6a), consistent with previous studies30,31,32. As expected, tSNR varied across brain regions, being higher in dorsal areas and lower in the anterior temporal and orbitofrontal cortices, which are prone to susceptibility-induced signal loss. Overall, these results indicate robust tSNR in the main experiment.
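The tSNR computation described above reduces to a ratio of temporal statistics per voxel, followed by averaging across runs. A minimal sketch on NumPy arrays; loading the preprocessed runs (for example with `nibabel.load(path).get_fdata()`) is left to the user, the population standard deviation (`ddof=0`) is an assumption, and voxels with zero temporal variance are set to 0 to avoid division by zero.

```python
import numpy as np

def tsnr(run, eps=1e-12):
    """Voxel-wise temporal SNR of a 4-D run (x, y, z, t):
    temporal mean divided by temporal standard deviation."""
    run = np.asarray(run, dtype=float)
    mean = run.mean(axis=-1)
    sd = run.std(axis=-1)
    out = np.zeros_like(mean)
    np.divide(mean, sd, out=out, where=sd > eps)  # 0 where SD is ~0
    return out

def subject_tsnr(runs):
    """Average the per-run tSNR maps for one participant."""
    return np.mean([tsnr(r) for r in runs], axis=0)
```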

The BOLD signal’s reliability analysis

To quantify test-retest reliability, we performed voxel-wise general linear model (GLM) analyses in SPM12 to estimate, in MNI space, beta values reflecting the neural response to each image presentation. For each run, the design matrix contained a separate regressor for each of the 150 image presentations, created by convolving a delta function at that image’s onset with the canonical hemodynamic response function (HRF); these 150 image-specific regressors capture the BOLD response to each occluded image. Six rigid-body motion parameters from realignment were included as nuisance covariates to account for head movement, and a high-pass filter with a 128-s cutoff (about 0.008 Hz) was applied to remove low-frequency drift. For each participant, we then computed, at each voxel, the Pearson correlation between the 150 image-wise beta values from Run 1 and those from Run 2. Group-averaged reliability maps were obtained by averaging these correlation coefficients across all 65 participants.
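The reliability step can be sketched as a vectorized voxel-wise Pearson correlation between two beta matrices. This assumes the betas have already been extracted into arrays of shape (n_images, n_voxels) whose rows refer to the same images in both runs; reading the SPM beta images and applying a brain mask are not shown.

```python
import numpy as np

def voxelwise_pearson(betas_run1, betas_run2):
    """Pearson r at each voxel between two runs' beta series.
    betas_run*: arrays of shape (n_images, n_voxels), rows matched by image."""
    a = np.asarray(betas_run1, dtype=float)
    b = np.asarray(betas_run2, dtype=float)
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    denom = np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))
    return (a * b).sum(axis=0) / denom

def group_reliability(per_subject_maps):
    """Average the per-participant reliability maps, as described in the text."""
    return np.mean(per_subject_maps, axis=0)
```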

Test-retest reliability was high in the occipital cortex and extended to temporal and parietal regions (Fig. 7). These regions overlapped substantially with the amodal completion-related brain network reported by Thielen et al.10. Because the occipital cortex is the core hub of early visual processing, its reliable responses across runs suggest temporally stable extraction of low-level features (e.g., edges and contours) from the occluded images. The reliable responses in the temporal lobe, which supports object recognition (e.g., part-whole integration), and in the parietal lobe, which is engaged in spatial attention, are consistent with robust engagement of higher-order processing under occlusion.

Behavioral data analysis

Behavioral measures varied systematically with occlusion level. Repeated-measures ANOVAs (Greenhouse-Geisser corrected) revealed significant main effects of occlusion on all measures: NASA-TLX scores (F(2,128) = 38.40, p < 0.001, partial η² = 0.375), recognition accuracy (F(2,128) = 616.95, p < 0.001, partial η² = 0.906), and reaction time (F(2,128) = 244.43, p < 0.001, partial η² = 0.792) (Fig. 8). Bonferroni-corrected post-hoc tests confirmed monotonic trends: as occlusion increased from 10% to 90%, subjective workload (NASA-TLX) and reaction time increased progressively while recognition accuracy decreased (all pairwise comparisons: p < 0.001). The graded occlusion levels thus produced an image interpretation task with a gradient of cognitive demands, which may better approximate real-world interpretation scenarios.
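For a one-way repeated-measures design, partial eta squared can be recovered from F and its degrees of freedom as η²p = F·df1 / (F·df1 + df2); with 65 participants and 3 levels, df1 = 2 and df2 = 64 × 2 = 128, and the reported effect sizes follow from the reported F values. The sketch below also computes the uncorrected one-way repeated-measures ANOVA from a participants × conditions array. It omits the Greenhouse-Geisser correction, which rescales the degrees of freedom used for the p-value but leaves F and η²p unchanged, and it omits the post-hoc tests.

```python
import numpy as np

def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA (uncorrected df).
    data: (n_subjects, k_conditions). Returns F, df1, df2, partial eta squared."""
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_cond = n * ((x.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((x.mean(axis=1) - grand) ** 2).sum()
    ss_err = ((x - grand) ** 2).sum() - ss_cond - ss_subj
    df1, df2 = k - 1, (n - 1) * (k - 1)
    f = (ss_cond / df1) / (ss_err / df2)
    return f, df1, df2, ss_cond / (ss_cond + ss_err)

def partial_eta_sq_from_f(f, df1, df2):
    return f * df1 / (f * df1 + df2)

# Effect sizes implied by the reported F(2,128) values:
for f in (38.40, 616.95, 244.43):
    print(round(partial_eta_sq_from_f(f, 2, 128), 3))  # 0.375, 0.906, 0.792
```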

Behavioral measures across occlusion levels. (a) Object recognition accuracy (%), (b) reaction time (ms), and (c) NASA-TLX workload scores. Statistical significance was assessed using repeated-measures ANOVA with Greenhouse-Geisser correction (NASA-TLX: F(2,128) = 38.40, p < 0.001, partial η² = 0.375; accuracy: F(2,128) = 616.95, p < 0.001, partial η² = 0.906; RT: F(2,128) = 244.43, p < 0.001, partial η² = 0.792), followed by Bonferroni-corrected pairwise comparisons (p < 0.001 for all comparisons between levels).

Usage Notes

The OIID provides researchers with a unique resource for investigating the visual processing of partially occluded objects. Given how common partially visible objects are in everyday environments, understanding how the brain reconstructs and perceives these incomplete representations is important for both theoretical research and practical applications. The dataset addresses limitations of previous studies by providing a large, standardized fMRI dataset of human perception under different occlusion conditions, comprising fMRI responses and behavioral data from 65 participants who viewed image stimuli at three occlusion levels (10%, 70%, and 90%). Overall, the OIID is designed to advance our understanding of how the human brain handles incomplete visual information and to provide a foundation for improving the ability of artificial systems to interpret partially occluded objects.

Code availability

The code is available at OpenNeuro (https://openneuro.org/datasets/ds005226/versions/1.0.8; directory: derivatives/validation/code)26 and GitHub (https://github.com/Neptune0108l/OIID).

References

Lier, R. van. Investigating global effects in visual occlusion: from a partly occluded square to the back of a tree-trunk. Acta Psychol. (Amst.) 102, 203–220 (1999).

Yun, X., Hazenberg, S. J. & van Lier, R. Temporal properties of amodal completion: Influences of knowledge. Vision Res. 145, 21–30 (2018).

Ban, H. et al. Topographic Representation of an Occluded Object and the Effects of Spatiotemporal Context in Human Early Visual Areas. J. Neurosci. 33, 16992–17007 (2013).

Kamboj, P., Banerjee, A. & Gupta, S. K. S. Expert Knowledge Driven Human-AI Collaboration for Medical Imaging: A Study on Epileptic Seizure Onset Zone Identification. IEEE Trans. Artif. Intell. 5, 5352–5368 (2024).

Vatsavayi, V. K. & Kondaveeti, H. K. Efficient ISAR image classification using MECSM representation. J. King Saud Univ. - Comput. Inf. Sci. 30, 356–372 (2018).

Li, A., Shao, C., Zhou, L., Wang, Y. & Gao, T. Complete object feature diffusion network for occluded person re-identification. Digit. Signal Process. 159, 104998 (2025).

Chandler, B. & Mingolla, E. Mitigation of Effects of Occlusion on Object Recognition with Deep Neural Networks through Low-Level Image Completion. Comput. Intell. Neurosci. 2016, 6425257 (2016).

Wang, Y. et al. Gradual recovery based occluded digit images recognition. Multimed. Tools Appl. 78, 2571–2586 (2019).

Olson, I. R., Gatenby, J. C., Leung, H.-C., Skudlarski, P. & Gore, J. C. Neuronal representation of occluded objects in the human brain. Neuropsychologia 42, 95–104 (2004).

Thielen, J., Bosch, S. E., van Leeuwen, T. M., van Gerven, M. A. J. & van Lier, R. Neuroimaging Findings on Amodal Completion: A Review. i-Perception 10, 204166951984004 (2019).

Assad, J. A. & Maunsell, J. H. R. Neuronal correlates of inferred motion in primate posterior parietal cortex. Nature 373, 518–521 (1995).

Baker, C., Keysers, C., Jellema, T., Wicker, B. & Perrett, D. Neuronal representation of disappearing and hidden objects in temporal cortex of the macaque. Exp. Brain Res. 140, 375–381 (2001).

Rajaei, K., Mohsenzadeh, Y., Ebrahimpour, R. & Khaligh-Razavi, S.-M. Beyond core object recognition: Recurrent processes account for object recognition under occlusion. PLOS Comput. Biol. 15, e1007001 (2019).

Chen, S., Töllner, T., Müller, H. J. & Conci, M. Object maintenance beyond their visible parts in working memory. J. Neurophysiol. 119, 347–355 (2017).

de Haas, B. & Schwarzkopf, D. S. Spatially selective responses to Kanizsa and occlusion stimuli in human visual cortex. Sci. Rep. 8, 611 (2018).

Bushnell, B. N., Harding, P. J., Kosai, Y. & Pasupathy, A. Partial Occlusion Modulates Contour-Based Shape Encoding in Primate Area V4. J. Neurosci. 31, 4012–4024 (2011).

Hulme, O. J. & Zeki, S. The sightless view: neural correlates of occluded objects. Cereb. Cortex 17, 1197–1205 (2007).

Erlikhman, G. & Caplovitz, G. P. Decoding information about dynamically occluded objects in visual cortex. NeuroImage 146, 778–788 (2017).

Song, X. A joint resource allocation method for multiple targets tracking in distributed MIMO radar systems. EURASIP J. Adv. Signal Process. 2018, 65 (2018).

Liao, H. et al. Meta-Learning Based Domain Prior With Application to Optical-ISAR Image Translation. IEEE Trans. Circuits Syst. Video Technol. 34, 7041–7056 (2024).

Hart, S. G. & Staveland, L. E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. in Advances in Psychology 52, 139–183 (1988).

Esteban, O. et al. fMRIPrep: a robust preprocessing pipeline for functional MRI. Nat. Methods 16, 111–116 (2019).

Avants, B. B., Epstein, C. L., Grossman, M. & Gee, J. C. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 12, 26–41 (2008).

Fischl, B. FreeSurfer. NeuroImage 62, 774–781 (2012).

Gorgolewski, K. J. The brain imaging data structure, a format for organizing and describing outputs of neuroimaging experiments. Sci Data 3, 160044 (2016).

Bao, L. et al. An fMRI Dataset on Occluded Image Interpretation for Human Amodal Completion Research. OpenNeuro https://doi.org/10.18112/openneuro.ds005226.v1.0.8 (2024).

Bosco, P. et al. Quality assessment, variability and reproducibility of anatomical measurements derived from T1-weighted brain imaging: The RIN–Neuroimaging Network case study. Phys. Med. 110, 102577 (2023).

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L. & Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. NeuroImage 59, 2142–2154 (2012).

Welvaert, M. & Rosseel, Y. On the Definition of Signal-To-Noise Ratio and Contrast-To-Noise Ratio for fMRI Data. PLOS ONE 8, e77089 (2013).

Visconti di Oleggio Castello, M., Chauhan, V., Jiahui, G. & Gobbini, M. I. An fMRI dataset in response to “The Grand Budapest Hotel”, a socially-rich, naturalistic movie. Sci. Data 7, 383 (2020).

Nastase, S. A. Neural Responses to Naturalistic Clips of Behaving Animals in Two Different Task Contexts. Front. Neurosci. 12, 316 (2018).

Sengupta, A. et al. A studyforrest extension, retinotopic mapping and localization of higher visual areas. Sci. Data 3, 160093 (2016).

Acknowledgements

This study was funded by the Major Projects of Technological Innovation 2030 of China (Grant number: 2022ZD0208500), two Key Programs of the National Natural Science Foundation of China (Grant numbers: 62106285, 82071884), and the Central Plains Science and Technology Innovation Leading Talent Project (Grant number: 244200510015).

Author information

Contributions

B.Y. and L.T. conceived the idea and supervised the research. B.L., L.T., C.Z., P.C., L.W., L.C., Z.Y. and H.G. contributed to discussion, experimental design, execution, and manuscript structure. B.L. and P.C. visualized the experimental results. B.L. and L.T. wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, B., Tong, L., Zhang, C. et al. An fMRI Dataset on Occluded Image Interpretation for Human Amodal Completion Research. Sci Data 12, 1111 (2025). https://doi.org/10.1038/s41597-025-05414-w

DOI: https://doi.org/10.1038/s41597-025-05414-w