Abstract

Modern technologies such as liquid fuels (hydrogen, oxygen), superconductivity, and quantum technology require materials to serve at very low temperatures, pushing the bounds of material performance by demanding a combination of strength and toughness to tackle various challenges. Steel alloys are among the most commonly used materials in cryogenic applications. Meanwhile, aluminum and titanium alloys are increasingly recognized for their potential in aerospace and the transportation sectors. Emerging multi-principal element alloys such as medium-entropy and high-entropy alloys offer superior low-temperature mechanical performance, greatly expanding the space for material design. A comprehensive dataset of these low-temperature metallic alloys has been curated from literature and made available in an open repository to meet the need for validated data sources in research and development. The dataset construction workflow incorporates automated extraction using state-of-the-art machine learning and natural language processing techniques, supplemented by manual inspection and correction to improve data extraction efficiency and ensure dataset quality. The product dataset encompasses key performance parameters such as yield strength, tensile strength, elongation at fracture, and Charpy impact energy. The accompanying metadata, detailing material types, chemical compositions, processing and testing conditions, are provided in a standardized format to promote data-driven research in material screening, design, and discovery.

Similar content being viewed by others

Background & Summary

The term ‘cryogenics’ is defined for applications at T < 120 K1. A wide spectrum of technologies is deployed in low-temperature (low-T) environments (Fig. 1), such as quantum technologies (e.g., quantum computing, quantum communication, quantum sensing), superconducting-enabled applications (e.g., magnetic resonance imaging or MRI, magnetic levitation or maglev) (1–4.2 K), aerospace or transportation technologies powered by liquid hydrogen (LH2, 14–20 K) or oxygen (LOX, 54–90 K), and liquefied natural gas (LNG, 91–112 K) (Fig. 1a). In these low and discrete temperature ranges, metallic alloys may behave differently from those at room temperature (RT) where material behaviors are better understood (Fig. 1b,c).

Low-temperature (low-T) technology and applications. (a) Cryogenic engineering applications, which include a quantum computer (https://commons.wikimedia.org/w/index.php?search=Dmitrmipt&title=Special:MediaSearch&type=image), magnetic resonance imaging (MRI) scanner employing NbTi superconducting magnets (https://commons.wikimedia.org/wiki/File:MRI-Philips.jpg), liquid hydrogen storage tank for rockets (https://commons.wikimedia.org/wiki/File:SLS_Liquid_Hydrogen_Tank_Test_Article_(NASA_20181029-SLS_LH2_STA_lift).jpg), high-temperature superconducting maglev trains (https://commons.wikimedia.org/wiki/File:Shanghai_Maglev_2.jpg), high-temperature superconducting cables (https://commons.wikimedia.org/wiki/File:High-Temperature_Superconducting_Cables_(5884863158).jpg), and liquefied natural gas storage tank (https://commons.wikimedia.org/wiki/File:National_Grid_LNG_Tank.jpg). The temperature range is not to scale. (b) Literature data map (1991-2023) ranked by the alloy types and temperatures21. (c) Crystal structures, strength and Charpy impact tests, and representative mechanical properties6. (d) Refrigerants and their temperature ranges21. The lengths correspond to the number of data points in the dataset.

Achieving precise control of low temperatures poses various challenges for refrigeration techniques. In low-T experiments, materials are generally either directly immersed in refrigerants or cooled by refrigeration systems. The use of refrigeration systems provides more precise temperature control and can attain lower temperatures. Dilution refrigerators can achieve base temperatures in the milli-Kelvin range2. The effective temperature range of these refrigeration methods is largely determined by the working medium and is not continuous, such as liquid helium (1–4.2 K), liquid hydrogen (14–20 K), and liquid nitrogen (63–77 K) (Fig. 1).

Extreme cold presents significant challenges for maintaining certain material performance, such as toughness, ductility, and fracture resistance, making material selection critical in the design of low-T technologies3,4 (Fig. 1d). At low temperatures, materials generally exhibit increased strength but reduced ductility and toughness5,6. A ductile-to-brittle transition (DBT) may occur, which limits the yield of materials and is sensitive to the atomic-level structures7. This transition is more pronounced in body-centered cubic (BCC) materials, which are less ductile compared to hexagonal closely-packed (HCP) and face-centered cubic (FCC) materials8 (Fig. 1c). Multi-principal element alloys (MPEAs) such as medium-entropy alloys (MEAs) and high-entropy alloys (HEAs) exhibit comparable strength-ductility and strength-toughness balances to conventional low-T alloys (such as FCC-structured steels)9,10,11,12. Owing to their vast compositional design space, these complex alloys demonstrate remarkable potential for material selection. A standardized dataset containing the key material properties and a wide range of temperature covering the full, discontinuous spectrum of aforementioned refrigeration techniques could effectively guide the development of low-T applications.

For instance, hydrogen energy has drawn notable attention recently for its significance in high energy density and sustainability13,14. LH2 is a key propellant in space rockets, often combined with liquid oxygen for its high energy density and specific impulse, and its high specific heat allows it to effectively cool rocket engine nozzles and other critical parts15. LH2 is also used in civil applications such as long-range vehicles, ships and aircraft for the high energy density, low working pressure, and reduced CO2 emission16,17,18,19. The storage and transportation of LH2 in metallic containers can lead to hydrogen embrittlement, which poses potential risks of catastrophic failure under load. The same material and interface challenges are observed in superconductors and quantum technology, where the reliability and packaging of quantum circuits at low temperatures are of critical concern20. To address these issues, a large number of experimental studies have been conducted at high costs in low-T environments. As a representative example, these openly released data notably demonstrate that 300-series austenitic stainless steels show minimal hydrogen embrittlement at LH2 temperatures21, supporting their safe use as LH2 containers22,23.

Due to the varied temperature ranges of specific refrigeration techniques, the scientific studies and technical reports on the mechanical properties of metallic alloys are quite diverse and the reported data are often heterogeneous. Each temperature regime exhibits distinct characteristics. For instance, research at temperatures between 1–4.2 K typically investigates the low-T mechanical performance of structural materials used in superconducting systems. In contrast, studies focusing on the temperature range of 14–20 K often explore hydrogen effects. Collecting these research data could broaden our understanding of the composition-processing-microstructure-properties relationship of materials in current cryogenic applications and highlight potential challenges in the advancement of future technologies.

The FAIR principles (Findability, Accessibility, Interoperability, and Reusability)24 are increasingly vital in materials science and engineering. Standardized reporting formats for experimental reports and datasets are necessary to produce comprehensive, clean, and usable data, preventing the waste of resources and advancing material research in the data science and machine intelligence era25,26. Following these concepts, we construct a comprehensive dataset21 on the mechanical properties of metallic alloys at low-T conditions. The codes and scripts used to construct and manage the dataset21 are also openly released. Our dataset21 includes tensile and impact test data reported in 715 scientific articles and serves as a reference for the development and deployment of future low-T technologies.

Methods

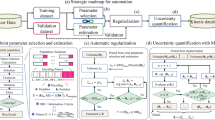

The workflow follows our previous work on fatigue data of advanced alloys26,27,28, which includes three key steps of content acquisition, data extraction, and dataset construction. In this study, we enhance the pipelines by incorporating a feature to extract textual content from Portable Document Format (PDF) files and utilizing advanced language models to process the full text in a single request, thereby improving both efficiency and quality. The product dataset21 consists of both research metadata and scientific data. Metadata includes information such as titles, authors, the sources of publication, the years of publication, and digital object identifiers (DOIs). Scientific data includes research data such as material types, chemical compositions, processing and test conditions, and mechanical properties. For the lack of a unified data description for low-T mechanical properties reported in figures and tables, manual extraction and correction are needed. The final data are published in standardized form for reuse.

Content acquisition

With the compiled keywords of ‘Temperature’, ‘Property’, and ‘Alloy’ into a search formula (Table 1), Web of Science (WoS, https://www.webofscience.com/wos) returned 8,439 records of journal articles. The metadata are obtained through the ‘export’ function. WoS applies stemming rules to the search queries. Stemming removes suffixes such as ‘-ing’ and ‘-es’ from words in a search query to expand the search and retrieve additional, relevant records. The articles are classified through the natural language processing (NLP) model, Robustly Optimized Bidirectional Encoder Representations from Transformers (BERT) Pretraining Approach (RoBERTa)29, according to their abstracts. After manual inspection and correction, the valid results for the current study include 715 articles. The original documents are collected through their DOIs in Extensible Markup Language (XML), Hypertext Markup Language (HTML), and PDF formats under valid licenses through Tsinghua University Library (https://lib.tsinghua.edu.cn). The full-text articles from Elsevier are retrieved using its Developer Portal via an official Application Programming Interface (API, https://dev.elsevier.com/documentation/FullTextRetrievalAPI.wadl). Articles from other publishers are automatically downloaded using the open-source code article-downloader30 (https://github.com/olivettigroup/article-downloader), or manually downloaded from their official websites (e.g., https://www.mdpi.com, https://onlinelibrary.wiley.com, https://link.springer.com). XML/HTML documents can be automatically parsed and converted into structured text through computer codes, while PDF requires manual processing in a large part.

Data extraction

Images in the articles are directly collected from the XML/HTML documents or extracted from the PDF documents using PyMuPDF31 (https://pypi.org/project/PyMuPDF/). Figures with multiple panels are automatically segmented using a rule-based code. Figures presenting mechanical properties are screened by the convolutional neural network (CNN) model, ResNet32 (https://pytorch.org/hub/pytorch_vision_resnet/). Strength and fracture elongation data in images are extracted by using our in-house MATLAB code, IMageEXtractor28 (https://github.com/xuzpgroup/ZianZhang/tree/main/FatigueData-AM2022/IMEX).

Table data in XML/HTML files are collected in their original forms by using table extractor33 (https://github.com/olivettigroup/table_extractor) and saved as a JavaScript Object Notation (JSON)34 files, while table data in PDF files are extracted manually. Only the relevant data, screened using Generative Pre-Trained Transformers (GPT-3.535, https://platform.openai.com/docs/models/gpt-3.5-turbo), Generative Language Model (GLM-436, https://github.com/THUDM/GLM-4), and manual efforts, respectively, are retained in the product dataset21.

Text in the XML/HTML and PDF documents are extracted using TEXTract28 (https://github.com/xuzpgroup/ZianZhang/tree/main/FatigueData-AM2022/TEXTract) and PDFDataExtractor37 (https://github.com/cat-lemonade/PDFDataExtractor), respectively. For literature prior to 1991, only scanned image PDFs are available, which were converted into TXT files for text mining tasks using two large-scale pre-trained language models, GPT-3.535 and GLM-436, for comparative studies. The tasks are batch processed through their official APIs.

The detailed steps of text mining using GPT-3.5 are explained as follows. The section titles are filtered by the keywords (Table 2) to retrieve paragraphs related to the experimental methods and data. GPT-3.5 is used to extract the information of materials, processing and testing conditions, and mechanical properties from relevant paragraphs. The prompts in GPT include task descriptions, examples, and the text to be processed. The task description asks GPT to extract data from the text and return them in the JSON format. Each example is a text-completion pair to inform the content (the paragraphs) and format (JSON) of the data to be extracted. The text to be processed is placed at the end of the prompt. As limited by the maximum tokens (4,096) in GPT-3.5, we provide only two examples per request and extract data from only one paragraph at a time. The data extracted from each paragraph are manually matched into aligned material-processing-experiment entries, and associated with figure and table data.

The same prompts are chosen in text mining using GLM-4. GLM-4 supports significantly more input and output tokens compared to GPT-3.5. This capability allows it to process the entire article text in a single session. The text and table data can be automatically aligned, simplifying the subsequent steps of dataset integration. However, the association with figure data must be performed manually.

This two-model approach demonstrates the generalizability of our methodology, which can be incorporated into automated platforms with further development. However, large language model (LLM) based text processing faces challenges such as incorrect or missing content, diverse data formats, and the inability to extract the composition-processing-testing-performance relationship. These issues cannot be resolved with rules in the current study. To mitigate the issues, we manually process the data through content proofreading and data formatting, a step that is time-intensive and requires specialized domain knowledge. In the future, prompt engineering38 and fine-tuning39 techniques in the scientific and engineering domains should be developed to enhance efficiency.

Dataset integration and data correction

In the construction of the product dataset21, the mechanical data extracted from text, figures and tables are paired with the type and composition of materials, processing and test conditions (e.g., temperatures, sample geometries, refrigeration techniques), and stored as distinct data entries. To ensure data quality that is essential for scientific and engineering uses, manual inspection and correction are conducted on the raw data generated by GPT-3.5 and GLM-4. A unified language for ‘low-T alloys’ (ULLTA) is proposed to standardize the data representation (Fig. 2). The product dataset21 is exported to a JSON file and proofread by comparing it to the PDF files of all source documents.

Dataset structures21. The dataset of low-T alloys is formatted into a hierarchical tree structure. The name of each tree node is highlighted in yellow. Keys are defined for easy access by scripts. Each node has its specific data type.

Data Records

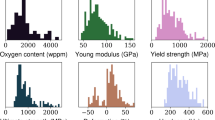

The AlloyData-2024LT dataset is available as a JSON file at figshare (https://doi.org/10.6084/m9.figshare.2591226721), serving as a valuable resource for the understanding and advancement of low-T alloys. The JSON file is formatted into a hierarchical tree structure (Fig. 2). The tree node where the data value is stored is called the data entry. Data entries include string and numeric data types. Text data is stored as a string. The release years of publications, rating scores and mechanical properties are defined as numbers, and other numeric data such as processing parameters, ingot size, and experimental temperature are stored in the form of a numeric array. The tree nodes used to group data entries are called data structures. Numerous structures, encompassing articles and data records, are compiled into a structure array. To facilitate programming implementation and data acquisition, keys are defined for data entries, structures, and structure arrays (Fig. 2, Tables 3, 4, and 5).

The root node of the top-level data structures is AlloyData-2024LT, containing child nodes of articles and a default unit system (e.g., MPa for stress, K for temperature). Raw numeric data are converted to the default units of data entries. Articles are stored in a struct array. Each of them contains two structs, which are the metadata and scientific data. Metadata contains data entries such as the publication information of the articles. Scientific data stores a struct array of data records. Each data record corresponds to a specific condition of experimental tests. A scientific data record contains 4 sub-structs (‘Materials’, ‘Processing’, ‘Testing’, and ‘Mechanical properties’) as defined in Table 5. Incomplete data specifications in literature hinder data mining and reduce data credibility and reusability. A rating score, based on the weighted sum of non-empty entries, quantifies the completeness of data records in source documents27. The processing parameters are organized as the ‘proc_para’ sub-struct array, and the content of each sub-struct depends on the types of material processing. For surface treatments, the array ‘surf_para’ is defined in the same way as processing parameters.

The terminology of data types is largely inherited from MATLAB40 (https://www.mathworks.com/help/matlab/data-types.html). For the JSON file, the struct is defined as a dictionary, and all types of arrays are defined as lists. With the dataset structures outlined above, the data entries are explained here in more detail. The sub-struct array ‘mech_prop’ stores the mechanical properties of the alloy, including yield strength, ultimate tensile strength, elongation at fracture, and Charpy impact energy. ‘ingot_desc’, ‘spec_desc’, and ‘spec_shape’ describe the shape and cross-section of the ingot and specimen, respectively. ‘spec_size’ defines the specimen dimensions, including the longitudinal length, diameter for round specimens, outer and inner diameters for annular specimens, and width and thickness for rectangular cross-sections. The ingot dimensions are defined in the same way and stored in ‘ingot_size’. In the numeric arrays of other data entries, a single value stands for a specific value or the mean, and two values stand for the lower and upper bounds, respectively. For the convenience of comparison between the string data, a unified nomenclature is used for data entries such as types of processing, materials, and machines. In our dataset21, data entries not reported explicitly are recorded as empty lists and strings.

Technical Validation

For technical validation of the dataset quality, we take a two-step strategy combining automated processing and manual correction. Firstly, data in the source text and table are extracted using LLMs35,36, for comparative studies, and data in the figures were collected using our in-house tool28. The performance metrics of figure, text, and table processing show that the F1 scores of automated extraction are 55–92% (Table 3). GPT-3.5 achieves an F1 score of 89%, while GLM-4 achieves an F1 score of 91%, indicating that the LLMs are capable of understanding most of the text and can help reduce the human effort required to construct extraction rules or build NLP models. The F1 score for multi-panel image segmentation is 92%, but the precision of image auto-classification through code is low (39%), suggesting that the results of automatic image classification were unreliable. Therefore, the classification results are manually reviewed and corrected prior to image data extraction to ensure the reliability of the outcomes.

The low precision in image classification arises from the lack of standardized image formats for mechanical properties of low-T alloys. This variability in image content and features negatively impacts the performance of ResNet32, particularly on small datasets. The use of IMageEXtractor28 allows automated processing and assisted calibration of the coordinate axes and data points. To ensure full accuracy of the dataset for end uses in low-T alloy applications, the data records extracted from figures, tables, and texts are manually checked and corrected by two individuals in two rounds. The accuracy after the first round of verification reached 97%, and after the second round, 50 randomly selected documents are checked, which confirms that the accuracy of extraction is improved to be 100%. The manual checking and correction process requires substantial human effort, averaging ~ 30 minutes per article.

Our dataset21 compiles literature data from WoS up to May 31, 2024 (Fig. 1b). Compared to materials handbooks6,41 and commercial databases, our dataset21 offers several key advantages. It is freely accessible, adheres to the FAIR principles24 for data sharing, contains comprehensive metadata and scientific data from the literature, often omitted in other sources. Notably, data in materials handbooks typically present only fitted curves rather than original data points6,41, restricting their use in engineering references and data-centric research. In contrast, our dataset21 includes original experimental data points along with processing and testing conditions, enabling better statistical analysis of the composition-processing-microstructure-performance relationship. Moreover, unlike published materials handbooks, our dataset21 includes the latest research developments, such as those involving multi-principal element alloys, and is dynamic and updatable using the codes and scripts openly released with this paper21. This comprehensive dataset21 captures the temporal evolution of research on alloys at low temperatures, associated cooling technologies, and temperature ranges of exploration, thereby offering macro-level guidance for researchers in setting research directions, optimizing resource allocation, and improving research and development efficiency. These capabilities further facilitate advanced material screening, design, discovery, and automated laboratory studies enabled by state-of-the-art artificial intelligence techniques25,26.

Code availability

The automated extraction of information from texts, figures, and tables is based on open-source codes and models. Our in-house codes and scripts for literature acquisition, text mining using LLMs, data extraction from figures and tables, data management, and data visualization are publicly available on GitHub (https://github.com/xuzpgroup/HaoxuanTang/tree/main/LowTData)27,28. These resources can be used under the CC-BY license with appropriate acknowledgment of this article. Users can utilize the script ext_property.py to access and analyze material data at specific temperatures. A template script, add_entry.py, is provided for adding rarely reported entries such as grain sizes and moduli. External data imports into our dataset21 can be performed in the ULLTA format using the script import_ullta.py. Dataset scoring can be executed using the script cal_rate_score.py, allowing customization of weights by the users. More detailed instructions and information are available in the accompanying README.md file for users to consult for guidance on using the codes and scripts.

References

Timmerhaus, K. D. & Reed, R. P. Cryogenic Engineering: Fifty Years of Progress (Springer Science & Business Media, 2007).

Zu, H., Dai, W. & De Waele, A. Development of dilution refrigerators: A review. Cryogenics 121, 103390 (2022).

Qiu, Y., Yang, H., Tong, L. & Wang, L. Research progress of cryogenic materials for storage and transportation of liquid hydrogen. Metals 11, 1101 (2021).

Anoop, C. et al. A review on steels for cryogenic applications. Mater. Perform. Charact. 10, 16–88 (2021).

Duthil, P. Material Properties at Low Temperature. In CAS-CERN Accelerator School: Superconductivity for Accelerators, 77–95 (CERN, 2014).

McClintock, R. M. & Gibbons, H. P. Mechanical Properties of Structural Materials at Low Temperatures: A Compilation from the Literature Vol. 13 (National Bureau of Standards, 1960).

Pineau, A., Benzerga, A. A. & Pardoen, T. Failure of metals I: Brittle and ductile fracture. Acta Mater. 107, 424–483 (2016).

Zhang, Y., Ma, E., Sun, J. & Han, W. A unified model for ductile-to-brittle transition in body-centered cubic metals. J. Mater. Sci. Technol. 141, 193–198 (2023).

Tong, Y. et al. Outstanding tensile properties of a precipitation-strengthened FeCoNiCrTi0.2 high-entropy alloy at room and cryogenic temperatures. Acta Mater. 165, 228–240 (2019).

Bian, B. et al. A novel cobalt-free FeMnCrNi medium-entropy alloy with exceptional yield strength and ductility at cryogenic temperature. J. Alloys Compd. 827, 153981 (2020).

Zhang, D. et al. Superior strength-ductility synergy and strain hardenability of Al/Ta Co-doped NiCoCr twinned medium entropy alloy for cryogenic applications. Acta Mater. 220, 117288 (2021).

Liu, D. et al. Exceptional fracture toughness of CrCoNi-based medium-and high-entropy alloys at 20 Kelvin. Science 378, 978–983 (2022).

Yang, Y. et al. Status and challenges of applications and industry chain technologies of hydrogen in the context of carbon neutrality. J. Cleaner Prod. 376, 134347 (2022).

Zhou, Y. et al. Green hydrogen: A promising way to the carbon-free society. Chin. J. Chem. Eng. 43, 2–13 (2022).

Cecere, D., Giacomazzi, E. & Ingenito, A. A review on hydrogen industrial aerospace applications. Int. J. Hydrogen Energy 39, 10731–10747 (2014).

Kumar, S. et al. Synergy of green hydrogen sector with offshore industries: Opportunities and challenges for a safe and sustainable hydrogen economy. J. Cleaner Prod. 384, 135545 (2023).

Liu, W. et al. The production and application of hydrogen in steel industry. Int. J. Hydrogen Energy 46, 10548–10569 (2021).

Tollefson, J. et al. Hydrogen vehicles: Fuel of the future. Nature 464, 1262–1264 (2010).

Li, M. et al. Review on the research of hydrogen storage system fast refueling in fuel cell vehicle. Int. J. Hydrogen Energy 44, 10677–10693 (2019).

Brecht, T. L. Micromachined Quantum Circuits. Ph.D. Thesis, Yale University (2017).

Tang, H. & Xu, Z. Mechanical performance dataset for alloy with applications at low temperatures. figshare. https://doi.org/10.6084/m9.figshare.25912267 (2024).

Fukuyama, S. et al. Effect of temperature on hydrogen environment embrittlement of type 316 series austenitic stainless steels at low temperatures. J. Jpn. Inst. Met. 67, 456–459 (2003).

Deimel, P. & Sattler, E. Austenitic steels of different composition in liquid and gaseous hydrogen. Corros. Sci. 50, 1598–1607 (2008).

Wilkinson, M. D. et al. The FAIR guiding principles for scientific data management and stewardship. Sci. Data 3, 160018 (2016).

Calderón, L. A. Á. et al. Management of reference data in materials science and engineering exemplified for creep data of a single-crystalline Ni-based superalloy. Acta Mater. 286, 120735 (2025).

Xu, Z. & Zhang, Z. The need for standardizing fatigue data reporting. Nat. Mater. 23, 866–868 (2024).

Zhang, Z., Tang, H. & Xu, Z. Fatigue database of complex metallic alloys. Sci. Data 10, 447 (2023).

Zhang, Z. & Xu, Z. Fatigue database of additively manufactured alloys. Sci. Data 10, 249 (2023).

Liu, Y. et al. RoBERTa: A robustly optimized BERT pretraining approach. Preprint at https://arxiv.org/abs/1907.11692 (2019).

Kim, E. et al. Machine-learned and codified synthesis parameters of oxide materials. Sci. Data 4, 1–9 (2017).

Adhikari, N. S. & Agarwal, S. A comparative study of PDF parsing tools across diverse document categories. Preprint at https://arxiv.org/abs/2410.09871 (2025).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. Proc. IEEE Int. Conf. Comput. Vis. Pattern Recognit. 770–778 (2016).

Jensen, Z. et al. A machine learning approach to zeolite synthesis enabled by automatic literature data extraction. ACS Cent. Sci. 5, 892–899 (2019).

Taylor, M.Introduction to JavaScript Object Notation: A To-the-Point Guide to JSON. (CreateSpace Independent Publishing Platform, North Charleston, 2014).

Brown, T. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

Team GLM et al. ChatGLM: A Family of Large Language Models from GLM-130B to GLM-4 All Tools. Preprint at https://arxiv.org/abs/2406.12793 (2024).

Zhu, M. & Cole, J. M. PDFDataExtractor: A tool for reading scientific text and interpreting metadata from the typeset literature in the portable document format. J. Chem. Inf. Model. 62, 1633–1643 (2022).

Polak, M. P. & Morgan, D. Extracting accurate materials data from research papers with conversational language models and prompt engineering. Nat. Commun. 15, 1569 (2024).

Min, B. et al. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Comput. Surv. 56, 1–40 (2023).

Gilat, A. MATLAB: An Introduction with Applications. (John Wiley & Sons, Hoboken, 2017).

Koshelev, P. F. Mechanical properties of materials at low temperatures. Strength Mater. 3, 286–291 (1971).

Acknowledgements

The work was supported by Beijing Municipal Science and Technology Commission via grant Z231100007123015 and National Natural Science Foundation of China via grants 12425201 and 52090032.

Author information

Authors and Affiliations

Contributions

Z.X. conceived and supervised the research. H.T. performed the work. All authors participated in discussing the results and preparing the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tang, H., Chen, Z., Yao, X. et al. Mechanical performance dataset for alloy with applications at low temperatures. Sci Data 12, 1235 (2025). https://doi.org/10.1038/s41597-025-05512-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41597-025-05512-9