Abstract

Self-objectification, marked by an overemphasis on how one’s appearance is viewed by others, promotes increased body surveillance and dissatisfaction. Natural conversations centered around appearance, such as “fat talk”—where individuals, often women, engage in negative or self-deprecating remarks about their bodies or weight—are commonly used to induce a state of self-objectification. However, there is a notable lack of public datasets on brain signals during fat talk. In this dataset, we collected brain data from 31 female participants (aged 19.55 ± 0.89 years) using a 40-channel portable near-infrared device during fat talk and non-fat talk (topics such as travel and home decoration), primarily covering the frontal and parietal areas. Data analyses of subjective reports and fNIRS data revealed an increase in body surveillance and dissatisfaction, suggesting a significant activation of the self-objectification state. This dataset can be utilized to explore fNIRS data processing during natural interpersonal conversations and to gain insights into emotional and cognitive responses under conditions of self-dysregulation.

Background & Summary

Self-objectification, where individuals relinquish their subjectivity, not only causes various psychological and physiological problems such as depression, anxiety, eating disorders, and substance abuse, but also increases aggressive and violent behaviour toward others, reducing social vitality and creativity1,2. This phenomenon occurs when individuals place excessive importance on their appearance, neglecting intrinsic qualities such as abilities and character. According to objectification theory, self-objectification originates from the aesthetic standards perpetuated by social media and interpersonal interactions, which are conceptualized as sexual objectification3. A recent review found that sexual objectification—prominently social media and interpersonal interactions—has a moderate and positive impact on self-objectification (r = 0.41), with the correlation being both significant and robust4.

In recent years, several neuroimaging methods, such as EEG and fMRI, have been applied to detect brain activity during sexual objectification and self-objectification5. EEG evidence indicates that for non-objectified (non-nude male/female) images, inverted images evoke a greater N170 amplitude in the temporal lobe than upright images. However, for objectified (nude male/female) images, similar to images of objects (e.g., shoes), N170 amplitudes are similar in both inverted and upright images6,7,8. These findings suggest that individuals process objectified images similarly to objects, using analytical processing of the relationships among parts rather than focusing on the overall configuration. fMRI studies indicate that sexual objectification reduces social cognitive processing and interoception by decreasing activity in the medial prefrontal and cingulate cortices9,10. Furthermore, self-objectification levels are negatively correlated with spontaneous activity in the inferior frontal gyrus, which is positively related to interoception11. Notably, previous research was either conducted in a resting state with no experimental task or under highly controlled experimental stimuli and tasks. While these methods offer the advantage of minimizing confounding factors and revealing fundamental principles of cognitive processes in the brain, their ecological validity has been increasingly questioned: Can the insights gained from such controlled laboratory studies be applied to real-world situations?

Over the past decade, natural stimuli and naturalistic settings have sparked significant interest in neuroscience, leading to breakthroughs across various applications. Natural stimuli are valuable because their properties align with the brain’s perceptual systems, a result of our biological adaptation to the environment, leading to their constant processing by the brain12,13. Therefore, naturalistic stimuli and settings allow researchers to more accurately simulate and understand brain functions and social cognition during social interactions. For example, by simulating everyday conversational scenarios, researchers can observe how the brain processes genuine social information and emotional responses, rather than depending on highly controlled experimental settings. Participants also behave more naturally in these realistic social contexts. In addition, objectification and self-objectification are inherently social processes. Conversations centered around appearance, such as “fat talk”—where individuals, often women, engage in negative or self-deprecating remarks about their bodies or weight—are typical examples of natural stimuli and settings14,15. Individuals are almost daily exposed to appearance-related social evaluations through social media or everyday interactions, which can lead to self-objectification. However, there is a notable lack of publicly available datasets related to neural activity in response to objectification and self-objectification with natural stimuli and naturalistic settings.

In the current paper, we recorded 40-channel fNIRS signals of the brain simultaneously in 31 healthy female participants (aged 18–21 years) engaging in natural conversations. All participants were asked to engage in a fat talk to induce self-objectification. Both before and after the fat talk, participants were required to engage in appearance-unrelated conversations, such as discussing travel experiences or room decoration. A series of questionnaires were utilized to assess participants’ levels of body surveillance, body dissatisfaction, and emotional experiences, after each conversation. Throughout the three conversations, participants were recorded on video, while their brain activity in the prefrontal and frontoparietal regions was measured using fNIRS. Notably, fNIRS holds distinct advantages for investigating neural response in the context of natural and dynamic social interactions, as it exhibits greater tolerance to motion artifacts and lower sensitivity to electromagnetic interference than other neuroimaging methods16. This dataset can be used to examine fNIRS data processing in the context of natural interpersonal conversations and to gain insights into how emotional and cognitive responses are influenced by self-dysregulation.

Methods

Participants

Thirty-one female participants (M ± SD = 19.55 ± 0.89 years) were recruited through campus advertisements. All participants were right-handed, possessed either normal vision or vision corrected to normal, and had no record of neurological or psychiatric disorders. This study received ethical approval from the Human Participants Ethics Committee of Shanghai Normal University (2022-069). Prior to the experiment, all participants were provided with detailed information about the study, including how their data (i.e., interview transcripts, subjective reports, fNIRS data, and behavioral data) would be used and shared. All participants provided informed consent and voluntarily agreed to take part in the study. The consent explicitly included permission for the anonymous and open publication of their data, including interview transcripts. Strict measures were taken to protect their confidentiality, ensuring that all information remained secure and was used only for research purposes. We closely followed ethical guidelines and institutional review board regulations to maintain privacy and ethical standards. Upon completing the experiment, each participant received a compensation of 35 RMB.

Procedures

Upon entering the laboratory, participants were introduced to a female confederate and engaged in a two-minute warm-up to foster mutual familiarity. They were then asked to engage in three consecutive segments of dialogue with the confederate: the pre-test talk, fat talk, and post-test talk (Fig. 1). Each conversation was initiated by the confederate, who posed questions, and participants were required to answer and elaborate in detail. Throughout each conversation, the confederate consistently assumed the role of the questioner, guiding participants to explore and discuss relevant topics. Each conversation lasted five minutes.

During the pre-test talk, the confederate’s questions focused on travel (e.g., “Which scenic spots do you prefer? How do you plan your travel itinerary? How do you map out your travel route?”) or dormitory (e.g., “What style of interior design do you find appealing? How do you organize your space? What wallpapers do you recommend?”). During the fat talk, the confederate’s questions explored participants’ views of their own bodies, for example “Which part of your body brings you the most satisfaction?”, “What part of your body do you find least satisfying?”, and “Which specific aspect of your body would you like to alter?”. These questions were designed to prompt participants to voice negative self-evaluations of their bodies. The content of the post-test talk mirrored that of the pre-test talk. Specifically, each participant was tasked with discussing travel, obesity, and dormitory, with the sequence of discussing travel and dormitory being randomly balanced.

Throughout each conversation, fNIRS was used to record brain signals in the frontoparietal regions of each participant. Additionally, both video and audio recordings were made of the entire conversation. After each conversation, participants completed a series of questionnaires via a data collection platform to assess their levels of body surveillance, body dissatisfaction, and emotional experiences. Following the completion of the questionnaires, participants rested for 1 minute before beginning the next segment of conversation.

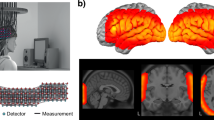

fNIRS data acquisition

The brain data were collected using the NIRSport device, manufactured by Hanxiang Company. Data were acquired at a sampling rate of 7.6 Hz. Two 4 × 4 probe boards, which together contained 16 emitters and 16 detectors, were placed on the head of each participant. These optodes collectively constituted 40 measurement channels, with a 3-cm separation between optodes (Fig. 1). The alignment of fNIRS channels with measurement points on the cerebral cortex was established using the virtual registration method17. This method has been validated through a multi-subject study examining anatomic craniocerebral correlation. The probable MNI coordinates of each channel (Table 1) were obtained using the built-in software of the NIRSport device (i.e., NIRSite). The brain regions corresponding to the channels were identified using the Automated Anatomical Labeling atlas18 and verified using data from Neurosynth, a platform for the large-scale and automated synthesis of fMRI data.

Subjective measures

After each conversation, participants were required to complete several questionnaires about body surveillance, body image, and emotional experiences. To measure participants’ body surveillance, the Objectified Body Consciousness Scale (OBCS) was used19. The eight items of the OBCS were rated on a 7-point Likert scale (“1 = Strongly Disagree” to “7 = Strongly Agree”). Total scores, obtained by summing responses, provide insights into respondents’ attitudes regarding appearance and clothing comfort.

The Body Image State Scale (BISS) was employed to evaluate an individual’s perception of their body image20. It encompasses thoughts about appearance, body shape, weight, attractiveness, and comparisons with one’s typical state and others. With six items, the BISS utilizes a Likert 9-point scoring system, where higher scores signify a more positive body image perception by the individual.

Six items from The Positive and Negative Affect Schedule (PANAS) were used to assess emotional experiences potentially related to self-objectification21. These items included Excited, Upset, Afraid, Nervous, Hostile, and Irritable. Responses were recorded on a percentage scale, with higher scores reflecting a more intense emotional experience.

Behavioral data coding

Based on the start and end times of the three conversation segments, each video was trimmed to 5 minutes, resulting in a total of 15 minutes of data divided into three 5-minute clips. These clips were processed using motion energy analysis to obtain behavioral data. Following standard research practices, the regions of interest (ROIs) were defined as the head and upper body22. The head ROI extended from a few inches above the participant’s head to the bottom of the chin, while the body ROI included the area from the bottom of the head ROI, beneath the chin, down to the horizontal surface of the couch. Motion energy parameters were recorded in a text file for each video segment.

Data Records

The dataset was shared anonymously, and the dataset contains no identifying information from the participants. The dataset23 is available online via the Open Science Framework (https://doi.org/10.17605/OSF.IO/HMVSU). It is organized by data type, with each type presented separately for each participant. The dataset comprises four types of data: subjective reports, fNIRS data, behavioral data, and conversation transcript, with information from 31 participants for each type (Table 2). Each data type is stored in its own folder.

The subjective report folder contains two files: demographic information (demographics.csv) and questionnaire data (questionnaire.csv). The demographic file includes participant IDs, age (years), gender, height (cm), weight (kg), and BMI. The questionnaire file contains the OBCS, BISS, and PANAS scores for each participant. Specifically, the column names include participant ID and subjective ratings under different conditions (i.e., pre, fat, post).

The fNIRS data folder contains individual subfolders for each participant. Since there are 31 participants, there are 31 subfolders, each containing a corresponding .csv file. It’s important to note that, due to the default settings in NIRScout, the subfolders are named based on the collection date. For example, for Participant 1, the folder is named “2023-06-02_004,” where “2023-06-02” refers to the collection date, and “004” indicates the fourth data set collected after the machine was powered on. The .csv file contains a mapping between the folder name and the participant ID. This file has two columns: the first column represents the participant ID, and the second column lists the corresponding folder name. Within each participant’s subfolder, raw fNIRS signals are saved in two files with .wl1 and .wl2 extensions, which correspond to photodiode readings at the two wavelengths used in fNIRS. The raw data is also stored in both .nirs and .snirf formats. The .nirs files can be imported into software like NIRS_SPM and Homer2 for data analysis, while the .snirf files are compatible with Homer3. In addition, the conversation start and end times are recorded in a .tri file. Each row in the .tri file contains data formatted as follows (using the first row from Participant 1’s .tri file as an example): ‘2023-06-02T11:13:03.356801;1673;8;4;2;42’. Here, ‘2023-06-02T11:13:03.356801’ represents the year, month, day, followed by ‘T’ and the hour, minute, second, and fractional second. ‘1673’ is the data sample number, ‘8’ is the step within the data sample, ‘4’ is the hardware ID, ‘2’ is the trigger marker, and ‘42’ is the sample within the step. Furthermore, the .tri file includes the following key time points: (i) The first and second rows represent the start and end times of the pre-test talk. (ii) The fourth and fifth rows represent the start and end times of the fat talk. (iii) The seventh and eighth rows represent the start and end times of the post-test talk.
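The .tri row layout described above lends itself to a small parser. The following is a minimal sketch in Python rather than an official reader: the names `TriggerRow`, `parse_tri_line`, and `condition_windows` are hypothetical, and the code assumes every row has exactly the six semicolon-separated fields shown in the example.

```python
from datetime import datetime
from typing import NamedTuple


class TriggerRow(NamedTuple):
    """One row of a .tri file (field names are illustrative)."""
    timestamp: datetime
    sample: int        # data sample number
    step: int          # step within the data sample
    hardware_id: int   # hardware ID
    marker: int        # trigger marker
    step_sample: int   # sample within the step


def parse_tri_line(line: str) -> TriggerRow:
    """Parse one semicolon-separated .tri row into a TriggerRow."""
    fields = line.strip().split(";")
    if len(fields) != 6:
        raise ValueError(f"expected 6 fields, got {len(fields)}: {line!r}")
    stamp = datetime.fromisoformat(fields[0])
    numbers = [int(value) for value in fields[1:]]
    return TriggerRow(stamp, *numbers)


# 1-based row numbers holding (start, end) of each conversation,
# following the description in the text.
CONDITION_ROWS = {"pre": (1, 2), "fat": (4, 5), "post": (7, 8)}


def condition_windows(rows: list[TriggerRow]) -> dict[str, tuple[int, int]]:
    """Return (start_sample, end_sample) for each conversation."""
    if len(rows) < 8:
        raise ValueError("at least 8 trigger rows are needed")
    return {
        name: (rows[start - 1].sample, rows[end - 1].sample)
        for name, (start, end) in CONDITION_ROWS.items()
    }


# The example row quoted in the text.
example = parse_tri_line("2023-06-02T11:13:03.356801;1673;8;4;2;42")
print(example.timestamp, example.sample, example.marker)
```

The sample indices returned by `condition_windows` can then be used to slice the fNIRS time series of each conversation; at a sampling rate of 7.6 Hz, a five-minute conversation spans roughly 2,280 samples.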

The behavioral data folder contains motion energy data for each participant, with a total of 93 .txt files. Each participant has three text files (.txt) corresponding to the three conversation conditions. The three files for the same participant are distinguished by suffixes representing these conditions. For example, for Participant 1, the folder contains three .txt files: 1_pre, 1_fat, and 1_post. Each text file includes two columns: the first column represents head motion energy, and the second column represents body motion energy.

The conversation data folder contains dialogue records for each participant, with a total of 186 .txt files. Each participant has six text files (.txt), corresponding to the three conversation conditions and two language versions. For the same participant, these six files are distinguished by suffixes representing the conditions (pre-test talk, fat talk, post-test talk) and language versions (Chinese, English). For example, for Participant 1, the folder contains six .txt files. Three of these are the Chinese conversation documents corresponding to the three conversational tasks, named 1_pre, 1_fat, and 1_post. The three English versions of these documents are named 1_pre_translated, 1_fat_translated, and 1_post_translated. Each text file includes the female confederate’s questions, the participant’s responses, and their corresponding timestamps. All conversations were conducted in Chinese; the English files are translations of the Chinese transcripts.
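The naming scheme of the behavioral and conversation folders can be checked programmatically. The sketch below, in Python, only builds the expected file names; it assumes that participant IDs run from 1 to 31 and that the names given in the text (e.g., 1_pre) carry a plain `.txt` extension, neither of which is guaranteed by the description. The function names are hypothetical.

```python
CONDITIONS = ("pre", "fat", "post")
N_PARTICIPANTS = 31  # assumed to be numbered 1..31


def behavioral_files(participant_id: int) -> list[str]:
    """Expected motion-energy files for one participant."""
    return [f"{participant_id}_{cond}.txt" for cond in CONDITIONS]


def conversation_files(participant_id: int) -> list[str]:
    """Expected transcript files: Chinese originals plus English translations."""
    chinese = [f"{participant_id}_{cond}.txt" for cond in CONDITIONS]
    english = [f"{participant_id}_{cond}_translated.txt" for cond in CONDITIONS]
    return chinese + english


all_behavioral = [
    name
    for pid in range(1, N_PARTICIPANTS + 1)
    for name in behavioral_files(pid)
]
all_conversation = [
    name
    for pid in range(1, N_PARTICIPANTS + 1)
    for name in conversation_files(pid)
]

# The totals should match the counts stated in the text (93 and 186).
print(len(all_behavioral), len(all_conversation))
```

Comparing these lists against a directory listing of the downloaded folders is a quick way to detect missing or misnamed files before analysis.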

Technical Validation

fNIRS data processing

First, a quality check was performed on the fNIRS data using proprietary MATLAB scripts (MathWorks, Natick, MA, USA) and the Homer3 toolbox24. Specifically, the time series of each channel was examined to detect artifacts and signal drift caused by motion. This check was carried out at the following stages: raw light intensity data, raw optical density data, and raw hemoglobin concentration data (i.e., HbO and HbR). In addition, power spectral density analysis was conducted to assess the presence of physiological components such as respiration and heartbeat in the power spectra of HbO and HbR25. The presence of a heartbeat signal in the power spectral density of HbO and HbR time series is considered a key indicator of high-quality probe-scalp coupling. In adults, the heartbeat frequency is typically around 1 Hz, while respiration-related components generally appear within the range of approximately 0.1–0.7 Hz. Figure 2 presents the time series and power spectral density plots of a typical participant.
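The heartbeat check can be illustrated with a plain periodogram. This is a simplified sketch in Python, not the authors' MATLAB and Homer3 pipeline: it runs on a synthetic signal, uses a naive discrete Fourier transform from the standard library, and the 0.8–1.5 Hz search band for the cardiac peak is an assumption chosen around the typical 1 Hz value mentioned above.

```python
import cmath
import math

FS = 7.6  # sampling rate in Hz, as reported for this dataset


def periodogram(signal: list[float], fs: float) -> tuple[list[float], list[float]]:
    """Return (frequencies, power) from a naive DFT of the mean-removed signal."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [value - mean for value in signal]
    freqs, power = [], []
    for k in range(1, n // 2 + 1):
        coeff = sum(
            value * cmath.exp(-2j * math.pi * k * t / n)
            for t, value in enumerate(centered)
        )
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def peak_frequency(freqs: list[float], power: list[float],
                   low: float, high: float) -> float:
    """Frequency of the largest spectral peak inside [low, high] Hz."""
    band = [(p, f) for f, p in zip(freqs, power) if low <= f <= high]
    if not band:
        raise ValueError("no frequency bins inside the requested band")
    return max(band)[1]


# Synthetic 30-second "HbO" trace: respiration at 0.3 Hz plus heartbeat at 1.0 Hz.
n_samples = 228  # 30 s * 7.6 Hz
synthetic = [
    math.sin(2 * math.pi * 0.3 * i / FS) + 0.5 * math.sin(2 * math.pi * 1.0 * i / FS)
    for i in range(n_samples)
]

freqs, power = periodogram(synthetic, FS)
cardiac_peak = peak_frequency(freqs, power, 0.8, 1.5)      # assumed cardiac band
respiration_peak = peak_frequency(freqs, power, 0.1, 0.7)  # band given in the text
print(cardiac_peak, respiration_peak)
```

For real recordings, a Welch estimate from a numerical library would be faster and less noisy; the naive version is used here only to keep the example dependency-free. A channel without a visible peak in the cardiac band would be a candidate for closer inspection of probe-scalp coupling.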

Quality check of fNIRS data for a typical participant. (A) Time series of each channel. The first row displays the time courses of raw optical density data for all channels in each wavelength (i.e., 760 nm and 850 nm). The second row shows an image plot of the time courses of raw optical density (also referred to as greyplots). The third row shows the time courses of HbO and HbR. (B) Power spectral density at the channel level for HbO and HbR signals.

After the data quality check, data preprocessing was performed (see details at https://doi.org/10.17605/OSF.IO/HMVSU). For each channel of each participant, the raw optical intensity data were converted into changes in optical density (Fig. 3). The modified Beer-Lambert law was then applied to transform the optical density data into HbO and HbR concentration values. Subsequently, Correlation-based Signal Improvement was employed to correct for motion artifacts caused by head movements26. Next, we applied wavelet transform denoising to reduce global and physiological noise27. Given that the HbO signal is notably sensitive to alterations in cerebral blood flow as measured by fNIRS28, our analysis focused on the HbO time series.
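Two of the preprocessing steps can be written compactly. The sketch below, in Python, is an illustration under stated assumptions rather than the shared preprocessing code: the optical density change is computed relative to the mean intensity of the channel (a common convention, assumed here), and the Correlation-based Signal Improvement follows the published formulation of Cui et al., in which the corrected HbO is half of (HbO − α·HbR) with α the ratio of the standard deviations of HbO and HbR. The modified Beer-Lambert step is omitted because it requires extinction coefficients and pathlength factors that are not stated in this section.

```python
import math
import statistics


def optical_density(intensity: list[float]) -> list[float]:
    """Change in optical density relative to the mean intensity (assumed baseline)."""
    baseline = statistics.fmean(intensity)
    return [-math.log(value / baseline) for value in intensity]


def cbsi(hbo: list[float], hbr: list[float]) -> tuple[list[float], list[float]]:
    """Correlation-based Signal Improvement for one channel.

    Forces the corrected HbO and HbR to be perfectly negatively correlated,
    which suppresses motion components shared by both signals.
    """
    std_hbr = statistics.pstdev(hbr)
    if std_hbr == 0:
        raise ValueError("HbR has zero variance; alpha is undefined")
    alpha = statistics.pstdev(hbo) / std_hbr
    hbo_corrected = [0.5 * (x - alpha * y) for x, y in zip(hbo, hbr)]
    hbr_corrected = [-value / alpha for value in hbo_corrected]
    return hbo_corrected, hbr_corrected


# Toy example: a shared spike at index 2 mimics a motion artifact in both signals.
hbo_raw = [0.10, 0.20, 1.30, 0.40, 0.50]
hbr_raw = [-0.05, -0.10, 0.85, -0.20, -0.25]
hbo_clean, hbr_clean = cbsi(hbo_raw, hbr_raw)
print(hbo_clean)
```

Because the method assumes that true HbO and HbR are anticorrelated, it is best suited to artifacts that move both signals in the same direction, as head movements often do.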

The preprocessed data were then entered into group analysis using the NIRS_SPM toolbox. Specifically, a general linear model, encompassing condition effects, a mean, and a linear trend, was employed to calculate parameter estimates. The resulting estimates, denoted as beta, captured the level of brain activation in each channel under each condition (i.e., the pre-test, fat, and post-test talks). The beta values were then subjected to a repeated measures analysis of variance (ANOVA) in SPSS, with false discovery rate correction applied to account for data collected simultaneously from numerous spatially adjacent channels (i.e., 40 channels). Figure 4 displays the channels identified as significant by the ANOVA before correction for multiple comparisons (7, 11, 12, and 27), which are spatially contiguous and situated within neighboring brain regions. After correction for multiple comparisons, only channel 7 remained significant; this channel is located approximately over the left inferior frontal cortex. This result aligns with previous findings that have linked the activity of the inferior frontal cortex to body surveillance11.
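The effect of false discovery rate correction across 40 channels can be illustrated with the Benjamini-Hochberg step-up procedure. This is a sketch in Python: the text does not state which FDR procedure or threshold was used, so both the Benjamini-Hochberg choice and q = 0.05 are assumptions, and the p-values below are invented purely for illustration and are not taken from the dataset.

```python
def benjamini_hochberg(p_values: list[float], q: float = 0.05) -> list[bool]:
    """Return a reject/keep flag per test using the Benjamini-Hochberg procedure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k such that p_(k) <= (k / m) * q.
    cutoff_rank = 0
    for rank, index in enumerate(order, start=1):
        if p_values[index] <= rank / m * q:
            cutoff_rank = rank
    rejected = [False] * m
    for rank, index in enumerate(order, start=1):
        if rank <= cutoff_rank:
            rejected[index] = True
    return rejected


# Hypothetical p-values for 40 channels (NOT the study's results):
# four channels below 0.05 uncorrected, the rest clearly non-significant.
p_by_channel = {channel: 0.5 for channel in range(1, 41)}
p_by_channel.update({7: 0.0005, 11: 0.01, 12: 0.02, 27: 0.03})

channels = sorted(p_by_channel)
flags = benjamini_hochberg([p_by_channel[c] for c in channels])
surviving = [c for c, keep in zip(channels, flags) if keep]
print(surviving)
```

With 40 tests, the smallest p-value must fall below 0.05/40 = 0.00125 to survive on its own, which shows how several uncorrected findings can reduce to a single channel after correction.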

Subjective data processing

A group-level analysis was conducted on subjective scores at the three time points. Specifically, the one-way repeated measures ANOVA for Condition (pre-test talk, fat talk, post-test talk) was conducted on the scores of body surveillance, body satisfaction, and the emotional levels of Excited, Upset, Afraid, Nervous, Hostile, and Irritable. If the main effect was significant, further simple effects analysis was performed to compare differences between the conditions. Figure 5 illustrates the variations in body dissatisfaction and body surveillance levels among participants following different types of conversations. Body dissatisfaction and body surveillance increased after the fat talk compared to the pre-test talk. This is consistent with previous findings, which showed that fat talk was positively correlated with body surveillance and body dissatisfaction29.
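The one-way repeated measures ANOVA partitions the total variability into condition, participant, and residual components. The sketch below, in Python, computes only the F statistic and its degrees of freedom from a participants-by-conditions table; it is not the SPSS procedure used by the authors, it applies no sphericity correction, and the toy scores are invented for illustration. The function name `rm_anova_f` is hypothetical.

```python
def rm_anova_f(scores: list[list[float]]) -> tuple[float, int, int]:
    """One-way repeated measures ANOVA.

    scores[i][j] is the score of participant i under condition j.
    Returns (F, df_condition, df_error).
    """
    n = len(scores)        # participants
    k = len(scores[0])     # conditions
    if any(len(row) != k for row in scores):
        raise ValueError("every participant needs one score per condition")

    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((value - grand) ** 2 for row in scores for value in row)
    ss_cond = n * sum((mean - grand) ** 2 for mean in cond_means)
    ss_subj = k * sum((mean - grand) ** 2 for mean in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition-by-participant residual

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


# Toy scores for three participants under pre, fat, and post conditions.
toy_scores = [
    [1.0, 2.0, 4.0],
    [2.0, 3.0, 4.0],
    [3.0, 5.0, 6.0],
]
f_value, df_cond, df_error = rm_anova_f(toy_scores)
print(f_value, df_cond, df_error)
```

Converting F into a p-value requires the F distribution from a statistics package, and pairwise follow-up comparisons would be run only when the main effect is significant, as described above.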

Usage Notes

This dataset provides an opportunity to explore effective fNIRS data processing algorithms during natural conversations. It is particularly useful for examining methods to effectively mitigate head motion artifacts, as some degree of head movement is unavoidable during such conversations. Specifically, this dataset can be used to assess and compare the efficacy of various head motion artifact removal techniques, including wavelet filtering, spline interpolation, Kalman filtering, autoregressive algorithms, and short channel separation correction30.

Furthermore, this dataset can be used to investigate changes in functional connectivity associated with self-objectification. In particular, a thorough analysis of the relationships between subjective reports, behavioral data, dialogue data, and neural data can reveal possible cognitive and emotional shifts that occur during the activation of self-objectification. For example, dialogue data can be analyzed using EmoAtlas to assess emotional and semantic components31, or the data can be encoded and combined with corresponding behavioral and neural data extracted from specific time windows for targeted analysis. These data also provide insights into how individuals restore their cognitive and emotional equilibrium as they recover from the state of self-objectification.

Code availability

The subjective and fNIRS dataset presented in this manuscript includes raw data that have not been processed by any script or software-based procedure. The behavioral dataset was analysed from video recordings using the motion energy analysis toolbox. Additionally, the code for fNIRS data quality validation and preprocessing has been shared (see details on https://doi.org/10.17605/OSF.IO/HMVSU).

References

Ward, L. M., Daniels, E. A., Zurbriggen, E. L. & Rosenscruggs, D. The sources and consequences of sexual objectification. Nat. Rev. Psychol. 2, 496–513 (2023).

Poon, K.-T., Chen, Z., Teng, F. & Wong, W.-Y. The effect of objectification on aggression. J. Exp. Soc. Psychol. 87, 103940 (2020).

Fredrickson, B. L. & Roberts, T.-A. Objectification Theory: Toward understanding women’s lived experiences and mental health risks. Psychol. Women Q. 21, 173–206 (1997).

Karsay, K., Knoll, J. & Matthes, J. Sexualizing media use and self-objectification: A meta-analysis. Psychol. Women Q. 42, 9–28 (2018).

Bernard, P., Cogoni, C. & Carnaghi, A. The sexualization-objectification Link: sexualization affects the way people see and feel toward others. Curr. Dir. Psychol. Sci. 29, 134–139 (2020).

Bernard, P. et al. The neural correlates of cognitive objectification: An ERP study on the Body inversion effect associated with sexualized bodies. Soc. Psychol. Personal. Sci. 9, 550–559 (2018).

Bernard, P. et al. Revealing clothing does not make the object: ERP evidences that cognitive objectification is driven by posture suggestiveness, not by revealing clothing. Pers. Soc. Psychol. Bull. 45, 16–36 (2019).

Xiao, L., Li, B., Zheng, L. & Wang, F. The relationship between social power and sexual objectification: Behavioral and ERP data. Front. Psychol. 10, 57 (2019).

Cikara, M., Eberhardt, J. L. & Fiske, S. T. From agents to objects: Sexist attitudes and neural responses to sexualized targets. J. Cogn. Neurosci. 23, 540–551 (2011).

Cogoni, C., Carnaghi, A. & Silani, G. Reduced empathic responses for sexually objectified women: An fMRI investigation. Cortex 99, 258–272 (2018).

Du, X. et al. The relationship between brain neural correlates, self-objectification, and interoceptive sensibility. Behav. Brain Res. 439, 114227 (2023).

Dikker, S. et al. Crowdsourcing neuroscience: Inter-brain coupling during face-to-face interactions outside the laboratory. NeuroImage 227, 117436 (2021).

Sonkusare, S., Breakspear, M. & Guo, C. Naturalistic stimuli in neuroscience: Critically acclaimed. Trends Cogn. Sci. 23, 699–714 (2019).

Jones, M. D., Crowther, J. H. & Ciesla, J. A. A naturalistic study of fat talk and its behavioral and affective consequences. Body Image 11, 337–345 (2014).

Linder, J. R. & Daniels, E. A. Sexy vs. sporty: The effects of viewing media images of athletes on self-objectification in college students. Sex Roles 78, 27–39 (2018).

Egetemeir, J., Stenneken, P., Koehler, S., Fallgatter, A. J. & Herrmann, M. J. Exploring the neural basis of real-life joint action: Measuring brain activation during joint table setting with Functional Near-Infrared Spectroscopy. Front. Hum. Neurosci. 5 (2011).

Tsuzuki, D. et al. Virtual spatial registration of stand-alone fNIRS data to MNI space. NeuroImage 34, 1506–1518 (2007).

Tzourio-Mazoyer, N. et al. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage 15, 273–289 (2002).

Breines, J. G., Crocker, J. & Garcia, J. A. Self-objectification and well-being in women’s daily lives. Pers. Soc. Psychol. Bull. 34, 583–598 (2008).

Cash, T., Fleming, E., Alindogan, J., Steadman, L. & Whitehead, A. Beyond body image as a trait: The development and validation of the Body Image States Scale. Eat Disord. 10, 103–113 (2002).

Watson, D., Clark, L. A. & Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Pers. Soc. Psychol. 54, 1063–1070 (1988).

Lopes-Rocha, A. C. et al. Motion energy analysis during speech tasks in medication-naïve individuals with at-risk mental states for psychosis. Schizophr. 8, 73 (2022).

Hu, Y. Dataset of natural conversations about appearance using fNIRS. OSF https://doi.org/10.17605/OSF.IO/HMVSU (2025).

Huppert, T. J., Diamond, S. G., Franceschini, M. A. & Boas, D. A. HomER: A review of time-series analysis methods for near-infrared spectroscopy of the brain. Appl. Opt. 48, D280 (2009).

Blanco, B., Molnar, M., Carreiras, M. & Caballero-Gaudes, C. Open access dataset of task-free hemodynamic activity in 4-month-old infants during sleep using fNIRS. Sci. Data 9(1), 102 (2022).

Cui, X., Bray, S. & Reiss, A. L. Functional near infrared spectroscopy (NIRS) signal improvement based on negative correlation between oxygenated and deoxygenated hemoglobin dynamics. NeuroImage 49, 3039–3046 (2010).

Duan, L. et al. Wavelet-based method for removing global physiological noise in functional near-infrared spectroscopy. Biomed. Opt. Express 9, 3805 (2018).

Cui, X., Bryant, D. M. & Reiss, A. L. NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. NeuroImage 59, 2430–2437 (2012).

Shannon, A. & Mills, J. S. Correlates, causes, and consequences of fat talk: A review. Body Image 15, 158–172 (2015).

Hu, Y. et al. How to calculate and validate inter-brain synchronization in a fNIRS hyperscanning study. J. Vis. Exp. 175, e62801 (2021).

Semeraro, A. et al. EmoAtlas: An emotional network analyzer of texts that merges psychological lexicons, artificial intelligence, and network science. Behav. Res. Methods 57(2), 77 (2025).

Acknowledgements

We would like to thank Jianmei Shi, Qi Zhou, Mei Fu, and Liming Yue for their assistance in data collection. This work was supported by the Zhejiang Provincial Natural Science Foundation of China (No. LMS25C090002), and the National Natural Science Foundation of China (Nos. 62207025, 62337001) to Yafeng Pan.

Author information

Contributions

Yinying Hu and Xiangping Gao conceptualized the study. Yinying Hu selected the data and prepared the dataset for publication. Yinying Hu and Yafeng Pan performed the dataset anonymization. Yinying Hu and Yuqi Liu organized the dataset and assessed its quality. All authors wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hu, Y., Liu, Y., Hou, Y. et al. Dataset of natural conversations about appearance using fNIRS. Sci Data 12, 1486 (2025). https://doi.org/10.1038/s41597-025-05574-9

DOI: https://doi.org/10.1038/s41597-025-05574-9