Abstract

Fusion–Fission Optimization (FuFiO) is proposed as a new metaheuristic algorithm that simulates the tendency of nuclei to increase their binding energy and achieve higher levels of stability. In this algorithm, nuclei are divided into two groups, namely stable and unstable. Each nucleus can interact with other nuclei through three types of nuclear reactions: fusion, fission, and β-decay. These reactions establish the stabilization process through which unstable nuclei gradually turn into stable nuclei. A set of 120 mathematical benchmark test functions is selected to evaluate the performance of the proposed algorithm. The results of the FuFiO algorithm and the related non-parametric statistical tests are compared with those of other metaheuristic algorithms to make a valid judgment. Furthermore, the more complicated test functions of two recent Competitions on Evolutionary Computation, namely CEC-2017 and CEC-2019, are solved and analyzed. The obtained results show that the FuFiO algorithm is superior to the other metaheuristic algorithms in most of the examined cases.

Introduction

Optimization is a branch of applied mathematics that is widely used in various scientific disciplines because many problems can be expressed in the form of an optimization problem. With the present rate of progress in all scientific fields, new real-world problems have become so complex that conventional mathematical methods, such as exact optimizers, cannot solve them efficiently. In particular, exact optimizers are not sufficiently efficient in dealing with many non-continuous, non-differentiable, and large-scale real-world multimodal problems1.

Early studies in the field of nature-inspired computation demonstrated that some numerical methods developed based on the behavior of natural creatures can solve real-world problems more effectively than exact methods2. Metaheuristic methods are numerical techniques that combine the heuristic rules of natural phenomena with a randomization process. Over the past few decades, many researchers have concluded that developing and enhancing metaheuristic algorithms is a practically effective and computationally efficient approach to tackling complex and challenging unsolved real-world optimization problems3,4,5,6,7,8. A key advantage of metaheuristic methods is that they are problem-independent algorithms that provide acceptable solutions to complex and highly nonlinear problems in a reasonable time. Furthermore, implementers generally do not need to modify the algorithm structure; they only need to formulate the problem according to the requirements of the chosen metaheuristic. The core operation of metaheuristic approaches is based on non-gradient procedures, so there is no need for cumbersome computations such as calculations of derivatives and their multivariable generalizations. Moreover, randomization components often enable metaheuristic algorithms to perform better than conventional methods on such problems. In particular, their stochastic nature enables them to escape from local optima and move toward the global optimum in the search space of large-scale and challenging optimization problems.

Conventionally, two general criteria are used to classify metaheuristic methods: (1) the number of agents, and (2) the origin of inspiration. Based on the first criterion, metaheuristic algorithms can be divided into two groups: (1) single-solution-based algorithms, and (2) population-based algorithms. According to the origin of inspiration, metaheuristic algorithms are divided into two main categories, namely Evolutionary Algorithms (EAs) and Swarm Intelligence (SI) algorithms. Single-solution-based methods modify one solution (agent) during the search process, as in the Simulated Annealing (SA) algorithm9; in population-based algorithms, on the other hand, a population of solutions is used to find the optimal answer, as in the Particle Swarm Optimization (PSO) algorithm10.

In EAs, the genetic evolution process is the main origin. Evolutionary Programming (EP)2, Evolutionary Strategy (ES)11, Genetic Algorithm (GA)12, and Differential Evolution (DE) are among the most famous methods in this domain. Besides, Simon13 proposed the Biogeography-Based Optimization (BBO) algorithm, which is used for global recombination and uniform crossover. Also, SI algorithms are based on the simulation of the collective behavior of creatures. SI algorithms are classified into three categories as follows. The first category is associated with the behavioral models of animals such as PSO10, Ant Colony Optimization (ACO)14, Artificial Bee Colony (ABC)15, Firefly Algorithm (FA)16, Cuckoo Search (CS)17, Bat Algorithm (BA)18, Eagle Strategy (ES)19, Krill Herd (KH)20, Flower Pollination Algorithm (FPA)21, Grey Wolf Optimizer (GWO)22, Ant Lion Optimizer (ALO)23, Grasshopper Optimization Algorithm (GOA)24, Symbiotic Organisms Search (SOS)25,26, Moth Flame Optimizer (MFO)27, Dragonfly Algorithm (DA)28, Salp Swarm Algorithm (SSA)29, Crow Search Algorithm (CSA)30, Whale Optimization Algorithm (WOA)31,32, Developed Swarm Optimizer (DSO)33, Spotted Hyena Optimizer (SHO)34, Farmland Fertility Algorithm (FFA)35,36, African Vultures Optimization (AVO)37, Bald Eagle Search Algorithm (BES)38,39, Tree Seed Algorithm (TSA)40,41, and Artificial Gorilla Troops (GTO) optimizer42. The second category concerns algorithms based on physical and mathematical laws, such as Simulated Annealing (SA)9, Big Bang–Big Crunch optimization (BB–BC)43, Charged System Search (CSS)44,45, Chaos Game Optimization (CGO)46,47, Gravitational Search Algorithm (GSA)48, Sine Cosine Algorithm (SCA)49, Multi-Verse Optimizer (MVO)50, Atom Search Optimization (ASO)51, Crystal Structure Algorithm (CryStAl)52,53,54,55, and Electromagnetic Field Optimization (EFO)56. The third category includes algorithms that mimic various optimal behaviors of humans, for example, Imperialist Competitive Algorithm (ICA)57, Teaching Learning Based Optimization (TLBO)58, Interior Search Algorithm (ISA)59, and Stochastic Paint Optimizer (SPO)60.

Although a wide range of metaheuristic methods has been developed over the past few decades, they solve problems with different accuracies and time efficiencies; that is, one algorithm may not solve a specific problem with the desired accuracy or within a reasonable time, whereas another algorithm may be capable of achieving this goal. Therefore, computational time and accuracy are two essential considerations in developing novel metaheuristic methods: new methods aim to search the problem space more efficiently and to find more accurate solutions to complex and large-scale problems in less time than previous ones. This explains the ongoing ambition in the optimization community to develop novel high-performance optimizers. Each algorithm has particular advantages and disadvantages, which are listed in Table 1 for the abovementioned algorithms.

The contribution of this paper is a new physics-based metaheuristic algorithm called the Fusion–Fission Optimization (FuFiO) algorithm. The proposed algorithm simulates the tendency of nuclei to increase their binding energy and achieve higher levels of stability. In the FuFiO algorithm, the nuclei are divided into two groups, namely stable and unstable, based on their fitness. Each nucleus can interact with other nuclei through three types of nuclear reactions: fusion, fission, and \(\beta\)-decay. These reactions establish the stabilization process through which unstable nuclei gradually turn into stable nuclei.

The performance of the FuFiO algorithm is examined in two steps. In the first step, FuFiO and seven other metaheuristic algorithms are used to solve a complete set of 120 benchmark mathematical test functions (including 60 fixed-dimensional and 60 N-dimensional test functions). Then, to make a valid judgment about the performance of the FuFiO algorithm, the statistical results of FuFiO and the other algorithms are used as a dataset for non-parametric statistical analyses. In the second step, to compare the ability of the proposed algorithm with state-of-the-art algorithms, the single-objective real-parameter numerical optimization problems of the recent Competitions on Evolutionary Computation (CEC 2017), including sets of 10-, 30-, 50-, and 100-dimensional benchmark test functions, are considered. The main novelty of this work is two-fold. First, the source of inspiration is provided by some fundamental aspects of nuclear physics. Second, and more importantly, the theory of nuclear binding energy to generate stable nuclei is used to develop the equations of a metaheuristic method for the first time. In this model, the tendency of nuclei to increase their binding energy and achieve higher levels of stability using nuclear reactions, including fusion, fission, and β-decay, is the central principle used to develop the three main steps of the new algorithm.

The rest of this paper is organized as follows: “Fusion–fission optimization (FuFiO) algorithm” section describes the background, inspiration, mathematical model, and implementation of the proposed algorithm. “FuFiO validation” section explains comparative metaheuristics, mathematical functions, comparative results, and statistical analyses. “Analyses based on competitions on evolutionary computation (CEC)” section compares the performance of the FuFiO algorithm on the CEC-2017 and CEC-2019 special sessions with that of state-of-the-art algorithms. Finally, conclusions are given in “Conclusions and future work” section.

Fusion–fission optimization (FuFiO) algorithm

In the following sub-sections, the general principles of nuclear reactions, nuclear binding energy, and nuclear stability are discussed as an inspirational basis for the development of the Fusion–Fission Optimization (FuFiO) algorithm.

Inspiration

In nuclear physics, the minimum energy needed to dismantle the nucleus of an atom into its constituent nucleons, i.e., the collection of protons (Z) and neutrons (N), is called nuclear binding energy. The strong nuclear force that attracts the nucleons to each other has a positive value and creates this nuclear binding energy. Therefore, a nucleus with more binding energy is more stable93. Importantly, the Coulomb repulsive force of protons reduces the nuclear attraction force and decreases the binding energy. Consequently, the stability of the nucleus tends to increase when protons are replaced with neutrons. Also, in the nuclei, most of the paired protons are close to each other such that their repulsive force counteracts the strong nuclear force, leading to instability.

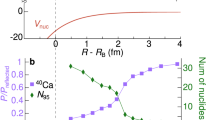

The concept of average nuclear binding energy, denoted by \({B}_{Avg}\), is generally used to evaluate the stability of nuclei. \({B}_{Avg}\) is the nuclear binding energy per nucleon, i.e., the average amount of energy required to remove a single nucleon from the nucleus. As \({B}_{Avg}\) increases, removing a nucleon from the nucleus becomes progressively more difficult; in other words, the most stable nucleus corresponds to the highest \({B}_{Avg}\). The experimental diagram of \({B}_{Avg}\) against mass number \(A\) is shown in Fig. 1. According to this diagram, the binding energy reaches its peak at \(A=56\) (\({}^{56}\mathrm{Fe}\)), and for \(A>56\) it decreases slowly, such that the diagram has a relatively flat behavior due to saturation. The \({}^{56}\mathrm{Fe}\) nucleus divides the diagram into two parts, namely fusion and fission. The nuclei of the fusion part tend to participate in a fusion reaction, whereas in the fission part, each nucleus tends to participate in a fission reaction.

Experimental binding energy \({B}_{Avg}(A, Z)\) with respect to mass number A49.

Fusion is a nuclear reaction that occurs when two highly energetic stable nuclei slam together to form a heavier stable nucleus. In the sun, this reaction releases a large amount of energy through the fusion of two hydrogen nuclei to form one helium nucleus. On the other hand, fission is a nuclear reaction in which a larger unstable nucleus is split into two smaller (stable or unstable) nuclei after being hit by a smaller stable or unstable one. This type of reaction is used to produce a large amount of energy in nuclear power reactors through the fission of uranium and plutonium nuclei by neutrons. The procedures of nuclear fusion and fission are illustrated in Fig. 2a,b, respectively.

In nuclear processes, in addition to fusion and fission, there is another process called \(\beta\)-decay. The two types of \(\beta\)-decay are known as \({\beta }^{-}\) and \({\beta }^{+}\). In \({\beta }^{-}\)-decay, a neutron is converted to a proton, and the process creates an electron and an electron antineutrino (\(\overline{v }\)), while in \({\beta }^{+}\)-decay, a proton is converted to a neutron and the process creates a positron and an electron neutrino (\(v\))94. Neutrino and antineutrino particles have no essential role in the reactions modeled here because they have considerably smaller masses compared to other particles. Therefore, protons and neutrons are the main factors in \({\beta }^{\pm }\)-decays. In Fig. 3, the schematic representations of \({\beta }^{-}\)- and \({\beta }^{+}\)-decays are presented.

Mathematical model

In this section, we describe the mathematical model of the FuFiO algorithm, which is developed based on the tendency of nuclei to increase their binding energy and get a higher level of stability using nuclear reactions, including fusion, fission, and \(\beta\)-decay. Importantly, as a nucleus with a higher level of binding energy is considered a better solution, the FuFiO algorithm will move in a direction that increases the binding energy of the nuclei. FuFiO is designed as a population-based metaheuristic method in which a set of nuclei are considered as the agents of the population. Each agent of the population has a specific position, and each of them has a particular dimension (d) which is determined by the number of problem variables. Therefore, the nuclei move in a d-dimensional space, and are represented in the form of a matrix as follows:

where \(i (i = 1, 2, 3, \dots , n)\) is the counter of nucleus and \(j(j=1, 2, 3, \dots , d)\) is the counter of design variables; \(n\) is the population size; \(X\) is the matrix of positions of all nuclei updated in each iteration of algorithm; \({X}_{i}\) is the position of the i-th nucleus; and \({x}_{i}^{j}\) is the j-th design variable of the i-th nucleus the initial value of which is determined randomly as follows:

where \({x}_{i}^{j}\left(0\right)\) represents the initial position of the j-th design variable of the i-th nucleus; \({ub}^{j}\) and \({lb}^{j}\) are respectively the maximum and minimum possible values for the j-th design variable; and \(r\) is a random number in the interval [0,1]. The set of initial \({x}_{i}^{j}\left(0\right)\) s will create \({X}^{0}\) that represents the initial position of nuclei. Furthermore, in the FuFiO method, the nuclei are divided into two groups, namely stable and unstable nuclei, based on the level of binding energy. Depending on the types of reacting nuclei, nuclear reactions (i.e., fusion, fission, and \(\beta\)-decay) are regarded differently. In other words, as illustrated in Fig. 4, three different types of reaction can be considered in each group for nuclei to update their positions.
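The random initialization described above can be sketched in a few lines. This is a minimal illustration, assuming the common uniform form \({x}_{i}^{j}(0) = {lb}^{j} + r\,({ub}^{j} - {lb}^{j})\) with an independent \(r\) drawn for every variable; the function name `init_population` and its arguments are hypothetical and not taken from the paper.

```python
import random

def init_population(n, lb, ub, rng=random):
    """Return n nuclei, each a list of d values drawn uniformly within [lb[j], ub[j]].

    Assumed form: x_i^j(0) = lb^j + r * (ub^j - lb^j), with a fresh r per variable.
    """
    d = len(lb)
    return [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(d)]
            for _ in range(n)]

# Example: 5 nuclei in a 2-dimensional search space.
X0 = init_population(n=5, lb=[-5.0, 0.0], ub=[5.0, 1.0])
```

Each row of `X0` plays the role of one \({X}_{i}\), so the list of rows corresponds to the position matrix \({X}^{0}\).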

The mathematical formulation of each reaction in each group is modeled as follows:

Group 1: Stable nucleus

If the i-th nucleus is stable (\({X}_{i}^{stable}\)), one of the following three reactions is selected randomly:

Reaction 1: In this reaction, the i-th nucleus slams with another stable nucleus. The new position is determined as follows:

where r is a random vector in [0,1] and \({X}_{j}^{stable}\) is a stable nucleus selected randomly from other stable nuclei. This reaction simulates fusion, where two stable nuclei slam together to produce a new nucleus. Figure 5 shows a schematic view of this reaction, from which it can be seen that the new solution is a random point generated in the reaction space using \(r\) and \(1-r\).
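The description of the new solution as a random point built from \(r\) and \(1-r\) suggests a component-wise convex combination of the two stable nuclei. The sketch below illustrates that reading only; the exact form \(r \cdot {X}_{i}^{stable} + (1-r) \cdot {X}_{j}^{stable}\) is an assumption, and the function name `fusion` is hypothetical.

```python
import random

def fusion(x_i, x_j, rng=random):
    """Blend two stable nuclei component-wise with weights r and 1 - r.

    Assumed form (not confirmed by the text): new[k] = r_k * x_i[k] + (1 - r_k) * x_j[k],
    where each r_k is drawn uniformly from [0, 1).
    """
    new = []
    for a, b in zip(x_i, x_j):
        r = rng.random()
        new.append(r * a + (1.0 - r) * b)
    return new

child = fusion([0.0, 10.0], [1.0, -10.0])
```

Under this reading, every coordinate of the new nucleus lies between the corresponding coordinates of the two parents, so the reaction searches the box spanned by the pair.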

Reaction 2: If the i-th nucleus interacts with an unstable nucleus, this collision produces a new solution expressed as:

where \({X}_{j}^{unstable}\) is an unstable nucleus selected randomly from other unstable nuclei. The process of this reaction, shown in Fig. 6, simulates the rule of fission, where a stable nucleus is hit by an unstable one.

Reaction 3: If the i-th nucleus decays, the new solution will be generated as follows:

where \(p\) denotes a random subset of problem variables; \(d\) is the set of all variables; \(k\) is the counter of variables; \(R\) is a random nucleus; and \(UB\) and \(LB\) are the vectors of the upper and lower bounds of variables, respectively. This reaction models the process of \(\beta\)-decay in a stable nucleus as presented in Fig. 7.

Group 2: Unstable nucleus

In the second group, if the i-th nucleus is unstable (\({X}_{i}^{unstable}\)), one of the following three reactions will be used randomly to update the i-th nucleus:

Reaction 1: If the unstable nucleus slams with another unstable nucleus, the new position is obtained as follows:

where \(r\) is a random vector in interval [0,1] and \({X}_{j}^{unstable}\) is an unstable nucleus selected randomly from other unstable nuclei. As illustrated in Fig. 8, this reaction simulates the rule of fission where an unstable nucleus is hit by an unstable one.

Reaction 2: If the unstable nucleus, \({X}_{i}^{unstable}\), interacts with a stable nucleus, the new position is as follows:

where \({X}_{j}^{stable}\) is a randomly selected stable nucleus from stable nuclei. The process of this reaction, which establishes a fission model of stable and unstable nuclei, is shown in Fig. 9.

Reaction 3: If the i-th unstable nucleus decays, the new position is defined as follows:

where \(p\) denotes a random subset of variables; \(d\) is the set of all variables; \(k\) is the counter of variables; and \({X}_{j}^{stable}\) is a randomly selected nucleus from stable nuclei. As presented in Fig. 10, this reaction models the \(\beta\)-decay process of an unstable nucleus.

Both third reactions in the stable and unstable groups represent the \({\beta }^{\pm }\)-decays. In the former reaction, a random set of decision variables takes new random values between their corresponding allowable lower and upper bounds, whereas, in the latter one, a random subset of decision variables takes their new values from the corresponding decision variables of a randomly-chosen stable solution. Importantly, the \({\beta }^{\pm }\)-decays are considered as mutation operators to escape from local optima.
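The two decay operators described in this paragraph can be illustrated as follows. This is a hedged sketch: how the random subset \(p\) is drawn is not specified in the text, so a non-empty subset of uniformly random size is assumed here, and the names `random_subset`, `decay_stable`, and `decay_unstable` are hypothetical.

```python
import random

def random_subset(d, rng=random):
    """Pick a non-empty random subset p of the indices 0..d-1 (assumed sampling scheme)."""
    size = rng.randint(1, d)
    return set(rng.sample(range(d), size))

def decay_stable(x_i, lb, ub, rng=random):
    """Stable group: variables in p are redrawn uniformly between their bounds."""
    p = random_subset(len(x_i), rng)
    return [lb[k] + rng.random() * (ub[k] - lb[k]) if k in p else x_i[k]
            for k in range(len(x_i))]

def decay_unstable(x_i, x_stable, rng=random):
    """Unstable group: variables in p are copied from a randomly chosen stable nucleus."""
    p = random_subset(len(x_i), rng)
    return [x_stable[k] if k in p else x_i[k] for k in range(len(x_i))]
```

Both operators leave the variables outside \(p\) untouched, which is what makes them behave like mutation operators rather than full restarts.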

Stable and unstable nuclei

The level of binding energy of a nucleus determines whether it is stable or unstable, and in the FuFiO algorithm, the objective function value, \(F(X)\), is used to specify the group of agents. In other words, in the FuFiO algorithm, a nucleus with a better \(F(X)\) is considered to be more stable. Moreover, as can be seen from Fig. 1, the \({}^{56}Fe\) nucleus is the boundary of stable and unstable groups. This boundary is also considered in the FuFiO algorithm to distinguish stable nuclei from unstable ones. To this end, the nuclei are evaluated in each iteration and the set of better ones is considered as the set of stable nuclei. The number of stable nuclei is determined as follows:

where \({S}_{z}\) is the number of stable nuclei at each iteration; \(fix\) is a function that rounds its argument to the nearest integer number; \(n\) is the population size; \({L}_{s}\) and \({U}_{s}\) are the minimum and maximum percentages of stable nuclei at the start and the end of the algorithm, respectively; \(Iter\) is the counter of iterations; and \(MaxIter\) is the maximum number of iterations of the algorithm. In Eq. (9), the number of stable nuclei is determined dynamically as the algorithm progresses. Also, in determining \({S}_{z}\), the two parameters \({L}_{s}\) and \({U}_{s}\) should be tuned. The values of \({L}_{s}\) and \({U}_{s}\) are taken as 10% and 70%, respectively, so that the share of stable nuclei grows from 10% at the start to 70% at the end of the algorithm. The value of \({U}_{s}\) follows the natural case, in which the ratio of stable nuclei to unstable nuclei is assumed to be around 70%.
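A schedule of this kind can be sketched as below. The sketch assumes that the stable share grows linearly from \({L}_{s}\) to \({U}_{s}\) with the iteration counter, which matches the description of Eq. (9) but is not necessarily its exact form; `stable_count` is a hypothetical name, and Python's `round` stands in for \(fix\).

```python
def stable_count(n, iteration, max_iter, l_s=0.1, u_s=0.7):
    """Number of stable nuclei S_z under an assumed linear schedule from l_s to u_s."""
    fraction = l_s + (u_s - l_s) * iteration / max_iter
    return round(n * fraction)

# With n = 50, the stable group grows from 5 nuclei to 35 nuclei over the run.
schedule = [stable_count(50, it, 100) for it in range(0, 101)]
```

A small stable group early on favors exploration, while a large stable group near the end concentrates the search around good solutions.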

Boundary handling

In solving an optimization problem with \(d\) variables, optimizers search in a d-dimensional search space. Each of these dimensions has its upper and lower boundaries, and the variables of found solutions should lie within these boundaries. Given that some variables may violate the boundaries during their movements, in the FuFiO algorithm, the following equations, which replace each violating variable with the boundary it has violated, are used to return them within the boundaries:

where \({x}_{i,new}^{j}\) is the j-th design variable of the i-th new solution \({X}_{i}^{new}\), and min and max are operators that return the minimum of \(({x}_{i}^{j}, {ub}^{j})\) and the maximum of \(({x}_{i}^{j}, {lb}^{j})\), respectively.
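The min/max rule amounts to clamping every variable to its interval. A minimal sketch, with the hypothetical helper name `clamp`:

```python
def clamp(x_new, lb, ub):
    """Return a copy of x_new with each variable limited to [lb[j], ub[j]]."""
    return [max(min(x, u), l) for x, l, u in zip(x_new, lb, ub)]

# The first and third variables violate their bounds and are moved onto them.
fixed = clamp([-2.0, 0.5, 9.0], lb=[-1.0, 0.0, 0.0], ub=[1.0, 1.0, 5.0])
```

Variables that already lie inside their interval are returned unchanged.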

Replacement strategy

In each reaction, a new position \({X}_{i}^{new}\) is generated as a candidate to replace the current position of the i-th nucleus, \({X}_{i}\). This replacement takes place only when the new solution has a better level of binding energy than the current one. This procedure is formulated as follows:
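For a minimization problem, where a lower \(F\) plays the role of a higher binding energy, this greedy rule can be written in the following form. The strict inequality is an assumption; the text only requires the new solution to be better.

\[
{X}_{i} =
\begin{cases}
{X}_{i}^{new}, & \text{if } F\left({X}_{i}^{new}\right) < F\left({X}_{i}\right) \\
{X}_{i}, & \text{otherwise}
\end{cases}
\]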

Selection of reactions

In the FuFiO algorithm, nuclei are categorized into two groups; in each group, three different reactions are developed, of which one is randomly selected to generate a new solution. It should be noted that different groups and reactions do not represent different phases of the algorithm. In other words, the FuFiO algorithm has one phase, wherein for each nucleus in each iteration, one of the reactions is randomly selected according to the group of the nucleus to generate the new solutions, as shown in Fig. 11.

Terminating criterion

In metaheuristics, the search process is finished once a terminating criterion is satisfied, after which the best result is reported. Some of the most common stopping criteria are as follows:

- The best result is equal to the minimum specified value determined for the objective function.
- The optimization process will be terminated after a fixed number of iterations.
- The value of the objective function does not change during the specified period.
- The optimization process time has reached a predetermined value.

Implementation of FuFiO

Based on the concepts developed in previous sections, the FuFiO algorithm is implemented in two levels as follows:

Level 1: Initialization

- Step 1: Determine the number of nuclei (\(nPop\)), the maximum number of iterations (\(MaxIter\)), and the variable bounds \(UB\) and \(LB\).
- Step 2: Determine the parameters of FuFiO, namely \({L}_{s}\) and \({U}_{s}\).
- Step 4: Calculate the objective function of initial solutions.

Level 2: Nuclear reaction

In each iteration of the FuFiO algorithm, all of the agents will perform the following steps:

- Step 1: \({S}_{z}\) is updated (Eq. (9)).
- Step 2: The population is sorted according to \(F(X)\).
- Step 3: Stable and unstable nuclei are determined.
- Step 4: The group of the current nucleus is determined.
- Step 5: The new solution is generated using the selected reaction (Eqs. (3), (4), (5), (6), (7), and (8)).
- Step 6: The new solution is clamped as in Eq. (10).
- Step 7: The new solution is evaluated and the objective function \(F(X)\) is calculated.
- Step 8: The new solution is checked to replace the current solution as in Eq. (11).
- Step 9: The nuclear reaction level is repeated until a terminating criterion is satisfied.

The flowchart of the FuFiO algorithm is illustrated in Fig. 12.
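The two levels above can be summarized in a compact loop. The following is a sketch of the control flow only, under several assumptions: minimization, a linear schedule for \({S}_{z}\), a guard that keeps both groups non-empty, and a fixed iteration budget as the terminating criterion. The reaction equations themselves are not reproduced; they are supplied through a caller-provided `react` callback, and `blend_with_stable` is a placeholder stand-in, not one of the paper's reactions. All names are hypothetical.

```python
import random

def fufio_skeleton(f, lb, ub, react, n=20, max_iter=100, l_s=0.1, u_s=0.7, seed=0):
    """Control-flow sketch of the two levels; reactions come from the `react` callback."""
    rng = random.Random(seed)
    d = len(lb)
    # Level 1: random initial positions and their objective values.
    pop = [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(d)] for _ in range(n)]
    fit = [f(x) for x in pop]
    for it in range(1, max_iter + 1):
        # Steps 1-3: update S_z (assumed linear schedule), sort by F(X), split the groups.
        s_z = round(n * (l_s + (u_s - l_s) * it / max_iter))
        s_z = max(1, min(n - 1, s_z))  # assumption: keep both groups non-empty
        order = sorted(range(n), key=lambda i: fit[i])
        pop = [pop[i] for i in order]
        fit = [fit[i] for i in order]
        stable, unstable = pop[:s_z], pop[s_z:]
        for i in range(n):
            # Steps 4-5: the group of nucleus i decides which reactions may be used.
            x_new = react(pop[i], stable, unstable, i < s_z, rng)
            # Step 6: clamp the new solution to the bounds.
            x_new = [max(min(x, u), l) for x, l, u in zip(x_new, lb, ub)]
            # Steps 7-8: evaluate, then keep the new solution only if it is better.
            f_new = f(x_new)
            if f_new < fit[i]:
                pop[i], fit[i] = x_new, f_new
    best = min(range(n), key=lambda i: fit[i])
    return pop[best], fit[best]

def blend_with_stable(x_i, stable, unstable, is_stable, rng):
    """Placeholder reaction: random blend with a randomly chosen stable nucleus."""
    partner = rng.choice(stable)
    out = []
    for a, b in zip(x_i, partner):
        r = rng.random()
        out.append(r * a + (1.0 - r) * b)
    return out

def sphere(x):
    return sum(v * v for v in x)

best_x, best_f = fufio_skeleton(sphere, [-5.0] * 3, [5.0] * 3, blend_with_stable)
```

Because replacement is greedy, the best objective value in the population can never get worse from one iteration to the next, whatever `react` returns.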

FuFiO validation

The No Free Lunch (NFL) theorem95 is one of the most famous theorems and has been cited many times in the literature to pave the way for introducing new metaheuristic algorithms. This theorem states that no single algorithm can perform best on all types of problems. Here, however, the NFL theorem is used for a different purpose: to motivate validating the capability of the FuFiO algorithm on a wide variety of problems in comparison with other algorithms. To this end, in this study, 120 benchmark test functions are considered to challenge the performance of the proposed algorithm in solving different types of problems. Another application of these problems is to create a dataset to be used in non-parametric statistical analyses to examine the performance of the proposed algorithm more thoroughly.

In this section, first, the description of the test problems is presented; then, a number of rival metaheuristics with their settings are reviewed. Subsequently, the evaluation metrics and comparative results are explained; and finally, the results of non-parametric statistical methods will be presented.

Test functions

To evaluate the capability of the proposed algorithm in handling various types of benchmark functions with different properties, a set of 120 mathematical problems has been used. Based on their dimensions, these problems have been categorized into two groups: (1) fixed-dimensional problems, and (2) N-dimensional problems.

Amongst these functions, F1 to F60 are fixed-dimensional functions, with dimensions of 2 to 10. The second group of problems, F61 to F120, includes 60 N-dimensional test functions, the dimensions of which are considered to be equal to 30. The details of the mathematical functions in these two groups are presented in Tables 2 and 3, respectively. In these tables, C, NC, D, ND, S, NS, Sc, NSC, U, and M denote Continuous, Non-Continuous, Differentiable, Non-Differentiable, Separable, Non-Separable, Scalable, Non-Scalable, Unimodal, and Multi-modal, respectively. In addition, R, D, and Min represent the variables range, variables dimension, and the global minimum of the functions, respectively.

Metaheuristic algorithms for comparative studies

To investigate the overall performance of the FuFiO algorithm, its results should be compared with those of other methods. The selected metaheuristics for this purpose are FA, CS, Jaya, TEO, SCA, MVO, and CSA algorithms, of which the most recent and improved versions are utilized here. Among the selected methods, only SCA is parameter-free, whereas the other metaheuristics have some specific parameters that should be tuned carefully. Table 4 presents a summary of these parameters, adopted from the literature, that we have utilized in our evaluations.

Generally speaking, the performance of a powerful and versatile algorithm should be independent of the problem that is to be solved. In other words, for a good algorithm, parameter tuning should not be of crucial importance. Considering this point, we developed the FuFiO algorithm in a way that there are only two extra parameters, namely Ls and Us. We performed a statistical study on the effect of these parameters and found that if they are chosen from within predefined limits, determining their exact values is not necessary. Knowing that Ls and Us are respectively the minimum and maximum percentages of stable nuclei at the beginning and end of the algorithm, Ls should be a small value, e.g. 0.1–0.4, whereas Us should be in the range of 0.5–0.9. In this study, we considered Ls and Us to be 0.1 and 0.7, respectively.

Numerical results

This section presents the results of the FuFiO and other methods in dealing with benchmark problems. In this study, due to the random nature of metaheuristics, each algorithm is independently run 50 times for each problem. Then, the statistical results of these runs are utilized to analyze the algorithms. The population size for each of the methods is set to 50, and the maximum Number of Function Evaluations (NFEs) is set to 150,000 for all of the metaheuristics. A tolerance of \(1 \times {10}^{-12}\) from the optimal solution is considered as the terminating criterion, and the NFEs are counted until the algorithm stops. The statistical results of the fixed-dimensional and N-dimensional benchmark problems are presented in Tables 5 and 6, respectively. These results include the minimum (Min), average (Mean), maximum (Max), standard deviation (Std. Dev.), and mean of the NFEs of each algorithm. Moreover, the last row of each function shows the rank of algorithms, where the ranking is based on the value of the Means.

Non-parametric statistical analyses

Non-parametric statistical methods are useful tools for comparing and ranking the performance of metaheuristic algorithms. In this study, four well-known non-parametric tests, namely the Wilcoxon Signed-Rank98, Friedman99, Friedman Aligned Ranks100, and Quade101 tests, are used to analyze the ability of algorithms in solving benchmark problems; in all of these tests, a significance level of \(\alpha = 0.05\) is used102.

The results of the Wilcoxon Signed-Rank test are presented in Table 7, which shows that the R+ of FuFiO is less than the R− of all the other methods, which means that FuFiO performs better than all of the compared ones. Furthermore, the p-values show that the FuFiO algorithm significantly outperforms other algorithms in solving benchmark problems, except in competition with the CS and CSA algorithms in solving the fixed-dimensional problems.

The Friedman test is a ranking method the results of which are presented in Table 8. According to this test, the FuFiO algorithm is placed in the first rank in all types of problems.

In the Friedman Aligned Rank test, the average of each set of values is calculated and then subtracted from the results. Subsequently, this method ranks algorithms based on their corresponding shifted values which are called aligned ranks. The results of this test, presented in Table 9, show that the FuFiO algorithm gains the first rank in solving both fixed- and N-dimensional benchmark problems.
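The alignment step can be illustrated with a small sketch. It follows the usual definition of aligned ranks, in which the per-problem mean is subtracted from each result and all shifted values are then ranked together, with tied values sharing the average rank. The input layout (`results[p][a]` as the mean result of algorithm `a` on problem `p`, lower is better) and the function names are assumptions for illustration, and the numbers in the example are made up rather than taken from the paper's tables.

```python
def aligned_ranks(results):
    """Rank all (problem, algorithm) results jointly after removing each problem's mean."""
    aligned = []
    for p, row in enumerate(results):
        center = sum(row) / len(row)
        for a, value in enumerate(row):
            aligned.append((value - center, p, a))
    aligned.sort(key=lambda t: t[0])
    ranks = [[0.0] * len(results[0]) for _ in results]
    i = 0
    while i < len(aligned):
        # Find the run of tied shifted values starting at position i.
        j = i
        while j + 1 < len(aligned) and aligned[j + 1][0] == aligned[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based; ties share the average rank
        for _, p, a in aligned[i:j + 1]:
            ranks[p][a] = avg_rank
        i = j + 1
    return ranks

def mean_aligned_rank(results):
    """Average aligned rank of each algorithm over all problems (lower is better)."""
    ranks = aligned_ranks(results)
    k = len(results[0])
    return [sum(row[a] for row in ranks) / len(ranks) for a in range(k)]

# Two problems, three algorithms (illustrative values only).
scores = mean_aligned_rank([[1.0, 2.0, 3.0], [10.0, 30.0, 20.0]])
```

Unlike the plain Friedman ranking, which ranks algorithms separately within each problem, the aligned version makes results comparable across problems before ranking.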

The Quade test can be considered as an extension of the Wilcoxon Signed-Rank test for comparing multiple algorithms, making it often more effective than the previous tests. The results of the Quade test are presented in Table 10, showing that the FuFiO method is ranked first in comparison with the other methods for all types of problems.

The final statistical method considered here is the analysis of variance (ANOVA) test, which compares the variance of results across the means of various algorithms. In this research, the ANOVA test has been employed with a significance level of 5% to study the efficiency and relative performance of optimizers. The results of this test are presented in Table 11. According to these results, the p-values indicate significant differences between the means in the majority of the considered problems. Besides, the results of the ANOVA test for four fixed-dimension and four N-dimension problems are plotted in Figs. 13 and 14, respectively.

Analyses based on competitions on evolutionary computation (CEC)

In this section, the performance of the FuFiO algorithm is investigated using the single-objective real-parameter numerical optimization problems of two recent Competitions on Evolutionary Computation, namely the CEC-2017 and CEC-2019 benchmark test functions. Then, the computational time and complexity of FuFiO are compared with those of other state-of-the-art algorithms.

Comparative analyses based on the CEC-2017 test functions

To investigate the ability of FuFiO to solve more difficult problems, the test functions of the CEC 2017 Special Session on single-objective problems are utilized in this sub-section. To perform a comparative analysis, four state-of-the-art algorithms are considered: the Effective Butterfly Optimizer with Covariance Matrix Adapted Retreat (EBOwithCMAR)103, ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood (LSHADE-cnEpSin)104, Multi-Method-based Orthogonal Experimental Design (MM_OED)105, and Teaching Learning Based Optimization with Focused Learning (TLBO-FL)106. Table 12 lists these problems, the mathematical details of which were presented by the CEC 2017 committee107.

The statistical results of FuFiO and the other algorithms in solving 10-, 30-, 50- and 100-dimensional problems are presented in Tables 13, 14, 15, and 16, respectively. These results are based on 51 independent runs. An error value smaller than \({10}^{-8}\) is treated as zero. The total number of function evaluations for each test problem is taken as \(10000D\), where \(D\) is the problem dimension. The results confirm that the FuFiO method can provide very competitive results.
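The two bookkeeping rules above (the zero-error threshold and the evaluation budget) can be written down in a few lines. This is a minimal sketch, and the helper names `reported_error` and `evaluation_budget` are hypothetical.

```python
def reported_error(f_found, f_optimum, tol=1e-8):
    """Error of a run; values below the threshold are reported as zero."""
    err = abs(f_found - f_optimum)
    return 0.0 if err < tol else err


def evaluation_budget(dim):
    """Maximum number of function evaluations for a D-dimensional problem."""
    return 10_000 * dim
```

For example, a 30-dimensional problem is allotted 300,000 evaluations under this rule.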

Computational time and complexity analyses

A complete computational time and complexity analysis is conducted to evaluate the FuFiO algorithm. Awad et al. have proposed a simple procedure to analyze the complexity of metaheuristic algorithms in the CEC-2017 instructions107, in which complexity is reflected by four times, namely \({T}_{0}\), \({T}_{1}\), \({T}_{2}\), and \(\widehat{{T}_{2}}\), as follows: \({T}_{0}\) is the computing time of the test program shown in Fig. 15; \({T}_{1}\) is given by the time of 200,000 evaluations of \({F}_{18}\) by itself with D dimensions; \({T}_{2}\) is the total computing time of the FuFiO algorithm in 200,000 evaluations of the same D-dimensional \({F}_{18}\); and \(\widehat{{T}_{2}}\) denotes the mean value of five different runs of \({T}_{2}\).
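The timing procedure can be sketched as follows. This is only an illustration under several assumptions: the arithmetic loop in `t0_loop` follows the general form of the CEC-2017 reference program rather than reproducing Fig. 15, `sphere` is a hypothetical stand-in for \({F}_{18}\), and `random_search` is a placeholder optimizer rather than FuFiO. The last returned value is the ratio \((\widehat{{T}_{2}}-{T}_{1})/{T}_{0}\) that the CEC-2017 instructions use to summarize algorithm complexity.

```python
import math
import random
import time


def t0_loop(n=1_000_000):
    # Fixed arithmetic workload used to normalize for machine speed (assumed form).
    for i in range(1, n + 1):
        x = 0.55 + i
        x = x + x
        x = x / 2
        x = x * x
        x = math.sqrt(x)
        x = math.log(x)
        x = math.exp(x)
        x = x / (x + 2)


def timed(fn, *args):
    """Wall-clock time of one call to fn(*args), in seconds."""
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


def sphere(x):
    # Hypothetical objective standing in for the benchmark function.
    return sum(v * v for v in x)


def evaluate_only(f, dim, evals):
    # Cost of the function evaluations alone (T1).
    x = [0.5] * dim
    for _ in range(evals):
        f(x)


def random_search(f, dim, evals, rng):
    # Placeholder optimizer; a real study would call the algorithm under test.
    best = math.inf
    for _ in range(evals):
        x = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
        best = min(best, f(x))
    return best


def complexity(f, optimizer, dim, evals=200_000, runs=5, t0_iters=1_000_000):
    """Return (T0, T1, mean T2, (mean T2 - T1) / T0)."""
    t0 = timed(t0_loop, t0_iters)
    t1 = timed(evaluate_only, f, dim, evals)
    rng = random.Random(0)
    t2_hat = sum(timed(optimizer, f, dim, evals, rng) for _ in range(runs)) / runs
    return t0, t1, t2_hat, (t2_hat - t1) / t0
```

Dividing by \({T}_{0}\) makes the measure roughly comparable across machines, while subtracting \({T}_{1}\) removes the cost of the objective function itself.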

The complexity results of the FuFiO algorithm and the other methods in 10, 30, 50, and 100 dimensions are presented in Table 17, which demonstrates that FuFiO can perform competitively.

The key metric in evaluating the running time of an algorithm is computational complexity, which is defined based on its structure. According to Big O notation, the complexity of the FuFiO algorithm is calculated based on the number of nuclei n, number of design variables d, maximum number of iterations t, and the sorting mechanism of nuclei in each iteration as follows:
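A rough sketch of such a bound, under stated assumptions and not necessarily the authors' exact expression: suppose that each iteration produces one new candidate per nucleus at a cost of \(O(d)\), and that the \(n\) nuclei are sorted once per iteration with a comparison sort at a cost of \(O(n\log n)\). The cost of one iteration is then \(O(nd+n\log n)\), and over \(t\) iterations

\[
O\big(t\,(n\,d+n\log n)\big).
\]

The cost of evaluating the objective function is omitted here; if one evaluation costs \(c\), the first term becomes \(n(d+c)\).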

Comparative analyses based on the CEC-2019 test functions

In this sub-section, the problems defined for the CEC-2019 Special Session are utilized. Two physics-based methods, namely the Gravitational Search Algorithm (GSA)86 and Electromagnetic Field Optimization (EFO)56, and three recently developed evolutionary methods, namely the Farmland Fertility Algorithm (FFA)35, African Vultures Optimization Algorithm (AVOA)37, and Artificial Gorilla Troops Optimizer (GTO)42, are considered for this comparative study. Table 18 presents the properties of the CEC-2019 examples108.

The statistical results of the algorithms are presented in Table 19. These results are based on 50 independent runs, of which the best 25 are selected for reporting the final result, in accordance with the CEC-2019 rules. An error value smaller than \({10}^{-10}\) is treated as zero. The total number of function evaluations for each test problem is taken as \({10}^{6}\). A conclusion concerning the statistical results is also added to the table. The final output shows that FuFiO ranks second by a very small margin, while its stability in finding results is far better than that of the other methods, based on the standard deviation values. Moreover, the ANOVA test has been employed with a significance level of 5%, and the related results for all problems are plotted in Fig. 16. The results show a good performance of the present method for many of the examined functions.
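The reporting rule described above (keep the best 25 of 50 runs and clip tiny errors to zero) can be sketched as follows. The function name `summarize_best_runs` is hypothetical, and the use of the population standard deviation is an assumption, since the text does not state which estimator was used.

```python
import statistics


def summarize_best_runs(errors, keep=25, tol=1e-10):
    """Summary statistics over the `keep` smallest run errors."""
    clipped = [0.0 if e < tol else e for e in errors]  # tiny errors count as zero
    best = sorted(clipped)[:keep]
    return {
        "best": best[0],
        "worst": best[-1],
        "mean": statistics.mean(best),
        "std": statistics.pstdev(best),  # assumed estimator
    }
```

A small standard deviation over the retained runs is what the text refers to as stability.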

Conclusions and future work

Inspired by the concept of nuclear stability in physics, we developed a swarm-based metaheuristic method, called Fusion–Fission Optimization (FuFiO), to deal with various optimization problems. In this method, three nuclear reactions, namely fusion, fission, and \(\beta\)-decay, are modeled to simulate the tendency of nuclei to become stable.

The effectiveness of the FuFiO algorithm in solving optimization problems can be attributed to its mechanism for balancing exploration and exploitation. In the FuFiO method, three different reactions with novel formulations are proposed for each group. The search procedure of each reaction in each group can be interpreted as follows:

- Fusion: Through this reaction, a nucleus in the stable group collides with another stable nucleus and exploits the search space. In the unstable group, on the other hand, this operator explores the search space because the unstable nuclei collide with each other.

- Fission: Through this reaction, in the first group, a stable nucleus collides with an unstable one, which explores the search space around the stable nucleus. In the second group, on the other hand, the fission operator guides the unstable nuclei toward the stable region to exploit it.

- \({\varvec{\beta}}\)-decay: Through this reaction, in the first group, a stable nucleus collides with a randomly generated nucleus, which results in exploration. In the second group, however, \(\beta\)-decay generates the new solution by a uniform crossover between the unstable nucleus and a stable one to transfer some stable features to the unstable nucleus.

The balance between exploration and exploitation is maintained through the random selection of reactions in each group.
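Two of the ingredients mentioned above, the random choice of a reaction and the uniform crossover used by \(\beta\)-decay in the unstable group, can be sketched as follows. This is an illustration only: the equal selection probabilities and the per-coordinate mixing probability `p=0.5` are assumptions, and the function names are hypothetical rather than taken from the authors' implementation.

```python
import random


def pick_reaction(rng):
    """Choose one of the three reactions uniformly at random (assumed weights)."""
    return rng.choice(("fusion", "fission", "beta_decay"))


def uniform_crossover(unstable, stable, rng, p=0.5):
    """Build a new position by taking each coordinate from `stable` with
    probability p and from `unstable` otherwise."""
    return [s if rng.random() < p else u for u, s in zip(unstable, stable)]
```

For instance, `uniform_crossover([0, 0, 0, 0], [1, 1, 1, 1], random.Random(1))` returns a vector that mixes coordinates of both parents, so the unstable nucleus inherits part of the stable one.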

To examine the performance of FuFiO in comparison with seven well-known optimizers, an extensive set of 120 benchmark problems was considered, and the obtained results were used as the inputs of several non-parametric statistical methods. The results of the statistical analysis showed that the FuFiO algorithm has a superior performance in solving all considered types of problems. To further investigate the ability of FuFiO to solve complex optimization problems, the CEC 2017 and CEC 2019 test functions were utilized. The results showed that the FuFiO algorithm can perform competitively when compared to the state-of-the-art algorithms.

Despite the good performance of FuFiO in solving different well-studied mathematical problems, this method, like other metaheuristics, may have some limitations in solving difficult constrained or engineering problems. The main reason is the influence of the utilized constraint-handling approach on the performance of the proposed method. In addition, for more complex problems where each function evaluation needs a considerable amount of time, applying this method may need further investigation. Importantly, it is the limitations of the new method, rather than its advantages, that open up new avenues for improving it or adapting it to applications in other fields.

Future studies concerning the FuFiO algorithm can be classified into two main categories. The first category contains investigations in which FuFiO is utilized as an optimization solver in dealing with complex real-world optimization problems. The second category concerns modifying the FuFiO algorithm to enhance its computational accuracy and efficiency. To this end, various kinds of modification can be designed, some of which are as follows:

1. The proposed algorithm has two parameters, namely \({U}_{s}\) and \({L}_{s}\). The value of \({U}_{s}\) is determined according to the natural ratio of stable nuclei, whereas the value of \({L}_{s}\) is decided empirically. These parameters and their effects should be studied more thoroughly.

2. In this paper, as the first version of the algorithm, the value of \({S}_{z}\) is determined through a deterministic procedure. A more advanced approach could be developed to define the size of stable nuclei.

3. For updating the position of nuclei, in each group, three different reactions are modeled. In order to enhance the performance of the algorithm, developing new formulations for reactions could be advantageous.

4. In each reaction, another stable or unstable nucleus, \({X}_{j}\), is selected randomly. Using a more thoughtful, systematic selection method could improve the performance of the algorithm.

5. During the updating process, a reaction is randomly selected without any specific rule. Developing a deterministic, adaptive, or self-adaptive approach to choosing an appropriate reaction could improve the algorithm.

In addition to the abovementioned approaches, one may use alternative strategies to improve the FuFiO algorithm. For example, as a conventional approach, the hybridization of the proposed algorithm with other popular metaheuristic algorithms could lead to the development of more robust optimization algorithms.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Change history

02 September 2022

A Correction to this paper has been published: https://doi.org/10.1038/s41598-022-18952-9

References

Wu, G., Pedrycz, W., Suganthan, P. N. & Mallipeddi, R. A variable reduction strategy for evolutionary algorithms handling equality constraints. Appl. Soft Comput. J. 37, 774–786. https://doi.org/10.1016/j.asoc.2015.09.007 (2015).

Fogel, L. J., Owens, A. J. & Walsh, M. J. Intelligent decision making through a simulation of evolution. Behav. Sci. 11, 253–272. https://doi.org/10.1002/bs.3830110403 (1966).

Simpson, A. R., Dandy, G. C. & Murphy, L. J. Genetic algorithms compared to other techniques for pipe optimization. J. Water Resour. Plan. Manag. 120, 423–443. https://doi.org/10.1061/(asce)0733-9496(1994)120:4(423) (1994).

Spall, J. C. Introduction to Stochastic Search and Optimization (Wiley, Hoboken, 2003). https://doi.org/10.1002/0471722138.

Boussaïd, I., Lepagnot, J. & Siarry, P. A survey on optimization metaheuristics. In: Information Sciences (Elsevier, 2013) pp. 82–117. https://doi.org/10.1016/j.ins.2013.02.041.

Biswas, A., Mishra, K. K., Tiwari, S. & Misra, A. K. Physics-inspired optimization algorithms: A survey. J. Optim. 2013, 1–16. https://doi.org/10.1155/2013/438152 (2013).

Gogna, A. & Tayal, A. Metaheuristics: Review and application. J. Exp. Theor. Artif. Intell. 25, 503–526. https://doi.org/10.1080/0952813X.2013.782347 (2013).

AbWahab, M. N., Nefti-Meziani, S. & Atyabi, A. A comprehensive review of swarm optimization algorithms. PLoS ONE 10, e0122827. https://doi.org/10.1371/journal.pone.0122827 (2015).

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science 220, 671–680. https://doi.org/10.1126/science.220.4598.671 (1983).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In: Proceedings of ICNN’95 - International Conference on Neural Networks (IEEE, 1995) pp. 1942–1948. https://doi.org/10.1109/ICNN.1995.488968.

Rechenberg, I. Evolutionsstrategien. In: 1978: pp. 83–114. https://doi.org/10.1007/978-3-642-81283-5_8.

Holland, J. H. Adaptation in natural and artificial systems: An introductory analysis with applications to biology, control, and artificial intelligence (1975). http://mitpress.mit.edu/catalog/item/default.asp?ttype=2&tid=8929. Accessed December 25, 2020.

Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 12, 702–713. https://doi.org/10.1109/TEVC.2008.919004 (2008).

Dorigo, M., Birattari, M. & Stützle, T. Ant colony optimization: Artificial ants as a computational intelligence technique. IEEE Comput. Intell. Mag. 1, 28–39 (2006).

Karaboga, D. & Basturk, B. Artificial Bee Colony (ABC) optimization algorithm for solving constrained optimization problems. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2007: pp. 789–798. https://doi.org/10.1007/978-3-540-72950-1_77.

Yang, X. S. Firefly algorithm, stochastic test functions and design optimization. Int. J. Bio-Inspired Comput. 2, 78–84. https://doi.org/10.1504/IJBIC.2010.032124 (2010).

Yang, X.-S. & Deb, S. Engineering optimisation by cuckoo search. Int. J. Math. Model. Numer. Optim. 1, 330–343 (2010).

Yang, X. S. A new metaheuristic Bat-inspired Algorithm. Stud. Comput. Intell. 284, 65–74. https://doi.org/10.1007/978-3-642-12538-6_6 (2010).

Yang, X. S. & Deb, S. Eagle strategy using Lévy walk and firefly algorithms for stochastic optimization. Stud. Comput. Intell. 284, 101–111. https://doi.org/10.1007/978-3-642-12538-6_9 (2010).

Gandomi, A. H. & Alavi, A. H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 17, 4831–4845. https://doi.org/10.1016/j.cnsns.2012.05.010 (2012).

Yang, X. S. Flower pollination algorithm for global optimization. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2012: pp. 240–249. https://doi.org/10.1007/978-3-642-32894-7_27.

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey Wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 83, 80–98. https://doi.org/10.1016/j.advengsoft.2015.01.010 (2015).

Saremi, S., Mirjalili, S. & Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 105, 30–47. https://doi.org/10.1016/j.advengsoft.2017.01.004 (2017).

Cheng, M. Y. & Prayogo, D. Symbiotic organisms search: A new metaheuristic optimization algorithm. Comput. Struct. 139, 98–112. https://doi.org/10.1016/j.compstruc.2014.03.007 (2014).

Goldanloo, M. J. & Gharehchopogh, F. S. A hybrid OBL-based firefly algorithm with symbiotic organisms search algorithm for solving continuous optimization problems. J. Supercomput. 78, 3998–4031. https://doi.org/10.1007/s11227-021-04015-9 (2021).

Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl. Based Syst. 89, 228–249. https://doi.org/10.1016/j.knosys.2015.07.006 (2015).

Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 27, 1053–1073. https://doi.org/10.1007/s00521-015-1920-1 (2016).

Mirjalili, S. et al. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 114, 163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002 (2017).

Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 169, 1–12. https://doi.org/10.1016/j.compstruc.2016.03.001 (2016).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008 (2016).

Asghari, K., Masdari, M., Gharehchopogh, F. S. & Saneifard, R. Multi-swarm and chaotic whale-particle swarm optimization algorithm with a selection method based on roulette wheel. Expert Syst. https://doi.org/10.1111/exsy.12779 (2021).

Sheikholeslami, R. & Talatahari, S. Developed swarm optimizer: A new method for sizing optimization of water distribution systems. J. Comput. Civ. Eng. 30, 04016005. https://doi.org/10.1061/(asce)cp.1943-5487.0000552 (2016).

Dhiman, G. & Kumar, V. Spotted hyena optimizer: A novel bio-inspired based metaheuristic technique for engineering applications. Adv. Eng. Softw. 114, 48–70. https://doi.org/10.1016/j.advengsoft.2017.05.014 (2017).

Shayanfar, H. & Gharehchopogh, F. S. Farmland fertility: A new metaheuristic algorithm for solving continuous optimization problems. Appl. Soft Comput. 71, 728–746. https://doi.org/10.1016/j.asoc.2018.07.033 (2018).

Gharehchopogh, F. S., Farnad, B. & Alizadeh, A. A modified farmland fertility algorithm for solving constrained engineering problems. Concurr. Comput. Pract. Exp. https://doi.org/10.1002/cpe.6310 (2021).

Abdollahzadeh, B., Gharehchopogh, F. S. & Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 158, 107408. https://doi.org/10.1016/j.cie.2021.107408 (2021).

Alsattar, H. A., Zaidan, A. A. & Zaidan, B. B. Novel meta-heuristic bald eagle search optimisation algorithm. Artif. Intell. Rev. 53, 2237–2264. https://doi.org/10.1007/s10462-019-09732-5 (2019).

Gharehchopogh, F. S. & Rostampnah, B. A New model-based bald eagle search algorithm with sine cosine algorithm for data clustering. J. Adv. Comput. Eng. Technol. 7(3), 177–186 (2021).

Kiran, M. S. TSA: Tree-seed algorithm for continuous optimization. Expert Syst. Appl. 42, 6686–6698. https://doi.org/10.1016/j.eswa.2015.04.055 (2015).

Sahman, M. A., Cinar, A. C., Saritas, I. & Yasar, A. Tree-seed algorithm in solving real-life optimization problems. IOP Conf. Ser. Mater. Sci. Eng. 675, 012030. https://doi.org/10.1088/1757-899x/675/1/012030 (2019).

Abdollahzadeh, B., Gharehchopogh, F. S. & Mirjalili, S. Artificial gorilla troops optimizer: A new nature-inspired metaheuristic algorithm for global optimization problems. Int. J. Intell. Syst. 36, 5887–5958. https://doi.org/10.1002/int.22535 (2021).

Erol, O. K. & Eksin, I. A new optimization method: Big Bang-Big Crunch. Adv. Eng. Softw. 37, 106–111. https://doi.org/10.1016/j.advengsoft.2005.04.005 (2006).

Kaveh, A. & Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 213, 267–289. https://doi.org/10.1007/s00707-009-0270-4 (2010).

Yazdchi, M., ForoughiAsl, A., Talatahari, S. & Sareh, P. Metaheuristically optimized nano-MgO additive in freeze–thaw resistant concrete: A charged system search-based approach. Eng. Res. Express 3, 035001. https://doi.org/10.1088/2631-8695/ac0dca (2021).

Talatahari, S. & Azizi, M. Chaos Game Optimization: A Novel Metaheuristic Algorithm (Springer, Dordrecht, 2020). https://doi.org/10.1007/s10462-020-09867-w.

Talatahari, S. & Azizi, M. Optimization of constrained mathematical and engineering design problems using chaos game optimization. Comput. Ind. Eng. 145, 106560. https://doi.org/10.1016/j.cie.2020.106560 (2020).

Rashedi, E., Nezamabadi-pour, H. & Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 179, 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004 (2009).

Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl. Based Syst. 96, 120–133. https://doi.org/10.1016/j.knosys.2015.12.022 (2016).

Mirjalili, S., Mirjalili, S. M. & Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 27, 495–513. https://doi.org/10.1007/s00521-015-1870-7 (2016).

Zhao, W., Wang, L. & Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl. Based Syst. 163, 283–304. https://doi.org/10.1016/j.knosys.2018.08.030 (2019).

Talatahari, S., Azizi, M., Tolouei, M., Talatahari, B. & Sareh, P. Crystal structure algorithm (CryStAl): A metaheuristic optimization method. IEEE Access 9, 71244–71261. https://doi.org/10.1109/ACCESS.2021.3079161 (2021).

Khodadadi, N., Azizi, M., Talatahari, S. & Sareh, P. Multi-objective crystal structure algorithm (MOCryStAl): Introduction and performance evaluation. IEEE Access 9, 117795–117812. https://doi.org/10.1109/ACCESS.2021.3106487 (2021).

Azizi, M., Talatahari, S. & Sareh, P. Design optimization of fuzzy controllers in building structures using the crystal structure algorithm (CryStAl). Adv. Eng. Inform. 52, 101616. https://doi.org/10.1016/j.aei.2022.101616 (2022).

Talatahari, B., Azizi, M., Talatahari, S., Tolouei, M. & Sareh, P. Crystal structure optimization approach to problem solving in mechanical engineering design. Multidiscip. Model. Mater. Struct. 18, 1–23. https://doi.org/10.1108/MMMS-10-2021-0174 (2022).

Abedinpourshotorban, H., Shamsuddin, S. M., Beheshti, Z. & Jawawi, D. N. A. Electromagnetic field optimization: A physics-inspired metaheuristic optimization algorithm. Swarm Evol. Comput. 26, 8–22. https://doi.org/10.1016/j.swevo.2015.07.002 (2016).

Atashpaz-Gargari, E. & Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. IEEE Congr. Evol. Comput. 2007, 4661–4667. https://doi.org/10.1109/CEC.2007.4425083 (2007).

Rao, R. V., Savsani, V. J. & Vakharia, D. P. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. CAD Comput. Aided Des. 43, 303–315. https://doi.org/10.1016/j.cad.2010.12.015 (2011).

Gandomi, A. H. Interior search algorithm (ISA): A novel approach for global optimization. ISA Trans. 53, 1168–1183. https://doi.org/10.1016/j.isatra.2014.03.018 (2014).

Kaveh, A., Talatahari, S. & Khodadadi, N. Stochastic Paint Optimizer: Theory and Application in Civil Engineering (Springer, London, 2020). https://doi.org/10.1007/s00366-020-01179-5.

Bajpai, P. & Kumar, M. Genetic algorithm: An approach to solve global optimization problems. Indian J. Comput. Sci. Eng. 1(3), 199–206 (2010).

Deng, W. et al. An improved differential evolution algorithm and its application in optimization problem. Soft. Comput. 25, 5277–5298. https://doi.org/10.1007/s00500-020-05527-x (2021).

Guo, W., Chen, M., Wang, L., Mao, Y. & Wu, Q. A survey of biogeography-based optimization. Neural Comput. Appl. 28, 1909–1926. https://doi.org/10.1007/s00521-016-2179-x (2016).

Yazdani, D. & Meybodi, M. R. A novel Artificial Bee Colony algorithm for global optimization. In: 2014 4th International Conference on Computer and Knowledge Engineering (ICCKE). (2014) https://doi.org/10.1109/iccke.2014.6993393

Dorigo, M. & Stützle, T. Ant colony optimization: Overview and recent advances. In: International Series in Operations Research and Management Science. (2018) 311–351. https://doi.org/10.1007/978-3-319-91086-4_10.

Xia, X. et al. Triple archives particle swarm optimization. IEEE Trans. Cybern. 50, 4862–4875. https://doi.org/10.1109/tcyb.2019.2943928 (2020).

Li, J., Wei, X., Li, B. & Zeng, Z. A survey on firefly algorithms. Neurocomputing 500, 662–678. https://doi.org/10.1016/j.neucom.2022.05.100 (2022).

Salgotra, R., Singh, U. & Saha, S. New cuckoo search algorithms with enhanced exploration and exploitation properties. Expert Syst. Appl. 95, 384–420. https://doi.org/10.1016/j.eswa.2017.11.044 (2018).

Wang, Y. et al. A novel bat algorithm with multiple strategies coupling for numerical optimization. Mathematics 7, 135. https://doi.org/10.3390/math7020135 (2019).

Bolaji, A. L., Al-Betar, M. A., Awadallah, M. A., Khader, A. T. & Abualigah, L. M. A comprehensive review: Krill Herd algorithm (KH) and its applications. Appl. Soft Comput. 49, 437–446. https://doi.org/10.1016/j.asoc.2016.08.041 (2016).

Yang, X.-S., Karamanoglu, M. & He, X. Flower pollination algorithm: A novel approach for multiobjective optimization. Eng. Optim. 46, 1222–1237. https://doi.org/10.1080/0305215x.2013.832237 (2013).

Hatta, N. M., Zain, A. M., Sallehuddin, R., Shayfull, Z. & Yusoff, Y. Recent studies on optimisation method of Grey Wolf Optimiser (GWO): A review (2014–2017). Artif. Intell. Rev. 52, 2651–2683. https://doi.org/10.1007/s10462-018-9634-2 (2018).

Abualigah, L., Shehab, M., Alshinwan, M., Mirjalili, S. & Elaziz, M. A. Ant lion optimizer: A comprehensive survey of its variants and applications. Arch. Comput. Methods Eng. 28, 1397–1416. https://doi.org/10.1007/s11831-020-09420-6 (2020).

Abualigah, L. & Diabat, A. A comprehensive survey of the Grasshopper optimization algorithm: Results, variants, and applications. Neural Comput. Appl. 32, 15533–15556. https://doi.org/10.1007/s00521-020-04789-8 (2020).

Gharehchopogh, F. S., Shayanfar, H. & Gholizadeh, H. A comprehensive survey on symbiotic organisms search algorithms. Artif. Intell. Rev. 53, 2265–2312. https://doi.org/10.1007/s10462-019-09733-4 (2019).

Shehab, M. et al. Moth–flame optimization algorithm: Variants and applications. Neural Comput. Appl. 32, 9859–9884. https://doi.org/10.1007/s00521-019-04570-6 (2019).

Alshinwan, M. et al. Dragonfly algorithm: A comprehensive survey of its results, variants, and applications. Multimed. Tools Appl. 80, 14979–15016. https://doi.org/10.1007/s11042-020-10255-3 (2021).

Abualigah, L., Shehab, M., Alshinwan, M. & Alabool, H. Salp swarm algorithm: a comprehensive survey. Neural Comput. Appl. 32, 11195–11215. https://doi.org/10.1007/s00521-019-04629-4 (2019).

Hussien, A. G. et al. Crow search algorithm: Theory, recent advances, and applications. IEEE Access 8, 173548–173565. https://doi.org/10.1109/access.2020.3024108 (2020).

Gharehchopogh, F. S. & Gholizadeh, H. A comprehensive survey: Whale optimization algorithm and its applications. Swarm Evol. Comput. 8, 1–24. https://doi.org/10.1016/j.swevo.2019.03.004 (2019).

Ghafori, S. & Gharehchopogh, F. S. Advances in spotted hyena optimizer: A comprehensive survey. Arch. Comput. Methods Eng. 29, 1569–1590. https://doi.org/10.1007/s11831-021-09624-4 (2021).

Kiran, M. S. & Hakli, H. A tree–seed algorithm based on intelligent search mechanisms for continuous optimization. Appl. Soft Comput. 98, 106938. https://doi.org/10.1016/j.asoc.2020.106938 (2021).

Guilmeau, T., Chouzenoux, E. & Elvira, V. Simulated annealing: A review and a new scheme. In 2021 IEEE Statistical Signal Processing Workshop (SSP). (2021). https://doi.org/10.1109/ssp49050.2021.9513782.

Tang, H., Zhou, J., Xue, S. & Xie, L. Big bang–big crunch optimization for parameter estimation in structural systems. Mech. Syst. Signal Process. 24, 2888–2897. https://doi.org/10.1016/j.ymssp.2010.03.012 (2010).

Talatahari, S. & Azizi, M. An extensive review of charged system search algorithm for engineering optimization applications. In Springer Tracts in Nature-Inspired Computing. (2021) 309–334. https://doi.org/10.1007/978-981-33-6773-9_14.

Mittal, H., Tripathi, A., Pandey, A. C. & Pal, R. Gravitational search algorithm: A comprehensive analysis of recent variants. Multimed. Tools Appl. 80, 7581–7608. https://doi.org/10.1007/s11042-020-09831-4 (2020).

Abualigah, L. & Diabat, A. Advances in sine cosine algorithm: A comprehensive survey. Artif. Intell. Rev. 54, 2567–2608. https://doi.org/10.1007/s10462-020-09909-3 (2021).

Abualigah, L. Multi-verse optimizer algorithm: A comprehensive survey of its results, variants, and applications. Neural Comput. Appl. 32, 12381–12401. https://doi.org/10.1007/s00521-020-04839-1 (2020).

Cheng, J., Xu, P. & Xiong, Y. An improved artificial electric field algorithm and its application in neural network optimization. Comput. Electr. Eng. 101, 108111. https://doi.org/10.1016/j.compeleceng.2022.108111 (2022).

Hosseini, S. & AlKhaled, A. A survey on the Imperialist Competitive Algorithm metaheuristic: Implementation in engineering domain and directions for future research. Appl. Soft Comput. 24, 1078–1094. https://doi.org/10.1016/j.asoc.2014.08.024 (2014).

Xue, R. & Wu, Z. A survey of application and classification on teaching–learning-based optimization algorithm. IEEE Access 8, 1062–1079. https://doi.org/10.1109/access.2019.2960388 (2020).

Kler, D., Rana, K. P. S. & Kumar, V. Parameter extraction of fuel cells using hybrid interior search algorithm. Int. J. Energy Res. 43, 2854–2880. https://doi.org/10.1002/er.4424 (2019).

Bethe, H. A. & Morrison, P. Elementary Nuclear Theory (Dover Publications, 2006).

Basdevant, J.-L., Rich, J. & Spiro, M. Fundamentals in Nuclear Physics: From Nuclear Structure to Cosmology (Springer, 2005).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1, 67–82. https://doi.org/10.1109/4235.585893 (1997).

Jamil, M. & Yang, X. S. A literature survey of benchmark functions for global optimisation problems. Int. J. Math. Model. Numer. Optim. 4, 150–194. https://doi.org/10.1504/IJMMNO.2013.055204 (2013).

Jamil, M., Yang, X. S. & Zepernick, H. J. D. Test functions for global optimization: A comprehensive survey. Swarm Intell. Bio-Inspired Comput. https://doi.org/10.1016/B978-0-12-405163-8.00008-9 (2013).

Scheff, S. W. Nonparametric statistics. In: Fundamental Statistical Principles for the Neurobiologist (Elsevier, 2016), pp. 157–182. https://doi.org/10.1016/B978-0-12-804753-8.00008-7.

Friedman, M. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J. Am. Stat. Assoc. 32, 675–701. https://doi.org/10.1080/01621459.1937.10503522 (1937).

García, S., Fernández, A., Luengo, J. & Herrera, F. Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: Experimental analysis of power. Inf. Sci. 180, 2044–2064. https://doi.org/10.1016/j.ins.2009.12.010 (2010).

Quade, D. Using weighted rankings in the analysis of complete blocks with additive block effects. J. Am. Stat. Assoc. 74, 680–683. https://doi.org/10.1080/01621459.1979.10481670 (1979).

Derrac, J., García, S., Molina, D. & Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 1, 3–18. https://doi.org/10.1016/j.swevo.2011.02.002 (2011).

Kumar, A., Misra, R. K. & Singh, D. Improving the local search capability of effective butterfly optimizer using covariance matrix adapted retreat phase. In: 2017 IEEE Congress on Evolutionary Computation, CEC 2017—Proceedings (Institute of Electrical and Electronics Engineers Inc., 2017), pp. 1835–1842. https://doi.org/10.1109/CEC.2017.7969524.

Awad, N. H., Ali, M. Z. & Suganthan, P. N. Ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood for solving CEC2017 benchmark problems. In: 2017 IEEE Congress on Evolutionary Computation, CEC 2017—Proceedings (Institute of Electrical and Electronics Engineers Inc., 2017), pp. 372–379. https://doi.org/10.1109/CEC.2017.7969336.

Sallam, K. M., Elsayed, S. M., Sarker, R. A. & Essam, D. L. Multi-method based orthogonal experimental design algorithm for solving CEC2017 competition problems. In 2017 IEEE Congress on Evolutionary Computation, CEC 2017 - Proceedings. (2017) 1350–1357. https://doi.org/10.1109/CEC.2017.7969461.

Kommadath, R. & Kotecha, P. Teaching learning based optimization with focused learning and its performance on CEC2017 functions. In: 2017 IEEE Congress on Evolutionary Computation, CEC 2017—Proceedings (2017), pp. 2397–2403. https://doi.org/10.1109/CEC.2017.7969595.

Awad, N. H., Ali, M. Z., Suganthan, P. N., Liang, J. J. & Qu, B. Y. Problem definitions and evaluation criteria for the CEC 2017 special session and competition on single objective real-parameter numerical optimization (2017).

Price, K. V., Awad, N. H., Ali, M. Z. & Suganthan, P. N. The 100-digit challenge: problem definitions and evaluation criteria for the 100-digit challenge special session and competition on single objective numerical optimization (2019).

Author information

Contributions

All authors contributed to the analysis and discussion of the results and to the writing and reviewing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in the spelling of the author Nima Darabi which was incorrectly given as Nima Darabai, and errors in two Equations and Figure 13.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nouhi, B., Darabi, N., Sareh, P. et al. The fusion–fission optimization (FuFiO) algorithm. Sci Rep 12, 12396 (2022). https://doi.org/10.1038/s41598-022-16498-4

DOI: https://doi.org/10.1038/s41598-022-16498-4
