Abstract

To improve patients’ adherence to continuous positive airway pressure (CPAP) therapy, this study aimed to clarify whether machine learning-based data analysis can identify the factors related to poor CPAP adherence (i.e., CPAP usage that does not reach four hours per day for five days a week). We developed a CPAP adherence prediction model using logistic regression and learn-to-rank machine learning with a pairwise approach. We then investigated adherence prediction performance over a 12-week period and the top ten factors correlated with poor CPAP adherence. The CPAP logs of 219 patients with obstructive sleep apnea (OSA) obtained from clinical treatment at Kyoto University Hospital were used. The highest adherence prediction accuracy obtained was an F1 score of 0.864. Of the top ten factors obtained with the highest prediction accuracy, four were consistent with existing clinical knowledge. The factors for better CPAP adherence indicate that air leakage should be avoided, mask pressure should be kept constant, and CPAP usage duration should be longer and kept constant. The results indicate that machine learning is an adequate method for investigating factors related to poor CPAP adherence.

Introduction

Obstructive sleep apnea syndrome (OSAS) is a highly prevalent sleep disorder that can cause sleep deprivation and excessive daytime sleepiness. OSAS is characterized by repetitive episodes of partial or complete upper airway obstruction during sleep1. Treatment is crucial because OSAS is known to negatively impact quality of life (QoL)1 and is associated with an increased risk of cardiovascular disease2,3. Continuous positive airway pressure (CPAP) therapy4 is the standard treatment for OSAS5. Although CPAP therapy is known to suppress excessive daytime sleepiness, improve QoL6, and decrease the risk of cardiovascular outcomes7,8, a significant proportion of patients underuse or discontinue CPAP9. Therefore, ensuring CPAP adherence still remains an important clinical issue. Because CPAP machines can record several usage-related parameters on a daily basis (see “Preprocessing” section for details), Schwab et al.10 stated that studies should examine the usefulness of the CPAP logs and how the parameters affect OSA outcomes.

However, the relationship between CPAP usage-related parameters and CPAP adherence has yet to be investigated. Most clinical studies have only evaluated a single factor or a few factors to determine a method for ensuring CPAP adherence5,10,11,12,13,14. Meanwhile, machine learning-based data analysis has been gaining attention; using a large amount of data, machine learning enables predictive analytics while uncovering hidden patterns and unknown correlations. Several studies have shown its usefulness for the management of chronic disease treatment. For example, a study targeting the hospital visits of diabetic patients15 revealed factors possibly correlated to missed scheduled clinical appointments while also predicting whether a target patient will miss a scheduled clinical appointment. Although Araujo et al.16 and Scioscia et al.17 applied machine learning to CPAP logs, their studies focused on predicting CPAP adherence rather than investigating usage-related factors associated with adherence.

In our study, we aim to clarify whether machine learning-based data analysis is effective for identifying CPAP usage-related parameters associated with adherence while also predicting adherence. In particular, we focus on revealing avoidable factors that may be correlated with poor adherence.

Methods

We designed a machine learning model to examine the aforementioned question. Our study uses the same model for different purposes in the training and test phases. In the training phase, we fit the model to a large amount of training data (e.g., previously collected CPAP logs from many individuals) to investigate factors possibly correlated with poor CPAP adherence. In the test phase, we apply the model to the recent CPAP logs of a target patient to predict whether this patient’s CPAP adherence will become poor within a target period.

On the basis of the definition by the Centers for Medicare and Medicaid Services (CMS)5, this study defines good adherence as use of the CPAP machine for at least four hours per night on at least five nights a week (i.e., roughly 70% of nights in a week). Any week that does not meet this criterion is regarded as poor adherence.
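The weekly adherence criterion can be sketched as a small function. This is a minimal illustration, assuming inclusive thresholds (at least four hours, at least 70% of recorded nights) and assuming that a week with no records counts as poor; the function and constant names are hypothetical.

```python
from typing import Sequence

MIN_HOURS_PER_NIGHT = 4.0   # nightly usage threshold (assumed inclusive)
MIN_NIGHT_FRACTION = 0.70   # share of nights that must reach the threshold


def is_good_adherence_week(usage_hours: Sequence[float]) -> bool:
    """Return True if one week of nightly usage meets the adherence criterion."""
    if not usage_hours:
        return False  # assumption: a week without any record counts as poor
    compliant = sum(1 for h in usage_hours if h >= MIN_HOURS_PER_NIGHT)
    return compliant / len(usage_hours) >= MIN_NIGHT_FRACTION
```

For a full seven-night week, five compliant nights give 5/7 ≈ 0.71 and pass the 70% rule, whereas four compliant nights do not.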

The proposed model comprises two parts: preprocessing and a machine learning-based model. The details of each part are described below.

Preprocessing

Preprocessing comprises feature calculation and data standardization.

First, a total of eighteen weekly features are calculated as shown in Table 1. Although a CPAP machine provides a set of measured data per day, in this study we calculate all features from every seven consecutive days to suppress night-to-night variability. The CPAP machine used in this study was made by ResMed (ResMed Inc., San Diego, CA, USA). The daily recorded data comprises two qualitative values (sex and CPAP mode: auto-titration (auto) or fixed-titration) and five quantitative values (usage duration, air leakage, apnea index (AI), apnea hypopnea index (AHI), and daily average mask pressure). In addition to the original qualitative values provided by the CPAP machine, we calculate two additional qualitative features, daily severity based on AHI (Normal, Mild, Moderate, Severe)18 and the daily presence of OSAS based on AI as defined by Guilleminault et al.19. To calculate these two qualitative features, we first identify the daily value of each one and then set the most frequent value in a week as the weekly feature value.
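The weekly aggregation can be sketched as follows. Because Table 1 is not reproduced here, the example computes only a few illustrative features; the feature names are hypothetical, and the AHI cut-offs (5, 15, and 30 events per hour) are an assumption based on the commonly used severity grading.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Dict, List, Union


def ahi_severity(ahi: float) -> str:
    """Map a daily AHI to a severity label (cut-offs 5/15/30 are assumed)."""
    if ahi < 5:
        return "Normal"
    if ahi < 15:
        return "Mild"
    if ahi < 30:
        return "Moderate"
    return "Severe"


def weekly_features(
    usage_hours: List[float], ahi: List[float]
) -> Dict[str, Union[float, str]]:
    """Aggregate seven consecutive days into illustrative weekly features."""
    severities = [ahi_severity(v) for v in ahi]
    return {
        "usage_mean": mean(usage_hours),   # average nightly usage in the week
        "usage_sd": pstdev(usage_hours),   # night-to-night variability
        "ahi_mean": mean(ahi),
        # qualitative feature: most frequent daily label in the week
        "severity_mode": Counter(severities).most_common(1)[0][0],
    }
```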

The last step of preprocessing is data standardization. When the quantitative features have different ranges, the weights corresponding to each feature are updated by different magnitudes in the training phase. Data standardization converts each feature to a common scale. We use Eq. (1) to calculate the standardized value ã, whose mean is 0 and standard deviation is 1.

\[\tilde{a} = \frac{a - \mu}{\sigma} \tag{1}\]

In Eq. (1), μ denotes the mean value of feature a, whereas σ denotes its standard deviation.
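A minimal sketch of this standardization is given below. It assumes the population standard deviation and returns zeros for a constant feature; both choices are assumptions of this example rather than details given in the text.

```python
from statistics import mean, pstdev
from typing import List


def standardize(values: List[float]) -> List[float]:
    """Rescale one feature to zero mean and unit standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # population SD (assumed)
    if sigma == 0:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - mu) / sigma for v in values]
```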

Machine learning-based model

We built a prediction model using logistic regression and a learn-to-rank (LTR) machine learning algorithm with a pairwise approach20. In the training phase, the proposed model aims to rank all patients in accordance with the risk of poor CPAP adherence, giving a higher rank to a patient whose CPAP adherence worsens within a shorter period. Through this ranking process, the proposed model calculates a weight vector that indicates the correlation between each parameter and poor CPAP adherence. In the test phase, the proposed model uses the calculated weight vector to position the target patient within the ranking of all patients used in the training phase. Because the order of patients reflects the number of weeks until poor CPAP adherence, we can predict whether the target patient’s CPAP adherence will become poor within a target period by setting a threshold on the rank. The details of the model are described step by step below.

Auxiliary parameter used for model training

Securing a sufficient amount of training data is important when applying machine learning to a limited dataset. In general, logs shorter than the target period cannot be used, so predictions can easily fail because of a lack of training data.

To make the best use of the limited number of CPAP usage logs for training the model, this study uses the number of weeks of each patient’s CPAP adherence as an auxiliary parameter. This duration is calculated in one of two ways. The first is the number of weeks until the first instance of poor CPAP adherence, \(\mathrm{PA}(p_x, t_x)\), where \(p_x\) is the patient and \(t_x\) is the number of weeks from the first week until the week when poor CPAP adherence was observed for the first time. We calculate \(\mathrm{PA}(p_x, t_x)\) when patient \(p_x\) exhibits poor CPAP adherence. The second is the number of weeks of continuous good CPAP adherence, \(\mathrm{GA}(p_y, t_y)\), where \(p_y\) is the patient and \(t_y\) is the number of weeks from the first week until the most recent week when good CPAP adherence was observed. We calculate \(\mathrm{GA}(p_y, t_y)\) when patient \(p_y\) maintains good adherence continuously without exhibiting poor CPAP adherence.
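The auxiliary parameter can be sketched as below. The counting convention is an assumption of this example: the duration is taken as the number of good weeks completed before the first poor week, so a patient whose first week is already poor has neither PA nor GA. The function name and return format are hypothetical.

```python
from typing import List, Optional, Tuple


def adherence_duration(weekly_good: List[bool]) -> Optional[Tuple[str, int]]:
    """Return ("PA", t) or ("GA", t) for one patient, or None if undefined.

    weekly_good[i] is True when week i + 1 met the good-adherence criterion.
    """
    if not weekly_good or not weekly_good[0]:
        return None  # poor adherence in week 1: neither PA nor GA is defined
    for week_index, good in enumerate(weekly_good):
        if not good:
            # number of good weeks observed before the first poor week
            return ("PA", week_index)
    # adherence stayed good for the whole log
    return ("GA", len(weekly_good))
```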

Basic design of logistic regression model

This study modeled the probability \(y_{m,n}\) that the CPAP adherence of patient \(p_m\) at time \(t_m\) would become poor earlier than that of another patient \(p_n\) at \(t_n\) using the logistic function expressed as Eq. (2).

Here, \(w\) is a weight vector, and \(x_m\) and \(x_n\) are the feature vectors of patients \(p_m\) and \(p_n\), respectively.

Mathematically, the logit of Eq. (2) is expressed as Eq. (3).

Because the logit is the inverse of the logistic function, the sign of the logit value indicates the probability: logit(P) < 0 indicates P < 0.5, logit(P) = 0 indicates P = 0.5, and logit(P) > 0 indicates P > 0.5. Therefore, we set y in Eqs. (2) and (3) to either +1 or −1 depending on the duration until poor CPAP adherence: y = +1 when \(\mathrm{PA}(p_m, t_m)\) is shorter than \(\mathrm{PA}(p_n, t_n)\) or shorter than \(\mathrm{GA}(p_n, t_n)\), and y = −1 when \(\mathrm{PA}(p_m, t_m)\) is longer than \(\mathrm{PA}(p_n, t_n)\).
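One form of Eqs. (2) and (3) that is consistent with the definitions above is sketched below. It is a reconstruction under the assumption that the label y multiplies the score difference between the two patients, and it is not necessarily the authors' exact notation.

```latex
\begin{aligned}
P(y \mid x_m, x_n) &= \frac{1}{1 + \exp\bigl(-y\, w \cdot (x_m - x_n)\bigr)} \\
\operatorname{logit} P(y \mid x_m, x_n) &= \ln \frac{P(y \mid x_m, x_n)}{1 - P(y \mid x_m, x_n)} = y\, w \cdot (x_m - x_n)
\end{aligned}
```

Under this form, a positive value of the second line means that the ordering implied by y is more likely than not.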

Model design and training with L2-norm regularization

The aim of the training process is to rank all patients in the training data in accordance with the duration until poor CPAP adherence by repeating the ranking on every pair of patients.

For this ranking, the model uses the logit value \(w \cdot x_m\) as the PA risk score to measure the risk of patient \(p_m\)’s CPAP adherence becoming poor at \(t_m\). Here, the PA risk score is a specific case of Eq. (3) assuming y = +1 and an imaginary patient whose feature vector \(x_n\) is 0. In other words, the proposed model aims to rank all patients in the training data through comparison with the same imaginary patient. This ranking is conducted only in the following two cases: when \(\mathrm{PA}(p_m, t_m)\) is shorter than \(\mathrm{PA}(p_n, t_n)\), and when \(\mathrm{PA}(p_m, t_m)\) is shorter than \(\mathrm{GA}(p_n, t_n)\). Through the training process, the model calculates \(w\) so that the PA risk score matches the number of weeks until poor CPAP adherence, giving a larger PA risk score to an earlier occurrence of poor CPAP adherence.
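The two admissible cases can be sketched as a pair-construction routine. The data layout, with each patient represented as a ("PA", weeks) or ("GA", weeks) tuple, and the function name are assumptions of this example.

```python
from typing import List, Tuple

Duration = Tuple[str, int]  # ("PA", weeks) or ("GA", weeks)


def build_pairs(durations: List[Duration]) -> List[Tuple[int, int]]:
    """Return index pairs (m, n) where patient m should rank above patient n."""
    pairs = []
    for m, (kind_m, t_m) in enumerate(durations):
        if kind_m != "PA":
            continue  # only observed poor adherence can place m above n
        for n, (_kind_n, t_n) in enumerate(durations):
            # covers both cases: PA(m) < PA(n) and PA(m) < GA(n)
            if m != n and t_m < t_n:
                pairs.append((m, n))
    return pairs
```

A patient with GA shorter than another patient's PA produces no pair, because it is unknown when, or whether, that patient's adherence would have become poor.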

To mitigate overfitting to the training data set and improve the model’s generalizability for the new data, we used an L2-norm regularization as in the previous study15. The model estimates w as \(\hat{w}\) by using the following equation.

Here, \(\|w\|_{2}^{2}\) is the L2-norm regularizer (i.e., the squared L2-norm of \(w\)), which acts as a penalty against assigning large absolute weight values to features that occur frequently in the training data. The symbol λ is the regularization hyperparameter, which must be tuned by comparing model performance.
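A minimal training sketch is shown below. It assumes that the objective is the pairwise logistic loss, the sum over ranked pairs (m, n) of \(\log(1 + \exp(-w \cdot (x_m - x_n)))\), plus \(\lambda \|w\|_{2}^{2}\), and it minimizes this objective by plain gradient descent. The optimizer, learning rate, and number of epochs are hypothetical choices and not the authors' implementation.

```python
import math
from typing import List, Tuple


def train_pairwise_logistic(
    features: List[List[float]],
    pairs: List[Tuple[int, int]],
    lam: float = 0.2,
    learning_rate: float = 0.1,
    epochs: int = 500,
) -> List[float]:
    """Fit w by gradient descent on an L2-regularized pairwise logistic loss."""
    dim = len(features[0])
    w = [0.0] * dim
    step = learning_rate / max(len(pairs), 1)  # scale the step by the pair count
    for _ in range(epochs):
        grad = [2.0 * lam * wj for wj in w]  # gradient of lam * ||w||^2
        for m, n in pairs:
            diff = [a - b for a, b in zip(features[m], features[n])]
            margin = sum(wj * dj for wj, dj in zip(w, diff))
            # d/dw log(1 + exp(-margin)) = -diff / (1 + exp(margin))
            coeff = -1.0 / (1.0 + math.exp(min(margin, 700.0)))
            for j in range(dim):
                grad[j] += coeff * diff[j]
        w = [wj - step * gj for wj, gj in zip(w, grad)]
    return w
```

Each pair (m, n) means that patient m should receive the larger PA risk score, so the loss decreases as \(w \cdot x_m\) exceeds \(w \cdot x_n\).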

Predicting CPAP adherence using proposed model

In the test phase, the model estimates CPAP adherence based on the PA risk score of the target patient using the estimated \(\hat{w}.\) Because we designed the PA risk score to reflect the number of weeks until poor CPAP adherence, we can predict whether the target patient’s CPAP adherence becomes poor within a target period by determining a threshold for the PA risk score.
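The test-phase decision can be sketched as a dot product followed by a threshold. The function names are hypothetical, and treating a score equal to the threshold as a positive prediction is an assumption of this example.

```python
from typing import Sequence


def pa_risk_score(w: Sequence[float], x: Sequence[float]) -> float:
    """PA risk score: dot product of the weight vector and the features."""
    return sum(wj * xj for wj, xj in zip(w, x))


def predict_poor_adherence(
    w: Sequence[float], x: Sequence[float], threshold: float
) -> bool:
    """Predict poor adherence within the target period (score >= threshold)."""
    return pa_risk_score(w, x) >= threshold
```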

Evaluation

We applied our model to the CPAP logs of the participants to predict whether their CPAP adherence became poor within twelve weeks (approximately three months). On the basis of a weight vector calculated through the training phase, we investigated the CPAP usage-related parameters possibly correlated with poor adherence.

In this study, we obtained the patient data with opt-out consent, as specified by Article 8-1-(2)-"a"-"i" of the Ethical Guidelines for Medical and Biological Research Involving Human Subjects of Japan. We posted an introduction to this evaluation on a webpage and set a waiver period. During this period, all participants were able to opt out of this evaluation by declaring their waiver. All evaluations were conducted in accordance with the protocol approved by the Ethics Committee of Kyoto University Hospital (R1821).

Participants

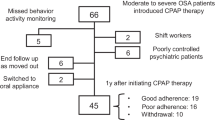

A total of 354 patients who used CPAP machines (ResMed Inc, San Diego, CA, USA) from January 1, 2007 to December 31, 2018, were eligible for this retrospective study. Note that their OSAS diagnosis criterion was an AHI greater than 20 in polysomnography reports.

The exclusion criteria included insufficient data quality and certain diseases (e.g., depression12), as shown in Fig. 1. Note that participants transferred from other hospitals were excluded under insufficient data quality because the auxiliary parameter cannot be calculated without a CPAP initiation date.

After excluding participants on the basis of the aforementioned criteria, 219 patients were selected. Table 2 summarizes the clinical background of these participants.

Data used for evaluation

The CPAP logs obtained through the clinical CPAP treatment were used for retrospective data analysis. We only used the data from nights when CPAP was used for more than thirty minutes. This is because AI and AHI can be erroneous when the CPAP usage duration is short as AI and AHI are indices showing the target event per hour.

Regarding the CPAP adherence of the 219 target patients, two patients had GA (8 and 101 weeks), 145 patients had PA, and the remaining 72 patients exhibited poor CPAP adherence in the first week, so neither PA nor GA could be calculated for them. The histogram of the number of weeks until the first poor CPAP adherence among the 145 patients who had PA is shown in Fig. 2. Consequently, the following evaluation targets only the data of the 147 patients who had GA or PA.

Evaluation methods

We evaluated the results in terms of the prediction performance of CPAP adherence and the top ten CPAP usage-related parameters possibly correlated with poor CPAP adherence.

Before investigating the prediction performance and the top ten CPAP usage-related parameters possibly correlated with poor CPAP adherence, we need to determine the hyperparameter λ that optimizes ranking performance. As a pre-evaluation, this study used five-fold cross-validation to evaluate whether the model correctly ranked the participants in the test dataset. The k-fold cross-validation is a statistical validation method, where k is a user-specified number (usually 5 or 10)21. In five-fold cross-validation, the data are first partitioned into five subsets of approximately equal size, and then a model is trained and tested five times. For each test, one of the subsets is used as the test data and the remaining four subsets are used as the training data. After all trials, the accuracy is obtained as the average across the five trials. To determine the appropriate hyperparameter λ, we evaluated seven different values (λ = 0.1, 0.2, 0.5, 1.0, 2.0, 5.0, 10.0) in each cross-validation test.
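The cross-validated search over λ can be sketched as follows. The `evaluate` callback, which would train on the training indices and return the ranking accuracy on the test indices, is a hypothetical placeholder, as are the shuffling seed and the function names.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

Split = Tuple[List[int], List[int]]  # (train indices, test indices)


def k_fold_indices(n_samples: int, k: int = 5, seed: int = 0) -> List[Split]:
    """Partition sample indices into k folds of approximately equal size."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, folds[i]))
    return splits


def select_lambda(
    n_samples: int,
    lambdas: Sequence[float],
    evaluate: Callable[[List[int], List[int], float], float],
) -> Tuple[float, Dict[float, float]]:
    """Return the lambda with the best mean cross-validated score."""
    splits = k_fold_indices(n_samples)
    mean_scores = {}
    for lam in lambdas:
        scores = [evaluate(train, test, lam) for train, test in splits]
        mean_scores[lam] = sum(scores) / len(scores)
    best = max(mean_scores, key=mean_scores.get)
    return best, mean_scores
```

The same splits are reused for every λ so that the candidates are compared on identical partitions.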

After determining the hyperparameter λ, we evaluated the prediction performance of CPAP adherence using the PA risk scores and their corresponding duration until poor CPAP adherence. For this evaluation, we used the receiver operating characteristic (ROC) curve22 plotted in 0.5 increments of the PA risk score.
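The ROC construction and the Youden-index cut-off can be sketched as below. The sketch assumes that a patient is predicted positive when the PA risk score is at or above the threshold and that both classes are present in the labels; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

RocPoint = Tuple[float, float, float]  # (threshold, FPR, TPR)


def roc_points(
    scores: Sequence[float], labels: Sequence[bool], thresholds: Sequence[float]
) -> List[RocPoint]:
    """Compute false- and true-positive rates at each candidate threshold."""
    positives = sum(1 for y in labels if y)
    negatives = len(labels) - positives  # both counts are assumed non-zero
    points = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and not y)
        points.append((t, fp / negatives, tp / positives))
    return points


def youden_cutoff(points: Sequence[RocPoint]) -> float:
    """Return the threshold maximizing J = sensitivity + specificity - 1."""
    # J equals TPR - FPR; ties resolve to the first threshold listed
    return max(points, key=lambda p: p[2] - p[1])[0]
```

Thresholds in 0.5 increments can be generated as, for example, `[0.5 * i for i in range(-4, 5)]`.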

Regarding the top ten CPAP usage-related parameters possibly correlated with poor CPAP adherence, this study investigated a weight vector \(\hat{w}\) calculated with the optimal hyperparameter λ. Because a larger absolute value indicates a stronger correlation, we selected the features with the ten largest absolute value weights as the top ten factors and evaluated whether they are consistent with common clinical knowledge.
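Selecting the factors by absolute weight can be sketched as a sort. The mapping from feature name to weight and the function name are hypothetical.

```python
from typing import Dict, List, Tuple


def top_factors(weights: Dict[str, float], k: int = 10) -> List[Tuple[str, float]]:
    """Return the k features with the largest absolute weights, largest first."""
    ranked = sorted(weights.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:k]
```

The sign of each weight is kept in the output so that the direction of the correlation remains visible.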

Results

Pre-evaluation on ranking accuracy for determining hyperparameter λ

The ranking accuracy of our model was 0.692 ± 0.010 and peaked at 0.706 when the hyperparameter λ = 0.2. Therefore, we set the hyperparameter λ = 0.2 for the following evaluation.

Prediction performance

Figure 3 shows the ROC curve. The area under the curve (AUC) of the ROC was estimated as 0.763, indicating moderate accuracy22. The optimal cut-off point of the PA risk score based on the Youden index22 was −0.5, where the F1 score was 0.864, precision was 0.872, and recall was 0.856.
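As a consistency check, the reported F1 score follows from the reported precision and recall through the standard definition of F1 as their harmonic mean:

```latex
F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}
    = \frac{2 \times 0.872 \times 0.856}{0.872 + 0.856}
    = \frac{1.492864}{1.728} \approx 0.864
```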

CPAP usage-related factors related to poor adherence

Table 3 lists the top ten CPAP usage-related parameters possibly correlated with poor CPAP adherence and their interpretations, obtained at the highest ranking accuracy (hyperparameter λ = 0.2). For the weights shown in Table 3, a positive number indicates a positive correlation and a negative number indicates a negative correlation. For example, the top factor, average duration of usage in a week, has a strong negative correlation (−9.38) with poor CPAP adherence. This indicates that participants using CPAP for shorter durations may have poor CPAP adherence in the future.

Discussion

To our knowledge, our study is the first to use machine learning to investigate CPAP usage-related parameters possibly correlated with poor CPAP adherence while also predicting future CPAP adherence. We developed a model using logistic regression and an LTR machine learning algorithm with a pairwise approach20, then applied it to the CPAP logs obtained from clinical treatment in one hospital. Overall, the results indicated that our model can be a fair method for both predicting CPAP adherence and investigating the factors correlated with poor adherence.

Regarding adherence prediction performance, our model using one week of CPAP logs was able to predict the risk of poor CPAP adherence within twelve weeks with an AUC value of 0.763, and its prediction performance at the optimal cut-off point yielded an F1 score of 0.864. Compared with the results of a previous study16, which used 13 days (approximately two weeks) of CPAP logs for predicting the risk of poor adherence within 180 days (approximately 26 weeks after) with an F1 score of 0.54, our proposed model showed good performance.

Of the top ten factors, we identified six as feasible CPAP usage-related parameters possibly correlated with poor adherence. Among these six factors, four were consistent with previous clinical knowledge13,14: Factors 1 and 2 are consistent with the discussion presented by Chai-Coetzer et al.13, and Factors 7 and 9 are consistent with the discussion by Valentin et al.14. Thus, the results of our pilot study indicate that machine learning is an adequate method for investigating factors related to poor CPAP adherence. Overall, the feasible factors identified by this retrospective study indicate that the following would improve CPAP adherence: avoiding air leakage, keeping mask pressure constant, and using CPAP for longer and more consistent durations. Meanwhile, a detailed analysis of the data used in this study indicated that the remaining four of the top ten factors may be strongly biased. For Factor 3, 98.2% of the data were obtained from auto mode; for Factor 4, 86.8% of the data were from men; for Factor 8, 96.3% of the data were classified as “normal”; and for Factor 10, 92.8% of the data were classified as “normal.” In theory, machine learning may overvalue a feature when it repeatedly shows the same tendency; for example, it may assign a larger weight to women than to men if CPAP adherence is consistently poor in female patients but varies among male patients. At this moment, we cannot determine whether these four factors are truly correlated with poor CPAP adherence. Further studies on additional patients will be needed to clarify the relationship between the four factors and CPAP adherence. It should be noted that these imbalanced conditions are quite common in clinical OSAS treatment settings; these biases simply indicate that the dataset is not atypical and can be considered a valid subset of Japanese real-world data.

To improve prediction accuracy, we need to modify the model in consideration of real-world CPAP usage. In this study, our model focuses on predicting poor CPAP adherence using weekly features. Although weekly features could reflect basic characteristics of night-to-night CPAP usage, they may unintentionally suppress specific characteristics that occur within a week (e.g., temporary discontinuation of CPAP use following a specific pattern of use on the previous night). In addition, the current model does not consider how to handle data that resume after a discontinuation (e.g., a trip) or partial data (e.g., a hospital transfer). The model may also need to consider the date of CPAP use. Physicians have pointed out that CPAP adherence can change depending on the season (e.g., adherence may worsen during the change of season from winter to spring because of pollinosis). Future studies should consider these perspectives to clarify CPAP use and improve adherence.

The main limitation of this research is the lack of causal analysis, which is a common limitation in retrospective research. Because this study was a retrospective pilot study, the results only indicate correlations. Therefore, we first intend to verify through a prospective study whether the factors indicated in this study can improve clinical CPAP adherence. Once the relationship between the factors and clinical CPAP adherence has been confirmed, patients receiving CPAP therapy can be advised on the basis of these parameters. Another limitation of our study is its small scale. All aforementioned implications were obtained from retrospective analysis of previously collected clinical CPAP logs from only one hospital, so in theory the calculated weight vector reflects only the characteristics inherent in this dataset. To verify the consistency of the results, a large-scale study including other hospitals is necessary.

Conclusion

We developed a CPAP adherence prediction model using logistic regression and learn-to-rank machine learning, and then applied it to CPAP logs obtained from clinical treatment provided by one hospital. The results of the retrospective data analysis indicate that machine learning is an adequate method for investigating factors related to poor CPAP adherence. The factors obtained from the retrospective data analysis support previous clinical knowledge for improving CPAP adherence.

In general, machine learning can be considered a promising method for uncovering medical knowledge. Although this study did not provide new findings, it shows the potential of machine learning for achieving this goal. Toward this end, we will need to collect a larger amount of data with various parameters and apply machine learning predictors for deeper investigation.

Data availability

The datasets generated and/or analyzed during the current study are not publicly available because Articles 27 and 28 of the Japanese Personal Information Protection Law prohibit any "Personal Information Handling Business Operators" from sharing personal data, including "Special Care-Required Personal Information" such as medical history, without obtaining the principal's consent in advance. The datasets are available from the corresponding author on reasonable request following Article 20-2-5 of the Personal Information Protection Law.

Code availability

Not applicable. The source code of the program cannot be shared because it is protected as a business secret.

Change history

18 January 2023

The original online version of this Article was revised: The original version of this Article contained errors in the Methods and Evaluation sections. The original Article has been corrected.

References

Jordan, A. S., McSharry, D. G. & Malhotra, A. Adult obstructive sleep apnoea. Lancet 383, 736–747. https://doi.org/10.1016/S0140-6736(13)60734-5 (2014).

Marin, J. M., Carrizo, S. J., Vicente, E. & Agusti, A. G. Long-term cardiovascular outcomes in men with obstructive sleep apnoea-hypopnoea with or without treatment with continuous positive airway pressure: An observational study. Lancet 365, 1046–1053. https://doi.org/10.1016/S0140-6736(05)71141-7 (2005).

Marin, J. M. et al. Association between treated and untreated obstructive sleep apnea and risk of hypertension. JAMA 307(20), 2169–2176. https://doi.org/10.1001/jama.2012.3418 (2012).

Sullivan, C. E., Issa, F. G., Berthon-Jones, M. & Eves, L. Reversal of obstructive sleep apnoea by continuous positive airway pressure applied through the nares. Lancet 317(8225), 862–865. https://doi.org/10.1016/s0140-6736(81)92140-1 (1981).

Mehrtash, M., Bakker, J. P. & Ayas, N. Predictors of continuous positive airway pressure adherence in patients with obstructive sleep apnea. Lung 197, 115–121. https://doi.org/10.1007/s00408-018-00193-1 (2019).

Jenkinson, C., Davies, R. J., Mullins, R. & Stradling, J. R. Comparison of therapeutic and subtherapeutic nasal continuous positive airway pressure for obstructive sleep apnoea: A randomised prospective parallel trial. Lancet 353(9170), 2100–2105. https://doi.org/10.1016/S0140-6736(98)10532-9 (1999).

Barbé, F. et al. Effect of continuous positive airway pressure on the incidence of hypertension and cardiovascular events in nonsleepy patients with obstructive sleep apnea: A randomized controlled trial. JAMA 307(20), 2161–2168. https://doi.org/10.1001/jama.2012.4366 (2012).

Peker, Y. et al. Effect of positive airway pressure on cardiovascular outcomes in coronary artery disease patients with nonsleepy obstructive sleep apnea: The RICCADSA randomized controlled trial. Am. J. Respir. Crit. Care Med. 194(5), 613–620. https://doi.org/10.1164/rccm.201601-0088OC (2016).

McArdle, N. et al. Long-term use of CPAP therapy for sleep apnea/hypopnea syndrome. Am. J. Respir. Crit. Care Med. 159(4), 1108–1114. https://doi.org/10.1164/ajrccm.159.4.9807111 (1999).

Schwab, R. J. et al. An official American Thoracic Society statement: Continuous positive airway pressure adherence tracking systems. Am. J. Respir. Crit. Care Med. 188(5), 613–620. https://doi.org/10.1164/rccm.201307-1282ST (2013).

Sawyer, A. M. et al. A systematic review of CPAP adherence across age groups: Clinical and empiric insights for developing CPAP adherence interventions. Sleep Med. Rev. 15(6), 343–356. https://doi.org/10.1016/j.smrv.2011.01.003 (2011).

Law, M., Naughton, M., Ho, S., Roebuck, T. & Dabscheck, E. Depression may reduce adherence during CPAP titration trial. J. Clin. Sleep Med. 10(2), 163–169. https://doi.org/10.5664/jcsm.3444 (2014).

Chai-Coetzer, C. L. et al. Predictors of long-term adherence to continuous positive airway pressure therapy in patients with obstructive sleep apnea and cardiovascular disease in the SAVE study. Sleep 36(12), 1929–1937. https://doi.org/10.5665/sleep.3232 (2013).

Valentin, A., Subramanian, S., Quan, S. F., Berry, R. B. & Parthasarathy, S. Air leak is associated with poor adherence to autoPAP therapy. Sleep 34(6), 801–806. https://doi.org/10.5665/SLEEP.1054 (2011).

Kurasawa, H. et al. Machine-learning-based prediction of a missed scheduled clinical appointment by patients with diabetes. J. Diabetes Sci. Technol. 10(3), 730–736. https://doi.org/10.1177/1932296815614866 (2016).

Araujo, M., Bhojwani, R., Srivastava, J., Kazaglis, L. & Iber, C. ML approach for early detection of sleep apnea treatment abandonment. In Proceedings of the 2018 International Conference on Digital Health. https://doi.org/10.1145/3194658.3194681 (2018).

Scioscia, G. et al. Machine learning-based prediction of adherence to continuous positive airway pressure (CPAP) in obstructive sleep apnea (OSA). Inform. Health Soc. Care. 47(3), 274–282. https://doi.org/10.1080/17538157.2021.1990300 (2021).

American Academy of Sleep Medicine Task Force. Sleep-related breathing disorders in adults: Recommendations for syndrome definition and measurement techniques in clinical research. The report of an American Academy of Sleep Medicine Task Force. Sleep 22(5), 667–689. https://doi.org/10.1093/sleep/22.5.667 (1999).

Guilleminault, C., Tilkian, A. & Dement, W. C. The sleep apnea syndromes. Annu. Rev. Med. 27, 465–484. https://doi.org/10.1146/annurev.me.27.020176.002341 (1976).

Liu, T.-Y. Learning to Rank for Information Retrieval (Springer, Berlin, 2011).

Müller, A. C. & Guido, S. Introduction to Machine Learning with Python: A Guide for Data Scientists (O’Reilly Media, Inc., California, 2016).

Akobeng, A. K. Understanding diagnostic tests 3: Receiver operating characteristic curves. Acta Paediatr. 96(5), 644–647. https://doi.org/10.1111/j.1651-2227.2006.00178.x (2007).

Acknowledgements

The authors thank Tomoko Toki, Satoko Tamura, and Yuko Furusawa for their administrative support.

Conference presentation

The initial evaluation of this study was presented as a poster presentation at the 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE EMBC’19), Berlin, Germany, July 23–27, 2019.

(Note: This conference presentation was submitted as a 1-page short paper, which does not have any cited information. We conducted additional analysis and summarized it for this submission).

Eguchi K, Azuma S, Indo T, Takeyama H, Chin K, Nambu M, Kuroda T, A Pilot Study of Predicting CPAP Adherence Based on the Statistical Values of Previous CPAP Usage Duration and Related Parameters.

Funding

This work was funded by Kyoto University Hospital and Nippon Telegraph and Telephone (NTT) Corporation as joint research. This study was also supported by grants from the Japan Agency for Medical Research and Development (JPek0210116, JPek0210150, and JPwm0425018), grants from the Ministry of Education, Culture, Sports, Science and Technology in Japan (JSPS KAKENHI 17H04182 and 20H03690), and a grant from Comprehensive Research on Life-Style Related Diseases including Cardiovascular Diseases and Diabetes Mellitus (21FA1004) from the Ministry of Health, Labor and Welfare in Japan.

Author information

Contributions

Conceptualization, K.E., M.N., H.T., S.A., K.C. and T.K.; software, K.E.; data curation, K.E. and H.T.; formal analysis, K.E., T.Y. and M.N.; project administration, S.A., K.C. and T.K.; writing—original draft preparation, K.E.; writing—review and editing, K.E., T.Y., M.N., H.T., S.A., K.C. and T.K.

Ethics declarations

Competing interests

The Department of Real World Data R&D of Kyoto University is funded by endowments from Nippon Telegraph and Telephone (NTT) Corporation, Canon Medical Systems Corporation, and H.U. Group Holdings, Inc. to Kyoto University. The Preemptive Medicine & Lifestyle-Related Disease Research Center is funded by Resorttrust, Inc. The Department of Respiratory Care and Sleep Control Medicine of Kyoto University is funded by endowments from Philips-Respironics, ResMed, Fukuda Denshi, and Fukuda Lifetec-Keiji to Kyoto University. The Department of Sleep Medicine and Respiratory Care of Nihon University is funded by endowments from Philips-Respironics, ResMed, Fukuda Denshi, and Fukuda Lifetec-Tokyo to Nihon University. Aside from the above, the authors have no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eguchi, K., Yabuuchi, T., Nambu, M. et al. Investigation on factors related to poor CPAP adherence using machine learning: a pilot study. Sci Rep 12, 19563 (2022). https://doi.org/10.1038/s41598-022-21932-8

DOI: https://doi.org/10.1038/s41598-022-21932-8
