Abstract

Advanced deep learning architectures consist of tens of fully connected and convolutional hidden layers, currently extended to hundreds, are far from their biological realization. Their implausible biological dynamics relies on changing a weight in a non-local manner, as the number of routes between an output unit and a weight is typically large, using the backpropagation technique. Here, a 3-layer tree architecture inspired by experimental-based dendritic tree adaptations is developed and applied to the offline and online learning of the CIFAR-10 database. The proposed architecture outperforms the achievable success rates of the 5-layer convolutional LeNet. Moreover, the highly pruned tree backpropagation approach of the proposed architecture, where a single route connects an output unit and a weight, represents an efficient dendritic deep learning.

Similar content being viewed by others

Introduction

Traditionally, deep learning (DL) stems from human brain dynamics, where the connection strength between two neurons is modified following their relative activities via synaptic plasticity1,2. However, these two learning scenarios are essentially different3. First, efficient DL architectures require tens of feedforward hidden layers4,5, currently extended to hundreds6,7, whereas brain dynamics consist of only a few feedforward layers8,9,10.

Second, DL architectures typically consist of many hidden layers that are mostly convolutional layers. The response of each convolutional layer is sensitive to the presence of a particular pattern or symmetry in limited areas of the input, where its repeated operations in the subsequent hidden layers are expected to reveal large-scale features characterizing a class of inputs11,12,13,14,15. Similar operations have been observed in the visual cortex; however, approximated convolutional wirings have been confirmed mainly from the retinal input to the first hidden layer8,16.

The final complex aspect is the implausible biological realization of the backpropagation (BP) technique17,18, an essential component in the current implementation of DL, which changes a weight in a non-local manner. In a supervised learning scenario, an input is presented to the feedforward network, and the distance between the current and desired output is computed using a given error function. Subsequently, each weight is updated to minimize the error function using the following BP procedure. Each route between the weight and an output unit contributes to its modification, where all components along this route, weights and nonlinear nodal activation functions, are combined. Finally, weights belonging to a convolutional layer are made equal to establish a filter. The number of routes between an output unit and a weight is typically large (Fig. 1a,b); thus, this enormous transportation of precise weight information can be performed effectively using fast vector and matrix operations implemented by parallel GPUs. However, this process is evidently beyond biological realization. Note that convolutional and fully connected layers are the main contributors to this enormous parallel transportation, excluding an adjacent convolution to the input layer and a fully connected layer adjacent to the output units19.

Examined convolutional LeNet-5 and Tree-3 architectures. (a) The convolutional LeNet-5 for the CIFAR-10 database consists of 32 × 32 RGB input images belonging to the 10 output labels. Layer 1 consists of six (5 × 5) convolutional filters followed by (2 × 2) max-pooling, Layer 2 consists of 16 (5 × 5) convolutional filters and Layers 3–5 have three fully connected hidden layers of sizes 400, 120, and 84, which are finally connected to the 10 output units. (b) Scheme of the routes affecting the updating of a weight belonging to Layer 1 in panel (a) (dashed red line) during the BP procedure. The weight is connected to one of the output units via multiple routes (dashed red lines) and can exceed one million (“Methods” section). Note that all weights in Layer 1 are equalized to 6 × (5 × 5) weights belonging to the six convolutional filters. (c) Examined Tree-3 structure consisting of M branches with the same 32 × 32 RGB input images. Layer 1 consists of 3 × K (5 × 5) convolutional filters and K filters for each of the RGB input images. Each branch consists of the same 3 × K filters, followed by (2 × 2) max-pooling which results in (14 × 14) output units for each filter. Layer 2 consists of a tree (non-overlapping) sampling (2 × 2 × 7 units) across the K filters for each RGB color in each branch, resulting in 21 (7 × 3) outputs for each branch. Layer 3 fully connects the 21 × M outputs of the M branches of Layer 2 to the 10 output units. (d) Scheme of a single route (dashed black line) connecting an updated weight in Layer 1 (in panel c), during the BP procedure, to an output unit.

This study presents a learning approach based on tree architectures, where each weight is connected to an output unit via only a single route (Fig. 1c,d). This type of tree architectures represents a step toward a plausible biological learning realization19 and is inspired by recent experimental evidence on dendritic20 and sub-dendritic adaptations19 and their nonlinear amplification21,22,23. In particular, the timescales of dendritic adaptation depend on the training frequency and can be reduced to several seconds only24. The question at the center of our work is whether learning on a tree architecture, inspired by dendritic tree learning, can achieve success rates comparable to those obtained from more structured architectures consisting of several fully connected and convolutional layers. Here, the success rates of the proposed Tree-3 architecture, with only three hidden layers, are demonstrated to outperform the achievable LeNet-5 success rates on the CIFAR-10 database25. The LeNet-5 architecture (Fig. 1a) consists of five hidden layers, two convolutional layers, and three fully connected layers, such that a weight can be connected to an output through a large number of routes, which can exceed a million (Fig. 1b).

Methods

The Tree-3 architecture and initial weights

The Tree-3 architecture (Fig. 1c) consists of M = 16 branches. The first layer of each branch consists of K (6 or 15) filters of size (5 × 5) for each one of the three RGB channels. Each channel is convolved with its own set of K filters, resulting in 3 × K different filters. The convolutional layer filters are identical among the M branches. The first layer terminates with a max-pooling consisting of (2 × 2) non-overlapped squares. The second layer consists of a tree sampling. For the CIFAR-10 dataset, this layer connects hidden units from the first layer using non-overlapping rectangles of size (2 × 2 × 7), two consecutive rows of the 14 × 14 square, without shared-weights, but with summation over the depth of K filters, yielding an output of (16 × 3 × 7) hidden units. The third layer fully connects the (16 × 3 × 7) hidden units of the second layer with the 10 output units, representing the 10 different labels. For the MNIST dataset, the input is (28 × 28) and after the (5 × 5) convolution the output of each filter is (24 × 24) which terminates as (12 × 12) hidden units after performing the max-pooling. The tree sampling layer connects hidden units from the first layer using non-overlapping rectangles of size (4 × 4 × 3), without shared-weights, but with summation over the depth of K filters, resulting in a layer of (16 × 3) hidden units. The third layer fully connects the (16 × 3) hidden units of the second layer with the 10 output units, representing the 10 different labels. For online learning, the ReLU activation function is used, whereas Sigmoid is used for offline learning, except for K = 15, M = 80, where the ReLU activation function is used. All weights are initialized using a Gaussian distribution with zero mean and standard deviation according to He normal initialization26.

Details of the weight, input, and output sizes for each layer of the Tree-3 architecture are summarized below.

Type | Weight size | Input size | Output size |

|---|---|---|---|

Conv2d | \(3\mathrm{ x }\mathrm{K x }5\mathrm{ x }5\) groups = 3 | \(3\mathrm{ x }32\mathrm{ x }32\) | \(3\mathrm{K x }28\mathrm{ x }28\) |

MaxPool2d | \(2\mathrm{ x }2\) | \(3\mathrm{K x }28\mathrm{ x }28\) | \(3\mathrm{K x }14\mathrm{ x }14\) |

Tree Sampling | \(3\mathrm{K x M x }14\mathrm{ x }14\) | \(3\mathrm{K x }14\mathrm{ x }14\) | \(3\mathrm{M x }7\) |

FC | \(21\mathrm{M x }10\) | \(3\mathrm{M x }7\) | \(10\) |

Data preprocessing

Each input pixel of an image is divided by the maximal value for a pixel, 255, and next multiplied by 2 and subtracted by 1, such that its range is [− 1, 1]. The performance was enhanced by using simple data augmentation derived from original images, such as flipping and translation of up to two pixels for each direction. For offline learning with K = 15 and M = 80, the translation was up to four pixels for each direction.

Optimization

The cross-entropy cost function was selected for the classification task and was minimized using the stochastic gradient descent algorithm. The maximal accuracy was determined by searching over the hyper-parameters, i.e., learning rate, momentum constant and weight decay. Cross validation was confirmed using several validation databases each consisting of 10,000 random examples as in the test set. The averaged results were within the standard deviation (Std) of the reported average success rates. Nesterov momentum27 and L2 regularization method28 were used.

Number of paths: LeNet-5

The number of different routes between a weight emerging from the input image to the first hidden layer and a single output unit is calculated as follows (Fig. 1b). Consider an output-hidden unit of the first hidden layer, belonging to one of the (14 × 14) output hidden units of a filter at a given branch. This hidden unit contributes to a maximum of 25 different convolutional operations for each filter at the second convolutional layer. The output of this layer results in 16 × 25 different routes. The max-pooling of the second layer reduces the number of different routes to 16 × 25/4 = 100. Each of these routes splits to 120 in the third fully connected layer and splits again to 84 in the fourth fully connected layer. Hence, the total number of routes is 100 × 120 × 84 = 1,008,000 different routes.

Hyper-parameters for offline learning (Table 1, upper panel)

The hyper-parameters η (learning rate), μ (momentum constant27) and α (regularization L228), were optimized for offline learning with 200 epochs. For LeNet-5, using mini-batch size of 100, η = 0.1, μ = 0.9 and α = 1e−4. For Tree-3 (K = 6 or 15, M = 16 or M = 80), using mini-batch size of 100, η = 0.075, μ = 0.965 and α = 5e−5 and for 10 Tree-3 (K = 15, M = 80) architectures where each one has one output only, using mini-batch size of 100, η = 0.05, μ = 0.97 and α = 5e−5. The learning-rate scheduler for LeNet-5, η = 0.01, 0.005, 0.001 for epochs = [0, 100), [100, 150), [150, 200], respectively. For Tree-3 (K = 6, M = 16) η = 0.075, 0.05, 0.01, 0.005, 0.001, 0.0001) for epochs = [0, 50), [50, 70), [70, 100), [100, 150), [150, 175), [175,200], respectively. For Tree-3 (K = 15, M = 16) η = 0.075, 0.05, 0.01, 0.0075, 0.003 for epochs = [0, 50), [50, 70), [70, 100), [100, 150), [150,200], respectively. For Tree-3 (K = 15, M = 80) and 10 Tree-3 (K = 15, M = 80), η decays by a factor of 0.6 every 20 epochs. For Tree-3, the weight decay constant changes after epoch 50 to 1e−5. For the MNIST dataset the optimized hyper-parameters were a mini-batch size of 100, η = 0.1, μ = 0.9 and α = 5e−4. The learning rate scheduler was the same as for Tree-3 (K = 15, M = 16), on the CIFAR-10 dataset.

Hyper-parameters for online learning (Table 1, bottom panel)

The hyper-parameters mini-batch size, η (learning rate), μ (momentum constant27) and α (regularization L228), were optimized for online learning using the following three different dataset sizes (50k, 25k, 12.5k) examples. For LeNet-5, using mini-batch sizes of (100, 100, 50), η = (0.012, 0.017, 0.012), μ = (0.96, 0.96, 0.94) and α = (1e−4, 3e−3, 8e−3), respectively. For Tree-3 (K = 6, M = 16), using mini-batch sizes of (100, 100, 50), η = (0.02, 0.03, 0.02), μ = (0.965, 0.965, 0.965) and α = (5e−7, 5e−6, 5e−5), respectively.

Ten Tree-3 architectures

Each Tree-3 architecture has only one output unit representing a class. The ten architectures have a common convolution layer and are trained in parallel, where eventually the softmax function is applied on the output of the ten different architectures.

Pruned BP

The gradient of a weight emerging from an input unit connected an output via a single route (Tree-3 architecture, Fig. 1c), with non-zero ReLU activation function, is given by \(\Delta \left({W}^{Conv}\right)=Input\cdot {W}^{Tree}\cdot {W}^{FC}\cdot (Output{-}Outpu{t}_{desired})\), otherwise, its value is equal to zero.

Statistics

Statistics of the average success rates and their standard deviations for online and offline learning simulations were obtained using 20 samples. The statistics of the percentage of zero gradients and their standard deviations in Fig. 2 were obtained using 10 different samples each trained over 200 epochs.

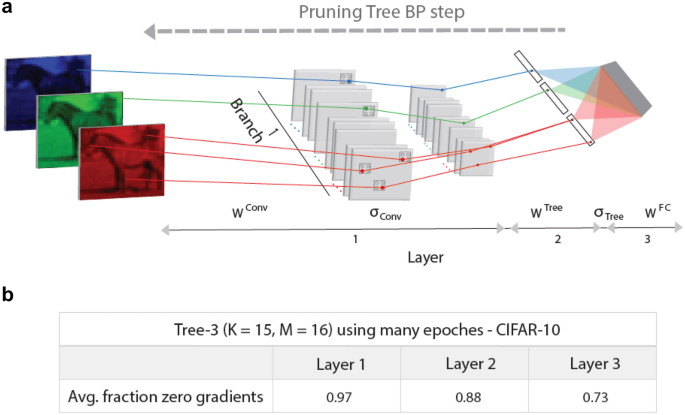

BP step on highly pruning Tree-3 architecture. (a) Scheme of a BP step in the first branch of a highly pruning Tree-3 architecture (Fig. 1d). The gray squares in the first layer represent convolutional hidden units, \({\sigma }_{Conv}\), and max-pooling hidden units that are equal zero, except several denoted by RGB dots. The non-zero tree output hidden units, \({\sigma }_{Tree}\), are denoted by black dots. The updated weights with nonzero gradients, in first layer, \({W}^{Conv}\), second layer, \({W}^{Tree}\), and third fully connected layer, \({W}^{FC}\), are denoted by RGB lines. (b) Fraction of zero gradients, averaged over the test set, and their standard deviations for the tree layers of Tree-3 architecture (K = 15, M = 16), after many epochs (“Methods” section).

Hardware and software

We used Google Colab Pro and its available GPUs. We used Pytorch for all the programming processes.

Results

The Tree-3 architecture consists of M parallel branches, each receiving the same (32 × 32) RGB inputs (Fig. 1c). Each branch is connected to a convolutional layer, consisting of K (5 × 5) filters for each of the three RGB input colors followed by (2 × 2) max-pooling, resulting in a (14 × 14) output layer for each filter in each branch. The 3 × K filters are identical among the M branches. After a non-overlapping (2 × 2 × 7) sampling across K filters belonging to the same branch, a tree sampling, each branch leads to 21 (3 × 7) outputs. Hence, the second layer consists of 21 × M outputs of the M branches that are now fully connected to the 10 output units, representing the 10 possible labels. Thus, each weight in Tree-3 is connected to an output unit via a single route only (Fig. 1d).

The asymptotic success rates after many epochs using the entire dataset, including data augmentation, for Tree-3 using the BP algorithm (“Methods” section) are presented in Table 1 (upper panel), along with the success rates for LeNet-5. These results indicate that the success rate of Tree-3 outperforms that of LeNet-5. The parallel tree branches (Fig. 1c) successfully replace the second convolutional layer in LeNet-5 as well as the several fully connected layers (Fig. 1a).

For M = 16, the success rates are improved when K is increased from 6 to 15 (Table 1, upper panel). In addition, for K = 15, success rates are improved when M is increased from 16 to 80 (Table 1, upper panel), where M = 80 is selected according to the following argument. In deep learning algorithms, the product of the size of the feature maps and their amount remains constant along most of the convolutional layers. For instance, in VGG-Net29, when the size of the feature maps shrinks from 112 × 112 to 56 × 56, the number of filters accordingly increases from 128 to 256. Similarly, in LeNet-5, when the size of the feature maps shrinks from 14 × 14 in the first convolutional layer to 5 × 5 in the second layer (Fig. 1a), the number of filters increases from 6 to 16 ((14/5) × (6/16) ≃ 1). For the Tree-3 architecture (Fig. 1c), the size of the feature maps shrinks from 14 × 14 to 7 × 1 (which can be approximated as √7 × √7); hence, the number of M branches (representing the amount of filters) has to increase to 15 × (14/√7) ≃ 80. Indeed, the success rate increases from ~ 0.765 for M = 16 to ~ 0.79 for M = 80 (Table 1, upper panel). The optimization of the success rates on the M and K grid deserves further research.

The Tree-3 architecture (Fig. 1c) deviates from a biological dendritic morphology. Although each weight, a dendritic segment, is connected to each output unit via one route, the architecture consists of 10 output units. We repeated our simulations for 10 Tree-3 architectures, where each one has only one output, and with the same cost function for the 10 output units (“Methods” section). The results indicate that for K = 15 and M = 80, the success rates are improved to ~ 0.815.

In comparison to LeNet-5, the advantage of Tree-3 is enhanced when success rates were optimized for the online learning scenario, where each input was trained only once. Optimized success rates for online learning with 50K (the entire dataset), 25K, and 12.5K training inputs indicate that Tree-3 outperformed LeNet-5 by 6–8% (Table 1, lower panel).

Preliminary results indicate that the reported success rates of Tree-3 on CIFAR-10 can be further enhanced by a few percentage using the following two approaches. The first is adding weight crosses19 to the 25 weights composed of the 5 × 5 convolutional filters (Fig. 1c). The second is to include an additional convolutional layer with a 1 × 1 filter30 after the first convolution layer. This 1 × 1 convolutional layer preserves the tree architecture because it functions as a weighted sum of previous filters.

A similar comparison between the success rates of Tree-3 and LeNet-5 (Fig. 1) was also performed for the MNIST database31. The results indicate that the success rate of Tree-3 with many epochs is comparable to or even slightly better than that of LeNet-5. The reported success rate of LeNet-5 without augmentation was 0.990517, whereas Tree-3 obtained 0.9907 (“Methods” section), which can be further enhanced using an additional 1 × 1 convolutional layer. This Tree-3 results on MNIST are significantly better than the recently achievable success rates for tree architectures without a convolution layer adjacent to the input19.

Discussion

An efficient approach for learning on tree architectures was demonstrated, where each weight is connected to an output unit via a single route only. The gradient, \(\Delta\), of a weight emerging from an input unit, \({W}^{Conv}\) (Fig. 2a), is given in the BP procedure as follows:

where \({\sigma }_{Conv}^{\prime}\) and \({\sigma }_{Tree}^{\prime}\) are the derivatives of the current activation function of the hidden units in the convolutional and tree layers along the route, respectively (Fig. 2a). The last term denotes the difference between the current output and the desired one. When ReLU activation function is used, the gradient is simplified as follows:

otherwise, the gradient is equal to zero. The simulations performed over each presented input in the test set indicate that the average fraction of zero gradients in the BP procedure was approximately 0.97, 0.88, and 0.72 for \({W}^{Conv}, {W}^{Tree}\), and \({W}^{FC}\), respectively (Fig. 2a,b). Since almost all possible gradients belong to \({W}^{Conv}\), the fraction of zero gradients in an entire BP step is ~ 0.97 (“Methods” section). The simulations also indicate that these dominated zero gradient fractions (Fig. 2b) hold since the initial learning of a few hundred of presented inputs within the first epoch. These results indicate that dynamically, the number of necessary weight updates is minimized because the tree is being significantly pruned, which also reduces the complexity and the energy consumption of the learning algorithm. Noted that the strength distribution of the absolute values of the remaining ~ 0.03 fraction of non-zero gradients is wide and dominated by small values, likewise with the distribution of the relative weight changes, |\(\Delta /W\)| (Supplementary Fig. S1). These small changes might be dynamically neglected in each BP step without affecting the success rates, such that the required fraction of gradients might be minimized further, which may also be suitable to the biological reality of a limited signal-to-noise ratio. Preliminary results indicate that similar behavior occurs for the Sigmoid activation function, where large fractions of the absolute gradient values or relative weight changes are several order of magnitudes smaller than the dominating ones (Supplementary Fig. S2). This indicates that the simplified utilization of BP for a high pruning tree might also apply to this scenario. From initial tests, it has been observed that dynamically neglecting absolute value gradients below a threshold, resulting in only 0.6% active gradients, barely affects the success rates.

In the Tree-3 architecture with K = 6 and M = 16, the maximal required number of calculated gradients in the convolutional layer, dominating the entire number of gradients, is ~ 5.7 million (5 × 5 × 3 × 6 × 28 × 28 × 16) (Fig. 1c). In the pruning tree BP, only ~ 0.6% is actually updated; hence, the remaining necessary updates are ~ 34,000, where the calculation of each one consists of ~ 2 operations only (see the abovementioned equation). In contrast, for LeNet-5, the maximal number of calculated gradients in the first convolutional layer is ~ 352,000 (5 × 5 × 3 × 6 × 28 × 28), where the max-pooling layer reduces the number to ~ 88,000. However, because each weight is connected to an output unit via an enormous number of routes (Fig. 1b and “Methods” section), the computational complexity of each gradient is large. Hence, in principle, the computational complexity of LeNet-5 is significantly greater than that of the Tree-3 architecture with similar success rates; however, its efficient realization requires a new type of hardware.

Tree architectures represent a step toward a plausible biological realization of efficient dendritic learning and its powerful computation, where DL can be realized by highly pruned dendritic trees of a single or several neurons. The usefulness of a single convolutional layer adjacent to the input layer in preserving the tree architecture is evident. It enhances the success rates compared to tree architectures without convolution19 and is consistent with the observed approximated convolutional wirings adjacent to the retinal input layer8,16. The efficient implementation of the BP technique on tree architectures, specifically for the dynamic of a highly pruning tree, requires a new type of hardware. Such hardware must be different from GPUs, which are better fitted to the current DL implementations, consisting of many convolutional and fully connected layers, based on vector and matrix multiplications.

Tree architecture learning is also expected to minimize the probability of occurrence and the effect of vanishing and exploding gradients, one of the challenges in DL32. In conventional DL architectures, such as that in Fig. 1a, each weight is updated via the summation of a large number of gradients associated with all its routes connecting the output units (Fig. 1b). Even if the gradient of each route is small, the summation can be large and lead to weight explosion, a reality which is not considered in the tree architectures. This possible advantage of shallow tree architectures in minimizing the effect of vanishing or exploding gradients, calls for their quantitative examination in further research.

The introduction of parallel branches instead of the second convolutional layer in LeNet-5 improved the success rates while preserving the tree structure. Each one of the M branches has the same convolutional filters emerging from the input layer. Nevertheless, as their weights in the second and the third layers differ, they have different effects on the output units (Fig. 1c). The first convolutional layer reveals feature maps in the inputs, similar to the function of the first convolutional layer in LeNet-5. Higher-order correlations among these feature maps are expected to be expressed via the summation of the depth of K different filters belonging to each branch, similar to the second convolutional layer in LeNet-513,14,15. Preliminary simulations indicate that extending the tree architecture to the case where each branch can have different convolutional filters results in similar success rates. Hence, equalizing the filters among the branches after each BP step is unnecessary. The possibility that large-scale and deeper tree architectures, with an extended number of branches and filters, can compete with state-of-the-art CIFAR-10 success rates deserves further research. The first step was exemplified regarding to LeNet-5 and demonstrated the advantage of the dendritic learning concept and its powerful computation.

Data availability

Source data are provided with this paper. All data supporting the plots within this paper along with other findings of this study are available from the corresponding author upon reasonable request.

Code availability

The simulation code is provided with this paper, parallel to its publication, in GitHub.

References

Hebb, D. O. The Organization of Behavior: A Neuropsychological Theory (Psychology Press, 2005).

Payeur, A., Guerguiev, J., Zenke, F., Richards, B. A. & Naud, R. Burst-dependent synaptic plasticity can coordinate learning in hierarchical circuits. Nat. Neurosci. 24, 1010–1019 (2021).

Shai, A. & Larkum, M. E. Deep learning: Branching into brains. Elife 6, e33066 (2017).

He, K., Zhang, X., Ren, S. & Sun, J. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778.

Wightman, R., Touvron, H. & Jégou, H. Resnet strikes back: An improved training procedure in timm. arXiv preprint https://arxiv.org/abs/2110.00476 (2021).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4700–4708.

Han, D., Kim, J. & Kim, J. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 5927–5935.

Fukushima, K. Cognitron: A self-organizing multilayered neural network. Biol. Cybern. 20, 121–136 (1975).

LeCun, Y. & Bengio, Y. Convolutional networks for images, speech, and time series. Handb. Brain Theory Neural Netw. 3361, 1995 (1995).

Serre, T. et al. A theory of Object Recognition: Computations and Circuits in the Feedforward Path of the Ventral Stream in Primate Visual Cortex (Massachusetts Inst of Tech Cambridge MA Center for Biological and Computational Learning, 2005).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Howard, A. G. et al. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint https://arxiv.org/abs/1704.04861 (2017).

Kaiser, L., Gomez, A. N. & Chollet, F. Depthwise separable convolutions for neural machine translation. arXiv preprint https://arxiv.org/abs/1706.03059 (2017).

Chollet, F. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1251–1258.

Hubel, D. H. & Wiesel, T. N. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 160, 106 (1962).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Richards, B. A. et al. A deep learning framework for neuroscience. Nat. Neurosci. 22, 1761–1770 (2019).

Hodassman, S., Vardi, R., Tugendhaft, Y., Goldental, A. & Kanter, I. Efficient dendritic learning as an alternative to synaptic plasticity hypothesis. Sci. Rep. 12, 1–12 (2022).

Sardi, S. et al. Adaptive nodes enrich nonlinear cooperative learning beyond traditional adaptation by links. Sci. Rep. 8, 1–10 (2018).

Waters, J., Larkum, M., Sakmann, B. & Helmchen, F. Supralinear Ca2+ influx into dendritic tufts of layer 2/3 neocortical pyramidal neurons in vitro and in vivo. J. Neurosci. 23, 8558–8567 (2003).

Gidon, A. et al. Dendritic action potentials and computation in human layer 2/3 cortical neurons. Science 367, 83–87 (2020).

Poirazi, P. & Papoutsi, A. Illuminating dendritic function with computational models. Nat. Rev. Neurosci. 21, 303–321 (2020).

Sardi, S. et al. Brain experiments imply adaptation mechanisms which outperform common AI learning algorithms. Sci. Rep. 10, 1–10 (2020).

Krizhevsky, A. & Hinton, G. Learning Multiple Layers of Features from Tiny Images (2009).

Glorot, X. & Bengio, Y. in Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, 249–256 (JMLR Workshop and Conference Proceedings).

Botev, A., Lever, G. & Barber, D. in 2017 International Joint Conference on Neural Networks (IJCNN). 1899–1903 (IEEE).

Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 61, 85–117 (2015).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint https://arxiv.org/abs/1409.1556 (2014).

Lin, M., Chen, Q. & Yan, S. Network in network. arXiv preprint https://arxiv.org/abs/1312.4400 (2013).

LeCun, Y. et al. Learning algorithms for classification: A comparison on handwritten digit recognition. Neural Netw. Stat. Mech. Perspect. 261, 2 (1995).

Bengio, Y., Simard, P. & Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5, 157–166 (1994).

Acknowledgements

I.K. acknowledges partial financial support of the Israel Ministry Science and Technology, via the grant, Brain-inspired ultra-fast and ultra-sharp machines for AI-assisted healthcare, via collaboration between Italy and Israel. S.H. acknowledges the support of the Israel Ministry Science and Technology.

Author information

Authors and Affiliations

Contributions

Y.M. conducted all simulations together with I.B.N. and Y.T., where S.H. contributed to the simulations on MNIST and preparation of the figures. I.K. initiated the theoretical study of the examined tree architectures and supervised all aspects of the work. All authors commented on the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Meir, Y., Ben-Noam, I., Tzach, Y. et al. Learning on tree architectures outperforms a convolutional feedforward network. Sci Rep 13, 962 (2023). https://doi.org/10.1038/s41598-023-27986-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-27986-6

This article is cited by

-

A polynomial proxy model approach to verifiable decentralized federated learning

Scientific Reports (2024)

-

Enhancing the accuracies by performing pooling decisions adjacent to the output layer

Scientific Reports (2023)