Abstract

The reservoir computing (RC) is increasingly used to learn the synchronization behavior of chaotic systems as well as the dynamical behavior of complex systems, but it is scarcely applied in studying synchronization of non-smooth chaotic systems likely due to its complexity leading to the unimpressive effect. Here proposes a simulated annealing-based differential evolution (SADE) algorithm for the optimal parameter selection in the reservoir, and constructs an improved RC model for synchronization, which can work well not only for non-smooth chaotic systems but for smooth ones. Extensive simulations show that the trained RC model with optimal parameters has far longer prediction time than those with empirical and random parameters. More importantly, the well-trained RC system can be well synchronized to its original chaotic system as well as its replicate RC system via one shared signal, whereas the traditional RC system with empirical or random parameters fails for some chaotic systems, particularly for some non-smooth chaotic systems.

Similar content being viewed by others

Introduction

Chaotic systems have been widely used to many fields1,2,3,4, such as secure communication, image encryption, electronic circuits and so on5,6, due to the excellent dynamical characteristic. Since the 1990s, investigations on theory and its applications of chaotic systems have being increasingly paid attention to by lots of researchers, including on the prediction of chaotic time series as well as on synchronization between chaotic systems, and there emerge a large number of publications7,8,9,10,11,12.

In recent years, the great success of machine learning in artificial intelligence stirs up new research on the prediction of chaotic systems and their dynamical behaviors. For example, some tranditional machine learning methods, such as Recurrent Neural Network (RNN)13, Long Short-Term Memory (LSTM)14, Temporal Convolutional Network (TCN)15 and Manifold Learning16, are applied to predict the chaotic time series, respectively. Also, some hybrid machine learning methods17,18 are developed to deal with the prediction of chaotic time series, where the hybrid machine learning model proposed in the article17 is based on the switching mechanism set by Markov chain, and the other hybrid scheme18 proposed for a long-time and high-accuracy prediction is based on knowledge model and machine learning technique.

Among machine learning methods, reservoir computing (RC) method19,20 also called Echo State Network (ESN) and Liquid State Machine (LSM) by Jaeger21 and Maass22 respectively, greatly attracts the attention of researchers due to its superior learning and prediction ability, and has been efficiently applied to the prediction of phase coherence23, speech recognition24, extreme event25, and other dynamical behaviors. Furtherly, some improved methods26,27,28,29,30 of RC are also proposed for enhancing the ability in forecasting. For instance, Haluszczynski et al.27 improve the prediction performance of RC via reducing network size of the reservoir. Gao et al.29 modify RC method by preprocessing the input data via empirical wavelet transforms. Gauthier et al.28 develop a novel RC with less training meta-parameters (called the next generation reservoir computing) by using the nonlinear vector autoregression on the basis of the equivalence between the reservoir computing and the nonlinear vector autoregression. But, it is still difficult on the whole to develop a general RC method for data from nonlinear systems, particularly from chaotic systems.

Very recently, researchers devote themselves to the prediction of complex dynamical behaviors (such as, synchronization and chimera state) of chaotic systems by using RC method, and have published a few publications31,32,33,34,35,36. At the aspect of chimera state (a coexistence of coherent and non-coherent phases in coupled dynamical systems), Ganaie et al.31 propose a distinctive approach using machine learning techniques to characterize different dynamical phases and identify chimera states from a given spatial profile. Kushwahaet et al.32 use different supervised machine learning algorithms to make model-free predictions of factors characterizing the strength of chimeras and isolated states.

At the aspect of synchronization, Ibáñez-Soria et al.36 make use of RC to successfully detect generalized synchronization between two coupled Rössler systems. Guo et al.33 investigate the transfer learning of chaotic systems via RC method from the perspective of synchronization-based state inference. Weng et al.34 adopt RC model to reconstruct chaotic attractors and achieve synchronization and cascade synchronization between well-trained chaotic systems by sharing one common signal. Hu et al.35 train chaotic attractors via RC method, and realize synchronization between linearly coupled reservoir computers by control the coupling strength.

However, all above mentioned works aim at smooth chaotic systems, few investigations involve in the prediction and learning of non-smooth chaotic systems whose dynamical behaviors may be more complicated than the smooth ones since the non-differential or discontinuous vector field in non-smooth systems easily lead to the singularity. No coincidentally, our extensive simulations show that the traditional RC method has poor prediction effect for some non-smooth chaotic time series, especially for discrete-time chaotic systems, please see Table 1 and Fig. 1. On the other hand, non-smooth chaotic systems have many applications in engineering fields, and they are frequently used to model circuit systems, such as Chua circuit system. Motivated by the above aspects, the paper proposes a hybrid RC method with optimal parameter selection algorithm for improving the prediction ability for non-smooth as well as smooth chaotic systems, and based on the improved RC model we carry out synchronization between well-trained RC systems via the variable substitution control.

Results

In this section, based on the improved Reservoir Computing (RC) machine learning model, we will use chaotic data to check that the proposed RC model with SADE algorithm can work well for the non-smooth chaotic systems as well as the smooth chaotic systems, and to verify the availability of PC synchronization between the constructed and trained RC systems.To begin with, the fourth-order Runge-Kutta method is used to calculate numerical solutions of smooth chaotic systems (such as, Lorenz and Rössler systems shown in37,38) and piecewise smooth chaotic systems (such as Chua and PLUC systems), the observation time step \(\Delta t=0.02\), and the numerical solution is transformed into the data at the range of \([-1,1]\) for convenience. For all the used chaotic systems, the first 3000 observations are used to train the RC system after discarding the leading appropriate amount of observations to eliminate transient states, and the first 100 observations of the trained RC system are discarded for the steady running.

Furthermore, the basic parameters of RC model are set as follows. In Eq. 9, \(\sigma =1\) for which the weight \(W_{in}\) is randomly selected from the uniform distribution of \((-\sigma ,\sigma )\), the leakage rate of the reservoir is \(\alpha =0.2\), and \(\lambda =1\times 10^{-8}\) in Eq. 10.

Main parameters selection and model-free prediction

This part will employ SADE algorithm mentioned in the previous section to select the appropriate parameters (N, p, r) for sparse Erdös-Rényi (ER) random network of RC systems, make model-free prediction of the trained RC system with the selected parameters, and compare prediction effect with those with empirical parameters as well as randomly-selected one.

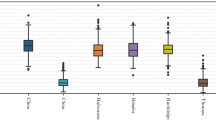

Comparison of model-free prediction effect between optimal parameters (red dashed line) based on SADE algorithm, empirical parameters (purple dash-dot line) and random parameters (cyan dotted line) for Hénon (a), Ikeda (b), Chua (c), PLUC (d), Lorenz (e) and Rössler (f) chaotic systems. Here the cyan, purple, red solid vertical lines represent the prediction time (or step) span respectively for random, empirical and optimal parameters at the same square mean error listed in Table 1.

Based on the presented SADE algorithm, the selected parameters in ER random networks and the prediction duration of RC for different chaotic systems are listed in Table 1, where each in 50 experiments has the same prediction duration for the improved RC at the given precision threshold. It can be seen that for PLUC chaotic system, the corresponding trained RC system can well predict up to 20 seconds (about 31 units of Lyapunov time) at the square mean prediction error of least \(10^{-3}\) order. But for smooth chaotic systems, Lorenz and Rössler systems, surprisingly, the trained RC for Rössler system can predict up to 110 seconds (about 9 units of Lyapunov time) at the prediction error of least \(10^{-8}\) order. Similarly, the trained RC also performed well for Lorenz system, and the prediction time is up to 20 units of Lyapunov time at the error of \(10^{-4}\) order, more 7 and 11 units than the empirical and randomly-selected parameter approaches, respectively. For the discrete Hénon and Ikeda chaotic systems, the trained RC can predict up to 60 steps (about 25 and 8 units of Lyapunov time, respectively) at the prediction error of least \(10^{-3}\) order and \(10^{-5}\) order, respectively.

More importantly, for all the considered chaotic systems including discrete, piecewise smooth, and smooth chaotic systems, the prediction time duration of RC with optimally-selected parameters is far longer at the same of prediction precision threshold than those with empirical parameters (\(N=500\), \(p=0.25\) and \(r=0.95\)) as well as those with randomly-selected parameters, see the last two columns in Table 1, and the corresponding state variable evolution shown in Fig. 1.

Specifically, the Lorenz-trained RC system with optimal parameters can predict up to 15-seconds time span, about 20 units of Lyapunov time, at the error of \(5.67\times 10^{-4}\), but that with empirical parameters (random parameters) just can predict up to about 10 (6.6)-seconds time span, about 13 (9) units of Lyapunov time, at the same error. Surprisingly, for another smooth chaotic system, Rössler system, the trained RC system with optimal parameters can reach up to 110-seconds prediction time span, about 9 units of Lyapunov time, with high accuracy (\(8.89\times 10^{-8}\) order error), but those with empirical parameters and with randomly-selected parameters has much less prediction time at the same of error, 22.96 seconds and 31.88 seconds (about 2 and 3 units of Lyapunov time), respectively. Please see Fig. 1e,f for the state evolution.

Particularly for non-smooth chaotic systems, the trained RC systems with optimal parameters have the overwhelming prediction effect. For example, at the same error, the Chua-trained RC system with optimal parameters has 40-seconds prediction time, far bigger than those with empirical parameters (18.78-seconds prediction time) and with randomly-selected parameters (11.66-seconds prediction time), as shown in Fig. 1c. For PLUC system, there are huge difference of prediction effect between using optimal parameters and using empirical (or randomly-selected) parameters, the former reaches up to 20-seconds prediction time at the error listed in Table 1, and the latter just predicts less than 1-second time span at the same error, as shown in Fig. 1d. For discrete Hénon and Ikeda chaotic systems, their trained RC systems with optimal parameters can predict up to 60 steps, three time as much as those with empirical (or randomly-selected) parameters where both have the same prediction steps, exactly as cyan and purple vertical lines overlap each other in Fig. 1a,b.

In brief, compared with the traditional RC systems (using empirical or randomly-selected parameters), the improved RC systems based on SADE parameter selection algorithm can make a far better prediction not only for non-smooth chaotic systems, but for smooth chaotic systems (such as Lorenz system and Rössler system). In other words, the improved RC system can better learn the chaotic systems including smooth and non-smooth ones. In next section, the advantage will be shown again for PC synchronization behaviors between chaotic system and its trained RC system as well as between two trained RC systems.

State variable evolution plots of synchronization between two RC systems trained from (a) Chua system, (b) PLUC system, (c) Hénon system, and (d) Ikeda system, respectively. Here the drive variable is the same as in Fig. 2.

PC synchronization between chaotic systems and trained RC systems

Next, we use the proposed RC model based on SADE algorithm to train the RC systems for all the chaotic systems under consideration, and perform PC synchronization between the chaotic system and the trained RC system, between two trained RC systems, and between a chaotic system and two trained RC systems through the corresponding drive variables shown in Table 2.

Consequently, synchronization behavior of four non-smooth chaotic systems are shown in Figs. 2, 3, and 4 respectively for the cases between the chaotic system and its trained RC system, between two trained systems, and between Ikeda system and its two trained RC systems where the x component of Ikeda system is used to drive the first RC system, and the y component of the first RC system is used to drive the second RC system. The results demonstrate the good realization of PC synchronization, implying the availability of the improved RC model with SADE algorithm for non-smooth chaotic systems, of course as well as for smooth chaotic systems (synchronization evolution plots are not provided here).

However, using empirical parameters and randomly-selected parameters, not all four non-smooth chaotic systems can be synchronized to their corresponding trained RC systems. As shown in Figs. 5 and 6, for PLUC system and Ikeda system, the synchronization error does not tend to zero as time goes, implying the failure of synchronization between the chaotic system and its trained RC system, as well as between two trained RC systems. Further, for Chua system, synchronization also fails between two trained RC systems with randomly-selected parameters (see Fig. 6a). These show the advantage of the proposed RC model with SADE algorithm and the importance of main parameters of ER network in RC model.

Interestingly, whatever using the empirical parameter or randomly-selected parameter, let alone the optimal parameter, the RC system trained from Hénon system works well, and it is able to be well synchronized with the original Hénon system as well as with its replicate RC system. A possible reason is the fact that the error \(\Delta {\varvec{w}}\equiv 0\) in (8) as x is the drive variable.

Synchronization error between the chaotic system and its trained RC systems with different types of parameters where RC models are trained from (a) Chua system, (b) PLUC system, (c) Hénon system, and (d) Ikeda system, respectively. Here the drive variable is the same as in Fig. 2.

Synchronization error between two trained RC systems with different types of parameters where RC models are trained from (a) Chua system, (b) PLUC system, (c) Hénon system, and (d) Ikeda system, respectively. Here the drive variable is the same as in Fig. 2.

Discussion

In summary, for better learning synchronization behaviors of non-smooth chaotic systems, the paper has proposed an improved RC model based on SADE algorithm, which is capable of training up a set of optimal parameters in the reservoir, and thus of making a long-term prediction for chaotic systems. Also, it can work well for synchronization between the chaotic systems and well-trained RC systems. Significantly, the experiments have shown that the proposed method is effective not only for non-smooth chaotic systems, but for smooth chaotic systems. In other words, compared with the traditional RC, our RC based on SADE has a wider range of applications. Extensive simulations have shown that the proposed RC model has significant longer prediction time than the traditional RC model with empirical parameters or random parameters, and the trained RC systems are well synchronized to their original chaotic systems as well as the corresponding replicate RC systems whereas the traditional RC system can not yet work well.

Just because of the perfect prediction ability for chaotic systems, the well-trained RC system is more closely approximated to the original chaotic system, and thus it can successfully demonstrate synchronization behavior occurring in the drive-response chaotic system described in the second section. Theoretically, if the RC is sufficiently approximated to the original system, the variable driving the original drive-response system into synchronization is able to drive the trained RC system and its replica (or the original system) into synchronization. Conversely, the variable not driving the original one into synchronization should not drive two trained RC systems into synchronization.

Although the simple grid search approach may yield a as at least good result as the SADE approach in this work does, it spends far more time to find the optimal parameters than the SADE method. On the other hand, compared with the empirical or randomly-selected parameter approaches, the proposed method has significant advantage and performs well even for non-smooth chaotic systems, as shown in the above section. As a trade-off method, the proposed RC model with SADE algorithm is a good choice for training chaotic data and achieving PC synchronization. It may be applicable to many other chaotic systems including some complicated piecewise smooth chaotic systems only if avoiding the overfitting problem. Besides PC synchronization in the drive-response system where the coupling is unidirectional, the proposed RC model may be used to realize synchronization in the bi-directionally coupled non-smooth chaotic systems even the coupled networked chaotic systems.

Methods

Non-smooth chaotic systems

Here briefly reviews the used non-smooth chaotic systems, including piecewise smooth chaotic systems (Chua system and a piecewise linear unified chaotic system) and discrete chaotic systems (Hénon map and Ikeda map).

Chua System39:

where \(f(x)=\frac{2}{7}x-\frac{3}{14}(|x+1|-|x-1|)\). The phase diagram is shown in Fig. 7a.

Piecewise Linear Unified Chaotic (PLUC) System40:

where sgn(y) is the symbolic function, namely \(sgn(y)=1\) when \(y>0\), \(sgn(y)=0\) when \(y=0\) and \(sgn(y)=-1\) otherwise. The phase diagram is shown in Fig. 7.

Hénon Map41:

Ikeda Map42:

The phase diagrams of Hénon Map and Ikeda Map are shown in Fig. 8.

PC synchronization principles

In early years, Pecora and Carroll presented a variable substitution method43,44 for achieving synchronization between chaotic systems. The basic idea is to split the original system into two subsystems, copy one of the subsystems, and then replace the corresponding variable of the replicated subsystem with the variable (called the drive variable) of the other subsystem, and eventually realize synchronization between the replicated subsystem and the original system. The diagram is clearly shown in Fig. 9, and the mathematical model is described as follows.

For an n-dimensional chaotic system,

Divide arbitrarily it into two subsystems, for instance,

and

where \({\varvec{v}}=(u_{1},u_{2},\ldots ,u_{m})\) and \({\varvec{w}}=(u_{m+1},u_{m+2},\ldots ,u_{n})\).

Now create a new system \(\frac{d{\varvec{w}}'}{dt}=h({\varvec{v}}',{\varvec{w}}')\) identical to the subsystem of \(\frac{d{\varvec{w}}}{dt}=h({\varvec{v}},{\varvec{w}})\), substitute the variable \(\varvec{v}'\) in the function \(h({\varvec{v}}',{\varvec{w}}')\) with the corresponding variable \({\varvec{v}}\) (called the drive variable), and get the called response system \(\frac{d{\varvec{w}}'}{dt}=h({\varvec{v}},{\varvec{w}}')\). Together with the drive system (5), it follows that

The subsystems of \({\varvec{w}}\) and \({\varvec{w}}'\) is synchronized each other, called PC synchronization here, if the error \(\Delta {\varvec{w}}={\varvec{w}}-{\varvec{w}}'\rightarrow 0\) as \(t\rightarrow \infty\), which can be theoretically checked through the negative conditional Lyapunov exponent of the drive-response system (8). Through our checking, the system can achieve synchronization when the appropriate variables listed in Table 2 are selected as the drive variable.

In recent years, RC model is frequently applied and studied on account of its excellent predictive effect for nonlinear time series. As many researchers know, the reservoir consisting of ER random network structures plays a key role in this machine learning model, which can well characterize the connected neurons in the recurrent neural network, and is with strong dynamical characteristics.

In general, the parameters (network size N, connected probability p and network spectral radius r) of ER random networks in the reservoir are selected by experience, but the parameters have significant impact on the prediction effect, and therefore the inappropriate selection may lead to the poor prediction. In this section, we introduce a simulated annealing-based differential evolution (SADE) method for parameter selection, and present a reservoir computing system with SADE algorithm. Furtherly, we introduce how to realize PC synchronization via the presented reservoir computing model.

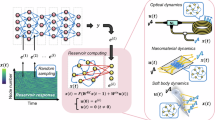

The reservoir computing system is composed of the input layer, the reservoir part, and the output layer. Denote \({\varvec{u}}(t)=(u_1(t),u_2(t),\ldots ,u_n(t))^T\) the input vector in the input layer, \({\varvec{r}}(t)=(r_1(t),r_2(t),\ldots ,r_m(t))^T\) the state vector in the reservoir, and \({\varvec{y}}(t)=(y_1(t),y_2(t),\ldots ,y_l(t))^T\) the output vector in the output layer. Then, the run of reservoir computers can be divided into three parts: Initialization, Training and Prediction, which are described as follows:

Reservoir computing systems with SADE parameter selection algorithms

-

Initialization: Generate the reservoir’s adjacency matrix A and the input weight matrix \(W_{in}\). In general, A is a sparse ER random network matrix with the appropriate network size N, connected probability p and the spectral radius r, and its non-zero entries are randomly chosen from the uniform distribution of \([-1,1]\). The elements of matrix \(W_{in}\) are randomly chosen from the uniform distribution of (-\(\sigma , \sigma\)).

-

Training: Train the output weight matrix \(W_{out}\) by using the training data set. Here we update the reservoir network state vector \({\varvec{r}}(t)\) according to the following iterative law:

$$\begin{aligned} {\varvec{r}}(t+1)=(1-\alpha ){\varvec{r}}(t)+\alpha \tanh \left[ A{\varvec{r}}(t)+W_{in}\left( \begin{array}{c} b_{in}\\ {\varvec{u}}(t) \end{array}\right) \;\right] , \end{aligned}$$(9)where \(\alpha\) is the “leakage” rate limited in the interval of [0, 1], \(\tanh\) is the hyperbolic tangent function, \(b_{in}=1\) is the offset, and A and \(W_{in}\) are the mentioned above adjacency matrix and input weights, respectively. To eliminate the transient state of the reservoir computer with the initial \({\varvec{r}}(0)\), the first \(\tau\) time-steps states are removed out. Next, the state vector and the training data denoted \(\{{\varvec{s}}(t)|t=\tau , \tau +1, \ldots , \tau +T\}\) are used to train the output \(W_{out}\). Here the training data is taken as the input data \(\{{\varvec{u}}(t)|t=\tau , \tau +1, \ldots , \tau +T\}\) where \({\varvec{u}}(t)\) is used to predict next forward \({\varvec{u}}(t+1)\). According to the reference20, the output weights \(W_{out}\) can be calculated by

$$\begin{aligned} W_{out}=YX^T(XX^T+\lambda I)^{-1}. \end{aligned}$$(10)Where I is an identity matrix and \(\lambda\) is a ridge regression parameter for avoiding overfitting. X is the matrix whose t-th column is \((b_{out},{\varvec{s}}(t),{\varvec{r}}(t))^T\), and Y is the one whose t-th column is \({\varvec{s}}(t+1)\).

-

Prediction: With the trained output weights, the reservoir computing system based on Eqs. 9, 10, 11 can run autonomously, and use the current output vector \({\varvec{y}}(t)\) of (11) to predict \({\varvec{u}}(t+1)\) at the next moment, and so on.

$$\begin{aligned} {\varvec{y}}(t)=W_{out}\left( \begin{array}{c} b_{out}\\ {\varvec{u}}(t)\\ {\varvec{r}}(t) \end{array}\right) . \end{aligned}$$(11)

As mentioned in the beginning of this section, the number of nodes N, the connected probability p and the spectral radius r in ER random network of the reservoir, have a great impact on the prediction effect particularly for some chaotic systems. Our experiments have shown that the parameters selected by experience lead to poor prediction for some discrete chaotic systems and non-smooth chaotic systems.

The schematic diagram for SADE algorithm, where each cycle includes evolution and annealing process. Each circle represents one individual with genes of N, p, and r, and different shades of color mean different groups. After some cycles of differential evolution and simulated annealing, the parameter corresponding to the best individual is chosen to generate the reservoir network of RC.

For better prediction, we will introduce the simulated annealing-based differential evolution algorithm (SADE) to select the optimal parameters via which we train the reservoir computing system and then realize synchronization between trained reservoir computing systems.

The SADE algorithm is a combination of the differential evolution and the simulated annealing algorithms, where the former is a type of evolution algorithm with three steps of mutation, crossover and selection, and it aims to retain the better individual and eliminates the inferior individual until the fitness is met45. It can improve the population of feasible solutions and find the global optimal solution more quickly. The latter is an optimization algorithm imitating the physical process of solid annealing, and it can avoid being trapped into local optimal solution via the cooling procedure and the Metropolis rule. So, simulated annealing combined with different evolution algorithm may greatly improve the effect of optimizing parameters, and is employed to obtain the optimal parameters (N, p, r) for the adjacency matrix A in the reservoir computing system. The schematic diagram is illustrated in Fig. 10.

Based on the SADE algorithm, the parameter selection procedure includes four main phases. First, the initialization phase of population individuals, i.e., generating a three-dimensional parameter space (N, p, r). Second, the differential evolution phase for better solutions. Specifically, update the parameter setting for reservoir computing system, one can train the updated RC system through the chaotic time series, and then make the prediction via the trained RC system. If the fitness (here the mean square error is used) meets the requirement, the set of parameters is believed as the optimal parameters. Otherwise, mutation, crossover and selection operations are done to generate the new parameter individual, and then turns to the simulated annealing phase for further optimizing the parameter. Repeat the above operation until the fitness meets the requirement. Finally, it is the optimal parameters outputting phase for system prediction. Please see the Appendix for the detailed steps and procedure shown in shown in Fig. S1.

In general, compared with the differential evolution algorithm, the simulated annealing-based differential evolution algorithm has stronger global search ability, and provides the optimal parameters for better prediction. As shown in our extensive simulations (not given here), the prediction accuracy via SADE algorithm is least an order of magnitude higher than that via differential evolution algorithm whether for smooth chaotic systems or for non-smooth chaotic systems. Especially for non-smooth chaotic systems, the prediction accuracy can be improved up to three order of magnitude.

Reservoir computing models for PC synchronization

Suppose \({\varvec{u}}(t+1)=F({\varvec{u}}(t))\) is the chaotic system to be learned, and \({\varvec{u}}'(t+1)={\hat{F}}({\varvec{u}}'(t))\) is the trained RC system after learning the chaotic system.

Take a three-dimensional chaotic system as an example, Fig. 11a illustrates the diagram of PC synchronization between the chaotic system and the trained RC system via the drive signal of x component, and the detailed implementation process is described as follows:

-

Initiation. Generating the chaotic time series from \({\varvec{u}}(t+1)=F({\varvec{u}}(t))\) with the initial value \({\varvec{u}}_0=(x_0,y_0,z_0)^T\), and \({\varvec{u}}'(t)={\hat{F}}({\varvec{u}}'(t))\) with the initial value \({\varvec{u}}'_0=(x_0,y'_0,z'_0)^T\) where \(y'_0\) and \(z'_0\) different from \(y_0\) and \(z_0\) are randomly chosen.

-

Substitution and update. Replacing \(x'_t\) of the previous output \((x'_t,y'_t,z'_t)^T\) with \(x_t\) of the chaotic data, getting the vector \({\varvec{u}}'(t+1)=(x_t,y'_t,z'_t)^T\), and then it is used as the next input vector to generate new iteration.

-

Checking. If the errors \(\Delta y(t)=|y'_t-y_t|\) and \(\Delta z(t)=|z'_t-z_t|\) converge to zero as time, then the response system (the trained RC system) is synchronized with the drive system (the chaotic system) via the x component.

Similarly, we can create another replica of the trained RC system, and to realize the synchronization between two trained RC systems. The diagram is shown in Fig. 11b.

Suppose

is the trained RC system where the input \({\varvec{u}}'=(x'_t,y'_t,z'_t)^T\), and create the replicate RC system denoted as

where the input \({\varvec{u}}''=(x'_t,y''_t,z''_t)^T\) for which the initial value \(y''_0\) and \(z''_0\) are different from \(y'_0\) and \(z'_0\), and \(x'_t\) is the drive variable come from the system (12).

According to the principle of PC synchronization, at each iteration, \(x''_{t+1}\) compenont of the output \({\varvec{u}}''(t+1)=(x''_{t+1},y''_{t+1},z''_{t+1})^T\) in (13) is replaced with \(x'_{t+1}\) of the output \({\varvec{u}}'(t+1)=(x'_{t+1},y'_{t+1},z'_{t+1})^T\) in (12), and the vector \((x'_{t+1},y''_{t+1},z''_{t+1})^T\) is used as the next input in (13) to perform the new iteration. Finally, using the error to check whether the synchronization between two RC systems is achieved or not.

For the cascade synchronization between a chaotic system and two RC systems shown in Fig. 11c, take x and y components as the drive variables, and suppose the chaotic system and two trained RC systems are described as

where \({\varvec{u}}(t)=(x_t,y_t,z_t)^T\), \({\varvec{u}}'(t)=(x_t,y'_t,z'_t)^T\) and \({\varvec{u}}''(t)=(x''_t,y'_t,z''_t)^T\), and the initial values \({\varvec{u}}_0=(x_0,y_0,z_0)^T\), \({\varvec{u}}'_0=(x_0,y'_0,z'_0)^T\) and \({\varvec{u}}''_0=(x''_0,y'_0,z''_0)^T\). Here, the chaotic system (14a) is called the drive system, RC systems (14b) and (14c) are called the first and second response system, respectively.

At each new iteration, the vector \((x_{t+1},y'_{t+1},z'_{t+1})^T\) obtained by replacing \(x'_{t+1}\) of the output \({\varvec{u}}'(t+1)=(x'_{t+1},y'_{t+1},z'_{t+1})^T\) in (14b) with \(x_{t+1}\) of the output \({\varvec{u}}(t+1)=(x_{t+1},y_{t+1},z_{t+1})^T\) in (14a), is used as the input vector in (14b) for next iteration. Following up, the vector \((x''_{t+1},y'_{t+1},z''_{t+1})^T\) obtained by replacing \(y''_{t+1}\) of the output \({\varvec{u}}''(t+1)=(x''_{t+1},y''_{t+1},z''_{t+1})^T\) in (14c) with \(y'_{t+1}\) of the output \({\varvec{u}}'(t+1)=(x'_{t+1},y'_{t+1},z'_{t+1})^T\) in (14b), is used as the input vector in (14c) for next iteration. Finally, one can check the error between \({\varvec{u}}\), \({\varvec{u}}'\) and \({\varvec{u}}''\) for the cascade synchronization between the chaotic system and two response RC systems.

Data availability

All data generated and all programs used during the current study are available from the corresponding author on reasonable request.

References

Shoreh, A.-H., Kuznetsov, N. & Mokaev, T. New adaptive synchronization algorithm for a general class of complex hyperchaotic systems with unknown parameters and its application to secure communication. Phys. A 586, 126466 (2022).

Bertozzi, A. L., Franco, E., Mohler, G., Short, M. B. & Sledge, D. The challenges of modeling and forecasting the spread of covid-19. Proc. Natl. Acad. Sci. USA 117, 16732–16738 (2020).

Wang, B. et al. Intelligent parameter identification and prediction of variable time fractional derivative and application in a symmetric chaotic financial system. Chaos Soliton. Fract. 154, 111590 (2022).

Lin, H. & Liu, S.-Q. Circuit dynamics in lobster stomatogastric ganglion based on winnerless competition network. Phys. A 560, 125107 (2020).

Keuninckx, L. et al. Encryption key distribution via chaos synchronization. Sci. Rep. 7, 43428 (2017).

Ma, C. et al. Dynamical analysis of a new chaotic system: Asymmetric multistability, offset boosting control and circuit realization. Nonlinear Dyn. 103, 2867–2880 (2021).

Kantz, H. & Schreiber, T. Nonlinear Time Series Analysis (Cambridge University Press, 2003), 2 edn.

Wang, S. Dynamics, synchronization control of a class of discrete quantum game chaotic map. Physica A600, 127596 (2022).

Wu, C. W. Generalized Hamiltonian dynamics and chaos in evolutionary games on networks. Phys. A 597, 127281 (2022).

Lu, J., Liu, H. & Chen, J. Synchronization Complex Dynamical Networks (Higher Education Press (In Chinese), 2016).

Tang, L., Wu, X., Lü, J., Lu, J.-A. & D’Souza, R. M. Master stability functions for complete, intralayer, and interlayer synchronization in multiplex networks of coupled rössler oscillators. Phys. Rev. E 99, 012304 (2019).

Lahav, N. et al. Topological synchronization of chaotic systems. Sci. Rep. 12, 2508 (2022).

Han, M., Xi, J., Xu, S. & Yin, F.-L. Prediction of chaotic time series based on the recurrent predictor neural network. IEEE Trans. Signal Process. 52, 3409–3416 (2004).

Cheng, W. et al. High-efficiency chaotic time series prediction based on time convolution neural network. Chaos Soliton. Fract. 152, 111304 (2021).

Kavuran, G. When machine learning meets fractional-order chaotic signals: Detecting dynamical variations. Chaos Soliton. Fract. 157, 111908 (2022).

Han, M., Feng, S., Chen, C. P., Xu, M. & Qiu, T. Structured manifold broad learning system: A manifold perspective for large-scale chaotic time series analysis and prediction. IEEE Trans. Knowl. Data Eng. 31, 1809–1821 (2018).

Ouyang, T., Huang, H., He, Y. & Tang, Z. Chaotic wind power time series prediction via switching data-driven modes. Renew. Energy 145, 270–281 (2020).

Pathak, J. et al. Hybrid forecasting of chaotic processes: Using machine learning in conjunction with a knowledge-based model. Chaos 28, 041101 (2018).

Verstraeten, D., Schrauwen, B., D\(^{\prime }\)Haene, M. & Stroobandt, D. An experimental unification of reservoir computing methods. Neural Netw.20, 391–403 (2007).

Dutoit, X. et al. Pruning and regularization in reservoir computing. Neurocomputing 72, 1534–1546 (2009).

Jaeger, H. The”echo state”approach to analysing and training recurrent neural networks. In GMD\(-\)German National Research Institute for Computer Science, 148 (2001).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560 (2002).

Zhang, C., Jiang, J., Qu, S.-X. & Lai, Y.-C. Predicting phase and sensing phase coherence in chaotic systems with machine learning. Chaos 30, 083114 (2020).

Moon, J. et al. Temporal data classification and forecasting using a memristor-based reservoir computing system. Nat. Electron. 2, 480–487 (2019).

Pyragas, V. & Pyragas, K. Using reservoir computer to predict and prevent extreme events. Phys. Lett. A 384, 126591 (2020).

Choi, J. & Kim, P. Reservoir computing based on quenched chaos. Chaos Soliton. Fract. 140, 110131 (2020).

Haluszczynski, A., Aumeier, J., Herteux, J. & Räth, C. Reducing network size and improving prediction stability of reservoir computing. Chaos 30, 063136 (2020).

Gauthier, D. J., Bollt, E., Griffith, A. & Barbosa, W. A. S. Next generation reservoir computing. Nat. Commun. 12, 5564 (2021).

Gao, R., Du, L., Duru, O. & Yuen, K. F. Time series forecasting based on echo state network and empirical wavelet transformation. Appl. Soft Comput. 102, 107111 (2021).

Fan, H., Jiang, J., Zhang, C., Wang, X. & Lai, Y.-C. Long-term prediction of chaotic systems with machine learning. Phys. Rev. Res. 2, 012080 (2020).

Ganaie, M. A., Ghosh, S., Mendola, N., Tanveer, M. & Jalan, S. Identification of chimera using machine learning. Chaos 30, 063128 (2020).

Kushwaha, N., Mendola, N. K., Ghosh, S., Kachhvah, A. D. & Jalan, S. Machine learning assisted chimera and solitary states in networks. Front. Phys. 9, 513969 (2021).

Guo, Y. et al. Transfer learning of chaotic systems. Chaos 31, 011104 (2021).

Weng, T., Yang, H., Gu, C., Zhang, J. & Small, M. Synchronization of chaotic systems and their machine-learning models. Phy. Rev. E 99, 42203 (2019).

Hu, W., Zhang, Y., Ma, R., Dai, Q. & Yang, J. Synchronization between two linearly coupled reservoir computers. Chaos Soliton. Fract. 157, 111882 (2022).

Ibáñez Soria, D., Garcia-Ojalvo, J., Soria-Frisch, A. & Ruffini, G. Detection of generalized synchronization using echo state networks. Chaos 28, 033118 (2018).

Lorenz, E. N. Deterministic nonperiodic flow. J. Atmos. Sci. 20, 130–141 (1963).

Rössler, O. An equation for continuous chaos. Phys. Lett. A 57, 397–398 (1976).

Chen, G. & Lü, J. Dynamic analysis, control and synchronization of Lorenz system family. (Science Press (In Chinese), 2003).

Tang, L. & Liang, J. Comparison of the influences of nodal dynamics on network synchronized regions. Chin. J. Phys. 56, 1488–1496 (2018).

Hénon, M. A two-dimensional mapping with a strange attractor. Commun. Math. Phys. 50, 69–77 (1976).

Ikeda, K. Multiple-valued stationary state and its instability of the transmitted light by a ring cavity system. Opt. Commun. 30, 257–261 (1979).

Pecora, L. M. & Carroll, T. L. Synchronization in chaotic system. Phys. Rev. Lett. 64, 821 (1990).

Pecora, L. M. & Carroll, T. L. Synchronization of chaotic systems. Chaos 25, 097611 (2015).

Liu, K., Du, X. & Kang, L. Differential evolution algorithm based on simulated annealing. In Advances in Computation and Intelligence, 120–126 (Springer Berlin Heidelberg, 2007).

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants 62076104, 61573004, 11871231 and 11901215, in part by the Natural Science Foundation of Fujian Province under Grants 2019J01065 and 2021J01303.

Author information

Authors and Affiliations

Contributions

G.W. performed the numerical calculations and prepared the figures, L.T. conceived of the study and supervised the whole research progress, all authors wrote and reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, G., Tang, L. & Liang, J. Synchronization of non-smooth chaotic systems via an improved reservoir computing. Sci Rep 14, 229 (2024). https://doi.org/10.1038/s41598-023-50690-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-023-50690-4

This article is cited by

-

Cell Fate Dynamics Reconstruction Identifies TPT1 and PTPRZ1 Feedback Loops as Master Regulators of Differentiation in Pediatric Glioblastoma-Immune Cell Networks

Interdisciplinary Sciences: Computational Life Sciences (2025)