Abstract

The proposed AI-based diagnostic system aims to predict the respiratory support required for COVID-19 patients by analyzing the correlation between COVID-19 lesions and the level of respiratory support provided to the patients. Computed tomography (CT) imaging is used to characterize the three levels of respiratory support received by the patient: Level 0 (minimum support), Level 1 (non-invasive support such as soft oxygen), and Level 2 (invasive support such as mechanical ventilation). The system begins by segmenting the COVID-19 lesions from the CT images and creating an appearance model for each lesion using a 2D, rotation-invariant, Markov–Gibbs random field (MGRF) model. Three MGRF-based models are created, one for each level of respiratory support, which allows the system to differentiate between levels of severity in COVID-19 patients. The system then makes a decision for each patient using a neural network-based fusion system, which combines the estimates of the Gibbs energy from the three MGRF-based models. The proposed system was assessed using 307 COVID-19-infected patients, achieving an accuracy of \(97.72\%\pm 1.57\), a sensitivity of \(97.76\%\pm 4.08\), and a specificity of \(98.87\%\pm 2.09\), indicating a high level of prediction accuracy.

Introduction

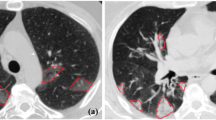

The severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) virus emerged at the end of 2019 and caused the coronavirus disease of 2019 (COVID-19)1. The virus quickly spread across the world, resulting in severe impacts on the global economy and public health2. In March 2020, the World Health Organization (WHO) declared COVID-19 a global pandemic due to its rapid spread3. The severity of COVID-19 infection varied among individuals4. Based on the respiratory support provided to COVID-19 patients, the severity of COVID-19 infection was classified into three categories: minimal support (level 0), non-invasive support (level 1), and invasive support (level 2)5. Individuals with level-0 COVID-19 required minimal support for treatment, while those with level-1 COVID-19 had moderate symptoms and required non-invasive ventilation (e.g., soft oxygen) to recover. Individuals with level-2 COVID-19 had severe symptoms and required invasive ventilation (e.g., mechanical ventilation) for recovery. Figure 1 depicts the three respiratory support levels that COVID-19 patients may require during treatment and their correlation with CT images. COVID-19 has affected a staggering 758 million people worldwide, with 6.859 million deaths attributed to incorrect or delayed identification of COVID-19 severity6. Thus, identifying the severity of COVID-19 is crucial to determine the appropriate treatment for infected individuals7. Since the beginning of the pandemic, physicians have relied on imaging data such as X-ray and CT scans to diagnose the severity of COVID-198. However, this method of classification has resulted in incorrect identification of severity, leading to inappropriate treatment and, in some cases, fatalities.

Many recent studies have proposed AI-based computer-aided diagnosis (CAD) systems that can detect COVID-19 severity, but they rely only on imaging data, leading to inaccurate results. In this paper, we present a new CAD system that predicts the respiratory support level (i.e., the severity of COVID-19) needed for each COVID-19 patient by analyzing the relationship between their CT scan volume and respiratory support, with the following contributions: (1) We propose an automatic segmentation system that delineates the lung regions from CT scans. (2) The same segmentation system is used to extract lesion regions from a region of interest selected by a radiologist. (3) A 3D rotation-invariant MGRF model is employed to capture the differences in lesion appearance between respiratory support levels. (4) Two stages of neural networks are utilized to predict the required respiratory support level for each patient by combining the results of each model.

Related work

The emergence of COVID-19 led to the development of CAD systems for diagnosing and classifying its severity. Cabitza et al.9 developed machine learning models that accurately detect COVID-19 using blood test results. The models were built and validated with data from 371 COVID-19-positive patients and 526 COVID-19-negative patients, achieving an overall accuracy of 95.7%. Yao et al.10 developed a machine-learning model that predicts the severity of COVID-19 using routine blood and urine test results. They used data from 205 COVID-19 patients and employed feature selection techniques and various machine-learning algorithms to build and validate their model. The results revealed that the model accurately predicted the severity of COVID-19 based on the patient’s blood and urine test results. Brinati et al.11 investigated the feasibility of detecting COVID-19 infection from routine blood tests using machine learning algorithms. They collected data from 279 COVID-19-positive and 277 COVID-19-negative patients and utilized five different machine learning models to classify patients as COVID-19 positive or negative based on their blood test results. The results indicated that the models accurately detected COVID-19 infection from routine blood tests with an overall accuracy ranging from 83.3 to 97.8%. Aktar et al.12 proposed a CAD system for identifying COVID-19 disease using a Reverse Transcription-Polymerase Chain Reaction (RT-PCR) test. Their system had two phases: feature selection and diagnosis. The authors used chi-square, Pearson correlation, and Student t algorithms in the feature selection phase to choose blood parameters that could distinguish between healthy and COVID-19 patients. In the diagnosis phase, they employed different machine-learning algorithms, including a decision tree, random forest, gradient boosting machine, and support vector machine (SVM), to detect COVID-19 infection. 
The authors created a new dataset by combining two datasets to evaluate the system’s performance. The experimental results demonstrated that the random forest algorithm outperformed others with 92% accuracy. Zhang et al.13 proposed a CAD system that can determine the severity of COVID-19 infection by analyzing patients’ blood test results. The system categorizes patients into either mild or severe cases. The authors employed six different classifiers, including random forest, naive Bayes, SVM, k-nearest neighbors (KNN), logistic regression, and neural networks, to determine the severity of the disease. They tested the performance of the system on a dataset of 422 COVID-19-positive patients from the Shenzhen Third People’s Hospital. The dataset included 38 blood test features for each patient. The authors found that naive Bayes outperformed the other classifiers, with an area under the curve (AUC) of 0.90. Previous CAD systems had shown that classification based on blood tests alone could not achieve high accuracy, and researchers had started using imaging data, such as CT and X-ray, to develop high-performance CAD systems.

Shahin et al.14 proposed a CAD system for detecting COVID-19 using CT images of patients, which grades the severity of the infection based on imaging data. The system comprises two main steps. In the first step, the authors used a modified version of the k-means algorithm to enhance the visibility and contrast of ground glass opacities. In the second step, they employed SVM to diagnose each patient. According to their experimental results, the system achieved an accuracy of 80%. On the other hand, Kogilavani et al.15 proposed a deep-learning CAD system that uses patients’ CT images for COVID-19 classification. They employed various convolutional neural network (CNN) architectures, including VGG16, DenseNet121, MobileNet, NASNet, Xception, and EfficientNet, to classify patients into COVID-19 or non-COVID-19 groups. The authors used a dataset of 3833 patients collected from Kaggle to train and test their system. Their experimental results showed that the VGG16 architecture outperformed the other classifiers, achieving an accuracy of 97.68%. Yu et al.16 presented a COVID-19 severity identification system that utilized a patient’s CT volume to identify the severity of the disease. The system employed four deep-learning architectures (ResNet-50, Inception-V3, ResNet-101, and DenseNet-201) to extract features from CT images. These features were then fed into a machine learning algorithm to identify each case as severe or non-severe. The authors tested their system using a dataset consisting of 202 COVID-19-positive patients collected from three hospitals in Anhui, China. The authors evaluated their system using five machine learning algorithms: linear SVM, KNN, linear discriminant, Adaboost decision tree, and cubic SVM. The results showed that using DenseNet-201 with a cubic SVM model outperformed the other models, achieving an accuracy of 95.20%.
Nigam et al.17 proposed a deep-learning CAD system that could identify the presence of COVID-19 infection based on a patient’s X-ray. The system used VGG16, DenseNet121, Xception, NASNet, and EfficientNet architectures to detect COVID-19 infection. To evaluate the system’s performance, the authors used a dataset consisting of 16,634 patients collected from various hospitals in India. The results showed that the EfficientNet architecture outperformed the others, achieving an accuracy of 93.48%. Alqudah et al.18 conducted a study to compare the performance of several hybrid machine learning models, including CNNs, random forest (RF), SVM, and KNN, for the detection of COVID-19 from chest X-ray images. To evaluate the models’ performance, they used a dataset consisting of 1057 chest X-ray images, including 506 COVID-19-positive and 551 COVID-19-negative cases. The results showed that the CNN-based models outperformed the other models in terms of accuracy, sensitivity, specificity, and AUC. The best-performing CNN model achieved an accuracy of 96%, a sensitivity of 95%, a specificity of 97%, and an AUC of 0.99.

AI-based techniques have shown promise in assisting with the diagnosis and grading of COVID-19 severity using CT images, as demonstrated by the studies mentioned above. However, to our knowledge, there are no published studies that specifically examine the correlation between radiology findings of COVID-19 lesions and the required respiratory support in infected patients. In this paper, we aim to investigate this potential correlation. This investigation could provide valuable insights into the management of COVID-19 patients and may lead to improved treatment strategies. Below, we describe in detail the major steps of the proposed system.

Method

In this paper, we proposed a new CAD system that predicts the respiratory support level required for each COVID-19 patient to recover from infection. The CAD system consists of four main steps. Firstly, the patient’s lung is segmented, followed by extraction of the lesion in the second step. To estimate the texture and morphology of the lesions, we trained an appearance model using the MGRF algorithm in the third step. The algorithm is applied three times to represent the lesion appearance at the three respiratory support levels. Finally, a two-stage neural network is used to diagnose and grade each patient into one of the three levels. Figure 2 illustrates the main steps of our CAD system.

Lung segmentation

To design a high-performance CAD system, we must use the markers extracted directly from COVID-lesions. To achieve this goal, we designed our system to start by extracting the lung region, followed by COVID-lesion segmentation. This two-step sequential design ensures accurate segmentation of COVID-lesions and avoids errors in cases where the lesions are close to the chest region. We achieved lung segmentation using our previously published method in19. This approach utilizes the first and second-order appearance models of lung and chest tissues to accurately segment the lung area, taking into account the similarity in appearance of certain lung tissues to other chest tissues such as bronchi and arteries. We extracted the first-order appearance model of the CT image using discrete Gaussian kernels and used a new version of the expectation-maximization algorithm to calculate the model parameters. The MGRF algorithm estimated the second-order appearance model by representing the pairwise interaction of the 3D lung tissues. Figure 3 shows the results of our segmentation method applied to three individuals with varying respiratory support levels needed during COVID-19 infection. For more details on our segmentation method, please refer to19,20.

Figure 3. An illustrative example of the proposed segmentation approach for three patients requiring different levels of respiratory support during COVID-19 infection at 2D axial (first row), sagittal (second row), and coronal (third row) cross-sections: (a) Level 0, (b) Level 1, and (c) Level 2. The images showcase the accuracy of the segmentation approach for various respiratory support levels.

COVID-lesion segmentation

First, an expert radiologist carefully selects the region of interest around the largest cross-section over COVID-19 lesions aiming to enhance the accuracy of lesion detection. Then, we used the segmentation models mentioned above to differentiate between normal lung tissues and COVID-19 lesions. In addition, we employed connected region analysis as an extra step. Figure 4 showcases some of the segmented COVID-19 lesions.

Figure 4. An illustrative example of the proposed lesion segmentation approach on three COVID-19 patients with varying respiratory support requirements at 2D axial (first row), sagittal (second row), and coronal (third row) cross-sections: (a) minimal support (Level 0), (b) non-invasive support (Level 1), and (c) invasive support (Level 2).

Learning the appearance of COVID-19 lesions

The proposed approach considers each COVID-lesion as the realization of a piecewise stationary Markov–Gibbs random field (MGRF)21,22,23,24,25,26,27. The MGRF is constructed such that joint probabilities, i.e., voxel-voxel interactions, are central-symmetric. This means that the appearance of infected lung regions is modeled as a random process, in which the probability of each voxel being infected depends on the appearance of neighboring voxels. The use of a central-symmetric system of voxel–voxel interactions is crucial because it captures the circular symmetry of the lesion structure and allows for precise modeling of the appearance of COVID-19 lesions. Denote the neighborhood system of the MGRF by \(\textbf{n}\); then every \(n_{\nu } \in \textbf{n}, \nu = 1, \ldots , N\), is specified by a pair of positive real numbers \((\underline{d}_{\nu }, \overline{d}_{\nu })\). The \(n_{\nu }\)-neighborhood of voxel x is the set \(\{x' \mid \underline{d}_{\nu } < \Vert x - x' \Vert \le \overline{d}_{\nu }\}\), where \(\Vert \cdot \Vert\) denotes Euclidean distance. Figure 5 illustrates such a neighborhood system with \(\underline{d}_{\nu } = \nu - \frac{1}{2}\) and \(\overline{d}_{\nu } = \nu + \frac{1}{2}\).
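The ring-shaped neighborhoods defined above can be enumerated directly on a voxel grid. The following is a minimal sketch, not the authors' implementation: the function name `ring_offsets` and the `dims` parameter are illustrative assumptions, and the radii follow the example choice \(\underline{d}_{\nu } = \nu - \frac{1}{2}\), \(\overline{d}_{\nu } = \nu + \frac{1}{2}\).

```python
import itertools
import math


def ring_offsets(nu, dims=3):
    """Integer offsets x' - x with nu - 1/2 < ||x' - x|| <= nu + 1/2.

    Hypothetical helper for illustration; `dims` selects a 2D or 3D lattice.
    """
    d_low, d_high = nu - 0.5, nu + 0.5
    reach = math.floor(d_high)  # no offset component can exceed the outer radius
    offsets = []
    for off in itertools.product(range(-reach, reach + 1), repeat=dims):
        dist = math.sqrt(sum(c * c for c in off))  # Euclidean distance
        if d_low < dist <= d_high:
            offsets.append(off)
    return offsets
```

Because the membership test depends only on the Euclidean norm of the offset, the negation of every offset is also in the set, which is the central symmetry the model relies on.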

Consider an image pair \((\textbf{g}, \textbf{m})\) from our training data set comprising a CT slice and its ground truth labeling, respectively. Denote by \(\textbf{R}\) the set of “object” voxels, i.e. voxels within the infected lung region. Then the restricted neighborhood system is the set of voxels

Finally let \(f_{0}\) and \(f_{\nu }\) denote empirical distributions (i.e., relative frequency histograms) of gray levels in \(\textbf{R}\) and gray level co-occurrences (i.e., the frequency of co-occurrences) in \(c_{\nu }\), respectively:21,28

The empirical distribution (i.e., Eq. 1) gives the relative frequency of each gray level within COVID-19 lesions. The co-occurrence frequency (i.e., Eq. 2) gives the joint relative frequency of two gray levels in neighboring voxels, which plays a pivotal role in constructing the second-order appearance of COVID-19 lesions.

The MGRF distribution of object voxel gray levels within an element of the training data \((\textbf{g}_{t}, \textbf{m}_{t}), t = 1, \ldots , T\) is the Gibbs distribution

where \(\rho _{\nu } = |c_{\nu }| / |\textbf{R}|\) is the mean size of the restricted neighborhoods relative to the size of the entire sublattice \(\textbf{R}\). Since the premise of using the MGRF model is that lungs affected by a particular pathology (in this case COVID-19) will produce CT features alike in appearance, it is practical to approximate some of the quantities in Eq. (3) by their averages with respect to set of training images. Namely, \(|\textbf{R}_{t}| \approx R_{\textsf{ob}} = \frac{1}{T}\sum _{t=1}^{T}|\textbf{R}_t|\), and \(|c_{\nu ,t}| \approx c_{\nu ,\textsf{ob}} = \frac{1}{T}\sum _{t=1}^{T}|c_{\nu ,t}|\). With the condition that elements of the training set are statistically independent (e.g., if each CT image is taken from a different patient), the Gibbs distribution may be further simplified28:

Here, the estimated restricted neighborhood size \(\rho _{\nu } = c_{\nu ,\textsf{ob}} / R_\textsf{ob}\), and estimated weights \(\textbf{F}_{0,\textsf{ob}}\) and \(\textbf{F}_{\nu ,\textsf{ob}}\) again denote average values with respect to the set of training images.

Structural zeros can arise when there is too little training data to identify the MGRF model, e.g., if only a relatively small lung region is affected by the disease. Then, by chance, some gray levels do not occur, or do not co-occur with certain other gray levels, in the training data, and the corresponding elements of the weight vectors are zero. Test data in which these values do occur then have zero likelihood. We deal with this potential problem by using pseudo-counts. For Eqs. (1) and (2), substitute the following modified versions:

Here Q is the number of discrete gray levels. The parameter \(\varepsilon\) can be chosen according to several criteria. Following28, we set \(\varepsilon\) to effect unit pseudo-count in the denominator, i.e. \(\varepsilon := 1/Q\) (Eq. 4) or \(\varepsilon := 1/Q^2\) (Eq. 5).
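The effect of the pseudo-counts can be illustrated with a short sketch. The exact form of Eqs. (4) and (5) is not reproduced in this text, so the additive form below, which adds \(\varepsilon\) to every bin so that the denominator grows by exactly one, is an assumption consistent with the "unit pseudo-count in the denominator" described above. The function names are hypothetical.

```python
from collections import Counter


def smoothed_histogram(gray_levels, Q):
    """Relative frequencies of gray levels 0..Q-1 with pseudo-count eps = 1/Q.

    Assumed additive smoothing: each of the Q bins receives eps,
    so the denominator grows by Q * eps = 1.
    """
    eps = 1.0 / Q
    counts = Counter(gray_levels)
    denom = len(gray_levels) + Q * eps
    return [(counts.get(q, 0) + eps) / denom for q in range(Q)]


def smoothed_cooccurrence(pairs, Q):
    """Relative frequencies of gray-level pairs with pseudo-count eps = 1/Q**2.

    `pairs` is a list of (q, q') tuples gathered from one restricted neighborhood.
    """
    eps = 1.0 / Q**2
    counts = Counter(pairs)
    denom = len(pairs) + Q * Q * eps  # Q*Q bins, again one unit in total
    return [[(counts.get((q, r), 0) + eps) / denom for r in range(Q)]
            for q in range(Q)]
```

With this form, every bin is strictly positive and each distribution still sums to one, so unseen gray levels in test data no longer yield zero likelihood.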

It remains only to estimate the Gibbs potentials. Using the same analytical approach as in28, these are approximated using the centered, training-set averaged, normalized histograms:

With the model now fully specified, the Gibbs energy of the lesion, or affected region of the lung, \(\textbf{b}\) within a test image \(\textbf{g}\) is obtained from the equation

Here, \(\textbf{N}^\prime\) is a selected top-rank index subset of the neighborhood system \(\textbf{n}\), and \(\textbf{F}_{0}(\textbf{g}, \textbf{b})\) and \(\textbf{F}_{\nu }(\textbf{g}, \textbf{b})\) are just the histogram and normalized co-occurrence matrix, respectively, of object voxels in the test data. The training procedure for the MGRF model is summarized in Algorithm 1.
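To make the role of each quantity concrete, the sketch below computes an energy of the kind described. The equations themselves are not reproduced in this text, so the specific form is an assumption: potentials are taken as the training-set averaged histograms centered on the uniform level (\(1/Q\) for gray levels, \(1/Q^2\) for co-occurrences), any scaling factor is omitted, and the energy is the inner product of potentials with the test-image histograms, with pairwise terms weighted by \(\rho _{\nu }\). The function name and the dictionary layout are hypothetical.

```python
def gibbs_energy(F0_test, Fnu_test, F0_ob, Fnu_ob, rho):
    """Gibbs energy of a lesion under one trained MGRF model (assumed form).

    F0_test, F0_ob : length-Q gray-level histograms (test image, training average).
    Fnu_test, Fnu_ob, rho : dicts keyed by the selected neighborhood indices nu;
        values are Q x Q co-occurrence matrices (or the scalar rho_nu).
    """
    Q = len(F0_ob)
    # First-order term: centered training histogram times test histogram.
    energy = sum((F0_ob[q] - 1.0 / Q) * F0_test[q] for q in range(Q))
    for nu in Fnu_ob:  # iterate over the selected subset N'
        pair_term = sum(
            (Fnu_ob[nu][q][r] - 1.0 / Q**2) * Fnu_test[nu][q][r]
            for q in range(Q) for r in range(Q)
        )
        energy += rho[nu] * pair_term
    return energy
```

Under this convention, a test lesion whose histograms resemble the training averages scores a higher energy than one whose histograms differ, which matches how the energies of the three level-specific models are compared later in the paper.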

Algorithm 1. MGRF training model.

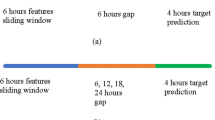

Neural network based grading system

We designed a two-stage neural network to determine the level of respiratory support that each patient needed to recover from COVID-19. In the first stage, three feed-forward neural networks are trained and tested separately, one on the CDF percentiles estimated from each of the three MGRF models. In the second stage, the outputs of the three networks are combined and fed into a final feed-forward neural network, which identifies the appropriate respiratory support level for each patient. All four networks were trained with the backpropagation algorithm; Algorithm 2 shows the steps we followed. Network performance depends on the values chosen for the model’s hyperparameters, and several approaches can be used to identify good values. We used a random search strategy, which samples points at random from the hyperparameter space and evaluates the model’s performance on the training data for each sample. The hyperparameters were the number of hidden layers in the feed-forward network, the size of each hidden layer, and the activation function of the hidden-layer neurons. The random search selected the rectified linear unit (ReLU) activation function and three hidden layers of 9, 10, and 21 neurons, respectively, as the best hyperparameter values for our system.
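The random search described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the sampling ranges (1 to 4 hidden layers, 2 to 32 neurons per layer, three candidate activations) are hypothetical, and `score_fn` stands for whatever routine trains a network with the given configuration and returns its performance.

```python
import random


def random_search(score_fn, n_trials=50, seed=0):
    """Sample network configurations at random and keep the best-scoring one.

    score_fn : callable taking a config dict and returning a number
               (higher is better); supplied by the caller.
    """
    rng = random.Random(seed)  # fixed seed makes the search reproducible
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        n_layers = rng.randint(1, 4)  # hypothetical range
        cfg = {
            "hidden_sizes": tuple(rng.randint(2, 32) for _ in range(n_layers)),
            "activation": rng.choice(["relu", "tanh", "sigmoid"]),
        }
        score = score_fn(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score
```

A configuration such as `{"hidden_sizes": (9, 10, 21), "activation": "relu"}` is the kind of result this procedure returns.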

Algorithm 2. Backpropagation algorithm.

Performance evaluation metrics

To evaluate the performance of our diagnostic system with three outcome levels, we used Cohen’s Kappa, which is a statistical measure of inter-rater agreement commonly used in such situations. One of the main benefits of using Cohen’s Kappa is its ability to take into account the possibility of chance agreement among raters, which is particularly important when the outcome prevalence is low or the categories are imbalanced. Moreover, Cohen’s Kappa can provide valuable insights into the sources of disagreement among raters, which can be used to improve the accuracy of the diagnostic system. The Cohen’s Kappa coefficient for a three-level diagnostic system is calculated as follows:

\[\kappa = \frac{{Acc}_o - {Acc}_e}{1 - {Acc}_e}\]

where \({Acc}_o\) is the observed accuracy between the output of the proposed diagnostic system and the ground truth and \({Acc}_e\) is the expected (chance) agreement between the outcome of the proposed diagnostic system and the gold-standard reference. For a three-level diagnostic system, \({Acc}_o\) and \({Acc}_e\) are calculated as:

\[{Acc}_o = \frac{TP_{L2} + TP_{L1} + TP_{L0}}{N}\]

and

\[{Acc}_e = \frac{1}{N^{2}} \sum _{k \in \{L0, L1, L2\}} (TP_{k} + FN_{k})(TP_{k} + FP_{k})\]

where \(TP_{L2}\) is the number of true positives for the Level 2 respiratory support (the high-risk category), \(TP_{L1}\) is the number of true positives for the Level 1 respiratory support, \(TP_{L0}\) is the number of true positives for the Level 0 respiratory support, \(FN_{L2}\) is the number of false negatives for the Level 2 respiratory support, \(FN_{L1}\) is the number of false negatives for the Level 1 respiratory support, \(FN_{L0}\) is the number of false negatives for the Level 0 respiratory support, \(FP_{L2}\) is the number of false positives for the Level 2 respiratory support (with \(FP_{L1}\) and \(FP_{L0}\) defined analogously), and N is the total number of subjects.
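As a worked illustration of these quantities, the sketch below computes Cohen’s Kappa from a confusion matrix using the standard definition: the observed agreement is the fraction of subjects on the diagonal, and the chance agreement is the sum over levels of (true count × predicted count) divided by \(N^2\). The function name and the row/column convention are assumptions made for this example.

```python
def cohen_kappa(confusion):
    """Cohen's Kappa from a square confusion matrix.

    confusion[i][j] = number of subjects whose true level is i
    and whose predicted level is j (assumed convention).
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    # Observed agreement: correctly classified subjects over all subjects.
    acc_o = sum(confusion[i][i] for i in range(k)) / n
    # Row totals are TP + FN per level; column totals are TP + FP per level.
    row_tot = [sum(confusion[i]) for i in range(k)]
    col_tot = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    acc_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n**2
    return (acc_o - acc_e) / (1 - acc_e)
```

A perfectly diagonal matrix gives a kappa of 1, while a matrix whose predictions are independent of the true levels gives a kappa of 0.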

In addition to reporting the observed accuracy and Cohen’s Kappa, we will report the true positive rate (i.e., sensitivity) and true negative rate (i.e., specificity) for each respiratory level.

All methods were carried out in accordance with relevant guidelines and regulations. The Institutional Review Board (IRB) of University of Louisville approved the study and its methods and confirmed that the research study followed all appropriate protocols and legal requirements. Patients (or, in the case of deceased patients, next of kin or legal guardian) provided informed consent.

Experimental results

To test the performance of our proposed method, we collected a dataset consisting of 307 CT chest volumes from the University of Louisville, USA. Based on the respiratory support provided to each patient, the dataset was categorized into three distinct groups: 167 cases with minimum support (level 0), 69 cases with non-invasive support (level 1), and 71 cases with invasive support (level 2).

To assess the performance of our CAD system, cross-validation with 4, 5, and 10 folds is employed. Additionally, other machine learning classifiers are evaluated for comparison. The results are shown in Table 1. The proposed system outperforms all other classifiers, achieving an accuracy of \(97.72\pm 1.57\) and a kappa of \(96.58 \pm 2.36\) using 10-fold cross-validation. Moreover, using 5-fold and 4-fold cross-validation, the system consistently demonstrates a high accuracy of \(93.79 \pm 1.37\) and \(92.77 \pm 1.7\), and a kappa of \(90.68 \pm 2.05\) and \(89.18 \pm 2.52\), respectively. These outcomes highlight the promise of the proposed system in predicting the respiratory support level for COVID-19 patients. The area under the receiver operating characteristic (ROC) curve (AUC) is also estimated for each of the three categories to show how well the proposed method differentiates between them, as shown in Fig. 6. The method achieves AUCs of 0.9957, 0.9923, and 0.9984 for levels 0, 1, and 2, respectively, using 10-fold cross-validation. To visually demonstrate the capability of the MGRF models in distinguishing between the three levels, Fig. 7 shows the color map of Gibbs energy estimated from the three MGRF models for three patients with different respiratory support requirements. As shown in the figure, the Gibbs energy estimated from the MGRF model tuned using a given level of respiratory support is higher for a patient requiring that particular level than the energy estimated from the other models. This supports the capability of the proposed system to differentiate between the three levels of respiratory support.

Because the number of Gibbs energy values differs from patient to patient, CDF percentiles are utilized as new, scale-invariant representations of the estimated Gibbs energy. Figure 8 shows the mean and standard deviation of the CDF percentiles for the three respiratory support levels, as estimated from the three models. As shown in the figure, representing each patient by CDF percentiles leads to a clear separation between the three respiratory support levels, which demonstrates the system’s ability to distinguish between the different COVID-19 respiratory support levels. However, some of these features partially overlap across respiratory support levels. Thus, the decisions/probabilities of each model are fused using another neural network to increase the system’s performance and the separability between the three levels, as presented in Fig. 9. As demonstrated in the figure, the fused neural network readily predicts the respiratory support level needed for each patient. Together, these results indicate that the system predicts the patient’s need for respiratory support from CT scans accurately and effectively. This could result in more precise diagnoses, better treatment strategies, and better patient outcomes.
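The fixed-length representation described above can be sketched as follows. The number of percentiles is not stated in the text, so the 10% step used here is a hypothetical choice, as is the function name; the interpolation rule (linear interpolation between order statistics) is also an assumption.

```python
def cdf_percentiles(energies, step=10):
    """Summarize a variable-length list of Gibbs energies by its percentiles.

    Returns the step-th, 2*step-th, ..., 100th percentiles, so every patient
    yields a vector of the same length regardless of lesion size.
    """
    if not energies:
        raise ValueError("at least one energy value is required")
    values = sorted(energies)
    n = len(values)
    features = []
    for p in range(step, 101, step):
        pos = (n - 1) * p / 100.0       # fractional index into the sorted values
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        # Linear interpolation between the two neighboring order statistics.
        features.append(values[lo] + (values[hi] - values[lo]) * (pos - lo))
    return features
```

Each patient is then described by one such vector per MGRF model, which is the kind of input the first-stage networks can consume.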

Discussion

Individuals with COVID-19 infection suffer from respiratory impairments and Acute Respiratory Distress Syndrome (ARDS). So, many COVID-19 patients need mechanical ventilation or soft oxygen to recover from the infection35. COVID-19 patients who need an intensive care unit (ICU) may suffer from acute renal failure and organ dysfunction, such as heart failure. Unfortunately, the death rate of COVID-19 infection for patients who needed ICU reached 97% at the early stages of the epidemic36,37. So, identifying the individuals that need ICU from the beginning of the COVID-19 infection is the only way to fight COVID-19 and reduce its death rate. This paper proposes a new, fast (with an average processing time of \(66.55 \pm 25.06\) s), automated, and accurate CAD system that predicts the respiratory support level needed for each patient to recover from COVID-19 infection. To do this, this system identifies the correlation between the patient’s CT image and the respiratory support level. Our empirical results show that our proposed method achieved an accuracy of 97.72%. Several related studies used AI techniques to diagnose or grade the severity of COVID-19 infection using X-rays, CT scans, or medical data9,10,11,12,13,14,15,16,17,18,38,39,40,41,42,43, or to diagnose other diseases44,45,46,47,48,49,50,51,52,53,54. In these studies, many machine learning algorithms are adopted, such as CNN, SVM, KNN, VGG16, and Xception. These studies attained accuracy rates of 92.0–97.68%. However, they suffer from some drawbacks: (1) many recent works are based on deep learning algorithms with many convolution layers to perform feature extraction steps, which may increase the time required to identify COVID-19 severity. The increase in processing time is an acceptable trade-off, since deep learning automatically extracts relevant features instead of relying on a prior set of hand-crafted features. 
(2) Several existing studies have proposed CAD systems that classify COVID-19 infection without determining the disease severity. (3) All recent works were based on clinical or imaging data without showing the correlation between these data and the respiratory support level that each patient actually received. AI has demonstrated its value in medical applications and gained widespread acceptance due to its high accuracy and predictability. Combined with chest imaging and other clinical data, it has the potential to significantly improve therapeutic outcomes. AI holds significant potential in detecting lung inflammation in CT imaging during the COVID-19 diagnosis stage. Furthermore, it can precisely segment areas of interest from CT scans, further advancing the diagnostic process, and makes it straightforward to acquire self-learned features for diagnosis or other applications. By integrating multimodal data, including clinical and epidemiological information, within an AI framework, it becomes feasible to generate comprehensive insights for detecting and treating COVID-19 patients and potentially curbing the spread of the pandemic.

Conclusions

As discussed in this paper, COVID-19 resulted in a significant mortality rate, which we attribute primarily to the erroneous or delayed determination of the necessary level of respiratory support for each patient. Utilizing AI techniques to accurately identify the necessary respiratory support level for each patient at the onset of the infection is therefore imperative. In this paper, we proposed a new CAD system that utilizes CT chest volumes to accurately predict the respiratory support level required for each COVID-19 patient’s recovery. Once the respiratory support level is determined for each patient, physicians can provide personalized treatment recommendations, which have the potential to significantly reduce mortality rates. The results of this study demonstrated the promise of integrating a spatial MGRF model with machine learning to predict the respiratory support level in COVID-19 patients. However, this study has some limitations. One of them is the need for improvement in lesion segmentation, as the proposed segmentation relies heavily on the selection of the region of interest by a radiologist. Furthermore, additional investigation is necessary to assess how well the system performs on an external dataset. In the future, we intend to propose a fully automatic lesion segmentation system and to improve the proposed system’s accuracy by extracting additional features that can be discovered through deep learning. Moreover, we intend to assess the efficacy of the grading system used in this paper (i.e., the levels of respiratory support) by conducting a comparative analysis with established radiology grading techniques.

Data availability

Correspondence and requests for materials should be addressed to Ayman El-Baz.

References

Huang, F. et al. Identifying covid-19 severity-related SARS-COV-2 mutation using a machine learning method. Life 12, 806 (2022).

Gambhir, E., Jain, R., Gupta, A. & Tomer, U. Regression analysis of covid-19 using machine learning algorithms. In 2020 International Conference on Smart Electronics and Communication (ICOSEC), 65–71 (IEEE, 2020).

Saadat, S., Rawtani, D. & Hussain, C. M. Environmental perspective of covid-19. Sci. Total Environ. 728, 138870 (2020).

Verity, R. et al. Estimates of the severity of covid-19 disease. MedRxiv 2020-03 (2020).

Montrief, T., Ramzy, M., Long, B., Gottlieb, M. & Hercz, D. Covid-19 respiratory support in the emergency department setting. Am. J. Emerg. Med. 38, 2160–2168 (2020).

WHO. WHO Coronavirus (COVID-19) Dashboard—covid19.who.int. https://covid19.who.int/. Accessed 06 Mar 2023 (2023).

Rahmani, A. M. & Mirmahaleh, S. Y. H. Coronavirus disease (covid-19) prevention and treatment methods and effective parameters: A systematic literature review. Sustain. Cities Soc. 64, 102568 (2021).

Borakati, A., Perera, A., Johnson, J. & Sood, T. Diagnostic accuracy of X-ray versus CT in covid-19: A propensity-matched database study. BMJ Open 10, e042946 (2020).

Cabitza, F. et al. Development, evaluation, and validation of machine learning models for covid-19 detection based on routine blood tests. Clin. Chem. Lab. Med. 59, 421–431 (2021).

Yao, H. et al. Severity detection for the coronavirus disease 2019 (covid-19) patients using a machine learning model based on the blood and urine tests. Front. Cell Dev. Biol. 683, 25 (2020).

Brinati, D. et al. Detection of covid-19 infection from routine blood exams with machine learning: A feasibility study. J. Med. Syst. 44, 1–12 (2020).

Aktar, S. et al. Machine learning approach to predicting covid-19 disease severity based on clinical blood test data: Statistical analysis and model development. JMIR Med. Inform. 9, e25884 (2021).

Zhang, R.-K., Xiao, Q., Zhu, S.-L., Lin, H.-Y. & Tang, M. Using different machine learning models to classify patients into mild and severe cases of covid-19 based on multivariate blood testing. J. Med. Virol. 94, 357–365 (2022).

Shahin, O. R., Abd El-Aziz, R. M. & Taloba, A. I. Detection and classification of covid-19 in ct-lungs screening using machine learning techniques. J. Interdiscip. Math. 25, 791–813 (2022).

Kogilavani, S. et al. Covid-19 detection based on lung ct scan using deep learning techniques. Comput. Math. Methods Med. 20, 22 (2022).

Yu, Z. et al. Rapid identification of covid-19 severity in ct scans through classification of deep features. Biomed. Eng. Online 19, 1–13 (2020).

Nigam, B. et al. Covid-19: Automatic detection from x-ray images by utilizing deep learning methods. Expert Syst. Appl. 176, 114883 (2021).

Alqudah, A. M., Qazan, S., Alquran, H., Qasmieh, I. A. & Alqudah, A. Covid-19 detection from x-ray images using different artificial intelligence hybrid models. Jordan J. Electr. Eng. 6, 168–178 (2020).

Sharafeldeen, A., Elsharkawy, M., Alghamdi, N. S., Soliman, A. & El-Baz, A. Precise segmentation of COVID-19 infected lung from CT images based on adaptive first-order appearance model with morphological/anatomical constraints. Sensors 21, 5482. https://doi.org/10.3390/s21165482 (2021).

Sharafeldeen, A. et al. Accurate segmentation for pathological lung based on integration of 3d appearance and surface models. In 2023 IEEE International Conference on Image Processing (ICIP), https://doi.org/10.1109/icip49359.2023.10222525 (IEEE, 2023).

El-Baz, A. S., Gimel’farb, G. L. & Suri, J. S. Stochastic Modeling for Medical Image Analysis (CRC Press, 2016).

Sharafeldeen, A. et al. Precise higher-order reflectivity and morphology models for early diagnosis of diabetic retinopathy using OCT images. Sci. Rep. https://doi.org/10.1038/s41598-021-83735-7 (2021).

Elsharkawy, M. et al. Early assessment of lung function in coronavirus patients using invariant markers from chest x-rays images. Sci. Rep. https://doi.org/10.1038/s41598-021-91305-0 (2021).

Farahat, I. S. et al. The role of 3d ct imaging in the accurate diagnosis of lung function in coronavirus patients. Diagnostics 12, 696 (2022).

Elsharkawy, M. et al. Diabetic retinopathy diagnostic CAD system using 3d-oct higher order spatial appearance model. In 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), https://doi.org/10.1109/isbi52829.2022.9761508 (IEEE, 2022).

Alghamdi, N. S. et al. Segmentation of infant brain using nonnegative matrix factorization. Appl. Sci. 12, 5377. https://doi.org/10.3390/app12115377 (2022).

Elsharkawy, M. et al. A novel computer-aided diagnostic system for early detection of diabetic retinopathy using 3d-OCT higher-order spatial appearance model. Diagnostics 12, 461. https://doi.org/10.3390/diagnostics12020461 (2022).

Gimel’farb, G. L. Image Textures and Gibbs Random Fields (Springer, 1999).

Speiser, J. L., Miller, M. E., Tooze, J. & Ip, E. A comparison of random forest variable selection methods for classification prediction modeling. Expert Syst. Appl. 134, 93–101. https://doi.org/10.1016/j.eswa.2019.05.028 (2019).

Kotsiantis, S. B. Decision trees: A recent overview. Artif. Intell. Rev. 39, 261–283. https://doi.org/10.1007/s10462-011-9272-4 (2011).

Yang, F.-J. An implementation of Naive Bayes classifier. In 2018 International Conference on Computational Science and Computational Intelligence (CSCI), 301–306. https://doi.org/10.1109/CSCI46756.2018.00065 (2018).

Pisner, D. A. & Schnyer, D. M. Chapter 6–support vector machine. In Machine Learning (eds Mechelli, A. & Vieira, S.) 101–121 (Academic Press, 2020). https://doi.org/10.1016/B978-0-12-815739-8.00006-7.

Zhang, S., Li, X., Zong, M., Zhu, X. & Cheng, D. Learning k for knn classification. ACM Trans. Intell. Syst. Technol. 8, 1–19. https://doi.org/10.1145/2990508 (2017).

Freund, Y. & Schapire, R. E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 55, 119–139. https://doi.org/10.1006/jcss.1997.1504 (1997).

Arentz, M. et al. Characteristics and outcomes of 21 critically ill patients with covid-19 in Washington state. JAMA 323, 1612–1614 (2020).

Richardson, S. et al. Presenting characteristics, comorbidities, and outcomes among 5700 patients hospitalized with covid-19 in the New York city area. JAMA 323, 2052–2059 (2020).

Jiang, X. et al. Towards an artificial intelligence framework for data-driven prediction of coronavirus clinical severity. Comput. Mater. Contin. 63, 537–551 (2020).

Bhargava, A., Bansal, A. & Goyal, V. Machine learning-based automatic detection of novel coronavirus (covid-19) disease. Multimed. Tools Appl. 81, 13731–13750 (2022).

Fahmy, D. et al. How AI can help in the diagnostic dilemma of pulmonary nodules. Cancers 14, 1840. https://doi.org/10.3390/cancers14071840 (2022).

Batouty, N. M. et al. State of the art: Lung cancer staging using updated imaging modalities. Bioengineering 9, 493. https://doi.org/10.3390/bioengineering9100493 (2022).

Chieregato, M. et al. A hybrid machine learning/deep learning covid-19 severity predictive model from ct images and clinical data. Sci. Rep. 12, 1–15 (2022).

Adhikari, N. C. D. Infection severity detection of covid19 from x-rays and ct scans using artificial intelligence. Int. J. Comput. 38, 73–92 (2020).

Balaha, H. M., El-Gendy, E. M. & Saafan, M. M. CovH2SD: A COVID-19 detection approach based on Harris Hawks Optimization and stacked deep learning. Expert Syst. Appl. 186, 115805. https://doi.org/10.1016/j.eswa.2021.115805 (2021).

Sharafeldeen, A. et al. Texture and shape analysis of diffusion-weighted imaging for thyroid nodules classification using machine learning. Med. Phys. 49, 988–999. https://doi.org/10.1002/mp.15399 (2021).

Sandhu, H. S. et al. Automated diagnosis of diabetic retinopathy using clinical biomarkers, optical coherence tomography, and optical coherence tomography angiography. Am. J. Ophthalmol. 216, 201–206. https://doi.org/10.1016/j.ajo.2020.01.016 (2020).

Sharafeldeen, A. et al. Diabetic retinopathy detection using 3d oct features. In 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI). https://doi.org/10.1109/isbi53787.2023.10230785 (IEEE, 2023).

Elsharkawy, M. et al. The role of different retinal imaging modalities in predicting progression of diabetic retinopathy: A survey. Sensors 22, 3490. https://doi.org/10.3390/s22093490 (2022).

Saleh, G. A. et al. Impact of imaging biomarkers and AI on breast cancer management: A brief review. Cancers 15, 5216. https://doi.org/10.3390/cancers15215216 (2023).

Sharafeldeen, A. et al. Thyroid cancer diagnostic system using magnetic resonance imaging. In 2022 26th International Conference on Pattern Recognition (ICPR). https://doi.org/10.1109/icpr56361.2022.9956125 (IEEE, 2022).

Elgafi, M. et al. Detection of diabetic retinopathy using extracted 3d features from oct images. Sensors 22, 7833. https://doi.org/10.3390/s22207833 (2022).

Haggag, S. et al. A computer-aided diagnostic system for diabetic retinopathy based on local and global extracted features. Appl. Sci. 12, 8326. https://doi.org/10.3390/app12168326 (2022).

Baghdadi, N. A., Malki, A., Balaha, H. M., Badawy, M. & Elhosseini, M. A3C-TL-GTO: Alzheimer automatic accurate classification using transfer learning and artificial gorilla troops optimizer. Sensors 22, 4250. https://doi.org/10.3390/s22114250 (2022).

Balaha, H. M., Shaban, A. O., El-Gendy, E. M. & Saafan, M. M. A multi-variate heart disease optimization and recognition framework. Neural Comput. Appl. 34, 15907–15944. https://doi.org/10.1007/s00521-022-07241-1 (2022).

Yousif, N. R., Balaha, H. M., Haikal, A. Y. & El-Gendy, E. M. A generic optimization and learning framework for Parkinson disease via speech and handwritten records. J. Ambient Intell. Humaniz. Comput. 14, 10673–10693. https://doi.org/10.1007/s12652-022-04342-6 (2023).

Acknowledgements

This work was supported by the Princess Nourah bint Abdulrahman University Researchers Supporting Project (number PNURSP2024R40), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. Dr. Ghazal acknowledges the support from Abu Dhabi University’s Office of Research and Sponsored Programs (Grant #19300792).

Author information

Contributions

I.S.F., A.S., M.G., N.S.A., A.M., J.C., E.V.B., H.Z., T.T., W.A., A.E.T., S.E., and A.E.-B.: conceptualization, development of the proposed methodology for the analysis, formal analysis, and review and editing of the manuscript. I.S.F. and A.S.: software, validation, visualization, and preparation of the initial draft. A.E.-B.: project administration. M.G., N.S.A., W.A., A.E.T., S.E., and A.E.-B.: project directors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Farahat, I.S., Sharafeldeen, A., Ghazal, M. et al. An AI-based novel system for predicting respiratory support in COVID-19 patients through CT imaging analysis. Sci Rep 14, 851 (2024). https://doi.org/10.1038/s41598-023-51053-9

DOI: https://doi.org/10.1038/s41598-023-51053-9
