Abstract

Land use land cover (LULC) maps are crucial for various applications, such as disaster management, natural resource conservation, biodiversity evaluation, climate modeling, etc. The Japan Aerospace Exploration Agency (JAXA) has released several high-resolution LULC maps for national and regional scales. Vietnam, due to its rich biodiversity and cultural diversity, is a target country for the production of high-resolution LULC maps. This study introduces a high-resolution and high-accuracy LULC map for Vietnam, utilizing a CNN approach that performs convolution over a time-feature domain instead of the typical geospatial domain employed by conventional CNNs. By using multi-temporal data spanning 6 seasons, the produced LULC map achieved a high overall accuracy of 90.5% ± 1.2%, surpassing other 10-meter LULC maps for Vietnam in terms of accuracy and/or the ability to capture detailed features. In addition, a straightforward and practical approach was proposed for generating cloud-free multi-temporal Sentinel-2 images, particularly suitable for cloudy regions. This study marks the first implementation of the time-feature CNN approach for the creation of a high-accuracy LULC map in a tropical cloudy country.

Similar content being viewed by others

Introduction

Land use land cover (LULC) maps provide valuable information for understanding different anthropogenic-related processes, including climate change1, urban expansion2, urban heat island3, and sediment-related disasters4. Recently, several high-resolution global LULC products have been released, such as Google’s Dynamic World (DW)5, ESA’s World Cover (ESA)6, and ESRI Land Cover (ESRI)7. These LULC products have garnered the widespread attention due to their high spatial resolution of 10 m and their capability to provide a time series of LULC maps on a global scale. While these recent global products have significantly improved the spatial detail of LULC maps, their applicability at national scales is limited due to coarse LULC category systems and uncertainties in accuracy when applied to national or local scales. For example, in the aforementioned global LULC maps, all forest types were combined into a single category such as “tree”. Users requiring more detailed forest categorization cannot use these global maps for their work. Given these limitations, the creation of national LULC maps becomes necessary. Several studies have undertaken the mapping of LULC in response to national or regional demands, including the production of high-resolution LULC maps of Japan8,9, LULC maps of China for the years 1980-201510, a 10-m European LULC map11, and a LULC map of West Africa12.

In recent years, deep learning has gained significant traction in remote-sensing-related studies13, driven by technological advancements, such as data augmentation, non-linear activation, high-performance graphics processing unit (GPU), and cloud computing14. Several studies have indicated that deep learning consistently outperformed shallow machine learning methods in terms of overall accuracy15,16. For instance, deep learning methods achieved impressive results, with a median accuracy of approximately 95% for scene classification, around 92% for object detection, and about 91% for LULC classification using benchmark datasets17. Among the deep learning methods, Convolutional Neural Networks (CNNs) have emerged as one of the most popular and powerful algorithms for satellite remote sensing applications, particularly in LULC classification18. The compatibility of CNNs with satellite images can be attributed to their design, which is well-suited for processing multi-dimensional arrays19. Consequently, numerous studies have successfully applied CNNs to map LULC using various types of satellite images, including high-resolution20,21,22, medium-resolution23,24,25, and hyperspectral images26,27,28. A review of 200 papers on remote sensing applications using deep learning by Ma et al. (2019)17 revealed that most of LULC classification studies focused on high-resolution (\(\le\) 10 m) and hyperspectral images to harness their rich spatial or spectral information. Surprisingly, medium-resolution images (e.g., 30 m) received less attention despite their popularity and accessibility. Additionally, the review highlighted that the majority of studies relied on single images, with only a few attempting to analyze multi-temporal remote sensing data for LULC classification using CNNs. The limitation of applying CNNs to multi-temporal data is primarily due to their inherent design, which mainly processes single images. In response to this limitation, some studies have explored alternative approaches, such as Recurrent Neural Networks (RNNs)29,30, or hybrid models combining CNNs and RNNs for LULC classification31,32,33. However, these approaches often entail high computational costs and complex model structures, which can pose challenges for large-scale LULC mapping efforts.

On the other hand, the spatial convolution process, when combined with down-sampling methods like max-pooling, presents challenges due to information loss and reduced spatial resolution. To tackle these challenges, numerous studies have explored different approaches. Some have sought to simplify the standard Convolutional Neural Network (CNN) architecture by employing a homogeneous network consisting exclusively of convolutional layers, coupled with dimensionality reduction through increased stride34. Others have focused on multi-scale context aggregation, achieved through dilated convolutions without pooling or sub-sampling35. Additionally, there have been attempts to employ deconvolutional networks to recover spatial resolution compromised by down-sampling36. These studies have introduced valuable approaches to address information loss-related challenges, primarily in the context of scene classification or object detection tasks. However, the application of these methods to pixel-level classification, particularly in land use land cover (LULC) classification using satellite images, remains uncertain.

To overcome the limitations of conventional Convolutional Neural Networks (CNNs) in maintaining spatial details of LULC maps, Hirayama et al. (2022) introduced a software package, namely SACLASS29 that employs multitemporal satellite images with a standard CNN architecture for LULC mapping. SACLASS2 was used to create a 12-category LULC map for Japan with an overall accuracy of \(88.85\%\), surpassing recent LULC products for Japan in terms of spatial detail. In Hirayama’s method, a time series of images was generated for four seasons, encompassing winter, spring, summer, and autumn. The seasonal data were cleverly organized in a manner that enabled the extraction of both temporal and feature (bands and indices) information from individual pixels. Notably, this approach offers flexibility in the selection of satellite images and exhibits substantial potential for large-scale LULC mapping. However, a comprehensive exploration of its capabilities is insufficient, signifying a need for further research to fully tap into the potential of this approach.

Vietnam has attracted considerable attention from numerous international organizations for biodiversity and environmental conservation, primarily due to its remarkable levels of biodiversity, cultural diversity, and rapid economic development. In fact, Vietnam was ranked in the top 16 countries globally in terms of biodiversity richness37. The country is home to 54 ethnic groups, each contributing to its rich cultural heritage and distinct land use practices. However, the country faces pressing challenges due to a critical rate of biodiversity loss38 and environmental degradation39. These environmental issues are primarily attributed to the consequences of rapid population growth and economic expansion witnessed in recent decades. Among the direct contributors, the change in LULC stands out as one of the most significant issues. LULC change has led to the degradation and loss of critical ecosystems and habitats, endangering numerous species, causing degradation of natural resources, and triggering natural disasters. Hence, it becomes imperative to regularly map LULC on a national scale. This mapping effort plays a pivotal role in providing crucial information for biodiversity evaluation and natural disaster countermeasures for Vietnam.

JAXA has released several LULC products for Mainland Vietnam, including 50-m LULC maps from 2015 to 201840, 10-m LULC maps for 2007 and 201641, 30-m LULC maps from 1990 to 202042. This study is part of a series of research efforts focused on enhancing JAXA’s LULC mapping for Vietnam. Our objective is to create a high-resolution and high-accuracy LULC map by adopting and extending Hirayama’s SACLASS2 software to incorporate 6 seasons of satellite data. In this paper, Hirayama’s approach is denoted as the “time-feature CNN” approach. The contributions of this paper can be summarized as follows: (1) Providing a new and reliable LULC map for Vietnam by employing the time-feature CNN approach with the integration of 6 seasons of satellite data; (2) Proposing a straightforward and practical method for constructing a composite dataset of 6 seasons for Sentinel-2, facilitating its application in tropical, cloudy countries.

The remainder of this paper is organized in the following order: study area, results, discussions, and methods and materials.

Study area

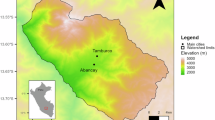

The study area encompasses Mainland Vietnam, covering approximately about 324,000 km\(^2\). Mainland Vietnam is characterized by diverse landscapes, featuring high mountains exceeding 1000 m in elevation, as well as hilly terrain that stretches from the northwest to the southeast. These highlands occupy about three-quarters of the total area. The remaining one-quarter of the land consists of low-lying areas, including the Red River Delta in the north and the Mekong River Delta in the south. These lowlands are complemented by flat coastal regions (see Fig. 1). Vietnam experiences a varied climate, transitioning from subtropical in the north to tropical in the south. The rainy or wet season, often referred to as the cloudy season, prevails from May to October. Since the economic revolution in 1986, Vietnam has undergone rapid changes in land use and land cover. Recent years have witnessed a swift urbanization process, resulting in the transformation of numerous farmlands and green areas into urban lands43. Despite this urbanization trend, Vietnam has managed to achieve a transition from net forest loss to net gain, primarily driven by the expansion of plantation forests through reforestation programs in recent decades44,45.

The study area is Mainland Vietnam, excluding some remote islands in the east. (a) The location of Vietnam on the world map, (b) The elevation map of Mainland Vietnam. A large area is covered by high mountains (> 1000 m). The flat lands are distributed along the coastal line (blue areas) and the two big deltas provide a majority of agricultural products for the country.

Results

Time series of Sentinel-2 images

To generate cloud-free Sentinel-2 composite images for the 6 seasons in 2020, we employed a straightforward approach that incorporated data from 2019, 2020, and 2021. The inclusion of data from both 2019 and 2021 served a dual purpose: it addressed data gaps caused by cloud masking and eliminated pixels with abnormal values. A comprehensive description of our approach can be found in the “Satellite images pre-processing” section. After a meticulous assessment of the resulting images, most of missing data were successfully replaced by valid data (refer to the link provided in the “Data Availability” section). While a few pixels with missing data persisted in the final images, addressing these would require a more intricate solution. At this stage, we accepted these minor data gaps for the sake of maintaining the simplicity and the practicality in our approach, particularly since Synthetic Aperture Radar (SAR) data would subsequently compensate for any missing optical data.

Figure 2 illustrates the enhancements achieved in Sentinel-2 images through our approach when compared to the conventional method (the normal approach). The improvements can be described in two main aspects: (1) Our approach effectively addressed the missing data problem by leveraging information from other years of the same season or from different seasons in the same year; (2) This approach successfully reduced the presence of undesirable pixels, such as cloudy pixels or abnormal values pixels due to incompleteness of the atmospheric correction. In the normal approach, clouds led to substantial missing data from Season 3 (S3) to Season 5 (S5). Conversely, our approach effectively filled these missing data.

Comparison between the normal approach and our approach for creating a time series of Sentinel-2 images with 6 seasons (S1-S6), an example for a part of Mekong River Delta in Vietnam. All images of 6 seasons, both for the normal approach and our approach, were produced using free Sentinel-2 level 2A images provided by ESA via Google Earth Engine46, by applying the “median()” method available on Google Earth Engine47. This figure was organized and presented using QGIS 3.10.4-A Coruna (https://qgis.org/en/site/).

LULC map of Mainland Vietnam

By fusing multi-temporal optical and SAR images, our LULC map (see Fig. 4) achieved an impressive overall accuracy of \(90.5\% \pm 1.2\%\) (see Supplementary Table S6). The average user’s and producer’s accuracy are also notably high, standing at \(90.2\% \pm 1.7\%\) and \(90.2\% \pm 1.6\%\), respectively. Nearly all categories exhibited an accuracy rate exceeding 85% for both user’s and producer’s accuracy. Specifically, the categories of water, urban/built-up, and rice demonstrated the highest user’s and producer’s accuracy, while other crops and grass/shrub categories exhibited the lowest accuracy (see Fig. 3). The lower accuracy in the other crops and grass/shrub categories may be attributed to the intra-category variability. Agriculture in Vietnam is well-known for its heterogeneous and fragmented lands, characterized by small-scale farming. This fact caused a diverse range of crop types with various cultivation practices, creating a higher possibility of misclassification from crops into other categories.

Within the forest category, there were misclassifications between evergreen forests and plantation forests. Plantation forests are typically characterized by the homogeneity of plant types and a cycle of harvesting. However, the presence of vigorous understory vegetation, common in tropical climates, along with the extended harvesting cycle spanning 10 to 20 years, results in plantation forests that are notably dense and appear very dark in satellite images. Consequently, this density and darkness sometimes lead to the misclassification of plantation forests as natural forests in satellite imagery.

To examine the performance of the classification method with different combinations of input images, a comparison was implemented between the resulting LULC map generated by using only optical images (Case 1), using only SAR images (Case 2), and fusing optical and SAR images (Case 3). The specifics of image selection are provided in Table 1.

The same CNN structure was employed (see Fig. 10) for all three cases, with the same number of training data (121,521 points). Our accuracy assessment for three cases relied on a validation dataset of 600 points described in the “Methods and materials” section. The results revealed a significant improvement in both the user’s and producer’s accuracy for most of the categories when combining optical and SAR data (see Fig. 3 and Supplementary Table S1–S6). Using only SAR images produced a LULC map with the lowest overall accuracy of \(79.6\% \pm 1.6\%\), while employing only optical data produced an overall accuracy of \(82.7\% \pm 1.5\%\). Remarkably, the integration of optical and SAR images achieved the highest overall accuracy of \(90.5\% \pm 1.2\%\). Figure 5 visually compares the classification results for three cases to a true-color Sentinel-2 image. In Case 1, the misclassification of grass/shrub and barren to urban/built-up areas caused overestimation for this category (see Fig. 5b). Case 2 improved urban/built-up area classification compared to Case 1, but finer objects like narrow roads remained a challenge (see Fig. 5c). Notably, Case 3 outperformed the other cases, offering a clear representation of road networks. The fusion of optical and SAR data not only resolved misclassifications present in the optical-only case but also accurately identified small features (see Fig. 5d).

Comparison of LULC maps for three cases. (a) Sentinel-2 RGB image, (b) LULC map for Case 1 (optical only), (c) LULC map for Case 2 (SAR only), (d) LULC for Case 3 (optical + SAR). The true-color satellite image in (a) was produced using the Sentinel-2 level 2A image (Red: band 4, Green: band 3, and Blue: band 2), provided for free by ESA via Google Earth Engine46. The maps in (b), (c) and (d) are classified LULC maps using SACLASS-2 software version 1.0 produced by Hirayama et al. (2022)9. This figure was organized and presented using QGIS 3.10.4-A Coruna (https://qgis.org/en/site/).

Comparison with other LULC products

For the purpose of comparing with our LULC map, this study selected available 10-m LULC maps for Vietnam, including three global LULC products for 2020 (DW, ESA, and ESRI), and one national LULC product from 2016 released by JAXA. To obtain DW’s LULC map, we computed the mode of all daily LULC maps of DW in 2020 for the “label” band using Google Earth Engine. ESRI’s, ESA’s, and JAXA’s product can be directly downloaded from their respective websites. To facilitate a meaningful comparison among these different LULC products, all the maps were reclassified to a common LULC category system, consisting of 7 categories: (1) water, (2) urban/built-up, (3) tree, (4) barren, (5) grass/shrub, (6) flooded vegetation (including mangrove trees), and (7) crops. Following this reclassification, the accuracy assessment was performed for the global LULC maps using the validation dataset comprising 600 points, as described in the “Methods and materials” section. The overall accuracy and its standard deviation for JAXA’s 2016 map were calculated based on the original error matrix provided with the LULC map on the JAXA’s website48. Our map achieved an overall accuracy of 93.2% ± 1.7%, surpassing DW with 80.1% ± 2.5%, ESA with 84.3% ± 2.3%, ESRI with 71.6% ± 2.9%, and JAXA’s 2016 map with 88.4% ± 0.6% (see Supplementary Table S7-S16).

A pairwise comparison was conducted by measuring the difference between our map and the selected LULC maps. Two pixels of the same location were considered a match if they belonged to the same LULC category. The proportion of matched pixels represents the level of agreement between the two maps. Our map had the highest agreement level with ESA’s map, with 76% of matched pixels (see Fig. 6c), followed by 74% for DW (see Fig. 6a), 71% for ESRI (see Fig. 6b), and 67% for JAXA’s 2016 map (see Fig. 6d). By visual comparison, Fig. 6c presents slightly fewer red areas (mismatched pixels) than 6a, 6c, and 6d, especially for the northwest, the Central highland, and the southeast.

To evaluate category-level agreement, the number of matched pixels was calculated for each LULC category in each comparison case. The percentage of matched pixels for a category was computed by dividing the number of matched pixels by the total number of pixels for that category. The total number of pixels for each category was obtained from our LULC map. The level of agreement was categorized based on the percentage of matched pixels: high agreement (\(\ge\) 60%), average agreement (30% - 60%), and low agreement (\(\le\) 30%). Figure 7 presents spider charts, illustrating the percentage of matched pixels for each LULC category. The results revealed that the agreement was not equally distributed among the categories. Specifically, the categories of barren, grass/shrub, and flooded vegetation exhibited significantly lower agreement compared to the others. DW and ESRI demonstrated a strong agreement with our product for water, urban/built-up, tree, and crops but showed low agreement for barren, grass/shrub, and flooded vegetation (see Fig. 7a,b). In contrast, ESA showed a better agreement with our map for barren, grass/shrub, and flooded vegetation, but a lower agreement for water and urban/built-up (see Fig. 7c). Our map and JAXA’s 2016 map showed high agreement for crops and tree category, low agreement for flooded vegetation, and average agreement for the other categories (see Fig. 7d).

A visual comparison was conducted with PlanetScrope’s images at 5 sites (see Fig. 8). In Site 1, DW and ESRI misclassified dense mangrove forests as the tree category, ESA overestimated mangrove forests and JAXA’s 2016 map misclassified water as crops. Site 2 and Site 3 displayed areas with solar panels in the center of the images. While all maps misclassified the solar panels in Site 2, our map accurately detected the shape and border of the solar panels. In Site 3, our map correctly classified solar panels, whereas the other maps misclassified them into grass/shrub (DW), crops (ESRI), barren (ESA), and trees (JAXA’s 2016 map). In Site 4, all the maps well classified the crop areas except for ESA, which classified rice fields into flood vegetation, while DW and ESRI tend to overestimate urban/built-up areas. In Site 5, our map and ESA outperformed the others in classifying the runway of the airport and the grassland in the golf course near the airport. In general, our map has addressed limitations existing in the other LUC maps and achieved significantly higher accuracy than other LULC maps for Vietnam.

The visual comparison between our map and the other LULC maps in 5 sites, using PlanetScope’s images as a validation. Site 1: Comprising a mangrove forest on the left half and a combination of mangroves and water areas for aquatic farming on the right half. Site 2 and Site 3: Areas with solar panels in the center of the images. Site 4: An area encompassing paddy fields in the lower half and other crops in the upper half. Site 5: Tan Son Nhat Airport and its surrounding area in Ho Chi Minh City.

Discussion

Improvements of our LULC map

The produced 12-category LULC map has higher overall accuracy (\(90.5\% \pm 1.2\%\)) than the other 10-m LULC maps for Vietnam. Our previous study49 revealed that increasing the number of LULC categories by one could result in an approximate 1% decrease in overall accuracy. This decline in overall accuracy is attributed to the higher likelihood of misclassification within the expanded set of categories. Therefore, an overall accuracy of about 88% is considered a standard overall accuracy for a LULC map with 12 categories. Several factors contributed to the high accuracy of our map: (1) An extensive training dataset consisting of 121,521 points covers a diverse range of land cover conditions, supporting our CNN model in accurately detecting various landscapes; (2) The combination of optical and SAR images mitigated their own limitation, significantly enhancing the map’s accuracy; (3) The utilization of the time-feature CNN approach improved the capability of preserving spatial details in the output LULC map.

Among the global LULC products, ESA was better than the other global LULC maps in terms of pixel-level accuracy assessment. In addition, ESA’s map also exhibited better spatial detail than DW and ESRI, attributed to its use of the Random Forest classifier, whereas DW and ESRI relied on deep learning with spatial convolution. A visual comparison highlighted much salt/pepper noise in the ESA’s map and the clustered and generalized patterns50 in DW’s and ESRI’s map. Furthermore, several limitations were identified in the other LULC products as follows: (1) ESA’s map tend to misclassify paddy field into flooded vegetation such as herbaceous wetland; (2) DW and ESRI tend to overestimate urban/built-up areas; (3) JAXA’s map of Vietnam in 2016 misclassified aquatic farming into rice. In optical imagery, distinguishing impervious surfaces from bare soil proves a challenge due to their similar high spectral values, resulting in misclassifications. The misclassifications are evident in LULC products reliant solely on optical images, such as DW’s map and ESRI’s map (as seen in the top-right corner of Site 4 in Fig. 8). By integrating ALOS-2/PALSAR-2 images with Sentinel-2 images, our map effectively mitigates the confusion between barren and urban/built-up areas. This is attributed to the distinct backscattering characteristics of flat bare soil in PALSAR-2 images, which differ significantly from urban. However, it’s worth noting that challenges persist in regions where rough-surfaced bare soil may lead to misclassification with urban/built-up, as highlighted by Sun et al. (2019)51. The utilization of L-band ALOS-2/PALSAR-2 data offers the advantage of capturing information beneath dense vegetation canopies, enhancing the mapping accuracy of flooded vegetation52 . Additionally, C-band Sentinel-1 data proves advantageous for rice mapping53. As a result, the combination of L-band SAR and C-band SAR with optical imagery in our mapping methodology addresses and minimizes the misclassifications between rice paddies and flooded vegetation. This is a notable improvement over the ESA’s map, which used only C-band SAR and optical images (Site 4 in Fig. 8).

In terms of spatial detail, our map outperformed DW and ESRI in resolving the fine LULC features but with slightly less detail than ESA’s map. Two factors influenced the spatial detail of our map: (1) The use of PALSAR-2/ScanSAR and AW3D images with a spatial resolution of 25 m and 30 m, which limited the ability to reflect 10-m-spatial-resolution features in Sentinel-2 images onto final LULC map; (2) The process of converting data type from “float” to “byte” after the normalization, which could lead to loss of information due to the equalization of pixels with a small difference in their values. The second factor revolves around the trade-off between spatial detail and computational cost. When using data in “float” type, the information loss could be reduced, but at the expense of significantly increased computational demand. As a result, we must strike a balance between the level of detail in the output map and the computational cost that we are willing to incur.

Overall, the Hirayama’s time-feature convolution approach allows us to avoid the geospatial down-sampling, thus preserving the spatial resolution of the output to a great extent. This addresses the limitation of the conventional spatial convolution used in previous global LULC products while allowing the use of a standard CNN architecture. The time-feature CNN approach proved several advantages as follows: (1) Allowing the integration of data from multiple sources (satellite images, meteorological data, climate data, etc.) without creating sub-models for different data sources, resulting in a relatively simple model structure; (2) The ability to work with data from various temporal and spatial resolutions, providing flexibility in sensor imagery combination; (3) The robustness in detecting categories with significant seasonal differences, such as deciduous forests and seasonal crops, due to its design for time series data.

The practicability of utilizing multi-year data for cloud-free optical image generation

In our study, we employed Sentinel-2 images from 2019, 2020, and 2021 to create median-composite images for the year 2020. Consequently, the output LULC map incorporated not only the LULC information of 2020 but also of 2019 and 2021 to some extent. While this approach may not be ideal for areas characterized by significant year-to-year changes in LULC, it is highly effective for places, where the LULC exhibits relatively small annual changes. Importantly, this approach stands out for its simplicity and ease of implementation when compared to complex methods used to recover missing data in optical imagery54,55. This advantage makes our approach readily applicable to missing data recovery on a large scale using Google Earth Engine.

The demand for high-resolution LULC maps in Vietnam

In response to the 2030 Agenda and Sustainable Development Goals (SDGs), the Vietnamese government has implemented several key national strategies and action plans. These include the National Action Plan for the implementation of the 2030 sustainable development agenda56, the National Climate Change Strategy to 205057, the National Action Programme on REDD+ by 203058. Notably, the Vietnamese government has set ambitious targets for land use and the forestry sector, aiming to reduce greenhouse emissions by 70% and increase carbon absorption sinks by 20% by 2030. To achieve these SDGs, evaluating the current condition, status, and trend of national natural resources, including LULC at the national scale, is of utmost importance. For such context, high-resolution LULC maps play a critical role in providing necessary information for land use management, forest monitoring under the REDD+ program, and the conservation of natural ecosystems of the country.

Potentials of time-feature CNN for future studies

The time-feature CNN approach proposed by Hirayama et al. (2022)9 is different from the conventional CNNs by the capability to perform convolution across temporal and spectral domains, instead of spatial domain. Unlike spatial convolution, which works on group of spatially correlated pixels, the time-feature convolution approach necessitates the consideration of pixels in the time-series profile and the spectral profile. In the time-series profile, features were organized based on the continuity of the time sequence, spanning from Season 1 to Season 6 (Fig. 9). As for the spectral profile, our emphasis is placed on grouping features primarily by the sensor and secondarily by high-to-low spatial resolution. The spectral bands of Sentinel-2 were sequenced from short to long wavelength, aligning with the electromagnetic spectrum’s natural order. Although vegetation indices are independent, they were positioned after the spectral bands, reflecting their derivation from Sentinel-2’s bands. Following Sentinel-2, features from Sentinel-1, PALSAR-2/ScanSAR, AW3D, OpenStreet map, Longitude, and Latitude were incorporated. It is obvious that the continuity of spectral profile is obstructed by the independence of feature bands from different sensors. In addition, there is a dearth of prior studies addressing the optimal arrangement of the spectral profile for LULC classification. It is, therefore, strongly recommended that future studies explore the optimal organization of data from different sensors to improve the accuracy of LULC classification. On the contrary, an alternative approach can be to independently extract features from different sensors and then combine them. This approach may help reduce uncertainties linked to the arrangement of spectral profiles from different sensors.

Recently, the availability of high spatial resolution satellite sensors (\(\le\) 10 m) has created numerous opportunities to acquire high-resolution time-series data on a large scale. The flexibility of utilizing time-series data from multiple sensors makes the application of the time-feature CNN for regional and global LULC mapping highly feasible. This approach has the potential to reduce discrepancies in global LULC maps when applying them to national and local scales, thanks to its ability to preserve geospatial patterns. Nevertheless, further research is needed to optimize the model’s structure and select optimal hyperparameters. Additionally, exploring the use of time-feature CNN with high-resolution images (\(\le\) 3m) for seasonal vegetation monitoring is a promising research direction.

The coarse resolution image may influence the high spatial resolution image in presenting spatial details when they are combined. To address this, studies on feature-level fusion, result-level fusion, or combined models based on time-feature CNN should be conducted to figure out the suitable option for the combination of low-resolution and high-resolution images. Lastly, the time-feature CNN approach, which relies on point-based training data, offers the advantage of reducing the time required for training data collection, as these data can be shared with pixel-based machine learning studies.

Conclusions

This study successfully generated a 10-m LULC map of Vietnam for the year 2020 with an impressive overall accuracy of \(90.5\% \pm 1.2\%\), surpassing the accuracy of existing 10-m LULC maps for Vietnam. The produced map by the time-feature Convolutional Neural Network showed substantial enhancements compared to other global LULC maps, including: (1) The map’s spatial detail outperformed LULC products utilizing the spatial convolution approach such as DW’s and ESRI’s map; (2) Notable improvements were observed in addressing limitations present in other products, including the confusion of paddy fields with flooded vegetation in ESA’s map, the overestimation of urban/built-up areas in DW’s and ESRI’s maps, and the inaccurate classification of aquatic farming as rice fields in JAXA’s map; (3) The produced map stands out with 12 distinct categories, surpassing the 9 categories of Dynamic World, 11 categories of ESA World Cover, and 10 categories of ESRI Land Cover; (4) Our map offers a detailed classification legend for forest categories (evergreen, deciduous, plantation, and mangrove), a separate identification of rice and other crops, and a dedicated category for aquaculture.

This study proposes a straightforward and practical approach for constructing a time series of optical images spanning 6 seasons using data from multiple years. This method is easily implemented on Google Earth Engine and adaptable to various satellite images for data gap filling. Its simplicity is robust for a large-scale application. The time-feature CNN approach exhibits significant potential for producing high-accuracy LULC maps for cloudy regions by leveraging a combination of optical and SAR images. Given these achievements, further exploration of the proposed CNN approach is recommended, including the investigations of the model’s structure, the hyperparameter selection, and the feature arrangement to comprehensively understand its capabilities.

Methods and materials

Classification algorithm

In this section, this study provide a comprehensive description of the time-feature CNN approach originally developed by Hirayama et al. (2022)9. Unlike conventional CNNs that perform convolution on the geospatial domain, this approach was designed to operate on a time-feature domain. Consequently, the input data for the CNN model consists of not an array of pixels covering a geospatial area but an array of a time series of features (bands or indices) for a single pixel. Initially, this method was applied to create a LULC map of Japan using satellite data with 4 seasons. However, it is believed that increasing the number of seasons might help to provide useful information for the discrimination among the LULC categories. As a result, the modifications were made on the original source code of SACLASS2 software to enable the processing of satellite data within 6 seasons. Details of the method are described as follows:

Initially, the satellite images from different sensors underwent the re-projection to the Geographic Coordinate System. The integration of satellite data from different sensors was executed on the basis of a tile, covering an area of 1\(^\text {o}\)x1\(^\text {o}\) (about 100 km\(^2\)). Totally, 60 tiles are needed to fully cover area of Mainland Vietnam. This study employed GDAL (Geospatial Data Abstraction Library) and NumPy in Python environment to read the TIFF (Tagged Image File Format) files of satellite images and converted them into NumPy array. It is essential to highlight that our satellite data includes features (spectral bands, polarizations, vegetation indices, etc.) exhibiting different value range. Sentinel-2 bands, for instance, range from 0 to about 10,000, while values of vegetation indices are from -1 to 1. To ensure an equal contribution from all features to the classification result, a normalization step was applied. This entailed the conversion value of all features to [0, 1] range (see Equation 1). Following the normalization, features with a spatial resolution greater than 10 m were resampled to 10 m, employing the bilinear interpolation. Subsequently, for each 1\(^\text {o}\)x1\(^\text {o}\) tile, all features for 6 seasons were combined and stored in the form of a NumPy array, referred to as a “combined image”.

Data in the combined image were arranged in a way that each pixel is considered as a function of time and feature. Here, time \(\in\) {S1, S2, S3, S4, S5, S6} (see Table 5) and feature \(\in\) {B2, B3, ..., GSI, VH, VV, ..., Lat, Lon}. Each pixel can be visualized as a two-dimensional grid, with a time axis on the horizontal dimension and a feature axis on the vertical dimension. Figure 9 shows examples of the grids in the form of greyscale color images. In the figure, there are 12 small images representing examples of training data for 12 LULC categories. The larger image is an enlarged view of one of the small images, providing a clearer depiction of the training data. It is worth noting that while a majority of features such as those from Sentinel-2, Sentinel-1, and PALSAR-2/ScanSAR exhibit seasonal variation, other features remain constant for all seasons. To preserve the local characteristics of the input data, latitude and longitude were added as features, positioning them at the edge of the image.

where \(N _{b,i}\) is the normalized value of pixel i of feature b, \(b _{i}\) is the value of pixel i of feature b before normalization, \(b _{min}\) is the minimum value of feature b, and \(b _{max}\) is the maximum value of feature b over the study area.

After processing satellite data, raster images were formed from the training points. These training points were collected for each 1\(^\text {o}\)x1\(^\text {o}\) tile and then exported to a text file. Each line in the text file contains data for a training point and includes a label of a category (numbered between 1 and 12 representing 12 LULC categories), latitude, and longitude information. To convert the latitude and longitude of a training point to indices of the corresponding row and column in a 1\(^\text {o}\)x1\(^\text {o}\) raster, the methods in Equation 2 and Equation 3 were used.

where \(x_{i}\) and \(y_{i}\) are the corresponding column and row of the training point i in the 1\(^\text {o}\)x1\(^\text {o}\) raster, \(lon_{i}\) and \(lat_{i}\) are the longitude and latitude of the training point i, \(lon_{min}\) and \(lat_{min}\) are the minimum longitude and minimum latitude of the \(1^\text {o}\)x\(1^\text {o}\) raster, \(column_{tot}\) and \(row_{tot}\) are the total column and row of the 1\(^\text {o}\)x1\(^\text {o}\) raster. In this study, each pixel has a size of 100 m\(^2\), which is equivalent to 8x\(10^{-9}\) degree\(^2\). Within 1 degree\(^2\), there are a total of 11133\(^2\) pixels. With the row and column indices, the value of the pixel was determined based on the numeric label representing the corresponding LULC category. The raster of training points, referred to as a training image, contains pixels with data and no data. Hereinafter, training pixels were referred as the pixels containing valid data. At the position of each training pixel in the combined image, the data were collected. For each 1\(^\text {o}\)x1\(^\text {o}\) tile, the data for all training pixels were collected and aggregated into a NumPy array. This Numpy array is the training dataset for the CNN model.

The CNN architecture consists of a sequence of two convolution layers followed by one max pooling layer, as illustrated in Fig. 10. To prevent overfitting to the local characteristics of the input data, a dropout layer was applied before the fully connected layer. Activation functions were used as follows: ReLU for the convolution layers and softmax for the output layer. The loss function was multiclass tolerance entropy. To search for the optimal calculation condition, preliminary training process was performed on the model. In this process, 80% of the training data was used for training, while the remaining 20% served as the validation set. Based on the experimental results, Adam36 was selected as an optimization algorithm, with a batch size of 128. Early stopping was implemented with a patience parameter set to 10 epochs, and the learning reduction had a patience parameter of 2 epochs. The training process terminated after 23 epochs. Instead of employing data augmentation techniques, the complete set of 121,521 training points was used for the final learning of the model.

Examples of input data for the CNN model. The small images are 12 examples of training data for 12 LULC categories. The large image illustrates the structure of a training point with a horizontal axis (time axis, consisting of 6 seasons) and a vertical axis (feature axis, consisting of 40 features). This structure of input data is an extending version of the data structure proposed by Hirayama et al. (2022)9 (4 seasons and 30 features).

The structure of the CNN model used in this study by modifying the original CNN proposed by Hirayama et al. (2022)9. There are 4 pairs of convolutional layers and 4 max-pooling layers. The convolutional layers use ReLU as the activation function and the Dense 1 layer (fully connected layer) uses the softmax function.

Reference data collection and accuracy assessment

To collect a large amount of training data, this study used the visual interpretation method on Sentinel-2 images, Google Earth Pro, Google Street View, and GPS photos taken from field surveys in 2018, 2019, 2020, and 2021. Our training data were collected in a point-based form and stored as vector data. Each training point represents a homogeneous LULC type with an area of 100 m\(^2\). To ensure a dense distribution of training data (see Fig. 11a), our team dedicated a tremendous effort to interpret about 171,000 samples for 12 LULC categories (see Table 2). 70% of the samples were used for the training process and the remaining 30% were used for preliminary accuracy assessment of the resulting map. The user’s accuracy derived from this preliminary accuracy assessment was used to calculate the sample size for random sampling of the validation data.

The validation dataset was collected separately from the training dataset using the stratified random sampling method. Our initial step involved estimating the sample size (n) using Equation 4, a method proposed by Cochran et al. (1977)59 and subsequently elaborated by Olofsson et al. (2014)60.

where N is the number of pixels in the LULC map used to create random samples, \({S(\hat{O})}\) is the desired standard error of the overall accuracy, \(W_{i}\) is the area proportion of LULC category i on the map, \(S_{i}\) is the standard deviation of the stratum i. \(S_{i}\) was calculated based on the user’s accuracy (\(U_{i}\)) of the category i (\(S_{i} = \sqrt{U_{i}(1 - U_{i})}\)). Our objective is to achieve 1% for the standard error of the overall accuracy, resulting in a sample size of 583 (rounded up to 600). Using Qgis software, we conducted a random distribution process to distribute 600 points to the corresponding stratum of the LULC map (see Fig. 11b). The true LULC type of all random points were identified through the interpretation of Google Earth Pro, Sentinel-2, and Planet Scope’s images.

To evaluate the accuracy of the LULC map in a statistically robust manner, we followed a recommended methodology proposed by Olofsson et al. (2014). Firstly, an error matrix of sample count was constructed based on the validation data. Table 3 showed a generalized example of the error matrix of sample count for a LULC map with k categories. Secondly, an estimated error matrix (see Table 4) was created by multiplying each row of Table 3 by the area proportion of the category in that row. The estimated area proportion in cell i,j of Table 4 was calculated using Equation 5.

where \(W_{i}\) is the area proportion of the category i on the map (\(W_{i} = {A_{m,i}} \div {A_{tot}}\), in which \(A_{m,i}\) is mapped area of category i and \(A_{tot}\) is the total area of the map), \(n_{ij}\) and \(n_{i\cdot }\) are from Table 3. The user’s accuracy (\(\hat{U}_{i}\)), the producer’s accuracy (\(\hat{P}_{i}\)) of category i, and overall accuracy (\(\hat{O}\)) are as follows:

The estimated variance of the overall accuracy [\(\hat{V}(\hat{O})\)], user’s accuracy [\(\hat{V}(\hat{U_{i}})\)], and producer’s accuracy [\(\hat{V}(\hat{P_{i}})\)] of category i are as follows:

It is noteworthy that there is no \(W_{i}^2\frac{n_{ii}}{n_{i\cdot }}(1-\frac{n_{ii}}{n_{i\cdot }})/(n_{i\cdot }-1)\) in the second term of Equation 12, because of \(j \ne i\)

Satellite images pre-processing

This study used satellite images from multiple sensors, including Sentinel-2, Sentinel-1, ALOS-2/PALSAR-2, ALOS/PRISM, and OpenStreetMap data. To capture seasonal variations, a median-composite image for each two-month period was created, except for ALOS/PRISM and OpenStreetMap data. Table 5 described details of input satellite data used in this study.

Sentinel-2

Sentinel-2 Level-2A (L2A) dataset46 provides radiometrically calibrated surface reflectance images, which is an advanced processing level from the Level-1C using Sen2Cor software61. Our study utilized both 10-m bands (B2, B3, B4, and B8) and 20-m bands (B5, B6, B7, B8A, B11, B12). We selected images with less than 30% of cloud to preliminary filter heavy cloud-contaminated images. After that, the cloud and its shadow were masked using a combination of Sentinel-2 Cloud Probability (s2cloudless), Cloud Displacement Index (CDI), and Directional Distance Transform (DDT). This cloud masking technique was applied to extensively mask cloud and cloud shadow of L2A images5.

During the investigation of Sentinel-2 images for Mainland Vietnam, the heavily cloud-contaminated images occurred from May to October, resulting in large areas of missing data for images in these months. To fill data gaps caused by the cloud and eliminate bad pixels, this study applied a simple and practical approach using a time series of multiple years as follows:

Firstly, monthly median-composite images were created for the years 2019, 2020, and 2021. Subsequently, median-composite images of 6 seasons were generated using the previously derived monthly images (see Equation 12).

where:

t \(\in\) {1,3,5,7,9,11},

Img_t_t+1_20 is the median-composite image between time t and time t+1 in 2020,

Median() refers to the median method for image collection in Google Earth Engine,

Img_t-1_20, Img_t_20, Img_t+1_20, and Img_t+2_20 are the median-composite images for time t-1, t, t+1, and t+2 in 2020, respectively,

Img_t_19 and Img_t+1_19 are the median-composite images for time t and time t+1 in 2019, respectively,

Img_t_21 and Img_t+1_21 are the median-composite images for time t and time t+1 in 2021, respectively.

In Equation 12, we doubled Img_t_20 and Img_t+1_20 for the sake of increasing their weights. Because our strategy is to ensure a major contribution of data in time t and t+1 to the resulting median-composite image.

Secondly, in the cases of \(t=1\) (January) and \(t=11\) (November), the Img_t-1_20 and Img_t+2_20 were removed, respectively. Because there are no such images for these seasons in 2020. In case \(t=5\), \(t=7\), and \(t=9\), we added Img_t-1_19, Img_t+2_19, Img_t-1_21, and Img_t+2_21 to Equation 12. Because large areas of missing data for those seasons required more images to fill the data gap.

The pre-processing for Sentinel-2 was implemented on Google Earth Engine, and the resulting images were downloaded to our local computer. To increase the number of feature bands, several vegetation indices were used (see from Equation 13 to 19), including Normalized Difference Vegetation Index (NDVI), Enhanced Vegetation Index (EVI), Green Normalized Difference Vegetation Index (GNDVI), Green-Red Vegetation Index (GRVI), Normalized Difference Water Index 1 (NDWI1), Normalized Difference Water Index 2 (NDWI2), Topsoil Grain Size Index (GSI).

Sentinel-1

This study used the Sentinel-1 GRD (Ground Range Detected) product on Google Earth Engine62. The GRD product provides calibrated and ortho-corrected images of 10-m C-band SAR (Synthetic Aperture Radar), including thermal noise removal, and radiometric calibration with pixels containing \(\sigma ^0\) values in the Decibel unit. For slope correction, the angular-based radiometric slope correction method63 was applied using the default model for vegetation covers and the Digital Elevation Model (DEM) from the Shuttle Radar Topography Mission product. To reduce the speckle noises, the Refined Lee Filter method was applied with a 3x3 moving window. The javascript code for the speckle filter was acquired from the supplementary material of Hird et al. (2017)64. Dual-band cross-polarization (VV and VH) were used for the calculation of the Difference and Ratio indices (see Equations 20 and 21). Furthermore, we incorporated two texture metrics for each polarization, specifically Sum Average and Dissimilarity, using the “ee.Image.glcTexture()” function in Google Earth Engine. The processed images have 8 bands, including \(\gamma _{VH}^0\), \(\gamma _{VV}^0\), Difference, Ratio, and 4 textured bands.

ALOS-2/PALSAR-2/ScanSAR data

The PALSAR/PALSAR-2 data of JAXA have been the most frequently used L-band SAR for forest mapping and coastal area change analysis65. Because of the ability of L-band microwaves to penetrate through dense vegetation, it has a good capability to detect dense forests and flooded vegetation such as mangrove forests. This study used 25-m PALSAR-2/ScanSAR level 2.2 images provided by JAXA via Google Earth Engine66. The level 2.2 product provides ortho-rectified and slop-corrected images using ALOS World 3D (AW3D) data. To remove the speckle noise, we applied a Refined Lee Filter with a 5x5 moving window and converted the pixel value from digital number (DN) to gamma-naught (unit in decibel) using Equation 22, in which CF is the calibration factor with the value of -83 dB67. HH and HV polarizations were used for creating radar indices, including Difference (see Equation 23), Ratio (see Equation 24), Normalized Difference Index (NDI) (see Equation 25), NLI index (see Equation 26)41, and two textured metrics (Sum Average and Dissimilarity) for each polarization. The processed image contains 10 bands, including \(\gamma _{HH}^0\), \(\gamma _{HH}^0\), Difference, Ratio, NDI, NLI, and 4 textured bands.

ALOS World 3D and OpenStreetMap data

Auxiliary data such as digital surface model (DSM) and OpenStreetMap are popularly used to improve the accuracy of land use land cover classification68,69,70,71. This study used altitude and slope derived from ALOS World 3D (AW3D) with 30 m spatial resolution72. Regarding OpenStreetMap data, vector data of road networks for 2020 were used, containing information on primary roads, secondary roads, and residential roads73. A distance to road map was derived from the raster map of the road network, which was the result of the conversion process from vector to raster on QGIS software.

Data availability

The 10-m LULC map of 2020 for Vietnam is available on the website of the Earth Observation Research Center (EORC) of JAXA. Users can download the map by accessing the following link: https://www.eorc.jaxa.jp/ALOS/en/dataset/lulc/lulc_vnm_v2309_e.htm. The thumbnail views of Sentinel-2 RGB images can be found at the following link: https://pen.envr.tsukuba.ac.jp/~phantom/S2_6seasons/. Due to the large file size of Sentinel-2 images, users who want to obtain 6 seasons of Sentinel-2 for Vietnam can directly send the request to the corresponding author. The satellite data can be shared after considering the user’s requests. In addition, the reference data can be shared upon a reasonable request.

References

Feddema, J. J. et al. The importance of land-cover change in simulating future climates. Science 310, 1674–1678 (2005).

Naikoo, M. W. et al. Analyses of land use land cover (lulc) change and built-up expansion in the suburb of a metropolitan city: Spatio-temporal analysis of delhi ncr using landsat datasets. J. Urban Manag. 9, 347–359 (2020).

Karakuş, C. B. The impact of land use/land cover (lulc) changes on land surface temperature in sivas city center and its surroundings and assessment of urban heat island. Asia-Pac. J. Atmos. Sci. 55, 669–684 (2019).

Glade, T. Landslide occurrence as a response to land use change: a review of evidence from new zealand. CATENA 51, 297–314 (2003).

Brown, C. F. et al. Dynamic world, near real-time global 10 m land use land cover mapping. Sci. Data 9, 1–17 (2022).

Zanaga, D. et al. Esa worldcover 10 m 2020 v100. zenodo (2021).

Karra, K. et al. Global land use/land cover with sentinel 2 and deep learning. In 2021 IEEE international geoscience and remote sensing symposium IGARSS, 4704–4707 (IEEE, 2021).

Takahashi, M. et al. Jaxa high resolution land-use and land-cover map of japan. In 2013 IEEE International Geoscience and Remote Sensing Symposium-IGARSS, 2384–2387 (IEEE, 2013).

Hirayama, S. et al. Generation of high-resolution land use and land cover maps in japan version 21.11. J. Remote Sens. Soc. Japan42, 199–216 (2022).

Xu, Y. et al. Annual 30-m land use/land cover maps of china for 1980–2015 from the integration of avhrr, modis and landsat data using the bfast algorithm. Sci. China Earth Sci. 63, 1390–1407 (2020).

Mirmazloumi, S. M. et al. Elulc-10, a 10 m european land use and land cover map using sentinel and landsat data in google earth engine. Remote Sensing 14, 3041 (2022).

Asenso Barnieh, B., Jia, L., Menenti, M., Zhou, J. & Zeng, Y. Mapping land use land cover transitions at different spatiotemporal scales in west africa. Sustainability 12, 8565 (2020).

Digra, M., Dhir, R. & Sharma, N. Land use land cover classification of remote sensing images based on the deep learning approaches: a statistical analysis and review. Arab. J. Geosci. 15, 1003 (2022).

Kattenborn, T., Leitloff, J., Schiefer, F. & Hinz, S. Review on convolutional neural networks (cnn) in vegetation remote sensing. ISPRS J. Photogramm. Remote. Sens. 173, 24–49 (2021).

Moharram, M. A. & Sundaram, D. M. Land use and land cover classification with hyperspectral data: A comprehensive review of methods, challenges and future directions. Neurocomputing (2023).

Campos-Taberner, M. et al. Understanding deep learning in land use classification based on sentinel-2 time series. Sci. Rep. 10, 17188 (2020).

Ma, L. et al. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote. Sens. 152, 166–177 (2019).

Maggiori, E., Tarabalka, Y., Charpiat, G. & Alliez, P. Convolutional neural networks for large-scale remote-sensing image classification. IEEE Trans. Geosci. Remote Sens. 55, 645–657 (2016).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. nature 521, 436–444 (2015).

Mohammadi, M. & Sharifi, A. Evaluation of convolutional neural networks for urban mapping using satellite images. J. Indian Soc. Remote Sens. 49, 2125–2131 (2021).

Saralioglu, E. & Gungor, O. Semantic segmentation of land cover from high resolution multispectral satellite images by spectral-spatial convolutional neural network. Geocarto Int. 37, 657–677 (2022).

Zhang, C., Wei, S., Ji, S. & Lu, M. Detecting large-scale urban land cover changes from very high resolution remote sensing images using cnn-based classification. ISPRS Int. J. Geo Inf. 8, 189 (2019).

Zhang, M., Lin, H., Wang, G., Sun, H. & Fu, J. Mapping paddy rice using a convolutional neural network (cnn) with landsat 8 datasets in the dongting lake area, china. Remote Sensing 10, 1840 (2018).

Ali, K. & Johnson, B. A. Land-use and land-cover classification in semi-arid areas from medium-resolution remote-sensing imagery: A deep learning approach. Sensors 22, 8750 (2022).

Zaabar, N., Niculescu, S. & Kamel, M. M. Application of convolutional neural networks with object-based image analysis for land cover and land use mapping in coastal areas: A case study in ain témouchent, algeria. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 15, 5177–5189 (2022).

Bhosle, K. & Musande, V. Evaluation of deep learning cnn model for land use land cover classification and crop identification using hyperspectral remote sensing images. J. Indian Soc. Remote Sens. 47, 1949–1958 (2019).

Guidici, D. & Clark, M. L. One-dimensional convolutional neural network land-cover classification of multi-seasonal hyperspectral imagery in the san francisco bay area, california. Remote Sens. 9, 629 (2017).

Hasan, H., Shafri, H. Z. & Habshi, M. A comparison between support vector machine (svm) and convolutional neural network (cnn) models for hyperspectral image classification. In IOP Conference Series: Earth and Environmental Science, vol. 357, 012035 (IOP Publishing, 2019).

Ienco, D., Gaetano, R., Dupaquier, C. & Maurel, P. Land cover classification via multitemporal spatial data by deep recurrent neural networks. IEEE Geosci. Remote Sens. Lett. 14, 1685–1689 (2017).

Benedetti, P. et al. A deep learning architecture for multiscale multimodal multitemporal satellite data fusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 11, 4939–4949 (2018).

Dou, P., Shen, H., Li, Z. & Guan, X. Time series remote sensing image classification framework using combination of deep learning and multiple classifiers system. Int. J. Appl. Earth Obs. Geoinf. 103, 102477 (2021).

Ienco, D., Interdonato, R., Gaetano, R. & Minh, D. H. T. Combining sentinel-1 and sentinel-2 satellite image time series for land cover mapping via a multi-source deep learning architecture. ISPRS J. Photogramm. Remote. Sens. 158, 11–22 (2019).

Song, H., Liu, Q., Wang, G., Hang, R. & Huang, B. Spatiotemporal satellite image fusion using deep convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 11, 821–829 (2018).

Springenberg, J. T., Dosovitskiy, A., Brox, T. & Riedmiller, M. Striving for simplicity: The all convolutional net. arXiv preprint arXiv:1412.6806 (2014).

Yu, F. & Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122 (2015).

Zeiler, M. D., Krishnan, D., Taylor, G. W. & Fergus, R. Deconvolutional networks. In 2010 IEEE Computer Society Conference on computer vision and pattern recognition, 2528–2535 (IEEE, 2010).

Thuaire, B. et al. Assessing the biodiversity in viet nam: Analysis of impacts from economic sectors. Tech. Rep., World Wide Fund for Nature (WWF-Vietnam) (2021). https://www.biodev2030.org/wp-content/uploads/2022/01/Final-Report_Biodiversity-assessment-in-Vietnam-Analysis-of-impact-of-economic-sectors-ENG.pdf [Accessed: August 25, 2023].

Nguyen, M.-H. & Jones, T. E. Predictors of support for biodiversity loss countermeasure and bushmeat consumption among vietnamese urban residents. Conserv. Sci. Pr. 4, e12822 (2022).

Shahbaz, M., Haouas, I. & Van Hoang, T. H. Economic growth and environmental degradation in vietnam: is the environmental kuznets curve a complete picture?. Emerg. Mark. Rev. 38, 197–218 (2019).

Truong, V. T. et al. Jaxa annual forest cover maps for vietnam during 2015–2018 using alos-2/palsar-2 and auxiliary data. Remote Sensing 11, 2412 (2019).

Hoang, T. T., Truong, V. T., Hayashi, M., Tadono, T. & Nasahara, K. N. New jaxa high-resolution land use/land cover map for vietnam aiming for natural forest and plantation forest monitoring. Remote Sensing 12, 2707 (2020).

Phan, D. C. et al. First comprehensive quantification of annual land use/cover from 1990 to 2020 across mainland vietnam. Sci. Rep. 11, 1–20 (2021).

Fan, P. et al. Urbanization, economic development, environmental and social changes in transitional economies: Vietnam after doimoi. Landsc. Urban Plan. 187, 145–155 (2019).

Meyfroidt, P. & Lambin, E. F. The causes of the reforestation in vietnam. Land Use Policy 25, 182–197 (2008).

McElwee, P. Reforesting “bare hills” in vietnam: Social and environmental consequences of the 5 million hectare reforestation program. Ambio: A J. Human Environ.38, 325–333 (2009).

European Union/ESA/Copernicus. Sentinel-2 msi: Multispectral instrument, level-2a. https://developers.google.com/earth-engine/datasets/catalog/COPERNICUS_S2_SR [Accessed: August 1, 2023].

Gorelick, N. et al. Google earth engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ.https://doi.org/10.1016/j.rse.2017.06.031 (2017).

Japan Aerospace Agency, Earth Observation Research Center (JAXA-EORC). “high-resolution land use and land cover map of mainland vietnam” (2020). https://www.eorc.jaxa.jp/ALOS/en/dataset/lulc/lulc_vnm_v2006_e.htm [Accessed: August 29, 2023].

Truong, V. T., Phan, C. D., Nasahara, K. N. & Tadono, T. How does land use/land cover map’s accuracy depend on number of classification classes?. SOLA 15, 28–31 (2019).

Venter, Z. S., Barton, D. N., Chakraborty, T., Simensen, T. & Singh, G. Global 10 m land use land cover datasets: A comparison of dynamic world, world cover and esri land cover. Remote Sensing 14, 4101 (2022).

Sun, Z., Xu, R., Du, W., Wang, L. & Lu, D. High-resolution urban land mapping in china from sentinel 1a/2 imagery based on google earth engine. Remote Sensing 11, 752 (2019).

Salem, A. & Hashemi-Beni, L. Inundated vegetation mapping using sar data: A comparison of polarization configurations of uavsar l-band and sentinel c-band. Remote Sensing 14, 6374 (2022).

Kraatz, S. et al. Comparison between dense l-band and c-band synthetic aperture radar (sar) time series for crop area mapping over a nisar calibration-validation site. Agronomy 11, 273 (2021).

Zhou, Y. et al. For-backward lstm-based missing data reconstruction for time-series landsat images. GIScience & Remote Sensing 59, 410–430 (2022).

Tahsin, S., Medeiros, S. C., Hooshyar, M. & Singh, A. Optical cloud pixel recovery via machine learning. Remote Sensing 9, 527 (2017).

The Socialist Republic of Vietnam. The national action plan for the implementation of the 2030 sustainable development agenda (issued in conjunction with decision no. 622/qd-ttg dated 10th may 2017 by the prime minister) (2017). https://vietnam.un.org/en/4123-national-action-plan-implementation-2030-sustainable-development-agenda [Accessed: September 3, 2023].

Nguyen, L. & Sarah, G. Vietnam: National strategy on climate change and the action plan on methane emissions reduction. Tech. Rep., United States Department of Agriculture, Foreign Agricultural Service (2022). https://www.fas.usda.gov/data/vietnam-vietnam-issues-national-strategy-climate-change-2050-and-action-plan-methane-emissions [Accessed: September 3, 2023].

The Socialist Republic of Vietnam. Decision on approval of the national action programme on the reduction of green-house gas emissions through the reduction of deforestation and forest degradation, sustainable management of forest resources, and conservation and enhancement of forest carbon stocks (redd+) by 2030 (2017). https://faolex.fao.org/docs/pdf/vie189912.pdf [Accessed: September 3, 2023].

Cochran, W. G. Sampling techniques (John Wiley & Sons, 1977).

Olofsson, P. et al. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 148, 42–57 (2014).

Main-Knorn, M. et al. Sen2cor for sentinel-2. In Image and Signal Processing for Remote Sensing XXIII, vol. 10427, 37–48 (SPIE, 2017).

European Union/ESA/Copernicus. Sentinel-1 sar grd: C-band synthetic aperture radar ground range detected, log scaling. https://developers.google.com/earth-engine/datasets/catalog/COPERNICUS_S1_GRD [Accessed: July 24, 2023].

Vollrath, A., Mullissa, A. & Reiche, J. Angular-based radiometric slope correction for sentinel-1 on google earth engine. Remote Sensing 12, 1867 (2020).

Hird, J. N., DeLancey, E. R., McDermid, G. J. & Kariyeva, J. Google earth engine, open-access satellite data, and machine learning in support of large-area probabilistic wetland mapping. Remote Sensing 9, 1315 (2017).

Ottinger, M. & Kuenzer, C. Spaceborne l-band synthetic aperture radar data for geoscientific analyses in coastal land applications: A review. Remote Sensing 12, 2228 (2020).

Japan Aerospace Agency, Earth Observation Research Center (JAXA-EORC). Palsar-2 scansar level 2.2. https://developers.google.com/earth-engine/datasets/catalog/JAXA_ALOS_PALSAR-2_Level2_2_ScanSAR [Accessed: August 2, 2023].

Shimada, M., Isoguchi, O., Tadono, T. & Isono, K. Palsar radiometric and geometric calibration. IEEE Trans. Geosci. Remote Sens. 47, 3915–3932 (2009).

Hurskainen, P., Adhikari, H., Siljander, M., Pellikka, P. & Hemp, A. Auxiliary datasets improve accuracy of object-based land use/land cover classification in heterogeneous savanna landscapes. Remote Sens. Environ. 233, 111354 (2019).

Lee, D. G., Shin, Y. H. & Lee, D.-C. Land cover classification using segnet with slope, aspect, and multidirectional shaded relief images derived from digital surface model. J. Sensors 2020, 1–21 (2020).

Al-Najjar, H., Kalantar, B., Pradhan, B. et al. Land cover classification from fused dsm and uav images using convolutional neural networks. remote sens 11: 1–18 (2019).

Schultz, M., Voss, J., Auer, M., Carter, S. & Zipf, A. Open land cover from openstreetmap and remote sensing. Int. J. Appl. Earth Obs. Geoinf. 63, 206–213 (2017).

Japan Aerospace Agency, Earth Observation Research Center (JAXA EORC). Alos global digital surface model “alos world 3d - 30m (aw3d30)”. https://www.eorc.jaxa.jp/ALOS/en/dataset/aw3d30/aw3d30_e.htm [Accessed: August 2, 2023].

Geofabrik. Aopenstreetmap data extracts. http://download.geofabrik.de/ [Accessed: August 2, 2023].

Acknowledgements

The article processing charge (APC) of this paper was supported by the research contract JX-PSPC-558032 and Mountain Science Center of University of Tsukuba. We also thank the supports of the Project for Human Resource Development Scholarship by Japanese Grant Aid (JDS) under the management of Japan International Cooperation Center (JICE).

Author information

Authors and Affiliations

Contributions

V.T.T. the conception, design of the work, the acquisition, analysis, and interpretation of data, the original draft, and the revised manuscript; S.H. time-feature CNN algorithm and source code, methodology, the original draft, the revised manuscript; T.T.H and D.C.P. reference data, validation, the original draft, the revised manuscript; T.T. the conception, methodology, the original draft, the revised manuscript; K.N.N. the method of creating time-series optical images, the conception, design of the work, the original draft, editing, and comprehensively revised the manuscript. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Truong, V.T., Hirayama, S., Phan, D.C. et al. JAXA’s new high-resolution land use land cover map for Vietnam using a time-feature convolutional neural network. Sci Rep 14, 3926 (2024). https://doi.org/10.1038/s41598-024-54308-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-54308-1

This article is cited by

-

Mapping decadal land cover dynamics in Sicily’s coastal regions

Scientific Reports (2024)