Abstract

In engineering, redundancy is the duplication of vital systems for use in the event of failure. In studies of human cognition, redundancy often refers to the duplication of the signal. Scores of studies have shown the salutary effects of a combined auditory and visual signal over single modality, the advantage of processing complete faces over facial features, and more recently the advantage of two observers over one. But what if the signal (or the number of observers) is fixed and cannot be altered or augmented? Can people improve the efficiency of information processing by recruiting an additional, redundant system? Here we demonstrate that recruiting a second redundant system can, under reasonable assumptions about human capacity, result in improved performance. Recruiting a second redundant system may come with a higher energy cost, but may be worthwhile in high-stakes situations where processing information accurately is crucial.

Introduction

Many natural as well as man-made systems are characterized by some form of redundancy. Redundancy is the duplication of vital systems for use in the event of failure1,2. For example, critical systems in the aviation industry have backup systems to ensure safe operation in case something goes wrong. Redundancy does not come without a cost; in aircraft the cost could be in excess weight, space, or price. In the human body the cost could be in the energetic requirements needed to support duplicate systems. We ask what the pros and cons of redundancy are in the cognitive system, which is responsible for processing information. Specifically, we test whether redundancy can actually lead to improved efficiency in information processing, and if so under what conditions. The approach we take is to consider a popular class of decision-making models called evidence-accumulation models3,4, which describe how humans accumulate evidence from the environment over time to make a decision.

(A) Depiction of a human processing an incoming signal using one information processing system. (B) Depiction of a human processing an incoming signal using an additional second, redundant information processing system. Recruiting such a second system may come with costs such as a higher resource demand. (C) When making ecologically plausible assumptions about human capacity, the recruitment of a second, redundant information processing system can result in improved performance as measured by reward rate across different levels of assumed information processing speed. The x-axis represents the speed of processing from low to high, as measured by drift rate v (see section “The diffusion decision model”). The y-axis represents the reward rate advantage of two processing systems over one processing system. Red bars indicate positive values suggesting a two systems advantage, whereas blue bars would have indicated an advantage for one system. This figure represents the outcome for a subsample of the parameter combinations. More complete results are reported in the text below.

Redundancy can occur either in the incoming information or the cognitive system processing this information. Redundancy of information sources has been studied extensively in psychology, often by comparing the detection of one signal when it is presented alone (e.g., is there a visual signal? yes/no) vs. the detection of any signal when multiple signals are presented (e.g., was there an auditory or a visual signal?). Common results have shown faster and more accurate responses on experimental trials with multiple (redundant) signals (redundant targets effect;5,6). In this article we focus on the second kind of redundancy—redundancy in the cognitive system itself, not the incoming information. Specifically, we are interested in investigating whether humans can improve their performance by recruiting a second, redundant system (Fig. 1B) that processes the same incoming information in the same way as the first system (Fig. 1A). This is highly relevant since humans often may not have control over external incoming information, but they may have control over whether or not to recruit a second, internal redundant processing system that may require spending resources that could otherwise be used elsewhere. To foreshadow our conclusions, we find that under ecologically plausible assumptions about human capacity, recruiting a second redundant cognitive system can indeed result in improved performance (Fig. 1C).

Evidence-accumulation models of human decision making

In this work, we consider the case of human decision-making. In the most commonly investigated scenario, a decision-maker has to choose between two competing alternatives. Such binary choice data are commonly analysed using evidence-accumulation models. These models assume that a decision-maker accumulates evidence until a decision threshold is reached. These models predict not only the choice, but also the time it takes to make that choice (i.e., response time, RT). One of the most popular evidence-accumulation models for analysing such choice-response-time data is the diffusion decision model (DDM;4). Here we investigate whether it is possible to obtain improved performance if one assumes that there is a second, redundant, diffusion process that accumulates evidence simultaneously. It is non-trivial to intuit the predictions of such systems’ redundancy. On the one hand adding another system could offer safety in case the first system fails to make a timely (and correct) decision. On the other hand, adding a redundant system could consume already-limited processing resources, and moreover it is not clear what should happen if two systems each end up at a different state; what should a decision maker do when facing conflicting recommendations? Our work offers a principled, model-based approach for investigating these issues. We outline below our modeling approach, and then report results from a comprehensive set of simulations that compare processing efficiency (reward rate) with one vs. two systems. To foreshadow the outcome, we have found that under ecologically plausible assumptions about human capacity, recruiting a second redundant cognitive system can indeed result in improved performance (Fig. 1C).

The diffusion decision model

Consider a simple decision task where on each trial, participants are presented with a flashing grid with different amounts of orange and blue. Their task is to indicate whether the presented grid contains more orange or more blue7. Practically, on each trial participants have to make a binary decision: either “More Orange” or “More Blue”8. A common approach to model such choices between two alternatives, and the time course of the decision, is by assuming that, on each trial, participants accumulate evidence in favor of either option. The DDM, depicted in Fig. 1A, assumes that a noisy diffusion process hovers between two boundaries until the amount of evidence surpasses either one bound (Response 1) or another (Response 2). In this example, Response 1 corresponds to “More Orange” and Response 2 to “More Blue”.

The standard DDM has seven parameters; however, for the present investigation, we focus only on the following relevant parameters: mean drift rate v, variability in drift rate \(s_v\), and response threshold a. [To briefly describe the remaining parameters, the DDM assumes that evidence accumulation begins at start point z. The time it takes to reach a decision boundary is referred to as decision time. The observed response time is obtained by adding the non-decision time parameter \(t_0\) to the decision time. The non-decision time captures processes such as stimulus encoding and motor processes for making the response (e.g., the time it takes to press a response button). It is commonly assumed that not only the drift rate varies across trials, but also the start point z (with variability \(s_z\)), and the non-decision time \(t_0\) (with variability \(s_{t_0}\)). In sum, the standard full DDM features the following seven parameters: threshold separation a, mean drift rate v, inter-trial variability of drift \(s_v\), start point z, inter-trial variability of start point \(s_z\), non-decision time \(t_0\), and inter-trial variability in non-decision time \(s_{t_0}\). The diffusion constant was fixed to \(s = 1\).] The drift rate corresponds to the rate of evidence accumulation and captures both a person’s ability and the stimulus difficulty. Higher drift rates correspond to better performance. The DDM assumes that the drift rate on each trial corresponds to a draw from a normal distribution with mean v (i.e., the mean drift rate across trials), and standard deviation \(s_v\) (i.e., variability of drift rate across trials). The distance between the two decision bounds, a, corresponds to a participant’s caution. Higher values of a correspond to a more cautious response style, where more evidence is required to trigger a response.
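To make the roles of v, \(s_v\), a, and \(t_0\) concrete, the following is a minimal sketch of a single simulated DDM trial. It uses a plain Euler scheme with Gaussian increments, which is not necessarily the approximation used for the simulations reported in this article. For brevity it omits the variability in start point and non-decision time, and it applies the five-second limit to decision time only. The function name and argument names are illustrative assumptions.

```python
import math
import random


def simulate_ddm_trial(v, a, s_v=0.0, t0=0.0, s=1.0, dt=0.001, max_t=5.0, rng=random):
    """Simulate one DDM trial (hypothetical helper, Euler approximation).

    Returns (choice, rt). choice is 1 for the upper bound, 0 for the lower
    bound, and None if no bound is reached within max_t seconds.
    """
    x = a / 2.0  # unbiased start point z = a / 2
    # Trial-specific drift rate: one draw from Normal(v, s_v).
    drift = rng.gauss(v, s_v) if s_v > 0 else v
    step_sd = s * math.sqrt(dt)  # diffusion noise per time step
    n_steps = int(round(max_t / dt))
    for i in range(1, n_steps + 1):
        x += drift * dt + rng.gauss(0.0, step_sd)
        if x >= a:
            return 1, i * dt + t0  # observed RT = decision time + t0
        if x <= 0.0:
            return 0, i * dt + t0
    return None, None
```

With a positive mean drift rate the upper bound is the correct response, so the share of trials returning 1 estimates accuracy.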

The two diffusion model (2DDM)

Here we investigate a novel alternative set-up where there is a second, redundant, diffusion process that accumulates evidence simultaneously (Fig. 1B). We term this model the two diffusion model (2DDM; as opposed to the standard diffusion model, which we refer to as 1DDM). We assume that the two diffusion processes share the same parameters. However, conditional on these parameter values, the two accumulation processes operate independently. Although the mean drift rate v and its variability across trials \(s_v\) are identical for both processes, the drift rate for a particular trial will be slightly different for each process since it is obtained by independently sampling a value from a normal distribution with mean v and standard deviation \(s_v\). [The same holds for the other parameters that vary across trials (i.e., z and \(t_0\)).] Now that we have introduced the second redundant process we need to specify how the two processes are combined to make a final decision.

Decision rule

When multiple decision processes (diffusion, in this example) contribute to a single response, there needs to be a mechanism for combining the outcomes of the different processes into a single, actionable decision. Here we assume that the decision-maker accumulates evidence until both processes are on the same side of a decision boundary (i.e., both above the top boundary, or below the bottom one). [In initial simulations not reported here, we have also explored a rule that assumes the decision-maker waits until both processes have crossed a decision boundary for the first time. If both processes finish at the same boundary, the choice naturally corresponds to that boundary, and RT is set to the time at which the slower process crossed the boundary (plus non-decision time). If the two processes finish at opposite boundaries, the response is randomly set to one of the two options and RT is set to the time the slower process finished. However, this rule seemed less plausible to us than the one used here. Furthermore, the rule used here can be considered as a conservative boundary case since waiting for both accumulators to agree results in slower RTs than other conceivable decision rules.] In other words, the decision-maker waits until the two processes agree. Consequently, it is not enough for a process to have crossed the boundary once if, at the time the other process crosses the boundary, the first process is again below threshold. This implies that a process may cross a boundary more than once before both processes agree at the same time. This also implies a conservative decision strategy for the 2DDM, since waiting for both accumulators to agree results in slower RTs than waiting for just the one (faster) process to complete. How can a 2DDM system ever be efficient? The critical point here is that a second redundant system, as in the 2DDM, slows down overall decisions, but may offer a corrective mechanism that overcomes erroneous decisions made by the 1DDM. This is visualised in Fig. 2. In this example, Response 1 is the correct choice; however, the 1DDM incorrectly ends up at the boundary corresponding to Response 2 (top panel). In the 2DDM (bottom panel), one process erroneously crossing the boundary corresponding to the incorrect choice does not necessarily result in an incorrect choice since a response is only triggered once both processes agree.
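The agreement rule can be sketched in a few lines. The sketch below assumes that each process keeps diffusing freely after crossing a boundary (it is not absorbed), so that it can return below threshold, and that a response is triggered at the first time step at which both processes lie at or beyond the same boundary. It uses a simple Euler scheme and ignores variability in start point and non-decision time. All names are illustrative assumptions.

```python
import math
import random


def simulate_2ddm_trial(v, a, s_v=0.0, t0=0.0, s=1.0, dt=0.001, max_t=5.0, rng=random):
    """Simulate one 2DDM trial under the 'wait until both agree' rule.

    Returns (choice, rt); choice is None if the two processes never agree
    within max_t seconds of decision time.
    """
    # Same parameters for both processes, but independent drift samples.
    drifts = [rng.gauss(v, s_v) if s_v > 0 else v for _ in range(2)]
    x = [a / 2.0, a / 2.0]
    step_sd = s * math.sqrt(dt)
    n_steps = int(round(max_t / dt))
    for i in range(1, n_steps + 1):
        for k in range(2):
            # Paths are not absorbed: a process may re-cross a boundary.
            x[k] += drifts[k] * dt + rng.gauss(0.0, step_sd)
        if x[0] >= a and x[1] >= a:
            return 1, i * dt + t0  # both at or above the upper bound
        if x[0] <= 0.0 and x[1] <= 0.0:
            return 0, i * dt + t0  # both at or below the lower bound
    return None, None
```

Because a response requires both processes to sit beyond the same boundary at the same moment, an early excursion of one process to the wrong boundary does not by itself produce an error.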

The 2DDM offers a corrective mechanism that can overcome erroneous decisions. In this example, Response 1 is the correct choice; however, the 1DDM incorrectly ends up at the boundary corresponding to Response 2 (top panel). In the 2DDM (bottom panel), one process erroneously crossing the wrong boundary does not necessarily result in an incorrect choice since a response is only triggered once both processes agree.

Quantifying performance

Below we report the outcomes of a number of simulation studies for investigating whether adding a second, redundant, diffusion process can result in improved performance. Performance in decision tasks could be quantified as the accuracy of choices, or their speed. We opted for a measure that takes both speed and accuracy into account, and operationalised improved performance via the concept of reward rate. For the interested reader we also present plots of the mean response time for correct and incorrect responses, and accuracy (see Fig. 4 and Appendix B in the online supplement). To allude to the results, we find that by and large mean RT for incorrect responses tends to be slower than for correct responses for any given value of threshold and drift rate. The reward rate is defined as the proportion of correct trials divided by the average duration of a trial9:

\[RR = \frac{PC}{MRT + D + (1 - PC)\,D_p} \tag{1}\]

where PC is the proportion of correct responses, MRT is the mean response time, D is the inter-trial interval, and \(D_p\) is a time-out after an incorrect response. Here we assume that the inter-trial interval is given by \(D = 1\,\mathrm{s}\), which can be considered to be a medium value (e.g.,10). For simplicity, we assume that there is no time-out after an incorrect response (i.e., \(D_p = 0\,\mathrm{s}\)). In each case, we report results for the two diffusion process cognitive architecture (2DDM), and for comparison also results for the standard set-up that assumes only one diffusion process (1DDM).
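As a worked illustration, the reward rate can be computed from a set of trials as follows. The sketch assumes the form of reward rate that is standard in this literature, namely the proportion correct divided by the sum of mean response time, inter-trial interval, and the expected error time-out. The function name and the toy data are hypothetical.

```python
def reward_rate(correct, rts, D=1.0, D_p=0.0):
    """Reward rate from per-trial accuracy (0/1) and response times in seconds.

    D is the inter-trial interval and D_p the time-out after an error,
    both in seconds.
    """
    n = len(correct)
    pc = sum(correct) / n   # proportion of correct responses
    mrt = sum(rts) / n      # mean response time
    return pc / (mrt + D + (1.0 - pc) * D_p)


# Toy data: three of four responses correct, each taking 0.5 s.
print(reward_rate([1, 1, 0, 1], [0.5, 0.5, 0.5, 0.5]))  # 0.75 / 1.5 = 0.5
```

With \(D_p = 0\) errors cost only the time spent on the trial, so the measure rewards fast responding as long as accuracy does not drop too far.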

Unlimited, limited, and fixed capacity

Adding a second redundant process may come with a cost. For instance, adding a second process could reduce the amount of resources available to each process. This can be captured with the concept of capacity11,12. When comparing the 2DDM to the 1DDM one can make different assumptions about the overall capacity of the cognitive system. Broadly speaking, processing capacity (sometimes referred to as workload capacity) can be thought of as the amount of resources available for processing. Capacity could be limited, whereby adding more processes takes a toll on the overall efficiency of each component, or it could be unlimited, whereby despite heavier load each process can be completed at the same rate11,12. Specifically, in the 1DDM setting, there is one stream of evidence accumulating, whereas in the 2DDM setting, there are two. Making the assumption that the drift rate v is identical for the 1DDM and both processes of the 2DDM effectively corresponds to assuming unlimited capacity. Unlimited capacity can be defined such that the speed of a process does not change when the second process is added13. Since the drift rate v measures the rate of information processing, setting the drift rate v to the same value in the 1DDM and both processes of the 2DDM results in unlimited capacity. This also means that the total information available in the 2DDM cognitive system is increased compared to the 1DDM. If one wishes to equate the total information available in the 1DDM and the 2DDM (a specific case of limited capacity, henceforth referred to as fixed capacity), one can simply divide the two drift rates in the 2DDM by the number of involved processes (i.e., 2). Furthermore, we also need to adjust the drift rate variability parameter \(s_v\) and the diffusion constant s by a factor of \(\sqrt{2}\). 
[The reasons for these adjustments are: (1) the drift rate on any given trial for each of the two processes in the 2DDM is a sample from a normal distribution with mean v and standard deviation \(s_v\), (2) at any time t, the total evidence accumulated across the two processes in the 2DDM is the sum of two normally distributed variables where the diffusion noise standard deviation for each process is given by s.] Let us denote the conversion factor by l:

\[v_{\text{2DDM}} = \frac{v}{l}, \qquad s_{v,\text{2DDM}} = \frac{s_v}{\sqrt{l}}, \qquad s_{\text{2DDM}} = \frac{s}{\sqrt{l}} \tag{2}\]

where \(l = 1\) in the case of unlimited capacity and \(l = 2\) in the case of fixed capacity. Values between 1 and 2 correspond to what can be conceptualised as limited capacity. Note that \(C = 1 / l\) can be interpreted as the workload capacity coefficient presented in articles such as13. That article and similar ones typically find that human workload capacity C(t) in simple perceptual judgments is roughly between 0.7 and 0.9 for most t (equivalent to \(1.1< l < 1.4\)), indicating limited capacity. These estimates will be revisited in the discussion to put our results into context.
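The capacity adjustment can be sketched as a small helper. It assumes, following the description above, that the drift rate is divided by l and that drift rate variability and the diffusion constant are divided by \(\sqrt{l}\); applying the square-root scaling to intermediate values of l is our reading of the text rather than something stated explicitly. Function names are hypothetical.

```python
import math


def adjust_for_capacity(v, s_v, s=1.0, l=1.0):
    """Per-process 2DDM parameters for conversion factor l (1 <= l <= 2)."""
    return v / l, s_v / math.sqrt(l), s / math.sqrt(l)


def capacity_coefficient(l):
    """Workload capacity coefficient implied by l, i.e. C = 1 / l."""
    return 1.0 / l


# Fixed capacity (l = 2): the two processes together carry the same mean
# drift and the same diffusion noise as a single 1DDM process.
v2, s_v2, s2 = adjust_for_capacity(v=1.5, s_v=1.13, s=1.0, l=2.0)
total_drift = 2 * v2              # means add across the two processes
total_noise = math.sqrt(2) * s2   # independent standard deviations add in quadrature
```

For \(l = 2\) the summed drift equals v and the summed noise equals s, which is the sense in which total information is equated across the 1DDM and the 2DDM.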

Threshold allowed to differ across 1DDM and 2DDM

In the simulations reported below, we assume that response caution, as represented by the threshold parameter, can be different for the 1DDM and 2DDM. We allow threshold to be different in these two model architectures since it is typically assumed that participants have at least some control over their threshold setting14. In contrast, it is typically assumed that humans do not have control over the setting of the rate of information processing, drift rate v (e.g.,14; but see15).

Since the 2DDM is inherently more cautious by implementing a decision rule that waits for both processes to agree, the threshold value has a different meaning in the 1DDM and 2DDM which complicates comparing the two cognitive architectures in a fair manner. Here we opted for an approach that computes reward rate for each architecture across a range of plausible threshold settings and then compares the two based on the maximum reward rate obtained within that threshold range. This can be thought of as a human who sets their threshold in an optimal manner.
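The comparison at the optimal threshold amounts to a maximisation over a threshold grid. The sketch below illustrates the idea for the 1DDM only, and as a stand-in for simulated reward rates it uses the well-known closed-form accuracy and mean decision time of the simple diffusion model without across-trial variability and with an unbiased start point. The same maximisation would be applied to simulated 2DDM reward rates. All function names are hypothetical.

```python
import math


def analytic_1ddm(v, a, t0=0.44, s=1.0):
    """Accuracy and mean RT of the simple DDM (no across-trial variability)."""
    pc = 1.0 / (1.0 + math.exp(-v * a / s**2))
    mean_dt = (a / (2.0 * v)) * math.tanh(v * a / (2.0 * s**2))
    return pc, mean_dt + t0


def reward_rate_from_summary(pc, mrt, D=1.0, D_p=0.0):
    return pc / (mrt + D + (1.0 - pc) * D_p)


def max_reward_rate(v, thresholds, summary_fn=analytic_1ddm):
    """Return (best threshold, maximum reward rate) over a threshold grid."""
    best = max(thresholds, key=lambda a: reward_rate_from_summary(*summary_fn(v, a)))
    return best, reward_rate_from_summary(*summary_fn(v, best))


# Twelve thresholds from 0.5 to 2.25 in equal steps.
thresholds = [0.5 + i * (2.25 - 0.5) / 11 for i in range(12)]
best_a, best_rr = max_reward_rate(1.5, thresholds)
```

Comparing architectures on their respective maxima sidesteps the problem that a given threshold value implies different degrees of caution in the 1DDM and the 2DDM.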

Results

Unlimited capacity

Unlimited capacity (\(l = 1\)). Reward rate for different threshold settings. Each panel displays the results for a different drift rate setting. The dotted lines indicate the maximum reward rate. The shading gradient indicates the proportion of trials simulated from the 2DDM that took longer than five seconds and have been excluded from the analysis. For most panels and most threshold settings, this proportion is negligible.

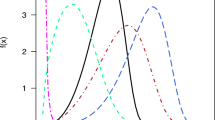

Each panel displays the difference in the maximum reward rate across threshold settings in the 2DDM and 1DDM, for a different setting of l. Positive values, represented by red bars, correspond to cases in which the 2DDM resulted in a higher maximum reward rate, negative values, represented by blue bars, show cases in which the 1DDM resulted in a higher reward rate.

First, we investigated the case of unlimited capacity (\(l = 1\)). Figure 3 displays the reward rate for different threshold settings. Each panel of Fig. 3 corresponds to a specific drift rate setting. The shading gradient indicates the proportion of trials simulated from the 2DDM that took longer than five seconds and have been excluded from the analysis. For most panels and most threshold settings, this proportion is negligible. The results for the 1DDM are displayed in blue, the ones for the 2DDM in red. Figure 3 demonstrates that, in the case of unlimited capacity, the maximum reward rate achieved by the 2DDM is higher than the one achieved by the 1DDM across all settings of drift rate. The top-left panel of Fig. 5 displays the difference between the maximum reward rate achieved by the 2DDM and 1DDM. Red bars correspond to cases in which the 2DDM resulted in a higher maximum reward rate, blue bars to cases in which the 1DDM resulted in a higher reward rate. Each bar of the plot corresponds to one panel of Fig. 3. In all cases, the 2DDM outperforms the 1DDM.

Figure 4 displays both mean RT for correct and incorrect responses, as well as accuracy, indicated by the size of the dots. As drift rate increases, accuracy increases and mean RT for correct and incorrect responses decreases. Within each panel, the diagonal line indicates where correct and incorrect RTs are identical for a given value of threshold and drift rate. Most dots fall above the diagonal line, indicating that mean RT for incorrect responses tends to be slower than for correct responses.

Fixed capacity

Next, we investigated the case of fixed capacity (\(l = 2\)). The bottom-right panel of Fig. 5 displays the difference between the maximum reward rate achieved by the 2DDM and 1DDM. Red bars correspond to cases in which the 2DDM resulted in a higher maximum reward rate, blue bars to cases in which the 1DDM resulted in a higher reward rate. The maximum reward rate achieved by the 2DDM is higher than the one achieved by the 1DDM across the majority of drift rate settings; however, there are also a substantial number of drift rate settings where the 1DDM achieves a higher reward rate.

Limited capacity

Finally, we investigated the case of limited capacity (i.e., \(1 < l < 2\)). The top-right and bottom-left panels of Fig. 5 summarise the results for \(l = 1.25\) and \(l = 1.5\). For both \(l = 1.25\) and \(l = 1.5\), the 2DDM always achieves a higher maximum reward rate than the 1DDM. Considering all four panels of Fig. 5, it is apparent that as l increases, the difference in maximum reward rate in favour of the 2DDM decreases until, for \(l = 2\), it flips for some cases in favour of the 1DDM.

Discussion

In this article we investigated if there could be efficiency in redundancy. In engineering, redundancy is defined as the duplication of vital systems for use in the event of failure, and is common practice in the aviation and the automotive industries. Such duplication could appear wasteful and inefficient, but may be required to ensure safe operation in case of critical failures. In this article, we investigated whether a cognitive architecture that has built-in redundancy can have advantages over standard cognitive architectures and, if this is the case, under which conditions. We defined redundancy as an additional processing component of the cognitive system, not as an additional external signal. This distinction matters; for the most part, people cannot control their external environment but can arguably make modifications to their internal information-processing system by flexibly allocating resources. We showed through extensive model simulations that (at least under certain conditions) redundancy in the cognitive system could lead to improved efficiency, as gauged by reward rate.

When is there efficiency in redundancy?

We considered the case of human decision making and used the diffusion model as the building block for our investigations. Specifically, we compared data simulated from the standard diffusion model (1DDM) to data simulated from a modified diffusion model in which two diffusion processes jointly produce a response (2DDM), thus featuring a redundancy component. In most cases, the 2DDM architecture resulted in more accurate but slower responses (see Fig. 4 and Appendix B in the online supplement), suggesting a trade-off between increased performance in terms of accuracy and decreased performance in terms of speed. However, our simulations also suggest that this 2DDM architecture can outperform the standard single diffusion architecture in terms of a higher reward rate. This is predominantly the case when one does not assume a fixed capacity of the cognitive system. The assumption of limited rather than fixed capacity is supported by experimental data such as those presented in Eidels et al.13, who found that human workload capacity, C, in simple perceptual judgements is roughly between 0.7 and 0.9 (equivalent to \(1.1< l < 1.4\)). Our simulation results indicated that for this particular range, the 2DDM always outperforms the 1DDM in terms of maximum reward rate when threshold is set in an optimal manner. Overall, our findings suggest that it can be beneficial for humans to recruit a second, redundant cognitive system to process incoming information in order to increase their decision-making performance. This may come at the cost of spending more resources, but it may be worthwhile to invest these additional resources, for instance, in scenarios where decisions have serious consequences.

Exemplary results for a system with one (“1DDM”), two (“2DDM”), three (“3DDM”), and four accumulators (“4DDM”), for both the fixed capacity case and the unlimited capacity case. Upper row: Reward rate as a function of threshold a for one drift rate setting (i.e., \(v = 1.5\)). Bottom row: corresponding timeout proportions (i.e., trials that took longer than five seconds and have thus been excluded from the reward rate calculations that are displayed in the upper row), for each setting of threshold a.

Related work has studied the characteristics of ensembles of neurons that accumulate evidence. Specifically, Zandbelt et al.16 investigated whether the common assumption of representing human information processing by an evidence-accumulation model that features a single information accumulation process can approximate the more ecologically plausible scenario in which humans combine the output of a number of neurons which each accumulate evidence. Zandbelt et al. demonstrated that, under plausible assumptions, this is indeed the case for response time (RT), and Cox et al.17 generalised this finding to data that additionally feature choices. However, our aim was not to investigate whether single process evidence-accumulation models can approximate the more realistic underlying neuronal processes. Instead, we were concerned with the efficiency of calling a redundant system into action. First, we focused on the cognitive system as a more abstract unit as opposed to its neuronal details. Second, we investigated whether it can be beneficial for humans from a performance point of view, as measured by reward rate, to invest more resources and recruit such a second system, for instance, in situations where decisions are highly consequential.

The limits of redundancy

The reader might ask what would happen if one were to add a third, fourth, etc., process to the system. Figure 6 (upper row) showcases for one drift rate setting (i.e., \(v = 1.5\)) how the reward rate behaves for a system with three accumulators (“3DDM”) and four accumulators (“4DDM”). The results are shown both for the assumption of unlimited capacity and fixed capacity. In order to achieve fixed capacity, for the 3DDM, \(l = 3\), and for the 4DDM, \(l = 4\). Both for fixed and unlimited capacity, efficiency approaches a limit. Specifically, for the fixed capacity case, the maximum reward rate for the 3DDM and 4DDM is lower than the ones for the 2DDM and 1DDM. For unlimited capacity, the maximum reward rate achieved by the 4DDM is lower than the one achieved by the 3DDM. Therefore, one can conclude that adding more than one or two additional accumulators will not result in increased efficiency. The bottom row of Fig. 6 displays for each of the four cognitive architectures (i.e., 1DDM, 2DDM, 3DDM, and 4DDM), the proportion of trials that took longer than five seconds. These were excluded from the analyses, because in a real experiment they may have been timeouts. As the number of processes increases, the proportion of timeouts increases, often to substantial values. This is another indication of the fact that adding more than one or two additional processes does not result in more efficient decision-making behaviour.

Redundancy in signals vs redundancy in systems

Effects of redundancy have been studied in psychology, especially since the 1970s and 1980s, by Miller, Townsend and others12,13,18,19,20,21. Such experiments typically employed a redundant target design: on each trial the participant could have been presented with one signal or two signals (or no signal at all, ‘catch trials’), and was asked to respond upon detection of any of the signals (i.e., signal A alone, or B alone, or AB). In some studies the signals could be within the same modality (say, two dots of light), and in others they could be from different modalities (auditory, visual). Trial type could be blocked or, more often, mixed within a block, and response times were calculated and plotted as distributions, separately, for signal A, signal B, and the double-signal AB. A finding common to many of these studies is a redundancy gain: responses on redundant target trials (AB) were faster than responses in any of the single-signal conditions. Clearly, there is empirical evidence for the salutary effects of redundancy, as well as theoretical work explaining how they come about. [The exact account of these redundancy gains has been a matter of debate, with explanations ranging from statistical facilitation to coactivation. The former account suggests that redundancy gains are a simple statistical artefact of choosing the faster of two (independent) stochastic processes, a ‘race model’. The latter account suggests that activation from two processes, or channels, is pooled into a single conduit, then compared against a single threshold, the ‘coactive model’. According to this account, activation is accrued more rapidly when pooled from two processes than from any one process alone, resulting in faster accumulation of evidence and hence faster responses with redundant signals. One could argue the debate has not been fully settled.]

The seminal work by Townsend and colleagues lays the foundations for our present work, but also differs in important ways. First, their work examined redundancy gains that emerge due to redundant signals, where additional information could be collected from the environment. Our present work takes this further to ask what happens when there is no additional information; can people improve the efficiency of information processing by recruiting an additional, redundant system relying still on the same (single) signal? We demonstrated the conditions under which such a redundant system would lead to improved performance. Second, it is relatively trivial to show that response times to redundant signals, or even redundant systems, would be faster than to a single signal in a canonical redundant target experiment. In such experiments, participants must respond upon detecting A, or B, or both (OR decision rule) and can stop as soon as the first process terminates, saving time. This is not the mechanism we investigated here. The mechanism investigated in the present paper does not offer faster responses, and rather relies on an error-correction mechanism. The decision stopping rule requires both processes, A and B, to finish (AND decision rule), and responses to AB displays under this decision rule are known to be slower than A alone or B alone (see for example Table 4 in13; see also theoretical treatment by22 and23). However, while the system awaits the completion of the slower of the two processes, an error in the faster process can be recovered. Responses using this AND rule are not a priori faster, but can be more efficient (given certain conditions) when accuracy comes into play, either by examining RTs conditioned on accuracy, or by considering a natural measure that accounts for both RT and accuracy: reward rate.

Conclusion

Our study offers a novel, exciting theoretical insight: we have shown that recruiting a second, redundant system for information processing could result in improved reward rate, and hence ‘efficiency in redundancy’. A related question then arises: Can people harness at will another subsystem to aid processing? What would be the metabolic consequences of such recruitment? We leave these questions to future investigations.

Methods

We used a \(12 \times 10\) simulation design: twelve threshold conditions, created by varying a from 0.5 to 2.25 in equal steps, crossed with ten drift rate conditions, created by varying v from 0.60 to 4.63 in equal steps. These parameter values are informed by a systematic review of parameter estimates obtained when fitting the DDM (ref. 24). Specifically, the lower and upper ends of the range of v values correspond to the 10% and 90% quantiles of the reported values for the v parameter. For the a parameter, the maximum considered value corresponds to the 90% quantile of the reported values. For the 2DDM, we added two lower threshold settings, with the same spacing as the original twelve, for a total of 14 possible threshold values. We did so for two reasons: (1) threshold values lower than those of the 1DDM are plausible for the 2DDM, because the 2DDM produces inherently more accurate responses and the reviewed values come from a systematic review that focused on the 1DDM; and (2) finding the maximum reward rate required extending the possible threshold settings to lower values. For the 3DDM and 4DDM, we added one further lower threshold setting, for a total of 15 possible threshold values.
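
The parameter grid described above can be sketched in a few lines. The following Python snippet is an illustration only (the original simulations were run in R), and all function and variable names are hypothetical. It assumes that "equal steps" means linearly spaced values including both endpoints, and that the extra threshold settings continue the same spacing below 0.5.

```python
def equal_steps(start, stop, n):
    """Return n linearly spaced values from start to stop (inclusive)."""
    step = (stop - start) / (n - 1)
    return [start + i * step for i in range(n)]


def extend_lower(values, extra):
    """Prepend `extra` values below values[0], keeping the same spacing."""
    step = values[1] - values[0]
    lower = [values[0] - k * step for k in range(extra, 0, -1)]
    return lower + values


# Twelve thresholds (a) and ten drift rates (v), as stated in the text.
thresholds_1ddm = equal_steps(0.5, 2.25, 12)
drift_rates = equal_steps(0.60, 4.63, 10)

# Assumed reading: two extra lower thresholds for the 2DDM (14 in total),
# three extra for the 3DDM and 4DDM (15 in total).
thresholds_2ddm = extend_lower(thresholds_1ddm, 2)
thresholds_3ddm = extend_lower(thresholds_1ddm, 3)

# Full 12 x 10 design for the 1DDM as (a, v) pairs.
design_1ddm = [(a, v) for a in thresholds_1ddm for v in drift_rates]
```

Under this reading, all extended threshold values remain positive, which is consistent with the 14 and 15 threshold settings reported for the multi-process models.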

For each condition, we simulated 10,000 trials for both the 1DDM and the 2DDM using the following parameter settings: \(t_0 = 0.44\), \(z = a/2\), \(s_z = 0.46\), \(s_v = 1.13\), \(s_{t_0} = 0.17\). These parameter values correspond to the medians of the values reported in ref. 24. Appendices A–C in the online supplement display results for two alternative settings of the start point variability parameter \(s_z\). The drift rate v, drift rate variability \(s_v\), and diffusion constant s were adjusted as described in Eq. (2) using the appropriate value of l. To simulate a decision rule that waits until both processes of the 2DDM agree, we needed to simulate the complete accumulation path for both processes, which we achieved using the random walk approximation outlined in Tuerlinckx et al. (ref. 25). We set the time increment to 0.001 s. Furthermore, we censored response times at 5 s, which seems plausible given typical trial timeouts in experiments: simulated trials with an RT larger than 5 s were excluded from the analyses. Appendix C in the online supplement displays the results under two alternative rules for handling trials with an RT larger than 5 s. In our simulations, the proportion of 2DDM trials with an RT larger than 5 s was negligible for most drift rate and threshold settings. All simulations were conducted in R (ref. 26). Code for reproducing the analyses is available from https://osf.io/m5hg3/.
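
To make the simulation procedure concrete, the following Python sketch shows a standard random walk approximation to a single diffusion process. It is a deliberately stripped-down illustration, not the authors' R code, and it may differ in detail from the scheme in ref. 25. It omits across-trial variability (\(s_z\), \(s_v\), \(s_{t_0}\)), the adjustment of Eq. (2), and the 2DDM agreement rule. The function name `simulate_trial` and the diffusion constant s = 1 are assumptions made here for illustration.

```python
import math
import random


def simulate_trial(v, a, t0=0.44, s=1.0, dt=0.001, max_rt=5.0, rng=random):
    """Simulate one trial of a single diffusion process via a random walk.

    Returns (rt, correct), or None if the RT would exceed max_rt (censored).
    Assumptions: unbiased start point z = a/2, no across-trial variability,
    diffusion constant s = 1 by default.
    """
    delta = s * math.sqrt(dt)                    # size of each evidence step
    p_up = 0.5 * (1.0 + v * math.sqrt(dt) / s)   # probability of an upward step
    x = a / 2.0                                  # evidence starts midway
    t = 0.0                                      # decision time so far
    while 0.0 < x < a:
        x += delta if rng.random() < p_up else -delta
        t += dt
        if t0 + t > max_rt:                      # RT above 5 s: drop the trial
            return None
    return (t0 + t, x >= a)                      # upper boundary counts as correct


# Example: a small batch of trials for one (v, a) condition.
rng = random.Random(1)
trials = [simulate_trial(v=2.0, a=1.0, rng=rng) for _ in range(500)]
kept = [trial for trial in trials if trial is not None]
accuracy = sum(correct for _, correct in kept) / len(kept)
mean_rt = sum(rt for rt, _ in kept) / len(kept)
```

A multi-process version would run several such walks on the same trial and apply the decision rule to their outcomes, which is why the full accumulation paths are needed.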

Data availability

The datasets generated and/or analysed during the current study are available in the Open Science Framework (OSF) repository, https://osf.io/m5hg3/. Code for reproducing the analyses can be found at: https://osf.io/m5hg3/.

References

1. Middleton, D. H. Avionic Systems (Longman Publishing Group, 1989).

2. Downer, J. When failure is an option: Redundancy, reliability and regulation in complex technical systems. In Number DP 53 in CARR Discussion Papers. Centre for Analysis of Risk and Regulation, London School of Economics and Political Science (2009).

3. Brown, S. D. & Heathcote, A. The simplest complete model of choice reaction time: Linear ballistic accumulation. Cogn. Psychol. 57, 153–178 (2008).

4. Ratcliff, R. A theory of memory retrieval. Psychol. Rev. 85, 59–108 (1978).

5. Kinchla, R. A. Detecting target elements in multielement arrays: A confusability model. Percept. Psychophys. 15, 149–158 (1974).

6. Grice, G. R. & Gwynne, J. W. Dependence of target redundancy effects on noise conditions and number of targets. Percept. Psychophys. 42, 29–36 (1987).

7. Love, J., Gronau, Q., Brown, S. & Eidels, A. Trust in human-bot teaming: Applications of the judge advisor system. In Proceedings of the Annual Meeting of the Cognitive Science Society, vol. 45 (2023). https://escholarship.org/uc/item/9gp3c088.

8. Ratcliff, R., Voskuilen, C. & Teodorescu, A. Modeling 2-alternative forced-choice tasks: Accounting for both magnitude and difference effects. Cogn. Psychol. 103, 1–22 (2018).

9. Bogacz, R., Hu, P. T., Holmes, P. J. & Cohen, J. D. Do humans produce the speed-accuracy trade-off that maximizes reward rate? Q. J. Exp. Psychol. 63(5), 863–891 (2010).

10. Bogacz, R., Brown, E., Moehlis, J., Holmes, P. & Cohen, J. D. The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced choice tasks. Psychol. Rev. 113, 700–765 (2006).

11. Townsend, J. T. & Ashby, F. G. Stochastic Modeling of Elementary Psychological Processes (Cambridge University Press, 1983).

12. Townsend, J. T. & Eidels, A. Workload capacity spaces: A unified methodology for response time measures of efficiency as workload is varied. Psychon. Bull. Rev. 18, 659–681 (2011).

13. Eidels, A., Townsend, J. T., Hughes, H. C. & Perry, L. A. Evaluating perceptual integration: Uniting response-time- and accuracy-based methodologies. Attent. Percept. Psychophys. 77, 659–680 (2015).

14. Donkin, C., Brown, S. & Heathcote, A. Drawing conclusions from choice response time models: A tutorial. J. Math. Psychol. 55, 140–151 (2011).

15. Rae, B., Heathcote, A., Donkin, C., Averell, L. & Brown, S. The hare and the tortoise: Emphasizing speed can change the evidence used to make decisions. J. Exp. Psychol. Learn. Mem. Cogn. 40, 1226–1243 (2014).

16. Zandbelt, B., Purcell, B. A., Palmeri, T. J., Logan, G. D. & Schall, J. D. Response times from ensembles of accumulators. Proc. Natl. Acad. Sci. 111(7), 2848–2853 (2014).

17. Cox, G. E., Logan, G. D., Schall, J. D. & Palmeri, T. Decision making by ensembles of accumulators. PsyArXiv 2020, 1–65 (2020). https://psyarxiv.com/qdk7b/.

18. Townsend, J. T. & Nozawa, G. Spatio-temporal properties of elementary perception: An investigation of parallel, serial, and coactive theories. J. Math. Psychol. 39, 321–359 (1995).

19. Townsend, J. T. & Ashby, F. G. Stochastic Modeling of Elementary Psychological Processes (Cambridge University Press, 1983).

20. Miller, J. Divided attention: Evidence for coactivation with redundant signals. Cogn. Psychol. 14, 247–279 (1982).

21. Miller, J. Channel interaction and the redundant-targets effect in bimodal divided attention. J. Exp. Psychol. Hum. Percept. Perform. 17, 160–169 (1991).

22. Colonius, H. & Vorberg, D. Distribution inequalities for parallel models with unlimited capacity. J. Math. Psychol. 38, 35–58 (1994).

23. Townsend, J. T. & Wenger, M. J. A theory of interactive parallel processing: New capacity measures and predictions for a response time inequality series. Psychol. Rev. 111, 1003–1035 (2004).

24. Tran, N.-H., Van, M. L., Heathcote, A. & Matzke, D. Systematic parameter reviews in cognitive modeling: Towards a robust and cumulative characterization of psychological processes in the diffusion decision model. Front. Psychol. 11, 608287 (2021).

25. Tuerlinckx, F., Maris, E., Ratcliff, R. & De Boeck, P. A comparison of four methods for simulating the diffusion process. Behav. Res. Methods Instrum. Comput. 33, 443–456 (2001).

26. R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria (2019). https://www.R-project.org/.

Acknowledgements

We thank Scott Brown and Guy Hawkins for helpful discussions.

Author information

Contributions

Q.F.G. wrote the main manuscript and conducted the analyses. A.E. conceptualized the study and wrote the main manuscript. R.M. provided invaluable conceptual input. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gronau, Q.F., Moran, R. & Eidels, A. Efficiency in redundancy. Sci Rep 14, 17109 (2024). https://doi.org/10.1038/s41598-024-68127-x

DOI: https://doi.org/10.1038/s41598-024-68127-x