Abstract

Fruit tree identification that is quick and precise lays the groundwork for scientifically evaluating orchard yields and dynamically monitoring planting areas. This study aims to evaluate the applicability of time series Sentinel-1/2 satellite data for fruit tree classification and to provide a new method for accurately extracting fruit tree species. Therefore, the study area selected is the Tarim Basin, the most important fruit-growing region in northwest China. The main focus is on identifying several major fruit tree species in this region. Time series Sentinel-1/2 satellite images acquired from the Google Earth Engine (GEE) platform are used for the study. A multi-scale segmentation approach is applied, and six categories of features including spectral, phenological, texture, polarization, vegetation index, and red edge index features are constructed. A total of forth-four features are extracted and optimized using the Vi feature importance index to determine the best time phase. Based on this, an object-oriented (OO) segmentation combined with the Random Forest (RF) method is used to identify fruit tree species. To find the best method for fruit tree identification, the results are compared with three other widely used traditional machine learning algorithms: Support Vector Machine (SVM), Gradient Boosting Decision Tree (GBDT), and Classification and Regression Tree (CART). The results show that: (1) the object-oriented segmentation method helps to improve the accuracy of fruit tree identification features, and September satellite images provide the best time window for fruit tree identification, with spectral, phenological, and texture features contributing the most to fruit tree species identification. (2) The RF model has higher accuracy in identifying fruit tree species than other machine learning models, with an overall accuracy (OA) and a kappa coefficient (KC) of 94.60% and 93.74% respectively, indicating that the combination of object-oriented segmentation and RF algorithm has great value and potential for fruit tree identification and classification. This method can be applied to large-scale fruit tree remote sensing classification and provides an effective technical means for monitoring fruit tree planting areas using medium-to-high-resolution remote sensing images.

Similar content being viewed by others

Introduction

China is the largest fruit producer and grower in the world1, with a diverse range of fruit species. The fruits from Xinjiang, which is in western China, are the most well-known among them. Xinjiang has cultivated fruit for a long time and is known for producing several excellent fruit species2. However, due to the large area of fruit tree cultivation in the region, the land is fragmented, and intercropping of fruit trees with grain crops is a common practice. The traditional method of dividing the planting area of fruit trees through manual surveys and hierarchical reporting not only consumes manpower and material resources but also suffers from subjectivity, poor timeliness, and lack of spatial–temporal perspectives3,4. Therefore, it is difficult to meet the demand for precise management of fruit trees5. Remote sensing technology, on the other hand, is widely used in agricultural resource surveys, such as estimating agricultural planting areas, due to its advantages of being objective, timely, and capable of obtaining large-scale ground area information6. For example, the MSI multispectral sensor carried by the Sentinel series of satellites provides a powerful technical means for extracting orchard planting areas, monitoring growth status, and estimating yields due to its high resolution, wide coverage, and strong timeliness7. In addition, the development of domestic and foreign remote sensing big data and cloud computing platforms such as Google Earth Engine (GEE), European Open Science Cloud (EOSC), Alibaba Cloud, and Pixel Information Expert (PIE) has strongly supported research on the extraction of various spatial scale land cover information8. Because of their massive store capacity, effective processing capability, and open-sharing mode, remote sensing cloud computing platforms—led by GEE—have quickly emerged as crucial instruments for extracting large-scale planting structures9. Determining the sorts of trees is crucial to defining and extracting planting sites for fruit trees. Crop identification research in the past has mostly concentrated on large-scale crops like cotton, corn, rice, winter wheat, and so forth10,11,12,13, with little research on fruit trees and forestry, and a concentration on southern tree species including citrus, mangroves, tea plantations, and rubber trees14,15,16,17. Research on forestry and fruit tree identification in the arid regions of Xinjiang is scarce, and studies using medium-to-high-resolution remote sensing images to identify fruit trees are relatively rare, mainly focusing on drones and close-range remote sensing18 (e.g., Ye et al.19 utilized ground-based hyperspectral sensor to detect the spectral reflectance of citrus canopies to identify the ripeness of citrus fruits, while Jorge et al.20 used a drone with LIDAR to produce three-dimensional structural models for identifying apricot trees).

The pixel-based classification method often results in “salt and pepper noise”, which affects classification accuracy21. In contrast, the object-oriented (OO) classification method considers factors such as pixel context, object scale, and spatial consistency, effectively handling salt and pepper noise in images and improving classification robustness. For example, Liu et al.22 examined the phenological features of rice using Sentinel-2 remote sensing imagery on the GEE platform. Several object-oriented machine-learning models were developed in light of this approach. The combination of object-oriented approaches and RF achieved the highest extraction accuracy for rice, with an overall accuracy (OA) of 96.83% and a kappa coefficient (KC) of 0.934. Zhao et al.18 integrated Sentinel-1, Sentinel-2, and SRTM data using GEE and applied various object-oriented and machine-learning methods to extract multiple fruit trees. The results showed that the object-oriented approach could effectively reduce noise and accurately extract the area of multiple fruit trees when combined with RF. Zhang et al.23 applied Sentinel-2A images to extract distribution information of bamboo forests using eCognition software, demonstrating that using the RF in an object-oriented classification method can effectively reduce the “salt and pepper effect”.

In land cover information extraction, the phenomenon of “different objects having the same spectrum” often occurs in single temporal images, and the spectra of different tree species’ organs vary with the season24. Therefore, obtaining the “key temporal phase” is crucial. It is necessary to consider not only the features of NDVI, VV/VH that can represent the temporal information of fruit trees, but also the contribution of the original features to the classification at each temporal phase, and comprehensively select the best time window.

With the development of computer interpretation technology, rich spectral features, texture features, phenological features, vegetation index features, etc., can be extracted from images to improve classification accuracy. However, as the dimensionality of features increases, it can lead to the “curse of dimensionality”25 resulting in decreased classification accuracy and slower computation speed due to increased complexity26. Therefore, it is crucial to solve the problem of selecting the optimal features from the original features for classification. Previous research has largely focused on the identification and classification of forest, crop, and grassland vegetation characteristics, with few studies specifically targeting orchards. For instance, Cheng et al.27 classified forests in the Qinling region using RF and the Random Forest Recursive Feature Elimination (RF-RFE) method to choose the best spectral–spatial–temporal (SST) features. They were able to achieve an OA and a KC of 86.88% and 0.86, respectively. Zhao et al.28 were able to achieve an OA of 83%, a 3% improvement over the initial feature combination, and an increase in the fine classification accuracy of grasslands. Furthermore, the use of classification models is essential for increasing classification accuracy. For instance, Loukika et al.29 used the RF algorithm in GEE to classify multi-temporal land use and land cover (LULC) in the Munneru River basin in India, achieving OA of 94.85% and 95.8% with Landsat-8 and Sentinel-2, respectively, outperforming SVM and CART models. Similarly, Mansaray et al.30 compared the accuracy of SVM and RF in rice mapping using multi-source imagery across 14 datasets and found that RF generally exhibited higher accuracy, with 10 out of 14 datasets showing overall accuracies superior to SVM, with the maximum difference reaching 4.1%. The aforementioned research shows that although attempts have been made to extract orchard trees, other elements of orchards, such as texture, spectral, temporal, and geographical features, have not been thoroughly investigated. Orchard tree identification is still restricted to currently available crop identification techniques, and long-term series of orchard tree identification and spatial distribution mapping are still absent. Research on orchard tree extraction based on medium-to-high-resolution images is therefore desperately needed, as is the integration of spectral, polarization, texture, and other data.

In summary, this study aims to use high-resolution images in GEE to select the key temporal phases and optimal feature combinations for fruit tree identification. It employs an object-oriented approach combined with machine learning to classify fruit tree species in the study area in detail, thus obtaining the spatial distribution area of fruit trees. This provides visual information for relevant departments during fruit tree surveys and aids in decision-making. The research objectives are as follows: 1. Use Sentinel-1/2 remote sensing images combined with six categories of classification features, including spectral, phenological, texture, vegetation index, red edge index, and polarization features, to extract the key temporal phases for fruit tree identification. 2. Use RF to evaluate the optimal classification combination based on feature importance. 3. The combination of object-oriented analysis with four machine learning methods—RF, CART, Gradient Boosting Decision Tree (GBDT), and SVM—establishes a rapid, accurate, and effective method for automated orchard extraction.

Study area and data

Study area

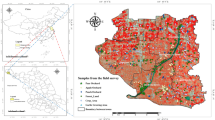

China’s Tarim Basin, located in Xinjiang, is well-known for its favorable growth conditions for specialty fruits, including apples, walnuts, aromatic pears, apricots, and jujubes31. The study area (Fig. 1) is situated on the northern border of the Tarim Basin, in the delta oasis of the Weigan River-Kuqa River. With geographic coordinates spanning from 82° 58′ to 83° 31′ E and 41° 18′ to 41° 32′ N, it is a classic alluvial fan oasis. With its continental arid environment, large temperature variations, high evaporation, plenty of solar radiation, extended sunshine hours, and other advantageous natural features, the region is well-suited for the growth of fruit farms and forestry32.

Study area: (a) China’s geographic coordinates are displayed in light blue, whereas the Xinjiang Uygur Autonomous Region is displayed in dark blue. (b) Dark green indicates the study area’s position within the Xinjiang Uygur Autonomous Region. (c) Within the study area, Akwustan Township, the main study region, is indicated in yellow. (d) Sentinel-2 imagery was uniformly and randomly marked at 495 sample points, which represent nine common land cover types, based on field surveys (Maps created in ArcMap 10.2, http://www.esri.com. Boundaries made with free vector data provided by National Catalogue Service for Geographic Information, https://www.webmap.cn/commres.do?method=dataDownload. The satellite imagery data source: Sentinel-2, https://developers.google.com/earth-engine/datasets/catalog?filter=COPERNICUS%2FS2).

Satellite data

Sentinel-1 data

Sentinel-1 is a set of two satellites with four acquisition modes for C-band synthetic aperture radar (SAR). In this study, the IW mode was utilized, which is the primary mode for land applications. The IW mode, which is the main mode for land applications, was used in this investigation. The vertical transmit/horizontal receive (VH) and vertical transmit/vertical receive (VV) bands are included in the dual-polarization that this mode offers33. The GEE platform provided Sentinel-1 radar data (data source: https://developers.google.com/earth-engine/datasets/catalog/COPERNICUS_S1_GRD#bands) for the period of January 1, 2022, to December 31, 2022. The median synthesis approach was used to create the final monthly composite images.

Sentinel-2 data

With a five-day revisit time, the Sentinel-2A/B satellites are outfitted with the Multi-Spectral Instrument (MSI), which comprises 13 bands encompassing the visible, near-infrared, and short-wave infrared spectra34. Sentinel-2 data were also acquired for this investigation using Level-1C products on the GEE platform (data source: https://developers.google.com/earth-engine/datasets/catalog/COPERNICUS_S2_HARMONIZED). Top-of-atmosphere reflectance (TOA) is provided by these products, which also experience geometric correction (including orthorectification and spatial registration) and radiometric calibration. The information was collected between January 1, 2022, and December 31, 2022. Owing to cloud and shadow noise, images with a cloud cover of less than 10% were chosen, and a cloud-free median composite image was produced. Furthermore, Sentinel-2 data for the full year 2022 was acquired in order to create a 10-day NDVI time series for phenological study.

Ground truth data for training and validation

Two field surveys were carried out by the research team between May 26 and June 3, 2021, and June 28 and July 7, 2022, respectively. Apples, walnuts, jujube, aromatic pears, little white apricots, and peaches were among the fruit tree planting varieties that were identified. However, the fruit tree species were separated into walnuts, jujubes, pears, and other fruit trees due to the scarcity of particular fruit plants. Additionally, other land cover types were delineated, including cropland, grassland, bareland, construction land, and water, resulting in a total of 495 typical land cover sample points. The detailed sample selection and classification are shown in Table 1. For validation purposes, the sample plot centers of characteristic fruit trees were delineated using high-resolution Google Earth remote sensing images (data source: https://www.google.com/intl/zh-CN/earth/about) combined with field survey data, based on the vector map data of characteristic forest and fruit industry resources in the Weiku Oasis in 2022 from the Xinjiang Academy of Forestry Sciences. Ultimately, there was an 8:2 ratio between the training and validation sets.

Methods

Technical route

The workflow of this study includes the following aspects: (1) sample collection and data preprocessing, (2) image segmentation using the Simple Nom Iterative Clustering (SNIC) algorithm, (3) construction of datasets for six classification features, (4) determination of key temporal phases for classification using multi-temporal feature change curves and contribution analysis, (5) optimization of classification features using RF feature importance, and (6) comparison of eight different models constructed using the original image, SNIC segmented image, and RF, CART, GBDT, and SVM algorithms to identify fruit trees and estimate the planting area, as shown in Fig. 2.

Technical workflow (Maps created in ArcMap 10.2, http://www.esri.com. Figures created in OriginPro 2022 SR1, https://www.originlab.com, and Visio 2021, https://visio.iruanhui.cn).

Hyperpixel segmentation based on simple non iterative clustering (SNIC)

SNIC is an advanced superpixel segmentation method based on simple linear iterative clustering (SLIC)35. It effectively eliminates the “salt and pepper” phenomenon in pixel-based classification by considering similar pixels as basic units and integrating multiple aspects such as spectral band statistical features, topological relationships, and adjacency relationships36. The most widely used remote sensing image segmentation algorithm at the moment is the multi-scale segmentation algorithm included in Definiens’ eCognition v.9.037. However, large-area remote sensing image segmentation is not a good fit for this segmentation algorithm, which is restricted to small-area remote sensing image segmentation. Large-area remote sensing image segmentation has become feasible with the rise of cloud computing systems such as GEE38. In this study, the original images were segmented in this study using the SNIC technique made available by Google Earth Engine’s object-oriented, for consistency, the method is referred to as the object-oriented approach throughout the article. The segmentation scale, which should not be excessively or underly segmented, has an impact on the accuracy of segmentation. Eight segmented images were produced for model training and comparison using seed pixel spacings of 10, 20, 30, 40, 50, 60, 70, and 80 in order to determine the ideal segmentation scale. Furthermore, three crucial characteristics must be established: neighborhood size of 256, connectedness of 8, and compactness of 5.

Classification feature dataset

Spectral bands

Rich spectrum information is provided by the original Sentinel-2 bands (Table 2), which span spectral ranges including visible, near-infrared, red-edge, and short-wave infrared. With spatial resolutions of 10 m, 20 m, and 60 m, Sentinel-2 has high detail capture capability, making it suitable for identifying fine surface features39. As a result, it is crucial to calculate vegetation indices, textural, phenological, and red-edge features from the original bands and to use them as classification features.

Vegetation index features

By examining the reflectance of vegetation in various bands, vegetation indices offer crucial information regarding the growth and health of fruit trees. They are of great significance in fruit tree identification40. The classification features in this investigation were determined using NDVI, EVI, and NDWI (Table 3). The most used index at the moment is NDVI, which can improve the index’s responsiveness to fruit trees by removing the influence of the sun’s zenith angle, topography, and atmosphere41. EVI is an improvement over the NDVI index, introducing the blue band to reduce the atmospheric impact on the index42. An indicator of vegetation used to track water content is the NDWI. The near-infrared reflectance of water in plant cells is high, and NDWI is a useful tool for monitoring the water content of fruit tree canopies43.

Red edge index features

The three unique red-edge bands (B5, B6, B7) and the narrow near-infrared band (B8a) of Sentinel-2 are particularly sensitive to vegetation growth conditions, giving them a significant advantage in crop classification and identification44,45. By incorporating three narrow-band red-edge normalized vegetation indices and two normalized difference red-edge indices into the classification features, the accuracy of machine learning classification is effectively improved, as shown in Table 4.

Polarization features

The VV and VH polarized bands in Sentinel-1 radar data are commonly used in radar remote sensing and play an important role in fruit tree monitoring and classification49. In this study, time series data for these two polarizations (Table 5) were obtained to explore the impact of multi-temporal polarization data on the accuracy of fruit tree identification and classification, selecting appropriate temporal phases for feature construction.

Texture features

Based on the vegetation spectral curve, plants exhibit the highest reflectance peak in the B8 near-infrared band, with significant reflectance differences in the near-infrared band50. Therefore, the B8 band of Sentinel-2 images was selected as the input image for the Gray-Level Co-occurrence Matrix (GLCM), and statistical measures of eight texture features were extracted using a sliding window size of 4. These features include angle second moment, contrast, correlation, variance, inverse difference moment, diversity, sum entropy, and entropy, as detailed in Table 6.

Phenological features

This study used the Timesat software51 to extract 13 phenological features, including the start and end dates of the growing season, the length of the growing season, and the base period, among others (Table 7). Sentinel-2 data were used to reconstruct a 10-day NDVI time series for 2022. According to the Timesat manual, we duplicated the 2022 time series twice, creating virtual data for 2021 and 2023, resulting in a total of 108 images, with 36 scenes per year. Next, three filtering algorithms were used to fit the NDVI curve, and the Savitsky–Golay (S–G) filter with the best-fitting effect was selected based on the comparison of correlation coefficients and standard deviations (Fig. 3). The relevant parameters were set as follows: season parameter: 1 (suitable for crops with one season per year), S-G window size: 9 (nptperyear/4), number of envelope iterations: 3, adaptation strength: 10 (strongest adaptation of the upper envelope), and season start/end method: 1 (seasonal amplitude).

Comparison of filtering algorithms (r represents the correlation coefficient, and SD represents the standard deviation. Figures created in OriginPro 2022 SR1, https://www.originlab.com).

Feature optimization

The RF algorithm is not only important in remote sensing image classification but also for feature optimization, The RF algorithm calculates feature importance using the out-of-bag (OOB) error52, evaluating the model as follows:

In the equation, Vi represents the importance of the ith feature variable, Ai denotes the out-of-bag correct rate for the ith feature, n is the total number of generated decision trees, n represents the moment when noise interference is added, and o represents the moment when no noise interference is added. \({B}_{{n}_{t}}^{i}\) represents the OOB error of the tth decision tree for the ith feature after adding noise interference, while \({B}_{{n}_{t}}^{i}\) represents the OOB error of the tth decision tree for the ith feature before adding noise interference. A considerable drop in OOB accuracy following noise addition suggests that the feature is highly important and has a major effect on the sample prediction outcomes. According to their relevance scores, the features in this study were arranged in decreasing order and incorporated one after the other into the classification model. Feature set optimization was carried out when it was found that the overall accuracy fluctuated and then stabilized.

Classification methods and accuracy evaluation

In this study, we employed four machine-learning algorithms: RF, SVM, CART, and GBDT. For RF, CART, and GBDT, the number of decision trees was set to 50. SVM was configured with the C-Support Vector Classification (C_SVC) problem type and the Radial Basis Function kernel (RBF), with gamma set to 1 and cost set to 10. Model accuracy was assessed using four metrics from the confusion matrix: Overall Accuracy (OA, %), Kappa Coefficient (KC, %), User Accuracy (UA, %), and Producer Accuracy (PA, %). In addition, this paper sets the area accuracy, which is used to validate the extraction accuracy of orchard areas by calculating the ratio of the difference between the surveyed data and the classified tree area to the surveyed data, thus reflecting the differences in the classification models.

Results and analysis

Image segmentation and determination of optimal segmentation scale

Segmenting the entire area, the OA and KC for each model vary with seed pixel spacing accuracy, as shown in Table 8. It can be observed that the OO + RF segmentation had the highest overall accuracy, and at seed pixel spacings of 10, 30, 40, and 70, the segmentation results of each model were relatively good. The descriptive statistics are shown in Table 9. A small, representative area within the study region was selected and segmented at different pixel spacings, as shown in Fig. 4. Over-segmentation can lead to similar pixel sets being divided into several different superpixels, with the segmentation units too small to express some features such as semantic or structural information in spectral and texture features. Under-segmentation can result in pixel sets containing superpixels with different attributes, where the segmentation units are too large, leading to large areas containing multiple different semantic and structural information, thus causing misclassification of land cover types53. Therefore, based on the above analysis, the optimal segmentation scale was determined to be a seed pixel spacing of 30.

The segmentation results under different seed pixel distances (Maps created in ArcMap 10.2, http://www.esri.com. The satellite imagery data source: Sentinel-2, https://developers.google.com/earth-engine/datasets/catalog?filter=COPERNICUS%2FS2).

Phenological parameter analysis

To analyze the phenological features, we selected nine types of land cover with five sample points each and averaged their values as phenological feature values. Using the S-G filter to reconstruct the NDVI time series, we extracted phenological information. As shown in Figs. 5 and 6, phenological metrics such as Start t., End t., Length, Peak t., Peak val., Ampl., Start val., End val., L.integral, and S.integral exhibit significant differentiation. The distinctions among different land cover types are particularly pronounced, especially for L.integral, S.integral, Start val., and End val., which align with the feature selection results discussed in “Feature set optimization analysis” section.

NDVI phenological features (Group the 13 phenological features into 3 sets based on their value ranges for comparative analysis. Figures created in OriginPro 2022 SR1, https://www.originlab.com).

NDVI phenological information chart (Maps created in ArcMap 10.2, http://www.esri.com. The satellite imagery data source: Sentinel-2, https://developers.google.com/earth-engine/datasets/catalog?filter=COPERNICUS%2FS2).

First, compared to other vegetation types like cropland and grassland, fruit trees have a longer growing season. The growth periods of these other vegetation types are shorter. Fruit trees are clearly a seasonal crop. Phenological features, such as growth and decline rates and integral values during the growing season, fluctuate significantly as a result. L.derive and L.integral have the highest degree of differentiation among the parameters. The average L.derive for fruit trees is 0.09, while cropland and grassland have respective averages of 0.04 and 0.01. Likewise, cropland and grassland have average L.integrals of 4.84 and 3.05, respectively, whereas fruit trees have an average L.integral of 9.43. For land cover types such as water, construction land, and bareland, the NDVI values remain relatively stable throughout the year. Consequently, their amplitude, growth, and decline rates, and integral values show minimal fluctuation, with most values hovering around 0–2. These characteristics make them easily distinguishable from fruit trees. However, differentiating among these land cover types based solely on phenological features remains challenging, necessitating additional classification features.

Secondly, when distinguishing among different fruit tree species, despite some clustering in phenological indicators such as the start, end, and length of the growing season, there are notable differences in NDVI values and start/end values of the growing season. For instance, pear trees and other fruit trees differ by 1 day at the start of the growing season, but the start values are 0.46 and 0.35, respectively, indicating significant variability. Additionally, parameters representing the accumulation of NDVI over time, such as large and small integrals, also provide differentiation. Pear trees have the highest integral values (14.20 and 12.91), followed by walnut trees (12.21 and 10.59). Jujube trees show a larger difference, with integral values of 6.25 and 5.65, while other fruit trees have the lowest values (5.06 and 4.69).

In summary, phenological feature parameters can effectively distinguish different species of fruit trees to a certain extent.

Selecting the optimal time window

Varied land cover types have varied phenological properties, which make it possible to identify them using time-series NDVI data. To determine the average NDVI values for each of the nine land cover types, we chose five sample locations for each of the monthly median images from 2022. Throughout the year, grassland and areas devoid of vegetation consistently display low NDVI values, as illustrated in Fig. 7. March has the lowest NDVI values for vegetation, which includes cropland and other fruit trees including walnut, jujube, and pear. The NDVI value for vegetation begins to rapidly increase and differentiate in April. June marks the peak NDVI values for jujube and walnut, at 0.35 and 0.39, respectively. September is when cropland and pear NDVI values peak at 0.44 and 0.30, respectively, The NDVI value of other fruit plants peaks in August at 0.37. From May to September, the differences between the various types of land cover are most noticeable. Senescence begins in September when the vegetation’s NDVI values start to fall quickly. We included the NDVI values’ standard deviation to further hone our research. The observable variations in standard deviation between different land cover types over the course of months show how effective NDVI is at differentiating between different fruit tree species.

Typical land cover NDVI curve and one standard deviation (Figures created in OriginPro 2022 SR1, https://www.originlab.com).

However, determining the optimal time window for classification requires further investigation. To address this, we introduced VV/VH polarization for differentiation (Fig. 8). In VV polarization, the values for walnut and other fruit trees in May are − 10.89 and − 12.79, respectively. In June, the values for cropland and jujube are − 5.65 and − 11.22, respectively. In July, the values for walnut and jujube are − 9.17 and − 10.54, respectively. In September, the values for water and construction land are − 11.20 and − 13.39, respectively. This approach compensates for the indistinct classifications based on NDVI alone. In VH polarization, it is evident that July, September, and October are the months with the greatest differentiation among land cover types.

VV/VH polarization characteristic curve (Figures created in OriginPro 2022 SR1, https://www.originlab.com).

By integrating NDVI values and VV/VH polarization data, we selected the growing season from April to October, during which land cover types are more distinguishable, to analyze the classification differences across different months in detail. Initially, we combined object-oriented and classification methods, choosing a seed pixel distance of 30 as the optimal segmentation scale to study the classification accuracy of land cover types over various months (Table 10). It was observed that September had the highest OA and KC among all models, with values of 94.34% and 93.44%, respectively. The OO + RF model achieved the highest classification accuracy across all months. Subsequently, we used the OO + RF model to analyze the importance of classification features in different months18, as shown in Fig. 9. April and September had the highest number of significant features. In April, the most influential features were NDre1 (Red Edge Index Feature, 16.01) and VH (Polarization Feature, 17.40). In September, the top-ranked features were B8_contrast (Texture Feature, 17.59) and Start t. (Phenological Feature, 16.57). Notably, B8_contrast had the highest feature contribution value in September at 17.59 and remained the most significant feature in August and October, with values of 16.61 and 16.64, respectively. The analysis of these classification features supports the feasibility of choosing September as the optimal time window.

Classification feature heat map (Figures created in OriginPro 2022 SR1, https://www.originlab.com).

Therefore, considering all factors comprehensively, selecting September as the classification window is optimal for both distinguishing land cover types and conducting subsequent feature optimization.

Feature set optimization analysis

Finally, September 2022 was chosen as the best time window. The feature importance was computed using the OO + RF model. Classification features were sequentially incorporated from high to low importance, and the overall accuracy change was observed. This process is illustrated in Fig. 10, when the number of classification features increased to 12 (indicated by the red point), the overall accuracy did not show significant improvement, reaching a stable state at 94.60%. However, upon further increase to 40 features (represented by the green point), the overall accuracy reached its maximum value of 94.86%, when all features were included, meaning there were 44 classification features, the overall accuracy reached 94.34%. This indicates that the method effectively reduces redundancy and enhances the model’s capability to identify fruit trees.

Feature importance analysis and feature selection: (a) Feature importance statistics and overall accuracy change chart, (b) feature selection strategy (Figures created in OriginPro 2022 SR1, https://www.originlab.com).

Overall, the importance ranking is as follows: spectral bands > phenological features > texture features > red edge index features > vegetation index features > polarization features. The three most significant contributors are texture features, phenological features, and spectral bands. Within the texture features, B8_contrast, B8_diss, B8_var, and B8_sent exhibit high contribution rates to classification. Among the phenological features, Start t., Peak t., Length, and L.deriv demonstrate high contribution rates to classification. In terms of spectral bands, B7, B3, B12, and B8 show high contribution rates to classification. Within the red edge index features, NDVIre3n has a significantly higher contribution rate compared to its other four indices. Among vegetation index features, NDVI has the highest contribution rate. Regarding polarization features, the contribution rates of VV and VH polarizations are approximately similar.

Three methods were employed for feature selection: 1. Selecting the top 12 features with the highest contribution rates, which correspond to when the overall accuracy reaches a stable state. These features encompass texture, phenological, and spectral features. 2. Selecting the highest contributing feature within each category, comprising B8_contrast, Start t., B7, NDVIre3n, NDVI, and VH. 3. Filtering the features based on their importance from high to low. Features were grouped in sets of five, resulting in nine possible combinations to choose from. The detailed methods are presented in Table 11. Ultimately, based on the criterion of overall accuracy, the first method was chosen for feature selection.

Classification results and accuracy evaluation

Classification results and accuracy assessment

The confusion matrix for land cover classification performance is illustrated in Fig. 11. Among the object-oriented classification models, the OO + RF model demonstrates the best classification performance, with 368 out of 397 training samples correctly classified. Specifically, the classification accuracy for cropland and construction land is the highest, reaching 100%. The classification accuracy for walnut and jujube trees in fruit trees is relatively high, with probabilities of correct classification at 96.55% and 91.67% respectively. Among pear trees, four samples were misclassified as walnut and jujube trees, while samples from other fruit trees were misclassified as pear and walnut trees. In the pixel-level classification model, the RF algorithm demonstrates the best classification performance, with 369 out of 397 training samples correctly classified. Among these, the classification accuracy for bareland is the highest, at 97.01%, only one sample was misclassified as water. The classification accuracy for walnuts and other fruit trees in the fruit tree species is relatively high, at 94.55% and 95.65% respectively. Within the walnut species, three samples were confused with other fruit trees, cropland, and bareland. Other fruit trees were confused with construction land. However, the classification accuracy for pear trees is relatively low, with an error rate of 25%. Moreover, SVM has the lowest accuracy when it comes to both pixel and object-oriented approaches, all classes are confused with the types of bareland. This is mainly explained by the unequal distribution of samples, which causes the model to focus too much on classes with larger numbers and underperform on classes with fewer samples.

Confusion matrix plot of land cover classification results (Figures created in OriginPro 2022 SR1, https://www.originlab.com).

To conduct a more comprehensive comparative analysis of fruit tree identification performance, evaluation metrics including UA, PA, OA, and KC were employed to assess the eight classification results. As illustrated in Fig. 12 and Table 12, the classification models are ranked in descending order of OA and KC as follows: OO + RF, RF, OO + CART, OO + GBDT, CART, GBDT, SVM, and OO + SVM. It can be observed that object-oriented approaches exhibit higher extraction precision. Compared to pixel-level classification, except for the SVM classification method, both OA and KC accuracy have improved. The GBDT classification method shows the greatest improvement in accuracy, with OA and KC increasing by 3.88 and 4.43 percentage points, respectively. The highest accuracy was achieved with the OO + RF method, with OA and KC reaching 94.60% and 93.74% respectively. Compared to pixel-level RF, OA, and KC have increased by 1.65 and 1.86 percentage points, respectively. The poorest performing model was the SVM model, where the inclusion of the object-oriented approach led to a decrease in accuracy rather than an improvement. Compared to the pixel-level model, both OA and KC decreased by 0.14 and 0.39 percentage points. Therefore, it is evident that the object-oriented approach can significantly enhance the accuracy of most models.

Comparison of UA and PA in fruit tree classification using different methods (Figures created in OriginPro 2022 SR1, https://www.originlab.com).

Next, regardless of whether it is based on pixel or object-oriented methods, the RF model outperforms other models in fruit tree identification effectiveness. In pixel-based methods, the RF model exhibits higher OA compared to the CART, GBDT, and SVM models by 2.77, 4.29, and 12.35 percentage points respectively. Similarly, the KC is higher by 3.17, 4.96, and 14.4 percentage points respectively. In object-oriented methods, the OA of the OO + RF model surpasses that of the CART, GBDT, and SVM models by 1.8, 2.06, and 14.14 percentage points respectively. Similarly, the KC is higher by 2.07, 2.39, and 16.65 percentage points respectively. Therefore, the RF model demonstrates superior capability in fruit tree extraction compared to other models.

Regarding UA and PA, the PA results are generally consistent with the confusion matrix analysis. The UA results reveal that the misclassification rate of SVM is excessively high, resulting in a 100% classification for all types except bareland. However, this comparison holds limited significance. Comparing the six models, it is evident that each model exhibits satisfactory classification performance. Among them, the model with the highest UA for walnuts is OO + CART, at 93.22%. Among the six models compared, it can be observed that each model demonstrates commendable classification performance. For walnut trees, the model with the highest UA is OO + CART, reaching 93.22%. For jujube trees, the model with the highest UA is GBDT, achieving 94.74%. Similarly, for pear trees, the model with the highest UA is OO + GBDT, reaching 100%. Lastly, for other fruit trees, the model with the highest UA is also OO + GBDT, at 93.33%. The model with the highest UA for cropland is OO + RF, achieving 98.53%. For grassland, the model with the highest UA is CART, reaching 94.44%. The model with the highest UA for construction land is OO + RF, achieving 100%. The model with the highest UA for bareland is OO + RF, reaching 94.59%. Similarly, the model with the highest UA for water is OO + CART, achieving 97.06%.

In conclusion, all models perform well in extraction when taking into account the accuracy of the extraction findings, with the exception of the SVM and OO + SVM models. The OO + RF model has the highest recognition efficacy out of all of them.

Fruit tree planting structure extraction results

Based on the aforementioned research findings, we selected the top 12 features with the highest contribution rate in September to extract the planting area and compared the classification results. However, the SVM and OO + SVM results incorrectly classified other land cover types as bareland, indicating that the SVM algorithm has a weaker discrimination ability for uneven sample sizes, thus resulting in large-scale extraction errors. Even after employing object-oriented analysis, erroneous extractions persist, indicating that the use of object-oriented analysis with SVM has limited effectiveness in improving the accuracy of fruit tree recognition. Therefore, the SVM and OO + SVM results are excluded from the extraction of fruit tree planting structures.

In this study, the remaining six classification models were utilized to identify fruit tree species. The remote sensing classification results encompass nine land cover types: walnut, jujube, pear, other fruit trees, cropland, grassland, construction land, bareland, and water. To facilitate the comparative analysis between the classification results and the vector map patch data from field forest fruit resource surveys, the classification results are illustrated in Fig. 13 (with dark green, light green, reddish-brown, ginger yellow, and white representing walnut, jujube, pear, other fruit trees, and other land cover types, respectively). Simultaneously, four typical regions were selected to compare the reliability of the six models at a smaller scale and contrast the extraction results. Among the six identification outcomes, the spatial distribution of fruit tree planting is relatively consistent. In the study area, the distribution of the four fruit tree species is mixed, forming strip-shaped belts on the north and south sides of the study area.

Extraction of multiple fruit tree areas (The first image selects four representative plots and calculates the planted area of fruit trees, (a–f) represents the recognition performance of six models. Maps created in ArcMap 10.2, http://www.esri.com. Figures created in OriginPro 2022 SR1, https://www.originlab.com. Boundaries made with free vector data provided by National Catalogue Service for Geographic Information, https://www.webmap.cn/commres.do?method=dataDownload. The satellite imagery data source: Sentinel-2, https://developers.google.com/earth-engine/datasets/catalog?filter=COPERNICUS%2FS2).

The study area is predominantly planted with walnuts, resulting in the largest and evenly distributed walnut planting area on both the north and south sides. Jujubes are mainly distributed in the northern part of the study area, including villages such as Tuertamu, Akwustan, Topakairik, and Xue’erkuleairik. Pear trees are primarily cultivated in the southeastern corner of the study area, including villages such as Boostan and Boostankuoshiterik. Other fruit trees including peaches, small white apricots, and apples exhibit a spatial distribution similar to that of walnuts but are more scattered. Further investigation reveals that pixel-level results exhibit more “salt and pepper noise”, which reduces the spatial continuity of fruit tree patches. Among them, the “salt and pepper noise” in the CART results is more pronounced, while the fruit tree patches in the object-oriented results are more regular, with less “salt and pepper noise”, resulting in a noticeable improvement. Among them, OO + RF exhibits the least “salt and pepper noise”. Therefore, the object-oriented approach significantly improves the classification results, with OO + RF showing the most substantial enhancement.

To fully demonstrate the positive impact of the RF algorithm on tree extraction and verify the superior extraction effectiveness of the object-oriented method, we quantified the tree areas. The results are illustrated in Fig. 13. The best extraction result is achieved with RF, with an area accuracy of 61.62%. Following RF is OO + RF, with an area accuracy of 59.38%. Among them, the model with the highest area accuracy for walnuts is RF, at 89.55%. For jujube, the highest area accuracy is achieved with RF, reaching 69.84%. As for pear, the RF model shows the highest area accuracy, accounting for 81.99%. Finally, for other fruit trees, the model with the highest area accuracy is OO + GBDT, at 65.65%. It can be observed that the area accuracy for other fruit trees is the lowest. This could be attributed to the high diversity and scattered distribution of other fruit tree species.

Discussion

Data source analysis

This work used GEE to implement a number of remote sensing image processing techniques, including SNIC, GLCM, RF, CART, GBDT, and SVM. Fruit tree species were identified and extracted using Sentinel-2 images. In contrast to other cloud computing platforms, GEE combines robust computational resources, high-speed processing capabilities, and an abundance of free remote sensing spatial data to suit research demands at various scales54. For this reason, the GEE cloud computing platform was selected for the study.

Additionally, remote sensing images such as MODIS and MERRA-2 are commonly used for large-scale vegetation mapping. However, it is difficult to gather high-precision information about fruit trees and to continuously monitor them because of their poor spatial resolution55. Although fruit trees over wide areas are difficult to identify using drone footage, it is frequently employed for accurate identification of specific fruit tree targets56,57,58. Sentinel imagery provides a greater temporal and geographical resolution than Landsat data, with a revisit duration of five days and a spatial resolution of ten meters. This fits in nicely with the classification requirements for fruit trees in this study59.

Feature variable analysis

In this study, six categories of classification features were constructed, namely spectral bands, phenological features, texture features, polarization features, vegetation index features, and red edge index features. Through comparison, it was found that spectral, phenological, and texture features contributed the most to fruit tree classification.

It was discovered that the spectral features B7, B3, B12, and B8 contributed significantly to classification. It is evident that the near-infrared and red-edge features provide the most contributions. Numerous studies have indicated that the unique red edge and near-infrared bands in Sentinel-2 are highly sensitive to vegetation growth conditions and physiological parameters. Moreover, the optimal spectral index combinations selected primarily involve the red edge, near-infrared, and red bands60,61. As a result, spectral features play a significant role in the classification process.

The phenological features Start t., Peak t., Length, and L.deriv were found to have high contributions to classification. The walnut growing season in the research area is from early April to late August, and the fruit ripens in early September. The fruits of jujube trees ripen in early October, while the trees’ phenological period runs from late April to late September. Peach trees have a phenological phase that runs from early March to late August, when the fruits ripen by the end of the month. Apricot trees, on the other hand, usually go through their phenological cycle around early April to late June, with the fruits reaching ripeness in mid-June. These indicators in phenological features directly reflect vegetation growth information, better highlighting the differences between different species of fruit trees62. The phenological information of other land cover types such as cropland and grassland differs more significantly from that of fruit trees. Therefore, phenological features can effectively distinguish between fruit tree species and other land cover types. It should be noted that the phenological data of the 10-day NDVI time series for the year 2022 may suffer from some quality issues and need replacement. The replacement methods include: 1. Using neighboring years’ data, 2. Substituting with multi-year average values (preceding and subsequent years), 3. Employing adjacent period data, and 4. Substituting with the average of preceding and subsequent period data. Through repeated experimentation and testing, it was found that these replacements have minimal impact on classification results, thus they can be used to replace the original imagery.

B8_contrast, B8_diss, B8_var, and B8_sent are texture features that contribute significantly to classification. According to the imaging, the research area’s orchards are represented as coarse-textured strips, grasslands as extended patches, and croplands as well-organized, fine-textured plots. Differentiating between different types of vegetation is made simple by their unique textural and structural characteristics. In orchards, walnut leaves are typically large and compound, with a relatively smooth surface63. They have a wide canopy and high canopy closure. Often, they are intercropped with maize or wheat, appearing as dark green in the images. Jujube has relatively smaller leaves compared to walnut trees, and their surfaces are rough. They have a lower canopy closure64. In autumn, their color appears orangish-red in the images. Pear trees generally have simple leaves that are oval or round in shape65, with a lighter color compared to walnut trees. Apricot tree leaves are elongated ovals with pointed tips66, while peach tree leaves have sharper tips and are more elongated in shape67. As a result, these textural characteristic indicators can be used to recognize different species of fruit trees.

The spectral, temporal, and structural markers of fruit trees are represented, respectively, by the three characteristics listed above. Furthermore, the categorization process incorporates vegetation index features, red edge index features, and radar polarization features. Ten additional combinations were set as controls in order to verify the contribution of these attributes to the classification. According to the experiment’s results, the top 12 categorization features—including spectral, phenological, and textural features—make up the best combination.

Classification model analysis

Four models of classification were used in this study: GBDT, RF, CART, and SVM. The first three models had satisfactory classification performance, with SVM showing the lowest accuracy68,69. Moreover, accuracy decreased as opposed to increased upon the incorporation of object-oriented analysis. This problem could be caused by a number of factors, such as incorrect feature extraction, insufficient model parameter tuning, sample imbalance, over-fitting, and so on70,71,72.

In this experiment, we ensured that only one type of label was assigned within each segmented representative land cover object. However, the number of training samples varied due to the uneven distribution of different land cover types. During the feature selection process, including all features or the top 12 features in the classification often resulted in misclassifying all land cover types into a single types. Conversely, when only one feature was used, the classification results were normal. This discrepancy is likely due to sample imbalance, causing the model to overly focus on the majority classes while performing poorly on the minority classes73. Additionally, the SVM model, originally designed for binary classification, struggles with multi-class problems, leading to suboptimal classification performance18. Appropriate sampling techniques, weight modifications made during sample processing, or model modifications to better handle multi-class classification jobs are required to overcome these problems.

Classification results analysis

The study area is located in Akwustan Township, Kuqa City, Aksu Prefecture, Southern Xinjiang. Statistics about the area of forests and orchards are difficult to come by in annual reports because of the situation at the township level. As a result, the computation makes use of vector data from the survey of forest resources and orchards. In order to classify fruit trees, this study uses Sentinel-2 imagery in conjunction with object-oriented and multi-feature approaches. This provides a means of monitoring orchards and woods utilizing medium-to-high-resolution images at the township level as well as at larger scales.

Fruit trees in the study area are frequently intercropped with crops like corn and wheat because of variations in the market and environmental factors like temperature and precipitation. This is especially true for the greatest planting area—walnuts. This is another important factor in the September imagery selection, since most crops have been harvested by September, which lowers categorization mistakes.

Currently, the extraction of orchard crops primarily focuses on major crops such as walnuts, jujubes, and pear trees. Little-scale orchards, such as apple trees, peach trees, and little white apricots, are difficult to distinguish and are categorized as “other fruit trees”. Moreover, the recognition of orchards in agroforestry systems has not been taken into account. Jin, et al. utilized Sentinel-2 and GF-2 imagery combined with object-oriented and decision-tree analysis. By leveraging the phenological information of major orchard crops in the study area and the differences in NDVI temporal data, they achieved the extraction of four species: intercropped walnuts, pure walnuts, jujube trees, and grapes74. As a result, there is a need for more research and it should be done when integrating high-resolution remote sensing imaging or UAV imagery to further refine orchard classification75.

The “salt and pepper” effect can be significantly lessened using object-oriented categorization as opposed to pixel-based classification techniques76. However, according to the research results, using object-oriented classification still leads to misclassification. Additionally, there is a deviation between the total area of fruit trees estimated by remote sensing and the actual surveyed area. This is because orchards in the study area contain multiple species of fruit trees, and the limited image resolution of remote sensing leads to poor identification accuracy for small and scattered fruit trees77. The best accuracy is seen in the classification of walnuts among the four species of fruit trees, nevertheless, other fruit trees are also incorrectly classified as walnuts. Based on field observations, it is possible that the high number of walnut plantations in the research area is a result of the recent robust walnut market. Young walnut trees are readily confused with other fruit trees due to their tighter spacing and lower canopy density. Pear trees and other fruit trees have low categorization accuracy because of their dispersed distribution. When dealing with intricate planting arrangements, the object-oriented approach leads to numerous misclassifications in area statistics, resulting in reduced area accuracy when contrasted with pixel-based approaches. As a result, noise has been significantly decreased and fruit tree recognition accuracy has increased thanks to machine learning and object-oriented models. Nevertheless, more investigation is required to use remote sensing data to locate small-scale distributed fruit trees.

Conclusion

This study uses the GEE platform and includes six categories with a total of forth-four features to classify fruit trees. Spectral, phenological, texture, polarization, vegetation index, and red edge index features are some of these characteristics. The object-oriented approach is integrated with GBDT, SVM, RF, and CART machine learning algorithms in the classification process. The research region chosen for this study is Kuqa City, Southern Xinjiang’s Akwustan Township. Finding the main varieties of fruit trees in the area is the goal. The findings show that:

-

(1)

The classification findings show that compared to pixel-based methods, the object-oriented classification method performs better overall. By using the SNIC segmentation strategy, the model’s accuracy is increased while also reducing the “salt and pepper” artifacts in the classification results. A seed pixel spacing of 30 is found to be the ideal segmentation scale for SNIC.

-

(2)

Taking into account the NDVI time series curves, phenological features, polarization time series curves, and the attribute importance of various classification features in different months, September is identified as the optimal time window for fruit tree identification.

-

(3)

Classification features were introduced successively from high to low priority using the OO + RF model to determine feature importance. This allowed for the observation of changes in overall accuracy. After comparing 9 different combinations of classification features, it was discovered that the features with the greatest contributions to classification were the spectral bands, phenological features, and textural features. Consequently, the 12 most significant categorization characteristics were chosen for further machine learning building.

-

(4)

The RF model outperforms other machine learning models in terms of recognition accuracy, the OO + RF technique yields the highest recognition accuracy, with OA and KC of 94.60% and 93.74%, respectively. These findings suggest that object-oriented analysis and the RF algorithm together have a great deal of value and potential for the identification and classification of fruit trees.

Data availability

The data that support the study are available from the corresponding author upon reasonable request.

References

Chen, J., Chen, C. & Yao, D. Analysis on the comparative advantage and export competitiveness of China’s fruit products. In International Conference on Transformations and Innovations in Management (ICTIM 2017) 476–486 (Atlantis Press, 2017).

Ilniyaz, O., Du, Q., Kurban, A., Kasimu, A. & Azadi, H. An explanatory spatio-temporal pattern analysis of the fruit industry in Xinjiang, China, between 1988 and 2017. Trans. GIS 26, 2383–2409 (2022).

Wu, M. et al. Monitoring cotton root rot by synthetic Sentinel-2 NDVI time series using improved spatial and temporal data fusion. Sci. Rep. 8, 2016 (2018).

Tan, C.-W. et al. Assessment of Fv/Fm absorbed by wheat canopies employing in-situ hyperspectral vegetation indexes. Sci. Rep. 8, 9525 (2018).

Qiu, S. et al. Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Inf. Fusion 80, 241–265 (2022).

Khanal, S., Kc, K., Fulton, J. P., Shearer, S. & Ozkan, E. Remote sensing in agriculture—Accomplishments, limitations, and opportunities. Remote Sens. 12, 3783 (2020).

Chaves, M., Picoli, M. & Sanches, I. Recent applications of Landsat 8/OLI and Sentinel-2/MSI for land use and land cover mapping: A systematic review. Remote Sens. 12, 3062 (2020).

Pricope, N., Mapes, K. & Woodward, K. Remote sensing of human–environment interactions in global change research: A review of advances, challenges and future directions. Remote Sens. 11, 2783 (2019).

Tamiminia, H. et al. Google Earth Engine for geo-big data applications: A meta-analysis and systematic review. ISPRS J. Photogramm. Remote Sens. 164, 152–170 (2020).

He, Y. et al. Examining rice distribution and cropping intensity in a mixed single-and double-cropping region in South China using all available Sentinel 1/2 images. Int. J. Appl. Earth Observ. Geoinf. 101, 102351 (2021).

Xuan, F. et al. Mapping crop type in Northeast China during 2013–2021 using automatic sampling and tile-based image classification. Int. J. Appl. Earth Observ. Geoinf. 117, 103178 (2023).

Yang, G. et al. AGTOC: A novel approach to winter wheat mapping by automatic generation of training samples and one-class classification on Google Earth Engine. Int. J. Appl. Earth Observ. Geoinf. 102, 102446 (2021).

Al-Shammari, D., Fuentes, I. M., Whelan, B., Filippi, P. & Bishop, T. Mapping of cotton fields within-season using phenology-based metrics derived from a time series of landsat imagery. Remote Sens. 12, 3038 (2020).

Tinh, P. H. et al. Distribution and drivers of Vietnam mangrove deforestation from 1995 to 2019. Mitig. Adapt. Strateg. Glob. Change 27, 29 (2022).

Kang, Y., Chen, Z., Li, L. & Zhang, Q. Construction of multidimensional features to identify tea plantations using multisource remote sensing data: A case study of Hangzhou city, China. Ecol. Inform. 77, 102185 (2023).

Liu, T.-H., Ehsani, R., Toudeshki, A., Zou, X.-J. & Wang, H.-J. Detection of citrus fruit and tree trunks in natural environments using a multi-elliptical boundary model. Comput. Ind. 99, 9–16 (2018).

Azizan, F. A., Kiloes, A. M., Astuti, I. S. & Abdul Aziz, A. Application of optical remote sensing in rubber plantations: A systematic review. Remote Sens. 13, 429 (2021).

Zhao, G. et al. Optimized extraction method of fruit planting distribution based on spectral and radar data fusion of key time phase. Remote Sens. 15, 4140 (2023).

Ye, X., Sakai, K., Okamoto, H. & Garciano, L. O. A ground-based hyperspectral imaging system for characterizing vegetation spectral features. Comput. Electron. Agric. 63, 13–21 (2008).

Torres-Sánchez, J. et al. Mapping the 3D structure of almond trees using UAV acquired photogrammetric point clouds and object-based image analysis. Biosyst. Eng. 176, 172–184 (2018).

Hadavand, A., Mokhtarzadeh, M., Zoej, M. J. V., Homayouni, S. & Saadatseresht, M. Using pixel-based and object-based methods to classify urban hyperspectral features. Geodesy Cartogr. 42, 92–105 (2016).

Liu, T. & Ren, H. Utilizing phenological features for object-oriented extraction of rice planting distribution on the Google Earth Engine platform. Trans. Chin. Soc. Agric. Eng. 38, 189–196 (2022).

Zhang, K., Guo, X., Kang, J. & Liu, J. Extraction of Moso bamboo forest distribution information using object-oriented approach combined with feature selection of multi-temporal Sentinel-2A images. J. Northeast For. Univ. 51, 61–68 (2023).

Carvalho, S., Schlerf, M., van Der Putten, W. H. & Skidmore, A. K. Hyperspectral reflectance of leaves and flowers of an outbreak species discriminates season and successional stage of vegetation. Int. J. Appl. Earth Observ. Geoinf. 24, 32–41 (2013).

Korn, F., Pagel, B.-U. & Faloutsos, C. On the” dimensionality curse” and the” self-similarity blessing”. IEEE Trans. Knowl. Data Eng. 13, 96–111 (2001).

Tsai, F. & Philpot, W. D. A derivative-aided hyperspectral image analysis system for land-cover classification. IEEE Trans. Geosci. Remote Sens. 40, 416–425 (2002).

Cheng, K. & Wang, J. Forest type classification based on integrated spectral–spatial–temporal features and random forest algorithm—A case study in the qinling mountains. Forests 10, 559 (2019).

Zhao, Y. et al. Classification of Zambian grasslands using random forest feature importance selection during the optimal phenological period. Ecol. Indic. 135, 108529 (2022).

Loukika, K. N., Keesara, V. R. & Sridhar, V. Analysis of land use and land cover using machine learning algorithms on google earth engine for Munneru River Basin, India. Sustainability 13, 13758 (2021).

Mansaray, L. R., Wang, F., Huang, J., Yang, L. & Kanu, A. S. Accuracies of support vector machine and random forest in rice mapping with Sentinel-1A, Landsat-8 and Sentinel-2A datasets. Geocarto Int. 35, 1088–1108 (2020).

Yue, J., Wang, Z., Feng, Z., Li, Z. & Wang, L. Remote sensing identification of fruit tree species in southern Xinjiang basin based on spectral and texture features. J. Xinjiang Agric. Univ. 38, 326–333 (2015).

Wang, X. et al. Microclimate, yield, and income of a jujube–cotton agroforestry system in Xinjiang, China. Ind. Crops Prod. 182, 114941 (2022).

Taghadosi, M. M., Hasanlou, M. & Eftekhari, K. Soil salinity mapping using dual-polarized SAR Sentinel-1 imagery. Int. J. Remote Sens. 40, 237–252 (2019).

Chen, F., Ming, C., Li, J., Wang, C. & Claverie, M. A comparison of Sentinel-2A and Sentinel-2B with preliminary results. In Proc. IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain 22–28 (2018).

Mi, L. & Chen, Z. Superpixel-enhanced deep neural forest for remote sensing image semantic segmentation. ISPRS J. Photogramm. Remote Sens. 159, 140–152 (2020).

Zhu, L., Zhang, J. & Sun, Y. Remote sensing image change detection using superpixel cosegmentation. Information 12, 94 (2021).

Gupta, N. & Bhadauria, H. Object based information extraction from high resolution satellite imagery using eCognition. Int. J. Comput. Sci. Issues 11, 139 (2014).

Amani, M. et al. Google Earth Engine Cloud computing platform for remote sensing big data applications: A comprehensive review. IEEE J Sel. Top. Appl. Earth Observ. Remote Sens. 13, 5326–5350 (2020).

Phiri, D. et al. Sentinel-2 data for land cover/use mapping: A review. Remote Sens. 12, 2291 (2020).

Huang, Y., Ren, Z., Li, D. & Liu, X. Phenotypic techniques and applications in fruit trees: A review. Plant Methods 16, 1–22 (2020).

Carpintero, E., Mateos, L., Andreu, A. & González-Dugo, M. P. Effect of the differences in spectral response of Mediterranean tree canopies on the estimation of evapotranspiration using vegetation index-based crop coefficients. Agric. Water Manag. 238, 106201 (2020).

Jiang, Z., Huete, A. R., Didan, K. & Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 112, 3833–3845 (2008).

Sepulcre-Cantó, G. et al. Monitoring yield and fruit quality parameters in open-canopy tree crops under water stress. Implications for ASTER. Remote Sens. Environ. 107, 455–470 (2007).

Sun, Y., Qin, Q., Ren, H., Zhang, T. & Chen, S. Red-edge band vegetation indices for leaf area index estimation from Sentinel-2/MSI imagery. IEEE Trans. Geosci. Remote Sens. 58, 826–840 (2019).

Sun, C., Bian, Y., Zhou, T. & Pan, J. Using of multi-source and multi-temporal remote sensing data improves crop-type mapping in the subtropical agriculture region. Sensors 19, 2401 (2019).

Gitelson, A. & Merzlyak, M. N. Spectral reflectance changes associated with autumn senescence of Aesculus hippocastanum L. and Acer platanoides L. leaves. Spectral features and relation to chlorophyll estimation. J. Plant Physiol. 143, 286–292 (1994).

Fernández-Manso, A., Fernández-Manso, O. & Quintano, C. SENTINEL-2A red-edge spectral indices suitability for discriminating burn severity. Int. J. Appl. Earth Observ. Geoinf. 50, 170–175 (2016).

Barnes, E. et al. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proc. Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, Vol. 1619 (2000).

Chabalala, Y., Adam, E. & Ali, K. A. Machine learning classification of fused Sentinel-1 and Sentinel-2 image data towards mapping fruit plantations in highly heterogenous landscapes. Remote Sens. 14, 2621 (2022).

Feng, Q. et al. Winter wheat identification in the Huang-Huai-Hai plain based on multi-source optical and radar data fusion. Trans. Chin. Soc. Agric. Mach. 54, 160–168 (2023).

Jönsson, P. & Eklundh, L. TIMESAT—A program for analyzing time-series of satellite sensor data. Comput. Geosci. 30, 833–845 (2004).

Huang, N., Lu, G. & Xu, D. A permutation importance-based feature selection method for short-term electricity load forecasting using random forest. Energies 9, 767 (2016).

Yang, L., Mansaray, L. R., Huang, J. & Wang, L. Optimal segmentation scale parameter, feature subset and classification algorithm for geographic object-based crop recognition using multisource satellite imagery. Remote Sens. 11, 514 (2019).

Liu, H. et al. Fine-grained wetland classification for national wetland reserves using multi-source remote sensing data and pixel information expert engine (PIE-Engine). GISci. Remote Sens. 60, 2286746 (2023).

Han, L. et al. A novel approach to mapping the spatial distribution of fruit trees using phenological characteristics. Agronomy 14, 150 (2024).

Ferreira, M. P. et al. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manag. 475, 118397 (2020).

Chen, C.-J. et al. Identification of fruit tree pests with deep learning on embedded drone to achieve accurate pesticide spraying. IEEE Access 9, 21986–21997 (2021).

Hobart, M., Pflanz, M., Weltzien, C. & Schirrmann, M. Growth height determination of tree walls for precise monitoring in apple fruit production using UAV photogrammetry. Remote Sens. 12, 1656 (2020).

Chakhar, A., Hernández-López, D., Ballesteros, R. & Moreno, M. A. Irrigation detection using Sentinel-1 and Sentinel-2 time series on fruit tree orchards. Remote Sens. 16, 458 (2024).

Liu, Y., Qian, J. & Yue, H. Comprehensive evaluation of Sentinel-2 red edge and shortwave-infrared bands to estimate soil moisture. IEEE J Sel. Top. Appl. Earth Observ. Remote Sens. 14, 7448–7465 (2021).

Scheffler, D., Frantz, D. & Segl, K. Spectral harmonization and red edge prediction of Landsat-8 to Sentinel-2 using land cover optimized multivariate regressors. Remote Sens. Environ. 241, 111723 (2020).

Clevers, J. G., Kooistra, L. & Van den Brande, M. M. Using Sentinel-2 data for retrieving LAI and leaf and canopy chlorophyll content of a potato crop. Remote Sens. 9, 405 (2017).

McGranahan, G. & Leslie, C. Walnut. In Fruit Breeding (eds McGranahan, G. & Leslie, C.) 827–846 (Springer, 2012).

Li, X., Li, Y., Zhang, Z. & Li, X. Influences of environmental factors on leaf morphology of Chinese jujubes. PLoS ONE 10, e0127825 (2015).

Quinet, M. & Wesel, J.-P. Botany and taxonomy of pear. In The Pear Genome (ed. Korban, S. S.) 1–33 (Springer, 2019).

Hussain, S. Z., Naseer, B., Qadri, T., Fatima, T. & Bhat, T. A. Apricots (Prunus armeniaca)—Morphology, taxonomy, composition and health benefits. In Fruits Grown in Highland Regions of the Himalayas: Nutritional and Health Benefits (eds Hussain, S. Z. et al.) 91–102 (Springer, 2021).

Gupta, M., Arora, R. & Mandal, D. Peach. In Temperate Fruits (eds Gupta, M. et al.) 247–296 (Apple Academic Press, 2021).

Adugna, T., Xu, W. & Fan, J. Comparison of random forest and support vector machine classifiers for regional land cover mapping using coarse resolution FY-3C images. Remote Sens. 14, 574 (2022).

Zafari, A., Zurita-Milla, R. & Izquierdo-Verdiguier, E. Evaluating the performance of a random forest kernel for land cover classification. Remote Sens. 11, 575 (2019).

Yan, X. & Jia, M. A novel optimized SVM classification algorithm with multi-domain feature and its application to fault diagnosis of rolling bearing. Neurocomputing 313, 47–64 (2018).

Liu, B. et al. Supervised deep feature extraction for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 56, 1909–1921 (2017).

Kang, Q. et al. A distance-based weighted undersampling scheme for support vector machines and its application to imbalanced classification. IEEE Trans. Neural Netw. Learn. Syst. 29, 4152–4165 (2017).

Batuwita, R. & Palade, V. Class imbalance learning methods for support vector machines. In Imbalanced Learning: Foundations, Algorithms, and Applications (eds He, H. & Ma, Y.) 83–99 (Wiley, 2013).

Jin, J. et al. Remote Sensing information extraction of structural characteristics of forest and fruit trees in agricultural-forest intercropping patterns and oasis special forests. Trans. Chin. Soc. Agric. Eng. 38, 146–154 (2022).

Cheng, Z., Qi, L., Cheng, Y., Wu, Y. & Zhang, H. Interlacing orchard canopy separation and assessment using UAV images. Remote Sens. 12, 767 (2020).

Xue, H. et al. Object-oriented crop classification using time series sentinel images from Google Earth Engine. Remote Sens. 15, 1353 (2023).

Liu, M. et al. The impact of spatial resolution on the classification of vegetation types in highly fragmented planting areas based on unmanned aerial vehicle hyperspectral images. Remote Sens. 12, 146 (2020).

Acknowledgements

The authors would like to extend our gratitude to the following organizations for providing the remote sensing products utilized in this study: China National Centre for Basic Geographic Information, ESRI Corporation, European Space Agency. They also wish to acknowledge the Google Earth Engine platform for facilitating aspects of the analysis. Finally, they sincerely appreciate the valuable comments from reviewers and editors, which significantly helped us to improve the quality of this manuscript.

Funding

This research was funded by the Science & Technology Department of Xinjiang Uygur Autonomous Region, China (2021D01C055).

Author information

Authors and Affiliations

Contributions

Conceptualization, J.L. and M.S.; methodology, J.L.; software, J.L.; validation, J.L.; formal analysis, J.L., M.S., M.Z. and X.Z.; investigation, J.L., M.S., J.C., X.H., Z.X., M.Z., X.Z., A.R., X.Y., A.X.; resources, M.S.; data curation, J.L.; writing—original draft preparation, J.L.; writing—review and editing, J.L., M.S.; visualization, J.L.; supervision, J.L., M.S.; project administration, M.S.; funding acquisition, M.S. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liang, J., Sawut, M., Cui, J. et al. Object-oriented multi-scale segmentation and multi-feature fusion-based method for identifying typical fruit trees in arid regions using Sentinel-1/2 satellite images. Sci Rep 14, 18230 (2024). https://doi.org/10.1038/s41598-024-68991-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-68991-7