Abstract

Academic achievement is a critical measure of intellectual ability, prompting extensive research into cognitive tasks as potential predictors. Neuroimaging technologies, such as functional near-infrared spectroscopy (fNIRS), offer insights into brain hemodynamics, allowing understanding of the link between cognitive performance and academic achievement. Herein, we explored the association between cognitive tasks and academic achievement by analyzing prefrontal fNIRS signals. A novel quantum annealer (QA) feature selection algorithm was applied to fNIRS data to identify cognitive tasks correlated with CSAT scores. Twelve features (signal mean, median, variance, peak, number of peaks, sum of peaks, range, minimum, kurtosis, skewness, standard deviation, and root mean square) were extracted from fNIRS signals at two time windows (10- and 60-s) to compare results from various feature variable conditions. The feature selection results from the QA-based and XGBoost regressor algorithms were compared to validate the former’s performance. In a two-step validation process using multiple linear regression models, model fitness (adjusted R2) and model prediction error (RMSE) values were calculated. The quantum annealer demonstrated comparable performance to classical machine learning models, and specific cognitive tasks, including verbal fluency, recognition, and the Corsi block tapping task, were correlated with academic achievement. Group analyses revealed stronger associations between Tower of London and N-back tasks with higher CSAT scores. Quantum annealing algorithms have significant potential in feature selection using fNIRS data, and represents a novel research approach. Future studies should explore predictors of academic achievement and cognitive ability.

Similar content being viewed by others

Introduction

Since the first intelligence quotient (IQ) test was developed in 1905, numerous scholars and organizations have contributed to the objective measurement and assessment of academic achievement1. Under the broader theme of evaluating human intellectual ability, researchers have investigated several potential predictors of academic achievement, such as intelligence and cognitive abilities. While classic approaches mainly focus on general intelligence and its positive correlation with academic achievement levels2,3, recent methods have attempted to identify and predict academic achievement based on the concept of multidimensional cognitive ability4,5. Accordingly, several cognitive tasks have been proposed for such an evaluation. The N-back task and Tower of London (ToL) task are both examples of cognitive tasks that assess advanced cognitive abilities such as working memory capacity or executive planning6,7. Previous studies8,9 have explored the relationship between cognitive task performance and academic achievement.

When assessing the results of cognitive tasks, basic dependent measure generally comprises behavioral performance, such as error rate or reaction time. In addition, many researchers10,11 have studied brain hemodynamics among participants. Neuroimaging technologies, such as functional magnetic resonance imaging (fMRI) and functional near-infrared spectroscopy (fNIRS), allow the quantification of neural activation and connectivity patterns during specific cognitive tasks. In one prior study, Herff et al.12 examined mental workload activation levels in the prefrontal cortex using fNIRS signals during the N-back task phase. fNIRS measures cortical activation by detecting changes in oxy- and deoxyhemoglobin concentrations using near-infrared light. It offers significant advantages over fMRI, including higher temporal resolution, cost efficiency, and portability13. Previous studies14,15 have investigated the relationship between cognitive performance and brain hemodynamics in the prefrontal regions, as well as their implications for academic achievement examinations.

The analysis of highly multidimensional neural dynamics data, statistical models, and machine learning models have been widely used because of their specialization in multivariate pattern analysis16,17. In one such study18, researchers identified a possible biomarker of pain using feature selection through machine learning in fNIRS dataset. Naseer et al.19 selected optimal feature sets from fNIRS signals using Support Vector Machine (SVM)20 that is widely applied to feature selection. Choi et al.21 proposed that XGBoost classifier22 can be investigate scholastic ability employing fNIRS feature selection method. Karamzadeh et al.23 discussed the importance of feature selection for detecting prefrontal hemodynamic biomarkers utilizing Decision Tree classifier24. These three (SVM, XGBoost, Decision Tree) classical machine learning models have been widely used in feature selection using fNIRS signals.

Recently, a quantum annealer-based feature selection algorithm was introduced and applied to neuroimaging data analysis25,26. Quantum annealer (QA) is a novel optimization method that specializes in combinatorial optimization executed using a quantum computer. Optimized combinations are sought in the feature set by minimizing a cost function, i.e., finding the ground state of the energy in a quantum system to find the global minimum in optimization tasks27. Although this was not the first attempt to employ a quantum annealer based method for neuroimaging data analysis, research on the relative importance of cognitive tasks in predicting academic achievement remains scarce in the field of quantum computing.

Therefore, in this study, we investigated the relationship between cognitive tasks and academic achievement using brain hemodynamics data measured with fNIRS devices based on the QA-based feature selection algorithm. To validate the results from the quantum annealer, we compared the feature selection performance with the XGBoost regressor that was selected among three model classical machine learning (CML) algorithms (support vector machine, decision tree, and XGBoost regressor). Moreover, the two step using a multiple linear regression (MLR) model was designed and applied in the comparison between the QA-based and the XGBoost regressor. The list of main contributions from this work is as follows:

-

We applied the QA-based feature selection algorithm to investigate the associated cognitive task with the CSAT score.

-

The feature selection result of QA-based algorithm was validated by the XGBoost regressor and two step validation process using the MLR model that was designed by authors.

-

This work showed the applicability of this algorithm to the neural dataset based on consistencies along with the reported association from previous studies.

Methods

Overview of the study

The following six steps were conducted to investigate the relationship between academic achievement and brain hemodynamics during cognitive task performance: First, fNIRS signals were collected from 100 college students during the performance of eight different cognitive tasks (including a resting-state session). Second, the fNIRS dataset was preprocessed to remove the artifacts and noise of the dataset. Third, 12 different features (signal mean, median, variance, peak, number of peaks, sum of peaks, range, minimum, kurtosis, skewness, standard deviation, and root mean square) were extracted from the preprocessed oxy-hemoglobin (HbO) and deoxy-hemoglobin (HbR) concentration signals during each of the eight experimental tasks. Fourth, the QA-based algorithm utilized the 12 extracted feature sets to investigate the features that were highly associated with the average CSAT score. To further validate the results from the quantum annealer, we compared their performances with those of classical machine learning (CML) models (i.e., support vector machine, decision tree, and XGBoost regressor). The XGBoost regressor was selected for further analysis because it exhibited the best performance among the three regressors. Fifth, the process for the performance validation of the QA feature selection method comprised two steps using a MLR model, as follows: (1) model fitness of the MLR model, and (2) model prediction error in the MLR model. Finally, the cognitive tasks that elicited signals with feature variables that were highly correlated with the CSAT scores were selected based on the results of the validation process. An overview of this study is presented in Fig. 1.

Experimental procedure

The experimental procedure during data collection was as follows: After the participants were provided with a brief introduction to the fNIRS signal collection procedure and provided written consent, the researchers informed them that the experiment comprised eight independent tasks, with a short break after every three tasks. During fNIRS data acquisition, the participants were instructed not to move their heads as much as possible.

First, before performing the seven cognitive tasks, participants underwent a 5-min resting session with the following instruction while wearing an fNIRS device, “At this stage, we require you to look at the ' + ' sign displayed in the center of the screen as comfortably as possible. We researchers are interested in natural neural activity during rest. If you look at other objects around you or think about something complicated, it becomes difficult to measure resting activity. Please look at the screen as comfortably as possible, but without falling asleep or closing your eyes.” Following a resting session, seven cognitive tasks (i.e., the Corsi block-tapping task, emotion task, recognition task, Stroop task, Tower of London task, N-back task, and verbal fluency task) were presented sequentially. Second, the Corsi block-tapping (CBT) task was performed to measure short-term visuospatial working memory. In this task, participants were asked to indicate consecutive locations of colored squares28. Third, participants were instructed to indicate the emotional state of the presented facial stimuli. Six choices (disgust, sadness, happiness, anger, surprise, or fear) were provided for each facial expression29. Fourth, a recognition task was conducted to investigate whether participants correctly recognized the former faces and their emotional states. The pictures from the previous task and a set of new face pictures were presented in a randomized order, and participants were instructed to respond to 1) whether they recognized the face and, if yes, 2) which emotional state the faces had beforehand30. Fifth, the participants performed the Stroop task, which is widely used to measure overall executive functions, such as selective attention and response inhibition31. Participants were presented with colored words and instructed to choose the correct color/meaning of the given words. Sixth, the Tower of London (ToL) task was performed. The ToL task, in which participants change the location of balls in a limited number of attempts, is often applied to measure executive planning and problem-solving abilities7. Seventh, an N-back test was used to measure working memory capacity32. In this task, the participants were asked to memorize the sequential position of a target stimulus and respond if its current position matched the position of the previous n-trials (hence, n-back). Lastly, the verbal fluency task (VFT) was performed to measure participants’ verbal memory and cognitive control33. Participants were asked to produce as many words as they could verbally under three different conditions (control, phonemic, and semantic). The order of task trials was randomized across participants within each task block.

The participants were compensated with 20,000 Korean Won per hour. All experiments were conducted following approval of the Institutional Review Board of Yonsei University (7,001,988–202,104-HR-659–06) and in accordance with the guidelines of the Declaration of Helsinki.

fNIRS measurement and preprocessing

One hundred undergraduate students participated in eight experimental tasks during fNIRS data collection (60 females, 40 males; mean age = 19.17 years). The students were recruited from 13 universities across South Korea. The participant pool was purposely distributed to diverse sources to broaden the range of collected cognitive performance levels. Data from seven participants were eliminated due to errors during the collection process, leaving 93 participants in total.

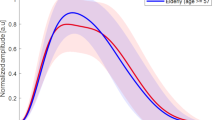

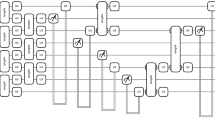

For data collection, we measured the prefrontal hemodynamic activity of the participants using the NIRSIT Lite device (OBELAB Inc., Seoul, Korea). Previous research has demonstrated that high-level cognitive abilities, such as executive function and academic achievement, are reflected in the prefrontal hemodynamical activity34,35,36,37. This device comprises five dual-wavelength laser diodes (780 and 850 nm) and seven photodetectors, forming 15 channels with a laser-detector distance of 3 cm. The optical signal from each channel was sampled at 8.138 Hz. Figure 2 shows the positions of the optodes in the NIRSIT Lite device38. The channel position of this device was set with the estimated coordinate information with the NFRI toolbox39. The detailed information of each channel with the corresponding brain region is depicted in Fig. 3. Moreover, the Montreal Neurologic Institute (MNI) coordinate for each channel of the NIRSIT Lite device was listed in Table 1. Furthermore, since the size of the device was designed regarding the average head size of Korean people, we assumed that the channel positions corresponded to the same brain regions across all participants.

After acquisition, raw fNIRS signals were preprocessed to eliminate physiological noise and channels with a low signal-to-noise ratio. The dataset was filtered using a bandpass range of 0.005–0.1 Hz. Channels with signal-to-noise ratios of < 30 dB were excluded from the final dataset. Body movement noise was removed by the temporal derivative distribution repair (TDDR) method40. In addition, the channel that has the short distance (< 10 mm) between source and detector was excluded with averaging to regress out extracerebral signals. To calculate the HbO and HbR signal after the filtering, the modified Beer-Lambert law (MBLL) was used with the differential path length factor (DPF) constant value 6 and 5.2 for 780 and 859 nm band41,42.

Feature extraction

Feature extraction from the preprocessed HbO and HbR signal datasets was performed as described below. Because the duration of the eight cognitive tasks varied, we needed to trim the fNIRS data to control the number and temporal sizes of the windows. The task duration of the ‘emotion’ task (i.e., 211 s) was the shortest among 8 tasks, and thus we trimmed the signal data to remain only the first 211 s in all eight tasks. Each feature (i.e., signal mean, median, variance, standard deviation, root mean square, peak, minimum, range, number of peaks, sum of peaks, skewness, and kurtosis) of each task phase was extracted from non-overlapping time windows of 10 s and 60 s. All HbO and HbR signals from 15 channels were averaged before feature extraction. Figure 4 depicts how we extracted the 10-s time windows from the overall task duration. More detailed definitions and calculation formulas of the twelve features are as below:

Signal mean

The mean values of the HbO and HbR signals in each time window were calculated using the following formula:

To calculate µw, the mean value for a given window, the summation of HbO and HbR signal data (denoted HbX) from the start point to the end point of the window was divided by the number of signal values in the window (denoted Nw). Mean signal values have been utilized in numerous fNIRS-BCI studies. For example, Hong et al.43 used the mean signal features to classify brain hemodynamic data from individuals with cognitive and motor impairments.

Signal median

The median values of HbO and HbR concentration signals were selected for each time window. Shin and Jeong44 used the median features of fNIRS data signals from the motor cortex to classify the neural hemodynamic responses during different motor tasks.

Signal variance and standard deviation

The signal variance values of HbO and HbR signal were calculated as follows:

where Variancew indicates the variance of feature values in each time window. Standard deviations were determined by taking the root of variance values. Multiple studies have reported the effectiveness of these features in fNIRS analysis, where neural data are classified into different task phases45,46.

Signal root mean square

The signal root mean square values of the HbO and HbR signals in each time window were calculated as follows:

The RMS feature values have previously been used in fNIRS analyses of emotion induction and language learning processes47,48.

Signal peak and minimum

Peak (highest) and minimum (lowest) values were extracted for each time window. Signal peak and minimum values have been used and validated in several fNIRS-BCI studies, mostly to improve the quality of BCI systems using classification methods49,50,51.

Signal range

The signal ranges were calculated as follows52:

The differences between the highest (denoted Hw) and lowest point (denoted Lw) of the concentration signal values were calculated for each time window.

Number of signal peaks

The number of peak values in each given window were calculated. Khan and Hong53 determined the number of peaks by measuring the local maxima of concentration changes in the HbO signals within each time window.

Sum of signal peaks

Additionally, the sum of all signal peak values were calculated. The local maxima were summed in each window to obtain the total peak values.

Signal skewness

The skewness value Skewnessw was calculated from the signal data in the given windows as follows:

In this formula, the standard deviation (denoted σ) divides the expectation of HbO or HbR signal (denoted EX).

Signal Kurtosis

The signal kurtosis values (denoted Kurtosisw) were calculated from the signal data in the given windows as follows:

Several previous studies have used signal skewness and kurtosis values in their fNIRS studies, both in research on sensory input processing and mental imagery interpretation54,55.

QA based feature selection method

To identify the features most strongly associated with the aforementioned feature sets with CSAT levels, a QA-based feature selection method was applied. This method is executed on quantum processing units (QPU) of D-Wave quantum computing hardware. A D-wave QPU was fundamentally built on the Ising model from statistical mechanics56. The Ising model comprises nodes and edges in a lattice structure. In D-wave quantum hardware, each node was physically converted into a superconducting flux qubit, while two states of qubits (i.e., spin-up and spin-down) were converted into binary values (+ 1 and − 1) in Ising models, respectively. The correlation values between the qubits were calculated to describe their relationships. The qubit states and relationships can be reflected in the objective function of the Ising model as follows:

where \({h}_{i}\) indicates the bias of the \({i}^{th}\) qubit, and \({s}_{i}\) represents the spin of the qubits in the D-wave QPU. The coupling strength between two qubits (\({s}_{i}\) and \({s}_{j}\)) is denoted by \({J}_{i,j}\). This model aims to determine the ground state of a quantum system by minimizing the energy state through an optimization process.

Furthermore, the Ising model can be translated into binary quadratic models for diverse optimization problems. The objective function formula of BQM is similar to that of the Ising model.

where \({Q}_{i,j}\) is elements in the \({i}^{th}\) row and \({j}^{th}\) column of a upper triangular matrix \(Q (Q\in {M}_{m\times n}(R))\) and \({x}_{i}\) represents \({i}^{th}\) binary values (\({x}_{i}\in \{\text{0,1}\}\)) in \(x\). We can determine the optimal solutions with a given coefficient matrix \(Q\) by minimizing the objective function \(f(x)\).

In this study, we calculated mutual information (MI) to reflect the correlation between features, and conditional mutual information (CMI) to apply the relationships between features and dependent variables. MI (8) and CMI (9) were defined as follows:

where \(H(X)\) denotes the marginal entropy of \(X\). It quantifies the amount of information contained in features. And \(H(X|Y)\) denotes the conditional entropy. Negative CMI values were used as diagonal terms, and MI values were applied as upper-triangular terms of the coefficient matrix \(Q\).

The BQM library, a part of the D-Wave Ocean software, transforms the objective function into a graph \(G=(V,E)\), where \(V\) signifies a set of vertices and \(E\) represents a set of edges connecting vertices encoding MIs and CMIs. This graph is embedded into the QPU of the D-Wave hardware. To determine the optimal feature combination, the BQM conducted an iterative optimization process for a specified number of features, denoted by \(k\), ranging from 1 to 168 using 10-s windows (1 to 24 using 60-s windows). Upon completion of the entire process, the relative importance of the features was established by counting their occurrence. For a more comprehensive understanding of graph-level implementations in quantum hardware, please refer to Harris et al.57.

CML models

To examine the feature selection performance of the QA-based algorithms, we utilized CML models as counterparts. Three CML algorithms (support vector machine, XGBoost regressor, and decision tree regressor) were compared to determine the relevant classical counterpart. The support vector machine (SVM) regressor was the first classification algorithm employed20. This supervised learning method specializes in predicting continuous outcomes by employing kernel functions to transform data into high-dimensional spaces. This transformation facilitates the discovery of regression functions that correspond to nonlinear relationships within the dataset. The primary aim is to maximize the margin between data points and a regression line, defining an epsilon (ε)-insensitive tube to penalize deviations beyond this margin. Support vectors, which are crucial data points that shape the position of the regression line, are integral to this process. Optimization occurs by identifying the hyperplane that minimizes the errors within this specified margin.

The decision tree regressor, which is the second classification algorithm, operates by recursively partitioning the dataset into smaller subsets and creating a tree-like structure in which each internal node represents a feature, each branch signifies a decision based on that feature, and each leaf node provides a regression output22. By selecting the most informative features at each step, typically based on criteria such as minimizing the variance or mean squared error, the algorithm constructs a tree to predict continuous target variables. The third algorithm was the XGBoost regressor24. This algorithm is an ensemble-learning technique that sequentially builds decision trees to refine and enhance predictions. By combining multiple shallow decision trees, it creates a robust predictor adept at capturing complex relationships in datasets. Based on operational processes similar to the decision tree algorithms, the predicted values and errors were calculated for each branch of the tree structure. All hyperparameters for three CML algorithms were set randomly (random selection). In addition, to evaluate the model performance with the reflection of the whole dataset, fivefold cross validation was applied in the model evaluation. Under this setting, model performances were checked based on the averaged index values. Applied hyperparameters are listed in Table 2.

Among the three CML algorithms, we selected the XGBoost regressor as a comparison method for the QA-based feature selection based on the regression performance of each algorithm. Detailed experimental results for the three CML models are presented in the Results section. From the two main feature selection methods (i.e., the QA-based feature selection method and XGBoost regressor for comparison), we needed to validate the feature selection results of the two methods with additional criteria.

Validation process with MLR models

As described above, we ranked the feature variables (168 variables from 10 s windows; 24 variables from 60 s windows) collected during each task phase using QA-based feature selection and the CML model (i.e., XGBoost regressor) algorithms. Using these data, we examined the validity of each rank structure and compared the results of the two algorithms. MLR models were used to evaluate the model fitness and performance of each algorithm. Multiple linear regression (MLR) is a common statistical method used to investigate the linear relationship between a dependent variable and multiple independent variables58. To validate the proposed algorithms, the MLR models used the same features and dependent variables as those of the QA-based feature selection algorithms. With 168 variables from 10 s windows, five different variable conditions were set based on the accumulative rank of each variable (i.e., top 10, top 20, top 30, top 40, and top 50). The same excluding two-variable conditions (i.e., top 10 and top 20) were set for 24 variables from the 60 s windows. The following two-step validation process was adopted to confirm that the results show the relative importance of each feature variable:

First, the MLR model fitness of the two algorithms was compared to examine how accurately the ranked features predicted the dependent variables. We calculated the adjusted R-squared value of the MLR models to compare the model fitness of each feature selection algorithm, with and without the stepwise option in the regression model. Higher model fitness values indicated that the model accurately captured the relatedness of the dependent variable (i.e., the CSAT score) and independent variables (i.e., selected features).

Second, the root mean square error (RMSE) values were calculated to compare the regression performance (i.e., model prediction error) of the two algorithms. Lower RMSE values indicate that the models show better regression performance, which means that the feature ranks reflect the relative importance of the features.

Tools

The fNIRS dataset was pre-processed using the NIRIST Lite Analysis Tool (version 3.3.0) and Python software (version 3.11; scikit-learn, version 1.2.1). We used D-Wave Ocean software (Python-based framework) to build a QA-based feature selection algorithm. The MLR models and XGBoost regressors were built and operationalized using Python (version 3.11; scikit-learn, version 1.2.1) and R (version 4.3.1).

Ethical declarations

This study was approved by the Institutional Review Board of Yonsei University (7,001,988-202,104-HR-659–06), and all participants provided informed consent to participate.

Results

To check the reliability of the fNIRS dataset that we collected and analyzed, we checked the cognitive task performances of 100 participants. The summarized cognitive task performance is listed in Table 3.

Algorithm performances of classical machine learning models

We calculated the evaluation metrics (RMSE values) of each of three CML regression models (the support vector machine, decision tree, and XGBoost regressor) to compare their performance. This process was conducted to select the model with the best performance for validating the performance of the QA algorithm. Twelve features of the pre-processed HbO and HbR signal data were used as independent variables to predict the average CSAT score for each participant in the regression process. For all 12 features (signal mean, median, variance, peak, number of peaks, sum of peaks, range, minimum, kurtosis, skewness, standard deviation, and root mean square), the XGBoost regressor showed the best performance (i.e., lowest RMSE values) among the three models for all twelve features. The average RMSE values of the models were as follows: support vector machine, 1.517; decision tree, 1.786; and XGBoost regressor, 1.229. Therefore, the feature selection results from the XGBoost regressor were employed in the validation process of the QA-based algorithm. The RMSE values of the three models are listed in Table 4.

Selected features from the QA-based feature selection algorithm

The QA-based feature selection algorithm ranked 168 variables from 10 s windows and 24 variables from 60 s windows based on relatedness with the average CSAT score. The top ten variables in each feature window were considered when selecting the final cognitive task. The VFT, CBT, and recognition tasks were the most selected cognitive tasks in the top 10 rank order. Tables 5 and 6 show the top 10 results of the cognitive task variables for the 10 s and 60 s windows.

In Table 5, each cell contains information regarding the cognitive task that each feature variable represents, the time window the feature was extracted from (0–20), and the number of times each feature was selected during the feature selection process. For example, ‘recog_9 (159)’ indicates the feature from the tenth window of recognition task phase, which was selected 159 times by quantum annealing algorithm. Table 6 has the same format, but the time windows range from 0 to 2.

Selected features from the XGBoost regression algorithms

The same process was performed using the XGBoost feature selection algorithm. Again, 168 variables from the 10 s windows and 24 variables from the 60 s windows were ranked based on their relatedness to the dependent variable. Among the top ten variables in each ranked feature list, the VFT, recognition, and emotion tasks were the most frequently selected. Tables 7 and 8 show the top 10 results of the cognitive task variables for the 10 s and 60 s windows. In Table 7, each cell contains information regarding the cognitive task the feature variable represents, the time window the feature was extracted from (0–20), and the F-score values. Table 8 again has the same format, but the time windows range from 0 to 2.

Results of three steps in the validation process

In the validation process, we first calculated and compared the model fitness values between the MLR models based on each algorithm (QA and XGBoost). When the model fitness values for the five different variable conditions (i.e., top 10, 20, 30, 40, and 50) were compared, the adjusted R2 values increased when more features were added to the models. In other words, there was an overall pattern of higher model fitness values in the ‘top 50’ condition, which used 50 feature variables to build the model, than in the ‘top 10’ condition, which used only 10 feature variables. Furthermore, when comparing the results from the QA algorithms with those of the XGBoost algorithm, we found that the features from the 60-s windows show higher similarity between the two algorithms. Tables 9 and 10 show the average model fitness (adjusted R2) values for each model and time window.

In Tables 9 and 10, each cell shows the fitness (adjusted R2) values of the QA and XGBoost models under different variable conditions. The adjusted R2 values calculated without the stepwise feature selection method are shown in parentheses.

Second, the model prediction error (RMSE) values of the MLR models were calculated. When the model prediction error values were compared between the two algorithms, the QA algorithm showed better or equal performance to that of XGBoost under the following six feature conditions: signal mean feature, 10 s window; signal mean feature, 60 s window; signal minimum feature, 60 s window; signal peak feature, 60 s window; signal variance feature, 10 s window; and signal variance feature, 60 s window. Tables 11 and 12 show the RMSE values for each model and time window.

Based on the results of the two-step validation process, we selected the cognitive tasks that were most highly associated with CSAT scores based on the ranked features under the following conditions: (1) signal mean, signal minimum, signal peak, and signal variance features and (2) 60-s windows. Table 13 lists the ranked features for the selected conditions. Overall, the VFT, recognition, and CBT tasks were the most frequently selected of the eight cognitive tasks.

Additional analysis: group comparison

For an additional analysis, we divided the participants into two groups based on their CSAT scores, and subsequently compared the results of the selected features from each group. Overall, the group with higher CSAT scores produced signal features while performing the ToL and N-back tasks which were more frequently in the top 10 orders than the group with lower scores. Tables A and B in the Supplementary Materials show the top 10 results of cognitive task variables for the 10 s and 60 s windows, comparing the results from both groups.

Using the selected feature list of the XGBoost algorithm, the results were compared between two groups with different CSAT score populations. Overall, the Stroop, CBT, and ToL task variables were highly associated with the dependent variable in the group with higher CSAT scores than in the group with lower scores. Tables C and D in the Supplementary Materials show the top 10 results of cognitive task variables for the 10 s and 60 s windows, comparing the results from both groups.

Applying the same two-step validation process with group comparison data, the following feature variables (i.e., signal range, 60 s window; signal minimum, 60 s window) in Table 14 showed the highest relatedness with average CSAT scores in each group.

Discussion

The feature selection results from the quantum annealing and classical machine learning models were compared to investigate the potential use of QA-based feature selection algorithms in neuroimaging data analysis. An fNIRS dataset collected during the eight cognitive tasks was used to extract 12 features for each of the two time-window conditions. Subsequently, the two-step validation process using multiple linear regression models was used for the comparison. The final cognitive tasks were selected based on their association with CSAT scores. Furthermore, we conducted a group comparison analysis by dividing the original dataset into two groups based on the average CSAT scores.

In the first validation process, comparing the model fitness (adjusted R2) values of each model, different time window conditions were taken into account. In the 60-s window condition (compared to 10-s), the QA model showed a higher scale of adjusted R2 values, which made it more comparable to the CML model. In previous studies using fNIRS feature extraction methods, authors compared window length conditions to find the proper length of HbO or HbR signals under their research design59,60. Though the window length condition needs to be evaluated with the consideration of research condition, some researchers reported that longer HbO or HbR signals was useful for the classification task with the machine learning algorithm61,62. Based on these reports, we concluded that QA algorithms could offer more reliable results in the longer window condition (i.e., 60-s than 10-s window), and this indicates that the feature selection results of QA algorithms represent a similar trend to that of the CML algorithms.

Second, the model prediction errors (RMSE) of the QA and CML models were compared. The RMSE values of the QA algorithms were lower than or similar to those of the CML algorithms under the four feature variable conditions (i.e., signal mean, signal minimum, signal peak, and signal variance). For the signal mean feature variable, the RMSE values were similar across the two models. Signal mean features have been shown to be important in previous studies analyzing fNIRS data using CML models63,64. Furthermore, under the feature variable conditions of the signal minimum, signal peak, and signal variance, the RMSE values of the QA model were lower than those of the CML model. These three features are widely used in the feature selection process of CML models50,65,66. Thus, the results indicate that the selected feature sets of the QA algorithms are comparable to those of the CML model (i.e., XGBoost regressor) algorithms under the four feature variable conditions.

Through aforementioned processes, we selected cognitive tasks based on the feature selection results of four feature variable conditions in a 60-s time window condition. The VFT, recognition, and CBT tasks were most closely associated with academic achievement (i.e., mean CSAT scores). In agreement with our results, previous studies have found a strong link between verbal fluency and academic achievement67,68. Basic recognition memory capacity is a predictor of academic achievement69. In one study, Siquara and colleagues70 showed that working memory performance measured using the Corsi block tapping task also successfully predicted academic achievement. In summary, three cognitive tasks (i.e., VFT, recognition, and CBT) that are highly linked to academic achievement were selected by the QA algorithms.

In addition, we conducted a group comparison analysis to compare the results between two separate datasets: a group with higher CSAT scores and a group with lower scores. The same two-step validation process was used in this study. Comparing model fitness (adjusted R2) values, we found that the adjusted R2 values of QA algorithms were higher than those of CML algorithms in the following feature variable conditions: signal mean (60-s window), signal minimum (60-s window), and signal range (60-s window). Considering the model prediction error (RMSE) values together, the signal minimum (60-s window) and signal range (60-s window) feature conditions were selected. Here, again, these results showed that better prediction performance can be found under the longer window length condition71. Signal minima and range features have been used in neural hemodynamic data analysis using machine learning models72,73,74.

For the final cognitive tasks, the ToL and N-back tasks were more frequently selected for the group with higher CSAT scores than for their lower counterparts. This indicates that these tasks were more highly associated with the academic achievement of the group with higher CSAT scores than those with lower scores. Both tasks are known predictors of certain kinds of academic and cognitive ability. The Tower of London task, widely known as a measure of executive planning ability, is associated with academic achievement, particularly in mathematics75. Luo and Zhou76 predicted children’s academic achievement levels using EEG biomarkers during N-back tasks.

This study has several implications. First, we applied QA-based algorithms to the feature selection process of neural hemodynamics (i.e., fNIRS) data. The results indicate that QA-based algorithms are comparable to CML algorithms and are feasible for multidimensional neural hemodynamic data analysis. Second, specific cognitive tasks (i.e., recognition, verbal fluency, Tower of London, and N-back) were selected based on the relative importance of feature variables and showed their associations with students’ academic achievement levels (i.e., averaged CSAT scores). While the behavioral performance of cognitive tasks reflects segments of students’ cognitive abilities, brain activation pattern analysis can offer an additional perspective. Furthermore, we conducted a group comparison analysis between two groups with distinct academic achievement levels, and the feature selection results suggested a clear difference. Thus, this study provides a basis for future studies investigating the potential predictors of academic achievement regarding neural activation during cognitive task phases.

This study had several limitations. First, the quantum annealing based quantum hardware can include noises in the NISQ (‘noisy intermediate-scale quantum’) era77. Nevertheless, previous studies have reported the utility of QA-based algorithms in feature-selection tasks using neuroimaging data. Second, our fNIRS dataset was comprised of college students from South Korea. Such findings can only be generalized when samples are collected from various sources. Third, while investigating the cognitive tasks most related to academic achievement levels, we could not fully consider the individual characteristics of each cognitive task. For example, the duration of each task block was not controlled. In future studies, we plan to focus on this issue. Fourth, we used only CML models and not deep learning models to validate the results of the QA-based algorithms. Deep learning algorithms are increasingly employed for multivariate analysis; however, they require additional steps and effort to obtain feature importance values from the models. Therefore, we limited our validation to the CML models. Finally, since we selected cognitive tasks that have the association with CSAT score based on the frequency of feature variables, it is hard to draw additional neuroscientific interpretations in this design. To check more diverse reasons about experimental results of this work, we have a plan to conduct further studies that focusing on the theoretical aspect.

Conclusion

In this study, we investigated how quantum annealing algorithms can be applied in the feature selection process using the fNIRS dataset, which has mostly been conducted with classical machine learning models utilizing D-Wave quantum hardware in prior studies. Three cognitive tasks (VFT, recognition, and CBT) were found to have a relatively high correlation with students’ scholastic abilities (CSAT scores). Our study demonstrates the potential of QA-based algorithms in the feature selection process by analyzing multidimensional brain hemodynamic data. Future studies should employ these results to investigate predictors of academic achievement or, more broadly, cognitive ability.

Data availability

The dataset analyzed during the current study is available from the corresponding author on reasonable request.

References

Stroud, J. B. Intelligence testing. In Educational psychology (ed. Stroud, J. B.) 300–345 (MacMillan Co, 1935).

Cattell, R. B. Theory of fluid and crystallized intelligence: A critical experiment. J. Educ. Psychol. 54, 1 (1963).

Frey, M. C. & Detterman, D. K. Scholastic assessment or g? The relationship between the Scholastic Assessment Test and general cognitive ability. Psychol. Sci. 15, 373–378 (2004).

Rohde, T. E. & Thompson, L. A. Predicting academic achievement with cognitive ability. Intelligence 35, 83–92 (2007).

Meyer, E. M. & Reynolds, M. R. Multidimensional scaling of cognitive ability and academic achievement scores. J. Intell. 10, 117 (2022).

Kirchner, W. K. Age differences in short-term retention of rapidly changing information. J. Exp. Psychol. 55, 352–358. https://doi.org/10.1037/h0043688 (1958).

Shallice, T. Specific impairments of planning. Philos. Trans. R Soc. Lond. B Biol. Sci. 298, 199–209 (1982).

Visu-Petra, L., Cheie, L., Benga, O. & Miclea, M. Cognitive control goes to school: The impact of executive functions on academic performance. Proc.-Soc. Behav. Sci. 11, 240–244 (2011).

Stevenson, H. W. et al. Cognitive performance and academic achievement of Japanese, Chinese, and American children. In 1986 Annual Progress In Child Psychiatry. 324–350 (Routledge, 1991).

Stoodley, C. J., Valera, E. M. & Schmahmann, J. D. Functional topography of the cerebellum for motor and cognitive tasks: An fMRI study. Neuroimage 59, 1560–1570 (2012).

Cui, X. et al. A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. Neuroimage 54, 2808–2821 (2011).

Herff, C. et al. Mental workload during n-back task—quantified in the prefrontal cortex using fNIRS. Front. Hum. Neurosci. 7, 935 (2014).

Irani, F. et al. Functional near infrared spectroscopy (fNIRS): An emerging neuroimaging technology with important applications for the study of brain disorders. Clin. Neuropsychol. 21, 9–37 (2007).

Soltanlou, M. et al. Applications of functional near-infrared spectroscopy (fNIRS) in studying cognitive development: The case of mathematics and language. Front. Psychol. 9, 277 (2018).

Oku, A. Y. A. & Sato, J. R. Predicting student performance using machine learning in fNIRS data. Front Hum Neurosci 15, 622224 (2021).

Khosla, M. et al. Machine learning in resting-state fMRI analysis. Magn. Reson. Imaging 64, 101–121 (2019).

Xu, W. et al. Comparison of feature selection methods based on discrimination and reliability for fMRI decoding analysis. J. Neurosci. Methods 335, 108567 (2020).

Fernandez Rojas, R., Huang, X. & Ou, K. L. A machine learning approach for the identification of a biomarker of human pain using fNIRS. Sci. Rep. 9, 5645 (2019).

Naseer, N., Hong, K. S., Khan, M. J., & Bhutta, M. R. Comparison of artificial neural network and support vector machine classifications for fNIRS-based BCI. In 2015 15th International Conference on Control, Automation and Systems (ICCAS) pp. 1817–1821. (IEEE, 2015).

Hearst, M. A., Dumais, S. T., Osuna, E., Platt, J. & Scholkopf, B. Support vector machines. IEEE Intell. Syst. Appl. 13(4), 18–28 (1998).

Choi, J. et al. Machine learning approach for classifying college scholastic ability test levels with unsupervised features from prefrontal functional near-infrared spectroscopy signals. IEEE Access 10, 50864–50877 (2022).

Quinlan, J. R. Induction of decision trees. Mach. Learn. 1, 81–106 (1986).

Karamzadeh, N. et al. A machine learning approach to identify functional biomarkers in human prefrontal cortex for individuals with traumatic brain injury using functional near-infrared spectroscopy. Brain Behav. 6(11), e00541 (2016).

Chen, T., & Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM Sigkdd international conference on knowledge discovery and data mining pp. 785–794 (2016)

Nath, R. K., Thapliyal, H. & Humble, T. S. Quantum annealing for automated feature selection in stress detection. In 2021 IEEE Computer Society Annual Symposium on VLSI (ISVLSI). 453–457 (IEEE, 2021).

Wierzbiński, M., Falcó-Roget, J. & Crimi, A. Community detection in brain connectomes with hybrid quantum computing. Sci. Rep. 13, 3446 (2023).

Johnson, T. H., Clark, S. R. & Jaksch, D. What is a quantum simulator?. EPJ Quantum Technol. 1, 1–12 (2014).

Corsi, P. M. Human memory and the medial temporal region of the brain.

Manelis, A., Huppert, T. J., Rodgers, E., Swartz, H. A. & Phillips, M. L. ‘The role of the right prefrontal cortex in recognition of facial emotional expressions in depressed individuals: FNIRS study’. J. Affect. Disorders 258, 151–158 (2019).

Schaeffer, J. D. et al. ‘An fNIRS investigation of associative recognition in the prefrontal cortex with a rapid event-related design’. J. Neurosci. Methods 235, 308–315 (2014).

Stroop, J. R. Studies of interference in serial verbal reactions. J. Exp. Psychol. 18, 643 (1935).

Owen, A. M. et al. N-back working memory paradigm: A meta-analysis of normative functional neuroimaging studies. Hum. Brain Mapp. 25, 46–59 (2005).

Shao, Z., Janse, E., Visser, K. & Meyer, A. S. What do verbal fluency tasks measure? Predictors of verbal fluency performance in older adults. Front. Psychol. 5, 772 (2014).

Ono, Y. et al. Motor learning and modulation of prefrontal cortex: An fNIRS assessment. J. Neural Eng. 12(6), 066004 (2015).

Causse, M., Chua, Z., Peysakhovich, V., Del Campo, N. & Matton, N. Mental workload and neural efficiency quantified in the prefrontal cortex using fNIRS. Sci. Rep. 7(1), 5222 (2017).

Kim, J. M. et al. Assessment of cerebral autoregulation using continuous-wave near-infrared spectroscopy during squat-stand maneuvers in subjects with symptoms of orthostatic intolerance. Sci. Rep. 8(1), 13257 (2018).

Shin, J., Kwon, J., Choi, J. & Im, C. H. Performance enhancement of a brain-computer interface using high-density multi-distance NIRS. Sci. Rep. 7(1), 1–10 (2017).

Kim, T. H. et al. Do motion artifact correction algorithms correct “motion”?: direct observation of motion-induced variance after correction. [Poster abstract] Society for fNIRS Conference 2022, Boston, MA, United States.

Singh, A. K., Okamoto, M., Dan, H., Jurcak, V. & Dan, I. Spatial registration of multichannel multi-subject fNIRS data to MNI space without MRI. Neuroimage 27(4), 842–851 (2005).

Fishburn, F. A., Ludlum, R. S., Vaidya, C. J. & Medvedev, A. V. Temporal derivative distribution repair (TDDR): A motion correction method for fNIRS. Neuroimage 184, 171–179 (2019).

Sun, J. et al. Connectivity properties in the prefrontal cortex during working memory: A near-infrared spectroscopy study. J. Biomed. Opt. 24(5), 051410–051410 (2019).

Delpy, D. T. et al. Estimation of optical pathlength through tissue from direct time of flight measurement. Phys. Med. Biol. 33, 1433 (1988).

Hong, K. S., Khan, M. J. & Hong, M. J. Feature extraction and classification methods for hybrid fNIRS-EEG brain-computer interfaces. Front. Hum. Neurosci. 12, 246 (2018).

Shin, J. & Jeong, J. Multiclass classification of hemodynamic responses for performance improvement of functional near-infrared spectroscopy-based brain–computer interface. J. Biomed. Opt. 19(6), 067009–067009 (2014).

Holper, L. & Wolf, M. Single-trial classification of motor imagery differing in task complexity: A functional near-infrared spectroscopy study. J. Neuroeng. Rehabil. 8, 1–13 (2011).

Abibullaev, B., An, J. & Moon, J. I. Neural network classification of brain hemodynamic responses from four mental tasks. Int. J. Optomechatronics 5(4), 340–359 (2011).

Tai, K. & Chau, T. Single-trial classification of NIRS signals during emotional induction tasks: Towards a corporeal machine interface. J. Neuroeng. Rehabil. 6, 1–14 (2009).

Watanabe, K. et al. NIRS-based language learning BCI system. IEEE Sens. J. 16(8), 2726–2734 (2016).

Stangl, M. et al. A haemodynamic brain–computer interface based on real-time classification of near infrared spectroscopy signals during motor imagery and mental arithmetic. J. Near Infrared Spectrosc. 21(3), 157–171 (2013).

Khan, M. J. & Hong, K. S. Hybrid EEG–fNIRS-based eight-command decoding for BCI: Application to quadcopter control. Front. Neurorobot. 11, 6 (2017).

Li, R. et al. Enhancing performance of a hybrid EEG-fNIRS system using channel selection and early temporal features. Front. Hum. Neurosci. 11, 462 (2017).

Buccino, A. P., Keles, H. O. & Omurtag, A. Hybrid EEG-fNIRS asynchronous brain-computer interface for multiple motor tasks. PloS One 11(1), e0146610 (2016).

Khan, M. J. & Hong, K. S. Passive BCI based on drowsiness detection: An fNIRS study. Biomed. Opt. Express 6(10), 4063–4078 (2016).

Hong, K. S. & Santosa, H. Decoding four different sound-categories in the auditory cortex using functional near-infrared spectroscopy. Hear Res. 333, 157–166 (2016).

Hwang, H. J. et al. Toward more intuitive brain–computer interfacing: Classification of binary covert intentions using functional near-infrared spectroscopy. J. Biomed. Opt. 21(9), 091303–091303 (2016).

Cipra, B. A. An introduction to the Ising model. Am. Math. Monthly 94(10), 937–959 (1987).

Harris, R. et al. Experimental demonstration of a robust and scalable flux qubit. Phys. Rev. B 81(13), 134510 (2010).

Uyanık, G. K. & Güler, N. A study on multiple linear regression analysis. Proc.-Soc. Behav. Sci. 106, 234–240 (2013).

Khan, M. A., Ghafoor, U., Yang, D., & Hong, K. S. Reduction in Command Generation Time for fNIRS-Based BCI. In 2022 13th Asian Control Conference (ASCC) pp. 36–41. (IEEE, 2022).

Yoo, S. H., & Hong, K. S. Classification of six sound categories using functional near-infrared spectroscopy. In 2022 13th Asian Control Conference (ASCC) pp. 1–5. (IEEE, 2022).

Tanveer, M. A. et al. Enhanced drowsiness detection using deep learning: an fNIRS study. IEEE Access 7, 137920–137929 (2019).

Khan, M. J. et al. Drowsiness detection using fNIRS in different time windows for a passive BCI. In 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob). 227–231 (IEEE, 2016).

Shamsi, F. & Najafizadeh, L. Multi-class classification of motor execution tasks using fNIRS. In 2019 IEEE Signal Processing in Medicine and Biology Symposium (SPMB). 1–5 (IEEE, 2019).

Al-Shargie, F. et al. Mental stress assessment based on feature level fusion of fNIRS and EEG signals. In 2016 6th International Conference on Intelligent and Advanced Systems (ICIAS). 1–5 (IEEE, 2016).

Firooz, S. & Setarehdan, S. K. IQ estimation by means of EEG-fNIRS recordings during a logical-mathematical intelligence test. Comput. Biol. Med. 110, 218–226 (2019).

Zhu, Y. et al. Classifying major depressive disorder using fNIRS during motor rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 28(4), 961–969 (2020).

Aksamovic, A. et al. Relationship between the verbal fluency and academic achievement in second and third grade students: The effects of gender and parents’ educational level. Acta Neuropsychologica 17, 139–150 (2019).

Kaufmann, S. et al. Semantic fluency including task switching predicts academic success in medical school. PloS One 15(12), e0244456 (2020).

Masrai, A. & Milton, J. Recognition vocabulary knowledge and intelligence as predictors of academic achievement in EFL context. TESOL Int. J. 12(1), 128–142 (2017).

Siquara, G. M., dos Santos Lima, C. & Abreu, N. Working memory and intelligence quotient: Which best predicts on school achievement?. Psico 49(4), 365–374 (2018).

Aghajani, H., Garbey, M. & Omurtag, A. Measuring mental workload with EEG+ fNIRS. Front. Hum. Neurosci. 11, 359 (2017).

Zafar, A., Ghafoor, U., Yaqub, M. A., & Hong, K. S. Initial-dip-based classification for fNIRS-BCI. In Neural Imaging and Sensing. Vol. 10865, 116–124 (SPIE, 2019).

Nazeer, H. et al. Enhancing classification accuracy of fNIRS-BCI using features acquired from vector-based phase analysis. J Neural Eng. 17(5), 056025 (2020).

Liu, J. et al. fNIRS feature extraction and classification in grip-force tasks. In 2021 IEEE International conference on robotics and biomimetics (ROBIO). 1087–1091 (IEEE, 2021).

Elbistan, B. D. B. & Stubbe, T. C. The relationship between problem-solving skills and planning ability by using tower of london test. Open Educ. Stud. 3(1), 226–232 (2021).

Luo, W. & Zhou, R. Can working memory task-related EEG biomarkers measure fluid intelligence and predict academic achievement in healthy children?. Front. Behav. Neurosci. 14, 2 (2020).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Acknowledgements

We would like to thank the Quantum Information Research Support Center at Sungkyunkwan University that provided support regarding D-Wave quantum computing resources for this research.

Funding

This work was supported by the Yonsei Signature Research Cluster Program of 2024 (grant number 2024-22-0166).

Author information

Authors and Affiliations

Contributions

J.G.C. and Y.J.K. contributed to the conception, and design of the study. J.G.C., Y.J.K., B.R.K., Y.W.P., J.H.C., J.K.C. and S.H.H. contributed to the acquisition of data. J.G.C., Y.J.K., J.H.C., and S.H.H. contributed to the analysis and interpretation of the data. J.G.C. and Y.J.K. contributed to the drafting of the manuscript.

Corresponding author

Ethics declarations

Competiseng interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kim, Y., Choi, J., Kim, B. et al. Investigating the relationship between CSAT scores and prefrontal fNIRS signals during cognitive tasks using a quantum annealing algorithm. Sci Rep 14, 19760 (2024). https://doi.org/10.1038/s41598-024-70394-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-70394-7

Keywords

This article is cited by

-

Functional near-infrared spectroscopy for the detection of fear using parameterized quantum circuits

Scientific Reports (2025)