Abstract

Time-based surveys often suffer from missing data for several reasons, such as non-response or data collection limitations. Imputation methods play an essential role in filling in these missing values to secure the accuracy and reliability of the survey outcomes. This manuscript proposes optimal classes of memory type imputation methods for imputing missing data in time-based surveys by utilizing exponentially weighted moving average (EWMA) statistics. Insights into the optimal conditions for applying the proposed methods are provided. A comprehensive examination of the proposed methods utilizing simulated and real-life datasets is conducted. Comparative analyses against the existing imputation methods exhibit the superior performance of our methods, particularly in scenarios characterized by evolving trends and dynamic response patterns. The outcomes highlight the effectiveness of incorporating EWMA statistics into memory type imputation methods and display their flexibility under changing survey dynamics.


Introduction

Accurate estimation of population parameters, such as the population mean, is fundamental in survey research and statistical analysis. However, real-life surveys often encounter the challenge of missing data due to non-response, incomplete reporting, or other data collection issues. Imputation methods play a critical role in enhancing the precision and validity of statistical inferences by providing estimates that account for the missing information. The estimation of the population mean is of particular interest as it serves as a central measure, characterizing the average value of a variable within a population. Robust imputation methods contribute significantly to overcoming the uncertainties and biases associated with missing data, ultimately improving the accuracy of mean estimates and supporting sound decision-making processes. In the last few decades, many imputation methods have been suggested by various authors under different sampling schemes. Three cardinal concepts, namely missing at random (MAR), observed at random (OAR), and parameter distribution (PD), were proposed by Rubin1 in his seminal work. The concepts of MAR and missing completely at random (MCAR) were distinguished by Heitjan and Basu2. Sohail et al.3 developed a class of ratio type estimators for imputing the missing values under ranked set sampling (RSS), while Sohail et al.4 introduced the imputation of missing values utilizing raw moments. Singh et al.5 suggested an improved alternative method for imputing the missing data in sample surveys. Prasad and Yadav6 introduced the imputation of missing data through product type exponential methods. Bhushan et al.7 suggested a procedure for estimating the population mean in the presence of missing data under simple random sampling (SRS).
Bhushan and Kumar8 introduced the imputation of missing data utilizing multi-auxiliary information under RSS, while Kumar et al.9 examined novel logarithmic imputation procedures utilizing multi-auxiliary information under RSS. Rehman et al.10 developed survey methods for novel mean imputation and illustrated the application of the developed methods through the abalone data. Some synthetic imputation methods for the domain mean under SRS were introduced by Bhushan et al.11. An optimal random non-response framework was developed by Bhushan and Pandey12 for estimating the mean on the current occasion.

Let \({\bar{Y}}=N^{-1}\sum _{i=1}^{N}y_i\) be the mean of the study variable y over the population \(\Omega =\{1,2,\ldots ,i,\ldots ,N\}\). To estimate \({\bar{Y}}\), a simple random sample s of size n is chosen without replacement from \(\Omega\). Let r units respond out of the n sampled units such that \(r<n\). Let R and \({\bar{R}}\) be the sets of responding and non-responding units, respectively. The value \(y_i\) is observed if \(i\in R\), while it is missing if \(i \in {\bar{R}}\), in which case an imputed value is computed. We suppose that the imputation is performed with the aid of an auxiliary variable x, such that the value of x for the ith unit, i.e., \(x_i\), is known and positive for every \(i\in s=R\cup {\bar{R}}\). In other words, the data \(x_s=\{x_i: i\in s\}\) are known.

When the additional supplementary data is considered, the imputation methods are separated into the following strategies.

Strategy 1: If \({\bar{X}}\) is known and \({\bar{x}}_{n}\) is used.

Strategy 2: If \({\bar{X}}\) is known and \({\bar{x}}_{r}\) is used.

Strategy 3: If \({\bar{X}}\) is unknown and (\({\bar{x}}_{n}\), \({\bar{x}}_{r}\)) are used.

When there is a positive correlation between the supplementary variable x and the main variable y, the ratio type imputations provide accurate results. Utilizing the notations of Lee et al.13, the conventional ratio imputation methods (CRIMs) and the resultant conventional ratio estimators (CREs) under the above strategies are given as:

Strategy | CRIMs | Resulting CREs |
|---|---|---|
1 | \(y_{{.j}_{r_1}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad\quad \text{for}\ j \in R\\ \frac{1}{n-r}\left[ n{\bar{y}}_{r}\frac{{\bar{X}}}{{\bar{x}}_{n}}-r{\bar{y}}_{r}\right] \qquad\text{for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{r_1}={\bar{y}}_{r}\frac{{\bar{X}}}{{\bar{x}}_{n}}\) |
2 | \(y_{{.j}_{r_2}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad\quad \text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n{\bar{y}}_{r}\frac{{\bar{X}}}{{\bar{x}}_{r}}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{r_2}={\bar{y}}_{r}\frac{{\bar{X}}}{{\bar{x}}_{r}}\) |
3 | \(y_{{.j}_{r_3}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad\quad \text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n{\bar{y}}_{r}\frac{{\bar{x}}_n}{{\bar{x}}_{r}}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{r_3}={\bar{y}}_{r}\frac{{\bar{x}}_n}{{\bar{x}}_{r}}\) |
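The ratio imputation rules in the table above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `ratio_impute`, the use of NaN to mark non-respondents, and the toy numbers in the usage note are hypothetical and do not come from the source.

```python
import numpy as np

def ratio_impute(y, x, X_bar=None, strategy=3):
    """Return (completed y, point estimate) under conventional ratio imputation.

    Missing y-values are marked with NaN (an illustrative convention);
    the auxiliary variable x is assumed fully observed and positive.
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    responded = ~np.isnan(y)
    n, r = y.size, int(responded.sum())
    if not 0 < r < n:
        raise ValueError("need at least one respondent and one non-respondent")
    y_r = y[responded].mean()   # respondent mean of y
    x_r = x[responded].mean()   # respondent mean of x
    x_n = x.mean()              # full-sample mean of x
    if strategy in (1, 2) and X_bar is None:
        raise ValueError("strategies 1 and 2 require the population mean X_bar")
    if strategy == 1:
        ratio = X_bar / x_n
    elif strategy == 2:
        ratio = X_bar / x_r
    elif strategy == 3:
        ratio = x_n / x_r
    else:
        raise ValueError("strategy must be 1, 2 or 3")
    estimate = y_r * ratio
    completed = y.copy()
    # Every non-respondent receives the same value, chosen so that the
    # mean of the completed sample equals the ratio estimator.
    completed[~responded] = (n * estimate - r * y_r) / (n - r)
    return completed, estimate
```

For example, `ratio_impute([10, 12, float("nan"), 15, float("nan")], [5, 6, 7, 8, 9], strategy=3)` returns a completed vector whose mean coincides with the returned estimate, which is the defining property of each row of the table.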

Diana and Perri14 suggested the conventional linear regression imputation methods (CLRIMs) and the resultant conventional linear regression estimators (CLREs) under strategies 1–3 as:

Strategy | CLRIMs | Resulting CLREs |
|---|---|---|
1 | \(y_{{.j}_{1}}= {\left\{ \begin{array}{ll} y_j& \text{ for}\ j \in R\\ {\bar{y}}_{r}+\frac{nb_1}{n-r}({\bar{X}}-{\bar{x}}_{n}) & \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{1}={\bar{y}}_{r}+b_1\left( {\bar{X}}-{\bar{x}}_{n}\right)\) |
2 | \(y_{{.j}_{2}}= {\left\{ \begin{array}{ll} y_j& \text{ for}\ j \in R\\ {\bar{y}}_{r}+\frac{nb_2}{n-r}({\bar{X}}-{\bar{x}}_{r}) & \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{2}={\bar{y}}_{r}+b_2({\bar{X}}-{\bar{x}}_{r})\) |
3 | \(y_{{.j}_{3}}= {\left\{ \begin{array}{ll} y_j& \text{ for}\ j \in R\\ {\bar{y}}_{r}+\frac{nb_3}{n-r}({\bar{x}}_n-{\bar{x}}_{r}) & \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{3}={\bar{y}}_{r}+b_3({\bar{x}}_n-{\bar{x}}_{r})\) |

where \(b_1\), \(b_2\), and \(b_3\) are duly chosen scalars.
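As a quick check of the link between the imputation rule and the resulting estimator, the mean of the completed sample under strategy 1 can be worked out directly (strategies 2 and 3 follow in the same way after swapping the auxiliary term):

$$\begin{aligned} \frac{1}{n}\sum _{j\in s}y_{{.j}_{1}}&=\frac{1}{n}\left[ r{\bar{y}}_{r}+(n-r)\left\{ {\bar{y}}_{r}+\frac{nb_1}{n-r}({\bar{X}}-{\bar{x}}_{n})\right\} \right] \\ &={\bar{y}}_{r}+b_1({\bar{X}}-{\bar{x}}_{n})=t_{1}. \end{aligned}$$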

Singh and Deo15 suggested the power ratio imputation methods (PRIMs) and the resultant power ratio estimators (PREs) under strategies 1–3 as:

Strategy | PRIMs | Resulting PREs |
|---|---|---|
1 | \(y_{{.j}_{4}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n{\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{n}}\right) ^{b_4}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{4}={\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{n}}\right) ^{b_4}\) |
2 | \(y_{{.j}_{5}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n{\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{r}}\right) ^{b_5}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_5={\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{r}}\right) ^{b_5}\) |
3 | \(y_{{.j}_6}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n{\bar{y}}_{r}\left( \frac{{\bar{x}}_n}{{\bar{x}}_{r}}\right) ^{b_6}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_6={\bar{y}}_{r}\left( \frac{{\bar{x}}_n}{{\bar{x}}_{r}}\right) ^{b_6}\) |

where \(b_4\), \(b_5\), and \(b_6\) are suitably chosen scalars.

Searls16 showed that multiplying an estimator by a tuning parameter improves its efficiency. Following Searls’ idea, Bhushan and Pandey17 suggested the Searls linear regression imputation methods (SLRIMs) and the resultant Searls linear regression estimators (SLREs) under the above strategies as:

Strategy | SLRIMs | Resulting SLREs |
|---|---|---|
1 | \(y_{{.j}_{s_1}}= {\left\{ \begin{array}{ll} a_1y_j& \text{ for}\ j \in R\\ a_1{\bar{y}}_{r}+\frac{nb_1}{n-r}({\bar{X}}-{\bar{x}}_{n}) & \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_1}=a_1{\bar{y}}_{r}+b_1\left( {\bar{X}}-{\bar{x}}_{n}\right)\) |
2 | \(y_{{.j}_{s_2}}= {\left\{ \begin{array}{ll} a_2y_j& \text{ for}\ j \in R\\ a_2{\bar{y}}_{r}+\frac{nb_2}{n-r}({\bar{X}}-{\bar{x}}_{r}) & \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_2}=a_2{\bar{y}}_{r}+b_2({\bar{X}}-{\bar{x}}_{r})\) |
3 | \(y_{{.j}_{s_3}}= {\left\{ \begin{array}{ll} a_3 y_j& \text{ for}\ j \in R\\ a_3 {\bar{y}}_{r}+\frac{nb_3}{n-r}({\bar{x}}_n-{\bar{x}}_{r}) & \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_3}=a_3{\bar{y}}_{r}+b_3({\bar{x}}_n-{\bar{x}}_{r})\) |

where \(a_1\), \(a_2\), and \(a_3\) are suitably chosen scalars.
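To see why a Searls-type constant can help, consider the simplest illustrative case, which is not one of the strategies above: the estimator \(t=a{\bar{y}}_{n}\) under SRS with complete response, where \(C_y\) denotes the population coefficient of variation of y and \(\lambda _n=\frac{1}{n}-\frac{1}{N}\). A short worked step gives:

$$\begin{aligned} MSE(a{\bar{y}}_{n})&={\bar{Y}}^2\left[ (a-1)^2+a^2\lambda _nC_y^2\right] ,\\ a_{(opt)}&=\frac{1}{1+\lambda _nC_y^2},\\ minMSE(a{\bar{y}}_{n})&=\frac{{\bar{Y}}^2\lambda _nC_y^2}{1+\lambda _nC_y^2}<{\bar{Y}}^2\lambda _nC_y^2=Var({\bar{y}}_{n}). \end{aligned}$$

The shrinkage introduces a small bias but reduces the MSE, which is the motivation carried over to the SLRIMs.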

Bhushan and Pandey18 suggested Searls power ratio imputation methods (SPRIMs) and the resultant Searls power ratio estimators (SPREs) under strategies 1–3 as:

Strategy | SPRIMs | Resulting SPREs |
|---|---|---|
1 | \(y_{{.j}_{s_4}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad \qquad\quad ~~{\text{ for}}\ j \in R\\ \frac{1}{n-r}\left[ na_{4}{\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{n}}\right) ^{b_{4}}-r{\bar{y}}_{r}\right] \qquad {\text{ for}}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_4}=a_{4}{\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{n}}\right) ^{b_{4}}\) |
2 | \(y_{{.j}_{s_5}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ na_{5}{\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{r}}\right) ^{b_{5}}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_5}=a_{5}{\bar{y}}_{r}\left( \frac{{\bar{X}}}{{\bar{x}}_{r}}\right) ^{b_{5}}\) |
3 | \(y_{{.j}_{s_6}}= {\left\{ \begin{array}{ll} y_j\qquad \qquad \qquad \qquad \qquad\quad ~~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ na_{6}{\bar{y}}_{r}\left( \frac{{\bar{x}}_n}{{\bar{x}}_{r}}\right) ^{b_6}-r{\bar{y}}_{r}\right] \qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_6}=a_{6}{\bar{y}}_{r}\left( \frac{{\bar{x}}_n}{{\bar{x}}_{r}}\right) ^{b_{6}}\) |

where \(a_4\), \(a_5\), and \(a_6\) are suitably chosen scalars.

The mean square error of all resultant CREs, PREs, SLREs, and SPREs is given in Appendix A.

Time-based surveys are a key mechanism for capturing dynamic trends and changes in several domains, such as economic indicators, public opinion, and social behaviors. Very few studies are available in the literature on the estimation of the population mean in time-based surveys utilizing EWMA statistics. Noor-ul-Amin19 introduced the memory type ratio and product estimators for the population mean under SRS employing EWMA statistics. Following Noor-ul-Amin19, the EWMA-based memory type ratio and product estimators for the population mean were suggested under stratified sampling and ranked set sampling by Aslam et al.20 and Aslam et al.21, respectively. Employing EWMA statistics, Qureshi et al.22 introduced memory type ratio and product estimators for the population variance under SRS, while Qureshi et al.23 examined memory type variance estimators in the presence of measurement error. Bhushan et al.24 designed a memory type logarithmic estimator for the population mean based on EWMA statistics. Kumar et al.25 investigated a memory type general class of estimators for the population variance based on EWMA statistics.

However, the reliability of time-based survey outcomes may be compromised when confronted with missing data, a fundamental difficulty arising from non-response or data collection limitations. Traditional imputation methods often struggle to capture the evolving nature of responses in time-series survey data. Memory type imputation methods, which consider the temporal dependencies within the data, show promise in addressing this limitation. Memory type imputation methods take a step further by incorporating EWMA statistics, a technique that assigns varying weights to observations based on their recency. This dynamic weighting enables memory type imputation methods to adapt to changing patterns in survey responses over time, providing a more accurate reflection of the underlying trends.

This study contributes valuable insights for survey practitioners, statisticians, and researchers seeking to enhance the accuracy of imputation strategies in the context of dynamic survey data. The aims of this study are described in the following points:

(i). To adapt some fundamental memory type imputation methods and the corresponding resultant estimators for time-based surveys utilizing EWMA statistics.

(ii). To propose optimal classes of memory type imputation methods and the corresponding estimators for time-based surveys utilizing EWMA statistics.

(iii). To explore the optimal conditions for applying our proposed imputation methods by considering factors such as survey frequency, sample size, and the nature of missing data.

(iv). To present a comprehensive examination of the proposed memory type imputation methods utilizing both simulated and real-life datasets.

(v). To conduct comparative analyses against the existing imputation techniques to showcase the superior performance of our approach, particularly in scenarios characterized by dynamic response patterns and evolving trends.

The remainder of the paper is organized as follows. The EWMA statistics and the notations used are discussed in Section “Exponentially weighted moving average statistics”. The adapted and proposed memory type imputation techniques are presented in Sections “Adapted memory type imputation methods” and “Proposed memory type imputation methods”, respectively, along with their characteristics. Their characteristics are further contrasted with those of the traditional imputation techniques in Section “Efficiency comparisons”. The comprehensive simulation study and the interpretation of the simulation findings are provided in Section “Simulation study”. Section “Applications” provides an illustration of the suggested memory type imputation techniques using real datasets. The conclusion is given in Section “Conclusion”.

Exponentially weighted moving average statistics

Roberts26 introduced the concept of the EWMA statistic as a means to efficiently detect shifts in the mean of a process on statistical quality control charts. It is a statistical technique utilized in time series analysis and signal processing to smooth out the fluctuations in data and highlight underlying trends. It is especially useful in finance, economics, and engineering for analyzing and forecasting trends in a series of data points over time. The EWMA is a type of moving average that gives more weight to recent data points while diminishing the significance of older observations. The main idea is to assign exponentially decreasing weights to past observations. This contrasts with a simple moving average, where all data points are assigned equal weight.

The formulae for calculating the EWMA for the variables (y, x) are given below:

$$\begin{aligned} Z_t&=\kappa {\bar{y}}_t+(1-\kappa )Z_{t-1},\\ Q_t&=\kappa {\bar{x}}_t+(1-\kappa )Q_{t-1}, \end{aligned}$$

where \(Z_t\) and \(Q_t\) are the EWMAs at time t, while \(Z_{t-1}\) and \(Q_{t-1}\) are the EWMAs at time \(t-1\). \(\kappa\) is the smoothing parameter taking values between 0 and 1, while \({\bar{y}}_t\) and \({\bar{x}}_t\) are the current data points at time t. The smoothing parameter \(\kappa\) determines the rate at which the weights decrease exponentially. A higher \(\kappa\) gives more weight to recent observations, making the EWMA more responsive to changes in the data. Conversely, a lower \(\kappa\) places greater emphasis on past observations, resulting in a smoother average that is less sensitive to short-term fluctuations.
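The recursion described above can be sketched as follows, assuming the standard EWMA form \(Z_t=\kappa {\bar{y}}_t+(1-\kappa )Z_{t-1}\). The function name `ewma` and the explicit starting value `start` (playing the role of \(Z_0\)) are illustrative choices; the source does not state how the recursion is initialized.

```python
def ewma(means, kappa, start):
    """Return the EWMA sequence Z_1, ..., Z_T for a series of period means.

    `start` plays the role of Z_0 and is an assumption of this sketch
    (for instance, a prior-period mean could be used).
    """
    if not 0 < kappa <= 1:
        raise ValueError("kappa must lie in (0, 1]")
    stats = []
    prev = start
    for value in means:
        # New statistic: weight kappa on the current mean,
        # weight (1 - kappa) on the previous EWMA.
        prev = kappa * value + (1 - kappa) * prev
        stats.append(prev)
    return stats
```

With `kappa = 1` the function simply returns the current means, so the memory type statistic reduces to the conventional sample mean; smaller values of `kappa` carry more of the past forward.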

Corresponding to conventional imputation methods, the imputed EWMA-based sample mean estimators for the missing data in the study and auxiliary variables (y, x) are as follows:

Moreover, the non-imputed sample means of variables (y, x) based on EWMA are:

For EWMA statistics, the imputation methods are divided into the following strategies.

Strategy 1: If \({\bar{X}}\) is known and \(Q_{tn}\) is used.

Strategy 2: If \({\bar{X}}\) is known and \(Q_{tr}\) is used.

Strategy 3: If \({\bar{X}}\) is unknown and (\(Q_{tn}\), \(Q_{tr}\)) are used.

To derive the mean square error (MSE) of the memory type estimators, let \(Z_{tr}={\bar{Y}}(1+e_0)\), \(Q_{tr}={\bar{X}}(1+e_1)\), and \(Q_{tn}={\bar{X}}(1+e_2)\) such that \(E(e_0)=E(e_1)=E(e_2)=0\), \(E(e_0^2)=\lambda _r\varPhi C_y^2\), \(E(e_1^2)=\lambda _r\varPhi C_x^2\), \(E(e_2^2)=\lambda _n\varPhi C_x^2\), \(E(e_0e_1)=\lambda _r\varPhi \rho _{xy}C_xC_y\), \(E(e_0e_2)=\lambda _n\varPhi \rho _{xy}C_xC_y\), and \(E(e_1e_2)=\lambda _n\varPhi C_x^2\), where \(\varPhi ={\kappa }/{(2-\kappa )}\), \(\lambda _r=\left( \frac{1}{r}-\frac{1}{N}\right)\), \(\lambda _n=\left( \frac{1}{n}-\frac{1}{N}\right)\), and \(\lambda _{rn}=\left( \frac{1}{r}-\frac{1}{n}\right)\). Here \(C_y\) and \(C_x\) denote the population coefficients of variation of y and x, and \(\rho _{xy}\) is the population correlation coefficient between them.
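The constants and second-order moments listed above are simple to evaluate numerically. The helper below is a minimal sketch; the function name `ewma_error_moments` and the dictionary keys are hypothetical, and the input values in the usage note are made up for illustration.

```python
def ewma_error_moments(kappa, N, n, r, C_y, C_x, rho):
    """Evaluate Phi, the lambda terms and the moments of (e0, e1, e2)."""
    phi = kappa / (2 - kappa)      # EWMA factor Phi = kappa / (2 - kappa)
    lam_r = 1 / r - 1 / N          # respondents versus population
    lam_n = 1 / n - 1 / N          # sample versus population
    lam_rn = 1 / r - 1 / n         # respondents versus sample
    return {
        "phi": phi,
        "lam_r": lam_r,
        "lam_n": lam_n,
        "lam_rn": lam_rn,
        "E_e0_sq": lam_r * phi * C_y ** 2,
        "E_e1_sq": lam_r * phi * C_x ** 2,
        "E_e2_sq": lam_n * phi * C_x ** 2,
        "E_e0e1": lam_r * phi * rho * C_x * C_y,
        "E_e0e2": lam_n * phi * rho * C_x * C_y,
        "E_e1e2": lam_n * phi * C_x ** 2,
    }
```

Calling, say, `ewma_error_moments(0.5, 1000, 100, 80, 0.4, 0.5, 0.8)` gives \(\varPhi =1/3\). Setting `kappa = 1` gives \(\varPhi =1\), in which case the moments coincide with their conventional (non-memory) counterparts.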

Adapted memory type imputation methods

To fill the gap in the literature on memory type imputation methods, some prominent fundamental memory type imputations have been adapted along with their properties.

The memory type ratio imputation methods (MTRIMs) and the corresponding resultant memory type ratio estimators (MTREs) are given under strategies 1–3 as follows:

Strategy | MTRIMs | Resulting MTREs |
|---|---|---|
1 | \(y_{{.j}_{r_1}}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left( nZ_{tr}\frac{{\bar{X}}}{Q_{tn}}-rZ_{tr}\right) \quad \qquad ~\text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{r_1}^m=Z_{tr}\frac{{\bar{X}}}{Q_{tn}}\) |
2 | \(y_{{.j}_{r_2}}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left( nZ_{tr}\frac{{\bar{X}}}{Q_{tr}}-rZ_{tr}\right) \qquad \quad ~\text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{r_2}^m=Z_{tr}\frac{{\bar{X}}}{Q_{tr}}\) |
3 | \(y_{{.j}_{r_3}}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad\quad ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left( nZ_{tr}\frac{Q_{tn}}{Q_{tr}}-rZ_{tr}\right) \qquad \quad ~\text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{r_3}^m=Z_{tr}\frac{Q_{tn}}{Q_{tr}}\) |

where the superscript m denotes the “memory” in the above and other imputation methods and the resultant estimators.

Theorem 3.1

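The MSEs of the resultant MTREs are given as

$$\begin{aligned} MSE(t_{r_1}^m)&={\bar{Y}}^2\varPhi (\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y),\\ MSE(t_{r_2}^m)&={\bar{Y}}^2\varPhi \lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y),\\ MSE(t_{r_3}^m)&={\bar{Y}}^2\varPhi (\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y). \end{aligned}$$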

Proof

See Appendix B. \(\square\)
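As a pointer to how such expressions arise, the following is a first-order sketch for strategy 1 only, using the error terms \(e_0\) and \(e_2\) defined earlier; the complete argument is the one in Appendix B.

$$\begin{aligned} t_{r_1}^m-{\bar{Y}}&={\bar{Y}}\left[ (1+e_0)(1+e_2)^{-1}-1\right] \approx {\bar{Y}}(e_0-e_2),\\ MSE(t_{r_1}^m)&\approx {\bar{Y}}^2\left[ E(e_0^2)+E(e_2^2)-2E(e_0e_2)\right] \\ &={\bar{Y}}^2\varPhi (\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y). \end{aligned}$$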

The memory type linear regression imputation methods (MTLRIMs) and the resultant memory type linear regression estimators (MTLREs) under the above strategies are defined as:

Strategy | MTLRIMs | Resulting MTLREs |
|---|---|---|
1 | \(y_{{.j}_{1}}^m= {\left\{ \begin{array}{ll} Z_{tj}& \hbox { for}\ j \in R\\ Z_{tr}+\frac{n\beta _1}{n-r}({\bar{X}}-Q_{tn}) & \hbox { for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{1}^m=Z_{tr}+\beta _1\left( {\bar{X}}-Q_{tn}\right)\) |
2 | \(y_{{.j}_2}^m= {\left\{ \begin{array}{ll} Z_{tj}& \hbox { for}\ j \in R\\ Z_{tr}+\frac{n\beta _2}{n-r}({\bar{X}}-Q_{tr}) & \hbox { for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_2^m=Z_{tr}+\beta _2\left( {\bar{X}}-Q_{tr}\right)\) |
3 | \(y_{{.j}_3}^m= {\left\{ \begin{array}{ll} Z_{tj}& \hbox { for}\ j \in R\\ Z_{tr}+\frac{n\beta _3}{n-r}(Q_{tn}-Q_{tr}) & \hbox { for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_3^m=Z_{tr}+\beta _3\left( Q_{tn}-Q_{tr}\right)\) |

where \(\beta _1\), \(\beta _2\), and \(\beta _3\) are suitably chosen scalars.

Theorem 3.2

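The minimum MSEs of the resultant MTLREs are given as

$$\begin{aligned} minMSE(t_{1}^m)&={\bar{Y}}^2\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2),\\ minMSE(t_{2}^m)&={\bar{Y}}^2\varPhi \lambda _rC_y^2(1-\rho _{xy}^2),\\ minMSE(t_{3}^m)&={\bar{Y}}^2\varPhi C_y^2\{\lambda _{n}+\lambda _{rn}(1-\rho _{xy}^2)\}. \end{aligned}$$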

Proof

See Appendix B. \(\square\)
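For orientation, the minimization behind the strategy 1 result can be sketched as follows, using the error terms defined earlier. This is an outline only, and the optimum value of \(\beta _1\) shown here is obtained from this sketch rather than quoted from Appendix B.

$$\begin{aligned} t_{1}^m-{\bar{Y}}&={\bar{Y}}e_0-\beta _1{\bar{X}}e_2,\\ MSE(t_{1}^m)&={\bar{Y}}^2\varPhi \lambda _rC_y^2+\beta _1^2{\bar{X}}^2\varPhi \lambda _nC_x^2-2\beta _1{\bar{Y}}{\bar{X}}\varPhi \lambda _n\rho _{xy}C_xC_y,\\ \beta _{1(opt)}&=\frac{{\bar{Y}}\rho _{xy}C_y}{{\bar{X}}C_x},\\ minMSE(t_{1}^m)&={\bar{Y}}^2\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2). \end{aligned}$$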

The memory type power ratio imputation methods (MTPRIMs) and the corresponding resultant memory type power ratio estimators (MTPREs) are given under strategies 1–3 as follows:

Strategy | MTPRIMs | Resulting MTPREs |
|---|---|---|
1 | \(y_{{.j}_4}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad \qquad\quad\; ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ nZ_{tr}\left( \frac{{\bar{X}}}{Q_{tn}}\right) ^{\beta _4}-rZ_{tr}\right] \quad ~\qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_4^m=Z_{tr}\left( \frac{{\bar{X}}}{Q_{tn}}\right) ^{\beta _4}\) |
2 | \(y_{{.j}_5}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad \qquad\quad\; ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ nZ_{tr}\left( \frac{{\bar{X}}}{Q_{tr}}\right) ^{\beta _5}-rZ_{tr}\right] \quad ~\qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_5^m=Z_{tr}\left( \frac{{\bar{X}}}{Q_{tr}}\right) ^{\beta _5}\) |
3 | \(y_{{.j}_6}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad \qquad\quad\; ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ nZ_{tr}\left( \frac{Q_{tn}}{Q_{tr}}\right) ^{\beta _6}-rZ_{tr}\right] \quad ~\qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_6^m=Z_{tr}\left( \frac{Q_{tn}}{Q_{tr}}\right) ^{\beta _6}\) |

where \(\beta _4\), \(\beta _5\), and \(\beta _6\) are suitably chosen scalars.

Theorem 3.3

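The minimum MSEs of the resultant MTPREs are given as

$$\begin{aligned} minMSE(t_{4}^m)&={\bar{Y}}^2\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2),\\ minMSE(t_{5}^m)&={\bar{Y}}^2\varPhi \lambda _rC_y^2(1-\rho _{xy}^2),\\ minMSE(t_{6}^m)&={\bar{Y}}^2\varPhi C_y^2\{\lambda _{n}+\lambda _{rn}(1-\rho _{xy}^2)\}. \end{aligned}$$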

Proof

See Appendix B. \(\square\)

Remark 3.1

The minimum MSEs of the resultant MTPREs are equal to the minimum MSEs of the resultant MTLREs in the corresponding strategies.
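The equality stated in the remark can be checked numerically for strategy 1. The sketch below assumes the first-order MSE of \(t_4^m\), namely \({\bar{Y}}^2\varPhi (\lambda _rC_y^2+\beta _4^2\lambda _nC_x^2-2\beta _4\lambda _n\rho _{xy}C_xC_y)\), which follows from the moments defined earlier; the function names and the parameter values used in the usage note are hypothetical.

```python
def sampling_terms(kappa, N, n, r):
    """Return (Phi, lambda_r, lambda_n, lambda_rn)."""
    phi = kappa / (2 - kappa)
    return phi, 1 / r - 1 / N, 1 / n - 1 / N, 1 / r - 1 / n

def min_mse_regression_s1(Y_bar, C_y, rho, kappa, N, n, r):
    """Minimum MSE of the memory type regression estimator, strategy 1."""
    phi, lam_r, lam_n, _ = sampling_terms(kappa, N, n, r)
    return Y_bar ** 2 * phi * C_y ** 2 * (lam_r - lam_n * rho ** 2)

def mse_power_ratio_s1(beta, Y_bar, C_y, C_x, rho, kappa, N, n, r):
    """First-order MSE of the memory type power ratio estimator, strategy 1."""
    phi, lam_r, lam_n, _ = sampling_terms(kappa, N, n, r)
    return Y_bar ** 2 * phi * (
        lam_r * C_y ** 2
        + beta ** 2 * lam_n * C_x ** 2
        - 2 * beta * lam_n * rho * C_x * C_y
    )
```

Evaluating `mse_power_ratio_s1` at `beta = rho * C_y / C_x` reproduces `min_mse_regression_s1` for the same inputs, and any other value of `beta` gives a larger MSE, which is the content of the remark for strategy 1.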

Proposed memory type imputation methods

EWMA is frequently utilized in various fields such as signal processing, finance, and quality control. To provide efficient imputation methods for time-based surveys in the presence of missing data, we propose optimal classes of memory type Searls linear regression imputation methods (MTSLRIMs) and memory type Searls power ratio imputation methods (MTSPRIMs) along with the resultant memory type Searls linear regression estimators (MTSLREs) and memory type Searls power ratio estimators (MTSPREs), respectively, under strategies 1–3.

MTSLRIMs and resultant MTSLREs

The MTSLRIMs and the resultant MTSLREs under strategies 1–3 are given as follows:

Strategy | MTSLRIMs | Resulting MTSLREs |
|---|---|---|
1 | \(y_{{.j}_{s_1}}^m= {\left\{ \begin{array}{ll} \alpha _1Z_{tj}& \hbox { for}\ j \in R\\ \alpha _1Z_{tr}+\frac{n\beta _1}{n-r}({\bar{X}}-Q_{tn}) & \hbox { for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_1}^m=\alpha _1Z_{tr}+\beta _1\left( {\bar{X}}-Q_{tn}\right)\) |
2 | \(y_{{.j}_{s_2}}^m= {\left\{ \begin{array}{ll} \alpha _2Z_{tj}& \hbox { for}\ j \in R\\ \alpha _2Z_{tr}+\frac{n\beta _2}{n-r}({\bar{X}}-Q_{tr}) & \hbox { for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_2}^m=\alpha _2Z_{tr}+\beta _2\left( {\bar{X}}-Q_{tr}\right)\) |
3 | \(y_{{.j}_{s_3}}^m= {\left\{ \begin{array}{ll} \alpha _3Z_{tj}& \hbox { for}\ j \in R\\ \alpha _3Z_{tr}+\frac{n\beta _3}{n-r}(Q_{tn}-Q_{tr}) & \hbox { for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_3}^m=\alpha _3Z_{tr}+\beta _3\left( Q_{tn}-Q_{tr}\right)\) |

where \(\alpha _1\), \(\alpha _2\), and \(\alpha _3\) are suitably chosen scalars.

Theorem 4.1

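The minimum MSEs of the resultant MTSLREs are given as

$$\begin{aligned} minMSE(t_{s_i}^m)={\bar{Y}}^2(1-\alpha _{i(opt)}),\quad i=1,2,3, \end{aligned}$$

where \(\alpha _{i(opt)}\) denotes the optimum value of \(\alpha _i\).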

Proof

See Appendix C. \(\square\)
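The structure of this result can be illustrated for strategy 1 with a short sketch based on the error terms defined earlier. The closed form of \(\alpha _{1(opt)}\) below is derived within this sketch, with \(\beta _1\) set to its optimum for a given \(\alpha _1\), and is not quoted from Appendix C; the shorthand \(A_1\) is introduced here only for convenience.

$$\begin{aligned} t_{s_1}^m-{\bar{Y}}&={\bar{Y}}(\alpha _1-1)+\alpha _1{\bar{Y}}e_0-\beta _1{\bar{X}}e_2,\\ MSE(t_{s_1}^m)&={\bar{Y}}^2\left[ (\alpha _1-1)^2+\alpha _1^2\varPhi \lambda _rC_y^2\right] +\beta _1^2{\bar{X}}^2\varPhi \lambda _nC_x^2-2\alpha _1\beta _1{\bar{Y}}{\bar{X}}\varPhi \lambda _n\rho _{xy}C_xC_y,\\ \beta _{1(opt)}&=\alpha _1\frac{{\bar{Y}}\rho _{xy}C_y}{{\bar{X}}C_x}\ \Rightarrow \ MSE(t_{s_1}^m)={\bar{Y}}^2\left[ (\alpha _1-1)^2+\alpha _1^2A_1\right] ,\quad A_1=\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2),\\ \alpha _{1(opt)}&=\frac{1}{1+A_1},\qquad minMSE(t_{s_1}^m)=\frac{{\bar{Y}}^2A_1}{1+A_1}={\bar{Y}}^2(1-\alpha _{1(opt)}). \end{aligned}$$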

MTSPRIMs and resultant MTSPREs

The MTSPRIMs and the resultant MTSPREs under strategies 1–3 are given as follows:

Strategy | MTSPRIMs | Resulting MTSPREs |
|---|---|---|
1 | \(y_{{.j}_{s_4}}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad \qquad\quad\; ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n\alpha _4Z_{tr}\left( \frac{Q_{tn}}{{\bar{X}}}\right) ^{\beta _4}-rZ_{tr}\right] \quad ~\qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_4}^m=\alpha _4Z_{tr}\left( \frac{Q_{tn}}{{\bar{X}}}\right) ^{\beta _4}\) |
2 | \(y_{{.j}_{s_5}}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad \qquad\quad\; ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n\alpha _5Z_{tr}\left( \frac{Q_{tr}}{{\bar{X}}}\right) ^{\beta _5}-rZ_{tr}\right] \quad ~\qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_5}^m=\alpha _5Z_{tr}\left( \frac{Q_{tr}}{{\bar{X}}}\right) ^{\beta _5}\) |
3 | \(y_{{.j}_{s_6}}^m= {\left\{ \begin{array}{ll} Z_{tj}\qquad \qquad \qquad \qquad \qquad \qquad\quad\; ~\text{ for}\ j \in R\\ \frac{1}{n-r}\left[ n\alpha _6Z_{tr}\left( \frac{Q_{tr}}{Q_{tn}}\right) ^{\beta _6}-rZ_{tr}\right] \quad ~\qquad \text{ for}\ j \in {\bar{R}} \end{array}\right. }\) | \(t_{s_6}^m=\alpha _6Z_{tr}\left( \frac{Q_{tr}}{Q_{tn}}\right) ^{\beta _6}\) |

where \(\alpha _4\), \(\alpha _5\), and \(\alpha _6\) are suitably chosen scalars.

Theorem 4.2

The minimum MSEs of the resultant MTSPREs are given as

$$\begin{aligned} minMSE(t_{s_j}^m)={\bar{Y}}^2\left( 1-\frac{M_j^2}{L_j}\right) ,\quad j=4,5,6, \end{aligned}$$

where \(L_4=1+\varPhi \lambda _rC_y^2+\beta _4(2\beta _4+1)\varPhi \lambda _nC_x^2-4\beta _4\varPhi \lambda _n\rho _{xy}C_xC_y\), \(M_4=1+\frac{\beta _4(\beta _4+1)}{2}\varPhi \lambda _nC_x^2-\beta _4\varPhi \lambda _n\rho _{xy}C_xC_y\), \(L_5=1+\varPhi \lambda _rC_y^2+\beta _5(2\beta _5+1)\varPhi \lambda _rC_x^2-4\beta _5\varPhi \lambda _r\rho _{xy}C_xC_y\), \(M_5=1+\frac{\beta _5(\beta _5+1)}{2}\varPhi \lambda _rC_x^2-\beta _5\varPhi \lambda _r\rho _{xy}C_xC_y\), \(L_6=1+\varPhi \lambda _rC_y^2+\beta _6(2\beta _6+1)\varPhi \lambda _{rn}C_x^2-4\beta _6\varPhi \lambda _{rn}\rho _{xy}C_xC_y\), and \(M_6=1+\frac{\beta _6(\beta _6+1)}{2}\varPhi \lambda _{rn}C_x^2-\beta _6\varPhi \lambda _{rn}\rho _{xy}C_xC_y\).

Proof

See Appendix C. \(\square\)
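The quantities \(L_j\) and \(M_j\) lend themselves to a small numerical helper. The sketch below is illustrative only: it assumes that the factor \(\varPhi\) multiplies every second-order term (as the moments defined earlier imply), that the MSE for a fixed exponent has the quadratic form \({\bar{Y}}^2(\alpha ^2L-2\alpha M+1)\), and that the optimum constant is therefore \(M/L\). The function names and the argument `lam_x` (to be set to \(\lambda _n\), \(\lambda _r\), or \(\lambda _{rn}\) for strategies 1, 2, or 3) are hypothetical.

```python
def l_and_m(beta, C_y, C_x, rho, kappa, lam_r, lam_x):
    """Return (L, M) for a given exponent beta.

    lam_x is the lambda attached to the auxiliary terms:
    lambda_n, lambda_r or lambda_rn for strategies 1, 2 or 3.
    """
    phi = kappa / (2 - kappa)
    L = (1 + phi * lam_r * C_y ** 2
         + beta * (2 * beta + 1) * phi * lam_x * C_x ** 2
         - 4 * beta * phi * lam_x * rho * C_x * C_y)
    M = (1 + beta * (beta + 1) / 2 * phi * lam_x * C_x ** 2
         - beta * phi * lam_x * rho * C_x * C_y)
    return L, M

def mtspre_mse(alpha, Y_bar, L, M):
    """Assumed quadratic form of the MSE in the Searls constant alpha."""
    return Y_bar ** 2 * (alpha ** 2 * L - 2 * alpha * M + 1)

def mtspre_min_mse(Y_bar, L, M):
    """Return (alpha_opt, minimum MSE) = (M / L, Y_bar^2 (1 - M^2 / L))."""
    return M / L, Y_bar ** 2 * (1 - M ** 2 / L)
```

Under these assumptions, `mtspre_mse` evaluated at `alpha = M / L` equals the second value returned by `mtspre_min_mse`, and moving `alpha` away from `M / L` in either direction increases the MSE.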

Efficiency comparisons

In this section, the resultant MTSLREs and MTSPREs are compared with the conventional and adapted memory type estimators under two subsections. In Section “Comparison of MTSLREs with the conventional and memory type estimators”, the MTSLREs are compared with the conventional and memory type estimators, while, in Section “Comparison of MTSPREs with the conventional and memory type estimators”, the MTSPREs are compared with the conventional and memory type estimators.

Comparison of MTSLREs with the conventional and memory type estimators

Comparison of MTSLREs with CREs

(i). The MTSLRE \(t_{s_1}^m\) dominates the CRE \(t_{r_1}\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_1}^m)<MSE(t_{r_1})\\ &{\bar{Y}}^2(1-\alpha _{1(opt)})<{\bar{Y}}^2(\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y)\\ &\alpha _{1(opt)}>1-(\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y) \end{aligned}$$

(ii). The MTSLRE \(t_{s_2}^m\) dominates the CRE \(t_{r_2}\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_2}^m)<MSE(t_{r_2})\\& {\bar{Y}}^2(1-\alpha _{2(opt)})<{\bar{Y}}^2\lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y)\\& \alpha _{2(opt)}>1-\lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y) \end{aligned}$$

(iii). The MTSLRE \(t_{s_3}^m\) dominates the CRE \(t_{r_3}\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_3}^m)<MSE(t_{r_3})\\& {\bar{Y}}^2(1-\alpha _{3(opt)})<{\bar{Y}}^2(\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y)\\& \alpha _{3(opt)}>1-(\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y) \end{aligned}$$

Comparison of MTSLREs with CLREs

(i). The MTSLRE \(t_{s_1}^m\) dominates the CLRE \(t_{1}\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_1}^m)<MSE(t_{1})\\& \alpha _{1(opt)}>1-C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$

(ii). The MTSLRE \(t_{s_2}^m\) dominates the CLRE \(t_{2}\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_2}^m)<MSE(t_2)\\& \alpha _{2(opt)}>1-C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$

(iii). The MTSLRE \(t_{s_3}^m\) dominates the CLRE \(t_{3}\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_3}^m)<MSE(t_3)\\& \alpha _{3(opt)}>1-\{\lambda _{n}C_y^2+\lambda _{rn}C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

Comparison of MTSLREs with PREs

(i). The MTSLRE \(t_{s_1}^m\) dominates the PRE \(t_{4}\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_1}^m)<MSE(t_{4})\\& \alpha _{1(opt)}>1-C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$

(ii). The MTSLRE \(t_{s_2}^m\) dominates the PRE \(t_{5}\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_2}^m)<MSE(t_5)\\& \alpha _{2(opt)}>1-C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$

(iii). The MTSLRE \(t_{s_3}^m\) dominates the PRE \(t_{6}\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_3}^m)<MSE(t_6)\\& \alpha _{3(opt)}>1-\{\lambda _{n}C_y^2+\lambda _{rn}C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

Comparison of MTSLREs with SLREs

(i). The MTSLREs \(t_{s_i}^m,~i=1,2,3\) dominate the corresponding SLREs \(t_{s_i}\) under strategies 1–3, if

$$\begin{aligned} &MSE(t_{s_i}^m)<MSE(t_{s_i})\\& \alpha _{i(opt)}>a_{i(opt)} \end{aligned}$$

Comparison of MTSLREs with SPREs

(i). The MTSLREs \(t_{s_i}^m,~i=1,2,3\) dominate the corresponding SPREs \(t_{s_j},~j=4,5,6\) under strategies 1–3, if

$$\begin{aligned} &MSE(t_{s_i}^m)<MSE(t_{s_j})\\& \alpha _{i(opt)}>\frac{Q_j^2}{P_j} \end{aligned}$$

Comparison of MTSLREs with MTREs

(i). The MTSLRE \(t_{s_1}^m\) dominates the MTRE \(t_{r_1}^m\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_1}^m)<MSE(t_{r_1}^m)\\& \alpha _{1(opt)}>1-\varPhi (\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y) \end{aligned}$$

(ii). The MTSLRE \(t_{s_2}^m\) dominates the MTRE \(t_{r_2}^m\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_2}^m)<MSE(t_{r_2}^m)\\& \alpha _{2(opt)}>1-\varPhi \lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y) \end{aligned}$$

(iii). The MTSLRE \(t_{s_3}^m\) dominates the MTRE \(t_{r_3}^m\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_3}^m)<MSE(t_{r_3}^m)\\& \alpha _{3(opt)}>1-\varPhi (\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y) \end{aligned}$$

Comparison of MTSLREs with MTLREs

(i). The MTSLRE \(t_{s_1}^m\) dominates the MTLRE \(t_{1}^m\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_1}^m)<MSE(t_{1}^m)\\& \alpha _{1(opt)}>1-\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$

(ii). The MTSLRE \(t_{s_2}^m\) dominates the MTLRE \(t_{2}^m\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_2}^m)<MSE(t_2^m)\\& \alpha _{2(opt)}>1-\varPhi C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$

(iii). The MTSLRE \(t_{s_3}^m\) dominates the MTLRE \(t_{3}^m\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_3}^m)<MSE(t_3^m)\\& \alpha _{3(opt)}>1-\varPhi \{\lambda _{n}C_y^2+\lambda _{rn}C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

Comparison of MTSLREs with MTPREs

(i). The MTSLRE \(t_{s_1}^m\) dominates the MTPRE \(t_{4}^m\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_1}^m)<MSE(t_{4}^m)\\& \alpha _{1(opt)}>1-\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$

(ii). The MTSLRE \(t_{s_2}^m\) dominates the MTPRE \(t_{5}^m\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_2}^m)<MSE(t_5^m)\\& \alpha _{2(opt)}>1-\varPhi C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$

(iii). The MTSLRE \(t_{s_3}^m\) dominates the MTPRE \(t_{6}^m\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_3}^m)<MSE(t_6^m)\\& \alpha _{3(opt)}>1-\varPhi \{\lambda _{n}C_y^2+\lambda _{rn}C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$
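A dominance condition of this kind can be checked numerically once the population quantities are fixed. The sketch below does so for strategy 1 against the memory type regression (equivalently, power ratio) estimator. It assumes the closed form \(\alpha _{1(opt)}=1/(1+A_1)\) with \(A_1=\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2)\), which follows from minimizing \({\bar{Y}}^2[(\alpha _1-1)^2+\alpha _1^2A_1]\) and is an assumption of this sketch rather than a formula quoted from the source; the function name and inputs are hypothetical.

```python
def compare_strategy1(Y_bar, C_y, rho, kappa, N, n, r):
    """Compare the Searls-type and plain memory type regression estimators.

    Returns (alpha_opt, MSE of Searls-type estimator,
             MSE of plain regression estimator, dominance flag).
    """
    phi = kappa / (2 - kappa)
    lam_r = 1 / r - 1 / N
    lam_n = 1 / n - 1 / N
    # Relative minimum MSE of the plain memory type regression estimator.
    A1 = phi * C_y ** 2 * (lam_r - lam_n * rho ** 2)
    alpha_opt = 1 / (1 + A1)          # assumed closed form (see lead-in)
    mse_searls = Y_bar ** 2 * (1 - alpha_opt)
    mse_plain = Y_bar ** 2 * A1
    # Condition from the text: alpha_opt > 1 - Phi C_y^2 (lam_r - lam_n rho^2)
    dominates = alpha_opt > 1 - A1
    return alpha_opt, mse_searls, mse_plain, dominates
```

For instance, `compare_strategy1(50.0, 0.4, 0.8, 0.25, 1000, 100, 80)` reports that the condition holds and that the Searls-type MSE is the smaller of the two; under the assumed closed form this is true whenever \(A_1>0\).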

Comparison of MTSPREs with the conventional and memory type estimators

-

Comparison of MTSPREs with CREs

-

(i).

The MTSPRE \(t_{s_4}^m\) dominates the CRE \(t_{r_1}\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{r_1})\\& {\bar{Y}}^2\left( 1-\frac{M_4^2}{L_4}\right)<{\bar{Y}}^2(\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y)\\& \frac{M_4^2}{L_4}>1-(\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y) \end{aligned}$$ -

(ii).

The MTSPRE \(t_{s_5}^m\) dominates the CRE \(t_{r_2}\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_5}^m)<MSE(t_{r_2})\\& {\bar{Y}}^2\left( 1-\frac{M_5^2}{L_5}\right)<{\bar{Y}}^2\lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y)\\& \frac{M_5^2}{L_5}>1-\lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y) \end{aligned}$$ -

(iii).

The MTSPRE \(t_{s_6}^m\) dominates the CRE \(t_{r_3}\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_6}^m)<MSE(t_{r_3})\\& {\bar{Y}}^2\left( 1-\frac{M_6^2}{L_6}\right)<{\bar{Y}}^2(\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y)\\& \frac{M_6^2}{L_6}>1-(\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y) \end{aligned}$$

-

Comparison of MTSPREs with CLREs

-

(i).

The MTSPRE \(t_{s_4}^m\) dominates the CLRE \(t_{1}\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{1})\\& \frac{M_4^2}{L_4}>1-C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$ -

(ii).

The MTSPRE \(t_{s_5}^m\) dominates the CLRE \(t_{2}\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_5}^m)<MSE(t_2)\\& \frac{M_5^2}{L_5}>1-C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$ -

(iii).

The MTSPRE \(t_{s_6}^m\) dominates the CLRE \(t_{3}\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_6}^m)<MSE(t_3)\\& \frac{M_6^2}{L_6}>1-\{\lambda _{n}C_y^2+\lambda _{rn}{\bar{Y}}^2C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

-

Comparison of MTSPREs with PREs

-

(i).

The MTSPRE \(t_{s_4}^m\) dominates the PRE \(t_{4}\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{4})\\& \frac{M_4^2}{L_4}>1-C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$ -

(ii).

The MTSPRE \(t_{s_5}^m\) dominates the PRE \(t_{5}\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_5}^m)<MSE(t_5)\\& \frac{M_5^2}{L_5}>1-C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$ -

(iii).

The MTSPRE \(t_{s_6}^m\) dominates the PRE \(t_{6}\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_6}^m)<MSE(t_6)\\& \frac{M_6^2}{L_6}>1-\{\lambda _{n}C_y^2+\lambda _{rn}{\bar{Y}}^2C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

-

Comparison of MTSPREs with SLREs

-

(i).

The MTSPREs \(t_{s_j}^m,~j=4,5,6\) dominate the corresponding SLREs \(t_{s_i},~i=1,2,3\) under strategies 1–3, if

$$\begin{aligned} &MSE(t_{s_j}^m)<MSE(t_{s_i})\\& \frac{M_j^2}{L_j}>a_{i(opt)} \end{aligned}$$

-

Comparison of MTSPREs with SPREs

-

(i).

The MTSPREs \(t_{s_j}^m,~j=4,5,6\) dominate the corresponding SPREs \(t_{s_j}\) under strategies 1–3, if

$$\begin{aligned} &MSE(t_{s_j}^m)<MSE(t_{s_j})\\& \frac{M_j^2}{L_j}>\frac{Q_j^2}{P_j} \end{aligned}$$

-

Comparison of MTSPREs with MTREs

-

(i).

The MTSPRE \(t_{s_4}^m\) dominates the MTRE \(t_{r_1}^m\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{r_1}^m)\\& \frac{M_4^2}{L_4}>1-\varPhi (\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y) \end{aligned}$$ -

(ii).

The MTSPRE \(t_{s_5}^m\) dominates the MTRE \(t_{r_2}^m\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_5}^m)<MSE(t_{r_2}^m)\\& \frac{M_5^2}{L_5}>1-\varPhi \lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y) \end{aligned}$$ -

(iii).

The MTSPRE \(t_{s_6}^m\) dominates the MTRE \(t_{r_3}^m\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_6}^m)<MSE(t_{r_3}^m)\\& \frac{M_6^2}{L_6}>1-\varPhi (\lambda _rC_y^2+\lambda _{rn}C_x^2-2\lambda _{rn}\rho _{xy}C_xC_y) \end{aligned}$$

-

Comparison of MTSPREs with MTLREs

-

(i).

The MTSPRE \(t_{s_4}^m\) dominates the MTLRE \(t_{1}^m\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{1}^m)\\& \frac{M_4^2}{L_4}>1-\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$ -

(ii).

The MTSPRE \(t_{s_5}^m\) dominates the MTLRE \(t_{2}^m\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_5}^m)<MSE(t_2^m)\\& \frac{M_5^2}{L_5}>1-\varPhi C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$ -

(iii).

The MTSPRE \(t_{s_6}^m\) dominates the MTLRE \(t_{3}^m\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_6}^m)<MSE(t_3^m)\\& \frac{M_6^2}{L_6}>1-\varPhi \{\lambda _{n}C_y^2+\lambda _{rn}{\bar{Y}}^2C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

-

Comparison of MTSPREs with MTPREs

-

(i).

The MTSPRE \(t_{s_4}^m\) dominates the MTPRE \(t_{4}^m\) under strategy 1, if

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{4}^m)\\& \frac{M_4^2}{L_4}>1-\varPhi C_y^2(\lambda _r-\lambda _n\rho _{xy}^2) \end{aligned}$$ -

(ii).

The MTSPRE \(t_{s_5}^m\) dominates the MTPRE \(t_{5}^m\) under strategy 2, if

$$\begin{aligned} &MSE(t_{s_5}^m)<MSE(t_5^m)\\& \frac{M_5^2}{L_5}>1-\varPhi C_y^2\lambda _r(1-\rho _{xy}^2) \end{aligned}$$ -

(iii).

The MTSPRE \(t_{s_6}^m\) dominates the MTPRE \(t_{6}^m\) under strategy 3, if

$$\begin{aligned} &MSE(t_{s_6}^m)<MSE(t_6^m)\\& \frac{M_6^2}{L_6}>1-\varPhi \{\lambda _{n}C_y^2+\lambda _{rn}{\bar{Y}}^2C_y^2(1-\rho _{xy}^2)\} \end{aligned}$$

Under these conditions, the suggested MTSLREs and MTSPREs outperform the conventional and memory type estimators they are compared with.
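Each condition in this section is obtained by the same rearrangement. As an illustration, and assuming (as in the comparison with the CRE \(t_{r_1}\)) that the minimum MSE of the MTSPRE \(t_{s_4}^m\) is \({\bar{Y}}^2(1-M_4^2/L_4)\) and that the MSE of the MTRE \(t_{r_1}^m\) is the MSE of \(t_{r_1}\) multiplied by \(\varPhi\), the comparison with the MTRE under strategy 1 can be written out step by step:

$$\begin{aligned} &MSE(t_{s_4}^m)<MSE(t_{r_1}^m)\\& {\bar{Y}}^2\left( 1-\frac{M_4^2}{L_4}\right)<{\bar{Y}}^2\varPhi (\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y)\\& \frac{M_4^2}{L_4}>1-\varPhi (\lambda _rC_y^2+\lambda _nC_x^2-2\lambda _n\rho _{xy}C_xC_y) \end{aligned}$$

Dividing both sides by \({\bar{Y}}^2\) and moving the ratio \(M_4^2/L_4\) to the left gives the stated inequality; the remaining conditions differ only in the right-hand side.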

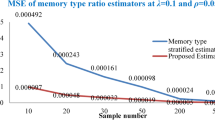

Simulation study

In this section, we compare the proposed MTSLREs and MTSPREs with the existing traditional and memory type estimators through a simulation study. The subsequent points give a description of the algorithm adopted in the simulation study.

-

(i).

Utilize the R software to draw a population of size \(N=1000\) from a bivariate normal distribution with the following parameters: \({\bar{Y}}=12\), \({\bar{X}}=17\), \(\sigma _y=18\), \(\sigma _x=28\), and varying levels of \(\rho _{xy}\), specifically 0.1, 0.5, and 0.9. Additionally, explore several values of \(\kappa\), including 0.1, 0.5, and 0.9.

-

(ii).

A total of 30,000 samples are drawn for each sample size: \(n=15\) with \(r=12\) or 14 responding units, \(n=150\) with \(r=120\) or 140 responding units, and \(n=300\) with \(r=240\) or 280 responding units.

-

(iii).

Based on the samples selected in the previous step, the 30,000 values of each estimator considered in this study are computed.

-

(iv).

The MSE of each estimator is computed utilizing Eq. (1), and the results are presented in Table 1 for the conventional estimators and in Table 2 for the memory type estimators, for varying levels of \(\rho _{xy}\).
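The steps above can be sketched in Python (the study itself used R). This is a minimal illustration under stated assumptions, not the authors' code: it uses 2,000 replications instead of 30,000, treats non-response as completely at random, and compares the mean of the responding units with a ratio-type estimator of the assumed textbook form \({\bar{y}}_r{\bar{X}}/{\bar{x}}_r\), which may differ from the estimators defined in this paper. The function names `draw_population` and `empirical_mse` are hypothetical.

```python
import math
import random
import statistics


def draw_population(size, mean_y, mean_x, sd_y, sd_x, rho, rng):
    """Draw (x, y) pairs from a bivariate normal distribution."""
    population = []
    for _ in range(size):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        x = mean_x + sd_x * z1
        # Mixing z1 and z2 in this way gives corr(x, y) = rho.
        y = mean_y + sd_y * (rho * z1 + math.sqrt(1.0 - rho**2) * z2)
        population.append((x, y))
    return population


def empirical_mse(population, n, r, replications, rng):
    """Monte Carlo MSE of two estimators of the population mean of y."""
    true_mean_y = statistics.fmean(y for _, y in population)
    true_mean_x = statistics.fmean(x for x, _ in population)
    sq_err_mean = 0.0
    sq_err_ratio = 0.0
    for _ in range(replications):
        sample = rng.sample(population, n)   # simple random sample without replacement
        responders = rng.sample(sample, r)   # r responding units (assumed random non-response)
        x_bar_r = statistics.fmean(x for x, _ in responders)
        y_bar_r = statistics.fmean(y for _, y in responders)
        ratio_est = y_bar_r * true_mean_x / x_bar_r   # assumed ratio-type form
        sq_err_mean += (y_bar_r - true_mean_y) ** 2
        sq_err_ratio += (ratio_est - true_mean_y) ** 2
    # Average squared deviation from the true population mean.
    return sq_err_mean / replications, sq_err_ratio / replications


rng = random.Random(2024)
population = draw_population(1000, 12.0, 17.0, 18.0, 28.0, 0.9, rng)
mse_mean, mse_ratio = empirical_mse(population, n=150, r=120, replications=2000, rng=rng)
print(f"MSE(mean of responders) = {mse_mean:.3f}")
print(f"MSE(ratio-type)         = {mse_ratio:.3f}")
```

With a strongly correlated auxiliary variable the ratio-type estimator should show a clearly smaller empirical MSE than the plain mean of the responding units, which is the kind of comparison reported in Tables 1 and 2.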

Based on the 30,000 simulation iterations, the MSE and RE are calculated, respectively, using the following equations:

The key findings of Tables 1 and 2 are outlined as follows:

-

1.

-

The MSEs of the adapted MTREs \(t_{r_i}^m,~i=1,2,3\) are smaller than the MSEs of the corresponding CREs \(t_{r_i}\) in every case. For example, under strategy 1, for \(\rho _{xy}=0.1\), \(n=150\), \(r=120\), and \(\kappa =0.1\), the MSE of MTRE \(t_{r_1}^m\) is 0.234 (see Table 2), whereas, with \(\rho _{xy}=0.1\), \(n=150\), and \(r=120\), the MSE of CRE \(t_{r_1}\) is 4.45 (see Table 1).

-

The MSEs of the adapted MTLREs \(t_{i}^m,~i=1,2,3\) are smaller than the MSEs of the corresponding CLREs \(t_{i}\) in every case. For example, under strategy 1, for \(\rho _{xy}=0.1\), \(n=150\), \(r=120\), and \(\kappa =0.1\), the MSE of MTLRE \(t_{1}^m\) is 0.124 (see Table 2), whereas, with \(\rho _{xy}=0.1\), \(n=150\), and \(r=120\), the MSE of CLRE \(t_{1}\) is 2.36 (see Table 1).

-

The MSEs of the adapted MTPREs \(t_{i}^m,~i=4,5,6\) are smaller than the MSEs of the corresponding CPREs \(t_{i}\) in every case. For example, under strategy 1, for \(\rho _{xy}=0.5\), \(n=300\), \(r=240\), and \(\kappa =0.5\), the MSE of MTPRE \(t_{4}^m\) is 0.275 (see Table 2), whereas, with \(\rho _{xy}=0.5\), \(n=300\), and \(r=240\), the MSE of CPRE \(t_{4}\) is 0.830 (see Table 1).

-

The MSEs of the suggested MTSLREs \(t_{s_i}^m,~i=1,2,3\) are smaller than the MSEs of the corresponding SLREs \(t_{s_i}\) in every case. For example, under strategy 1, for \(\rho _{xy}=0.9\), \(n=300\), \(r=240\), and \(\kappa =0.1\), the MSE of MTSLRE \(t_{s_1}^m\) is 0.021 (see Table 2), whereas, with \(\rho _{xy}=0.9\), \(n=300\), and \(r=240\), the MSE of SLRE \(t_{s_1}\) is 0.41 (see Table 1).

-

The MSEs of the suggested MTSPREs \(t_{s_i}^m,~i=4,5,6\) are smaller than the MSEs of the corresponding SPREs \(t_{s_i}\) in every case. For example, under strategy 1, for \(\rho _{xy}=0.9\), \(n=300\), \(r=280\), and \(\kappa =0.1\), the MSE of MTSPRE \(t_{s_4}^m\) is 0.012 (see Table 2), whereas, with \(\rho _{xy}=0.9\), \(n=300\), and \(r=280\), the MSE of SPRE \(t_{s_4}\) is 0.22 (see Table 1).

-

-

2.

From Table 2:

-

The MSEs of the proposed MTSLREs \(t_{s_i}^m,~i=1,2,3\) decrease in every case as the correlation coefficient \(\rho _{xy}\) increases from 0.1 to 0.9. That is, a more strongly correlated auxiliary variable increases the efficiency of the estimators. For example, with \(n=150\), \(r=120\), and \(\kappa =0.9\), the MSE values of the suggested MTSLRE \(t_{s_1}^m\) under strategy 1 are 1.909 and 0.713 for \(\rho _{xy}=0.1\) and 0.9, respectively. A comparable trend in the MSEs is evident under strategies 2 and 3.

-

The trend in the MSEs discussed in the above point can also be observed for the proposed MTSPREs \(t_{s_i}^m,~i=4,5,6\) and the adapted MTREs \(t_{r_i}^m,~i=1,2,3\), MTLREs and MTPREs \(t_{i}^m,~i=1,2,\ldots ,6\) in every case.

-

For each given value of the correlation coefficient \(\rho _{xy}\), the MSE of the adapted and suggested memory type estimators decreases as the sample size \(n\) increases from 15 to 300. For example, with \(\rho _{xy}=0.1\) and \(\kappa =0.1\), the MSE values of the suggested MTSLRE \(t_{s_1}^m\) under strategy 1 are 1.267 and 0.054 for \(n=15\) and 300, respectively.

-

For each given value of the correlation coefficient \(\rho _{xy}\) and sample size \(n\), the MSE of the adapted and suggested memory type estimators decreases as the number of responding units \(r\) increases. For example, with \(\rho _{xy}=0.1\), \(n=300\), and \(\kappa =0.1\), the MSE values of the suggested MTSLRE \(t_{s_1}^m\) under strategy 1 are 0.054 and 0.044 for \(r=240\) and 280, respectively.

-

The performance of the adapted and proposed memory type estimators improves through the smoothing parameter \(\kappa\), which plays a crucial role in minimizing the MSE by assigning appropriate weights to both previous and current observations.

-

Furthermore, as \(\kappa\) increases, the current information is given more weight relative to the previously known information, resulting in a higher MSE of the memory type estimators.
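The effect of \(\kappa\) described in the last two points can be illustrated with a short Python sketch. It assumes the usual EWMA recursion, in which the new statistic is \(\kappa\) times the current value plus \((1-\kappa)\) times the previous statistic, and the well-known limiting variance factor \(\kappa /(2-\kappa )\) for independent inputs. Identifying this factor with \(\varPhi\) is an assumption made here, although it agrees with the MSE ratios quoted above (for \(\kappa =0.1\), 0.234 against 4.45). The names `ewma` and `phi` are hypothetical.

```python
import random
import statistics


def ewma(values, kappa, start):
    """Return the EWMA sequence Z_t = kappa * value_t + (1 - kappa) * Z_{t-1}."""
    z = start
    out = []
    for v in values:
        z = kappa * v + (1.0 - kappa) * z
        out.append(z)
    return out


def phi(kappa):
    """Limiting variance factor of the EWMA for independent inputs (assumed form)."""
    return kappa / (2.0 - kappa)


rng = random.Random(7)
for kappa in (0.1, 0.5, 0.9):
    finals = []
    for _ in range(4000):
        # 60 independent survey occasions, each contributing a mean with variance 1
        means = [rng.gauss(0.0, 1.0) for _ in range(60)]
        finals.append(ewma(means, kappa, start=0.0)[-1])
    # Compare the theoretical factor with the simulated variance of the last EWMA value
    print(kappa, round(phi(kappa), 3), round(statistics.pvariance(finals), 3))
```

A small \(\kappa\) averages over many past occasions and therefore shrinks the variance strongly, while a \(\kappa\) close to 1 relies almost entirely on the current occasion, so the factor approaches 1.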

-

Applications

To demonstrate the implementation of the suggested memory type imputation methods and the resultant estimators, we conducted an empirical study using two real datasets. The assessment and conclusions are based on the MSE criterion. The descriptions of both datasets are given below:

-

Dataset 1:

The dataset, sourced from Singh27 on pages 1116–1118, reports the catches of marine recreational fishermen by species group and year on the Atlantic and Gulf coasts over 1992–1995. For this article, the study variable is the number of fish caught in 1995, while the auxiliary variable is the number of fish caught in 1994. The main characteristics of this dataset are: \(N=69\), \(n=27\), \(r=21\), \({\bar{X}}=4954.435\), \({\bar{Y}}=4514.899\), \(S_x^2=49829270\), \(S_y^2=37199578\), \(\rho _{xy}=0.9601\), and \(\kappa =0.2\). The density and box plots for the study and auxiliary variables in this dataset are depicted in Figs. 1 and 2, respectively.

-

Dataset 2:

The dataset is sourced from Singh27 on page 1129 and is based on the number of immigrants admitted to the USA. In this dataset, the study variable is the number of immigrants admitted to the USA in 1996, while the number of immigrants admitted to the USA in 1995 is taken as the auxiliary variable. The characteristics of this dataset are summarized as follows: \(N=51\), \(n=20\), \(r=15\), \({\bar{X}}=13903.24\), \({\bar{Y}}=17702.76\), \(S_x^2= 915720373\), \(S_y^2=1418008209\), \(\rho _{xy}=0.99\), and \(\kappa =0.3\). The density and box plots for the study and auxiliary variables in this dataset are depicted in Figs. 3 and 4, respectively.

The results reported in Table 3 are discussed in the following points:

-

1.

The results of dataset 1 exhibit that:

-

The adapted MTRIMs and the resultant MTREs \(t_{r_i}^m,~i=1,2,3\) under all cases dominate the corresponding CRIMs and the resultant CREs \(t_{r_i},~i=1,2,3\). For instance, in strategy 2, the MSE of the MTRE \(t_{r_2}^m\) is 11933, while the MSE of the CRE \(t_{r_2}\) is 107401.

-

The adapted MTLRIMs and the resultant MTLREs \(t_{i}^m,~i=1,2,3\) under all cases dominate the corresponding CLRIMs and the resultant CLREs \(t_{i},~i=1,2,3\). For instance, in strategy 1, the MSE of the MTLRE \(t_{1}^m\) is 51026, while the MSE of the CLRE \(t_{1}\) is 459234.

-

The adapted MTPRIMs and the resultant MTPREs \(t_{i}^m,~i=4,5,6\) under all cases dominate the corresponding CPRIMs and the resultant CPREs \(t_{i},~i=4,5,6\). For instance, in strategy 3, the MSE of the MTPRE \(t_{6}^m\) is 96602, while the MSE of the CPRE \(t_{6}\) is 869424.

-

The proposed MTSLRIMs and the resultant MTSLREs \(t_{s_i}^m,~i=1,2,3\) under all cases dominate the corresponding SLRIMs and the resultant SLREs \(t_{s_i},~i=1,2,3\). For instance, in strategy 1, the MSE of the MTSLRE \(t_{s_1}^m\) is 50898, while the MSE of the SLRE \(t_{s_1}\) is 449116.

-

The proposed MTSPRIMs and the resultant MTSPREs \(t_{s_i}^m,~i=4,5,6\) under all cases dominate the corresponding SPRIMs and the resultant SPREs \(t_{s_i},~i=4,5,6\). For instance, in strategy 2, the MSE of the MTSPRE \(t_{s_5}^m\) is 10708, while the MSE of the SPRE \(t_{s_5}\) is 96374.

-

The memory type estimators attain lower MSEs than their corresponding conventional counterparts under each strategy.

-

-

2.

The results of dataset 2 exhibit a tendency similar to that described in the above points.
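Two of the dataset 1 figures quoted above can be cross-checked directly from the reported summary statistics. The Python sketch below uses the MSE expressions that appear in the efficiency comparisons, \({\bar{Y}}^2\lambda _r(C_y^2+C_x^2-2\rho _{xy}C_xC_y)\) for the CRE \(t_{r_2}\) and \({\bar{Y}}^2C_y^2(\lambda _r-\lambda _n\rho _{xy}^2)\) for the CLRE \(t_{1}\), with the memory type versions obtained by multiplying by \(\varPhi\). The definitions \(\lambda _r=1/r-1/N\), \(\lambda _n=1/n-1/N\), and \(\varPhi =\kappa /(2-\kappa )\) are assumptions, since they are not restated in this section.

```python
import math

# Dataset 1 summary statistics as reported in the text
N, n, r = 69, 27, 21
mean_x, mean_y = 4954.435, 4514.899
var_x, var_y = 49829270.0, 37199578.0
rho, kappa = 0.9601, 0.2

# Assumed definitions (not restated in this section)
lam_r = 1.0 / r - 1.0 / N
lam_n = 1.0 / n - 1.0 / N
phi = kappa / (2.0 - kappa)

# Squared coefficients of variation and their cross term
cy2 = var_y / mean_y**2
cx2 = var_x / mean_x**2
cxcy = math.sqrt(cx2 * cy2)

# Conventional estimators: ratio (strategy 2) and linear regression (strategy 1)
mse_cre_2 = mean_y**2 * lam_r * (cy2 + cx2 - 2.0 * rho * cxcy)
mse_clre_1 = mean_y**2 * cy2 * (lam_r - lam_n * rho**2)

# Memory type counterparts: the same expressions scaled by phi
mse_mtre_2 = phi * mse_cre_2
mse_mtlre_1 = phi * mse_clre_1

print(f"CRE   t_r2  : {mse_cre_2:.0f}")
print(f"MTRE  t_r2^m: {mse_mtre_2:.0f}")
print(f"CLRE  t_1   : {mse_clre_1:.0f}")
print(f"MTLRE t_1^m : {mse_mtlre_1:.0f}")
```

Under these assumptions the computed values should land close to the MSEs quoted for dataset 1. For \(\kappa =0.2\) the factor is 1/9, which matches the ratio between the memory type and conventional MSEs reported above (for example 11933 against 107401).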

Conclusion

EWMA is commonly utilized in signal processing, finance, and quality control, among other fields, to analyze time-series data, identify trends, and filter out noise. It provides an adaptive and flexible way to estimate averages, making it particularly suitable for scenarios where the importance of observations diminishes over time. In this study, we have adapted some fundamental imputation methods and the corresponding estimators, proposed an optimal class of MTSLRIMs and MTSPRIMs with the resultant estimators, and studied their characteristics. The efficiency conditions have been developed by comparing the proposed memory type estimators with the conventional and adapted memory type estimators. The theoretical results have been evaluated through a comprehensive simulation study and through two real datasets. The computational results show that the proposed optimal class of memory type imputation methods and the resultant estimators dominate the conventional and adapted memory type imputation methods and their estimators. Hence, the proposed optimal class of MTSLRIMs and MTSPRIMs, along with the resultant MTSLREs and MTSPREs, can be considered for estimating the population mean in the presence of missing data in time-based surveys.

In the near future, the proposed optimal class of MTSLRIMs and MTSPRIMs, along with their corresponding estimators, may be examined under other sampling designs such as stratified random sampling, ranked set sampling, and stratified ranked set sampling.

Data availability

All data generated or analysed during this study are included in this article.

References

Rubin, D. B. Inference and missing data. Biometrika 63(3), 581–592 (1976).

Heitjan, D. F. & Basu, S. Distinguishing “missing at random” and “missing completely at random”. Am. Stat. 50, 207–213 (1996).

Sohail, M. U., Shabbir, J. & Ahmed, S. A class of ratio type estimators for imputing the missing values under rank set sampling. J. Stat. Theory Pract. 12, 704–717 (2018).

Sohail, M. U., Shabbir, J. & Sohil, F. Imputation of missing values by using raw moments. Stat. Transit. N. Ser. 20(1), 21–40 (2019).

Singh, G. N., Jaiswal, A. K., Singh, C. & Usman, M. An improved alternative method of imputation for missing data in survey sampling. J. Stat. Appl. Probab. 11(2), 535–543 (2022).

Prasad, S. & Yadav, V. K. Imputation of missing data through product type exponential methods in sampling theory. Rev. Colomb. Estad. 46(1), 111–127 (2023).

Bhushan, S., Kumar, A., Pandey, A. P. & Singh, S. Estimation of population mean in presence of missing data under simple random sampling. Commun. Stat. Simul. Comput. 52(12), 6048–6069 (2023).

Bhushan, S. & Kumar, A. Imputation of missing data using multi auxiliary information under ranked set sampling. Commun. Stat. Simul. Comput. https://doi.org/10.1080/03610918.2023.2288796 (2023).

Kumar, A., Bhushan, S., Emam, W., Tashkandy, Y. & Khan, M. J. S. Novel logarithmic imputation procedures using multi auxiliary information under ranked set sampling. Sci. Rep. 14(1), 18027 (2024).

Rehman, S. A., Shabbir, J. & Al-essa, L. A. On the development of survey methods for novel mean imputation and its application to abalone data. Heliyon 10(11), e31423 (2024).

Bhushan, S., Kumar, A. & Pokhrel, R. Synthetic imputation methods for domain mean under simple random sampling. Frankl. Open 7, 100101 (2024).

Bhushan, S. & Pandey, S. Optimal random non response framework for mean estimation on current occasion. Commun. Stat. Theory Methods https://doi.org/10.1080/03610926.2024.2330676 (2024).

Lee, H., Rancourt, E. & Sarndal, C. E. Experiments with variance estimation from survey data with imputed values. J. Off. Stat. 10, 231–243 (1994).

Diana, G. & Perri, P. F. Improved estimators of the population mean for missing data. Commun. Stat. Theory Methods 39, 3245–3251 (2010).

Singh, S. & Deo, B. Imputation by power transformation. Stat. Pap. 44, 555–579 (2003).

Searls, D. T. The utilization of a known coefficient of variation in the estimation procedure. J. Amer. Statist. Assoc. 59, 1225–1226 (1964).

Bhushan, S. & Pandey, A. P. Optimal imputation of missing data for estimation of population mean. J. Stat. Manag. Syst. 19(6), 755–769 (2016).

Bhushan, S. & Pandey, A. P. Optimality of ratio type estimation methods for population mean in the presence of missing data. Commun. Stat. Theory Methods 47(11), 2576–2589 (2018).

Noor-ul-Amin, M. Memory type estimators of population mean using exponentially weighted moving averages for time scaled surveys. Commun. Stat. Theory Methods 50(12), 2747–2758 (2021).

Aslam, I., Noor-ul-Amin, M., Yasmeen, U. & Hanif, M. Memory type ratio and product estimators in stratified sampling. J. Reliab. Stat. Stud. 13(1), 1–20 (2020).

Aslam, I., Noor-ul-Amin, M., Hanif, M. & Sharma, P. Memory type ratio and product estimators under ranked-based sampling scheme. Commun. Stat. Theory Methods 52(4), 1155–1177 (2021).

Qureshi, M. N., Tariq, M. U. & Hanif, M. Memory-type ratio and product estimators for population variance using exponentially weighted moving averages for time-scaled surveys. Commun. Stat. Simul. Comput. 1–10 (2022).

Qureshi, M. N. et al. Memory-type variance estimators using exponentially weighted moving average statistic in presence of measurement error for time-scaled surveys. PLoS ONE 18(11), e0277697 (2023).

Bhushan, S., Kumar, A., Al-Omari, A. I. & Alomani, G. A. Mean estimation for time-based surveys using memory-type logarithmic estimators. Mathematics 11(9), 2125 (2023).

Kumar, A., Emam, W. & Tashkandy, Y. Memory type general class of estimators for population variance under simple random sampling. Heliyon 10(16), e36090 (2024).

Roberts, S. Control chart tests based on geometric moving averages. Technometrics 1(3), 239–250 (1959).

Singh, S. Advanced Sampling Theory with Applications: How Michael Selected Amy Vol. 2 (Kluwer, The Netherlands, 2003).

Acknowledgements

The authors are extremely grateful to the panel of reviewers and the Editor-in-Chief for their careful insights and constructive suggestions which led to considerable improvement of the paper.

This work was supported by the Deanship of Scientific Research, Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia [Grant No. KFU241756].

Author information

Contributions

A.K.: Writing original manuscript, methodology, simulation study, software; S.B.: Writing-review and editing; A.M.A.: Project administration, financial support.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kumar, A., Bhushan, S. & Alomair, A.M. Optimal class of memory type imputation methods for time-based surveys using EWMA statistics. Sci Rep 14, 25740 (2024). https://doi.org/10.1038/s41598-024-73518-1

DOI: https://doi.org/10.1038/s41598-024-73518-1
