Abstract

The technique of extracting different distinguishing features by locating different part regions to achieve fine-grained visual classification (FGVC) has made significant improvements. Utilizing attention mechanisms for feature extraction has become one of the mainstream methods in computer vision, but these methods have certain limitations. They typically focus on the most discriminative regions and directly combine the features of these parts, neglecting other less prominent yet still discriminative regions. Additionally, these methods may not fully explore the intrinsic connections between higher-order and lower-order features to optimize model classification performance. By considering the potential relationships between different higher-order feature representations in the object image, we can enable the integrated higher-order features to contribute more significantly to the model’s classification decision-making capabilities. To this end, we propose a saliency feature suppression and cross-feature fusion network model (SFSCF-Net) to explore the interaction learning between different higher-order feature representations. These include (1) an object-level image generator (OIG): the intersection of the output feature maps of the last two convolutional blocks of the backbone network is used as an object mask and mapped to the original image for cropping to obtain an object-level image, which can effectively reduce the interference caused by complex backgrounds. (2) A saliency feature suppression module (SFSM): the most distinguishing part of the object image is obtained by a feature extractor, and the part is masked by a two-dimensional suppression method, which improves the accuracy of feature suppression. (3) A cross-feature fusion method (CFM) based on inter-layer interaction: the output feature maps of different network layers are interactively integrated to obtain high-dimensional features, and then the high-dimensional features are channel compressed to obtain the inter-layer interaction feature representation, which enriches the output feature semantic information. The proposed SFSCF-Net can be trained end-to-end and achieves state-of-the-art or competitive results on four FGVC benchmark datasets.

Similar content being viewed by others

Introduction

Fine-Grained Visual Classification (FGVC), which focuses on accurately distinguishing subcategories within broader categories (e.g., birds, cars), has attracted significant attention due to its practical applications in areas such as smart traffic, smart retail, and security detection. However, fine-grained images often exhibit small intra-class variance and large inter-class variance, making subclass differences subtle and typically identifiable only through expert knowledge. As a result, fine-grained visual classification remains a highly challenging research task.

To address the challenges above, most researchers have used location-based recognition methods for similar object differentiation. Some early works1,2,3,4,5 mainly relied on predefined bounding boxes and partial annotations to capture the distinguishable regions, which are called strongly supervised learning methods. Although these methods are highly efficient, they are not very practical when the additional annotation information in use is achieved by a large number of manual operations. With the further development of deep learning techniques, weakly supervised learning methods6,7,8,9,10,11,12,13 have been widely studied and used, which do not rely on or rely on less additional annotation information to perform the localization recognition task. These methods eliminate the need for expensive annotations and enable the construction of localization sub-networks to locate the most discriminative parts through attention mechanisms, feature clustering fusion, and other methods. However, existing weakly supervised learning methods often focus on the most discriminative parts, overlooking other features that could be beneficial for classification. This can lead to the model failing to fully utilize all available information, potentially impacting the final classification accuracy and model robustness.

Fine-grained visual datasets possess a more complex background noise, while there are problems where the target object is not a large part of the overall image. For example, in a bird image, the bird object only occupies 10% of the image area, while the background noise occupies 90%, which makes the recognition of this subcategory more difficult, as shown in Fig. 1a. On the other hand, it is found that there are numerous longitudinally growing object maps in the bird and dog datasets, which makes it easy for the single-dimensional feature suppression method to mask other distinguishing regions of the objects, as shown in Fig. 1b, Suppressing features along a single dimension can obscure distinctive parts of birds and dogs, such as a bird’s feathers and beak, which are essential local features. Thereby making it challenging for the model to focus on the critical features of the target objects. At the same time, there are some difficulties in the cross-fusion of higher-order features between different network layers.

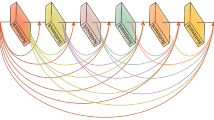

Based on the above questions, this paper proposed a salient feature suppression and cross-feature fusion network model (SFSCF-Net). The overview of the model is shown in Fig. 2. Our model employs three different methods and two training steps to construct the model framework. An object-level image generator (OIG) is used in the first training step to study the overall features of the object, and the feature mapping of the original image extracted by the backbone network needs to be used to obtain the object bounding box information, and the cropped object-level image is used as the input to the second training step. The fine scale of the object image is very helpful for classification because it contains the structural features and fine-grained features of the target, while also filtering the interference of background noise. In the second training stage, we divide the backbone network into three training stages, each of which outputs the feature map extracted in the current network stage and performs two-dimensional salient feature suppression (SFSM) on the feature map, which is then mapped to the input image and used as the input image for the next training stage until the last stage of the network. Feature information of different scales and differentiation is output at different network stages, and we apply a cross-feature fusion method (CFM) to cross-fuse feature information from different stages, which is useful for fine-grained feature learning.

The contributions of our model are summarized as follows:

-

1.

We propose an object-level image generator (OIG), which uses the output features of the last two layers of the network layer as a mask to map out the object bounding box of the original image, which can be cropped to obtain an object-level image.

-

2.

We demonstrate the effectiveness of the salient feature suppression module (SFSM) in improving the classification performance of the model, which breaks the bottleneck of single-dimensional feature suppression and makes the suppression region more accurate with two-dimensional suppression, while enabling more distinguishable regions to stand out.

-

3.

We adopt a cross-feature fusion method (CFM), which uses the principle of bilinear pooling to act on different stages of the network and treats the convolutional layers of each stage as local feature extractors. Then, the features from different stages of the convolutional layers are cross-fused by element-level multiplication to model the inter-layer interaction of local features, which enhances the feature representation and diversifies the features while reducing the information loss during the training process.

Related works

In this section, we describe the most representative methods related to our approach.

Fine-grained feature learning

In the FGVC task, identifying distinguishing local regions across different categories helps establish the relationships between regions and object instances while reducing the impact of distracting factors, such as variations in object poses and complex backgrounds. Consequently, approaches based on localization-classification sub-networks have been widely studied and applied. The one using more manually labeled information is called the localization-classification subnetwork method with strong supervised information. such as detailed part level, keypoint-level and attribute annotations14,15,16. These methods learn to localize semantic parts or keypoints and extract corresponding features which are used as a holistic representation for final classification. With the further development of deep learning technology, more methods start to use less labeled information to accomplish the task of localization and classification, and these methods are called localization-classification subnetwork methods with weakly supervised information. For example, WCCN17 generates class activation maps to identify regions of interest, which are then fed into the classifier and corrected through multiple instance learning. WSOD218 scores virtual candidate boxes through Top-Down and Bottom-Up approaches, with the virtual box with the highest score serving as the target output for the next layer. MIST19 refines regions of interest through self-training, while WSCL20 improves the features of regions of interest through data enhancement and contrastive learning.

Strongly supervised classification methods

Classification methods based on strongly supervised information require the use of additional labeled bounding boxes or key site points to make the model more focused on feature differences between local regions. Zhang et al.1 proposed Part R-CNN to predict fine-grained categories by training to capture block features from different parts of an image and then concatenate them as feature vectors. S. Branson et al.2 proposed a pose normalized fine-grained image classification architecture (Pose Normalized CNN), which first learns an estimate of the object’s pose for computing local features of the object, which are computed by using a deep convolutional neural network to localize and normalize image blocks, resulting in a more discriminative feature representation. Huang et al.3 proposed a new local stacking network architecture (Part-stack CNN) to explain the learning process of fine-grained recognition by modeling subtle differences with object localization. Lin et al.4 proposed a Deep Localization, Alignment and Classification Network (Deep LAC), which combines localization, alignment and classification in a deep neural network, and proposed classification and alignment functions that can adaptively reduce and alignment errors by a threshold connectivity function. After back-propagation of the network, it can update the local localization results. Wei et al.5 proposed Mask-CNN, which uses a full convolutional network (FCN) to learn a localization-based segmentation model, combined with local annotation training to obtain the minimum outer rectangle of different regions, and cropped and extracted features as a feature representation of the whole image.

Weakly supervised classification methods

Among weakly supervised information classification methods, much of the recent work has focused on the study and application of attention mechanisms. For example, Fu et al.8 proposed a recurrent attentional convolutional neural network (RA-CNN) that recursively learns discriminative region feature representations at multiple scales. Lopez et al.10 proposed a modular attention mechanism that applies attention to the activation of convolutional features rather than at the image level. This approach enables the network to focus more on low-level feature activations and use these activations to update the optimized weight information. He et al.11 proposed a multiscale, multigranularity deep reinforcement learning approach to learn multigranularity discriminative region attention and multiscale based region feature representation. he et al.12 proposed a weakly supervised partial selection method with spatial constraints. By jointly using saliency extraction and co-segmentation, the entire object detector is learned to automatically localize the target, and then the constraint information is used to select distinguishable parts. tan et al.13 proposed a multi-scale selective hierarchical hyperbolic pooling method to capture feature complementary information in multi-scale interaction structures with interactive intra- and inter-layer features. Zhang et al. Chang et al.21 found that coarse-level label prediction can exacerbate fine-grained feature learning, while fine-grained features can better improve the learning of coarse-level classifiers. Thus, a level-specific classification head was designed to unlock coarse-grained features with fine-grained features. Huang et al.22 proposed an interpretable attention-guided network for fine-grained visual classification. It includes an attention-guided framework, a progressive training mechanism, and an interpretable FGVC method. Xie et al.23 found a weakly supervised spatial group attention network for fine-grained bird image recognition, which applied MoEx data augmentation in the feature space to provide more training data for the weakly supervised network. and the SGA facilitated the weakly supervised learning network to generate an attention factor for every spatial location to extract more discriminative image feature. Guo et al.24 proposed an enhanced image synthesis strategy called Attentive Cutout, which purposefully conceals informative details by performing attention-guided content sampling on the high responses from channels. Our proposed method is significantly different from previous methods. Liu et al.25 proposed a weakly supervised vision localization method that leverages multi-scale feature aggregation. This method aggregates feature maps at different semantic levels and uses a weakly supervised triplet ranking loss to learn discriminative features, while also encouraging the repeatability of keypoint features for image representation.

Higher-order coding-based methods

The methods based on higher-order coding mainly enhance the expression of features by transforming convolutional neural network features to higher order and then perform classification, mainly bilinear models, kernel fusion, etc. Lin et al.26 proposed a bilinear pooling model, which consists of two feature extraction networks and obtains bilinear features by computing the outer product of different spatial locations of the feature map and performing pooling operations. Kong et al.27 proposed to represent the covariance features by a matrix and applied a recurrent bilinear classifier to perform the classification. Yu et al.28 proposed a cross-layer bilinear pooling method to obtain the local feature representation between different network layers. Secondly, a new inter-layer bilinear pooling framework was also proposed to integrate the output features of multiple network layers to enhance the feature representation. Cai et al.29 proposed a higher-order integration framework with hierarchical convolutional activation for fine-grained image classification. By using the convolutional activation as a local descriptor, the hierarchical convolutional activation can be used as a representation of local parts from different scales. And a polynomial kernel-based predictor is proposed to capture the higher-order statistics of convolutional activation for modeling partial interactions. Liu et al.30 proposed a novel recursive multi-scale channel-spatial attention module, which can capture channel-wise and spatial-wise attention of multiple scales and accordingly refine the deep feature maps to better correspond to the visual attention. Liu et al.31 found a self-supervised feature regularization method on deep representation learning for visual classification, which can incorporate inter-class correlation into features and also mitigate intra-class feature variations. Wang et al.32 proposed a visual recognition method based on deep nearest centroids, achieving classification decisions by automatically mining the potential distributions of each class and local salient patterns. Yan et al.33 introduced a global-local representation granularity framework, which uses a designed global-local encoder to preserve finer local features through local keyframes. Liu et al.34 developed a three-part feature enhancement pyramid network for dense prediction, which can be integrated into any existing bottom-up backbone network to generate enhanced multi-scale feature representations. Yu et al.35 proposed a lightweight high-resolution network, introducing an efficient conditional channel weighting unit to replace the expensive 1 × 1 convolutions in the shuffle block, enabling weight interaction across channels and spatial dimensions. Zheng et al.36 presented a multi-layer multi-attention fusion network for remote sensing image scene classification, incorporating a multi-layer adaptive feature fusion module to facilitate information exchange between different layers, enhancing the network’s multi-scale representation capabilities.

Methods

This section describes the three methods proposed above in detail. The salient feature suppression and cross-feature fusion network model consists of an object-level image generator, a salient feature suppression module, and an inter-layer cross-feature fusion method: (1) an object-level image generator (OIG), which aims to locate the target object and eliminate the interference of background noise. (2) A salient feature suppression module (SFSM) designed to suppress the most salient features extracted by the current layer. Compared with the suppression method in Chap. 3, this method increases the candidate region dimension to two dimensions, locating the salient features more accurately while preserving other potentially distinguishing regions as much as possible. (3) A cross-feature fusion method (CFM) between layers, which aims to use the bilinear pooling property to cross-fuse features from different network layers to make the output feature information more diverse and increase the generalization ability of the model. Our network framework is illustrated in Fig. 2. We employ ResNet50 as the backbone. For an input image \(\:{F}_{in}\in\:{\mathbb{R}}^{C\times\:H\times\:W}\), the initial features \(\:{F}_{0}\) are first extracted through a convolutional neural network. The original features \(\:{F}_{0}\) are then processed through stages 1–3 to extract features related to the target object, and the results are fed into the Salient Feature Suppression Module (SFSM) to obtain the feature suppression map \(\:{F}_{SFSM}\). Next, \(\:{F}_{SFSM}\) undergoes stage 4 for deep feature extraction, followed by another round of salient feature suppression. The features generated by stages 3, 4, and 5 are passed into the Cross-Feature Mapping (CFM) module to produce attention maps. These attention maps are fused with the input feature maps through element-wise multiplication, generating new feature representations. Finally, the classifier results from different stages are combined through weighted summation to produce the final prediction.

Object-level image generator

The main method proposed in this section is implemented for the objects in the image, so it is necessary to remove as much noisy background information other than the objects in the original image to obtain the object image before starting. After being inspired by TBMSL37, the original image is fed into the feature extractor and the output \({F_0}\) is obtained from the last convolution layer, and all feature maps in \({F_0}\) are summed in the channel dimension to obtain the feature map A. After that, the average value of A is calculated to obtain \(\bar {A}\). Finally, a mask map \({B_1}\) is constructed to represent the regions larger than the average activation value.

According to the empirical knowledge of TBMSL, multilayer integration will lead to more accurate object localization, so after experimental arguments it is found that the object usually lies in the maximum connected component of \({\widetilde {M}_{conv\_5c}}\). Therefore, the mask mapping \({B_2}\) of the output of the penultimate convolutional block in the feature extraction network is obtained by Eq. (1) and Eq. (2). Finally, the intersection B of \({B_1}\) and \({B_2}\) is obtained, which is shown in the following equation:

The original image is cropped and resized to obtain an object-centered image of the object by mapping the largest connected region in mask B. The object-centered image can cover the largest area of the object, which facilitates more accurate localization of salient higher-order features because it filters out noisy background regions. Then, the object image is used as input for subsequent tasks to facilitate complementary fusion learning of higher-order features.

Salient feature suppression module

First, we take the object image obtained by the first method as the input to the salient feature suppression module and through the feature extraction network obtain its feature map \({F_i} \in {R^{H \times W \times C}},i \in [1,3]\). i indicates the current training phase and the value of i is detailed in the subsequent ablation experiments. The feature map is visualized by the Grad-CAM algorithm and enables a more intuitive observation of the highest thermally activation region of this image and also the region where the model should be feature suppressed. The feature map is then manipulated in two similar parallel steps. In the first step, the F is divided equally into parts along the dimension, each part being denoted as \(F_{i}^{{h(j)}} \in {R^{(\frac{H}{M}) \times W \times C}},j \in [1,M]\). A simple 1 × 1 convolution operation is then used to perform channel aggregation, with the aim of obtaining the significance level of each part. This changes the number of channels of the segmented feature map to 1. After activation by normalization and the \(ReLU\) activation function, we obtain a list of standard salient scores for each part \({q_j}\):

where \(Con{v_{1 \times 1}}\) shares parameters between different parts to discriminate the degree of significance of different parts. Then, \({q_j}\) is globally averaged pooled to obtain the mean of each saliency score list \(\bar {q}\) and normalized by the \(softmax\) function to obtain \({F_i}\) based on the j the row saliency factor of M rows \(P_{i}^{{h(j)}}\):

At this point we have completed the first step of the module, which identifies the row in the feature map where the most salient features are located. The next step is to determine the column where the most salient features are located, which is what the second step does. The second step simply changes the cut direction of the feature map from H dimension to W dimension, and the rest of the operation is the same as the first step, which divides \({F_i}\) into M parts along the W dimension and each part is denoted as \(F_{i}^{{w(k)}} \in {R^{(\frac{H}{M}) \times W \times C}},k \in [1,M]\), so as to obtain the kth column significance factor \(P_{i}^{{w(k)}}\) of \({F_i}\) based on M. Based on the obtained \(P_{i}^{{h(j)}}\) and \(P_{i}^{{w(k)}}\), a unique salient region \(Z_{i}^{{j,k}}\) can be determined, as shown in Fig. 3. Afterwards, a suppression mask \({S_i}\) is generated for suppressing the salient features, which sets the pixel values falling in the \(Z_{i}^{{j,k}}\) region to 0 and the rest to 1. Finally, \({S_i}\) is multiplied with the current layer feature map \({F_i}\) at the pixel level to obtain the salient feature suppression output \({F_{i+1}}.\)

\(e_{i}^{{a,b}}\) represents the pixel value at each position in the mask, a represents the row and a represents the column. The module can be represented in the form of a function as follows:

Our model performs salient feature suppression twice, the second time on top of the first, which ensures the complementarity of higher-order features. For the number of times the salient feature suppression module is used, Previous studies have reported that after using it two times, the model is able to sufficiently mine the distinguishable and complementary higher-order features, and if the module is further added for training, the model may not have any further performance improvement, but will increase the number of computational parameters. These findings are also verified in the later ablation experiments.

Cross-feature fusion method

Before presenting the specific methods it is important to first introduce the decomposition bilinear pooling technique. This technique was proposed by Kim et al.38 and applied to the visual question answering task, which utilizes the factorial decomposition bilinear pooling of the Adama product to achieve an effective attention mechanism for multimodal learning. Suppose an image is extracted with features by CNN to output the feature map \(F \in {R^{h \times w \times c}}\), where h is the height, w is the width, and c is the number of channels. This method represents the channel descriptor at position F in space as \(f={[{f_1},{f_2}, \ldots ,{f_c}]^T}\). A fully bilinear model is then defined as follows:

The formula \({W_i} \in {R^{c \times c}}\) is a projection matrix and \({z_i}\) is the output of the bilinear model. To obtain an o-dimensional output z, it needs to learn \(W=[{W_1},{W_2},.,{W_o}] \in {R^{c \times c \times o}}\). According to the matrix decomposition in39, the projection matrix in Eq. 10 can be decomposed into two one-rank vectors:

In the above equation, \({U_i} \in {R^c}\), \({V_i} \in {R^c}\). So the output characteristic \(z \in {R^o}\) can be derived from the following equation:

In the above equation, \({U_i} \in {R^{c \times d}}\) and \({V_i} \in {R^{c \times d}}\) are the projection matrices, \(P \in {R^{d \times o}}\) is the classification matrix, \(z={P^T}({U^T}f \circ {V^T}f)\) is the Hadamard Product, and d is the hyperparameter that determines the dimensionality of the joint embedding.

Our proposed cross-feature fusion method employs a cross-layer bilinear pooling approach that treats each convolutional layer in each network phase as a local feature extractor. Then, the features from different convolutional layers are cross-fused by element-level multiplication to model the inter-layer interaction of local features. Thus, Eq. 12 can be rewritten as:

where f and g denote feature maps from different convolutional layers at the same spatial location, and features from different convolutional layers can be extended to high-dimensional space by independent linear mapping.

The cross-layer bilinear pooling method has superior feature interaction capability than the traditional bilinear pooling method. The inter-layer feature interaction between different convolutional layers is utilized to help capture local feature attributes that are distinguishable between fine-grained subcategories. Thus, the cross-feature fusion approach extends cross-layer bilinear pooling to integrate more intermediate convolutional layers, thus further improving the feature representation.

Specifically, the cross-feature fusion method is divided into a feature fusion phase and a classification phase, and the formulas for each of the two phases are as follows:

where \({z_{fus}}\) denotes the feature fusion output and z denotes the classification output. To better model the inter-layer feature fusion, the fused features are obtained by connecting multiple regions of cross-layer bilinear pooling. Thus, the final output of cross-feature fusion \({z_{CFM}}\) can be obtained as follows:

Where P is the classification matrix, U, V and S respectively represent the projection matrices of the convolutional layer features f, g and q for each network stage. The model classification performance is obtained by expanding \({z_{CFM}}\) with full connectivity and calculating it by the classifier.

Results

In this chapter, we will show some comprehensive comparison experiments and ablation experiments to validate the performance of SFSCF-Net. Our experiments are conducted on four public FGVC benchmark datasets, including CUB-200-201140, FGVC-Aircraft41, Stanford Cars42, and Stanford Dogs43, as shown in Table 1.

Parameter configuration

In this section, various experiments are conducted on four public fine-grained visual classification datasets, including CUB-200-2011, FGVC- Aircraft, Stanford Cars, and Stanford Dogs.

The model was evaluated using Pytorch with version > 1.5 on a GTX1080ti GPU for all experiments and using VGG-1644 and ResNet5045 as the backbone network. To obtain the best performance, the model sets the number of branches in the network phase to 3 and the number of groupings in the salient feature suppression module M to 4. The category labels of the images are uniquely annotated for training. During training, the input image is resized to 550 × 550 and the resized image is randomly cropped to 448 × 448. To expand the number of images in the training set, the model applies a random vertical flip. In the testing process, the input image is also resized to 550 × 550, and unlike the training process, the resized image is cropped from the center to 448 × 448.

The model uses SGD as the gradient descent optimizer and batch normalization as the regularize. Also, the learning rate of the added convolutional layers in the insertion module is initialized to 0.002 and annealed according to the cosine during the training process. The learning rate of the pre-trained convolutional layers is 0.0002. For all the above models, the training is done up to 120 epochs, the batch size is 16, the weight decay is 0.00001, and the momentum is 0.9.

Comparison with state-of-the-art methods

The performance of our method was compared with other state-of-the-art methods on four datasets, CUB-200-2011, FGVC-Aircraft, Stanford Cars, and Stanford Dogs, and the results are shown in Table 2.

CUB-200-2011

CUB-200-2011 is the most challenging benchmark dataset for fine-grained visual classification tasks, and our model based on both VGG16 and ResNet50 achieves the best results on this dataset. In the VGG16-based baseline model, our model improves the accuracy by 7.7% and 6.4% over DeepLAC and Part-RCNN using predefined bounding boxes and part annotations, respectively. The superior results obtained by our model compared to the baseline of the pooling-based model LRBP27 mainly benefit from the layer-by-layer interaction of features and the integration of multiple layers. Our model even outperforms RA-CNN8 and MA-CNN46, which are more classical unsupervised local region-based methods, with relative accuracy improvements of 2.7% and 1.5%, respectively. Our model also outperforms the BoostCNN47 by 1.8%, which enhances multiple bilinear networks trained at multiple scales. Although HIHCA29 proposed a similar idea for feature interaction models for fine-grained recognition, our model obtained a 2.7% accuracy improvement due to the mutual reinforcement framework of inter-layer feature interaction and discriminative feature learning. In the baseline model based on ResNet50, our model outperforms MGE-CNN48, S3N49, and FDL50, which are two-stage models, by 4.1%, 1.1%, and 1.0%, respectively. Compared with ISQRT-COV26, our method outperforms them by 1.5%, which explores higher-order information to capture subtle features. Compared with Cross-X, which differentiates features by pairwise interactions, our model improves by 1.9%. Compared with CIN51, LIO52, PMG53, and FBSD54, our method outperforms them by 2.1%, 1.6%, 0.6%, and 0.7%, respectively, indicating that the model is effective in further diversifying features using interlayer interactions while refining the accuracy of potential feature mining.

FGVC-aircraft

Due to the subtle differences, different aircraft models are difficult to identify, nevertheless, our model achieves the highest classification accuracy among all methods. Under the VGG16 baseline compared to the local region learning-based method MA-CNN and the pooling-based BoostCNN, our model obtained 0.7% and 2.1% performance gains. Under the ResNet50 baseline, our model outperforms WS-DAN55, DFL56, Cross-X55, MC-Loss57, and OR-Loss58 by 0.8%, 0.7%, 1.2%, 1.2%, and 0.5%, respectively. Our model outperforms the two-stage models FDL and S3N by 0.4% and 1.0%, respectively, which indicates that our model still maintains better performance in the equivalent model framework.

Stanford cars

Our model achieves state-of-the-art performance under the VGG16 baseline, relying on accurate feature complementation and inter-layer feature interaction learning, and our model outperforms unsupervised local region based RA-CNN and MA-CNN by 1.3% and 1.0%. Our model is also better than pooling-based methods BoostCNN and KP59. Using the ResNet50 backbone network, our model outperforms ISQRT-COV, Cross-X, LIO, S3N, MC-Loss, WS-DAN, and FBSD methods to varying degrees. However, it is slightly lower than PMG, probably due to the fact that PMG is more applicable to rigid datasets, while the object generation methods in our model have significantly less room for play in this dataset.

Stanford dogs

The Stanford Dogs dataset is much harder relative to the other three, so many models do not report results on this dataset. Our model obtains competitive results on this dataset and far outperforms RA-CNN, HIHCA, BoostCNN, LRBP, and S3N. but lags behind the Cross-X model by 0.6%. the Cross-X model’s approach to multi-feature learning of inter- and intra-class images performs better on complex datasets, compared to our model which focuses more on In contrast, our model focuses more on complementary feature interactions among individual images, but our model has fewer parameters, so it has a lower training cost.

In Table 3, we present a comparative analysis of the parameter counts and Flops between our proposed model and current state-of-the-art models on CUB_200_2011. The results demonstrate that our model achieves optimal parameter efficiency, with a parameter count 3% higher than the next best model, FBSD. It is noteworthy that although our model does not achieve the best results in terms of FLOPs, its accuracy surpasses that of Cross-X by 1.9% points. We believe that sacrificing some computational performance to enhance accuracy is justified, provided that the model’s runtime speed remains acceptable.

In summary, SFSCF-Net scales well to the four benchmark datasets. Using the VGG16 backbone network, SFSCF-Net achieves the best results on all four datasets. Using the ResNet50 backbone network, PMG achieves the best results on Stanford Cars, but performs poorly on FGVC-Aircraft. cross-X achieves the best results on Stanford Dogs, but the results on the other three datasets need to be improved. Our model shows the best performance on both CUB-200-2011 and FGVC-Aircraft, while the results on the other two datasets are also relatively competitive.

Ablation studies

In this section, an ablation study of SFSCF-Net is performed to analyze the contributions made by each of the proposed methods. The ablation experiments were performed on the Stanford Cars dataset with ResNet50 as the backbone network. The experimental results are shown in Table 4, where Stage 1–3 + Stage 4 + Stage 5 is a complete ResNet50 network, and Origin indicates that only ResNet50 was used to train the original images. First, this section divides the experiments into three groups, the first group verifies the contribution of OIG, and the second and third groups gradually add network stages while quantitatively analyzing the contribution of SFSM and CFM. As can be seen from Table 4, the performance of the network model with the object-level image generator in the first group is improved by 0.3%, which verifies its effectiveness. In the second group, a baseline performance was obtained by first adding a network phase, then SFSM was added to improve the model performance by 0.3%, and finally CFM was added to improve the model performance by another 0.3%, verifying the effectiveness of SFSM and CFM. The third group is the same as the second group, where the last network stage is added to give the model a complete ResNet50 backbone network, and then the SFSM and CFM are added to improve the model performance by 0.8% and 0.3%, respectively. It can be seen that with the complete backbone network, the addition of our proposed method can achieve a huge performance improvement, which further validates the effectiveness of our proposed method. Therefore, our model structure is set to Origin + Oig + Stage 1–3 + Stage 4 + Stage 5 + SFSM + CFM, as shown in Fig. 2.

In this section, the number of groupings of feature maps along W and H in SFSM is ablated to determine an optimal number of groupings. The model uses the output of OIG as the input image and uses SFSM for the output feature maps of Stage 1–3 and Stage 4, respectively, to obtain an optimal value of M by verifying the output of the model. From Table 5, it can be seen that the accuracy of the model is highest when the value of M is 4. The study shows that when the value of M is too small, the suppression region is too large resulting in covering other potential complementary feature information, making the accuracy rate insufficient; when the value of M is too large, the region of two-dimensional suppression becomes smaller and may not cover the whole salient region, resulting in the downstream network repeatedly extracting the same features from the upstream output, so the accuracy rate is reduced.

In the ablation study, we used the CUB_200_2011 to experiment with various parameters, including optimizers, loss functions, and learning rates, as shown in Tables 6 and 7, and 8. In Table 6, it is evident that the SGD optimizer outperforms other optimizers, with an accuracy rate 4.4% higher than the second-best FAdam optimizer. Table 7 demonstrates that our chosen CELoss achieves better results compared to other widely used loss functions. As shown in Table 8, we tested different learning rate parameters and ultimately selected learning rates of 0.0002 and 0.002 for the Old Layer and New Layer, respectively, leading to a significant improvement in classification accuracy.

Based on the above analysis, the Object-level Image Generator (OIG) eliminates complex background noise, allowing the network to focus on the objects themselves. The Salient Feature Suppression Module (SFSM) refines the salient feature suppression region, enabling the network to more accurately mine potential complementary features. Cross-feature fusion method (CFM) enables the network to interactively integrate features between layers to diversify features. The ablation experiments verify the effectiveness of the above methods.

Visualization

This section presents a two-part visualization analysis, the first part is a grouped visualization comparison of SFSM, and the second part is a visualization comparison of SFSCF-Net in each dataset under the ResNet50 backbone network. The experiments in this subsection are performed using the Grad-CAM visualization model and images from all four benchmark datasets.

In order to evaluate the suppression effect of SFSM more intuitively, we use a bird image as an example to demonstrate the suppression area effect with different number of groupings. Specifically, in this section, the grouping cases are carried out from M = 2 to M = 5 as set in the ablation experiment, as shown in Fig. 4. As can be seen from the figure, when M = 2, although the salient features are well suppressed, the body part of the bird is also forced to be suppressed, so there is a risk that potentially complementary features are forced to be suppressed; when M = 3 and M = 4, the size of the suppressed area is approximately the same, but the suppressed area of M = 4 is more accurate; when M = 5, the suppressed area is more accurate, but its size is also smaller at the same time. When M = 5, the suppressed region is more accurate, but the size of the suppressed region is also smaller, so that the same significant region is not completely suppressed, which makes the subsequent network risky to continue mining the features of the region. In summary, the best choice for this module is when M = 4.

To demonstrate the recognition effect of SFSCF-Net, this section makes a visualization comparison experiment between ResNet50 backbone network and SFSCF-Net on the output feature maps of the last three network stages, as shown in Fig. 5. The first column of the figure shows the images selected in each dataset, and the second and third columns show the visualization results of ResNet50 backbone network and SFSCF-Net at different network stages. As can be seen from the figure, the training process of the backbone network only locates one distinguishable region of the object, and fails to mine other distinguishable regions. In contrast, SFSCF-Net incorporates a salient feature suppression module and a cross-feature fusion method, which enables it to accurately mine complementary features and at the same time fuse inter-layer features to diversify features and thus locate multiple distinguishable regions.

Visual Comparison of SFSCF-Net and Backbone Network. Column (a) shows the original images, while columns (b), (c), and (d) display the feature visualizations from the last three stages of the ResNet backbone network. Columns (e), (f), and (g) present the feature visualizations from the last three stages of our proposed SFSCF-Net. Compared to the features extracted by the backbone network, our network highlights the discriminative regions of the target objects more distinctly, with attention covering a broader area.

In summary, the suppression effect by different number of groupings can be well observed in the two-dimensional grouping suppression visualization experiments of SFSM. In the visualization comparison experiments of the model, SFSCF-Net can better cover the attention to more regions of the object, which reflects the effectiveness of complementary feature mining and inter-layer feature fusion. As a result, the problem of complex background noise in fine-grained image datasets is effectively solved by the object-level image generator; the problem that different features extracted from different convolutional layers cannot be effectively fused is effectively solved by the cross-feature fusion method. Figures 4 and 5 verify the effectiveness of the above two solutions very intuitively by comparing our model with the backbone network model.

Discussion

In this paper, a salient feature suppression and cross-feature fusion network model (SFSCF-Net) is proposed. The model has three main components: (1) an object-level image generator (OIG), which aims to eliminate complex background noise in images and enable the network to focus on the objects themselves. (2) A salient feature suppression module (SFSM), which aims to make the salient feature suppression regions more accurate in a two-dimensional suppression manner, while enabling more distinguishable regions to stand out and improve the classification performance of the model. (3) A cross-feature fusion method (CFM), which uses the principle of bilinear pooling to enable the interactive integration of features between layers of the network to enhance feature representation and diversify features. Our model can be trained end-to-end without additional manual annotation. The model was experimented on four widely used fine-grained datasets, achieving state-of-the-art performance on two datasets, CUB-200-2011 and FGVC-Aircraft, and competitive results on Stanford Cars and Stanford Dogs. The comparative experimental results demonstrate the superiority of the overall model performance. The ablation experiments further demonstrate the effectiveness of the three proposed methods. Our model and the model in Chap. 3 implement the recognition of everyday birds, cars, dogs, and airplanes in different perspectives, respectively, and can equally demonstrate the usefulness of our model. Our model is experimentally compared with a large number of other methods and models, and achieves better results, demonstrating the robustness and robustness of our model in recognizing subclasses of objects.

Code availability

Our code is available at https://github.com/syyang2022/SFSCF-Net.

References

Zhang, N. et al. Part-based R-CNNs for fine-grained category detection. In European Conference on Computer Vision 834–849 (Springer, Cham, 2014).

Branson, S. et al. Bird species categorization using pose normalized deep convolutional nets. arXiv preprint https://arxiv.org/abs/1406.2952 (2014).

Huang, S. et al. Part-stacked CNN for fine-grained visual categorization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1173–1182 (2016).

Lin, D. et al. Deep lac: Deep localization, alignment and classification for fine-grained recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1666–1674 (2015).

Wei, X. S., Xie, C. W. & Wu, J. Mask-cnn: Localizing parts and selecting descriptors for fine-grained image recognition. arXiv preprint https://arxiv.org/abs/1605.06878 (2016).

Hu, T. & Qi, H. See better before looking closer: Weakly supervised data augmentation network for fine-grained visual classification. ArXiv https://arxiv.org/abs/1901.09891 (2019).

Xie, J., Zhong. Y., Zhang, J., Zhang, C. & Schuller, B.W. A weakly supervised spatial group attention network for fine-grained visual recognition. Appl. Intell. 53(20), 23301–23315 (2023).

Fu, J., Zheng, H. & Mei, T. Look closer to see better: Recurrent attention convolutional neural network for fine-grained image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Rcognition 4438–4446 (2017).

Behera, A., Wharton, Z., Hewage, P. R. & Bera, A. Context-aware attentional pooling (CAP) for fine-grained visual classification. In AAAI Conference on Artificial Intelligence (2021).

PR et al. Pay attention to the activations: A modular attention mechanism for fine-grained image recognition (2019).

He, X., Peng, Y. & Zhao, J. Which and how many regions to gaze: Focus discriminative regions for fine-grained visual categorization. Int. J. Comput. Vis. 127(9), 1235–1255 (2019).

He, X. & Peng, Y. Weakly supervised learning of part selection model with spatial constraints for fine-grained image classification. In Thirty-first AAAI Conference on Artificial Intelligence (2017).

Tan, M. et al. Fine-grained image classification via multi-scale selective hierarchical biquadratic pooling. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 18(1s), 1–23 (2022).

Zhang, N., Shelhamer, E., Gao, Y. & Darrell, T. Fine-grained pose prediction, normalization, and recognition. In ICLR workshop (2016).

Huang, S., Xu, Z., Tao, D. & Zhang, Y. Part-stacked cnn for fine-grained visual categorization. https://arxiv.org/abs/1512.08086 (2015).

Zhang, H. et al. Spda-cnn: Unifying semantic part detection and abstraction for fine-grained recognition. In CVPR (2016).

Diba, A., Sharma, V. & Pazandeh, A. M., Pirsiavash, H. & Van Gool, L. Weakly supervised cascaded convolutional networks. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 5131–5139 (2016).

Zhaoyang Zeng, B., Liu, J., Fu, H. C. & Zhang, L. Wsod2: Learning bottom-up and top-down objectness distillation for weakly-supervised object detection. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV) 8291–8299 (2019).

Ren, Z. et al. Instance-aware, contextfocused, and memory-efficient weakly supervised object detection. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Jinhwan Seo, W., Bae, D. J., Sutherland, J., Noh & Kim, D. Object discovery via contrastive learning for weakly supervised object detection. In Computer Vision ECCV 2022 (eds Avidan, S., Brostow, G., Cissé, M., Farinella, G. M., Hassner, T.) 312–329 (Springer Nature Switzerland, Cham, 2022).

Chang, D. et al. Your Flamingo is My Bird: Fine-Grained, or Not. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 11476–11485 (2021).

Huang, Z. et al. Interpretable attention guided network for fine-grained visual classification. In International Conference on Pattern Recognition 52–63 (Springer, Cham, 2021).

Xie, J. et al. A weakly supervised spatial group attention network for fine-grained visual recognition. Appl. Intell. 53, 23301–23315 (2023).

Guo, C. et al. Inverse transformation sampling-based attentive cutout for fine-grained visual recognition. Vis. Comput. 39, 2597–2608 (2023).

Liu, D. et al. DenserNet: Weakly supervised visual localization using multi-scale feature aggregation. In AAAI Conference on Artificial Intelligence (2020).

Lin, T. Y., RoyChowdhury, A. & Maji, S. Bilinear cnn models for fine-grained visual recognition. In Proceedings of the IEEE International Conference on Computer Vision 1449–1457 (2015).

Kong, S. & Fowlkes, C. Low-rank bilinear pooling for fine-grained classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 365–374 (2017).

Yu, C. et al. Hierarchical bilinear pooling for fine-grained visual recognition. In Proceedings of the European Conference on Computer Vision (ECCV) 574–589 (2018).

Cai, S., Zuo, W. & Zhang, L. Higher-order integration of hierarchical convolutional activations for fine-grained visual categorization. In Proceedings of the IEEE International Conference on Computer Vision 511–520 (2017).

Liu, D. et al. Recursive multi-scale channel-spatial attention for fine-grained image classification. IEICE Trans. Inf. Syst. 105–D, 713–726 (2022).

Liu, K., Chen, K. & Jia, K. Convolutional fine-grained classification with self-supervised target relation regularization. IEEE Trans. Image Process. 31, 5570–5584 (2022).

Wang, W. et al. Visual recognition with deep nearest centroids. ArXiv https://arxiv.org/abs/2209.07383 (2022).

Yan, L. et al. Video captioning using global-local representation. IEEE Trans. Circuits Syst. Video Technol. 32, 6642–6656 (2022).

Liu, D. et al. Tripartite feature enhanced pyramid network for dense prediction. IEEE Trans. Image Process. 32, 2678–2692 (2023).

Yu, C. et al. Lite-HRNet: A lightweight high-resolution network. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 10435–10445 (2021).

Zheng, H. et al. M2FN: A multilayer and multiattention fusion network for remote sensing image scene classification. IEEE Geosci. Remote Sens. Lett. 19, 1–5 (2022).

Zhang, F. et al. Multi-branch and multi-scale attention learning for fine-grained visual categorization. In International Conference on Multimedia Modeling 136–147 (Springer, Cham, 2021).

Kim, J. H. et al. Hadamard product for low-rank bilinear pooling. arXiv preprint https://arxiv.org/abs/1610.04325 (2016).

Rendle, S. Factorization machines. In 2010 IEEE International Conference on Data Mining 995–1000 (IEEE, 2010).

Wah, C. et al. The caltech-ucsd birds-200-2011 dataset (2011).

Maji, S. et al. Fine-grained visual classification of aircraft. arXiv preprint https://arxiv.org/abs/1306.5151 (2013).

Krause, J. et al. 3D object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops 554–561 (2013).

Khosla, A. et al. Novel dataset for fine-grained image categorization: Stanford dogs. In Proc. CVPR workshop on fine-grained visual categorization (FGVC). Citeseer, Vol. 2(1) (2011).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint https://arxiv.org/abs/1409.1556 (2014).

He, K. et al. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Zheng, H. et al. Learning multi-attention convolutional neural network for fine-grained image recognition. In Proceedings of the IEEE International Conference on Computer Vision 5209–5217 (2017).

Moghimi, M. et al. Boosted convolutional neural networks. In BMVC, Vol. 5, 6 (2016).

Zhang, L. et al. Learning a mixture of granularity-specific experts for fine-grained categorization. In Proceedings of the IEEE/CVF International Conference on Computer Vision 8331–8340 (2019).

Ding, Y. et al. Selective sparse sampling for fine-grained image recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision 6599–6608 (2019).

Liu, C. et al. Filtration and distillation: Enhancing region attention for fine-grained visual categorization. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34(07) 11555–11562 (2020).

Gao, Y. et al. Channel interaction networks for fine-grained image categorization. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34(07) 10818–10825 (2020).

Du, R. et al. Fine-grained visual classification via progressive multi-granularity training of jigsaw patches. In European Conference on Computer Vision 153–168 (Springer, Cham, 2020).

Song, J. & Yang, R. Feature boosting, suppression, and diversification for fine-grained visual classification. In 2021 International Joint Conference on Neural Networks (IJCNN) 1–8 (IEEE, 2021).

Hu, T. et al. See better before looking closer: Weakly supervised data augmentation network for fine-grained visual classification. arXiv preprint https://arxiv.org/abs/1901.09891 (2019).

Luo, W. et al. Cross-x learning for fine-grained visual categorization. In Proceedings of the IEEE/CVF International Conference on Computer Vision 8242–8251 (2019).

Wang, Y., Morariu, V. I. & Davis, L. S. Learning a discriminative filter bank within a cnn for fine-grained recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4148–4157 (2018).

Chang, D. et al. The devil is in the channels: Mutual-channel loss for fine-grained image classification. IEEE Trans. Image Process. 29, 4683–4695 (2020).

Zhang, S. et al. Knowledge transfer based fine-grained visual classification. In 2021 IEEE International Conference on Multimedia and Expo (ICME) 1–6 (IEEE, 2021).

Cui, Y. et al. Kernel pooling for convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2921–2930 (2017).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. CoRR https://arxiv.org/abs/1412.6980 (2014).

Loshchilov, I. & Hutter, F. Fixing weight decay regularization in adam. ArXiv https://arxiv.org/abs/1711.05101 (2017).

Hwang, D. FAdam: Adam is a natural gradient optimizer using diagonal empirical Fisher information. ArXiv https://arxiv.org/abs/2405.12807 (2024).

Acknowledgements

The work was supported by Scientific Research Fund of Zhejiang Provincial Education Department (Y202352263), the National Natural Science Foundation of China (No. 61972357) and Natural Science Foundation of Xinjiang Uygur Autonomous Region (No. 2022D01C349). This work is part of a larger study conducted for author Xinqi Yang’s master’s thesis.

Author information

Authors and Affiliations

Contributions

S.Y. contributed to the conception, supervision, writing—original draft preparation and review and editing of the study. X.Y. significantly contributed to the methodology, experiment and writing—original draft preparation. J.W. supervised the project and contributed to the mathematical modeling and experimental data analysis. B.F. contributed to methodology, investigation, software and resources, writing—original draft preparation. All authors discussed the results and contributed to the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yang, S., Yang, X., Wu, J. et al. Significant feature suppression and cross-feature fusion networks for fine-grained visual classification. Sci Rep 14, 24051 (2024). https://doi.org/10.1038/s41598-024-74654-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-74654-4

This article is cited by

-

Facial expression recognition via variational inference

Scientific Reports (2026)

-

Enhancing occluded and standard bird object recognition using fuzzy-based ensembled computer vision approach with convolutional neural network

Scientific Reports (2025)

-

Integrating interpretable artificial neural networks, geomorphic plausibility and transfer learning for landslide susceptibility mapping: a case study of Pacitan, East Java

Discover Sustainability (2025)

-

Multi-level navigation network: advancing fine-grained visual classification

The Journal of Supercomputing (2025)