Abstract

Murals are important carriers of cultural heritage and historical records, with artistic, aesthetic, social and political significance. In the past, religious practices such as burning incense and candles in temples coated many murals with soot, which darkened them, obscured their details and, in severe cases, blackened them completely. Developing efficient virtual cleaning methods has therefore become a key strategy for addressing this problem. In this study, we synthesize a true colour image and a false colour image from different bands of hyperspectral data and fuse them with a guided filter fusion technique to obtain a new image of the sooty mural. Analysis of the histograms and colour distribution scatterplots of this synthesized image showed that it closely resembles low-luminance images, so we enhanced it with the LIME model. The histograms and colour distribution scatterplots of the enhanced image, in turn, closely resemble those of hazy images, so we applied the dark channel prior algorithm to remove the soot. Because soot particles are larger than haze particles, we refined the transmission map with guided filtering and designed a nonlinear transformation function to enhance its details. In both visual perception and quantitative analysis, the proposed method significantly outperforms previous methods for the virtual cleaning of sooty murals. It restores the colours and details of murals, provides new clues for subsequent mural studies and allows people to appreciate the murals' original beauty once again.


Introduction

As an ancient art form, murals have unique aesthetic and artistic value, and they are an integral part of ancient temples, grottoes and other historical buildings. However, the incense and candles burned in temples during offerings and rituals release soot and other particles that gradually deposit on mural surfaces, blackening them and obscuring the original patterns and colours1. At present, the main conservation strategy for sooty murals is to preserve them in their current condition and prevent further deterioration.

In the field of cultural heritage studies, non-invasive analysis of artefacts has become a topic of great interest2. Virtual restoration continues to develop as an innovative approach that aims to restore artworks non-invasively to their potential original appearance3,4. I. M. Ciortan et al.5 introduced an inpainting algorithm based on a generative adversarial network for non-destructive virtual artwork restoration. Their approach focused on edges and colour using two generators that rebalanced colours in a dataset-specific gamut space, and it produced visually and quantitatively competitive results on Dunhuang murals. I. Sipiran et al.6 proposed a data-driven approach built on a novel point cloud neural network architecture that successfully repaired pottery, and demonstrated its effectiveness for general shape completion on the ShapeNet and Completion3D datasets. V. Rakhimol et al.7 used cGANs and deep convolutional networks to reconstruct degraded temple murals, surpassing six previous methods in restoration quality. Mercedes Galiana et al.8 combined deductive and inductive methods to virtually reconstruct vanished buildings from their surviving parts and documentation, with successful examples such as the Ambassador Vich’s Palace in Valencia. Sun et al.9 proposed a method for the virtual restoration of silk artefacts that uses adaptive curve fitting and an improved, structure-oriented repair priority. Cao et al.10 developed an algorithm for the automated calibration of flaking-related deterioration in Song Dynasty temple murals; based on colour analysis and threshold segmentation, it effectively improved restoration outcomes. Lv et al.11 introduced SeparaFill, a novel network architecture that restores contour and content areas separately.
The method improved the PSNR by 1.1–4.3 dB compared with existing algorithms, demonstrating its promise for the virtual restoration of ancient murals.

With continuing technological advances, instruments have been developed that acquire the radiation reflected from murals in narrow spectral bands using single-point or line-scanning imaging modes, providing a wealth of hyperspectral information12,13. Hyperspectral data are currently being actively explored for surface feature detection. In "Picasso: The Blue Project" at the Picasso Museum in Barcelona, C. Cucci et al.14 used reflectance imaging spectroscopy to revisit Blue Period paintings, revealing hidden layers and Picasso’s creative process; the exhibition showed that the technique is effective for both analysis and education. D. Han et al.15 presented an efficient, non-destructive restoration method that combines hyperspectral imaging with computer processing. By repairing missing edges and identifying pigments, it virtually reproduces the motifs of the Qin II bronze chariots, providing a valuable reference for similar painted bronze artefacts. C. Cucci et al.16 introduced a remote-sensing HSI approach using avionic sensors and demonstrated it at an archaeological site in Pompeii, where the avionic hyperspectral imager identified pictorial materials, mapped their distributions and enhanced fading features on wall paintings and mural inscriptions, showing its potential for mural restoration and archaeological studies. X. Han et al.17 used near-infrared hyperspectral bands to detect graphite and enhance mural features: graphite was identified as the main pigment by spectral matching, and characteristic bands were selected to extract the graphite information efficiently. To date, most hyperspectral studies of artworks have focused on surface pigment identification, mining of hidden information and primer line extraction, whereas the virtual restoration of soot-covered murals remains far less developed.
Previously, Cao et al.18 proposed removing soot from mural images with a dark channel prior and a Retinex bilateral filter. However, that method has several limitations. It did not adequately distinguish between soot and haze and applied the dark channel prior directly to the sooty image, so the restored image became even dimmer and required manual brightness adjustment. The Retinex bilateral filter also caused significant loss of texture and detail, the restored murals showed severe colour distortion in some areas, and the restoration of heavily soot-contaminated areas was unsatisfactory. Building on this work, Sun et al.19 proposed a method combining an inverse operation with a twice-applied colour attenuation prior, which achieved considerably better results in the virtual cleaning of sooty murals.

Based on the characteristics of soot, we propose the concept of virtual cleaning: the use of non-destructive techniques to remove contaminants from the surface of artworks by processing their images, thereby restoring the artworks' original appearance. We therefore investigated a novel method for the virtual cleaning of sooty murals. We found that, compared with ordinary cameras, hyperspectral imaging can partly penetrate soot. We synthesize a true colour image and a false colour image from different bands of the hyperspectral data and fuse them with a guided filter fusion technique to obtain a new image of the sooty mural. Careful analysis of the histograms and colour distribution scatterplots also yields new insights into soot removal. On this basis, we combine the LIME model with an Improved Dark Channel Prior (IDCP) to clean sooty murals effectively and to address the problems of earlier virtual cleaning methods. The contributions of this study are summarised below:

This study proposes a novel virtual cleaning method that generates new sooty mural images based on hyperspectral data and image fusion. The method achieves virtual cleaning by combining the LIME model with an improved dark channel prior algorithm and has demonstrated excellent results on real sooty murals, successfully restoring both details and colours. By revealing details obscured by soot, it provides additional information about the mural, supporting historical research and the exhibition of cultural relics.

Materials and methods

The study area is a mural in the Daheitian Hall, Qinghai, China, which has a distinctive style and character. Unfortunately, the mural is heavily covered with soot, which has degraded its pattern information, so virtual cleaning is needed to recover its original details. The entire mural is 0.97 m high and 2.11 m long and was captured in 24 hyperspectral image tiles; Figure 1 shows the stitched hyperspectral image of the complete mural. The data were collected with a VNIR400H hyperspectral imaging camera, which records 1040 bands from 400 nm (visible) to 1000 nm (near-infrared) with a spectral resolution of 0.6 nm. Each shot covers 30 cm × 40 cm, and the camera was positioned approximately 1 m from the mural. Two halogen lamps, whose spectral characteristics are similar to those of sunlight, were used as light sources to provide consistent, sunlight-like illumination. The camera was controlled wirelessly from computer software.

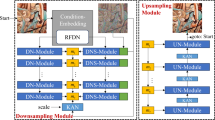

The spectral range of a typical digital camera closely matches the range visible to the human eye, approximately 380 nm to 760 nm. A hyperspectral camera covers a broader range spanning several wavelength regions and captures the spectral information of objects at many wavelengths. The camera used here operates in the visible and near-infrared ranges, extending beyond human vision into part of the infrared. As shown in Table 1, examining individual bands over the sooty areas revealed that pattern clarity improves progressively as the wavelength increases. This highlights the ability of near-infrared light, which digital cameras typically do not record, to penetrate soot. We therefore used the hyperspectral data as the foundation for subsequent processing. The overall framework of the proposed method is illustrated in Fig. 2.

Data preprocessing

During acquisition, the hyperspectral data are affected by ambient light in the acquisition area. This interference is reduced by radiometric correction, which uses reference images to convert the pixel DN values of the original hyperspectral image into relative spectral reflectance according to Eq. (1):
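Assuming the standard dark- and white-reference (flat-field) correction, consistent with the symbols defined below, Eq. (1) reads

\[ I = \frac{I_{0} - B}{W - B} \times 100\% \tag{1} \]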

where \(I\) is the corrected hyperspectral image in relative reflectance (%), \(I_{0}\) is the original hyperspectral image, \(B\) is the dark current image, and \(W\) is the white reference image (reflectance approximately 99.9%). The corrected images still contain redundant data and several types of noise, with considerable noise in the bands at both ends of the wavelength range. We therefore manually selected the 940 bands numbered 51–990 (405.79–1000.79 nm) for subsequent processing. The minimum noise fraction (MNF)20 transform was then applied to the clipped data to separate noise from signal; the first 10 components, which contain most of the information, were retained and inverse-transformed back to the original spectral dimensionality, yielding denoised hyperspectral data.
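The preprocessing chain can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, `clip_bands` assumes 1-based band numbering, and `mnf_denoise` uses a textbook MNF (noise whitening followed by principal component analysis) in which the noise covariance is estimated from differences between horizontally adjacent pixels, a common choice that the text does not specify.

```python
import numpy as np


def radiometric_correction(raw, dark, white):
    """Flat-field correction (I0 - B) / (W - B); returns reflectance as a fraction."""
    raw = np.asarray(raw, dtype=float)
    denom = np.maximum(np.asarray(white, dtype=float) - dark, 1e-6)  # avoid /0
    return (raw - dark) / denom


def clip_bands(cube, first=51, last=990):
    """Keep bands first..last inclusive, assuming 1-based band numbers."""
    return cube[:, :, first - 1:last]


def mnf_denoise(cube, n_components=10):
    """MNF sketch: noise-whiten, rotate by PCA, keep n_components, invert."""
    h, w, b = cube.shape
    x = cube.reshape(-1, b)
    mean = x.mean(axis=0)
    xc = x - mean

    # Shift-difference noise estimate; differencing doubles the noise variance
    diff = (cube[:, 1:, :] - cube[:, :-1, :]).reshape(-1, b)
    noise_cov = np.cov(diff, rowvar=False) / 2.0

    # Noise whitening: project on noise eigenvectors, scale to unit variance
    n_val, n_vec = np.linalg.eigh(noise_cov)
    whiten = n_vec / np.sqrt(np.maximum(n_val, 1e-12))
    xw = xc @ whiten

    # PCA in the whitened space; eigh sorts ascending, so reverse the columns
    _, s_vec = np.linalg.eigh(np.cov(xw, rowvar=False))
    keep = s_vec[:, ::-1][:, :n_components]

    # Forward transform, truncation to the leading components, inverse transform
    xw_rec = (xw @ keep) @ keep.T
    x_rec = xw_rec @ np.linalg.inv(whiten) + mean
    return x_rec.reshape(h, w, b)
```

Keeping all components reproduces the input exactly, which is a convenient sanity check; keeping 10, as in the text, discards the noise-dominated components.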

Using the 460.20 nm, 549.79 nm and 640.31 nm bands, we synthesized a true colour image (TCI) and selected regions of interest on both the red background and the sooty red background of the mural. The average spectral curves of these regions follow similar trends and cross at approximately 800 nm (Fig. 3), indicating that soot has little influence on the red background in this wavelength range. We therefore selected 825.74 nm as the characteristic near-infrared wavelength, and 549.78 nm and 460.20 nm as the characteristic green and blue wavelengths, respectively. These three bands were assigned to the red, green and blue channels, respectively, to synthesize the false colour image (FCI). In the FCI, the red background is more vibrant and the black lines are clearer, but the colours of the figures' costumes change noticeably. To address this, we applied image fusion.
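A minimal sketch of building the TCI and FCI from a cube by nearest-wavelength band selection follows. The wavelength grid is an input (real band centres would come from the camera metadata), and the per-channel min-max stretch for display is an assumption, since the text does not state how the composites were scaled; the function names are hypothetical.

```python
import numpy as np

TCI_NM = (640.31, 549.79, 460.20)  # red, green, blue channels
FCI_NM = (825.74, 549.78, 460.20)  # near-infrared band displayed as red


def nearest_band(wavelengths, target_nm):
    """Index of the band whose centre wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths) - target_nm)))


def composite(cube, wavelengths, targets_nm):
    """Stack three bands as R, G, B and min-max stretch each channel to [0, 1]."""
    idx = [nearest_band(wavelengths, t) for t in targets_nm]
    rgb = cube[:, :, idx].astype(float)
    lo = rgb.min(axis=(0, 1), keepdims=True)
    hi = rgb.max(axis=(0, 1), keepdims=True)
    return (rgb - lo) / np.maximum(hi - lo, 1e-12)
```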

First, we selected the background region from the TCI and applied Gaussian blurring with a coefficient of 1.0; the same Gaussian blurring was applied to the figure region of the FCI. The two blurred images were then fused by guided filter fusion21, which involves two main processes: two-scale image decomposition and the construction of weight maps with guided filters. In the two-scale decomposition, each input image is split by average filtering into a base layer, which contains large-scale variations such as global brightness and contrast, and a detail layer, which captures small-scale structures such as edges and textures. Processing these scales separately makes the fusion more flexible and effective. Next, a saliency map of each input image is computed with a Laplacian filter. A guided filter is then applied, with the saliency map as the guidance image, to filter the images to be fused and obtain a saliency-based weight map. For each pixel, the guided filter adapts the filtering to the saliency information and computes the pixel's weight, which expresses its importance in the fusion: pixels with higher saliency receive higher weights, and pixels with lower saliency receive lower weights. The weight maps are then used to fuse the base layers of the input images by weighted averaging, and the detail layers in the same way. Finally, the fused base and detail layers are summed to produce the fused image.
The newly synthesized mural image retains the ability of the near-infrared band to penetrate soot while keeping the colours of the figures' costumes stable, which provides an important basis for subsequent virtual cleaning.
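The fusion step can be sketched with SciPy as below. This follows one widely used formulation of guided filter fusion, in which binary weight maps obtained by comparing saliency maps are refined by guided filtering with each source image as guidance; whether reference 21 matches it in every detail is an assumption. The default parameters are values commonly quoted for that formulation and are likewise assumptions, the pre-fusion Gaussian blur is omitted, and the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, laplace, uniform_filter


def guided_filter(I, p, r, eps):
    """Grey-scale guided filter: smooth p while following the edges of I."""
    def box(x):
        return uniform_filter(x, size=2 * r + 1, mode="reflect")
    mean_I, mean_p = box(I), box(p)
    a = (box(I * p) - mean_I * mean_p) / (box(I * I) - mean_I ** 2 + eps)
    b = mean_p - a * mean_I
    return box(a) * I + box(b)


def gff_fuse(images, base_size=31, r1=45, eps1=0.3, r2=7, eps2=1e-6):
    """Fuse colour images in [0, 1] with guided-filter-refined weight maps."""
    imgs = [np.asarray(im, dtype=float) for im in images]
    grays = [im.mean(axis=2) for im in imgs]

    # Two-scale decomposition: base = average filter, detail = residual
    bases = [uniform_filter(im, size=(base_size, base_size, 1)) for im in imgs]
    details = [im - base for im, base in zip(imgs, bases)]

    # Saliency: absolute Laplacian response smoothed by a Gaussian
    sal = np.stack([gaussian_filter(np.abs(laplace(g)), sigma=5) for g in grays])

    # Binary weight maps: 1 where an input is the most salient
    winner = np.argmax(sal, axis=0)
    P = [(winner == n).astype(float) for n in range(len(imgs))]

    def normalise(ws):
        ws = np.clip(np.stack(ws), 1e-6, None)  # keep weights strictly positive
        return ws / ws.sum(axis=0, keepdims=True)

    # Large radius/eps for base weights, small radius/eps for detail weights
    WB = normalise([guided_filter(g, p, r1, eps1) for g, p in zip(grays, P)])
    WD = normalise([guided_filter(g, p, r2, eps2) for g, p in zip(grays, P)])

    fused = sum(wb[..., None] * b + wd[..., None] * d
                for wb, wd, b, d in zip(WB, WD, bases, details))
    return np.clip(fused, 0.0, 1.0)
```

Because the weights are normalized to sum to one, fusing an image with itself returns that image, which is a useful check of the implementation.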

LIME model

An image histogram is a graphical representation of the distribution of pixel grey levels or colours: the horizontal axis shows the grey levels or colour values, and the vertical axis shows the number (or frequency) of pixels at each value. For colour images, a separate histogram is usually drawn for each colour channel. Histograms provide statistical insight into properties such as contrast, brightness and colour distribution. As Fig. 4a,b shows, the histograms of sooty mural images and low-brightness images follow similar patterns. In all three colour channels, pixels are concentrated in the luminance range 0–50, indicating that sooty murals and low-brightness images have a similar pixel distribution in this range. Above 50, the pixel counts decline steeply towards 0, so both image types contain far fewer pixels with values of 50–100; for sooty murals, this is attributable to soot and sediment deposits, which suppress higher luminance values. Above 100, the pixel counts are almost 0, meaning that both image types contain almost no bright pixels, so highlights and bright details are lost. In summary, the histogram analysis shows that sooty mural images and low-brightness images share the same luminance distribution characteristics.
Both show a marked drop in pixel count at medium luminance values and an almost complete absence of pixels at high luminance values. We also analysed colour distribution scatterplots (Fig. 4c,d), in which each pixel is plotted as a point in a three-dimensional colour space according to its colour values. Such plots show how colours are distributed in an image and reveal characteristics such as clustering, dispersion and uniformity. The scatterplots of sooty mural images and low-brightness images are again very similar, with the points concentrated and clustered at the bottom of the colour space. These similarities suggest that enhancement methods designed for low-brightness images can be applied to sooty murals.
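The luminance-range comparison read off the histograms in Fig. 4a,b can be quantified with a few lines of NumPy. This is an illustrative sketch (the function name and the bin edges 0, 50, 100 and 256, which mirror the ranges discussed above, are our choices), applied to 8-bit RGB images.

```python
import numpy as np


def intensity_bin_fractions(img_u8, edges=(0, 50, 100, 256)):
    """Per-channel fraction of pixels in each intensity range.

    With the default edges the ranges are [0, 50), [50, 100) and [100, 255].
    """
    img = np.asarray(img_u8)
    fractions = {}
    for c, name in enumerate("RGB"):
        counts, _ = np.histogram(img[..., c], bins=edges)
        fractions[name] = counts / img[..., c].size
    return fractions
```

For a sooty or low-brightness image, most of the mass should fall in the first range and very little in the last.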

Given these similarities to low-brightness images, we chose the LIME (low-light image enhancement) model to enhance the fused sooty mural image22. Based on Retinex theory, LIME estimates an illumination map T of the mural and combines it with the fused sooty mural image I to obtain the enhanced mural image E, as expressed in Eq. (2):
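Following the Retinex formulation used by LIME, in which the observed image is the element-wise product of the desired image and the illumination, Eq. (2) can be written as

\[ I(x) = E(x) \circ T(x), \qquad E(x) = \frac{I(x)}{T(x)} \tag{2} \]

where \(\circ\) denotes element-wise multiplication.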

To simplify the calculation, the three colour channels of the mural image are assumed to share the same illumination map, which is initially estimated as the maximum over the three colour channels. The initial illumination map T is given by Eq. (3):
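Consistent with the definitions that follow, Eq. (3) is

\[ T(x) = \max_{c \in \{R,G,B\}} I^{c}(x) \tag{3} \]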

where \(T\left( x \right)\) is the estimated illumination at pixel \(x\), \(I^{c} \left( x \right)\) is the intensity of colour channel \(c\) at pixel \(x\), and the \(max\) function takes the maximum over the \(R,G,B\) channels. In other words, the illumination estimate at each pixel is the largest of its three channel intensities.

LIME obtains the initial illumination map from Eq. (3) and then refines it with one of two optimization methods: an accelerated solver and an exact solver. The accelerated solver solves an approximation of the problem, saving considerable time, whereas the exact solver obtains the exact optimal solution of the objective. In addition, three weighting strategies (I, II and III) are provided for refining the illumination map. To prevent the illumination map from over-saturating the enhanced image, a small parameter \(\epsilon\), set to 0.02 here, is added to limit it. The final expression for the enhanced mural image is therefore as follows:
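Assuming \(\epsilon\) acts as a lower bound on the refined illumination map, the final expression can be read as \(E(x) = I(x) / \max\left(T(x), \epsilon\right)\); adding \(\epsilon\) to \(T(x)\) is an equally plausible reading. The sketch below illustrates the LIME step under that assumption, implementing only the accelerated (sped-up) solver with weighting strategy II: it minimizes \(\|T - \hat{T}\|^{2} + \alpha \sum_{d} w_{d} (\nabla_{d} T)^{2}\) by solving one sparse linear system. The values of `alpha` and `eps_w` are illustrative, the exact solver and strategies I and III are not shown, and the function name is hypothetical.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve


def lime_enhance(img, alpha=0.15, eps_w=1e-3, eps=0.02):
    """LIME-style enhancement: sped-up solver with weighting strategy II.

    img: (H, W, 3) float array in [0, 1]. Returns (enhanced image, refined T).
    """
    h, w, _ = img.shape
    t_hat = img.max(axis=2)  # Eq. (3): initial illumination map

    # Forward-difference operators acting on the row-major flattened map
    dx = sp.diags([-np.ones(w - 1), np.ones(w - 1)], [0, 1], shape=(w - 1, w))
    dy = sp.diags([-np.ones(h - 1), np.ones(h - 1)], [0, 1], shape=(h - 1, h))
    Dh = sp.kron(sp.identity(h), dx)  # T[r, c+1] - T[r, c]
    Dv = sp.kron(dy, sp.identity(w))  # T[r+1, c] - T[r, c]

    # Strategy II: W_d = 1 / (|grad_d T_hat| + eps_w); the sped-up objective
    # divides by (|grad_d T_hat| + eps_w) once more, hence the square.
    wh = 1.0 / (np.abs(np.diff(t_hat, axis=1)).ravel() + eps_w) ** 2
    wv = 1.0 / (np.abs(np.diff(t_hat, axis=0)).ravel() + eps_w) ** 2

    # Normal equations: (I + alpha * sum_d D_d^T diag(w_d) D_d) t = t_hat
    M = sp.identity(h * w) + alpha * (Dh.T @ sp.diags(wh) @ Dh
                                      + Dv.T @ sp.diags(wv) @ Dv)
    t = spsolve(M.tocsc(), t_hat.ravel()).reshape(h, w)

    # Assumption: eps bounds T from below before the Retinex division E = I / T
    enhanced = img / np.maximum(t, eps)[..., None]
    return np.clip(enhanced, 0.0, 1.0), t
```

Because the system matrix is the identity plus a weighted graph Laplacian, the refined map is a smoothed version of \(\hat{T}\) that stays within its range, so dark regions are brightened without the division blowing up.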

Improved dark channel prior

On inspecting the enhanced mural, we found that the appearance of the soot had changed markedly: it now looked whitish, visually resembling a layer of haze covering the mural. To study this in depth, we compared the histograms of enhanced sooty mural images and hazy images (Fig. 5a,b). In enhanced sooty mural images, almost no pixels have intensities between 0 and 50, meaning that there are almost no very dark pixels; hazy images behave similarly in this interval. At the same time, the number of pixels between 150 and 255 increases markedly and reaches its peak, again as in hazy images, indicating that both contain many pixels in the medium-to-high brightness range. Both the visual and the histogram analyses therefore show that enhanced sooty mural images resemble hazy images. Likewise, Fig. 5c,d shows that the colour distribution scatterplots of the two image types share similar, concentrated distributions.

Because soot severely impedes light propagation, mural images become dim and blurred. By analogy with the atmospheric scattering model23,24,25, we propose an imaging model for enhanced sooty murals, given in Eq. (5).
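By analogy with the atmospheric scattering model, a form consistent with the definitions that follow is

\[ E(x) = R(x)\, t(x) + A \bigl(1 - t(x)\bigr) \tag{5} \]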

where \(E\left( x \right)\) is the enhanced sooty mural image, \(A\) is the scattered light caused by soot, \(t\left( x \right)\) is the soot-induced transmittance, and \(R\left( x \right)\) is the recovered (soot-free) mural image.

The dark channel prior26,27 is based on the observation that in the dark channel of a haze-free image, most pixels have intensities close to 0. Using this prior, the atmospheric light and the transmittance can be estimated and a clear image restored. To compute the dark channel, the minimum over the R, G and B channels is first taken at each pixel; a minimum filter is then applied over the local neighbourhood of each pixel, so that each value of the resulting dark channel map is the smallest channel value within that neighbourhood. The dark channel prior is expressed as follows:
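In the standard formulation, consistent with the symbols defined below, this expression is

\[ R^{dark}(x) = \min_{y \in \Omega(x)} \Bigl( \min_{c \in \{r,g,b\}} R^{c}(y) \Bigr) \tag{6} \]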

where \(R^{dark} \left( x \right)\) is the dark channel of image \(R\), whose value tends to 0 for a soot-free image; \(R^{c} \left( y \right)\) is one of the three colour channels of \(R\left( y \right)\); and \(\Omega \left( x \right)\) is the neighbourhood centred at pixel \(x\).

To verify that enhanced sooty mural images conform to the dark channel prior, we analysed the dark channel maps of enhanced sooty murals and of soot-free murals (Fig. 6). In the dark channel maps of enhanced sooty murals, areas affected by soot appear greyish-white: the lighter the soot, the darker the dark channel map and the smaller its pixel values; the heavier the soot, the brighter the map and the larger its pixel values. In contrast, the dark channel maps of soot-free murals are predominantly black, indicating that in most local patches of a soot-free mural at least one colour channel has intensities close to zero, so the minimum intensity in such a patch is close to zero. This is consistent with the dark channel prior.

In the original dark channel prior method, the atmospheric light is estimated by first picking the top 0.1% brightest pixels in the dark channel, which are usually the most haze-opaque; among these, the pixel with the highest intensity in the input image is selected as the atmospheric light. However, most of the murals we analysed exhibit several forms of degradation, not just one. For instance, in addition to soot, many suffer from loss of the pigment layer, which leaves white, highly reflective areas that can strongly bias the selection of the brightest pixels. Our experiments consistently showed that manually adjusting the value of \(A\) gives better virtual soot cleaning results. Once the scattered light \(A\) caused by soot is known, the transmittance \(t\left( x \right)\) can be roughly estimated, as shown in Eq. (7),
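Applying the standard dark channel prior derivation to Eq. (5), that is, dividing by \(A^{c}\), taking both minima and assuming the dark channel of \(R\) is close to 0, gives

\[ t(x) = 1 - \omega \min_{y \in \Omega(x)} \Bigl( \min_{c} \frac{E^{c}(y)}{A^{c}} \Bigr) \tag{7} \]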

where the constant \(\omega\) (0 < \(\omega\) < 1) is the soot removal factor and \(E^{c}\) is a colour channel of image \(E\).

By substituting \(t\left( x \right)\) and \(A\) into Eq. (5), the restored sooty mural can be obtained, as shown in Eq. (8),
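Solving Eq. (5) for \(R(x)\), with the transmittance bounded below by \(t_{0}\), gives

\[ R(x) = \frac{E(x) - A}{\max\bigl(t(x),\, t_{0}\bigr)} + A \tag{8} \]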

In Eq. (8), the transmittance is bounded below by \(t_{0}\), set to 0.05, to avoid the distortion and noise that an overly small transmittance would cause.
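The rough transmittance and recovery steps can be sketched with SciPy as below. `estimate_A` implements the original brightest-pixel rule for comparison, whereas the text sets \(A\) manually, so `dcp_restore` takes \(A\) as an argument. The patch size of 15 and \(\omega = 0.95\) are commonly used defaults, not values given in the text, and the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import minimum_filter


def dark_channel(img, patch=15):
    """Per-pixel minimum over channels, then a minimum filter over the patch."""
    return minimum_filter(img.min(axis=2), size=patch, mode="nearest")


def estimate_A(img, patch=15, top=0.001):
    """Original rule: brightest input pixel among the top 0.1% dark-channel pixels."""
    dc = dark_channel(img, patch).ravel()
    n = max(1, int(round(top * dc.size)))
    candidates = np.argsort(dc)[-n:]
    flat = img.reshape(-1, img.shape[2])
    return flat[candidates[np.argmax(flat[candidates].sum(axis=1))]]


def dcp_restore(E, A, omega=0.95, t0=0.05, patch=15):
    """Rough transmittance as in Eq. (7) and recovery with lower bound t0 as in Eq. (8)."""
    A = np.broadcast_to(np.asarray(A, dtype=float), (3,))  # scalar or per-channel A
    t = 1.0 - omega * dark_channel(E / A, patch)
    R = (E - A) / np.maximum(t, t0)[..., None] + A
    return np.clip(R, 0.0, 1.0), t
```

If a synthetic image with a zero dark channel is degraded with a known constant transmittance and \(A\), running `dcp_restore` with \(\omega = 1\) recovers it exactly, which is a quick way to check the implementation.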

Because soot particles are larger than haze particles, the transmittance estimated for soot is coarser. We therefore refine the soot-induced transmittance with a guided filter28, using the greyscale map \(I\) of the enhanced mural image as the guidance image and the transmittance \(t\left( x \right)\) obtained from Eq. (7) as the input. The guided filter makes the transmittance represent more accurately the degree of soot scattering at each pixel, which avoids unsatisfactory recovery caused by inaccurate transmittance estimates. The filter radius determines the extent of the smoothing: the filter smooths the transmittance while preserving the edges of the mural. The output map can be expressed as:
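In the standard guided-filter formulation, this local linear model is

\[ t_{1}(i) = a_{k} I_{i} + b_{k}, \qquad \forall\, i \in \omega_{k} \tag{9} \]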

where \(\omega_{k}\) is a square window of radius \(r\) centred at pixel \(k\), and \(a_{k}\) and \(b_{k}\) are linear coefficients assumed constant within that window. This local linear relationship ensures that the edges of the output \(t_{1} \left( x \right)\) coincide with those of the guidance image \(I\). The coefficients \(a_{k}\) and \(b_{k}\) are chosen optimally, so that the difference between the output and the input is minimized. The cost function in window \(\omega_{k}\) is
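In the standard guided-filter formulation, with \(t_{i}\) the input transmittance at pixel \(i\) and \(C\) denoting the cost, this is

\[ C(a_{k}, b_{k}) = \sum_{i \in \omega_{k}} \Bigl[ \bigl(a_{k} I_{i} + b_{k} - t_{i}\bigr)^{2} + \varepsilon\, a_{k}^{2} \Bigr] \tag{10} \]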

where \(\varepsilon\) is a regularization parameter that penalizes large values of \(a_{k}\). Minimizing this cost gives the optimal \(a_{k}\) and \(b_{k}\):
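The standard linear-regression solution, consistent with the symbols defined next, is

\[ a_{k} = \frac{\frac{1}{|\omega|}\sum_{i \in \omega_{k}} I_{i}\, t_{i} - \mu_{k}\, \overline{t_{k}}}{\sigma_{k}^{2} + \varepsilon}, \qquad b_{k} = \overline{t_{k}} - a_{k}\, \mu_{k} \tag{11} \]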

where \(\left| \omega \right|\) is the number of pixels in window \(\omega_{k}\), \(\overline{{t_{k} }}\) is the mean of \(t\) in \(\omega_{k}\), and \(\sigma_{k}^{2}\) and \(\mu_{k}\) are the variance and mean of \(I\) in \(\omega_{k}\), respectively. Because pixel \(i\) is covered by several overlapping windows, each of which gives different values of \(a_{k}\) and \(b_{k}\), these values are averaged over all windows containing \(i\), giving the new transmittance \(t_{1} \left( x \right)\):
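In the standard guided-filter formulation, this averaged output is

\[ t_{1}(i) = \overline{a}_{i}\, I_{i} + \overline{b}_{i} \tag{12} \]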

where \(\overline{a}_{i}\) and \(\overline{b}_{i}\) are obtained by mean filtering: \(\overline{a}_{i} = \frac{1}{\left| \omega \right|}\sum_{k:\, i \in \omega_{k}} a_{k}\) and \(\overline{b}_{i} = \frac{1}{\left| \omega \right|}\sum_{k:\, i \in \omega_{k}} b_{k}\).
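The refinement can be sketched with box filters, which compute all window means at once. The radius, \(\varepsilon\) and the luma weights used to form the greyscale guidance image are illustrative assumptions, not values given in the text, and the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import uniform_filter


def guided_filter(I, p, r, eps):
    """Local linear model t1 = a_k * I + b_k fitted in every (2r+1)^2 window."""
    def box(x):
        return uniform_filter(x, size=2 * r + 1, mode="reflect")  # window mean
    mu = box(I)                              # mean of the guidance, mu_k
    t_bar = box(p)                           # mean of the input transmittance
    var = box(I * I) - mu ** 2               # variance of the guidance, sigma_k^2
    a = (box(I * p) - mu * t_bar) / (var + eps)
    b = t_bar - a * mu
    return box(a) * I + box(b)               # average a_k, b_k over all windows


def refine_transmittance(enhanced_rgb, t, r=30, eps=1e-3):
    """Guide the rough transmittance t with the greyscale enhanced image."""
    gray = enhanced_rgb @ np.array([0.299, 0.587, 0.114])  # assumed luma weights
    return np.clip(guided_filter(gray, t, r, eps), 0.0, 1.0)
```

A small \(\varepsilon\) lets the output follow the guidance edges closely; a larger one smooths more strongly, which is the trade-off the radius and \(\varepsilon\) control.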

The input is the roughly estimated transmittance map of the sooty mural image, and the output is the transmittance map refined by the guided filter. Compared with the rough estimate, the refined map retains more detail, is clearer, suppresses blocking artefacts, is smoother overall and better reflects the transmittance caused by soot. We further devised a nonlinear transformation function to enhance the transmittance, given below:
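Reading the description that follows literally (a cubic term weighted by \(a\), a square term weighted by \(b\) and a constant \(c\)), and labelling the transformed transmittance \(t_{2}(x)\), the transform can be written as

\[ t_{2}(x) = a\, t_{1}(x)^{3} + b\, t_{1}(x)^{2} + c \tag{13} \]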

Here \(t_{1} \left( x \right)\) is the transmittance after guided filtering, with values from 0 to 1. A negative parameter \(a\) produces a reverse transformation: when \(t_{1} \left( x \right)\) is large, the negative cubic term reduces the transformed value, compressing high-intensity pixels. This is useful for adjusting highlights, reducing overexposure in bright areas and giving a more balanced appearance. The parameter \(b\), which multiplies the square of \(t_{1} \left( x \right)\), produces a forward transformation: a positive square term raises the transformed values in the range of smaller inputs, stretching low-intensity pixels. This improves contrast and makes darker details more prominent. The parameter \(c\) is a constant term that shifts the transformed values up or down, adjusting the overall brightness.
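The transform and the subsequent recovery can be sketched as follows, assuming the cubic-plus-square-plus-constant form described above. The text does not give values for \(a\), \(b\) and \(c\); the defaults below are hypothetical, chosen so that the curve increases monotonically on [0, 1] (its derivative \(t(2.4 - 1.5t)\) is non-negative there) and maps [0, 1] into [0.1, 0.8]. The recovery reuses the form of Eq. (8) with the transformed map, which is also an assumption.

```python
import numpy as np


def nonlinear_transmittance(t1, a=-0.5, b=1.2, c=0.1):
    """Hypothetical coefficients: a < 0 compresses high values, b > 0 lifts small ones."""
    t2 = a * t1 ** 3 + b * t1 ** 2 + c
    return np.clip(t2, 0.0, 1.0)


def restore(E, A, t2, t0=0.05):
    """Recovery of the form of Eq. (8), using the transformed transmittance t2."""
    A = np.broadcast_to(np.asarray(A, dtype=float), (3,))
    R = (E - A) / np.maximum(t2, t0)[..., None] + A
    return np.clip(R, 0.0, 1.0)
```

For example, `nonlinear_transmittance(np.array([0.5]))` evaluates \(-0.5 \cdot 0.125 + 1.2 \cdot 0.25 + 0.1 = 0.3375\).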

By applying a nonlinear transformation to the transmittance map, the algorithm aims to enhance contrast and highlight the variations in transmittance. This helps reveal more details obscured by soot and improves the overall visual quality of restored murals. As shown in Fig. 7, a comparison reveals that the transmittance map after nonlinear transformation exhibits clearer details and more pronounced soot traces. Therefore, the final expression for restoring the sooty mural is as follows:
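Assuming the nonlinearly transformed transmittance, written here as \(t_{2}(x)\), replaces \(t(x)\) in Eq. (8), the final expression is

\[ R(x) = \frac{E(x) - A}{\max\bigl(t_{2}(x),\, t_{0}\bigr)} + A \tag{14} \]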

Evaluation methods

Traditionally, subjective indicators for evaluating the effectiveness of virtual mural cleaning include visual interpretation and expert scoring. The visual interpretation method is an approach where images are analyzed and evaluated through manual visual inspection. Evaluators subjectively assess the processing results based on the appearance, details, and colours of the images. Expert scoring, on the other hand, relies on experts to perform the evaluation. Experts assign scores to the virtually cleaned images based on specific evaluation criteria29.

In terms of quantitative analysis, given the unique characteristics of sooty murals, traditional dehazing image evaluation metrics (such as contrast, edge strength, and structural information) are not applicable. Therefore, we propose two new objective evaluation metrics: Saturated Pixel Percentage (SPP) and Dark Channel Prior Deviation (DCPD).

SPP is an image quality index that measures the percentage of pixels whose saturation exceeds a given threshold. Saturation reflects the vividness of colours: the higher the saturation, the more vivid and lively the image appears. SPP is defined in Eq. (15),
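From the definitions that follow, Eq. (15) is

\[ \mathrm{SPP} = \frac{1}{M \times N} \sum_{i=1}^{M} \sum_{j=1}^{N} 1\bigl(S(i,j) > T\bigr) \times 100\% \tag{15} \]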

where \(M\) and \(N\) are the numbers of rows and columns of the image, so \(M \times N\) is the total number of pixels; \(S\left( {i,j} \right)\) is the saturation at pixel \(\left( {i,j} \right)\); \(T\) is the saturation threshold (set to 0.5 here), above which a pixel is considered saturated; and \(1\left( {S\left( {i,j} \right) > T} \right)\) is an indicator function that equals 1 when \(S\left( {i,j} \right) > T\) and 0 otherwise.

SPP assesses image quality through the proportion of high-saturation pixels. A low SPP means that the image colours are dull, whereas a high SPP indicates vivid, lively colours. In a sooty mural, the soot layer obscures the colours and makes the mural appear dull, concealing the original palette of the artwork. Effective virtual cleaning restores these colours and their vividness, bringing the cleaned image closer to its original state. A higher SPP value therefore indicates that more soot-covered pixels have been successfully cleaned, demonstrating the effectiveness of the virtual cleaning process.
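A minimal sketch of SPP as defined in Eq. (15) follows. The article does not state how the saturation \(S(i,j)\) is computed. This sketch assumes HSV saturation, S = (max - min)/max over the RGB channels, for images scaled to [0, 1]. It uses the threshold T = 0.5 given in the text. Both function names are hypothetical.

```python
import numpy as np

def hsv_saturation(rgb):
    """Assumed saturation definition: HSV S = (max - min) / max, 0 where max == 0."""
    rgb = np.asarray(rgb, dtype=np.float64)
    cmax = rgb.max(axis=2)
    cmin = rgb.min(axis=2)
    # Only divide where cmax > 0; black pixels keep saturation 0.
    return np.divide(cmax - cmin, cmax, out=np.zeros_like(cmax), where=cmax > 0)

def saturated_pixel_percentage(rgb, threshold=0.5):
    """SPP: percentage of the M x N pixels whose saturation exceeds the threshold T."""
    s = hsv_saturation(rgb)
    m, n = s.shape
    return 100.0 * np.count_nonzero(s > threshold) / (m * n)
```

Swapping in another saturation definition (for example, the S channel of HSL) would change the absolute SPP values. For that reason, comparisons between methods are only meaningful when all of them use the same definition.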

The dark channel prior states that, in most local patches of natural haze-free images, at least one colour channel (red, green, or blue) has some pixels with values close to zero. Based on this prior, the dark channel of an image can be computed as the minimum value over all colour channels within a local neighbourhood of each pixel. In haze-free images, dark channel values are typically small and close to zero. Haze removal algorithms built on this prior aim to make the dark channel of the dehazed image approximate that of a haze-free image, so ideally the dark channel values of a dehazed image should be close to zero. The effectiveness of haze removal can therefore be quantified by the average dark channel value of the dehazed image, which we refer to as the Dark Channel Prior Deviation (DCPD). DCPD is defined in Eq. (16):

\[\mathrm{DCPD} = \frac{1}{M \times N}\sum_{i=1}^{M}\sum_{j=1}^{N} I_{dark}\left( i,j \right) \tag{16}\]

where \(M\) and \(N\) are the number of rows and columns of the image, and \(M \times N\) represents the total number of pixels in the image; \(I_{dark} \left( {i,j} \right)\) is the dark channel value of the dehazed image at position \(\left( {i,j} \right)\).

A larger DCPD value therefore indicates that the dehazed image deviates from the dark channel prior, i.e. that dehazing performed poorly. A smaller value indicates that the image is closer to the expected haze-free state. The same metric can be used to compare methods for virtual soot cleaning: a smaller DCPD value indicates that the mural is closer to a soot-free state, reflecting more effective soot removal.
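DCPD can be sketched in the same way. The dark channel follows He et al.26: the per-pixel minimum over the RGB channels, followed by a local minimum filter. The text does not state the neighbourhood size used for DCPD. The default of 3 below simply mirrors the minimum filter window reported for the improved dark channel prior and is an assumption. Function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(rgb, window=3):
    """Dark channel: per-pixel min over RGB, then a window x window local minimum.

    Borders are edge-padded so the output has the same M x N shape; window must be odd.
    """
    per_pixel_min = np.asarray(rgb, dtype=np.float64).min(axis=2)
    pad = window // 2
    padded = np.pad(per_pixel_min, pad, mode="edge")
    patches = sliding_window_view(padded, (window, window))
    return patches.min(axis=(-2, -1))

def dcpd(rgb, window=3):
    """DCPD (Eq. (16)): mean dark-channel value over all M x N pixels; smaller is better."""
    return float(dark_channel(rgb, window).mean())
```

Because a single near-zero channel value spreads over the whole window, a larger window lowers DCPD for the same image. The window size should therefore be kept fixed when comparing methods.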

Results

The LIME model is particularly effective for images with uneven lighting and low brightness, characteristics common in sooty murals. By estimating and adjusting the illumination component of the image, the LIME model enhances the brightness, contrast, and details of the mural, revealing areas obscured by soot and significantly improving the overall visual quality. After LIME processing, the blackened sooty regions of the mural appear whitish, resembling hazy images. The dark channel prior assumes that in hazy images (excluding the sky), dark channel values tend to be high, giving a greyish-white appearance. In haze-free images, by contrast, they are low, so the dark channel map is predominantly dark. Comparing the dark channel maps of LIME-enhanced murals with those of their soot-free counterparts shows the same pattern: the soot-affected areas of the enhanced murals appear greyish-white, whereas the dark channel maps of soot-free murals are predominantly black (Fig. 6). This is consistent with the assumptions of the dark channel prior. Combining the LIME model with the dark channel prior therefore enabled virtual cleaning of the murals with promising results.
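The core idea of LIME22 can be illustrated in a few lines. This is a deliberately simplified, hypothetical version, not the model used in the study. It takes the initial illumination estimate as the per-pixel maximum over the RGB channels. It then applies the gamma adjustment (0.8, the value reported for this study) and divides the image by the adjusted illumination. The structure-aware refinement of the illumination map, which this study solves with the exact solver and Strategy I, is omitted, so the sketch only approximates the behaviour of the full model.

```python
import numpy as np

def lime_like_enhance(rgb, gamma=0.8, eps=1e-3):
    """Simplified LIME-style enhancement without illumination-map refinement.

    1. Initial illumination T(x): maximum of the RGB channels at each pixel.
    2. Gamma adjustment T <- T ** gamma, which tempers over-enhancement.
    3. Enhanced image R = L / max(T, eps), clipped to [0, 1].
    """
    L = np.asarray(rgb, dtype=np.float64)
    T = np.power(L.max(axis=2), gamma)
    R = L / np.maximum(T, eps)[..., None]
    return np.clip(R, 0.0, 1.0)
```

Since every channel value is at most T and T ** gamma is at most 1, the output is never darker than the input. Channel ratios within a pixel are preserved, so hue is largely unchanged while dark regions are brightened.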

In this section, a sooty mural is used as an example, and Fig. 8 shows the result of each processing step for this mural image. We also restored the entire mural of the Daheitian Hall (Fig. 9), demonstrating that the proposed method is effective for the virtual cleaning of larger murals. All experiments were performed on a PC with an Intel(R) Core(TM) i3-10100F quad-core CPU (3.20 GHz) and 16 GB of RAM. The optimal parameters were determined empirically through repeated experiments. For the LIME model, the best results were obtained with the exact solver and Strategy I, with the gamma-transformation factor for luminance adjustment set to 0.8. For the improved dark channel prior, the minimum filter window size was set to 3, the soot removal factor \(\omega\) to 0.95, the scattered light value caused by soot to 230, and the guided filter radius \(r\) to 16. The nonlinear transformation coefficients \(a, b\), and \(c\) were set to 0.7, 1.3, and 0.2, respectively, which gave the best restoration results. The virtual cleaning results show that the method effectively restores mural images contaminated by soot, approximating their appearance before degradation and providing an important reference for their conservation.
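The improved dark channel prior settings reported above can be assembled into a rough sketch of the transmission step. The sketch assumes the standard DCP transmission estimate of He et al.26, t = 1 - ω·dark(I/A). This estimate is then smoothed with a grey-scale guided filter in its usual box-filter formulation. The sketch uses window size 3, ω = 0.95, A = 230 (rescaled to 230/255 for images in [0, 1]) and r = 16. Several choices are assumptions:

- the regularisation `eps = 1e-3`;
- the channel mean as the guide image;
- all function names.

The article's nonlinear transformation with coefficients a, b and c is not reproduced, because its functional form is not stated here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(rgb, window=3):
    """Per-pixel min over RGB, then a window x window local minimum (edge-padded)."""
    per_pixel_min = np.asarray(rgb, dtype=np.float64).min(axis=2)
    pad = window // 2
    padded = np.pad(per_pixel_min, pad, mode="edge")
    return sliding_window_view(padded, (window, window)).min(axis=(-2, -1))

def box_filter(x, r):
    """Mean over a (2r+1) x (2r+1) window, truncated at the image borders."""
    h, w = x.shape
    # Integral image with a leading zero row/column: ii[i, j] == x[:i, :j].sum()
    ii = np.pad(x, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    rows, cols = np.arange(h), np.arange(w)
    y0, y1 = np.clip(rows - r, 0, h), np.clip(rows + r + 1, 0, h)
    x0, x1 = np.clip(cols - r, 0, w), np.clip(cols + r + 1, 0, w)
    s = ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]
    return s / np.outer(y1 - y0, x1 - x0)

def guided_filter(guide, src, r, eps):
    """Grey-scale guided filter: local linear model src ~ a * guide + b."""
    mean_i = box_filter(guide, r)
    mean_p = box_filter(src, r)
    cov_ip = box_filter(guide * src, r) - mean_i * mean_p
    var_i = box_filter(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_filter(a, r) * guide + box_filter(b, r)

def estimate_transmission(rgb, airlight=230 / 255, omega=0.95, window=3,
                          radius=16, eps=1e-3):
    """Coarse DCP transmission 1 - omega * dark(I / A), refined by a guided filter.

    Defaults mirror the reported window, omega, soot light value and radius;
    eps and the choice of guide image are assumptions.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    t_raw = 1.0 - omega * dark_channel(rgb / airlight, window)
    guide = rgb.mean(axis=2)  # assumed guide: channel mean of the input image
    refined = guided_filter(guide, t_raw, radius, eps)
    return np.clip(refined, 0.0, 1.0)
```

The guided filter makes the blocky minimum-filter estimate follow the edges of the guide image. A large radius such as r = 16 smooths the transmission over broad soot patches rather than individual pixels.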

Discussion

Restoration results using different images

Digital cameras are also commonly used to acquire images of murals. In this section, we therefore applied the proposed method to RGB images of the murals to evaluate its effectiveness for soot removal. The results are shown in Fig. 10a. Virtual cleaning is less effective in background areas covered by extensive soot, where soot interference remains severe and pattern and colour information is poorly recovered. Similarly, the virtual cleaning result using the true colour image (Fig. 10b) retains white flocculent material in heavily sooty areas, indicating limited removal efficacy. The result using the synthetic false colour image (Fig. 10c) shows partial soot removal in the background, but noticeable discolouration in the figures’ clothing. In contrast, the cleaning result using the fused image achieves the best visual effect (Fig. 10d).

Comparison with previous virtual cleaning methods

Because soot degradation is difficult to address, research on the virtual cleaning of sooty murals remains limited. A previous study18 compared several commonly used image enhancement algorithms, such as homomorphic filtering and Gaussian stretching, and found these classical methods to be ineffective. This paper therefore compares the proposed method directly with the method in18 and the state-of-the-art method in19.

Using the same dataset (samples 1, 2, and 3), we conducted a comprehensive comparison between the proposed method and the previous methods (Fig. 11). Visually, the restorations produced by the method in18 suffered significant degradation. The restored mural images lost substantial clarity: details within figures, such as eyes, headdresses, and clothing, were not rendered crisply, and some areas showed significant discolouration. In the background (enclosed in the yellow box), the method in18 removed soot incompletely. Distinct white soot traces remained visible, and the black lines in the background took on a bluish tint, detracting from the mural’s original appearance. Fine textures were also considerably blurred during restoration, losing their original richness of detail and clarity. In contrast, the method proposed in this paper produces visibly better results. The method in19 performed well on certain samples (such as sample 2), but on others (such as samples 1 and 3) its visual results were inferior to those of the proposed method.

We calculated the objective metrics described above for both the previous methods and the proposed method; the results are listed in Table 2. The proposed method outperforms the previous methods on most evaluation metrics.

Comparison with other image processing methods

To validate the advantages of combining the LIME model with the improved dark channel prior (IDCP), we combined several enhancement methods, including SSR, MSR, and MSRCR30, with the IDCP and compared them with the proposed method. We then combined several advanced dehazing techniques, including the original DCP26, RADE31, and SLP32, with the LIME model for further comparison. The results of the various method combinations are presented in Table 3. We cropped the outputs to the areas with more severe soot, as shown in Table 4, and calculated SPP and DCPD for these cropped areas; the results are shown in Fig. 12. The proposed method not only outperforms the other methods in visual quality but also achieves significantly better evaluation metrics.

Correctness of virtual cleaning results

We also reviewed existing research on the murals of the Qutan Temple. A previous study33 analysed the pigments of the Qutan Temple murals using X-ray diffraction, isotope X-ray fluorescence, optical microscopy, cross-sectional analysis, scanning electron microscopy with energy-dispersive spectrometry, and hyperspectral methods. It identified the red pigment as cinnabar, the blue pigment as azurite, and the yellow pigment as orpiment (Fig. 13). These findings agree with the main colours recovered by virtual cleaning, supporting the accuracy of the restored colour palette.

Virtual cleaning of other murals

We further verified the applicability of the proposed method on sooty murals from other walls. These murals (Fig. 14a,c,e), unlike those in the Daheitian Hall, are from the Baoguang Hall of Qutan Temple. Their virtual cleaning results (Fig. 14b,d,f) show that the method also performs well on other murals. Beyond sooty murals, we also applied the method to other types of cultural relics. Virtual cleaning of a polluted beam painting from the Great Bell Temple in Beijing (Fig. 14g) also produced a good result (Fig. 14h).

As a further test, we performed virtual cleaning on a heavily sooty mural from Houheilong Temple in Yanqing District, Beijing. The mural surface was covered with a thick layer of soot and numerous scratches that nearly obscured the original patterns (Fig. 15a). After virtual cleaning with the proposed method (Fig. 15b), some patterns in the upper right corner of the mural were faintly enhanced, but those in the lower right remained unclear. The proposed method therefore has limitations when dealing with severely sooty areas of murals. The SPP and DCPD values for this result (Table 5) likewise indicate that virtual cleaning is less effective for heavily sooty murals than for lightly or moderately sooty ones.

Conclusion

This paper presents an in-depth study of the virtual cleaning of soot from murals using hyperspectral data. We synthesize true colour and false colour images from different bands of the hyperspectral data. We then fuse them with a guided filter fusion technique into a new image of the sooty mural, which significantly aids subsequent virtual cleaning. Based on a combined analysis of histograms and colour distribution scatterplots, we enhanced the fused mural images using the LIME model. To further improve the dark channel prior for virtual cleaning, we use a guided filter and a specially designed nonlinear function to refine the soot-affected transmittance map, leading to more effective soot removal. Applied to sooty temple murals, our method not only overcomes the limitations of previous methods but also restores the colours and information of the murals.

However, the proposed method has limitations in the virtual cleaning of heavily sooty murals. Future research will investigate two questions. The first is whether shortwave infrared (SWIR) imaging provides better penetration through heavily sooty areas. The second is whether integrating SWIR data with image processing could overcome these limitations. Further studies will also explore a network model for virtual cleaning that enables rapid, simultaneous processing of multiple mural images. In addition, given the current hardware capabilities and capture speed of hyperspectral imaging devices, acquiring data for particularly large murals may be challenging, so we will also follow the development of new hyperspectral imaging equipment.

Data availability

The data are available from the corresponding author.

References

Al-Emam, E., Motawea, A. G., Caen, J. & Janssens, K. Soot removal from ancient Egyptian complex painted surfaces using a double network gel: empirical tests on the ceiling of the sanctuary of Osiris in the temple of Seti I—Abydos. Herit. Sci. 9, 1. https://doi.org/10.1186/s40494-020-00473-1 (2021).

Acke, L., De Vis, K., Verwulgen, S. & Verlinden, J. Survey and literature study to provide insights on the application of 3D technologies in objects conservation and restoration. J. Cult. Herit. 49, 272–288. https://doi.org/10.1016/j.culher.2020.12.003 (2021).

Zhang, Z., Xiong, K. & Huang, D. Natural world heritage conservation and tourism: a review. Herit. Sci. 11, 55. https://doi.org/10.1186/s40494-023-00896-6 (2023).

Priego, E., Herráez, J., Denia, J. L. & Navarro, P. Technical study for restoration of mural paintings through the transfer of a photographic image to the vault of a church. J. Cult. Herit. 58, 112–121. https://doi.org/10.1016/j.culher.2022.09.023 (2022).

Ciortan, I.-M., George, S. & Hardeberg, J. Y. Colour-balanced edge-guided digital inpainting: applications on artworks. Sensors 21, 2091. https://doi.org/10.3390/s21062091 (2021).

Sipiran, I., Mendoza, A., Apaza, A. & Lopez, C. Data-driven restoration of digital archaeological pottery with point cloud analysis. Int. J. Comput. Vis. 130, 2149–2165. https://doi.org/10.1007/s11263-022-01637-1 (2022).

Rakhimol, V. & Maheswari, P. U. Restoration of ancient temple murals using cGAN and PConv networks. Comput. Graph. 109, 100–110. https://doi.org/10.1016/j.cag.2022.11.001 (2022).

Galiana, M., Más, Á., Lerma, C., Jesús Peñalver, M. & Conesa, S. Methodology of the virtual reconstruction of arquitectonic heritage: ambassador Vich’s Palace in Valencia. Int. J. Archit. Herit. 8, 94–123. https://doi.org/10.1080/15583058.2012.672623 (2014).

Sun, X. et al. Structure-guided virtual restoration for defective silk cultural relics. J. Cult. Herit. 62, 78–89. https://doi.org/10.1016/j.culher.2023.05.016 (2023).

Cao, J., Li, Y., Cui, H. & Zhang, Q. Improved region growing algorithm for the calibration of flaking deterioration in ancient temple murals. Herit. Sci. 6, 67. https://doi.org/10.1186/s40494-018-0235-9 (2018).

Lv, C., Li, Z., Shen, Y., Li, J. & Zheng, J. SeparaFill: Two generators connected mural image restoration based on generative adversarial network with skip connect. Herit. Sci. 10, 135. https://doi.org/10.1186/s40494-022-00771-w (2022).

Striova, J., Dal Fovo, A. & Fontana, R. Reflectance imaging spectroscopy in heritage science. La Rivista del Nuovo Cimento 43, 515–566. https://doi.org/10.1007/s40766-020-00011-6 (2020).

Filippidis, G. et al. Nonlinear imaging and THz diagnostic tools in the service of Cultural Heritage. Appl. Phys. A 106, 257–263. https://doi.org/10.1007/s00339-011-6691-7 (2012).

Cucci, C., Picollo, M., Stefani, L. & Jiménez, R. The man who became a Blue Glass. Reflectance hyperspectral imaging discloses a hidden Picasso painting of the Blue period. J. Cult. Herit. 62, 484–492. https://doi.org/10.1016/j.culher.2023.07.003 (2023).

Han, D., Ma, L., Ma, S. & Zhang, J. The digital restoration of painted patterns on the No. 2 Qin bronze chariot based on hyperspectral imaging. Archaeometry 62, 200–212. https://doi.org/10.1111/arcm.12516 (2020).

Cucci, C. et al. Remote-sensing hyperspectral imaging for applications in archaeological areas: non-invasive investigations on wall paintings and on mural inscriptions in the Pompeii site. Microchem. J. 158, 105082. https://doi.org/10.1016/j.microc.2020.105082 (2020).

Han, X., Hou, M., Zhu, G., Wu, Y. & Ding, X. Extracting graphite sketch of the mural using hyper-spectral imaging method. Tehnicki vjesnik/Technical Gazette 22. https://doi.org/10.17559/TV-20150521123645 (2015).

Cao, N. et al. Restoration method of sootiness mural images based on dark channel prior and Retinex by bilateral filter. Herit. Sci. 9, 30. https://doi.org/10.1186/s40494-021-00504-5 (2021).

Sun, P. et al. Virtual cleaning of sooty murals in ancient temples using twice colour attenuation prior. Comput. Graph. 120, 103924. https://doi.org/10.1016/j.cag.2024.103924 (2024).

Harris, J. R., Rogge, D., Hitchcock, R., Ijewliw, O. & Wright, D. Mapping lithology in Canada’s Arctic: application of hyperspectral data using the minimum noise fraction transformation and matched filtering. Can. J. Earth Sci. 42, 2173–2193. https://doi.org/10.1139/e05-064 (2006).

Li, S., Kang, X. & Hu, J. Image fusion with guided filtering. IEEE Trans. Image Process. 22, 2864–2875. https://doi.org/10.1109/TIP.2013.2244222 (2013).

Guo, X., Li, Y. & Ling, H. LIME: low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 26, 982–993. https://doi.org/10.1109/TIP.2016.2639450 (2017).

Narasimhan, S. G. & Nayar, S. K. Vision and the atmosphere. Int. J. Comput. Vis. 48, 233–254. https://doi.org/10.1023/A:1016328200723 (2002).

Narasimhan, S. G. & Nayar, S. K. Chromatic framework for vision in bad weather. In Proceedings IEEE Conference on Computer Vision and Pattern Recognition. CVPR 2000 (Cat. No.PR00662), vol. 591, 598–605 (2000).

Fattal, R. Single image dehazing. ACM Trans. Graph. 27, 1–9. https://doi.org/10.1145/1360612.1360671 (2008).

He, K., Sun, J. & Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353. https://doi.org/10.1109/TPAMI.2010.168 (2011).

Ullah, E., Nawaz, R. & Iqbal, J. Single image haze removal using improved dark channel prior. In 2013 5th International Conference on Modelling, Identification and Control (ICMIC), 245–248 (2013).

He, K. & Sun, J. Fast guided filter. arXiv preprint arXiv:1505.00996. https://doi.org/10.48550/arXiv.1505.00996 (2015).

Cao, J., Li, Y., Zhang, Q. & Cui, H. Restoration of an ancient temple mural by a local search algorithm of an adaptive sample block. Herit. Sci. 7, 39. https://doi.org/10.1186/s40494-019-0281-y (2019).

Tian, F., Wang, M. & Liu, X. Low-light mine image enhancement algorithm based on improved Retinex. Appl. Sci. 14, 2213. https://doi.org/10.3390/app14052213 (2024).

Li, Z., Zheng, X., Bhanu, B., Long, S., Zhang, Q. & Huang, Z. Fast region-adaptive defogging and enhancement for outdoor images containing sky. In 2020 25th International Conference on Pattern Recognition (ICPR), 8267–8274 (2021).

Ling, P., Chen, H., Tan, X., Jin, Y. & Chen, E. Single image dehazing using saturation line prior. IEEE Trans. Image Process. 32, 3238–3253. https://doi.org/10.1109/TIP.2023.3279980 (2023).

Gao, Z. et al. Application of hyperspectral imaging technology to digitally protect murals in the Qutan temple. Herit. Sci. 11, 8. https://doi.org/10.1186/s40494-022-00847-7 (2023).

Acknowledgements

The research project "Protection of Murals in Qutan Temple" was conducted in collaboration with the Dunhuang Academy, which generously provided its expertise and research resources to support this study. The authors express sincere gratitude to the dedicated staff of both Dunhuang Academy and Qutan Temple for their valuable contributions. We thank Cheng Cheng of the Ancient Bell Museum of Great Bell Temple and Zhang Tao of the Institute of Archaeology for the data on the coloured paintings on the beams of Great Bell Temple. This research was supported by the National Key Research and Development Program of China (No. 2022YFF0904400) and the National Natural Science Foundation of China (No. 42171356, No. 42171444).

Author information


Contributions

PYS: Conceptualization, Methodology, Writing—original draft. MLH: Methodology, Writing—review and editing, Funding acquisition. SQL: Methodology, Investigation, Funding acquisition. SNL: Editing, Investigation, Supervision. WFW: Project administration, Data curation. CC: Project administration, Data curation. TZ: Data curation.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sun, P., Hou, M., Lyu, S. et al. Virtual cleaning of sooty mural hyperspectral images using the LIME model and improved dark channel prior. Sci Rep 14, 24807 (2024). https://doi.org/10.1038/s41598-024-75801-7


DOI: https://doi.org/10.1038/s41598-024-75801-7