Abstract

Tapping line detection and rubber tapping pose estimation are challenging tasks in rubber plantation environments for rubber tapping robots. This study proposed a method for tapping line detection and rubber tapping pose estimation based on improved YOLOv8 and RGB-D information fusion. Firstly, YOLOv8n was improved by introducing the CFB module into the backbone, adding an output layer into the neck, fusing the EMA attention mechanism into the neck, and modifying the loss function as NWD to realize multi-object detection and segmentation. Secondly, the trunk skeleton line was extracted by combining level set and ellipse fitting. Then, the new tapping line was located by combining edge detection and geometric analysis. Finally, the rubber tapping pose was estimated based on the trunk skeleton line and the new tapping line. The detection results from 597 test images showed the improved YOLOv8n’s detection mAP0.5, segmentation mAP0.5, and model size were 81.9%, 72.9%, and 6.06 MB, respectively. The improved YOLOv8n’s effect and efficiency were superior compared to other networks, and it could better detect and segment natural rubber tree image targets in different scenes. The pose estimation results from 300 new tapping lines showed the average success rate and average time consumed for rubber tapping pose estimation were 96% and 0.2818 s, respectively. The positioning errors in x, y, and z directions were 0.69 ± 0.51 mm, 0.73 ± 0.4 mm, and 1.07 ± 0.56 mm, respectively. The error angles in a, o, and n directions were 1.65° ± 0.68°, 2.53° ± 0.88°, and 2.26° ± 0.89°, respectively. Therefore, this method offers an effective solution for rubber tapping pose estimation and provides theoretical support for the development of rubber tapping robots.

Introduction

The natural rubber tree is one of the most important economic crops in the world. Natural rubber is widely used in industrial production, national defense equipment, and other fields because of its excellent comprehensive properties. It is an indispensable industrial raw material and strategic material1,2. At present, natural rubber is mainly obtained by manually tapping the bark of rubber trees3. However, manual rubber tapping has high labor intensity, poor working environments, demanding working hours, high technical requirements, and high labor costs4. In addition, the price of rubber has been at a low ebb for a long time, and the income of rubber workers has significantly declined. Young people are unwilling to tap rubber, resulting in a serious loss of rubber workers. The shortage of rubber workers is becoming increasingly prominent, causing rubber plantations to abandon tapping and management, seriously hindering the healthy and sustainable development of the natural rubber industry5. Therefore, it is imperative to develop intelligent rubber tapping robots to replace manual rubber tapping. Among them, tapping line detection and rubber tapping pose estimation are key technologies of robot intelligent rubber tapping and are necessary guarantees for effective rubber tapping.

When the rubber tapping robot operates in the rubber plantation environment, the lighting conditions are complex, the object color and morphological characteristics are similar to the environment, and the color, texture, and shape of different tree ages and tree shapes vary greatly. These non-structural and uncertain factors make tapping line detection in a rubber plantation environment extremely challenging. In addition, if there is only position information about the new tapping line, the end effector of the rubber tapping robot is likely to collide with the non-new tapping line area of the rubber tree when moving toward the new tapping line, resulting in a reduced rubber tapping success rate, damage to the tree, and reduced production. For each new tapping line, it is very important to estimate its rubber tapping pose. Along the estimated rubber tapping pose, the end effector can move toward the new tapping line without colliding with the non-new tapping line area of the rubber tree.

Several studies have addressed tapping line detection. The methods fall roughly into two groups: traditional image processing and deep learning. Traditional image processing methods rely mainly on color and other feature information, using threshold segmentation and color difference methods to detect the tapping line6. They adapt poorly when no latex flows out, when lighting changes sharply, and in complex rubber plantation environments, and they detect only a single type of tapping line. To solve these problems, researchers introduced tapping line detection methods based on deep learning. Sun et al.7 proposed a tapping line detection method based on an improved YOLOv5. Chen et al.8 proposed an improved mask region-based convolutional neural network (Mask R-CNN) algorithm to detect the tapping line in a rubber plantation environment. Both methods achieve good detection results, but neither can detect tapping lines at night, when rubber tapping is usually carried out (it typically starts at 2 a.m.). The former only roughly locates the object through its bounding box and cannot accurately obtain the object’s contour and shape information, so it cannot meet the requirements of rubber tapping pose estimation. The latter obtains contour and shape information but detects and segments only the tapped area, whereas rubber tapping pose estimation requires tree trunk information as well as tapping line information, so it also cannot meet these requirements. Moreover, Mask R-CNN is a two-stage object detection algorithm with a large model size, many parameters, and a high computational cost, which makes it hard to deploy on small mobile devices.
Therefore, an object detection algorithm with both detection and segmentation functions is necessary for tapping line detection in rubber plantations. The algorithm should have multi-object detection and segmentation capabilities to meet the needs of rubber tapping pose estimation. At the same time, it should also have the characteristics of a small model size, few parameters, fast detection speed, and high detection and segmentation accuracy. As the current excellent single-stage object detection algorithm, You Only Look Once (YOLO) is widely used in the agricultural field due to its excellent performance and fast detection speed9,10,11,12,13,14,15. Among them, YOLOv8 was released by Ultralytics on the GitHub website in 2023 and can simultaneously complete tasks such as object classification, object detection, and object segmentation. YOLOv8 builds on the success of previous YOLO versions, introducing new features and improvements to further improve performance and flexibility. YOLOv8 is divided into five versions according to the depth and width of the network structure. Among them, the YOLOv8n model size is the smallest, and the number of parameters is the least. It can maintain the minimum storage occupation and the fastest recognition speed while ensuring high detection and segmentation accuracy, which is conducive to installation and implementation on mobile devices. Therefore, YOLOv8n has great potential for natural rubber tree tapping line detection in rubber plantation environments. However, when YOLOv8n is used for tapping line detection tasks, it has a good effect on the detection and segmentation of large, medium-sized, and salient objects. For small objects and natural rubber tree images with complex backgrounds, the detection and segmentation performance of YOLOv8n is not ideal. 
Therefore, YOLOv8n needs to be improved in aspects such as feature extraction and feature fusion to meet the needs of natural rubber tree tapping line detection in natural environments.

Currently, there are no research reports on rubber tapping pose estimation. In the agricultural field, research on pose estimation mainly focuses on fruit pose estimation. Sun et al.16 proposed a multi-task learning model to locate the center point of citrus and estimate the pose of citrus. Kim et al.17 proposed a deep learning network for tomato ripeness classification and six-dimensional (6D) pose estimation. Hussain et al.18 used Mask R-CNN for instance segmentation of green apple fruits and stems and then used principal component analysis to estimate the fruit pose. These methods can directly estimate the target pose from red, green, blue (RGB) images and speed up the pose estimation inference time, but they are only suitable for similar-to-circle targets. Furthermore, the lack of depth sensors limits the acquisition of target three-dimensional (3D) information, and the estimation of rubber tapping pose depends on the 3D information of the tree trunk skeleton. This method of estimating the target pose directly from RGB images is not suitable for rubber tapping pose estimation. Chen et al.19 proposed a method for tea bud detection and tea bud pose estimation using a depth camera in a tea garden environment for tea picking robots. Retsinas et al.20 proposed a method for mushroom detection and 3D pose estimation using RGB and depth information. Lin et al.21 proposed a method of fruit detection and pose estimation based on an RGB-depth (RGB-D) sensor for automatic and collision-free picking of guavas by a robot. Luo et al.22 proposed a method for object detection and 6D pose estimation using a depth camera for collision-free grape picking by robots in unstructured environments. These studies point out that target pose estimation can’t be performed only through RGB images. Depth information is crucial for target pose estimation. In actual scenes, the 3D pose information of the target is more critical. 
Similarly, depth information is indispensable for rubber tapping pose estimation. For this reason, this study proposes a rubber tapping pose estimation method based on RGB-D information fusion.

In summary, in order to solve the problem of tapping line detection and rubber tapping pose estimation for natural rubber trees in a natural environment at the same time, this study proposes a tapping line detection and rubber tapping pose estimation method for natural rubber trees based on improved YOLOv8n and RGB-D information fusion, which provides theoretical support for the rubber tapping robot’s vision system development and the end-effector’s motion planning in future research. The main contributions are summarized as follows:

(1) A data set suitable for natural rubber tree tapping line detection and rubber tapping pose estimation under multi-angle, multi-light, and all-weather conditions was established.

(2) The CSPLayer_2Conv (C2F) module of the backbone network was replaced by the convolution focal block (CFB) module, an output layer was added into the neck network, the efficient multi-scale attention (EMA) mechanism was fused into the neck network, and the Complete-IoU (CIoU) loss was replaced by the Normalized Wasserstein Distance (NWD) loss to improve YOLOv8n. The improved YOLOv8n was used to realize the multi-object detection and segmentation of the natural rubber tree image.

(3) On the basis of the detection and segmentation of the natural rubber tree image, the trunk skeleton was extracted by the combination of level set and ellipse fitting, and the new tapping line was located by the combination of edge detection and geometric analysis. Then, the rubber tapping pose was calculated by the combination of the trunk skeleton and the new tapping line to realize the rubber tapping pose estimation.

(4) The tapping line detection and rubber tapping pose estimation methods based on improved YOLOv8n and RGB-D information fusion were trained and tested to accurately detect and segment the object on the natural rubber tree image and effectively estimate the rubber tapping pose.

The remaining parts are organized as follows: Section “Materials and methods” introduces the composition of the image dataset, the tapping line detection model, and the rubber tapping pose estimation algorithm. Section “Results and discussion” gives the experimental results of tapping line detection and rubber tapping pose estimation and analyzes and discusses them in detail. Section “Conclusions” gives the main conclusions of the study and suggestions for future research.

Materials and methods

Image acquisition and dataset creation

Natural rubber trees in their natural growth state were taken as the experimental objects. In four natural rubber plantations in Danzhou City, Hainan Province, China (the red boxes in Fig. 1a and b), a digital camera (Sony DSC-RX100) and an RGB-D camera (Microsoft Kinect V2) were used to photograph natural rubber trees from multiple angles (Fig. 1c). Images were acquired in sunny weather, in cloudy weather, and at night (an LED light provided supplementary lighting at night). The photographed trees had been tapped for one year, two years, or three or more years. The digital camera collected 800 RGB images. The imaging distance range of the camera was 300–1200 mm, and the image resolution was 5472 × 3648 pixels. The RGB-D camera was connected to a laptop (Lenovo Legion Y7000 2021) and collected 700 sets of RGB-D images. The imaging distance range of the RGB-D camera was 500–1500 mm. The 700 depth images were obtained by the infrared camera at a resolution of 512 × 424 pixels, and the 700 RGB images were obtained by the RGB camera at a resolution of 1920 × 1080 pixels. In total, 1500 RGB images and 700 depth images were collected. All images included the tapped area, the tapping line, the natural rubber tree, and the complex rubber plantation environment. As shown in Fig. 1c, the tapping line lies within the tapped area, so detecting the tapped area also detects the tapping line.

Image acquisition and mask visualization. (a) and (b) are image acquisition locations (figures were generated by the software Google Earth Pro 7.3.6.9796, and the URL link is https://earth.google.com/web/). (c) Image acquisition. (d) Mask visualization.

To reduce the calculation amount and running time of network model training, the image collected by the digital camera was scaled to 1920 × 1080 pixels, which was consistent with the RGB image pixels collected by the RGB-D camera. To improve the effect and generalization ability of the network training model, the collected RGB images were expanded using data enhancement methods. The collected 1500 RGB images were expanded to 2994 by increasing or decreasing brightness, color, and contrast. The outer contours of the tapped area, trunk, start area, and end area (Fig. 1d) in the RGB images of the natural rubber tree were labeled using the data annotation software Labelme to generate label files in json format, which were then converted into txt format and coco format using the json to txt and json to coco scripts to complete the production of RGB image data labels. To avoid the singularity within the image samples and ensure that the image set contained natural rubber tree images under various natural conditions, 597 images of the entire RGB image data set were randomly selected as the test set, and the remaining 2397 images were used as the training set. Among them, the test set included 297 RGB images and 300 sets of RGB-D images. To evaluate the performance of the rubber tapping pose estimation algorithm, the optimal rubber tapping pose needs to be obtained. The steps to obtain the optimal rubber tapping pose are as follows: For the 300 sets of RGB-D images in the test set, firstly, Labelme was used to mark the trunk and optimal tapping line in the RGB image. Then, the RGB image was registered with the depth image to obtain the trunk point cloud and the optimal tapping line point cloud. Finally, the optimal rubber tapping pose was marked in MATLAB.
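The json-to-txt conversion mentioned above can be sketched as follows. This is a minimal illustration, not the authors' script: it assumes the usual Labelme fields (`shapes`, `label`, `points`, `imageWidth`, `imageHeight`) and the YOLO segmentation text layout (class index followed by polygon coordinates normalized to [0, 1]). The class names in `CLASS_IDS` are hypothetical labels chosen for the four annotated regions.

```python
# Hypothetical label names for the four annotated regions (assumption).
CLASS_IDS = {"tapped_area": 0, "trunk": 1, "start_area": 2, "end_area": 3}

def labelme_to_yolo_seg(record, class_ids=CLASS_IDS):
    """Convert one parsed Labelme JSON record into YOLO segmentation lines."""
    width = record["imageWidth"]
    height = record["imageHeight"]
    lines = []
    for shape in record["shapes"]:
        # Only polygon outlines are converted in this sketch.
        if shape.get("shape_type", "polygon") != "polygon":
            continue
        coords = []
        for x, y in shape["points"]:
            # Normalize pixel coordinates by the image size.
            coords.append(x / width)
            coords.append(y / height)
        fields = [str(class_ids[shape["label"]])]
        fields += [f"{c:.6f}" for c in coords]
        lines.append(" ".join(fields))
    return lines
```

Each returned string corresponds to one line of the txt label file for the image; reading the JSON file with `json.load` and writing the lines to disk are left to the caller.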

Improved YOLOv8n for natural rubber tree image detection and segmentation

The backbone network, neck network, and loss function of YOLOv8n were improved. The improved YOLOv8n was named YOLOv8n-rubber. The model structure of YOLOv8n-rubber is shown in Fig. 2. YOLOv8n-rubber was mainly composed of the backbone network, the neck network, the prototype generation branch (Protonet), and the prediction head branch. The backbone network consisted of Conv2d + BN + SiLU (CBS), CFB, and spatial pyramid pooling fast (SPPF) modules, which extracted features from input natural rubber tree images and generated C1, C2, C3, C4, and C5 feature maps. The neck network adopted a feature pyramid network (FPN) and path aggregation network (PAN) structure. C2, C3, C4, and C5 generated by the backbone network were input to the neck network. After multi-scale feature fusion, P2, P3, P4, and P5 feature maps were generated to achieve multi-scale feature information fusion of natural rubber tree images and also provide the basis for the parallel prototype generation branch and prediction head branch. The prototype generation branch took the output P2 of the neck network as input and generated k prototype masks with different regional responses through convolution and upsampling (Upsample) operations to achieve the separation of the background and foreground. The prediction head branch took the outputs P2, P3, P4, and P5 of the neck network as input and used the decoupled head structure to infer the class probability and position information of the predicted object to generate nc class confidences and four bounding box regressors of the predicted object. In addition, a mask coefficient prediction branch was added to the prediction head branch to predict nm mask coefficients. The non-maximum suppression (NMS) was used to filter the categories, bounding box information, and mask coefficients generated by the prediction head branch. 
After the redundant objects were removed, the mask coefficients were linearly combined with the prototype masks to obtain the initial segmentation mask of each object. The initial segmentation mask was cropped to the filtered bounding box from the prediction head branch, and the final segmentation mask was then obtained by thresholding, achieving multi-object detection and segmentation of the natural rubber tree image. The specific improvement details are as follows:
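The mask assembly step described above (linear combination of prototypes and coefficients, cropping to the box, thresholding) can be illustrated with a short NumPy sketch. It is a simplified illustration under stated assumptions, not the network's actual post-processing code: a sigmoid is applied before thresholding, boxes are given as (x1, y1, x2, y2) in prototype-resolution pixels, the threshold is 0.5, and the function name `assemble_masks` is hypothetical.

```python
import numpy as np

def assemble_masks(protos, coeffs, boxes, threshold=0.5):
    """protos: (k, h, w) prototype masks; coeffs: (n, k) mask coefficients
    of the n objects kept after NMS; boxes: (n, 4) as x1, y1, x2, y2."""
    k, h, w = protos.shape
    # Linear combination of the k prototypes for every kept object.
    logits = (coeffs @ protos.reshape(k, h * w)).reshape(-1, h, w)
    probs = 1.0 / (1.0 + np.exp(-logits))  # assumed sigmoid activation
    # Crop: zero out everything outside each object's bounding box.
    rows = np.arange(h)[None, :, None]
    cols = np.arange(w)[None, None, :]
    x1, y1, x2, y2 = (boxes[:, i][:, None, None] for i in range(4))
    inside = (cols >= x1) & (cols < x2) & (rows >= y1) & (rows < y2)
    # Threshold to obtain the final binary masks.
    return (probs * inside) > threshold
```

The result is a boolean array of shape (n, h, w); resizing the masks to the input image resolution is omitted here.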

Improved backbone network

The original backbone network of YOLOv8n is composed of CBS, C2F, and SPPF modules. When extracting features from natural rubber tree images, there are problems such as poor ability to extract subtle features and insufficient feature extraction of small targets. To solve the above problems, a CFB module was designed by combining the focal block of the cascade fusion network23. As shown in Fig. 2, the C2F of the YOLOv8n backbone network was replaced with CFB, and the number of repetitions of layer 6 was changed from 6 to 9 to enhance the small target and subtle feature extraction ability of the backbone network and improve the accuracy of network detection and segmentation.

On the basis of C2F, CFB was obtained by replacing the Bottleneck in C2F with the Focal Next block in the cascade fusion network. The network structure of the CFB module is shown in Fig. 3a. CBS receives the input feature map of size h × w × c1 and generates the intermediate feature map of size h × w × c2. Split divides the intermediate feature map into two parts. One part is passed directly to Concat, and the other part is passed to n Focal Next blocks for a series of convolution, normalization, and activation operations. Concat splices the feature map passed directly to it with the feature maps generated by the n Focal Next blocks. The spliced feature map of size h × w × (n + 2)c2/2 is processed by CBS to generate the final output feature map of size h × w × c2. The CFB module extracts and converts the features of the input feature map through the operations of feature conversion, branch processing, and feature fusion, and generates an output feature map with stronger representational ability so that the backbone network can better capture the complex features in the image and prevent the loss of small targets and subtle feature information. The network structure of the Focal Next block is shown in Fig. 3b. A dilated depth-wise convolution and two skip connections are introduced into the ConvNeXt block designed with an inverted bottleneck layer, which can simultaneously merge fine-grained local interactions and coarse-grained global interactions, use global attention or large convolution kernels to expand the receptive field, prevent the loss of small target feature information, improve the accuracy of target detection and segmentation, and reduce the number of network parameters. In Fig. 3b, the orange part represents the dilated depth-wise convolution operation and skip connection operation added to the ConvNeXt block, n is the channel number of the output feature, d7 × 7 is the 7 × 7 depth-wise convolution, r is the dilation rate of the additional convolution, 1 × 1 is the 1 × 1 convolution, GELU is an activation unit, and LN is the layer normalization.
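The channel count of the spliced feature map, (n + 2)c2/2, can be checked with a small NumPy sketch of the split and concatenation flow. This assumes the C2F-style layout in which both halves of the split and the output of each of the n blocks are concatenated, which is what the stated channel count implies. The Focal Next block is replaced by a placeholder that preserves the shape, and the two CBS layers are omitted; the function name is hypothetical.

```python
import numpy as np

def cfb_concat_flow(intermediate, n, block=lambda t: t):
    """intermediate: (h, w, c2) feature map produced by the first CBS.
    block: stand-in for one Focal Next block (shape preserving)."""
    # Split the intermediate map into two halves along the channel axis.
    direct, branch = np.split(intermediate, 2, axis=-1)
    outputs = [direct, branch]
    # Chain n blocks; every block output is kept for concatenation.
    for _ in range(n):
        outputs.append(block(outputs[-1]))
    # Spliced map with (n + 2) * c2 / 2 channels.
    return np.concatenate(outputs, axis=-1)
```

For example, with c2 = 16 and n = 3 the spliced map has 5 × 8 = 40 channels, which the final CBS would then project back to c2 channels.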

Improved neck network

The detection and segmentation accuracy of small targets can be enhanced by improving the backbone network. However, due to the anisotropy of natural rubber trees and the different distance between the acquisition camera and the target, the target scale changes greatly, and the small target detection and segmentation effect is still not good. To solve the above problems, from the perspective of image feature fusion, the YOLOv8n neck network was improved. A new output layer was added to the YOLOv8n neck network, and the EMA module24 was fused to the YOLOv8n neck network.

The new output layer P2 of the YOLOv8n neck network was introduced through the C2 layer. As shown in Fig. 2, the C2 layer underwent only two downsampling operations in the backbone network and contained richer low-level feature information about the target. As shown in the red dashed box in Fig. 2, the C5, C4, and C3 features were fused top-down to form a new feature. A Concat operation was performed between this new feature, after it had passed through C2F, CBS, and Upsample, and the shallow feature output C2. After the Concat result passed through the C2F and CBS modules, the new output feature layer P2 of the neck network was obtained. At the same time, the output features of the original YOLOv8n neck network were fused with the bottom-up P2 to form the new output layers P3, P4, and P5 of the neck network. Furthermore, an additional CBS module was added to the neck network to assist the C2F module with feature extraction. The additional CBS is marked with a # in Fig. 2. The four feature outputs of different scales from the neck network were used as the inputs of the prototype generation branch and the prediction head branch, which can effectively alleviate the negative impact of scale variance and improve the accuracy of small object detection and segmentation. Although introducing the new output layer of the neck network into the prototype generation branch and the prediction head branch increases the computational cost of the model to a certain extent, it greatly improves the detection and segmentation of small targets.

Because the growth environment of natural rubber trees contains non-target objects such as weeds and soil ridges, the input image contains considerable noise. The extracted feature maps, and the feature layers output after neck network feature fusion, therefore also contain noise interference, which affects the accuracy of model detection and segmentation. Therefore, the lightweight attention mechanism module EMA was introduced at the end of each output layer of the neck network, as shown in Fig. 2. In addition, EMA was involved in the bottom-up feature fusion of the neck network, which further improved the ability of each output layer of the neck network to extract target information from the natural rubber tree image and suppressed invalid redundant information, thereby improving the detection and segmentation accuracy of the network model.

The overall structure of EMA24 is shown in Fig. 4. For the input feature map \(F \in \mathbb{R}^{C \times H \times W}\), first, the input feature map is divided along the channel dimension into G groups of sub-features to learn different semantic information. Second, three parallel paths (two paths are 1 × 1 branches, and the third path is the 3 × 3 branch) are used to extract the attention weight descriptors of the grouped feature maps. The 1 × 1 branches adopt 1D global average pooling operations to encode channel information in the H and W spatial directions, respectively. The 3 × 3 branch captures multi-scale feature representations through 3 × 3 convolution. Then, two tensors (the output of the 1 × 1 branch and the output of the 3 × 3 branch) are introduced for cross-spatial learning to achieve richer feature aggregation. The output of the 1 × 1 branch encodes global spatial information through 2D global average pooling, while the output of the 3 × 3 branch is directly reshaped into the corresponding dimensions. The two outputs are multiplied through a matrix dot product operation, i.e., \(\mathbb{R}_{1}^{1 \times C//G} \times \mathbb{R}_{3}^{C//G \times HW}\), to obtain the first spatial attention map. In addition, 2D global average pooling is used to encode the global spatial information of the output of the 3 × 3 branch, while the output of the 1 × 1 branch is directly reshaped into the corresponding dimensions. After \(\mathbb{R}_{3}^{1 \times C//G} \times \mathbb{R}_{1}^{C//G \times HW}\), the second spatial attention map is obtained. Finally, the output feature map within each group is calculated as the aggregation of the two generated spatial attention weight values, followed by a sigmoid function. The final output of EMA is the same size as the input feature map F, making it easy to integrate into existing network architectures.
EMA not only avoids dimension reduction by processing similar to coordinate attention but also captures features on a larger scale through 3 × 3 convolution and aggregates the output feature maps of multiple parallel sub-networks through cross-spatial learning methods. EMA can effectively extract image features at different scales, perform weighted fusion of features on input images with different spatial resolutions, and extract multi-scale representations with rich feature information while maintaining high efficiency.

Improved loss function

The loss function of YOLOv8n is shown in Eq. (1), which consists of classification loss, regression loss, and mask loss. The classification loss and mask loss are calculated by the binary cross-entropy loss, while the regression loss is computed by the distribution focal loss and CIoU loss.
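A form of Eq. (1) consistent with the symbol definitions that follow and with the standard definitions of binary cross-entropy, distribution focal loss, and CIoU loss can be written as below. This is a sketch rather than the exact typeset equation: the DFL probabilities \(S_j\) and \(S_{j+1}\) and the CIoU trade-off weight \(\alpha\) are assumed symbols that the definitions do not name, and any weighting coefficients between the loss terms are omitted.

\[
\begin{aligned}
L &= L_{cls} + L_{box} + L_{mask}, \qquad L_{box} = L_{DFL} + L_{CIoU},\\
L_{cls} &= -\frac{1}{N}\sum_{i=1}^{N}\left[\,y_i \log p_i + (1 - y_i)\log(1 - p_i)\,\right],\\
L_{DFL} &= -\left[\,(y_{j+1} - y)\log S_j + (y - y_j)\log S_{j+1}\,\right],\\
L_{CIoU} &= 1 - IoU(b, b^{gt}) + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v,\\
L_{mask} &= -\frac{1}{N_1}\sum_{k=1}^{N_1}\left[\,y_k \log p_k + (1 - y_k)\log(1 - p_k)\,\right]
\end{aligned}
\tag{1}
\]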

where \(L\), \(L_{cls}\), \(L_{box}\), \(L_{mask}\), \(L_{DFL}\), and \(L_{CIoU}\) are the total loss, classification loss, regression loss, mask loss, distribution focal loss, and CIoU loss, respectively, and \(p_i\) and \(y_i\) are the predicted probability of the category and the true probability of the category in turn. \(i\) is the serial number of the category in the image, \(N\) is the number of categories, and \(y\) is the coordinate of the prediction box. \(y_j\) and \(y_{j+1}\) denote the integers closest to the left and right of the prediction box coordinate, respectively. \(IoU(b, b^{gt})\) represents the intersection over union of the prediction box and the real box, \(\rho\) represents the distance between the geometric centers of the prediction box and the real box, \(c\) denotes the diagonal length of the minimum circumscribed rectangle of the prediction box and the real box, and \(v\) denotes the variance of the diagonal inclination angle of the prediction box and the real box. \(k\) is the serial number of the pixels in the image and \(N_1\) is the total number of pixels. \(p_k\) and \(y_k\) are the predicted and true values of the category to which the k-th pixel belongs, respectively.

There are some problems when YOLOv8n uses the CIoU loss to calculate the regression loss. For example, it does not have invariance to scale and is sensitive to the position deviation of small objects. As a result, positive and negative samples may have similar characteristics and reduce the performance of small object detection and segmentation. Therefore, the NWD25 loss was used instead of the CIoU loss to calculate the regression loss. NWD models the box as a Gaussian distribution and then uses the Wasserstein distance to measure the similarity between the two distributions. Even if the two boxes do not overlap at all or overlap a little, the similarity can still be measured. In addition, NWD has scale invariance, position deviation insensitivity, and the ability to measure the similarity of disjoint frames. NWD solves the problem of CIoU loss and improves the performance of small object detection and segmentation. NWD is defined as follows:
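Following the standard NWD formulation, in which each box is modeled as a 2D Gaussian centered at the box center with standard deviations equal to half its width and height, Eq. (2) can be written as below. This is a sketch consistent with the symbols defined next, not necessarily the exact typeset form.

\[
\begin{aligned}
L_{NWD} &= 1 - \exp\!\left(-\frac{\sqrt{W_2^2(N_a, N_b)}}{C}\right),\\
W_2^2(N_a, N_b) &= \left\lVert \left[cx_a,\; cy_a,\; \tfrac{w_a}{2},\; \tfrac{h_a}{2}\right]^{T} - \left[cx_b,\; cy_b,\; \tfrac{w_b}{2},\; \tfrac{h_b}{2}\right]^{T} \right\rVert_2^2
\end{aligned}
\tag{2}
\]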

where \(L_{NWD}\) is the NWD loss, \(N_a\) is the Gaussian distribution of the prediction box, \(N_b\) is the Gaussian distribution of the real box, \(C\) is a constant with a value of 12.8, \(W_2^2(N_a, N_b)\) represents the squared Wasserstein distance between the prediction box and the real box, \((cx_a, cy_a, w_a, h_a)\) represents the center coordinates, width, and height of the prediction box, and \((cx_b, cy_b, w_b, h_b)\) represents the center coordinates, width, and height of the real box.
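The behaviour of the NWD loss on non-overlapping boxes can be illustrated with a few lines of Python. This is a minimal sketch of the standard NWD formulation for a single pair of boxes, assuming boxes are given as (cx, cy, w, h) and using the constant C = 12.8 stated above; the function names are hypothetical, and a training implementation would operate on batched tensors.

```python
import math

def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance similarity between two boxes
    given as (cx, cy, w, h). Returns a value in (0, 1]."""
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    # Squared 2-Wasserstein distance between the two box Gaussians.
    w2_squared = (
        (cxa - cxb) ** 2
        + (cya - cyb) ** 2
        + ((wa - wb) / 2.0) ** 2
        + ((ha - hb) / 2.0) ** 2
    )
    return math.exp(-math.sqrt(w2_squared) / c)

def nwd_loss(box_a, box_b, c=12.8):
    """Regression loss: 0 for identical boxes, approaching 1 as they diverge."""
    return 1.0 - nwd(box_a, box_b, c)
```

Two 4 × 4 boxes whose centers are 12.8 pixels apart do not overlap, so their IoU is zero, yet `nwd` still returns exp(-1), which gives the regression loss a usable gradient for small objects.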

Trunk skeleton extraction based on level set and ellipse fitting

The extraction of the natural rubber tree trunk skeleton is an important prerequisite for rubber tapping pose estimation. On the basis of YOLOv8n-rubber’s detection and segmentation results of natural rubber tree images, the initial trunk point cloud was obtained based on the RGB-D information fusion. The initial trunk point cloud was preprocessed for trunk skeleton extraction. The tree trunk skeleton extraction process is shown in Fig. 5 (Fig. 5i–n), and the specific steps are as follows:

Trunk skeleton extraction and new tapping line location process. (a) Prediction image. (b) Starting and ending point recognition. (c) Tapping line image. (d) New tapping line pixel position calculation. (e) New tapping line image. (f) Depth image. (g) New tapping line point cloud. (h) New tapping line space curve. (i) Trunk mask image. (j) Initial trunk point cloud. (k) Preprocessed trunk point cloud. (l) Trunk point cloud segmentation. (m) Ellipse fitting. (n) Trunk skeleton line. (o) Trunk skeleton line and new tapping line.

Trunk point cloud extraction

Firstly, the mask image (Fig. 5i) of the natural rubber tree trunk generated by YOLOv8n-rubber was obtained. Then, the mapping function MapDepthFrameToColorSpace in the Kinect for Windows SDK was called to register the color image with the depth image (Fig. 5f), so that the binarized trunk mask image was adjusted to 424 × 512 pixels to match the depth image. Finally, the pixel coordinates of the trunk area were obtained by traversing the non-zero pixels in the registered binarized trunk mask image. These pixel coordinates were converted by Eq. (3) into the initial trunk point cloud (Fig. 5j).
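Under the standard pinhole camera model, Eq. (3) takes the following form. This is a sketch consistent with the symbols defined next; the axis directions and depth units are assumptions.

\[
\begin{cases}
z_i = I_d(u_i, v_i)\\[2pt]
x_i = \dfrac{(u_i - c_x)\, z_i}{f_x}\\[6pt]
y_i = \dfrac{(v_i - c_y)\, z_i}{f_y}
\end{cases}
\tag{3}
\]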

where \((x_i, y_i, z_i)\) are the 3D coordinates of the registered color image pixel i, \((u_i, v_i)\) are the pixel coordinates of the registered color image pixel i, \(I_d\) is the depth image, and \((c_x, c_y, f_x, f_y)\) are the internal parameters of the infrared camera of Kinect V2.
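The back-projection of the masked trunk pixels into a point cloud can be sketched in NumPy as follows. The sketch assumes the standard pinhole model, that u indexes columns and v indexes rows, that the mask is already registered to the depth image, and that a depth of zero marks an invalid measurement; the function name and the intrinsic values used when calling it are placeholders, not calibrated Kinect V2 parameters.

```python
import numpy as np

def mask_to_point_cloud(mask, depth, fx, fy, cx, cy):
    """Back-project the non-zero pixels of a registered binary mask.

    mask, depth: arrays of identical shape (rows, cols).
    Returns an (n, 3) array of x, y, z in the units of the depth image."""
    # Keep pixels inside the mask that also have a valid depth reading.
    v, u = np.nonzero((mask > 0) & (depth > 0))
    z = depth[v, u].astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack((x, y, z))
```

For a 424 × 512 depth image this returns one 3D point per valid trunk pixel, which is the input to the filtering and down-sampling steps.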

Trunk point cloud preprocessing

The initial trunk point cloud is affected by imaging geometry, the environment, and other factors, so it contains invalid outlier points that interfere with the subsequent trunk skeleton extraction. Therefore, the initial trunk point cloud must be filtered to reduce the influence of these outliers on the valid points. Pass-through filtering and radius outlier filtering were used to denoise the initial trunk point cloud, removing isolated points and points that do not belong to the main body of the trunk, which yielded a relatively accurate and compact point cloud of the trunk body. This is the key step in point cloud preprocessing. In addition, because of the high resolution of the Kinect V2 depth sensor, the generated trunk point cloud is dense. To improve the performance of the subsequent algorithm, the denoised trunk point cloud was down-sampled with the uniform voxel down-sampling method26. After denoising and down-sampling, a sparse and clean trunk point cloud was obtained (Fig. 5k).
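The three preprocessing steps can be sketched in plain Python as follows. This is an illustrative brute-force version, not the implementation used in this study. The thresholds, the radius, the neighbor count, and the voxel size are hypothetical values, and a point cloud library would normally be used for clouds of realistic size.

```python
import math
from collections import defaultdict


def pass_through(points, axis, lo, hi):
    """Keep only points whose coordinate on `axis` lies within [lo, hi]."""
    return [p for p in points if lo <= p[axis] <= hi]


def radius_outlier_removal(points, radius, min_neighbors):
    """Drop points with fewer than `min_neighbors` other points within `radius`."""
    r2 = radius * radius
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1 for j, q in enumerate(points)
            if i != j and sum((a - b) ** 2 for a, b in zip(p, q)) <= r2
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


def voxel_downsample(points, voxel):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    cells = defaultdict(list)
    for p in points:
        cells[tuple(math.floor(c / voxel) for c in p)].append(p)
    return [
        tuple(sum(c) / len(c) for c in zip(*pts))
        for _, pts in sorted(cells.items())
    ]


# Toy cloud: three nearby trunk points, one stray point, one point far in depth.
raw = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.0, 0.1, 1.0),
       (5.0, 5.0, 1.0), (0.0, 0.0, 9.0)]
in_range = pass_through(raw, axis=2, lo=0.5, hi=4.5)
denoised = radius_outlier_removal(in_range, radius=0.5, min_neighbors=2)
sparse = voxel_downsample(denoised, voxel=1.0)
```

In this toy example the pass-through filter removes the point that lies too far away in depth, the radius filter removes the isolated point, and the voxel grid merges the remaining three points into a single centroid.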

Skeleton extraction of trunk point cloud

In nature, the natural rubber tree trunk is generally cylindrical. Based on this morphological characteristic and on the idea of differentiation, in which the trunk is divided into thin layers that are processed one by one, a trunk skeleton extraction method based on level set and ellipse fitting was proposed. The pseudo-code of the trunk skeleton extraction method is shown in Algorithm 1. For the preprocessed trunk point cloud QD (Fig. 5k), the number k of level sets was first calculated. Then, QD was segmented according to the difference value d (the layer interval) to obtain k layer point clouds (Fig. 5l), and the ellipse fitting method (Fig. 5m) was used to solve the center point C of each layer point cloud QS. Finally, the center points of all layer point clouds were connected from bottom to top to obtain the initial trunk skeleton points G (Fig. 5n), and a cubic B-spline function was used to smooth G into the final skeleton line GF (Fig. 5n) of the trunk point cloud.

where a, b, c, d, and e are polynomial coefficients, which are obtained by substituting the x and y coordinates of each point in QS into Eq. (5). zzmin is the minimum value of the points in the QS along the z axis direction.
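The layer-wise procedure can be sketched as follows. The sketch makes several assumptions that are not confirmed by the text: the ellipse is written as the general conic x² + a·xy + b·y² + c·x + d·y + e = 0, the five coefficients are found by linear least squares, the layers are cut along the z axis, and each center takes the minimum z of its layer. The cubic B-spline smoothing step is omitted, and all function names are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def ellipse_center(layer):
    """Fit x^2 + a*x*y + b*y^2 + c*x + d*y + e = 0 and return its center."""
    rows = [[x * y, y * y, x, y, 1.0] for x, y, _ in layer]
    rhs = [-x * x for x, _, _ in layer]
    # Normal equations of the linear least-squares problem.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    atb = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(5)]
    a, b, c, d, _ = solve_linear(ata, atb)
    det = 4 * b - a * a                      # positive for an ellipse
    return (a * d - 2 * b * c) / det, (a * c - 2 * d) / det


def skeleton_points(cloud, d):
    """Slice the cloud into k layers of height d and fit one center per layer."""
    z_min = min(p[2] for p in cloud)
    z_max = max(p[2] for p in cloud)
    k = max(1, math.ceil((z_max - z_min) / d))
    layers = [[] for _ in range(k)]
    for p in cloud:
        layers[min(int((p[2] - z_min) / d), k - 1)].append(p)
    skeleton = []
    for layer in layers:                     # bottom to top
        if len(layer) < 5:
            continue                         # too few points to fit a conic
        xc, yc = ellipse_center(layer)
        skeleton.append((xc, yc, min(p[2] for p in layer)))
    return skeleton


# Synthetic cylinder of radius 3 centered at (1, 2), sampled at four heights.
cloud = [(1.0 + 3.0 * math.cos(2 * math.pi * i / 12),
          2.0 + 3.0 * math.sin(2 * math.pi * i / 12), z)
         for z in (0.0, 0.5, 1.0, 1.5) for i in range(12)]
skeleton = skeleton_points(cloud, d=1.0)
```

On this synthetic cylinder the fitted centers coincide with the cylinder axis. A real trunk point cloud covers only the arc that faces the camera, so the fit is less well conditioned and the centers are noisier, which is one motivation for smoothing them afterwards.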

New tapping line location based on edge detection and geometric analysis

The new tapping line location is also an important prerequisite for achieving rubber tapping pose estimation. The new tapping line location is based on the object detection and segmentation results of the natural rubber tree image. The tapped area mask, start area bounding box, and end area bounding box of the natural rubber tree generated by YOLOv8n-rubber were used to determine the tapping line. Combined with the natural rubber tree depth image, the 3D coordinate value of the new tapping line was calculated, and the new tapping line location was realized. The new tapping line location process is shown in Fig. 5 (Fig. 5a–h). The specific implementation steps are as follows:

Tapping line position estimation

First, the two extreme points A (xA, yA) and B (xB, yB) in Fig. 5b were identified. A is the starting point of the tapping line, and B is the ending point of the tapping line. Next, the tapped area mask was binarized and processed with the Canny edge detection algorithm27 to obtain the position of the edge line of the tapped area. Then, starting from point A (Fig. 5c) and moving along the abscissa direction from point A to point B, the maximum ordinate yi corresponding to the abscissa xi was calculated every 10 pixels until point B was reached, and xi and yi were paired one by one to obtain the pixel coordinate set P = {(xi, yi) | i = 1, 2,…, 1 + \(\lceil (x_A - x_B)/10 \rceil\)} of the key points of the tapping line on the tapped area. Finally, the pixel coordinates of the key points were fitted with a cubic polynomial to obtain the tapping line Q.
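The key point sampling step can be sketched as follows, assuming the edge pixels produced by edge detection are available as (x, y) pairs. The function name and the synthetic edge are hypothetical, and the cubic polynomial fit is not included.

```python
import math


def sample_keypoints(edge_pixels, x_a, x_b, step=10):
    """Walk from x_a to x_b in `step`-pixel strides and keep, for each visited
    column, the edge pixel with the largest ordinate (lowest in the image)."""
    column_max = {}
    for x, y in edge_pixels:
        if x not in column_max or y > column_max[x]:
            column_max[x] = y
    direction = 1 if x_b >= x_a else -1
    xs = list(range(x_a, x_b, direction * step))
    xs.append(x_b)                           # always finish exactly at point B
    return [(x, column_max[x]) for x in xs if x in column_max]


# Synthetic tapped-area edge: a lower edge y = 200 - x and an upper edge y = 150 - x.
edge = [(x, 200 - x) for x in range(65, 101)] + [(x, 150 - x) for x in range(65, 101)]
keypoints = sample_keypoints(edge, x_a=100, x_b=65)
expected_count = 1 + math.ceil((100 - 65) / 10)
```

With xA = 100 and xB = 65 the sketch visits the columns 100, 90, 80, 70, and 65, which matches the count 1 + ⌈(xA − xB)/10⌉ = 5 given in the text.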

Center line acquisition of left and right edge lines of tapped area

First, starting from the starting point of the tapping line and moving along the ordinate direction toward the origin o, the maximum abscissas corresponding to the ordinates \(y_A - 2\) and \(y_A - 15\) were obtained to form the pixel coordinate points C1 (xC1, yC1) and C2 (xC2, yC2), and the line segment C1C2 (Fig. 5d) was used as the right edge line of the tapped area. Then, starting from the ending point of the tapping line and moving along the ordinate direction toward the origin o, the minimum abscissas corresponding to the ordinates \(y_B - 2\) and \(y_B - 15\) were obtained to form the pixel coordinate points D1 (xD1, yD1) and D2 (xD2, yD2), and the line segment D1D2 (Fig. 5d) was used as the left edge line of the tapped area. Finally, the center line E1E2 (Fig. 5d) of the left and right edge lines of the tapped area was calculated by Eq. (6).

where M is E1E2, (\(x_{E1}\), \(y_{E1}\)) is the pixel coordinate of the lower endpoint of E1E2, and (\(x_{E2}\), \(y_{E2}\)) is the pixel coordinate of the upper endpoint of E1E2.

Determination of new tapping line position

Firstly, the new tapping line QN was calculated according to the pixel coordinate Q = {(xj, yj)|j = 1, 2,…, n} of each point of the tapping line and Eq. (7), and the pixel coordinate QN = {(xk, yk)|k = 1, 2,…, n} of the new tapping line on the natural rubber tree image was obtained. Then, the pixel coordinate of the new tapping line was mapped to the natural rubber tree color image to obtain the new tapping line mask image (Fig. 5e) of the natural rubber tree, and the new tapping line point cloud TP (Fig. 5g) was obtained by using the method described in Section "Trunk point cloud extraction". Finally, the cubic polynomial was used to fit the new tapping line point cloud, and the new tapping line space curve TN (Fig. 5h) was obtained. The 3D position information of the new tapping line was determined, and the new tapping line location was realized.

where \(m_1\) is the number of pixels moved down by the tapping line along the center line direction of the left and right edge lines of the tapped area.
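One plausible reading of the center line and the downward shift is sketched below. It is not a reproduction of Eqs. (6) and (7). The sketch assumes that E1 and E2 are the midpoints of C1D1 and C2D2, respectively, and that every point of the tapping line is translated by m1 pixels along the unit direction from E2 to E1. The function names and pixel values are hypothetical.

```python
import math


def midpoint(p, q):
    """Midpoint of two pixel coordinates."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def shift_tapping_line(line, c1, c2, d1, d2, m1):
    """Translate each tapping line pixel by m1 pixels along the center line.

    Assumption: E1 (lower endpoint) and E2 (upper endpoint) are the midpoints
    of the two edge lines, and "down" is the direction from E2 to E1.
    """
    e1 = midpoint(c1, d1)
    e2 = midpoint(c2, d2)
    dx, dy = e1[0] - e2[0], e1[1] - e2[1]
    norm = math.hypot(dx, dy)
    ux, uy = dx / norm, dy / norm            # unit vector pointing down the center line
    return [(x + m1 * ux, y + m1 * uy) for x, y in line]


# Toy values: vertical edge lines, so the shift is purely along the image y axis.
new_line = shift_tapping_line(
    line=[(100, 100), (65, 135)],
    c1=(120, 98), c2=(120, 85),              # right edge line, lower and upper point
    d1=(60, 133), d2=(60, 120),              # left edge line, lower and upper point
    m1=20,
)
```

When the trunk is tilted in the image, the center line is no longer vertical and the same translation moves the tapping line both downward and sideways, which keeps the new tapping line between the two edge lines.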

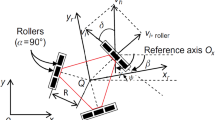

Rubber tapping pose estimation

Rubber tapping pose estimation is of great significance for the successful tapping of the natural rubber tree. A wrong rubber tapping pose damages the tree and reduces production, whereas a correct rubber tapping pose is beneficial to the growth of the rubber tree and can also increase the yield of natural rubber. During the rubber tapping operation, the relative position of the tapping knife and the rubber tree is shown in Fig. 6a. The main cutting edge ME of the tapping knife is perpendicular to the central axis of the natural rubber tree trunk. The main rear blade surface MF of the tapping knife is coplanar with the new tapping line TN. The auxiliary cutting edge AE of the tapping knife is perpendicular to the main cutting edge ME. Therefore, the pose of any cut point TNi on the new tapping line should satisfy the following geometric relationships, as shown in Fig. 6b: the cut point TNi is co-linear with the skeleton point GFj, and the cut point TNi, the point TNi+1 after the cut point, and the skeleton point GFj are coplanar. Based on these geometric relationships between the cut point, the skeleton point, and the point after the cut point, the rubber tapping pose was estimated by combining the 3D position information of the trunk skeleton line and the new tapping line obtained in Sections "Trunk skeleton extraction based on level set and ellipse fitting" and "New tapping line location based on edge detection and geometric analysis".

Figure 6. Rubber tapping pose estimation process. (a) Actual rubber tapping image. (b) Cut point pose estimation image. (c) The estimated rubber tapping pose. (d) The enlarged drawing of the estimated rubber tapping pose under the different viewing angles. (e) The enlarged drawing of the estimated rubber tapping pose.

For any cut point TNi (xi, yi, zi) on the new tapping line TN except the ending cut point, the rubber tapping pose can be described as (xi, yi, zi, ai, oi, ni), where i = 1, 2,…, n-1 and (xi, yi, zi) is the 3D coordinate of the cut point TNi. As shown in Fig. 6b, ai is the direction vector from the cut point TNi to the skeleton point GFj, which makes the cut point co-linear with the skeleton point. Considering the latex flow, TNi and GFj should be at the same height. oi is perpendicular to ai, starts from the cut point TNi, and lies in the plane formed by the cut point TNi, the point TNi+1 after the cut point, and the skeleton point GFj. Because oi and ai lie in this plane, the cut point, the point after the cut point, and the skeleton point are coplanar. ni is the normal vector of the plane formed by the vectors oi and ai. For the ending cut point, the position is that of the ending cut point itself, and the orientation is taken from the cut point before it. In summary, the rubber tapping pose of each cut point of the new tapping line can be estimated by Eq. (8). The pseudo-code for rubber tapping pose estimation is shown in Algorithm 2. The estimated rubber tapping pose is shown in Fig. 6c, d, and e.
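The geometric construction described above can be sketched as follows. This is an illustration under stated assumptions rather than a reproduction of Eq. (8) or Algorithm 2: height is measured along the z axis, the skeleton point is taken as the nearest skeleton sample projected to the height of the cut point, oi is obtained by removing from TNi+1 − TNi its component along ai, and the sign of ni follows oi × ai. All function names are hypothetical.

```python
import math


def sub(p, q):
    return tuple(a - b for a, b in zip(p, q))


def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


def cross(p, q):
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])


def unit(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)


def cut_point_pose(tn_i, tn_next, skeleton):
    """Return (position, a, o, n) for the cut point tn_i."""
    # Skeleton point at the same height as the cut point (assumed: z is height).
    gf = min(skeleton, key=lambda g: abs(g[2] - tn_i[2]))
    gf_level = (gf[0], gf[1], tn_i[2])
    a = unit(sub(gf_level, tn_i))            # points from the cut point to the skeleton
    t = sub(tn_next, tn_i)                   # direction toward the next cut point
    # Remove the component of t along a: the remainder is perpendicular to a
    # and lies in the plane through tn_i, tn_next, and the skeleton point.
    o = unit(sub(t, tuple(dot(t, a) * c for c in a)))
    n = cross(o, a)                          # normal of the plane spanned by o and a
    return tn_i, a, o, n


# Toy example: vertical skeleton on the z axis, cut point 50 mm away from it.
skeleton = [(0.0, 0.0, 0.0), (0.0, 0.0, 10.0), (0.0, 0.0, 20.0)]
position, a, o, n = cut_point_pose((50.0, 0.0, 11.0), (49.0, 10.0, 9.0), skeleton)
```

Because oi is built by orthogonal projection, the three vectors are mutually perpendicular unit vectors and can be used directly as the columns of a rotation matrix for the tapping knife.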

Experiment setting and evaluation indicators

Experiment setting

The model training and the experiments were carried out on two separate computers. The model training hardware environment was an Intel (R) Xeon (R) Silver 4210 processor, 32 GB of RAM, an NVIDIA RTX 4000 graphics card (8 GB of memory), and the Windows 10 operating system. The model training software environment was the Python 3.7 programming language and the PyTorch 1.10 deep learning framework. The experiment hardware environment was an Intel Core i7-11800H processor, 16 GB of RAM, an NVIDIA RTX 3050 graphics card (4 GB of memory), and the Windows 10 operating system. The experiment software environment was the Python 3.8 and MATLAB programming languages and the PyTorch 1.9 deep learning framework. The dataset used for model training and experimentation was the natural rubber tree image dataset created in Section "Image acquisition and dataset creation". The batch size of model training was 8 (due to the limitations of the model training hardware platform, the batch size of Mask R-CNN and CondInst was 2). The initial learning rate, optimizer, momentum factor, weight decay, and number of iterations for model training were 0.01, SGD, 0.937, 0.0005, and 300, respectively.

Evaluation indicators

To evaluate the detection and segmentation performance of the improved model, precision (P, %), recall (R, %), F1 score (F1, %), and mean average precision (mAP, %) were used as model evaluation indicators. The calculation is shown in Eq. (9). Additionally, model size and detection segmentation time were used to evaluate the model volume and the detection segmentation speed. The success rate of rubber tapping pose estimation (S, %), the mean (\(\overline{e_x}\), \(\overline{e_y}\), \(\overline{e_z}\), mm) and standard deviation (σx, σy, σz, mm) of the positioning error of the cut point in the x, y, and z directions, and the mean (\(\overline{e_a}\), \(\overline{e_o}\), \(\overline{e_n}\), °) and standard deviation (\(\sigma_a\), \(\sigma_o\), \(\sigma_n\), °) of the error angle of the cut point in the a, o, and n directions were used as the evaluation indicators of the rubber tapping pose estimation algorithm. The calculation is shown in Eqs. (10)–(12).

where TP, FP, and FN are the numbers of true positives, false positives, and false negatives, respectively, m is the total number of classes, AP(k) is the AP value of class k, AP is the integral of P over R, TA is the total number of rubber tapping pose estimations, and TS is the number of successful rubber tapping pose estimations. mm denotes the total number of cut points in a single sample, \(d_{\beta}^{p}\) denotes the predicted value of the cut point positioning, \(d_{\beta}^{t}\) denotes the optimal value of the cut point positioning, \(\overrightarrow{q_{\beta}^{p}}\) denotes the estimated pose of the cut point, \(\overrightarrow{q_{\beta}^{t}}\) denotes the optimal pose of the cut point, and \(e_{\beta}^{\gamma}\) denotes the error value calculated for a single sample. \(\beta\) denotes the type of error being evaluated, n is the total number of samples, \(\overline{e_{\beta}}\) is the average error over all samples, and \(\sigma_{\beta}\) is the standard deviation over all samples.
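The indicators defined above can be sketched in a few lines. The detection metrics follow their standard definitions. The error statistics are a simplified reading of Eqs. (10)–(12): the population standard deviation is assumed, and the error angle is taken as the angle between the estimated and optimal direction vectors. The function names and toy inputs are hypothetical.

```python
import math
import statistics


def precision(tp, fp):
    return tp / (tp + fp)


def recall(tp, fn):
    return tp / (tp + fn)


def f1_score(p, r):
    return 2 * p * r / (p + r)


def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


def success_rate(ts, ta):
    """S (%) from the number of successful (TS) and total (TA) estimations."""
    return 100.0 * ts / ta


def error_angle_deg(q_pred, q_opt):
    """Angle in degrees between an estimated and an optimal direction vector."""
    num = sum(a * b for a, b in zip(q_pred, q_opt))
    den = math.hypot(*q_pred) * math.hypot(*q_opt)
    return math.degrees(math.acos(max(-1.0, min(1.0, num / den))))


def error_statistics(per_sample_errors):
    """Mean and (population) standard deviation over all samples."""
    return statistics.mean(per_sample_errors), statistics.pstdev(per_sample_errors)


# 288 successful estimations out of 300 attempts, as reported in this study.
s = success_rate(288, 300)
# Toy counts and errors, used only to exercise the functions.
p, r = precision(tp=8, fp=2), recall(tp=8, fn=8)
f1 = f1_score(p, r)
mean_err, std_err = error_statistics([1.0, 2.0, 3.0])
```

The first call reproduces the reported average success rate: 288 successful estimations out of 300 give S = 96%.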

Results and discussion

Ablation experiment

In order to improve the detection and segmentation accuracy of YOLOv8n, the CFB module, a new output layer, the EMA attention mechanism, and the NWD loss were added in different positions of YOLOv8n. To explore the impact of different modules on the YOLOv8n, ablation experiments were conducted. The results of the ablation experiment of the proposed method on the test set of 597 natural rubber tree images are shown in Table 1 and Fig. 7.

Figure 7. Comparison experiment results before and after model improvement. (a) The loss curve and mean average precision curve of YOLOv8n-rubber. (b) The P and R values of Model 1 and Model 6. (c) The average precision comparison of single classification detection between Model 1 and Model 6. (d) The average precision comparison of single classification segmentation between Model 1 and Model 6.

According to Table 1, the comparative analyses of model size, image detection and segmentation performance with Model 1 (YOLOv8n) as the benchmark are as follows:

1. For Model 2, the model size was reduced by 5.59%, the detection F1 and mAP0.5 were increased by 1.3% and 2%, respectively, the segmentation mAP0.5 and mAP0.5:0.95 were increased by 0.7% and 0.2%, respectively, and the detection mAP0.5:0.95 and segmentation F1 remained unchanged. This showed that replacing the C2F module of the YOLOv8n backbone network with the CFB module reduced the model size without weakening the feature extraction ability of the network model. On the contrary, the feature extraction ability was improved to a certain extent.

2. For Model 3, the model size was reduced by 1.09%, the detection F1, mAP0.5, and mAP0.5:0.95 were increased by 0.5%, 1%, and 0.1%, respectively, and the segmentation F1, mAP0.5, and mAP0.5:0.95 were increased by 2.2%, 3.4%, and 0.4%, respectively. This indicated that adding an output layer to the neck network of YOLOv8n could reduce the size of the network model while allowing the model to fuse target features better, which improved its detection and segmentation performance.

3. For Model 4, the model size was reduced by 0.47%, the detection F1, mAP0.5, and mAP0.5:0.95 were increased by 0.6%, 2.2%, and 0.2%, respectively, and the segmentation F1, mAP0.5, and mAP0.5:0.95 were increased by 2.6%, 4.8%, and 1.2%, respectively. This implied that after adding an output layer and fusing the EMA attention mechanism into the neck network of YOLOv8n, the model could fuse the target features better while maintaining a smaller size, pay attention to the characteristics of the target itself, suppress invalid redundant information, and effectively improve its detection and segmentation performance.

4. For Model 5, the detection F1 and mAP0.5 were increased by 1.4% and 2.4%, respectively, and the segmentation F1 and mAP0.5 were increased by 1.3% and 2.3%, respectively. The model size and the detection and segmentation mAP0.5:0.95 did not change. This showed that replacing the CIoU loss of YOLOv8n with the NWD loss could improve the detection and segmentation performance of the model to a certain extent without affecting the model size.

5. For Model 6, the model size was reduced by 5.9%, the detection F1, mAP0.5, and mAP0.5:0.95 were increased by 1.9%, 3.5%, and 0.6%, respectively, and the segmentation F1, mAP0.5, and mAP0.5:0.95 were increased by 3.5%, 5.8%, and 1.6%, respectively. This meant that after replacing the C2F module of YOLOv8n's backbone network with the CFB module, adding an output layer to the neck network, fusing the EMA attention mechanism into the neck network, and replacing the CIoU loss with the NWD loss, the model not only maintained a smaller size but also significantly improved its detection and segmentation performance. This was the result of the combined effects of the CFB module, new output layer, EMA attention mechanism, and NWD loss. CFB reduced the model size and enhanced the feature extraction ability of the backbone network. The new output layer enhanced the feature fusion ability of the network. EMA suppressed invalid, redundant information and paid more attention to the characteristics of the target itself. NWD had scale invariance, position deviation insensitivity, and the ability to measure the similarity of disjoint bounding boxes, which improved the detection performance for small targets. As a result, Model 6 achieved the best detection and segmentation performance while maintaining a smaller model size.

In terms of detection segmentation time, compared with Model 1, the detection segmentation time of Model 2, Model 3, Model 4, Model 5, and Model 6 increased by 0.0006 s, 0.0027 s, 0.0034 s, 0.0002 s, and 0.0033 s, respectively. This indicated that the CFB module and NWD loss had little effect on the detection segmentation time of the model, whereas the new output layer and EMA attention mechanism accounted for most of the increase. Although the new output layer and EMA attention mechanism slightly increased the detection segmentation time, the increase was not significant and had basically no impact on practical application. In summary, Table 1 shows that, compared with the other models, YOLOv8n-rubber achieved the minimum model size and the best detection and segmentation performance without significantly increasing the detection segmentation time, which verified the effectiveness of the model improvement.

In addition, combined with Fig. 7, the performance of the model before and after improvement was further analyzed, and the effectiveness of YOLOv8n-rubber was further verified. From Fig. 7a, it can be seen that with the increase in the number of iterations, the training set loss value and the test set loss (validation loss) value of YOLOv8n-rubber decreased continuously. When the number of iterations was greater than 150, the loss values of the training set and the test set gradually converged, and the loss value tended to be stable around 2.5. As the loss value tended to be stable, the detection mAP0.5, detection mAP0.5:0.95, segmentation mAP0.5:0.95, and segmentation mAP0.5 values of YOLOv8n-rubber also gradually tended to be stable. This showed that the YOLOv8n-rubber had a good training effect. It can be seen from Fig. 7b–d that the detection segmentation P, detection segmentation R, detection segmentation single classification AP0.5, and detection segmentation single classification AP0.5:0.95 values of YOLOv8n-rubber were higher than those of YOLOv8n, which indicated that compared with YOLOv8n, YOLOv8n-rubber could not only classify well but also accurately detect and segment the target information in the natural rubber tree image, which further verified the effectiveness of the improved model.

In general, YOLOv8n-rubber’s indicators were the best. Although YOLOv8n-rubber was 0.0033 s slower than YOLOv8n in detection segmentation time, it had basically no impact on the practical application. YOLOv8n-rubber not only ensured that the model had the highest detection and segmentation performance and the smallest size, but also took into account the detection and segmentation speed of the model, which verified the effectiveness of the improved model YOLOv8n-rubber.

Performance comparison experiment with different models

To further verify the performance of YOLOv8n-rubber, it was compared with the YOLOv5, YOLOv7, YOLOv8n, YOLOv8s, YOLACT, Mask R-CNN, CondInst, and RTMDet models28,29,30,31,32,33. The detection and segmentation experimental results of these models and YOLOv8n-rubber on the test set of 597 natural rubber tree images are shown in Table 2 and Figs. 8 and 9.

According to Table 2, compared with the YOLOv5, YOLOv7, YOLACT, Mask R-CNN, CondInst, RTMDet, and YOLOv8n models, the detection mAP0.5 of YOLOv8n-rubber increased by 1.3%, 0.9%, 8.8%, 8.4%, 8.1%, 2%, and 3.5%, respectively, the segmentation mAP0.5 increased by 5%, 4.8%, 14.6%, 1.6%, 6%, 12.6%, and 5.8%, respectively, the detection mAP0.5:0.95 increased by 3.2%, 1.4%, 13.1%, 6.2%, 5.4%, 3.9%, and 0.6%, respectively, and the segmentation mAP0.5:0.95 increased by 15.7%, 15.6%, 8.7%, 2.2%, 3.7%, 6.6%, and 1.6%, respectively. Compared with YOLOv8s, although the detection mAP0.5:0.95 of YOLOv8n-rubber decreased by 0.1%, the detection mAP0.5, segmentation mAP0.5, and segmentation mAP0.5:0.95 increased by 1%, 5.7%, and 1.2%, respectively. Moreover, Fig. 8 shows that the detection mAP0.5:0.95 advantage of YOLOv8s was not significant, while the detection mAP0.5, segmentation mAP0.5, and segmentation mAP0.5:0.95 advantages of YOLOv8n-rubber were significant. This showed that, among these models, YOLOv8n-rubber had good detection and segmentation performance for natural rubber tree image targets. Furthermore, Table 2 and Fig. 8 show that the model size of YOLOv8n-rubber was the smallest among these models, at 6.06 MB. Although the detection segmentation time of YOLOv8n-rubber was 0.0033 s longer than that of the fastest model, YOLOv8n, it had advantages in detection and segmentation mAP0.5, detection and segmentation mAP0.5:0.95, and model size. This indicated that YOLOv8n-rubber had the best comprehensive performance among these models.

As can be seen from Fig. 9, compared with YOLOv8n-rubber, the detection and segmentation effects of the YOLOv5, YOLOv7, YOLOv8s, RTMDet, YOLACT, CondInst, Mask R-CNN, and YOLOv8n models were not ideal. For example, YOLOv5 and YOLOv7 had the phenomenon of incomplete object segmentation. YOLOv8s showed over-segmentation, incomplete segmentation, missed detection, and repeated detection of targets. RTMDet exhibited the phenomenon of over-segmentation, missed detection, and false detection of targets. YOLACT showed the phenomenon of object false detection and object missed detection. Mask R-CNN displayed over-segmentation, incomplete segmentation, false detection, and missed detection of targets. CondInst had the phenomenon of object missed detection and object over-segmentation. YOLOv8n displayed incomplete segmentation, false detection, and missed detection of targets. However, YOLOv8n-rubber still maintained a good detection and segmentation effect on the target.

To sum up, YOLOv8n-rubber’s detection and segmentation mAP0.5 and mAP0.5:0.95 scores were higher, it had a better detection and segmentation effect on the target, and the network accuracy, robustness, and generalization performance were better. Additionally, compared with other models, YOLOv8n-rubber had the smallest model size and the second-least detection segmentation time, indicating that YOLOv8n-rubber had obvious advantages in detection and segmentation accuracy, model size, and detection efficiency.

Comparative experiments on detection and segmentation effects in different scenes

To evaluate the detection and segmentation effect of the improved model YOLOv8n-rubber in different scenes, the 597 images of the test set were divided into six scenes according to the weather and the number of years of cutting: sunny, cloudy, night, one year, two years, and three years and above. Comparison experiments of the detection and segmentation effects before and after model improvement were conducted in these six scenes. The experiment results are shown in Table 3 and Fig. 10.

As can be seen from Table 3, the detection and segmentation P, R, mAP0.5, and mAP0.5:0.95 values of YOLOv8n-rubber were all higher than those of YOLOv8n, indicating that the detection and segmentation performance of YOLOv8n-rubber was better than that of YOLOv8n in different scenes. It is worth noting that in the cloudy scene, the detection P, R, mAP0.5, and mAP0.5:0.95 values of YOLOv8n-rubber reached 82.3%, 83.6%, 82.8%, and 60.6%, respectively, and the segmentation P, R, mAP0.5, and mAP0.5:0.95 values reached 76.7%, 76%, 74.1%, and 58.5%, respectively, indicating that the targets in the natural rubber tree image were easier for YOLOv8n-rubber to detect in this scene. In the sunny and night scenes, however, the detection and segmentation P, R, mAP0.5, and mAP0.5:0.95 values of YOLOv8n-rubber were lower than those in the cloudy scene. This was because in the cloudy scene, the target had clear color, texture, and contour, so detection was less difficult and the detection and segmentation effect was better. In the sunny and night scenes, the target was affected by overly strong illumination, uneven illumination distribution, and supplementary lighting. The target was overexposed, its color was mixed with shadows caused by the light or was similar to the background, and its contour and texture were blurred. This made it difficult for YOLOv8n-rubber to detect the target in the sunny and night scenes, and the detection segmentation effect was poor. Moreover, among the different years of cutting, the detection and segmentation P, R, mAP0.5, and mAP0.5:0.95 values of YOLOv8n-rubber in the two-year scene were higher than those in the one-year scene and the three-years-and-above scene. The reason was that in the two-year scene, the scale change between the targets was relatively small, and the target was more conspicuous and easier to detect, so the object detection and segmentation effect was better. However, in the one-year scene and the three-years-and-above scene, the scale change and the size difference between the targets were relatively large, resulting in poor object detection and segmentation effects. In view of the relatively poor detection and segmentation effect of YOLOv8n-rubber in the sunny, night, one-year, and three-years-and-above scenes, the detection and segmentation ability of the model will be further improved in the future by expanding the training dataset and replacing the artificial supplementary lighting equipment.

It can be seen from Fig. 10 that YOLOv8n had the phenomenon of object false detection, object over-segmentation, object under-segmentation, and object missed detection. The reason was that in the sunny scene, the light intensity was high, and the color and shape of the non-target in the rubber plantation were too similar to the natural rubber tree trunk, resulting in false detection and over-segmentation. The start area and end area in the natural rubber tree image were tiny targets. The tiny target had a small size, carried less information, had weak feature expression ability, and after the multi-level convolution operation of the model, extracted fewer features, resulting in missed detection and under-segmentation. However, YOLOv8n-rubber significantly improved the phenomenon of false detection, over-segmentation, under-segmentation, and missed detection. YOLOv8n-rubber could accurately detect the position and category of the target without false detection or missed detection. In addition, YOLOv8n-rubber significantly improved the target mask over-segmentation and the target mask under-segmentation, making the segmentation accuracy of the target mask further approach the real area.

Overall, the detection and segmentation performance of YOLOv8n-rubber for objects in different scenes was better than that of YOLOv8n, which indicated that YOLOv8n-rubber had a good detection and segmentation effect for objects on natural rubber tree images in different scenes.

Rubber tapping pose estimation experiment

To verify the performance of the rubber tapping pose estimation algorithm, the rubber tapping pose estimation experiment was carried out on 300 sets of RGB-D images in the test set. The experiment results are shown in Table 4 and Fig. 11.

According to Table 4, the average success rate of rubber tapping pose estimation in different scenes was 96%. The success rate of rubber tapping pose estimation in the cloudy scene was 99.38%, which was 6.18% and 10.49% higher than that in the sunny and night scenes, respectively. This was because in the cloudy scene, the color, texture, contour, shape, and other features of the detection segmentation target were clear and obvious, so the detection and segmentation results of YOLOv8n-rubber were more accurate. As a result, there were more points for tree trunk skeleton extraction, the quality of the point cloud for the new tapping line location was better, and the success rate of rubber tapping pose estimation was higher. In the sunny and night scenes, because the light was too strong, too weak, or unevenly distributed, the imaging quality was poor, which blurred the shape and texture of the target and made its color similar to the background. YOLOv8n-rubber therefore produced poor detection and segmentation results for the target. There were fewer points for tree trunk skeleton extraction, and the quality of the point cloud for the new tapping line location was unsatisfactory. Due to the combined effects of the object detection and segmentation results and the number and quality of points, the success rate of rubber tapping pose estimation was lower. In the scene of cutting for two years, the success rate of rubber tapping pose estimation was 100%, which was 9.62% and 3.55% higher than that in the one-year scene and the three-years-and-above scene, respectively. This was because, in the natural rubber tree images of cutting for two years, the scale change between the targets was small, and the target was more prominent and easier to detect. YOLOv8n-rubber had better detection and segmentation results for the target, accurately extracted the trunk skeleton and located the new tapping line, and achieved a high success rate in estimating the rubber tapping pose. In the scenes of cutting for one year and for three years and above, the scale between the targets changed greatly, and the detection and segmentation effect of YOLOv8n-rubber on the target was poor, resulting in inaccurate extraction of the trunk skeleton and inaccurate location of the new tapping line, which lowered the success rate of rubber tapping pose estimation.

Figure 11 shows the rubber tapping pose estimated by the rubber tapping pose estimation algorithm. The pink, light green, and orange arrows represent the rubber tapping poses of each cut point on the new tapping line estimated by the algorithm. The red, dark green, and yellow arrows represent the optimal rubber tapping poses of each cut point on the optimal tapping line. The starting point of each arrow is the cut point. Figure 11a–d are examples of successful rubber tapping pose estimation, and Fig. 11e–h are examples of failed rubber tapping pose estimation. One reason for the failures was that, in the unstructured rubber plantation, the limited detection and segmentation accuracy of YOLOv8n-rubber left the trunk point cloud missing or broken and the new tapping line point cloud incomplete and of poor quality. In this case, the tree trunk skeleton extraction and the new tapping line location were poor, resulting in the failure of rubber tapping pose estimation. Another reason was that the shooting angle was not suitable for obtaining complete depth information of the natural rubber trees. To address these failures, future work will fill in the missing points and improve the quality of the point cloud by improving the point cloud preprocessing algorithm, and will study an autonomous vision algorithm that allows the robot to adjust the shooting angle automatically and reasonably, thereby improving the accuracy of rubber tapping pose estimation.

The error analysis of 288 sets of sample images with successful rubber tapping pose estimation was carried out to evaluate the accuracy of the rubber tapping pose estimation algorithm. The results are shown in Fig. 12.

According to Fig. 12, the maximum positioning errors in the x, y, and z directions were 1.98 mm, 1.98 mm, and 1.97 mm, respectively; the mean values \(\overline{e_x}\), \(\overline{e_y}\), and \(\overline{e_z}\) were 0.69 mm, 0.73 mm, and 1.07 mm, respectively; and the standard deviations \(\sigma_x\), \(\sigma_y\), and \(\sigma_z\) were 0.51 mm, 0.4 mm, and 0.56 mm, respectively. This indicated that the positioning error of the estimated rubber tapping pose was small in the x, y, and z directions and that the errors were relatively stable, fluctuating little around the mean. The maximum error angles in the a, o, and n directions were 2.99°, 3.99°, and 3.99°, respectively; the mean error angles \(\overline{e_a}\), \(\overline{e_o}\), and \(\overline{e_n}\) were 1.65°, 2.53°, and 2.26°, respectively; and the standard deviations of the error angles \(\sigma_a\), \(\sigma_o\), and \(\sigma_n\) were 0.68°, 0.88°, and 0.89°, respectively. This showed that the error angle of the estimated rubber tapping pose was small in the a, o, and n directions and that the error angles were relatively stable, fluctuating little around the mean. The error analysis results indicated that the rubber tapping pose estimation results could meet the accuracy requirements of rubber tapping pose estimation for the rubber tapping robot4,5,8.
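As an illustration of how statistics of this kind can be computed, the following minimal sketch derives per-axis positioning errors and the angle between an estimated and a reference direction vector, then summarizes them by maximum, mean, and standard deviation. It assumes that a, o, and n denote the direction vectors of the pose frame and that the sample standard deviation is used; neither is stated in this section. The function names and the example coordinates are hypothetical and are not the authors' data.

```python
import math
from statistics import mean, stdev

def axis_errors(estimated, reference, axis):
    """Absolute position error along one axis (0=x, 1=y, 2=z) per sample."""
    return [abs(e[axis] - r[axis]) for e, r in zip(estimated, reference)]

def angle_deg(u, v):
    """Angle in degrees between two non-zero 3D direction vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))

def summarize(errors):
    """Maximum, mean, and sample standard deviation of a list of errors."""
    return {"max": max(errors), "mean": mean(errors), "std": stdev(errors)}

# Hypothetical cut-point positions in millimetres (illustrative only).
estimated = [(10.2, 5.1, 300.4), (11.0, 4.8, 301.9), (9.7, 5.3, 299.2)]
reference = [(10.0, 5.0, 300.0), (10.5, 5.5, 301.0), (10.0, 5.0, 300.0)]

x_stats = summarize(axis_errors(estimated, reference, 0))
print(x_stats)
print(angle_deg((1.0, 0.0, 0.0), (0.99, 0.05, 0.0)))
```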

Algorithm time efficiency analysis

The rubber tapping pose estimation was divided into two stages. The first stage was multi-object detection and segmentation, and the second stage comprised trunk skeleton extraction, new tapping line location, and rubber tapping pose estimation. To ensure the normal operation of the rubber tapping robot during rubber tapping, the real-time performance of the rubber tapping pose estimation was analyzed. The average time spent on estimating the rubber tapping pose for the 288 sample images with successful rubber tapping pose estimation was used to evaluate the real-time performance of the algorithm. The results are shown in Table 5. The rubber tapping pose estimation of each natural rubber tree took about 0.2818 s, which met the real-time operation requirements of the rubber tapping robot. Skeleton extraction took up most of the calculation time; in the future, it will be accelerated by studying a method that determines the skeleton points according to the position of the tapping line.
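A per-stage average of this kind can be measured with a simple timing harness. The sketch below is only an illustration: `stage_one` and `stage_two` are hypothetical callables standing in for the detection and segmentation stage and for the skeleton extraction, tapping line location, and pose estimation stage, and the placeholder lambdas do no real work.

```python
import time

def time_stages(samples, stage_one, stage_two):
    """Return the mean per-sample time in seconds for each stage and in total."""
    if not samples:
        raise ValueError("at least one sample is required")
    t_one = 0.0
    t_two = 0.0
    for sample in samples:
        start = time.perf_counter()
        intermediate = stage_one(sample)   # e.g. detection and segmentation
        middle = time.perf_counter()
        stage_two(intermediate)            # e.g. skeleton, tapping line, pose
        end = time.perf_counter()
        t_one += middle - start
        t_two += end - middle
    n = len(samples)
    return {
        "stage_one": t_one / n,
        "stage_two": t_two / n,
        "total": (t_one + t_two) / n,
    }

# Placeholder stages; replace with the real pipeline steps.
result = time_stages(
    samples=[1, 2, 3],
    stage_one=lambda image: image,
    stage_two=lambda detections: detections,
)
print(result)
```

At the reported average of 0.2818 s per tree, the pipeline would handle roughly 3.5 trees per second if run back to back.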

Conclusions

In this study, a method of tapping line detection and rubber tapping pose estimation for natural rubber trees based on improved YOLOv8n and RGB-D information fusion was proposed. Firstly, the improved YOLOv8n was used to achieve multi-object detection and segmentation of the natural rubber tree image. Then, the trunk skeleton was extracted by combining level set and ellipse fitting, and the new tapping line was located by combining edge detection and geometric analysis. Finally, the rubber tapping pose was estimated based on the trunk skeleton and the new tapping line. The method provides theoretical support for the future development of the vision system and the end-effector motion planning of the rubber tapping robot. The main conclusions are as follows:

(1) Compared with YOLOv8n, the model size of the improved YOLOv8n was reduced by 5.9%. The detection F1, mAP0.5, mAP0.5:0.95, segmentation F1, mAP0.5, and mAP0.5:0.95 of the improved YOLOv8n were increased by 1.9%, 3.5%, 0.6%, 3.5%, 5.8%, and 1.6%, respectively, compared to YOLOv8n. This indicated that after replacing the C2F module of YOLOv8n’s backbone network with the CFB module, adding an output layer to the neck network of YOLOv8n, fusing the EMA attention mechanism into the neck network of YOLOv8n, and replacing the CIoU loss of YOLOv8n with the NWD loss, the model size was reduced, and the model detection and segmentation performance was improved, which verified the effectiveness of the model improvement.

(2) Compared with YOLOv5, YOLOv7, YOLOv8s, YOLACT, Mask R-CNN, CondInst, and RTMDet, the improved YOLOv8n had obvious advantages in detection and segmentation precision, model size, and detection efficiency, which further verified the effectiveness of the model improvement. In addition, in different scenes, the detection and segmentation P, R, mAP0.5, and mAP0.5:0.95 values of the improved YOLOv8n were higher than those of YOLOv8n, indicating that the improved YOLOv8n could better detect and segment the natural rubber tree image targets in different scenes compared to YOLOv8n.

(3) The rubber tapping pose estimation results of 300 new tapping lines in different scenes showed that the success rate of rubber tapping pose estimation in the cloudy scene was 99.38%, which was 6.18% and 10.49% higher than that in the sunny and night scenes, respectively. The success rate in the two-year scene was 100%, which was 9.62% and 3.55% higher than that in the one-year scene and the three-years-and-above scene, respectively. Across the different scenes, the average success rate of rubber tapping pose estimation was 96%, the average time consumed was 0.2818 s, the positioning errors in the x, y, and z directions were 0.69 ± 0.51 mm, 0.73 ± 0.4 mm, and 1.07 ± 0.56 mm, respectively, and the error angles in the a, o, and n directions were 1.65° ± 0.68°, 2.53° ± 0.88°, and 2.26° ± 0.89°, respectively, which met the accuracy and speed requirements of rubber tapping pose estimation for the natural rubber tapping robot.
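Conclusion (1) refers to replacing the CIoU loss with the NWD loss. As a rough illustration of that idea, the sketch below computes the normalized Wasserstein distance between two axis-aligned boxes, each modeled as a 2D Gaussian, following the published NWD formulation. The constant `c` is dataset dependent; the default of 12.8 used here is only a placeholder, and how the authors weighted or integrated the loss in YOLOv8n is not stated in this section.

```python
import math

def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance between two boxes given as (cx, cy, w, h).

    Each box is modeled as a Gaussian with mean (cx, cy) and standard
    deviations (w / 2, h / 2). `c` is a dataset-dependent constant
    (placeholder value, an assumption).
    """
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    # Squared 2-Wasserstein distance between the two Gaussians.
    w2_squared = (
        (cxa - cxb) ** 2
        + (cya - cyb) ** 2
        + ((wa - wb) / 2) ** 2
        + ((ha - hb) / 2) ** 2
    )
    return math.exp(-math.sqrt(w2_squared) / c)

def nwd_loss(box_pred, box_true, c=12.8):
    """Regression loss that is 0 for identical boxes and approaches 1 as they diverge."""
    return 1.0 - nwd(box_pred, box_true, c)

# Two small boxes that do not overlap still yield a smooth, non-zero similarity.
print(nwd((10.0, 10.0, 4.0, 4.0), (16.0, 10.0, 4.0, 4.0)))
print(nwd_loss((10.0, 10.0, 4.0, 4.0), (10.0, 10.0, 4.0, 4.0)))
```

Unlike IoU-based losses, this measure stays informative when small boxes do not overlap, which is the usual motivation given for using it on small targets.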

This study provides an effective solution for natural rubber tree tapping line detection and rubber tapping pose estimation in a rubber plantation environment, but there are still shortcomings. For example, in the sunny, night, one-year, and three-years-and-above scenes, the model detection and segmentation effects are relatively poor, and rubber tapping pose estimation still fails in some cases. In future research, the model detection and segmentation abilities will be further improved by expanding the training data set, replacing the artificial light supplement equipment, and further optimizing the network model structure. The speed of rubber tapping pose estimation will be increased by exploring a method to determine the skeleton points according to the tapping line position. The success rate of rubber tapping pose estimation will be further increased by improving the point cloud preprocessing algorithm and studying the autonomous vision algorithm.

Data availability

The datasets used and analyzed during the current study are available from the corresponding author on reasonable request.

References

Park, J. C., Ling, T., Kim, M. Y., Bae, S. W. & Ryu, S. B. Enhanced natural rubber production in rubber dandelion Taraxacum kok-saghyz roots by foliar application of a natural lipid. Ind. Crops Prod. 213, 117714 (2024).

Zhang, C. et al. Experiment of influence factors on sawing power consumption for natural rubber mechanical tapping. Trans. Chin. Soc. Agric. Eng. 34, 32–37 (2018).

Qin, Y. et al. The role of citrate synthase HbCS4 in latex regeneration of Hevea brasiliensis (para rubber tree). Ind. Crops Prod. 206, 117637 (2023).

Zhang, X. et al. Design and test of automatic rubber-tapping device with spiral movement. Trans. Chin. Soc. Agric. Mach. 54, 169–179 (2023).

Zhang, X. et al. Design and test of profiling progressive natural rubber automatic tapping machine. Trans. Chin. Soc. Agric. Mach. 53, 99–108 (2022).

Wongtanawijit, R. & Khaorapapong, T. Nighttime rubber tapping line detection in near range images. Multimed. Tools Appl. 80, 29401–29422 (2021).

Sun, Z., Yang, H., Zhang, Z., Liu, J. & Zhang, X. An improved YOLOv5-based tapping trajectory detection method for natural rubber trees. Agriculture 12, 1309 (2022).

Chen, Y., Zhang, H., Liu, J., Zhang, Z. & Zhang, X. Tapped area detection and new tapping line location for natural rubber trees based on improved mask region convolutional neural network. Front. Plant Sci. 13, 1038000 (2023).

Chen, J. et al. A method for multi-target segmentation of bud-stage apple trees based on improved YOLOv8. Comput. Electron Agr. 220, 108876 (2024).

Du, W., Jia, Z., Sui, S. & Liu, P. Table grape inflorescence detection and clamping point localisation based on channel pruned YOLOV7-TP. Biosyst. Eng. 235, 100–115 (2023).

Soeb, M. A. et al. Tea leaf disease detection and identification based on YOLOv7 (YOLO–T). Sci. Rep. 13, 6078 (2023).

Nie, L. et al. Deep learning strategies with CReToNeXt–YOLOv5 for advanced pig face emotion detection. Sci. Rep. 14, 1679 (2024).

Wang, S. M. et al. Tea yield estimation using UAV images and deep learning. Ind. Crops Prod. 212, 118358 (2024).

Zeng, T., Li, S., Song, Q., Zhong, F. & Wei, X. Lightweight tomato real-time detection method based on improved YOLO and mobile deployment. Comput. Electron Agr. 205, 107625 (2023).

Cui, M., Lou, Y., Ge, Y. & Wang, K. LES-YOLO: A lightweight pinecone detection algorithm based on improved YOLOv4-Tiny network. Comput. Electron Agr. 205, 107613 (2023).

Sun, Q. et al. Citrus pose estimation from an RGB image for automated harvesting. Comput. Electron Agr. 211, 108022 (2023).

Kim, J. et al. Tomato harvesting robotic system based on Deep-ToMaToS: Deep learning network using transformation loss for 6D pose estimation of maturity classified tomatoes with side-stem. Comput. Electron Agr. 201, 107300 (2022).

Hussain, M., He, L., Schupp, J., Lyons, D. & Heinemann, P. Green fruit segmentation and orientation estimation for robotic green fruit thinning of apples. Comput. Electron Agr. 207, 107734 (2023).

Chen, Z., Chen, J., Li, Y., Gui, Z. & Yu, T. Tea bud detection and 3D pose estimation in the field with a depth camera based on improved YOLOv5 and the optimal pose-vertices search method. Agriculture 13, 1405 (2023).

Retsinas, G., Efthymiou, N., Anagnostopoulou, D. & Maragos, P. Mushroom detection and three dimensional pose estimation from multi-view point clouds. Sensors 23, 3576 (2023).

Lin, G., Tang, Y., Zou, X., Xiong, J. & Li, J. Guava detection and pose estimation using a low-cost RGB-D sensor in the field. Sensors 19, 428 (2019).

Luo, L. et al. In-field pose estimation of grape clusters with combined point cloud segmentation and geometric analysis. Comput. Electron Agr. 200, 107197 (2022).

Zhang, G., Li, Z., Li, J. & Hu, X. CFNet: Cascade fusion network for dense prediction. arXiv preprint arXiv:2302.06052 (2023).

Ouyang, D. et al. Efficient multi-scale attention module with cross-spatial learning. In Proceedings of the IEEE International Conference on Acoustics 1–5 (2023).

Xu, C. et al. Detecting tiny objects in aerial images: A normalized Wasserstein distance and a new benchmark. ISPRS J. Photogramm. 190, 79–93 (2022).

Wang, S. et al. A new point cloud simplification method with feature and integrity preservation by partition strategy. Measurement 197, 111173 (2022).

Wang, L., Gu, X., Liu, Z., Wu, W. & Wang, D. Automatic detection of asphalt pavement thickness: A method combining GPR images and improved canny algorithm. Measurement 196, 111248 (2022).

Lawal, O. M. YOLOv5-LiNet: A lightweight network for fruits instance segmentation. Plos One 18, e02822 (2023).

Yasir, M. et al. Instance segmentation ship detection based on improved Yolov7 using complex background SAR images. Front. Mar Sci. 10, 1113669 (2023).

Bolya, D., Zhou, C., Xiao, F. & Lee, Y. J. YOLACT: Real-time instance segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision 9156–9165 (2019).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision 2980–2988 (2017).

Tian, Z., Shen, C. & Chen, H. Conditional convolutions for instance segmentation. arXiv preprint arXiv:2003.05664 (2020).

Lyu, C. et al. RTMDet: An empirical study of designing real-time object detectors. arXiv preprint arXiv:2212.07784 (2022).

Acknowledgements

This study was supported by the National Natural Science Foundation of China (U23A20176), the National Modern Agricultural Industry Technology System (CARS-33-JX2), the Hainan Provincial Academician Innovation Platform (YSPTZX202109), and the International Scientific and Technological Cooperation R&D Project in Hainan Province (GHYF2023002).

Author information

Contributions

Conceptualization, Y.C. and X.Z.; writing—original draft preparation, Y.C.; writing—review and editing, J.L. and Z.Z.; visualization, Y.C. and H.Y.; supervision, Z.Z. and X.Z.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.
