Abstract

In underground hydrogen storage projects, if the storage site previously served as a methane repository, a blend of methane and hydrogen is expected to coexist during the early stage of hydrogen storage. Knowledge of the solubility of hydrogen/methane mixtures in brine therefore becomes imperative. However, laboratory measurements of this kind are difficult because of hydrogen's extreme corrosiveness and flammability; hence, modeling methodologies are highly preferred. In this study, we seek to create accurate data-driven intelligent models based upon laboratory data using hybrid models of adaptive neuro-fuzzy inference system (ANFIS) and least squares support vector machine (LSSVM) optimized with particle swarm optimization (PSO), genetic algorithm (GA) or coupled simulated annealing (CSA) to predict hydrogen/methane mixture solubility in brine as a function of pressure, temperature, hydrogen mole fraction in the hydrogen/methane mixture and brine salt concentration. The results indicate that almost all the gathered experimental data are technically suitable for model development. The sensitivity study shows that pressure and hydrogen mole fraction in the mixture are the inputs most strongly related to the solubility data, with direct and inverse effects, respectively. The evaluation indexes and graphical methods indicate that the developed LSSVM-GA and LSSVM-CSA models are the most accurate, as they exhibit the lowest AARE% and MSE values and the highest R-squared values. These findings show that machine learning methods could be a useful tool for predicting hydrogen solubility in brine encountered in underground hydrogen storage projects, aiding in the advancement of intelligent, affordable, and secure hydrogen storage technologies.

Introduction

The widespread reliance on fossil fuels for energy production has led to significant greenhouse gas (GHG) emissions across various industrial sectors, contributing to environmental degradation and necessitating the pursuit of renewable energy resources and efficient storage solutions1. The ever-growing global demand for energy has prompted researchers to investigate innovative storage solutions, with hydrogen storage in underground reservoirs emerging as a promising alternative. This approach holds the potential to bridge the gap between energy supply and demand, mitigating the challenges associated with traditional energy storage methods2,3. The utilization of underground reservoirs as hydrogen storage facilities presents a range of benefits, such as vast storage volumes and long-term stability. In addition, the geological formations that have traditionally been employed to store oil, gas, and other hydrocarbons may also exhibit the necessary characteristics for effective hydrogen storage, facilitating the transition to a sustainable energy future4. The surplus hydrogen generated through the electrolysis of water can be stored in various underground reservoirs, including saline aquifers, permeable rock formations, and depleted oil and gas reservoirs. These subsurface geological features possess suitable properties for effective hydrogen storage, positioning them as viable options in the pursuit of sustainable energy solutions5. Estimating the potential hydrogen losses resulting from dissolution into the aquifer is a crucial aspect of evaluating the viability of underground hydrogen storage, and accurate estimation requires precise measurement and analysis. Experimental studies are commonly employed to achieve this, as they provide a high degree of accuracy and a deeper understanding of the mechanisms at play within the subsurface environment.
Recently, Perera6 performed a detailed literature review of the fluid/rock interactions that typically occur during hydrogen storage in depleted gas reservoirs and showed that they are strongly influenced by changes in interfacial tension, wettability, adsorption, diffusivity and solubility.

An important quantity in hydrogen storage is the amount of hydrogen that may dissolve in the reservoir brine, particularly alongside the methane that may be encountered when hydrogen is stored in depleted gas reservoirs. This knowledge is essential for optimizing the safety, security and efficiency of hydrogen storage in such reservoirs, as it enables a more comprehensive understanding of the dynamics within the subsurface environment7. Indeed, understanding the hydrogen dissolution process in reservoir water is crucial not only for estimating potential storage losses, but also for accurately determining the amount of hydrogen that could be involved in microbial processes. In a storage site that has previously been utilized for methane storage, the initial stage of hydrogen storage is expected to result in a mixture of both hydrogen and methane in the primary phase. This mixture can have a significant impact on the dynamics of the subsurface environment, highlighting the importance of a thorough evaluation of the potential interactions between the stored hydrogen and the existing conditions within the reservoir6. The most accurate method, as mentioned, is laboratory measurement; however, owing to hydrogen's extreme corrosiveness and flammability, experimental measurements of hydrogen solubility in brine are rarely carried out8. Hence, the preferred method for estimating hydrogen solubility in brine or pure water is the modeling approach.

Machine learning methods have been successfully applied to predictive and automation tasks in various disciplines9,10,11,12. For example, in the realm of hydrogen storage and carbon capture, Ansari et al.5 constructed radial basis function (RBF) and least squares support vector machine (LSSVM) based models to predict the solubility of hydrogen in water-based solutions and compared their results with those of several equation of state models. Thanh et al.13 developed intelligent data-driven models based upon several machine learning algorithms to predict the solubility of hydrogen in brines. Thanh et al.14 constructed artificial intelligence-based models to estimate hydrogen adsorption in different formations such as coal, sandstone and shale. Ewees et al.15 predicted the viscosity of nitrogen/carbon dioxide gaseous blends, a crucial quantity for carbon capture and sequestration projects. Zhang et al.16 constructed several machine learning models to rapidly compute CO2 flux in areas affected by underground coal fires. Thanh et al.17 implemented a number of data-driven models to predict CO2 storage capacity and oil recovery within residual oil zones. Thanh et al.18 constructed intelligent models to predict the thermodynamic characteristics of hydrogen for the purpose of its geological storage in underground formations.

Therefore, in this paper, we aim to construct intelligent models based on the adaptive neuro-fuzzy inference system (ANFIS) and the least squares support vector machine (LSSVM), optimized with particle swarm optimization (PSO), genetic algorithm (GA) or coupled simulated annealing (CSA), to predict the solubility of hydrogen/methane mixtures in underground brine reservoirs as a function of the effective parameters, namely temperature, pressure, the concentration of each salt type and the hydrogen mole fraction in the mixture, based upon an accurate experimental dataset. The validity of the prepared dataset is checked via a well-known outlier detection algorithm. In addition, a sensitivity analysis is carried out using the concept of the relevancy factor to determine how the solubility behavior changes with each effective parameter. The developed artificial intelligence-based models are evaluated via several statistical and graphical approaches. The main novelty of the current study is that it accounts for the salts most likely to exist in the brine of underground formations suitable for hydrogen storage projects, that is, sodium chloride and calcium chloride (with their concentrations treated as individual inputs), in developing intelligent models that predict hydrogen solubility in brine more precisely.

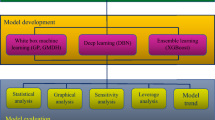

Methodology

In this section, we first present the background and mathematical description of the machine learning methods used (LSSVM and ANFIS) along with the pertinent optimization algorithms. Then, the dataset upon which the intelligent predictive models are developed and the model performance indexes are described.

Machine learning methods

Least squares support vector machine

Least Squares Support Vector Machine (LSSVM) is an extension of the standard Support Vector Machine (SVM), designed to simplify the optimization process. Traditional SVMs are powerful tools for regression and classification tasks, but their training involves solving a quadratic programming problem, which can be computationally intensive, especially for large datasets. LSSVM addresses this issue by transforming the inequality constraints of SVMs into equality constraints, which leads to a simpler and more efficient solution involving linear equations19.

LSSVM was introduced by Johan A. K. Suykens and Joos Vandewalle in the late 1990s as a way to make SVMs more practical for large-scale problems20. The primary advantage of LSSVM over traditional SVM lies in its computational efficiency, making it suitable for real-time applications and large datasets. In traditional SVMs, the optimization problem involves minimizing a cost function subject to inequality constraints, which ensure that the samples are classified correctly with a margin. This optimization problem can be complex and computationally demanding. LSSVM simplifies it by converting the inequality constraints into equality constraints, which results in a set of linear equations that are easier and faster to solve. The LSSVM optimization problem can be formulated as21,22:

Subject to:

wherein w denotes the weight vector, b is the bias term, e = [e1, e2, …, eN]T is the vector of error variables, γ signifies the regularization parameter, and ϕ(xi) is a mapping to a higher-dimensional space. To solve this optimization problem, we construct the Lagrangian function:

where α = [α1, α2, …, αN]T are the Lagrange multipliers. By taking the partial derivatives of the Lagrangian with respect to w, b, and e and setting them to zero, we obtain the following optimality conditions:

Substituting these into the Lagrangian gives the dual problem:

subject to:

where Qij=yiyjK(xi,xj), K(xi,xj)=ϕ(xi)Tϕ(xj) is the kernel function, and 1 is a vector of ones.

Note that LSSVM has two key tuning parameters that significantly affect the model's performance: the regularization parameter (γ) and the kernel parameter. The regularization parameter balances the complexity of the model (i.e., the margin size) against the sum of squared errors on the training data. In addition, the type of kernel function and its associated parameters greatly influence the performance of LSSVM. Here, the radial basis function (RBF) kernel was used because of its strong approximation and generalization capability; it is defined as23:

In which \(\:\sigma\:\) is the tuning parameter of the RBF kernel function. Therefore, \(\:\sigma\:\) and γ are the two tuning parameters of the LSSVM approach in this paper.
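To illustrate why LSSVM training reduces to a linear solve, the following Python sketch fits the regression form of LSSVM with an RBF kernel by solving the standard linear system for the bias b and the multipliers α. It is a minimal sketch under stated assumptions, not the implementation used in this study: the kernel convention exp(−‖x − z‖²/σ²), the helper names (`rbf_kernel`, `solve_linear`, `lssvm_fit`, `lssvm_predict`), the toy data and the values of γ and σ are all chosen here for illustration.

```python
import math

def rbf_kernel(x, z, sigma):
    """RBF kernel, assumed convention: exp(-||x - z||^2 / sigma^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-sq_dist / sigma ** 2)

def solve_linear(A, rhs):
    """Solve A u = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [rhs[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * u[c] for c in range(r + 1, n))
        u[r] = (M[r][n] - s) / M[r][r]
    return u

def lssvm_fit(X, y, gamma, sigma):
    """Solve [[0, 1^T], [1, K + I/gamma]] [b; alpha] = [0; y]."""
    n = len(X)
    A = [[0.0] + [1.0] * n]
    for i in range(n):
        row = [1.0] + [rbf_kernel(X[i], X[j], sigma) for j in range(n)]
        row[i + 1] += 1.0 / gamma  # ridge term from the squared-error penalty
        A.append(row)
    sol = solve_linear(A, [0.0] + list(y))
    return sol[0], sol[1:]  # bias b, multipliers alpha

def lssvm_predict(x, X, alpha, b, sigma):
    """Prediction: sum_i alpha_i K(x, x_i) + b."""
    return sum(a * rbf_kernel(x, xi, sigma) for a, xi in zip(alpha, X)) + b

# Toy data (hypothetical, not from the paper): y = x^2 on a small grid.
X_train = [[i / 5.0] for i in range(-5, 6)]
y_train = [x[0] ** 2 for x in X_train]
b, alpha = lssvm_fit(X_train, y_train, gamma=1000.0, sigma=0.5)
pred = lssvm_predict([0.5], X_train, alpha, b, sigma=0.5)
print(pred)
```

In a hybrid scheme such as the ones studied here, an outer optimizer would call `lssvm_fit` repeatedly with different (γ, σ) pairs and score each pair on held-out data.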

Adaptive neuro-fuzzy inference system

The Adaptive Neuro-Fuzzy Inference System (ANFIS) is a hybrid intelligent system that combines the strengths of neural networks and fuzzy logic. Developed by Jang in 1993, ANFIS has been widely adopted in fields such as control systems, pattern recognition, and system modeling because of its proficiency in dealing with uncertain and imprecise information. ANFIS aims to leverage the ability of fuzzy logic to handle vague and ambiguous information together with the capacity of neural networks to learn from and adapt to data. This combination enhances the system's modeling capabilities, making it more robust and accurate in applications where traditional methods may struggle. ANFIS employs a five-layer feedforward neural network to implement a Sugeno-type fuzzy inference system. Each layer in the ANFIS architecture plays a specific role in the fuzzy inference process:

- Layer 1 (Input Layer): Each node represents a fuzzy set and generates the membership grades of the inputs using membership functions:

In which µAi (x) is the membership function value, x denotes the input, and ci, ai, and bi are parameters that define the shape and position of the membership function.

- Layer 2 (Rule Layer): Nodes in this layer represent the fuzzy rules. The firing strength of each rule is calculated by applying fuzzy operators to the membership grades:

Where wi is the firing strength of the i-th rule, µAi(x) and µBi(y) are the membership functions for the inputs x and y, respectively.

- Layer 3 (Normalization Layer): The nodes in this layer normalize the firing strengths from the previous layer. This ensures that the firing strengths sum to one, providing a probabilistic interpretation:

In which \(\:\stackrel{-}{{w}_{i}}\) is the normalized firing strength of the i-th rule and \(\:{\sum\:}_{j=1}^{n}{w}_{j}\) is the sum of all firing strengths.

- Layer 4 (Defuzzification Layer): This layer evaluates the consequent of each rule, a function of the input values defined by the consequent parameters, and scales it by the corresponding normalized firing strength:

In which fi is the output of the i-th rule. pi, qi, and ri are the consequent parameters of the rule.

- Layer 5 (Output Layer): This layer aggregates the outputs of the defuzzification layer to produce the final output of the system:

In which the overall output is obtained by summing the contributions of all rules.
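The five layers described above can be sketched as a single forward pass. The following Python example is illustrative only and rests on several assumptions that are not confirmed by the text: a generalized bell membership function with parameters (a, b, c), the product as the fuzzy operator in Layer 2, first-order Sugeno consequents of the form f = p·x + q·y + r, and two inputs with two rules. The function names and all parameter values are hypothetical.

```python
def bell_mf(x, a, b, c):
    """Generalized bell membership function (assumed shape)."""
    return 1.0 / (1.0 + abs((x - c) / a) ** (2 * b))

def anfis_forward(x, y, mf_x, mf_y, consequents):
    """Forward pass of a two-input Sugeno-type ANFIS; rule i pairs A_i with B_i."""
    # Layer 1: membership grades of each input in its fuzzy sets
    mu_x = [bell_mf(x, *params) for params in mf_x]
    mu_y = [bell_mf(y, *params) for params in mf_y]
    # Layer 2: firing strength of each rule (product operator assumed)
    w = [ma * mb for ma, mb in zip(mu_x, mu_y)]
    # Layer 3: normalized firing strengths, which sum to one
    total = sum(w)
    w_bar = [wi / total for wi in w]
    # Layer 4: rule outputs f_i = p*x + q*y + r scaled by normalized strengths
    weighted = [wb * (p * x + q * y + r)
                for wb, (p, q, r) in zip(w_bar, consequents)]
    # Layer 5: overall output is the sum of all rule contributions
    return sum(weighted), w_bar

# Hypothetical premise parameters (a, b, c) and consequent parameters (p, q, r).
mf_x = [(1.0, 2.0, 0.0), (1.0, 2.0, 1.0)]
mf_y = [(1.0, 2.0, 0.0), (1.0, 2.0, 1.0)]
rules = [(1.0, 0.5, 0.0), (-0.5, 1.0, 2.0)]
output, strengths = anfis_forward(0.3, 0.7, mf_x, mf_y, rules)
print(output, strengths)
```

Training an ANFIS model amounts to adjusting the premise parameters in `mf_x` and `mf_y` and the consequent parameters in `rules`; in the hybrid models of this study that adjustment is delegated to GA or PSO.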

Optimization methods

This section presents the background of the optimization techniques used in this study.

Genetic algorithm (GA)

A Genetic Algorithm (GA) is an algorithmic paradigm that draws inspiration from biological evolution, emulating the process of natural selection and genetic inheritance. It is a powerful optimization technique often employed to locate near-optimal solutions to complex search and optimization problems across various domains. The fundamental concept of a GA is to evolve a population of candidate solutions to a problem over successive generations, gradually improving the quality of solutions through mechanisms akin to biological evolution such as crossover (recombination), selection, and mutation24.

Genetic algorithms are widely used in numerous fields including machine learning, optimization, engineering, economics, and artificial intelligence. They are particularly effective in problems where the search space is complex, large, or poorly understood, such as scheduling, design optimization, and game strategy development. The GA starts with an initial population of randomly generated individuals. In each generation, individuals are assessed using the fitness function. The fittest individuals are selected to act as parents, and a new population is created through crossover and mutation. Over many generations, the population evolves and the quality of the solutions improves. Ideally, the algorithm converges towards an optimal or near-optimal solution to the problem25,26.
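The loop described above (evaluate, select, recombine, mutate) can be sketched in a few lines of Python. This is a minimal real-coded GA under assumptions made for illustration only: tournament selection, arithmetic crossover, Gaussian mutation, elitism of one individual, and a toy two-variable objective standing in for the model-validation error that would be minimized when tuning a pair of hyperparameters. None of these choices is taken from the paper.

```python
import random

def tournament(pop, scores, rng):
    """Pick the better of two randomly chosen individuals (lower score wins)."""
    i, j = rng.sample(range(len(pop)), 2)
    return pop[i] if scores[i] < scores[j] else pop[j]

def genetic_algorithm(fitness, bounds, pop_size=30, generations=50,
                      crossover_rate=0.9, mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    # Initial population of random candidate solutions within the bounds
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    history = []  # best fitness found in each generation
    for _ in range(generations):
        scores = [fitness(ind) for ind in pop]
        best_idx = min(range(pop_size), key=lambda i: scores[i])
        history.append(scores[best_idx])
        children = [pop[best_idx][:]]  # elitism: keep the best unchanged
        while len(children) < pop_size:
            p1 = tournament(pop, scores, rng)
            p2 = tournament(pop, scores, rng)
            if rng.random() < crossover_rate:
                t = rng.random()  # arithmetic crossover between the parents
                child = [t * u + (1 - t) * v for u, v in zip(p1, p2)]
            else:
                child = p1[:]
            for k, (lo, hi) in enumerate(bounds):
                if rng.random() < mutation_rate:  # Gaussian mutation, clipped
                    step = rng.gauss(0.0, 0.1 * (hi - lo))
                    child[k] = min(hi, max(lo, child[k] + step))
            children.append(child)
        pop = children
    scores = [fitness(ind) for ind in pop]
    best_idx = min(range(pop_size), key=lambda i: scores[i])
    history.append(scores[best_idx])
    return pop[best_idx], scores[best_idx], history

# Toy objective (hypothetical): minimum at (1, -2).
def toy_objective(v):
    return (v[0] - 1.0) ** 2 + (v[1] + 2.0) ** 2

ga_bounds = [(-5.0, 5.0), (-5.0, 5.0)]
ga_best, ga_best_f, ga_history = genetic_algorithm(toy_objective, ga_bounds)
print(ga_best, ga_best_f)
```

Because the best individual is copied into the next generation unchanged, the best fitness recorded in `ga_history` never gets worse from one generation to the next.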

Particle swarm optimization (PSO)

Particle Swarm Optimization (PSO) is a heuristic optimization and search method developed by James Kennedy and Russell Eberhart in 1995, drawing inspiration from the social dynamics of flocking birds and schooling fish. In this paradigm, a population of particles, often referred to as a swarm, explores the problem space, and the particles update their positions iteratively to converge towards optimal solutions. PSO has proven to be a versatile and robust approach for tackling optimization problems in both continuous and discrete spaces across a wide range of applications27. Each particle represents a potential solution to the problem and moves through the solution space by following the best positions found by itself and its neighbors. PSO is employed in many fields such as engineering design, machine learning, control systems, and economics. It is particularly effective for optimization problems where the search space is large, complex, or not well understood. PSO has been applied to problems such as feature selection, neural network training, function optimization, and scheduling.

PSO starts with an initial swarm of particles, each with a random velocity and position in the search space. The algorithm iteratively updates the particles' positions and velocities based on their own experience (personal best) and the swarm's collective knowledge (global best). In every iteration, the velocity of each particle is updated by a formula that combines its current velocity, the distance between its current position and its personal best, and the distance between its current position and the global best. The position of each particle is then updated using its new velocity, and the new position is evaluated with the fitness function. If the new position is better than the particle's personal best, the personal best is replaced. If the new position is also better than the global best, the global best is updated as well. This process repeats for a predefined number of iterations or until the particles converge on a solution28,29.
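The velocity and position updates described above can be sketched as follows. This Python example is a minimal global-best PSO written for illustration; the inertia weight `w`, the acceleration coefficients `c1` and `c2`, the clipping of positions to the bounds, and the toy objective are assumptions, not settings reported in this study.

```python
import random

def pso(objective, bounds, n_particles=20, iterations=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions and (small) random initial velocities
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[rng.uniform(-0.1, 0.1) * (hi - lo) for lo, hi in bounds]
           for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    history = [gbest_val]
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull towards personal best + pull towards global best
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:          # update personal best
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:         # update global best
                    gbest, gbest_val = pos[i][:], val
        history.append(gbest_val)
    return gbest, gbest_val, history

# Toy objective (hypothetical): sphere function with its minimum at the origin.
def sphere(v):
    return sum(x * x for x in v)

pso_bounds = [(-5.0, 5.0), (-5.0, 5.0)]
pso_best, pso_best_val, pso_history = pso(sphere, pso_bounds)
print(pso_best, pso_best_val)
```

When PSO is used to tune a model, each particle position would encode the tuning parameters and `objective` would return a prediction error such as MSE.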

Coupled simulated annealing (CSA)

Coupled Simulated Annealing (CSA) is an optimization algorithm that extends the principles of Simulated Annealing (SA) by coupling multiple annealing processes. This approach allows for the simultaneous exploration of multiple solutions in the search space, enhancing the algorithm's ability to escape local optima and improving the chances of finding a global optimum. CSA is particularly useful in complex optimization problems where the search space is large, multi-modal, or has many local optima30, such as those found in engineering design, machine learning, and operations research. It has been applied to problems such as scheduling, circuit design, image processing, and parameter tuning in machine learning models31,32.

CSA starts with multiple chains, each initialized with a random solution and a temperature. In each iteration, each chain produces a new candidate solution by making a small perturbation to its current solution. The new solution is assessed by means of the energy function. The acceptance probability is calculated based on the energy difference and the current temperature. The new solution may be accepted even if it is worse than the current solution, depending on this probability. The chains exchange information through the coupling mechanism, which could involve sharing the best solutions, adjusting temperatures, or other interactions. The temperature of each chain is gradually reduced according to the temperature schedule, leading the chains to converge on optimal or near-optimal solutions. This process continues until a stopping criterion is met, typically convergence of the chains or a fixed number of iterations32.
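The following Python sketch shows one simplified way the chains can be coupled. It is not the exact scheme of refs. 30–32: here the coupling is assumed to enter through the acceptance probability, which for chain i is exp((E_i − E_max)/T_ac) divided by the sum of that term over all chains, so that chains currently holding worse solutions accept uphill moves more readily. The geometric cooling schedule, the Gaussian perturbation, the function name `coupled_sa` and the toy energy function are likewise assumptions made for illustration.

```python
import math
import random

def coupled_sa(energy, bounds, n_chains=5, iterations=200,
               t_gen0=1.0, t_ac0=1.0, cooling=0.98, seed=0):
    rng = random.Random(seed)
    # Each chain starts from its own random solution
    states = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_chains)]
    energies = [energy(s) for s in states]
    i_best = min(range(n_chains), key=lambda i: energies[i])
    best, best_e = states[i_best][:], energies[i_best]
    history = [best_e]  # best energy found so far
    t_gen, t_ac = t_gen0, t_ac0
    for _ in range(iterations):
        # Coupling term: computed from the current energies of all chains
        e_max = max(energies)
        weights = [math.exp((e - e_max) / t_ac) for e in energies]
        gamma = sum(weights)
        for i in range(n_chains):
            # Small perturbation whose size shrinks with the generation temperature
            probe = [min(hi, max(lo, x + rng.gauss(0.0, 1.0) * t_gen * 0.1 * (hi - lo)))
                     for x, (lo, hi) in zip(states[i], bounds)]
            e_probe = energy(probe)
            accept_prob = weights[i] / gamma
            # Always accept improvements; accept worse probes with coupled probability
            if e_probe < energies[i] or rng.random() < accept_prob:
                states[i], energies[i] = probe, e_probe
                if e_probe < best_e:
                    best, best_e = probe[:], e_probe
        t_gen *= cooling  # temperature schedule (geometric, assumed)
        t_ac *= cooling
        history.append(best_e)
    return best, best_e, history

# Toy energy function (hypothetical): minimum at (1, -2).
def toy_energy(v):
    return (v[0] - 1.0) ** 2 + (v[1] + 2.0) ** 2

csa_bounds = [(-5.0, 5.0), (-5.0, 5.0)]
csa_best, csa_best_e, csa_history = coupled_sa(toy_energy, csa_bounds)
print(csa_best, csa_best_e)
```

The best solution seen by any chain is tracked separately from the chain states, so accepting a worse probe never discards the best result found so far.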

Data gathering and appropriateness for modeling

The data used for model construction were gathered from Tawil et al.8, in which the solubility of hydrogen/methane mixtures in brines was experimentally measured under different operating conditions encountered in realistic underground hydrogen storage projects. The output parameter is the solubility of hydrogen/methane mixtures in brine, and the five input parameters are system temperature, system pressure, NaCl concentration, CaCl2 concentration and the mole fraction of hydrogen in the mixture. The statistical data of this dataset are given in Table 1. As can be seen, the approximate ranges of the parameters are as follows: pressure [293–348] bar, temperature [200–600] degrees centigrade, NaCl concentration [0.1–50] g/L and CaCl2 concentration [8–183] g/L, all of which fall within the expected range of typical hydrogen projects globally. However, more pertinent data would certainly benefit the construction of more accurate and generalized data-driven models; this remains to be carried out and scrutinized in our future research work as more relevant data become available.

The reliability of any data-driven intelligent model is highly affected by the validity of the dataset used in model development. Therefore, in this study, we check the validity of the data via the well-known Leverage technique, in which the Hat matrix is given as33:

In the above equation, X represents the design matrix of dimension m × n, where m and n signify the total number of datapoints and the number of input variables, respectively. In this methodology, outlier detection is carried out using the Williams plot, in which the standardized residuals are plotted against the Hat values. In this plot, the warning leverage is estimated via33:

Note that the accepted range of the standardized residuals is between −3 and +3. The Williams plot is given in Fig. 1, wherein outlier and suspected datapoints can be identified. The two horizontal lines mark the limits of the standardized residuals, while the vertical line denotes the warning leverage value. Datapoints located within these bounds are characterized as reliable and valid. As can be observed in Fig. 1, only 6 datapoints are detected as outliers. However, to construct generalized models, all datapoints are considered during the model development.
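The Leverage check described above can be sketched in Python as follows. The sketch assumes the conventional forms used with this technique: the Hat matrix H = X(XᵀX)⁻¹Xᵀ, whose diagonal entries are the Hat values, and the warning leverage H* = 3(n + 1)/m, with m datapoints and n input variables. The standardization of the residuals (division by their sample standard deviation) is a simplification assumed here, and the data, residuals and function names are hypothetical.

```python
import math

def solve_linear(A, rhs):
    """Solve A u = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [rhs[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * u[c] for c in range(r + 1, n))
        u[r] = (M[r][n] - s) / M[r][r]
    return u

def hat_values(X):
    """Diagonal of H = X (X^T X)^-1 X^T, one value per datapoint."""
    m, n = len(X), len(X[0])
    xtx = [[sum(X[k][i] * X[k][j] for k in range(m)) for j in range(n)]
           for i in range(n)]
    values = []
    for row in X:
        z = solve_linear(xtx, row)  # z = (X^T X)^-1 x_i
        values.append(sum(a * b for a, b in zip(row, z)))
    return values

def warning_leverage(m, n):
    """Assumed conventional form: H* = 3 (n + 1) / m."""
    return 3.0 * (n + 1) / m

def standardized(residuals):
    """Residuals centered and divided by their sample standard deviation (assumed)."""
    m = len(residuals)
    mean = sum(residuals) / m
    sd = math.sqrt(sum((r - mean) ** 2 for r in residuals) / (m - 1))
    return [(r - mean) / sd for r in residuals]

# Hypothetical design matrix (6 datapoints, 2 inputs) and model residuals.
X_demo = [[1.0, 0.5], [2.0, 1.5], [3.0, 1.0], [4.0, 3.5], [5.0, 2.0], [6.0, 4.5]]
residuals_demo = [0.02, -0.01, 0.03, -0.04, 0.01, -0.02]
h = hat_values(X_demo)
h_star = warning_leverage(len(X_demo), len(X_demo[0]))
sr = standardized(residuals_demo)
# A datapoint is treated as reliable if it lies inside both bounds.
reliable = [hv <= h_star and abs(s) <= 3.0 for hv, s in zip(h, sr)]
print(h, h_star, reliable)
```

Plotting `sr` against `h`, with horizontal lines at ±3 and a vertical line at `h_star`, gives the Williams plot.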

Evaluation indexes

To assess the accuracy and robustness of the developed models, the following accuracy indexes are calculated for each model34:

Relative error percent (RE%):

Absolute relative error (ARE%):

Average absolute relative error (AARE%):

Mean square error (MSE):

Determination coefficient (R2):

In which N is the number of datapoints in the dataset, i is the index of a datapoint within the dataset, and the subscripts pred and exp signify the modeled and experimental values, respectively.
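For concreteness, the indexes listed above can be computed as in the Python sketch below. The definitions are the commonly used ones and are assumptions in two respects: the sign convention of RE% (predicted minus experimental, divided by experimental) and the form of R² (one minus the ratio of the residual sum of squares to the total sum of squares about the experimental mean). The function names and the sample values are hypothetical.

```python
def relative_errors(y_exp, y_pred):
    """RE% for each datapoint (assumed sign convention: pred - exp)."""
    return [100.0 * (p - e) / e for e, p in zip(y_exp, y_pred)]

def absolute_relative_errors(y_exp, y_pred):
    """ARE% for each datapoint."""
    return [abs(re) for re in relative_errors(y_exp, y_pred)]

def aare(y_exp, y_pred):
    """AARE%: mean of the absolute relative errors."""
    are = absolute_relative_errors(y_exp, y_pred)
    return sum(are) / len(are)

def mse(y_exp, y_pred):
    """Mean square error."""
    return sum((p - e) ** 2 for e, p in zip(y_exp, y_pred)) / len(y_exp)

def r_squared(y_exp, y_pred):
    """Determination coefficient (assumed form: 1 - SS_res / SS_tot)."""
    mean_exp = sum(y_exp) / len(y_exp)
    ss_res = sum((p - e) ** 2 for e, p in zip(y_exp, y_pred))
    ss_tot = sum((e - mean_exp) ** 2 for e in y_exp)
    return 1.0 - ss_res / ss_tot

# Hypothetical experimental and predicted values.
y_exp_demo = [1.0, 2.0, 4.0]
y_pred_demo = [1.1, 1.8, 4.0]
print(aare(y_exp_demo, y_pred_demo),
      mse(y_exp_demo, y_pred_demo),
      r_squared(y_exp_demo, y_pred_demo))
```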

Note that, in constructing the data-driven models, the input variables are temperature, pressure, NaCl concentration, CaCl2 concentration and hydrogen mole fraction in the hydrogen/methane mixture, while the output variable is the hydrogen/methane solubility in the brine. Moreover, the total dataset is divided into training and testing datasets, which contain 80% and 20% of the total data, respectively. The input and output variables are normalized to lower the effects of data variations during the modeling process via the following relation:

In which nnorm is the normalized value; nmax is the maximum value in the dataset, nmin is the minimum value in the dataset and n is the actual datapoint.
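A minimal Python sketch of these two preprocessing steps is given below. It assumes that the normalization maps each variable linearly onto [0, 1], that is, n_norm = (n − n_min)/(n_max − n_min); the target interval is not confirmed by the text, and a mapping onto [−1, 1] would be handled analogously. The random shuffling with a fixed seed and the function names are likewise assumptions.

```python
import random

def min_max_normalize(values):
    """Map values linearly onto [0, 1] (assumed target interval)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def denormalize(norm_values, lo, hi):
    """Invert the normalization to recover values in original units."""
    return [lo + v * (hi - lo) for v in norm_values]

def train_test_split(rows, train_fraction=0.8, seed=0):
    """Shuffle the rows and split them into training and testing subsets."""
    rng = random.Random(seed)
    idx = list(range(len(rows)))
    rng.shuffle(idx)
    cut = int(round(train_fraction * len(rows)))
    return [rows[i] for i in idx[:cut]], [rows[i] for i in idx[cut:]]

# Hypothetical column of values and a hypothetical dataset of 10 rows.
column = [2.0, 4.0, 6.0]
normalized = min_max_normalize(column)
rows_demo = list(range(10))
train_rows, test_rows = train_test_split(rows_demo)
print(normalized, train_rows, test_rows)
```

Model predictions made in normalized units can be mapped back with `denormalize` before the evaluation indexes are computed.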

Results and discussion

Sensitivity analysis

In this section, we perform a sensitivity study to understand the effect of each input parameter (i.e., temperature, pressure, hydrogen mole fraction in the mixture and the concentration of each salt type) on the solubility of the hydrogen/methane mixture in brine. For this purpose, the relevancy factor is estimated for each input parameter via the following mathematical expression:

The minimum and maximum possible values of this factor are −1 and +1, respectively. Two important pieces of information arise here. First, a negative relevancy factor indicates an inverse relation between the input parameter and the output parameter, while a positive value indicates a direct relationship. Second, the larger the magnitude of the relevancy factor, the stronger the relationship between the input and output variables. With regard to these explanations, Fig. 2 shows the calculated relevancy factor of each input parameter with respect to the mixture solubility in brine. As can be seen, temperature, H2 mole fraction in the mixture and CaCl2 concentration have an inverse relationship with the hydrogen/methane solubility because their relevancy factors are negative. In contrast, pressure and NaCl concentration are directly correlated with the output variable since their relevancy factors are positive. In addition, pressure is the input most strongly positively correlated with solubility, while H2 mole fraction in the mixture is the input most strongly negatively correlated with it. Temperature and the salt concentrations (both NaCl and CaCl2) are only weakly correlated with the solubility. These findings are justifiable. For instance, higher pressure increases gas solubility in liquids, which is consistent with Henry's law. Particularly for hydrogen solubility in brine, increased pressure enhances dissolution by forcing more hydrogen molecules into the brine, overcoming resistance from intermolecular forces within the liquid. Also, the inverse relationship between hydrogen mole fraction and solubility is attributed to partial saturation effects within the solution: as the mole fraction of hydrogen increases, the system approaches saturation, thereby reducing the additional solubility capacity. This also relates to the salting-out effect, where certain ions in brine can reduce hydrogen solubility at higher concentrations.
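As a worked illustration, the relevancy factor is commonly computed as the Pearson correlation coefficient between one input variable and the output. The Python sketch below assumes that form, which is consistent with the range of −1 to +1 and the sign interpretation given above; the function name and the sample values are hypothetical and are not taken from the dataset.

```python
import math

def relevancy_factor(inputs, outputs):
    """Pearson correlation between one input variable and the output (assumed form)."""
    n = len(inputs)
    mean_in = sum(inputs) / n
    mean_out = sum(outputs) / n
    cov = sum((a - mean_in) * (b - mean_out) for a, b in zip(inputs, outputs))
    var_in = sum((a - mean_in) ** 2 for a in inputs)
    var_out = sum((b - mean_out) ** 2 for b in outputs)
    return cov / math.sqrt(var_in * var_out)

# Hypothetical values: an output that rises with one input and falls with another.
pressure_demo = [1.0, 2.0, 3.0, 4.0]
h2_fraction_demo = [0.8, 0.6, 0.4, 0.2]
solubility_demo = [2.0, 4.0, 6.0, 8.0]
r_pressure = relevancy_factor(pressure_demo, solubility_demo)
r_fraction = relevancy_factor(h2_fraction_demo, solubility_demo)
print(r_pressure, r_fraction)
```

In this toy case the first input has a direct relationship with the output (factor near +1) and the second an inverse one (factor near −1).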

Models’ evaluation

In this part, the robustness of the intelligent models constructed from the experimental dataset is assessed via statistical evaluation indexes. The developed hybrid models are called LSSVM-PSO, LSSVM-GA, LSSVM-CSA, ANFIS-GA and ANFIS-PSO. The tuning parameters obtained for the LSSVM structures of the hybrid models are given in Table 2. In addition, Figs. 3, 4 and 5 show the coefficient of determination (R2), MSE and AARE%, respectively, for all the developed hybrid models based upon the training, testing and total datapoints. As can be seen, LSSVM hybrid models are generally more robust than ANFIS-based models in terms of these evaluation indexes. In detail, LSSVM-CSA appears to be the most accurate model since it has the highest R2 values and the lowest MSE and AARE% values.

The robustness of the formed models is further validated through the application of crossplots that juxtapose the predicted data with empirical results. As illustrated in Fig. 6, the anticipated outcomes for both the training and testing datasets are presented in conjunction with the actual values, along with the corresponding fitted linear equations. The close proximity of the estimated values derived from the intelligent models to the 45-degree reference line signifies a noteworthy degree of accuracy. This pronounced correlation underscores the models’ precision and reliability, thereby affirming their efficacy and relevance for predictive applications.

To enhance the validation of the models, we analyzed the relative error values by plotting them against the experimental data, as illustrated in Fig. 7. This graphical representation effectively showcases the models’ reliability and robustness. The proximity of the relative errors to the zero-error line indicates a minimal discrepancy between the predicted and observed values, thereby affirming the accuracy and trustworthiness of the models. This concentration of errors further highlights the models’ effectiveness and underscores their potential for reliable predictive analytics.

The cumulative frequency against ARE% for all datapoints is plotted for all constructed models in Fig. 8. Evidently, for the hybrid LSSVM models all the datapoints have ARE% smaller than about 25%, whereas for the ANFIS-based models ARE% extends to about 200%. This clearly shows that the hybrid LSSVM models are more accurate than the ANFIS-based models.

As another evaluation index, the distribution of ARE% for each input variable is indicated in Fig. 9. As can be observed, the closeness of most of the data to zero horizontal line for LSSVM based models shows they are more accurate than ANFIS-based models.

In addition to the statistical indexes and graphs presented above, we need to check the developed models in terms of trend prediction. In this regard, Fig. 10 shows the variation of solubility with temperature, pressure and hydrogen mole fraction in the hydrogen/methane mixture. As can be seen, LSSVM-CSA and LSSVM-GA are the only data-driven models that accurately capture the trends for these input parameters. In contrast, LSSVM-PSO and ANFIS-PSO, for instance, fail to capture the trend with respect to temperature. Note that temperature and hydrogen mole fraction in the mixture are inversely related to solubility whereas pressure is directly related to it, as previously indicated by the relevancy factor. Note also that the relationship between pressure and hydrogen solubility in brine is strongest at low pressures and gradually weakens as pressure increases.

Based on the evaluation metrics and the graphical plots discussed, we can conclude that the LSSVM-based hybrid models are the most accurate of the developed data-driven models; however, considering the trend prediction plots, only LSSVM-CSA and LSSVM-GA can be considered reliable and accurate tools for predicting hydrogen solubility in brine in underground hydrogen storage projects.

These findings suggest that machine learning methods could be beneficial for predicting the solubility of hydrogen in brine for underground hydrogen storage projects, advancing efficient and accurate storage methods. Furthermore, the utilization of significantly larger datasets such as incorporating more real-time data from ongoing real hydrogen storage projects would be advantageous in creating stronger, as well as more accurate and universal models, a concept that should be examined in upcoming research endeavors.

Conclusions

In this study, intelligent data-driven hybrid models of LSSVM-CSA, LSSVM-PSO, LSSVM-GA, ANFIS-PSO, and ANFIS-GA are developed based upon experimental datapoints. The results show that almost all of these data are reliable and valid for the model development process. In addition, the calculated relevancy factor for each input parameter shows that pressure has the strongest direct relationship with the solubility, while hydrogen mole fraction in the mixture has the strongest inverse relationship with it. The evaluation process indicates that LSSVM-GA and LSSVM-CSA are the most accurate models, as indicated by the obtained values of R-squared, MSE and AARE%. The developed models can be used without the need for laboratory work, which is often laborious, time-consuming and particularly hazardous in the field of hydrogen storage in underground formations. These results indicate that machine learning techniques have the potential to be a valuable resource for forecasting the solubility of hydrogen in brine found in underground hydrogen storage initiatives, contributing to the progress of smart, cost-effective, and safe hydrogen storage methods. In addition, much larger datasets would be beneficial to the development of more robust and generalized models, which should be examined in future research.

Data availability

Data are available from the corresponding author upon request.

References

Kumar, S. et al. A comprehensive review of value-added CO2 sequestration in subsurface saline aquifers. J. Nat. Gas Sci. Eng. 81, 103437 (2020).

Salina Borello, E. et al. Underground hydrogen storage safety: Experimental study of hydrogen diffusion through caprocks. Energies 17(2), 394 (2024).

Buscheck, T. A. et al. Underground storage of hydrogen and hydrogen/methane mixtures in porous reservoirs: Influence of reservoir factors and engineering choices on deliverability and storage operations. Int. J. Hydrog. Energy 49, 1088–1107 (2024).

Tarkowski, R., Uliasz-Misiak, B. & Tarkowski, P. Storage of hydrogen, natural gas, and carbon dioxide–geological and legal conditions. Int. J. Hydrog. Energy 46(38), 20010–20022 (2021).

Ansari, S. et al. Prediction of hydrogen solubility in aqueous solutions: Comparison of equations of state and advanced machine learning-metaheuristic approaches. Int. J. Hydrog. Energy 47(89), 37724–37741 (2022).

Perera, M. A review of underground hydrogen storage in depleted gas reservoirs: Insights into various rock-fluid interaction mechanisms and their impact on the process integrity. Fuel 334, 126677 (2023).

Zivar, D., Kumar, S. & Foroozesh, J. Underground hydrogen storage: A comprehensive review. Int. J. Hydrog. Energy 46(45), 23436–23462 (2021).

Tawil, M. et al. Solubility of H2-CH4 mixtures in brine at underground hydrogen storage thermodynamic conditions. Front. Energy Res. 12, 1356491 (2024).

Abdulshahed, A. M., Longstaff, A. P. & Fletcher, S. The application of ANFIS prediction models for thermal error compensation on CNC machine tools. Appl. Soft Comput. 27, 158–168 (2015).

Boyacioglu, M. A. & Avci, D. An adaptive network-based fuzzy inference system (ANFIS) for the prediction of stock market return: The case of the Istanbul stock exchange. Expert Syst. Appl. 37(12), 7908–7912 (2010).

Vakhshouri, B. & Nejadi, S. Prediction of compressive strength of self-compacting concrete by ANFIS models. Neurocomputing 280, 13–22 (2018).

Akkaya, E. ANFIS based prediction model for biomass heating value using proximate analysis components. Fuel 180, 687–693 (2016).

Thanh, H. V. et al. Data-driven machine learning models for the prediction of hydrogen solubility in aqueous systems of varying salinity: Implications for underground hydrogen storage. Int. J. Hydrog. Energy 55, 1422–1433 (2024).

Thanh, H. V. et al. Artificial intelligence-based prediction of hydrogen adsorption in various kerogen types: Implications for underground hydrogen storage and cleaner production. Int. J. Hydrog. Energy 57, 1000–1009 (2024).

Ewees, A. A. et al. Smart predictive viscosity mixing of CO2–N2 using optimized dendritic neural networks to implicate for carbon capture utilization and storage. J. Environ. Chem. Eng. 12(2), 112210 (2024).

Zhang, H. et al. Catalyzing net-zero carbon strategies: Enhancing CO2 flux prediction from underground coal fires using optimized machine learning models. J. Clean. Prod. 441, 141043 (2024).

Vo Thanh, H., Sugai, Y. & Sasaki, K. Application of artificial neural network for predicting the performance of CO2 enhanced oil recovery and storage in residual oil zones. Sci. Rep. 10(1), 18204 (2020).

Thanh, H. V. et al. Modeling the thermal transport properties of hydrogen and its mixtures with greenhouse gas impurities: A data-driven machine learning approach. Int. J. Hydrog. Energy 83, 1–12 (2024).

Van Gestel, T. et al. Least Squares Support Vector Machines (2002).

Suykens, J. A. & Vandewalle, J. Least squares support vector machine classifiers. Neural Process. Lett. 9, 293–300 (1999).

Suykens, J. A. & Vandewalle, J. Recurrent least squares support vector machines. IEEE Trans. Circuits Syst. I: Fundamental Theory Appl. 47(7), 1109–1114 (2000).

Van Gestel, T. et al. Benchmarking least squares support vector machine classifiers. Mach. Learn. 54, 5–32 (2004).

Chapelle, O. et al. Choosing multiple parameters for support vector machines. Mach. Learn. 46, 131–159 (2002).

Mitchell, M. An Introduction to Genetic Algorithms (MIT Press, 1998).

Goldberg, D. E. Genetic Algorithms in Search, Optimization, and Machine Learning (Addison-Wesley, 1989).

Holland, J. Adaptation in Natural and Artificial Systems (University of Michigan Press, 1975).

Kennedy, J. & Eberhart, R. Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks (IEEE, 1995).

Shi, Y. Particle Swarm Optimization: Developments, Applications and Resources. In Proceedings of the Congress on Evolutionary Computation (IEEE Cat. No. 01TH8546) (IEEE, 2001).

Poli, R., Kennedy, J. & Blackwell, T. Particle swarm optimization: An overview. Swarm Intell. 1, 33–57 (2007).

Ingber, L. Simulated annealing: Practice versus theory. Math. Comput. Model. 18(11), 29–57 (1993).

Azencott, R. Simulated Annealing: Parallelization Techniques (1992).

Goffe, W. L., Ferrier, G. D. & Rogers, J. Global optimization of statistical functions with simulated annealing. J. Econ. 60(1–2), 65–99 (1994).

Dufrenois, F. & Noyer, J. C. Discriminative Hat Matrix: A new tool for outlier identification and linear regression. In The 2011 International Joint Conference on Neural Networks (IEEE, 2011).

Bemani, A., Madani, M. & Kazemi, A. Machine learning-based estimation of nano-lubricants viscosity in different operating conditions. Fuel 352, 129102 (2023).

Author information

Contributions

F.M.A.A. and M.J.A. developed the required codes for this work. K.V. and N.N. prepared the experimental dataset needed for the modeling work. R.P.K.N., B.K., K.K., and S.B.A. ran the codes and extracted all the plots. S.S.J., A.M.A., M.F.A., and A.K. wrote the initial draft of the paper and later edited it.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Altalbawy, F.M.A., Al-saray, M.J., Vaghela, K. et al. Machine-learning based prediction of hydrogen/methane mixture solubility in brine. Sci Rep 14, 30227 (2024). https://doi.org/10.1038/s41598-024-80959-1

DOI: https://doi.org/10.1038/s41598-024-80959-1
