Abstract

Age-related sensory declines are unavoidable and closely linked to decreased visual, auditory, and cognitive functions. However, the interrelations of these declines remain poorly understood. Despite extensive studies in each domain, shared age-related characteristics are complex and may not consistently manifest direct relationships at the individual level. We investigated the link between visual and auditory perceptual declines in healthy aging and their relation to cognitive function using six psychophysical and three cognitive tasks. Eighty young and older healthy adults participated, revealing a general age-related decline. Young adults consistently outperformed older adults in all tasks. Critically, the performance in visual tasks significantly correlated with performance in auditory tasks in older adults. This suggests a domain-general decline in perception, where declines in vision are related to declines in audition within individuals. Additionally, perceptual performance in older adults decreased monotonically year by year. Working memory performance significantly correlated with perceptual performance across both age groups and modalities, further supporting the hypothesis of a domain-general decline. These findings highlight the complex and interconnected nature of sensory and cognitive declines in aging, providing a foundation for future translational research focused on enhancing cognitive and perceptual abilities to promote healthy aging and ultimately improve the quality of life for older adults.

Introduction

The average age of the population is increasing1,2. In particular, the number of people over the age of 60 is expected to reach two billion, representing 38% of the worldwide population by 20501,2. Age-related sensory decline usually affects daily tasks such as safe mobility3, reading4,5, and socializing6,7. Understanding these changes is crucial for older adults’ functioning8. Here, we investigate how healthy aging impacts visual perception, auditory perception, and cognitive abilities, and how these domains are interconnected within the same individuals.

Age-related perceptual changes in vision occur even in the absence of clinical or pathological conditions9. Previous studies have consistently shown that healthy aging can alter various aspects of vision, leading to declines in perceptual abilities (for reviews, see9,10) such as visual acuity11, contrast sensitivity12, spatiotemporal integration of motion13, and motion processing14. Auditory decline is also a common aspect of aging, even without clinical hearing impairments15. This decline is accompanied by a decrease in hearing sensitivity16, which affects both lower and higher level processing, such as sound detection, discrimination, and speech understanding, especially in noisy environments17,18,19. Previous studies have demonstrated a decline in auditory temporal perception and frequency discrimination20, phoneme discrimination deterioration21,22, and challenges with more complex stimuli, such as speech understanding in noise15,23,24,25,26.

While declines in vision10 and audition15 with age are well documented, few studies have directly compared sensory functions across auditory and visual modalities within the same participants, linking individual differences in performance across modalities. Some studies suggest unique declines for each sensory system27, whereas others find similarities across senses28. For example, a recent electrophysiological study showed a positive correlation between auditory and visual responses in older adults29. However, how these differences relate to perceptual differences remains unclear29. Moreover, other studies have suggested similarities and modality-specific aspects across age groups in auditory and visual perceptual tasks, depending on the task type30. Hence, the extent to which declines in auditory and visual function correlate or reflect common age-related changes remains unclear.

Perceptual changes are accompanied by changes in brain structure or function as individuals age31, demonstrating that aging may affect both early sensory and higher level cognitive functions32. Therefore, studies suggest that declines in vision31 and audition33 may correlate with cognitive decline, impacting attention, memory, and processing speed, which are also considered more vulnerable to age-related decline22,31,32,34,35,36,37. Previous studies have suggested that these cognitive abilities are crucial in shaping individuals’ auditory38,39 and visual perceptions40,41, particularly in the context of healthy aging. As explained above, studies have illustrated this link between visual and auditory perception and cognitive performance; however, they have often demonstrated this link in auditory42 and visual modalities43 separately. A few studies have investigated the three domains together; for example, Sternang et al.44 found a relationship between auditory and visual modalities, as well as working memory, among healthy older adults. However, they tested only a single sensory task for each modality, namely threshold acuity. Still, this relationship reinforces the points highlighted in Wahl and Heyl’s45 review, which emphasized a correlation between an individual’s age and their functioning in vision, audition, and cognition. Yet, the exact nature of the relationship between age-related declines in cognitive and sensory functions remains uncertain. Therefore, in the current study, we aim to explore the interaction of auditory, visual, and cognitive domains to unravel the links between perceptual and cognitive age-related decline.

In this context, the literature proposes two different hypotheses to explain the link between age-related perceptual declines and cognitive decline. The “Cascade” hypothesis, posited by Birren46, suggests that age-related deficits in the sensory system may lead to a “domino effect”, accelerating cognitive decline due to potential adverse effects on cognitive function from inadequate or uneven sensory stimulation (supported by later studies47,48). Conversely, a review by Wahl and Heyl45 presents another hypothesis, framing the correlation between age and performance in vision, audition, and cognition as the “Common Cause” hypothesis. This hypothesis suggests that the observed declines in sensory and cognitive functions are due to common age-related neuronal degradation that impacts a wide variety of characteristics directly associated with central nerve processing capacity. This is supported by the electrophysiological study conducted by Alain et al.29. Although that study is very informative, it did not evaluate behavioral performance, leaving it unclear how these electrophysiological findings translate into perceptual variations among participants. Moreover, its assessments focused solely on older adults. Another study reinforcing this hypothesis is Humes et al.35, which employed perceptual psychophysical measures including threshold sensitivity, temporal gap detection, temporal order identification, and temporal masking across audition, vision, and touch, alongside cognitive abilities. While that study demonstrated a link between cognitive-visual and cognitive-auditory processing throughout the adult lifespan, from ages 18 to 87, it did not directly explore the relationship between auditory and visual decline. Instead, it merely established a correlation between visual and auditory acuity without considering the correlation in temporal processing abilities. 
Importantly, some participants had a level of hearing loss, which was considered acceptable within their defined maximum acceptable hearing thresholds. Still, some studies, including Baltes and Lindenberger49 and Humes et al.30, support both hypotheses. These findings suggest that while shared age-related variances may exist among sensory and cognitive capabilities in older adults, the effects of aging on these domains are complex and may not consistently manifest direct relationships at the individual level.

It is important to note that the investigations in the mentioned studies had several limitations. These include a lack of examination of auditory and visual perception under adverse conditions, and the use of behavioral measures that focus on a single task within each modality, thereby limiting our understanding of the connections between perception and aging across modalities. Additionally, these studies often fail to examine correlations across different domains, that is, how vision and audition are directly related to each other. Taken together and as introduced above, previous studies typically focused on the links between cognitive measures and a single sensory modality: linking cognition to audition33 or cognition to vision31. Few studies have examined links across multiple modalities, and those that did typically tested only a single task per modality, focusing mainly on threshold acuity. Missing from the literature is an examination of individual-level differences in multiple perceptual processes across modalities in aging36. Therefore, while we are aware of the connection between various sensory-cognitive functions, we are unsure of its nature. Understanding how visual and auditory declines are related is especially important for designing effective protocols that prevent or limit declines in both modalities, such as perceptual learning, which provides a non-invasive approach to improving perception and aligns with the goal of maintaining independence and safety in older populations. This perspective supports Beard50, who suggests that health systems need more individual-centered approaches to effectively serve aging populations by evaluating different abilities, such as cognitive and sensory capacities in older adults.

In the current study, we examine the relationship between visual and auditory perception and cognitive function across two age groups – young adults and older adults. Different from previous research, we investigate age-related differences in both visual and auditory domains within the same participants and with the same target performance accuracy in adverse conditions. We examine three psychophysical tasks in each modality. As a first step, in each sensory modality, we examine tasks that have been demonstrated to show reduced performance in older adults and capture a range of sensory processing levels. In audition, we use frequency discrimination20, speech-in-noise in pseudoword discrimination and sentence recognition17,18,19. In vision, we use motion in noise13,14, spatial frequency, and contrast sensitivity12. These tasks cover a broad range of sensory function and are especially focused on sensory abilities in adverse conditions. For each sensory task we measure thresholds using an adaptive staircase procedure. This approach enhances sensitivity to subtle sensory changes while also minimizing concerns regarding ceiling/floor effects and response biases. For our cognitive tasks, we test abilities that decline in older adults and impact behavior51, namely processing speed, inhibitory control and attention, and working memory, which are all reduced in aging22,52,53,54. Our participant recruitment protocol, which includes healthy young and older adults, enables us to focus on healthy aging while excluding those with diagnosed clinical conditions and sensory impairments. We are guided by two independent main research questions: (1) Does healthy aging impact perception in auditory and visual modalities equally, or is it more specific to one modality? (2) Are auditory and visual perception declines linked to cognitive decline? To address these questions, we employ both within-subject and between-subject designs. 
This approach enables us to directly compare vision and audition both within the same age group and across age groups.
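To make the adaptive staircase idea concrete, the following is a minimal Python sketch of a generic transformed up-down procedure. The 3-down/1-up rule, the additive step size, the reversal-averaging threshold estimate, and all names (`Staircase`, `update`, `threshold`) are illustrative assumptions; the procedure actually used in this study may differ on each of these points.

```python
from dataclasses import dataclass, field


@dataclass
class Staircase:
    """Generic n-down/1-up staircase (hypothetical sketch, not the study's code)."""
    level: float              # current stimulus level (e.g. contrast, SNR, frequency difference)
    step: float               # additive step size (assumed fixed here)
    n_down: int = 3           # consecutive correct responses needed to make the task harder
    min_level: float = 0.0    # floor for the stimulus level
    streak: int = 0           # current run of consecutive correct responses
    last_direction: int = 0   # -1 = last change made the task harder, +1 = easier
    reversals: list = field(default_factory=list)

    def update(self, correct: bool) -> float:
        """Register one response and return the level for the next trial."""
        direction = 0
        if correct:
            self.streak += 1
            if self.streak == self.n_down:
                self.streak = 0
                direction = -1        # harder: lower the level
        else:
            self.streak = 0
            direction = +1            # easier: raise the level
        if direction != 0:
            # A reversal is a change in the direction of travel.
            if self.last_direction != 0 and direction != self.last_direction:
                self.reversals.append(self.level)
            self.last_direction = direction
            self.level = max(self.min_level, self.level + direction * self.step)
        return self.level

    def threshold(self, last_n: int = 6) -> float:
        """Estimate the threshold as the mean of the last `last_n` reversal levels."""
        if not self.reversals:
            raise ValueError("no reversals recorded yet")
        tail = self.reversals[-last_n:]
        return sum(tail) / len(tail)


if __name__ == "__main__":
    sc = Staircase(level=1.0, step=0.1)
    for response in [True, True, True, False, True, True, True, False]:
        sc.update(response)
    print(sc.reversals, sc.threshold())
```

A 3-down/1-up rule converges on roughly 79% correct, which is one way to hold the target accuracy constant across tasks and modalities; other rules target other accuracy levels.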

Results

Older adults perform poorer than young adults in auditory and visual tasks

First, we found no significant interaction between age and task within modality (age group x three nested tasks within each modality: auditory: F (4,156) = 0.11, p = 0.98; visual: F (4,156) = 1.27, p = 0.28). This suggests that the impact of age did not differ significantly across tasks. Indeed, age had a significant main effect in both audition (F (1,156) = 33.52, p < 0.001, ηp² = 0.18) and vision (F (1,156) = 56.46, p < 0.001, ηp² = 0.27), indicating that older adults performed more poorly than young adults.
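As a rough consistency check on the effect sizes above, partial eta squared can be recovered from an F statistic and its degrees of freedom using the standard identity ηp² = (F · df_effect) / (F · df_effect + df_error). The sketch below assumes this identity applies to the reported models; the function name `partial_eta_squared` is illustrative, and the authors' software may compute the quantity differently.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from F and its degrees of freedom (classical ANOVA identity)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


if __name__ == "__main__":
    # Main effect of age in audition: F(1,156) = 33.52
    print(round(partial_eta_squared(33.52, 1, 156), 2))  # 0.18
    # Main effect of age in vision: F(1,156) = 56.46
    print(round(partial_eta_squared(56.46, 1, 156), 2))  # 0.27
```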

We conducted post-hoc independent-samples t-tests to compare the two age groups in each task. After applying Bonferroni corrections, we found significant differences in thresholds: young adults outperformed older adults in all three sensory tasks within both the auditory (see Fig. 1d-f) and visual (see Fig. 2d-f) modalities.

Auditory Perceptual Tasks. (a-c). Depicts the trial sequences used to assess auditory perceptual performance (a) Frequency Discrimination (b) Speech-in-noise Pseudoword Discrimination (c) Speech-in-noise Sentence Recognition. (d-f). Auditory performance across age groups. Individual JNDs (open symbol) and mean JNDs (closed symbol) for young adults (N = 40, light green circles) and for older adults (N = 40, dark green triangles) across the three auditory tasks. Independent samples t-tests with Bonferroni corrections86 to address multiple comparisons indicate that younger adults outperformed older adults across the three auditory tasks: Frequency discrimination task: young adults M = 37.98, SD = 27.28; older adults M = 106.32, SD = 91.20, t (78) = -4.53, p < 0.001, d = -1.01; Speech-in-noise pseudoword discrimination: young adults M = -9.82, SD = 2.87; older adults M = -6.64, SD = 4.92, t (78) = -3.52, p < 0.001, d = -0.78; Speech-in-noise sentence recognition: young adults M = -7.54, SD = 3.36; older adults M = -4.37, SD = 3.80, t (78) = -3.94, p < 0.001, d = -0.88. Error bars represent standard errors, ***p < 0.001.
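The group comparisons reported in this caption can be approximately reproduced from the summary statistics alone. The sketch below computes Cohen's d with a pooled standard deviation and the equal-variance Student's t statistic; the function names and the family-wise alpha of 0.05 are assumptions, and small discrepancies from the reported values are expected because the means and SDs are rounded.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1, sd1, n1, m2, sd2, n2) -> float:
    """Standardized mean difference (group 1 minus group 2)."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def students_t(m1, sd1, n1, m2, sd2, n2):
    """Equal-variance independent-samples t statistic and its degrees of freedom."""
    se = pooled_sd(sd1, n1, sd2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / se, n1 + n2 - 2


def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison significance threshold under a Bonferroni correction."""
    return alpha / n_comparisons


if __name__ == "__main__":
    # Frequency discrimination: young (M = 37.98, SD = 27.28) vs older (M = 106.32, SD = 91.20)
    young = (37.98, 27.28, 40)
    older = (106.32, 91.20, 40)
    d = cohens_d(*young, *older)
    t, df = students_t(*young, *older)
    print(f"d = {d:.2f}, t({df}) = {t:.2f}")
    # alpha = 0.05 is an assumed family-wise level across the three tasks
    print(f"per-test alpha = {bonferroni_alpha(0.05, 3):.4f}")
```

With equal group sizes, t and d are related by t = d · sqrt(n/2), which is why the two statistics in the caption move together.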

Visual Perceptual Tasks. (a-c). Depicts the trial sequences used to assess visual perceptual performance (a) Motion Direction Discrimination (b) Spatial Frequency Discrimination (c) Contrast Threshold Discrimination. (d-f). Visual performance across age groups. Individual thresholds (open symbol) and mean thresholds (closed symbol) for young adults (N = 40, light red circles) and for older adults (N = 40, dark red triangles) across the three visual tasks. Independent samples t-tests with Bonferroni corrections86 to address multiple comparisons indicate that younger adults outperformed older adults across the three visual tasks: Motion direction discrimination task: young adults M = 0.19, SD = 0.12; older adults M = 0.41, SD = 0.21, t (78) = -5.68, p < 0.001, d = -1.27; Spatial frequency discrimination: young adults M = 0.08, SD = 0.05; older adults M = 0.27, SD = 0.24, t (78) = -4.74, p < 0.001, d = -1.06; Contrast threshold discrimination: young adults M = -0.05, SD = 0.06; older adults M = 0.55, SD = 0.37, t (78) = -8.23, p < 0.001, d = -1.84. Error bars represent standard errors, ***p < 0.001.

No significant difference in age related declines in auditory and visual tasks

Next, we examined whether declines in vision differ from those in audition. We conducted a combined analysis of the auditory and visual tasks to further investigate potential differences in perceptual performance across modalities. Specifically, we employed hierarchical PROC GLIMMIX models, incorporating standardized scores for each individual in each task, to explore the potential interaction between modality type x three nested tasks within each modality x age group. This allowed us to assess whether performance within a given modality differed from performance in tasks within the other modality. The results of the additional PROC GLIMMIX model showed no significant interaction for modality type x three nested tasks within each modality x age group (F (10, 390) = 0.90, p = 0.53), and no significant interaction for age group x three nested tasks within each modality (F (5, 390) = 1.80, p = 0.11). To address the question of whether there is a difference stemming from modality type, we compared perceptual performance across the two modalities within each age group. The comparison was significant neither in the young (t (398) = 1.62, p = 0.64) nor in the older group (t (398) = -1.62, p = 0.64; see Fig. 3). To examine the effect of age, and after confirming no significant interaction between modality type and the task used, we ran a final model that excluded the effects of modality type and task interaction, focusing solely on the age group effect. The findings revealed a significant age effect regardless of modality (F (1, 400) = 61.02, p < 0.001, ηp² = 0.14). In summary, our findings indicate that perceptual performance in the two modalities is not significantly different within each age group. The findings suggest that aging affects performance across both modalities to the same extent. Next, we examine whether and how the two modalities are related across individuals.

Visual and Auditory standardized scores across age. Represents the standardized scores for each task across the two modalities for the young group (top panel a), and the older group (bottom panel b), for visual modality (red) and auditory modality (green). Standardized Scores < 0 indicate better performance.

Strong correlation between vision & audition in older adults

To examine the correlation between the two modalities and across the three tasks in each modality, we calculated a vision score and an audition score for each participant by averaging thresholds across tasks. We examined Pearson’s correlation between performance in vision and in audition for each group. A strong significant correlation was observed in the older (r = 0.52, p = 0.001) but not in the young group (r = 0.21, p = 0.18; see Fig. 4), and there was a significant difference in the correlations between groups (Fisher’s z: z = 1.56, p = 0.05). The correlation in the older group is related to the greater variability within each sensory modality in that group compared to the young group; this variability is likely driven by the perceptual decline, which becomes more evident as year of age increases (see below).
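The analysis described above (per-participant modality scores, Pearson's correlation, and a Fisher z comparison between groups) can be sketched in plain Python as follows. The standardization step (z-scoring each task across the participants passed in), the one-tailed p-value, and all function names are assumptions made for illustration; the text does not state how scores were pooled or which tail convention was used.

```python
import math
from statistics import mean, stdev


def zscores(values):
    """Standardize a list of thresholds (sample SD)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def modality_score(task_thresholds):
    """Average z-scored thresholds across tasks, one score per participant.

    `task_thresholds` maps task name -> list of thresholds, with participants
    in the same order in every list (hypothetical data layout).
    """
    standardized = [zscores(v) for v in task_thresholds.values()]
    return [mean(per_participant) for per_participant in zip(*standardized)]


def pearson_r(x, y):
    """Pearson product-moment correlation."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_z_test(r1, n1, r2, n2):
    """Compare two independent correlations via Fisher's r-to-z transform.

    Returns the z statistic and a one-tailed p-value (normal approximation).
    """
    z = (math.atanh(r1) - math.atanh(r2)) / math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    p_one_tailed = 0.5 * math.erfc(abs(z) / math.sqrt(2))
    return z, p_one_tailed


if __name__ == "__main__":
    # Reported vision-audition correlations: older r = 0.52, young r = 0.21, n = 40 each
    z, p = fisher_z_test(0.52, 40, 0.21, 40)
    print(f"z = {z:.2f}, one-tailed p = {p:.3f}")
```

With the reported correlations and 40 participants per group, the z statistic from this sketch comes out at about 1.56, in line with the value given in the text.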

In older adults, perceptual performance declines steadily each year

Keeping in mind that our design is cross-sectional, we wanted to examine the impact of year of age on performance. Thus, we analyzed age as a continuous variable rather than as a discrete factor (old versus young) as in the previous sections. We investigated how age affected perceptual performance across groups in each modality over the years. Fixed effects represent the interaction between modality type and covariates (year of age) for each age group. Since we did not find a significant interaction between modality type x year of age for older (F (1,198) = 0.61, p = 0.43) or young adults (F (1, 198) = 0.16, p = 0.68), we used an additional model without the fixed effects to focus on the age range within each age group. In the younger group, there was no effect of age (F (1, 199) = 0.10, p = 0.74). This suggests that for young individuals, the year of age did not impact performance. In older adults, year of age significantly affected performance (F (1, 199) = 7.94, p = 0.0053, ηp² = 0.04). This implies that their performance is poorer with each year of age regardless of the modality type (F (1, 199) = 1.72, p = 0.19). Given the significant impact of age in older adults, we calculated the correlation between age in years and performance in each modality and, as expected, found it to be significant; the older the age, the lower the performance (audition: r = 0.38, p = 0.01; vision: r = 0.39, p = 0.01; see Fig. 5).

Correlation across age and modality in older adults. Significant positive correlations were observed between age and average standardized scores across both visual (r = 0.39, p = 0.001, in red color) and auditory (r = 0.33, p = 0.001, in green color) modalities. Standardized Scores < 0 indicate better performance.

Young adults outperformed older adults in cognitive tasks

To examine the difference between age groups, we conducted independent-samples t-tests with Bonferroni corrections86 to correct for multiple comparisons across the three cognitive tasks. As seen in Fig. 6d-f, young adults outperformed older adults in the three cognitive tasks. Flanker inhibitory control & attention task: young adults M = 102.48, SD = 10.61; older adults M = 92.58, SD = 11.75, t (78) = 3.95, p < 0.001, d = 0.88; List sorting working memory task: young adults M = 112.20, SD = 14.40; older adults M = 90.35, SD = 8.24, t (78) = 8.28, p < 0.001, d = 1.85; Pattern comparison processing speed task: young adults M = 113.65, SD = 20.32; older adults M = 85.75, SD = 16.18, t (78) = 6.79, p < 0.001, d = 1.51.

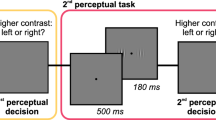

Cognitive Tasks. (a–c). Depicts the trial sequences used to assess cognitive functions (a) Flanker Inhibitory Control & Attention (b) List Sorting Working Memory (c) Pattern Comparison Processing Speed. (d–f). Cognitive performance across age groups. Individual (open symbol) and mean standard (closed symbol) scores for young adults (N = 40, grey circles) and for older adults (N = 40, black triangles) across the cognitive tasks.

Correlation between cognitive and perceptual performance

To examine the correlation between cognitive and perceptual performance, each cognitive task was treated as an independent variable. Cognitive scores interacted with age group x task nested within modality type x modality type. Fixed effects represent the interaction between age group x task nested within modality type x cognitive function, while random effects accounted for individual differences in task performance within the same participant and age group. We used the hierarchical PROC GLIMMIX model to determine whether each cognitive task could be used to predict perceptual task performance. After removing non-significant variables from the proposed model, list sorting working memory emerged as a predictor of perceptual performance (F (1, 398) = 6.56, p < 0.001, ηp² = 0.02), independent of modality type (F (1,380) = 1.16, p = 0.28). This suggests that strong working memory performance predicts better perceptual task performance across both modalities and age groups. In contrast, the flanker inhibitory control and attention task and the pattern comparison processing speed task were not significant predictors of perception (F (1, 380) = 0.01, p = 0.93; F (1, 379) = 0, p = 0.99).

Pearson’s correlations revealed a significant relationship only in the younger group between perceptual average performance (the average of six sensory tasks) and the flanker inhibitory control and attention task (Young: r = -0.32, p = 0.03; Older: r = -0.28, p = 0.09), and between perceptual average performance and the pattern comparison processing speed task (Young: r = -0.37, p = 0.01; Older: r = -0.15, p = 0.33). The correlation between perceptual average performance and working memory was significant in both groups (Older: r = -0.34, p = 0.03; Young: r = -0.42, p = 0.007; see Fig. 7) with no significant group differences (Fisher’s z: z = -0.40, p = 0.34). Interestingly, examining individual participants in the different age groups in Fig. 7, we observe that some older adults fall within the perceptual performance range of young adults (e.g., x-axis below zero). Although the number of older adults whose perceptual performance is similar to that of young adults is not sufficient to draw definitive conclusions, their working memory performance appears to vary, as indicated by the variance on the y-axis (this point is discussed further below).

Sensory-working memory correlation across age groups. Significant correlations were observed between the working memory standardized scores and the average standardized score across sensory tasks for older (r = -0.34, p = 0.03, in black color) and young (r = -0.42, p = 0.007, in grey color) adults. Standardized Scores < 0 indicate better performance.

Discussion

In the present study, we examined the impact of aging on auditory and visual modalities to understand the nature of the relationship between age-related declines in perception across audition and vision and their association with cognitive decline by examining two age groups, young and older adults. Consistent with prior research, at the group level, younger adults outperformed older adults in auditory, visual and cognitive tasks. Critically, our study examined individual level differences across six different psychophysical tasks that tap into different processing abilities in audition and vision. We revealed a strong correlation across modalities in older adults. The extent of the auditory decline was related to the extent of visual decline, suggesting a shared mechanism for sensory decline across modalities. For the older adults’ group, although our data is cross-sectional rather than longitudinal, we found that participants who were older had poorer perceptual performance than “younger” older adults. Unlike inhibitory attention control and pattern comparison, performance on working memory tasks was related to perceptual decline.

Older adults consistently exhibited poorer perceptual performance compared to young adults across the three auditory tasks (Fig. 1d-f), as has been seen in previous studies using similar perceptual tasks in frequency discrimination55,56,57, speech-in-noise pseudoword discrimination58,59, and speech-in-noise sentence recognition60,61. Similarly, in visual tasks, older adults demonstrated poorer perceptual performance (Fig. 2d-f) in motion direction discrimination, as in previous findings14,62, as well as in tasks involving spatial frequency and contrast discrimination, consistent with previous studies employing comparable tasks and age groups63,64.

Additionally, our analysis revealed that perceptual performance in the two modalities was not significantly different in each age group, as shown in Fig. 3. Thus, perceptual performance across tasks in each modality and between modalities in each group did not differ. This result suggests that there is a common aspect that affects both modalities in a similar manner in each age group. Consistent with this suggestion, Baltes and Lindenberger (1997)49, who supported the “Common Cause” concept, found a high degree of similarity between the age-related decline of visual acuity and auditory acuity, lending support to a general-level decline with aging. At the same time, in an EEG study, Ceponiene et al. (2008)65 reported diminished early event-related potential (ERP) responses due to aging in the visual but not in the auditory modality. However, their study showed diminished amplitudes in later ERP responses across both modalities. Similarly, Humes et al. (2007)30 demonstrated mixed findings supporting both “Common Cause” and “modality-specific” aspects. This discrepancy with our findings could be attributable to the methodology of the study as well as the abilities assessed (for example, Humes et al.30 focused on speech recognition tasks). We examined three tasks in each modality and found this trend across all tasks, which could not be attributable to task specificity or different levels of perceptual processing. Our findings support the notion that shared characteristics between the modalities affect perceptual performance within individuals.

Critically, our findings showed a strong and significant correlation between the two modalities in the older group but not in the young group (see Fig. 4). These findings provide novel insights into how older individuals process auditory and visual information, demonstrating a robust correlation that supports the idea of a significant amodal aspect in perceptual decline. This suggests that the extent to which these functions vary with aging is similar, reinforcing the concept of domain-general decline. In our study, we employed low level perceptual processing tasks (e.g., auditory frequency discrimination, visual spatial frequency discrimination, and contrast sensitivity) and slightly higher-level tasks (pseudoword discrimination, auditory sentence recognition, and motion discrimination in noise). By integrating data from both low-level and high-level processes and examining the relationship between these modalities, we have paved the way for further research into aging, perception, and their interconnection. These findings may lead to practical methods for enhancing sensory function in older adults, ultimately improving their quality of life. While our study reveals a correlation in perceptual thresholds, a recent EEG study revealed a similar pattern of a positive correlation between sensory-evoked response amplitude measures across sensory domains (audition and vision) in older adults but not in young adults29. The difference in correlation between the two age groups was attributed to the hypothesis that age-related changes in auditory and visual sensory-evoked responses are mitigated by age-related changes in prefrontal-to-sensory cortex synchrony, which affects both modalities in a similar way in older adults29. 
Here, we too found no correlation between vision and audition in the young group, which may be explained similarly, and possibly also by the relatively low variability in the young versus the older group, particularly in the visual tasks (in which performance was relatively high; Fig. 2d-f).

Interestingly, the results revealed that within the younger age group (18–30), the exact year of age does not significantly impact sensory performance. However, in older adults, year of age relates to poorer perceptual performance regardless of modality type (Fig. 3). This result was further supported by a strong association between aging and perceptual performance across both modalities in older adults (Fig. 5). In contrast, the young adults exhibited less variability in perception and no correlation between modalities. These findings point to the understudied topic of individual variability in perception, particularly how stable these differences remain with age (see review Mollon et al.66). Thus, future studies should consider different designs (e.g., multiple testing sessions) to investigate whether these individual differences in vision and audition are interconnected as individuals age.

To our knowledge, no study has examined the effect of year of age in both modalities in the same study and within the same individuals. The impact of year of age has been studied separately in each modality, and the results are consistent with our findings. In audition, Matilainen et al.67 showed that the latency of the transient brain response in older adults was prolonged with each year of age, and the accuracy of behavioral reactions to sinusoids was similarly diminished, while the young group showed no effect of year of age. Furthermore, Herrmann and Buckland68 suggested that healthy aging can lead to changes in neural responses to auditory stimuli, alterations in sensitivity to temporal regularity in sounds, and potential differences in auditory perception when compared to younger adults. In vision, a study by Casco et al.69 demonstrated that older adults have a lower ability to select neural representations of target orientation compared to younger adults. This difference was particularly pronounced when age was treated as a continuous variable in the presence of noise. Additionally, their findings suggested that the performance of older adults deteriorates at a faster rate each year, whereas the performance of young adults remains relatively stable across different ages within the young group.

Processing speed, inhibitory control and attention, and working memory are the basic domains of cognitive decline that have been linked to behavioral changes in older adults51. Consistent with previous studies22,52,53,54, we found age-related differences in performance in the three cognitive tasks performed in our study (Fig. 6d-f). Importantly, here we investigated the correlation with perceptual performance. Our study revealed that working memory is related to perceptual performance in a manner that is not different between the modality types (Fig. 7). The results further suggest that good performance on working memory tasks can serve as a partial predictor of success in tasks involving perception. Notably, although some older adults fall within the perceptual performance range of young adults, this similarity in perceptual thresholds between age groups does not necessarily result in comparable cognitive performance. Older adults who demonstrate perceptual abilities similar to younger adults still exhibit variability in cognitive scores, suggesting that cognitive decline may involve factors beyond just perceptual capabilities. Still, future studies should investigate this relationship using matched discrimination stimuli to clarify the link between sensory and cognitive functions in aging. Prior work has revealed that working memory performance was related to perceptual performance separately in each modality. In audition, this relates to the challenge posed by poor sensory input, such as background noise, which can lead to difficulties in accurately perceiving speech sounds and accessing lexical representations. Our results align with the Ease of Language Understanding (ELU) model, which emphasizes the crucial role of working memory in speech-in-noise processing among both young and older adults70,71,72. 
In the current study, the mismatch arising from the noisy incoming signal necessitates the utilization of working memory, thereby possibly explaining the correlation between auditory perceptual performance and cognitive performance. In vision, working memory also plays a critical role when perception requires integrating multiple visual features or retaining visual information over time63. Some research has linked visual working memory capability to spatial information retention in the presence of a mask stimulus63,73. The interaction between visual degradation and working memory task demand, accompanied by the age-related reduction in available processing resources, highlights the increased vulnerability of older adults. Another explanation for the correlation between perception and working memory might be related to the nature of the perceptual tasks, which may rely more on working memory than on inhibitory control and processing speed (e.g., recalling the pseudoword or sentence, or the higher spatial frequency among two consecutive stimuli). Taken together, it becomes clearer why older adults may face greater difficulties than young adults in “filling in the gaps” and why the brain needs to exert more effort to process ongoing degraded perceptual information in vision and audition.

The current study did not observe correlations between perceptual tasks and tasks of inhibitory control and attention or pattern comparison processing speed, although previous studies have shown that inhibitory control and attention and processing speed play a role in auditory perception (e.g.,51) and visual perception (e.g.,63). Interestingly, in a recent study, Verissimo et al.74 pointed out that while some cognitive measures show declines, others, like attention and executive inhibitory efficiency, may remain stable. This challenges the traditional narrative that views cognitive aging purely as a period of decline, underscoring the complexity of age-related changes in cognitive functions. It also emphasizes the significance of incorporating a diverse range of cognitive tasks when studying the link between sensory measures and cognitive functions in future studies.

Overall, the available evidence suggests that sensory and cognitive aging are interconnected. As described earlier (see Introduction), the “Cascade” and “Common Cause” hypotheses both explore this relationship but propose different mechanisms. The “Cascade” hypothesis suggests a sequential process where sensory declines, such as in vision or audition, directly lead to or accelerate cognitive decline due to reduced sensory input and stimulation. In contrast, the “Common Cause” hypothesis suggests that both sensory and cognitive declines stem from a shared underlying age-related neural degradation, which affects central processing capacities and results in simultaneous declines across multiple domains. Thus, while the “Cascade” hypothesis implies a direct causal relationship between sensory and cognitive declines, the “Common Cause” hypothesis suggests a parallel decline driven by a common underlying factor. Although our study was not designed to directly test the “Cascade” or “Common Cause” hypotheses of aging, we believe that our findings align more closely with the “Common Cause” hypothesis. This is evidenced by the observation that aging affects perceptual performance in both auditory and visual modalities without significant differences between them. Moreover, the robust correlation between a year of age and functioning levels in vision and audition provides additional support for the idea of a significant amodal aspect in perceptual decline. This implies similarities in the extent to which function in these domains varies with age, supporting a domain-general decline that affects audition, vision, and cognition within individuals. Still, further studies that employ longitudinal approaches and more targeted experimental designs are needed to compare the two hypotheses more effectively.

Limitations

While the current study offers valuable insights into age-related declines in auditory perception, visual perception, and cognitive abilities within individuals, it has some limitations that need acknowledgment. Our sample had an unequal gender distribution, potentially affecting generalizability. However, further statistical analysis demonstrated that there were no statistically significant differences between genders within the older and the young groups for the nine tasks (three for each domain: audition, vision, cognition, p > 0.01). Additionally, the study concentrated exclusively on participants with normal hearing audiograms, and high-frequency thresholds were not assessed; these could be explored in future studies to offer a more comprehensive understanding of auditory perceptual performance. Moreover, the present study’s findings indicate that there was not enough variability in the visual tasks performed by young adults. It is possible that the younger group may need longer staircases to reach threshold performance. However, in our study design we wanted to ensure similar starting levels of difficulty as well as a similar number of trials across groups. Future studies should explore thresholds in various ways to examine differences between groups. Another possible limitation is that our tasks do not reflect all of the underlying abilities that are involved in sensory processing in each modality. Thus, future studies should consider testing other sensory dimensions in each modality (e.g., facial recognition in vision or localization in audition) as well as multisensory aspects of perception. Additionally, the age ranges differed between groups. Thus, we recommend that future studies use a narrower age range with a larger sample size to better capture individual variability in sensory processing within specific age groups and year of age. Lastly, we used a cross-sectional design, which limits our understanding of the pace of decline across modalities with age; future studies should consider a longitudinal design.

Conclusions

The present study is one of the first to investigate healthy age-related effects in sensory-cognitive behavioral performance that can represent individuals’ daily experiences under adverse conditions. The study aimed to observe whether and how a decline in vision is related to a decline in audition and how these sensory declines are related to cognitive decline in a cross-sectional design. Our findings provide evidence of common aspects and shared mechanisms in the auditory and visual perceptual performances of older adults, leading to the conclusion that aging affects both modalities with no significant differences between them. In the older group, year of age is related to poorer sensory performance regardless of the modality type. A correlation was observed between modalities in older adults, supporting this notion. Moreover, we show that working memory is highly related to perceptual function, while flanker inhibitory control and attention and processing speed are not. Thus, our findings illustrate that while group-level differences were apparent across all tasks, at the individual level only working memory was related to perceptual processing.

Beyond providing a theoretical foundation for understanding the sensory-cognitive link in the aging process, our findings can impact the design of interventions that target vision, audition and cognitive processes simultaneously in older adults, which may prove more effective than addressing only one domain.

Methods

Participants

Eighty young and older adults participated in the study and were divided into two age groups. The young group consisted of 40 young adults (27 females) aged 18–30 years (Mean = 22.95 years, SD = 3.08). The older group consisted of 40 older adults (16 females) aged 50–70 years (Mean = 59.13 years, SD = 5.45). All participants underwent a clinical audiometric test and exhibited normal hearing thresholds; clinically normal hearing was defined as hearing thresholds of ≤ 25 dB HL75 at octave frequencies from 0.25 to 8 kHz with no interaural asymmetries of ≥ 15 dB HL at no more than two adjacent frequencies and normal speech reception thresholds (SRT) and monosyllable discrimination tests. All participants exhibited normal or corrected-to-normal vision. All participants were screened for mild cognitive dysfunction using the Montréal Cognitive Assessment (MoCA)76.

The study was conducted at the University of Haifa, and all methods were carried out in accordance with the University regulations. All experimental protocols were approved by the ethical committee of the Faculty of Education, University of Haifa (ethical approval #518/21). Informed consent was obtained from all subjects.

Participants were recruited through the University’s Sciences Research Participation System and through campus and social media advertisements. Participants were compensated by a money voucher or course credit after completion of all study tasks.

Participants were included if they did not have previous or current visual or hearing impairments, learning disabilities, attention deficit disorders, traumatic brain injury, or another cognitive or neurological disorder. Participants were included in the study sample if they scored ≥ 26 (out of 30 points) on the MoCA; all participants met this criterion.

Procedure

Participants underwent three perceptual auditory tasks (frequency discrimination, speech-in-noise pseudoword discrimination, and speech-in-noise sentence recognition), three visual tasks (motion direction discrimination, spatial frequency discrimination, and contrast threshold discrimination) and three cognitive tasks (flanker inhibitory control and attention, list sorting working memory, and pattern comparison processing speed). Each participant completed the auditory, visual and cognitive tasks while seated in front of either a computer (for the perceptual tasks) or an iPad (for the cognitive tasks) in a quiet acoustically shielded room in the lab. The order of tasks was counterbalanced.

Tasks

Auditory perception tasks

Each participant completed a frequency discrimination task, a speech-in-noise pseudoword discrimination task, and a speech-in-noise sentence recognition task (see Fig. 1a-c). All auditory stimuli were presented binaurally via headphones. Prior to starting each task, participants underwent a short training phase designed to familiarize them with the task and stimuli and to make sure the volume level of the computer was comfortable. Each training phase included 5 trials for the two speech-in-noise tasks and 10 trials for the frequency discrimination task. Following the training phase, 60 test trials were presented for each task using a 2-down/1-up staircase procedure.

Frequency discrimination task

We used a frequency discrimination oddball procedure consisting of 60 trials with a reference tone set at 2000 Hz and a duration of 500 ms. The frequency difference between the odd and frequent tones was adjusted based on participants’ performance, as described in24, with 100 ms visual feedback after each trial. On average, each participant completed the task within 9 min. Initially, Δf (expressed as a percentage of the base frequency) started at 50% and decreased by a factor of two after each correct response until the first incorrect one. Subsequently, Δf changed by a factor of 1.414 following a 2-down/1-up rule for a 79% correct rate.
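The adaptive rule described above can be sketched in a few lines of Python. This is only an illustrative reading of the procedure, not the software used in the study: the function name `staircase_track` is hypothetical, and the sketch assumes that the first incorrect response already triggers an upward step of 1.414 and that no floor or ceiling is applied to Δf.

```python
def staircase_track(responses, start=50.0, fast_factor=2.0, step_factor=1.414):
    """Return the delta-f level (% of base frequency) presented on each trial.

    responses: list of booleans (True = correct), one per trial.
    Assumptions (not stated in the text): the first error itself causes an
    upward step of `step_factor`, and the level is never clipped.
    """
    levels = []
    level = start
    in_fast_phase = True   # halve after every correct response until first error
    correct_streak = 0     # consecutive correct responses in the 2-down/1-up phase
    for correct in responses:
        levels.append(level)
        if in_fast_phase:
            if correct:
                level /= fast_factor
            else:
                in_fast_phase = False
                level *= step_factor
        elif correct:
            correct_streak += 1
            if correct_streak == 2:      # two correct in a row: make it harder
                level /= step_factor
                correct_streak = 0
        else:                            # one error: make it easier
            correct_streak = 0
            level *= step_factor
    return levels


# Hypothetical response sequence for six trials.
track = staircase_track([True, True, False, True, True, False])
print(track)
```

In this sketch the track starts at 50%, halves twice, steps up after the first error, and only steps down again after two consecutive correct responses.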

Scoring: The threshold was determined as the geometric mean of the last four reversals, representing the smallest frequency difference the participant could detect, and was then normalized to just noticeable differences (JNDs), that is, the magnitude of change required for a listener to detect and report differences between sounds77. Normalization used the formula JND = Δf/f, where Δf is the detected frequency difference threshold and f is the base frequency used in the task. This ratio indicates the relative change in frequency that the participant can detect. Using MATLAB R2023a, we divided the frequency difference threshold by the base frequency for each participant, providing a dimensionless measure that reflects sensitivity to frequency changes and allows for standardized comparisons across participants and conditions.
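As a worked illustration of this scoring step, the following Python sketch computes the geometric mean of the last four reversals and divides it by the base frequency. The study itself used MATLAB R2023a; the function names and the reversal values below are hypothetical, and the sketch assumes the reversal values are expressed in Hz.

```python
import math


def geometric_mean(values):
    """Geometric mean computed in the log domain."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def jnd_from_reversals(reversal_delta_f_hz, base_frequency_hz=2000.0, n_last=4):
    """JND = delta-f / f, with delta-f taken as the geometric mean of the
    last `n_last` reversal values (assumed here to be in Hz)."""
    threshold_hz = geometric_mean(reversal_delta_f_hz[-n_last:])
    return threshold_hz / base_frequency_hz


# Hypothetical reversal values (Hz) from one participant's track.
reversals = [80.0, 40.0, 40.0, 20.0, 40.0, 20.0]
print(jnd_from_reversals(reversals))  # dimensionless ratio
```

Because the result is a ratio of two frequencies, it carries no unit, which is what allows comparison across participants and conditions.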

Speech-in-noise tasks

To assess auditory perceptual performance under adverse conditions using speech-in-noise, we examined perceptual performance with stimuli consisting of pseudowords (described in24) and sentences. The sentences were categorized into plausible78,79 and non-plausible sentences. A female speaker recorded the lists of pseudowords and sentences in a soundproof room in the lab. All stimuli were root mean square (RMS) amplitude adjusted to 70 dB SPL using Audacity 3.3.2. Recordings were randomized and split into three versions for each task. For both the pseudoword and sentence tasks, there was an equal number of “same” and “different” or “plausible” and “non-plausible” trials, respectively. Background speech noise, created using Praat software80, was introduced for both tasks. The initial signal-to-noise ratio (SNR) was established in a pre-experimental pilot phase with a group of four young adults and four older adults, and was chosen as the value yielding 70% performance accuracy in each task. The starting SNR was set at 5 dB. Participant responses were tracked using a 2-down/1-up adaptive staircase procedure, with the adaptive parameter being the SNR, which adjusted the noise level in 1.5 dB steps.
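To make the SNR manipulation concrete, the sketch below shows one way to scale a noise signal so that a speech-plus-noise mixture reaches a target SNR, together with a 2-down/1-up update in 1.5 dB steps. It is an assumption-laden illustration rather than the study's implementation: the function names are hypothetical, the speech level is assumed to stay fixed while only the noise is scaled, and SNR is taken as 20·log10 of the RMS ratio.

```python
import math


def rms(samples):
    """Root mean square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def noise_gain_for_snr(speech, noise, snr_db):
    """Gain to apply to `noise` so that 20*log10(rms(speech)/rms(noise)) == snr_db."""
    return rms(speech) / (rms(noise) * 10 ** (snr_db / 20.0))


def next_snr(snr_db, correct, streak, step_db=1.5):
    """One 2-down/1-up update. Returns (new_snr_db, new_streak).

    Lower SNR is harder, so two consecutive correct responses lower the SNR
    by one step and a single error raises it by one step.
    """
    if not correct:
        return snr_db + step_db, 0
    if streak + 1 == 2:
        return snr_db - step_db, 0
    return snr_db, streak + 1


# Toy signals (hypothetical values) mixed at the 5 dB starting SNR.
speech = [0.5, -0.5, 0.5, -0.5]
noise = [0.1, -0.1, 0.1, -0.1]
gain = noise_gain_for_snr(speech, noise, snr_db=5.0)
mixture = [s + gain * n for s, n in zip(speech, noise)]
```

Scaling only the noise keeps the speech at its calibrated level, which matches the description that the staircase adjusted the noise level.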

Speech-in-noise pseudoword discrimination task

Participants completed a same/different discrimination task where they were presented with pairs of two-syllabic pseudowords embedded in noise. Their task was to determine whether the pair was the same or different (e.g., “same”: /damul/-/damul/, “different”: /malud/-/maluk/), with an equal number of pairs from each phonetic contrast and vowel template. The task was administered through a user-friendly interface24, with 100 ms visual feedback after each trial. On average, each participant completed the task within 11 min.

Speech-in-noise sentence recognition

Participants judged 60 sentences for plausibility in the same noise used in the previous task, with 30 plausible sentences and 30 non-plausible sentences, each consisting of seven words. Notably, the plausibility difference between the sentences was determined by the last word (e.g., “plausible”: /blʃita mhana wsari sʔʕu wdawo eldafaj/ (In winter, Tom and Max got cold and turned on the heater), “non-plausible”: /wida: d maʃatat ʃaʕer ʔuxta blmxade/ (Lisa combed her sister’s hair with the pillow)). The task was administered through a user-friendly interface24, with 100 ms visual feedback after each trial. On average, each participant completed the task within 12 min.

Scoring for the two speech-in-noise tasks

The thresholds in the speech-in-noise tasks were also normalized to the JNDs, which served as the outcome measure for speech-in-noise tasks. We used MATLAB R2023a to normalize the threshold for each participant to JNDs.

Visual perception tasks

Each participant completed a motion direction discrimination task, a spatial frequency discrimination task, and a contrast threshold discrimination task (see Fig. 2a-c). Visual stimuli were presented on a calibrated display (LG Ultrafine™ OLED Pro monitor, 27-inch, 4K UHD, 3840 × 2160 resolution). The stimuli were produced using MATLAB (R2019b, The MathWorks, Inc., Natick, MA) with the Psychophysics Toolbox extensions and presented on a 22-inch calibrated monitor (48 × 30 cm). We used a SpectroCAL MKII Spectroradiometer (Cambridge Research Systems, UK) to calibrate luminance and color using MATLAB. Participants were seated 57 cm away from the monitor. To ensure a stable head position, participants used a chin rest. As in the auditory tasks, we conducted a pre-experimental procedure with a pilot group consisting of four young adults and four older adults. This was to establish the initial starting values, including the ratio of dots moving randomly in the motion direction discrimination task, the percentage by which the frequency differed between the two Gabors in the spatial frequency discrimination task, and the contrast value of the two presented Gabors in the contrast threshold discrimination task. Each starting value was chosen as the value yielding 70% performance accuracy. Prior to starting each task, participants underwent a short training phase designed to familiarize them with the task and stimuli, with each training phase including 20 trials for each of the three visual tasks. Following the training phase, 60 test trials were presented for each task using a 2-down/1-up staircase procedure.
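The 57 cm viewing distance is convenient because one degree of visual angle then corresponds to roughly one centimetre on the screen. The following Python sketch illustrates the standard visual-angle conversion; the function names are hypothetical and the sketch assumes a flat screen viewed head-on at its centre.

```python
import math

VIEWING_DISTANCE_CM = 57.0  # viewing distance reported in the text


def deg_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen size (cm) that subtends `angle_deg` of visual angle."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def cm_to_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (degrees) subtended by an on-screen size in cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


print(deg_to_cm(1.0))   # size of 1 degree at 57 cm, close to 1 cm
print(deg_to_cm(2.0))   # size of a 2 degree Gabor patch
print(cm_to_deg(48.0))  # horizontal extent of a 48 cm wide screen
```

Converting these sizes to pixels would additionally require the pixel pitch of the display actually used, which is left out here.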

Motion direction discrimination

The stimuli consisted of randomly moving dots, with the majority of dots moving randomly, referred to as “noise”, and a certain percentage moving coherently, known as the “signal”, as described in81. Random dot kinematograms (RDKs) featured 290 dots moving at a rate of 15 degrees per second within a stationary aperture with a 5° radius, leaving a 2° space around the central fixation cross. The black dots were 4 pixels in size and had a luminance of 3.7 cd/m². They appeared on a uniform grey background with a luminance of 26 cd/m². The lifetime of each dot was limited to 140 ms, and in each frame, 20% of the dots relocated randomly within the circle. The dot density was set at 1 dot per square degree. The task began with a 1000 ms fixation point, followed by 300 ms of RDK stimuli. Subsequently, a fixation point appeared for 700 ms, followed by a tone signaling the start of the response interval. Participants had 1500 ms to respond via the keyboard, indicating whether the motion direction was clockwise or counterclockwise from the horizontal (within ±10° from 0°). Audio feedback lasted for 100 ms. On average, each participant completed the task within 7 min.

Scoring: The coherence discrimination thresholds for each observer were determined using a 2-down/1-up staircase procedure within a single block of 60 trials. The threshold was calculated as the geometric mean of all the staircase reversals.
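A minimal Python sketch of this scoring rule is given below: it extracts the reversal points from a sequence of staircase levels and takes the geometric mean of all of them. The function names and the example track are hypothetical, and the sketch assumes that a reversal is scored at the level where the track changes direction.

```python
import statistics


def reversal_levels(levels):
    """Return the levels at which the staircase changed direction.

    levels: stimulus level presented on each trial, in order.
    Trials on which the level did not change are ignored.
    """
    reversals = []
    last_direction = 0  # +1 = going up, -1 = going down, 0 = not yet known
    for previous, current in zip(levels, levels[1:]):
        if current == previous:
            continue
        direction = 1 if current > previous else -1
        if last_direction and direction != last_direction:
            reversals.append(previous)  # turning point of the track
        last_direction = direction
    return reversals


def threshold_from_track(levels):
    """Geometric mean of all staircase reversals."""
    return statistics.geometric_mean(reversal_levels(levels))


# Hypothetical coherence levels (%) over eight trials.
track = [40, 20, 20, 10, 20, 20, 10, 20]
print(reversal_levels(track))
print(threshold_from_track(track))
```

The geometric mean suits staircases whose steps are multiplicative, because it averages the levels on a logarithmic scale.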

Spatial frequency discrimination

Participants engaged in a temporal forced-choice task, determining which of two vertical sinusoidal gratings had a higher spatial frequency (i.e., which one had more lines), following the procedure described in82. Each trial began with a 1000 ms fixation point, followed by 70 ms of the first grating, a 70 ms interstimulus interval (ISI), and 70 ms of the second grating. Subsequently, a 300 ms fixation point appeared, followed by a tone signaling the start of a 1500 ms response interval. The task aimed to assess a discrimination threshold for an intermediate reference frequency (4 c/°). In each trial, the first stimulus had the reference frequency, and the second contained a test frequency randomly greater or lower than the reference. The starting test frequency differed by 75% from the reference and was adjusted adaptively using a 2-down/1-up staircase approach. Each Gabor grating measured 2° in size and had 50% contrast, a horizontal orientation, and a random phase. Audio feedback lasted for 100 ms. On average, each participant completed the task within 5 min.

Scoring: Spatial frequency thresholds were measured using a 2-down/1-up staircase procedure for 60 trials. The threshold was calculated as the geometric mean of all the staircase reversals.

Contrast threshold discrimination

The contrast task involved two Gabor patches, following a trial sequence similar to Experiment 1 in Donovan et al.83 and Szpiro et al.84. Each trial began with a black fixation point in the center for 300 ms, followed by a pre-cue at 0.4° eccentricity for 60 ms, and then another fixation point for 40 ms. Two Gabor patches (4 cycles/°, 5° eccentricity, 2° in size each, at the 57 cm viewing distance) appeared horizontally on the right and left sides of the screen for 100 ms. Subsequently, a fixation point was displayed for 300 ms, followed by a response cue indicating the target Gabor. A tone signaled the start of a 1500 ms response interval, during which participants indicated whether the Gabor was oriented clockwise or counterclockwise from either 5° or 355°. Audio feedback lasted for 100 ms. On average, each participant completed the task within 10 min.

Scoring: Gabor contrast thresholds were measured using a 2-down/1-up staircase procedure for 60 trials in one block. The threshold was calculated as the geometric mean of all the staircase reversals.

Cognitive tasks

Cognitive abilities were assessed using a battery of standard tasks from the National Institutes of Health (NIH) Toolbox Cognition Test Battery (www.nihtoolbox.org): the flanker inhibitory control & attention task, the list sorting working memory task, and the pattern comparison processing speed task (see Fig. 6a-c). The participants completed the tasks via an iPad, using the version adapted into Arabic by Mais Abbas and Hanin Karawani, available in NIH Toolbox V3 Arabic https://nihtoolbox.zendesk.com/hc/en-us/articles/23800604330900-NIH-Toolbox-V3-Arabic.

Flanker inhibitory control & attention

Briefly, participants had to identify the side of a target arrow presented within the row of arrows that were congruous or incongruous. On average, each participant completed the task within 3 min.

Scoring: Accuracy and response time to target arrows surrounded by incongruent arrows were recorded and included in the age-uncorrected standard score, which was the task’s measure85.

List sorting working memory

Participants completed two versions of the sequencing task, which involved the sequential presentation of (1) animals or food items, and (2) animal and food items. The participant had to view the sequence of items and order them according to physical size from smallest to largest, following the procedure outlined by Tulsky et al.86. The task started with two items, and with each correct response, an additional item was added to the series, with a maximum of seven items in a series. On average, each participant completed the task within 10 min.

Scoring: The total correct responses across the two versions were reflected in the age-uncorrected standard score, which was the task’s measure.

Pattern comparison processing speed

This was a timed task in which participants were visually presented with two images on the iPad screen and asked to indicate whether the images were identical or not. The two images could differ in type, complexity, or the number of stimuli87. On average, each participant completed the task within 3 min.

Scoring: All responses given within 85 s were scored88, resulting in the age-uncorrected standard score, which was the task’s measure.

Statistical analysis

To determine how similar perceptual levels are across modalities, which might indicate a general multi-modal sensory process, and to explore the relationship between aging and declines in sensory and cognitive abilities, a within-subject factor was employed in the analysis. Moreover, a between-subject factor was employed to examine the effect of age on sensory and cognitive performance by comparing young and older adults across three domains.

As stated above, JNDs were used to evaluate auditory performance by normalizing thresholds in auditory tasks. This was achieved by using the raw scores from each participant for each task. The employment of JNDs facilitated the comparison and normalization of auditory thresholds on a common scale. The analysis was carried out using MATLAB R2023a. For the visual tasks, we also analyzed the data using MATLAB R2023a to obtain thresholds across the three nested tasks. For cognitive tasks, we used age-uncorrected standard scores using the NIH toolbox. We then computed average scores and SD across groups to enable group comparisons within each domain.

Subsequently, we assessed data normality for the variables under investigation. Skewness and kurtosis tests were used to confirm that the data were normally distributed (p > 0.1). These analyses revealed outlier values among four older adult participants, likely attributable to fatigue, which contributed to non-normality. Consequently, we removed these participants, resulting in datasets that conformed to a normal distribution (n = 40 in each group).

Next, we conducted statistical analyses using SAS® 9.4 TS1M8 and IBM SPSS Statistics 27.0.1.0. In the SAS software analysis, we applied a standardization procedure (SAS Institute Inc. 2019) to standardize sensory and cognitive thresholds (from the previous analysis) to enable comparisons across tasks and modalities based on our research questions. Subsequently, we employed Procedure for Generalized Linear Mixed Models (Proc Glimmix) within each group to perform an analysis of variance (ANOVA) and compare within-subject variables and between-subject variables. Furthermore, we performed PROC REG multiple linear regression analysis to examine the correlation between cognitive scores and mean performance in perceptual tasks within and between participants.
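The idea behind standardizing scores so that tasks with different units can be compared or averaged can be illustrated with a short Python sketch. The analysis itself was run in SAS; the sketch below assumes a conventional z-score (mean 0, sample SD 1) computed per task, which may differ from the exact options used, and the function names and values are hypothetical.

```python
import statistics


def standardize(scores):
    """Z-score a list of scores from one task (mean 0, sample SD 1)."""
    mean = statistics.fmean(scores)
    sd = statistics.stdev(scores)  # sample SD (n - 1 denominator)
    return [(s - mean) / sd for s in scores]


def modality_mean(z_by_task):
    """Average each participant's z-scores across the tasks of one modality.

    z_by_task: list of per-task z-score lists, all in the same participant order.
    """
    return [statistics.fmean(task_scores) for task_scores in zip(*z_by_task)]


# Hypothetical thresholds from three participants on two tasks with different units.
task_a = [1.0, 2.0, 3.0]
task_b = [10.0, 30.0, 20.0]
z_a, z_b = standardize(task_a), standardize(task_b)
print(modality_mean([z_a, z_b]))  # one averaged score per participant
```

After standardization each task contributes on the same scale, so a per-participant modality average is not dominated by the task with the largest raw values.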

We used IBM SPSS software to conduct Pearson’s correlation analysis, which allowed us to investigate various aspects using the previously described standardization scores, as outlined below.

Sensory-perceptual performance

First, we tested “Older adults perform poorer than young adults in auditory and visual tasks” using SAS software. We ran two Proc Glimmix models, one for each modality, with standardized scores incorporated for each individual in each task. Both models included fixed effects representing the interaction between age group x three nested tasks within each modality. When effects were significant, post-hoc t-test analyses were used and adjusted for multiple comparisons using Bonferroni corrections89 to enable us to compare differences between the two groups in each task and modality using the raw data without standardization.

Second, we performed a combined analysis of auditory and visual modalities to assess whether “No significant difference in age related declines in auditory and visual tasks”. We employed hierarchical Proc Glimmix models, with standardized scores incorporated for each individual in each task, to explore the potential interaction between modality type x three nested tasks within each modality x age group.

Additionally, we compared sensory-perceptual performance between the two modalities within each age group to investigate any interactions between vision and audition. We employed standardized scores in this comparison to conduct a comparative analysis across the two modalities.

Third, to determine whether there is a “Strong correlation between vision & audition in older adults”, we conducted Pearson’s correlation analyses to assess the relationship between performance in the visual and auditory modalities within each group. To calculate the average standardized mean score for both the auditory and visual tasks, we used each participant’s mean score in each modality.
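For readers who want to see the computation behind this step, the sketch below implements Pearson’s correlation coefficient between per-participant auditory and visual mean scores in plain Python. The study used IBM SPSS; the function name and the example values are hypothetical and are not data from the study.

```python
import math


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical standardized mean scores for five participants.
auditory_mean = [-0.8, -0.2, 0.1, 0.4, 0.9]
visual_mean = [-0.6, -0.4, 0.2, 0.3, 0.7]
print(pearson_r(auditory_mean, visual_mean))
```

A coefficient near 1 would indicate that participants with higher auditory scores also tend to have higher visual scores; significance testing is omitted from this sketch.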

Lastly, to illustrate how “In older adults perceptual performance declines steadily each year”, we investigated how aging affected sensory-perceptual performance within each age group separately and across the two modalities over the years. In this analysis, we treated age as a continuous variable rather than a discrete factor67,69. We used a hierarchical Proc Glimmix model and then calculated the correlation between age in years and performance in each modality using Pearson’s correlation. The method we used to calculate the average standardization scores for the auditory and visual tasks in this analysis was similar to that described in the previous paragraph, aligning with the procedure outlined in the second paragraph of this section.

Cognitive behavioral performance

In order to measure “Young adults outperformed older adults in cognitive tasks”, we performed independent samples t-tests on each cognitive task to compare differences between the two groups. To adjust for multiple comparisons, we applied Bonferroni corrections89 using the raw data without standardization.
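The Bonferroni adjustment mentioned above amounts to multiplying each p-value by the number of comparisons (capped at 1), or equivalently dividing the significance level by that number. A minimal Python sketch follows; the function name and the p-values are hypothetical and do not come from the study.

```python
def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p-values: p * m, capped at 1.0, for m comparisons."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values for three group comparisons (one per cognitive task).
raw_p = [0.001, 0.02, 0.5]
adjusted = bonferroni_adjust(raw_p)
alpha = 0.05
for raw, adj in zip(raw_p, adjusted):
    print(raw, adj, adj < alpha)
```

With three comparisons, a raw p-value must fall below 0.05 / 3 to remain significant after adjustment.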

Sensory-perceptual & cognitive performances

Each cognitive task was considered as an independent variable in relation to the sensory tasks in order to identify a “Correlation between cognitive and perceptual performance”. Our objective was to determine whether each cognitive task could be utilized to predict the performance of sensory-perceptual tasks. To accomplish this, we employed a hierarchical Proc Glimmix model and performed PROC REG multiple linear regression analysis, using standardized scores incorporated for each individual in each cognitive task and using the average standardized scores for the auditory tasks as well as the visual tasks. Additionally, to depict the relationship between sensory-perceptual average performance (comprising the six sensory tasks) and the three cognitive tasks, we computed Pearson’s correlation. This was done by calculating the average of the standardized scores for the sensory tasks (both auditory and visual) and using the standardized scores for the three cognitive tasks for each participant.

Sample size

Given the demands of the study and based on previous studies22, a power analysis using G*Power software (v. 3.1.9.2) determined that a sample size of 39 participants would power the study at 80% to detect small-to-medium-sized effects at α = 0.05 for repeated-measures ANOVA.

Data availability

All data required to reach the stated conclusions are presented in the paper. Raw data can be found here: https://osf.io/e8z59/?view_only=b7bdd3c661784628855d3d821d6ee395.

References

Lisko, I. et al. How can dementia and disability be prevented in older adults: Where are we today and where are we going? J. Intern. Med. 289 (6), 807–830 (2021).

Rudnicka, E. et al. The World Health Organization (WHO) approach to healthy ageing. Maturitas 139, 6–11. https://doi.org/10.1016/j.maturitas.2020.05.018 (2020).

Lord, S. R., Smith, S. T. & Menant, J. C. Vision and falls in older people: Risk factors and intervention strategies. Clin. Geriatr. Med. 26, 569–581. https://doi.org/10.1016/j.cger.2010.06.002 (2010).

Sterkin, A. et al. Vision improvement in pilots with presbyopia following perceptual learning. Vision. Res. 152, 61–73. https://doi.org/10.1016/j.visres.2017.09.003 (2018).

Yu, D., Cheung, S. H., Legge, G. E. & Chung, S. T. Reading speed in the peripheral visual field of older adults: Does it benefit from perceptual learning? Vision. Res. 50, 860–869. https://doi.org/10.1016/j.visres.2010.02.006 (2010).

Nussbaum, J. F., Pecchioni, L. L., Robinson, J. D. & Thompson, T. L. Communication and Aging (Routledge, 2000).

Humes, L. E. Aging and speech communication: Peripheral, central-auditory, and cognitive factors affecting the speech-understanding problems of older adults. ASHA Lead. 13 (5), 10–33 (2008).

Paraskevoudi, N., Balci, F. & Vatakis, A. Walking through the sensory, cognitive, and temporal degradations of healthy aging. Ann. N. Y. Acad. Sci. 1426 (1), 72–92. https://doi.org/10.1111/nyas.13734 (2018).

Andersen, G. J. Aging and vision: Changes in function and performance from optics to perception. Wiley Interdisciplinary Reviews: Cogn. Sci. 3 (3), 403–410. https://doi.org/10.1002/wcs.1167 (2012).

Owsley, C. Aging and vision. Vision. Res. 51, 1610–1622. https://doi.org/10.1016/j.visres.2010.10.020 (2011).

Saftari, L. N. & Kwon, O. S. Ageing vision and falls: A review. J. Physiol. Anthropol. 37, 11. https://doi.org/10.1186/s40101-018-0170-1 (2018).

Owsley, C., Sekuler, R. & Siemsen, D. Contrast sensitivity throughout adulthood. Vision Res. 23, 689–699. https://doi.org/10.1016/0042-6989(83)90210-9 (1983).

Mestre, D., Blin, O., Serratrice, G. & Pailhous, J. Spatiotemporal contrast sensitivity differs in normal aging and Parkinson’s disease. Neurology 40, 1710–1714. https://doi.org/10.1212/wnl.40.11.1710 (1990).

Billino, J. & Pilz, K. S. Motion perception as a model for perceptual aging. J. Vis. 19 (4), 3. https://doi.org/10.1167/19.4.3 (2019).

Tucker, B. V., Ford, C. & Hedges, S. Speech aging: Production and perception. Wiley Interdiscip. Rev. Cogn. Sci. 12, e1557. https://doi.org/10.1002/wcs.1557 (2021).

Stach, B. A., Hornsby, B. W., Rosenfeld, M. A. L. & DeChicchis, A. R. The complexity of auditory aging. Semin. Hear. 30 (2), 94–111 (2009).

Tun, P. A., Williams, V. A., Small, B. J. & Hafter, E. R. The effects of aging on auditory processing and cognition. Am. J. Audiol. 21, 344–350. https://doi.org/10.1044/1059-0889(2012/12-0030) (2012).

Rosemann, S., Smith, D., Dewenter, M. & Thiel, C. M. Age-related hearing loss influences functional connectivity of auditory cortex for the McGurk illusion. Cortex 129, 266–280. https://doi.org/10.1016/j.cortex.2020.04.022 (2020).

Schmitt, R., Meyer, M. & Giroud, N. Better speech-in-noise comprehension is associated with enhanced neural speech tracking in older adults with hearing impairment. Cortex 151, 133–146 (2022).

Schneider, B. A. & Hamstra, S. J. Gap detection thresholds as a function of tonal duration for younger and older listeners. J. Acoust. Soc. Am. 106, 371–380. https://doi.org/10.1121/1.427062 (1999).

Gordon-Salant, S., Yeni-Komshian, G. H., Fitzgibbons, P. J. & Barrett, J. Age-related differences in identification and discrimination of temporal cues in speech segments. J. Acoust. Soc. Am. 119, 2455–2466. https://doi.org/10.1121/1.2171527 (2006).

Roque, L., Karawani, H., Gordon-Salant, S. & Anderson, S. Effects of age, cognition, and neural encoding on the perception of temporal speech cues. Front. Neurosci. 13, 749. https://doi.org/10.3389/fnins.2019.00749 (2019).

Karah, H. & Karawani, H. Auditory perceptual exercises in adults adapting to the use of hearing aids. Front. Psychol. 13, 832100. https://doi.org/10.3389/fpsyg.2022.832100 (2022).

Karawani, H., Bitan, T., Attias, J. & Banai, K. Auditory perceptual learning in adults with and without age-related hearing loss. Front. Psychol. 6, 2066. https://doi.org/10.3389/fpsyg.2015.02066 (2016).

Pichora-Fuller, M. K. & Singh, G. Effects of age on auditory and cognitive processing: Implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif. 10, 29–59. https://doi.org/10.1177/108471380601000103 (2006).

Schneider, B. A., Pichora-Fuller, M. K., Kowalchuk, D. & Lamb, M. Gap detection and the precedence effect in young and old adults. J. Acoust. Soc. Am. 95, 980–991. https://doi.org/10.1121/1.408403 (1994).

Cavazzana, A. et al. Sensory-specific impairment among older people. An investigation using both sensory thresholds and subjective measures across the five senses. PLoS One. 13, e0202969. https://doi.org/10.1371/journal.pone.0202969 (2018).

Correia, C. et al. Global sensory impairment in older adults in the United States. J. Am. Geriatr. Soc. 64, 306–313. https://doi.org/10.1111/jgs.13955 (2016).

Alain, C. et al. Aging enhances neural activity in auditory, visual, and somatosensory cortices: The common cause revisited. J. Neurosci. 42, 264–275. https://doi.org/10.1523/JNEUROSCI.0864-21.2021 (2022).

Humes, L. E., Burk, M. H., Coughlin, M. P., Busey, T. A. & Strauser, L. E. Auditory speech recognition and visual text recognition in younger and older adults: Similarities and differences between modalities and the effects of presentation rate. (2007).

McNair, S., Okan, Y., Hadjichristidis, C. & de Bruin, W. B. Age differences in moral judgment: Older adults are more deontological than younger adults. J. Behav. Decis. Mak. 32 (1), 47–60 (2019).

Dully, J., McGovern, D. P. & O’Connell, R. G. The impact of natural aging on computational and neural indices of perceptual decision making: A review. Behav. Brain. Res. 355, 48–55. https://doi.org/10.1016/j.bbr.2018.02.001 (2018).

Brewster, K. K., Golub, J. S. & Rutherford, B. R. Neural circuits and behavioral pathways linking hearing loss to affective dysregulation in older adults. Nat. Aging. 1, 422–429. https://doi.org/10.1038/s43587-021-00065-z (2021).

Bieber, R. E. & Gordon-Salant, S. Improving older adults’ understanding of challenging speech: Auditory training, rapid adaptation and perceptual learning. Hear. Res. 402, 108054. https://doi.org/10.1016/j.heares.2020.108054 (2021).

Humes, L. E., Busey, T. A., Craig, J. & Kewley-Port, D. Are age-related changes in cognitive function driven by age-related changes in sensory processing? Atten. Percept. Psychophys. 75, 508–524 (2013).

Humes, L. E. & Young, L. A. Sensory-cognitive interactions in older adults. Ear Hear. 37, 52S–61S. https://doi.org/10.1097/AUD.0000000000000303 (2016).

Roberts, K. L. & Allen, H. A. Perception and cognition in the ageing brain: A brief review of the short- and long-term links between perceptual and cognitive decline. Front. Aging Neurosci. 8, 39. https://doi.org/10.3389/fnagi.2016.00039 (2016).

Pichora-Fuller, M. K., Schneider, B. A. & Daneman, M. How young and old adults listen to and remember speech in noise. J. Acoust. Soc. Am. 97 (1), 593–608 (1995).

Jayakody, D. M. P., Friedland, P. L., Martins, R. N. & Sohrabi, H. R. Impact of aging on the auditory system and related cognitive functions: A narrative review. Front. Neurosci. 12, 125. https://doi.org/10.3389/fnins.2018.00125 (2018).

Greenwood, P. M. & Parasuraman, R. Scale of attentional focus in visual search. Percept. Psychophys. 61 (5), 837–859. https://doi.org/10.3758/bf03206901 (1999).

Greenwood, P. M., Parasuraman, R. & Alexander, G. E. Controlling the focus of spatial attention during visual search: Effects of advanced aging and Alzheimer disease. Neuropsychology 11, 3–12. https://doi.org/10.1037/0894-4105.11.1.3 (1997).

Murphy, C. F. B. et al. Auditory processing performance of the middle-aged and elderly: Auditory or cognitive decline? J. Am. Acad. Audiol. 29, 5–14. https://doi.org/10.3766/jaaa.15098 (2018).

Arsiwala, L. T. et al. Associations of visual function with cognitive performance in community-based older adults: The Eye Determinants of Cognition Study. Journals Gerontology: Ser. A. 77 (10), 2133–2140 (2022).

Sternang, O., Jonsson, B., Wahlin, A., Nyberg, L. & Nilsson, L. G. Examination of the common cause account in a population-based longitudinal study with narrow age cohort design. Gerontology 56, 553–563. https://doi.org/10.1159/000279754 (2010).

Wahl, H. W. & Heyl, V. Connections between vision, hearing, and cognitive function in old age. Generations 27 (1), 39–45 (2003).

Birren, J. E. The psychology of aging. (1964).

Anstey, K. J., Luszcz, M. A. & Sanchez, L. Two-year decline in vision but not hearing is associated with memory decline in very old adults in a population-based sample. Gerontology 47 (5), 289–293 (2001).

Hofer, S. M., Berg, S. & Era, P. Evaluating the interdependence of aging-related changes in visual and auditory acuity, balance, and cognitive functioning. Psychol. Aging. 18 (2), 285. https://doi.org/10.1037/0882-7974.18.2.285 (2003).

Baltes, P. B. & Lindenberger, U. Emergence of a powerful connection between sensory and cognitive functions across the adult life span: A new window to the study of cognitive aging? Psychol. Aging. 12 (1), 12–21. https://doi.org/10.1037/0882-7974.12.1.12 (1997).

Beard, J. R. Beyond integrated care for older adults. Nat. Aging. 4, 1–4. https://doi.org/10.1038/s43587-023-00542-7 (2024).

Diaz, M. T., Rizio, A. A. & Zhuang, J. The neural language systems that support healthy aging: Integrating function, structure, and behavior. Lang. Linguistics Compass. 10 (7), 314–334 (2016).

Salthouse, T. A. & Babcock, R. L. Decomposing adult age differences in working memory. Dev. Psychol. 27 (5), 763 (1991).

Salthouse, T. A. The processing-speed theory of adult age differences in cognition. Psychol. Rev. 103 (3), 403 (1996).

Salthouse, T. A. Selective review of cognitive aging. J. Int. Neuropsychol. Soc. 16, 754–760. https://doi.org/10.1017/S1355617710000706 (2010).

Clinard, C. G., Tremblay, K. L. & Krishnan, A. R. Aging alters the perception and physiological representation of frequency: Evidence from human frequency-following response recordings. Hear. Res. 264, 48–55. https://doi.org/10.1016/j.heares.2009.11.010 (2010).

Grassi, M. & Borella, E. The role of auditory abilities in basic mechanisms of cognition in older adults. Front. Aging Neurosci. 5, 59. https://doi.org/10.3389/fnagi.2013.00059 (2013).

Raz, N., Millman, D. & Moberg, P. J. Auditory memory and age-related differences in two-tone frequency discrimination: Trace decay and interference. Exp. Aging Res. 15, 43–47. https://doi.org/10.1080/03610738908259757 (1989).

Mattys, S. L. & Scharenborg, O. Phoneme categorization and discrimination in younger and older adults: A comparative analysis of perceptual, lexical, and attentional factors. Psychol. Aging. 29, 150–162. https://doi.org/10.1037/a0035387 (2014).

Rufener, K. S., Liem, F. & Meyer, M. Age-related differences in auditory evoked potentials as a function of task modulation during speech–nonspeech processing. Brain Behav. 4 (1), 21–28 (2014).

Ben-David, B. M. et al. Effects of aging and noise on real-time spoken word recognition: Evidence from eye movements. J. Speech Lang. Hear. Res. 54, 243–262. https://doi.org/10.1044/1092-4388(2010/09-0233) (2011).

Füllgrabe, C., Moore, B. C. & Stone, M. A. Age-group differences in speech identification despite matched audiometrically normal hearing: Contributions from auditory temporal processing and cognition. Front. Aging Neurosci. 6, 347 (2015).

Pilz, K. S., Miller, L. & Agnew, H. C. Motion coherence and direction discrimination in healthy aging. J. Vis. 17 (1), 31–31. https://doi.org/10.1167/17.1.31 (2017).

Faubert, J. Visual perception and aging. Can. J. Experimental Psychology/Revue Canadienne de Psychologie expérimentale. 56, 164–176. https://doi.org/10.1037/h0087394 (2002).

Mantiuk, R. K. & Ramponi, G. Age-dependent prediction of visible differences in displayed images. J. Soc. Inform. Display. 26 (1), 4–13 (2018).

Ceponiene, R., Westerfield, M., Torki, M. & Townsend, J. Modality-specificity of sensory aging in vision and audition: Evidence from event-related potentials. Brain Res. 1215, 53–68. https://doi.org/10.1016/j.brainres.2008.02.010 (2008).

Mollon, J. D., Bosten, J. M., Peterzell, D. H. & Webster, M. A. Individual differences in visual science: What can be learned and what is good experimental practice? Vision Res. 141, 4–15. https://doi.org/10.1016/j.visres.2017.11.001 (2017).

Matilainen, L. E. et al. The effects of healthy aging on auditory processing in humans as indexed by transient brain responses. Clin. Neurophysiol. 121 (6), 902–911 (2010).

Herrmann, B., Buckland, C. & Johnsrude, I. S. Neural signatures of temporal regularity processing in sounds differ between younger and older adults. Neurobiol. Aging. 83, 73–85 (2019).

Casco, C., Barollo, M., Contemori, G. & Battaglini, L. The effects of aging on orientation discrimination. Front. Aging Neurosci. 9, 45. https://doi.org/10.3389/fnagi.2017.00045 (2017).

Ronnberg, J. et al. The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Front. Syst. Neurosci. 7, 31. https://doi.org/10.3389/fnsys.2013.00031 (2013).

Ronnberg, J., Holmer, E. & Rudner, M. Cognitive hearing science and ease of language understanding. Int. J. Audiol. 58, 247–261. https://doi.org/10.1080/14992027.2018.1551631 (2019).

Ronnberg, J., Holmer, E. & Rudner, M. Cognitive hearing science: Three memory systems, two approaches, and the Ease of Language Understanding model. J. Speech Lang. Hear. Res. 64, 359–370. https://doi.org/10.1044/2020_JSLHR-20-00007 (2021).

Klaver, P. & Talsma, D. Behind the scenes: How visual memory load biases selective attention during processing of visual streams. Psychophysiology 50 (11), 1133–1146 (2013).

Verissimo, J., Verhaeghen, P., Goldman, N., Weinstein, M. & Ullman, M. T. Evidence that ageing yields improvements as well as declines across attention and executive functions. Nat. Hum. Behav. 6, 97–110. https://doi.org/10.1038/s41562-021-01169-7 (2022).

ANSI. American National Standard Specification for Audiometers (American National Standards Institute, New York, 2010).

Nasreddine, Z. S. et al. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 53 (4), 695–699 (2005).

Yost, W. A. Fundamentals of Hearing (Academic, 2020).

Bsharat-Maalouf, D. & Karawani, H. Bilinguals’ speech perception in noise: Perceptual and neural associations. PLoS One 17, e0264282. https://doi.org/10.1371/journal.pone.0264282 (2022).