Abstract

Academic institutions face increasing challenges in predicting student enrollment and managing retention. A comprehensive strategy is required to track student progress, predict future course demand, and prevent student churn across disciplines. Existing approaches often handle numerical and textual data inadequately, which limits their ability to provide personalized retention strategies. We propose a framework that combines deep learning with recommender systems for student enrollment prediction and churn prevention. The framework integrates preprocessing techniques for both numeric and textual data. Features are extracted from numeric data using statistical measures, and from text using GloVe embeddings, Latent Dirichlet Allocation (LDA) for topic modeling, and SentiWordNet for sentiment analysis. A weighted feature fusion approach combines these features, and the optimal features are selected using Pythagorean fuzzy AHP with a hybrid optimization approach, the Instructional Emperor Pigeon Optimization (IEPO). The DeepEnrollNet model, a hybrid CNN-GRU-Attention QCNN architecture, is used for enrollment prediction, while Deep Q-Networks (DQN) are applied to generate actionable retention recommendations. This methodology improves predictive accuracy for student enrollment and provides tailored retention strategies by addressing both text and numeric data in a unified framework. DeepEnrollNet achieves the lowest errors, with an MSE of 0.218978, MSRE of 0.216445, NMSE of 0.232453, RMSE of 0.23213, and MAPE of 0.218754.


Introduction

Universities worldwide strive to raise student enrollment and retention in order to remain competitive and achieve academic excellence1,2. These goals are central to institutions because student success is directly linked to institutional growth and reputation.

The university considered in this work serves a large student population from around the world and offers a wide range of undergraduate and graduate programs in fields as varied as engineering, medicine, computer science, business, and the humanities. It contributes to building a knowledge-based economy, a goal of the Kingdom’s Vision 2030, through excellence in education, innovation, and research. Student enrollment and retention become ever more crucial as globalization and changing workforce demands increase the university's ambition for greater influence.

A shrinking student body or a high attrition rate can severely affect institutional performance, both operationally and academically. Given these risks, institutions increasingly seek data-driven approaches to anticipate enrollment trends and respond with effective retention strategies1,3,4. Deep learning and recommender systems open new opportunities here, because they can use large amounts of student data to predict behavior and outcomes5,6. Traditional enrollment forecasting and retention approaches cannot match the dynamic and diverse needs of students7. This research applies deep learning methods, including convolutional neural networks and long short-term memory networks, together with personalized recommender systems, to improve how enrollment trends are projected in higher education and to support student success1,8. Analyzing complex patterns in large data sets improves forecasting and enables recommendations customized to each student's profile. The impact of this work extends beyond institutional efficiency9,10. Better enrollment forecasts allow better resource allocation and program planning, while improved retention strategies foster a more supportive and engaging educational environment11,12. Ultimately, this can increase graduation rates, improve student outcomes, and advance educational equity for the university community and society at large13,14,15.

Artificial intelligence has expanded the capabilities of data analysis and prediction across domains. Machine learning techniques such as Support Vector Machines, Random Forests, and k-Nearest Neighbors are widely used for pattern recognition, classification, and prediction to support decision-making9,10. They have proved useful in domains such as student enrollment forecasting, where they aid trend identification and resource optimization16,17. Deep learning models18, among them Convolutional Neural Networks and Recurrent Neural Networks such as Long Short-Term Memory networks, go further by excelling at processing complex, high-dimensional, and sequential data19,20. However, AI technologies21 also have drawbacks: a tendency to overfit, high computational demands, and challenges relating to model interpretability and bias2,22. These limitations underline the need for further research so that AI applications are both effective and fair, particularly in sensitive, high-stakes domains such as education23,24.

This work is motivated by the need to extend existing AI technologies for both student enrollment forecasting and retention strategies, where current models suffer from overfitting, high computational demand, and limited interpretability3. To address these drawbacks, we propose a model that combines advanced optimization algorithms with deep learning techniques to improve accuracy and efficiency. The framework integrates modern deep learning approaches, such as convolutional neural networks, recurrent neural networks, and long short-term memory networks, with recent optimization methods to enhance prediction precision at reduced computational complexity. The resulting model is intended to help educational institutions make better use of their resources and provide personalized student support. Ultimately, the work aims to deliver social benefits through improved education and better student outcomes.

The major contributions of this research work are:

- This study introduces a framework that integrates deep learning and recommender systems for forecasting student enrollment and improving retention strategies.

- The optimal features are selected using Pythagorean fuzzy AHP with a hybrid optimization technique. The proposed hybrid optimization approach, IEPO, is developed by combining TLBO, EMA, and EPO. This approach improves feature selection, resulting in better model accuracy and efficiency.

- The research introduces the DeepEnrollNet model, which combines CNN, GRU, and Attention QCNN for prediction. The model is designed to increase predictive power by capturing complex temporal and spatial patterns in student data.

- The application of DQN to retention recommendation is a novel contribution. It provides actionable insights for improving student retention by simulating different strategies and recommending optimal actions based on student engagement and performance.

- A weighted feature fusion approach combines numeric and text-based features, so that the most relevant information from both data types is used in the prediction and recommendation processes, enhancing the overall accuracy and robustness of the framework.

- The framework is tested and validated, providing practical insights and demonstrating its effectiveness in a real-world educational setting. This case study can be adapted and applied to other institutions facing similar challenges in enrollment prediction and retention strategy development.

The rest of this article is organized as follows: Sect. 2 discusses recent work on enrollment prediction, and Sect. 3 details the proposed approach to student enrollment prediction and retention recommendation. The results obtained with the proposed model are discussed in Sect. 4, and the article is concluded in Sect. 5.

Literature review

Review

Although AI advances for higher education (HE) are promising, recent literature reports a number of drawbacks. Saaida (2023) pointed out the potential of AI to transform education but showed that integrating AI tools into curricula would be the real challenge, one that educators would most likely resist4. Mambile and Mwogosi (2024) indicated the transformative power of AI within Tanzania’s higher education while highlighting the digital divide and lack of infrastructure that might hinder its full application5. Singh (2023) described the power of AI in higher education but raised concerns over data privacy and security that challenge the effective use of AI tools7. Malik et al. (2023) explored the potential of generative AI but pointed out that such technologies might increase biases and raise ethical concerns that need to be acknowledged11. Adıgüzel et al. (2023) presented the ChatGPT tool as a possible future mode of engaging with AI12. Gruetzemacher and Whittlestone (2022), on the other hand, questioned the extent to which AI should be used within education, noting that its development is not always aligned with learning objectives13. George and Wooden (2023) addressed the strategic management of AI; however, such solutions require fine-tuning for different contexts if they are to be scaled up globally14. Southworth et al. (2023) presented an AI literacy model which revealed that embedding AI into different academic fields is at times difficult, which can limit its capabilities. Overall, the infrastructure, privacy, and ethics limitations on integrating AI into higher education indicate that holistic approaches are needed to fully utilize its power15.

Problem statement

Universities face ongoing challenges in forecasting student enrolment and improving retention rates. With a growing student population and a diverse range of academic programs, the university must maintain a delicate balance between attracting new students and ensuring that existing students persist through their studies.

For educational institutions, keeping retention rates high is of utmost importance, as it indicates that the institution addresses students' needs and keeps them engaged in an academic environment that promotes their success. High dropout rates, on the other hand, tarnish the institution's image and can result in financial losses from the expenses incurred in recruitment, scholarships, and other student services. Retention also affects an institution's position in ranking tables, whose criteria include the proportion of students who graduate and student satisfaction with the institution. In turn, understanding the key factors behind student retention allows institutions to create focused strategies, for example academic support courses, student counseling, or extracurricular activities, that support students and encourage them to complete their education.

Moreover, forecasting student enrollment is crucial for effective academic planning and resource management. Accurate enrollment forecasting gives the university a clear understanding of the resources, namely personnel, space, and capital, needed for high-demand courses and programs. Anticipating periods of high intake also helps institutions staff the affected departments appropriately or increase the number of sections for highly enrolled units, thereby relieving congestion and improving educational standards. Conversely, if enrollment falls short, resources are used inefficiently, while if more students enroll than can be managed, resources become overstretched, with a negative impact on students.

Inaccurate enrollment predictions can result in underutilized resources or overcrowding, while high dropout rates can negatively affect the university’s reputation, financial stability, and contributions to Saudi Arabia’s Vision 2030. The eight reviewed papers emphasize the difficulties of integrating AI into higher education. Saaida (2023) pointed out that while AI could change education, educators often resist its integration into the curriculum, which acts as a barrier to widespread implementation. Mambile and Mwogosi (2024) reported that Tanzania’s digital divide and poor infrastructure limit the use of AI tools and thereby deepen inequities in education. Singh (2023) raised data privacy and security concerns, which are critical to the effective use of AI in higher learning environments, especially where student records include minors’ details. Malik et al. (2023) examined ethical implications of generative AI, such as biases in AI-generated content, which must be managed appropriately to avoid unfair outcomes. According to Adıgüzel et al. (2023), AI tools like ChatGPT often fall short in understanding context and can eventually lead to overdependence on automated systems at the cost of critical thinking. Gruetzemacher and Whittlestone (2022) emphasized that AI developments can diverge from educational objectives and highlighted the need for AI to support educational quality rather than harm it. George and Wooden (2023) noted that AI solutions developed for one kind of educational setting often prove difficult to scale to very different educational environments, which reduces their applicability. Finally, according to Southworth et al. (2023), integrating AI literacy into different disciplines can be very challenging and may limit the overall effect of AI in education. All these limitations call for strategic interventions on integration, infrastructure, privacy, bias, and scalability.

Existing approaches to enrollment prediction and at-risk student identification tend to rely on conventional statistical techniques, which capture only part of the complex patterns in modern student data, including behavioral, demographic, and academic factors. Accordingly, the university lacks a robust system for predicting enrollment trends and applying timely, data-driven interventions for student retention. This points to the need for a more sophisticated approach that uses deep learning and recommender systems to predict enrollment more accurately and recommend personalized support strategies. The current work adds to the literature by proposing a framework that uses deep learning for accurate forecasting and recommender systems for tailored retention strategies, fine-tuned to the institution's needs.

Proposed methodology for student enrolment prediction and retention recommendation

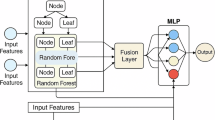

In this research work, a novel deep learning-based approach is introduced for student enrollment prediction. In addition, reinforcement learning is used for retention recommendation. The architecture of the proposed model is shown in Fig. 1.

Problem definition

- Objective: Design a system that predicts future student enrollment and recommends personalized strategies for student retention and improved academic performance.

- Data scope: Historical data from 2020 to 2023, including both numeric data (academic records, demographics) and text data (student feedback, evaluations).

Data collection and integration

Data sources

- Student Information System (SIS): Enrollment records, demographic information, academic performance, attendance, course evaluations.

- Data types:

  - Numeric data \(D^{Num}\): Enrollment data, grades, attendance, course loads, demographic information.

  - Text data \(D^{Text}\): Student feedback, course evaluations, faculty reviews.

Pre-processing

In the first step of data preprocessing, both \(D^{Num}\) and \(D^{Text}\) are refined. The gathered \(D^{Num}\) is pre-processed via KNN-based data imputation and Z-score normalization. The gathered \(D^{Text}\) is pre-processed via stop word removal, stemming, and lemmatization. Data collection, integration, and pre-processing are shown in Fig. 2.

Numeric data

Data cleaning

Data cleaning is a critical step in preprocessing datasets. K-Nearest Neighbors (KNN)25 imputation handles missing values by estimating each one from the closest data points in the dataset. The method is most useful when missing values can be inferred from similar records. In predicting student enrollment and retention, the dataset may include student characteristics, such as previous performance, sociodemographic factors, or activity level, that are not fully recorded. KNN imputation preserves the structure and relationships of the data, which improves the dataset on which the predictive model works and hence the model's accuracy and reliability. KNN imputation is applied to \(D^{Num}\) to fill in the missing values: it uses information from the complete records that are nearest to each incomplete record of \(D^{Num}\). The dataset after KNN imputation is denoted \(D^{KNN\_Num}\).
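The imputation step can be illustrated with a minimal sketch. The article does not specify the distance measure, the number of neighbors, or the aggregation rule, so the Euclidean distance over shared observed columns, `k=2`, and the neighbor mean used here are assumptions, and the function name `knn_impute` and the sample records are hypothetical.

```python
import math

def knn_impute(rows, k=2):
    """Fill None entries with the mean of that column over the k nearest rows.

    Distance is the root-mean-square difference over the columns that both
    rows have observed (an assumed choice, not taken from the article).
    """
    filled = [list(r) for r in rows]
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = []
            for m, other in enumerate(rows):
                if m == i or other[j] is None:
                    continue
                shared = [(a, b) for a, b in zip(row, other)
                          if a is not None and b is not None]
                if not shared:
                    continue
                dist = math.sqrt(sum((a - b) ** 2 for a, b in shared) / len(shared))
                candidates.append((dist, other[j]))
            candidates.sort(key=lambda t: t[0])
            nearest = [v for _, v in candidates[:k]]
            if nearest:
                filled[i][j] = sum(nearest) / len(nearest)
    return filled

# Hypothetical student records: [GPA, attendance %, credit load]
d_num = [
    [3.2, 90.0, 15.0],
    [3.1, 88.0, None],   # missing credit load
    [2.0, 60.0, 9.0],
    [3.3, 92.0, 16.0],
]
d_knn_num = knn_impute(d_num, k=2)
print(d_knn_num[1])
```

In this toy data the incomplete record is closest to the first and last records, so its missing credit load is filled with the mean of their values. In practice the columns would usually be scaled before distances are computed, since attendance dominates GPA in raw units.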

Z-score normalization

Z-score normalization26 is a standardization method that transforms \(D^{KNN\_Num}\) to a standard scale with a mean of 0 and a standard deviation of 1. This step is important when features with different measurement scales, such as test scores, attendance percentages, and engagement levels, exist in the dataset. Without normalization, features with larger value ranges may skew the model's learning and make some predictions inaccurate. Z-score normalization ensures that all features are treated comparably, so the model can learn structure in the data without being limited by differences in scale. It is also needed to prevent a loss of efficiency when numeric features are combined with features derived from text or categories. The z-score-normalized data is denoted \(D^{p\_Num}\), the pre-processed numeric data.
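As a small illustration of this step, the sketch below standardizes each column as \(z = (x - \mu)/\sigma\). It assumes the population standard deviation (the article does not say whether the population or sample form is used), and the helper name `z_score_columns` and the sample values are hypothetical.

```python
import statistics

def z_score_columns(rows):
    """Standardize every column to mean 0 and (population) standard deviation 1."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.pstdev(c) for c in cols]
    return [
        # A constant column has zero spread; map it to 0.0 instead of dividing by zero.
        [(v - m) / s if s > 0 else 0.0 for v, m, s in zip(row, means, stds)]
        for row in rows
    ]

# Hypothetical imputed records: [GPA, attendance %]
d_knn_num = [[3.2, 90.0], [3.1, 88.0], [2.0, 60.0], [3.3, 92.0]]
d_p_num = z_score_columns(d_knn_num)
```

After this transformation GPA and attendance are on the same scale, so neither dominates purely because of its units.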

Text data

The gathered text data is pre-processed through stop word removal27, stemming27, tokenization27, and named entity recognition (NER)28. Stemming reduces words to their base form so that different forms of the same word share one meaning, which broadens what the model can match. Tokenization breaks the text into fixed units, such as words or sentences, so that the model can detect and learn patterns, e.g., the most common words or phrases related to a particular subject, which in this case is student performance and engagement. Named entity recognition identifies and classifies entities within the text; for instance, identifying a student's course or a date in the normalized text aids prediction by retrieving important information. Together, these processes clean, structure, and format the text data, which helps the model derive actionable information about student enrollment and retention. The result is referred to as the pre-processed textual data. The SHAP analysis of the pre-processing stage is shown in Fig. 3.
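The tokenization, stop word removal, and stemming steps can be sketched as follows. This is only an illustration: the stop word list is a tiny hypothetical sample, the suffix-stripping `stem` function is a crude stand-in for a real stemmer such as Porter's, and NER is omitted because it needs a trained model.

```python
import re

# Tiny illustrative stop word list (an assumption; real lists are much longer).
STOP_WORDS = {"the", "a", "an", "is", "was", "were", "and", "of", "to", "in"}
# Crude suffix list standing in for a real stemming algorithm.
SUFFIXES = ("ing", "ed", "es", "s")

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def stem(token):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    """Tokenize, drop stop words, then stem the remaining tokens."""
    return [stem(t) for t in tokenize(text) if t not in STOP_WORDS]

feedback = "The lectures were engaging and the assignments helped"
tokens = preprocess(feedback)
print(tokens)
```

The stems are not always dictionary words ("lectur", "engag"), which is normal for stemming; lemmatization would return proper base forms at higher cost.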

With the data preprocessed, the next task is to extract meaningful features that can serve as the input for predictive models.

Feature extraction

Feature extraction identifies the most important variables that influence student enrollment and retention outcomes by converting raw data into meaningful features. Statistical features29 such as mean, median, standard deviation, skewness, and kurtosis are extracted from \(D^{p\_Num}\). The extracted statistical features are denoted \(f^{Num}\). The feature extraction stage is visualized in Fig. 4.
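The five statistical features named above can be computed as in the following sketch. It assumes they are taken over one sequence of numeric values (for example one column, or one student's record over time; the article does not say which), and it uses moment-based skewness and non-excess (Pearson) kurtosis, which is also an assumption.

```python
import statistics

def statistical_features(values):
    """Return mean, median, std, skewness, and kurtosis of a numeric sequence."""
    n = len(values)
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    # Third and fourth central moments.
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return {
        "mean": mean,
        "median": statistics.median(values),
        "std": std,
        "skewness": m3 / std ** 3 if std > 0 else 0.0,
        "kurtosis": m4 / std ** 4 if std > 0 else 0.0,
    }

f_num = statistical_features([1.0, 2.0, 3.0, 4.0, 5.0])
```

For this symmetric toy sequence the skewness is zero, which is a quick sanity check on the moment formulas.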

Text data

Word embeddings (GloVe)30 capture word semantics from the pre-processed text data. Topic modelling (LDA)31 extracts themes from it, and sentiment analysis (SentiWordNet)32 gauges its sentiment. The features extracted from the text data are denoted \(f^{Text}\).

Weighted feature fusion

Once the features are extracted, the next step is to combine them through a weighted fusion approach. This method ensures that both numeric and text-based data contribute effectively to the prediction and recommendation processes. The features from the two data sources, \(f^{Text}\) and \(f^{Num}\), are multiplied by the weights \(w1\) and \(w2\) according to their importance, and the weighted features are combined into the unified feature vector as per Eq. (1).

Here, \(U\) is the unified feature vector, and \(w1\) and \(w2\) are the weights assigned to each feature set. The SHAP analysis (XAI) of the feature extraction stage is shown in Fig. 5.
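One way to read the fusion step is sketched below. Because the exact form of Eq. (1) is not recoverable from the text, the sketch assumes that each feature block is scaled by its weight and the two blocks are concatenated into \(U\); a weighted sum would be an alternative reading when both blocks have the same length. The function name and the numbers are hypothetical.

```python
def weighted_fusion(f_text, f_num, w1, w2):
    """Scale each feature block by its weight and concatenate the blocks into U.

    Concatenation is an assumption; the article only states that the
    features are multiplied by w1 and w2 and then combined.
    """
    return [w1 * x for x in f_text] + [w2 * x for x in f_num]

f_text = [0.2, -0.5, 0.9]   # hypothetical text features
f_num = [1.0, 0.0]          # hypothetical numeric features
U = weighted_fusion(f_text, f_num, w1=0.4, w2=0.6)
```

Concatenation keeps every original feature available to the later selection stage, while the weights control how strongly each source influences distance-based or gradient-based learners.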

After fusing the features, it is essential to select the most relevant ones for model training. This is accomplished with Pythagorean fuzzy AHP combined with hybrid optimization, which prioritizes features based on their impact on student enrollment and retention outcomes.

Feature selection via Pythagorean fuzzy AHP with hybrid optimization

The optimal features in \(U\) are selected using the new multi-criteria decision-making (MCDM)-based Instructional Emperor Pigeon Optimization (IEPO). IEPO combines TLBO33, EMA34, and EPO35. The fused features \(U\) act as the input to the feature selection phase.

The Pythagorean fuzzy AHP is explained in the steps that follow.

Step 1

First, a decision matrix of pairwise comparisons between the features in the hierarchical system’s dimensions is constructed using Pythagorean fuzzy numbers. Each pairwise comparison determines which of the two dimensions is more significant, as indicated by the matrix A in Eq. (2).

Step 2

A fuzzy comparison matrix24 can be made by converting pairwise comparisons into crisp values. Equation (3) can be used to generate a normalized fuzzy matrix.

Step 3

The proposed model presents a new way of using the hybrid Pythagorean fuzzy AHP methodology to generate a weighted priority matrix. The weighted priority of each element (feature) is computed by dividing the sum of its entries in the normalized matrix by the number of criteria.

Step 4

The eigenvectors are obtained by multiplying the fuzzy pairwise comparison matrix by the weighted priority vector. The largest eigenvalue, \(\lambda_{max}\), is then determined from the sum of the eigenvector elements.

Step 5: Validation

Validating consistency is essential to the fuzzy AHP process. The validation proceeds as follows.

Step 5.1

The consistency index (CI) is calculated by Eq. (4):

\[CI = \frac{\lambda_{max} - n}{n - 1} \tag{4}\]

Where \(\:{\lambda\:}_{max}\) denotes the greatest eigenvalue and n indicates the matrix’s order.

Step 5.2

The random index (RI) values depend on the matrix’s order. Table 1 lists the random indexes.

Step 5.3

The consistency ratio (CR) is calculated by Eq. (5):

\[CR = \frac{CI}{RI} \tag{5}\]

The maximum acceptable CR value is 0.1. If the consistency ratio exceeds this limit, the pairwise comparisons need to be re-examined to bring it down to the desired level. Consistency evaluation is a helpful technique for assessing both the overall hierarchy and the consistency of the decision-makers.
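Steps 2 to 5 can be illustrated on a crisp (already defuzzified) comparison matrix. The sketch below is not the authors' implementation: the Pythagorean fuzzy part is omitted, \(\lambda_{max}\) is estimated with the common row-ratio approximation, and the `RANDOM_INDEX` table holds Saaty's widely used values, which are assumed (not confirmed) to match Table 1.

```python
# Saaty's standard random index values by matrix order (assumed to match Table 1).
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}

def ahp_consistency(A):
    """Return (weights, lambda_max, CI, CR) for a crisp pairwise matrix of order n >= 2."""
    n = len(A)
    # Step 2: normalize each column so that it sums to one.
    col_sums = [sum(A[i][j] for i in range(n)) for j in range(n)]
    normalized = [[A[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    # Step 3: priority of each criterion = row sum of the normalized matrix / n.
    weights = [sum(row) / n for row in normalized]
    # Step 4: multiply the comparison matrix by the priorities, then estimate lambda_max.
    weighted_sum = [sum(A[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(ws / w for ws, w in zip(weighted_sum, weights)) / n
    # Step 5: consistency index and consistency ratio.
    ci = (lambda_max - n) / (n - 1)
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return weights, lambda_max, ci, cr

# Hypothetical, perfectly consistent comparison of three features.
A = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
weights, lambda_max, ci, cr = ahp_consistency(A)
print(weights, lambda_max, cr)
```

Because every entry of this toy matrix satisfies \(a_{ik} = a_{ij} a_{jk}\), \(\lambda_{max}\) equals the order of the matrix and the consistency ratio is zero, well below the 0.1 limit.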

Step 6

The geometric mean of each criterion over the members of the pairwise comparison matrix is determined by Eq. (6).

Step 7

The fuzzy weight of each criterion is calculated by Eq. (7).

Step 8

To get the ultimate weight, the fuzzy weight for each criterion is multiplied by the random distribution values whose sum equals one.
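For intuition, the geometric-mean weighting of Steps 6 and 7 can be sketched on crisp values. The fuzzy arithmetic of the article and the random redistribution of Step 8 are not reproduced; the sketch assumes the usual row geometric mean followed by normalization, and the matrix is a hypothetical example.

```python
import math

def geometric_mean_weights(A):
    """Row geometric means of a pairwise matrix, normalized to sum to one."""
    n = len(A)
    geo_means = [math.prod(row) ** (1.0 / n) for row in A]
    total = sum(geo_means)
    return [g / total for g in geo_means]

# Hypothetical crisp comparison of three features.
A = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
gm_weights = geometric_mean_weights(A)
```

For a perfectly consistent matrix such as this one, the geometric-mean weights coincide with the column-normalized priorities, which is a convenient cross-check between the two weighting routes.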

Step 9: Ranking

The features are ranked using the TOPSIS approach.

Step 10: Feature selection

Based on the ranking obtained from TOPSIS, the top-ranked features are selected for the next phase.
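The ranking and selection in Steps 9 and 10 can be illustrated with a standard TOPSIS sketch. The criteria used to score each feature are not listed in the text, so the two criteria below (a benefit-type relevance score and a cost-type redundancy score), the weights, and the scores are purely hypothetical.

```python
import math

def topsis_rank(matrix, weights, benefit):
    """Return closeness scores and a best-first ranking of the alternatives (rows)."""
    n_alt, n_crit = len(matrix), len(weights)
    # Vector-normalize each criterion column, then apply the criterion weight.
    norms = [math.sqrt(sum(matrix[i][j] ** 2 for i in range(n_alt))) or 1.0
             for j in range(n_crit)]
    v = [[weights[j] * matrix[i][j] / norms[j] for j in range(n_crit)]
         for i in range(n_alt)]
    # Ideal and anti-ideal points depend on whether a criterion is benefit or cost.
    cols = list(zip(*v))
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(cols)]
    anti = [min(c) if benefit[j] else max(c) for j, c in enumerate(cols)]
    scores = []
    for row in v:
        d_pos, d_neg = math.dist(row, ideal), math.dist(row, anti)
        scores.append(d_neg / (d_pos + d_neg) if d_pos + d_neg > 0 else 0.0)
    ranking = sorted(range(n_alt), key=lambda i: scores[i], reverse=True)
    return scores, ranking

# Hypothetical scores for three features: [relevance (benefit), redundancy (cost)]
feature_scores = [[0.9, 0.2], [0.5, 0.5], [0.1, 0.9]]
scores, ranking = topsis_rank(feature_scores, weights=[0.6, 0.4], benefit=[True, False])
selected = ranking[:2]   # keep the two top-ranked features
```

Here the first feature is best on both criteria, so it coincides with the ideal point and receives the highest closeness score.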

The mathematical steps of the IEPO model are described below.

IEPO

The Instructional Emperor Pigeon Optimization (IEPO) method is a hierarchical optimization approach that refines and selects the best feature sets to enhance predictive accuracy. It begins by generating an initial population of potential feature sets, randomly or with prior knowledge, and evaluating each set’s fitness based on a performance metric like Mean Squared Error (MSE). The feature sets are then ranked into three groups: the top 25% “elite” group maintains its position with minimal changes, the middle 26–50% group adjusts features relative to the elite’s values, and the lower-ranked group explores riskier variations using random factors to find high-reward solutions. The Teaching-Learning-Based Optimization (TLBO) phase then refines each set by updating it based on the best-performing sets, akin to learning from a top instructor. Further refinement follows with Emperor Pigeon Optimization (EPO), which adjusts each feature set to move in the best set’s direction. This iterative process continues until the fitness change falls below a threshold, at which point the optimized feature set is selected, providing a robust solution for accurate predictions.

In contrast to traditional approaches, the IEPO algorithm proposed in this paper incorporates a structured ranking-based grouping mechanism that classifies search agents into three groups: elite, middle, and explorative agents. This hierarchy keeps the best feature sets stable while still allowing the feature space to be explored efficiently without excessive risk. The method is further strengthened by the teaching phase of TLBO and the oscillation-based updates of EPO, which improve feature selection in both convergence rate and solution quality. Owing to these iterative enhancements, the proposed IEPO method produces better predictive outcomes at lower computational cost, avoiding the drawbacks of earlier models that either became stuck in local optima or could not explore features efficiently.

Step 1: Initialization

An initial population of feature sets is generated as shown in Eq. (8), and each feature set is denoted \(pop^{i}\). The number of features in \(pop^{i}\) is denoted \(n\). Typically, the feature sets are initialized randomly within a predefined range or based on domain knowledge.

In addition, parameters such as \(T\) for TLBO, \(\gamma\) for EMA, and \(Step\_size\) for EPO are defined.

Step 2: Fitness computation

For each feature set in the population, the fitness is computed. Fitness is based on how well the features predict the target variable (student enrollment) under an appropriate performance metric (MSE). The fitness \(Fit_{i}\) of the \(i^{th}\) feature set is given by Eq. (9).
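Initialization and fitness evaluation can be sketched as follows. How a candidate feature set is turned into a prediction is not stated in this section, so the linear scorer inside `fitness` is a placeholder assumption for whatever predictor is trained on the selected features; the data and function names are hypothetical.

```python
import random

def init_population(pop_size, n, low=0.0, high=1.0, seed=0):
    """Step 1: draw pop_size candidate feature-weight vectors of length n."""
    rng = random.Random(seed)
    return [[rng.uniform(low, high) for _ in range(n)] for _ in range(pop_size)]

def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def fitness(candidate, X, y):
    """Step 2: MSE of a placeholder linear scorer that weights each feature."""
    preds = [sum(w * x for w, x in zip(candidate, row)) for row in X]
    return mse(y, preds)

# Hypothetical toy data: two features, three samples.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = [1.0, 2.0, 3.0]
population = init_population(pop_size=4, n=2)
fitnesses = [fitness(c, X, y) for c in population]
```

Lower fitness is better here, so ranking the population in ascending order of MSE gives the ordering used by the grouping step.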

Step 3: Fitness based classification of search agents for position update

The first group consists of search agents with high rank (top 25% by fitness).

The first category consists of the highest performers in the search space, or elite participants. They account for 25% of the total population. Their main goal is to maintain their status in the populace by averting any noteworthy alterations.

The second group consists of search agents with middle rank (26–50% by fitness).

Members of this group (search agents) are ranked in the middle and exploit the differences between the share values of the first group's members. In optimization terms, their task is to locate the optimal point. This group usually makes up about 26–50% of the population. At least two members of the first group are needed for a meaningful comparison of their targets. Assume that there are two sets of people (search agents), and that each person in the first group owns a unit-valued share (target) of the asset denoted by y. To exploit the differences between the shares held by these members, the share values are adjusted according to Eq. (10).

In Eq. (10), \(n_{l}\) denotes the \(n\)th person in the first group, while \(n_{k}\) denotes the \(n\)th person in the second group. The variable \(r\) takes a random value between 0 and 1. \(pop_{1,l}^{gp1}\) and \(pop_{2,l}^{gp1}\) are two persons in the first group, both indexed by \(l\), and \(pop_{k}^{gp2}\) is the \(k\)th individual in the second group.

The third group comprises search agents with weak rank

Compared to individuals in the second group, those in the third group are less fit. They select shares that are riskier than those held by the second group, but they are comparable to those held by the first group in order to reap larger rewards. Individuals in the third group, in contrast to those in the second group, utilize both the distinctions in the first group’s share values and the distinctions in their own share values when making adjustments. It is necessary to compare the shares held by the first group between two search agents. Two random factors are substituted for factor 1 in order to raise the risk that members of the third group assume relative to members of the second group. The average value of these random components is quite close to unity. Consequently, in order to maximize their gains, members of the third group adjust the values of their shares by applying Eqs. (11) and (12).

The third group, \(pop_{j}^{gp3}\), makes up a sizeable percentage (20–50%) of the total population. Each member is uniquely identified by the index \(n_{j}\), with the \(j\)th member denoted \(j\), and has a corresponding share variation value \(SV_{j}\). To search for the best position, every individual in this group generates two random numbers, \(r_{1}\) and \(r_{2}\), both in the interval [0, 1].
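The three-group update can be sketched as below. Equations (10) to (12) are not reproduced in this text, so the update rules are assumptions modeled on the commonly published exchange market algorithm: middle agents take a random convex combination of two elite agents, and weak agents move by a share variation built from two random factors of the form \(2r\), whose mean is one. The 25% / 25% / remainder split, the `scale` factor of 0.8, and all names are hypothetical.

```python
import random

def rank_groups(population, fitnesses):
    """Sort by ascending fitness (lower MSE is better) and split into three groups."""
    order = sorted(range(len(population)), key=lambda i: fitnesses[i])
    ranked = [population[i] for i in order]
    n1 = max(2, len(ranked) // 4)   # elite group; two members are needed for comparisons
    n2 = max(1, len(ranked) // 4)   # middle group
    return ranked[:n1], ranked[n1:n1 + n2], ranked[n1 + n2:]

def update_middle(elite, rng):
    """Assumed middle-group rule: random convex combination of two elite agents."""
    a, b = rng.sample(elite, 2)
    r = rng.random()
    return [r * x + (1 - r) * y for x, y in zip(a, b)]

def update_weak(member, elite, rng, scale=0.8):
    """Assumed weak-group rule: move by a share variation SV with two random factors."""
    a, b = rng.sample(elite, 2)
    r1, r2 = rng.random(), rng.random()
    sv = [2 * r1 * (x - m) + 2 * r2 * (y - m) for x, y, m in zip(a, b, member)]
    return [m + scale * s for m, s in zip(member, sv)]

rng = random.Random(0)
population = [[float(i), float(i)] for i in range(8)]   # hypothetical agents
fitnesses = [float(i) for i in range(8)]                # hypothetical MSE values
elite, middle, weak = rank_groups(population, fitnesses)
new_middle = [update_middle(elite, rng) for _ in middle]
new_weak = [update_weak(m, elite, rng) for m in weak]
```

Elite agents are left unchanged, middle agents stay inside the region spanned by the elite, and weak agents may overshoot it, which is what makes their moves riskier.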

Step 4-Update each feature set based on the best feature set using the teaching phase of TLBO model

The newly updated solution based on the teaching phase of TLBO model is shown in Eq. (13).

Here, \(\:Best\_Feature\_Set\)is the acquired best feature among the 3 groups.

The new feature set is refined based on the movement updating (oscillation phase) of the EPO model. The proposed model is shown in Eq. (14).

Step 4-Feature refinement based on EPO

Here, \(\:po{p}_{i}^{new}\) is the recorded new set of features, \(\:ste{p}_{i}\) is the step size, and \(\:D\) is the direction of the best feature.

Step 5

The fitness of the new population set is calculated using the formula given in Eq. (15).

Step 6- Termination criteria

The change in the fitness value is monitored, and convergence is declared once the change falls below the threshold. If the termination criterion is met, the best solution (feature) \(\:{U}^{*}\) is returned.
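The termination test in Step 6 can be sketched as follows. This is a minimal illustration, assuming that convergence is checked on the absolute change between two successive best-fitness values; the threshold value, the iteration cap, and the function names are hypothetical and not taken from the paper.

```python
def has_converged(fitness_history, threshold=1e-4):
    """Return True when the last change in fitness is below the threshold."""
    if len(fitness_history) < 2:
        return False  # a change cannot be measured from a single value
    return abs(fitness_history[-1] - fitness_history[-2]) < threshold

def run_until_converged(step, max_iterations=100, threshold=1e-4):
    """Call step(iteration) until the fitness stabilises or the cap is reached."""
    history = []
    for iteration in range(max_iterations):
        history.append(step(iteration))
        if has_converged(history, threshold):
            break
    return history

# Hypothetical fitness curve that flattens out over the iterations.
history = run_until_converged(lambda t: 1.0 / (t + 1) ** 2)
```

The iteration cap guards against the case where the fitness keeps oscillating and never falls below the threshold.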

The convergence analysis of the proposed IEPO model against the existing model is shown in Fig. 6. As per the acquired outcome, the proposed model converges faster than the existing one. The improvement is mainly owing to the inclusion of the teaching principles of TLBO and the exchange principles of the EMA model.

Student enrolment prediction via DeepEnrollNet

With the relevant features selected, we now move on to the core predictive task: predicting student enrolment. This is achieved through the DeepEnrollNet model, which is the combination of CNN-LSTM and Attention QCNN (diagrammatically shown in Fig. 5). The input data U* is initially sent through a convolutional layer, which uses a convolution process to extract pertinent information from the input. This is the final stage of the process and uses the results of the previous phase. Figure 7 illustrates the layout of DeepEnrollNet.

Convolutional neural networks are primarily used in image processing, and they excel in spatial feature extraction. As far as student enrollment prediction is concerned, CNNs are used to model local patterns found in various student-related features, including historical enrollment, grades, or other measures of student engagement and activity. Thanks to the inclusion of convolutional layers in the architecture of the CNN model, the system is capable of recognizing the secondary correlations present in the database and understanding the features that are crucial in predicting future enrollment figures.

The other element of the proposed enrollment predictor is the use of gated recurrent units that are part of recurrent neural networks. This is because such data contains a temporal aspect, which should be modeled as well. When analyzing student enrollment rates, there may be many periodic or even long-term trends (for example, related to the academic year). Hence it is important that a past sequence of observations is used in making a forecast about the future. GRU units are particularly good at this because they are able to model such long-term dependencies without suffering from the vanishing gradient, which renders traditional recurrent neural networks ineffective. This is especially important in a prediction task, where enrollment rates have to be predicted depending on previous data.

The capability of focusing on significant elements of the data has been added to the model using the attention mechanism, which contributes to the capability of the model in handling long sequences. In this aspect, the attention mechanism enables the model to differentiate between the relevance of different features or the importance of different time steps while predicting the number of enrollments. For instance, it can be that the model is trained in such a way that it considers several seasons or years more than others because they have a greater impact on the decisions of students in enrolling, thus improving the ability of the model to handle information that is complicated and scattered, like students, their performance, and factors that affect their enrollment.
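The weighting of time steps described above can be illustrated with a minimal NumPy sketch of scaled dot-product attention. This is a generic formulation and an assumption for illustration; the source does not specify the exact attention variant used in DeepEnrollNet, and the names `attend`, `hidden_states`, and `query` are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def attend(hidden_states, query):
    """Weight each time step by its similarity to the query.

    hidden_states: array of shape (time_steps, features)
    query:         array of shape (features,)
    """
    scores = hidden_states @ query / np.sqrt(hidden_states.shape[1])
    weights = softmax(scores)          # one weight per time step, summing to 1
    context = weights @ hidden_states  # weighted average of the time steps
    return context, weights

# Hypothetical sequence of three time steps with two features each.
hidden_states = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
context, weights = attend(hidden_states, query=np.array([1.0, 1.0]))
```

In this toy input the third time step is most similar to the query, so it receives the largest weight, mirroring how some seasons or years can count for more than others.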

The Quadratic CNN adds a quadratic layer into the model that goes beyond the convolution process. This model can model feature interaction between different levels. Such a model works well where the data involves complex interrelationships between a number of factors, as in student enrollment when different levels are non-linearly related. With the introduction of quadratic interactions, QCNN again comes into play and helps model these relationships in a much better way, resulting in better predictions.

A deep learning method with four layers (input (i/p), CNN, LSTM and output layer), called CNN-LSTM, is combined with the attention-based Quadratic CNN to predict the enrollment rates of upcoming years. The CNN layer is trained with U*. The convolution operation is intended to increase the depth while reducing the number of parameters, and the feature size is subsequently decreased by pooling.

Input layer

It is the initial layer which contains the data by which the classification is to be done.

Convolution neural network layer

Convolutional layers are typically the most crucial components of CNNs. The input cube is convolved with numerous learnable filters at each convolutional layer, producing a variety of feature maps. For example, assume that X is the i/p and that its size is m × n × d, where m and n denote the spatial size, d denotes its channel count, and denotes the ith feature map of M. The jth output of the convolutional layer can be represented using the formula shown in Eq. (16).

Here, f(·) is the activation function that improves nonlinearity. ReLU is used, as given in Eq. (17).

Pooling layers are occasionally added after multiple convolutional layers in CNNs because redundant information exists in images. The feature maps’ spatial size gradually shrinks as a result of the pooling procedure, and the network’s computation and parameter count likewise drop. With a p × p neighbourhood window designated as S, the typical pooling method can be calculated using the formula given in Eq. (18).

where F is the number of elements in S.
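The convolution, ReLU, and pooling steps described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions: Eq. (16) is taken to be a sum over channels of filter responses plus a bias, and Eq. (18) is taken to be average pooling (the sum over the window S divided by F). The function names and the non-overlapping window are hypothetical choices.

```python
import numpy as np

def relu(z):
    """ReLU activation: max(0, z), applied element-wise."""
    return np.maximum(z, 0.0)

def conv2d_single(x, w, b):
    """Valid cross-correlation of x (m, n, d) with one filter w (k, k, d)."""
    m, n, _ = x.shape
    k = w.shape[0]
    out = np.empty((m - k + 1, n - k + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # Sum over the k x k window and all d channels, then add the bias.
            out[r, c] = np.sum(x[r:r + k, c:c + k, :] * w) + b
    return relu(out)

def average_pool(fmap, p):
    """Non-overlapping p x p average pooling; incomplete windows are dropped."""
    rows, cols = fmap.shape[0] // p, fmap.shape[1] // p
    trimmed = fmap[:rows * p, :cols * p]
    return trimmed.reshape(rows, p, cols, p).mean(axis=(1, 3))

x = np.ones((5, 5, 2))                     # toy input with m = n = 5, d = 2
w = np.ones((2, 2, 2))                     # one 2 x 2 filter spanning both channels
feature_map = conv2d_single(x, w, b=-1.0)  # each entry is 8 - 1 = 7
pooled = average_pool(feature_map, p=2)
```

The 5 × 5 input shrinks to a 4 × 4 feature map after the valid convolution and to 2 × 2 after pooling, which shows how the spatial size and the downstream parameter count drop.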

Fully connected layer

The feature maps from the preceding layer are sent to fully connected layers. By flattening the feature maps into an n-dimensional vector, the fully connected layers, as in a conventional neural network, extract deeper and more abstract information. Equation (19) gives the formula for the fully connected layer.

where \(\:X`\) is the input, \(\:Y`\) is the output, W is the weight and b is the bias.

LSTM layer

The three gated units that comprise the bulk of the LSTM neural network are the forget gate, i/p gate, and o/p gate. The i/p gate is the main processor of i/p data. The forget gate controls how well the current cell remembers prior information. The o/p gate contains the neuron’s o/p. Considering that the sequence of i/p is, the calculation formula for each LSTM neuron parameter at time t is as follows by Eqs. (20), (21), (22), (23) and (24).

Here, \(\:{i}_{t}\), \(\:{f}_{t}\) and \(\:{o}_{t}\) are the activations of the i/p gate, forget gate and o/p gate at time t, respectively; \(\:{x}_{t}\) is the i/p to the LSTM neuron at time t; and \(\:{h}_{t-1}\) is the hidden layer’s o/p state at time t-1. In addition, \(\:{W}_{i}\), \(\:{W}_{f}\) and \(\:{W}_{o}\) are the weights of the i/p gate, the forget gate and the o/p gate of the neuron; \(\:{b}_{i}\), \(\:{b}_{f}\) and \(\:{b}_{o}\) are the offset vectors; \(\:{W}_{c}\) is the weight between the i/p and the cell unit; \(\:{h}_{t}\) is the o/p of the hidden layer at time t; and \(\:S\:\) is the sigmoid function.
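A single LSTM time step using the symbols above can be sketched in NumPy as follows. This assumes the standard LSTM formulation, in which each gate acts on the concatenation of \(\:{h}_{t-1}\) and \(\:{x}_{t}\); the cell bias `b_c`, the dictionary layout, and the random initialisation are assumptions for illustration and are not stated in the source.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step in the standard formulation (assumed to match Eqs. (20)-(24))."""
    z = np.concatenate([h_prev, x_t])                    # [h_{t-1}, x_t]
    i_t = sigmoid(params["W_i"] @ z + params["b_i"])     # input gate
    f_t = sigmoid(params["W_f"] @ z + params["b_f"])     # forget gate
    o_t = sigmoid(params["W_o"] @ z + params["b_o"])     # output gate
    c_hat = np.tanh(params["W_c"] @ z + params["b_c"])   # candidate cell state
    c_t = f_t * c_prev + i_t * c_hat                     # new cell state
    h_t = o_t * np.tanh(c_t)                             # new hidden state
    return h_t, c_t

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4  # hypothetical sizes
params = {name: rng.normal(scale=0.1, size=(n_hidden, n_hidden + n_in))
          for name in ("W_i", "W_f", "W_o", "W_c")}
params.update({name: np.zeros(n_hidden) for name in ("b_i", "b_f", "b_o", "b_c")})

h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for x_t in rng.normal(size=(5, n_in)):  # a toy sequence of five time steps
    h, c = lstm_step(x_t, h, c, params)
```

The forget gate scales the previous cell state, which is how earlier enrolment observations can persist across many time steps.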

Output layer

It is the final layer, which contains the output of the CNN-LSTM model, i.e., the predicted future enrolment rates.

Self-attention based quadratic CNN

Self-attention is a mechanism that allows a model to weigh the relative importance of different elements in its input sequence while making predictions. It increases the receptive field of the CNN without incurring the computational cost associated with very large kernel sizes. The quadratic CNN is a nine-layered neural network architecture that consists of an i/p layer, a fully connected layer, five hidden layers made up of pooling and convolution layers, and an o/p layer (SoftMax). A dropout layer follows the fully connected layer in this configuration. In the training phase, the neuron node’s probability is p = 0.5, and in the testing phase, p = 1. The activation functions of all layers except the o/p layer are leaky rectified linear units (leaky ReLUs), which are used to increase convergence speed and address the gradient saturation issue. This can be expressed by Eq. (25).

During training, any deviation of the predicted representation from the actual one is treated as cost, and the cost function is computed using cross entropy, as shown in Eq. (26).

where \(\:\theta\:\) and \(\:{\theta\:}^{{\prime\:}}\) are the predicted and actual probability distributions, respectively. The training and validation analysis of the proposed model for varying epochs is shown in Fig. 8.
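The leaky ReLU activation and the cross-entropy cost referred to in Eqs. (25) and (26) can be sketched as follows. This is a minimal illustration assuming the standard definitions; the negative slope `alpha = 0.01` and the clipping constant `eps` are hypothetical values not given in the source.

```python
import math

def leaky_relu(z, alpha=0.01):
    """Leaky ReLU: identity for positive inputs, small slope alpha otherwise."""
    return z if z > 0 else alpha * z

def cross_entropy(predicted, actual, eps=1e-12):
    """Cross entropy between the predicted and actual probability distributions."""
    # eps guards against log(0) when a predicted probability is exactly zero.
    return -sum(a * math.log(max(p, eps)) for p, a in zip(predicted, actual))

# A confident correct prediction costs less than a confident wrong one.
loss_good = cross_entropy([0.9, 0.1], [1.0, 0.0])
loss_bad = cross_entropy([0.1, 0.9], [1.0, 0.0])
```

Unlike a plain ReLU, the leaky variant keeps a non-zero gradient for negative inputs, which is the property the text relies on to avoid gradient saturation.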

Both graphs (in Fig. 8) show that the model is learning effectively, as the training loss decreases and the accuracy increases consistently over the epochs. By epoch 50, the training accuracy is quite high, suggesting that the model has successfully fit the training data.

The feature fusion in the proposed model, which combines numeric and text-based features, is a critical component that directly contributes to the accuracy and robustness of the framework. Numeric attributes often refer to structured information that can be effectively handled by conventional mathematical and statistical methodologies or machine learning approaches, such as social background, trends, or results. Textual features, however, are unstructured and carry latent, qualitative information that numbers cannot express. Because of this duality of feature types, the model benefits from both and thus ensures that all valuable information is utilized, including information that would have been lost if either feature type were used alone.

The weighted fusion strategy allows for the appropriate weight and relevancy of different features in the model. For example, some numerical elements, such as age and prior academic record, are likely to contribute more heavily towards estimating student retention or admission rates than textual components, such as comments made on feedback or the sentiment expressed in students’ answers. If the model applied different weights to each type of feature, it would be able to merge the components of information more successfully, resulting in enhancement of the predictive abilities of the model. This weighted expansion enables the model to emphasize the important features while including less important features to produce better predictions.
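The weighted fusion idea can be illustrated with a minimal sketch in which each feature block is scaled by its weight and the blocks are then concatenated. The weights, the concatenation scheme, and the function name are assumptions for illustration; the source does not state how the fusion weights are set or combined.

```python
def weighted_fusion(numeric_features, text_features, w_numeric=0.6, w_text=0.4):
    """Scale each feature block by its weight and concatenate the results.

    The default weights are hypothetical placeholders, not values from the paper.
    """
    scaled_numeric = [w_numeric * value for value in numeric_features]
    scaled_text = [w_text * value for value in text_features]
    return scaled_numeric + scaled_text

# Hypothetical example: two numeric features and one text-derived feature.
fused = weighted_fusion([1.0, 2.0], [10.0], w_numeric=0.5, w_text=0.5)
```

A larger `w_numeric` lets attributes such as prior academic record dominate the fused vector, while a non-zero `w_text` keeps the textual signal available to the predictor.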

Integration layer

Integration layers are often used in CNNs to combine information from different feature maps. It is a layer that combines output from the CNN-LSTM and Attention-Quadratic CNN which results in improved accuracy.

Student retention recommendation based on DQN

Beyond predicting enrolment, it is also crucial to provide actionable recommendations for improving student retention. This is accomplished through the use of Deep Q-Networks (DQN), which enables the development of strategies to optimize student retention. Retention recommendation using DQN is an innovative approach that leverages reinforcement learning (RL) to optimize retention strategies in dynamic environments such as student retention or customer retention systems. Mathematically, the reinforcement learning model for retention recommendation is defined by the following key components:

- States (S): In retention recommendation systems, the states represent all the possible situations the agent may encounter while interacting with the environment. In a student retention problem, for example, a state might represent a student profile in terms of academic performance, levels of engagement, and participation in extracurricular activities. For customer retention, it can include purchasing behavior, satisfaction score, and frequency of service usage.

- Actions (A): Actions are the set of retention strategies available to the agent in each state. Such actions may include interventions in the form of customized emails, offers, counseling sessions, or incentives aimed at improving retention. The selected action determines the outcome, such as whether a student stays enrolled or a customer stays with a brand.

- Rewards (R): Rewards are the numeric signals that the environment sends to the agent, contingent on the outcomes of the actions taken. A positive reward indicates that an action succeeded in retaining a person, for example a student deciding to continue her education or a customer renewing a subscription. A negative reward represents instances when retention did not occur, for example a student dropping out or a customer cancelling a service.

- Policy (π): The policy is the decision-making strategy followed by the agent to select the most promising action in any given state. Here, the retention policy maps the current state (such as the characteristics of students or customers) to the recommended action (a retention intervention). The agent updates its policy through interaction with the environment, trying to maximize long-term retention outcomes.

The Bellman equation is an important idea in RL. It relates a state’s value to the projected future rewards associated with that state. It asserts that the value of a state \(\:W\left(s\right)\), given by Eq. (27), corresponds to the immediate reward \(\:T\left(s,a\right)\) gained for performing an action (\(\:a\)) within that state plus the discounted expected value of the following state. The discount factor (\(\:\gamma\:\)) is a value between 0 and 1 that indicates how strongly the agent weights future rewards relative to immediate rewards.

Here, \(\:F\left[W{\prime\:}\left(S{\prime\:}\right)\right]\) denotes the expected value of the next state, taking into account all possible future states that could result from action (\(\:a\)) in state (\(\:s\)). The outcomes of the proposed retention recommendation model are shown in Fig. 9.
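The Bellman relationship can be illustrated with a minimal tabular Q-learning update. This is a deliberate simplification: a DQN replaces the table with a neural network, and the state names, action names, learning rate, and reward values below are hypothetical and not taken from the paper.

```python
def q_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Move Q(state, action) toward the Bellman target.

    target = immediate reward + gamma * best estimated value of the next state
    """
    best_next = max(q_table[next_state].values())
    target = reward + gamma * best_next
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]

# Hypothetical retention setting with two student states and two interventions.
actions = ("email", "counseling")
q_table = {s: {a: 0.0 for a in actions} for s in ("at_risk", "engaged")}

# An at-risk student receives counseling, becomes engaged, and stays (reward 1).
new_value = q_update(q_table, "at_risk", "counseling", reward=1.0, next_state="engaged")
```

With all values initialised to zero, the first update moves the estimate by `alpha` times the reward, so repeated positive outcomes gradually make counseling the preferred action for at-risk students.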

The graphical results for key scenarios in the DQN-based retention recommendation stage are shown in Fig. 10.

- Scenario 1 - High Reward (Stayed): This scenario reflects students who had consistently high rewards from the model, indicating positive reinforcement and engagement, leading to retention.

- Scenario 2 - Medium Reward (At Risk): In this scenario, the rewards are moderate, reflecting students who are on the verge of leaving but still have a chance to stay with some intervention.

- Scenario 3 - Low Reward (Dropped): This scenario reflects students who received low rewards, indicating disengagement or poor outcomes, leading to them dropping out.

Result and discussion

The proposed student enrolment prediction and retention recommendation model has been implemented in Python. The data has been collected from https://www.kaggle.com/datasets/thedevastator/university-student-enrollment-data. The dataset was split into two subsets: the training set made up 70% of the dataset, while the testing set included the remaining 30%. These subsets were employed for the construction and validation phases, respectively. Various error measures, such as mean absolute error (MAE) and root mean squared error (RMSE), were utilized to evaluate the performance of the DeepEnrollNet model in relation to the most advanced methodologies.
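The 70/30 partition can be sketched as follows. This is a minimal illustration that assumes a random shuffle before splitting; the source does not say whether the split was random, stratified, or chronological, and the function name and seed are hypothetical.

```python
import random

def train_test_split(rows, train_fraction=0.7, seed=42):
    """Shuffle the rows reproducibly and cut them into train and test subsets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical dataset of 100 record identifiers.
train_rows, test_rows = train_test_split(range(100))
```

Calling the function with `train_fraction=0.8` gives the 80/20 partition used in the second set of experiments.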

R2

Also known as the coefficient of determination, R-squared is a metric based on statistics that is commonly employed to measure how much of the variation of the model’s dependent variable can be expressed by the independent variables in the model. Effective explanations of the observed variability in enrolment or retention variance are encapsulated in high R-squared values, hence serving as a key measure when analysing the explanatory power of a model.

Mean squared Error

The average of the errors (more specifically the squares of the errors) is known as the mean squared error (MSE), and it gives an idea as to the size of the prediction errors. With respect to the numbers predicted by the model, lower values of MSE signify more precision in prediction because smaller error values mean that the estimated values are closer to the actual values. Also, this measurement tends to penalize large errors more than small errors, which makes it possible to spot large errors within the predicted values compared to the actual values.

Mean Square root error

MSRE is like MSE but applies more importance to the transformation of the dependent variable to its root form, which helps to minimize the effect of outliers while assessing the model. This measure becomes important where the need is to explain the variations in error less and seek the accuracy of prediction in a more distinct way.

Normalized mean squared error (NMSE)

It is a more general metric: it is non-negative and, for a model that performs at least as well as predicting the mean, less than or equal to one, since it relates the residual sum of squares to the total sum of squares. This allows for reasonable comparisons of performance even when the scale or type of the data being predicted changes drastically, for example in a prediction task with varied distributions of prediction features.

Root mean square error

It is simply the square root of MSE, meaning it gives an error measure in the same unit as the target variable. This makes RMSE more practical to interpret as an indicator of the model’s prediction accuracy, with lower values indicating predictions closer to the real data.

MAPE

It refers to the average absolute percentage error of predicted values against real ones. MAPE is helpful because it expresses the model’s prediction errors in relative terms. Because MAPE gives the error as a percentage, it is helpful for comparing the performance of the model across targets of different magnitudes.
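The metrics described above can be computed with a short Python sketch. The definitions used here are assumptions where the source is not explicit: NMSE is taken as MSE divided by the variance of the actual values, MSRE as the mean squared relative error, and MAPE is returned as a fraction rather than a percentage. The function name and sample values are hypothetical.

```python
import math

def regression_metrics(actual, predicted):
    """Compute R2, MSE, MSRE, NMSE, RMSE and MAPE for paired values.

    Assumes every actual value is non-zero and the actual values are not constant.
    """
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mean_actual = sum(actual) / n
    mse = sum(e * e for e in errors) / n
    variance = sum((a - mean_actual) ** 2 for a in actual) / n
    return {
        "R2": 1.0 - mse / variance,  # 1 - SS_res / SS_tot
        "MSE": mse,
        "MSRE": sum((e / a) ** 2 for e, a in zip(errors, actual)) / n,
        "NMSE": mse / variance,
        "RMSE": math.sqrt(mse),
        "MAPE": sum(abs(e / a) for e, a in zip(errors, actual)) / n,
    }

# Hypothetical enrolment counts and predictions.
metrics = regression_metrics([100.0, 200.0, 300.0], [110.0, 190.0, 300.0])
```

Under these definitions NMSE and R2 sum to one, so a model with a high R2 necessarily has a low NMSE.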

The AUC-ROC analysis of DeepEnrollNet model for 70 and 80% of variation in training rate is shown in Fig. 11.

Performance evaluation of proposed feature selection model in enrolment detection over existing model: with 70% learning rate

Table 2 presents a comparison of various performance metrics for five different algorithms: IEPO, TLBO, EMA, EPO, and DeepEnrollNet. MSE is defined as the mean of the squared residuals or errors (deviations), where a lower MSE suggests higher accuracy of the model. The DeepEnrollNet has the lowest MSE of 0.234643, meaning that it has the smallest average squared difference between the predicted and actual values. EMA has the second-lowest MSE at 0.32342, followed by TLBO, EPO, and IEPO. MSRE is the relative squared error, which measures the error from a different angle. As with MSE, the DeepEnrollNet has the lowest MSRE of 0.24323, meaning that it has the least average squared relative error. EMA secures the second-lowest MSRE with a value of 0.33243, followed closely by TLBO, EPO, and IEPO. The NMSE for the DeepEnrollNet is the lowest at 0.21134, which implies that it attains the minimum average squared error normalized by the variance of the data. EMA secures a value of 0.34432, while TLBO, EPO, and IEPO are all at very similar values.

The DeepEnrollNet has the lowest RMSE of 0.231467, which indicates that the root of the average squared error is small. This low RMSE supports the claim that the proposed model is highly accurate in predicting the enrollment values. EMA provided the second-lowest RMSE of 0.36536, followed by the EPO, IEPO, and TLBO algorithms. The Mean Absolute Percentage Error assesses the absolute deviation in percentage terms between forecasted and observed values, where lower values equate to better precision. The DeepEnrollNet has the lowest MAPE of 0.212345, meaning that it has the least average absolute percentage error. EMA provided the second-lowest MAPE of 0.38786, followed by the EPO, TLBO, and IEPO algorithms.

The performance of the proposed model is consistently superior to that of all the other algorithms across the performance metrics. A higher R2 indicates a better model fit. The proposed model attained the highest R2 of 0.98263, which is greater than that of IEPO (0.81263), TLBO (0.82334), EMA (0.87363), and EPO (0.91623). This indicates that the proposed model accounts for almost all the variation in the enrolment data, which reflects its predictive strength. All other models were inferior in every respect, as the proposed model recorded the highest R2 together with the lowest MSE, MSRE, NMSE, RMSE and MAPE. The result means that the proposed model, in addition to offering a better explanation of the data, also reduces both absolute and relative predictive errors, making it very suitable for enrolment detection at a 70% learning rate. Consistent results across such diverse measures demonstrate the efficiency of the selected-feature-based prediction approach over previous methodologies for student enrolment and retention prediction.

Performance evaluation of proposed feature selection model in enrolment detection over existing model: with 80% learning rate

Table 3 compares the performance of five different algorithms using various metrics for a learning rate of 80%. The enrollment detection model put forward here performs remarkably well on all performance indexes, clearly outdoing the rival models (IEPO, TLBO, EMA and EPO) in terms of both predictive power and error. R-squared values indicate the proportion of variance explained by the model. The DeepEnrollNet achieves the highest R² of 0.972632, suggesting it explains approximately 97.26% of the variance in the data, which indicates a very strong fit. EPO follows with an R² of 0.9123, also showing a good fit, while IEPO has the lowest at 0.8242, indicating it explains only 82.42% of the variance.

MSE measures the average squared difference between predicted and actual values. The DeepEnrollNet again performs best with the lowest MSE of 0.22133, indicating minimal error in predictions. EPO, with an MSE of 0.34542, shows a moderate performance, while TLBO and IEPO have higher MSE values, suggesting greater prediction errors. MSRE provides insight into the relative error of the predictions. The DeepEnrollNet has the lowest MSRE at 0.23235, indicating it has the smallest average squared relative error. EPO and TLBO show better performance than IEPO, which has the highest MSRE of 0.423454. NMSE normalizes the MSE by the variance, providing a relative measure of error. The DeepEnrollNet leads with an NMSE of 0.21342, indicating a strong performance. TLBO and EPO also perform well, while IEPO has the highest NMSE, suggesting it has the least favorable performance in terms of normalized error.

RMSE is another measure of prediction error, providing a more interpretable metric by returning to the original units of the data. The DeepEnrollNet has the lowest RMSE of 0.248786, indicating it has the smallest average error in predictions. EPO and TLBO show competitive RMSE values, while IEPO has the highest RMSE, reflecting its overall poorer performance. MAPE measures the average absolute percentage error. The DeepEnrollNet again performs best with a MAPE of 0.23786, indicating it has the smallest average percentage error. EPO and TLBO show reasonable performance, while IEPO has the highest MAPE, indicating less accuracy in its predictions. The graphical outcomes are shown in Fig. 12.

This model demonstrates consistent efficiency in error minimization, offering dependable predictions that do not stray much from the observed values. The close approximation to the actual data and the ability to deal with both absolute and relative errors indicate that the model can be readily applied in the real-world context of enrolment forecasting. Moreover, the model’s lower sensitivity to variability makes it reliable for supporting the strategic planning of enrolment and retention, making it a valuable asset for organizations that seek to base decisions on data rather than guesswork. The model’s overall ability to detect enrolment trends accurately marks a significant improvement in both predictive stability and accuracy.

Performance evaluation of proposed deep learning (prediction) model over existing model: with 70% learning rate

Table 4 illustrates the deep learning-based analysis at a 70% learning rate. The DeepEnrollNet consistently outperformed all other deep learning models (CNN, MLP, LSTM, and DNN) across all evaluation metrics (R², MSE, MSRE, NMSE, RMSE, and MAPE) when using 70% of the learning data. This indicates its robustness and effectiveness in capturing the underlying patterns in the data while minimizing prediction errors.

The DeepEnrollNet exhibits the highest R² value of 0.9764, indicating an exceptional fit to the data: the model explains a significant portion of the variance in the dependent variable, outperforming all other models. This is well above the values of models such as BayesNet15 (R² = 0.7852), Naive Bayes16 (R² = 0.7923), SVM23 (R² = 0.8214) and ID3 + SVM24 (R² = 0.8556). These models exhibit much lower R² values, making them less reliable for prediction since they account for less variability in the data.

The DeepEnrollNet achieves the smallest MSE of 0.218978, which means it has a lower prediction error than any other model. This is critical where precision matters, as lower MSE values indicate more reliable predictions. By comparison, BayesNet15 had an MSE of 0.5121, Naive Bayes16 had an MSE of 0.4794, and SVM23 reported an MSE of 0.4321, all indicating larger prediction errors. Similarly, ID3 + SVM24 had an MSE of 0.469, which is still much higher than the DeepEnrollNet MSE. The DeepEnrollNet achieves the lowest NMSE of 0.21232, indicating superior performance in terms of normalized prediction accuracy, which accounts for the scale of the data. The DeepEnrollNet has again outperformed every other model with an MSRE of 0.216445, suggesting it is the best at reducing relative prediction errors. Other models, such as BayesNet15 (MSRE = 0.4965) and Naive Bayes16 (MSRE = 0.4634), have shown comparatively higher MSRE values, which means they are less effective in minimizing errors. SVM23 (MSRE = 0.4203) and ID3 + SVM24 (MSRE = 0.4485) are a bit better, but they still fall short of the performance of the DeepEnrollNet.

RMSE is a widely used metric for assessing the accuracy of continuous predictions. The DeepEnrollNet has the lowest RMSE of 0.24345, reinforcing its effectiveness in minimizing prediction errors. Other models such as BayesNet15 (RMSE = 0.4514), Naive Bayes16 (RMSE = 0.4365), SVM23 (RMSE = 0.4775) and ID3 combined with SVM24 (RMSE = 0.4613) exhibit higher RMSE values, indicating larger prediction errors. The DeepEnrollNet also leads with the lowest MAPE of 0.234532, indicating the best performance in terms of percentage error, which is particularly useful for understanding the accuracy of predictions in a relative sense.

The fact that this model reduces both absolute and relative errors means that the quality of prediction has improved significantly, enabling accurate enrolment projections that correspond to the actual figures. This consistent and reliable performance across all evaluation metrics makes the proposed model very useful in enhancing decision-making processes within an educational system based on empirical data. Its improved architecture supports the delivery of accurate and timely information for the management of enrollments, in a better manner than conventional models.

Performance evaluation of proposed deep learning (prediction) model over existing model: with 80% learning rate

The suggested deep learning structure is superior when evaluated on an 80% learning split dataset against architectures such as CNN, MLP, LSTM, DNN, BayesNet15, Naive Bayes16, SVM23, ID3 + SVM24, and the PROPOSED. It has a very high R-squared (R²) value which indicates resilience in predictive power as it captures almost all the changes in the enrolment data variances implying a very good fit. For instance, the model under consideration always presents the lowest error rates when other models are compared using the standard metrics such as Mean Squared Error (MSE), Mean Squared Relative Error (MSRE), Normalized Mean Squared Error (NMSE), Root Mean Squared Error (RMSE), and Mean Absolute Percentage Error (MAPE). The remarkably low error rates suggest that the model has effectively reduced both absolute and relative differences, thus ensuring that the predicted values of enrolment closely correspond to the real values. Table 5 represents the deep learning-based analysis of 80% learning rate. It achieves this with the DeepEnrollNet al.ways outperforming all other deep learning models, CNN, MLP, LSTM, and DNN, using 80% of the learning data in terms of all the metrics R², MSE, MSRE, NMSE, RMSE, and MAPE. This suggests that the robust and effective algorithm captures the underline pattern in the data effectively along with a minimum prediction error. The R² maximum value, for the DeepEnrollNet, has the best value at 0.98765. The DeepEnrollNet reaches an all-time high R² of 0.98765, indicating that the model explains most of the variance present in the data under consideration. Other models, such as CNN (R² = 0.8764), MLP (R² = 0.90355), and LSTM (R² = 0.94353), have lower but close values, indicating that the DeepEnrollNet has a better capability of modeling the data than the rest. 
Among the traditional models used, the following have even lower R² values suggesting poor fits- BayesNet15 (R² = 0.7852), Naive Bayes16 (R² = 0.7923), SVM23 (R² = 0.8214) and ID3 + SVM24 (R² = 0.8556). This would, therefore, explain a high percentage of the variance and be higher compared to other models. The DeepEnrollNet has the minimum MSE of 0.218978, which means that it generated the best performance for the minimization of prediction errors. DeepEnrollNet realized the least MSE of 0.218978, which means it excels in prediction error mitigation. Other models such as CNN (MSE = 0.42323), MLP (MSE = 0.29876), and LSTM (MSE = 0.37654) report higher error values. Among other existing models, BayesNet15 recorded an MSE of 0.5121, Naive Bayes16 had an MSE of 0.4794, SVM23 reported an MSE of 0.4321 while ID3 + SVM24 had an MSE of 0.469, which all have greater error margins showing how better DeepEnrollNet deals with errors. The DeepEnrollNet achieves a NMSE of 0.232453, indicating strong performance in terms of normalized prediction accuracy, although it is higher than some other metrics, it is still the lowest among the models. The PROPOSED model has the lowest RMSE of 0.23213, reinforcing its effectiveness in minimizing prediction errors. RMSE is a critical metric for assessing the accuracy of continuous predictions. With a MAPE of 0.218754, the DeepEnrollNet al.so leads in this metric, indicating the best performance in terms of percentage error, which is particularly useful for evaluating prediction accuracy in a relative context. The DeepEnrollNet achieves the lowest Root Mean Square Error (RMSE) value of 0.23213, indicating its excellence in the ability to predict performance metric which is very vital for assessing the accuracy of continuous predictions. Other models, such as: CNN model (RMSE = 0.35664), MLP model (RMSE = 0.32345), and LSTM model (RMSE = 0.398988) provided higher RMSE values. 
Similarly, the RMSE values of BayesNet15 (0.4514), Naive Bayes16 (0.4365), SVM23 (0.4775), and ID3 + SVM24 (0.4613) are all higher, further supporting the superiority of DeepEnrollNet. The acquired outcomes are visualized in Fig. 13.
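For reference, the error metrics discussed above can be computed as in the following minimal Python sketch. The formulas for MSRE and NMSE are assumptions (common textbook definitions), since the exact definitions used in the study are not stated in this section; values reported in the text therefore need not satisfy the identities these particular definitions imply. The sample values are hypothetical and are not drawn from the study's dataset.

```python
import math

def regression_metrics(actual, predicted):
    """Return R2, MSE, MSRE, NMSE, RMSE and MAPE for two equal-length lists."""
    n = len(actual)
    mean_actual = sum(actual) / n
    pairs = list(zip(actual, predicted))
    mse = sum((a - p) ** 2 for a, p in pairs) / n
    # Population variance of the actual values, used by R2 and NMSE.
    var_actual = sum((a - mean_actual) ** 2 for a in actual) / n
    return {
        "R2": 1.0 - mse / var_actual,
        "MSE": mse,
        # Assumed definition: mean of squared errors relative to the actual value.
        "MSRE": sum(((a - p) / a) ** 2 for a, p in pairs) / n,
        # Assumed definition: MSE normalized by the variance of the actual values.
        "NMSE": mse / var_actual,
        "RMSE": math.sqrt(mse),
        # Returned as a fraction (not multiplied by 100).
        "MAPE": sum(abs((a - p) / a) for a, p in pairs) / n,
    }

# Hypothetical enrolment counts for illustration only.
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]
metrics = regression_metrics(actual, predicted)
```

MSRE and MAPE divide by the actual value, so this sketch assumes that no actual value is zero.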

As these metrics indicate, the proposed model is both reliable and stable, and can therefore be deemed fit for enrollment forecasting. The consistency across error metrics supports the model's use for forecasting, which adds value for educational institutions that aim to base their decisions on projected enrollment data. On these measures, the proposed model outperforms the traditional deep learning models considered in this study.

Analysis on retention recommendation based on DQN

The bar chart represents the retention recommendation results based on DQN for three key scenarios:

1. High reward (Stayed): The students in this group are more likely to stay, with a reward value of 85, shown in green.
2. Medium reward (At Risk): These students are at risk but still have potential for retention, with a reward value of 60, shown in orange.
3. Low reward (Dropped): These students are less likely to stay, with a reward value of 20, shown in red.

The outcomes acquired are shown in Fig. 14.
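To make the role of these reward values concrete, the following minimal Python sketch shows how a reward enters the standard temporal-difference target that a DQN regresses its Q-values toward, and how a recommendation can be read off as the action with the highest Q-value. Only the three reward values come from the text; the discount factor, the action names, and the Q-value estimates are hypothetical, and the sketch does not reproduce the study's network or training procedure.

```python
# Reward values reported for the three outcome groups in the text.
REWARDS = {"stayed": 85.0, "at_risk": 60.0, "dropped": 20.0}

def td_target(reward, next_q_values, gamma=0.9, terminal=False):
    """Standard DQN target: r + gamma * max over a' of Q(s', a')."""
    if terminal:
        # No future return is added once the episode has ended.
        return reward
    return reward + gamma * max(next_q_values)

def recommend(q_values):
    """Return the action name with the highest estimated Q-value."""
    return max(q_values, key=q_values.get)

# Hypothetical Q-value estimates for one at-risk student; the action names
# and numbers are illustrative assumptions, not results from the study.
q_at_risk = {
    "academic_advising": 72.0,
    "financial_support": 78.5,
    "no_action": 41.0,
}
best_action = recommend(q_at_risk)
target = td_target(REWARDS["at_risk"], next_q_values=[70.0, 80.0, 50.0])
```

In this example the recommended action is `financial_support`, and the target combines the at-risk reward of 60 with the discounted best next-state value.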

Discussion

This discussion sheds light on how advanced deep learning techniques such as DeepEnrollNet, used within predictive analytics frameworks, may influence policy and decision-making in higher education institutions. The following subsections set out further perspectives on the impact of implementing such models.

Advanced data-driven decision making

With the precision and forecasting capabilities of DeepEnrollNet, institutions can make more proactive and better informed decisions for a wide array of strategic purposes. Predictive insights enable administrators to adjust academic offerings, staffing, and resources in step with enrollment trends. Planning of educational programs thus shifts from reactive responses to proactive strategies, which allow academic institutions to cope more flexibly with the changing needs of students, the funds available, and other aspects of the higher education environment.

Improved resource allocation and strategic infrastructure development

A deeper understanding of enrollment trends through data analytics enables institutions to allocate resources more systematically in both time and place. For instance, by considering historical data alongside future expectations, institutions can avoid overinvesting in redundant or unsustainable infrastructure or, conversely, can build flexibility into their expansion strategies so that capacity can be added quickly where required. Prediction is most useful where several campus facilities and human resources must be managed together, for example student housing, dining facilities, classrooms, and faculty recruitment.

Personalized retention and intervention strategies

Given DeepEnrollNet’s predictive precision, institutions can design more effective retention plans aimed at the students most likely to drop out rather than at students in general. By knowing which variables are most likely to cause students to leave, institutions can provide targeted interventions, ranging from academic advising to economic support, that address student attrition. Tailored retention strategies affect more than an institution’s retention rates; they create a supportive environment in which students with different needs can learn, strengthening the institution’s ability to produce graduates year after year.

Strategic planning and policy development for institutional growth

With predictive analytics supporting enrollment estimates, institutions are better able to perform strategic planning. For example, expected changes in student numbers can inform the timing of investments in new areas, such as e-learning or new degree programs driven by changing markets. Predictive models help align these strategies with future enrollment trends, supporting the institution's development and its international strategies. Institutions can therefore make informed investments in the most promising sectors, which improves their competitiveness and promotes innovation.

Financial planning and sustainability

Precise enrollment estimates help educational institutions prepare more plausible financial forecasts. With a clearer view of future tuition income, institutions can create sustainable budgets, minimizing the need for contingencies or unexpected budget cuts. Furthermore, forecasting and planning help prioritize investments such as financial aid, faculty hiring, and infrastructure development. This integrates finances with academic and operational planning, supporting sound financial management and the achievement of longer-term institutional objectives.

Enhancing institutional reputation and academic quality

The use of predictive models promotes consistent and strategic decision making, which contributes to a better student experience. By responding efficiently to students' needs, institutions can raise satisfaction and academic performance, which are critical determinants of institutional ranking. Such operational strength and positive reputation support the enrolment of new students and the retention of existing ones, and therefore growth in the institution's brand value.

Enhanced precision in enrollment forecasting

Institutions can predict future admission trends considerably better when they use more advanced models such as DeepEnrollNet, which outperforms standard models on R², MSE, MSRE, NMSE, RMSE, and MAPE. This precision informs specific policies that take into account annual, seasonal, or cyclical variations in enrollment and allows for planning that is flexible and quick to change. In practical terms, this helps the institution manage the effects of changes in student enrollment and target its resources more effectively. For instance, assessing likely changes in enrollment trends from past information and present conditions helps departments prepare for increases or decreases, which in turn assists in managing personnel, classroom resources, and finances during the year.

Continuous feedback loop for institutional improvement

Implementing predictive analysis makes it possible to create a feedback loop within institutions that improves their policies and strategies over time. As institutions collect more data, the predictive models can be updated. Such a process is particularly relevant for institutions that wish to keep up with trends in the education sector, new technological advances, and the demands of students and the employment sector as a whole. Overall, incorporating such advanced deep neural networks can enhance decision-making capacity across different strategic aspects of the institution. Through more precise predictions, services better tailored to students, better management of available resources, and gradual growth in institutional budgets, the complexities facing institutions are better addressed. This supports institutional expansion and success and also improves education and the student experience, which benefits institutions' survival and innovation in the global education sector.

Conclusion

This study presents a robust framework for predicting student enrollment and enhancing retention strategies by integrating advanced deep learning models and novel optimization techniques. The use of both numeric and text data, coupled with sophisticated preprocessing and feature extraction methods, enables a comprehensive understanding of enrollment patterns and student behavior. By leveraging K-Nearest Neighbors (KNN) imputation, Z-score normalization, and advanced text processing techniques, the framework ensures high-quality data input. The application of word embeddings, topic modeling, and sentiment analysis enriches feature representation, while the Pythagorean fuzzy AHP with a hybrid optimization approach, Instructional Emperor Pigeon Optimization (IEPO), which combines Teaching-Learning-Based Optimization (TLBO), the Exchange Market Algorithm (EMA), and Emperor Pigeon Optimization (EPO), enhances feature selection. The DeepEnrollNet model, incorporating CNN-GRU-Attention QCNN, provides accurate enrollment predictions, while Deep Q-Networks (DQN) offer actionable insights for improving student retention. This integrated approach not only improves predictive accuracy but also provides effective strategies for optimizing student success and institutional planning, offering valuable contributions to the field of educational data science.
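As an illustration of the numeric preprocessing steps named above, the following minimal Python sketch applies a simplified KNN imputation followed by Z-score normalization. It is a sketch under stated assumptions rather than the study's implementation: the distance is a plain Euclidean distance over the columns that both rows have observed, the value of k and the sample records are hypothetical, and library implementations may differ, for instance in how distances are scaled when coordinates are missing.

```python
import math

def knn_impute(rows, k=2):
    """Fill None entries with the column mean over the k nearest rows.

    Simplified variant: distance is Euclidean over the columns that both
    rows have observed. An entry stays None if no neighbour is usable.
    """
    filled = [list(r) for r in rows]
    for i, row in enumerate(rows):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = []
            for m, other in enumerate(rows):
                if m == i or other[j] is None:
                    continue
                shared = [(a, b) for a, b in zip(row, other)
                          if a is not None and b is not None]
                if not shared:
                    continue
                dist = math.sqrt(sum((a - b) ** 2 for a, b in shared))
                candidates.append((dist, other[j]))
            candidates.sort(key=lambda c: c[0])
            nearest = [v for _, v in candidates[:k]]
            if nearest:
                filled[i][j] = sum(nearest) / len(nearest)
    return filled

def z_score(column):
    """Standardize values to zero mean and unit population standard deviation."""
    mean = sum(column) / len(column)
    std = math.sqrt(sum((x - mean) ** 2 for x in column) / len(column))
    return [(x - mean) / std for x in column]

# Hypothetical records (credits attempted, GPA); None marks a missing GPA.
records = [(12.0, 3.0), (15.0, 3.6), (13.0, None), (30.0, 2.0)]
imputed = knn_impute(records, k=2)
gpa_z = z_score([r[1] for r in imputed])
```

Here the missing GPA is filled with the mean of the two records closest in credits attempted, and the resulting GPA column is then standardized. The `z_score` helper assumes the column is not constant, since a zero standard deviation would cause a division by zero.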

Key contributions of this study are:

- Introduced an integrated framework combining deep learning and recommender systems for accurate student enrollment prediction and effective retention strategies.
- Developed a hybrid optimization technique (IEPO), merging TLBO, EMA, and EPO, for enhanced feature selection using Pythagorean fuzzy AHP, which improved model efficiency and accuracy.
- Designed the DeepEnrollNet model, featuring CNN-GRU-Attention QCNN layers, to capture complex temporal and spatial patterns in enrollment data.
- Applied Deep Q-Networks (DQN) to generate actionable, data-driven retention recommendations based on student performance and engagement.
- Employed a weighted feature fusion approach to integrate numeric and text-based data, ensuring robust feature representation and prediction accuracy.
- Validated the framework’s effectiveness in a real-world educational setting, demonstrating its practical application and adaptability for other institutions facing similar enrollment and retention challenges.

The limitations of this research must be acknowledged, as doing so supports a more critical reading and appropriate expectations regarding its possible utility. The size and scope of the dataset used in this study are restrictive, in that it may not capture all the variations in enrollment patterns across different schools and regions. Although the general approach was designed to limit overfitting to the present dataset, the present formulation may not transfer to datasets with different demographics, different types of institutions, or completely different structures from the one used here. Moreover, the particular choice of features and hyperparameters may be difficult to replicate on other datasets, as it was tuned for this one alone. Addressing these limitations not only highlights areas for improvement but also underscores the importance of testing the model on diverse datasets to validate its generalizability and adaptability to various educational environments.

Future work could include situational awareness analysis, which would enable institutions to act on changing patterns in student behavior, for instance among new students and retained students. Embedding adaptive learning algorithms could also help create retention policies tailored to students' individual academic progress and engagement. Furthermore, the framework could be adapted to different types of educational environments, for example e-learning sites or technical institutes, where the patterns of enrollment and the attributes of retention can be completely different.

These possible use cases highlight the significance of this research, as it offers an actionable approach that can be deployed at low cost across different institutions to improve the use of human and other resources. Further research could examine how other types of data, such as social and behavioral data, can improve the accuracy of predictions and retention recommendations. This would build on the progress made here towards predictive modeling and retention techniques for effective use in educational data science.

Data availability

The datasets used in this study were collected from https://www.kaggle.com/datasets/thedevastator/university-student-enrollment-data.

Abbreviations

- KNN: k-nearest neighbours
- SOTA: State-of-the-art approaches
- TLBO: Teaching-learning-based optimization
- DL: Deep learning
- EMA: Exchange market algorithm
- EPO: Emperor pigeon optimization
- IEPO: Instructional emperor pigeon optimization
- DQN: Deep Q-Network
- SIS: Student information system
- AI: Artificial intelligence

References