Abstract

Accurate identification of soybean leaf diseases is essential to improving soybean quality and yield. To address the overfitting and poor recognition ability that can result from insufficient training data, this paper proposes a hypergraph cell membrane computing network model for soybean disease identification (HcmcNet). The main components of HcmcNet are the pyramid convolutional feature extraction membrane, the ordinary feature extraction membrane, the U-type feature extraction membrane, and the dynamic attention membrane. The three parallel feature extraction membranes are designed to improve the model’s ability to capture disease features. The dynamic attention membrane enhances the model’s expressiveness and performance by dynamically adjusting the attention weights of the three feature extraction membranes so that the disease features are fused effectively. A dataset of soybean leaf disease images was created and used for experiments. The experimental results show that HcmcNet achieves 98% accuracy on the test set and outperforms classical models on several evaluation metrics. Experiments on public datasets further show that HcmcNet is feasible for soybean leaf disease recognition, with higher classification accuracy and stronger generalization ability on small-sample datasets. HcmcNet therefore has promising application prospects in soybean leaf disease recognition.

Introduction

Soybean is a major source of edible oil, accounting for about 25% of the world’s total edible oil1. China is one of the largest soybean-producing countries in the world. As the population grows, the demand for soybeans as a major food and oil crop is also increasing2. Soybean diseases can cause serious yield reductions: the annual loss caused by diseases is about 10%, and severe losses can exceed 30%. A total of 120 soybean diseases have been reported worldwide, 52 of them in China. Among them, soybean leaf diseases are serious and common3. Therefore, to improve the yield and quality of soybeans and promote the development of intelligent agriculture, it is necessary to identify soybean leaf diseases4. In the early days, soybean leaf diseases were identified mainly by manual inspection5. This method requires farmers to have rich experience, is time-consuming and inefficient, and has a significant impact on productivity. With the emergence and development of artificial intelligence and computer vision technology, these methods have been successfully applied in different fields6,7,8. In recent years, with the development of intelligent agriculture, artificial intelligence and computer vision technology have been increasingly used in agriculture, for example in crop management9, pest and disease detection10, agricultural quality testing11, and automated agricultural machinery12.

Machine learning models and deep learning models are the main tools used in the field of disease recognition13,14. Earlier disease recognition was divided into two phases: disease features were first extracted using algorithms such as histogram of oriented gradients (HOG)15 and local binary pattern (LBP)16,17, and then classified using algorithms such as support vector machine (SVM)18 and artificial neural network (ANN)19. Because the features must be selected and designed manually, feature extraction is a difficult and time-consuming task, and the resulting models have poor versatility. Therefore, deep learning has become a research hotspot for recognizing leaf diseases20. Unlike traditional machine learning, deep learning can automatically extract disease features21, and its highly nonlinear modeling can improve model accuracy and provide stronger generalization ability22,23.

The above research shows that using deep learning models to identify soybean leaf diseases is currently the most popular research direction24. Membrane computing is a computing paradigm, proposed by Gh. Păun25, that is inspired by the structure and function of living cells and belongs to natural computing. Membrane computing is divided into two main types. One is cell membrane computing26, whose membrane structure is a tree. The other is tissue membrane computing27, whose membrane structure is a network similar to a graph. Neural membrane computing28 is a special form of tissue membrane computing. This paper designs a hypergraph cell membrane computing network model and uses it to conduct experiments on the soybean disease dataset. The results show that the model performs well on small-sample datasets. The main contributions of this study are as follows:

(1) Construct a soybean disease dataset of 1010 images, comprising 505 images of healthy plants and 505 images of diseased plants.

(2) Propose a hypergraph cell membrane computing network (HcmcNet), which has the membrane structure of hypergraph cell membrane computing and can perform parallel computing. To effectively extract soybean leaf disease features, we designed three feature extraction membranes that extract disease features in parallel: the pyramid convolutional feature extraction membrane (PCFE) uses pyramid convolution to capture multi-scale feature information, the ordinary feature extraction membrane (OFE) captures complex feature information by stacking convolution kernels of different sizes, and the U-type feature extraction membrane (UTFE) adopts a U-type structure with jump connections to retain shallow feature information while extracting advanced features and capturing contextual information.

(3) To pay more attention to the key disease features and effectively fuse the features of the three feature extraction membranes, we designed a dynamic attention membrane and proposed a dynamic attention mechanism. By dynamically adjusting the attention weights of the different feature extraction membranes, the model can effectively fuse their features.

(4) Conduct experiments on the dataset and compare HcmcNet with eight popular deep learning models at multiple scales. The results show that HcmcNet has a significant advantage in soybean leaf disease identification and better generalization ability on small-sample datasets.

Related work

Research on soybean leaf diseases has long been a focus of soybean disease identification, and deep learning is currently the most popular approach. Rajput et al. created a high-quality dataset of soybean disease images called SoyNet to address the challenges associated with soybean leaf recognition29. Sharma et al. proposed a new SoyaTrans model that combines a traditional CNN with a Swin Transformer network for efficient classification of real-field soybean datasets and other benchmark datasets30. Yu et al. proposed a soybean leaf disease recognition model based on an improved deep learning model to overcome the complexity of chemical analysis operations and the low accuracy of traditional deep learning models in identifying soybean leaf disease31. Some existing studies on soybean leaf disease identification have struggled to balance model performance and practical applicability. Adding an attention mechanism to the model is a good way to address this problem32, and a two-stage feature aggregation network framework (TFANet) has been proposed for this purpose33. Soybean mosaic virus (SMV) can reduce soybean production and cause huge losses, so early detection and treatment of this disease is particularly important. In this regard, an early detection method for SMV based on a convolutional neural network and support vector machine (CNN-SVM) has been proposed34. The CNN-SVM model reaches an accuracy of 96.67% on the train set and 94.17% on the test set. Soybean bacterial spot disease is another major factor affecting soybean quality and yield. Wang et al. proposed a CNN-based image recognition method for soybean leaves at different disease stages35. Bevers et al. developed a CNN-based automatic classifier for digital images of soybean diseases36. To train and verify the model, they obtained more than 9,500 original soybean images in eight categories and achieved an overall testing accuracy of 96.8% with the DenseNet201 model. Yu et al. proposed a lightweight soybean disease diagnostic model (RANet18) based on attention mechanisms and residual neural networks, achieving an accuracy of 96.50%. This model addresses the challenge of deploying deep learning-based soybean disease diagnostic models on portable mobile terminals37. Zhang et al. developed a synthetic soybean leaf disease image dataset to solve the problem of insufficient data. They also designed a multi-feature fusion Faster R-CNN model (MF3R-CNN) to address the poor accuracy of soybean leaf disease detection in complex scenes38, obtaining a best mean average precision of 83.34%. Bouacida et al. used a small Inception model architecture that was able to identify small-area disease features without compromising performance, achieving an accuracy of 94.04% on the PlantVillage dataset39. In addition, when the image has a complex background, most previous models cannot accurately identify the correct leaf disease40. The literature above shows that deep learning is widely applied in soybean disease recognition. However, the classification accuracy of these models can be affected by small datasets. To address this issue, we developed HcmcNet, which demonstrates excellent performance on smaller datasets. The model architecture is described in detail in the following sections.

Methods and materials

Hypergraph cell membrane computing

Cell membrane computing performs well in various fields. However, the membrane structure of classical cell membrane computing is a tree, and communication can only take place between directly connected membranes, which limits the ability of cell membrane computing to describe practical problems. A hypergraph is a topological structure that can describe complex, high-order relationships, so using hypergraph structures in cell membrane computing allows more complex relationships to be described. In graph theory, a hypergraph is a generalized graph in which a hyperedge can connect any positive number of vertices. A hypergraph H is an ordered pair \(H=\left( {V,E} \right)\), where V is the set of vertices and E is the set of hyperedges. Figure 1a shows the membrane structure and tree diagram of cell membrane computing, and Fig. 1b shows the membrane structure and tree diagram of hypergraph cell membrane computing. The thick red solid line in Fig. 1b is the hyperedge composed of nodes 2, 3, and 4 in the tree structure of hypergraph cell membrane computing. In the corresponding membrane structure, membrane 4 can exist in both membrane 2 and membrane 3, and the substances and information in membrane 4 can communicate with both membrane 2 and membrane 3. The hypergraph cell membrane computing structure is therefore richer and can describe more complex problems.
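The definition \(H=\left( {V,E} \right)\) can be illustrated with a small sketch. It is only an illustration under assumptions: the hyperedge {2, 3, 4} comes from the description of Fig. 1b, while the two ordinary edges {1, 2} and {1, 3} (a skin membrane 1 containing membranes 2 and 3) and the helper name `neighbours` are hypothetical and are not taken from the figure.

```python
# Vertices are membrane labels; every hyperedge is a non-empty set of vertices.
vertices = {1, 2, 3, 4}
hyperedges = [
    frozenset({1, 2}),     # assumed ordinary edge (membrane 2 inside membrane 1)
    frozenset({1, 3}),     # assumed ordinary edge (membrane 3 inside membrane 1)
    frozenset({2, 3, 4}),  # hyperedge described for Fig. 1b
]

# Sanity check of the definition: each hyperedge is a non-empty subset of V.
assert all(edge and edge <= vertices for edge in hyperedges)


def neighbours(vertex, edges):
    """Return every vertex that shares at least one hyperedge with `vertex`."""
    result = set()
    for edge in edges:
        if vertex in edge:
            result |= edge
    result.discard(vertex)
    return result


# Membrane 4 can communicate with both membrane 2 and membrane 3,
# which a plain tree (one parent per node) could not express.
print(sorted(neighbours(4, hyperedges)))  # [2, 3]
```

A single hyperedge joining three vertices is what distinguishes this structure from the tree in Fig. 1a, where every edge joins exactly two nodes.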

Model construction

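HcmcNet is formally defined as:

$$\Pi =\left( {V,O,H,\mu ,{\omega _1}, \cdots ,{\omega _7},{R_1}, \cdots ,{R_7},{i_0}} \right)$$(1)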

Where:

(1) V is a non-empty finite alphabet whose objects are the features of the image;

(2) \(O \subseteq V\) is the output alphabet, which holds the output results of the algorithm;

(3) H is a set of membrane labels, \(H=\left\{ {1,2, \cdot \cdot \cdot ,7} \right\}\);

(4) \(\mu\) is a membrane structure with m membranes, as shown in Fig. 2, \(m=7\);

(5) \({\omega _i}(1 \leqslant i \leqslant 7)\) represents the multiset of objects in region i, corresponding to the feature map composed of the features in each membrane;

(6) \({R_i}(1 \leqslant i \leqslant 7)\) is the set of evolution rules in each region of the membrane structure;

In HcmcNet, the multiset of objects in the region enclosed by each membrane can only communicate with adjacent membranes. Equation 2 shows the general form of the evolution rules and communication rules of each membrane.

$$r:u \to (v\left| A \right.,B)$$(2)

Where: u and v are multisets of objects in a membrane, that is, image feature maps; \(A \in \left\{ {{f_{PCFE}},{f_{OFE}},{f_{UTFE}},{f_{DAM}},fc} \right\}\) is the operation type, where \({f_{PCFE}}\), \({f_{OFE}}\), and \({f_{UTFE}}\) represent feature extraction operations, \({f_{DAM}}\) represents the dynamic attention mechanism, and \(fc\) is the fully connected operation; \(B \in \left\{ {in,out} \right\}\) is present only when a communication rule is executed, where \(in\) means that the generated object enters the sub-membrane of the current membrane and \(out\) means that the generated object enters the parent membrane of the current membrane. For example, when membrane \({\sigma _1}\) is contained in membrane \({\sigma _2}\), membrane \({\sigma _1}\) is called the daughter membrane (sub-membrane) of membrane \({\sigma _2}\), and membrane \({\sigma _2}\) is called the parent membrane of membrane \({\sigma _1}\).

(7) \({i_0}\) represents the label of the output membrane in the membrane system, \({i_0}=7\).
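The rule form \(r:u \to (v\left| A \right.,B)\) can be sketched as a small data structure. This is a minimal illustration rather than the authors’ implementation: the class names `Membrane` and `Rule`, the function `apply_rule`, and the use of strings in place of feature maps are all assumptions made for the example. The only structural fact taken from the text is that \({\sigma _1}\) is contained in \({\sigma _2}\), \({\sigma _3}\), and \({\sigma _4}\) at the same time.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Membrane:
    label: int
    parents: List[int] = field(default_factory=list)   # several parents are allowed in a hypergraph
    children: List[int] = field(default_factory=list)
    objects: List[object] = field(default_factory=list)  # the multiset omega_i


@dataclass
class Rule:
    operation: Callable[[object], object]  # operation type A
    target: Optional[str] = None           # B: "in", "out", or None (pure evolution rule)


def apply_rule(system: Dict[int, Membrane], label: int, rule: Rule) -> None:
    """Consume the objects u of one membrane and place the products v according to B."""
    membrane = system[label]
    produced = [rule.operation(u) for u in membrane.objects]
    membrane.objects = []
    if rule.target is None:
        membrane.objects = produced
    elif rule.target == "in":
        for child in membrane.children:
            system[child].objects.extend(produced)
    elif rule.target == "out":
        for parent in membrane.parents:
            system[parent].objects.extend(produced)
    else:
        raise ValueError(f"unknown target: {rule.target!r}")


# Hypothetical fragment of the structure: sigma_1 sits inside sigma_2, sigma_3 and sigma_4.
system = {
    1: Membrane(1, parents=[2, 3, 4]),
    2: Membrane(2, children=[1]),
    3: Membrane(3, children=[1]),
    4: Membrane(4, children=[1]),
}
system[1].objects.append("image")

# Communication rule with B = out: the input reaches all three parent membranes.
apply_rule(system, 1, Rule(operation=lambda u: u, target="out"))
# Evolution rule without B: the result stays in the membrane (placeholder for f_PCFE).
apply_rule(system, 2, Rule(operation=lambda u: f"f_PCFE({u})"))

print(system[2].objects, system[3].objects, system[4].objects)
```

Because a membrane may have several parents, one `out` rule is enough to feed the same input to the three feature extraction membranes in parallel.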

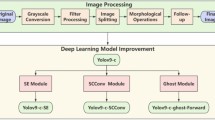

Figure 2 shows the membrane structure of HcmcNet. There are 7 membranes in the diagram: \({\sigma _1}\) is the input membrane, \({\sigma _2}\) is the pyramid convolutional feature extraction membrane (PCFE), \({\sigma _3}\) is the ordinary feature extraction membrane (OFE), \({\sigma _4}\) is the U-type feature extraction membrane (UTFE), \({\sigma _5}\) is the dynamic attention membrane, \({\sigma _6}\) is the fully connected membrane, and \({\sigma _7}\) is the output membrane. The main components of HcmcNet are the pyramid convolutional feature extraction membrane, the ordinary feature extraction membrane, the U-type feature extraction membrane, and the dynamic attention membrane. \({\sigma _1}\) is included in \({\sigma _2}\), \({\sigma _3}\), and \({\sigma _4}\) at the same time. To keep the diagram concise, it is drawn separately inside \({\sigma _2}\), \({\sigma _3}\), and \({\sigma _4}\) and represented by a dotted line. The three feature extraction membranes are used in parallel to extract soybean leaf disease features. Among them, PCFE uses pyramid convolution to capture multi-scale feature information, OFE increases the nonlinear representation of the model by stacking convolution kernels of different sizes, and UTFE employs a U-type structure to retain detailed shallow feature information while extracting advanced features and capturing contextual information. To improve recognition accuracy and focus on important feature information, we designed the dynamic attention membrane, in which the output feature maps of PCFE, OFE, and UTFE are dynamically weighted and then fused. By dynamically adjusting the attention weights of the different feature extraction membranes, the model can efficiently fuse their features and pass them to the fully connected membrane, which improves the expressive ability and performance of the model. Finally, the fully connected membrane obtains the final recognition result through the fully connected operation.

Pyramid convolutional feature extraction membrane (PCFE)

Soybean leaf diseases are diverse, and the lesions caused by different pathogens differ in size and shape. Pyramid convolution, through multi-scale convolution kernels, can attend to both fine details and global changes at the same time. Therefore, to enable the model to adequately extract features at multiple scales, enhance the ability of the network to understand and characterize soybean disease images, and improve the performance of the model on complex tasks, we designed the PCFE, as shown in Fig. 3.

Figure 3 shows the overall structure of PCFE. In PCFE, a \(3 \times 3\) convolution is applied first, followed by two pyramid convolutions that capture multi-scale feature information. A \(1 \times 1\) convolution is used before each pyramid convolution to reduce the number of channels, and a \(2 \times 2\) maximum pooling is used after it to reduce the feature map size, which helps to optimize the computational efficiency and the number of parameters of the network. The designed pyramid convolution contains three convolutional layers of different sizes, with the kernel size increasing from top to bottom: \(3 \times 3\), \(5 \times 5\), and \(7 \times 7\). The change in the size of the feature map can be observed in Fig. 3. When the size of the input feature map is \(227 \times 227\), the size after the PCFE is \(28 \times 28\).
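The reduction from \(227 \times 227\) to \(28 \times 28\) can be checked with simple size arithmetic. The sketch below rests on assumptions that the text does not state: that every convolution preserves the spatial size (stride 1 with "same" padding) and that the size is reduced by three unpadded \(2 \times 2\) poolings with stride 2. This is one combination consistent with the reported sizes, not necessarily the configuration drawn in Fig. 3; the function names are hypothetical.

```python
def pool_out(size, kernel=2, stride=2):
    """Output side length of an unpadded pooling layer."""
    return (size - kernel) // stride + 1


def trace_sizes(size, num_pools=3):
    """Side length after each of `num_pools` successive 2x2, stride-2 poolings."""
    sizes = []
    for _ in range(num_pools):
        size = pool_out(size)
        sizes.append(size)
    return sizes


sizes = trace_sizes(227)
print(sizes)  # [113, 56, 28]
```

Under these assumptions, three halvings with floor rounding take 227 to 113, 56, and finally 28, matching the output size reported for each feature extraction membrane.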

Ordinary feature extraction membrane (OFE)

Extracting local features of soybean leaf diseases, such as edges, texture, and color changes, is important for identifying tiny spots and local damage on soybean leaves. We designed the OFE by stacking ordinary convolution to fully capture local detail information and enhance the nonlinear representation of the network, as illustrated in Fig. 4.

Figure 4 shows the ordinary feature extraction membrane, in which the convolution kernel sizes include \(1 \times 1\), \(3 \times 3\), \(5 \times 5\), and \(7 \times 7\), and the pooling layers use \(2 \times 2\) maximum pooling. Large convolution kernels provide a large receptive field, which helps to extract complex disease features. The change in feature map size can be observed in Fig. 4. When the size of the input feature map is \(227 \times 227\), the size after the OFE is \(28 \times 28\).

U-type feature extraction membrane (UTFE)

Soybean leaf diseases manifest not only as color changes but may also include alterations in leaf texture and morphology. The U-shaped structure fuses low-level features extracted by the encoder (e.g., color, texture) with high-level abstract features (e.g., disease shape, type) through jump connections, helping the model capture both local details and global structural information. This multi-level feature fusion preserves rich, detailed information and enhances the ability of the model to characterize complex disease types, enabling it to better identify different kinds of soybean leaf diseases. Therefore, we designed UTFE, which employs a U-shaped structure and jump connections to directly link the bottom-level features with the top-level features, retaining more information about soybean leaf diseases and improving the model’s efficiency in using detailed and contextual information. Figure 5 illustrates the structure of UTFE.

As shown in Fig. 5, in the UTFE we designed a feature extraction module with a U-type structure including an encoder, decoder, and jump connections. A jump connection was introduced between the encoder and the decoder, where the feature maps of the corresponding layers in the encoder were directly connected to the corresponding layers in the decoder, thus preserving the detailed information of the soybean leaf disease and enabling the model to effectively learn the local and global information of the soybean leaf disease. From Fig. 5, we can see that when the size of the input feature is \(227 \times 227\), the size after the UTFE becomes \(28 \times 28\).

Dynamic attention membrane

In HcmcNet, to improve recognition accuracy and focus on important feature information, we design the dynamic attention membrane, in which we propose the dynamic attention mechanism (DAM). By dynamically modulating the attention weights of the output feature maps from PCFE, OFE, and UTFE, the significance of the output feature maps from various feature extraction membranes is adaptively tuned to enhance the representative capacity and efficacy of the model. The DAM is depicted in Fig. 6.

As shown in Fig. 6, DAM dynamically weights the features extracted by PCFE, OFE, and UTFE, flexibly adjusting the importance of the three feature extraction membranes. It then concatenates the three weighted feature maps, so that both local details and global contextual information are taken into account, and finally reduces the number of channels with a \(1 \times 1\) convolution to reduce the computation of the model. The dynamic attention mechanism can be described by Eq. 3.

$$F^{\prime}={f^{1 \times 1}}\left( {Concat\left( {{W_{PCFE}} \otimes {F^{PCFE}},{W_{OFE}} \otimes {F^{OFE}},{W_{UTFE}} \otimes {F^{UTFE}}} \right)} \right)$$(3)

Where: \(F^{\prime}\) is the output feature map of the dynamic attention membrane; \({f^{1 \times 1}}\) is the convolution operation of size \(1 \times 1\); \(Concat\) denotes the concatenation operation; \({W_{PCFE}}\), \({W_{OFE}}\), and \({W_{UTFE}}\) are the dynamic attention weights corresponding to PCFE, OFE, and UTFE; \({F^{PCFE}}\), \({F^{OFE}}\), and \({F^{UTFE}}\) are the output feature maps corresponding to PCFE, OFE, and UTFE; and \(\otimes\) is the matrix multiplication operation.

Dynamic attention weights W

The dynamic attention weight W is computed using the respective output feature maps of PCFE, OFE, and UTFE, with W primarily determined by the attention weight Y. Figure 7 illustrates the designed attention weight Y. The dynamic attention weights are iteratively updated following Eq. 4.

Where: \({W_j}\) represents the dynamic attention weights corresponding to PCFE, OFE, and UTFE, and \({Y_j}\) denotes the attention weight values corresponding to PCFE, OFE, and UTFE.

Figure 7 shows a schematic diagram of the calculation of the attention value Y for PCFE, OFE, and UTFE. First, global average pooling is applied to the feature map to obtain the average value of each channel. A sigmoid function is then applied to obtain the importance of each channel. Finally, the channel importances are averaged to obtain the attention weight Y of the feature extraction membrane. Equation 5 is the formula for calculating the attention weight Y.

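$${Y_j}=Avg\left( {\delta \left( {GAP\left( {{F^j}} \right)} \right)} \right)$$(5)

Where: \({F^j}\) is the output feature map corresponding to PCFE, OFE, or UTFE; \(Avg\) is the averaging operation over the features; \(\delta\) is the sigmoid function; and \(GAP\) is global average pooling.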
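The attention value and the weighted fusion described above can be sketched in plain Python. The sketch follows the stated order of operations for Y (global average pooling, sigmoid, average). Two points are assumptions: the weights W are obtained here by normalising the Y values so that they sum to one, which stands in for the iterative update of Eq. 4 whose exact form is not reproduced in this section, and \(\otimes\) is treated as scaling each feature map by its scalar weight. The final \(1 \times 1\) convolution is omitted, and all function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def attention_value(feature_map):
    """Y for one membrane. `feature_map` is a list of channels, each an H x W nested list."""
    # Global average pooling: one mean value per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Sigmoid gives a per-channel importance in (0, 1).
    gated = [sigmoid(p) for p in pooled]
    # Averaging the channel importances yields a single scalar Y.
    return sum(gated) / len(gated)


def dynamic_weights(ys):
    """ASSUMPTION: normalise Y so the weights sum to 1 (stand-in for Eq. 4)."""
    total = sum(ys)
    return [y / total for y in ys]


def fuse(feature_maps):
    """Scale each membrane's channels by its weight, then concatenate along channels."""
    ws = dynamic_weights([attention_value(f) for f in feature_maps])
    fused = []
    for w, fmap in zip(ws, feature_maps):
        for ch in fmap:
            fused.append([[w * v for v in row] for row in ch])
    return ws, fused


# Toy 2 x 2 feature maps standing in for the PCFE, OFE and UTFE outputs.
f_pcfe = [[[0.0, 0.0], [0.0, 0.0]]]
f_ofe = [[[10.0, 10.0], [10.0, 10.0]]]
f_utfe = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]

ws, fused = fuse([f_pcfe, f_ofe, f_utfe])
print(ws, len(fused))
```

In this toy input the strongly activated second map receives the largest weight, which is the intended effect of letting the weights depend on the feature maps themselves.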

Experiment data

The images used in the study show diseased and normal soybean plants collected locally in Huainan, Anhui Province, in 2023, photographed against the background of the real growing environment. The camera used was a Fuji X-A5 with a resolution of 4000 × 6000. The resulting dataset is named the soybean disease dataset. It contains 505 healthy samples and 505 diseased samples. The original images were preprocessed to remove low-quality images, such as those containing people or blur. The image size was converted to \(3 \times 227 \times 227\), and the images of each category were divided into a train set, validation set, and test set according to a certain proportion. Example images of the dataset are shown in Fig. 8, where (a) is an original healthy plant image, (b) is an original diseased plant image, (c) is a processed healthy plant image, and (d) is a processed diseased plant image. Table 1 shows the distribution of the soybean disease dataset by category: it lists the number of images in the train set, validation set, and test set, and the distribution of the two types of images across them.

Evaluation indicators

The evaluation indicators used in this paper include confusion matrix, classification accuracy, precision, recall, specificity, F1 score, PR curve, and ROC curve.

Confusion matrix

The confusion matrix is a table used to evaluate the performance of a classification model by comparing the predicted results of the model with the real results. For binary classification problems, it consists of four important indicators:

- True Positive (TP): the model correctly predicts a positive sample as positive.

- False Negative (FN): the model incorrectly predicts a positive sample as negative.

- False Positive (FP): the model incorrectly predicts a negative sample as positive.

- True Negative (TN): the model correctly predicts a negative sample as negative.

In this paper, a confusion matrix is used to assess whether soybeans are diseased or healthy, so the image of diseased plants is set as a positive case with a label of 0, and the image of healthy plants is a negative case with a label of 1.

Classification accuracy

Classification accuracy refers to the percentage of correctly classified samples among all samples. Equation 6 gives the classification accuracy calculated from the confusion matrix.

$$Accuracy=\frac{{TP+TN}}{{TP+TN+FP+FN}}$$(6)

Precision

Precision is the ratio of the number of correctly predicted positive cases to the total number of predicted positive cases, and measures how reliable the positive predictions of the model are. Equation 7 gives the precision calculated from the confusion matrix.

$$Precision=\frac{{TP}}{{TP+FP}}$$(7)

Recall

Recall, also called sensitivity, is the ratio of the number of correctly predicted positive cases to the total number of actual positive cases, and measures the ability of the model to identify positive samples. Equation 8 gives the recall calculated from the confusion matrix.

$$Recall=\frac{{TP}}{{TP+FN}}$$(8)

Specificity

Specificity is the ratio of the number of correctly predicted negative cases to the total number of actual negative cases, and measures the ability of the model to identify negative samples. Equation 9 gives the specificity calculated from the confusion matrix.

$$Specificity=\frac{{TN}}{{TN+FP}}$$(9)

F1 score

For a classifier, the F1 score is an index that combines precision and recall as their harmonic mean. Equation 10 is the calculation formula of the F1 score.

$$F1=\frac{{2 \times Precision \times Recall}}{{Precision+Recall}}$$(10)
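The five scalar indicators can be computed directly from the four confusion matrix counts. The sketch below uses the standard definitions; the function name `classification_metrics` is hypothetical. The example counts are an inference rather than values read from a table: they assume a 100-image test set in which 46 of 47 diseased images and 52 of 53 healthy images are classified correctly, which is consistent with the per-class accuracies of 97.87% and 98.11% reported for HcmcNet in the Results section.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard indicators derived from a binary confusion matrix."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)          # also called sensitivity
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Diseased plants are the positive class, healthy plants the negative class.
# Counts inferred from the reported per-class accuracies (an assumption).
m = classification_metrics(tp=46, fn=1, fp=1, tn=52)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

Note that the functions divide by zero if a class is never predicted or never present, so a production version would need to guard those cases.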

PR curve

The PR curve is drawn with Recall as the abscissa and Precision as the ordinate. The closer the curve is to the upper right corner, the better the overall performance of the model. The PR curve can use the area under the curve to measure the effect of the classifier. The larger the area, the better the performance of the model.

Receiver operating characteristic curve (ROC curve)

The ROC curve is drawn with the true positive rate (recall) as the ordinate and the false positive rate (1 − specificity) as the abscissa. The ROC curve is often used to compare models in binary classification problems. The closer the curve is to the upper left corner, the more positive cases are ranked before negative cases, and the better the overall performance of the model. The area under the ROC curve (AUC) reflects the ability of the classifier to rank the samples. The larger the AUC, the better the ranking ability and the better the overall performance of the model.
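The ranking interpretation of the AUC can be made concrete: the AUC equals the fraction of (positive, negative) pairs in which the positive sample receives the higher score, with ties counted as one half. The sketch below implements this pairwise definition directly; the function name `roc_auc` and the toy scores are hypothetical and are not results from the paper.

```python
def roc_auc(is_positive, scores):
    """AUC as the probability that a positive sample outranks a negative one."""
    pos = [s for flag, s in zip(is_positive, scores) if flag]
    neg = [s for flag, s in zip(is_positive, scores) if not flag]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(pos) * len(neg))


# Toy example: scores are the predicted probability of the positive (diseased) class.
labels = [True, True, False, False]
scores = [0.9, 0.4, 0.6, 0.1]
print(roc_auc(labels, scores))  # 0.75
```

Three of the four positive-negative pairs are ordered correctly here, giving 0.75. The pairwise loop is quadratic in the number of samples, which is acceptable for small test sets such as the one used in this study.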

Results

This study uses Python and the TensorFlow deep learning framework for experiments. All experiments were performed on Windows 10, using an Intel(R) Core(TM) i7-9700K CPU and an NVIDIA RTX 2070 GPU.

To verify the performance of the HcmcNet model, we built AlexNet, LeNet-5, Vgg16, EfficientNetB0, InceptionV3, DenseNet121, ResNet50, ResNet101, and HcmcNet models under the same training conditions. The top-level classifiers of these nine models are the same. Each model is trained on the train set and validated on the validation set to obtain the optimal model parameters. The trained models are then tested on the test set, and the results are analyzed and compared using the evaluation indicators described above.

Hyperparameters setting

For all models, the hyperparameters of the training model are set to the configuration listed in Table 2.

Analysis of model results

Table 3 shows the results of the nine models on the test set, including the recognition results of each model on the soybean disease images, the soybean health images, and the whole test set. The accuracy of the nine models differs. On soybean disease images, accuracy ranges from a minimum of 82.98% to a maximum of 97.87%. On soybean health images, it ranges from 33.96% to 100%. On the whole test set, it ranges from 64% to 98%. The classification accuracy of HcmcNet is 97.87% on soybean disease images and 98.11% on soybean health images. Its accuracy on the whole test set is 98%, the highest of the nine models. This shows that HcmcNet can accurately classify soybean plant images and has excellent recognition ability.

Figure 9 shows the confusion matrices of the recognition results of the nine models on the test set, listing the TP, FN, FP, and TN of each model. HcmcNet correctly classifies 98 images, the most among the nine models. It correctly classifies 52 soybean health images, second only to EfficientNetB0. This shows that HcmcNet classifies soybean images accurately and has excellent recognition ability. At the same time, the ability of a model to correctly classify soybean disease images deserves particular attention. HcmcNet correctly classifies 46 soybean disease images, tied for first place with InceptionV3 and DenseNet121, indicating that HcmcNet recognizes diseased soybean plants effectively.

Comparison of model performance

Table 4 shows the classification accuracy, precision, recall, specificity, and F1 score of the nine models on the test set. HcmcNet obtains a classification accuracy of 98% on the test set, the highest among all models. Its precision and specificity are 97.78% and 98.11%, respectively, second only to EfficientNetB0. Its recall is 97.87%, tied for first place with InceptionV3 and DenseNet121. Its F1 score of 97.87% is the highest of all models. These results show that HcmcNet has an excellent overall ability to identify soybean diseases.

Figure 10 shows the PR curves of the nine models on the test set to measure their overall performance. The PR curves of all models, including HcmcNet, are close to the upper right corner of the graph, indicating that these models can effectively classify the images in the test set and demonstrate good classification performance. The AUPRC of the model is the area under the PR curve. The AUPRC of HcmcNet is 0.9638, which is the highest among the nine models, indicating that the overall performance of HcmcNet is the best.

Figure 11 shows the ROC curves of the nine models on the test set, which are used to measure their generalization performance. The ROC curves of the nine models, including HcmcNet, are close to the upper left corner, indicating that the TPR of each model is greater than its FPR and that the models have good classification ability. The AUC of a model is the area under the ROC curve. The AUC of HcmcNet is 0.9799, the highest among the nine models, indicating that HcmcNet has the best overall performance.

Ablation experiments

Table 5 shows the results of the ablation experiments of HcmcNet on the test set. HcmcNet achieved the highest classification accuracy of 98% on the test set among all models. The classification accuracy on the test set using only PCFE, OFE, and UTFE was 92%, 89%, and 89%, respectively. This indicates that all three feature extraction membranes were effective in extracting disease features. By parallelizing the three feature extraction membranes, the accuracy improved to 96%, significantly enhancing performance on the test set. Notably, the addition of DAM further increased the accuracy of the three parallel membranes by an additional 2%. Additionally, combining the three membranes in pairs and then adding DAM also improved accuracy. These results demonstrate that HcmcNet can effectively extract soybean leaf disease features from a small sample dataset, leading to high accuracy and excellent recognition capability.

Experimental results on SoyNet

SoyNet is a publicly available dataset of soybean leaf disease images. The images were taken in India during October and November and include both healthy and diseased categories. SoyNet consists of two subfolders, SoyNet Raw Data and SoyNet Pre-processing Data. To verify the performance of HcmcNet, 3720 soybean images from the subfolder Images Camera Clicks_256*256 in the Pre-processed Images folder were used for the experiments, and the dataset was randomly partitioned into training, validation, and test sets in the ratio of 8:1:1. The details of the dataset are shown in Table 6, and example images of each category are shown in Fig. 12.
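The random 8:1:1 partition can be sketched as follows. This is only an illustration under stated assumptions: the function name `split_811`, the fixed seed, the order of the three subsets (training, validation, test), and the use of integer indices in place of image paths are all hypothetical, and the source does not say whether the split was stratified by class.

```python
import random


def split_811(items, seed=0):
    """Shuffle items and split them into three subsets in the ratio 8:1:1."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed (assumption) for reproducibility
    rng.shuffle(items)
    n = len(items)
    n_first = n * 8 // 10      # integer arithmetic avoids float rounding
    n_second = n // 10
    first = items[:n_first]
    second = items[n_first:n_first + n_second]
    third = items[n_first + n_second:]
    return first, second, third


# 3720 placeholder indices stand in for the SoyNet image files.
train, val, test = split_811(range(3720))
sizes = (len(train), len(val), len(test))
```

With 3720 items this split gives subsets of 2976, 372, and 372 items; Table 6 remains the authoritative description of the partition actually used.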

Table 7 shows the classification accuracy, precision, recall, specificity, and F1 score of HcmcNet and the comparison models on SoyNet. The accuracy of HcmcNet is 97.85%, which is the highest among all models. Its precision and specificity are 99.05% and 95.55%, respectively, which are also the highest. Its recall is 98.42%, which is lower only than that of AlexNet. Its F1 score of 98.73% is the highest among all models. HcmcNet therefore also performs very well on the SoyNet dataset, which indicates that it has strong generalization ability and broad application prospects.

Figure 13 shows the confusion matrices of HcmcNet and its comparison models on SoyNet. It can be observed that HcmcNet correctly classified 312 soybean disease images and 52 healthy images, which is the highest among all models. However, none of the models correctly classified all the images, which may be due to subtle disease features or imbalanced sample data. Overall, HcmcNet demonstrates excellent recognition ability in identifying soybean disease images.
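The relationship between a binary confusion matrix and the metrics in Table 7 can be sketched as below. The counts of 312 correctly classified diseased images and 52 correctly classified healthy images come from the text; treating "diseased" as the positive class, and the error counts of 3 false positives and 5 false negatives, are assumptions chosen only so that accuracy, precision, recall, and F1 round to the reported values. The specificity these assumed counts imply (about 94.55%) does not reproduce the value in Table 7, so they should not be read as the actual off-diagonal entries of Fig. 13.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)          # also called sensitivity or TPR
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


# tp and tn are taken from the text; fp and fn are hypothetical (see above).
m = binary_metrics(tp=312, fp=3, fn=5, tn=52)
rounded = {name: round(100 * value, 2) for name, value in m.items()}
```

Under these assumptions, `rounded` gives 97.85 for accuracy, 99.05 for precision, 98.42 for recall, and 98.73 for the F1 score.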

Comparison with the latest models

To further evaluate the recognition ability of HcmcNet on soybean diseases, we compared HcmcNet with the latest soybean disease recognition models. Table 8 shows the comparison results, listing the model name, publication date, datasets, and accuracy. Table 8 shows that, except for MF3R-CNN, all the listed models achieve an accuracy higher than 90%, indicating that deep learning models perform very well in soybean disease recognition. Among these studies, only TRNet18 and RANet18 report higher accuracy than ours. RANet18 relies on transfer learning rather than training the model from scratch, and the accuracy of TRNet18 is only slightly higher than that of our study. All other studies report lower accuracy than ours, which may reflect weaker feature extraction capabilities in those models. These results indicate that HcmcNet has clear advantages over most recent models in recognizing soybean leaf diseases.

In this study, HcmcNet was implemented to identify soybean diseases. According to the results of the confusion matrix, both healthy and diseased images were misclassified. One possible reason for this is that the disease features of early-stage diseased leaves are not very distinct and exhibit similarities with the features of healthy leaves, leading to model misclassification. Additionally, the current study only analyzed diseased and healthy leaves without further classifying the types of diseases present. This is an issue that requires further investigation.

Conclusion

This paper proposes HcmcNet, a model for soybean disease recognition on small datasets. HcmcNet has seven membranes: the input membrane, the pyramid convolutional feature extraction membrane, the ordinary feature extraction membrane, the U-type feature extraction membrane, the dynamic attention membrane, the fully connected membrane, and the output membrane. The input membrane is contained simultaneously by the pyramid convolutional feature extraction membrane, the ordinary feature extraction membrane, and the U-type feature extraction membrane. These three feature extraction membranes run in parallel to extract soybean leaf disease features, and the dynamic attention membrane focuses on key disease feature information to improve recognition accuracy. We collected a soybean disease dataset of 1010 images with a camera, evaluated HcmcNet on it, and compared it with classical convolutional neural networks. The results show that HcmcNet achieves a classification accuracy of 98% and an F1 score of 97.87% on the test set, and demonstrates significant advantages in other evaluation metrics. On the public soybean dataset SoyNet, HcmcNet achieved an accuracy of 97.85% and an F1 score of 98.73%, both the highest among all evaluated models. HcmcNet thus has excellent overall performance and strong generalization ability. It can recognize soybean images accurately from a small sample dataset, can provide a basis for precise drug application, and has great application prospects in soybean leaf disease prevention and control. However, the HcmcNet model proposed in this study is 99.91 MB in size, which places high demands on portable mobile terminals; after deployment on a Jetson Xavier NX it runs at 5.3915 FPS, so its real-time performance needs to be improved. In addition, we did not focus on the application of HcmcNet in real-world environments.

In the future, we will investigate the above issues and make HcmcNet more lightweight by incorporating the prior knowledge of soybean plant protection experts, to contribute to the development of agricultural intelligence.

Data availability

The data presented in this study are available on request from the corresponding author. Direct URL to SoyNet: https://data.mendeley.com/datasets/w2r855hpx8/2.

References

Anderson, E. J. et al. Soybean [Glycine max (L.) Merr.] breeding: history, improvement, production and future opportunities. In Advances in Plant Breeding Strategies: Legumes (eds Al-Khayri, J., Jain, S. & Johnson, D.) 431–516 (Springer, Cham, 2019).

Zhu, Q. et al. Modeling soybean cultivation suitability in China and its future trends in climate change scenarios. J. Environ. Manage. 345, 118934 (2023).

Ametefe, D. S. et al. Enhancing leaf disease detection accuracy through synergistic integration of deep transfer learning and multimodal techniques. Inform. Process. Agric. (2024).

Thakur, P. S., Khanna, P., Sheorey, T. & Ojha, A. Trends in vision-based machine learning techniques for plant disease identification: a systematic review. Expert Syst. Appl. 208, 118117 (2022).

Barbedo, J. G. A. A review on the main challenges in automatic plant disease identification based on visible range images. Biosyst. Eng. 144, 52–60 (2016).

Rahman, M. M. & Siddiqui, F. H. An optimized abstractive text summarization model using peephole convolutional LSTM. Symmetry 11, 1290 (2019).

Rahman, M. M. & Siddiqui, F. H. Multi-layered attentional peephole convolutional lstm for abstractive text summarization. ETRI J. (2020).

Rahman, M. M., Shokouhmand, S., Bhatt, S. & Faezipour, M. MIST: Medical image segmentation transformer with convolutional attention mixing (CAM) decoder. In IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 403–412 (2024).

Barman, U. & Choudhury, R. D. Smartphone image based digital chlorophyll meter to estimate the value of citrus leaves chlorophyll using Linear Regression, LMBP-ANN and SCGBP-ANN. J. King Saud Univ. - Comput. Inform. Sci. 34 (6, Part A), 2938–2950 (2022).

Mumtaz, R. et al. Integrated digital image processing techniques and deep learning approaches for wheat stripe rust disease detection and grading. Decis. Analytics J. 8, 100305 (2023).

Tunio, M. H. et al. Meta-knowledge guided bayesian optimization framework for robust crop yield estimation. J. King Saud Univ. - Comput. Inform. Sci. 36 (1), 101895 (2024).

Cho, S. et al. Plant growth information measurement based on object detection and image fusion using a smart farm robot. Comput. Electron. Agric. 207, 107703 (2023).

Ngugi, L. C., Abdelwahab, M. & Abo-Zahhad, M. Recent advances in image processing techniques for automated leaf pest and disease recognition – A review. Inform. Process. Agric. 8 (1), 27–51 (2021).

Kotwal, J., Kashyap, R. & Pathan, S. Agricultural plant diseases identification: from traditional approach to deep learning. Mater. Today: Proc. 80(1), 344–356 (2023).

Fan, X. et al. Leaf image based plant disease identification using transfer learning and feature fusion. Comput. Electron. Agric. 196, 106892 (2022).

Anil, J. & Padma, S. L. A novel fast hybrid face recognition approach using convolutional Kernel extreme learning machine with HOG feature extractor. Measurement: Sens. 30, 100907 (2023).

Araujo, J. M. M. & Peixoto, Z. M. A. A new proposal for automatic identification of multiple soybean diseases. Comput. Electron. Agric. 167, 105060 (2019).

Sethy, P. K., Barpanda, N. K., Rath, A. K. & Behera, S. K. Deep feature based rice leaf disease identification using support vector machine. Comput. Electron. Agric. 175, 105527 (2020).

Alshammari, H. H., Taloba, A. I. & Shahin, O. R. Identification of olive leaf disease through optimized deep learning approach. Alexandria Eng. J. 72, 213–224 (2023).

Li, X. et al. SLViT: shuffle-convolution-based lightweight vision transformer for effective diagnosis of sugarcane leaf diseases. J. King Saud Univ. - Comput. Inform. Sci. 35 (6), 101401 (2023).

Khan, S. & Narvekar, M. Novel fusion of color balancing and superpixel-based approach for detection of tomato plant diseases in natural complex environment. J. King Saud Univ. - Comput. Inform. Sci. 34 (6, Part B), 3506–3516 (2022).

Iqbal, Z. et al. An automated detection and classification of citrus plant diseases using image processing techniques: a review. Comput. Electron. Agric. 153, 12–32 (2018).

Rahman, M. M. et al. A Deep Learning Approach-FDNN: Forest Deep Neural Network to Predict cow’s parturition date. J. Appl. Artif. Intell. 3 (1), 61–74 (2022).

Karlekar, A. & Seal, A. SoyNet: Soybean leaf diseases classification. Comput. Electron. Agric. 172, 105342 (2020).

Sarkar, C., Gupta, D., Gupta, U. & Hazarika, B. B. Leaf disease detection using machine learning and deep learning: review and challenges. Appl. Soft Comput. 145, 110534 (2023).

Dong, J. et al. A learning numerical spiking neural P system for classification problems. Knowl. Based Syst. 296, 111914 (2024).

Song, H., Huang, Y., Song, Q., Han, T. & Xu, S. Feature selection algorithm based on P systems. Nat. Comput. 22, 149–159 (2023).

Li, Y., Song, B. & Zeng, X. Rule synchronization for monodirectional tissue-like P systems with channel states. Inf. Comput. 285 (Part B), 104895 (2022).

Rajput, A. S., Shukla, S. & Thakur, S. S. SoyNet: A high-resolution Indian soybean image dataset for leaf disease classification. Data Brief. 49, 109447 (2023).

Sharma, V., Tripathi, A. K., Mittal, H. & Nkenyereye, L. SoyaTrans: A novel transformer model for fine-grained visual classification of soybean leaf disease diagnosis. Expert Syst. Appl. 260, 125385 (2025).

Yu, M., Ma, X. & Guan, H. Recognition method of soybean leaf diseases using residual neural network based on transfer learning. Ecol. Inf. 76, 102096 (2023).

Rahman, M. M., Faezipour, M., Bhatt, S. & Vhaduri, S. AHP-CM: Attentional homogeneous-padded composite model for respiratory anomalies prediction. In IEEE 11th International Conference on Healthcare Informatics (ICHI), Houston, TX, USA, 65–71 (2023).

Pan, R. et al. A two-stage feature aggregation network for multi-category soybean leaf disease identification. J. King Saud Univ. - Comput. Inform. Sci. 35 (8), 101669 (2023).

Gui, J., Fei, J., Wu, Z., Fu, X. & Diakite, A. Grading method of soybean mosaic disease based on hyperspectral imaging technology. Inform. Process. Agric. 8 (3), 380–385 (2021).

Wang, X. et al. Diagnosis of soybean bacterial blight progress stage based on deep learning in the context of data-deficient. Comput. Electron. Agric. 212, 108170 (2023).

Bevers, N., Sikora, E. J. & Hardy, N. B. Soybean disease identification using original field images and transfer learning with convolutional neural networks. Comput. Electron. Agric. 203, 107449 (2022).

Yu, M., Ma, X., Guan, H. & Zhang, T. A diagnosis model of soybean leaf diseases based on improved residual neural network. Chemometr. Intell. Lab. Syst. 237, 104824 (2023).

Zhang, K., Wu, Q. & Chen, Y. Detecting soybean leaf disease from synthetic image using multi-feature fusion faster R-CNN. Comput. Electron. Agric. 183, 106064 (2021).

Bouacida, I., Farou, B., Djakhdjakha, L., Seridi, H. & Kurulay, M. Innovative deep learning approach for cross-crop plant disease detection: a generalized method for identifying unhealthy leaves. Inform. Process. Agric. https://doi.org/10.1016/j.inpa.2024.03.002 (2024).

Sun, Z., Valencia-Cabrera, L., Ning, G. & Song, X. Spiking neural P systems without duplication. Inf. Sci. 612, 75–86 (2022).

Acknowledgements

This study was supported by the National Natural Science Foundation of China (61772033).

Author information

Contributions

Y. H.: Conceptualization, Formal analysis, Investigation, Methodology, Visualization, Writing – review & editing, Funding acquisition. H. S.: Methodology, Conceptualization, Investigation, Writing – original draft, Software, Visualization. T. H.: Formal analysis, Supervision, Writing – review & editing. S. X.: Project administration, Resources, Formal analysis. Z. W.: Investigation, Validation. X. W.: Investigation, Validation. Q. L.: Conceptualization, Investigation, Validation. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Huang, Y., Song, H., Han, T. et al. A hypergraph cell membrane computing network model for soybean disease identification. Sci Rep 14, 29637 (2024). https://doi.org/10.1038/s41598-024-81325-x

DOI: https://doi.org/10.1038/s41598-024-81325-x