Abstract

Diabetic macular edema (DME) is a common complication of diabetes that can lead to vision loss. Anti-vascular endothelial growth factor (anti-VEGF) therapy is the standard of care for DME, but treatment outcomes vary widely among patients. This study collected optical coherence tomography (OCT) images and clinical data from DME patients who received anti-VEGF treatment, and used these multimodal data to develop and validate deep learning (DL) models that predict anti-VEGF outcomes with a combined convolutional neural network (CNN) and multilayer perceptron (MLP) architecture. An Xception-MLP architecture was used to predict best-corrected visual acuity (BCVA), central subfield thickness (CST), cube volume (CV), and cube average thickness (CAT). Mean absolute error (MAE), mean squared error (MSE), and mean squared logarithmic error (MSLE) were used to evaluate model performance. MAE, MSE, and MSLE decreased consistently on both the training set and the validation set. No statistically significant difference was found between the actual and predicted values for any clinical indicator. This study demonstrated that the improved CNN-MLP regression models using multimodal data can accurately predict BCVA, CST, CV, and CAT after anti-VEGF therapy in DME patients, which is valuable for ophthalmic clinical decisions and may reduce the economic burden on patients.

Introduction

Diabetic macular edema (DME) is a common complication of diabetic retinopathy (DR), a microvascular disorder caused by diabetes mellitus (DM). DME affects approximately 746,000 people, or 4% of all Americans with DM aged 40 years or older1. The prevalence of DME increases with the duration of the disease and the stage of DR, approaching 30% in adults who have had DM for more than 20 years and 71% of those with proliferative diabetic retinopathy (PDR)2,3. DME is the accumulation of excess fluid in the extracellular space within the retina in the macular area, typically in the inner nuclear layer, outer plexiform layer, Henle’s fiber layer, and subretinal space, which can impair vision and cause blindness if left untreated4,5.

Anti-vascular endothelial growth factor (anti-VEGF) therapy is the main treatment for DME, and is superior to other medications on the market, particularly when administered alone6. However, the variability in response to anti-VEGF therapy and the high cost of anti-VEGF drugs make predictive models for anti-VEGF therapy outcomes in DME patients essential. Such models would benefit both ophthalmologists and DME patients. For instance, a predictive model could help doctors identify, from baseline data, which patients are likely to respond well to specific anti-VEGF drugs. This would allow for more personalized treatment plans, improving vision outcomes and avoiding unnecessary treatment for those who are unlikely to benefit. Additionally, by predicting the treatment course, patients and doctors can better plan for the financial costs of long-term therapy. This approach not only improves clinical decisions but also reduces the financial burden on patients.

Due to its robust performance, deep learning (DL) is gaining popularity in various applications, including lesion segmentation, biomarker segmentation, disease diagnosis, and image synthesis7. Among the various DL networks, the convolutional neural network (CNN) is particularly popular8. The primary advantage of CNNs over their predecessors is their ability to automatically detect significant features without any human supervision9. This makes CNNs highly convenient for clinicians who may not have extensive knowledge of computer science. The Xception architecture has several advantages over other models, including better accuracy, architectural simplicity, rapid processing speed, high computational efficiency, and generalizability to custom tasks9,10,11. The multilayer perceptron (MLP) is a type of artificial neural network (ANN) that is commonly used in machine learning (ML) for both classification and regression tasks. However, MLP is not ideal for processing multidimensional data12,13. To address this limitation, researchers have proposed combining MLP with CNN for multidimensional data processing14.

In previous studies, DL models have demonstrated high accuracy in diagnosing, recognizing lesions, segmenting lesions, and predicting the prognosis of DR15. However, these models often rely on unimodal data, such as imaging or clinical data alone, which can limit their predictive accuracy and generalizability due to the lack of comprehensive data. Additionally, many prognosis prediction models have utilized categorical algorithms instead of regression algorithms. There is also a relative scarcity of studies employing multimodal data in ophthalmology. By integrating various types of data, such as images, tabular data, and videos, models can be provided with more comprehensive input. The combination of CNNs and MLPs leverages the strengths of both architectures, thereby enhancing the model’s ability to predict treatment responses more accurately.

Therefore, the aim of this study is to develop and validate DL models that use multimodal data and a combined CNN and MLP architecture to predict anti-VEGF outcomes in DME patients.

Results

Participants

This study included 272 eyes with DME treated with anti-VEGF drugs, as detailed in Table 1. The participants’ ages ranged from 33 to 84 years, with a median age of 59 years. Of these, 167 (61.4%) were male and 105 (38.6%) were female. A total of 153 (56.25%) right eyes and 119 (43.75%) left eyes were affected. The numbers of eyes treated with ranibizumab, conbercept, and aflibercept were 60 (22.06%), 182 (66.91%), and 30 (11.03%), respectively. The mean follow-up time was 31.658 ± 20.472 days. The baseline intraocular pressure (IOP) was 16.283 ± 3.801 mmHg. Baseline measurements before treatment were: BCVA, 0.699 (range, 0.398-1); CST, 369.540 ± 158.233 μm; CV, 14.454 ± 5.480; and CAT, 369.438 ± 74.866 μm. Post-treatment measurements were: BCVA, 0.699 (range, 0.301-1); CST, 309.648 ± 124.630 μm; CV, 12.982 ± 3.012; and CAT, 350.625 ± 66.037 μm. No statistically significant differences were observed between the training set and the validation set.

Model development

Supplementary Fig. S1 and Supplementary Fig. S2 illustrate the training processes of the CNN-MLP and CNN models, respectively. The models, evaluated by MAE, MSE, and MSLE, displayed a pattern of near uniformity across all four clinical indicators, with a marked decline in the initial ten epochs, followed by a steady decrease, and then a plateau, indicating the model’s robustness and validity. The MAE, MSE, MSLE of the optimal models on the validation set are listed in Table 2.

Model performance

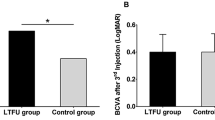

Figure 1 shows the correlation between actual and predicted values in the validation set, with concordance indicated by points along the diagonal. In Fig. 2, box plots represent the interquartile range, with whiskers extending to the extreme values. As presented in Table 3, the CNN + MLP models showed no significant differences between the observed and predicted values in the validation set for BCVA (Z = 0.263, P = 0.792, 95%CI[0.013, 1.307]), CST (T = 0.004, P = 0.997, 95%CI[123.246, 483.494]), CV (T = 1.314, P = 0.194, 95%CI[9.579, 16.361]), and CAT (T = 0.283, P = 0.778, 95%CI[230.208, 472.032]). The CNN models likewise showed no significant differences in BCVA (Z = -0.802, P = 0.422, 95%CI[0.524, 0.876]), CST (T = 0.523, P = 0.603, 95%CI[257.535, 329.584]), and CV (T = 1.676, P = 0.099, 95%CI[11.309, 14.131]), but a significant difference in CAT (T = 9.187, P < 0.001, 95%CI[190.984, 304.076]).

Discussion

In this study, we established a database based on real-world data, incorporating clinical data from patients prior to treatment and OCT images as input. Utilizing DL algorithms, short-term efficacy indicators for DME patients receiving monotherapy with anti-VEGF drugs were predicted. Additionally, we compared the predictive accuracy of CNN-MLP models with standalone CNN models. Using identical OCT images and CNN architectures, our results demonstrated that the CNN-MLP models significantly outperformed the CNN models in terms of accuracy.

All four CNN-MLP models predicting BCVA, CST, CV and CAT showed favorable predictive performance in this study. The comparison of four models in terms of MAE, MSE and MSLE does not show any significant differences in model development. However, when assessing model performance, the CAT prediction model stood out as the best performer among the four. It achieved the highest P-value, indicating that in the validation set, the actual CAT values closely matched the model predictions. Additionally, the scatter plot provided further evidence of the model’s accuracy. Notably, BCVA is a non-continuous variable, and scatter plots for BCVA typically display points aligned vertically along a fixed horizontal axis. While classification algorithms may produce better results, this study also shows that regression algorithms can achieve satisfactory performance even without constraining the output dimensions.

Increased reactive oxygen species and glycation end products are involved in the pathophysiology of DME, which results in VEGF-mediated vascular alterations16. Macular edema and neovascularization are lessened by VEGF inhibition17. Vascular permeability and angiogenesis are stimulated by the VEGF family, which includes VEGF-A, the main subtype in ophthalmology18,19. Although it has been studied, the function of VEGF-B in retinal protection is still debated20,21,22. Additionally, in ischemic retinal conditions, placental growth factor (PlGF) has a role in neovascularization23. Anti-VEGF medications include ranibizumab, conbercept, and aflibercept. Ranibizumab is a humanized IgG1 monoclonal antibody fragment that inhibits neovascularization by blocking the interaction between VEGF-A and its receptors24. Conbercept, a fusion protein of the VEGFR-1 and VEGFR-2 domains with human IgG1 Fc, binds all VEGF-A subtypes, VEGF-B, and PlGF25. Aflibercept, a recombinant fusion of VEGFR-1 and VEGFR-2 domains with IgG1 Fc, also binds all VEGF-A subtypes and PlGF26. By preventing VEGF-A, VEGF-B, and PlGF from binding to VEGF-related receptors, these three anti-VEGF drugs can treat DME by reducing the amount of VEGF expressed in the eye.

Compared to other neural networks, CNNs make large-scale networks easier to implement. CNN architectures have evolved considerably, from LeNet to AlexNet27, network-in-network28, ZFNet29, VGG30, GoogLeNet31, highway network32, ResNet33, and Inception34, leading to the Xception architecture, which is regarded as the best version of the Inception model and an improvement over Inception-v310. By separating the spatial and channel dimensions using depthwise separable convolutions, together with residual connections, it speeds up model convergence and greatly improves accuracy10. In ophthalmology, Hemelings et al.35 used the Xception architecture to predict visual field conditions based on optic disc OCT, with positive results. Furthermore, Xception DL models have been used in other research to grade choroidal thickness36, diagnose glaucoma37, detect retinitis pigmentosa early37, and detect Fuchs’ uveitis syndrome38. All of these tasks have been accomplished with high accuracy.

Among earlier studies, Raita et al.39 integrated multimodal emergency department triage data into machine learning models to predict clinical outcomes, specifically mortality, but they did not use DL techniques. Bardak et al.40 developed a CNN and multimodal learning model that incorporates time series data and medical entities to predict clinical outcomes for intensive care unit patients, such as length of hospital stay and mortality. Moreover, He et al.41 used MRI and clinical data in combination with a multimodal DL technique to predict the likelihood of neurodevelopmental abnormalities in 215 preterm newborns. Our previous work42 used an MLP without OCT images to build regression ML models predicting the same clinical indicators as this study, but its MAE and MSE were higher than those obtained here, which indicates the potential of clinical images for building AI models. Similarly, Wen et al.43 developed a DL model based on preoperative OCT to automatically predict the 6-month postoperative BCVA in patients with idiopathic epiretinal membrane, achieving an MAE of 0.070 and an MSE of 0.11. In comparison, our study yielded a comparable MAE, but the MSE observed in our research was significantly lower, indicating enhanced predictive accuracy.

Despite these advancements, regression DL models based on multimodal data remain scarce in ophthalmology. To date, only a small number of studies have used multimodal regression DL techniques to predict clinical efficacy in ophthalmology. This method may help physicians choose which anti-VEGF medication is best for each patient, improving treatment outcomes and lessening the financial strain on patients.

While DL is frequently used for classification tasks and provides comprehensive evaluation metrics, it is also effective in regression studies. DL has the potential to address problems beyond the scope of classification algorithms in ophthalmology, including predicting BCVA, monitoring changes in retinal thickness, and assessing variations in corneal thickness. By applying these algorithms, ophthalmologists can use the predicted results as a reference when making specific treatment plans, promoting personalized medicine in ophthalmology.

There are several limitations in this study. Since this is a retrospective study, model training may have been affected by partially missing data, yet the outcomes are still considered satisfactory. The BCVA data in this study are not continuous, which may have contributed to the poorer predictive outcomes for BCVA. Additionally, no external test set was available to assess model performance; the performance metrics presented are derived from the internal validation set. Future studies should consider incorporating an external test set to validate the model’s generalizability and robustness.

Methods

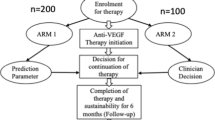

The study followed the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) checklist44 for reporting observational studies (Supplementary File 1). The flow diagram of the study is presented in Fig. 3.

Source of data

The study was a retrospective review of 272 eyes with DME that received anti-VEGF drugs from April 20, 2017 to June 30, 2022. The Academic Committee and the Ethics Committee of Ineye Hospital of Chengdu University of Traditional Chinese Medicine approved the study (Ethics number: 2022yh-023), and it adhered to the principles of the Declaration of Helsinki. All subjects or their legal guardian(s) gave informed consent, and the data were de-identified.

Participants

Patients were included according to the following criteria: (1) clinically significant macular edema diagnosed according to the ETDRS criteria; (2) DME confirmed by fundus examination, macular optical coherence tomography (OCT) examination and/or fluorescein fundus angiography; (3) data available for best corrected visual acuity (BCVA), central subfield thickness (CST), cube volume (CV), and cube average thickness (CAT) before and after treatment within a 3-month period; (4) at least one intravitreal injection of anti-VEGF received (ranibizumab 0.2 mL, conbercept 0.2 mL, or aflibercept 0.1 mL).

Patients with the following conditions were excluded: (1) other eye diseases that severely impaired vision, such as retinal detachment, acute retinal necrosis, or glaucoma; (2) history of unstable systemic diseases; (3) severe opacity of the refractive media that hindered the examination; (4) previous intraocular surgery; (5) vitreous hemorrhage, endophthalmitis, or tractional retinal detachment that required vitreoretinal surgery during the treatment period; or (6) absence of OCT image data.

The included DME patients must be treated with intravitreal injection of anti-VEGF alone. Patients must return for follow-up within 3 months after treatment and undergo BCVA, intraocular pressure (IOP) and OCT re-examination.

Outcome

The models were developed to predict the values of BCVA, CST, CV, and CAT following anti-VEGF therapy within a 3-month period. CST is an objective measurement of macular thickness readily available on OCT imaging. The central subfield is defined as the circular area 1 mm in diameter centered on the center point of the fovea. CV is a measure of the total volume within a specified cube of the retina. The cube is defined by a raster-scanning pattern centered at the fovea, consisting of multiple frames of horizontal linear B-scans. CAT is a measure of the average thickness of the retina within the entire 6 × 6 mm square scanned area in an OCT scan45,46. These parameters are often used in ophthalmology to assess and monitor macular edema, and they can provide valuable information about the efficacy of anti-VEGF therapy.

Predictors

In our multimodal model development research, we utilize both image and tabular data as predictors.

The image data was acquired using Zeiss HD-OCT and SVision Imaging OCT software. Due to the retrospective nature of this study, the OCT imaging modes varied based on real-world clinical practices and the preferences of individual ophthalmologists. Therefore, we did not restrict the image scanning modes. For each DME patient, we selected a single B-scan OCT image that spanned the highest point of macular edema. This approach not only reflects real-world conditions more accurately but also enhances the model’s applicability. Specifically, the selected B-scan images were chosen to ensure they captured the most significant area of edema, providing a consistent basis for analysis across different patients. Initially, the macula center is automatically aligned to capture B-scan cross-sectional images. In rare cases of automatic macula alignment failure, manual adjustments are made by the operator. After converting the image to grayscale, it is labeled as ‘number_01,’ corresponding to its position in the table.

Regarding the tabular data, our study encompasses two types of variables: categorical and numeric. Categorical variables include patient gender (male, female), anti-VEGF drug type (ranibizumab, conbercept, aflibercept), and OCT device. Numeric variables cover patient age, BCVA, IOP, CST, CV, CAT, and duration of follow-up visits. Additionally, our study collects data related to macular region retinal thickness segmentation. The segmentation method adheres to the Early Treatment Diabetic Retinopathy Study (ETDRS) guidelines, utilizing default zones provided by the OCT system47. The macular region is divided into nine areas: central subfield, temporal outer, superior outer, nasal outer, inferior outer, temporal inner, superior inner, nasal inner, and inferior inner.

Preprocessing

During the processing of OCT image data, we used Python 3.9 to crop images obtained from two different machines. The cropping operation was centered on the image. These images were saved in .jpg format while preserving their original filenames. Initially, the central 570 pixels in width and 380 pixels in height of each image were automatically retained through code. After automatic cropping, all images were manually reviewed to ensure the macular edema region was fully included. If the image did not encompass the entire macular edema, manual cropping adjustments were made.
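The automatic centered crop described above can be sketched as follows. This is a minimal illustration rather than the authors' script: the function name `center_crop_box` is hypothetical, and the default window of 570 × 380 pixels is taken from the paragraph above.

```python
def center_crop_box(width, height, crop_w=570, crop_h=380):
    """Return (left, upper, right, lower) for a crop centered on the image.

    The 570 x 380 default follows the text; the function itself is an
    illustrative assumption, not the study's code.
    """
    if crop_w > width or crop_h > height:
        raise ValueError("crop window is larger than the image")
    left = (width - crop_w) // 2
    upper = (height - crop_h) // 2
    return (left, upper, left + crop_w, upper + crop_h)


# Example: a hypothetical 1024 x 768 export from the OCT software.
box = center_crop_box(1024, 768)
print(box)  # (227, 194, 797, 574)
```

A 4-tuple in this order is what Pillow's `Image.crop` accepts, so the box could be passed to it directly before the manual review step.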

Categorical data were converted to one-hot encoding. Missing values in continuous data were replaced using the missing-value replacement function in SPSS 27, with the linear trend method based on neighboring data points.
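As an illustration of the one-hot step, the sketch below encodes the drug type as a 0/1 vector. The helper `one_hot` and the category order are assumptions made for this example; the study does not specify its implementation.

```python
def one_hot(values, categories):
    """Encode each value as a 0/1 vector with one position per category."""
    index = {cat: i for i, cat in enumerate(categories)}
    rows = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1  # raises KeyError for an unknown category
        rows.append(row)
    return rows


# Category order is an arbitrary choice for this sketch.
DRUGS = ["ranibizumab", "conbercept", "aflibercept"]
encoded = one_hot(["conbercept", "aflibercept"], DRUGS)
print(encoded)  # [[0, 1, 0], [0, 0, 1]]
```

Each categorical column (gender, drug type, OCT device) would be expanded this way and the resulting columns placed alongside the numeric variables.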

Additionally, the dataset was randomly partitioned into training and validation sets using Scikit-Learn, maintaining an 8:2 ratio. The training set was utilized for model development, while the validation set was employed for model validation.
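A random 8:2 partition can be sketched with the standard library alone, as below. The seed, the function name `split_indices`, and the choice to round the validation size up are assumptions for illustration; with scikit-learn, `train_test_split` with `test_size=0.2` is the usual one-line equivalent.

```python
import math
import random


def split_indices(n_samples, val_fraction=0.2, seed=42):
    """Shuffle sample indices and split them into (train, validation)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed for reproducibility
    n_val = math.ceil(n_samples * val_fraction)  # assumption: round up
    return indices[n_val:], indices[:n_val]


train_idx, val_idx = split_indices(272)
print(len(train_idx), len(val_idx))  # 217 55
```

Splitting indices rather than the data itself keeps each OCT image paired with its row of tabular data.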

Model development

In this study, TensorFlow 2 was selected as the development framework. A multi-input MLP-CNN regression model was constructed by combining multimodal data and utilizing both an MLP and a CNN for model training. Specifically, the MLP branch handled the tabular data, while the CNN branch processed the image data. The architecture of the CNN branch was based on the Xception model48. Notably, Xception, originally designed for 299 pixels × 299 pixels images, was modified in this study to accommodate the smallest width and height observed in all OCT images, resulting in dimensions of 570 pixels × 280 pixels. Furthermore, while Xception was initially utilized for image classification tasks, it was repurposed for regression tasks in our research. The outputs of the two branches were concatenated and passed to a fully connected layer with an output dimension of 4. Finally, the prediction was obtained from a fully connected layer with an output dimension of 1. Additionally, to compare the CNN-MLP with CNN alone, we also constructed standalone CNN models, which only take OCT images as input.
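The two-branch design described above could be expressed in Keras roughly as follows. This is a sketch under stated assumptions, not the authors' implementation: the MLP layer widths, the ReLU activations, the Adam optimizer, training from scratch (`weights=None`), the single grayscale channel, and the number of tabular features are all illustrative choices. Only the Xception backbone, the 570 × 280 input size, the concatenation, and the 4-unit and 1-unit dense layers come from the text.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_cnn_mlp(n_tabular, image_shape=(280, 570, 1)):
    """Two-input regression model: Xception for the image, MLP for the table."""
    # CNN branch: Xception without its classification head.
    # Keras orders image shapes as (height, width, channels).
    image_in = layers.Input(shape=image_shape, name="oct_image")
    backbone = tf.keras.applications.Xception(
        include_top=False, weights=None, input_shape=image_shape, pooling="avg"
    )
    image_features = backbone(image_in)

    # MLP branch: layer widths are assumptions, not taken from the study.
    tabular_in = layers.Input(shape=(n_tabular,), name="clinical_table")
    hidden = layers.Dense(64, activation="relu")(tabular_in)
    tabular_features = layers.Dense(32, activation="relu")(hidden)

    # Fusion head from the text: concatenate -> Dense(4) -> Dense(1).
    merged = layers.Concatenate()([image_features, tabular_features])
    merged = layers.Dense(4, activation="relu")(merged)
    prediction = layers.Dense(1, name="prediction")(merged)

    model = tf.keras.Model(inputs=[image_in, tabular_in], outputs=prediction)
    model.compile(
        optimizer="adam",  # assumption: the optimizer is not specified here
        loss=tf.keras.losses.MeanAbsoluteError(),
        metrics=[
            tf.keras.metrics.MeanSquaredError(),
            tf.keras.metrics.MeanSquaredLogarithmicError(),
        ],
    )
    return model


# n_tabular=20 is a placeholder for the number of encoded tabular columns.
model = build_cnn_mlp(n_tabular=20)
```

Because each model has a single output unit, one such model would be trained per clinical indicator (BCVA, CST, CV, CAT). Dropping the tabular branch and the concatenation gives the standalone CNN used for comparison.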

Statistical analysis

In this research study, Python 3.9 and SPSS 27.0 were employed for conducting statistical analyses on the collected data. Continuous variables that adhered to a normal distribution were summarized using the mean ± standard deviation (Mean ± SD). The comparison between two independent groups was performed using the independent sample t-test. For continuous variables that deviated from normality, they were represented using the median (interquartile range), denoted as median (IQR). The comparison between two groups in this context was carried out using the Mann-Whitney U test, where a significance level of P < 0.05 indicated statistically significant differences.

The prediction results for both the training set and the validation set were visualized with Python 3.9. The horizontal axis represented the actual values, while the vertical axis represented the predicted values. Therefore, the closer the points lay to the diagonal line, the closer the predicted values were to the actual values.

During training, MAE serves as the loss function to evaluate model performance. It measures the discrepancy between predicted values ŷ and actual values y. The goal is to minimize this loss using gradient descent49. Additionally, MSE and MSLE are used to assess predictive accuracy. For a dataset with n samples of true values y and corresponding model predictions ŷ50:
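Assuming the standard definitions, with MSLE in the log(1 + x) form that TensorFlow uses, the three metrics can be written as:

```latex
\mathrm{MAE}  = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|

\mathrm{MSE}  = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2

\mathrm{MSLE} = \frac{1}{n} \sum_{i=1}^{n} \left( \log(1 + y_i) - \log(1 + \hat{y}_i) \right)^2
```

Here y_i is the actual value and ŷ_i the predicted value for sample i. MAE weights all errors linearly, MSE penalizes large errors more heavily, and MSLE measures relative rather than absolute deviation, which makes it less sensitive to the different scales of BCVA and the thickness measurements.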

Conclusions

In this study, the improved Xception-MLP regression models using multimodal data can accurately predict outcomes including BCVA, CST, CV, and CAT after anti-VEGF therapy in DME patients. This study shows that the CNN-MLP DL models, which integrate ocular images (OCT, fundus photography, ultrasound, etc.) with general patient condition data, are beneficial for clinical decision-making and reducing the financial burden for ophthalmic patients. Further validation in larger, more diverse cohorts is necessary to confirm the robustness and generalizability of CNN-MLP models.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Varma, R. et al. Prevalence of and risk factors for diabetic macular edema in the United States. JAMA Ophthalmol. 132, 1334–1340. https://doi.org/10.1001/jamaophthalmol.2014.2854 (2014).

Klein, R., Klein, B. E., Moss, S. E., Davis, M. D. & DeMets, D. L. The Wisconsin epidemiologic study of diabetic retinopathy. IV. Diabetic macular edema. Ophthalmology 91, 1464–1474. https://doi.org/10.1016/s0161-6420(84)34102-1 (1984).

Javadzadeh, A. The effect of posterior subtenon methylprednisolone acetate in the refractory diabetic macular edema: a prospective nonrandomized interventional case series. BMC Ophthalmol. 6, 15. https://doi.org/10.1186/1471-2415-6-15 (2006).

Otani, T., Kishi, S. & Maruyama, Y. Patterns of diabetic macular edema with optical coherence tomography. Am. J. Ophthalmol. 127, 688–693. https://doi.org/10.1016/s0002-9394(99)00033-1 (1999).

Yanoff, M., Fine, B. S., Brucker, A. J. & Eagle, R. C. Jr. Pathology of human cystoid macular edema. Surv. Ophthalmol. 28 Suppl, 505–511. https://doi.org/10.1016/0039-6257(84)90233-9 (1984).

Tricco, A. C. et al. Anti-vascular endothelial growth factor therapy for age-related macular degeneration: a systematic review and network meta-analysis. Syst. Rev. 10, 315. https://doi.org/10.1186/s13643-021-01864-6 (2021).

Li, T. et al. Applications of deep learning in fundus images: a review. Med. Image Anal. 69, 101971. https://doi.org/10.1016/j.media.2021.101971 (2021).

Dhillon, A. & Verma, G. K. Convolutional neural network: a review of models, methodologies and applications to object detection. Progress Artif. Intell. 9, 85–112. https://doi.org/10.1007/s13748-019-00203-0 (2020).

Alzubaidi, L. et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J. Big Data. 8, 53. https://doi.org/10.1186/s40537-021-00444-8 (2021).

Chollet, F. in Proceedings of the IEEE conference on computer vision and pattern recognition. 1251–1258.

Carreira, J., Madeira, H. & Silva, J. G. Xception: a technique for the experimental evaluation of dependability in modern computers. IEEE Trans. Software Eng. 24, 125–136 (1998).

Ramchoun, H., Ghanou, Y., Ettaouil, M. & Janati Idrissi, M. A. Multilayer perceptron: Architecture optimization and training. (2016).

Taud, H. & Mas, J. Multilayer perceptron (MLP). Geomatic approaches for modeling land change scenarios, 451–455 (2018).

Zhang, S. & Niu, Y. LcmUNet: A Lightweight Network combining CNN and MLP for Real-Time Medical Image Segmentation. Bioengineering 10, 712 (2023).

Lim, W. X., Chen, Z. & Ahmed, A. The adoption of deep learning interpretability techniques on diabetic retinopathy analysis: a review. Med. Biol. Eng. Comput. 60, 633–642. https://doi.org/10.1007/s11517-021-02487-8 (2022).

Li, G., Zheng, S. & Li, X. in Digital TV and Wireless Multimedia Communication: 14th International Forum, IFTC 2017, Shanghai, China, November 8–9, Revised Selected Papers 14. 193–202 (Springer). (2017).

Kauppi, T. et al. in BMVC. 10 (Citeseer).

Giancardo, L. The Hamilton eye institute macular edema dataset (hei-med). (2012).

Mateen, M., Wen, J., Nasrullah, N., Sun, S. & Hayat, S. Exudate detection for diabetic retinopathy using pretrained convolutional neural networks. Complexity 1–11 (2020).

Bora, A. et al. Predicting the risk of developing diabetic retinopathy using deep learning. Lancet Digit. Health. 3, e10–e19 (2021).

Zuo, M., Zhang, W., Xu, Q. & Chen, D. Deep personal multitask prediction of diabetes complication with attentive interactions predicting diabetes complications by multitask-learning. J. Healthcare Eng. (2022).

Lin, T. Y. et al. Deep learning to infer visual acuity from optical coherence tomography in diabetic macular edema. Front. Med. 9, 1008950 (2022).

Alryalat, S. A. et al. Deep learning prediction of response to anti-VEGF among diabetic macular edema patients: treatment response analyzer system (TRAS). Diagnostics 12, 312 (2022).

Arcadu, F. et al. Deep learning algorithm predicts diabetic retinopathy progression in individual patients. NPJ Digit. Med. 2, 92 (2019).

Tanaka, S. et al. A deep learning-based radiomics approach to predict head and neck tumor regression for adaptive radiotherapy. Sci. Rep. 12, 8899 (2022).

Lin, A. et al. Deep learning-enabled coronary CT angiography for plaque and stenosis quantification and cardiac risk prediction: an international multicentre study. Lancet Digit. Health. 4, e256–e265 (2022).

LeCun, Y. et al. Learning algorithms for classification: a comparison on handwritten digit recognition. Neural Networks: Stat. Mech. Perspective. 261, 2 (1995).

Lin, M., Chen, Q. & Yan, S. Network in network. arXiv preprint arXiv:1312.4400 (2013).

Zeiler, M. D. & Fergus, R. in Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6–12, Proceedings, Part I 13. 818–833 (Springer). (2014).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

Szegedy, C. et al. in Proceedings of the IEEE conference on computer vision and pattern recognition. 1–9.

Srivastava, R. K., Greff, K. & Schmidhuber, J. Highway networks. arXiv preprint arXiv:1505.00387 (2015).

He, K., Zhang, X., Ren, S. & Sun, J. in Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

Szegedy, C., Ioffe, S., Vanhoucke, V. & Alemi, A. in Proceedings of the AAAI conference on artificial intelligence.

Hemelings, R. et al. Pointwise visual field estimation from optical coherence tomography in glaucoma using deep learning. Trans. Vis. Sci. Technol. 11, 22–22 (2022).

Kang, N. Y. et al. Classification of pachychoroid on optical coherence tomography using deep learning. Graefe’s Archive Clin. Exp. Ophthalmol. 259, 1803–1809 (2021).

Lim, W. S. et al. Use of multimodal dataset in AI for detecting glaucoma based on fundus photographs assessed with OCT: focus group study on high prevalence of myopia. BMC Med. Imaging. 22, 206 (2022).

Zhang, W. et al. Detection of Fuchs’ uveitis syndrome from slit-lamp images using deep convolutional neural networks in a Chinese population. Front. Cell. Dev. Biology. 9, 684522 (2021).

Raita, Y. et al. Emergency department triage prediction of clinical outcomes using machine learning models. Crit. Care. 23, 64. https://doi.org/10.1186/s13054-019-2351-7 (2019).

Bardak, B. & Tan, M. Improving clinical outcome predictions using convolution over medical entities with multimodal learning. Artif. Intell. Med. 117, 102112. https://doi.org/10.1016/j.artmed.2021.102112 (2021).

He, L. et al. Deep Multimodal Learning from MRI and Clinical Data for early prediction of neurodevelopmental deficits in very Preterm infants. Front. Neurosci. 15, 753033. https://doi.org/10.3389/fnins.2021.753033 (2021).

Shi, R. et al. Machine learning regression algorithms to predict short-term efficacy after anti-VEGF treatment in diabetic macular edema based on real-world data. Sci. Rep. 13, 18746 (2023).

Wen, D. et al. Deep learning-based postoperative visual acuity prediction in idiopathic epiretinal membrane. BMC Ophthalmol. 23, 361. https://doi.org/10.1186/s12886-023-03079-w (2023).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Bmj 350, g7594. https://doi.org/10.1136/bmj.g7594 (2015).

Krzystolik, M. G. et al. Reproducibility of macular thickness and volume using Zeiss optical coherence tomography in patients with diabetic macular edema. Ophthalmology 114, 1520–1525. https://doi.org/10.1016/j.ophtha.2006.10.055 (2007).

Ahuja, S., Saxena, S., Meyer, C. H., Gilhotra, J. S. & Akduman, L. Central subfield thickness and cube average thickness as bioimaging biomarkers for ellipsoid zone disruption in diabetic retinopathy. Int. J. Retina Vitreous. 4, 41. https://doi.org/10.1186/s40942-018-0144-9 (2018).

Grading diabetic retinopathy. From stereoscopic color fundus photographs–an extension of the modified Airlie House classification. ETDRS report number 10. Early Treatment Diabetic Retinopathy Study Research Group. Ophthalmology 98, 786–806 (1991).

Han, K. et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 45, 87–110 (2022).

Muthukumar, V. et al. Classification vs regression in overparameterized regimes: does the loss function matter? J. Mach. Learn. Res. 22, 222:221–222 (2020).

Hodson, T. O. Root-mean-square error (RMSE) or mean absolute error (MAE): when to use them or not. Geosci. Model Dev. (2022).

Funding

Support: The study was funded by National Key Research and Development Program of China (2023YFC3503005) and Chengdu University of Traditional Chinese Medicine (XKTD2022009).

Author information

Contributions

Concept and design: Xiangjie Leng, Xuejing Lu. Acquisition, analysis, or interpretation of data: Xiangjie Leng, Ruijie Shi, Zhaorui Xu, Hai Zhang, Wenxuan Xu, Keyin Zhu. Drafting of the manuscript: Xiangjie Leng, Ruijie Shi, Zhaorui Xu, Hai Zhang. Critical revision of the manuscript for important intellectual content: Xiangjie Leng, Xuejing Lu. Statistical analysis: Xiangjie Leng, Ruijie Shi. Administrative, technical, or material support: Xuejing Lu. Supervision: Xuejing Lu.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Leng, X., Shi, R., Xu, Z. et al. Development and validation of CNN-MLP models for predicting anti-VEGF therapy outcomes in diabetic macular edema. Sci Rep 14, 30270 (2024). https://doi.org/10.1038/s41598-024-82007-4

DOI: https://doi.org/10.1038/s41598-024-82007-4