Abstract

Coronary artery disease (CAD) is a leading cause of death. It is a complex heart disease associated with many risk factors and a variety of symptoms, and its prevalence has grown markedly in recent years. Prompt prediction of CAD risk can lower the death rate by permitting timely and targeted treatment. Angiography is the most precise technique for diagnosing CAD; however, it is expensive and has several side effects. Multi-criteria decision-making approaches can detect CAD by analysing key clinical indicators such as ChestPain type, ST_Slope, and HeartDisease presence. By assessing these factors, such a model improves diagnostic accuracy and supports informed clinical decisions for rapid CAD detection. Machine learning (ML) and deep learning (DL) offer many models and algorithms that are widely used to detect CAD accurately and quickly. Recent studies have collected numerous patient features and applied different ML and DL models to obtain highly accurate results with fewer side effects and lower costs. This study presents a Leveraging Fuzzy Wavelet Neural Network with Decision Making Approach for Coronary Artery Disease Prediction (LFWNNDMA-CADP) technique. The LFWNNDMA-CADP technique focuses on a multi-criteria decision-making model for predicting CAD from biomedical data. In the data pre-processing stage, Z-score normalization converts the input data into a uniform format. Next, the improved ant colony optimization (IACO) method selects an optimal subset of features. The classification of CAD is then performed by the fuzzy wavelet neural network (FWNN) technique. Finally, the hyperparameters of the FWNN model are tuned by the hybrid crayfish optimization algorithm with self-adaptive differential evolution (COASaDE) technique. The LFWNNDMA-CADP approach is evaluated on a benchmark database, where the experimental validation shows a superior accuracy of 99.49% over existing techniques.

Introduction

The heart is essential for survival: it pumps oxygenated blood efficiently and regulates important hormones to maintain an optimal blood pressure level [1]. Any deviation from its normal function can lead to heart illnesses, collectively called cardiovascular diseases (CVDs). CVDs comprise various illnesses that affect the heart and blood vessels, such as pulmonary embolism, cerebrovascular disorders, congenital abnormalities, peripheral arterial disease, CAD, and irregular heart rhythms [2]. Among CVDs, CAD receives particular attention owing to its association with overall death rates. According to the WHO, the burden of CVDs is severe: approximately 17.9 million deaths per year are attributed to these illnesses globally [3]. Traditional invasive techniques for recognising heart disease are based on physical and laboratory tests, examination by a doctor, and similar procedures [4]. Amongst the invasive methods, catheterization and angiography are important. Catheterization is a medical technique in which a thin tube, a catheter, is inserted into a blood vessel to detect heart disorders. Angiography is a medical imaging method that uses X-rays to view the blood vessels within the body. Both techniques have limitations, such as invasiveness (with a risk of infection or bleeding), high cost, and limited access [5]. Magnetic Resonance Imaging (MRI) and Computed Tomography (CT) are also often used to identify and diagnose heart illness. These methods have limitations of their own: the patient must remain still in a fixed position, CT involves exposure to ionizing radiation, the examinations are costly, and early-stage or mild heart disease may go undetected [6].

An effective technique is therefore needed to help experts identify CAD at an early stage. Artificial intelligence (AI) is frequently used for prediction to address these difficulties, with ML and DL as its main approaches. Such a prediction method analyses patient data to determine whether the patient has the illness and can achieve considerably more accurate results than physical diagnosis [7]. Among the techniques often built into medical decision support systems are ML-based diagnosis methods, which predict the presence of illness in a patient. The use of ML models across applications is becoming increasingly common and widespread. Owing to the enormous size and complexity of the data in these applications, extracting hidden and valuable information from them is vital [8]. This information is used to improve the quality of numerous services. By analysing it, researchers, organizations, governments, and others can offer better services and add value to their interactions with patients, users, and customers. CVDs, specifically CAD, are a leading cause of mortality worldwide, with significant social and economic impacts. Early detection and accurate prediction of CAD can substantially improve patient outcomes and reduce healthcare costs [9]. However, the complexity and variability of biomedical data pose challenges for developing reliable diagnostic models, and conventional models often struggle to capture the complex relationships within the data. This study aims to address these challenges with advanced ML models, integrating fuzzy logic (FL), wavelet neural networks (NNs), and multi-criteria decision-making for enhanced CAD prediction accuracy. The goal is to provide a more efficient tool for early diagnosis, aiding timely intervention and better management of CAD [10].

This study presents a Leveraging Fuzzy Wavelet Neural Network with Decision Making Approach for Coronary Artery Disease Prediction (LFWNNDMA-CADP) technique. The LFWNNDMA-CADP technique focuses on a multi-criteria decision-making model for predicting CAD from biomedical data. In the data pre-processing stage, Z-score normalization converts the input data into a uniform format. Next, the improved ant colony optimization (IACO) method selects an optimal subset of features. The classification of CAD is then performed by the fuzzy wavelet neural network (FWNN) technique. Finally, the hyperparameters of the FWNN model are tuned by the hybrid crayfish optimization algorithm with self-adaptive differential evolution (COASaDE) technique. The LFWNNDMA-CADP model is evaluated on a benchmark dataset. The key contributions of the LFWNNDMA-CADP model are listed below.

- The LFWNNDMA-CADP method uses Z-score normalization to standardize the input data, ensuring that all features are on a comparable scale. This pre-processing step prevents features with larger numerical ranges from dominating the model’s learning process. By normalizing the data, the method improves the model’s ability to learn patterns efficiently and raises classification accuracy.

- The IACO method is employed to choose relevant features for the classification model. It improves the efficiency of feature selection by concentrating on the most informative attributes, thereby reducing computational complexity. As a result, the accuracy of the model in classifying CAD is significantly improved.

- The LFWNNDMA-CADP method uses the FWNN to classify CAD, exploiting its ability to capture complex, non-linear patterns in the data. This improves diagnostic accuracy by adapting to variations in the input features. By incorporating FL and wavelet transforms (WT), the FWNN enhances the robustness and precision of CAD classification.

- The LFWNNDMA-CADP method employs the COASaDE model to fine-tune the hyperparameters of the FWNN, seeking optimal configurations for training. Exploring the hyperparameter space effectively enhances model performance. The use of COASaDE improves the predictive accuracy and stability of the FWNN in diagnosing CAD.

- The LFWNNDMA-CADP approach combines IACO, FWNN, and COASaDE in a single framework, improving feature selection, classification accuracy, and hyperparameter optimization for CAD diagnosis. The approach draws on the merits of each technique to address the limitations of conventional methods. Its novelty lies in the integration of these advanced algorithms, resulting in more accurate and effective CAD classification.

Literature review

Mansoor et al. [11] present an ML method to predict CAD precisely using a DL method in a classifier context. An ensemble voting classifier is built from different models such as XGBoost, naive Bayes (NB), Random Forest (RF), decision tree (DT), and support vector machine (SVM), among others. SMOTE is used to address class imbalance, and the Chi-square test is used for feature selection (FS). In [12], a novel ensemble Quine McCluskey Binary Classifier (QMBC) model is developed. The QMBC method uses an ensemble of seven models together with a multi-layer perceptron and performs very well on binary-class datasets. The study also uses feature extraction and FS methods to speed up prediction; ANOVA and Chi-square tests are used to identify features and produce a dataset subset. In [13], the Adaptive Synthetic Sampling model is used for data balancing, whereas the Point Biserial Correlation Coefficient is used as an FS method. Two DL methods, a Blending-based CVD Detection Network (BlCVDD-Net) and an Ensemble-based CVD Detection Network (EnsCVDD-Net), are presented for the classification and precise prediction of CVDs. EnsCVDD-Net is built by applying an ensemble technique to LeNet and the Gated Recurrent Unit (GRU), and BlCVDD-Net is built by blending LeNet, GRU, and a Multilayer Perceptron (MLP). Shapley Additive exPlanations is used to give an accurate understanding of the influence that various factors have on CVD diagnosis. In [14], an intelligent healthcare method for predicting CVD based on the Swarm-Artificial NN (Swarm-ANN) approach is presented. First, the Swarm-ANN approach randomly creates a pre-defined number of NNs and assesses them based on the consistency of their solutions. The NN populations are then trained in two phases of weight modification, with the weights adjusted by a newly designed heuristic formulation.
Elsedimy et al. [15] present a heart disease recognition method based on the SVM classifier and the quantum-behaved particle swarm optimizer (QPSO), termed QPSO-SVM, to analyse and predict the risk of heart disease. First, the data are pre-processed by converting nominal data into numerical data and applying effective scaling methods. Then, the SVM is formulated as an optimization problem and solved with QPSO to identify the optimal features. Finally, a self-adaptive threshold technique for fine-tuning the QPSO-SVM parameters is proposed. Sowmiya and Malar [16] introduce a hybrid FS technique based on Lasso, RF-based Boruta, and recursive feature elimination. The importance of a feature is determined by the score that each technique assigns. ML methods such as RF, SVM, K-NN, LR, DT, and NB serve as base classifiers. Ensemble models such as boosting and bagging are then built from the base classifiers. The Z-Alizadeh Sani dataset is used to build and test the method.

Saheed et al. [17] develop a new version of the Bidirectional Long Short-Term Memory (BiLSTM) method that integrates an attention mechanism (AM) to enable FS. Feature importance is determined by computing the coefficient scores in each of the SML models. Grid search further improved the models, which were evaluated both with and without hyperparameter tuning. In [18], a novel stacking method is proposed that combines four classifiers, Ridge Classifier (RC), Passive Aggressive Classifier (PAC), eXtreme Gradient Boosting (XGBoost), and Stochastic Gradient Descent Classifier (SGDC), at the base layer, with LogitBoost producing the final predictions. In PaRSEL, three dimensionality reduction techniques, Recursive Feature Elimination (RFE), Factor Analysis (FA), and Linear Discriminant Analysis (LDA), are used to reduce the dimensionality and choose the most relevant features. Moreover, eight balancing methods are used to handle the imbalanced nature of the dataset. Imran, Ramay, and Abbas [19] present an Ensemble Stacked NN (ESNN) model integrating ML and DL, using multiple datasets, data pre-processing, PCA, and nine ML approaches, with RF as a meta-model for enhanced prediction accuracy. Li et al. [20] propose a 2D motion-compensated reconstruction method in which registration is based on mutual information (MI) and a rigidity penalty (RP), optimized with an adaptive stochastic gradient descent (ASGD) model. Zhao et al. [21] develop an MLP approach for early detection of coronary microvascular dysfunction (CMD) using vectorcardiography (VCG) and cardiodynamicsgram (CDG) features, optimized with sequential backward selection and sparrow search. Ma, Chen, and Ho [22] present a lightweight convolutional NN (CNN) for classifying phonocardiogram data, using self-supervised learning (SSL) for enhanced accuracy and faster convergence. A mobile app prototype enables in-device inference and fine-tuning.
Muthulakshmi and Kavitha [23] integrate anatomical left ventricle myocardium features with an optimized extreme learning machine (ELM) for precise classification of subjects by ejection fraction (EF) severity using CMR images. Gupta et al. [24] introduce an ensemble learning-based medical recommendation system that employs the Fast Fourier Transform (FFT) for enhanced early detection and management of CAD. Gupta et al. [25] propose spectrogram-based feature extraction for heartbeat analysis, capturing energy changes over time. After pre-processing with a digital bandpass filter, the features are optimized with the spider monkey optimization (SMO) technique.

Honi and Szathmary [26] present a 1DCNN approach with optimized architecture and feature selection to enhance predictive performance, validated through cross-validation. Lazazzera and Carrault [27] introduce MonEco, a health monitoring ecosystem that anticipates respiratory and cardiovascular disorders using the eCardio app, the CareUp smartwatch, and the UpNEA smart glove. Gupta et al. [28] compare three methods, IPCA, FrFT, and FrWT, for detecting R-peaks in ECG signals, with FrWT showing the best performance. El Boujnouni et al. [29] present a diagnosis system that applies capsule networks to wavelet-decomposed ECG images to classify eight diseases, attaining high performance with less training data and time. Filist et al. [30] develop a multimodal classifier using heterogeneous data and intelligent agents for early CVD diagnosis. Bhardwaj, Singh, and Joshi [31] employ continuous WT (CWT) scalograms of PCG signals and a 2-D CNN for multiclass classification of valvular heart diseases, with model interpretation through occlusion maps and deep dream images. Baseer et al. [32] present a CAD prediction model that integrates IoMT for data collection with AI techniques, namely TabNet and CatBoost, for accurate, real-time heart disease risk assessment, using diverse patient data to enhance precision and personalization. Ahmed et al. [33] develop a disease prediction system that implements fog computing, Enhanced Grey-Wolf Optimization-based Feature Selection (EGWO-FSA), and a Deep Belief Network (DBN) to predict stroke and heart-related diseases with reduced computation time. Dai et al. [34] introduce a CAD detection method that uses raw heart sound signals and incorporates a multidomain feature model and a medical multidomain feature fusion model into a combined framework. Muthu and Devadoss [35] propose a Genetically Optimized NN (GONN) with WT for glaucoma detection.
Seoni et al. [36] present the Spatial Uncertainty Estimator (SUE) for assessing the reliability of ECG classification. Khanna et al. [37] present an IoT and DL-based healthcare model (IoTDL-HDD). The approach uses BiLSTM for feature extraction, optimized with the Artificial Flora Optimization (AFO) approach, and classifies signals with a fuzzy deep NN (FDNN). Sasikala and Mohanarathinam [38] use the Synthetic Minority Oversampling Technique (SMOTE) to address imbalanced data in PAD detection, together with six AI classifiers and hyperparameter optimization.

The studies reviewed above apply ML and DL methods to predict CAD and other cardiovascular conditions, using techniques such as ensemble classifiers, NNs, and feature extraction models. To address imbalanced data, methods such as SMOTE, GWO, and adaptive sampling are used. WTs are employed to process ECG and PCG signals, with CNNs and other models used for classification. Optimization methods such as artificial flora and swarm-based approaches enhance model performance, while hybrid models integrating ML with FL and DL improve prediction reliability. The main challenges are computational complexity, data scalability, and generalization to real-world applications. Despite the advances in AI-based models for CVD prediction, challenges remain in handling imbalanced datasets, feature selection, and real-time prediction accuracy. Furthermore, existing models often struggle to generalize across diverse patient populations and to incorporate heterogeneous data sources, which underlines the need for more robust, scalable, and interpretable approaches.

Materials and methods

In this study, an LFWNNDMA-CADP technique is presented. The technique focuses on a multi-criteria decision-making model for predicting CAD from biomedical data. To achieve this, the LFWNNDMA-CADP model comprises data pre-processing, FS, classification, and hyperparameter tuning. By integrating multi-criteria decision-making strategies with advanced optimization techniques, the model provides a tool for clinical decision support systems that helps healthcare professionals identify high-risk patients with greater precision and timeliness. Figure 1 portrays the complete workflow of the LFWNNDMA-CADP technique.

Z-score normalization

In the LFWNNDMA-CADP method, the data pre-processing stage applies Z-score normalization to convert the input data into a uniform format. Z-score normalization is a statistical technique that rescales data so that the mean is 0 and the standard deviation is 1 [39]. In CAD prediction from biomedical data, it alleviates the effect of differing measurement scales across features such as cholesterol level, blood pressure, and other biometrics. Every feature value is converted into a Z-score, which expresses how many standard deviations the value lies from the mean. This standardization improves the performance of ML techniques by letting them learn patterns without being biased by the scale of the input data. It also eases convergence during model training, leading to more precise predictions of CAD risk. Overall, it is a vital pre-processing stage in the analysis of biomedical datasets for effective CAD prediction.
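As an illustration, Z-score normalization can be sketched in a few lines of Python. The function name `zscore_columns` and the patient records are hypothetical, and the population standard deviation is assumed; the study does not state which variant it uses.

```python
import math

def zscore_columns(rows):
    """Standardize each column of a list-of-rows table to mean 0 and SD 1."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    # Population SD (an assumption); a constant column gets SD 1 to avoid dividing by zero.
    stds = [
        math.sqrt(sum((v - m) ** 2 for v in c) / n) or 1.0
        for c, m in zip(cols, means)
    ]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]

# Hypothetical records: [age, resting blood pressure, cholesterol]
patients = [[40, 140, 289], [49, 160, 180], [37, 130, 283], [54, 150, 195]]
scaled = zscore_columns(patients)
```

In practice the means and standard deviations would normally be estimated on the training split only and then reused for the test split, so that no information leaks from the test data.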

FS using IACO model

Next, the IACO approach is used to select an optimal subset of features [40]. This model offers several merits over conventional methods such as genetic algorithms (GAs) or wrapper-based approaches. First, IACO is inspired by the natural behaviour of ants and uses pheromone trails to guide the search, which helps it explore the solution space effectively and avoid getting stuck in local optima. Its probabilistic behaviour allows it to handle complex, high-dimensional datasets effectively. Compared with other methods, IACO can attain better generalization by choosing the most relevant features, thus mitigating overfitting. It also adapts dynamically by refining the search based on feedback, making it more robust to noise in the data. Furthermore, IACO is computationally efficient, particularly for large datasets, and needs fewer iterations to converge, which is a key merit over exhaustive methods such as brute-force search. This combination of flexibility, efficiency, and adaptability makes IACO a powerful tool for feature selection. Figure 2 illustrates the workflow of the IACO model.

ACO is an intelligent optimization technique that simulates the foraging behaviour of ants. It is a population-based optimizer that shows robustness and flexibility. It relies on pheromone changes and collaboration among individual ants to identify the optimal solution to a problem. In the method, every ant decides which place to visit next depending on the pheromone concentration and updates the pheromones once a cycle is finished. The probability of an ant moving from location \(i\) to location \(j\) at time \(t\) is given in Eq. (1):

where \(p_{ij}^{k}(t)\) denotes the transition probability of ant \(k\) from location \(i\) to location \(j\) at time \(t\); \(\tau_{ij}(t)\) represents the pheromone concentration on path \(i\to j\) at time \(t\); \(\eta_{ij}(t)\) signifies the heuristic information for the move \(i\to j\), typically taken as \(1/d_{ij}(t)\), where \(d_{ij}(t)\) denotes the distance between points \(i\) and \(j\); \(a=\{1,2,\dots,S\}\) denotes the set of feasible points; \(\alpha\) is the pheromone significance factor; and \(\beta\) is the heuristic factor.
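Assuming Eq. (1) takes the standard ACO form, in which the probability of moving to a feasible point \(j\) is proportional to \(\tau_{ij}^{\alpha}\eta_{ij}^{\beta}\) and normalized over all feasible points, the transition rule can be sketched as follows. The function names and the toy distance matrix are hypothetical; in feature selection the points would correspond to candidate features.

```python
import random

def transition_probabilities(i, allowed, tau, eta, alpha, beta):
    """Probability of moving from i to each j in `allowed` (standard ACO rule, assumed)."""
    weights = {j: (tau[i][j] ** alpha) * (eta[i][j] ** beta) for j in allowed}
    total = sum(weights.values())
    return {j: w / total for j, w in weights.items()}

def choose_next(i, allowed, tau, eta, alpha, beta, rng=random):
    """Roulette-wheel selection of the next point according to the probabilities."""
    probs = transition_probabilities(i, allowed, tau, eta, alpha, beta)
    points = list(probs)
    return rng.choices(points, weights=[probs[j] for j in points], k=1)[0]

# Toy example with three points; the heuristic is eta = 1 / distance.
dist = [[0, 2, 4], [2, 0, 1], [4, 1, 0]]
eta = [[0.0 if d == 0 else 1.0 / d for d in row] for row in dist]
tau = [[1.0] * 3 for _ in range(3)]          # uniform initial pheromone
probs = transition_probabilities(0, [1, 2], tau, eta, alpha=1.0, beta=2.0)
next_point = choose_next(0, [1, 2], tau, eta, alpha=1.0, beta=2.0)
```

With uniform pheromone, the nearer point (distance 2) receives four times the weight of the farther one (distance 4) because \(\beta=2\) squares the heuristic term.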

Ants depend mainly on pheromones to choose the next route, so the pheromone concentration at every iteration plays a vital role in path selection. To speed up convergence and increase the probability that the route selected in the subsequent cycle is shorter than the one selected in the preceding cycle, the pheromone concentration expression is improved as in Eq. (2):

where \(\rho\) denotes the evaporation rate (or reduction coefficient) of the pheromone, typically between zero and one; \(\lambda\) denotes the additional pheromone strengthening factor; \(\Delta\tau_{ij}\) signifies the increase in the pheromone; and \(Q\) is the pheromone intensity, a positive constant used to tune the strength of the pheromone update.

The pheromone significance factor \(\alpha\) expresses the relative importance of the pheromone information accumulated by the ants in steering their search. An \(\alpha\) value that is too large increases the probability that ants select a previously travelled path again, reducing the randomness of the search. On the other hand, an \(\alpha\) value that is too small causes the search to fall into a local optimum. As the number of iterations increases, the significance factor grows, as given in Eq. (3):

where \(K\) denotes the maximum number of iterations.

In the initial iterations, the pheromone significance factor \(\alpha\) is set to a small value to encourage broad exploration and increase the global search ability of ACO. During the intermediate iterations, \(\alpha\) is adjusted to 2.5 so that ACO preserves its search ability without falling into a local optimum. In the final iterations, \(\alpha\) is raised to a higher value to accelerate convergence and eventually stabilize the results.

The fitness function (FF) reflects both the classifier accuracy and the number of selected features. It minimizes the classification error and reduces the size of the feature subset. The following FF is therefore used to assess individual solutions, as given in Eq. (4).

where \(ErrorRate\) is the classification error rate obtained with the selected features, computed as the ratio of incorrect classifications to the total number of classifications made and expressed as a value between 0 and 1; \(\#SF\) denotes the number of selected features; and \(\#All\_F\) denotes the total number of features in the original dataset. The weight \(\alpha\) controls the relative importance of classification quality and subset length. In this study, \(\alpha\) is set to 0.9.
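A fitness function of this kind is commonly written as \(\alpha\cdot ErrorRate+(1-\alpha)\cdot\#SF/\#All\_F\). The sketch below assumes that weighted-sum form, which is consistent with the definitions above but is not confirmed as the exact expression of Eq. (4); the function name and the example numbers are hypothetical.

```python
def fitness(error_rate, n_selected, n_total, alpha=0.9):
    """Weighted sum of classification error and relative subset size (lower is better)."""
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must lie in [0, 1]")
    if not 0 < n_selected <= n_total:
        raise ValueError("need 0 < n_selected <= n_total")
    # alpha weights the error term; (1 - alpha) weights the fraction of features kept.
    return alpha * error_rate + (1.0 - alpha) * n_selected / n_total

# Two hypothetical subsets with the same error rate but different sizes.
small_subset = fitness(0.05, 6, 11)
full_set = fitness(0.05, 11, 11)
```

With \(\alpha=0.9\), the error term dominates, so a smaller subset is preferred only when it does not cost much accuracy.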

Classification model using FWNN method

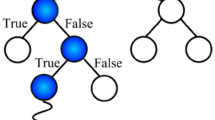

The classification of CAD is performed by the FWNN method [41]. This technique combines the merits of FL, WT, and NNs, which makes it particularly effective for classification tasks. The FL component handles uncertainty and imprecision in the data, while the WT provides multi-resolution analysis, enabling the method to capture both low- and high-frequency patterns in the input data. The NN component provides adaptive learning and pattern recognition. Compared with conventional approaches, FWNN is better suited to complex, non-linear, and noisy data, as it performs feature extraction and classification in an integrated framework. Its ability to generalize well from fewer training samples and its robustness to noise make it preferable to methods such as decision trees (DTs) or SVMs, particularly in applications with ambiguous or incomplete data. Moreover, FWNN can offer higher accuracy, faster convergence, and a more interpretable decision-making process than purely statistical models. The proposed FWNN method combines a Fuzzy NN (FNN) and a Wavelet NN (WNN) for classification. The network comprises 8 layers, described below. Figure 3 shows the structure of the FWNN model.

First layer

This layer receives the input features of the proposed technique.

Image classification and independent parameter features are each integrated into the layer, which is described by \(X=\{X_{j}\mid j=1,\dots,n\}\).

Second layer

This layer contains the WNN and FNN computations. In the wavelet part of the layer, the wavelet is computed using the equation given below.

The number of wavelets is denoted by \(N\), and the number of input features by \(n\). The wavelet transform provides a localized representation of the function, and this local property makes it easier to train NNs to model highly nonlinear data, as shown.

where \(\psi(x)\in L^{2}(R)\) denotes the wavelet function,

and \(\widehat{\psi}(\omega)\) is the Fourier transform of \(\psi(x)\). To handle multivariable processes, multidimensional wavelets need to be introduced. In the fuzzy part of layer 2, the fuzzy membership function (MF) is computed as specified below.

Here, \(c_{kj}\) denotes the center and \(\sigma_{kj}\) the standard deviation (SD) of the \(k\)-th MF.

Third layer

The output of the third layer is later multiplied in the aggregation layer. Here, several WNNs are used, each with a wavelet activation function.

Additionally, each node in layer three represents a single fuzzy rule. The resulting signal is computed with the AND operator.

Fourth layer

The output of the wavelet part is computed at layer four.

\(R_{k}\): IF \(x_{1}\) is \(A_{k1}\) AND \(\dots\) AND \(x_{n}\) is \(A_{kn}\), THEN

Let \(x_{1},x_{2},\dots,x_{n}\) be the input features, \(Y_{1},Y_{2},\dots,Y_{M}\) the outputs of layer four, and \(A_{kj}\) the \(k\)-th fuzzy set. The bias and the weight matrices are \(\overline{y}_{k}\) and \(w_{i}^{k}\), respectively.

Fifth layer

This layer combines the FNN output of layer 3 with the result of layer 4: the fifth layer multiplies the third-layer output by the fourth-layer output.

Sixth layer

Two neurons operate on the output signals of the fifth and third layers, respectively. Their quotient is produced by the output neuron of the seventh layer.

Seventh layer

The output is accumulated by layer 7.

Eighth layer

The final layer of the network performs the feature classification. Gradient descent is applied to train the FWNN after parameter calibration with standard PSO. The parameters \(\varTheta=(c_{kj},\sigma_{kj},b_{ij}^{k},a_{ij}^{k},w_{i}^{k},\overline{y}_{k})\) are updated in the direction opposite to the gradient of the objective, as shown.
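The layer-by-layer description can be condensed into a single forward pass. The sketch below is an illustration under stated assumptions rather than the network used in the study: it assumes Gaussian MFs, a product as the AND operator, a Mexican-hat mother wavelet, one wavelet per rule and input, and a single scalar output. The function names and the parameter layout are hypothetical.

```python
import math

def mexican_hat(z):
    """Mexican-hat mother wavelet (an assumed choice of wavelet)."""
    return (1.0 - z * z) * math.exp(-0.5 * z * z)

def fwnn_forward(x, c, sigma, a, b, w, y_bar):
    """Forward pass of a small fuzzy wavelet network with one output.

    c, sigma, a, b and w are [rule][input] lists; y_bar holds one bias per rule.
    """
    numerator, denominator = 0.0, 0.0
    for k in range(len(c)):
        # Fuzzy part: Gaussian memberships combined with a product AND.
        mu_k = 1.0
        for j, xj in enumerate(x):
            mu_k *= math.exp(-((xj - c[k][j]) ** 2) / (sigma[k][j] ** 2))
        # Wavelet part: weighted sum of dilated and translated wavelets plus a bias.
        y_k = y_bar[k] + sum(
            w[k][j] * mexican_hat((xj - b[k][j]) / a[k][j])
            for j, xj in enumerate(x)
        )
        numerator += mu_k * y_k    # rule strength times wavelet output
        denominator += mu_k        # sum of rule strengths
    # Quotient of the two sums; the floor guards against all strengths underflowing.
    return numerator / max(denominator, 1e-12)

# Two rules, two inputs, hypothetical parameters.
x = [0.0, 0.0]
out = fwnn_forward(
    x,
    c=[[0.0, 0.0], [1.0, 1.0]], sigma=[[1.0, 1.0], [1.0, 1.0]],
    a=[[1.0, 1.0], [1.0, 1.0]], b=[[0.0, 0.0], [0.0, 0.0]],
    w=[[1.0, 1.0], [0.0, 0.0]], y_bar=[0.0, 0.5],
)
```

For binary CAD classification, the scalar output would still have to be mapped to a class, for example by thresholding; that step is left out because the text does not specify it.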

Hyperparameter tuning using COASaDE model

Finally, the hyperparameters of the FWNN model are tuned with the hybrid COASaDE method [42]. This model is chosen for its ability to balance exploration and exploitation during optimization. COA finds good solutions to complex, multi-modal problems by imitating the natural behaviour of crayfish searching for food, which promotes both local and global search. When integrated with SaDE, the model gains further robustness, as SaDE adapts the control parameters within the algorithm itself, allowing dynamic adjustment during optimization. This self-adaptivity improves the effectiveness and accuracy of hyperparameter tuning and the convergence rate without manual parameter changes. Compared with conventional techniques such as grid search or random search, COASaDE explores large search spaces more efficiently, avoids premature convergence, and can adapt to diverse problem landscapes. Its hybrid nature also makes it more resilient to noise and outliers across a range of ML models. Figure 4 illustrates the steps involved in the COASaDE technique.

The hybrid COASaDE optimizer merges COA with SaDE. The combined method pairs the exploration ability of COA, known for its three-phase behaviour-based strategy, with the adaptive exploitation capabilities of SaDE, which adjusts its parameters dynamically. The model begins with COA’s exploration stage, investigating the solution space broadly to prevent early convergence. Once promising solutions are identified, the model switches to SaDE’s adaptive mechanisms, which refine these solutions through self-adjusting crossover and mutation. This provides thorough exploration followed by effective exploitation, with each method compensating for the other’s limitations. The main challenge in such hybrid approaches lies in achieving a smooth transition between the techniques while preserving a balance that retains the strengths of both SaDE and COA.

Initialize

The model initializes the population within the search-space bounds using uniformly distributed random numbers. This provides diverse starting points for the search, which increases the probability of discovering the global optimum, as given in Eq. (15):

where \(X_{i}^{(0)}\) represents the location of the \(i\)-th individual in the initial population; \(ub\) and \(lb\) are the upper and lower bounds, respectively; and \(r\) denotes a uniformly distributed random number.
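Uniform initialization within bounds is usually written as \(X_{i}^{(0)}=lb+r\cdot(ub-lb)\) with \(r\sim\mathcal{U}(0,1)\). A minimal sketch under that assumption is shown below; the function name, the population size, and the two example hyperparameters with their bounds are hypothetical.

```python
import random

def init_population(pop_size, lb, ub, rng):
    """Sample each individual uniformly inside the box [lb, ub], one draw per dimension."""
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lb, ub)]
        for _ in range(pop_size)
    ]

rng = random.Random(0)                 # fixed seed so the run is repeatable
# Hypothetical search box, e.g. a learning rate and a number of fuzzy rules.
lb, ub = [0.001, 2.0], [0.1, 20.0]
population = init_population(5, lb, ub, rng)
```

Integer-valued hyperparameters, such as a rule count, would be rounded before the candidate is evaluated.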

Evaluation

The fitness of every individual is calculated with the objective function. This step determines the quality of each solution and guides the search, as shown in Eq. (16):

Here, \(\:f\left(\cdot\:\right)\) denotes the objective function and \(\:{f}_{i}^{\left(0\right)}\) represents the fitness of the \(\:i\)th individual.
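The evaluation step can be sketched as a single pass over the population, assuming f_i = f(X_i). The `sphere` objective below is only a placeholder; in the setting described here the objective would score an FWNN trained with the hyperparameters encoded in each individual.

```python
def evaluate(population, objective):
    """Return the fitness f(X_i) of each individual, in population order."""
    return [objective(x) for x in population]


def sphere(x):
    # Placeholder objective (sum of squares), used only for illustration.
    return sum(v * v for v in x)


fitness = evaluate([[0.0, 0.0], [1.0, 2.0], [-3.0, 0.5]], sphere)
```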

Initialization of mutation factor and crossover rate

The mutation factor and crossover rate are initialized to give balanced exploration and exploitation at the start, as shown in Eq. (17):

Here, \(\:{F}_{i}^{\left(0\right)}\) denotes the mutation factor and \(\:C{R}_{i}^{\left(0\right)}\) represents the crossover rate of the \(\:i\)th individual.

Adaptive update

The crossover rate and mutation factor are updated adaptively based on previous success, which improves the model’s ability to adapt dynamically to different optimization landscapes, as presented in Eqs. (18) and (19):

Here, \(\:N(\mu\:,\sigma\:)\) denotes a normal distribution with mean \(\:\mu\:\) and standard deviation \(\:\sigma\:\).
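A minimal sketch of such an adaptive update is given below. It assumes a SaDE-style rule in which F and CR are resampled from normal distributions for every individual and the CR mean is moved toward the values that recently produced improvements. The default means, standard deviations, and clipping ranges are illustrative assumptions, not values reported in this study.

```python
import random


def _clip(value, low, high):
    return max(low, min(high, value))


def sample_control_params(pop_size, f_mean=0.5, f_std=0.3,
                          cr_mean=0.5, cr_std=0.1, rng=None):
    """Sample one mutation factor F and one crossover rate CR per individual."""
    rng = rng or random.Random()
    # Clipping keeps the sampled values usable; the ranges are assumptions.
    F = [_clip(rng.gauss(f_mean, f_std), 0.05, 1.0) for _ in range(pop_size)]
    CR = [_clip(rng.gauss(cr_mean, cr_std), 0.0, 1.0) for _ in range(pop_size)]
    return F, CR


def update_cr_mean(cr_mean, successful_cr):
    """Move the CR mean to the average of CR values that led to improvements."""
    if not successful_cr:
        return cr_mean  # nothing succeeded, keep the previous mean
    return sum(successful_cr) / len(successful_cr)


F, CR = sample_control_params(20, rng=random.Random(0))
```

Feeding the result of `update_cr_mean` back into `cr_mean` at the next call is what makes the sampling depend on previous success.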

Behavior of COA

This step applies COA-specific behaviors such as competition and foraging, selected according to the temperature \(\:temp\), which helps to diversify the search and avoid local optima, as shown in Eqs. (20) and (21):

Here, \(\:{C}^{\left(t\right)}\) refers to the decreasing factor, \(\:r\sim\:\mathcal{U}\left(\text{0,1}\right)\) is a random vector, \(\:x{f}^{\left(t\right)}\) signifies the food location, and \(\:{X}_{z}\) is the location of a randomly selected individual.
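One plausible reading of this temperature-dependent step is sketched below. The update forms are assumptions pieced together from the symbols defined above and from common descriptions of the crayfish optimization algorithm: a decreasing factor C = 2 − t/T, a temperature drawn from [20, 35), a food-directed step above the 30-degree threshold, and a peer-relative step otherwise. The function names and constants are hypothetical and may differ from the exact equations used in the study.

```python
import random


def decreasing_factor(t, max_iter):
    # Assumed linear decay from 2 (first iteration) to 1 (last iteration).
    return 2.0 - t / max_iter


def coa_move(x, x_rand, food, t, max_iter, rng):
    """Return a new position for x using a temperature-dependent COA-style step."""
    c = decreasing_factor(t, max_iter)
    temp = 20.0 + 15.0 * rng.random()  # assumed temperature range [20, 35)
    if temp > 30.0:
        # Food-directed step: move toward the food location, scaled by C and r.
        return [xi + c * rng.random() * (fi - xi) for xi, fi in zip(x, food)]
    # Peer-relative step: shift relative to a randomly chosen individual X_z.
    return [xi - zi + fi for xi, zi, fi in zip(x, x_rand, food)]


new_x = coa_move([0.2, 0.8], [0.5, 0.1], [0.4, 0.4], t=3, max_iter=10,
                 rng=random.Random(1))
```

Because the temperature is redrawn on every call, individuals in the same iteration can follow different behaviors, which is the source of the added diversity.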

SaDE crossover and mutation

The crossover and mutation mechanisms of differential evolution are used to generate trial vectors, promoting solution diversity and strengthening the exploration ability of the model, as shown in Eqs. (22) and (23):

Here, \(\:r1,r2,r3\) are distinct indices different from \(\:i\), \(\:{V}_{i}^{\left(t\right)}\) represents the mutant vector, \(\:{U}_{i}^{\left(t\right)}\) denotes the trial vector, \(\:{r}_{j}\sim\:\mathcal{U}\left(\text{0,1}\right)\) is a random number, and \(\:{i}_{rand}\) is a randomly chosen index.
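The symbols above match the classic DE/rand/1 mutation with binomial crossover, so a sketch under that assumption is V_i = X_r1 + F·(X_r2 − X_r3), followed by taking coordinate j from V_i when r_j ≤ CR or j equals the random index, and from X_i otherwise. The function name `de_trial` is hypothetical, and bound handling is left out for brevity.

```python
import random


def de_trial(population, i, F, CR, rng):
    """Build a trial vector for individual i (DE/rand/1 with binomial crossover)."""
    n, dim = len(population), len(population[i])
    # Three distinct indices, all different from i (requires n >= 4).
    r1, r2, r3 = rng.sample([k for k in range(n) if k != i], 3)
    mutant = [
        population[r1][j] + F * (population[r2][j] - population[r3][j])
        for j in range(dim)
    ]
    j_rand = rng.randrange(dim)  # guarantees at least one mutant coordinate
    return [
        mutant[j] if (rng.random() <= CR or j == j_rand) else population[i][j]
        for j in range(dim)
    ]


pop = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 2.0, 2.0],
       [3.0, 3.0, 3.0], [5.0, 5.0, 5.0]]
trial = de_trial(pop, 0, F=0.5, CR=0.9, rng=random.Random(1))
```

In practice the trial vector would also be clipped or reflected back into the search bounds before evaluation.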

Selection

The fitness of each trial vector is evaluated, and an individual is replaced only when the new solution is better. This step ensures that only improved solutions are carried forward, driving convergence toward the optimum, as shown in Eqs. (24) and (25):

Here, \(\:{X}_{i}^{(t+1)}\) denotes the updated location of the \(\:i\)th individual and \(\:{f}_{i}^{(t+1)}\) is its fitness.
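The selection rule can be illustrated with a greedy one-to-one comparison. The sketch assumes a minimization problem; for a score that should be maximized, the comparison would be reversed or the score negated. The function name `select` is hypothetical.

```python
def select(parent, parent_fit, trial, trial_fit):
    """Keep the trial vector only if it is strictly better (lower fitness)."""
    if trial_fit < parent_fit:
        return trial, trial_fit
    return parent, parent_fit


kept, kept_fit = select([0.3, 0.7], 0.42, [0.25, 0.6], 0.37)
```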

Final outputs

The best solution found is returned as the final result together with the convergence curve, as presented in Eqs. (26) and (27):

Here, \(\:best\_position\) represents the best solution found, \(\:best\_fun\) is its fitness, and cuve-f(\(\:t\)) refers to the best fitness at iteration \(\:t.\)
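A small sketch of how these outputs might be collected is shown below, assuming minimization. The helper names `best_of` and `convergence_curve` and all numeric values are hypothetical; the curve stores the best fitness seen up to each iteration.

```python
def best_of(population, fitness):
    """Return (best_position, best_fun) as the individual with lowest fitness."""
    k = min(range(len(fitness)), key=fitness.__getitem__)
    return population[k], fitness[k]


def convergence_curve(fitness_per_iteration):
    """Best-so-far fitness after each iteration (non-increasing by construction)."""
    curve, best = [], float("inf")
    for fits in fitness_per_iteration:
        best = min(best, min(fits))
        curve.append(best)
    return curve


best_position, best_fun = best_of([[0.2, 8], [0.05, 16], [0.01, 32]],
                                  [0.31, 0.12, 0.27])
curve = convergence_curve([[0.9, 0.5], [0.6, 0.4], [0.7, 0.45]])
```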

Exploration stage: The exploration stage of COASaDE is crucial for navigating the search space and uncovering candidate solutions. It combines the exploration abilities of COA with the adaptive mutation and crossover mechanisms of SaDE to balance exploration and exploitation. The model uses different strategies, namely the foraging, summer resort, and competition phases, to produce diverse movement patterns among candidate solutions and to adapt to environmental cues. By including random elements such as the temperature state and the choice of individuals used to adapt the parameters, the model maintains diversity in the population, which prevents it from becoming trapped in local optima and supports thorough exploration of the search space.

Exploitation stage: The exploitation stage of the hybrid COA with SaDE concentrates on refining candidate solutions to improve their quality and their convergence toward optimal solutions. This stage uses the adaptive mutation and crossover mechanisms of SaDE to exploit promising regions of the search space. Fitness evaluation and updating are essential: newly generated solutions are assessed with the objective function, and better solutions replace their parents in the population. Through repeated mutation, crossover, and fitness-based updating, COASaDE converges toward optimal or near-optimal solutions, exploiting the promising regions identified during the exploration stage.

Fitness selection is a key factor influencing the efficiency of the COASaDE approach. The hyperparameter selection process uses a solution-encoding scheme to assess the quality of each candidate solution. In this work, the COASaDE method takes precision as the main criterion for designing the fitness function (FF), and the equation is given below.

Here, \(\:TP\) and \(\:FP\) signify the true positive and false positive values.
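Precision is conventionally defined as TP / (TP + FP), so a precision-based fitness can be sketched as follows. Flipping the score to 1 − precision is an assumption made here so that a minimizing optimizer can use it; the function names are hypothetical and the counts are illustrative.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP); returns 0.0 when nothing is predicted positive."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


def fitness_to_minimize(tp, fp):
    # Assumption: the optimizer minimizes, so higher precision gives lower fitness.
    return 1.0 - precision(tp, fp)


score = precision(90, 10)
```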

Performance validation

In this section, the LFWNNDMA-CADP method is experimentally validated on the heart failure prediction dataset43. The database contains 900 samples in two classes, as represented in Table 1. This dataset is analyzed using multi-criteria methods to detect CAD based on ChestPain type, ST_Slope, and HeartDisease. The dataset has 11 features in total, of which eight are selected.

Figure 5 presents the classifier outcomes of the LFWNNDMA-CADP model with 70%TRAPH and 30%TESPH under ChestPain type. Figure 5a,b displays the confusion matrices, which show accurate classification of both classes. Figure 5c shows the PR analysis, indicating high performance across both classes. Finally, Fig. 5d shows the ROC analysis, with high ROC values for both classes.
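A 70%/30% partition of this kind can be produced with a shuffled index split, as in the sketch below. Whether the authors used a random, stratified, or fixed split is not stated, so the shuffling, the seed, and the function name `train_test_split` are assumptions; the 900 integer rows merely stand in for the samples.

```python
import random


def train_test_split(rows, train_fraction=0.7, seed=0):
    """Shuffle row indices and cut them into a training and a testing part."""
    rng = random.Random(seed)
    idx = list(range(len(rows)))
    rng.shuffle(idx)
    cut = int(round(train_fraction * len(rows)))
    return [rows[i] for i in idx[:cut]], [rows[i] for i in idx[cut:]]


# Stand-in data: 900 row identifiers instead of real patient records.
train, test = train_test_split(list(range(900)))
```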

Table 2 reports the CAD recognition results of the LFWNNDMA-CADP model under 70%TRAPH and 30%TESPH based on ChestPain type. The outcomes indicate that the LFWNNDMA-CADP methodology identified the samples accurately. With 70%TRAPH, the LFWNNDMA-CADP methodology provides an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{G}_{Measure}\) of 97.35, 91.84, 85.98, 88.31, and 88.61%, respectively. Meanwhile, with 30%TESPH, the LFWNNDMA-CADP methodology gives an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{G}_{Measure}\) of 97.46, 93.60, 87.61, 90.04, and 90.32%, respectively.
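For reference, the five measures can be computed from confusion-matrix counts as sketched below. The G-measure is taken here as the geometric mean of precision and recall, which is a common definition but an assumption with respect to this study; the counts in the example are illustrative and do not come from the reported tables, which give class-averaged values.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, F1 and G-measure for one class."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    g_measure = math.sqrt(precision * recall)  # assumed definition
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "g_measure": g_measure,
    }


metrics = binary_metrics(tp=8, fp=2, fn=2, tn=8)
```

Averaging these per-class values over all classes gives figures comparable in kind to the averages quoted in the tables.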

Figure 6 shows the training \(\:acc{u}_{y}\) (TRAAC) and validation \(\:acc{u}_{y}\) (VLAAC) of the LFWNNDMA-CADP method under ChestPain type. The \(\:acc{u}_{y}\) values are computed over 0–25 epochs. Both TRAAC and VLAAC show an increasing trend, indicating that the LFWNNDMA-CADP technique improves over successive iterations. In addition, TRAAC and VLAAC stay close to each other across the epochs, which indicates minimal overfitting and suggests consistent predictions on unseen samples.

Figure 7 shows the training loss (TRALS) and validation loss (VLALS) of the LFWNNDMA-CADP technique under ChestPain type. The loss values are computed over 0–25 epochs. Both TRALS and VLALS show a decreasing trend, indicating that the LFWNNDMA-CADP method balances the trade-off between data fitting and generalization. The steady reduction in loss further supports the performance of the LFWNNDMA-CADP method and shows that its predictions are refined over time.

Figure 8 presents the classification results of the LFWNNDMA-CADP model with 70%TRAPH and 30%TESPH under ST_Slope. Figure 8a,b shows the confusion matrices, which indicate accurate identification of both class labels. Figure 8c shows the PR analysis, indicating high performance across both class labels. Finally, Fig. 8d depicts the ROC analysis, with high ROC values for both class labels.

Table 3 presents the CAD recognition results of the LFWNNDMA-CADP model under 70%TRAPH and 30%TESPH based on ST_Slope. The outcomes indicate that the LFWNNDMA-CADP model identified the samples accurately. With 70%TRAPH, the LFWNNDMA-CADP approach gives an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{G}_{Measure}\) of 97.30, 96.34, 91.96, 93.92, and 94.03%, respectively. Meanwhile, with 30%TESPH, the LFWNNDMA-CADP approach provides an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{G}_{Measure}\) of 96.38, 92.68, 90.09, 91.30, and 91.34%, respectively.

Figure 9 shows the TRAAC and VLAAC of the LFWNNDMA-CADP technique under ST_Slope. The \(\:acc{u}_{y}\) values are computed over 0–25 epochs. Both TRAAC and VLAAC show an increasing trend, indicating that the LFWNNDMA-CADP methodology improves over successive iterations. TRAAC and VLAAC also remain close across the epochs, which indicates minimal overfitting and suggests consistent predictions on unseen samples.

Figure 10 shows the TRALS and VLALS of the LFWNNDMA-CADP methodology under ST_Slope. The loss values are computed over 0–25 epochs. Both TRALS and VLALS show a decreasing trend, indicating that the LFWNNDMA-CADP methodology balances the trade-off between data fitting and generalization. The steady reduction in loss further supports the performance of the LFWNNDMA-CADP model and shows that its predictions are refined over time.

Figure 11 presents the classification results of the LFWNNDMA-CADP model with 70%TRAPH and 30%TESPH under HeartDisease. Figure 11a,b displays the confusion matrices, which show accurate classification of both classes. Figure 11c shows the PR analysis, indicating high performance across both classes. Finally, Fig. 11d shows the ROC analysis, with high ROC values for both classes.

Table 4 reports the CAD recognition results of the LFWNNDMA-CADP approach under 70%TRAPH and 30%TESPH based on HeartDisease. The outcomes indicate that the LFWNNDMA-CADP approach identified the samples accurately. With 70%TRAPH, the LFWNNDMA-CADP methodology provides an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{G}_{Measure}\) of 99.49, 99.54, 99.49, 99.51, and 99.51%, respectively. Meanwhile, with 30%TESPH, the LFWNNDMA-CADP methodology gives an average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F1}_{score}\), and \(\:{G}_{Measure}\) of 99.26, 99.26, 99.26, 99.26, and 99.26%, respectively.

Figure 12 shows the TRAAC and VLAAC of the LFWNNDMA-CADP technique under HeartDisease. The \(\:acc{u}_{y}\) values are computed over 0–25 epochs. Both TRAAC and VLAAC show an increasing trend, indicating that the LFWNNDMA-CADP methodology improves over successive iterations. TRAAC and VLAAC also remain close across the epochs, which indicates minimal overfitting and suggests consistent predictions on unseen samples.

Figure 13 shows the TRALS and VLALS of the LFWNNDMA-CADP methodology under HeartDisease. The loss values are computed over 0–25 epochs. Both TRALS and VLALS show a decreasing trend, indicating that the LFWNNDMA-CADP methodology balances the trade-off between data fitting and generalization. The steady reduction in loss further supports the performance of the LFWNNDMA-CADP model and shows that its predictions are refined over time.

Table 5 and Fig. 14 compare the results of the LFWNNDMA-CADP technique with existing techniques44,45,46. The results show that the Support Vector Classifier (SVC), K-Nearest Neighbors (KNN), J48, and 1D CNN methods performed worst. The ACVD-HBOMDL, REFTree, and RF methods attained closer outcomes. The LFWNNDMA-CADP technique achieved the best performance, with maximal \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:acc{u}_{y}\), and \(\:{F1}_{score}\) of 92.47, 93.17, 96.11, and 96.36%, respectively.

Conclusion

In this study, an LFWNNDMA-CADP technique is presented. The technique focuses on a multi-criteria decision-making model for predicting CAD using biomedical data. To this end, the LFWNNDMA-CADP model comprises several stages, namely data pre-processing, feature selection (FS), classification, and hyperparameter tuning. First, the data pre-processing stage uses Z-score normalization to convert the input data into a uniform format. Next, the IACO model is used to choose an optimal feature subset. The classification of CAD is then performed with the FWNN method. Finally, the hyperparameters of the FWNN model are selected with the hybrid COASaDE method. The LFWNNDMA-CADP approach is evaluated on a benchmark database, and the experimental validation showed a superior accuracy of 99.49% over existing techniques. The limitations of the LFWNNDMA-CADP approach include its dependence on a limited set of features for disease prediction, which may not fully capture the complexity of cardiovascular conditions. Furthermore, the performance of the model may be affected by the quality and variability of data from diverse sources, potentially limiting its generalizability across populations. Another limitation is the computational complexity associated with real-time prediction, which may hinder deployment in resource-constrained environments. Future work may concentrate on expanding the feature set to include more comprehensive physiological markers and on enhancing the robustness of the model through domain adaptation techniques. Moreover, incorporating multi-modal data from wearable devices could improve prediction accuracy. Lastly, optimizing the model for low-latency, edge-computing environments would enable faster, more effective diagnosis and improved scalability in clinical applications.

Data availability

The data that support the findings of this study are openly available in the Kaggle repository at https://www.kaggle.com/datasets/fedesoriano/heart-failure-prediction.

References

Wang, P., Lin, Z., Yan, X., Chen, Z. & Ding, M. Y. S., & Meng, L. A wearable ECG monitor for deep learning based real-time cardiovascular disease detection. (2022).

Bakar, W. A. W. A., Josdi, N. L. N. B., Man, M. B. & Zuhairi, M. A. B. A review: Heart disease prediction in machine learning & deep learning. In: Proc. 19th IEEE Int. Colloq. Signal Process. Appl. (CSPA). 150–155 (2023).

Kim, C. et al. A deep learning-based automatic analysis of cardiovascular borders on chest radiographs of valvular heart disease: Development/external validation. Eur. Radiol. 32 (3), 1558–1569 (2022).

Malnajjar, M., Khaleel, Abu-Naser & Samy, S. Heart sounds analysis and classification for cardiovascular diseases diagnosis using deep learning. http://dspace.alazhar.edu.ps/xmlui/handle/123456789/3534 (2022).

Qureshi, M. A., Qureshi, K. N., Jeon, G. & Piccialli, F. Deep learning based ambient assisted living for self-management of cardiovascular conditions. Neural Comput. Appl. 34 (13), 10449–10467 (2022).

Shrivastava, P. K., Sharma, M., Sharma, P. & Kumar, A. HCBiLSTM: a hybrid model for predicting heart disease using CNN and BiLSTM algorithms. Meas. Sensors 25, 100657 (2023).

Fajri, Y. A. Z. A., Wiharto, W. & Suryani, E. Hybrid model feature selection with the bee swarm optimization method and Q-learning on the diagnosis of coronary heart disease. Information 14 (1), 15 (2022).

Cuevas-Chávez, A. et al. A systematic review of machine learning and IoT applied to the prediction and monitoring of cardiovascular diseases. Healthcare 11, 2240 (2023).

Plati, D. K. et al. A machine learning approach for chronic heart failure diagnosis. Diagnostics 11, 1863 (2021).

Bandera, N. H., Arizaga, J. M. M. & Reyes, E. R. Neutrosophic multi-criteria decision-making methodology for evaluation chronic obstructive pulmonary disease. Int. J. Neutrosophic Sci. (1), 184 – 84. (2023).

Mansoor, C. M. M., Chettri, S. K. & Naleer, H. M. M. Development of an efficient novel method for coronary artery disease prediction using machine learning and deep learning techniques. Technol. Health Care 1–25 (2024).

Kapila, R., Ragunathan, T., Saleti, S., Lakshmi, T. J. & Ahmad, M. W. Heart disease prediction using novel quine McCluskey binary classifier (QMBC). IEEE Access 11, 64324–64347 (2023).

Khan, H. et al. Heart disease prediction using novel ensemble and blending based cardiovascular disease detection networks: EnsCVDD-Net and BlCVDD-Net. IEEE Access (2024).

Nandy, S. et al. An intelligent heart disease prediction system based on swarm-artificial neural network. Neural Comput. Appl. 35 (20), 14723–14737 (2023).

Elsedimy, E. I., AboHashish, S. M. & Algarni, F. New cardiovascular disease prediction approach using support vector machine and quantum-behaved particle swarm optimization. Multimedia Tools Appl. 83 (8), 23901–23928 (2024).

Sowmiya, M. & Malar, E. Ensemble classifiers with hybrid feature selection approach for diagnosis of coronary artery disease. Sci. Temper. 14 (03), 726–734 (2023).

Saheed, Y. K., Salau-Ibrahim, T. T., Abdulsalam, M., Adeniji, I. A. & Balogun, B. F. Modified bi-directional long short-term memory and hyperparameter tuning of supervised machine learning models for cardiovascular heart disease prediction in mobile cloud environment. Biomedical Signal Process. Control 94, 106319 (2024).

Noor, A. et al. Heart disease prediction using stacking model with balancing techniques and dimensionality reduction. IEEE Access (2023).

Imran, M., Ramay, S. A. & Abbas, T. Predictive modeling for early detection and risk assessment of cardiovascular diseases using the ensemble stacked neural network model. J. Comput. Biomed. Inf. 7 (02), (2024).

Li, S., Nunes, J. C., Toumoulin, C. & Luo, L. 3D coronary artery reconstruction by 2D motion compensation based on mutual information. IRBM 39 (1), 69–82 (2018).

Zhao, X. et al. Early detection of coronary microvascular dysfunction using machine learning algorithm based on vectorcardiography and cardiodynamicsgram features. IRBM 44 (6), 100805 (2023).

Ma, S., Chen, J. & Ho, J. W. An edge-device-compatible algorithm for valvular heart diseases screening using phonocardiogram signals with a lightweight convolutional neural network and self-supervised learning. Comput. Methods Programs Biomed. 243, 107906 (2024).

Muthulakshmi, M. & Kavitha, G. Cardiovascular Disorder severity detection using myocardial anatomic features based optimized extreme learning machine approach. IRBM 43 (1), 2–12 (2022).

Gupta, A., Chouhan, A. S., Jyothi, K., Dargar, A. & Dargar, S. K. An effective investigation on implementation of different learning techniques used for heart disease prediction. In 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO) 1–5 (IEEE, 2024).

Gupta, V., Mittal, M., Mittal, V., Diwania, S. & Saxena, N. K. ECG signal analysis based on the spectrogram and spider monkey optimisation technique. J. Inst. Eng. India Ser. B 104 (1), 153–164 (2023).

Honi, D. G. & Szathmary, L. A one-dimensional convolutional neural network-based deep learning approach for predicting cardiovascular diseases. Inform. Med. Unlock. 49, 101535 (2024).

Lazazzera, R. & Carrault, G. MonEco: a novel health monitoring ecosystem to predict respiratory and cardiovascular disorders. IRBM 44 (2), 100736 (2023).

Gupta, V., Sharma, A. K., Pandey, P. K., Jaiswal, R. K. & Gupta, A. Pre-processing based ECG signal analysis using emerging tools. IETE J. Res. 70 (4), 4219–4230 (2024).

El Boujnouni, I., Harouchi, B., Tali, A., Rachafi, S. & Laaziz, Y. Automatic diagnosis of cardiovascular diseases using wavelet feature extraction and convolutional capsule network. Biomed. Signal Process. Control 81, 104497 (2023).

Filist, S. et al. Biotechnical neural network system for predicting cardiovascular health state using processing of bio-signals. Int. J. Med. Eng. Inf. 16 (4), 324–349 (2024).

Bhardwaj, A., Singh, S. & Joshi, D. Explainable deep convolutional neural network for valvular heart diseases classification using pcg signals. IEEE Trans. Instrum. Meas. 72, 1–15 (2023).

Baseer, K. K. et al. Healthcare diagnostics with an adaptive deep learning model integrated with the internet of medical things (IoMT) for predicting heart disease. Biomed. Signal Process. Control 92, 105988 (2024).

Ahmed, L. J. et al. An efficient heart-disease prediction system using machine learning and deep learning techniques. In 2023 9th International Conference on Advanced Computing and Communication Systems (ICACCS) Vol. 1, 1980–1985 (IEEE, 2023).

Dai, Y. et al. Deep learning fusion framework for automated coronary artery disease detection using raw heart sound signals. Heliyon 10 (16), (2024).

Muthu, S. P. V. & Devadoss, A. K. V. Genetically optimized neural network for early detection of glaucoma and cardiovascular disease risk prediction. Traitement Du Signal. 40 (4), (2023).

Seoni, S. et al. Application of spatial uncertainty predictor in CNN-BiLSTM model using coronary artery disease ECG signals. Inform. Sci. 665, 120383 (2024).

Khanna, A. et al. Internet of things and deep learning enabled healthcare disease diagnosis using biomedical electrocardiogram signals. Expert Syst. 40 (4), e12864 (2023).

Sasikala, P. & Mohanarathinam, A. A powerful peripheral arterial disease detection using machine learning-based severity level classification model and hyper parameter optimization methods. Biomed. Signal Process. Control 90, 105842 (2024).

Muthulakshmi, P. & Parveen, M. Z-Score normalized feature selection and iterative African buffalo optimization for effective heart disease prediction. Int. J. Intell. Eng. Syst. 16 (1), (2023).

Zhang, J., Liu, W., Wang, Z. & Fan, R. Electric vehicle power consumption modelling method based on improved ant colony optimization-support vector regression. Energies 17 (17), 4339 (2024).

Ahmadi, M., Dashti Ahangar, F., Astaraki, N., Abbasi, M. & Babaei, B. FWNNet: presentation of a new classifier of brain tumor diagnosis based on fuzzy logic and the wavelet-based neural network using machine-learning methods. Comput. Intell. Neurosci. 2021, (2021).

Fakhouri, H. N., Ishtaiwi, A., Makhadmeh, S. N., Al-Betar, M. A. & Alkhalaileh, M. Novel hybrid crayfish optimization algorithm and self-adaptive differential evolution for solving complex optimization problems. Symmetry 16 (7), 927 (2024).

https://www.kaggle.com/datasets/fedesoriano/heart-failure-prediction.

Obayya, M., Alsamri, J. M., Al-Hagery, M. A., Mohammed, A. & Hamza, M. A. Automated cardiovascular disease diagnosis using honey badger optimization with modified deep learning model. IEEE Access 11, 64272–64281 (2023).

Khan Mamun, M. M. R. & Elfouly, T. Detection of cardiovascular disease from clinical parameters using a one-dimensional convolutional neural network. Bioengineering 10 (7), 796 (2023).

Ogunpola, A., Saeed, F., Basurra, S., Albarrak, A. M. & Qasem, S. N. Machine learning-based predictive models for detection of cardiovascular diseases. Diagnostics 14 (2), 144 (2024).

Acknowledgements

This Project was funded by the Deanship of Scientific Research (DSR) at King Abdulaziz University (KAU), Jeddah, Saudi Arabia under grant no. (GPIP: 570-156-2024). The authors, therefore, acknowledge with thanks DSR at KAU for technical and financial support.

Author information

Contributions

Mahmoud Ragab: Conceptualization, methodology development, formal analysis, investigation, writing original draft. Sami Saeed Binyamin: Formal analysis, investigation, visualization, writing. Wajdi Alghamdi: Formal analysis, review and editing, validation. Turki Althaqafi: Methodology, investigation, review and editing. Fatmah Yousef Assiri: Review and editing, experiment. Mohammed Khaled Al-Hanawi: Conceptualization, discussion, writing original draft, investigation. Abdullah AL-Malaise AL-Ghamdi: Conceptualization, methodology development, supervision. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ragab, M., Binyamin, S.S., Alghamdi, W. et al. Leveraging fuzzy embedded wavelet neural network with multi-criteria decision-making approach for coronary artery disease prediction using biomedical data. Sci Rep 14, 31087 (2024). https://doi.org/10.1038/s41598-024-82019-0

DOI: https://doi.org/10.1038/s41598-024-82019-0