Abstract

Potato late blight is a common disease affecting crops worldwide. To help detect this disease in complex environments, an improved YOLOv5 algorithm is proposed. First, ShuffleNetV2 is used as the backbone network to reduce the number of parameters and computational load, making the model more lightweight. Second, the coordinate attention mechanism is added to reduce missed detection for leaves that are overlapping, damaged, or hidden, thereby increasing detection accuracy under challenging conditions. Lastly, a bidirectional feature pyramid network is employed to fuse feature information of different scales. The study results show a significant improvement in the model’s performance. The number of parameters was reduced from 7.02 to 3.87 M, and the floating point operations dropped from 15.94 to 8.4 G. These reductions make the model lighter and more efficient. The detection speed increased by 16 %, enabling faster detection of potato late blight leaves. Additionally, the average precision improved by 3.22 %, indicating better detection accuracy. Overall, the improved model provides a robust solution for detecting potato late blight in complex environments. The study’s findings can be useful for applications and further research in controlling potato late blight in similar environments.

Similar content being viewed by others

Introduction

Potato, one of the most crucial food crops worldwide, faces significant losses each year due to plant diseases1,2. Among the various plant diseases, late blight is one of the most prevalent and serious, caused by pathogenic molds that infect potato leaves, leading to localized wilting and significantly hindering potato growth3. This not only affects crop yield but also results in substantial economic losses for the potato industry. Therefore, the rapid identification of late blight-infected leaves in agricultural fields are crucial for the effective prevention and management of potato diseases4.

Traditionally, identifying potato late blight has relied heavily on manual labor, with plant pathology experts needing to physically visit fields to identify the disease5,6. Unfortunately, this method has proven inefficient. However, advances in machine learning technology7,8 and improvements in computer hardware have led to significant progress in using image processing techniques to recognize plant diseases. Many researchers have successfully utilized clustering9, random forest10, and other methods for disease recognition, reducing both cost and labor. Nonetheless, these methods still require manual design of disease extraction features and face challenges such as limited adaptability to different situations and susceptibility to environmental factors.

With the rapid advancements in computer hardware and significant breakthroughs in deep learning, image processing techniques utilizing convolutional neural networks (CNN)11,12, have emerged as a prominent area of research. Numerous scholars have achieved notable success in target classification, detection, and segmentation using these neural network models. In the context of plant leaf disease recognition, the PlantVillage dataset created by Mohanty et al.13 has greatly facilitated research efforts, prompting many researchers to explore deep learning methodologies using this dataset. However, the images collected in laboratory settings often feature simple backgrounds and under constant lighting conditions. While some researchers have attempted to enhance the dataset through various data augmentation techniques, the effectiveness of models trained on these datasets remains constrained when applied to images captured in complex agricultural environments.

To fundamentally enhance the diversity of datasets, some researchers14,15,16, have developed plant disease leaf datasets that include complex backgrounds and have studied the recognition and detection of associated leaf diseases based on these datasets. However, these researchers often focus on addressing the low accuracy of plant leaf recognition and detection in complex environments, prioritizing improvements in model accuracy while overlooking the importance of model lightweighting. These models can achieve the desired accuracy when processing plant leaf images in complex environments. However, they are not suitable for deployment in resource-constrained edge settings because of their large number of parameters and high computational requirements.

In summary, detecting potato late blight leaves in complex environments presents several key challenges. First, the images of potato late blight leaves in publicly available datasets were primarily taken in simple laboratory settings. As a result, detection models trained on this data often perform poorly in real farmland conditions. Second, potato disease leaves exhibit significant diversity in complex environments, including variations in leaf size, capture angles, occlusions, and leaf damage. These factors make it harder to identify and detect the disease, increasing the demands on the model’s robustness and accuracy. Third, many existing detection models require substantial computational resources, which limits their use on resource-constrained edge devices. Therefore, it is essential to develop an efficient and lightweight model for detecting potato late blight leaves in complex environments.

To address the challenges mentioned above, this paper develops a YOLOv5-based lightweight model specifically designed for detecting potato late blight leaves in complex environments. This model effectively balances complexity and detection efficiency. The main contributions of this work are as follows:

-

A potato late blight leaf image dataset was constructed that reflects complex environments. This dataset was created by collecting images from real farmland, along with employing multiple data augmentation techniques. This ensures the robustness of the proposed model in real scenario applications.

-

The backbone network of the original YOLOv5 was enhanced by integrating ShuffleNetV217, which employs a coordinate attention18 mechanism. This change greatly decreases the number of parameters and the computational burden of the model, while also improving its ability to extract features. Consequently, the model is better suited for use on embedded devices and reduces the rates of missed detections for potato late blight leaves in challenging environments.

-

The feature fusion network of the original model was improved by incorporating bidirectional feature pyramid network19, which enhances the model’s ability to extract features of potato late blight leaves at different scales. This improvement also boosts the model’s generalization when dealing with potato leaves at various growth stages and from different shooting distances.

The following sections of this paper are organized as follows: Section “Related works” discusses related works on plant disease identification. Section “Materials and methods” outlines the materials used and provides a detailed description of the proposed methodology. Section “Experiments” describes the experimental environment and parameter settings. Section “Experimental results” analyzes and validates the experimental results in detail. Finally, Section “Conclusion” summarizes the main findings of this paper and offers future perspectives.

Related works

In traditional plant image processing research, various feature extraction techniques and machine learning algorithms have been used for plant image recognition studies. For example, Nebojsa et al.20 improved the firefly algorithm and used it to optimize the parameters of the support vector machine algorithm, which achieved better results than the original algorithm in the task of classifying four categories of images: apples, cherries, peppers, and tomatoes. Nidhis et al.9 applied a clustering algorithm to categorize common rice leaf diseases, calculated the diseased area’s percentage, and thereby classified the disease severity. Govardhan et al.10 experimented on tomato leaf images from eight categories, comparing six machine learning methods including K-nearest neighbors, decision tree, and random forest. They found that the random forest method achieved the best results with a classification accuracy of 95%. Bukumir et al.21 extracted 12 features from carrots, such as length, mean diameter, and RGB color, and used a Bayesian-optimized cascade graph convolution neural network for grading. These methods based on traditional machine learning for plant image processing have shown some success, but they rely on manual feature extraction, are sensitive to environmental changes, and suffer from complex operation and poor generalization.

Compared with traditional methods, deep learning techniques are increasingly studied and utilized in plant leaf disease recognition22 due to their advantages like automatic feature extraction and excellent performance. For example, Eunice et al.11 employed various popular deep learning models for disease classification of 38 crop leaf categories in the PlantVillage dataset, finding that DenseNet-121 achieved the best performance with a mean average precision (mAP) of 99.81%. Similarly, Deng et al.23 constructed a classification network combining residual structure and attention mechanism, achieving 87.77% accuracy on 16 disease datasets across four plant species. Asif et al.24 designed a convolutional neural network model for diagnosing early blight, late blight and healthy potato leaves, attaining a maximum classification accuracy of 97%. These deep learning methods demonstrate high accuracy in classifying plant leaves and diseases, significantly aiding rapid identification and diagnosis. However, image classification alone does not fully address the complexity of diseases, such as the location of infected leaves and infection severity. Thus, employing object detection techniques is essential for a more comprehensive diagnosis of diseased leaves, providing more precise data to support disease management.

Deep learning based object detection methods are classified into two types: two-stage and one-stage. Two-stage algorithms, like Faster R-CNN, were initially dominant in target detection due to their high accuracy. However, their long training times and slow detection speeds limit their applicability in real-time industrial scenarios. In contrast, one-stage algorithms like SSD, EfficientDet, and the YOLO series have gained significant attention for their superior real-time performance and ease of use. Notably, the YOLO series has shown impressive results in both research and various industry applications. For instance, Zhong et al.12 developed an automated road defect detection model using YOLOv5, achieving an average accuracy of 81.6%. Qu et al.25 proposed a YOLOv8-LA model for underwater small target detection by improving the convolutional module and overall architecture of YOLOv8, achieving the highest mAP of 84.7% on a public dataset. Jovanovic et al.26 utilized both nano and small models of YOLOv8 to accomplish real-time detection of rocket bodies and engine flames, showcasing the promising applications of computer vision techniques in the field of passive rocket detection and tracking. It can be seen that the YOLO series algorithms have demonstrated effective applications across a variety of industries

In the field of plant leaf disease diagnosis, the YOLO family of algorithms has also been heavily researched and applied. For example, By incorporating an attention mechanism into YOLOv5, Zhang et al.27 achieved 85.59% precision and 83.70% recall in detecting grape downy mildew leaves. This advancement offers a quick and accurate deep learning approach for the automatic detection of grape leaf diseases. Zhao et al.28 proposed a novel loss function and integrated it into the YOLOv7 model, combining it with transfer learning. This approach achieved a mAP of 96.75% in detecting five common plant leaf diseases. Abdullah et al.29 enhanced the original YOLOv5 model by incorporating dilated convolution, global attention mechanism and the EIoU loss function. This modified model successfully detected healthy tomato leaves as well as nine types of diseased tomato leaves from the PlantVillage dataset. The detection results demonstrated a higher mAP of 91.40%, outperforming the original YOLOv5, as well as the YOLOv7 and YOLOv8 algorithms. Although these studies show excellent detection accuracy, the datasets were mostly collected in controlled environments with simple backgrounds and constant light. This limits the effectiveness of models trained on these datasets when applied to real farmland situations.

Some researchers have improved model performance for practical applications using images from real plant environments. Liu et al.14 captured images at a greenhouse tomato planting site to create a dataset of tomato brown rot with real backgrounds, achieving a detection accuracy of 94.6% using the YOLOv5 method. Yan et al.15 collected images of apple leaves in natural settings from the internet, creating a dataset called ALDD that includes eight typical diseased and healthy apple leaves. They achieved an average detection accuracy of 88.6% with their proposed FSM-YOLO method. Leng et al.16 conducted experiments using the YOLOv5 model with images of maize leaf blight taken in farmland, achieving a mAP of 87.5%. These studies are valuable for improving the practical application of leaf disease detection by building datasets in complex environments. However, these studies have primarily concentrated on improving model accuracy to tackle the challenges presented by complex environmental images, while overlooking the importance of lightweight solutions. As a result, the models may be too complex to deploy effectively on resource-constrained embedded devices.

In conclusion, recent studies mostly focus on datasets from controlled environments, overlooking the complexity of real farmland environments, which limits the model’s generalization. Several studies have achieved high detection accuracy using datasets from real farmland environments. However, the complexity of these models presents challenges when deploying them on edge devices with limited computational resources. The work in this paper should focus on improving detection accuracy in complex settings, while also enhancing model efficiency and lightweight design to meet computational limits and real-time needs in practical applications.

Materials and methods

Data collection and processing

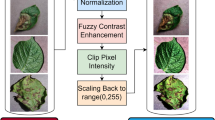

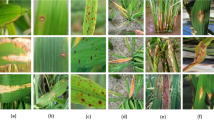

To create a dataset specifically for potato late blight leaf detection in complex environments, a total of 2592 images were collected from modernized potato planting bases in Yunnan Province and various internet sources. Due to limited storage space, the size of these images was reduced from 7.72 GB to 342 MB using the compression tool docosmall. Some of the processed pictures are shown in Fig. 1.

The collected images were then labeled using LabelImg, resulting in 27,540 instances of late blight leaves. To expand the dataset, random rotation, mirroring, and scaling operations were applied to the original images. Additionally, brightness adjustments, color variations, and noise were introduced to further augment the dataset. These processes produced a total of 20,736 images. After manually filtering out poor-quality images, a final dataset of 19,950 images with 215,856 labeled instances was obtained.

The dataset was then divided into three sets: training, validation, and testing, in a ratio of 7:2:1. Care was taken to ensure that the average number of annotations per image was similar across all three sets, guaranteeing balanced representation. During the training process, the Mosaic data augmentation method included in YOLOv5 was disabled. This decision was made to prevent any potential negative impact from overlapping data augmentation techniques and to conserve computational resources.

Improved YOLOv5-based detection

YOLOv5 is a algorithm derived from the real-time, single-stage target detection algorithm YOLO (You Only Look Once). In October 2021, Ultralytics introduced the sixth iteration of YOLOv5, which is comprised of four versions: YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. The primary distinction between these versions lies in the depth and width of the network, which influences the quantity of parameters and the training duration of the model. Over time, newer YOLO versions like YOLOv6, YOLOv7, and YOLOv8 have been proposed. However, YOLOv5 still has a strong accumulation of community technologies, which provide a large number of solutions for development and deployment. It will take time for new versions to gain similar support. Additionally, YOLOv5, due to its earlier introduction and proven advantages, has been successfully applied in various industrial scenarios, demonstrating mature experience and positive outcomes. In contrast, while YOLOv8 has shown superior performance on standard test datasets, its application across diverse environments in different scenarios still requires extensive validation. Therefore, this paper chooses YOLOv5 as the foundational research framework for detecting potato late blight in complex environments, aiming for efficient development and stable deployment.

To adapt the original YOLOv5 model to detect potato late blight leaves in complex farm environments, this study incorporates several key improvements. Firstly, ShuffleNetV2 is employed as the backbone network to fulfill the lightweight requirements. Secondly, a coordinate attention mechanism is integrated into the backbone to enhance detection accuracy and reduce false negatives. Thirdly, BiFPN is used to merge features of different scales, thereby enhancing the model’s feature expression capability and improving the detection of small targets. The improved YOLOv5 is illustrated in Fig. 2.

Lightweight backbone network

In large potato fields, implementing disease detection models on edge devices such as UAVs (Unmanned Aerial Vehicles) offers a practical solution30 for automated disease detection. However, the limited storage and performance of these edge devices make traditional detection models unsuitable31. Therefore, this paper uses ShuffleNetV2 to lighten the YOLOv5 backbone network, making it more suitable for edge device deployment.

ShuffleNetV2 is a lightweight network architecture designed for edge devices. Fig. 3 illustrates two main unit structures of ShuffleNetV2. Stage 1 is the basic unit of ShuffleNetV2. It starts by dividing the input feature map into two segments via channel splitting. One segment is processed through three convolution operations, while the other remains unprocessed. These segments are then concatenated, followed by a channel shuffle operation to facilitate information exchange among channels. Stage 2 is the downsampling unit and differs from Stage 1 by not splitting the input feature map. Instead, it processes the input through deep convolution operations on two separate routes. The resulting feature maps are concatenated and subjected to a channel shuffle operation, which doubles the number of channels in the final output. This stage is designed to increase the network’s capacity without a significant increase in computational complexity.

Coordinate attention

In image processing tasks, attention mechanisms32 assigns weights to different features of the input data like channels and locations, enabling the model to focus on key areas and thus improve its ability to select and weight features. This dynamic weight allocation not only enhances the model’s accuracy in complex scenarios but also strengthens its robustness, allowing it to better adapt to various environments. In the task of detecting potato late blight in complex environments, factors such as occlusion, complex backgrounds, and lighting changes can interfere with the model’s judgment. Attention mechanisms can automatically adjust the importance of each position or channel, highlighting relevant areas for disease leaf recognition and localization while ignoring irrelevant backgrounds. This process enhances the model’s ability to extract features related to diseased leaves33 and ultimately improves the accuracy of disease leaf detection.

To address the challenges of detecting potato late blight in complex environments, this paper introduces the coordinate attention (CA) mechanism into the YOLOv5 network architecture. Coordinate attention is a hybrid attention model that integrates position information into channel attention and encodes it bidirectionally. This approach enables the model to capture both channel relationships and long-distance dependencies more effectively. The CA mechanism consists of two main steps: coordinate information embedding and coordinate attention generation. Specifically, for a feature map x with an input size of \(C{\times }H{\times }W\), a pooling kernel of size (H,1) is used to encode along the horizontal direction. This operation results an output \(z^h\) with the number of channels C, height H, and width 1. The output \(z^h\) for the h-th row of the c-th channel can be described as follows:

In the above equation, \(x_c(h, i)\) represents the value of the feature map x at the c-th channel, located in the h-th row and i-th column. In a similar manner, the output \(z^w\) for the w-th column of the c-th channel in the vertically encoded result can be defined as follows:

Likewise, \(x_c(j, w)\) has the same meaning as \(x_c(h, i)\) in Eq. (1). The two output feature maps mentioned above are concatenated into a new feature map of size \(C{\times }1{\times }\)(H+W). This concatenated feature is then downsampled using a 1\(\times\)1 convolution, denoted as \(F_1\). Finally, a non-linear activation function \(\delta\) is applied to obtain the final output, f. This can be represented as follows:

where [,] denotes the concatenation operation along the spatial dimension. Following the above steps, the output f is split into two components \(f^h\) and \(f^w\). These two feature maps are then transformed into two new feature maps, respectively, using two separate 1\(\times\)1 convolutional kernels, \(F_h\) and \(F_w\). At this point, the horizontal and vertical attention weights \(g^w\) and \(g^h\) are obtained. This can be described as:

The \(\sigma\) in the above is the sigmoid activation function. In the final step, the feature information of \(g^h\) and \(g^w\) are fused and then multiplied with the original feature map x to yield the final coordinate attention module, denoted as y. The weight value for the c-th channel in this module is defined as:

As mentioned in the above equation, \(g_c^h\left( i\right)\) represents the attention value for the c-th channel at i-th row, while \(g_c^w\left( j\right)\) denotes the attention value for the c-th channel at j-th column. By consecutively multiplying them with the value \(x_c\left( i,j\right)\) at the i-th row and j-th column of the c-th channel in the feature map x, the final weight \(y_c\left( i,j\right)\), incorporating the coordinate attention mechanism, is obtained. In this study, three coordinate attention modules are incorporated after each stage 1 of ShuffleNetV2. The placement of these additional coordinate attention modules is illustrated in Fig. 4.

Bidirectional feature pyramid network

In practical applications, such as detecting potato leaves in a field, the size of potato leaves can vary with the growth stages. Moreover, leaves from the same plant may differ in size, and their apparent size can also change depending on the shooting distance. Therefore, fusing features from different scales is crucial to improve the model’s ability to detect potato late blight leaves of various sizes.

The Feature Pyramid Network34 (FPN) enhances by merging feature information from feature maps of various scales within the backbone network. This process allows larger-scale feature maps to retain higher dimensional feature information while compensating for any potential loss during the downsampling of smaller-scale feature maps.

In the original YOLOv5, a Path Aggregation Network (PAN) based on improved FPN is used as the feature fusion network. This network enables bidirectional fusion of features across different scales. However, PAN simply integrates features through a cascading structure without considering the weighted contributions of feature maps at different scales during fusion, which leads to high computational complexity and less efficient. To address these issues, Tan et al.19 proposed the Bidirectional Feature Pyramid Network (BiFPN) in EfficientDet. BiFPN employs a weighted feature fusion technique where different scales of features are combined using learnable weights. This adaptive mechanism helps in effectively merging features of varying resolutions and importance, leading to better feature representation. Compared to PAN, BiFPN performs feature fusion more efficiently, significantly enhancing the model’s performance. The structural comparison of these two feature fusion networks is illustrated Fig. 5.

Experiments

Experiment settings

In the experiments conducted in this paper, consistency across all hardware and software environments was ensured to maintain the comparability and reliability of the results. The hardware configuration includes a CPU setup of 6 \(\times\) Xeon E5-2678 v3 and an NVIDIA GeForce RTX 2080 Ti GPU with 16GB of memory. On the software side, Ubuntu 18.04 was used as the operating system, Python 3.8 as the programming language, PyTorch 1.10 as the deep learning framework, and CUDA 11.3 for GPU acceleration.

The input size for all training and testing images was standardized to 640 pixels to ensure consistency in image scale across different model configurations. During training, a batch size of 16 and 300 epochs were utilized. The initial learning rate was set at 0.01 to facilitate rapid convergence in the early stages of training, while a cyclic learning rate of 0.002 was employed to dynamically adjust according to changes in the loss curve. Stochastic gradient descent (SGD) was employed for backpropagation, with a momentum of 0.94 and a weight decay of 0.0005. Additionally, a confidence threshold of 0.5, and a non-maximum suppression threshold was established at 0.3.

Evaluation metrics

In this experiment, the evaluation metrics were primarily chosen based on the requirements for deploying the detection model in real-world agricultural settings. The main focus was on the model’s detection performance and its lightweight nature. To evaluate the detection performance of the model, Precision (P), Recall (R), and Average Precision (AP) were used as the evaluation metrics. The three evaluation metrics are formulated as:

In the formulas above, TP (True Positive) refers to the number of positive samples correctly identified as positive. FP (False Positive) denotes the number of negative samples incorrectly identified as positive. FN (False Negative) represents the number of positive samples incorrectly identified as negative. Furthermore, this study employs the number of parameters, floating point operations (FLOPs), and frames per second (FPS) as evaluation metrics for model lightweighting. These metrics are critical for assessing model efficiency, particularly given the limited computational resources in practical applications and the necessity to measure the degree of model lightweighting.

Experimental results

Comparison of backbone networks

To demonstrate the feasibility of the lightweight improvement method proposed in this paper, this section employs CSPDarknet53, MobileNetV335, and ShuffleNetV2 as the respective backbone networks. The experiments compare the differences in AP, parameter count, and FLOPs among these different backbone network models. Table 1 presents the model detection performance of YOLOv5 under different backbone networks. The results indicate that both MobileNetV3 and ShuffleNetV2 significantly reduce the parameters and FLOPs compared to the original YOLOv5 model with CSPDarknet53 as the backbone network. Notably, the YOLOv5 model with MobileNetV3 as the backbone network exhibits the most pronounced lightweighting effect, with a reduction of 3.48M parameters and FLOPs decreasing from 15.9\(\times 10^{9}\) to 6.3\(\times 10^{9}\). However, this comes at the cost of a nearly 6% decrease in AP. Considering the objective of lightweighting the backbone network while minimizing accuracy loss, this paper selects ShuffleNetV2 as the lightweight backbone network due to its superior overall performance. Although the YOLOv5 model with ShuffleNetV2 as the backbone network has slightly higher parameters and FLOPs compared to MobileNetV3, its AP is 2.66% higher. This choice aligns better with the requirements of potato late blight leaf detection in complex environments addressed in this study.

Comparison of attention mechanisms

In this section, the introduction of attention mechanisms into the lightweight backbone network is discussed. The attention mechanisms incorporated include SE36 (Squeeze-and-Excitation networks), CBAM37 (Convolutional Block Attention Module), and CA. Experiments are conducted to compare their potential enhancements on the detection performance of the YOLOv5-ShuffleNetV2 network. The results of these experiments are presented in Fig. 6 and Table 2.

Table 2 provides a detailed comparison of various attention mechanisms on the model’s performance metrics, including AP, precision, recall, and parameter count. Without introducing any attention mechanism, the network exhibits significantly lower recall compared to precision, leading to frequent misses in detecting potato late blight leaves in complex environments. When the SE mechanism is introduced, both precision and recall see improvements, with precision increasing by 1.83% and recall by 2.87 %. The CBAM further enhances AP and recall,although its precision is slightly lower than that achieved with SE. The addition of the CA leads to the greatest improvement in AP, precision, and recall, showing AP increasing by 3.64%, precision by 1.93%, and recall by 4.76%, compared to the original model without any attention mechanism. As illustrated in Fig. 6, the P-R curves also demonstrates improved precision and recall upon incorporating CA. Additionally, the CA attention mechanism adds the fewest parameters, aligning well with this study’s goal for a lightweight model. In conclusion, the model incorporating CA most effectively meets the experimental expectations by significantly enhancing the original network’s low precision and recall for detecting potato late blight leaves, all while minimally increasing the parameter count.

Ablation experiments

To validate that the enhancement strategies proposed in this paper effectively improve the detection of potato late blight leaves in complex environments. Based on the YOLOv5-ShuffleNetV2, this section includes CA and BiFPN into the network as independent validation modules for ablation experimental analysis. The experimental results are presented in Table 3 and Fig. 7. In the table, a “\(\checkmark\)” means the module is used, while a “—” means it is not.

As shown in Table 3, the AP of the original lightweight network improves after adding either CA or BiFPN individually, with CA showing an additional 1.11% increase compared to BiFPN. When both modules are added simultaneously, the model’s detection performance sees further improvement, achieving a 4.78% increase in AP with only a 0.11M rise in parameters, maintaining the model’s lightweight nature. Fig 7 illustrates the comparison of loss values during model training with different improvement modules. The results show that the model converges the fastest and achieves the lowest final loss value when both modules are implemented together. This outcome aligns with the study’s expectations for enhancing potato late blight leaf detection in complex environments, effectively accomplishing the detection task in such scenarios.

Comparison of different size models

This section compares the proposed method with YOLOv5 and YOLOv8 to evaluate the balance between detection accuracy, number of parameters, and computational complexity for different model sizes. The goal is to choose the best potato late blight leaf detection model for the scenarios discussed in this paper.

Table 4 shows that larger models improve detection accuracy for all models, especially for YOLOv5 and YOLOv8, highlighting the benefits of larger models. However, these improvements come with more parameters and higher computational needs, which may limit the deployment of the model in resource-constrained devices. YOLOv8 outperforms YOLOv5 in detection accuracy for same sizes, although models of the same size generally have more parameters and require more computation, these enhancements are manageable on devices with sufficient resources.

The proposed method achieves a lightweight model comparable to the original YOLOv5 while also improving average precision across all size configurations. Specifically, it enhances AP by 3.22% for small size, 1.51% for medium size, and 0.86% for large size. This suggests that the proposed improvement strategy is especially effective for smaller YOLOv5 models, offering a more optimized solution for lightweight models used in detecting potato late blight on leaves. Compared to YOLOv8, the proposed model maintains higher accuracy in small size while being more lightweight. Although its detection accuracy for medium and large configurations is slightly lower than that of YOLOv8, the proposed model clearly outperforms in terms of reduced parameter count and computational load. Overall, the proposed method optimally balances detection accuracy, parameter count, and computational complexity in the small size model. It reduces parameters and computation while maintaining high accuracy, making it more suitable for applications in resource-limited edge devices.

Comparison with other methods

In this section, the proposed method is rigorously compared with other lightweight algorithms to substantiate its superior efficacy in detecting potato late blight leaves in complex environments. The experiments meticulously control variables and utilize the same dataset to ensure uniform experimental conditions. The outcomes of the experiment are presented in Table 5.

Based on the comparison data in Table 5, it is evident that the YOLOv5s algorithm has the highest FLOPs and the slowest detection speed. In contrast, the YOLOv3-tiny algorithm achieves the fastest detection speed at 87 FPS but has the lowest AP. The YOLOv5-ghost algorithm, while being the lightest, has lower AP and FPS compared to the method proposed in this paper. The proposed method exhibits the highest AP, with parameters and FLOPs slightly higher than YOLOv5-ghost but significantly lower than other methods. It achieves an AP of 77.65% and a detection speed of 51 FPS, both surpassing YOLOv5s and YOLOv5-ghost. In conjunction with Fig. 8, the method proposed in this paper achieves an optimal balance among detection accuracy, model complexity, and detection speed, resulting in superior overall performance. It proves to be an effective approach for detecting late blight on potato leaves in complex environments.

Conclusion

In this work, an enhanced YOLOv5 model designed for deployment in farmland settings was introduced, with the aim of detecting potato late blight leaves in complex environments. A specialized dataset was created, followed by extensive experiments. The main work is in the following aspects: Firstly, various backbone networks were compared, and ShuffleNetV2 was selected as the lightweight backbone. This choice significantly reduces the model’s parameters and computation while maintaining detection accuracy, facilitating deployment in resource-constrained embedded devices. Secondly, building on lightweighting, the study examined various attention mechanisms’ effects on model performance. Results indicated that introducing the CA mechanism significantly improved the model’s AP value and recall rate, effectively reducing missed detections of potato late blight leaves in the original model. Next, ablation experiments verified that combining CA and BiFPN further enhances the model’s performance while preserving its lightweight feature. Finally, the proposed method is compared with other methods. Experiments show that the proposed improvement strategy achieved the highest increase in AP at small size, reaching a value of 3.22%, while also offering the most lightweight solution. Compared to other lightweight detection methods, it excels in detection accuracy and speed, achieving an optimal balance in overall performance. In summary, this paper’s key contribution is the development of an efficient and lightweight method for detecting potato late blight in complex environments. This method balances detection accuracy, model complexity, and speed, offering effective technical support for diagnosing late blight in real potato farmland scenarios.

The proposed method accurately and quickly detects potato late blight leaves in complex environments but has limitations. It can identify diseased leaves but cannot measure how severe the disease is. This is important in potato farming because different severity levels need different treatments to keep plants healthy and productive. The inability of the current method to assess severity could potentially reduce control effectiveness and lead to resource waste or unnecessary chemical use. Therefore, future research should include area calculations for leaves and diseases based on the proposed detection model. Additionally, it should precisely assess potato late blight severity through diseased area percentage. This severity grading will offer more effective decision support and a comprehensive diagnostic information for the control of potato late blight.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Sharma, S., Kooner, R. & Arora, R. Insect pests and crop losses. Breeding Insect Resistant Crops Sustain. Agricul.[SPACE]https://doi.org/10.1007/978-981-10-6056-4_2 (2017).

Zaheer, K. & Akhtar, M. H. Potato production, usage, and nutrition-a review. Crit. Rev. Food Sci. Nutr. 56, 711–721. https://doi.org/10.1080/10408398.2012.724479 (2016).

Chakraborty, K., Mukherjee, R., Chakroborty, C. & Bora, K. Automated recognition of optical image based potato leaf blight diseases using deep learning. Physiol. Molecular Plant Pathol. 117, 101781. https://doi.org/10.1016/j.pmpp.2022.101781 (2022).

Fernández, C., Leblon, B., Haddadi, A., Wang, K. & Wang, J. Potato late blight detection at the leaf and canopy levels based in the red and red-edge spectral regions. Remote Sens. 12, 1292. https://doi.org/10.3390/rs12081292 (2020).

Sharma, R., Singh, A., Dutta, M., Riha, K. & Kriz, P. Image processing based automated identification of late blight disease from leaf images of potato crops. In 2017 40th international conference on telecommunications and signal processing (TSP), 758–762, https://doi.org/10.1109/TSP.2017.8076116 (IEEE, 2017).

Paluchowska, P., Śliwka, J. & Yin, Z. Late blight resistance genes in potato breeding. Planta 255, 127. https://doi.org/10.1007/s00425-022-03984-2 (2022).

Domingues, T., Brandão, T. & Ferreira, J. Machine learning for detection and prediction of crop diseases and pests: A comprehensive survey. Agriculture 12, 1350. https://doi.org/10.3390/agriculture12091350 (2022).

Fenu, G. & Malloci, F. An application of machine learning technique in forecasting crop disease. In Proceedings of the 3rd International Conference on Big Data Research, 76–82, https://doi.org/10.1145/3365224.3365237 (2019).

Nidhis, A., Pardhu, C., Reddy, K. & Deepa, K. Cluster based paddy leaf disease detection, classification and diagnosis in crop health monitoring unit, 281–291 (Springer International Publishing, 2019).

Govardhan, M. & Veena, M. Diagnosis of tomato plant diseases using random forest. In 2019 Global Conference for Advancement in Technology (GCAT), 1–5, https://doi.org/10.1109/GCAT47503.2019.8978443 (IEEE, 2019).

Eunice, J., Popescu, D., Chowdary, M. & Hemanth, J. Deep learning-based leaf disease detection in crops using images for agricultural applications. Agronomy 12, 2395. https://doi.org/10.3390/agronomy12102395 (2022).

Zhong, S., Chen, C., Luo, W. & Chen, S. Ddvc-yolov5: An improved yolov5 model for road defect detection. IEEE Access[SPACE]https://doi.org/10.1109/ACCESS.2024.3453914 (2024).

Mohanty, S., Hughes, D. & Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 7, 1419. https://doi.org/10.3389/fpls.2016.01419 (2016).

Liu, J., Wang, X., Zhu, Q. & Miao, W. Tomato brown rot disease detection using improved yolov5 with attention mechanism. Front. Plant Sci. 14, 1289464. https://doi.org/10.3389/fpls.2023.1289464 (2023).

Yan, C. & Yang, K. Fsm-yolo: Apple leaf disease detection network based on adaptive feature capture and spatial context awareness. Digital Signal Process. 155, 104770. https://doi.org/10.1016/j.dsp.2024.104770 (2024).

Leng, S., Musha, Y., Yang, Y. & Feng, G. Cemlb-yolo: efficient detection model of maize leaf blight in complex field environments. Appl. Sci. 13, 9285. https://doi.org/10.3390/app13169285 (2023).

Ma, N., Zhang, X. & Zhang, H. Shufflenet v2: practical guidelines for efficient cnn architecture design. In European Conference on Computer Vision (ECCV), https://doi.org/10.1007/978-3-030-01264-9_1 (Springer, Cham, 2018).

Hou, Q., Zhou, D. & Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13713–13722, https://doi.org/10.1109/CVPR46437.2021.01349 (2021).

Tan, M., Pang, R. & Le, Q. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10781–10790, https://doi.org/10.1109/CVPR42600.2020.01080 (2020).

Bacanin, N. et al. A novel multiswarm firefly algorithm: An application forplant classification (Springer, 2022). https://doi.org/10.1007/978-3-031-09173-5_115.

Bukumira, M. et al. Carrot grading system using computer vision feature parameters and a cascaded graph convolutional neural network. J. Electron. Imaging 31, 061815–061815. https://doi.org/10.1117/1.JEI.31.6.061815 (2022).

Wongchai, A. et al. Farm monitoring and disease prediction by classification based on deep learning architectures in sustainable agriculture. Ecol. Modell. 474, 110167. https://doi.org/10.1016/j.ecolmodel.2022.110167 (2022).

Deng, H. et al. Leaf disease recognition based on channel information attention network. Multimed. Tools Appl. 83, 6601–6619. https://doi.org/10.3923/itj.2011.267.275 (2024).

Asif, K., Rahman, M. & Hena, M. Cnn based disease detection approach on potato leaves. In 2020 3rd International Conference on Intelligent Sustainable Systems (ICISS), 428–432, https://doi.org/10.1109/ICISS49785.2020.9315921 (IEEE, 2020).

Qu, S., Cui, C., Duan, J., Lu, Y. & Pang, Z. Underwater small target detection under yolov8-la model. Sci. Rep. 14, 16108. https://doi.org/10.1038/s41598-024-66950-w (2024).

Jovanovic, L. et al. Computer vision based areal photographic rocket detection using yolov8 models. Int. J. Robot. Automation Technol. 11, 37–49. https://doi.org/10.31875/2409-9694 (2024).

Zhang, Z., Qiao, Y., Guo, Y. & He, D. Deep learning based automatic grape downy mildew detection. Front. Plant Sci. 13, 872107. https://doi.org/10.3389/fpls.2022.872107 (2022).

Zhao, Y., Lin, C., Wu, N. & Xu, X. Apeiou integration for enhanced yolov7: Achieving efficient plant disease detection. Agriculture 14, 820. https://doi.org/10.3390/agriculture14060820 (2024).

Abdullah, A. et al. A deep-learning-based model for the detection of diseased tomato leaves. Agronomy 14, 1593. https://doi.org/10.3390/agronomy14071593 (2024).

Sun, Z. et al. Pine wilt disease detection in high-resolution uav images using object-oriented classification. J. Forestry Res.[SPACE]https://doi.org/10.1007/s11676-021-01357-y (2021).

Chen, J. & Ran, X. Deep learning with edge computing: A review. Proc. IEEE 107, 1655–1674. https://doi.org/10.1109/JPROC.2019.2921977 (2019).

Niu, Z., Zhong, G. & Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 452, 48–62. https://doi.org/10.1016/j.neucom.2021.03.091 (2021).

Karthik, R. et al. Attention embedded residual cnn for disease detection in tomato leaves. Appl. Soft Comput. 86, 105933. https://doi.org/10.1016/j.asoc.2019.105933 (2020).

Lin, T. et al. Feature pyramid networks for object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2117–2125, https://doi.org/10.1109/CVPR.2017.106 (2017).

Howard, A. et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF international conference on computer vision, 1314–1324, https://doi.org/10.1109/ICCV.2019.00142 (2019).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, 7132–7141, https://doi.org/10.1109/CVPR.2018.00745 (2018).

Woo, S., Park, J., Lee, J. & Kweon, I. Cbam: Convolutional block attention module. In Proceedings of the European conference on computer vision (ECCV), 3–19, https://doi.org/10.1007/978-3-030-01234-2_1 (2018).

Dou, X., Wang, T. & Shao, S. A lightweight yolov5 model integrating ghostnet and attention mechanism. 2023 4th International Conference on Computer Vision, Image and Deep Learning (CVIDL) 348–352, https://doi.org/10.1109/CVIDL58838.2023.10166155 (2023).

Acknowledgements

This work has received support from the Yunnan Provincial Department of Science and Technology under the Major Science and Technology Special Project, Grant No.202402AE090017. Sincere gratitude is extended for this assistance.

Author information

Authors and Affiliations

Contributions

J.L. conceived the experiments, J.W. conducted the experiments, R.L. collected and processed the data, G.S. and K.Z. analyzed the results, X.L. wrote the main content of the manuscript, C.W. and T.Z. provided critical revisions on the experiments. All authors reviewed the manuscript and provided feedback.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, J., Wu, J., Liu, R. et al. Potato late blight leaf detection in complex environments. Sci Rep 14, 31046 (2024). https://doi.org/10.1038/s41598-024-82272-3

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-82272-3