Abstract

Underwater image enhancement (UIE) is challenging since image degradation in aquatic environments is complicated and changing over time. Existing mainstream methods rely on either physical-model or data-driven, suffering from performance bottlenecks due to changes in imaging conditions or training instability. In this article, we attempt to adapt the diffusion model to the UIE task and propose a Content-Preserving Diffusion Model (CPDM) to address the above challenges. CPDM first leverages a diffusion model as its fundamental model for stable training and then designs a content-preserving framework to deal with changes in imaging conditions. Specifically, we construct a conditional input module by adopting both the raw image and the difference between the raw and noisy images as the input at each time step of the diffusion process, which can enhance the model’s adaptability by considering the changes involving the raw images in underwater environments. To preserve the essential content of the raw images, we construct a content compensation module for content-aware training by extracting low-level image features of the raw images as compensation for each down block. We conducted tests on the LSUI, UIEB, and EUVP datasets, and the results show that CPDM outperforms state-of-the-art methods in both subjective and objective metrics, achieving the best overall performance. The GitHub link for the code is https://github.com/GZHU-DVL/CPDM.

Similar content being viewed by others

Introduction

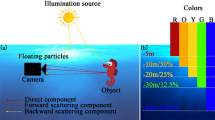

Underwater image enhancement (UIE) has gained great attention recently as human activities have increasingly ventured into the ocean. However, enhancing the visual quality of degraded underwater images poses significant challenges because of the unpredictable underwater environment and insufficient lighting conditions. The degradation of underwater images is primarily caused by the selective absorption and scattering of visible light wavelengths within the underwater environment1,2,3,4,5. Consequently, the acquired underwater images exhibit low contrast, low brightness, significant color deviations, blurred details, uneven bright spots, and other defects. Due to the complex and time-changing underwater imaging conditions, existing models often exhibit poor generalization and limited versatility. These limitations greatly hinder practical applications in fields such as marine ecology6, marine biology and archaeology7, remotely operated vehicles, and autonomous underwater vehicles8,9. Therefore, the study of UIE holds immense significance for advancing related underwater research.

Some UIE techniques have been developed to enhance the quality of underwater images, which can be roughly categorized into physical-model and data-driven methods. Physical-model methods10,11,12,13,14,15,16,17,18,19,20,21aim to model the physical process of light propagation in water by taking absorption, scattering, and other optical properties of the underwater environment into account. These methods often involve complex mathematical models and algorithms to simulate degradation. Motivated by the success of deep learning in a wide range of fields, a large number of data-driven methods22,23,24,25,26,27,28,29,30,31,32,33 based on deep learning have been proposed. These methods rely on large-scale datasets for model training. They typically learn the mapping relationship between degraded underwater images and their corresponding reference images, as well as effectively improve the restoration quality of underwater images based on the learned patterns and features.

Although physical-model and data-driven methods have achieved certain results in UIE tasks, there are still deficiencies. For the physical-model method, due to the ever-changing aquatic environments, the method tailored to a specific physical scenario struggles to adapt to other diverse scenarios, leading to limited generalization capabilities. On the other hand, the data-driven method faces challenges as currently available UIE datasets are predominantly collected from a single underwater environment characterized by low lighting, varying turbidity, and different particulate matter densities. Consequently, models trained on a single dataset often exhibit poor cross-dataset performance. Addressing these limitations, in this work, we propose a novel UIE framework named Content-Preserving Diffusion Model (CPDM) to enhance the quality of underwater images. By leveraging the diffusion model to learn the data distribution of the original images, our model is able to transcend the constraints of different physical scenarios while demonstrating robust performance across various datasets. Furthermore, we incorporate two tailored modules to elevate the quality of the restored images. Specifically, we employ the raw image as a conditional input during model training. To facilitate the extraction of differential features across time steps, we introduce the differences between the noisy image and the raw image at each time step as an additional conditional input. Additionally, to ensure that the model preserves the essential content of the raw images, we design a content compensation module that extracts low-level features from the raw images for content-aware training. Figure 1 offers a sneak peek into the enhancement results achieved by our CPDM. The key contributions of this article are summarized as follows:

-

We present a Content-Preserving Diffusion Model (CPDM) for underwater image enhancement (UIE). To adapt the diffusion model to such a new task, we take the raw image as conditional input for training at each time step, enabling the restored target image to have consistent content with the raw image.

-

We introduce the difference between the raw image and the noisy image of the current time step as an extra conditional input for content-aware training at the current time step, iteratively refining the output of each time step and resulting in a high-quality enhanced target image.

-

We design a content compensation module to ensure that the trained model preserves the low-level image features of raw images, such as structure, texture, and edge, preventing excessive modification of these important details within the images.

-

Extensive experimental results show that our CPDM exhibits superior performance. The ablation study also demonstrates the effectiveness of each module designed in this work. Especially, the proposed CPDM achieves good generalization and greatly improves color fidelity.

Related work

Underwater image enhancement methods

Recent advancements in computer vision have focused on improving task efficiency and model explainability. Innovations in instance segmentation emphasize better query-based methods for more robust instance separation and matching35. Nonparametric approaches like deep nearest centroids (DNC) enhance classification and segmentation by simplifying the decision process36. Flexible image translation techniques now offer more controllable and interpretable models for visual tasks37. Prototypes-based frameworks in motion learning provide a clearer understanding of dynamic scenes38. New strategies in model distillation help efficiently compress large models39. Deep reinforcement learning has been successfully applied to optimize resource allocation in dynamic cloud networks40. Advances in signal processing allow for more accurate identification of emitter signals41. Multitask learning frameworks have shown significant improvements in scene understanding and depth estimation42. Building on these advancements in computer vision, new methodologies are also being explored for specialized tasks such as underwater image enhancement. Methods for underwater image enhancement play a crucial role in improving the visual quality of underwater images. The existing mainstream methods mainly consist of physical and data-driven model43.

Physical-model.The physical-model methods take the UIE as an inverse problem. This inverse problem takes underwater images as the starting point, combines them with the physical-model that causes their degradation, and restores the original image through backward solving. These methods involve several steps: constructing a physical-model of the underwater image degradation process, estimating the model parameters based on the given image, and using the estimated parameters to restore the underwater image to a target image. By solving the inverse problem, these methods can enhance underwater image quality, mitigating the impact of degradation factors such as color distortion, scattering, and light attenuation. Drews et al12. introduced the Underwater Dark Channel Prior method, addressing the issue of the unreliable red channel in underwater images. Liu et al44. designed a cost function that ensures the dark channel of underwater images tends to zero and minimized this cost function to find the optimal transmission mapping that maximizes image contrast. Peng et al14. enhanced underwater images by estimating image blur and depth. Peng et al16. incorporated adaptive color correction into the image restoration model by introducing a generalized dark channel prior. Akkaynak et al17. presented a modified underwater color correction method to enhance the visual quality of underwater images. Zhang et al19. employed multiple strategies to tackle the issues of color deviations and low visibility in underwater images. Zhuang et al20. designed a hyper-laplacian reflectance priors-inspired retinex variational model to enhance underwater images. Zhang et al21. addressed the serious quality degradation issues faced by underwater images through dual prior optimized contrast enhancement and piecewise color correction.

Data-driven.In addition to physical-model methods, data-driven approaches for underwater image enhancement have also seen significant development in recent years29,45,46. Since the effectiveness of underwater image enhancement is influenced by specific factors such as scene, lighting condition, temperature, and turbidity, it is challenging to employ synthetic and realistic underwater images for network training. Moreover, there are certain challenges in applying neural networks trained on synthetic underwater image datasets to real-world scenarios. WaterGAN22utilized depth maps and in-air images as input to generate synthetic underwater images. These synthetic underwater images are subsequently used to correct the colors of monocular underwater images. Water CycleGAN23relaxed the requirement of paired underwater images by utilizing a weakly-supervised color transfer approach to correct color distortions. However, it may generate unrealistic results. Guo et al24. proposed a multi-scale dense GAN for underwater image enhancement. However, it still has shortcomings in overcoming the limitations of GANs47regarding output unpredictability. Ucolor25integrated an underwater physical imaging model and a medium transmission-guided model to enhance image quality in regions with severe degradation. However, the performance of the approach is significantly affected by different underwater environments. U-shape28designed a novel U-shaped transformer with an integrated module to enhance the network’s focus on the severe attenuation of color channels and spatial regions. However, this method still exhibits major color distortion. As for data-driven methods, there have been several diffusion model-based studies. For instance, TBDM32designed a lightweight transformer-based diffusion network and employed skip sampling strategies, piecewise sampling, and evolutionary algorithm search to optimize the sampling process. Lu et al30. proposed a novel fusion-diffusion and denoising process, and used a special accelerated sampling technique to improve sampling speed and quality. DiffWater29performed channel-wise color compensation in the RGB color space, and customized it for different scenarios. Lu et al31. employed two UNet networks to complete image distribution transformation and denoising, thereby improving the visual quality of underwater images.

Unlike the aforementioned diffusion model-based methods, this paper proposes two novel designs to adapt the diffusion model to the UIE task. Firstly, at each time step t, we input the image difference between the original underwater image and the current noisy raw clean image into the model. This increases the input information to the model, enabling it to extract the difference information between underwater and clean images. Secondly, we design a content preservation module, which inputs the low-level content and structural features of the underwater image into the model. This ensures that the restored image maintains consistency in content and structure with the original underwater image.

Diffusion model for image generation

As a generative model, the diffusion model has demonstrated impressive performance in numerous computer vision tasks. According to the presence or absence of conditions, the diffusion model can be categorized into conditional diffusion and unconditional diffusion48.

Unconditional Diffusion.The Denoising Diffusion Probabilistic Model (DDPM)49,50draws inspiration from non-equilibrium thermodynamics51and are composed of two processes: forward noising process and backward denoising process. In the forward process, DDPM applies a Markov chain-based diffusion, gradually adding noise to the original image until it finally conforms to a standard Gaussian distribution. The backward process is the inverse of the forward process, where a sample is drawn from a standard Gaussian distribution, and the noise introduced during the forward process is gradually eliminated, resulting in the gradual generation of the target image. Denoising Diffusion Implicit Model (DDIM)52 optimizes the sampling process of DDPM by transforming it into a non-Markovian process and enhancing sampling efficiency. The training process remains unchanged, while significant optimizations are made to the steps in the sampling process.

Conditional Diffusion.Conditional diffusion models are built upon the diffusion model and incorporate additional conditions, such as category, text, and image, to guide the diffusion and generation processes. Guided diffusion53utilizes a classifier to classify the generated images, calculates gradients based on the cross-entropy loss between the classification score and the target category, and then employs these gradients to guide the next sampling. This method has a significant advantage in that it does not require retraining the diffusion model. Instead, guidance is added during the forward process to achieve the desired generation effect. Semantic guidance diffusion (SGD)54introduces two different types of guidance, which are reference graph-based guidance and text-based guidance. By designing corresponding gradient terms, the SGD method achieves specific guidance effects tailored to these different forms of category guidance. In AS-OCT55, the conditional input images are incorporated into both the encoder and decoder layers of the UNet to guide the model in generating specific target images. ControlNet56 introduces conditional input images into each layer of the UNet decoder to guide the pretrained diffusion model in generating specific target images. Unlike AS-OCT and ControlNet, our approach introduces conditional input images into each layer of the UNet encoder. This design leverages the skip connections between the encoder and decoder layers with matching feature dimensions in the UNet structure. While our method shares similarities with ControlNet and AS-OCT, we have made adjustments based on the unique structure of the UNet, achieving an outstanding effect in generating specific target images. Unlike traditional methods that input categories or images as conditions into the model, we input the differential image as a condition into the model, enabling it to better extract the difference information between underwater images and clean images. To enhance the application of CPDM to UIE tasks, we have designed a content compensation module to improve the image restoration performance of the CPDM.

Proposed method

In this section, we will provide a detailed explanation regarding the technical details of the proposed Content-Preserving Diffusion Model (CPDM). Our CPDM includes the conditional input and content compensation modules, which are shown in Figures 2 and 3. Figure 2 illustrates the overall forward noise addition process (indicated by arrows pointing left) and backward noise removal process (indicated by arrows pointing right) of CPDM. Figure 3 details the specific implementation of the conditional input and content compensation modules at each time step t. The conditional input module feeds additional information into the model to enhance its performance or guide its output. The content compensation modules, on the other hand, effectively integrate low-level information into the UNet structure through 1\(\times\)1 convolutional operations, supplementing or enhancing the information processed by the UNet. In the following, we will describe these two modules in detail.

Conditional input module

Our CPDM for underwater image enhancement differs from the fundamental diffusion model in the training and sampling processes. First, we need a paired dataset \(\{(x_0^i, y_0^i)\}, i=0,1,...,S\) for training, where S is the size of the dataset, \(y_0^i\) and \(x_0^i\) denote the i-th raw degraded underwater image and its corresponding raw clean image, respectively. For simplicity, we hereinafter use the sample (\(x_0, y_0\)) to represent an arbitrary training sample (\(x_0^i, y_0^i\)). In the training process, except for the input of the noisy image (\(x_t\)), we input the raw underwater image (\(y_0\)) at each time step t as a supervisory condition, which can guide the diffusion model to generate the enhanced underwater images. we found that when applying it to the UIE task, the diffusion model based on the UNet architecture, which has only structures such as downsampling, skip connections, and upsampling, performs well in image segmentation. In DDPM, we expect UNet to perform noise prediction, so it needs to extract more information from the input image. To address this problem, an additional condition is introduced, which is the difference between the noisy image (\(x_t\)) at the current time step t and the raw underwater image (\(y_0\)).

By incorporating this difference \((y_0-x_t)\) as another conditional input, the network can extract more useful information and cues about \(y_0\) and \(x_t\). The extracted information helps the diffusion model to produce more accurate and visually appealing enhancements.

After introducing our conditional input (\(y_0\) and \(y_0-x_t\)), the posterior probability of our diffusion model (denoted as \(\theta _{\text {cpdm}}\)) can be defined as

Accordingly, the mean of the predicted noise can be written as

Therefore, our loss function \(\mathscr {L}_{\text {simple}}\) can be defined as

Content compensation module

In this subsection, we introduce a content compensation module to boost the ability of the information aggregation inside the noise prediction network. It is known that the UNet depends on a relatively straightforward network structure, and the UIE task demands the safeguarding of vital low-level features, including color, contour, edge, texture, and shape. We want the predicted noise to strongly correlate with the low-level characteristics of the underwater image \(y_0\), such as contour, edge, texture, and shape. Thus, we designed a content compensation module that inputs the low-level information of the underwater image \(y_0\) into the network. Specifically, we feed the low-level information into the UNet encoder. Since the encoder and decoder are directly connected through skip connections, we input it only into the encoder. The training framework for time step t is illustrated in Figure 3. As shown in Figure 3, each layer of our UNet has four blocks, and the content compensation module inputs a low-level feature of the raw image into the last block of each layer in the encoder side (i.e., downsampling part). Such low-level features can always control the network to preserve the image content, which is beneficial to restore a high-quality target image corresponding to the input raw underwater image. The significance of the content compensation module lies in its ability to effectively retain the low-level features during the sampling process, thereby leading to an overall enhancement in the quality of the restored image. Algorithm 1 and Algorithm 2 illustrate our training and sampling processes, respectively.

Experiments

Dataset

Large Scale Underwater Image Dataset (LSUI)28contains 4,279 image pairs. This dataset involves a rich range of underwater scene images, including various water body types, lighting conditions, and target categories, all with good visual quality. We divide the LSUI into 3,879 pairs of training data (Train_L) and 400 pairs of test data (Test_L400). In addition, an Underwater Image Enhancement Benchmark (UIEB) dataset43is also used in our experiment. This dataset contains 890 data pairs, where 800 pairs are used for training, and the remaining 90 pairs are used for testing (Test_U90). In addition to these two datasets, we select 200 pairs of underwater images from the Enhancing Underwater Visual Perception dataset (EUVP)57 for out-of-sample testing. This additional test dataset is referred to as Test_E200. The statistics of all training and testing data are shown in Table 1. To facilitate the training of our diffusion model, we resize all image pairs into 64\(\times\)64 size.

Experimental setting and metrics

Our experiments are run on an RXT 3090 GPU. In the forward process, we set \(T=1000\). The number of iterations of the model is 2, 000, 000, and the parameters follow the parameter settings of DDPM. Since the test dataset includes the reference images, we evaluate our experimental results using reference-based metrics such as PSNR60, SSIM61, and MSE. These three metrics reflect the proximity to the reference, with higher PSNR values representing closer image content, higher SSIM values reflecting more similar structures and textures, and lower MSE values indicating smaller differences between the corresponding pixels of two images. Nine mainstream UIE methods including WaterNet43, FUnIE57, Ucolor25, Restormer58, Maxim59, U-shape Transformer28, TBDM32, WWPF34, and DiffWater29 are selected for the performance comparison.

Visual comparison of enhanced underwater images by various methods on the Test_L400 (LSUI) dataset. The leftmost Input represents the underwater image that needs to be enhanced, while the rightmost GT represents the clean reference image. The methods we compared include WaterNet43, FUnIE57, Ucolor25, U-shape Transformer28, TBDM32, WWPF34, and DiffWater29.

Visual comparison of enhanced underwater images by various methods on the Test_U90 (UIEB) dataset. The leftmost Input represents the underwater image that needs to be enhanced, while the rightmost GT represents the clean reference image. The methods we compared include WaterNet43, FUnIE57, Ucolor25, U-shape Transformer28, TBDM32, WWPF34, and DiffWater29.

Results

As shown in Table 2, our Content-Preserving Diffusion Model (CPDM) method achieves promising results in quantitative metrics (PSNR, SSIM, and MSE). Specifically, on Test_E200, our method outperforms all the compared methods in the three metrics. On Test_L400 and Test_U90, our method exhibits a slight decrease in SSIM and MSE compared to its best competitor, TBDM. However, our method’s SSIM is consistently higher than TBDM. Furthermore, our method achieves either the best or the second-best results across all tests, demonstrating excellent overall performance. Note that we conduct an extra test on the Test_E200 dataset, without prior training on the EUVP dataset. This test can verify the generalization ability of the compared methods. Table 3 summarizes the subjective metric NIQE results, where lower NIQE values indicate better image quality. The results show that images generated by CPDM have higher subjective quality. Table 4 compares the Flops and parameters of several diffusion model-based UIE methods, showing that these methods all have relatively high computational resource consumption. As shown in Figures 4 and 5, our CPDM method produces better visual results that are much closer to the reference images than its competitors. In particular from Figure 4, we can see that in the first row of images, CPDM and Ushape outperform the other methods in color restoration. In the second and fourth rows, TBDM and CPDM achieve better recovery effects compared to the other methods. For the third row, it is evident that the color consistency of the other methods is inferior to that of CPDM. In the fifth row, although none of the methods achieved particularly good recovery results, CPDM managed to produce images closest to the real ones compared to the other methods. From Figure 5, we can see that diffusion-based models generally perform well, but DiffWater appears visually darker and exhibits color recovery deviations. Both TBDM and CPDM achieve visual effects that are sufficiently close to clean images. For the first row of images, the colors in TBDM’s output are slightly brighter compared to the clean images, whereas CPDM’s output is closer to the clean images. This demonstrates the effectiveness of diffusion-based models and highlights the utility of the two modules we designed for UIE tasks.

The superior performance of our CPDM can be attributed to two key designs: conditional input module and content compensation module. Firstly, we introduce the conditional input during the training of the noise prediction network. By introducing the raw image and the difference between the raw image and the noisy image of the current time step as conditional input, the noise prediction model can iteratively refine useful characteristics from the conditional input. Through this step-by-step refinement, our method can restore high-quality target images. Secondly, the content compensation module plays a pivotal role in extracting the low-level features of the input images, as depicted in Figure 3. By integrating such features into the UNet network, the content compensation module ensures that the enhanced images possess the same content information as the raw images, such as edge, texture, and shape, throughout the sampling process. This preservation of low-level features contributes significantly to the overall improvement in the quality of the enhanced images.

Ablation study

To verify the effectiveness of each module designed in our method, we conduct a series of ablation experiments in a step-by-step addition manner. This way allows us to gradually assess the contribution of each module to the overall performance of CPDM. The numerical results of these ablation experiments are summarized in Table 5, and the corresponding visualization effects are shown in Figure 6.

Base Model. The visual effects show that utilizing only the raw image as the input condition (model-A) can produce semantically meaningful results. This showcases a certain potential of diffusion-based methods for enhancing underwater images. However, we can see from Table 5 that its numerical results arrive at the lowest level in all objective metrics.

Model with Conditional Input Module. The performance of model-B is improved when the offset between the raw image and the noisy image of the current time step is added as the conditional input. Adding the dual-input condition significantly enhances the model’s overall performance. Compared to model-A, incorporating the offset between the raw image and the noisy image of the current time step in model-B allows for further integration of the information of \(x_t\) predicted at the current time step. As shown in Figure 6, the output images of model-B exhibit improved consistency in terms of color tone compared to those of model-A. Furthermore, as indicated by the quantitative metrics in Table 5, model-B outperforms model-A in objective metrics. Our designed conditional input module incorporates the \(y_0-x_t\) of the current time step as a conditional input into the diffusion model. Compared to the raw diffusion model, including a conditional input related to \(x_t\) provides additional complementary information to the model. Thus, our designed conditional input module is proven to be beneficial for conditional diffusion tasks.

Model with Content Compensation Module. To validate the functionality of our designed content compensation module, we introduce model-C, which incorporates both \(y_0\) and the content compensation module as inputs. Unlike model-B, model-C removes the input \(y_0-x_t\) and includes a content compensation module instead. As shown in Figure 6, we can observe that all our models achieve good visual results, including model-A, model-B, and model-C. Interestingly, model-C exhibits improved color consistency closer to the real reference images than model-A and model-B. As presented in Table 5, model-C outperforms models-A and model-B in terms of numerical results. The design of model-C incorporates the content compensation module on top of model-A. Our designed content compensation module can extract structural information from the input \(y_0\) across different feature dimensions, which is then fed into different layers of the UNet architecture. This further enhances the encoding of input information and decoding of output in the UNet framework. Therefore, our designed content compensation module is also proven to be beneficial for conditional diffusion tasks.

Full Model. Finally, we can see that the full model (model-D) encompassing all modules achieves the best results. This outcome indicates that each module of the CPDM plays a specific role and contributes to its overall effectiveness. The step-by-step addition of these modules gradually enhances the model’s capability, leading to improved image quality in the UIE task.

Conclusion

In the article, we have attempted to adapt the diffusion model to the underwater image enhancement (UIE) task and have presented a Content-Preserving Diffusion Model (CPDM) for enhancing the quality of the restored underwater image. The proposed CPDM has demonstrated impressive performance compared with the leading UIE methods. We introduce two carefully designed conditional inputs, effectively guiding CPDM to generate high-quality results. Moreover, our designed content compensation module plays a crucial role throughout the training process, ensuring that raw images provide more low-level features as guiding conditions for generating target images. Our CPDM operates in an iterative refinement paradigm by embedding two modules into each time step during both the training and sampling processes, thereby guiding the generation of image content in each denoising step and enhancing the visual quality of the restored images. Extensive experimental results validate the outstanding capabilities of CPDM in terms of numerical evaluations and visual effects. More importantly, the methodology designed in our CPDM can be easily extended to other conditional generative tasks.

Limitations and future work

Our CPDM has demonstrated good performance in UIE tasks from both qualitative and quantitative perspectives. However, training the diffusion model requires vast computational resources, resulting in a parameter volume of 121 million according to our experiment. This could, to some extent, hinder its practical deployment. In the future, we may introduce the architecture of the latent diffusion model (LDM) to encode the raw data into the latent space, thereby reducing the computational costs of training and sampling. Additionally, the sampling speed of our CPDM is still slow, and real-time sampling cannot be achieved at present. Promisingly, we can introduce the recently proposed wavelet diffusion model (WaveDiff) as the backbone of our CPDM, which only requires four sampling steps to obtain the final recovery result. We hope to reduce the consumption of computational resources and improve sampling speed using the above methods, but how to customize LDM and WaveDiff for our UIE task requires further and deeper exploration.

Data availability

The data that support the findings of this study are openly available in the public repository at https://bianlab.github.io/data.html (LSUI reference number 28), https://li-chongyi.github.io/proj_benchmark.html (UIEB reference number 35), and https://irvlab.cs.umn.edu/resources/euvp-dataset (EUVP reference number 49) for anyone to access and use.

References

McGlamery, B. A computer model for underwater camera systems. In Ocean Optics VI 208, 221–231 (1980).

Jaffe, J. S. Computer modeling and the design of optimal underwater imaging systems. IEEE Journal of Oceanic Engineering 15, 101–111 (1990).

Hou, W., Woods, S., Jarosz, E., Goode, W. & Weidemann, A. Optical turbulence on underwater image degradation in natural environments. Applied Optics 51, 2678–2686 (2012).

Akkaynak, D. et al. What is the space of attenuation coefficients in underwater computer vision? In IEEE Conference on Computer Vision and Pattern Recognition, 4931–4940 (2017).

Akkaynak, D. & Treibitz, T. A revised underwater image formation model. In IEEE Conference on Computer Vision and Pattern Recognition, 6723–6732 (2018).

Strachan, N. Recognition of fish species by colour and shape. Image and Vision Computing 11, 2–10 (1993).

Ludvigsen, M., Sortland, B., Johnsen, G. & Singh, H. Applications of geo-referenced underwater photo mosaics in marine biology and archaeology. Oceanography 20, 140–149 (2007).

Johnsen, G., Ludvigsen, M., Sørensen, A. & Aas, L. M. S. The use of underwater hyperspectral imaging deployed on remotely operated vehicles-methods and applications. IFAC-PapersOnLine 49, 476–481 (2016).

Ahn, J., Yasukawa, S., Sonoda, T., Ura, T. & Ishii, K. Enhancement of deep-sea floor images obtained by an underwater vehicle and its evaluation by crab recognition. Journal of Marine Science and Technology 22, 758–770 (2017).

Galdran, A., Pardo, D., Picón, A. & Alvarez-Gila, A. Automatic red-channel underwater image restoration. Journal of Visual Communication and Image Representation 26, 132–145 (2015).

Li, C.-Y., Guo, J.-C., Cong, R.-M., Pang, Y.-W. & Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Transactions on Image Processing 25, 5664–5677 (2016).

Drews, P. L., Nascimento, E. R., Botelho, S. S. & Campos, M. F. M. Underwater depth estimation and image restoration based on single images. IEEE Computer Graphics and Applications 36, 24–35 (2016).

Li, C., Guo, J., Guo, C., Cong, R. & Gong, J. A hybrid method for underwater image correction. Pattern Recognition Letters 94, 62–67 (2017).

Peng, Y.-T. & Cosman, P. C. Underwater image restoration based on image blurriness and light absorption. IEEE Transactions on Image Processing 26, 1579–1594 (2017).

Wang, Y., Liu, H. & Chau, L.-P. Single underwater image restoration using adaptive attenuation-curve prior. IEEE Transactions on Circuits and Systems I: Regular Papers 65, 992–1002 (2017).

Peng, Y.-T., Cao, K. & Cosman, P. C. Generalization of the dark channel prior for single image restoration. IEEE Transactions on Image Processing 27, 2856–2868 (2018).

Akkaynak, D. & Treibitz, T. Sea-thru: A method for removing water from underwater images. In IEEE Conference on Computer Vision and Pattern Recognition, 1682–1691 (2019).

Zhuang, P., Li, C. & Wu, J. Bayesian retinex underwater image enhancement. Engineering Applications of Artificial Intelligence 101, 104171 (2021).

Zhang, W. et al. Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Transactions on Image Processing 31, 3997–4010 (2022).

Zhuang, P., Wu, J., Porikli, F. & Li, C. Underwater image enhancement with hyper-laplacian reflectance priors. IEEE Transactions on Image Processing 31, 5442–5455 (2022).

Zhang, W., Jin, S., Zhuang, P., Liang, Z. & Li, C. Underwater image enhancement via piecewise color correction and dual prior optimized contrast enhancement. IEEE Signal Processing Letters 30, 229–233 (2023).

Li, J., Skinner, K. A., Eustice, R. M. & Johnson-Roberson, M. Watergan: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robotics and Automation Letters 3, 387–394 (2017).

Li, C., Guo, J. & Guo, C. Emerging from water: Underwater image color correction based on weakly supervised color transfer. IEEE Signal Processing Letters 25, 323–327 (2018).

Guo, Y., Li, H. & Zhuang, P. Underwater image enhancement using a multiscale dense generative adversarial network. IEEE Journal of Oceanic Engineering 45, 862–870 (2019).

Li, C. et al. Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Transactions on Image Processing 30, 4985–5000 (2021).

Rowghanian, V. Underwater image restoration with haar wavelet transform and ensemble of triple correction algorithms using bootstrap aggregation and random forests. Scientific Reports 12, 8952 (2022).

Fu, Z., Lin, X., Wang, W., Huang, Y. & Ding, X. Underwater image enhancement via learning water type desensitized representations. In IEEE International Conference on Acoustics, Speech and Signal Processing, 2764–2768 (2022).

Peng, L., Zhu, C. & Bian, L. U-shape transformer for underwater image enhancement. IEEE Transactions on Image Processing 32, 3066–3079 (2023).

Guan, M. et al. Diffwater: Underwater image enhancement based on conditional denoising diffusion probabilistic model. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing (2023).

Lu, S., Guan, F., Zhang, H. & Lai, H. Speed-up ddpm for real-time underwater image enhancement. IEEE Transactions on Circuits and Systems for Video Technology (2023).

Lu, S., Guan, F., Zhang, H. & Lai, H. Underwater image enhancement method based on denoising diffusion probabilistic model. Journal of Visual Communication and Image Representation 96, 103926 (2023).

Tang, Y., Kawasaki, H. & Iwaguchi, T. Underwater image enhancement by transformer-based diffusion model with non-uniform sampling for skip strategy. In Proceedings of the ACM International Conference on Multimedia, 5419–5427 (2023).

Wang, L. et al. Underwater image restoration based on dual information modulation network. Scientific Reports 14, 5416 (2024).

Zhang, W. et al. Underwater image enhancement via weighted wavelet visual perception fusion. IEEE Transactions on Circuits and Systems for Video Technology (2023).

Wang, W., Liang, J. & Liu, D. Learning equivariant segmentation with instance-unique querying. In Advances in Neural Information Processing Systems 35, 12826–12840 (2022).

Wang, W., Han, C., Zhou, T. & Liu, D. Visual recognition with deep nearest centroids. In International Conference on Learning Representations (2023).

Han, C. et al. Image translation as diffusion visual programmers. In International Conference on Learning Representations (2024).

Han, C. et al. Prototypical transformer as unified motion learners. In International Conference on Machine Learning (2024).

Han, C. et al. Amd: Automatic multi-step distillation of large-scale vision models. In European Conference on Computer Vision (2024).

Liu, Y. & Zhang, J (Deep reinforcement learning approach. IEEE Transactions on Network and Service Management, Service function chain embedding meets machine learning, 2024).

Zhang, J., Liu, Y., Ding, G., Tang, B. & Chen, Y. Adaptive decomposition and extraction network of individual fingerprint features for specific emitter identification. IEEE Transactions on Information Forensics and Security (2024).

Zhang, J., Su, Q., Tang, B., Wang, C. & Li, Y. Dpsnet: Multitask learning using geometry reasoning for scene depth and semantics. IEEE Transactions on Neural Networks and Learning Systems 34, 2710–2721 (2021).

Li, C. et al. An underwater image enhancement benchmark dataset and beyond. IEEE Transactions on Image Processing 29, 4376–4389 (2019).

Liu, H. & Chau, L.-P. Underwater image restoration based on contrast enhancement. In IEEE International Conference on Digital Signal Processing, 584–588 (2016).

Paulo Drews, J. R., Nascimento, E., Moraes, F., Botelho, S. & Campos, M. Transmission estimation in underwater single images. In IEEE International Conference on Computer Vision Workshops, 825–830 (2013).

Yang, M., Hu, K., Du, Y., Wei, Z. & Hu, J. Underwater image enhancement based on conditional generative adversarial network. Signal Processing Image Communication 81 (2019).

Goodfellow, I. et al. Generative adversarial nets. In Advances in Neural Information Processing Systems, 2672–2680 (2014).

Croitoru, F.-A., Hondru, V., Ionescu, R. T. & Shah, M. Diffusion models in vision: A survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 45, 10850–10869 (2023).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. In Advances in Neural Information Processing Systems, 6840–6851 (2020).

Sohl-Dickstein, J., Weiss, E. A., Maheswaranathan, N. & Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning, 2256–2265 (2015).

Jarzynski & C. Equilibrium free energy differences from nonequilibrium measurements: a master equation approach. Physical Review E 56, 5018-5035 (1997).

Song, J., Meng, C. & Ermon, S. Denoising diffusion implicit models. In International Conference on Learning Representations (2020).

Dhariwal, P. & Nichol, A. Diffusion models beat gans on image synthesis. In Advances in Neural Information Processing Systems, 8780–8794 (2021).

Liu, X. et al. More control for free! image synthesis with semantic diffusion guidance. In IEEE Winter Conference on Applications of Computer Vision, 289–299 (2023).

Li, S. et al. Content-preserving diffusion model for unsupervised as-oct image despeckling. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 660–670 (2023).

Zhang, L., Rao, A. & Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 3836–3847 (2023).

Islam, M. J., Xia, Y. & Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robotics and Automation Letters 5, 3227–3234 (2020).

Zamir, S. W. et al. Restormer: Efficient transformer for high-resolution image restoration. In IEEE Conference on Computer Vision and Pattern Recognition, 5728–5739 (2022).

Tu, Z. et al. Maxim: Multi-axis mlp for image processing. In IEEE Conference on Computer Vision and Pattern Recognition, 5769–5780 (2022).

Korhonen, J. & You, J. Peak signal-to-noise ratio revisited: Is simple beautiful? In Fourth International Workshop on Quality of Multimedia Experience, 37–38 (2012).

Horé, A. & Ziou, D. Image quality metrics: Psnr vs. ssim. In 20th International Conference on Pattern Recognition, 2366–2369 (2010).

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants 62272116 and 62394334, and in part by the Science Foundation of Guangzhou University under Grant YJ2023022.

Author information

Authors and Affiliations

Contributions

X.S.: Investigation, Methodology, Coding, Experiments, Writing - Original Draft. Y.-G. W.: Data Curation, Writing - Review & Editing, Resources, Supervision. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Accession codes

The code will be available once the paper is published.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shi, X., Wang, YG. CPDM: Content-preserving diffusion model for underwater image enhancement. Sci Rep 14, 31309 (2024). https://doi.org/10.1038/s41598-024-82803-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-82803-y

Keywords

This article is cited by

-

Dscyolo: dynamic snake convolutional YOLO network for underwater image recognition

The Journal of Supercomputing (2026)