Abstract

Traditionally, the tonotopically organized (place-pitch) auditory neural pathway was considered to be hard-wired. Cochlear implants restore hearing by arbitrarily mapping frequency-amplitude information. This study shows that recipients, even after a long period of sound deprivation, retain a level of auditory plasticity that enables them to swiftly and concurrently learn to understand speech with two alternating, distinct frequency maps. During rehabilitation, subjects switched maps randomly on a daily basis, serving as their own controls. After stating their preference, subjects on average maintained their hearing performance with the non-preferred, legacy map over time, while continuing to learn and improve with their preferred map. Training with and processing auditory information from two maps did not appear to involve competition over neural resources, as would be the case in a typical zero-sum game. This reveals a new level of flexibility in learning and long-term adaptation of the auditory system. Practically, the novel study design halves the required sample size while mitigating order effects associated with neural plasticity, benefitting sensory-oriented trials.


Introduction

We set out to investigate whether adult humans are able to relearn hearing with a cochlear implant (CI) after profound deafness while simultaneously using two distinct cochlear frequency allocations alternating over time1. In short, the frequency allocation tables included the manufacturer's standard table and a table optimized to resemble tonotopic stimulation in a healthy cochlea. Newly implanted postlingual CI users trained hearing while randomly alternating on a daily basis between a standard map and a tonotopically aligned map. If successful, this would indicate that the plasticity of the adult auditory system after sensory deprivation is even broader than previously believed2,3,4. Alternating between the two cochlear frequency allocation maps effectively changed the place-spectral information allocation in the cochlea, a change that mimics a tonotopy shift for normal-hearing subjects. By quantifying and comparing the learning process for both maps, the impact of dynamic spectral information (re)allocation on auditory functional training and the extent of auditory generalization5 can be analysed experimentally in humans. Previously, we used this setup to quantify performance with the two maps for the clinical purpose of selecting the best map and found that, contrary to our expectations, the more ‘natural’ map performed worse6. As outlined in Lambriks et al.6, possible reasons for this result include underrepresentation of important low-frequency regions in the tonotopically aligned map and a difference in the total number of activated electrodes between fittings. Additionally, variability in neural health and cochlear anatomy likely contributed to individual differences in map performance.

Much remains to be explored regarding the dynamic capacities of our auditory system, specifically in listeners who have received a cochlear implant. In terms of short-term adaptation: how are humans able to adapt to switches in frequency allocation maps and continue to hear? In terms of plasticity and learning: how does speech comprehension of CI users improve over time when using two maps that differ in both spectral information and spectral resolution? And do CI users retain a representation of the legacy (i.e. non-preferred) map, in a (dormant) brain network7, after a prolonged period of using their preferred map? This experimental setup is somewhat similar to studies of neural plasticity using altered spectral pinna cues for sound localization8. Effectively, this intervention changes the auditory information on a day-to-day basis within the auditory and speech-processing connectomes of an individual (forms of structural connectivity) as these redevelop during rehabilitation after deafness. By tracking performance in speech understanding over time, this provides a novel perspective on the plasticity of the functional neural networks involved.

In addition to improving our understanding of the capabilities of auditory learning, the outcomes of this study can illustrate the advantages of combining two different types of experiments in the auditory and clinical neurosciences in a hybrid setup: a cross-over trial and a multiple-arm randomised controlled trial (RCT). First, randomly alternating two different sound processor configurations over time prevents the order effect of a cross-over trial. This is crucial for studies of neural plasticity, because the period after sensory reactivation could be a critical period for neural plasticity, and such periods are well understood only in infancy9,10,11. Second, the trial setup requires fewer participants than a conventional RCT, because each subject serves as their own control. This removes the need for separate groups, effectively halving the sample size while maintaining statistical power. Finally, comparing the incremental auditory performance with two maps within subjects diminishes the effects of patient-specific factors.
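The halving argument can be made concrete with the usual normal-approximation sample-size formulas for comparing two means. The sketch below is illustrative only and is not taken from the trial protocol: it assumes a continuous outcome such as a CNC score in percentage points, a common standard deviation `sigma`, a within-subject correlation `rho` between the two conditions, and hypothetical values for the detectable difference `delta`; the function names are also hypothetical. Carry-over and period effects, which the daily randomization is meant to limit, are ignored.

```python
import math
from statistics import NormalDist


def z_sum(alpha: float = 0.05, power: float = 0.8) -> float:
    """Sum of standard-normal quantiles for a two-sided test at level alpha."""
    nd = NormalDist()
    return nd.inv_cdf(1 - alpha / 2) + nd.inv_cdf(power)


def parallel_total_n(delta: float, sigma: float,
                     alpha: float = 0.05, power: float = 0.8) -> int:
    """Total subjects for a two-arm parallel RCT with equal arms (hypothetical helper)."""
    per_arm = 2 * sigma**2 * z_sum(alpha, power) ** 2 / delta**2
    return 2 * math.ceil(per_arm)


def within_subject_n(delta: float, sigma: float, rho: float = 0.0,
                     alpha: float = 0.05, power: float = 0.8) -> int:
    """Subjects for a within-subject comparison where each subject is their own control.

    The paired difference has variance 2 * sigma**2 * (1 - rho); rho = 0 is the
    conservative case with no benefit from within-subject correlation.
    """
    var_diff = 2 * sigma**2 * (1 - rho)
    return math.ceil(var_diff * z_sum(alpha, power) ** 2 / delta**2)


if __name__ == "__main__":
    # Hypothetical effect size and spread, in percentage points.
    print(parallel_total_n(10, 15), within_subject_n(10, 15), within_subject_n(10, 15, rho=0.5))
```

With a hypothetical `delta` of 10 percentage points and `sigma` of 15, `parallel_total_n(10, 15)` returns 72 subjects and `within_subject_n(10, 15)` returns 36. With `rho = 0` the within-subject design therefore needs exactly half the subjects; any positive correlation between a subject's scores under the two maps (e.g. `rho = 0.5`, giving 18) reduces the number further.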

The current manuscript describes results of the clinical trial ELEPHANT, which has formed the basis for multiple publications reporting various subsets of its data. In Lambriks et al.1, the trial protocol was published. Primary results focusing on the initial three-month rehabilitation period were presented in Lambriks et al.6, while imaging and electrophysiological outcomes were published in Lambriks et al.12. The current manuscript extends these findings by reporting the learning and adaptation process in word recognition outcomes over a longer follow-up period of 12 months. All mentioned studies rely on the same dataset from the same cohort of cochlear implant users.

Results

Learning with two maps simultaneously

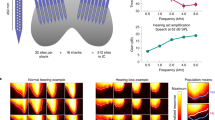

To investigate the plasticity of the human adult auditory system, newly implanted CI users (n = 14) participated in a cross-over design, switching between a standard fitting map (one-size-fits-all) and a tonotopically aligned map (Fig. 1A) according to a daily randomized wearing schedule for 3 months1. We assessed the effects of alternating spectral information allocations and the extent of neural plasticity by measuring word recognition scores at regular intervals after CI activation (Fig. 1B).

During the randomization period, exposure to the standard and tonotopic maps was approximately equal (53% vs. 47%). Contrary to our expectation that the experimental map would perform better, the clinical report6 showed that mean word recognition scores were better with the standard fitting than with the tonotopic fitting throughout the 3 months of map randomization (Fig. 1B). After using their preferred map for 3 subsequent months, twelve subjects (86%) chose to continue CI use with the standard map and two subjects (14%; S3 and S12) retained the tonotopic map for clinical use. During the period of individual choice, 12 subjects wore only one of the two maps (the preferred map) and thus did not experiment further with switching between settings. In contrast, subjects S9 and S12 also used their ultimately non-preferred, legacy processor for 27% and 43% of total wearing time, respectively.

The ‘individual choice’ panel of Fig. 1B shows that average performance with the legacy map, either tonotopic or standard, remained relatively stable (red). Speech understanding with the preferred map (which most patients wore exclusively) improved further (green). This difference in learning rate between the preferred and legacy map during the period of individual choice is shown in Fig. 1C. The median improvement in speech recognition with the preferred map was 6.9% (95% CI 1.79–11.98), significantly above 0%, indicating continued improvement during the individual choice period. In contrast, performance with the legacy map did not change significantly over time (−1.9%, 95% CI −6.22 to 2.46). The median of the intra-individual differences in improvement between maps was significantly higher than 0% (p = 0.02). This indicates that subjects continued to learn with the preferred map and, by the end of the individual choice period, had achieved a significantly greater performance increment than with the legacy map. There was no significant decline in speech comprehension with the legacy map, nor any indication of a substantial non-significant decline, indicating that subjects can simultaneously learn to hear with two alternating spectral information allocations.

Fig. 1. a, Median allocated frequency distribution of the standard (blue) and tonotopic (orange) settings, showing the lower frequency bound for each electrode. Median tonotopic electrode positions are represented in green. Median numbers of fitted channels per octave in each setting are shown on the right. Error bands indicate the first and third quartiles. V = virtual channel (enabled in the tonotopic setting). b, Learning curves for mean CNC word recognition during CI rehabilitation. For the randomization phase (equal exposure to both settings), results for the standard (blue) and tonotopic (orange) maps are shown. In the individual choice period (unequal exposure to both settings), the solid green line represents the preferred map and the solid red line represents the legacy map. The individual choice panel extends until day 150, the final time point at which measurements were performed with both settings. Mean scores of the standard (dashed blue line) and tonotopic (dashed orange line) settings, as presented in the randomization panel, are also continued in the individual choice panel. Error bands indicate 95% confidence intervals. The X symbols represent final scores with the preferred setting after 1 year (blue X for the standard and orange X for the tonotopic setting). c, Difference in speech recognition at the end compared with the beginning of the individual choice period for the preferred (green) and legacy (red) settings. Intra-individual differences in incremental performance over time between maps are shown in yellow. Confidence intervals for the median are represented by notches.
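The within-subject comparison in Fig. 1C, in which each subject's change with the preferred map minus their change with the legacy map is tested against zero, can be sketched as an exact Wilcoxon signed-rank test, the test named under Statistical analyses. This plain-Python sketch is not the study's analysis code (the analysis was performed in Mathematica), and its function names are hypothetical. It drops zero differences, assigns average ranks to ties, and obtains a two-sided p-value by enumerating every sign assignment, which is feasible for n = 14 (2^14 = 16,384 assignments). The example values are invented for illustration and are not study data.

```python
from itertools import product
from statistics import median


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_exact(diffs):
    """Exact two-sided Wilcoxon signed-rank test of 'median difference = 0'.

    Returns (W+, p). Zero differences are discarded (Wilcoxon's convention);
    the null distribution of W+ is built by flipping the sign of every rank.
    """
    d = [x for x in diffs if x != 0]
    ranks = average_ranks([abs(x) for x in d])
    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    expected = sum(ranks) / 2  # mean of W+ under the null hypothesis
    observed = abs(w_plus - expected)
    extreme = total = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        total += 1
        if abs(w - expected) >= observed - 1e-9:  # tolerance for float ranks
            extreme += 1
    return w_plus, extreme / total


if __name__ == "__main__":
    # Hypothetical per-subject changes in percentage points; NOT study data.
    preferred = [8, 5, 12, -2, 7, 10, 3, 6, 9, 4, 11, 1, 7, 5]
    legacy = [-3, 0, 2, -5, 1, -4, 5, -1, 0, -6, 3, -2, -1, 1]
    diffs = [p - l for p, l in zip(preferred, legacy)]
    w_plus, p_value = wilcoxon_exact(diffs)
    print(f"median difference = {median(diffs)}, W+ = {w_plus}, p = {p_value:.5f}")
```

Full enumeration is chosen here for transparency at small n; for larger samples a normal approximation or a library routine would be more practical.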

Characterization of the learning process on a group level during randomization

Table 1 characterizes learning during the randomization phase with multiple parameters for both maps. Here, learning rate represents the mean daily progress in word recognition over the first weeks and months of CI rehabilitation. With both settings, the learning rate was highest in the first week of rehabilitation, and scores continued to improve gradually up to the first month. After the first month, learning stabilized, showing only relatively small improvements in the second and third months. Between maps, learning during the first month was significantly faster with the standard setting (1.3% per day, IQR 1.1%) than with the tonotopic setting (0.9% per day, IQR 0.8%; p = 0.04, Wilcoxon signed-rank test). The duration of the first learning boost, termed Start (the number of days to reach a score 10% higher than the initial score at CI activation), was significantly shorter with the standard setting than with the tonotopic setting (p = 0.04). The parameter 75% of max, which represents the number of days to reach 75% of the highest score during rehabilitation, also favoured the standard setting (p = 0.04, Wilcoxon signed-rank test). The time until a learning plateau was reached, termed End (the number of days to reach a score 10% lower than the highest score), was shorter for the standard setting (44 days, IQR 30) than for the tonotopic setting (57.5 days, IQR 28), although the difference was not significant. Duration, the time between Start and End, did not differ significantly between fittings.
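The Table 1 parameters can be sketched as simple threshold crossings on a daily learning curve for one subject and one map. This is an illustrative sketch under stated assumptions: the curve is already interpolated to one score per day with day 0 at CI activation, the ‘10%’ margins for Start and End are interpreted as 10 percentage points (the text does not state whether they are absolute or relative), and all function names are hypothetical.

```python
from typing import Optional, Sequence


def first_day_reaching(curve: Sequence[float], threshold: float) -> Optional[int]:
    """First day index at which the daily curve reaches the threshold, or None."""
    for day, score in enumerate(curve):
        if score >= threshold:
            return day
    return None


def learning_parameters(curve: Sequence[float], margin: float = 10.0) -> dict:
    """Sketch of the Start / 75% of max / End / Duration parameters.

    curve[d] is the CNC score (%) on day d after activation.
    margin is in percentage points (assumption: '10%' read as absolute).
    """
    initial, best = curve[0], max(curve)
    start = first_day_reaching(curve, initial + margin)
    end = first_day_reaching(curve, best - margin)
    return {
        "start": start,
        "75% of max": first_day_reaching(curve, 0.75 * best),
        "end": end,
        "duration": None if start is None or end is None else end - start,
    }


def learning_rate(curve: Sequence[float], first_day: int, last_day: int) -> float:
    """Mean daily progress (percentage points per day) between two days."""
    return (curve[last_day] - curve[first_day]) / (last_day - first_day)


if __name__ == "__main__":
    # Hypothetical curve: +2 points per day from 10% to a ceiling of 70%.
    curve = [min(70.0, 10.0 + 2.0 * d) for d in range(91)]
    print(learning_parameters(curve), learning_rate(curve, 0, 7))
```

For this hypothetical curve, `learning_parameters` gives Start = 5, 75% of max = 22, End = 25 and Duration = 20 days, and `learning_rate(curve, 0, 7)` gives 2.0 percentage points per day.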

Characterization of the learning process on an individual level

Individual learning curves, presented in Extended Data Fig. 1, showed substantial variation in learning among patients (in general and/or between settings). For example, subject S4 was a slow learner at first but then quickly developed into one of the best performers of the study group. In contrast, S6 reached considerable speech understanding after a few weeks but then plateaued, with no major improvements after 1 month of rehabilitation. Most subjects obtained better speech understanding with the standard fitting (S1, S2, S5, S14), whereas S3 and S12 performed better with the tonotopic fitting, and another group showed only a minor difference in performance between settings (S4, S9, S10). Future studies with larger sample sizes should consider analysing learning curves in a similar qualitative way to identify subgroups of individuals who learn in a similar manner.

Learning with alternating spectral information allocations: the effect of the trial design

To evaluate the effect of the trial design on overall learning speed during CI rehabilitation, a post-hoc comparison was performed with a reference population from our clinic that received regular clinical follow-up. Like the study population, the reference population included subjects implanted with HiFocus Mid-Scala electrodes and fitted with Advanced Bionics speech processors. Despite being exposed to two settings, study subjects appeared to perform similarly to or better than the reference population (Fig. 2). Subject characteristics did not differ significantly between the two populations (age, p = 0.43; duration of hearing loss, p = 0.70; preoperative pure-tone average (500, 1000 and 2000 Hz) for the implanted ear, p = 0.74; best-aided CNC results (across 55, 65 and 75 dB SPL) for the implanted ear, p = 0.85; Mann-Whitney test; Extended Data Table 1).

Fig. 2. Learning curve for mean CNC word recognition with the preferred setting (N = 14) in study subjects (black) and interpolated clinical reference performance from a population of 21 CI subjects (purple). No measurement was performed at CI activation in the reference group. Error bands indicate 95% confidence intervals.

Discussion

It was long thought that auditory experience could influence fundamental aspects of the auditory system only during restricted ‘critical’ or ‘sensitive’ periods in development13. Extending the relatively recent shift13 in our understanding of auditory plasticity, our results demonstrate that the adult auditory system can even accommodate and learn two different, alternating frequency allocation maps after profound deafness.

Switching auditory neural networks by changing the sound processor

After a successful learning phase, the stable performance with the legacy map, compared with the continued improvement with the preferred map, suggests that CI recipients may employ two different neural networks at will. The relatively stable performance with the legacy map after prolonged discontinuation indicates that the performance differences between maps were not simply the result of a fixed ratio between performance levels determined by the effectiveness of the two maps.

For CI users, this means that learning two distinct frequency allocations during the rehabilitation phase provides the opportunity to select the best-performing or subjectively preferred map. Moreover, these results suggest that alternating between maps will not impede their final performance. Future studies using a randomized control group should determine whether such an intervention indeed improves speech understanding.

Partial coexistence of the auditory neural networks & auditory generalisation

One map does not seem to displace or ‘overwrite’ the other map in the brain, as has been suggested before14, nor did we find indications that alternating between maps diminishes speech understanding when two maps are learned concurrently. This is surprising, as spectral information is fundamental to our perception of sound and is prominently represented at different levels of the neural auditory pathways, which are strongly tonotopically organised. This suggests that the network topology in place allows exclusive, map-dependent information routing to coexist, ending in similar functional endpoints (speech) that nevertheless differ in performance. It also indicates that less frequently used neural routes remained present and functional over time.

CI users were able to switch between maps and continued to understand speech without significant interruption, indicating strong short-term adaptation. In addition, they were able to improve hearing performance using the alternating frequency allocation scheme, and our results suggest that both maps were learnt, to some extent, individually. Initially, the learning curves of the two maps differed substantially, as did the learning rates. After subjects selected a preferred map for the three-month period of individual choice, speech comprehension continued to improve with the preferred map while speech comprehension with the legacy map remained relatively stable over time. Apparently, the spectral information transfer within the auditory and speech networks was compatible enough to connect both maps to language representations. These results suggest that a form of generalisation and transfer learning was also present.

The neural substrate of switching between maps

Learning different frequency allocation maps might have modified the responsible neural networks on multiple levels, from individual dendrites to cortical areas5. Indeed, auditory frequency generalization studies consistently indicate that changes are unlikely to be restricted to frequency-tuned neurons or neural regions15. Perceptual learning often involves not a permanent change in cortical circuitry but a change in the gating of networks. Learning could benefit from reusing common neural elements and circuitry, which would minimize developmental resources or optimize energy consumption and information flow while enhancing compatibility with existing pathways. Effectively, such neural networks could switch or multiplex depending on the context13. Here, differences in the sound spectrogram between the two maps could signal the switch in networks and explain how CI users switched from one map to another without much effort and continued to understand speech. The multiplexing hypothesis15 and insights from brain network communication7 fit our behavioural observations well. These should be investigated further using measures such as functional imaging or high-temporal-resolution (electrical) measures of brain activity5.

Practical implications for auditory neuroscience

Besides the scientific implications of our findings, this study also has practical implications for auditory and neural plasticity research. The trial design used in this study introduced daily crossover randomization to prevent order effects arising from early brain plasticity that would favour whichever CI fitting strategy is provided first.
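A per-subject daily schedule of this kind can be sketched as follows. The protocol1 specifies a 1:1 ratio between the two maps, but the randomization algorithm itself is not described here, so the sketch assumes a simple approach: shuffle a list containing equal numbers of ‘standard’ and ‘tonotopic’ days over a 90-day period (approximating three months), with a per-subject seed for reproducibility. The function names are hypothetical.

```python
import random
from collections import Counter
from typing import List


def daily_schedule(n_days: int = 90, seed: int = 0) -> List[str]:
    """Hypothetical 1:1 daily randomization of two maps for one subject."""
    if n_days % 2:
        raise ValueError("n_days must be even for an exact 1:1 ratio")
    days = ["standard", "tonotopic"] * (n_days // 2)
    rng = random.Random(seed)  # per-subject seed makes the schedule reproducible
    rng.shuffle(days)
    return days


def longest_run(schedule: List[str]) -> int:
    """Longest streak of consecutive days assigned to the same map."""
    if not schedule:
        return 0
    best = run = 1
    for prev, cur in zip(schedule, schedule[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


if __name__ == "__main__":
    schedule = daily_schedule(90, seed=42)
    print(Counter(schedule), "longest run:", longest_run(schedule))
```

A plain shuffle can produce fairly long runs of the same map, which `longest_run` makes easy to check. A permuted-block scheme (for example, balancing within each week) would be an alternative design choice if shorter runs were preferred.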

Our results show that the present trial design enables a substantial reduction in sample size while controlling for forms of bias (e.g. blinding, patient factors) and critical periods after sensory deprivation. Results of the current study show discriminative learning between fittings; that is, within each subject, learning curves with the two settings differed in initial performance, steepness and end result. Therefore, the trial design appears to enable direct comparisons between CI settings. As discussed in Lambriks et al.1, it can be argued that absolute learning with either setting will be slower with this approach, because exposure to each setting is spread over twice the amount of time. However, the final performance of our patients was in line with that of clinical populations, indicating that learning curves were not negatively affected16,17,18. Selection bias may have occurred in our study population, as the characteristics of subjects willing to participate in the study could differ from those of the clinical population; no randomization procedure was applied to control for these differences. It should also be noted that the tonotopic maps in this study were based on an estimation using the Greenwood function, whereas the exact natural spectral information arrangement in the human cochlea remains unknown. It is therefore unclear to what extent stimulation with the tonotopic maps matched the actual corresponding acoustic locations in the cochlea and/or frequency perception in the auditory cortex, and whether alternative models of tonotopic distribution would have provided closer representations19,20. In general, all of these models, including the one presented here, have been validated only superficially21.
Future studies combining similar trial designs with other neuroimaging methods (for example, functional imaging or electrophysiological measures) could perhaps determine on what level(s) information multiplexing happens within the auditory connectome and when it happens during the learning process.

Further enhancing the development of auditory skills in CI users

Our study focussed on the learning process with two different frequency allocation maps. However, very little is known about how many auditory capabilities develop over time, either in general or specifically in hearing-impaired listeners (e.g. CI users). For example, how do humans learn to extract relevant meaning, in terms of features, cues, localisation and ultimately speech, from the auditory information provided? Common theories, such as perceptual learning2, Gestalt psychology or pattern recognition, are limited in explaining how such general auditory skills develop or relate to innate abilities. Nevertheless, CI recipients show remarkable flexibility in functioning adequately with distorted input, provided they have learned complex pattern recognition during normal language acquisition3, while the large unexplained variability in their performance remains poorly understood22. Recently, Froemke et al. showed in deafened rats that neuromodulation of the locus coeruleus is strongly related to plasticity and directly affects the learning rate with a CI23. It has been proposed that such a reward system, activated depending on the behavioural relevance of auditory cues, could partially explain the large unexplained variation in learning rates among new CI users. In the present investigation, a subset of individuals performed drastically differently in terms of learning rate and final performance with one of the two programmed maps, even though other circumstances (such as individual patient factors) were the same. Previously, we hypothesized that these differences in performance are related to a different representation of spectral information and to the neural health of the cochlea6. An additional relationship may exist in the form of a positive feedback loop, in which initial auditory performance is crucial for activating reward mechanisms that promote plasticity and enhance learning.
Consistent with this, subjects started to learn significantly faster with the map that was predominantly preferred and continued to have a higher learning rate with it during the first month.

While we know relatively little about the development of auditory capabilities and the underlying neural mechanisms, we know more about when learning occurs. For instance, the importance of exposure to relevant sounds during critical periods is well established3,9,10,11. In this respect, it would be interesting to compare the learning rates of adults, who in our study achieved the majority of their performance during the first month, with those of post-lingually deafened children with a CI. Zhou et al. suggested that such critical periods might be relative, showing that in rats long-term exposure to noise can appear to ‘reboot’ cortical organisation to a state similar to an immature auditory cortex24. Future investigations could perhaps focus on ‘reboot’ strategies for low-performing CI users.

How do the fundamental components in neural networks adapt to flexibly process changing streams of incoming information for behavioural use? Recent studies using complex deep neural network models showed that neural sound processing is adapted to statistical characteristics of natural soundscapes as well as peripheral processing properties25,26. Similarly, future studies modelling sound processing in CI recipients using similar complex models may provide new insights into auditory learning and neural plasticity.

Conclusion

The adult auditory system retains sufficient plasticity to swiftly and concurrently learn two alternating, distinct cochlear frequency mappings for speech understanding during rehabilitation, as demonstrated here in an experimental setup. Afterwards, subjects on average retained a similar level of performance with the legacy (no longer used) frequency mapping, while they continued to learn with the map in use. Interestingly, there were no indications that the two maps competed for the same neural resources (a zero-sum game). On a practical level, this suggests that subjects could improve their auditory performance with the CI by training with two daily alternating maps and selecting the best-performing map, without substantial negative effects on performance. Moreover, the results of this study demonstrate the potential of daily randomized, blinded, controlled trials for studying competing hearing interventions or the incremental auditory learning process. Such a design requires only half the number of participants of traditional designs, with discriminating results available within a month of follow-up.

Materials and methods

Ethics

This investigation was approved by the ethics committee of the Maastricht University Medical Center (MUMC+) and was registered in the Clinical Trials Register (NL64874.068.18) and at ClinicalTrials.gov (NCT03892941). The study was conducted in compliance with the Declaration of Helsinki and ISO 14155:2020 (Clinical investigation of medical devices for human subjects - Good clinical practice). Before signing informed consent, participants received an explanation of the study rationale and trial procedures. Subjects were compensated for their travel costs.

Study setup

In this single-blinded, controlled clinical study with daily crossover randomization, subjects were recruited prior to surgery, and treatment exposure was implemented directly from the start of CI rehabilitation. A detailed description of the trial set-up is given in its protocol publication1. For a period of three months, starting at first fit, subjects followed a randomization scheme whereby each individual crossed over between a standard and a tonotopically aligned map. Subjects received two labeled speech processors, one programmed with the standard settings and the other with the tonotopic settings. Each day, subjects were allocated to wear one of the two processors according to a randomization schedule with a 1:1 ratio between the maps. For the tonotopically aligned setting, the mapping of electrical input was aligned to a hypothesized natural spectral information arrangement in the individual cochlea, which was estimated from a post-operative cone beam CT scan27. In short, the CI's frequency allocation table was adjusted so that electrical stimulation of frequencies matched as closely as possible the corresponding acoustic locations in the cochlea, as estimated using the Greenwood function28 (Fig. 1A). The details of this procedure can be found in Lambriks et al.1, and the resulting individual electrode locations in Lambriks et al.12. The randomization period was followed by a 3-month period of individual choice in which patients were free to use their preferred map. Compliance with study procedures was evaluated by comparing self-reported wearing time with predefined cut-off points. Eventually, one subject was excluded due to severe non-compliance with the wearing schedule resulting from an early preference for the standard map. This exclusion was made before any outcome data from both maps were analyzed.
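The Greenwood function28 relates relative position along the cochlea to characteristic frequency. A commonly cited human parameterization is F(x) = A(10^(a·x) − k), with A = 165.4 Hz, a = 2.1 and k = 0.88, where x is the relative distance from the apex (0 at the apex, 1 at the base). The sketch below uses these textbook constants. The constants, the use of relative position rather than insertion angle or millimetres, and the rule for deriving each electrode's lower frequency bound (the Greenwood frequency halfway to the neighbouring, more apical contact) are assumptions for illustration and not the study's fitting procedure, which is described in Lambriks et al.1; the function names are hypothetical.

```python
from typing import List, Sequence

# Commonly cited human Greenwood constants (assumption for this sketch).
A_HZ = 165.4
A_SLOPE = 2.1
K_OFFSET = 0.88


def greenwood_hz(x: float, A: float = A_HZ, a: float = A_SLOPE, k: float = K_OFFSET) -> float:
    """Characteristic frequency (Hz) at relative position x (0 = apex, 1 = base)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be a relative cochlear position between 0 and 1")
    return A * (10 ** (a * x) - k)


def lower_band_edges(positions: Sequence[float]) -> List[float]:
    """Hypothetical lower frequency bound for each electrode contact.

    Positions are relative cochlear positions, sorted here from apex to base.
    The most apical contact uses the frequency at its own position; every
    other contact uses the frequency halfway to its more apical neighbour.
    """
    pos = sorted(positions)
    edges = [greenwood_hz(pos[0])]
    for apical, basal in zip(pos, pos[1:]):
        edges.append(greenwood_hz((apical + basal) / 2))
    return edges


if __name__ == "__main__":
    # Hypothetical contact positions, not measured electrode locations.
    contacts = [0.45, 0.50, 0.55, 0.60, 0.65]
    for x, f in zip(sorted(contacts), lower_band_edges(contacts)):
        print(f"contact at x = {x:.2f}: lower bound = {f:.0f} Hz")
```

Because the Greenwood function increases monotonically from apex to base, sorting the contacts guarantees that the resulting lower bounds increase from the most apical to the most basal electrode.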

Subjects

Fourteen adult patients (eleven male and three female; median age 65 years, IQR 9 years) were enrolled in the clinical trial6. All participants were Dutch-speaking, aged 18 years or older, with post-lingual onset of profound deafness (median duration 25 years, IQR 17 years), and met the Dutch criteria for CI implantation. Subjects were unilaterally implanted with an Advanced Bionics HiRes Ultra implant and HiFocus Midscala electrode at the MUMC+. Thirteen of the 14 subjects used a hearing aid in the contralateral ear during the study window. Study exclusion criteria included contraindications for MR or CT imaging, cochlear or neural abnormalities, prior or bilateral CI implantation, and disabilities preventing active trial participation. Additional study characteristics can be found in Lambriks et al.6.

Measurements

Speech outcomes (such as speech intelligibility in quiet and noise, sound quality and listening effort) were measured with both settings in randomized order over the course of one year, starting at the beginning of rehabilitation. The current manuscript presents results of the main outcome (CNC word recognition); results of the other tests are presented in Lambriks et al.6. Word recognition was evaluated with phoneme scoring at 65 dB SPL, with test and retest, using a Dutch monosyllabic consonant-nucleus-consonant (CNC) speech recognition test29. Recorded voice stimuli were presented in free field from a loudspeaker at ear level, 1 m in front of the subject29. The average of the scores at 65 dB SPL was recorded. At each measurement session, word recognition was tested first. For subjects using a contralateral hearing aid, this device was turned off and left in situ during CI measurements.

Statistical analyses

Mathematica 13.0 (Wolfram Research, Champaign, USA) was used for data analysis and visualization. Means with 95% confidence intervals, and medians with 95% confidence intervals and interquartile ranges (IQR), were presented as descriptive statistics. Learning curves were constructed by linear interpolation between visits; missing values were also handled by linear interpolation. Given the sample size and the post-hoc observation of non-normal distributions according to the Shapiro-Wilk test, the non-parametric Wilcoxon signed-rank test was used to test differences between results with the two CI settings. There were no instances of multiple comparisons.
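Building a daily learning curve by linear interpolation between visits, with missing visits bridged in the same way, can be sketched as follows. This is not the Mathematica code used for the analysis: it assumes visits are supplied as (day, score) pairs with a missing score marked as `None`, and the function name is hypothetical. Dropping missing visits before interpolating is equivalent to linearly interpolating across them.

```python
from typing import List, Optional, Sequence, Tuple


def daily_curve(visits: Sequence[Tuple[int, Optional[float]]]) -> List[float]:
    """Linearly interpolate visit scores onto every day from first to last visit.

    Index 0 of the result corresponds to the first non-missing visit day.
    """
    known = sorted((d, s) for d, s in visits if s is not None)
    if len(known) < 2:
        raise ValueError("need at least two non-missing visits")
    curve: List[float] = []
    for (d0, s0), (d1, s1) in zip(known, known[1:]):
        for day in range(d0, d1):  # segment [d0, d1); d1 starts the next segment
            curve.append(s0 + (s1 - s0) * (day - d0) / (d1 - d0))
    curve.append(known[-1][1])  # close the curve with the last visit's score
    return curve


if __name__ == "__main__":
    # Hypothetical visits (day, CNC score in %); day 14 is missing.
    print(daily_curve([(0, 10.0), (7, 24.0), (14, None), (28, 52.0)]))
```

For visits on days 0, 7 and 28 with scores of 10%, 24% and 52% and a missing visit on day 14, `daily_curve` returns 29 daily values, with day 14 interpolated to about 33.3%.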

Data availability

Data are provided within the manuscript or supplementary information files.

Change history

17 April 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-95841-x

References

Lambriks, L. J. G. et al. Evaluating hearing performance with cochlear implants within the same patient using daily randomization and imaging-based fitting - The ELEPHANT study. Trials 21, 1–14 (2020).

Dahmen, J. C. & King, A. J. Learning to hear: plasticity of auditory cortical processing. Curr. Opin. Neurobiol. 17, 456–464 (2007).

Moore, D. R. & Shannon, R. V. Beyond cochlear implants: awakening the deafened brain. Nat. Neurosci. 12, 686–691 (2009).

Irvine, D. R. F. Plasticity in the auditory system. Hear. Res. (2018). https://doi.org/10.1016/j.heares.2017.10.011

Wright, B. A. & Zhang, Y. A review of the generalization of auditory learning. Philos. Trans. R. Soc. B Biol. Sci. 364, 301–311 (2009).

Lambriks, L. et al. Imaging-based frequency mapping for cochlear implants – Evaluated using a daily randomized controlled trial. Front. Neurosci. 17, 1–17 (2023).

Seguin, C., Sporns, O. & Zalesky, A. Brain network communication: concepts, models and applications. Nat. Rev. Neurosci. 24, 557–574 (2023).

Hofman, P. M., Van Riswick, J. G. A. & Van Opstal, A. J. Relearning sound localization with new ears. Nat. Neurosci. 1, 417 (1998).

Kral, A. & Sharma, A. Developmental neuroplasticity after cochlear implantation. Trends Neurosci. 35, 111–122 (2012).

Knudsen, E. I. Sensitive Periods in the Development of the Brain and Behavior. J. Cogn. Neurosci. 16, 1412–1425 (2004).

Persic, D. et al. Regulation of auditory plasticity during critical periods and following hearing loss. Hear. Res. 397, 107976 (2020).

Lambriks, L. et al. Toward neural health measurements for cochlear implantation: The relationship among electrode positioning, the electrically evoked action potential, impedances and behavioral stimulation levels. Front. Neurol. 14 (2023).

Irvine, D. R. F. Plasticity in the auditory system. Hear. Res. 362, 61–73 (2018).

Fu, Q. J., Shannon, R. V. & Galvin, J. J. III. Perceptual learning following changes in the frequency-to-electrode assignment with the Nucleus-22 cochlear implant. J. Acoust. Soc. Am. 112, 1664–1674 (2002).

Irvine, D. R. F. Auditory perceptual learning and changes in the conceptualization of auditory cortex. Hear. Res. 366, 3–16 (2018).

Schafer, E. C. et al. Meta-Analysis of Speech Recognition Outcomes in Younger and Older Adults With Cochlear Implants. Am. J. Audiol. 30, 481–496 (2021).

Firszt, J. B. et al. Recognition of speech presented at soft to loud levels by adult cochlear implant recipients of three cochlear implant systems. Ear Hear. 25, 375–387 (2004).

Devocht, E. M. J., George, E. L. J., Janssen, A. M. L. & Stokroos, R. J. Bimodal hearing aid retention after unilateral cochlear implantation. Audiol. Neurotol. 20, 383–393 (2015).

Stakhovskaya, O., Sridhar, D., Bonham, B. H. & Leake, P. A. Frequency map for the human cochlear spiral ganglion: implications for cochlear implants. J. Assoc. Res. Otolaryngol. 8, 220–233 (2007).

Li, H. et al. Three-dimensional tonotopic mapping of the human cochlea based on synchrotron radiation phase-contrast imaging. Sci. Rep. 11 (2021).

Svirsky, M. A. et al. Valid Acoustic Models of Cochlear Implants: One Size Does Not Fit All. Otol. Neurotol. 42, S2–S10 (2021).

Tropitzsch, A. et al. Variability in Cochlear Implantation Outcomes in a Large German Cohort With a Genetic Etiology of Hearing Loss. Ear Hear. https://doi.org/10.1097/AUD.0000000000001386 (2023).

Glennon, E., Zhu, A., Wadghiri, Y. Z., Svirsky, M. A. & Froemke, R. C. Locus coeruleus activity improves cochlear implant performance. Preprint at https://doi.org/10.1101/2021.03.31.437870 (2021).

Zhou, X. et al. Natural restoration of critical period plasticity in the juvenile and adult primary auditory cortex. J. Neurosci. https://doi.org/10.1523/JNEUROSCI.6470-10.2011 (2011).

Saddler, M., Gonzalez, R. & McDermott, J. H. Deep neural network models reveal interplay of peripheral coding and stimulus statistics in pitch perception. Nat. Commun. 12 (2021).

Francl, A. & McDermott, J. H. Deep neural network models of sound localization reveal how perception is adapted to real-world environments. Nat. Hum. Behav. 6, 111–133 (2022).

Devocht, E. M. J. et al. Revisiting place-pitch match in CI recipients using 3D imaging analysis. Ann. Otol. Rhinol. Laryngol. 125, 378–384 (2016).

Greenwood, D. D. A cochlear frequency-position function for several species—29 years later. J. Acoust. Soc. Am. 87, 2592–2605 (1990).

Bosman, A. J. & Smoorenburg, G. F. Intelligibility of Dutch CVC syllables and sentences for listeners with normal hearing and with three types of hearing impairment. Audiology 34, 260–284 (1995).

Author information

Contributions

Conceptualization, MvH and ED; Methodology, MvH and ED; Formal analysis, LL and MvH; Investigation, LL; Writing – Original Draft, MvH and LL; Writing – Review & Editing, MvH, LL, ED, KvdH, JD, and EG; Visualization, MvH and LL; Supervision, EG.

Ethics declarations

Competing interests

The work of Lars Lambriks and Elke Devocht in this investigator-initiated study was financially supported by Advanced Bionics Inc. The study was designed in cooperation between MUMC and Advanced Bionics. Data collection, data analysis, and the decision to publish were the sole responsibility of MUMC. The work presented in this manuscript is the intellectual property of MUMC. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: In the original version of this Article, the colours for the Standard and Tonotopic settings were switched in the description panel of Figure 1. Full information regarding the corrections made can be found in the correction for this Article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

van Hoof, M., Lambriks, L., van der Heijden, K. et al. Learning to hear again with alternating cochlear frequency allocations. Sci Rep 15, 317 (2025). https://doi.org/10.1038/s41598-024-83047-6

DOI: https://doi.org/10.1038/s41598-024-83047-6