Abstract

This study created the C//Sim attention mechanism employing the parallel connection of the CA attention mechanism and the SimAm attention mechanism to detect cracks in lightweight concrete. MobileNetV3 was improved using the above method, and a lightweight concrete crack recognition model, MobileNetV3-C//Sim, was established. To validate the model’s practicality, this paper has been tested on self-built and public datasets. The improved model performs higher accuracy, recall, precision, and F1 values than Mobilenetv3 in both datasets, with increases of 0.44–0.69% and 0.46–0.89% for the binary and multi classification tasks, respectively. For the CA attention mechanism, SimAm attention mechanism, and ablation tests with different combinations of each other showed that the parallel connection combination was superior to the single-type, front-to-back concatenation combination. In noise testing with different attention mechanisms, the C//Sim reduction is the smallest. It is verified to have better noise immunity and robustness. Regarding the number of model parameters, the proposed method involves only 2.90 M, which is 30.17% less than that of MobileNetV3. The method can provide a model reference for further concrete crack lightweight identification research.

Similar content being viewed by others

Introduction

Concrete is an important building material that is widely used in all kinds of civil engineering due to its wide availability, low price, convenient construction, etc.1 However, concrete has compressive but not tensile load-bearing characteristics, and is very susceptible to cracks during use due to the effects of applied loads, temperature changes, heat of hydration, etc.2. The appearance of cracks weakens the safety, stability, and durability of the concrete structure and cracks may even cause engineering disasters3, such as the I-35W highway bridge collapse in Minnesota, USA in 20074 and the major infiltration accident in the Shijingshan Tunnel in Guangdong, China in 20215. Therefore, detecting cracks in concrete is essential to ensure the safety of concrete structures.

Traditional concrete crack detection is mainly manual detection, low efficiency, low accuracy, poor safety, belongs to the subjective detection method, and needs to invest a lot of money6. With the development of machine vision technology in recent years, significant progress has been made in automated detection based on digital image processing algorithms and traditional machine learning algorithms. Digital image processing algorithms rely on thresholds, edges, regions, and other features of the images. Traditional machine learning algorithms often require manual setting of width, area, and other features to recognize cracks. However, compared to other object recognition, cracks are usually very subtle and small in size, and the morphology and orientation of cracks do not have a fixed pattern, making it difficult to accurately recognize them. In addition, image backgrounds in engineering environments are often contaminated with dust, stains, and other structural defects, which can interfere with the accurate identification of cracks. As a result, these classifiers, based on “image features” have poor performance and robustness when dealing with complex and varied crack images7,8,9,10,11,12,13.

Compared with digital image processing algorithms, deep learning algorithms can extract features directly from images, perform end-to-end learning, and effectively guarantee the identification of concrete cracks after extensive training, showing good robustness. For example, Cha et al.14 established a deep architecture convolutional neural network (CNN) concrete crack classification model, which outperformed the Canny operator and Sobel operator in the recognition of images with strong spots and shadows; the recognition accuracy reached 98%. Furthermore, Lu Deng et al.15 established a deformable modular CNN (R-CNN) crack detector, which employed deformable convolution and deformable pooling operation to effectively improve the extraction of crack features. Additionally, Zhou and Song16 developed a crack classification method based on heterogeneous image fusion based on the temperature difference between cracked and non-cracked regions, yielding a recognition accuracy of 99.2%. Moreover, Guo et al.17 established a deep-width network (DWN) model to classify concrete cracks, displaying an accuracy rate of up to 98.55%. Darragh O 'Brien et al.18 classified and recognized tunnel lining cracks based on VGG16, which effectively overcame the unfavorable tunnel environment, and the recognition accuracy rate reached 98.3%. Majdi Flah et al.19 combined an improved Otsu image processing technique with a deep learning classifier to classify the crack types according to the direction of the cracks in the concrete with 96.17%; Yamaguchi et al.20 proposed an accurate classification method for pavement crack images based on a deep learning architecture, and the improved VGG16-SOM achieved an accuracy of 96%. Meanwhile, Dong et al.21 combined the channel attention mechanism and multi-head attention for crack recognition in complex scenes; Yang et al.22 proposed a transfer learning-based multi-classification crack detection method for DCNN with 99.83%, 99.72%, and 97.07% test results on three kinds of datasets, respectively. The concrete crack recognition model based on a deep learning algorithm exhibited good robustness and high accuracy. However, most of the existing crack recognition models based on deep learning algorithms were improved by using large CNNs, such as VGG, ResNet, Densnet (Dense Convolutional Network), etc.18,20,22,23,24. These result in a large number of computational parameters and high deployment requirements for applications.

Therefore, establishing a lightweight recognition model with high accuracy and easy deployment will provide more practical application value and potential. Among the existing lightweight neural networks, MobileNet is often used as a basic model25,26. In order to improve the concrete crack recognition accuracy, the model must be enhanced by selecting an appropriate attention mechanism for engineering features27,28,29. Therefore, based on the characteristics of concrete crack image recognition, this paper employed the C//Sim (Coordinate, Simple, and Parameter-Free Attention Module) attention mechanism to extract spatial features and channel features embedded with position information in the image, and introduced it into MobileNetV3. The MobileNetV3-C//Sim modeling was completed. The effectiveness, high accuracy, and light weight of the model for concrete crack recognition were verified experimentally in the concrete crack binary classification task (cracks, non-cracks) and multi classification task (horizontal, vertical, diagonal, and irregular cracks).

Lightweight model based on MobileNetV3-C//Sim

MobileNetV3

MobileNetV3 is a lightweight convolutional neural network25, which is characterized in comparison with traditional convolutional neural networks.

-

(1)

Depth separable convolution greatly reduces the number of parameters of the model through pointwise and deep convolutions. The ratio of depth separable convolution to ordinary convolution computation is shown in Eq. (1).

$$\frac{{D_{K}^{2} MD_{F} + MND_{F}^{2} }}{{D_{K}^{2} MND_{F}^{2} }} = \frac{1}{N} + \frac{1}{{D_{K}^{2} }}$$(1)where Dk denotes the size of the convolution kernel, DF represents the size of the input feature map, M indicates the number of channels, and N refers to the number of channels of the output feature map.

-

(2)

Figure 1 displays the inverted residual structure. The accuracy is ensured by an hourglass-type structure with two small ends and a large middle, which further reduces the number of parameters of the model and improves its running speed.

-

(3)

The SE (Squeeze and Excitation) attention mechanism is used. Firstly, the feature map U of size H × W × C is globally average pooled to obtain the feature vector z by Squeeze operation. Subsequently, the obtained feature vector z is inserted into two fully connected layers containing the ReLu activation function using the Excitation operation, and the weights of each feature channel are generated using the Sigmoid activation function. Finally, the normalized weights are applied to the feature maps of each channel by the Scale operation.

Improved attention mechanisms

Considering that the SE attention mechanism in MobileNetV3 is limited to evaluating the importance of information between encoded channels, the location details that are crucial in recognizing the structure of the object cannot be fully captured, limiting the model recognition rate. Concrete cracks have different morphologies, directions, and widths, and their distribution is often inhomogeneous. Therefore, the features at different locations in the spatial dimension should be weighed to focus on the critical regions that likely contain cracks. Therefore, additional feature information needs to be introduced to improve the recognition accuracy of the model and enhance its sensitivity to the crack location information.

This paper combines the CA (Coordinate Attention) attention mechanism30 and SimAm (A Simple, Parameter-Free Attention Module) attention mechanism31, taking advantage of their strengths. Consequently, this study proposes the C//Sim attention mechanism, the structure of which is shown in Fig. 2. The CA attention mechanism obtains the feature maps in two directions by dividing the input feature maps into two directions, namely width and height, and performing global average pooling. In the detection task, this attention mechanism can improve the model accuracy by embedding the position information into the channel attention32. The SimAm attention mechanism is based on the biologically active principle of neuron inhibition of peripheral neurons and obtains a higher priority attention allocation mechanism, which can automatically assign different weights to the channel features and spatial features of the target without increasing the parameters. It can effectively extract the crack features, improve the model accuracy, and reduce the amount of computation in the detection of concrete cracks33.

As shown in Fig. 2, the feature maps obtained from the C//Sim attention mechanism and the SE attention mechanism, respectively, are demonstrated. In the feature map of the C//Sim attention mechanism, more crack regions are highlighted, showing a higher level of attention, which indicates that the C//Sim attention mechanism is more effective in capturing and emphasizing the feature of cracks. Therefore, compared with the SE attention mechanism, the C//Sim attention mechanism can reduce the number of computational parameters and storage requirements while improving the feature expression ability. The mechanism is suitable for lightweight convolutional neural networks. The specific implementation steps were as follows:

-

(1)

In the CA module, the input feature maps in the vertical and horizontal directions were first divided into two independent direction-aware feature maps using two 1D global pooling operations. Then, the feature information in these two directions was spliced and downscaled to obtain an intermediate feature map of C/r × 1 × (W + H) (r is the scaling factor for the number of scaled-down channels). Subsequently, the intermediate feature maps from the vertical and horizontal directions were respectively, which was upscaled to the original dimension by 1 × 1 convolution, and the final feature maps in the two directions were obtained by the Sigmoid activation function. Finally, the input feature maps and the amount of final directional feature maps were converted to the final output feature maps by multiplication.

-

(2)

In the SimAm module, the importance of different neurons was first divided by calculating the energy function of different neurons, as shown in Eq. (2), where λ is the canonical term, t was the target neuron of the input feature map on a single channel, and μ and σ are the mean and the variance of all neurons on a single channel, respectively. A lower minimum energy e indicated that the target neuron t was more different from the other neurons x, with a higher importance. Finally, the importance of the neurons was weighted by the Sigmoid activation function, as shown in Eq. (3).

$$e_{t} = \frac{{4\left( {\sigma^{2} + \lambda } \right)}}{{\left( {t - \mu } \right)^{2} + 2\sigma^{2} + 2\lambda }}$$(2)$$S = sigmoid\left( \frac{1}{E} \right) \cdot X$$(3) -

(3)

As shown in Fig. 3, the final feature map was obtained by adding the feature maps obtained in the first two steps.

Modeling MobileNetV3-C//Sim

The MobileNetV3-C//Sim network model based on MobileNetV3 was obtained by replacing the SE module in the MobileNetV3 structure with the C//Sim module. The overall structure of the model is shown in Fig. 4 below.

-

(1)

Input layer: the original crack image is received as input.

-

(2)

Feature extraction layer: the input image is first passed through a 3 × 3 initial convolutional layer with a step size of 2 to initially extract the crack features; the feature map obtained from the initial convolutional layer is then used as an input to the bottleneck layer, which further extracts the crack features by upgrading and then downgrading the feature map through the depth-separable convolution, residual connection, and the C//Sim attention mechanism while keeping the model lightweight.

-

(3)

Output Layer: Global Average Pooling is used to convert the feature map into a one-dimensional feature vector, then a 1 × 1 convolutional layer is used instead of the fully connected layer, and the SoftMax activation function is used to generate probability distributions for each category, yielding the final classification results.

Experimental design

Test environment

Python 3.9 was used as the experimental environment in this study, with the deep learning framework of Pytorch 2.0.0. The CPU was AMD Ryzen 5 5600H with Radeon Graphics 3.30 GHz, GPU NVIDIA GeForce RTX3050Ti with 4 GB of video memory, and the operating system was Windows 11.

Dataset construction

We incorporated structures from different environments and designed two datasets. The datasets are specified below:

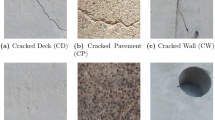

Dataset 1: In this paper, the datasets for binary and multi classification are selected from the public dataset of concrete cracks in roads and bridges34,35, and were divided into the training set, the validation set, and the test set according to a ratio of 6:2:2. Among them, the binary classification dataset contained 12,000 crack images and 8000 background images; the multi classification dataset contained 2000 horizontal cracks, 2000 vertical cracks, 2000 diagonal cracks, and 1500 irregular cracks, as displayed in Fig. 5a.

Dataset 2:This dataset is from the Changlinggang Tunnel on the Chengde South Expressway in China. We took 425 original images with a resolution of 3072 × 4096, In order for the model to extract more features to accommodate images with different brightness and contrast. The dataset is expanded using operations such as cropping, luminance change, and rotation as in Fig. 5c. Cropping simulates different viewpoints or local attention and helps the model to learn different parts of the image and contextual information. Luminance change enables the model to adapt to images under different lighting conditions, enhancing the model’s generalization ability in real scenes. Rotation enables the model to learn to deal with cracks or scenes with different orientations.

Divided into a training set, validation set, and test set in the ratio of 6:2:2. The binary classification dataset contains 4000 crack images and 4000 background images; the multi classification dataset contains 1000 crack images of each type, as in Fig. 5b.

Experimental procedure

To improve the performance of the concrete crack classification model, the crack dataset was randomly shuffled, the cross-entropy loss function was chosen as the loss function during training, Epoch was set to 50, the initial learning rate was set to 0.001, and the batch size was set to 8. The training set was input into the model and repeated iterative calculations were conducted to optimize the model parameters. The model that demonstrated the highest accuracy rate on the validation set was determined as the best model for subsequent testing. Finally, the test set is fed into the trained model, and the accuracy, recall, precision, and F1 values are evaluated to verify the generalization ability of the model. The implementation process is shown in Fig. 6.

Test results and analysis

Evaluation index

Correctly choosing appropriate evaluation metrics can improve the accuracy, robustness, and discriminative ability of the model. In this paper, accuracy, recall, precision, F1 values, and Confusion Matrix are chosen as evaluation metrics. For the classification evaluation indexes, the closer the value is to 1 the better the prediction effect of the model. The formulas of Accuracy, Recall, Precision, and F1 values are shown in Eqs. (4)–(7).

Classification task test results

Figure 7 shows the validation set accuracy curves of MobileNetV3-C//Sim and MobileNetV3 during the training process of the binary classification task. The training on the two datasets shows that the accuracy curve of MobileNetV3-C//Sim rises quickly and smoothly, oscillates after a rapid rise in rounds 1 to 12, and finally reaches the convergence state. The initial accuracy curve of the MobileNetV3 model was lower than that of MobileNetV3-C//Sim, and the subsequent curves were almost always lower than that of MobileNetV3-C//Sim. The above two best-performing models in the validation set were used as test models. The test results are shown in Table 1, the improved model performs higher accuracy, recall, precision, and F1 values than Mobilenetv3 in both Dataset 1 and Dataset 2. In dataset 1, accuracy increased by 0.6%, recall increased by 0.6%, precision increased by 0.48%, and F1 values increased by 0.84%. In dataset 2, accuracy increased by 0.69%, recall increased by 0.69%, precision increased by 0.66%, and F1 values increased by 0.68%.

For the training of the multi classification task, Fig. 8 shows the validation set accuracy profiles of MobileNetV3-C//Sim and MobileNetV3. The accuracy curves of the two models went through three phases of rapid increase, slow increase, and continuous oscillation, and finally reached the convergence state around the 30th round. Similar to the binary classification task, the initial accuracy curve of the MobileNetV3 model was lower than that of this paper’s method, and the subsequent accuracy was always lower than that of MobileNetV3-C//Sim. The test results are shown in Table 2, the improved model also performs higher accuracy, recall, precision, and F1 values than Mobilenetv3 in both dataset 1 and dataset 2. In dataset 1, accuracy increased by 0.46%, recall increased by 0.65%, precision increased by 0.37%, and F1 values increased by 0.53%. In dataset 2, accuracy increased by 0.87%, recall increased by 0.88%, precision increased by 0.76%, and F1 values increased by 0.89%. The experimental results of binary and multi classification show that the improved model performs better performance in data adaptation and feature learning, which enhances the classification performance and robustness of the model.

As shown in Fig. 9, the test results of the MobileNetV3-C//Sim model on two multi classification datasets are visualized by the confusion matrix, where the diagonal lines represent the number of correctly classified, the rows represent the predicted labels, and the columns are the true labels, which gives a clear picture of the model’s misidentification. In both datasets, it is the irregular cracks that have higher misrecognition, which may be due to the inclusion of features of other cracks in the irregular cracks at the same time. But overall, the recognition rate is still high.

In summary, the improved MobileNetV3-C//Sim model achieved a higher recognition accuracy compared to MobileNetV3, indicating that the C//Sim attentional mechanism better captures the feature information of the cracks, while the higher F1 values reflect that the model works well in balancing precision and recall. In addition, the robustness of the model is verified by comparing the self-constructed dataset with the public dataset.

Comparative tests of different models

We selected some representative convolutional neural networks for comparison. As shown in Table 3, the parameter for MobileNetV3, ResNet50, DenseNet, EfficientNetV2, ShuffleNetV2, and MobileNetV3-C//Sim are 4.21 M, 5.48 M, 9.85 M, 21.45 M, 5.34 M, and 2.90 M, respectively. Compared with the benchmark network, MobileNetV3-Large, the method in this paper effectively reduces the number of parameters by 30.17%. On mobile device deployment, it only requires 11.5 MB, which is lower than other models, indicating that the model occupies very little storage and is suitable for resource-limited environments. In addition, during runtime, the model occupies a certain amount of memory for inference computation, usually within the range of tens of MB, which is acceptable for low-resource mobile devices.

To verify the practicality of this paper’s method, we conducted a comparison using the same experimental data and model parameter settings, as shown in Table 4. Among the two classification tasks, only EfficientNetV2 has a slightly higher recall of 0.01% for the binary classification task in dataset 1, but its 21.45 M computational parameter count is 7.4 times higher than that of 2.90 M, whereas a larger number of parameters will consume a large amount of memory space and computational resources. In the rest of the metrics, the method proposed in this paper is equal to or surpasses other models. In conclusion, the improved model proposed in this paper strikes a good balance between accuracy and lightweight recognition.

Ablation test

To test the rationality of the C//Sim attention mechanism, ablation tests were conducted on different combination approaches between the CA attention mechanism and the SimAm attention mechanism to explore the effects of different combination approaches on recognition performance. The combination approaches are displayed in Table 5, which contains parallel connection, front-back tandem combination, and single type.

The test results are shown in Table 6. In both datasets, the parallel combination is higher than the front and back tandem combination and the single type in all metrics. The accuracy exceeds 0.07–0.8%, recall exceeds 0.04–0.88%, precision exceeds 0.02–0.71%, and F1 values exceed 0.08–0.75%. The reason for this improvement is information complementarity, where different attention mechanisms may focus on different aspects or features of the input data. When combining in parallel, the SimAm attention mechanism can dynamically adjust the weights of the feature maps, making the model more focused on important features. When combined in parallel with the CA attention mechanism, the enhancement of feature extraction can be more significant. In sequential combination, the final attention mechanism loses some of the feature information of the original input image. In addition, the parallel combination can also reduce the computation time. In sequential configuration, one module needs to wait for the completion of another module to continue processing. Parallel combination can make more effective use of hardware resources, reduce training time by about 8%, and improve training efficiency.

During the acquisition process, image data is often affected by such things as noise during shooting and distortion caused by image compression. To verify the reliability and robustness of the C//Sim attention mechanism, we build a new binary classification test set and a multi classification test set, in which the binary classification test set has 400 images with the ratio of the number of cracks to the background of 1:1. the multi classification test set has 400 images with the ratio of the number of transverse, longitudinal, diagonal, and composite cracks of 1:1:1: 1:1. We choose Gaussian noise and salt&pepper noise for robustness testing mainly because these two types of noise are very typical in digital image processing, in addition, during image acquisition, these two types of noise may be introduced due to sensor material properties, operating environment, electronic components, and circuit structure. By adding Gaussian noise and salt&pepper noise, possible scenarios are simulated to evaluate the performance of the model in the presence of noise as shown in Fig. 10.

We compare the C//Sim attention mechanism with other excellent attention mechanisms for noise stress tests using models trained on Dataset2. As shown in Table 7, when the models embedded with different attention mechanisms encounter completely unfamiliar noise, the C//Sim attention mechanism performs the smallest degradation in all the performances and exceeds the other attention mechanisms by 0.65 to 4.28%. This stability indicates that the C//Sim attention mechanism is robust and able to resist noise interference to a certain extent. In the C//Sim attention mechanism, features are extracted and the influence of noise is minimized mainly through the CA module and the SimAm module, and the following mainly explains from two aspects. Firstly, the CA module enables the model to understand the input data more comprehensively and capture the association between features more accurately by considering the global context information and spatial location information. It helps the model maintain accuracy in recognizing key features despite noise interference. SimAm module adopts a parameter-free design to generate attention weights by calculating the similarity between elements within the feature map. This parameter-free design reduces the risk of overfitting to noise while suppressing irrelevant features introduced by noise, improving the generalization ability and robustness to noise. The generated attention weights are able to weight the feature map to emphasize important features.

Summary and prospect

Summary

In this paper, the MobileNetV3-C//Sim model, an improved concrete crack recognition model based on MobileNetV3, was developed. The C//Sim attention mechanism introduced in this model extracts spatial features and channel features embedded with position information in the image, which improves the recognition performance of the model while reducing the computational complexity and storage requirements. In order to verify the practicality of the model, this paper conducts tests on self-constructed datasets and public datasets. The improved model performs higher accuracy, recall, precision, and F1 values than mobilenetv3 for dataset 1 and dataset 2, with increases of 0.44–0.69% and 0.46–0.89% for binary and multi classification tasks, respectively. The structural soundness of the C//Sim attention mechanism is verified by ablation tests on different combinations of the CA attention mechanism and SimAm attention mechanism. In the noise test with CA, CBAM, and SE attention mechanism, C//Sim performs the smallest degradation, which verifies that it performs better noise resistance and robustness. In terms of the number of model parameters, the method proposed in this paper involved only 2.90 M, representing a 30.17% reduction compared with MobileNetV3. In summary, the MobileNetV3-C//Sim network model proposed in this paper can accurately recognize crack and non-crack images and can provide a model reference for the research of lightweight recognition of concrete cracks.

Prospect

The method in this paper optimizes recognition performance, but there is still room for improving the recognition accuracy of composite cracks. For example, in previous research based on image processing methods, scholars have specially processed the color and morphology of the crack texture to improve the recognition accuracy; however, in many current studies based on deep learning, few scholars have analyzed the texture features of the crack itself. Therefore, in future research, excellent feature processing algorithms in the past can be combined with deep learning to explore the attention mechanism related to crack texture to improve the recognition accuracy of composite cracks.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

references

Zhang, Y., Ni, Y. Q., Jia, X. & Wang, Y. W. Identification of concrete surface damage based on probabilistic deep learning of images. Autom. Constr. 156, 105141. https://doi.org/10.1016/j.autcon.2023.105141 (2023).

Larosche, C. J. Types and causes of cracking in concrete structures. In Failure, Distress and Repair of Concrete Structures 57–83 (Elsevier, 2009). https://doi.org/10.1533/9781845697037.1.57.

Zhang, J., Qian, S. & Tan, C. Automated bridge surface crack detection and segmentation using computer vision-based deep learning model. Eng. Appl. Artif. Intell. 115, 105225. https://doi.org/10.1016/j.engappai.2022.105225 (2022).

Koch, C., Georgieva, K., Kasireddy, V., Akinci, B. & Fieguth, P. A review on computer vision based defect detection and condition assessment of concrete and asphalt civil infrastructure. Adv. Eng. Inform. 29, 196–210. https://doi.org/10.1016/j.aei.2015.01.008 (2015).

Kong, H. Q. & Zhang, N. Risk assessment of water inrush accident during tunnel construction based on FAHP-I-TOPSIS. J. Clean. Prod. 449, 141744. https://doi.org/10.1016/j.jclepro.2024.141744 (2024).

Chen, J. H., Su, M. C., Cao, R., Hsu, S. C. & Lu, J. C. Ass self organizing map optimization based image recognition and processing model for bridge crack inspection. Autom. Constr. 73, 58–66. https://doi.org/10.1016/j.autcon.2016.08.033 (2017).

Hoang, N. D., Nguyen, Q. L. & Tran, V. D. Automatic recognition of asphalt pavement cracks using metaheuristic optimized edge detection algorithms and convolution neural network. Autom. Constr. 94, 203–213. https://doi.org/10.1016/j.autcon.2018.07.008 (2018).

Jiang, F. et al. Application of canny operator threshold adaptive segmentation algorithm combined with digital image processing in tunnel face crevice extraction. J. Supercomput. 78, 11601–11620. https://doi.org/10.1007/s11227-022-04330-9 (2022).

Talab, A. M. A., Huang, Z. C., Fan, X. & Liu, H. M. Detection crack in image using Otsu method and multiple filtering in image processing techniques. Optik Int. J. Light Electron Opt. 127, 1030–1033. https://doi.org/10.1016/j.ijleo.2015.09.147 (2016).

Song, B. B. & Wei, N. Statistics properties of asphalt pave⁃ment images for cracks detection. J. Inform. Comput. Sci. 10, 2833–2843. https://doi.org/10.12733/jics20102037 (2013).

O’Byrne, M., Schoefs, F., Ghosh, B. & Pakrashi, V. Texture analysis based damage detection of ageing infrastructural. Elements 28, 162–177. https://doi.org/10.1111/j.1467-8667.2012.00790.xs (2013).

O’Byrne, M., Ghosh, B., Schoefs, F. & Pakrashi, V. Regionally enhanced multiphase segmentation technique for damaged surfaces. Comput. Aided Civil Infrastruct. Eng. 28, 644–658. https://doi.org/10.1111/mice.12098 (2014).

Cubero-Fernandez, A., Rodriguez-Lozano, F. J., Villatoro, R., Olivares, J. & Palomares, J. M. Efficient pavement crack detection and classification. J. Image Video Proc. 39, 1687–5281. https://doi.org/10.1186/s13640-017-0187-0 (2017).

Cha, Y. J., Choi, W. & Büyüköztürk, O. Deep learning-based crack damage detection using convolutional neural networks. Comput. Aided Civil Infrastruct. Eng. 32, 361–378. https://doi.org/10.1111/mice.12263 (2017).

Deng, L., Chu, H. H., Shi, P. & Kong, X. Region-based CNN method with deformable modules for visually classifying concrete cracks. Appl. Sci. 10, 2528. https://doi.org/10.3390/app10072528 (2020).

Zhou, S. L. & Song, W. Deep learning–based roadway crack classification with heterogeneous image data fusion. Struct. Health Monit. 20, 1274–1293. https://doi.org/10.1177/1475921720948434 (2021).

Guo, L., Li, R. Z., Jiang, B. & Shen, X. Automatic crack distress classification from concrete surface images using a novel deep-width network architecture. Neurocomputing 397, 383–392. https://doi.org/10.1016/j.neucom.2019.08.107 (2020).

‘Brien, D. O., Osborne, J. A., Perez-Duenas, E., Cunningham, R. & Li, Z. L. Automated crack classification for the CERN underground tunnel infrastructure using deep learning. Tunn. Undergr. Space Technol. Incorp. Trenchless Technol. Res. 131, 104668. https://doi.org/10.1016/j.tust.2022.104668 (2023).

Flah, M., Suleiman, A. R. & Nehdi, M. L. Classification and quantification of cracks in concrete structures using deep learning image-based techniques. Cement Concr. Compos. 114, 103781. https://doi.org/10.1016/j.cemconcomp.2020.103781 (2020).

Yamaguchi, T. & Mizutani, T. Road crack detection interpreting background images by convolutional neural networks and a self-organizing map. Comput. Aided Civil Infrastruct. Eng. 39, 1616–1640. https://doi.org/10.1111/mice.13132 (2024).

Kang, D. H. & Cha, Y. J. Efficient attention-based sdeep encoder and decoder for automatic crack segmentation. Struct. Health Monit. 21, 2190–2205. https://doi.org/10.1177/14759217211053776 (2022).

Yang, Q. N., Shi, W. M., Chen, J. & Lin, W. G. Deep convolution neural network-based transfer learning method for civil infrastructure crack detection. Autom. Constr. 116, 103199. https://doi.org/10.1016/j.autcon.2020.103199 (2020).

Riedel, H. et al. Automated quality control of vacuum insulated glazing by convolutional neural network image classification. Autom. Constr. 135, 104144. https://doi.org/10.1016/j.autcon.2022.104144 (2022).

Tong, Z., Gao, J., Han, Z. Q. & Wang, Z. J. Recognition of asphalt pavement crack length using deep convolutional neural networks. Road Mater. Pavement Design 19, 1334–1349. https://doi.org/10.1080/14680629.2017.1308265.ss (2018).

Howard, A., Sandler, M., Chu, G., Chen, L.C., Chen, B., Tan, M., Wang, W., Zhu, Y., Pang, R., Vasudevan, V. & Le, Q.V. Searching for MobileNetV3. In 2019 IEEE/CVF international conference on computer vision (ICCV), (2019). https://doi.org/10.1109/iccv.2019.00140.

Meng, Q. C. et al. Image-based concrete cracks identification under complex background with lightweight convolutional neural network. KSCE J. Civ. Eng. 27, 5231–5242. https://doi.org/10.1007/s12205-023-0923-1 (2023).

Ali, R., Chuah, J. H., Talip, M. S. A., Mokhtar, N. & Shoaib, M. A. Crack segmentation network using additive attention gate—CSN-II. Eng. Appl. Artif. Intell. 114, 105130. https://doi.org/10.1016/j.engappai.2022.105130 (2022).

Yao, H. et al. A detection method for pavement cracks combining object detection and attention mechanism. IEEE Trans. Intell. Transp. Syst. 23, 22179–22189. https://doi.org/10.1109/tits.2022.3177210 (2022).

Qu, Z., Wang, C. Y., Wang, S. Y. & Ju, F. R. A method of hierarchical feature fusion and connected attention architecture for pavement crack detection. IEEE Trans. Intell. Transp. Syst. 23, 16038–16047. https://doi.org/10.1109/tits.2022.3147669 (2022).

Hou, Q. B., Zhou, D. Q. & Feng, J.S. Coordinate attention for efficient mobile network design. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), (2021). https://doi.org/10.1109/cvpr46437.2021.01350.

Yang, L. B., Zhang, R. Y., Li, L. & Xie, X.H. SimAM: A simple, parameter-free attention module for convolutional neural networks. In International Conference on Machine Learning (2021).

Cui, J. R., Wei, W. Z. & Zhao, M. Rice disease identification model based on improved MobileNetV3, transactions of the Chinese society of agricultural. Machinery 54, 217–224. https://doi.org/10.6041/i.issn.100s0-1298.2023.11.021 (2023).

Yang, J., Jiang, Y. X. & Xiong, X. Y. Combining transformer and SimAM lightweight pavement damage detection algorithms. J. Railw. Sci. Eng. https://doi.org/10.19713/j.cnki.43-1423/u.T20232012 (2024).

Zhang, L., Yang, F., Zhang ,Y. D., & Zhu, Y.J. Road crack detection using deep convolutional neural network. In 2016 IEEE International Conference on Image Processing (ICIP) (2016). https://doi.org/10.1109/icip.2016.7533052

Xu, H. Y. et al. Automatic bridge crack detection using a convolutional neural network. Appl. Sci. 9, 2867. https://doi.org/10.3390/app9142867 (2019).

Acknowledgements

This research was funded by the Natural Science Foundation of Sichuan Province of China (No. 24NSFSC5134), the National Natural Science Foundation of China (No. 52308391) and Sichuan Normal University Experimental Equipment Research Project (No. ZZYQ2021003).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, R., Chen, R., Yan, H. et al. Lightweight concrete crack recognition model based on improved MobileNetV3. Sci Rep 15, 15704 (2025). https://doi.org/10.1038/s41598-025-00468-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-00468-7