Abstract

Multi-view Clustering (MVC) has gained significant attention in recent years due to its ability to exploit consensus information from multiple perspectives. However, existing MVC methods face two major challenges: (1) how to alleviate the representation degeneration caused by enforcing multi-view consensus, and (2) how to learn discriminative representations with clustering-friendly structures. In particular, most existing MVC methods overlook the importance of inter-cluster separability. To address these issues, we propose a novel Contrastive Learning-based Dual Contrast Mechanism Deep Multi-view Clustering Network. Specifically, we first introduce view-specific autoencoders to extract latent features for each individual view. Then, we obtain consensus information across views through global feature fusion and maximize the consistency between the view-specific representations and the global feature representation, while measuring the pairwise representation discrepancy between them. Subsequently, we design an adaptive weighting mechanism that automatically enhances useful views in feature fusion while suppressing unreliable views, effectively mitigating representation degeneration. Furthermore, within the contrastive learning framework, we introduce a Dynamic Cluster Diffusion (DC) module that maximizes the distance between different clusters, thereby enhancing cluster separability and yielding a clustering-friendly discriminative representation. Extensive experiments on multiple datasets demonstrate that our method not only achieves state-of-the-art clustering performance but also produces clustering structures with better separability.


Introduction

Clustering is a traditional unsupervised learning task that groups patterns (observations, data points, or feature vectors) into distinct clusters without labels1. Clustering plays a crucial role in various fields, such as data mining2,3, image processing4,5, bioinformatics6,7, and machine learning8,9. With the rapid development of multimedia applications, data often originate from different domains and multiple sources. For instance, images shared on social media platforms are typically accompanied by textual tags and descriptions; a news event may be reported by multiple news agencies; and sensor signals can be decomposed in both the time and frequency domains10. Traditional single-view clustering methods are inadequate for handling such multi-view data. To better exploit the complementarity and consistency of multi-view data, Multi-view Clustering (MVC) has become a hot topic in both research and practical applications.

Compared with traditional MVC methods, deep learning-based MVC methods (Deep-MVC) can handle non-linear and high-dimensional data more effectively. Specifically, Deep-MVC methods typically extract non-linear features through view-specific autoencoders, then globally fuse the features extracted by the different view autoencoders, and finally perform clustering on the global features11,12. To preserve both the consistency and the complementary information of multi-view data, various Deep-MVC methods employ different strategies. For example, some methods leverage subspace representations by incorporating multiple self-expressive layers between the encoder and decoder to enhance information flow; by facilitating interactions among the self-expressive layers of different views, they construct a shared common subspace that effectively captures cross-view consistency13. Other methods use self-attention mechanisms to learn complementary information across views and preserve structural consistency by maximizing the mutual information between multiple views14. Despite significant progress in Deep-MVC methods in recent years, effectively resolving the conflict between learning view-consensus information and reconstructing view-specific private information remains a challenge.

To address this issue, contrastive learning-based MVC methods have been proposed. These methods enforce representation alignment across views to extract consensus information, thereby mitigating the conflict with the reconstruction of view-specific private information15,16. Although contrastive learning-based approaches have demonstrated promising performance, their excessive emphasis on view consistency can result in representation degradation, in which high-quality views are forced to conform to low-quality ones. This phenomenon ultimately limits the effectiveness of MVC. Moreover, these contrastive learning methods generally overlook the importance of inter-cluster separability, which makes it difficult to obtain optimal discriminative representations with clustering-friendly structures.

To address the aforementioned issues, we propose a novel Deep Multi-view Clustering method based on a Dual Contrastive Mechanism. Our research is driven by two key challenges: (1) Traditional multi-view contrastive learning (MCL) methods primarily achieve consistency by aligning cross-view latent representations. However, this approach can lead to representation degradation: high-quality views may be forced to align with low-quality views during training (as illustrated in Fig. 1). The primary cause is an overemphasis on consistency that overlooks the distinctive information inherent in each view. These view-specific variations often carry crucial discriminative signals, and failing to preserve them limits the model’s effectiveness. Moreover, the excessive pursuit of consistency may discard valuable complementary information, thereby reducing the discriminability of the clustering. (2) Existing MCL methods fail to learn discriminative representations with a well-structured cluster arrangement. As shown in Fig. 2a, clusters remain partially entangled rather than distinctly separated. Additionally, samples within the same cluster exhibit low compactness, leading to a dispersed representation that weakens both clustering stability and discriminative capability.

Based on the above motivation, we propose an adaptive weighted view module based on contrastive learning. This module employs MLP-based view-specific encoders to extract latent representations for each view from the original data space, followed by global feature fusion to construct a unified representation. Under the assumption that representations of the same sample across different views are inherently similar, we perform multi-view contrastive learning between the view-specific latent features and the global representation to capture cross-view semantic consistency. Furthermore, by measuring the discrepancy between the view-specific latent features and the global representation, we adaptively strengthen high-quality views while weakening unreliable ones. This mechanism ensures that high-quality views play a dominant role in global fusion, thereby mitigating the impact of unreliable views on clustering performance. To obtain discriminative representations with a cluster-friendly structure (as shown in Fig. 2b), we introduce the Dynamic Cluster Diffusion (DC) module from the DCMVC framework to optimize the clustering structure12.

Compared to existing methods, our approach offers the following contributions:

1. We propose a novel Deep Multi-view Clustering method based on a Dual Contrastive Mechanism, which effectively captures discriminative representations with a clustering-friendly structure.

2. We design an Adaptive Weighted Multi-view module, which can adaptively enhance useful views with semantic information during the feature fusion process, while suppressing the influence of unreliable views.

3. Extensive experiments are conducted on six datasets and the results demonstrate the state-of-the-art clustering performance of our proposed method.

The rest of the paper is organized as follows: Sect. 2 reviews the related work; Sect. 3 provides a detailed description of the proposed Deep Multi-view Clustering network model; Sect. 4 presents comprehensive experimental setups and result analysis; finally, Sect. 5 concludes the paper and discusses future research directions.

Related work

In this section, we review existing MVC methods and introduce contrastive learning, which has been widely used in many unsupervised learning tasks.

Multi-view clustering

Based on their approaches, we classify existing MVC representation methods into three categories. (1) Subspace-based multi-view clustering: multi-view subspace clustering methods learn a unified subspace representation from the view-specific subspaces of all views. In recent years, several methods have explored the latent representations of multiple views through subspace learning, effectively leveraging the complementary characteristics of multi-view data17,18. In addition, Su et al. proposed anchor learning to address the challenge of processing large-scale multi-view data19. Liu et al. incorporated diversity representation and block-diagonal representation modules into multi-view subspace learning, thereby enhancing both the discriminability and the diversity of the clustering20. (2) Graph-based multi-view clustering: graphs are an important data structure for representing the relations in multi-view data21. Many graph-based multi-view clustering algorithms are devoted to learning a consensus affinity graph across views and clustering on it22,23. To handle the differing importance of views, Wang et al. designed a graph fusion technique with automatically weighted graph matrices24. In addition, Nie et al. introduced the constrained Laplacian rank (CLR), which enables the consensus affinity graph to automatically form \(c\) connected components, thus avoiding a subsequent clustering step22. (3) Multi-view clustering based on deep embedded representations: many current MVC methods are built on autoencoder frameworks, which are deep embedding frameworks with an excellent ability to handle nonlinear features and high-dimensional data. A representative method is Deep Embedded Clustering (DEC), which simultaneously learns cluster assignments and embedded features with an autoencoder25.
Building on this, the improved DEC avoids the “collapse” problem of deep models by introducing a trade-off between the clustering and reconstruction objectives26. In addition, unlike autoencoder-based DEC methods, Deep Matrix Factorization (DMF) has also received much attention; it employs semi-nonnegative matrix factorization to learn the semantics of multiple views hierarchically27. Subsequently, Li et al. introduced graph Laplacian regularization into the multilayer NMF model, which enabled the learning of more complex hierarchical information28.

Contrastive learning

Contrastive learning aims to maximize the similarity between positive pairs while minimizing the similarity between negative pairs. Owing to its excellent performance in unsupervised learning, researchers have introduced contrastive learning into clustering and proposed various deep clustering methods based on it29. Specifically, Li et al. first construct positive and negative instance pairs via data augmentation, and then jointly learn representations and cluster assignments by simultaneously optimizing instance-level and cluster-level contrastive losses29. Huang et al. maximize the distances between prototype representations via contrastive learning, which improves the uniformity of the representations, and align positive instance pairs to improve intra-cluster compactness30. However, these methods focus on single-view data and are not directly applicable to multi-view data. Because contrastive learning is effective at capturing cross-view consistency, many multi-view clustering methods based on it have been proposed. For example, Wu et al. maximize the consensus information between global features and view-specific features through contrastive learning, which effectively ensures multi-view consistency11, while Xu et al. use contrastive learning to achieve consistency between high-level features and semantic labels15. In addition, Lin et al. align the sample representations of multiple views through contrastive learning to capture view-invariant information31. However, most existing approaches ignore the representation degradation problem, in which high-quality views are aligned to low-quality views, and insufficiently consider the importance of maintaining cluster separability.
Therefore, to address these issues, we propose a novel deep multi-view clustering method based on a dual contrastive mechanism.

Compared with existing contrastive multi-view clustering methods, our approach advances both view-adaptive weighting and clustering-structure optimization. First, to mitigate the representation degradation commonly observed in existing methods, we propose a contrastive learning-based view-adaptive weighting module that dynamically adjusts the weight of each view according to its contribution to the global representation. By balancing view consistency and complementarity, our method prevents high-quality views from being forced to align with low-quality ones because of an overemphasis on consistency. In contrast, most existing methods employ fixed or heuristic weighting strategies that fail to distinguish the importance of different views in the clustering process. Furthermore, we incorporate the Dynamic Cluster Diffusion (DC) module from the DCMVC framework to refine the clustering structure. This enables the model to learn more compact intra-cluster representations and better-separated inter-cluster distributions, thereby improving clustering performance. Existing approaches, by contrast, primarily focus on instance-level contrastive learning without explicitly optimizing the clustering structure; consequently, they often struggle to produce well-separated clusters, resulting in suboptimal discriminative representations. By maintaining multi-view consistency while mitigating representation degradation, our method overcomes these limitations of existing contrastive multi-view clustering approaches and learns more discriminative representations with a cluster-friendly structure.

Proposed methods

Task Statement: Given a multi-view dataset \(\{X^{v}\}_{v=1}^{M}\) with \(N\) samples across \(M\) views, where \(X^{v}=\{x_{1}^{v};x_{2}^{v};\ldots;x_{N}^{v}\}\in\mathbb{R}^{N\times D_{v}}\) and \(D_{v}\) is the feature dimension of the \(v\)-th view, multi-view clustering aims to partition the \(N\) samples into \(K\) clusters. For clarity, the main notations used in this paper are listed in Table 1.

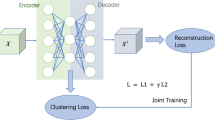

To address the aforementioned MVC issues, we propose a new Deep-MVC network (details are shown in Fig. 3).

Specifically, the network mainly consists of the following modules: a global feature fusion module that obtains cross-view consistency information; an adaptive weighted module that assigns weights to different views by maximizing the consistency between view-specific features and global features (with loss \(L_{aw}\)); and a dynamic cluster diffusion module that enhances inter-cluster separation by maximizing the distance between clusters (with loss \(L_{dc}\)).

In this section, we describe these three modules and their optimization in detail, and finally present a time complexity analysis of our method.

Autoencoder and global feature fusion module

In MVC, it is crucial to extract discriminative features from the original data of the different views. Since autoencoders perform well on nonlinear and high-dimensional data, we use view-specific autoencoders to extract the deep features of each view, while the corresponding decoders preserve the remaining information of the original data of each view. Specifically, let \(f^{v}\) and \(g^{v}\) denote the encoder and decoder of the \(v\)-th view, respectively; the latent features of the \(v\)-th view extracted by the encoder can then be expressed as:

where \(z_{i}^{v}\in\mathbb{R}^{d}\) is the latent feature of the \(i\)-th sample of the \(v\)-th view extracted by the encoder, and \(\theta^{v}\) denotes the encoder parameters. The decoding process of the \(v\)-th view can be described as follows:

where \(\hat{x}_{i}^{v}\) is the reconstruction of the \(i\)-th sample of the \(v\)-th view produced by the decoder, and \(\phi^{v}\) denotes the decoder parameters. As in a conventional autoencoder, a reconstruction loss is introduced for each view, which allows the autoencoders of all views to capture latent features while simultaneously limiting the loss of information in every view. The reconstruction loss of the multi-view autoencoder can be described by the following equation:

Consistency and complementarity across views are highly important in MVC. The raw data of different views yield complementary latent features through the view-specific autoencoders. To capture consistency across views and obtain better clustering results, two popular approaches are concatenation and fusion12. Concatenation joins the features of different views into a global feature11; however, this approach often requires an additional MLP layer to reduce the dimensionality of the global feature, which increases the computational cost. Therefore, in this paper we adopt fusion to obtain the global consensus information, which can be expressed as:

where \(Z\) is the global feature containing consistent information across views, and \(w_{v}\) is the learnable weight of the \(v\)-th view. To solve the representation degeneration problem in view alignment, the adaptive weighted module assigns weights to views according to their quality, as detailed in the next subsection.
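To make the data flow of this module concrete, the following NumPy sketch runs view-specific encoders and decoders, a reconstruction loss, and weighted fusion on toy data. It is a minimal illustration under stated assumptions, not the authors' implementation: the two-layer ReLU MLPs, the per-sample squared-error reconstruction loss summed over views, and the softmax normalization of the learnable fusion logits are all assumed, and the helper names (`make_mlp`, `mlp_forward`, `reconstruction_loss`, `fuse`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_mlp(d_in, d_hidden, d_out):
    """Hypothetical two-layer MLP parameters (weights and biases)."""
    return {
        "W1": rng.normal(scale=0.1, size=(d_in, d_hidden)),
        "b1": np.zeros(d_hidden),
        "W2": rng.normal(scale=0.1, size=(d_hidden, d_out)),
        "b2": np.zeros(d_out),
    }


def mlp_forward(params, x):
    """ReLU hidden layer followed by a linear output layer."""
    h = np.maximum(0.0, x @ params["W1"] + params["b1"])
    return h @ params["W2"] + params["b2"]


def reconstruction_loss(xs, x_hats):
    """Per-sample squared error, averaged over samples and summed over views (assumed form)."""
    return sum(np.mean(np.sum((x - xh) ** 2, axis=1)) for x, xh in zip(xs, x_hats))


def fuse(zs, logits):
    """Weighted sum of view features; softmax over learnable logits gives positive weights summing to 1 (assumption)."""
    w = np.exp(logits - logits.max())
    w = w / w.sum()
    Z = sum(w_v * z for w_v, z in zip(w, zs))
    return Z, w


# Toy data: N = 8 samples, two views with D_1 = 5 and D_2 = 3 features, latent size d = 4.
N, dims, d = 8, [5, 3], 4
xs = [rng.normal(size=(N, D)) for D in dims]
encoders = [make_mlp(D, 16, d) for D in dims]   # f^v with parameters theta^v
decoders = [make_mlp(d, 16, D) for D in dims]   # g^v with parameters phi^v

zs = [mlp_forward(f, x) for f, x in zip(encoders, xs)]       # z^v = f^v(x^v)
x_hats = [mlp_forward(g, z) for g, z in zip(decoders, zs)]   # x_hat^v = g^v(z^v)
L_r = reconstruction_loss(xs, x_hats)
Z, w = fuse(zs, logits=np.zeros(len(dims)))                   # equal weights before training
print(Z.shape, w, L_r)
```

Normalizing the fusion weights with a softmax is only one option; it keeps each \(w_{v}\) positive and makes \(Z\) a convex combination of the view features, so \(Z\) has the same dimension \(d\) as every \(z^{v}\).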

Adaptive weighted module

In the previous subsection, we obtained the global multi-view feature \(Z\) by fusion, as shown in Eq. (4). To preserve consistency between the global feature \(Z\) and the view-specific features \(\{z^{v}\}_{v=1}^{M}\), we set the dimension of \(Z\) equal to that of \(\{z^{v}\}_{v=1}^{M}\). As mentioned before, obtaining consistent semantic information for multiple views indirectly through view feature alignment may cause representation degradation. Therefore, we employ multi-view contrastive learning (MCL) to obtain the consensus information between the global feature \(Z\) and the view-specific features \(\{z^{v}\}_{v=1}^{M}\); the resulting contrastive loss can be described as:

The learnable global feature space \(Z\) contains the consistent information of all views, and the view-specific latent representations of the same sample are similar across views. Hence, the global representation of a sample and its view-specific representations should be mapped close to each other. Therefore, we take \(\{z_{i}^{v},Z_{i}\}_{v=1}^{M}\) as the \(M\) positive feature pairs and the remaining \(\{z_{j}^{v},Z_{i}\}_{j\ne i}^{v=1,\ldots,M}\) as the \(M(N-1)\) negative feature pairs, and we use cosine similarity to measure the similarity of each feature pair:

where \(\langle\cdot,\cdot\rangle\) denotes the dot product, and the contrastive loss is defined as follows:

where \(\tau\) is a temperature parameter that moderates the effect of the similarity, and \(\mathbf{1}[j\ne i]\in\{0,1\}\) is an indicator function that equals 1 if \(j\ne i\) and 0 otherwise.
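The sketch below illustrates the cosine similarity and an InfoNCE-style contrastive loss between one view's features and the global features. It assumes the standard form in which, for each anchor \(Z_{i}\), the denominator sums the positive pair \((z_{i}^{v},Z_{i})\) and the \(N-1\) negatives selected by the indicator \(j\ne i\); the exact normalization used in the paper may differ, and the function names are hypothetical.

```python
import numpy as np


def cosine_sim_matrix(A, B):
    """S[i, j] = <A_i, B_j> / (||A_i|| ||B_j||) for row-wise feature matrices."""
    A_n = A / np.linalg.norm(A, axis=1, keepdims=True)
    B_n = B / np.linalg.norm(B, axis=1, keepdims=True)
    return A_n @ B_n.T


def view_global_contrastive_loss(z_v, Z, tau=0.5):
    """InfoNCE-style loss between view features z_v (N x d) and global features Z (N x d).

    For anchor Z_i the positive is z_i^v; the negatives are z_j^v with j != i.
    """
    N = Z.shape[0]
    S = cosine_sim_matrix(Z, z_v) / tau        # S[i, j] = s(Z_i, z_j^v) / tau
    pos = np.diag(S)                           # positive logits (j == i)
    neg_mask = 1.0 - np.eye(N)                 # indicator 1[j != i]
    m = S.max(axis=1, keepdims=True)           # row-wise shift for numerical stability
    denom = np.exp(pos - m[:, 0]) + (neg_mask * np.exp(S - m)).sum(axis=1)
    log_denom = np.log(denom) + m[:, 0]
    return float(np.mean(log_denom - pos))


rng = np.random.default_rng(0)
Z = rng.normal(size=(6, 4))
aligned = view_global_contrastive_loss(Z.copy(), Z)        # z_i^v identical to Z_i
shuffled = view_global_contrastive_loss(Z[::-1].copy(), Z)  # positives are mismatched samples
print(aligned, shuffled)
```

Since the positive pair is one of the summands, the loss equals the mean of a row-wise log-sum-exp minus the diagonal similarity, and it is smallest when each \(z_{i}^{v}\) points in the same direction as \(Z_{i}\).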

Several previous MCL works treat all views equally, ignoring the problem of view representation degeneration12,16,32. To address this problem, we assign different weights \(W^{v}\) to different views, which adaptively adjust the contribution of each view to global feature fusion according to its quality. Specifically, if a view is useful, its weight strengthens the contrastive learning between that view's features and the global features; otherwise, the weight weakens it. In this way, high-quality views dominate the fusion process, which mitigates view representation degeneration. Our adaptive multi-view weighted contrastive loss can therefore be described as:

Effectively distinguishing useful views from unreliable ones in MVC is tricky; we address this using the discrepancy between the global feature \(Z\) and each view-specific feature \(z^{v}\). Specifically, a lower discrepancy between a view-specific feature and the global feature indicates that the two are more closely related, so that view receives a higher weight. Thus, the view weights can be updated as:

where \(I(\cdot)\) denotes the discrepancy between a view-specific feature and the global feature, and \(f(\cdot)\) denotes the function that assigns view weights based on this discrepancy. To estimate the discrepancy between feature pairs, we use the maximum mean discrepancy (MMD), which effectively measures the discrepancy between two distributions \(P\) and \(Q\) by comparing expectations over samples \(X_{1}=\{x_{1}^{i}\}_{i=1}^{n_{1}}\) and \(X_{2}=\{x_{2}^{j}\}_{j=1}^{n_{2}}\) drawn from \(P\) and \(Q\), respectively33. Mathematically, MMD can be expressed as:

where \(\phi(\cdot)\) denotes a nonlinear mapping function and \(\mathcal{H}\) denotes the reproducing kernel Hilbert space (RKHS). Squaring Eq. (10) gives:

Here, we adopt the high-dimensional mapping \(\phi(x)\) corresponding to the Gaussian kernel, \(\phi(x)=\big[e^{-\frac{\|x-c_{1}\|^{2}}{2\sigma^{2}}},e^{-\frac{\|x-c_{2}\|^{2}}{2\sigma^{2}}},\ldots\big]\). When computing MMD, it is unnecessary to compute \(\phi(x)\) explicitly, because the Gaussian kernel directly defines the inner product in the RKHS, i.e., \(K(x,y)=\langle\phi(x),\phi(y)\rangle\), and thereby implicitly captures the nonlinear feature space. The above equation can therefore be expanded as:

From Eqs. (10)–(12), we obtain the discrepancy between the global feature and each view-specific feature:

Since a feature \(z^{v}\) with a lower discrepancy from the global feature \(Z\) should be assigned a higher weight, we use the normalization function \(f(I(z^{v},Z))=\mathrm{Softmax}(-I(z^{v},Z))\) as the view weight assignment function. The view weight is updated by:

\(W^{v}\) adaptively adjusts the consistency objective between \(Z\) and \(\{z^{v}\}_{v=1}^{M}\): useful views dominate feature fusion while unreliable views are weakened, which mitigates representation degeneration.
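The discrepancy-based weighting can be sketched with a biased empirical estimate of the squared MMD under a Gaussian kernel, followed by a softmax over the negated discrepancies. Here \(I(z^{v},Z)\) is assumed to be the squared MMD, the bandwidth \(\sigma=1\) is an arbitrary choice, and the helper names are hypothetical; as in the text, the kernel trick means \(\phi(x)\) is never formed explicitly.

```python
import numpy as np


def gaussian_kernel(A, B, sigma=1.0):
    """K[i, j] = exp(-||A_i - B_j||^2 / (2 sigma^2)), i.e. <phi(A_i), phi(B_j)> in the RKHS."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))


def mmd2(X, Y, sigma=1.0):
    """Biased empirical squared MMD: mean K(X,X) + mean K(Y,Y) - 2 mean K(X,Y)."""
    return float(
        gaussian_kernel(X, X, sigma).mean()
        + gaussian_kernel(Y, Y, sigma).mean()
        - 2.0 * gaussian_kernel(X, Y, sigma).mean()
    )


def view_weights(zs, Z, sigma=1.0):
    """W^v = Softmax(-I(z^v, Z)), with I assumed to be the squared MMD."""
    disc = np.array([mmd2(z, Z, sigma) for z in zs])
    e = np.exp(-(disc - disc.min()))   # shifting by the minimum leaves the softmax unchanged
    return e / e.sum(), disc


rng = np.random.default_rng(1)
Z = rng.normal(size=(50, 4))                    # global features
z_good = Z + 0.05 * rng.normal(size=Z.shape)    # view that stays close to Z
z_noisy = rng.normal(loc=2.0, size=Z.shape)     # view with a shifted, unrelated distribution
W, disc = view_weights([z_good, z_noisy], Z)
print("discrepancies:", disc, "weights:", W)
```

In this toy setting, the view that stays close to \(Z\) receives the larger weight, which is the behavior the module relies on; in practice, MMD values under a Gaussian kernel depend on the bandwidth \(\sigma\).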

Dynamic cluster diffusion module

In many previous MVC studies, the importance of inter-cluster separability has been overlooked, so the discriminative clustering information cannot be fully exploited through an effective clustering structure16. To address this issue, we introduce a contrastive learning-based Dynamic Cluster Diffusion (DC) module12, which not only achieves intra-cluster cohesion across views but also separates clusters in the latent representation space. Specifically, the module assumes that the multi-view dataset consists of \(K\) classes and obtains the feature representations of the corresponding cluster centers in both the global feature space and each view-specific feature space. The following cluster-driven contrastive loss is then employed to maximize the distance between clusters:

Since the last term, \(\log\big(\exp(s(\mu_{k},\mu_{k}^{v})/\tau_{c})+\sum_{j=1,j\ne k}^{K}\exp(s(\mu_{k},\mu_{j})/\tau_{c})\big)\), contains both positive and negative terms, it is difficult to optimize directly; the computation can be simplified using the log-sum-exp (LSE) approximation30, i.e.,

Substituting Eq. (16) into Eq. (15) gives:

Here, the first term of \(L_{dc}\) enforces cluster cohesion (pulling positive pairs closer together), and the second term enforces cluster separation (pushing negative pairs apart). In these expressions, \(s(\mu_{k},\mu_{j})=\frac{\mu_{k}^{T}\mu_{j}}{\|\mu_{k}\|\|\mu_{j}\|}\) measures the similarity between cluster-center representations, \(\tau_{c}\) is a temperature parameter that controls the sharpness of the distribution, and \(\mu_{k}\) and \(\mu_{k}^{v}\) denote the representations of the \(k\)-th cluster center in the global and view-specific feature spaces, respectively, which are computed as:

where \(p(k|Z_{i})\) denotes the hard assignment of the \(i\)-th sample to the \(k\)-th cluster.
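Assuming that \(\mu_{k}\) and \(\mu_{k}^{v}\) are means of the global and view-specific features weighted by the hard assignments \(p(k|Z_{i})\), the cluster centers can be computed as in the sketch below. Both spaces share the same assignments obtained from the global features; giving an empty cluster a zero center is an assumption of this sketch, and `cluster_centers` is a hypothetical name.

```python
import numpy as np


def cluster_centers(features, assign, K):
    """Cluster centers as assignment-weighted means (assumed form).

    features: (N, d) global features Z or view-specific features z^v.
    assign:   (N,) integer labels; row i of the one-hot matrix is p(k | Z_i).
    """
    P = np.eye(K)[assign]                           # (N, K) hard assignments p(k | Z_i)
    counts = P.sum(axis=0)                          # samples per cluster
    sums = P.T @ features                           # (K, d) per-cluster feature sums
    return sums / np.maximum(counts, 1.0)[:, None]  # empty clusters keep a zero center


Z = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 10.0], [12.0, 10.0]])  # toy global features
z1 = Z + 1.0                                     # toy view-specific features
labels = np.array([0, 0, 1, 1])                  # assignments computed in the global space
mu = cluster_centers(Z, labels, K=2)             # global centers mu_k; equals [[0, 1], [11, 10]]
mu_v = cluster_centers(z1, labels, K=2)          # view-specific centers mu_k^v
print(mu, mu_v)
```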

Obtaining an accurate \(p(k|Z_{i})\) during training is crucial for optimizing the proposed model. We therefore optimize the model within an expectation-maximization (EM) framework: in each E-step, K-means is applied to obtain the cluster centroid representations and the initial hard assignments \(p(k|Z_{i})\); in the M-step, the cluster centroid representations are updated by minimizing the loss \(L_{dc}\). As optimization progresses, the cluster-center updates no longer rely solely on the initial hard assignments but are dynamically adjusted through the loss function, which introduces a soft-assignment property. This more accurately reflects the contribution of each sample to different clusters, allowing \(p(k|Z_{i})\) to serve as a weighting factor for updating cluster centers and thereby promoting a more reasonable and robust clustering structure during training.
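The E-step can be illustrated with plain Lloyd's K-means on the global features, returning both integer labels and the one-hot hard-assignment matrix \(p(k|Z_{i})\). This is a generic K-means sketch (initialization from randomly chosen data points, a fixed iteration cap), not necessarily the variant used by the authors; `kmeans_e_step` is a hypothetical name.

```python
import numpy as np


def kmeans_e_step(Z, K, n_iter=100, seed=0):
    """Lloyd's K-means on global features Z (N x d).

    Returns integer labels, one-hot hard assignments P[i, k] = p(k | Z_i), and centroids.
    """
    rng = np.random.default_rng(seed)
    centroids = Z[rng.choice(len(Z), size=K, replace=False)]
    for _ in range(n_iter):
        d2 = ((Z[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)  # (N, K) squared distances
        labels = d2.argmin(axis=1)
        new_centroids = np.array([
            Z[labels == k].mean(axis=0) if np.any(labels == k) else centroids[k]
            for k in range(K)
        ])
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break
    # Final hard assignment consistent with the returned centroids.
    d2 = ((Z[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    P = np.eye(K)[labels]
    return labels, P, centroids


rng = np.random.default_rng(42)
Z = np.vstack([rng.normal(0.0, 0.1, size=(20, 2)), rng.normal(5.0, 0.1, size=(20, 2))])
labels, P, C = kmeans_e_step(Z, K=2)
print(labels)
```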

The cluster-driven contrastive loss thus consists of two components: the cluster cohesion term, which facilitates interaction across views, and the cluster separation term, which pushes different clusters apart in the latent feature space, resulting in a distinct, well-separated cluster structure.
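The two-term structure of \(L_{dc}\) can be sketched as follows, assuming the simplified form averages, over clusters and views, a cohesion term \(-s(\mu_{k},\mu_{k}^{v})/\tau_{c}\) and a separation term \(\log\sum_{j\ne k}\exp(s(\mu_{k},\mu_{j})/\tau_{c})\). This assumed form follows the description of the first and second terms given above but may differ from the paper's exact equation; the function names are hypothetical.

```python
import numpy as np


def cos_sim(A, B):
    """Row-wise cosine similarity matrix between center sets A (K x d) and B (K x d)."""
    A_n = A / np.linalg.norm(A, axis=1, keepdims=True)
    B_n = B / np.linalg.norm(B, axis=1, keepdims=True)
    return A_n @ B_n.T


def logsumexp(v):
    """Numerically stable log(sum(exp(v))) for a 1-D array."""
    m = v.max()
    return m + np.log(np.exp(v - m).sum())


def dc_loss(mu, mu_views, tau_c=0.5):
    """Assumed two-term cluster-driven loss.

    mu: (K, d) global centers; mu_views: list of (K, d) view-specific centers.
    cohesion:   -s(mu_k, mu_k^v) / tau_c, pulls matching centers together
    separation: log sum_{j != k} exp(s(mu_k, mu_j) / tau_c), pushes global centers apart
    """
    K = mu.shape[0]
    S = cos_sim(mu, mu) / tau_c
    off_diag = ~np.eye(K, dtype=bool)
    separation = np.mean([logsumexp(S[k][off_diag[k]]) for k in range(K)])
    cohesion = np.mean([-np.diag(cos_sim(mu, mu_v)).mean() / tau_c for mu_v in mu_views])
    return float(cohesion + separation), float(cohesion), float(separation)


mu_close = np.array([[1.0, 0.0], [0.9, 0.1], [0.8, 0.2]])    # nearly collinear centers
mu_far = np.array([[1.0, 0.0], [-0.5, 0.87], [-0.5, -0.87]])  # centers spread around the circle
_, _, sep_close = dc_loss(mu_close, [mu_close])
total_far, coh_far, sep_far = dc_loss(mu_far, [mu_far])
print(sep_close, sep_far, coh_far)
```

Spreading the global centers apart lowers the separation term, while matching view-specific centers to their global counterparts drives the cohesion term toward its minimum of \(-1/\tau_{c}\).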

Optimization

We train our deep clustering network in two stages: pre-training and fine-tuning. Because randomly initialized autoencoder parameters may cause the model to converge to a poor local optimum, in the pre-training stage we first train the deep autoencoders to obtain better initial parameters \(\{\theta^{v}\}_{v=1}^{M}\) and \(\{\phi^{v}\}_{v=1}^{M}\), which speeds up convergence. In the fine-tuning stage, we first compute the adaptive weighted contrastive loss (Eq. (8)) to iteratively update the view weights. Then, because an accurate \(p(k|Z)\) is important for optimizing our model, we train it within the expectation-maximization (EM) framework: in each E-step, K-means is applied to the current global features, and in each M-step all module losses are combined and the following overall objective is optimized with the Adam optimizer:

where \(\:\partial\:\) and \(\:\beta\:\) are two hyperparameters to control the balance of the three loss components.

The training process of our proposed method is summarized in Algorithm 1, and the final clustering results are obtained by performing K-means clustering on the consensus representation Z generated by the global feature fusion module.

Complexity analysis

The computational complexity of the proposed model has two main components: adaptive weighted feature fusion and dynamic cluster diffusion. Here, \(N\) denotes the number of samples, \(V\) the number of views, \(K\) the number of clusters, and \(d\) the feature dimensionality. On the one hand, computing the contrastive loss used to obtain the view weights costs \(3N^{2}d\) per view, and fusing the view-specific latent features into the global feature according to the view weights costs \(NVd\). Thus, after at most \(T\) iterations, the complexity is \(O(3TN^{2}Vd+TNVd)\). On the other hand, K-means clustering in each E-step costs \(NKd\), after which a mini-batch \(B\) of size \(b\) is randomly sampled in the M-step. Computing the cluster-center representations in Eqs. (16) and (17) costs \(KVbd^{2}\). One round of the M-step iterates over \(N/b\) mini-batches, so the time complexity of each round is \(O\left(\frac{N}{b}(NKd+KVbd^{2})\right)=O\left(\frac{N^{2}Kd}{b}+NKVd^{2}\right)\), and after at most \(T\) iterations it is \(O\left(\frac{TN^{2}Kd}{b}+TNKVd^{2}\right)\). The overall time complexity is therefore \(O\left(3TN^{2}Vd+TNVd+TNVKd^{2}+\frac{TN^{2}Kd}{b}\right)\). Since \(K\), \(V\), \(b\), and \(d\) are far smaller than the number of samples \(N\), the time complexity of the model can be approximated as \(O(TN^{2}d(3V+K))\).
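The bound can be checked numerically by evaluating each term for illustrative problem sizes. The sizes below are hypothetical, chosen only to satisfy the assumption that \(K\), \(V\), \(b\), and \(d\) are much smaller than \(N\); they are not taken from the experiments, and the function names are hypothetical.

```python
def complexity_terms(N, V, K, d, b, T):
    """Operation counts for each term of the overall bound, constants as stated in the text."""
    return {
        "contrastive_weights": 3 * T * N**2 * V * d,   # 3 T N^2 V d
        "fusion": T * N * V * d,                       # T N V d
        "cluster_centers": T * N * V * K * d**2,       # T N V K d^2
        "kmeans_minibatch": T * N**2 * K * d / b,      # T N^2 K d / b
    }


def simplified_bound(N, V, K, d, T):
    """Simplified bound T N^2 d (3V + K)."""
    return T * N**2 * d * (3 * V + K)


# Illustrative sizes (hypothetical, not taken from the experiments).
N, V, K, d, b, T = 10_000, 4, 10, 64, 256, 100
terms = complexity_terms(N, V, K, d, b, T)
total = sum(terms.values())
for name, value in terms.items():
    print(f"{name:>20s}: {value:.3e} ({value / total:.1%})")
print(f"{'simplified bound':>20s}: {simplified_bound(N, V, K, d, T):.3e}")
```

With these sizes, the \(3TN^{2}Vd\) term from the view-weight computation dominates, and the sum of all four terms stays below the simplified bound \(TN^{2}d(3V+K)\).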

Experiments

In this section, six datasets are selected for experiments and the results are compared with 12 representative and state-of-the-art methods to verify the effectiveness of the proposed method.

Dataset

Our experiments are conducted on the following datasets; details are given in Table 2.

1. Sentences NYU v2 (RGB-D): RGB-D is a set of indoor images with textual descriptions34. For the first view, we use image features pre-extracted by a ResNet-50 network; for the second view, we use image-description features obtained from a doc2vec model trained on the Wikipedia dataset12.

2. Cora: Cora is a text dataset with seven classes35; in this paper, we use all four of its feature sets (content, inbound, outbound, and citation) as different views.

3. Columbia Consumer Video (CCV): CCV is a video dataset containing 6,773 samples in 20 categories36. We use three hand-crafted bag-of-words representations, STIP, SIFT, and MFCC, as different views.

4. Hdigit: The Hdigit dataset is derived from the MNIST and USPS handwritten digit datasets and contains 10,000 samples with two views37.

5. ALOI: ALOI is a subset of ALOI-1K in which color similarity, Haralick, HSV, and RGB features are extracted from each image and used as four views38.

6. Digit-product: Similar to Hdigit, Digit-product is derived from the MNIST and Fashion-MNIST datasets and contains 30,000 samples with two views39.

Compared methods and evaluation measures

We compare our proposed deep clustering method based on dual contrastive learning with the following traditional methods and some of the latest deep clustering approaches.

1. K-means: K-means is a classic clustering algorithm that partitions data by minimizing the distances between points and their cluster centroids40.

2. BMVC: BMVC efficiently incorporates collaborative information from multiple views by jointly learning collaborative discrete representations and binary cluster structures41.

3. LMVSC: LMVSC learns a set of anchors and, for each view, constructs a smaller graph between the raw data and the anchors; these anchor graphs are then merged for subspace clustering.

4. FPMVS-CAG: FPMVS-CAG unifies anchor selection and subspace graph construction in a single optimization framework, allowing the two processes to reinforce each other and improve clustering quality42.

5. EAMC: EAMC is an end-to-end adversarial-attention network for multi-modal clustering that uses adversarial learning to align the latent distributions and attention mechanisms to quantify the importance of different modalities43.

6. SiMVC: Simple Multi-view Clustering (SiMVC) achieves competitive or superior performance by prioritizing informative views through a linear combination mechanism16.

7. DSMVC: DSMVC simultaneously extracts complementary information across views and discards meaningless noise through automatic feature selection32.

8. ProPos: ProPos maximizes the distance between prototypes to obtain well-separated representations and aligns augmented views with sampled neighbors (positive sampling) to improve intra-cluster compactness30.

9. CoMVC: Contrastive Multi-view Clustering (CoMVC) extends SiMVC with a selective contrastive alignment module, gaining the benefits of alignment while still prioritizing informative views16.

10. MFLVC: MFLVC balances the reconstruction of view-private information with the learning of common semantics through multi-level feature learning15.

11. GCFAgg: GCFAgg aligns the consensus representation with view-specific representations through a structure-guided contrastive learning module44.

12. DCMVC: DCMVC introduces dual contrastive learning modules that promote intra-cluster compactness and inter-cluster separability, respectively, yielding discriminative information through a clustering-friendly structure12.

We evaluate clustering performance with three metrics: unsupervised clustering accuracy (ACC)45, normalized mutual information (NMI)46, and purity (PUR)47.
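For reference, the three metrics can be sketched in plain Python as follows. This is an illustrative implementation under stated assumptions, not the evaluation code used for Tables 3 and 4: ACC is computed by brute force over cluster-to-class mappings (practical only for a handful of clusters; larger problems normally use the Hungarian algorithm), and NMI uses arithmetic-mean normalization, one of several common conventions.

```python
import math
from collections import Counter
from itertools import permutations


def contingency(y_true, y_pred):
    """Count co-occurrences of (predicted cluster, true class) pairs."""
    return Counter(zip(y_pred, y_true))


def clustering_accuracy(y_true, y_pred):
    """ACC: fraction of samples labelled correctly under the best one-to-one
    cluster-to-class mapping (brute force, small numbers of clusters only)."""
    classes = sorted(set(y_true))
    clusters = sorted(set(y_pred))
    counts = contingency(y_true, y_pred)
    # Pad with None so every cluster can be mapped when #clusters > #classes.
    padded = classes + [None] * max(0, len(clusters) - len(classes))
    best = 0
    for perm in permutations(padded, len(clusters)):
        matched = sum(counts[(cl, cls)] for cl, cls in zip(clusters, perm))
        best = max(best, matched)
    return best / len(y_true)


def purity(y_true, y_pred):
    """PUR: each cluster is credited with its most frequent true class."""
    by_cluster = {}
    for cl, cls in zip(y_pred, y_true):
        by_cluster.setdefault(cl, Counter())[cls] += 1
    hits = sum(cnt.most_common(1)[0][1] for cnt in by_cluster.values())
    return hits / len(y_true)


def _entropy(labels):
    n = len(labels)
    return -sum((m / n) * math.log(m / n) for m in Counter(labels).values())


def nmi(y_true, y_pred):
    """NMI with arithmetic-mean normalization: I(Y;C) / ((H(Y) + H(C)) / 2)."""
    n = len(y_true)
    n_true, n_pred = Counter(y_true), Counter(y_pred)
    mi = 0.0
    for (cl, cls), m in contingency(y_true, y_pred).items():
        mi += (m / n) * math.log(n * m / (n_true[cls] * n_pred[cl]))
    denom = (_entropy(y_true) + _entropy(y_pred)) / 2
    return mi / denom if denom > 0 else 1.0
```

Note that ACC and PUR coincide when each cluster maps to a distinct class, but PUR can reach 1.0 trivially by over-segmenting, which is why it is usually reported alongside ACC and NMI.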

Implementation details

We adopt fully connected (FC) layers as the main building block of the deep network. For each view-specific network, the encoder architecture is input-FC500-FC500-FC2000-FC256, and the decoder mirrors the corresponding encoder. We use ReLU48 as the activation function and Adam49 as the optimizer. By default, the learning rate is 0.0003 and the batch size is 256. In the pre-training stage, all view-specific autoencoders are trained for 200 epochs; in the training stage, the network is trained for 200 epochs on each dataset. The temperature parameter \(\tau\) is set to 1, and \(\tau_{c}\) is set to 0.5. The hyperparameter \(\partial\) is chosen from \(\{0.001, 0.01, 0.1, 1\}\), and the hyperparameter \(\beta\) from \(\{0.4, 0.6, 0.8, 1\}\).
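The layer sizes and default settings above can be written down as a small configuration sketch, which also makes the mirrored decoder and the per-view parameter count explicit. This is a framework-free illustration under assumptions: the helper names are hypothetical, the input dimension 784 in the usage line is only an example (a flattened 28×28 image), and the exact placement of ReLU activations is not specified here, so only layer shapes are modelled.

```python
from dataclasses import dataclass

# Hidden sizes of each view-specific encoder: input-FC500-FC500-FC2000-FC256.
ENCODER_HIDDEN = [500, 500, 2000, 256]


def autoencoder_dims(input_dim):
    """Return (encoder, decoder) as lists of (in_features, out_features) for the
    FC layers; the decoder mirrors the encoder back to the input dimension."""
    sizes = [input_dim] + ENCODER_HIDDEN
    encoder = list(zip(sizes[:-1], sizes[1:]))
    decoder = [(out, inp) for inp, out in reversed(encoder)]
    return encoder, decoder


def num_params(layers):
    """Number of weights plus biases in a stack of fully connected layers."""
    return sum(i * o + o for i, o in layers)


@dataclass
class TrainConfig:
    """Default training settings as stated in the text (hypothetical container)."""
    lr: float = 3e-4
    batch_size: int = 256
    pretrain_epochs: int = 200
    train_epochs: int = 200
    tau: float = 1.0     # temperature tau
    tau_c: float = 0.5   # temperature tau_c


encoder, decoder = autoencoder_dims(784)   # example input dimension
print(encoder, decoder, num_params(encoder) + num_params(decoder))
```

Each view gets its own encoder-decoder pair, so the total parameter count grows linearly with the number of views and with each view's input dimension.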

Our experiments are conducted on Ubuntu 22.04 with an NVIDIA RTX 4090 GPU, an Intel Xeon Silver 4310 CPU, and 32 GB of RAM.

Experimental results

Tables 3 and 4 report the results on the six datasets, and Fig. 4 shows the ACC of the compared methods. The best and second-best results are shown in bold and underlined, respectively. We note the following:

1. On some small-scale datasets, our method outperforms most models. For example, on the RGB-D, Cora, and CCV datasets, it achieves significantly higher quantitative metrics than the traditional MVC approaches. Compared with EAMC, SiMVC, and MFLVC, our ACC improves by 7.21%, 11.76%, and 5.55%, respectively, which indicates that our method better captures complementary multi-view information. However, our results are not the best when compared with some of the latest methods, such as DCMVC. A possible reason is that false negatives disturb the clustering process during contrastive learning.

2. Single-view clustering (e.g., K-means) generally performs worse. However, some multi-view clustering methods, namely BMVC and SiMVC, perform worse than K-means on the RGB-D, Cora, and CCV datasets, possibly because many MVC methods fail to extract discriminative features from multiple views effectively, which hurts clustering performance. This observation shows that simply having multiple views is not sufficient for good performance; the key lies in how multi-view information is integrated and used. Our method employs a dual contrastive mechanism to extract discriminative features from multiple views.

3. Our approach outperforms the other models in terms of the quantitative metrics on the larger datasets, such as Hdigit, ALOI, and Digit-product. This may be because most MVC approaches treat all views equally during clustering, ignoring the impact of low-quality views that contain redundancy and noise. In our method, the adaptive weighted module based on contrastive learning adaptively strengthens useful views and weakens useless ones, thereby improving clustering.

Ablation study

In this section, we perform a detailed ablation study to gain a deeper insight into the sub-modules designed for MVC. The ablation results are shown in Table 5.

1. Effect of adaptive weighted feature fusion: AW is an adaptive weighted module based on contrastive learning. To evaluate its effectiveness, we compare our network with a variant that uses average fusion instead. As shown in Table 5, our method outperforms the variant without AW in clustering performance. These results suggest that, compared with average fusion, adaptive weighted feature fusion enables the model to capture more comprehensive feature information and obtain a better-adapted clustering representation.

2. Effect of dynamic cluster diffusion: We evaluate the effectiveness of the DC module by comparing the proposed method with a degraded network without it. Table 5 shows that clustering performance drops without the DC module. For example, on the Digit-product dataset, removing the DC module decreases ACC by 2.04% and PUR by 3.11%, and on the RGB-D dataset, ACC decreases by 1.84%. These results indicate that the DC module effectively improves the clustering performance of our network; it further optimizes the clustering representation by increasing the separability between clusters.

3. Effects of contrastive loss functions: Our method mainly involves two loss functions, \(L_{aw}\) and \(L_{dc}\). \(L_{aw}\) plays a crucial role: without it, the ACC of our method on the RGB-D, CCV, and Digit-product datasets decreases by 16.39%, 10.15%, and 21.24%, respectively, because the global features computed without \(L_{aw}\) are disturbed by the irrelevant information inherent in each view, which seriously degrades clustering performance. We further introduce \(L_{dc}\) to optimize the clustering representation: without it, the ACC on the RGB-D, CCV, and Digit-product datasets decreases by 1.02%, 1.79%, and 1.11%, respectively, because the contribution of each sample to the different clusters is not well reflected, causing some samples to be clustered incorrectly.

Model analysis

Visualization of the clustering results

To validate that our method obtains discriminative representations with a clustering-friendly structure, we use t-SNE50 to visualize the consensus representations learned at different training stages on the RGB-D and Cora datasets. As shown in Figs. 5 and 6, as training proceeds, the cluster structure of the learned consensus representation becomes clearer: the distances between clusters gradually increase, and the data within each cluster become more compact.

View weighting analysis

Adaptive view weighting is one of the key components of our method; it is designed to strengthen the weights of useful views and weaken the influence of unreliable ones. This section examines how adaptive weighting affects multi-view contrastive learning. Fig. 7 shows how the weights of the different views evolve over the iterations on the RGB-D and Cora datasets. Initially, all views have the same weight, but as iterations proceed, the weights change according to view quality; note that the weights always sum to 1. High-quality views are assigned higher weights and low-quality views lower weights. As a result, contrastive learning based on high-quality views is strengthened, while alignment errors from low-quality views are effectively mitigated. As shown in Fig. 8, the final global features are highly consistent with the high-quality view, so more useful semantic information is extracted from the reliable view. These results verify the effectiveness of the proposed adaptive weighted multi-view method.
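The behaviour described above (weights that start equal, always sum to 1, and favour views that agree with the global feature) can be illustrated with a softmax over negative per-view losses, followed by a weighted sum of view-specific features. This is a hypothetical weighting rule chosen for illustration, not the paper's formula; the function names and the temperature argument are assumptions. With equal losses it reduces to the average fusion used as the ablation baseline.

```python
import math


def softmax(xs):
    m = max(xs)                       # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def view_weights(view_losses, temperature=1.0):
    """Hypothetical rule: a view whose contrastive loss against the global
    feature is lower receives a larger weight; the weights sum to 1."""
    return softmax([-loss / temperature for loss in view_losses])


def fuse(view_features, weights):
    """Global feature of one sample = weighted sum of its view-specific features."""
    dim = len(view_features[0])
    return [sum(w * z[j] for w, z in zip(weights, view_features)) for j in range(dim)]


print(view_weights([1.0, 1.0]))         # equal losses give average fusion
w = view_weights([0.2, 0.9, 0.5])       # view 0 agrees best with the global feature
print(w, fuse([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], w))
```

Because the weights form a convex combination, the fused feature always lies within the span of the view-specific features, and a single unreliable view can be down-weighted without being discarded entirely.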

Hyper-parameter analysis

We conducted experiments on the ALOI dataset with various combinations of \(\partial\) and \(\beta\) to analyze hyperparameter sensitivity. When testing the sensitivity of a single parameter, the other parameters are fixed to their optimal values. Figure 9 presents the NMI and ACC obtained with different combinations of \(\partial\) and \(\beta\); the clustering performance of our method on ALOI remains relatively stable. In summary, our method is robust to the choice of hyperparameters, maintaining consistently good performance across a variety of settings.
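A sensitivity sweep of this kind can be sketched as evaluating a score on every pair from the two candidate sets and reporting the spread between the best and worst settings. In the sketch below, `score_fn` is a hypothetical stand-in for training and evaluating the model with a given \((\partial, \beta)\); the toy lambda in the usage line exists only to show the call.

```python
from itertools import product

PARTIAL_VALUES = [0.001, 0.01, 0.1, 1]   # candidate values of the hyperparameter ∂
BETA_VALUES = [0.4, 0.6, 0.8, 1]         # candidate values of beta


def sweep(score_fn, partial_values=PARTIAL_VALUES, beta_values=BETA_VALUES):
    """Evaluate score_fn on every (∂, beta) pair; return the scores and the
    max-min spread, a simple summary of how sensitive the metric is."""
    scores = {(p, b): score_fn(p, b) for p, b in product(partial_values, beta_values)}
    spread = max(scores.values()) - min(scores.values())
    return scores, spread


# Toy usage: a made-up score that varies only slightly with beta.
scores, spread = sweep(lambda p, b: 0.8 + 0.01 * b)
print(len(scores), round(spread, 4))
```

A small spread relative to the gaps between methods in Tables 3 and 4 is what "relatively stable" amounts to in practice.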

Conclusion and future perspectives

In this paper, we propose a novel deep multi-view clustering network based on a dual contrastive mechanism. Compared with existing MVC methods, our method obtains a more accurate clustering representation by adaptively assigning different weights to views of different quality, which effectively mitigates the degeneration of view representations. Moreover, our method learns discriminative representations with clustering-friendly properties, enabling better separation of different clusters in the latent space. Specifically, we introduce view-specific autoencoders to extract the distinct features of each view. Then, we design an adaptive weighted module based on contrastive learning so that the consensus representation aligns with the features of high-quality views. To further enhance clustering, we introduce a dynamic cluster diffusion (DC) module, which maximizes the separation between clusters by increasing the distance between different cluster representations in the consensus feature space. Experimental results demonstrate that our approach not only effectively mitigates the view representation degeneration problem but also generates more discriminative, clustering-friendly representations. Moreover, on several large-scale datasets, our method clearly outperforms existing state-of-the-art methods.

In our future work, we plan to address the interference of the false-negative problem in contrastive learning on the clustering process to improve the robustness of the clustering network. We will also investigate and improve the view discrepancy formula to reduce the computational cost.

Data availability

All data generated or analyzed during this study are included in this published article.

References

Jain, A. K., Murty, M. N. & Flynn, P. J. Data clustering: a review. ACM Comput. Surv. 31(3), 264–323 (1999).

Berkhin, P. A survey of clustering data mining techniques. In Grouping Multidimensional Data: Recent Advances in Clustering 25–71 (Springer, 2006).

Neha, D. & Vidyavathi, B. M. A survey on applications of data mining using clustering techniques. Int. J. Comput. Appl. 126(2) (2015).

Saxena, A. et al. A review of clustering techniques and developments. Neurocomputing 267, 664–681 (2017).

Oyelade, J. et al. Data clustering: Algorithms and its applications. In 2019 19th International Conference on Computational Science and Its Applications (ICCSA), 71–81 (IEEE, 2019).

Masood, M. A. & Khan, M. N. A. Clustering techniques in bioinformatics. IJ Mod. Educ. Comput. Sci. 1, 38–46 (2015).

Ekanayake, J., Gunarathne, T. & Qiu, J. Cloud technologies for bioinformatics applications. IEEE Trans. Parallel Distrib. Syst. 22(6), 998–1011 (2010).

Zhang, Z. et al. Discriminative clustering on manifold for adaptive transductive classification. Neural Netw. 94, 260–273 (2017).

Hoya, T. Reducing the number of centers in a probabilistic neural network via applying the first neighbor means clustering algorithm. Array 14, 100161 (2022).

Yang, Y. & Wang, H. Multi-view clustering: A survey. Big Data Min. Analytics. 1(2), 83–107 (2018).

Wu, S. et al. Self-weighted contrastive fusion for deep multi-view clustering. IEEE Trans. Multimed. (2024).

Cui, J. et al. Dual contrast-driven deep multi-view clustering. IEEE Trans. Image Process. (2024).

Xu, K. et al. Clean and robust multi-level subspace representations learning for deep multi-view subspace clustering. Expert Syst. Appl. 252, 124243 (2024).

Wang, Y. et al. Consistent multiple graph embedding for multi-view clustering. IEEE Trans. Multimedia. 25, 1008–1018 (2021).

Xu, J. et al. Multi-level feature learning for contrastive multi-view clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 16051–16060 (2022).

Trosten, D. J. et al. Reconsidering representation alignment for multi-view clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1255–1265 (2021).

Zhang, C. et al. Latent multi-view subspace clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4279–4287 (2017).

Zhang, C. et al. Generalized latent multi-view subspace clustering. IEEE Trans. Pattern Anal. Mach. Intell. 42(1), 86–99 (2018).

Su, C. et al. Anchor-based multi-view subspace clustering with graph learning. Neurocomputing 547, 126320 (2023).

Liu, M. et al. Multi-view subspace clustering network with block diagonal and diverse representation. Inf. Sci. 626, 149–165 (2023).

Zhou, L. et al. A survey and an empirical evaluation of Multi-view clustering approaches. ACM Comput. Surveys. 56(7), 1–38 (2024).

Nie, F., Li, J. & Li, X. Self-weighted multiview clustering with multiple graphs. In IJCAI, 2564–2570 (2017).

Mei, Y. et al. Robust graph-based multi-view clustering in latent embedding space. Int. J. Mach. Learn. Cybernet. 1–12 (2022).

Wang, H. et al. A study of graph-based system for multi-view clustering. Knowl. Based Syst. 163, 1009–1019 (2019).

Xie, J., Girshick, R. & Farhadi, A. Unsupervised deep embedding for clustering analysis. In International Conference on Machine Learning, 478–487 (PMLR, 2016).

Guo, X. et al. Improved deep embedded clustering with local structure preservation. In IJCAI, vol. 17, 1753–1759 (2017).

Zhao, H., Ding, Z. & Fu, Y. Multi-view clustering via deep matrix factorization. In Proceedings of the AAAI Conference on Artificial Intelligence. vol. 31, no. 1 (2017).

Li, J. et al. Deep graph regularized non-negative matrix factorization for multi-view clustering. Neurocomputing 390, 108–116 (2020).

Li, Y. et al. Contrastive clustering. In Proceedings of the AAAI Conference on Artificial Intelligence. vol. 35, no. 10, 8547–8555 (2021).

Huang, Z. et al. Learning representation for clustering via prototype scattering and positive sampling. IEEE Trans. Pattern Anal. Mach. Intell. 45(6), 7509–7524 (2022).

Lin, F. et al. Contrastive multi-view hyperbolic hierarchical clustering. arXiv preprint arXiv:2205.02618 (2022).

Tang, H. & Liu, Y. Deep safe multi-view clustering: Reducing the risk of clustering performance degradation caused by view increase. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 202–211 (2022).

Chen, T. et al. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning. PMLR, 1597–1607 (2020).

Kong, C. et al. What are you talking about? text-to-image coreference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3558–3565 (2014).

Fang, S. G. et al. Efficient multi-view clustering via unified and discrete bipartite graph learning. IEEE Trans. Neural Networks Learn. Syst. (2023).

Jiang, Y. G. et al. Consumer video understanding: A benchmark database and an evaluation of human and machine performance. In Proceedings of the 1st ACM International Conference on Multimedia Retrieval, 1–8 (2011).

Chen, M. S. et al. Representation learning in multi-view clustering: A literature review. Data Sci. Eng. 7(3), 225–241 (2022).

Zhang, G. Y., Huang, D. & Wang, C. D. Facilitated low-rank multi-view subspace clustering. Knowl. Based Syst. 260, 110141 (2023).

Xu, J. et al. Multi-VAE: Learning disentangled view-common and view-peculiar visual representations for multi-view clustering. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 9234–9243 (2021).

MacQueen, J. Some methods for classification and analysis of multivariate observations. Proc. Fifth Berkeley Symp. Math. Stat. Probab. 1(14), 281–297 (1967).

Zhang, Z. et al. Binary multi-view clustering. IEEE Trans. Pattern Anal. Mach. Intell. 41(7), 1774–1782 (2018).

Wang, S. et al. Fast parameter-free multi-view subspace clustering with consensus anchor guidance. IEEE Trans. Image Process. 31, 556–568 (2021).

Zhou, R. & Shen, Y. D. End-to-end adversarial-attention network for multi-modal clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 14619–14628 (2020).

Yan, W. et al. GCFAgg: Global and cross-view feature aggregation for multi-view clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 19863–19872 (2023).

Xu, W., Liu, X. & Gong, Y. Document clustering based on non-negative matrix factorization. In Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, 267–273 (2003).

Strehl, A. & Ghosh, J. Cluster ensembles: a knowledge reuse framework for combining multiple partitions. J. Mach. Learn. Res. 3, 583–617 (2002).

Schütze, H., Manning, C. D. & Raghavan, P. Introduction to Information Retrieval (Cambridge University Press, 2008).

Glorot, X., Bordes, A. & Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, 315–323 (JMLR Workshop and Conference Proceedings, 2011).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Van der Maaten, L. & Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 9(11) (2008).

Acknowledgements

This work is supported by the National Natural Science Foundation of China (62266007); Guangxi Natural Science Foundation (2021GXNSFAA220068); Innovation Project of Guangxi Graduate Education (JGY2022104, JGY2023116); the Research Fund of Guangxi Minzu University Cultivation Project (2023MDKJ005).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huang, H., Mei, Y., Wei, X. et al. Self-weighted dual contrastive multi-view clustering network. Sci Rep 15, 16154 (2025). https://doi.org/10.1038/s41598-025-00895-6
