Abstract

Teachers who are aware of their students’ strengths and weakness can tailor their teaching methodologies to meet the challenging students efficiently for better results. This helps them to identify any potential learning challenges at an early stage leading to improved academic performance and success ratio. This also fosters a learning environment where students feel motivated and valued to excel in their respective fields. This study offers a robust adaptive feedback system tailored for Learning Management System leveraging instance level explorations, helping teachers to find the specific instance affecting the learner’s learning outcome. The proposed system can also be utilized by the institutions where the outcome-based education system has been adopted. The study includes Stacking, Capsule Network, SVM, Random Forest, Decision Tree, and KNN for experiments. Stacking achieved the highest accuracy of 76.70% while SVM demonstrated the highest precision of 0.78 showing the effectiveness of ensemble learning techniques. The primary objective of this endeavor is to elevate automated assessment to provide precise and meaningful feedback, enhancing the educational experience for tertiary students through the integration of technology and pedagogical concepts. The learning feedback has been made available via a user-friendly webserver at: https://khan-learning-feedback.streamlit.app/.

Similar content being viewed by others

Introduction

Every student has different cognitive skills and learning styles with different areas where they excel. The identification of these areas is very important as the same strengths and weakness further help the teachers and guardians to better understand and support them. This allows the teacher to make strategies accordingly and tailor the educational material and instructions while keeping in view the gaps or weakness in the learner, thus making a learning environment fostering the growth of the students1.

The evolution of the Learning Management System has facilitated the student–teacher interaction and become a pivotal role for dissemination of educational content. Analyzing this issue, it is possible to state that the post-pandemic world has greatly influenced and boosted the usage of LMS2. Some of the benefits of LMS are its flexibility while its accessibility complements the principles of the personalization of learning hence increasing students’ interest and performance as well as the opportunities of group work. LMS keeps students by their side in real-time and give the teachers crucial reports and other data-driven information. Evidence has revealed that there are 561 LMS platforms available for academic and education uses3. The AI embedded era promoted by the Fourth Industrial Revolution has greatly influenced the education sector. This innovation has extended the process of teaching welcoming the new generations to an innovative form of learning that suits the modern society4. Within LMS, the adaptive feedback model plays an important role since it provides guidance according to the profile and performance of the learner5. The adaptive feedback model serves as a base line – offering the detailed analyses of the challenging areas that need to be improved6. The learning analytics can help in identifying the learners’ challenges and the optimal time to overcome them in order to deepen constructive learning7.

In recent years, more instructors have incorporated LMS and methods of automated assessment, which reveal a gap in current approaches about the extent of feedback and the role of learners’ characteristics in learning8. There is a need for an advanced intelligent system integrated within automated assessment systems or Learning Management Systems (LMS) that can accurately identify and diagnose learning gaps in real-time. Such a system should also be capable of identifying specific instances affecting a student’s learning capability, enabling educators to implement timely, personalized interventions. This approach fosters improved student engagement, performance, and overall academic success by proactively addressing individual learning challenges. Therefore, this study seeks to provide a blueprint and the empirical assessment of a delicate personalized adaptive feedback system for tertiary education sectors based on the learner’s unique features or demographics that inform the learning preferences. The research has employed different machine learning algorithms with the help of a simulated dataset to determine the challenges of the learners. The aim is to identify certain features or instances that particularly impact the progress of the student. So, the main focus is kept on instance level learning algorithms, however other machine learning algorithms are also utilized to evaluate the proposed study. The contribution of this work are as follows:

-

Utilization of Instance-level learning algorithms to determine the specific instance of the learner influencing his / her overall learning outcomes.

-

Implementation of Capsule Networks to capture patterns and relationships in the dataset resulting in a robust adaptive feedback system.

-

A comparative analysis of results with traditional machine learning algorithms to highlight the strengths and weaknesses of each model.

This study is organized into different section; Introduction outlining the background of the study and the objectives behind; Related Work detailing the similar literature, Materials and Methods depicting the research design and datasets; Results showing the findings; Discussion, an analysis of the results; and Conclusion summarizing the key findings and future directions.

Related work

The integration of technology and learning, notably AI and data analytics, has sparked transformative progress within the critical domain of higher education9. These modern learning platforms aim to incorporate historical data about previous users by modeling learning processes from the perspective of the learners. As a result, they can adapt to a rapidly changing environment by providing learners with not only accurate and high-quality learning materials but also by taking into account individual learners’ needs. To create more individualized educational environments, AI-enabled learning systems are increasingly being merged with cutting-edge methodologies. These systems are becoming more popular because they can deliver educational information and adapt to meet the needs of specific students10.

LMS have become very important in the field of education. They offer a consolidated platform for organizing educational resources and promoting interaction and cooperation between students and teachers. Additionally, LMS provides analytics and reporting capabilities, supporting assessment and evaluation procedures. According to several studies, different LMSs and related tools improve student retention, critical thinking, collaborative performance, and engagement. Furthermore, LMSs provide teachers with the ability to monitor student progress, predict performance (for early identification of at-risk students), and then use the collected data to adjust and adapt their teaching methods. Various LMS possess their distinct proprietary approaches to learning assessment and feedback, often lacking extensive compatibility with external modules11. Integrating automated assessment mechanisms into an LMS, especially when aimed at delivering insightful feedback, introduces several challenges, primarily revolving around privacy and security considerations. Numerous research studies and tools have been introduced to enhance LMS functionalities and address heightened security apprehensions12.

Through the use of Bayesian networks and Learning Analytics (LA)13, a study provides a novel method for modeling learners’ personalities within an intelligent Moodle environment (iMoodle). The study attempts to predict personality dimensions—extraversion, openness, and neuroticism—through an unobtrusive method based on LA data in response to the need for individualized and adaptive learning. The technique successfully predicts these personality traits, as indicated by recall, precision, F-measure, and accuracy scores falling within an acceptable range, according to the findings of an experiment involving 139 students. Additionally, the study demonstrates that the existing Big Five Inventory (BFI) and the LA-driven approach exhibit a respectable level of agreement when modeling the selected personality traits. By identifying challenging personality traits and suggesting integration with gamification tactics, the research provides insights for improving smart learning environments and bridging the gap between conventional psychological assessment tools and innovative learning analytics techniques14.

This study15 addresses the urgent need for scalable and valuable feedback delivery in response to the changing educational landscape. The authors note a lack of discipline-agnostic frameworks for providing first-year education within the context of educational massification, despite the presence of feedback models. They propose a novel "strategic framework for feedback at scale" to bridge this gap, consisting of three stages: automatic continuous feedback, peer-led staged feedback, and teacher-led staged feedback. This framework is supported by specific examples and implementation recommendations, aiming to enhance first-year undergraduate learning and engagement across various educational contexts16. The study emphasizes the necessity of empirical research to evaluate the framework’s effectiveness in real-world situations while recognizing its limitations and highlighting its potential to reshape educational practices and outcomes.

The author17 explores the expanding usage of online learning environments like Moodle in higher education, illustrating how they alter the student–teacher connection by granting students more control over their education while reducing in-person interactions. They introduce the TeSLA project, advancement in e-learning that enhances e-assessment capabilities. However, this shift, enabling student monitoring and potentially compromising personal data, raises privacy concerns. Using the TeSLA project as a case study, the authors analyze several e-learning platform design techniques and their implications for data privacy. To enhance privacy protection while promoting virtual learning, they propose different design solutions. This study provides suggestions for striking a balance between technological advancement and safeguarding personal information, contributing to the ongoing discourse on privacy and e-learning.

Another study investigates how Learning Analytics (LA) methods can be utilized to forecast student performance in hybrid medical courses18. The authors examine student engagement, self-regulation, and academic achievement by analyzing the online activity data of 133 students enrolled in such a course to facilitate early intervention measures. The study employs various data sources and tools, including database queries and Moodle plugins, to gather data on activities such as logins, views, forum interactions, formative assessments, and communication patterns. Self-regulation is assessed through engagement indicators19. The results indicate a 63.5% accuracy in predicting final grades, a 53.9% identification rate for at-risk students, and an increase to 80.8% with a binary logistic model. Even with mid-course data, prediction accuracy remains at 42.3%, with student engagement and regular use of online resources serving as the primary predictors20. Learning Management Systems (LMS) enable real-time monitoring and provide educators with valuable data-driven insights. Research shows that there are 561 LMS platforms available globally for academic and educational purposes. In particular advanced Information and Communication Technologies, due to the Fourth Industrial Revolution including big data, cloud computing, artificial intelligence among others has influenced every aspect of life including education. Such changes have changed the paradigm of educational process and reinvigorated pedagogy with innovative approaches to the teaching practices grounded in today’s learning environment.

Thus, this work points to the applicability of data analytics-based methods, especially in cases like blended learning, for putting emphasis on struggling students and possibly helping them before they drift significantly off course. The authors suggested a number of criteria for choosing a secure browser for e-assessments with the requirements of the limited budget and the necessity to work with many students being taken into consideration21. A survey of scholarly works on technology for secure browsers and specific search given the project context showed that SEB met the requirements and was cheaper than other products with similar functionalities. There were also other applied parts of the work, including the semester examinations and questionnaires together with the students’ feedback.

To foster critical thinking and innovative skills in higher education, this study focuses on creating and assessing online learning systems22. Based on constructivist principles, the research utilizes collaborative approaches such as literature reviews, expert focus groups, and leveraging Moodle LMS tools. The system architecture prioritizes student-centered learning with features for interaction, collaboration, learning materials, presentation, motivation, and evaluation. A dedicated Moodle plug-in, "Inno Ed Tools," is implemented to gauge skill enhancement. Employing gamification techniques gradually, the system aims to enhance student engagement. Impacts of Open Learning Spaces reveal incredible enhancement of the skills, which supports the visual concept to re-establish online learning platforms. The study suggests the increase of the use of mobile technology in learning and more implementation of learner-centered methods for skills enhancement efficiently.

One of the elements within the assessment model developed since 2009–10 and based on the theory of formative assessment is feedback and learning objectives. Applies with interaction in a Mathematical Analysis course for multiple engineering students, this model uses the adaptive Moodle23. Some of them are Online Moodle Quizzes (MQ) which is applied for formative as well as for the summative sake, decided task portfolio; small group projects (SGP), guided practice as well as an optional test. Project contests and peer reviews are backed by the developmental APLICA_MATES social networking. This course shows more effectiveness than other courses; the students’ satisfaction and interaction also show the efficiency of the model in the learning and assessment process24. The QUIZ application was developed using the LeaP using LTI to integrate it with Moodle. This application enables educators to include miscellaneous components in a test such as HTML, JavaScript, and variables that can be constructed on the fly; thus, teachers and students experience a rich evaluation environment. This involves aspects in handling tries by students, automatic grading system based on JavaScript, and users’ role management systems. The developments of the application are questioning parameter generator and the solution Assistance Together with the explanation of correct answers. Additionally, the grading interface, which manages and calculates scores for student quiz attempts, has the potential to be expanded in the future to incorporate JavaScript integration and learning analytics. The study highlights the seamless connection between the application and Moodle, enhancing quiz design and assessment procedures for educational purposes.

Existing literature demonstrates various AI embedded LMS and analytics-based methodologies for personalized education, yet many gaps exist. While Bayesian networks and Learning Analytics (LA) have been used to model learner personalities in Learning Management Systems, they primarily focus on broad personality traits rather than instance-level learning challenges. Similarly, some projects like TeSLA enhance e-assessment but lacks adaptability for targeted, student-specific feedback. Furthermore, dynamic quiz applications integrated with Moodle improve assessment flexibility but do not facilitate real-time adaptive feedback. While prior studies propose innovative methodologies, they often fail to provide granular, instance-level feedback necessary for teachers to identify specific learning obstacles. Our study bridges this gap by introducing a robust adaptive feedback system within LMS, enabling educators to pinpoint individual learning challenges and enhance teaching effectiveness in outcome-based education settings.

Material and methods

Instance-level exploration methods are very important in analysis of predictions on the individual observations25. It enables the exploration of specific scenarios, the assessment of explanatory variables on predictions, revealing how the change in the variable values influence the predictions while showing the reasons affecting incorrect predictions. For example, while predicting the risk of potential heart attack, there may affect many factors like age, smoking or any other addictions, gender, a model might be used for a predictive score, along with the reasons and queries for this score of each patient. Moreover, many other examinations may be included like how manipulation in addictions influences the risk of heart attack. Additionally, instance level explorations also help in highlighting the factors that lead to discrepancies like heart attack experienced by a low-risk patient. Figure 1 depicts the instance level exploration with its different possible approaches. It shows the function of two variables or the prediction of a model since the area of interest is the prediction at a single point. The variable attributions are shown in part A where the additive effect of each variable depicts the difference of prediction of some specific observation from the average. In the meanwhile, part B shows the local behavior, i.e., the approximation of black box model around the observation. What-If analysis is shown by part C25. This shows the prediction of the model as a function of a single variable while keeping all the other explanatory variables fixed. The figure was generated using Python (v3.8.6) with Matplotlib (v3.7.5, https://matplotlib.org/) and NumPy (v1. 24.3, https://numpy.org/). The illustration visualizes a 3D function, where subplots (A, B, and C) represent different perspectives, including surface plots, wireframes, and contour curves. The function used is \(f\left({x}_{1}, {x}_{2}\right)= {e}^{\left\{-{x}_{1}^{2}- {x}_{2}^{2}\right\}}+ 2{e}^{\left\{-{\left({x}_{1}- 1\right)}^{2}- {\left({x}_{2}- 1\right)}^{2}\right\}}\) computed over a mesh grid of values from \(\left[-2,2\right]\) for \(\text{x}1\) and \(\text{x}2\).

A Depiction of Instance-Level Exploration (Fig. 5.1)25- Generated using Python.

KNN

An example of Instance level learning is KNN, the K-Nearest Neighbors algorithm, which determines the similarity by calculating the distance between the instances. It stores all the instances from the training data unlike explicitly building of a model26. Upon need of a prediction for a new instance, KNN explores the k nearest neighbors by using distance metrices. It then gives the class label on the majority vote out of these neighbors. The distance metrices help to form the decision boundaries which define the different regions of the query points.

The Eq. (1) shows the Euclidean distance, the most used distance measure. It evaluates the straight line between the query point and the point to be measured.

The above Eq. (2) shows another distance metric that measures absolute value between the two points. This is also known as block distance ass it shows the navigation from one point to another via city streets.

Minkowski Distance as shown in Eq. (3) is the generalized form of Euclidean and Manhattan Distance metrics.

x = y D = 0

x ≠ y D ≠ 1

The Hamming distance which typically deals with the Boolean or string vectors by exploring the points where the vectors do not have any matching as shown in Eq. (4).

In the KNN algorithm, the parameter k specifies the number of neighbors considered when classifying a particular query point. For example, with k = 1, the instance is classified based on the class of its closest neighbor. Selecting the value of k is crucial, as different values can lead to over-fitting or under-fitting. Lower k values may result in high variance but low bias, while higher k values may cause high bias but low variance. The optimal k depends largely on the input data; datasets with more outliers or noise typically benefit from higher k values. It’s generally recommended to choose an odd number for k to avoid ties in classification. Cross-validation techniques can help determine the best k value for your dataset. Firstly, the dataset was divided into 2 sections i.e., the features and the target value upon which we intend to give the outcomes or predictions following its splitting into testing and training dataset with test size of 0.2. For scaling the dataset into testing and training, we used standard scalar module to standardize the features by removing mean and scale the unit to variance. Standardization is a common requirement to many Machine learning estimator modules. The fit transform method was used to scale the training data set while transform method was used to scale testing dataset whereas all the data set was initialized on sc_x in standard scalar function. Following features are used for the creation of the K nearest neighbour.

-

1.

n_neighbours = 1

-

2.

P = 2

-

3.

Metric = Euclidian

Dataset acquisition and configuration

Dataset is always been an important step while working with artificial intelligence and predictive models27. We generated a dataset of software engineering students using a Python script aiming to get all the demographics of the students. The features in this dataset include gender, midterm and final scores (easy, medium, hard), total score, father’s education, location, transportation mode, accommodation type, and additional work status and whether the student cleared the course. Recognizing the limitations of simulated dataset, SMOTE was used to address class imbalance. The midterm and final term marks were split into three categories based on the complexity of the question i.e., simple, intermediate, and complex problems, to get more insights and tailor precise feedback for the student’s improvement. The acquiring of demographics of students was necessary to get the knowledge of the specific instance, influencing their learning. The dataset can be accessed via Zenodo30. In addition, we also used a real time dataset DS-0231 of students enrolled in the Faculty of Engineering and Faculty of Educational Sciences in 2019 that is available on Kaggle. It was gathered by the questionnaires and the school reports possessing 145 instances and 32 features. Key demographic features include age, sex, high school type, parental education and occupation, and accommodation type. The dataset also captures study behaviors such as attendance, note-taking, reading habits, and midterm/final exam preparation methods. Academic performance is measured through cumulative GPA and final course grades. Mainly this dataset is divided into four categories, i.e., demographic information, family background, study habits and academic behavior, and academic performance. Some of the insights of the dataset are as follows which suggests that individual demographic factors have minimal direct correlation with output grades in this dataset:

-

Age Distribution: Majority (48.3%) of students are aged 22–25, followed by 44.8% aged 18–21.

-

Gender: 60% of students are male, 40% female.

-

High School Type: 71% attended state high schools, while 17.2% attended private institutions.

-

Scholarships: 52.4% received a 50% scholarship, while only 0.7% had no scholarship.

-

Parental Education: Fathers’ education is slightly higher than mothers’ (31.7% of fathers completed high school vs. 26.9% of mothers).

-

Parental Occupation: 71% of mothers are housewives, while fathers are more distributed across employment types, with 26.2% self-employed.

Another dataset DS-0332 was used for external evaluation, which possess the data of 2392 high school students, mainly categorized in students’ demographics, study habits, parental involvement, extracurricular activities and their academic performance. Following Table 1 describes the configuration of training and testing samples of the datasets used in this study.

Feature selection

Feature selection is a very important step in machine learning to identify the most important features to optimize and enhance the model performance28. This can be achieved by various algorithms like genetic algorithms. This algorithm can be mathematically explained as the combinatorial optimization problem, where the objective function is the model’s generalization performance, represented by the error term on the selection features of the dataset29. Each instance includes 1 and 0, and with N features, the algorithm calculates 2N combinations. Genetic algorithms are inspired by natural genetics and biological evolution, that optimize the selection of features by creating the population of various solutions. It evaluates fitness and improves itself iteratively through selection, crossover and mutation. After that the fitness of each solution is ranked using the following Eq. (5).

where \({R}_{i}\) is the rank of the \({i}^{th}\) individual.

These iterations continue to improve until a threshold is reached while giving the assurance of evolution of population better fit for the environment.

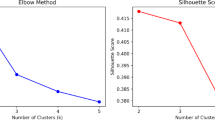

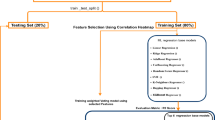

For the feature selection from our dataset, we applied Genetic algorithm to select only the relevant features and remove the odd one that have not any impact on the learning outcome of the learner as given in Fig. 2. Here are some of the Features that we implement in the Genetic Algorithm:

-

1.

Population Size = 50

-

2.

Num_generations = 50

-

3.

Mutation rate = 0.1

-

4.

Crossover_rate = 0.8

-

5.

Num_features_to_select = 10

Different techniques, i.e., Machine Learning algorithm like Random Forest, Decision tree, SVM and Capsule network are utilized to enhance the interpretability of the results and provide deeper insights into the students’ performance patterns. The Pseudocode for Capsule Network-Based Classification is shown in Table 2.

Results

The PCA plot of the dataset shows that the first two principal components capture potential variance in data as shown in Fig. 3. This depicts an insight into the class separability. There is also some overlap, but for each class, the distant cluster is clearly observable. This shows that the features have potential to distinguish between the classes. The purple colour represents the class 0, the intermediate level, class 1 with teal, depicting medium level, with yellow points representing complex problems as class 2. The model remained robust while giving an accuracy of 95%. This shows the validation of the model, the effective analysis capturing the underlying patterns in the data. Figure 4 shows the results and performance of different algorithms applied to identify deeper insights into the students’ performance patterns.

Capsule network

The architecture of the Capsule Network begins with an input layer for data that has 31 features, and then move to the reshaping layer i.e. followed by a Conv1D layer with 16 filters and a kernel size of 3, and a Max-Pooling 1D layer with a pool size of 2 to extract the first level features. It results in a flattened dense layer with 32 neurons and ReLU activation function; L2 is applied to all weights to minimize over-fitting, and dropout of 50 percent is applied. The flattened output is passed through the capsule layer with 4 capsules of with a dimension of 4 to handle the hierarchical relationship. They then flatten the output, apply another dropout layer, and a final dense output layer with soft-max activation to classify. To verify its performance, the Capsule Network attained an accuracy of 69.64. The Capsule Network achieved an accuracy of 69.64%, demonstrating its ability to effectively capture complex patterns in the data through its capsule layer, which is adept at recognizing hierarchical and spatial relationships. The balanced metric results indicate it’s consistency across different evaluation criteria.

Stacking classifier

Stacking incorporates predictions of multiple base classifiers (KNN, SVM, Decision Tree, Random Forest) employing a meta-model (Random Forest) to arrive at the final prediction. To train the meta-model, the predictions of the base models are used as input features. The Stacking Classifier has the highest accuracy of 76.79% among all the three models as shown in Fig. 4, thus showing how more than one classifier can be useful in achieving high accuracy.

Support vector machine

SVM defines the hyper-plane which divides classes with the highest margin. It employs kernels (e.g., linear, RBF) that enable it classify the non-linear separable data by converting it into a higher dimensional space. SVM implemented with true probability enables usage of cross-validation to provide the class probabilities. The SVM model is the best performer among individual models with the highest precision and F1 score, showing its strong ability to correctly classify and balance between precision and recall.

Random forest

Random Forest combines multiple decision trees to improve performance. Each tree is trained on a random subset of the data and features, and predictions are aggregated (e.g., by majority vote) to produce the final result. The Random Forest model demonstrates good precision and F1 score, but its accuracy and recall are slightly lower, indicating it produces more false negatives compared to SVM.

KNN

KNN classifies data based on the majority vote among the k nearest neighbors. It calculates the distance (e.g., Euclidean) between data points and determines the class of a sample based on the most common class among its neighbors. The model shows moderate performance across all metrics and is less effective compared to SVM and Random Forest.

Decision tree

A Decision Tree recursively splits the data based on feature values to maximize information gain or minimize impurity (e.g., Gini impurity). The tree structure represents decision rules leading to class labels. The Decision Tree model performs the worst among all, with the lowest accuracy, precision, recall, and F1 score, indicating it struggles to classify the data effectively.

Figure 5 shows the results of different algorithms trained on DS-01, DS-02 and DS-03. For DS-01, it represents the SVM model as the best performer among other models with the highest precision and F1 score, showing its strong ability to correctly classify and balance between precision and recall. For the dataset DS-02, Capsule Network has the lowest precision and F1 score, indicating its struggle with the classification task. KNN achieved the highest accuracy, precision, and F1 score among the models, suggesting it was the most effective. The Random Forest and Stacking Classifier models showed moderate performance, with their accuracy slightly lower than KNN. Overall, KNN emerged as the best-performing model based on the provided metrics. Stacking classifier gave highest accuracy for DS-03 by achieving the value of 97.52. Random Forest and Decision Tree also show strong results by achieving the accuracy of 95.79 and 93.81.

Discussion

To be on student centric approach, the need to identify the challenging students and determining the students’ progress is crucial. At first, we generated the dataset of students of BS Software Engineering, their demographics along with their results. We also utilized the dataset from Kaggle that resembles simulated data but different in classes. We applied different machine learning algorithms i.e., random forest, stacking, SVM, KNN, decision tree and capsule network. The results obtained from the experiments show significant insights into the performance of the students while classifying the categories i.e., intermediate, medium and complex, each with different levels of accuracy. The SVM model gave significant results in terms of the results, achieving an effective precision of 0.78. The confidence intervals for the accuracy of each model are also computed using standard error estimation resulting in 95% confidence. This provides a range within which the true accuracy is expected to fall, offering a measure of result reliability. Furthermore, paired t-tests are also performed on model predictions to compare the performance differences between classifiers statistically. The results indicate that some models exhibit statistically significant differences in accuracy (p < 0.05), while others do not, supporting a more robust interpretation of our findings. While there may be some potential biases in data selection and evaluation. The dataset primarily consists of students from a specific region (Cyprus), which may limit generalizability to other educational systems. Moreover, there may be inherent biases due to imbalances in student demographics (e.g., gender, socioeconomic background), which could affect model fairness. The use of SMOTE for handling class imbalance could introduce synthetic patterns not fully representative of real-world student data. The evaluation metrics such as accuracy and F1-score provide an overall performance view but may not fully capture model biases. Further fairness analysis (e.g., demographic parity, equalized odds) could help mitigate these concerns.

Figure 6 displays a violin plot of the four performance indicators i.e., Accuracy (Acc), Precision, Recall and F1-Score, after employing SVM model on the dataset namely as: DS-01. Each metric represented by a single violin – with different shape and color representing the distribution of the mentioned metric values. The plot represented is a combination of box plot with a kernel density plot, gives a clear picture of distribution of metric values. The width of each violin represents the density of data points, offering insight into the concentration of values within different ranges. A white dot is placed within each violin to indicate the mean value of the respective metric, facilitating the quick identification of the central tendency of the data distribution. The distribution of accuracy values is shown in the first violin, with the spread of values around the mean captured by the shape of the violin. The second violin represents the distribution of precision values, while the third violin visualizes the recall values, both with the width of the violin at different points showing the density of data points around those values. The fourth violin illustrates the distribution of F1-Score values, with the mean value prominently marked by the white dot. The plot is described as "Distribution of Metrics for SVM," indicating the context of the metrics displayed, with the x-axis representing the different metrics and the y-axis scale the range of values for each metric. This visualization aids help to interpret the performance of the SVM model across different evaluation metrics, highlighting not only the mean performance but also the variability and distribution of these performance measures.

The proposed study as shown in Fig. 7 poses significant importance for the teachers to know about their students by bringing insights into the learner’s ability to perceive the content being delivered. This system can be deployed seamlessly with Learning Management Systems and especially helpful for the institutions adopting Outcome Based Education. To assess and identify the influence of the demographics and that specific instance on academic performance, we analyzed their correlation with key performance indicators, including midterm and final scores, cumulative GPA, and expected graduation GPA. The study found multilingual education setting as the most influencing instance affecting the efficiency of the student e.g., student enrolled in linguistically diverse environment.

The proposed intelligent feedback system offers significant intervention in Learning Management Systems (LMS) and automated assessment platforms by enabling precise identification of learning gaps and specific instances affecting student performance. This capability allows educators to tailor interventions, improving engagement and academic success, particularly in institutions following outcome-based education models. Compared to existing approaches, which primarily focus on broad learning analytics, our system provides granular, instance-level insights, making it more effective for personalized learning. The proposed model can assist educators and policymakers in predicting student performance, enabling early interventions to support at-risk students. Moreover, the institutions can utilize the system to optimize academic support programs, scholarship allocations, and personalized learning strategies. The deployment of our model as a web application https://student-feedback.streamlit.app enables real-time prediction and analysis for practical use.

Future research will explore integrating advanced AI techniques, such as deep learning and explainable AI, to enhance trust and usability for educators. Additionally, ensuring cross-platform compatibility with various LMS and addressing data privacy concerns will be crucial for large-scale implementation. Further studies could also examine the system’s long-term impact on student learning outcomes and its adaptability across different educational contexts. The dataset will also be expanded to include a more diverse student population across different geographical regions and educational systems. Additional features such as psychological factors, socioeconomic status, and behavioral data will also be incorporated to improve predictive accuracy.

Conclusion

The proposed system aims to develop an advanced adaptive feedback system to meet the needs of learners of any level effectively. This will help the teachers to get an awareness of the students’ standings, and the gaps they lack behind, thus fostering a learning environment for the students and teachers where students especially will feel motivated and excel in their respective fields. The proposed study used instance level explorations like KNN, enabling identification of the instance that might have a potential impact on the students’ performance. A comparative analysis was also conducted by doing experiments on other algorithms like random forest, decision tree, stacking and capsule network. The study found multilingual education setting as the most influencing instance affecting the efficiency of the student. The proposed model can seamlessly integrate with the LMS like Moodle to have a better analysis of the students. In future, the scope of the study will be increased by the addition of the descriptive questions and collection of real time data of more students.

Data availability

This work is conducted using an open-access dataset and a self-collected dataset. The datasets can be accessed using the following links: https://zenodo.org/records/13850594; https://www.kaggle.com/datasets/csafrit2/higher-education-students-performance-evaluation; https://www.kaggle.com/datasets/rabieelkharoua/students-performance-dataset.

References

Campus, Q. Student’s Strengths and Weaknesses. https://medium.com/@joinquickcampus/students-strengths-and-weaknesses-94b2fd507fb3 (2024).

Alturki, U. & Aldraiweesh, A. Application of learning management system (LMS) during the COVID-19 pandemic: A sustainable acceptance model of the expansion technology approach. Sustainability 13, 10991 (2021).

Gamage, S. H., Ayres, J. R. & Behrend, M. B. A systematic review on trends in using Moodle for teaching and learning. Int. J. STEM Educ. 9, 1–24 (2022).

Schwab, K. The Fourth Industrial Revolution: what it means, how to respond. (2016).

Cavalcanti, A. P. et al. Automatic feedback in online learning environments: A systematic literature review. Comput. Educ. Artif. Intell. 2, 100027 (2021).

Huang, H.-C., Wang, T.-Y. & Hsieh, F.-M. Constructing an adaptive mobile learning system for the support of personalized learning and device adaptation. Procedia Soc. Behav. Sci. 64, 332–341 (2012).

Wongwatkit, C. 2019 21st International Conference on Advanced Communication Technology (ICACT). 563–568 (IEEE).

Martin, N., Priya, R. & Smarandache, F. Decision Making on Teachers’ Adaptation to Cybergogy in Saturated Interval-Valued Refined Neutrosophic Overset/Underset/Offset Environment (Infinite Study, 2020).

Quy, V. K., Thanh, B. T., Chehri, A., Linh, D. M. & Tuan, D. A. AI and digital transformation in higher education: Vision and approach of a specific university in Vietnam. Sustainability 15, 11093 (2023).

Ciolacu, M., Tehrani, A. F., Binder, L. & Svasta, P. M. 2018 IEEE 24th International Symposium for Design and Technology in Electronic Packaging (SIITME). 23–30 (IEEE).

Williams, D. & Whiting, A. Exploring the relationship between student engagement, Twitter, and a learning management system: A study of undergraduate marketing students. Int. J. Teach. Learn. High. Educ. 28, 302–313 (2016).

Finogeev, A. et al. Convergent approach to synthesis of the information learning environment for higher education. Educ. Inf. Technol. 25, 11–30 (2020).

Tlili, A. et al. Automatic modeling learner’s personality using learning analytics approach in an intelligent Moodle learning platform. Interact. Learn. Environ. 31, 2529–2543 (2023).

García-Gorrostieta, J. M., López-López, A. & González-López, S. Automatic argument assessment of final project reports of computer engineering students. Comput. Appl. Eng. Educ. 26, 1217–1226 (2018).

Hepburn, L.-A., Borthwick, M., Kerr, J. & Vasnev, A. A strategic framework for delivering ongoing feedback at scale. Assess. Eval. High. Educ. 47, 742–754 (2022).

Gutiérrez, I., Álvarez, V., Paule, M. P., Pérez-Pérez, J. R. & De Freitas, S. Adaptation in e-learning content specifications with dynamic sharable objects. Systems 4, 24 (2016).

Kiennert, C., De Vos, N., Knockaert, M. & Garcia-Alfaro, J. The influence of conception paradigms on data protection in e-learning platforms: A case study. IEEE Access 7, 64110–64119 (2019).

Saqr, M., Fors, U. & Tedre, M. How learning analytics can early predict under-achieving students in a blended medical education course. Med. Teach. 39, 757–767 (2017).

Paiva, R. C., Ferreira, M. S., Mendes, A. G. & Eusébio, A. M. Interactive and multimedia contents associated with a system for computer-aided assessment. J. Educ. Comput. Res. 52, 224–256 (2015).

Ramírez-Noriega, A., Jiménez, S., Inzunza, S. & Martínez-Ramírez, Y. ASHuR: Evaluation of the relation summary-content without human reference using ROUGE. Comput. Inform. 37, 509–532 (2018).

Adesemowo, A. K., Johannes, H., Goldstone, S. & Terblanche, K. The experience of introducing secure e-assessment in a South African university first-year foundational ICT networking course. Africa Educ. Rev. 13, 67–86 (2016).

Chootongchai, S. & Songkram, N. Design and development of SECI and moodle online learning systems to enhance thinking and innovation skills for higher education learners. Int. J. Emerg. Technol. Learn. 13, 154 (2018).

García López, A. & García Mazarío, F. The use of technology in a model of formative assessment. JOTSE J. Technol. Sci. Educ. 6, 91–103 (2016).

Schweighofer, J., Taraghi, B. & Ebner, M. Development of a Quiz-implementation of a (Self-) assessment tool and its integration in moodle. Int. J. Emerg. Technol. Learn. (iJET) 14, 141–151 (2019).

Burzykowski, P. B. a. T. Explanatory Model Analysis Explore, Explain, and Examine Predictive Models. With examples in R and Python. (2020).

IBM. https://www.ibm.com/topics/knn, https://www.ibm.com/topics/knn (2024).

Smarandache, F., Stanujkic, D. & Karabasevic, D. An approach for assessing the reliability of data contained in a single valued neutrosophic number. Collected Papers. Volume VI: On Neutrosophic Theory and Applications, 215 (2022).

Lei, S. 2012 international conference on computer science and electronics engineering. 355–358 (IEEE).

Khanmohammadi, S., Kizilkan, O. & Musharavati, F. Thermodynamic Analysis and Optimization of Geothermal Power Plants 279–291 (Elsevier, 2021).

Khan, Rafaqat, https://zenodo.org/records/13850594 (2024).

Csafrit, https://www.kaggle.com/datasets/csafrit2/higher-education-students-performance-evaluation (2021).

https://www.kaggle.com/datasets/rabieelkharoua/students-performance-dataset.

Acknowledgements

This work was funded by the National Natural Science Foundation of China NSFC62272419, U22A20102, Natural Science Foundation of Zhejiang Province ZJNSFLZ22F020010 and Zhejiang Normal University Research Fund YS304023947.

Author information

Authors and Affiliations

Contributions

H.M.Q., R.A.K. and M.R. conducted the experiments and wrote the main manuscript text and M.J.H. prepared the figures. M.S. and M.A.S. reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Declaration of generative AI and AI-assisted technologies in the writing process

During the preparation of this work, the author(s) used Grammarly in order to improve the manuscript grammatically. After using this tool/service, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Qadir, H.M., Khan, R.A., Rasool, M. et al. An adaptive feedback system for the improvement of learners. Sci Rep 15, 17242 (2025). https://doi.org/10.1038/s41598-025-01429-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-01429-w