Abstract

Sentiment analysis of content is highly essential for myriad natural language processing tasks. Particularly, as the movies are often created on the basis of public opinions, reviews of people have gained much attention, and analyzing sentiments has also become a crucial and demanding task. The unique characteristics of this data, such as the length of text, spelling mistakes, and abbreviations, necessitate a non-conventional method and additional stages for sentiment analysis in such an environment. To do so, this paper conducted two different word embedding models, namely GloVe and Word2Vec, for vectorization. In this study, Bidirectional Gated Recurrent Unit was employed, since there were two polarities, including positive and negative. Then, it was optimized by the Enhanced Human Evolutionary Optimization (EHEO) algorithm, hence improving the hyperparameters. The findings showed that using GloVe, the Bi-GRU/EHEO model achieved 97.26% for precision, 96.37% for recall, 97.42% for accuracy, and 96.30% for F1-score. With Word2Vec, the suggested model attained 98.54% for precision, 97.75% for recall, 97.54% for accuracy, and 97.63% for F1-score. These model were compared with other models like GRU that accomplished the precision, recall, accuracy, and F1-score values of 89.24, 90.14, 89.57, and 89.68 for Glove as well the values of 89.67, 90.18, 90.75, and 89.41 for Word2Vec; and Bi-GRU that accomplished the values of 90.13, 90.47, 90.71, and 90.30 for Glove, as well as the values of 90.31, 90.76, 90.67, and 90.53 for Word2Vec. The suggested sentiment analysis approaches demonstrated much potential to be used in real-world applications, like customer feedback evaluation, political opinion analysis, and social media sentiment analysis. By using these models’ high efficiency and accuracy, the approaches could have offered some practical solutions for diverse industries to forecast trends, enhance decision-making procedures, and examine textual data.

Similar content being viewed by others

Introduction

Metaheuristic optimization algorithms are recognized as effective tools for addressing optimization issues, particularly in cases where human-centered optimization approaches are unsuitable. Many of these algorithms are applied across various domains, such as groundwater resource1, waste management2, oral cancer detection3, economics, logistics, engineering, and artificial intelligence4, since optimization issues span all fields and typically involve solution spaces that can be nonlinear, high-dimensional, and multimodal5. Generally, optimization algorithms and neural network are used for different areas and tasks that other mean fall short in solving them6.

Dynamics of society has been significantly altered by the Internet and its associated web technologies7. Myriad platforms like Facebook and Twitter are widely used for the exchange of ideas, information sharing, trade and business promotion, running ideological and political ideological campaigns, and promoting services and products8. Social media is commonly examined from various points, such as gathering business insights to promote services and products, monitoring for malicious actions to identify and address cyber threats, and conducting sentiment analysis and identify polarity of the text.

The analysis of the meaning of these tweets from a scientific perspective is referred to as sentiment analysis9. Sentiment analysis is typically used to identify and classify the emotional tone of a given text. The aim is to determine if a specific document falls into a positive or negative category according to a standard classification10.

Opinion mining, also referred to as sentiment analysis, is a crucial form of text analysis that deals with the task of detection, extraction, and analysis of opinion-based text. Its goal is to identify negative and positive opinions, and to quantify the level of positivity or negativity that an entity (such as individuals, organizations, events, locations, products, topics, etc.) is viewed11. It is an area of research with significant possibility for various real-world practices, where the gathered opinion data are utilized to assist companies, organizations, or individuals in making informed decisions12. It has been represented by that conventional approaches fell short; for example, Naïve Bayes fell short in sentiment analysis13.

The issue of sentiment analysis has gained considerable importance due to the rise of social media platforms, where individuals explain their feelings and views in diverse and intricate ways. Conventional methods, like Support Vector Machines and Naïve Bayes, often fail to accurately categorize sentiments as they struggle to understand the details of natural language, including idiomatic phrases, meanings, and sarcasm shaped by context. The distinctive features of sentiment data, such as casual language and abbreviations, make the analysis even more challenging, highlighting the need for more advanced approaches capable of efficiently handling sequential data. As a result, there is an urgent demand for sophisticated techniques that could improve the robustness and reliability of sentiment classification in this complex area. Moreover, due to the fact that Bag-of-words (BoW) fall short in many cases, other word embedding techniques have been used more recently.

Embedding words involves representing them as numerical values or vectors in a matrix. This method creates similar vector representations for words, which have akin meanings. It is also known as word vectorization, where all the words are transformed into a vector for neural network input. The mapping procedure typically occurs in a low-dimensional region, although it can depend on the scope of the vocabulary.

Recurrent Neural Networks (RNNs) have been frequently utilized in natural language processing due to their efficient memory of network, allowing them to process information of context. GRU (Gated Recurrent Unit) and LSTM (Long Short-Term Memory) are common types of RNNs that are well-suited for classification task of sentiment analysis14,15,16. Moreover, R-CNN models can be utilized for instance segmentation17, and artificial intelligence has been even used in medical diagnosis field18. Furthermore, the subsequent paragraphs discuss various other studies.

Metaheuristic optimization algorithms serve as powerful instruments for overcoming intricate optimization problems in diverse fields19, such as health care20, artificial intelligence, engineering, and economics. The progress of the social media platforms and Internet has fundamentally changed how society interacts, allowing for sharing information and ideas while also aiding in business marketing and political campaigns. Within this framework, sentiment analysis is essential for grasping the emotional nuances of text, especially in social media, where users convey a wide range of opinions. Conventional approaches like Support Vector Machines and Naïve Bayes often find it challenging to grasp the complexities of natural language, which results in a need for more sophisticated methods that can accurately classify sentiments. Recent developments contain Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) networks, and word embedding techniques which improve the reliability and strength of sentiment categorization in this complex area.

Moreover,

The traditional sentiment analysis approaches have encountered several issues like handling informal text, often containing grammatical errors and slang, and detecting sarcasm that positive words are have negative sentiments. In addition, those models have problems with domain adjustment as a result of distinctions within context and vocabulary, have poor performance on datasets that are imbalanced, and miss long-range dependencies within text.

In this study, the Enhanced Human Evolutionary Optimization (EHEO) has been suggested as a novel optimizer that has been integrated with BiGRU. The evolutionary nature of the suggested algorithm can improve the capability of the model to avoid local optimum and converge to an optimum global solution. Dissimilar to traditional optimizers like Genetic Algorithm (GA) or Particle Swarm Optimization (PSO), this algorithm employs some exceptional attributes that have been inspired via procedure of human evolutionary that allow the algorithm to hit a good balance between exploitation and exploration. The balance can result in enhance accuracy and speed of convergence while training the model. Of course the use of optimization algorithm to improve deep learning is not a new concept, which has been discussed before1,2.

El-Kenawy et al.21 suggested a Greylag Goose optimizer that was inspired by the nature. The suggested algorithm (GGO) was in the category of swarm-based optimizer, taking inspiration from the Greylag Goose. This algorithm has been mentioned in another study, and all explanation relevant to it has been described. The suggested algorithm was validated via being utilized in 19 various datasets, which were derived from UCI machine learning approaches. Then, it was employed while solving diverse engineering functions. Diverse case studies have been solved by the use of the suggested optimizer as well. It was revealed by the outcomes that the suggested optimizer could perform superior to other optimizers and could deliver better accuracy in comparison with others. Moreover, the findings of the statistical tests, like one-way analysis of variance (ANOVA) and Wilcoxon’s rank-sum, represented the superiority of the suggested algorithm.

El-Kenawy et al.6 suggested FbOA (Football Optimization Algorithm) as an optimization on the basis of population, which was derived from techniques of football team. This optimizer has been developed to overcome complicated optimization issues featured via multiple local optima, nonlinearity, and high dimensionality. Moreover, the present work assessed the efficacy of the suggested algorithm by the use of CEC 2005 test suite with 30- and 100- dimensional optimization issues. The findings represented the suggested optimizer could perform superior to other advanced optimizers in terms of accuracy, robustness, and speed of convergence.

Kurniasari and Setyanto13 aimed to evaluate the classification accuracy of the sentiment analysis while employing neural networks and deep learning. Word2vec and Recurrent Neural Network (RNN) were employed in that study. This model was not used in any study for analyzing sentiments expressed in Indonesian, making its accuracy level uncertain. The research commenced by creating a sentiment analysis classification model, which was then examined through investigations. The study utilized two classifications, namely negative and positive, and the experiments were conducted with training data sets, as well as test data sets obtained from the Traveloka website. The results indicated that the model achieved an accuracy of approximately 91.9%.

Senthil Kumar and Malarvizhi22 offered a model that CNN (Convolutional Neural Network) and bi-directional LSTM (Long Short Term Memory) were combined. Here, bi-directional LSTM held sequential data for PoS tagging (Part-of-Speech), and CNN extracted the probable features. The model could achieve the accuracy rate of 98.6% using the suggested model, which could outperform other models.

Alamoudi and Alghamdi23 investigated the polarity of online reviews, comprising the rankings and the text of reviews. The reviews from Yelp for restaurants were analyzed to determine two types of sentiment, including positive and negative. They were also classified into three categories, including positive, negative, and neutral. Three different predictive models were also used, including transfer learning, deep learning, and machine learning models. Additionally, an unsupervised method was introduced for aspect-level classification of polarity, which was in accordance with similarity of semantic. This method allowed the suggested framework to take advantage of pre-trained language networks, such as GloVe, while eliminating myriad complexities pertinent to models of supervised learning. Several aspects, such as ambience, price, food, and service were categorized in accordance with their sentiment. In the end, a maximum accuracy of 98.30% was achieved using the ALBERT model. The suggested aspect extraction approach could gain the accuracy level of 83.04%.

Priyadarshini and Cotton24 offered a kind of neural network employing CNN (Convolutional Neural Networks), LSTM (Long Short-Term Memory), and grid search. The research examined fundamental algorithms, such as K-nearest neighbor, convolutional neural networks, LSTM–CNN, CNN–LSTM, and LSTM. These algorithms were assessed based on specificity, precision, F-1 score, accuracy, and sensitivity across several datasets. The findings indicated that the suggested model, which relied on optimization of hyperparameter, performed better than other baseline networks, achieving the accuracy level of 96%.

Gandhi et al.25 identified the tweets, and meaning was assigned into it. The feature work was combined with word2vec, stop words, and tweet words. It was also integrated with the deep learning approaches of Long Short-Term Memory and Convolution Neural Network. The algorithms could identify the pattern of counts of stop word with its technique. The models were applied and well trained for IMDB dataset which contained 50,000 reviews of movie. By processing a vast amount of Twitter data, sentimental tweets could be predicted and classified. The suggested method allowed for effective discrimination of experimentally gathered samples from actual-time surroundings, leading to improved system efficacy. The Deep Learning algorithms’ results focused on rating review tweets and reviews of movie with accuracy levels of 87.74% and 88.02%.

Ahmed et al.26 explored the advancements and potential future pathways in the realm of ML and DL for predicting generation of renewable energy. This paper evaluated various models that have been employed to forecast renewable energy. It discussed the issues, including the management of uncertainty and the unpredictability associated with output of renewable energy, availability of data, and the interpretability of models. In conclusion, this research underscored the significance of creating reliable and accurate forecasting networks for renewable energy to support the future shift towards sustainable energy sources and to allow for the combination of renewable energy sources into the electrical grid. Another review study was developed through El-kenawy et al.27 that entailed forecasting the spread of some illnesses like Zika via complex and large datasets. The review provided overview of the problem that stated potential of ML practices in developing the public health.

The motivation of the study is the groundbreaking method that utilizes a Bidirectional Gated Recurrent Unit (Bi-GRU), which has been optimized through the Enhanced Human Evolutionary Optimization (EHEO) algorithm to enhance the effectiveness of sentiment classification tasks. In this research, the authors have applied both GloVe and Word2 Vec models as the main techniques for word embedding, enabling sentences to be accurately represented as numerical vectors. This transformation is vital for allowing the neural network to process and analyze the textual data more effectively. Before inputting the data into the Bi-GRU model, a thorough data cleaning process was conducted. This procedure encompassed multiple essential steps, such as eliminating unnecessary characters, correcting slang terms that could obstruct comprehension, and removing stop words, which are common terms that do not add any meaning. Additionally, several phases of data preprocessing were conducted to improve the dataset’s quality. These phases included tokenization, which divides sentences into individual words or tokens; stemming, which converts words to their base form; and part-of-speech tagging, which categorizes each word grammatically. This optimization procedure is vital as it has a direct impact on the overall efficiency of the sentiment classification results.

Recent research emphasizes the complementary relationship between deep learning and metaheuristic optimization in the realm of sentiment analysis. For example1, illustrates how evolutionary optimizers can fine-tune hyperparameters in recurrent networks, whereas21 highlights their effectiveness in lowering computational expenses and enhancing generalization of model. These methods are consistent with the application of EHEO to improve the performance of Bi-GRU.

Instruments

The goal of this paper is to establish a new method for enhancing sentiment analysis of sentences by utilizing the Bi-GRU model optimized by the Enhanced Human Evolutionary Optimization (EHEO) algorithm. In the following section, first Human Evolutionary Optimization Algorithm (HEOA) will be explained, then enhanced form of it will be explained. This section provides a detailed discussion of the suggested model’s structure.

Word vectorization (Word embedding)

In the current stage, the network receives raw text input and breaks it down into individual words or tokens. The tokens are transformed into a numeric value vector. Pre-trained word embedding systems, such as GloVe and Word2 Vec, are employed to produce a matrix of word vectors. GloVe and Word2 Vec models are applied separately to assess the model’s effectiveness. The text of \(\:n\) words has been illustrated by \(\:T\) that equals \(\:[{w}_{1},\:{w}_{2},\:.\:.\:.\:,\:{w}_{n}]\). After that, the words are converted into vector of word with \(\:d\)dimensions. The input text has been expressed in the following way:

The length of the input text has to uniform the length, illustrated by \(\:\left(l\right)\), because they have various lengths. Moreover, its length has been amplified with the zero-padding technique. The text that has extended length in comparison with the previously determined length \(\:l\) gets shortened. On the other hand, the zero padding techniques gets incorporated into the length when the text owns a shorter length in comparison with \(\:l\). As a result, there can be seen a matrix with the identical dimension in each text. Each text that has the \(\:l\) dimension has been expressed in the following way:

Neural Network

Network architecture

In order to improve the reproducibility of the findings in this research, a detailed overview of the Bidirectional Gated Recurrent Unit (BiGRU) has been presented, which has been optimized via the Enhanced Human Evolutionary Optimization (EHEO) algorithm. The architecture begins with an input layer that takes sequences of word embeddings produced by pre-trained models like Word2 Vec and GloVe. After the input layer, a BiGRU layer has been conducted using 128 units, which enables bidirectional analysis of the sequential data, effectively taking contextual details from both subsequent and preceding states. To decrease the risk of overfitting, a dropout layer with a 0.5 rate has been incorporated. The output of the BiGRU layer gets inserted into a fully connected layer containing 64 neurons, which is then followed by an activation function of softmax to categorize the sentiment as either negative or positive. The model has been trained by the use of cross-entropy for the loss function and optimized by the Enhanced Human Evolutionary Optimization (EHEO) algorithm. Hyperparameters such as batch size, the number of epochs, and learning rate were meticulously optimized using the EHEO algorithm to attain the best performance [Table 1].

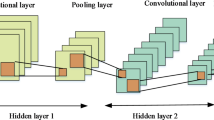

Background of recurrent neural network and gated recurrent unit

Recurrent Neural Network (RNN) is employed in various contexts for processing data, which are sequential28. If the input is considered \(\:(x={x}_{1},.\:.\:.,\:{x}_{T})\), then hidden vectors \(\:(h=\:{h}_{1},.\:.\:.,{h}_{T})\) and output vectors \(\:(y={y}_{1},.\:.\:.,\:{y}_{T})\) are gained via employing the subsequent formula:

where, the element-wise of sigmoid function and activation function has been indicated by \({\Phi}\). The weight matrix of input-to-hidden has been illustrated by \(\:\cup\:\), the weight matrix of hidden-to-hidden has been demonstrated by \(\:W\), the vector of hidden-to-bias has been displayed via \(\:b\), the weight matrix of hidden-to-output has been indicated via \(\:V\) in Eq. (3), and the vector output-to-bias has been depicted via \(\:c\). Moreover, conventional Recurrent Neural Network has been represented in Fig. 1.

Long-term dependences are highly complex to obtain, since the gradient bursts or vanishes. For this reason, myriad researchers endeavored to overcome this challenge. In 2014, Cho et al.29 introduced GRU (Gated Recurrent Unit networks), a simpler alternative to LSTM (Long Short-Term Memory) models. GRU was designed to address the issue of vanishing gradients in RNNs, thus making long-term learning more challenging.

The GRU framework consists of three components, namely reset, input, and update gates. The input gate determines how much of the new input will be integrated with the current hidden unit. The update gate determines the extent to which the previous hidden unit is maintained and how the new input is combined with the current hidden unit. The reset gate determines the fraction of the previous hidden unit to be discarded. The complete illustration of the GRU framework can be illustrated in Fig. 2.

Input gate

The input gate determines the amount of novel input to be integrated with the current hidden unit. It comprises the previous hidden unit and the present input, and outputs a value between zero and one. This value represents the extent to which the new data should be incorporated into the current hidden unit. A value of zero means that the novel data is not integrated with the current hidden element, while a value 1 declares that the new data is fully presented within the current hidden element. This process is calculated in the following way:

where, the current input has been displayed by \(\:{x}_{t}\), the bias and weight components have been, in turn, depicted by \(\:{b}_{i}\) and \(\:{W}_{i}\), the prior hidden unit has been demonstrated by \(\:{h}_{t-1}\), and the activation function of sigmoid has been displayed by \(\:sigmoid\). The output of this has been represented by \(\:{i}_{t}\) that has been employed for calculating the unit of hidden \(\:{\stackrel{\sim}{h}}_{t}\) that includes the novel input. this procedure has been represented subsequently:

where, the activation function of hyperbolic tangent has been displayed by \(\:tanh\).

Update gate

The update gate determines which part of the previous hidden unit should be kept and how much of the new input ought to be integrated with the present hidden element. The current input and the prior hidden element are regarded as input. Additionally, the update gate can output a value in the interval [0, 1], representing the proportion of the previous hidden unit to retain. A value of 0 means that no information from the previous hidden state should be kept, while a value of 1 means that all the information from the previous hidden unit should be kept. Mostly, the current has been mathematically computed in the subsequent manner:

where, the prior hidden element has been displayed by \(\:{h}_{t-1}\), the learnable components of this gate have been depicted via \(\:{b}_{z}\) and \(\:{W}_{z}\), the present input has been illustrated via \(\:{x}_{t}\), and the activation function of sigmoid has been represented by \(\:sigmoid\). Then, the output of the current gate has been employed for calculating the present hidden element \(\:{h}_{t}\)in the following manner:

In this gate, the model is allowed to enhance the hidden element in a selective manner according to the input that is within each time step, which can prevent each gradient from exploding or disappearing in vast structures.

Reset gate

The GRU has determined the number of prior hidden units that should be omitted. This gate ascertains the following hidden unit and the current input. Then, it produces a value in the interval [0, 1], indicating the proportion of following hidden unit to be removed. Here, 0 means the subsequent hidden units have been omitted, while 1 means the previous hidden unit is preserved. The calculation of this gate has been represented subsequently:

The output of this gate has been depicted by \(\:{r}_{t}\) that has been used for computing the enhanced prior hidden unit \(\:{\stackrel{\sim}{h}}_{t}\), comprising the novel input. This operation has been illustrated in the following:

where, the enhanced prior hidden unit \(\:{\stackrel{\sim}{h}}_{t-1}\) has been integrated with the hidden element \(\:{\stackrel{\sim}{h}}_{t}\) by employing the current gate. The model has been empowered by the current gate for omitting some sections of the prior hidden unit that is not pertinent to the current input. In addition, the data does not overfit. Commonly, the model is enabled to ascertain the forget update data within the time steps that is able to ease to long-term dependencies’ seizure in the consecutive data.

The gating mechanisms stop gradients from exploding or disappearing during extensive sequences, making it easier to train the network. Research has shown that GRUs are highly effective in various tasks involving sequential data and are commonly used in deep learning.

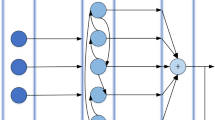

Bidirectional gated recurrent unit

In the current section, it is endeavored to represent the suggested method sentiment analysis and determine the polarity of sentences by the use of Bi-GRU. This network has been demonstrated in Fig. 3.

As RNN merely processes each sequence from front-to-back and do not have positional data, it can result in information loss. The Bi-RNN addresses this issue by incorporating RNNs to process information. The basic design of Bi-RNN involves splitting a standard RNN in two ways; one runs in the forward order, and the other in the reverse order (counterclockwise). Both RNNs have been linked to the identical layer of output, allowing the layer of output to receive the entire contextual data of the input sequences. When constructing a Bi-GRU model for sentiment analysis, the history of input needs to be simultaneously fed into the backward GRU and the forward GRU to capture the highest contextual data. The positional association between the object and the sentiment word is essential, as it determines the polarity a phrase. As a result, employing Bi-GRU can yield improved outcomes.

The input sentence has been demonstrated by \(\:\mathcal{D}=[{\mathcal{l}}_{1},\:{\mathcal{l}}_{2},\:{\mathcal{l}}_{m-1},{\mathcal{l}}_{m},\:{\mathcal{l}}_{5},\:{\mathcal{l}}_{6},\:.\:.\:.,\:{\mathcal{l}}_{n}]\), and the main purpose of the present study is to determine the polarity of sentence \(\:{\mathcal{l}}_{m}\) and to provide the model with superior position. The polarity and positional tokens get replaced with the sentences, which is represented in the following manner:

where, the words existing in each sentence has been illustrated by \(\:\mathcal{l}\), the positional token has been displayed via \(\:\beta\:\), and the sentence has been depicted via \(\:{\mathcal{l}}_{m}\).

The sentences and features have been inserted to the embedding layer, which has GloVe and Word2 Vec. These are two various word embedding approaches utilized for obtaining the vector illustration of terms. The main goal of training is utilizing statistical data for finding resemblances amid words on the basis of statistical data and co-occurrence matrix. Here, \(\:{t}_{i}\in\:{\mathbb{R}}^{k}\) and \(\:{x}_{i}\in\:{\mathbb{R}}^{k}\) be the k-dimensional vector of word in the aspect and sentence that correspond to the word \(\:i\) within the sentence. The aspect and sentence have been calculated in the subsequent manner:

where, the sentence’s highest length has been demonstrated via \(\:h\), the aspect’s highest length has been depicted via \(\:v\). The words existing in an aspect \(\:{t}_{i}\) and sentence \(\:{x}_{i}\) has been shown by embedding vectors \(\:\left({w}_{1},\:{w}_{2},\:.\:.\:.\:,\:{w}_{f}\right)\). The low-dimensional vectors of aspect and sentence have been passed through Bi-GRU to achieve hidden units.

The row-wise max value, the row-wise average, processes of hidden units of the aspect, processes of hidden units of the context sentence have been adjusted for guiding the production of weights of words. Subsequently, the process of subtraction has been conducted on them. Therefore, the hidden illustration of aspect and context has been achieved.

where, the output of the sentence and aspect Bi-GRU have been, in turn, demonstrated by \(\:\rho\:\) and \(\:\gamma\:\). Eventually, the output achieved from the subtraction’s eventual context sentence has been represented by \(\:\eta\:\), the aspect has been illustrated by \(\:\tau\:\) that concatenated row-wise like a vector \(\:\varphi\:\) of a softmax. A feed-forward layer has been employed to map the input vectors \(\:\varphi\:\) to the two target sentiment \(\:S\) classes in a forward direction. Within this layer, a dropout rate of 0.5 was specified.

where, \(\:b\) and \(\:W\) represent the bias and weight variables. Equation (22) computes the labeling probability with polarity of sentiment, selecting the label with the maximum possibility as the outcome. Analyzing the corresponding sentiment polarity involves all the processes described above.

Model training

When training the model, backpropagation is implemented using a cross-entropy loss function, and an L2 normalization function is incorporated into the model to prevent overfitting. The model has been optimized by minimization of the loss function for sentiment analysis, which is provided below.

where, the class index has been illustrated by \(\:j\), negative and positive, and the sentence index has been demonstrated by \(\:i\). The correct sentiment’s polarity of the aspect level within a sentence has been displayed by \(\:{y}_{i}^{j}\), the polarity of the classification at the aspect level has been displayed by \(\:{\widehat{y}}_{i}^{j}\), the weight of regularizers of L2 has been represented via \(\:\lambda\:\), and the parameters throughout training have been indicated by \(\:\theta\:\).

Enhanced human evolutionary optimization (EHEO) algorithm

Optimization refers to the practice of determining the most effective solution to a specific problem from a set of alternatives. Optimization methods can generally be categorized into two types, including stochastic intelligent algorithms and deterministic algorithms, based on their characteristics21. In this section, the Human Evolutionary Optimization Algorithm’s fundamental framework has been introduced, and the optimum procedures have been defined.

Definition of the HEOA and motivation

The motivation for improving the current algorithm stems from several important reasons. The remarkable human evolution’s adaptability is a primary factor that motivates this effort, as it enables the discovery of enhanced solutions in intricate environments.

The capability to handle intricate situations and discover the best solutions has led to the creation of HEOA, an algorithm that adeptly navigates through complex optimization scenarios. The concept of a Chaotic Universe offers a distinct viewpoint on the beginning and progression of the universe. On the basis of this theory, the universe did not have a specific starting point or a defined formation event, but instead, it gradually developed through self-governing phenomena and chaotic procedures. The current concept serves as a stimulus for Chaos Mapping by employing Logistic mapping as a method of initialization. Chaos theory is incorporated into the human evolutionary optimization algorithm, and adds an analytical and active component to the optimality procedure.

The stages of human evolution can be categorized into two main phases, including human exploration and human improvement. This categorization offers a simplified way to comprehend the intricate process of human evolution and provides a technical foundation for evaluating the advancement of human civilizations. In the initial stages of human exploration, individuals encounter novel environments and circumstances, requiring experimentation, adaptation, and the development of new ways of living. The acquisition of information and skills occurred gradually through exploration and stimulus. The step of exploration and alterations aligns with the initial exploration, and reflects the inquisitive nature of human evolution. The individual improvement phase marks the gradual formation of human communities, along with the diverse societies’ presence, social structures, and technologies. Education, opinions, and an understanding of the environment and the world are essential in human evolution. Through the collection and exchange of information, individuals are developed a set of knowledge about formation and themselves.

This algorithm categorizes individuals in society into four distinct personality types: leaders, losers, followers, and searchers. Leaders investigate important features of personal growth using existing information, while searchers concentrate on unknown territories. Once leaders are identified, followers discover and join them in their exploration.

Unsuccessful individuals are removed from the community, and the population is moved to areas that support personal progress. Various methods are needed to detect the most effective solution for each position. This algorithm can be improved by including the Levy flight and jumping approaches, which offer extra incentives for the search procedure. The Levy flight technique is frequently utilized in metaheuristic optimization algorithms to enhance their performance. This method improves the potential for exploration by incorporating random movements. The jumping method integrates techniques for picture solidity, which validates the diminishing state of the research. It employs a jumping process that is similar to compression for simplifying the exploration and improvement procedure. The current algorithm’s operation and structure have been maintained by various motivations, and enables it to influence key human development principles while efficiently addressing intricate optimization issues. The flowchart of this optimization algorithm has been visualized below [Fig. 4].

The diagram above represents the iterative procedure of hyperparameter optimization for the Bi-GRU model. For population initialization, chaotic mapping has been utilized that can generate different individual solutions. For fitness evaluation, the categorization efficacy of BiGRU selected the optimum hyperparameters. Considering role-based updates, each leader exploits areas with high-performance, and each searcher explores globally. Each follower replicates the leader, and the losers have been replaced to keep variety. Hyperparameters have been optimized through EHEO using cross-validation making sure generalizability. For instance, the dropout rate value of 0.5 could minimize overfitting while keeping flexibility of model.

In general, the algorithm starts with setting and determining the variables of the optimizer and initializing the population. After that, the fitness value of all individuals have been calculated. In this stage, if the termination condition has been met, the process ends. Otherwise, it continues to upgrade the population and enhance the variation using Eq. (37). In the following, novel candidate solutions have been generated, and the fitness value of the novel solutions have been calculated. If the novel solution is better, the process gets terminated. Otherwise, the process gets conducted again to achieve the best solution.

Initialization of population

This algorithm employs the Logistic Chaos Mapping for simulation of the chaotic phase at the start of human evolution by changing the population. The following formula represents the Logistic Chaos Mapping’s initialization equation:

where, the variable \(\:{z}_{i}\) demonstrates the \(\:{i}^{th}\) iteration’s number. The variable \(\:{z}_{i-1}\) indicates the prior iteration’s number. The chaotic value (\(\:{z}_{i}\)) has been mapped to the search space employing the equation below:

where, the variable \(\:u\) indicates the upper boundary, and the variable \(\:l\) demonstrates the lower boundary.

Exploration stage of human

After initialization of the individuals, the next stage involves evaluating the fitness of every solution. Throughout the experiments, the exploration phase has been identified as the highest 25% of iterations. In situations where individuals come across unfamiliar regions and possess restricted knowledge, they often rely on a standardized investigative approach while progressing personally. The mathematical framework is outlined as follows:

where, the variable \(\:\beta\:\) indicates the adaptive function. The variable \(\:t\) illustrates the iterations’ number. The variable \(\:dim\) demonstrates the dimensionality of the challenge or the quantity of the complicated variables. The variable \(\:{Z}_{i}^{t}\) demonstrates the present condition. The variable \(\:{Z}_{i}^{t+1}\) demonstrates the following enhancement. The variable \(\:{Z}_{finest}\) indicates the optimum cost function. The variable \(\:{Z}_{mean}^{t}\) represents the present average condition of the population. The variable \(\:floor\) indicates the rounding downwards’ operation. The variable \(\:Levy\) indicates the distribution of the Levy. The variable \(\:{f}_{jmp}\) indicates the coefficient of the jump. A stochastic variable that has been demonstrated by \(\:rand\) ranges from 0 to 1. The average location of the present algorithm is demonstrated by \(\:{Z}_{mean}^{t}\), and it can be computed as follows:

Adaptive function \(\beta\)

The adaptive function has the responsibility of improving variables on the basis of the present location and iterations, and it is demonstrated by \(\:\beta\:\). This function deals with the increasing difficulty of individually analyzing data and computing the characteristics of crowding using the subsequent formula:

The \(\:Levy\) distribution is used for simulation of the intricate procedure of acquiring information during the human search stage and personalized advancement. The equation below defines the \(\:Levy\) distribution, with a \(\:\gamma\:\) value of 1.5 designated:

Technique of jumping

Exploration involves a method of jumping while collecting and rearranging images to improve the scope of search locations. This technique changes the manner in which an individual’s eyes perceive an image, considering local and global structures, making it somewhat resistant to distortion produced by particular local data. The coefficient \(\:{f}_{jmp}\) indicates the jump amount and is calculated using the equation below:

Improvement phase of the candidate

The algorithm categorizes candidates into four distinct types based on their personal development stages: leaders, searchers, losers, and followers. Every type has its own method for finding solutions and collaborates to determine the most effective one. The particular approaches for each type are outlined as follows:

Leaders

Individuals with supplementary knowledge are usually located in optimal locations. The highest 40% of individuals displaying strong adaptability are selected to be leaders. These chosen leaders embark on missions to discover more favorable regions, using their current knowledge to impact the result. The present method for the exploration is computed utilizing the following formula:

where, a stochastic number that examines a standard distribution is indicated by \(\:Rn\). The function of \(\:ones(1,\:d)\) produces raw vector that contains \(\:d\) areas where every element equals 1. Moreover, every element is regular. A random amount that is indicated by \(\:R\) ranges between 0 and 1, and it represents the intricacy of the situations related to the leaders. The variable \(\:B\) demonstrates the evaluation quantity amount of the circumstance, and its value is equal to 60%. The leader will choose the most suitable search method depending on the intricacy of the situation in a specific condition. The variable \(\:\omega\:\) illustrates the ease element in attaining information, and it progressively reduces with increasing improvement. The following formula is utilized for calculating the variable \(\:\omega\:\):

Searchers

Searchers are essential for discovering unidentified regions in order to find the most optimal solution globally. During research, searchers are selected from the top 40–80% of the population according to the benchmark. The following formula defines the method of search used by searchers:

The variable \(\:{Z}_{worst}^{t}\) represents the worst individual’s location in the population’s \(\:{t}^{th}\) iteration.

Followers

The individuals simulate the methods of the adaptable leader while conducting the inquiry. Followers are selected from the upper 80–90% of the population according to their benchmark. The equation below illustrates the search method employed by followers:

The variable \(\:{Z}_{finest}^{t}\) illustrates the population’s optimal individual in each iteration (\(\:t\)). A random number \(\:Rd\) is in the range of 1 and \(\:d\).

Losers

The candidates that are poorly improved and stay in the population are labeled as losers. The candidates that are not developed and are not appropriate for the society will be removed, and the novel individuals will be introduced by simulation in the regions that are appropriate for personal development. The enhancement of the population has been computed using the following equation:

Enhanced human evolutionary optimization

The primary aim of creating the Human Evolutionary Optimization (HEO) is to enhance its capacity to tackle intricate optimization problems. Although, there are certain constraints associated with the HEO algorithm. The population’s initialization depends on a logistic map, which exhibits a low Lyapunov exponent and a restricted chaotic range. Consequently, the initial population lacks the ability to explore and demonstrate diversity.

The strategy for updating the population of the algorithm involves individual selection, which is inadequate for tackling large-scale multi-objective optimization problems. Moreover, it does not incorporate or utilize knowledge from previous populations during the offspring update process. Lastly, it does not recognize the advantages of integrating such information. The sine map is characterized by a Lyapunov exponent of approximately 1.57 and a stochastic range of [\(\:0,\:\pi\:\)]. The chaotic range has been determined by \(\:a\). The logistic map displays the Lyapunov exponent of about 0.69. The variable \(\:a\) has a chaotic range of 3.57 and 4. The following formula mathematically defines the sine map:

where, \(\:0\:\le\:\:{\upalpha\:}\:\le\:\:{\uppi\:}\) and \(\:\text{i}\:=\:\text{1,2},\dots\:,\text{N}\). It is possible to improve the population initialization equation by substituting the logistic map with the sine map as follows:

where, the variable \(\:{Z}_{finest}\) represents the population’s optimal individual. The variable \(\:Rn\) is a stochastic value, which range between 0 and 1.

The variable for the sine map, which is represented by \(a\), can generate an initial population that is more exploratory and diverse than the original Eq. (26). Equation (37) demonstrates the enhanced model of the population.

The population is updated by the algorithm iteration using the population-enhanced model as defined in Eq. (37) during the study. This model describes the procedure for updating the population. To enhance Eq. (37), a diversity factor can be potentially introduced to prevent the population from rapidly converging to a local optimum. A specific distribution, such as normal or uniform distribution, can represent the diversity of a random variable. To include the diversity element in the formula, the following modifications have been made:

where, the variable \(\:D\) indicates the diversity element. The variable \(\:D\) can be calculated using the following formula, once its value is in the range of \(\:-0.1\) to \(\:0.1\):

where, \(\:U(0,\:1)\) demonstrates a random number that simulates a uniform distribution that ranges between 0 and 1. Consequently, the variable \(\:D\) is between 0 and 0.2. The variable \(\:D\) randomly alters during every iteration of the algorithm. It is probable to enhance diversity within the population by combining the value of \(\:D\) into the formula for population renewal, as demonstrated in Eq. (40). The parameter settings of the suggested optimization algorithm have been represented in the Table 2.

Compared to conventional metaheuristics like Particle Swarm Optimization (PSO) and Genetic Algorithms (GA), HEOA presents several benefits. GA depends significantly on mutation and crossover, which can lead to slower convergence in intricate landscapes, whereas PSO frequently encounters difficulties with local optimum in multimodal functions. HEOA, by replicating human evolutionary manner through elite retention and adaptive methods, strikes a better balance between exploitation and exploration. These features make it more effective for optimization of deep learning parameters, like those utilized in BiGRU model.

Hyperparameter optimization

EHEO overcomes the shortcomings of GS and BO by utilizing a biologically inspired, population-based strategy that combines chaos theory with evolutionary dynamics. In contrast to BO that depends on probabilistic models and has difficulty with high-dimensional spaces or GS that methodically assesses previously defined integrations, EHEO adopts a flexible and evolving search approach. It incorporates chaotic initialization to guarantee a variety of initial points, steering clear of the issues associated with uniform or random sampling. This organized randomness enables EHEO to efficiently investigate sparse and dense areas of the solution space.

The EHEO population has been categorized into four roles, including Followers, Searchers, Leaders, and Losers, forming a self-organizing ecosystem. Leaders take advantage of areas with high fitness, while Searchers engage in global search. Followers imitate Leaders to facilitate directed local search, and Losers have been replaced to sustain variety. This hierarchical organization maintains a balance between exploitation and exploration, allowing EHEO to break avoid local optimum and efficiently navigate non-convex, intricate loss landscapes.

EHEO performs exceptionally well in high-dimensional environments, where Gaussian procedures in Bayesian Optimization become too resource-intensive, and the exhaustive nature of Grid Search gets impractical. Utilizing a group of 50 agents, EHEO spreads global search throughout various dimensions, enabling the simultaneous adjustment of several hyperparameters. This approach, coupled with chaotic updates, guarantees effective exploration of the solution space while avoiding the computational burdens associated with Bayesian Optimization or Grid Search.

EHEO also tackles the temporal dependencies that are characteristic of models such as Bi-GRU. In contrast to BO and GS, which consider hyperparameters as independent factors, EHEO’s bidirectional optimization connects hyperparameters (like hidden units and sequence lengths) with the structure of the model. This approach guarantees that hyperparameters adhere to temporal causality, resulting in improved performance.

Regarding computational efficiency, EHEO is superior to both BO and GS. It aims to avoid the exhaustive technique used by GS through selectively narrowing down low-potential individuals and concentrating efforts on regions with high fitness. In contrast to BO, EHEO’s use of chaotic updates and a population-based search minimizes unnecessary evaluations, leading to quicker convergence with fewer iterations.

In this research, the Enhanced Human Evolutionary Optimization (EHEO) algorithm has been utilized for the purpose of hyperparameter optimization, representing an innovative metaheuristic method inspired via processes of human evolution. EHEO incorporates chaotic initialization, adaptive mechanisms, and Levy Flight for exploration and exploitation to optimize hyperparameters effectively, steering clear of local optima and promoting global convergence. Although automated methods such as Hyperopt or Optuna have been not been used, EHEO presents a comparable or even superior option, especially in high-dimensional settings, by maintaining a balance between exploitation and exploration without the intensive computational demands of exhaustive explorations. To assess the reliability of the adjusted hyperparameters, a sensitivity analysis has been performed. This examination investigated the effect of changes in critical hyperparameters (such as quantity of hidden units, dropout rate, and learning rate) on the model’s efficacy, validating their effectiveness and their role in ensuring model generalization and stability. In future investigation, the aim is to investigate the incorporation of automatic optimization methods with EHEO to improve scalability and efficiency.

The EHEO tackles both overfitting and the speed of convergence in hyperparameter optimization via various strategies. To address overfitting, EHEO utilizes a strong exploitation and exploration approach, making sure that it avoids settling too early on subpar solutions that might result in overfitting. The algorithm incorporates regularization methods like early termination, which stops the optimization if the network’s efficacy on the test dataset begins to decline. Additionally, cross-validation has been included in the optimization method, enabling EHEO to assess networks on several splits of the dataset and thereby enhance generalization to new data. Regarding the speed of convergence, EHEO enhances the search procedure by preserving a varied collection of potential solutions, which enables the simultaneous assessment of various hyperparameter configurations. This population-based strategy accelerates convergence in comparison with conventional approaches, as it escapes local optima and effectively focuses on the optimum solution through evolutionary strategies. Moreover, EHEO modifies its search according to feedback from prior iterations, improving its solutions and accelerating the optimization procedure.

Computational efficiency and scalability

Recent research has shown that Bayesian Optimization can require significant computational resources as the quantity of evaluations rises, mainly because of the intricate process involved in generating individual solutions. On the other hand, Evolutionary Algorithms such as EHEO tend to have constant-time complexity for generating individual solutions, potentially resulting in enhanced time efficiency.

The computational efficiency of the suggested Bi-GRU/EHEO model has been influenced by the implementation of Bidirectional Gated Recurrent Units, which effectively managed sequential data while decreasing parameter complexity in comparison with traditional RNNs. This efficiency has been improved by the Enhanced Human Evolutionary Optimization (EHEO) algorithm, which optimized hyperparameters effectively without the need for exhaustive searches. Moreover, the preprocessing methods, including tokenization, stemming, and part-of-speech tagging, ensured that only pertinent and cleaned data is processed, leading to a reduction in computational load. Moreover, backpropagation has been used to avoid overfitting. In terms of scalability, the model’s effectiveness with the SST-2 dataset, comprising more than 70,000 sentences, illustrated its capability to manage large volumes of data. This scalability indicated the possibility of applying it to even more extensive datasets, like real-time social media streams, which made the proposed framework appropriate for industrial-scale and practical sentiment analysis applications. The pseudocode of this model has been presented below:

Hardware acceleration

In this research, TensorRT has been used to enhance the inference stage of the suggested sentiment analysis system. TensorRT, a powerful deep learning inference library, has been employed to significantly diminish latency and boost throughput by utilizing the computational capabilities of the NVIDIA GPU. This optimization proved especially advantageous for real-life uses, facilitating quick and effective deployment of the suggested model. By incorporating TensorRT, a balance has been accomplished between model performance and computational efficiency, making the suggested model approach highly applicable for real-world scenarios.

Methodology

Procedure

This research has been conducted to assess Bi-GRU/EHEO for classifying text sentiment employing SST-2 dataset. The dataset employed in the current research is binary model of SST-2 (Stanford Sentiment Treebank)30. There were 70,042 sentences in the suggested dataset31 that 30% and 70% of them were, in turn, allocated for testing and training equal to 21,013 and 49,029. It was endeavored to have a balanced number of positive and negative sentences in both testing and training groups. Therefore, there were 10,500 positive and 10,513 negative sentences in testing group. Also, there were 24,500 positive and 24,529 negative sentences for training group. In general, this dataset has been found to be common resource for sentiment analysis, which has been typically used for movie reviews contexts. This distribution reflects a fairly balanced dataset that is essential for training models properly without favoring one class over another. It includes sentences taken from movie reviews, offering a wide variety of expressions and contexts associated with sentiment. Each sentence has been labeled by multiple human judges, guaranteeing high-quality annotations and minimizing noise of the data. In summary, the SST-2 dataset’s organization and variety make it appropriate for developing strong sentiment analysis models. The following figure represents the example of SHAP summary plot [Fig. 5].

However, there are several potential biases relevant to SST-2 dataset. Utilizing the SST-2 dataset for sentiment analysis can introduce domain-specific biases because it is centered on reviews of movie. Because this dataset consists of sentences taken from reviews of films, it comprehends sentiment explanations that are and contextually associated with the industry of film. Phrases that are frequently found in reviews, like those related to the acting, direction, or plot, might not accurately represent the range of sentiment explanations existing within other fields such as reviews of product, news articles, or social media posts. Consequently, a network that has been trained exclusively using SST-2 might have difficulties accurately assessing sentiment in the alternative contexts, where diverse vocabulary, even nuances, or tone of sentiment might exist.

In order to address this issue, it has been considered highly essential to utilize the transfer learning or domain adaptation. One strategy is pre-training the network on more diverse dataset that can work in several areas, enabling the model to learn broader sentiment expressions and linguistic patterns. Adjusting the model by training it on datasets specific to a particular domain can improve its performance for the intended application. Moreover, incorporating external tools like specialized word embeddings or using data augmentation across different domains can boost the model’s capacity to generalize. These approaches help the model become more adaptable and resilient, enabling it to tackle a variety of sentiment analysis tasks beyond just movie reviews, which means using diverse datasets to employ diverse datasets. The heat map has been demonstrated below [Fig. 6].

Data cleaning

The initial step in the processing of the current design is data cleaning. During this stage, the extracted data has been transferred from the files and stored in the memory for cleaning objectives. This phase comprises three sub-steps.

Removal of unwanted characters

In the current stage, several redundant features, such as online links, web addresses, and URLs have been omitted from the script while employing modified regular words. Unnecessary characters, including special symbols, punctuation marks, and numbers, typically lack sentiment information. Eliminating these elements allows for a focus on words that express feelings or viewpoints.

Correction

In this stage, it is attempted to correct all abbreviated words and slangs that are employed in online chats. Previously defined dictionaries and maps have been employed to convert abbreviations or slangs into their abbreviated and original forms. For example, we would convert “IDK” to “I do not know” and “GJ” to “Good Job”. This process is beneficial for subsequent steps, since abbreviated words can be confusing for analyzing throughout sentiment analysis.

Removal of stop words

There are numerous typical words, such as an, above, under, and related to, which are omitted during this phase. Generally, NLP is not influenced by these words, so they are considered redundant. CMU’s Rainbow stopword list32 has been employed in the present study to find all the stopwords existing here.

Data preprocessing

There are several stages in this stage, like part-of-speech tagging, word stemming, tokenization. These stages have been thoroughly described in the following.

Tokenization

In this stage, the text is broken down into several meaningful tokens, symbols, phrases, and words. LingPipe Tokenizer from Apache Lucene package33 has been employed for tokenizing the text. It is worth noting that regular data frameworks have been modeled to preserve sentences and tokens of the texts. Tokenization breaks down raw text into smaller, more manageable components, arranging the data in a manner that facilitates processing and analysis by algorithms. This organized structure is crucial for machine learning models to extract valuable insights from textual information. Proper tokenization is essential for preserving the contextual connections among words. This plays a vital role in sentiment analysis, where grasping subtle differences and emotional signals in text is key for precise understanding.

Stemming

In this procedure, the inflected words get decreased to its base word. Here, porter-2 algorithm34 has been utilized to change the tokens into the stem format and save them in the original token and the keyword. Stemming is a technique used in natural language processing (NLP) for preprocessing text, which involves converting words into their base or root forms, referred to as the stem. This method is especially important in sentiment analysis, where the objective is to grasp the emotional tone of textual data. Generally, stemming simplifies the vocabularies by collapsing diverse inflected word forms into a single representative form. For instance, the words “worker” and “working” are reduced to “work”.

Part-of-speech tagging

It is a process, in which the words of text are tagged on the basis of the context and definition. To do so, Maxent Tagger from Stanford CoreNLP has been employed35. The keyword objects have part-of-speech tag, the token’s stem form, and original format related to those tokens. When, the model has been preprocessed, it has been moved to the subsequent block.

POS tagging categorizes vocabularies into grammatical classes like adjectives, verbs, adverbs, and nouns. This categorization aids in determining the role of each word in a sentence, which is essential for grasping sentiment. For example, adjectives frequently convey sentiment (for example “joyful” and “awful”), whereas verbs can represent actions that might also express emotional context (for example “love” and “hate”). POS tagging gives context to words that could be unclear. For instance, “water” can function as both a noun and a verb; therefore, identifying its part of speech aids in clarifying its intended meaning based on context. This understanding of context is crucial for properly interpreting sentiments conveyed in intricate sentences.

Neural network and algorithm

In this stage, all the data are inserted into the Bi-GRU, which has been optimized by EHEO. Then, it is turn to extract all the features and classify them. These stages will be explained in the following. The improved dataset has several various features when pre-processing phase is achieved. This procedure is able to thoroughly extract the features of the dataset. After that, the adjectives are utilized to illustrate the negative and positive sentiment of sentences, which are immensely valuable for determining the opinions of individuals. After extracting the adjectives, they get segregated. The words after and before the adjectives are removed. When the text is “an inspiring book”, the words “an” and “book” are eliminated. Then, those extracted tokens have been classified into either negative or positive sentiment.

Hardware setup

All experiments were performed using a high-performance computation system specifically designed to meet the computational requirements for testing and training the Bidirectional Gated Recurrent Unit (BiGRU) neural network. The system featured a cutting-edge NVIDIA GeForce RTX 3090 GPU, equipped with 24 GB of GDDR6X VRAM, allowing for efficacious parallel processing and greatly speeding up the training of the BiGRU model. The CPU utilized was an AMD Ryzen 9 5900X, which is a 12-core processor with 24 threads, operating at a base clock speed of 3.7 GHz and can increase by 4.8 GHz.

The setup featured 64 GB of DDR4 RAM, operating at 3200 MHz, providing effective management of big datasets and memory-intensive tasks throughout model optimization. Furthermore, a 1 TB NVMe SSD was utilized for storage, delivering rapid read/write speeds to efficiently load datasets, store model checkpoints, and enhance input/output processes. The current hardware setup was essential for the effective training and optimization the BiGRU model, particularly when paired with embedding techniques such as Word2 Vec and GloVe, which demand significant computational power to create meaningful input representations for sentiment analysis.

Cross validation

In this research, the dataset was divided into training and testing portions, with 70% designated for training purposes and 30% for testing. To assess the model’s effectiveness more thoroughly and to mitigate the risk of overfitting, 10-fold cross-validation has been utilized on the training data. Throughout the cross-validation process, the training data were randomly divided into 10 equal folds. In every iteration, one fold served as the test set, while the other 9 folds were employed to train the model. This procedure was repeated 10 times, ensuring that every data point in the training set was utilized for both training and testing.

The average efficiency across the 10 folds was calculated to give a reliable computation of the model’s ability to generalize. Essential metrics such as F1-score, recall, and precision were utilized to assess efficiency throughout the cross-validation procedure. After completion of cross-validation, the ultimate network, which has been trained on the 70% of the training, has been tested on the independent 30% test set for evaluating its efficacy using unfamiliar data.

Evaluation metrics

In the current stage, the outcomes of the models have been assessed. All the findings are evaluated in accordance with the most typically employed evaluation metrics, including precision, recall, accuracy, and F1-score. All these metrics have been computed subsequently:

To begin, if the tokens are accurately classified as positive, it is the case of True Positive that has been displayed by TP. It means that the real values and the classified values are both positive. Also, if the tokens are mistakenly categorized as positive, it is the case of False positive depicted via FP. In addition, if the tokens are mistakenly categorized as negative, it is the case of False Negative illustrated via FN. And if the tokens are accurately categorized as negative, it is the case of True Negative demonstrated via TN. Furthermore, it was previously mentioned this study is binary in a way that all the data and tokens are divided into 2 groups, including negative and positive polarity. Here, the value of one and zero have been, in turn, allocated to negative and positive. All the data have been represented in the Table 3.

Results and discussion

Execution time

The models were assessed based on their accuracies and execution times to comprehend the balance. The BiGRU/EHEO/Glove model required 1400 s for training, whereas BiGRU/EHEO/Word2 Vec took a bit longer at 1430 s. On the other hand, the GRU/Glove and GRU/Word2 Vec models were considerably quicker, finishing their training in 730 s and 740 s, respectively. This variation in execution time arises from BiGRU’s bidirectional processing, which effectively doubles the computational effort in comparison with the unidirectional method used by GRU.

Although GRU models operate at a faster pace, their reduced accuracy may fall short of the necessary performance standards for intricate tasks. Conversely, BiGRU models offer a notable increase in accuracy, albeit at a slower speed, which justifies the extra computational expense in situations where precision is crucial. In this research, achieving optimal performance is the initial priority while recognizing the trade-off in processing time. This equilibrium ensures that the model is efficient and practical as well for real-life uses, where attaining high accuracy is more usually more important than reducing computation time.

Validation of the enhanced human evolutionary optimizer (EHEO)

The current algorithm’s efficacy has been validated by evaluating established performance indicators from the CEC-BC-2017 exam case36. Here, the Enhanced Human Evolutionary Optimization Algorithm has been compared with other algorithms, Table 4, including Artificial Electric Field Algorithm (AEFA)37, Harris Hawks Optimization (HHO)38, White Shark Optimizer (WSO)39, Butterfly Optimization Algorithm (BOA)40, Equilibrium Optimizer (EO)41, and Artificial Ecosystem-based Optimization (AEO)42.

To ensure a meaningful comparison, it is customary to use standardized parameter settings for each algorithm. The highest quantity of epochs as well as the population size for all approaches are consistently set at 200 and 50, respectively. To ensure reliable and precise results, each method was executed individually for a total of 20 iterations across all benchmark functions. The research conducted employed functions with a range of solution spanning from − 100 to 100. Each function had ten dimensions. The results of the evaluation, which compared the proposed EHEO algorithm with several algorithms on the CEC-BC-2017 functions of test, are presented in Table 5.

Based on the data provided in the table, it is evident that the EHEO algorithm has the highest average fitness value for the most of functions. Moreover, the EHEO algorithm demonstrates the lowest standard deviation for most functions, indicating its stability and reliability. The EHEO algorithm performs better than other algorithms on hybrid, multimodal, and composition functions, which are typically more difficult and complex than unimodal functions. These findings emphasize the HHEO’s strong ability to exploit and explore, as well as its adaptability and resilience across various types of problems.

The other algorithms have specific advantages and disadvantages when evaluated on the functions. For example, the AEFA algorithm outperforms with unimodal functions but faces challenges with multimodal and composition functions. The information in Table 5 suggests that the EHEO is an effective optimization algorithm. It demonstrates the capability to accurately and efficiently solve a range of optimization problems. Additionally, the EHEO algorithm can be enhanced through adjusting parameters, integrating diverse learning operators, or exploring new domains and applications.

Simulation results

It was mentioned before that several different metrics were employed to indicate the efficacy and performance of the suggested method in comparison with other approaches. Of course, these metrics show that to what extent the model worked efficiently. The outcomes of the metrics, including F1-score, recall, precision, and accuracy, are obtained and calculated for the suggested Bi-GRU/EHEO, GRU, SVM, RF, and K-NN based on Word2 Vec and GloVe models. Initially, the intention is to illustrate the effect of preprocessing on the results. To do so, Table 6 illustrates the results achieved by the suggested model with and with preprocessing.

It is observed from the results illustrated in Table 6 that the preprocessing, for sure, great effect on the result achieved by the suggested model. Accordingly, it can be deduced that this generalizes to other models as well, ensuring the effect of the preprocessing on the findings. The amount of error can be calculated as the subtraction of 100 from the accuracy. Therefore, the models’ amount of error were, in turn, 20.32 and 2.58 without preprocessing and with without preprocessing while employing Word2vec. Moreover, the models’ amount of error were, in turn, 12.46 and 2.46 without preprocessing and with processing while employing GloVe. The results achieved by the suggested model with preprocessing and without preprocessing have been illustrated in Fig. 7 and Fig. 8.

Efficiency of the networks has been compared with each other in terms of their ability in accurately classifying the polarity of the texts. It was previously mentioned that the suggested network was conducted employing SST-2 dataset to compare the various models, including Bi-GRU/EHEO, GRU, SVM, RF, and K-NN. Table 7 illustrate the performance of the suggested model and other advanced models employing GloVe and Word2 Vec. The outcomes illustrated that GloVe and Word2 Vec, which acted as word embedding and word vectorization, were beneficial and could achieve great results.

Based on the results represented in Table 7, it can be thoroughly witnessed and the accuracy achieved by various word vector approaches are approximately the same, with greatly slight differences. In addition, it is worth noting this holds true for all models in a way that the results obtained by each model on various word vector approaches are nearly the same, ensuring the efficiency of both approaches. Error distribution of the models have been provided below [Fig. (9)].

It can be seen that the suggested model could outperform other traditional approaches while classifying sentiment analysis and determining the polarity of texts in both word embedding techniques while employing SST-2 dataset. The Bi-GRU/EHEO could achieve the values of 97.26, 95.37, 97.42, and 96.39 for precision, recall, accuracy, and F1-score, respectively while using GloVe approach. In addition, the network could achieve the values of 98.54, 96.75, 97.54, and 97.63 for precision, recall, accuracy, and F1-score, respectively while employing Word2 Vec. The result achieved by the suggested model and other approaches employing different word embedding approaches have been illustrated in Fig. 10.

The results have been displayed as recall, precision, accuracy, and F-measure. The recommended sentiment analysis model outperformed the other networks in terms of accuracy. This happened because the model cleaned the data by removing unwanted characters, correcting slangs and abbreviated forms. Moreover, several data preprocessing stages like tokenization, stemming and part-of-speech tagging have been conducted, ultimately improving accuracy. Additionally, during the sentiment analysis process, they helped in recognizing accurate polarities texts, leading to the improved efficacy. Of course, the purpose of the suggested model is to compare the results achieved by the diverse word embedding models by diverse network, that is the results achieved while utilizing Word2vec and diverse networks and the results achieved by the GloVe and those networks. Precision Recall Curve of the two suggested models has been represented below [Fig. (11)].

The suggested model’s performance with Word2vec showed slight variation compared to the results obtained with GloVe across the four metrics used. However, the variation was not that much. The results confirm that the suggested model performs remarkably well. The strong performance in accuracy, precision, recall, and F1-score indicates that the model’s efficiency is enhanced by word embedding, part of speech tagging, and detailed preprocessing stages.

Moreover, the Bi-GRU was tested using diverse algorithms in order to choose the best algorithm and show the suggested algorithm EHEO was the best among all. The table carries on comparing different Bi-GRU models that have been optimized with various algorithms, assessed on sentiment analysis assignments using Word2 Vec and GloVe embeddings, along with metrics like Precision, F1-score, Accuracy, and Recall.

Once it is compared with other models, Bi-GRU/AEO is in the second ranking. This model could achieve the accuracy values of 93.95% and 94.09% for Word2 Vec and GloVe, respectively, which could strike a balance between precision and recall. On the other hand, these findings were lower than the values of Bi-GRU/EHEO, especially regarding F1-score that could highlight better capability of the recall and precision in two diverse embeddings.

Other models, like Bi-GRU/HHO and Bi-GRU/BOA, demonstrated competitive but with less remarkable performance. Bi-GRU/HHO achieved an accuracy value of 93.97% when using Word2 Vec, highlighting its strength with context-based embeddings; however, its performance with GloVe is comparatively weaker, with an accuracy value of 91.98%. Bi-GRU/BOA had a similar performance, showing a little improved results with Word2 Vec, but its F1-scores were considerably lower than the results of Bi-GRU/EHEO. Generally, all the optimization algorithms were lower than the suggested one in terms of efficiency, which proved the fact on selection of EHEO. Moreover, a comparison was made between the Bi-GRU and Bi-GRU/EHEO to show the efficacy of the suggested algorithm. It was revealed by the results that the EHEO had high ability optimizing Bi-GRU.

In the end, the purpose is to compare the results achieved by the suggested model with another model called BERT43, which is so new and widely used these days. The results of the suggested model while using Word2vec and GloVe will be compared with BERT in the Table 8, and the error distribution of the models have been provided [Fig. (12)].

Considering the table provided above, it can be seen that BERT underperforms Bi-GRU/EHEO in terms of accuracy in both Word2vec and GloVe. The Bi-GRU/EHEO models attain remarkably high accuracy rates with values of 97.26% and 98.54% for GloVe and Word2vec, respectively. However, BERT achieved only 82%. This difference indicates that BERT has difficulty in accurately identifying sentiments in a considerable segment of the dataset when compared to the Bi-GRU/EHEO models, which are proficient at reducing errors. Moreover, another recent deep learning model, which has been used for comparison, is GCN/GAN that could achieve the accuracy value of 74.36; this model could achieve the worst accuracy value among the all.

Precision is another domain where BERT performs worse than the Bi-GRU/EHEO models. BERT only achieved a Precision value of 75%, which was significantly less than the Precision value of the Bi-GRU/EHEO/GloVe which was 96.37% and the value of the Bi-GRU/EHEO/Word2vec model which was 97.75%. This suggests that BERT is more prone to misclassifying samples as either positive or negative sentiments, which diminishes its ability to accurately identify the correct sentiment.

When it comes to Recall, the Bi-GRU/EHEO models with Word2vec and GloVe clearly surpass the others. BERT achieved a Recall rate of 71%, which was considerably lower than the 97.42% Recall of the Bi-GRU/EHEO/GloVe model and 97.54% of the Bi-GRU/EHEO/Word2vec model. This metric highlighted BERT’s difficulties in recognizing all relevant negative or positive sentiments within the dataset, leading to a less thorough classification performance in comparison with the Bi-GRU models.

The F1-score indicates the overall balance between Recall and Precision. In this aspect, BERT does not perform well. With an F1-score of 72%, it performed worse than Bi-GRU/EHEO models, which achieved scores of 96.30% for GloVe and 97.63% for Word2vec. This highlights BERT’s relatively weaker capability in accurately classifying sentiment throughout the dataset. Moreover, GCN/GAN could achieve the F1-score values of 72.79. Generally, BERT and GCN/GAN could not perform well in this regard.

In the following, the desire is to compare Bi-LSTM/EHEO with Bi-GRU/EHEO to evaluate the added value of EHEO in terms of performance. Table 9 can represent the difference between these two networks.

The Bidirectional Gated Recurrent Unit (Bi-GRU) and Bidirectional Long Short-Term Memory (Bi-LSTM) models were fine-tuned with the Enhanced Human Evolutionary Optimization (EHEO) algorithm that represented the distinctions in their performance when they were evaluated using GloVe and Word2 Vec embedding methods. The error distribution has been represented below [Fig. (13)].

Utilizing GloVe, Bi-GRU achieved an accuracy value of 97.26%, which is slightly superior to Bi-LSTM with the value of 97.21%. Nevertheless, Bi-LSTM had a somewhat better performance in precision, achieving 96.97% in comparison with Bi-GRU with the value of 96.37%. The recall values of both models were quite similar; Bi-GRU with the value of 97.42% and Bi-LSTM with the value of 97.55%. Regarding the F1-score, Bi-LSTM slightly surpassed Bi-GRU with the value of 97.26% against Bi-GRU with the value of 96.39%, representing a better overall balance between precision and recall for Bi-LSTM when GloVe embeddings are used.