Abstract

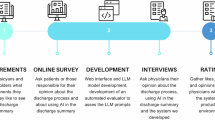

This study explores the use of open-source large language models (LLMs) to automate the generation of German discharge summaries from structured clinical data. The structured data used to produce the AI-generated summaries were manually extracted from electronic health records (EHRs) by a trained medical professional. By leveraging structured documentation collected for research and quality management, we aim to assist physicians with editable draft summaries. After de-identifying 25 patient datasets, we optimized the output of the LLaMA3 model through prompt engineering and evaluated it using error analysis as well as quantitative and qualitative metrics. The LLM-generated summaries were rated by physicians on comprehensiveness, conciseness, correctness, and fluency. Key results include an average of 2.84 errors per summary and low-to-moderate alignment between generated and physician-written summaries (ROUGE-1: 0.25, BERTScore: 0.64). Medical professionals rated the summaries 3.72 ± 0.89 for comprehensiveness and 3.88 ± 0.97 for factual correctness on a 5-point Likert scale; however, only 60% of the comprehensiveness ratings were good (4 or 5 out of 5). Despite overall informativeness, essential details, such as patient history, lifestyle factors, and intraoperative findings, were frequently omitted, reflecting gaps in summary completeness. While the LLaMA3 model captured much of the clinical information, complex cases and temporal reasoning presented challenges, leading to factual inaccuracies such as incorrect age calculations. Limitations include the small dataset, missing structured data elements, and the model's limited proficiency with German medical terminology, highlighting the need for larger, more complete datasets and potential model fine-tuning.
In conclusion, this work provides real-world methods, findings, experiences, insights, and descriptive results for a focused use case that may help guide future work on LLM-based generation of discharge summaries, particularly for German and possibly other non-English content.


Introduction

Clinical documentation serves a variety of purposes, each with specific requirements. Typically, these documentation efforts fall into two categories: structured documentation and free-text documentation. In this context, we consider structured documentation to be data stored with reference to a well-defined coding scheme, as opposed to a defined structure within a narrative text. Structured documentation is particularly important when patient data are analyzed across cases, for example in quality assurance, clinical research, or outcome benchmarking. Research relies on structured data for enrolling patients in clinical trials and for quantitative analysis of patient data in general. This is also true for quality assurance in clinical care1,2,3,4. Structured data are also more accessible to decision support systems, helping to improve patient care.

On the other hand, narrative documentation still plays a vital role in the hospital. At the end of a hospital stay, each patient receives a discharge summary describing the medical history that led to hospitalization, the diagnostics, the treatment, and the course of the inpatient stay. This important document is written by a physician for each discharged patient and forms the basis for post-hospital care1. It is typically shared with the patient, their general practitioner, and any relevant outpatient specialists. By facilitating communication between healthcare professionals, the discharge summary is central to ensuring continuity of care and guiding the patient's post-hospital treatment5,6,7. Given its importance, the discharge summary must be both complete and accurate.

Several studies have examined the impact and quality of structured (e.g., tabular) discharge summaries compared with narrative summaries, which may describe the individual case in more detail2,3. Our work is not intended to add to that discussion or to convince physicians to change their documentation practices. In our hospital, it is standard practice to provide a narrative discharge summary for each patient. In addition, separate structured documentation is maintained for research and quality assurance purposes. We explore how medical professionals could be supported in such a scenario.

In any case, writing discharge summaries is time-consuming for physicians. Arndt et al.8 found that physicians spend approximately 44% of their daily working hours on electronic health record (EHR) management, a significant portion of which is dedicated to documentation. In addition, timely completion of discharge summaries is crucial: these documents should ideally be available at discharge, which makes them time-critical. If summaries are sent later, the risk of rehospitalization and medication errors may increase9,10.

This dual requirement to provide both a comprehensive narrative discharge summary and structured documentation places an additional burden on documenting physicians. Automatically generating discharge summaries from structured EHR data could assist physicians and possibly save them time by reducing the need for manual writing, allowing them to focus more on patient care and clinical decision-making4. Several efforts have been made to automate the generation of discharge summaries using natural language generation techniques. Early methods focused on rule-based systems that leveraged text components and medical ontologies5. These systems relied on a narrowly defined, domain-specific rule base, which made it difficult to scale the approach to other areas of medicine. More recently, transformer-based models, such as Bidirectional Encoder Representations from Transformers (BERT) and Bidirectional and Auto-Regressive Transformers (BART), have been employed to classify relevant text and generate summaries based on EHR data3. Additionally, generative pre-trained transformer (GPT) models have shown promising results in automating this task5,6,7,8.

For instance, Aali et al. and Van Veen et al. benchmarked various LLMs on the task of summarizing free-text clinical documents, comparing models including LLaMA2-13B and GPT-4 against human-written texts both qualitatively and quantitatively9,10. Schwieger et al. followed a similar approach, generating psychiatric discharge summaries from electronic health records with ChatGPT-48. They reported that one of their most important evaluation questions for physicians was whether the generated summaries were ready for use without manual revision. In contrast, Ellershaw et al. used LLMs to generate discharge summaries based on clinical guidelines and physician notes. For their study, the authors used the Medical Information Mart for Intensive Care III (MIMIC-III) dataset and had their results evaluated by clinicians11,12.

Despite these advances, the effectiveness of LLMs varies considerably with the domain and language in which they are applied6. Many existing models are trained primarily on English datasets that often lack the specialized medical terminology needed in clinical settings. So far, there are only a few approaches for German medical data7.

Here, we explore approaches to generating German discharge summaries from structured German clinical data using open-source LLMs and describe the quality of the generated summaries. Unlike other studies, our goal is not to produce a discharge summary that can be sent without further review. Instead, we aim to support physicians with a draft text that they can revise during the important process of reflecting on the patient's course of treatment. This study explores whether structured data, originally collected for research and quality management, can be reused to generate clinical documentation. We used EHR data from 25 cases of pancreatic surgery at Heidelberg University Hospital to evaluate LLM-generated summaries in a non-English medical context. To this end, we applied prompt engineering (PE) techniques to create a tailored prompt, developed a structured data scheme, and generated discharge summaries. This study builds on prior work that established structured data collection in pancreatic surgery using an electronic data collection platform called "IMI-EDC". That platform was connected via an API to the established research patient registry of our pancreatic surgery center, creating a next-generation database with semi-automated data collection15. We addressed both the technical and clinical challenges of automated summary generation in this complex surgical field. We conducted a systematic error analysis as well as quantitative and qualitative evaluations of the generated summaries to assess their accuracy, completeness, fluency, and relevance compared with physician-written summaries, and to identify potential improvements and limitations of the automated approach. With this study, we aim to explore a potential pathway for integrating AI-driven documentation tools into clinical workflows where both structured and narrative documentation are required.

Results

Clinical data included

This study used data from 25 patients who had undergone pancreatic surgery at the European Pancreas Center of Heidelberg University Hospital and had been treated in either a standard inpatient unit or an intermediate care (IMC) unit. Patients requiring admission to the intensive care unit (ICU) were excluded in order to focus on standard cases, which make up the majority. The data were collected from four primary sources, a patient self-disclosure form and three inpatient documentation forms:

The patient self-disclosure form, completed by patients during their initial outpatient pancreatic consultation, contained general demographic information as well as the patient's medical and family history. The inpatient documentation consisted of three forms. The admission questionnaire served as a structured summary of relevant prior medical information, completed by the admitting physician. The intraoperative documentation is intended to capture details of the surgery directly from the operating surgeon. Finally, the course of inpatient treatment is intended to be recorded by the attending ward physician at discharge. At the time of the study, structured data collection using IMI-EDC had not been fully implemented at the Pancreas Center. We therefore collected data retrospectively from completed cases to investigate whether these data, originally collected for a different purpose, could be reused for automated clinical documentation. The inpatient documentation was extracted retrospectively from the patients' unstructured EHRs by a medical doctoral candidate with one year of clinical experience (PF). The EHR primarily contained free-text data, such as documentation of the clinical course, histology reports, and radiology reports. Some information, such as nursing documentation, was recorded only by hand and was available as scanned documents, which were considered if legible. The inpatient stays occurred between January 2023 and March 2024.

A detailed review of the original discharge summaries of these patients revealed that sections such as "Histologic Findings" and lists such as "Previous Diagnoses" had often been copied verbatim from existing documents. In contrast, the "Medical History and Findings" and "Therapy and Course" sections were largely written as running text by physicians, making them the primary focus of this study. A physician-authored discharge summary was available for comparison for each case.

We assessed the extent of information available in the abstracted EHR data compared with the physician-written discharge summaries by analyzing 10 randomly selected summaries. For the "Medical History and Findings" and "Therapy and Course" sections, 54% of the content in the physician-written summaries, measured as shared text volume (number of characters), was present in the structured dataset and could therefore be used by the LLM, whose goal was to mirror the structure and key elements of physician-authored summaries. This 54% overlap, based on character count, is only a rough approximation of the extent to which the structured data aligned with the physician-written summaries, because the metric does not distinguish clinically meaningful content from redundant or stylistic differences. The remaining 46% may include information that was undocumented, present only in scanned free-text forms, synthesized by the physician, or outside the abstraction scope.
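The text does not specify how the shared character volume was counted. One plausible reading, sketched below under that assumption, is that each physician-written section was split into manually annotated spans marked as present or absent in the structured dataset, and the character length of the present spans was divided by the total length. The `Span` type and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """A manually annotated span of a physician-written section (hypothetical format)."""
    text: str
    in_structured_data: bool  # True if this content is also present in the structured dataset


def shared_character_share(spans: list[Span]) -> float:
    """Fraction of characters belonging to spans whose content is in the structured dataset."""
    total = sum(len(s.text) for s in spans)
    if total == 0:
        return 0.0
    shared = sum(len(s.text) for s in spans if s.in_structured_data)
    return shared / total


if __name__ == "__main__":
    section = [
        Span("Admission for resection of a pancreatic head lesion. ", True),
        Span("The patient reports feeling well otherwise. ", False),
    ]
    print(f"{shared_character_share(section):.0%}")
```

Pooling characters across all ten summaries, rather than averaging per-summary percentages, gives longer summaries more weight; the text does not state which aggregation was used.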

Data scheme

To create an understandable data structure for the LLM from the available patient data, specific rules were developed in collaboration with an experienced pancreatic surgeon (TMP). Data fields were included only if the associated conditions were met:

1. Height and weight: The Body Mass Index (BMI) was calculated from the patient's height and weight. If the BMI fell outside the normal range, both height and weight were added to the dataset.
2. Alcohol consumption: This information was included if the patient had a diagnosis of pancreatitis or reported frequent alcohol consumption.
3. Tobacco consumption: Smoking data were included if the patient was a current smoker or had a history of smoking.
4. Drains: If the intraoperatively placed drain was removed within three days of surgery, this was recorded as "timely removal". If the drain remained in place longer, the actual removal date was noted.
5. Laboratory values: Laboratory results were included only if they fell outside the normal range.
6. Bowel movements: The number of daily bowel movements was included if it exceeded three or if the patient had diarrhea or fatty stools.
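The six inclusion rules translate naturally into small predicate functions applied during preprocessing. The sketch below is illustrative only: the function names are hypothetical, the BMI thresholds follow the WHO adult "normal weight" range because the study does not state its own, laboratory reference ranges are passed in by the caller, and counting day 3 as still "timely" is an assumption.

```python
from datetime import date

# Assumption: WHO adult "normal weight" range (18.5 to below 25 kg/m^2);
# the study does not state which BMI thresholds it used.
BMI_NORMAL_LOW, BMI_NORMAL_HIGH = 18.5, 25.0


def bmi(height_cm: float, weight_kg: float) -> float:
    """Body Mass Index in kg/m^2."""
    return weight_kg / (height_cm / 100) ** 2


def include_height_weight(height_cm: float, weight_kg: float) -> bool:
    """Rule 1: report height and weight only if the BMI is outside the normal range."""
    return not BMI_NORMAL_LOW <= bmi(height_cm, weight_kg) < BMI_NORMAL_HIGH


def include_alcohol(has_pancreatitis: bool, frequent_drinking: bool) -> bool:
    """Rule 2: report alcohol consumption for pancreatitis or frequent drinking."""
    return has_pancreatitis or frequent_drinking


def include_tobacco(current_smoker: bool, former_smoker: bool) -> bool:
    """Rule 3: report smoking for current or former smokers."""
    return current_smoker or former_smoker


def drain_entry(surgery: date, removal: date) -> str:
    """Rule 4: 'timely removal' if removed within three days, else the removal date."""
    if (removal - surgery).days <= 3:  # assumption: day 3 still counts as timely
        return "timely removal"
    return f"removed on {removal.isoformat()}"


def include_lab_value(value: float, ref_low: float, ref_high: float) -> bool:
    """Rule 5: include a laboratory value only if it lies outside its reference range."""
    return not ref_low <= value <= ref_high


def include_bowel_movements(per_day: int, diarrhea: bool, fatty_stools: bool) -> bool:
    """Rule 6: include if more than three per day, or if diarrhea or fatty stools occurred."""
    return per_day > 3 or diarrhea or fatty_stools
```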

In addition, we developed a new data structure organized into four main sections that reflect the data sources described above: "General Information", "Before Surgery", "During Surgery", and "Inpatient Stay". Organizing the data into these broad chronological categories was intended to improve its logical flow and comprehensibility. Table 1 shows an example dataset of one patient used to generate a discharge summary; both columns have the same content, with English on the left and German on the right.
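As a minimal sketch, assume the structured record is held as a nested dictionary keyed by the four section names and rendered as indented "key: value" lines in chronological section order before being placed in the prompt. The field names and the rendering format are illustrative assumptions; Table 1 shows the actual content.

```python
# The four sections in chronological order, as named in the text.
SECTIONS = ("General Information", "Before Surgery", "During Surgery", "Inpatient Stay")


def render_record(record: dict[str, dict[str, str]]) -> str:
    """Render a patient record as plain text, one section after another."""
    lines: list[str] = []
    for section in SECTIONS:
        fields = record.get(section, {})
        if not fields:
            continue  # skip empty sections instead of emitting a bare heading
        lines.append(f"{section}:")
        for key, value in fields.items():
            lines.append(f"  {key}: {value}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical fields; dictionary order does not matter because SECTIONS fixes the order.
    example = {
        "Inpatient Stay": {"Drain": "timely removal"},
        "General Information": {"Sex": "male"},
    }
    print(render_record(example))
```

Fixing the section order in one constant keeps every rendered record in the same chronological layout, regardless of the order in which fields were collected.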

Prompt engineering

Prompt Engineering (PE) is an iterative and exploratory process driven by both creativity and intuition8,9. The literature provides various patterns for structuring prompts, which are employed to enhance the performance of the LLM. Table 2 presents the different prompt patterns we experimented with, along with their corresponding outcomes. The column labeled “improvement” indicates whether any observable improvement in model performance was achieved for each pattern.

To evaluate improvements, we first selected a complex test case as a benchmark. The test patient was diagnosed with a resectable ductal adenocarcinoma of the pancreas (PDAC) and underwent a total pancreatectomy via open surgery. The patient was treated on the IMC unit and had a prolonged hospital stay of 18 days. The details of his case are presented in Table 1.

Next, NK prompted the LLM with the various engineered prompt patterns and compared the generated discharge summaries with the physician-written summary. Improvements were judged on two criteria: how closely the LLM-generated summary aligned with the physician's version, and its quality in terms of comprehensiveness, conciseness, fluency, and factual correctness. If an improvement was observed, the corresponding row in the "improvement" column was marked "Yes". For example, the 'Mind the grammar' prompt was evaluated by comparing the number of grammatical errors before and after the change, with fewer errors indicating improved fluency. The principle 'Content description of both paragraphs' was assessed by checking whether the revised summary better matched the structure of the original physician-written summary, that is, whether key information was included and placed in the appropriate paragraphs, indicating improved comprehensiveness and conciseness.

Two prompt patterns emerged as particularly useful: the template pattern and the role pattern. The template pattern ensured a consistent structure, with each generated summary containing identically formatted "Medical History and Findings" and "Therapy and Course" sections. The role pattern helped maintain an appropriate medical tone and factual accuracy and ensured that the summaries were written exclusively in German. The final prompt, incorporating the patterns that demonstrated measurable improvement, is provided in Table 3.
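The sketch below illustrates how a role pattern and a template pattern can be combined into one prompt. The wording is a hypothetical English paraphrase written for illustration; it is not the final prompt in Table 3, which targets German output and whose German section headings are not reproduced here.

```python
# Role pattern (hypothetical wording): fixes persona, tone, and output language.
ROLE = (
    "You are an experienced physician in pancreatic surgery at a German university hospital. "
    "Write exclusively in German, in the style of a German discharge summary."
)

# Template pattern (hypothetical wording): fixes the two required sections.
TEMPLATE = (
    "Use exactly the following structure and fill in the placeholders:\n"
    "Medical History and Findings:\n<one paragraph>\n\n"
    "Therapy and Course:\n<one paragraph>"
)


def build_prompt(structured_data: str) -> str:
    """Combine role, template, and the rendered patient data into a single prompt."""
    return "\n\n".join([ROLE, TEMPLATE, "Patient data:", structured_data])


if __name__ == "__main__":
    print(build_prompt("General Information:\n  Age: 67"))
```

Keeping the role and template as separate constants makes it easy to toggle one pattern at a time, which mirrors the pattern-by-pattern comparison in Table 2.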

Prompt Chaining In addition to standard PE principles, we explored the technique of prompt chaining, which is particularly useful for handling complex, detailed tasks by breaking them down into manageable subtasks. In prompt chaining, the output from one prompt is used as input for the next, creating a sequence of interconnected prompts. This method enhances the model’s controllability and improves the reliability of its responses13.

We applied this technique using the initial prompt developed in the previous step (see Table 3), dividing it into discrete sections that were sequentially adapted and executed. By chaining prompts in this way, the model was able to manage more complex cases with promising results, as demonstrated in the supplementary materials.
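A minimal sketch of prompt chaining: each step is a prompt template with a `{previous}` placeholder that is filled with the output of the prior step. `generate` stands in for whatever function sends a prompt to the model and returns its reply; how LLaMA3 was actually called is not described here, so that interface and the step wording are assumptions. Unlike a chat session, this sketch passes only the previous output forward, not the full conversation history.

```python
from typing import Callable

# Hypothetical interface: any function that sends a prompt to the model and returns its reply.
Generate = Callable[[str], str]


def run_chain(steps: list[str], initial_input: str, generate: Generate) -> list[str]:
    """Run prompt templates in sequence, feeding each output into the next via {previous}.

    All intermediate outputs are returned, because the final summary is not
    guaranteed to appear in the last reply and may need to be located afterwards.
    """
    outputs: list[str] = []
    previous = initial_input
    for template in steps:
        previous = generate(template.format(previous=previous))
        outputs.append(previous)
    return outputs


if __name__ == "__main__":
    steps = [
        "Write the section 'Medical History and Findings' from these data:\n{previous}",
        "Add the section 'Therapy and Course' to this draft:\n{previous}",
        "Correct grammatical errors in this draft and return the full summary:\n{previous}",
    ]

    def echo_model(prompt: str) -> str:
        """Stand-in for a real LLM call: returns the last line of the prompt."""
        return prompt.splitlines()[-1]

    print(run_chain(steps, "Age: 67", echo_model))
```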

However, a key challenge with prompt chaining was extracting the final discharge summary. The model tended to embed the summary in the ongoing conversation, making it difficult to isolate the final version: sometimes the most up-to-date summary appeared in the last response, and sometimes in an earlier one. Table 4 highlights the sections that needed to be extracted, shown in italics. To address this, we developed a pattern-matching approach that identifies summaries by structural features, such as the presence of specific headings ('History and Findings' and 'Therapy and Course'), the organization of text into paragraphs, and the absence of paragraph breaks in the main section. While not perfect, this approach extracts the discharge summary correctly in most cases.
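One way to implement such structural matching is sketched below: scan the responses from last to first and return the first one that contains both headings, each on its own line and each followed by a single paragraph without internal line breaks. The headings are a parameter because the German headings of the actual summaries are not reproduced here; the regular expression and the "latest match wins" policy are assumptions, not the authors' exact rules. Markdown-formatted headings (for example, wrapped in asterisks) would need an extended pattern.

```python
import re
from typing import Optional

DEFAULT_HEADINGS = ("History and Findings", "Therapy and Course")


def extract_summary(responses: list[str],
                    headings: tuple[str, str] = DEFAULT_HEADINGS) -> Optional[str]:
    """Return the most recent response section that looks like a complete summary."""
    first, second = (re.escape(h) for h in headings)
    pattern = re.compile(
        rf"^{first}:?[ \t]*\n"       # first heading on its own line
        rf"(?P<history>[^\n]+)\n"    # exactly one paragraph (no internal line breaks)
        rf"(?:[ \t]*\n)*"            # optional blank lines between the sections
        rf"^{second}:?[ \t]*\n"      # second heading on its own line
        rf"(?P<course>[^\n]+)",      # exactly one paragraph
        re.MULTILINE,
    )
    for response in reversed(responses):  # assumption: later responses are more up to date
        match = pattern.search(response)
        if match:
            return (f"{headings[0]}\n{match['history'].strip()}\n\n"
                    f"{headings[1]}\n{match['course'].strip()}")
    return None
```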

In-Context Learning (ICL) Another promising approach explored in this study was ICL, a lightweight method that integrates examples directly into the model's prompt at inference time. From such in-context examples, the model can pick up the structure, inputs, and labels of the dataset and apply this understanding to new test cases14.

Building on the prompt from Table 3, we experimented with ICL as an enhancement option. To generate discharge summaries, the prompt was extended using one- and two-shot examples, randomly selected from the dataset. Both complex and simple examples were tested. In a further variation, only the relevant sections of the discharge summaries (“Medical History and Findings,” “Therapy and Course”) were provided, omitting the corresponding structured dataset.

Across all scenarios, the model relied heavily on the provided examples, often reproducing their sentence structures verbatim, regardless of whether they were contextually appropriate. This resulted in discharge summaries containing considerably more errors than outputs generated without ICL. Additionally, due to hardware limitations, a maximum of two examples could be included per prompt.
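The one- and two-shot prompt construction described above can be sketched as follows: randomly sampled examples are inserted between the instruction and the test case, optionally without their structured input data (the variant that provided only the narrative sections), and the number of examples is capped at two to mirror the reported hardware limit. The function, its parameters, and the example layout are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional

MAX_SHOTS = 2  # mirrors the hardware-imposed limit reported in the text


@dataclass
class Example:
    structured_data: str
    summary: str  # the "Medical History and Findings" and "Therapy and Course" sections


def build_icl_prompt(instruction: str, pool: list[Example], test_data: str, k: int,
                     include_data: bool = True, seed: Optional[int] = None) -> str:
    """Insert k randomly selected examples between the instruction and the test case."""
    if not 0 <= k <= MAX_SHOTS:
        raise ValueError(f"k must be between 0 and {MAX_SHOTS}")
    rng = random.Random(seed)  # seeded for reproducible example selection
    parts = [instruction]
    for i, ex in enumerate(rng.sample(pool, k), start=1):
        if include_data:  # the summary-only variant omits the structured input
            parts.append(f"Example {i} - patient data:\n{ex.structured_data}")
        parts.append(f"Example {i} - discharge summary:\n{ex.summary}")
    parts.append(f"Patient data:\n{test_data}")
    return "\n\n".join(parts)
```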

Discharge summary generation

All 25 discharge summaries were generated successfully, each containing the required running text sections “Medical History and Findings” and “Therapy and Course”. The summaries were written entirely in German and, aside from a few minor details, were readily comprehensible. The generated summaries are displayed in the supplement.

The average time to generate each discharge summary was 112.89 ± 8.19 s, excluding the preprocessing of the structured dataset. The “Medical History and Findings” section had an average length of 698 ± 100 characters, while the “Therapy and Course” section was typically longer, averaging 886 ± 158 characters. In comparison, physician-written discharge summaries had average lengths of 652 ± 374 characters for the “Medical History and Findings” section and 2047 ± 1248 characters for the “Therapy and Course” section.

Error analysis

Frequent mistakes The generated discharge summaries were analyzed for errors, with an average of 2.84 ± 1.71 mistakes found per summary. Below are some frequent types of errors we observed and potential solutions.

1. Incorrect age calculation: In two-thirds of the summaries, the patient's age was correctly inferred from the date of birth and the discharge date; in the remaining third, it was incorrect. The incorrect ages were not merely one year off because the month and day had been ignored; they deviated by several years. When we explicitly provided the current date (corresponding to the discharge date) in the prompt, the model determined the difference in years but did not account for the exact month and day, leading to deviations of up to one year. Calculating the age during preprocessing and including it explicitly in the structured dataset could prevent these errors.

2. Date confusion: The model often used the date of first diagnosis as the date of first clinical presentation, which led to incorrect dates in half of the cases; the two dates were identical in only two cases. Since the date of first clinical presentation was not available in the structured data we collected, it should be added in future experiments. Notably, when we explicitly provided the correct date of first clinical presentation, the model used it correctly, suggesting that the issue arises from missing data.

3. Pathological bowel movements: In 10 cases in which the number of daily bowel movements was provided in the structured dataset, the summaries incorrectly described them as pathological, even when the number was normal (Summary 16). According to the rules in section "Data scheme", one to three bowel movements per day were considered physiological. The number of bowel movements mentioned did not affect this misclassification, suggesting that the model was not aware of this rule.

4. Imprecise or incomplete information: Some summaries contained vague or misleading details. For example, one summary inaccurately described the procedure as being stopped and replaced by another, when in fact it was converted to a different type of surgery (Summary 3). In another case, the summary mentioned adjustments to the patient's antihypertensive medication but failed to mention that the medication was later discontinued due to intolerance (Summary 16). Additionally, some summaries presented incorrect cause-and-effect relationships, for instance suggesting that the removal of a drain was part of the treatment for a lymphatic fistula, when it was merely a routine step in the patient's recovery (Summary 6). In total, we observed four such cases in three summaries.

5. Literal use of information from the structured dataset: The model often reproduced text directly from the structured dataset without adapting it to the context, for example when referring to the patient's gender. In multiple instances, it incorrectly used a male pronoun and descriptor for a female patient (Summary 2). A possible solution would be to adapt the wording during preprocessing so that the text reflects the patient's gender.

6. Grammatical errors: Various grammatical errors were identified throughout the summaries, including incorrect grammatical cases such as wrong article usage (Summary 3), improper verb conjugation (Summary 13), and uncommon or awkward phrasing that appears to be translated literally from English and is not typical of German medical language (Summary 11).

7. Spelling errors: Some summaries contained spelling mistakes, such as misspelled medical terms (Summary 2), even though the correct spelling was available in the structured dataset. These errors are likely caused by inconsistencies in the model's training data.

8. Hallucinations: The model occasionally generated false information. For instance, one summary mentioned delayed patient mobilization, whereas the structured dataset indicated timely mobilization (Summary 3). In another case, nausea was described as decreasing from day 3, although the structured dataset showed that it persisted from day 1 onward (Summary 3). In total, we observed five such cases in four summaries.
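For the age-calculation errors in item 1, the proposed remedy of computing the age during preprocessing takes only a few lines of standard Python. The sketch below computes completed years between the date of birth and the discharge date, subtracting one year when the birthday has not yet been reached in the discharge year; the function name is hypothetical.

```python
from datetime import date


def age_at(birth: date, reference: date) -> int:
    """Completed years of age on `reference` (for example, the discharge date)."""
    # Subtract one year if the birthday (month, day) has not yet occurred in the reference year.
    before_birthday = (reference.month, reference.day) < (birth.month, birth.day)
    return reference.year - birth.year - int(before_birthday)


if __name__ == "__main__":
    print(age_at(date(1956, 5, 20), date(2023, 5, 19)))
```

The resulting integer could then be written into the "General Information" section as an explicit field, so the model no longer has to perform date arithmetic itself.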

Missing Information We analyzed details that had been present in the structured dataset but were either partially or entirely omitted from the generated discharge summaries. Our analysis aimed to highlight the most commonly missed information and assess the completeness of the summaries.

In the “General Information” section, key details like the patient’s height and weight were consistently absent from the generated discharge summaries, despite being available in the structured dataset. Previous illnesses were included in fewer than half of the cases. Family history was fully or partially included in only 4 cases and omitted in 10. Similarly, information about the patient’s smoking and alcohol consumption habits was missing in approximately three-quarters of the summaries, even when available in the structured dataset.

In the “Before Surgery” section, histological findings were included in only one-third of the summaries, reflecting a significant gap in capturing important preoperative details.

For the “During Surgery” section, intraoperative assessments, such as the condition of the pancreatic parenchyma, were included in only 25% of cases. However, other intraoperative details, such as blood loss and whether a transfusion was required, were at least partially included in two-thirds of the summaries.

In the “Inpatient Stay” section, the transfer of patients from the operating room to recovery, and subsequently to the normal ward or IMC, was included in about two-thirds of the summaries.

Overall, no consistent pattern emerged regarding which information the LLM included and which it omitted. Determining the clinical relevance of each missing item, and whether it should always be included in discharge summaries, requires further evaluation by experienced clinicians.

Quantitative evaluation

To compare the LLM-generated discharge summaries with the physician-written summaries, we used two widely recognized metrics: the Recall-Oriented Understudy for Gisting Evaluation (ROUGE) score and BERTScore.

ROUGE is a standard metric for evaluating summarization; it measures lexical similarity by comparing n-grams and word sequences between the generated text and a human-written reference summary15. Specifically, we used ROUGE-1 (unigrams), ROUGE-2 (bigrams), and ROUGE-L (longest common subsequence). We also used BERTScore, a semantic metric that compares contextual embeddings of the tokens in the generated and reference texts to estimate how similar their meanings are.

Table 5 presents the average scores and standard deviations for all calculated metrics:

ROUGE-1 and ROUGE-L indicated that around 25% of the words or word sequences in the generated summaries matched the reference summaries. ROUGE-2 (0.06 ± 0.03) reflected lower bigram overlap, suggesting differences in sentence structure and word combinations.

The BERTScore of 0.64 showed moderate semantic similarity.
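To make the n-gram and subsequence comparisons behind ROUGE-1, ROUGE-2, and ROUGE-L concrete, the sketch below computes their F1 variants from scratch with simple lowercase word tokenization. It is a simplified illustration: the text does not state which ROUGE implementation produced Table 5, and established packages differ in tokenization and stemming (particularly for German), so values from this sketch are not directly comparable.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; the Unicode-aware word class also covers umlauts and ß."""
    return re.findall(r"\w+", text.lower())


def f1(overlap: int, n_candidate: int, n_reference: int) -> float:
    """Harmonic mean of precision (overlap/candidate) and recall (overlap/reference)."""
    if overlap == 0:
        return 0.0
    precision, recall = overlap / n_candidate, overlap / n_reference
    return 2 * precision * recall / (precision + recall)


def rouge_n(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N F1 from clipped n-gram count overlap."""
    def ngrams(tokens: list[str]) -> Counter:
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    cand, ref = ngrams(tokenize(candidate)), ngrams(tokenize(reference))
    overlap = sum((cand & ref).values())  # & keeps the minimum count per n-gram
    return f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence (dynamic programming, two rows)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> float:
    """ROUGE-L F1 based on the LCS of the two token sequences."""
    cand, ref = tokenize(candidate), tokenize(reference)
    return f1(lcs_length(cand, ref), len(cand), len(ref))
```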

Qualitative evaluation

A qualitative evaluation by clinicians and medical students provided initial feedback from medical professionals. Five individuals participated in the evaluation, using a questionnaire adapted from Aali et al.23 and translated into German. Each participant rated five summaries on four criteria (comprehensiveness, conciseness, fluency, and factual correctness) using a 5-point Likert scale from 1 (very poor) to 5 (very good). For each case, the evaluators received the LLM-generated summary and the corresponding structured input data used to generate it. Figure 1 displays the ratings on each criterion for the five included summaries. Summaries 1, 2, and 4 performed slightly better than summaries 3 and 5. Importantly, none of the summaries received a score of 1 (very poor) on any criterion. Notably, summary 3 scored approximately one point lower than the others on comprehensiveness, indicating that participants found it less detailed or missing key information.

Table 6 displays the mean and standard deviation for each criterion across all summaries. The average scores ranged from 3.72 to 3.96, with factual correctness and fluency scoring highest. However, for some criteria, the mean minus one standard deviation fell below 3. The percentage of ratings classified as "good" (4 or 5) was 60% for comprehensiveness, 72% for conciseness, 80% for factual correctness, and 68% for fluency.

The findings indicate that, while AI-generated summaries have potential, certain limitations remain. Specifically, the model occasionally oversimplifies complex clinical cases and shows substantial problems with completeness, likely due to missing or insufficient input data and possibly related to the same mechanisms that drive oversimplification.
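The descriptive statistics reported in Table 6 (mean, standard deviation, and share of "good" ratings of 4 or 5) can be computed per criterion as sketched below. The ratings in the example are made up for illustration, and the use of the sample standard deviation is an assumption, since the text does not say which variant was used.

```python
from statistics import mean, stdev


def describe(ratings: list[int]) -> tuple[float, float, float]:
    """Mean, sample standard deviation, and share of ratings >= 4 on a 1-5 Likert scale."""
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    good_share = sum(r >= 4 for r in ratings) / len(ratings)
    return mean(ratings), stdev(ratings), good_share


if __name__ == "__main__":
    # Hypothetical ratings for one criterion: 5 evaluators x 5 summaries = 25 ratings.
    ratings = [4, 3, 5, 4, 2] * 5
    m, sd, good = describe(ratings)
    print(f"{m:.2f} ± {sd:.2f}, rated good: {good:.0%}")
```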

Discussion

This study presents several new findings on the application of LLMs for generating German-language discharge summaries from structured datasets. It is among the first to assess how well LLMs capture clinically relevant information in German, and it shows that summaries generated by the LLaMA3 model can be a first step toward matching physician-written summaries in both structure and content. Eventually, the secondary use of structured data for automated discharge letter writing could add value for clinicians. The data were collected using the modern data capture platform IMI-EDC, which also serves research purposes.

Our results suggest that structuring the input data into broad chronological categories and applying specific inclusion rules may help organize the summary content. However, complex cases, such as intermediate care (IMC) patients, remain challenging for the model. Additionally, PE and prompt chaining seem to help improve summary quality, albeit at the cost of considerable processing time.

Compared with the literature, our study highlights similar challenges in data completeness and consistency for LLM-generated summaries. About 46% of the content typically found in physician-written summaries, measured as shared text volume, was missing from the structured dataset. This echoes earlier research suggesting that some clinically relevant information resides only in physicians' notes or in unstructured data sources outside the EHR16. It is also consistent with our qualitative evaluation, in which only 60% of the comprehensiveness ratings were "good".

Our study supports previous findings on the limitations and potential of PE and in-context learning (ICL) for improving LLM performance in complex data-to-text tasks. For instance, although ICL has improved model performance on specific tasks, our findings echo Reynolds and McDonell's17 observation that examples do not always improve model outputs, particularly for complex and nuanced tasks such as clinical documentation. Overall, we judged iterative prompt engineering and prompt chaining to be more fruitful than ICL for generating higher-quality summaries.

The model’s settings were tuned to optimize performance. As recommended in the literature18,19,20, a low temperature was used to reduce hallucinations and make the output more consistent. This resulted in fewer factual inaccuracies. However, slightly increasing the temperature improved fluency and readability, which suggests that this parameter should be adjusted to the specific use case. Careful testing of such parameters remains essential.
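
The effect of the temperature setting can be illustrated with a small sketch. It assumes standard temperature scaling, in which logits are divided by the temperature before the softmax. Under that assumption, a value below 1 concentrates probability on the most likely next token, while values above 1 spread probability across alternatives. The logits below are invented for illustration and do not come from LLaMA3.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn logits into next-token probabilities after dividing them by `temperature`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


toy_logits = [2.0, 1.0, 0.5]  # invented scores for three candidate tokens
for t in (0.2, 1.0, 1.5):
    probs = softmax_with_temperature(toy_logits, t)
    print(f"temperature {t}: probability of top token = {probs[0]:.3f}")
```

In Hugging Face Transformers, `temperature` is passed to `generate` and only takes effect when sampling is enabled (`do_sample=True`). With greedy decoding, the most likely token is always chosen.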

Some errors made by the model only marginally affect the comprehensibility of the generated discharge summaries. These include copying information literally from the structured dataset, grammatical errors, and spelling errors. They most likely stem from the model’s limited proficiency in German and in medical terminology.

A more significant challenge is the incorrect age calculation observed in one-third of the cases. As noted by Tan et al.21, many LLMs struggle with temporal reasoning. In our analysis, LLaMA3 accurately computed the difference between two given dates as a standalone task. It failed, however, to apply this ability when generating full discharge summaries, which require multiple reasoning steps. A plausible explanation is that LLMs do not inherently possess a structured understanding of time. Instead, they rely on patterns learned from their training data rather than on explicit date arithmetic. Tan et al.21 highlight that LLMs often default to heuristics, such as assuming that later-mentioned dates are more relevant. LLMs may also infer temporal relationships from co-occurrence statistics rather than from logical computation. These biases may explain why the model occasionally miscalculates the patient’s age.
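
A common mitigation, offered here as an assumption rather than as part of the study's pipeline, is to compute the age deterministically during preprocessing. The age is then given to the model as an explicit value, so no date arithmetic is left to the LLM. The helper `age_at` is hypothetical. It counts completed years between a date of birth and a reference date, such as the admission date.

```python
from datetime import date


def age_at(birth: date, reference: date) -> int:
    """Completed years of age on `reference` (hypothetical preprocessing helper)."""
    if reference < birth:
        raise ValueError("reference date precedes date of birth")
    years = reference.year - birth.year
    # One year less if the birthday has not yet been reached in the reference year.
    if (reference.month, reference.day) < (birth.month, birth.day):
        years -= 1
    return years


# The result can be written into the structured input, e.g. "Age at admission: 64 years".
print(age_at(date(1958, 9, 14), date(2023, 3, 2)))
```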

The LLaMA3 model30 used in this study handled minor inconsistencies in the structured dataset well. For instance, antibiotics were documented in one section of the EHR as administered only intraoperatively, but they were recorded again in the inpatient course. The model correctly reconciled this conflicting information and included antibiotic administration in the generated discharge summary (Summary 3).

Hallucinations, vague or misleading statements, and otherwise imprecise information were identified in 9 of the 25 summaries. This count refers to individual content issues, not to the overall completeness of each summary. Such errors are particularly difficult to detect, which makes mitigation strategies essential.

One potential approach is Retrieval-Augmented Generation (RAG)22. RAG incorporates up-to-date information from the literature and clinical guidelines. It could therefore reduce inaccuracies caused by a lack of medical knowledge, such as the misclassification of bowel movement frequency.

Other errors may arise from problems with reasoning or context integration. For these, Human-in-the-Loop (HITL) validation within clinical workflows could provide real-time oversight. In such a workflow, the model generates a draft discharge summary, and a physician reviews and corrects it before it is finalized and handed to the patient. Data gathered from these HITL interactions could also be used for reinforcement learning from human feedback (RLHF) to further refine model outputs in future research23.
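
The retrieval step of RAG can be sketched in a few lines. The example below is a simplified stand-in for the dense neural retrieval used by Lewis et al.22. It ranks passages by bag-of-words cosine similarity and prepends the best match to the prompt. The knowledge base contains placeholder strings, not real guideline text, and all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens (Unicode-aware, so German umlauts are kept)."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: List[str], k: int = 1) -> List[str]:
    """Return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p))), reverse=True)
    return ranked[:k]


def augment_prompt(task_prompt: str, query: str, passages: List[str], k: int = 1) -> str:
    """Prepend the retrieved reference passages to the generation prompt."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Reference material:\n{context}\n\n{task_prompt}"


# Placeholder knowledge base; a real system would index guideline and literature text.
KNOWLEDGE_BASE = [
    "Placeholder guideline text on bowel movement frequency after surgery.",
    "Placeholder guideline text on perioperative antibiotic administration.",
    "Placeholder guideline text on postoperative pain management.",
]

print(augment_prompt("Write the discharge summary.", "bowel movement frequency", KNOWLEDGE_BASE))
```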

For quantitative evaluation, we applied ROUGE and BERTScore, as is common in related research. These metrics provided valuable insights into syntactic and semantic similarity. However, they were limited in capturing clinical relevance and domain-specific language quality. As highlighted by Jung et al.24, they do not account for critical aspects of medical documentation, such as the correct use of medical terminology. This underscores the need for clinically oriented evaluation metrics.
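
To make the notion of n-gram overlap concrete, the following sketch computes ROUGE-N precision, recall, and F1 from clipped n-gram counts. It uses plain whitespace tokenization without stemming, so its values will not exactly match those of established ROUGE packages. The German sentence pair is invented for illustration.

```python
from collections import Counter
from typing import Dict, List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> Dict[str, float]:
    """ROUGE-N precision, recall and F1 with whitespace tokenization (no stemming)."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # matches clipped to the smaller count
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


generated = "Patient wurde am dritten Tag entlassen"
physician = "Der Patient wurde am fünften Tag entlassen"
print(rouge_n(generated, physician, n=1))
print(rouge_n(generated, physician, n=2))
```

For this pair, ROUGE-1 F1 is 10/13 (about 0.77) and ROUGE-2 F1 is 6/11 (about 0.55). A substituted word breaks every bigram it is part of, so ROUGE-2 penalizes small wording differences more strongly than ROUGE-1.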

Compared to the literature, our ROUGE and BERTScore values are low to moderate. However, these absolute values are difficult to compare with other studies, because we compared the generated summaries with physician-written ones. Most other studies use these metrics as relative values, comparing summaries generated by different language models from the same source text within their own self-contained setups9,23,27. ROUGE-L in particular may be penalized by our approach of generating texts from structured data. Such data typically consist of short concepts that rarely produce long overlapping sequences with physician-written text.

Our qualitative survey findings are in line with those of Aali et al.18, who conducted a similar survey. They do not report numbers, but their violin plots indicate that GPT-4 achieved the highest ratings (average 4–5, small standard deviation). LLaMA2-13B and physician-written summaries were rated lower, but at a comparable level to each other:

- comprehensiveness and factual accuracy: average 2–3, very large standard deviation
- conciseness and fluency: average 3–4, large standard deviation

Judging by Table 6, the LLaMA3 model used in our study appears to fall between GPT-4 and LLaMA2 across all criteria.
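
ROUGE-L is based on the longest common subsequence (LCS), which makes it sensitive to word order. This sensitivity is one mechanism behind the penalty described above. In the invented example below, the generated text lists two diagnoses in the order of the structured data, while the physician mentions them in reverse order.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, via a rolling dynamic-programming row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, reference: str) -> float:
    """ROUGE-L F1 with whitespace tokenization."""
    c, r = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


generated = "diabetes mellitus typ 2 arterielle hypertonie"
physician = "bekannte arterielle hypertonie sowie diabetes mellitus typ 2"
print(rouge_l(generated, physician))
```

Every generated word appears in the reference, so ROUGE-1 F1 would be 6/7 (about 0.86). However, only the four-word run "diabetes mellitus typ 2" survives as a common subsequence, so ROUGE-L F1 drops to 4/7 (about 0.57).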

However, several limitations must be considered.

First, the relatively small dataset of 25 cases restricted both the diversity of clinical contexts and the generalizability of our findings. This sample size also limited our ability to fine-tune models with techniques such as Quantized Low-Rank Adaptation (QLoRA)25, which could improve LLM performance given a larger dataset.

Second, inconsistencies, missing structured data, and errors in the raw EHR data highlight the challenges of retrospective data collection. Ultimately, the completeness of LLM-generated discharge summaries depends on the completeness and curation of the data fed to the LLM. The model also showed limitations in handling missing information, especially when critical details, such as handwritten notes or information from outside the EHR, were absent from the structured input data. This missing data reduced the accuracy of the generated summaries, pointing to the need for more comprehensive data integration in future studies.

Further, GPU limitations constrained the number of ICL examples we could include, potentially affecting the model’s performance. The model-generated summaries also exhibited problems with German grammar, spelling, and medical terminology. These could be addressed by fine-tuning the model on medical data or by integrating RAG22, which could improve the model’s medical competency and reduce the need for manual corrections32.

Alternative models, such as GPT-4, which performed well in similar studies24,33, may have produced better results in our context. Finally, the rapid evolution of LLMs suggests that new models may soon significantly outperform those used in this study, potentially addressing some of the limitations observed here.

Future research should use larger datasets and improved data collection methods to increase the diversity and completeness of training data. This could improve model accuracy and reduce the need for physician oversight. Expanding data sources to include unstructured clinical texts and nursing notes could also enrich input quality and address limitations due to incomplete raw EHR data. Fine-tuning approaches such as QLoRA, or the incorporation of RAG, could improve domain-specific accuracy and the use of medical terminology. ICL with a larger, carefully selected set of examples may have a substantial impact and should therefore be tested on more capable hardware26,27.

More extensive qualitative evaluations involving a broader group of healthcare professionals are also needed. These should assess the practical relevance of automatically generated summaries and how reliably errors in them are identified. Future research should also address time efficiency, acceptance among clinicians, and the categorization of errors in generated content20. With improved model accuracy and clinically oriented evaluation metrics, LLMs could become a viable tool for discharge documentation in real-world settings.

Conclusion

Our study describes the application of an open-source LLM to generate German-language discharge summaries from structured clinical data in a real-world setting. The approach demonstrated the ability to produce coherent and moderately accurate drafts. However, our data also underscore significant limitations—particularly in temporal reasoning, error-prone verbatim copying in ICL, and inconsistent inclusion of clinically relevant content. These findings highlight that while LLM-based tools may one day be useful for supporting the clinical documentation process, achieving high-quality outputs remains dependent on multiple factors, including data completeness, task framing, and post-processing pipelines. Our methods, findings, and descriptive analyses can inform future research and implementation efforts, particularly in the context of non-English medical documentation.

Methods

LLM selection

To generate discharge summaries from raw EHR data, we first identified a suitable LLM based on the following requirements. The model needed to:

- be open source, for transparency and local deployment
- run within the available GPU resources
- generate text from structured data
- understand German
- handle medical terminology
- provide a context window of at least 4000 tokens

We reviewed domain-specific literature, consulted experts, and evaluated models using leaderboards such as the Open Medical LLM Leaderboard. SauerkrautLM28, OpenBioLLM29, and LLaMA330 emerged as the top candidates:

- OpenBioLLM scored highly in medical comprehension, particularly on medical multiple-choice benchmarks, suggesting suitability for clinical language tasks.
- SauerkrautLM, fine-tuned on German texts, demonstrated strong language proficiency.
- LLaMA3, with a robust multilingual foundation, showed versatility in generating high-quality German medical text without domain-specific fine-tuning.

All three models support an 8192-token context window.

While GPT-4 is widely used in comparable research18,19,20, it was excluded from consideration due to the requirement for an open-source model that could be deployed locally, ensuring compliance with strict data protection protocols.

Due to its high performance on our qualitative criteria (comprehensiveness, conciseness, factual correctness, and fluency), LLaMA3 was selected for this project.

Data preprocessing and prompt engineering

For this study, a total of 25 datasets from completed cases at the European Pancreas Center of Heidelberg University Hospital were retrospectively collected by a medical doctoral candidate (P.F., one year of clinical practice). The data were recorded in two distinct forms: a self-disclosure form and an inpatient documentation form. They were collected with the electronic data capture tool “IMI-EDC” and exported as CSV files. The use of the hospital data was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the medical faculty at the University of Heidelberg (S301/2001; S708/2019; S083/2021).

To protect patient privacy, all protected health information (PHI) was removed to the extent feasible, as described below. The German Data Protection Regulation does not specifically define how to de-identify clinical documents. We therefore adhered to best practices established under the Health Insurance Portability and Accountability Act (HIPAA)31, as other German and European studies have done32,33,34.

The process began with automated PHI removal using a pattern-matching approach. This step identified and redacted personal identifiers such as names, titles, organizations, histological findings, locations, and phone numbers. In a second step, the data were manually reviewed and any remaining PHI was removed. Additionally, all dates were shifted forward by a random, fixed interval, which changed the day, month, and year, to further de-identify the data in line with HIPAA standards31.
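
The two automated steps can be sketched as follows, under clearly stated assumptions. The regular expressions are hypothetical examples that cover only phone numbers and titled names, and the offset range of up to 730 days is arbitrary. The sketch shows why a single fixed offset per dataset is useful: it preserves intervals such as length of stay or days since surgery, whereas shifting each date independently would corrupt them.

```python
import random
import re
from datetime import date, timedelta
from typing import Callable

# Hypothetical example patterns; a real system needs many more (names, addresses, IDs, ...).
PATTERNS = {
    "PHONE": re.compile(r"\+?\d[\d /-]{6,}\d"),
    "TITLE_NAME": re.compile(r"\b(?:Dr|Prof)\.\s*(?:med\.\s*)?[A-ZÄÖÜ][a-zäöüß]+"),
}


def redact(text: str) -> str:
    """Replace every pattern match with a bracketed category label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def make_date_shifter(rng: random.Random, max_days: int = 730) -> Callable[[date], date]:
    """Return a function that moves every date forward by one fixed random offset."""
    offset = timedelta(days=rng.randint(1, max_days))

    def shift(d: date) -> date:
        return d + offset

    return shift


shift = make_date_shifter(random.Random(7))
print(redact("Rückfragen an Dr. Müller unter 06221 123456."))
print(shift(date(2021, 3, 1)), shift(date(2021, 3, 15)))  # still 14 days apart
```

In practice, the offset should come from a secure random source, such as Python's `secrets` module, and be kept confidential. The fixed seed here only makes the example reproducible.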

The design of the prompts for the LLM followed an iterative process to determine the most effective approach. Various principles identified in the literature were tested on a sample case. The generated discharge summaries were evaluated based on qualitative criteria, including comprehensiveness, conciseness, factual accuracy, and fluency. Principles that led to improvements in the output were retained, while those that did not were discarded.

Additionally, we tested two advanced techniques that may further improve prompt effectiveness: prompt chaining and ICL.

Prompt chaining handles complex tasks by breaking them into smaller, more manageable subtasks. The model’s output from one prompt serves as input for the next, creating a chain of prompts. This approach can increase both the controllability and the reliability of the model’s responses. For this study, the initially developed prompt (see Table 3) was divided into five stages:

1. The model organized the provided structured dataset.
2. It generated the “Medical History and Findings” section.
3. It generated the “Therapy and Course” section.
4. It combined and refined both sections.
5. The content was reviewed by comparing it against the original data.

The complete chat, including all prompts, is provided in Table 2 of the supplementary material.

For ICL, we included one- or two-shot examples in the prompt to serve as references for the task. These examples were intended to help the model adapt its responses to the patterns and structures they presented.
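
The chaining and ICL set-ups described above can be outlined in Python. The sketch assumes a chat-style interface in which earlier turns stay in the model's context, so each stage sees the previous answers. `fake_generate` is a hypothetical stand-in for the LLM call. The stage texts are paraphrases, not the study's prompts, which are in supplementary Table 2.

```python
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]


def run_prompt_chain(data: str, stage_prompts: List[str],
                     generate: Callable[[List[Message]], str]) -> List[Message]:
    """Run stage prompts as consecutive chat turns; earlier answers stay in the context."""
    # The raw data is inserted into the first stage; later stages see it via the chat history.
    first = stage_prompts[0].replace("{data}", data)
    messages: List[Message] = []
    for prompt in [first, *stage_prompts[1:]]:
        messages.append({"role": "user", "content": prompt})
        reply = generate(messages)  # model call, e.g. a wrapper around model.generate
        messages.append({"role": "assistant", "content": reply})
    return messages


def build_icl_prompt(instruction: str, examples: List[Tuple[str, str]], data: str) -> str:
    """Embed one- or two-shot (input, summary) examples in a single prompt."""
    parts = [instruction]
    for i, (example_input, example_summary) in enumerate(examples, start=1):
        parts.append(f"Example {i} input:\n{example_input}\n\nExample {i} summary:\n{example_summary}")
    parts.append(f"Input:\n{data}\n\nSummary:")
    return "\n\n".join(parts)


# Hypothetical paraphrases of the five stages.
STAGES = [
    "Organize the following structured dataset:\n{data}",
    "Write the 'Medical History and Findings' section.",
    "Write the 'Therapy and Course' section.",
    "Combine and refine both sections.",
    "Review the summary against the original data and correct any discrepancies.",
]


def fake_generate(messages: List[Message]) -> str:
    """Stand-in for the LLM so the example runs without a GPU."""
    return f"<answer to turn {len(messages) // 2 + 1}>"


chat = run_prompt_chain("Age: 64 years", STAGES, fake_generate)
print(chat[-1]["content"])
```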

The structured data were transformed into a rule-based natural-language description of each feature. Through an iterative process, we developed a structure and sequence that guided the LLM effectively. This involved adding explanatory information for specific data points. It also involved formulating conditional rules that specified when certain attributes were made available to the model.
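
A minimal sketch of such rule-based verbalization is shown below. Each rule pairs a feature with a sentence template and an optional condition that decides whether the feature reaches the model. The field names, sentence templates, and IMC rule are hypothetical illustrations, not the study's actual rules; the structure actually used is given in Table 1.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Rule:
    field: str                                           # hypothetical column in the CSV export
    template: str                                        # sentence with a {value} slot
    include_if: Optional[Callable[[dict], bool]] = None  # conditional inclusion rule


def verbalize(record: dict, rules: List[Rule]) -> str:
    """Turn one structured record into natural-language sentences, in rule order."""
    sentences = []
    for rule in rules:
        value = record.get(rule.field)
        if value is None or value == "":
            continue  # skip missing values instead of emitting empty sentences
        if rule.include_if is not None and not rule.include_if(record):
            continue
        sentences.append(rule.template.format(value=value))
    return " ".join(sentences)


RULES = [
    Rule("diagnosis", "Admission diagnosis: {value}."),
    Rule("surgery", "Procedure performed: {value}."),
    Rule("imc_days", "The patient was treated in intermediate care (IMC) for {value} days.",
         include_if=lambda rec: rec.get("imc_days", 0) > 0),
]

print(verbalize({"diagnosis": "pancreatic head tumor", "surgery": "", "imc_days": 2}, RULES))
```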

Model implementation and setup

The preprocessed data, along with the prompt developed during the PE process, was used with the LLaMA3 chat template to generate the discharge summaries. The generation process followed these steps for each patient record:

1. Prompt Generation:
   - Abstracted EHR Data Preprocessing: The structured data were extracted from the completed forms and formatted according to the structure presented in Table 1.
   - Prompt Formatting: The extracted data were inserted into the predefined prompt structure (as shown in Table 3), replacing the {data} placeholder.
2. Summary Generation: The formatted prompt was processed by LLaMA3 using the specified chat template to generate the discharge summary.
3. Summary Evaluation: The generated summary was evaluated as described in the “Model evaluation” section.
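
The prompt-generation step can be sketched as two small functions. The template text is a hypothetical stand-in for the prompt in Table 3. Only the `{data}` placeholder is taken from the description above.

```python
from typing import Dict, List, Optional

# Hypothetical stand-in for the prompt in Table 3.
PROMPT_TEMPLATE = "Write a discharge summary in German based on the following data.\n\n{data}"


def format_prompt(template: str, data: str) -> str:
    """Insert the preprocessed record into the template's {data} placeholder."""
    if "{data}" not in template:
        raise ValueError("template has no {data} placeholder")
    # str.replace is used instead of str.format so other braces in the template are left alone.
    return template.replace("{data}", data)


def to_chat_messages(prompt: str, system: Optional[str] = None) -> List[Dict[str, str]]:
    """Wrap the prompt in the role/content message format used by chat templates."""
    messages: List[Dict[str, str]] = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


record = "Age: 64 years\nDiagnosis: pancreatic head tumor"
print(to_chat_messages(format_prompt(PROMPT_TEMPLATE, record)))
```

With Hugging Face Transformers, such a message list is typically rendered with the model's chat template before `model.generate` is called. This can be done via `tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt")`.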

For text generation, the following libraries and versions were employed:

- Transformers (Version 4.39.3)
- PyTorch (Version 2.2.2)
- bitsandbytes (Version 0.43.1), configured through the Transformers BitsAndBytesConfig class, for 4-bit quantization with bfloat16 computation

The Python (v3.11.5) script ran on an NVIDIA RTX A6000 GPU (48 GB). The temperature was set to 0.2 to keep outputs close to deterministic, and generation was limited to 1000 new tokens.
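
The reported settings also fix a token budget for the prompt. LLaMA3 has an 8192-token context window, and up to 1000 new tokens are reserved for the output. At most 7192 tokens therefore remain for instructions, patient data, and any ICL examples. The sketch below encodes this arithmetic. The `do_sample=True` flag is an assumption, because the text does not state the decoding mode.

```python
CONTEXT_WINDOW = 8192  # LLaMA3 context length stated in the text
MAX_NEW_TOKENS = 1000  # generation limit stated in the text

# Keyword arguments as they might be passed to model.generate() in Transformers.
GENERATION_KWARGS = {
    "max_new_tokens": MAX_NEW_TOKENS,
    "temperature": 0.2,
    "do_sample": True,  # assumption: required for temperature to take effect
}


def prompt_token_budget(context_window: int = CONTEXT_WINDOW,
                        max_new_tokens: int = MAX_NEW_TOKENS) -> int:
    """Tokens left for the prompt after reserving room for the generated text."""
    budget = context_window - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    return budget


def prompt_fits(prompt_tokens: int) -> bool:
    """Check a tokenized prompt length (e.g. len(input_ids)) against the budget."""
    return prompt_tokens <= prompt_token_budget()


print(prompt_token_budget())  # 7192
```

In a Transformers setup, 4-bit loading with bfloat16 computation would typically be requested by passing `BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.bfloat16)` as `quantization_config` to `from_pretrained`. The study's own code is available in the repository listed under Code availability.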

Model evaluation

Error analysis

The error analysis was conducted by one of the authors (N.K.), who consulted a second author (T.M.P.) in cases of uncertainty. It involved a systematic comparison between the structured EHR data and the generated summaries. An error was defined as any piece of information in the generated summary that either did not match the corresponding structured dataset or could not be inferred from it. All identified errors were labeled within the respective summaries, and recurring errors were categorized. Where possible, underlying causes or explanations for errors were explored, along with potential strategies for improvement. The average number of errors per summary was calculated to quantify the model’s performance.

Furthermore, information present in the structured dataset but absent from the generated summary was identified and marked in the structured dataset. This “missing information” was defined as any information available in the structured EHR dataset but not included in the generated summary.
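
The per-summary error rate is a simple mean over all annotated summaries, including error-free ones. For the 25 summaries, the reported 2.84 errors per summary corresponds to 71 errors in total. The sketch below computes this mean and a category tally from hypothetical annotations. The labels are toy values, not the study's data.

```python
from collections import Counter
from typing import Dict, List, Tuple


def error_statistics(annotations: Dict[str, List[str]]) -> Tuple[float, Counter]:
    """Mean errors per summary and frequency of each error category.

    `annotations` maps a summary ID to the list of error categories labeled in it.
    Summaries without errors must be included with an empty list; otherwise the
    mean is inflated.
    """
    if not annotations:
        raise ValueError("no summaries given")
    total = sum(len(errors) for errors in annotations.values())
    categories = Counter(c for errors in annotations.values() for c in errors)
    return total / len(annotations), categories


# Toy labels for illustration only.
toy = {
    "S1": ["age calculation", "spelling"],
    "S2": [],
    "S3": ["age calculation", "literal copying", "grammar"],
}
mean_errors, by_category = error_statistics(toy)
print(round(mean_errors, 2), by_category.most_common(1))
```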

Quantitative evaluation

Summary quality was evaluated with BERTScore and ROUGE by comparing the generated summaries to the physician-written summaries. BERTScore assessed semantic similarity using the BERTScore library (v0.3.13) with contextualized embeddings from “facebook/bart-large-mnli”35. ROUGE-1 and ROUGE-2 measured n-gram overlap, and ROUGE-L measured the longest common subsequence. Consistent with prior literature, both ROUGE (syntactic similarity) and BERTScore (semantic similarity) were used9,10,23,27.
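
The core of BERTScore is greedy matching of token embeddings by cosine similarity. The sketch below takes embeddings as given and uses toy two-dimensional vectors instead of the contextual embeddings that the library extracts from "facebook/bart-large-mnli". It also omits the optional IDF weighting and baseline rescaling. It therefore illustrates the computation rather than reproducing the library's scores.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def bertscore_greedy(cand: List[Vector], ref: List[Vector]) -> Tuple[float, float, float]:
    """Greedy-matching precision, recall and F1 over token embeddings (no IDF, no rescaling)."""
    sim = [[cosine(c, r) for r in ref] for c in cand]
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(row) for row in sim) / len(cand)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(max(sim[i][j] for i in range(len(cand))) for j in range(len(ref))) / len(ref)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy 2-D "embeddings"; real ones come from a transformer layer.
candidate_tokens = [[1.0, 0.0], [1.0, 1.0]]
reference_tokens = [[1.0, 0.0], [0.0, 1.0]]
print(bertscore_greedy(candidate_tokens, reference_tokens))
```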

Qualitative evaluation

Physicians and medical students evaluated five randomly selected summaries, together with their corresponding structured datasets, in an anonymous survey. The survey consisted of four questions adapted from Aali et al.18 and translated into German. Respondents rated each summary on a 5-point Likert scale ranging from 1 (very poor) to 5 (very good) on the following criteria:

1. Comprehensiveness: How well does the summary capture important information? This assesses the recall of clinically significant details from the input text.
2. Conciseness: How well does the summary exclude unimportant information? This assesses how well the summary is condensed, since the value of a summary decreases with superfluous information.
3. Factual Correctness: How well does the summary agree with the facts outlined in the clinical note? This evaluates the precision of the information provided.
4. Fluency: How fluent is the summary? This assesses the readability and natural flow of the content.

The goal of the evaluation was to gather preliminary insights into how well the LLaMA3-generated summaries align with clinical expectations.
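
Summary statistics such as mean ± standard deviation and the share of "good" ratings (4 or 5) can be computed as follows. The ratings are toy values, not survey responses. The use of the sample standard deviation is an assumption, because the text does not state which variant was reported.

```python
from statistics import mean, stdev
from typing import Dict, List


def summarize_likert(ratings: List[int], good_threshold: int = 4) -> Dict[str, float]:
    """Mean, sample SD and share of ratings at or above `good_threshold` on a 1-5 scale."""
    if len(ratings) < 2 or any(r not in range(1, 6) for r in ratings):
        raise ValueError("need at least two ratings between 1 and 5")
    return {
        "mean": mean(ratings),
        "sd": stdev(ratings),  # sample SD (n - 1 denominator); an assumption
        "share_good": sum(r >= good_threshold for r in ratings) / len(ratings),
    }


toy_ratings = [5, 4, 3, 4, 2]  # illustrative only
stats = summarize_likert(toy_ratings)
print(f"{stats['mean']:.2f} ± {stats['sd']:.2f}, good: {stats['share_good']:.0%}")
```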

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Code availability

The underlying code for this study is available on GitHub at https://github.com/IMI-HD/llm-discharge-summaries.

References

Lenert, L. A., Sakaguchi, F. H. & Weir, C. R. Rethinking the discharge summary: a focus on handoff communication. Acad. Med. J. Assoc. Am. Med. Coll. 89, 393–398. https://doi.org/10.1097/ACM.0000000000000145 (2014).

Salim Al-Damluji, M. et al. Association of discharge summary quality with readmission risk for patients hospitalized with heart failure exacerbation. Circ. Cardiovasc. Qual. Outcomes 8, 109–111. https://doi.org/10.1161/CIRCOUTCOMES.114.001476 (2015).

Martin, D. B. et al. Preferences in oncology history documentation styles among clinical practitioners. JCO Oncol. Pract. 18, e1–e8. https://doi.org/10.1200/OP.20.00756 (2022).

Liu, J., Nicolson, A., Dowling, J., Koopman, B. & Nguyen, A. e-Health CSIRO at “Discharge Me!” 2024: Generating discharge summary sections with fine-tuned language models. (2024).

Hüske-Kraus, D. Suregen-2: A shell system for the generation of clinical documents. In EACL ’03: Proceedings of the tenth conference on European chapter of the Association for Computational Linguistics - Volume 2, 215–218. https://doi.org/10.3115/1067737.1067788 (2003).

Starlinger, J., Kittner, M., Blankenstein, O. & Leser, U. How to improve information extraction from German medical records. it Inf. Technol. 59, 171–179. https://doi.org/10.1515/itit-2016-0027 (2017).

Heilmeyer, F. et al. Viability of open large language models for clinical documentation in German Health Care: Real-world model evaluation study. JMIR Med. Inform. 12, e59617. https://doi.org/10.2196/59617 (2024).

Amatriain, X. Prompt design and engineering: Introduction and advanced methods. Available at http://arxiv.org/pdf/2401.14423v3 (2024).

Ggaliwango, M., Nakayiza, H., Daudi, J. & Nakatumba-Nabende, J. Prompt engineering in large language models. In Data Intelligence and Cognitive Informatics. Proceedings of ICDICI 2023 1st edn (eds Jacob, I. J. et al.) 387–402 (Springer, 2024).

Meskó, B. Prompt engineering as an important emerging skill for medical professionals: tutorial. J. Med. Internet Res. 25, e50638. https://doi.org/10.2196/50638 (2023).

Ekin, S. Prompt engineering for ChatGPT: A quick guide to techniques, tips, and best practices. TechRxiv. https://doi.org/10.36227/techrxiv.22683919.v2 (2023).

White, J. et al. A prompt pattern catalog to enhance prompt engineering with ChatGPT. Available at http://arxiv.org/pdf/2302.11382v1 (2023).

Prompt Engineering Guide. Techniques: Prompt chaining. Available at https://www.promptingguide.ai/techniques/prompt_chaining (2024).

Min, S. et al. Rethinking the role of demonstrations: What makes in-context learning work? Available at http://arxiv.org/pdf/2202.12837v2 (2022).

Cai, P. et al. Generation of patient after-visit summaries to support physicians. In Proceedings of the 29th International Conference on Computational Linguistics (eds Calzolari, N. et al.) 6234–6247 (International Committee on Computational Linguistics, Gyeongju, Republic of Korea, 2022).

Ando, K., Okumura, T., Komachi, M., Horiguchi, H. & Matsumoto, Y. Is artificial intelligence capable of generating hospital discharge summaries from inpatient records?. PLOS Digit. Health 1, e0000158. https://doi.org/10.1371/journal.pdig.0000158 (2022).

Reynolds, L. & McDonell, K. Prompt programming for large language models: Beyond the few-shot paradigm. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (eds Kitamura, Y., Quigley, A., Isbister, K. & Igarashi, T.) 1–7 (ACM, New York, 2021).

Aali, A. et al. A dataset and benchmark for hospital course summarization with adapted large language models. J. Am. Med. Inform. Assoc. JAMIA 32, 470–479. https://doi.org/10.1093/jamia/ocae312 (2025).

van Veen, D. et al. Adapted large language models can outperform medical experts in clinical text summarization. Nat. Med. 30, 1134–1142. https://doi.org/10.1038/s41591-024-02855-5 (2024).

Ellershaw, S. et al. Automated generation of hospital discharge summaries using clinical guidelines and large language models. In AAAI 2024 Spring Symposium on Clinical Foundation Models. Available at https://openreview.net/pdf?id=1kDJJPppRG (2024).

Tan, Q., Ng, H. T. & Bing, L. Towards benchmarking and improving the temporal reasoning capability of large language models. (2023).

Lewis, P. et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. Available at http://arxiv.org/pdf/2005.11401v4 (2020).

Stiennon, N. et al. Learning to summarize from human feedback. In NIPS’20: Proceedings of the 34th International Conference on Neural Information Processing Systems, 3008–3021. https://doi.org/10.48550/arXiv.2009.01325 (2020).

Jung, H. et al. Enhancing clinical efficiency through LLM: Discharge note generation for cardiac patients (2024).

Dettmers, T., Pagnoni, A., Holtzman, A. & Zettlemoyer, L. QLoRA: Efficient finetuning of quantized LLMs (2023).

Brown, T. B. et al. Language models are few-shot learners. Available at http://arxiv.org/pdf/2005.14165v4 (2020).

Liu, J. et al. What makes good in-context examples for GPT-3? Available at http://arxiv.org/pdf/2101.06804v1 (2021).

Golchinfar, D. SauerkrautLM Model Card. Available at https://huggingface.co/VAGOsolutions/Llama-3-SauerkrautLM-70b-Instruct (2024).

Pal, A. & Sankarasubbu, M. OpenBioLLMs: Advancing open-source large language models for healthcare and life sciences. Available at https://huggingface.co/aaditya/OpenBioLLM-Llama3-70B (2024).

AI@Meta. Llama 3 Model Card. Available at https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md (2024).

U.S. Department of Health and Human Services. Guidance regarding methods for de-identification of protected health information in accordance with the health insurance portability and accountability act (HIPAA) privacy rule. Available at https://www.hhs.gov/hipaa/for-professionals/privacy/special-topics/de-identification/index.html (2012).

Richter-Pechanski, P. et al. A distributable German clinical corpus containing cardiovascular clinical routine doctor’s letters. Sci. Data 10, 207. https://doi.org/10.1038/s41597-023-02128-9 (2023).

Menger, V., Scheepers, F., van Wijk, L. M. & Spruit, M. DEDUCE: A pattern matching method for automatic de-identification of Dutch medical text. Telemat. Inform. 35, 727–736. https://doi.org/10.1016/j.tele.2017.08.002 (2018).

Pérez-Díez, I., Pérez-Moraga, R., López-Cerdán, A., Salinas-Serrano, J.-M. & de La Iglesia-Vayá, M. De-identifying Spanish medical texts - named entity recognition applied to radiology reports. J. Biomed. Semant. 12, 6. https://doi.org/10.1186/s13326-021-00236-2 (2021).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) (eds Burstein, J., Doran, C. & Solorio, T.) 4171–4186 (Association for Computational Linguistics, Stroudsburg, 2019).

Funding

Open Access funding enabled and organized by Projekt DEAL.

The research leading to these results received funding for T.M.P. through state funds approved by the State Parliament of Baden-Württemberg for the Innovation Campus Health + Life Science alliance Heidelberg Mannheim. The other authors did not receive funding for this research. For the publication fee the authors acknowledge financial support by Heidelberg University.

Author information

Contributions

T.M.P., M.G. and N.K. designed the study. P.F. collected and curated the data retrospectively. T.M.P. and M.G. provided critical insights and guidance throughout the project. M.L. provided clinical data for the study. C.K.L. contributed to the interpretation of the text data. N.K. implemented the experiments and performed the data analysis. M.G., T.M.P., and N.K. drafted the manuscript. All co-authors read and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

Ethical review and approval were waived for generating a patient data sample from completed inpatient cases from the patient registry database of our pancreatic surgery center at Heidelberg University Hospital. No new patients were recruited, and all the obtained patient information was de-identified before data processing. Informed consent had been previously obtained from all subjects. The use of the database from our hospital was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of the medical faculty at the University of Heidelberg (S301/2001; S708/2019; S083/2021).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ganzinger, M., Kunz, N., Fuchs, P. et al. Automated generation of discharge summaries: leveraging large language models with clinical data. Sci Rep 15, 16466 (2025). https://doi.org/10.1038/s41598-025-01618-7

DOI: https://doi.org/10.1038/s41598-025-01618-7