Abstract

The use of machine learning to analyze sentiments has attained considerable interest in the past few years. The task of analyzing sentiments has becfigome increasingly important and challenging. Due to the specific attributes of this type of data, including length of text, spelling errors, and abbreviations, unconventional methods and multiple steps are required for effectively analyzing sentiment in such a complex environment. In this research, two distinct word embedding models, GloVe and Word2Vec, were utilized for vectorization. To enhance the performance long short-term memory (LSTM), the model was optimized using the amended dwarf mongoose optimization (ADMO) algorithm, leading to improvements in the hyperparameters. The LSTM–ADMO achieved the accuracy values of 97.74 and 97.47 using Word2Vec and GloVe, respectively on IMDB, and it could gain the accuracy values of 97.84 and 97.51 using Word2Vec and GloVe, respectively on SST-2. In general, it was determined that the proposed model significantly outperformed other models, and there was very little difference between the two different word embedding techniques.

Similar content being viewed by others

Introduction

In this world, internet can playing a decisive role in shaping direction of society, emotion, success, and failure of people1. There exist numerous platforms where people share their ideas and start to communicate with other people. Moreover, it can be employed to promote products and services2. Generally, social media has been examined from diverse perspectives, like identifying nasty movements3. However, it can also be used for sentiment analysis to investigate a text’s or an idea’s polarity.

Although sentiment analysis is widely utilized, it encounters considerable difficulties due to the casual nature of social media language, such as extraneous content, abbreviations, and slang. Conventional approaches frequently find it challenging to handle these intricacies, resulting in suboptimal results. Moreover, while deep learning architectures like long short-term memory (LSTM) networks have demonstrated potential, their success is often constrained by the need for manual hyperparameter adjustments and insufficient preprocessing methods. Tackling these issues is essential for enhancing the reliability and accuracy of sentiment analysis systems.

Generally, sentiment analysis is interpreted as an endeavor to analyze the meaning and polarity of texts and sentence. In addition, it has been employed to recognize and categorize the emotional tone and aims to ascertain the polarity, negative or positive, in accordance with a standard categorization4,5,6.

Sentiment analysis involves determining perspectives, emotions, and opinions explained in source materials. This area typically includes analyzing large text datasets and generally focuses on formal language. However, there are some stages that can be applied to analyze informal language as well. Sentiment analysis utilizes NLP (natural language processing) and machine learning to recognize the overall sentiment toward a topic or product7,8,9,10,11. Sentiment analysis usually requires utilizing a set of features that are obtained from the initial dataset. However, the efficiency has been regularly influenced by the volume of data, noise, the reduction of dimensionality, and the data areas used for training and testing the networks.

Commonly, three main methods exist for sentiment analysis, comprising the supervised approach (ML-based method)10,12,13, a hybrid method14,15, and an unsupervised approach (lexicon-based method)16,17,18. It is an area of study with significant potential for various real-world applications, where opinion data are collected to help various stakeholders make well-informed decisions.

Representation of words as numerical vectors or values in a matrix is known as word embedding. This process generates vector representations for words with alike meanings. Word embedding is also vectorization of word, wherein all words are converted into an input vector of neural network. The mapping generally takes place in a low-dimensional space, although it may vary based on the vocabulary’s size.

Recurrent neural networks (RNNs) are often employed in sentiment analysis because of their efficient network memory, enabling them to analyze contextual information. GRU (gated recurrent unit) and LSTM (long short-term memory) have been considered popular kinds of RNNs that are appropriate for sentiment analysis categorization tasks19,20,21,22,23,24. Moreover, convolutional neural network (CNN) is employed in many studies in combination with other models to extract local features; moreover, it has also been employed alone25,26,27,28. Moreover, the Artificial Intelligence and neural networks can be used for other purposes as well, for example kidney disease diagnosis29, coffee bean classification30, and Leukemia Detection31. In the following, some other studies pertinent to sentiment analysis have been explained.

The significance of this work is its capability to fill essential gaps within sentiment analysis, especially regarding data of social media, which tends to be informal and noisy. By presenting a new optimization algorithm and preprocessing stages, this study enhances the accuracy of sentiment classification while minimizing the need for manual hyperparameter optimization, which can be both time-consuming and prone to errors. These improvements are vital for practical applications, including public opinion assessment, customer feedback analysis, and brand monitoring, where efficacious and accurate sentiment analysis is critical for informed decision-making.

In this research, long short-term memory (LSTM) was selected as the main deep learning model for sentiment analysis because of its capability to process sequential data and remember long-term dependencies within text. Although models such as BiLSTM, GRU, and Transformer-based frameworks (like BERT, RoBERTa, or DistilBERT) have demonstrated effectiveness in NLP, LSTM provides some benefits that make it especially well-suited for sentiment analysis, particularly when dealing with noisy social media data.

LSTM has been developed to overcome the vanishing gradient issue, which frequently occurs in conventional RNNs. This capability enables LSTM to efficiently learn long-term dependencies within text. In comparison with Transformer-based models like BERT, LSTM is less computationally intensive and demands less resources during training. Data of social media often has informal language, abbreviations, and slang, posing challenges for models that rely on pre-trained embeddings. LSTM’s proficiency in learning from sequential data and adjusting to various lengths of text makes it more appropriate for managing such unstructured and noisy information. Furthermore, although BiLSTM might also be considered for its ability to understand context in both directions, LSTM’s more straightforward architecture facilitates optimization and combination with the ADMO.

Kaur et al.32 conducted a research in which the Twitter data was employed by R programming language. The Twitter data have been gathered on the basis of the keywords of hashtag, comprising coronavirus, COVID-19, new case, recovered, and deaths. In the present investigation, an optimizer has been developed named Hybrid H-SVM (heterogeneous support vector machine). Also, the sentiment categorization was implemented, and they were separated into neutral, negative, and positive scores. The efficiency of the suggested optimizer has been contrasted with other networks like RNN (recurrent neural network) and SVM (support vector machine) employing several metrics, including recall, accuracy, F1-score, and precision.

Dashtipour et al.33 developed a model, which was deep-learning-driven and context-aware, and was developed for Persian sentiment analysis. Particularly, this model classified the review of the Persian movies and segmented them into positive or negative. There were two various deep learning network, which were employed, including LSTM (long-short-term memory) and CNN (convolutional neural network). Then, they were compared with other models, including Autoencoder, SVM (support vector machine), Logistic Regression, and convolutional neural network (CNN), multilayer perceptron (MLP). In the end, it should be mentioned that the Bidirectional-LSTM could accomplish the best accuracy with the value of 95.61% regarding the dataset of movie, and 2D-CNN achieved the best accuracy with the value of 89.76%.

Kaur and Sharma34 presented a hybrid method for sentiment analysis in the present study. The procedure included pre-processing, feature extraction, and sentiment classification. The pre-processing phase removed the unnecessary data existing in the review while employing NLP approaches. A hybrid approach, including aspect-related features and review-related features, were suggested for extraction of feature in an efficient manner and for developing the unique hybrid attribute that is relevant to the reviews. In the present research, LSTM has been implemented for classification of sentiment as the classifier. Three various datasets were employed to assess the suggested model. The recommended model, in turn, obtained the values of 92.81%, 91.63%, and 94.46 for average F1-score, recall, and precision.

Mutinda et al.35 suggested a model for sentiment classification called LeBERT by integrating CNN, BERT, N-grams, and sentiment analysis. In this model, BERT, N-grams, and sentiment lexicon have been employed for vectorizing words that were chosen from a section existing within the input text. In fact, CNN has been employed for mapping of feature and giving the sentiment class of output. The suggested network has been assessed using 3 publicly available datasets, including Yelp restaurants’ reviews, Imbd movies’ reviews, and Amazon products’ reviews. The results indicated the suggested LeBERT model could perform better than the other models and has F-measure value of 88.73% in sentiment categorization in a binary mode.

Paulraj et al.36 offered a sentiment analysis approach in data of Twitter. The preprocessing of the database included tokenization, remove of stop word, etc. The words, which were preprocessed were fed into HDFS (Hadoop Distributed File System) to decrease frequent words and were omitted by employing MapReduce approach. The non-emoticons and emoticons were features that were extracted. The extracted features were graded by considering their desired meaning. After that, the categorization procedure was implemented while employing the deep learning modified neural network (DLMNN). The outcomes were compared with other models, including DCNN, K-Means, SVM, and ANN. The outcomes revealed that DCNN could achieve the values of 95.78%, 95.84%, 95.87%, 91.65% for precision, Recall, F-Score, and Accuracy.

In the present study, the main innovation is the amended dwarf mongoose optimization (ADMO) algorithm. ADMO is a hybrid optimization framework that combines Particle Swarm Optimization (PSO) with the Dwarf Mongoose Optimizer (DMO) to overcome limitations of DMO, like slow convergence and getting stuck in local optima. This combination introduces a velocity-guided mechanism of search that hits a balance between exploitation and exploration, representing a new application within sentiment analysis.

Another significant contribution is the use of ADMO to optimize the hyperparameters of LSTM. Although LSTM is a widely recognized model, its hyperparameters (such as batch size and learning rate) have typically been optimized manually or through generic optimization methods. This research employs ADMO to automate this optimization process, improving performance of LSTM in sentiment analysis. The results of the experiments show that LSTM–ADMO greatly exceeded the performance of other networks. This combination between LSTM and ADMO illustrates a new application of metaheuristic optimization within the realm of sentiment analysis.

Moreover, the extensive preprocessing phases represent a noteworthy contribution. These phases encompass part-of-speech tagging, slang correction, and advanced tokenization designed for informal text found within social media data. These preprocessing measures tackle issues such as spelling errors and abbreviations, which have been found to be essential for precise sentiment analysis.

Ultimately, the thorough validation and benchmarking underscore the innovation of this research. ADMO has been validated using 20 benchmark functions, and LSTM–ADMO has been assessed on the SST-2 dataset with Word2Vec and GloVe embeddings. These experiments demonstrate the network’s superiority and generalizability compared to existing models. The following table represents the limitations of the studies that mentioned in the literature review and the contributions of the present study (Table 1).

Instruments

This study aims to develop a novel approach for improving analyzing sentiment by utilizing long short-term memory (LSTM) optimized by amended dwarf mongoose optimization (ADMO) Algorithm. The structure of the recommended network and the stages have been fully described in this section.

The amended dwarf mongoose optimization (ADMO) algorithm has been chosen over popular methods like particle swarm optimization (PSO), genetic algorithm (GA), and Bayesian optimization because of its exceptional capability to avoid local optima and effectively optimize the parameters of neural networks. Dissimilar to PSO and GA, which may get stuck in local optima as a result of early convergence, ADMO uses dynamic adjustments and adaptive perturbations of step size to efficiently explore novel areas of the solution space.

ADMO also accomplishes a more effective balance between exploration and exploitation. Bayesian Optimization often encounters difficulties in high-dimensional solution spaces. In contrast, ADMO methodically enhances its search methodology, which contributes to a more reliable convergence trend. Moreover, ADMO is computationally efficacious, minimizing unnecessary assessments while still achieving robust efficacy. Dissimilar to particle swarm optimization and genetic algorithms, which typically need a larger size of population for exploration, ADMO adjusts its search in a dynamic manner, making it better suited for resource-demanding deep learning applications. Empirical findings indicate that ADMO provides improved classification results.

In order to address overfitting in the proposed model, various strategies have been utilized to improve generalization and avoid performance decline on unseen data. Regularization techniques, such as dropout and L2 regularization, were applied to decrease overfitting by imposing restrictions on the parameters of model. In particular, dropout layers with a rate of 0.5 were used to stochastically deactivate neurons throughout training, thus preventing the network from becoming overly dependent on certain features.

In addition, L2 regularization was applied with a penalty term (λ = 0.01) to discourage excessively large values of weight, fostering a more balanced distribution for learned variables. To enhance robustness of model, early stopping has been employed, where training has been discontinued if the loss of validation showed no improvement for 10 epochs that avoids unnecessary overfitting of the training data. Furthermore, a fivefold cross-validation method has been adopted to make sure the reliability and stability of the outcomes. This method comprised dividing the dataset into 5 diverse parts, training the network using four of these parts while validating on the remaining one, and reiterating this procedure 5 times. The performance metrics presented in Table 6 represent the mean scores across each folds, offering a more thorough assessment of the model’s efficacy.

Word embedding

At the present stage, the network takes in raw text as input and then breaks it down into tokens or single words. These tokens are then converted into a numerical value vector. Pre-trained word embedding systems like GloVe and Word2Vec have been utilized to produce a matrix of word vector. Both GloVe and Word2Vec models have been independently utilized to evaluate the network’s efficacy. The text that has \(n\) number of words is represented via \(T\), equaling \([{w}_{1}, {w}_{2}, . . . , {w}_{n}]\). Subsequently, the words get transformed into word vectors that have \(d\) dimensions. The input text is explained subsequently:

The input text must be standardized to a fixed length, represented via \(l\), since their lengths are diverse. In addition, zero-padding method amplifies its length. The text with excessive length is reduced. In contrast, the zero-padding approach is applied once the text has a shorter length compared to \(l\). Therefore, a matrix with the same dimension exists in all texts. All the texts have \(l\) dimensions that is mathematically represented in the subsequent manner:

Neural network

Background of recurrent neural network and gated recurrent unit

In this section, the background theory of the RNN will be described, and then LSTM will be fully explained with its features37. Initially, it should be mentioned that RNN has been utilized in diverse contexts for processing sequential data, and sentiment analysis is one of those contexts that has employed RNN a lot19,38,39. When the input has been regarded as \(x={x}_{1},. . ., {x}_{T}\), the hidden vectors and the output vectors, represented by \((h= {h}_{1},. . .,{h}_{T})\) and \((y={y}_{1},. . ., {y}_{T})\), have been accomplished by utilizing the following equation:

where component-wise of activation function and sigmoid function are represented via \(\Phi\). The weight of the input-to-hidden matrix is demonstrated via \(\cup\), the weight of hidden-to-hidden matrix is indicated via \(W\), the hidden-to-bias vector is depicted via \(b\), the weight of the hidden-to-output matrix is exhibited via \(V\) while utilizing Eq. (3), and output-to-bias is illustrated through \(c\)40. The traditional RNN has been depicted in Fig. 1.

Long short-term memory

The NN (neural network) is a prominent example of the nonlinear and nonparametric models, since it never depends on conceptions of the parametric design of the functional connection among parameters. Many research works are carried out on the use of NN. The main purpose of it is RNNs can store information from previous time steps and use it while processing the present step of time. Because of disappearing gradients, RNNs encounter difficulties in maintaining long-term dependencies. In the following, it should be mentioned that an LSTM is a form of RNN created to preserve long-term dependencies. When compared with other neural networks, machine learning, and other traditional deep learning models, it becomes evident that LSTM has the potential to outperform other networks.

The model recommended incorporates a gating unit within its network structure to regulate the influence of current data on previous data, thereby enhancing its memory capabilities. It has been designed to address the challenges in nonlinear sequence forecast. The LSTM model comprises forget gates, input gates, output gates, and cell states.

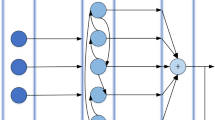

LSTM is a particular kind of RNN. Its gate system avoids the issues of gradient explosion and vanishing that arise in traditional RNNs. Furthermore, it is expert at grasping long-term dependencies. Hence, the LSTM that has memory functionality demonstrates a significant benefit when contending with challenges related to the prediction and classification of time series. The whole design of the LSTM has been represented in Fig. 2.

The hidden unit state and the sequence of the input layer are represented by \(({x}_{1}, {x}_{2}, . . . , {x}_{T})\) and \(\left({h}_{1}, {h}_{2}, . . . , {h}_{T}\right)\), respectively. In addition, the previously noted elements are represented in the following formulas:

where input vector of this network is depicted via \({x}_{t}\), the vector of cell output is represented via \(h\), the output gate has been demonstrated via \({o}_{t}\), the forget gate has been represented via \({f}_{t}\), and the input gate has been demonstrated via \({i}_{t}\). The status of the cell unit is displayed via \({C}_{t}\), and the input weight matrix is demonstrated via \(U\), the activation function is indicated via \(tanh\) and \(\sigma\). In addition, the bias and weight elements are, in turn, indicated via \(b\) and \(W\).

There is an essential sector within the present model called cell state displayed via \({C}^{t}\). Additionally, it can retain the memory of unit state in tth time, then unit state memory has been adjusted by utilizing gates of forget and input illustrated by \({f}_{t}\) and \({i}_{t}\), respectively. The key goal of the forget gate is for the cell forget remind its former situation \({C}_{t-1}\). In addition, the key goal of the input gate is enabling the received signals to upgrade the unit cell or stop it from being updated. In the end, the objective of the gate of output is controlling the unit state \({C}_{t}\)’s output and change it to the following cell. The internal framework of the recommended model consists of some perceptrons. Normally, backpropagation algorithms are mostly utilized methods of training.

Dwarf mongoose optimizer

The current sector explores the DMO (dwarf mongoose optimization) algorithm, which offers valuable visions into its processes and structure for optimization aims. The algorithm takes inspiration from the Helogale, a type of dwarf mongoose found in semi-desert regions of Africa and the savannah bush. These animals typically live in the vicinity of termite mounds, rocks, and hollow trees, employing those objects as accommodation. These creatures are small carnivores, measuring approximately 47 cm in length and weighing around 400 g. They typically live in family groups, led by a pair of alphas.

The young members of these individuals’ families play more important roles than their siblings, while females have more noteworthy roles in comparison with males. The animals exhibit the highest level of collaboration amid the other mammals. Each animal has a specific role determined via its age and gender, such as attacking intruders, guarding, babysitting, and attacking hunters. Researchers have thoroughly examined all roles, rank order, and relationships amid candidates in separate studies.

The candidates mark the objects within their territory using their cheeks and anal glands. This marking manner helps them have more secure feeling. Every family participates in marking their territory and works together, and their cooperation is heavily reliant on their marking.

Studying the small animals that inhabit the Taru Desert reveals that the region consists of numerous rocks, termite mounds, and hollow trees. Research indicates that it takes approximately 21.8 days for these animals to mark the determined zone. Various studies have shown that the size of the area can greatly influence their coverage range, foraging patterns, and sleeping mounds, while the boundaries of their territories remain unchanged.

These creatures lack a venomous bite and instead rely on a strong bite to crush their prey’s skull, targeting the eye. This hunting method limits the size of the animals they can hunt, and they are not observed cooperating to take down larger prey. The constraints of their hunting method significantly impact their ecological adaptations and social behavior, forcing them to be advanced in securing enough nutrition for their family members.

The candidates mainly hunt small animals, which are not suitable to share within the group, with the exception of the young individuals. These individuals sometimes quest arthropods as their main target; however, they probably catch minor mammals, small birds, and gecko lizards, dependent on elements such as space use, group size, and prey size. The unpredictable and wide range of targets makes it hard for the candidates to discover a good meal. Extensive search has been considered necessary to gain sufficient nutrition. These candidates have a semi-nomadic lifestyle, rarely returning to their former mound of sleeping and traveling long distances to discover nutrition.

Following the current pattern, the group reduces the target, ensures a hunting territory, and avoids over-hunting. The groups move together and stay linked by the use of a brief nasal sound “peep” at a 2 kHz frequency or vocalization produced via the alpha female and obtaining nutrition at the same time. The space moved via the group on a daily basis varies based on factors, such as the group size, disruptions in foraging to avoid predators, and the presence of young members. The alpha determines various aspects, including sleeping mounds, foraging routes, and the distance covered, then the search is initiated.

Studies relevant to these individuals have shown that they do not engage in the behavior of bringing food to young members or nursing mothers. This discovery has impacted the social organization of the animals, particularly regarding parental behavior. As a result, compensatory community adjustments are observed and are detailed below. Some candidates assume the responsibility of caring for the young, a task that is typically undertaken by both male and female members. A team of babysitters looks after the juveniles in the middle of the day, when they have been altered by the initial search group. Nests are not built for the young ones; instead, they are placed among sleeping mounds. When the juveniles join the group, they have thin fur and cannot cover long distances by running. As a result, their daily searching decreases, and their move becomes restricted.

Simply put, these individuals cannot hunt big prey to provide food for the whole group. The individuals have selected to inhabit a seminomadic life in an area that is big for the total group. Living a seminomadic life helps prevent excessive use of any region and makes sure global search of the whole zone, because the individuals never return to their former mounds of sleeping.

To optimize the suggested algorithm, the candidates are initialized by utilizing Eq. (11). The current population is generated in a stochastic manner in the lower bound \(LB\) and upper bound \(UB\).

where the element \(B\) is selected from population in a stochastic way produced by utilizing Eq. (12), \(d\) is the dimension of the problem, the position of the dimension \(j\) of the \({i}^{th}\) population is represented via \({b}_{i,j}\), the size of the population is indicated via \(n\).

A stochastically generated quantity is demonstrated via \(unifrnd\) distributed in a uniform manner. Here, the upper and lower bounds of the problem have been, in turn, depicted via \(VarMax\) and \(VarMin\), and the decision variables’ size is demonstrated via \(VarSize\). The finest achieved solution is taken into account as the best problem-solver achieved up to now.

The model of DMO

The DMO has been made to imitate the compensatory adjustment of behaviors witnessed in the creatures. This adaptation entails regulating prey size through social interaction with determined caretakers and adopting a partially nomadic lifestyle. To implement the proposed model, the social organization of these creatures is divided into three classes, including the babysitters, alpha group, and scouts. The compensatory alteration of behaviors of all these groups results in a semi-nomadic life within a region capable of supporting the whole group.

These creatures collaborate to scout and search for food, and the same group does these activities at the same time, seeking out new sleeping mounds to discover nutrition. The group of alpha begins foraging and looking for a novel sleeping mound concurrently to determine when the conditions for exchanging babysitters have been met. The process has been repeated by calculation of the mean of sleeping mounds in all the iterations. In accordance with the current value, the following step of the current optimizer has been ascertained employing Eq. (12). Their nomadic life assists in preventing overuse of a zone and promotes the global search of the whole space without returning to the former mound of sleeping.

The group of alphas

Once the starting population has been computed, population’s possibility fit value has been measured in accordance with Eq. (13). Furthermore, the alpha is represented via \(\beta\) and is selected in accordance with the formerly determined component.

The quantity of these members in the group of alpha is represented via \(n-ds\), \(ds\) indicates the quantity of these individuals, and vocal sound of the alpha female assists each candidate in being on the right path, which is represented by \(peep\).

These candidates utilize the expressions of this optimizer to generate a great situation for nutrition according to the Eq. (14). Additionally, the sleeping mounds are indicated via \(\epsilon\).

where the random quantity has been displayed via \(phi\) that ranges from -1 to 1. When all the iterations are conducted, the mound of sleeping has been calculated in the following manner:

The following formula has been utilized to calculate sleeping mound’s average value.

The scouting stage gets started to assess the following sleeping mound or nutrition source when the condition of babysitting exchange has been met.

The group of scouts

Each scout actively searches for novel mounds of sleeping, since they generally do not return to old mounds that promotes global search. While foraging for nutrition, the scouts simultaneously seek out novel mounds of sleeping. This behavior is displayed as an evaluation of the candidates’ success in finding a novel sleeping mound, in accordance with their overall efficacy. Through effective foraging, the group will eventually discover a various mound of sleeping. This has been depicted in Eq. (17). This equation can replicate the manner of scout candidates.

where the random number has been illustrated via \(rand\) that ranges from 0 to 1, \(CF\) displays a parameter that can manage all the manner of these individuals and can be reduced in a linear way that has slight decrease in each iteration; this equals \({\left(1-\genfrac{}{}{0pt}{}{iter}{Ma{x}_{iter}}\right)}^{\left(2\genfrac{}{}{0pt}{}{iter}{Ma{x}_{iter}}\right)}\). \(M\) is regarded as vector that displays these candidates move to the novel sleeping mound, which equals \({\sum }_{i=1}^{n}\frac{{B}_{i}\times s{m}_{i}}{{B}_{i}}\).

The babysitters

Babysitters typically look after younger candidates and ensure their safety. Their duties often differ, and they typically look after candidates of a subordinate individuals. The alpha female, who is the leader of the group, has the authority to lead the group in search of nutrition. The dominant female regularly comes back two times a day to feed the young candidates. The quantity of caregivers has been ascertained by the population, reducing it by a definite proportion. The community has been replicated by diminishing the size of population on the basis of the number of caregivers. The caregiver exchange variable reorganizes scouting information and the nutrition that were handled before by members of family and substitutes them.

Furthermore, to decrease the motion of the alpha group and insist on the exploitation, the babysitters’ fitness weight has been set to 0. Therefore, it is ensured that there will be a reduction in weight of average group in the following iteration.

Amended dwarf mongoose optimizer (ADMO)

The effectiveness of the DMO (dwarf mongoose optimizer) has been illustrated in overcoming a variety of optimization problems. Although, it has a few constraints, such as a tendency to get stuck in local optima and slow convergence, especially when contending with problems with high dimensions. To address these issues, combining DMO with another optimization algorithm turns out to be a good approach. One interesting method is to merge DMO with the particle swarm optimizer (PSO), which is inspired by the collective manner of fish schools and bird flocks.

By merging DMO with PSO, numerous benefits can be achieved. Initially, the incorporation of PSO facilitates improved escape from local optimum by offering further global search in the solution space. This assists DMO in evading early convergence and successfully avoiding local optimum. Next, PSO introduces a method that achieves a fine equilibrium between exploiting present favorable regions and exploring novel solutions.

This method of balancing exploitation and exploration speeds up the convergence of the suggested algorithm. Finally, PSO increases the variety of the population of DMO by introducing novel particles into the search space. This helps prevent early convergence and encourages a wider global search of candidate solutions. To improve DMO’s efficacy, the PSO is smoothly combined with the location-updating procedure. The exact summary of the incorporation has been discussed below:

The process of updating the DMO’s position has been improved by combining the position updates and velocity on the basis of PSO. By adjusting PSO’s velocity and situation formulas to match the search manner of this algorithm, a seamless combination has been achieved. Incorporating velocity concept of PSO offers a directed search approach that leads the candidates of DMO toward more advantageous solutions.

The exploitation and exploration stages of PSO are influenced by its social and cognitive components, respectively, which further improve the search operation. Integrating DMO with PSO offers a method for improving the optimizer’s efficiency, overcoming its limitations, and expanding applicability of it to a broader set of optimization issues. By employing the power of both optimizers, a more resilient optimization procedure, higher quality of solution, and faster convergence can be achieved.

The constant element \(\alpha\) has been employed for enhancing PSO and DMO, where \(\alpha\) equals 0.6 and has been employed to raise the efficiency of DMO. Moreover, the velocity that has been achieved by this process is calculated in the following way:

where, the finest location of the swarm and the particle have been, in turn, illustrated by \({B}_{i}^{G}\) and \({B}_{i}^{L}\). The present and the prior velocity of the particle have been, in turn, demonstrated by \({v}_{i+1}\) and \({v}_{i}\). Furthermore, the coefficients of local and global best solutions have been, in turn, displayed via \({\theta }_{2}\) and \({\theta }_{1}\).

The hyperparameters have been chosen using grid search on each benchmark function whose values optimize quality of solution and speed of convergence. To exemplify, the hyperparameter \(\alpha\) balances local search and global search, and \({\theta }_{1},{\theta }_{2}\) prevent early convergence.

The theoretical advancement of ADMO lies in its distinct combination of DMO’s compensatory foraging manner with the velocity-driven search of PSO. In contrast to conventional PSO hybrids, which depend on random mutation or crossover, ADMO employs the leadership of the mongoose alpha group to guide particles. This hierarchy mitigates the issue of early convergence, a frequent problem in PSO, while still ensuring effective local search. The velocity update enhances this equilibrium by balancing local and global optima, a feature that is missing in DMO or PSO.

LSTM on the basis of amended dwarf mongoose optimizer

The models’ variables are manually adjusted to fit LSTM, affecting the forecast results from the present network based on the aforementioned factors. Consequently, this study proposes an enhanced LSTM/ADMO model.

The primary goal is the optimization of the pertinent variables of the LSTM using the remarkable variable optimization skills of the optimizer presented earlier. To make sure the investigation’s objectivity, the configuration of the LSTM has been kept constant, and there has been no raise in the quantity of nodes with the layers. The process of forming the LSTM/RDMO has been thoroughly described as follows:

-

(1)

The RDMO’s relevant parameters are set up, such as fitness function and population size, which are identified as tasks with free variable objects. The LSTM’s parameters are established by defining the initial rate of learning, initial quantity of iterations, time window, and size of packet. In the present article, the error has been regarded as a cost function calculated subsequently:

$$cost=1-acc\left(f;D\right)=1-\frac{1}{m}\sum_{i=1}^{m}I(f\left({x}_{i}={y}_{i}\right)$$(20)where the sequences of training is represented via \(D\), the quantity of samples is indicated via \(m\), the predicted sample is displayed via \(f({x}_{i})\), the original label of sample is depicted via \({y}_{i}\), and the function is illustrated via \(I(f\left({x}_{i}={y}_{i}\right)\). Once \(f ({x}_{i})\) equals \({y}_{i}\), \(I(f ({x}_{i}) = {y}_{i})\) equals 1. On the other hand, if it does not equal \({y}_{i}\), \(I(f ({x}_{i}) = {y}_{i})\) equals 0.

-

(2)

The LSTM’s variables have been considered highly essential to develop the constant particles. The components of the particle have been regarded as \(num\_iter\), \(alpha\), and \(batch\_size\) that in turn illustrate the quantity of iterations, rate of learning, and the size of batch. They have been found to be diverse elements of RDMO optimizer.

-

(3)

The level of focus increases. According to the improved focus level, the fitness function is calculated, and then the potential best focus level is increased. Ultimately, the overall best concentration level of the particles is increased.

-

(4)

The LSTM provides prediction value when the number of iterations is able to achieve the highest value of 30 iterations. If this is not the case, the process needs to restart from phase three.

-

(5)

In order to develop the LSTM/RDMO, the optimum parameters are applied to the LSTM.

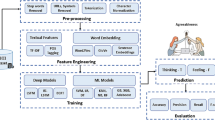

Methodology

Materials and the stages

The SST-2 (Stanford Sentiment Treebank) dataset serves as a popular benchmark for sentiment analysis tasks41. It is a binary classification dataset sourced from the Stanford Sentiment Treebank, that contains reviews of movie from the Rotten Tomatoes platform. This dataset has been created to categorize sentences into two sentiment classes, including positive and negative.

The SST-2 dataset contains 70,042 sentences that each one has been annotated with a sentiment classification. The dataset is divided into a training set and a testing set, with 70% (49,029 sentences) designated for training and 30% (21,013 sentences) set aside for testing. This division facilitates a thorough assessment of the model’s effectiveness. Regarding class distribution, the dataset is balanced. This even distribution guarantees that the model is trained on an approximately equal number of negative and positive sentiments, lessening the likelihood of bias toward a specific class.

The dataset holds significant importance for sentiment analysis as it encompasses a diverse array of linguistic expressions, such as slang, informal language, and subtle emotional nuances. Each sentence within the dataset has been tagged according to the sentiment it conveys, making it ideal for training models to interpret and categorize sentiments.

Additionally, the IMDB dataset consists of 50,000 movie reviews obtained from IMDb, created by Andrew Maas42. This collection is split into 25,000 reviews for training and the rest for testing the network. Each of these sets contains 12,500 positive reviews and 12,500 negative reviews. The classification of the reviews is based on the IMDb rating system. Viewers have the option to rate movies on a scale from 1 to 10, and according to the dataset creator, ratings of 5 stars or lower are deemed negative, whereas ratings of 6 stars or above are considered positive. Each movie can have a maximum of 30 reviews. On average, a review contains 234.76 words and a standard deviation of 172.91 words. Overall, the dataset includes 88,585 unique words.

Data cleaning

Data cleaning has been considered the initial phase while processing the present study. Throughout this phase, the extracted data is shifted from the files and have been retained within the memory. There are three sub-stages here help clean the data before prep-processing stage begins. By conducting this stage, the raw data get ready to go through other stages; ultimately, it helps achieve superior, more accurate, and, more consistent results.

-

(a)

Removal of unwanted characters. In this phase, some redundant attributes like URLs, online links, etc. are eliminated from the text while utilizing the enhanced regular words. Here, all the unnecessary data are eliminated, so that data get cleaned.

-

(b)

Correction. Concerning the current phase of the study, it has been endeavored to change the abbreviated words into a token and correct all the slangs utilized by the users. To exemplify, “GM” would change into “good morning”. This stage has been considered really essential and worthy for the following stages, because abbreviated words are highly confusing to be analyzed while doing sentiment analysis. In fact, by the use of this stage, the following stages will have more accurate results.

-

(c)

Removal of stop words. There are myriad words that must be eliminated while conducting this stage. Some common stopwords are accordance, above, a, an, and actually. Generally, stopwords have been utilized in Natural Language Processing (NLP) and text mining to remove the words that have been utilized and convey no meaning or minor beneficial data. The stopwords that have been utilized are available at: https://countwordsfree.com/stopwords

Data preprocessing

There are several procedures that should be conducted during this phase, including word stemming, tokenization, and part-of-speech tagging, all of which have explained subsequently.

-

(a)

Tokenization. The movement of tokenization is to break the text down into meaningful phrases, symbols, words, and tokens. TextBlob Word Tokenize tool is utilized for tokenization of the text. It is noteworthy that the common data structures are replicated to maintain tokens and sentences of the texts. This tool is publicly available at: http://textanalysisonline.com/textblob-word-tokenize.

-

(b)

Stemming. In this procedure, the inflected words get decreased to its base word. Here, porter-2 algorithm43 has been utilized to change the tokens into the stem format and save them in the original token and the keyword.

-

(c)

Part-of-speech tagging. This is a procedure that words have been tagged in accordance with the explanation and context. Maxent Tagger has been employed for doing this44. The critical objects have unique design pertinent to those tokens, the stem format of the token, and part-of-speech tag. Once the network is preprocessed, it is transferred to the following module.

Neural network and algorithm

During the present phase, the data have been fed into the LSTM optimized via ADMO. After that, all features get extracted and classified. In fact, the phases of data cleaning and data pre-processing extract the attributes of the dataset. Of course, the polarity of sentiment analysis, negative or positive, have been determined by the adjectives. After adjective extraction, they get segmented. There are some words place before and after the adjective that get eliminated. If the phrase is “an amazing scene”, “an” and “scene” get eliminated. In the following, the extracted tokens are classified into 2 polarities, namely positive and negative.

Evaluation metrics

During this section, the findings of the network are evaluated. The outcomes get assessed according to the most normally utilized metrics, including F1-score, recall, precision, and accuracy. These measurement indicators have been computed in the subsequent manner:

where True positive has been displayed via \(TP\) that is when the tokens have been categorized as positive in an accurate manner, revealing that the categorized and the real values are positive. Moreover, Flase Positive has been demonstrated via \(FP\) that occurs when the tokens have been erroneously categorized as positive. False Negative is represented via \(FN\) that occurs when the tokens are erroneously classified as negative. Ultimately, True Negative has been indicated via \(TN\) that occurs when the tokens have been erroneously categorized as negative.

Complexity and FLOP analysis

In order to further confirm the effectiveness of the suggested LSTM–ADMO model, a thorough examination of its computational complexity and the quantity of floating-point operations (FLOPs) have been presented necessary during both inference and training. This assessment is essential for comprehending the model’s feasibility and scalability, particularly when implemented in practical applications.

Theoretical complexity analysis

The computational complexity associated with the LSTM model mainly depends on the quantity of parameters and the length of the sequence. For an LSTM that has \(h\) hidden units and an input sequence of length \(T\), the complexity for one forward pass has been indicated via \(O(T\times {h}^{2})\). This is a result of the element-wise operations and matrix multiplications that take place within the gates of LSTM.

The ADMO algorithm, utilized for optimizing the LSTM’s hyperparameters, adds an extra layer of complexity. This complexity has been influenced by the quantity of iterations represented via \(num\_iter\), the size of the population demonstrated via \(n\), and the \(D\) dimensionality of the optimization problem. The total complexity of ADMO is calculated via \(O(num\_iter \times n \times D)\).

When integrating ADMO with LSTM, the overall complexity is the integration of the complexity from the forward and backward passes of LSTM and the optimization intricacy of ADMO. The total complexity of suggested model has been represented in the following:

FLOP analysis

To compute the total quantity of FLOPs necessary for the LSTM–ADMO model, both the ADMO and LSTM elements have been considered. For an LSTM that has an input sequence of length \(T\) and \(h\) hidden units, the FLOPs needed for each time step has been found to be \(4{h}^{2}+4h\). As a result, the overall FLOPs required for a single forward pass can be expressed as:

The ADMO requires assessing the cost function of all individual solutions within the population. If the population consists of \(n\) individuals and the quantity of iterations is \(num\_iter\), the FLOPs for ADMO is calculated in the following manner:

hence, the FLOPs of the suggested model throughout training can be computed like below:

Empirical runtime analysis

To support the theoretical examination, an empirical runtime assessment has been performed using the SST-2 dataset. In Table 2, the training duration, inference time, and FLOPs for the LSTM–ADMO model alongside other models have been represented, which include LSTM, Bi-GRU, GRU, KNN, CNN, BERT, and CNN-BERT.

From Table 2, it is clear that the LSTM–ADMO model requires more training time and has higher FLOPs than simpler models such as CNN and KNN, yet it remains more efficient than other models. This is attributed to the extra computational burden introduced by the ADMO optimization procedure. Nevertheless, the inference time for the suggested model is competitive, making it appropriate for real-life applications of sentiment analysis. Amongst the conventional models, KNN showed the least computational expense concerning FLOPs and training time, since it does not require intricate optimization of parameter. Nevertheless, KNN’s robustness and accuracy are considerably lower in comparison with deep learning models such as LSTM–ADMO.

Discussion on efficiency

The LSTM–ADMO model is computationally demanding throughout its training phase as a result of the optimization procedure; however, it provides notable enhancements in robustness and accuracy, which is evident from the experimental findings. The balance between model effectiveness and computational complexity has been justified by the outstanding outcomes produced by LSTM–ADMO. Additionally, the inference time of the suggested model is similar to other models, making it a suitable choice for implementation in practical scenarios where both efficiency and accuracy are essential.

In contrast, simpler frameworks such as GRU and KNN are efficient in terms of computation but fail to effectively capture intricate patterns within the data, leading to diminished accuracy. Conversely, transformer-based frameworks like BERT and CNN-BERT, although powerful, demand much greater computational power that makes them less appropriate for real-time uses.

Computational efficiency

First of all, it should be mentioned that the number of 30 individuals was selected for population since the population smaller than 20 resulted in insufficient global search, and the population bigger than 50 resulted in increased cost without any improvement in accuracy.

The efficiency of the ADMO (amended dwarf mongoose optimization) regarding the optimization of LSTM hyperparameters is a crucial element of this research. While section "Validation of the amended dwarf mongoose optimization (ADMO) algorithm" highlights ADMO’s outstanding performance on benchmark functions, as illustrated in Table 4, it is also vital to assess its efficacy regarding convergence speed and computational resources in comparison with other hyperparameter optimization techniques like Particle Swarm Optimization (PSO), Genetic Algorithm (GA), and Bayesian Optimization (BO).

Regarding convergence speed, ADMO demonstrates quicker convergence to the optimum hyperparameters as a result of its hybrid characteristics, which integrate the exploratory strengths of DMO (Dwarf Mongoose Optimization) with the velocity-guided approach of PSO. In contrast to BO, which depends on probabilistic replacement models and can cause high computational costs in high-dimensional environments, ADMO effectively modifies its search approach, thereby minimizing unnecessary assessments. Empirical findings, as shown in Table 4, indicate that ADMO achieves lower mean values with fewer iterations in comparison with SDO, TOA, MRFO, WCO, and EO.

Additionally, ADMO needs a smaller size of population compared to PSO or GA to obtain similar or superior results, as demonstrated by its efficacy. This leads to a decrease in computational overhead related to larger populations. Furthermore, the incorporation of PSO’s velocity approach into ADMO promotes a favorable balance between global search and local search. This prevents the common early convergence problems experienced by PSO and GA while preserving computational efficiency.

Other optimization methods have their own limitations and challenges. For instance, BO is recognized for its sample efficacy but faces high computational costs within each iteration, particularly when working with complicated spaces with high dimensions. Conversely, ADMO’s iterative updates are computationally efficient, making it more scalable for optimization of hyperparameters of LSTM. The FLOP analysis, as represented in Table 2, illustrates that the training time for LSTM–ADMO (1100s) is reasonable considering simpler models (e.g., LSTM at 900s) and considerably quicker than transformer-based methods such as BERT (1400s).

ADMO achieves computational efficacy through its adaptive search technique, which reduces unnecessary assessments while it avoids local optima. In contrast to other optimizers, ADMO does not depend on costly large populations, making it particularly appropriate for scenarios where resources are limited. The validation presented in section "Validation of the amended dwarf mongoose optimization (ADMO) algorithm" (Table 4) alongside the runtime metrics (Table 2) demonstrate that ADMO provides a more resource-efficient and quicker method for hyperparameter optimization in comparison with traditional techniques, while maintaining performance standards.

From a theoretical view, the convergence of ADMO has been assured under specific conditions. First, the leadership of the alpha group guarantees comprehensive exploration of the solution space, and second the velocity component meets the random convergence requirements for algorithms like PSO. More specifically, the inertia weight has been dynamically modified, which ensures asymptotic convergence. This combined approach prevents the stagnation issues seen in DMO (with stable step sizes) and PSO (which has inflexible social-cognitive dynamics).

Validation of the amended dwarf mongoose optimization (ADMO) algorithm

Impartial and thorough validation has been conducted to verify the effectiveness of the amended dwarf mongoose optimization (ADMO) algorithm. The first assessment of the algorithm includes the application of 20 widely recognized cost functions. Subsequently, the results generated by the ADMO have been compared with those of five other algorithms, including supply–demand-based optimization (SDO)45, world cup optimization (WCO)46, equilibrium optimizer (EO)47, teamwork optimization algorithm (TOA)48, and manta ray foraging optimization (MRFO)49. The parameter values used for the employed algorithms are presented in Table 3. Table 3 lists the values of the variables utilized in the analysis of each algorithm.

The research has utilized benchmark functions categorized into 3 groups, including unimodal (functions F1 to F7), multimodal (functions F8 to F13), and fixed-dimensional (functions F14 to F20), each with 30 dimensions. The primary objective of this study is to identify the lowest value for 23 selected functions from the CEC2017 benchmark.

The algorithm with the lowest indicator value will show the highest performance. The outcomes’ standard deviation (StD) is also considered to confirm the comparison results’ reliability. To confirm reliable research outcomes, each algorithm has undergone 20 tests, and their standard deviation and mean value are analyzed. Table 4 displays the comparative analysis of the ADMO in comparison with other algorithms.

The results presented in Table 4 indicate that the ADMO outperforms other optimization algorithms across various test functions. Specifically, the ADMO has achieved the lowest mean value in comparison with other algorithms for 18 out of 20 test functions. Moreover, the ADMO has showed the lowest standard deviation in comparison to other algorithms for 17 out of 20 test functions. These results indicate that the ADMO not only finds superior solutions but also demonstrates better stability and reliability compared to other optimization algorithms.

It is important to mention that in just two functions out of 20, other algorithms have a lower average value in comparison with the ADMO. However, the ADMO has displayed strong performance on most of the functions, with competitive average values and smaller standard deviations compared to many other algorithms. This represents the ADMO consistently delivers strong performance across a range of problems.

Briefly, the statistical results presented in Table 4 indicate the efficacy of the proposed ADMO in comparison to other new optimization algorithms, including SDO, WCO, EO, TOA, and MRFO. The ADMO proves its reliability and effectiveness in finding optimal or nearly optimal solutions in different problem domains that establishes itself as a valuable tool for tackling intricate optimization challenges.

Simulation results

Prior to this, it was stated that a variety of metrics were used to demonstrate the effectiveness and performance of the proposed technique compared to other methods. These metrics help to illustrate how well the model performed.

The performance metrics, such as F1-score, precision, accuracy, and recall are computed for the proposed LSTM–ADMO, LSTM, Bidirectional Gated Recurrent Unit (Bi-GRU), GRU, and K-Nearest Neighbors (K-NN) models using both Word2Vec and GloVe embedding methods. The primary objective is to analyze the impact of preprocessing on the model outcomes. In Table 5, the results obtained from the suggested model have been compared with and without preprocessing to demonstrate the effect of preprocessing on the performance.

It has been seen from the results that have been represented Table 5 the procedure of pre-processing can highly influence the results of the recommended LSTM–ADMO. Of course, the procedure of data cleaning and data preprocessing stages facilitate the operation of the sentiment analysis. Thus, it can be understood that these stages can generalize to other models as well. In fact, the proportion of error is computed by subtracting 100 from the accuracy value. It can be interpreted from the aforementioned table that proportions of error in this model while using Word2vec were 2.16 and 7.33 with and without preprocessing stages, respectively. In addition, the proportions of error while using GloVe were 2.49 and 7.73 with and without preprocessing stages, respectively. Furthermore, all the data and the results obtained with and without preprocessing stages while using both models, namely GloVe and Word2vec have been represented in Figs. 3 and 4.

Of course, the results have illustrated that preprocessing can greatly influence the performance of the suggested network while using two diverse models. Although there are minor differences between Word2vec and GloVe. To exemplify, regarding recall and accuracy, Word2vec model could outperform the GloVe model by 0.05 and 0.33, respectively. However, regarding precision, the GloVe model could outperform the other one by 0.53.

Within the current research, an extensive evaluation of the efficiency of diverse neural networks have been performed in accurately classifying the sentiment. The SST-2 dataset has been used to compare and analyze the efficacy of multiple models, including LSTM–ADMO, LSTM, Bidirectional Gated Recurrent Unit (Bi-GRU), GRU, and K-Nearest Neighbors (K-NN). The obtained results, which have been outlined in Table 6, not only demonstrated the effectiveness of LSTM–ADMO but also offered insights into how it was compared with other cutting-edge networks, all of which employed GloVe and Word2Vec for word embedding and vectorization. Essentially, the obtained findings emphasized the considerable influence of GloVe and Word2Vec as methods for word embedding and vectorization, highlighting their ability to produce outstanding results. In the following, all the obtained results and data are represented in Table 6.

The data in Table 6 shows that the accuracy of different word vector techniques is nearly identical, with only slight variations. It is important to note that this holds true for all models, indicating that the results for each model using different word vector approaches are very similar, demonstrating the effectiveness of both techniques.

In this analysis, it is evident that the proposed model showed superior performance compared to traditional methods when it comes to sentiment analysis and determining text polarity using two diverse word embedding techniques with the SST-2 dataset. The LSTM–ADMO model achieved precision, recall, accuracy, and F1-score values of 95.74%, 96.27%, 97.51, and 96.00 respectively while using the GloVe approach. Furthermore, when utilizing Word2Vec, the model achieved precision, recall, accuracy, and F1-score values of 95.21%, 96.32%, 97.84, and 95.71%, respectively.

The CNN model showed slightly good results, achieving precision, recall, accuracy, and F1-score values of 89.38%, 89.62%, 89.51%, and 89.50% with Word2Vec, and 89.46%, 89.73%, 89.50%, and 89.59% with GloVe. These findings suggest that while CNNs may not outperform the proposed LSTM–ADMO model, they exhibit slightly good performance. The slight enhancement in performance with GloVe embeddings indicates that the pre-trained semantic relationships in GloVe could enhance the CNN’s feature extraction abilities.

BERT demonstrated relatively lower performance in comparison with other models, achieving precision, recall, accuracy, and F1-score values of 88.40%, 88.27%, 88.35%, and 88.33% for Word2Vec, and 88.45%, 88.21%, 88.39%, and 88.33% for GloVe. The CNN-BERT hybrid model achieved precision, recall, accuracy, and F1-score values of 92.20%, 92.37%, 92.42%, and 92.28% with Word2Vec, and 92.25%, 92.31%, 92.45%, and 92.28% with GloVe. This indicated that integrating the local feature extraction strengths of CNNs with BERT’s contextual comprehension can lead to considerable enhancements in efficacy. The CNN-BERT model connected transformer-based models with traditional deep learning frameworks, showcasing the effectiveness of hybrid strategies within tasks of sentiment analysis. While its performance was still marginally lower than the suggested LSTM–ADMO model, it highlighted the significance of utilizing global and local contextual information for precise sentiment categorization. The comparison between the performance of the recommended model and other techniques using diverse word embedding techniques is depicted in Fig. 5.

The outcomes are presented in the form of F1-score, precision, recall, and accuracy. The suggested sentiment analysis model performed better than the other models regarding accuracy. This occurred since the model could clean the data by eliminating unnecessary characters and correction of informal language and shortened forms. Furthermore, multiple data preprocessing steps such as tokenization, stemming, and part-of-speech tagging were carried out, ultimately enhancing accuracy. In addition, in the sentiment analysis procedure, they assisted in identifying accurate polarities in texts, resulting in improved efficacy.

The recommended model demonstrated slight differences in performance when using Word2vec in comparison with the results achieved by GloVe based on the 4 metrics that have been utilized. Nevertheless, the differences were not significant. The outcomes validated the exceptional performance of the recommended model. The impressive F1-score, precision, accuracy, and recall reflected that the model’s effectiveness is improved by part of speech tagging, word embedding, and thorough preprocessing steps. In the following, FastText as contextual embedding has been used in this study in order to investigate its influence on the results (Fig. 6).

The experimental findings presented in Table 7 illustrate that the proposed LSTM–ADMO model outperformed other deep learning architectures even when utilizing FastText embeddings. The LSTM–ADMO model accomplished the highest results, achieving an accuracy value of 95.24%, precision value of 94.19%, recall value of 95.38%, and an F1-score value of 95.23%. This indicated a notable enhancement compared to the conventional LSTM model, which showed a 6% decrease in performance across all metrics, underscoring the effectiveness of the ADMO optimization method.

Among the comparison models, CNN-BERT and Bi-GRU represented somewhat good results, reaching approximately 90–91% across various metrics, while more straightforward models like KNN, CNN, and GRU showed more modest performance within the 85–87% range. The transformer-based BERT model performed worse than LSTM–ADMO in spite of its increased complexity, which indicated that for this task, the optimized LSTM design offered better efficiency. These outcomes align with the model’s efficiency utilizing other embedding approaches, namely GloVe and Word2Vec, further confirming LSTM–ADMO’s applicability and resilience across various text representation approaches. Collectively, these outcomes illustrated that the integration of LSTM with ADMO optimization achieved great performance for sentiment analysis while preserving computational efficiency. For further analysis, the models have tested on IMDB dataset (Fig. 7).

The results of the experiments clearly indicated that the LSTM–ADMO model outperformed all baseline methods. Utilizing both GloVe and Word2Vec embeddings, LSTM–ADMO achieved higher accuracy rates (97.74–97.47%) and F1-scores (96.50–96.37%), surpassing transformer-based networks such as BERT (88.49–88.42% accuracy) and CNN-BERT (92.52–92.49% accuracy) by 5–9%. This notable difference in performance implies that complex pre-trained models might be unnecessarily demanding in terms of resources for tasks of sentiment analysis. Additionally, the model demonstrated impressive consistency, exhibiting small fluctuations in performance across various embedding approaches, which highlighted its strength to different input representation options (Table 8).

In conventional frameworks, Bi-GRU demonstrated slight enhancements in comparison with GRU, whereas standard LSTM exceeded GRU variants by 2–3% in accuracy, underscoring the importance of modeling long-term dependencies. The thorough assessment in all metric validates the balanced efficiency of LSTM–ADMO for sentiment analysis. These results establish LSTM–ADMO as a capable and efficient alternative to more resource-intensive transformer models and traditional deep learning methods, especially in scenarios where resource efficiency and accuracy are of high importance. The findings strongly indicated the model’s practical applicability for sentiment classification tasks in real-world settings.

Conclusion

The development of artificial intelligence has displayed favorable progress for the future. The current article has proposed a novel categorization model for sentiments by employing Long Short-Term Memory (LSTM), which has been optimized by the amended dwarf mongoose optimization (ADMO) algorithm. This unique approach aided the network in effectively classifying sentiments of text. The model has been tested and trained using the SST-2 dataset. The word embedding approaches, called Word2Vec and GloVe, were utilized for word vectorization. In the present study, the suggested dataset contained 70,042 sentences. 30% of these sentences were allocated for testing, and 70% were allocated for training, which equal 21,013 and 49,029 sentences respectively. An important step was taken to adapt hyperparameters employing the ADMO to optimize the LSTM. In the end, the model successfully categorized sentiment of text and determined if they were positive or negative. The experimental outcomes verified the effectiveness and feasibility of the network. Additionally, all the data underwent cleaning prior to getting preprocessed, including stages such as removal of stop words, correction, and removal of unwanted characters. After cleaning the data, data preprocessing stage was conducted. This stage has been conducted by the use of some techniques like stemming, part-of-speech tagging, tokenization. The suggested LSTM–ADMO was contrasted with some other networks. After analyzing the data, it was revealed that the suggested model illustrated high rate of accuracy, robustness, and reliability compared to other networks, namely LSTM, Bi-GRU, GRU, and KNN. The LSTM–ADMO could, in turn, accomplish the values of 95.74%, 96.27%, 97.51, and 96.00 for precision, recall, accuracy, and F1-score while using GloVe and could, in turn, accomplish the values of 95.21%, 96.32%, 97.84, and 95.71% for precision, recall, accuracy, and F1-score while using Word2Vec. Generally, it was concluded that the suggested model could immensely surpass other models, and there was so little difference between two various word embedding techniques. The initial processing steps have been specifically designed for English language text. The effectiveness of the model when applied to multilingual or non-English datasets has not been assessed, which limits its use in other languages.

Data availability

All data generated or analysed during this study are included in this published article.

References

DiMaggio, P., Hargittai, E., Neuman, W. R. & Robinson, J. P. Social implications of the Internet. Ann. Rev. Sociol. 27(1), 307–336 (2001).

Wang, C. & Zhang, P. The evolution of social commerce: The people, management, technology, and information dimensions. Commun. Assoc. Inf. Syst. 31(1), 5 (2012).

Han, M., Zhao, S., Yin, H., Hu, G. & Ghadimi, N. Timely detection of skin cancer: An AI-based approach on the basis of the integration of echo state network and adapted seasons optimization algorithm. Biomed. Signal Process. Control 94, 106324 (2024).

Severyn, A. & Moschitti, A. Twitter sentiment analysis with deep convolutional neural networks. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, 959–962 (2015).

Jianqiang, Z., Xiaolin, G. & Xuejun, Z. Deep convolution neural networks for Twitter sentiment analysis. IEEE Access 6, 23253–23260 (2018).

Tellez, E. S. et al. A case study of Spanish text transformations for Twitter sentiment analysis. Expert Syst. Appl. 81, 457–471 (2017).

Dang, N. C., Moreno-García, M. N. & De la Prieta, F. Sentiment analysis based on deep learning: A comparative study. Electronics 9(3), 483 (2020).

Hasan, M. R., Maliha, M. & Arifuzzaman, M. Sentiment analysis with NLP on Twitter data. In 2019 International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering (IC4ME2) 1–4 (IEEE, 2019).

Zehao, W. et al. Optimal economic model of a combined renewable energy system utilizing modified. Sustain. Energy Technol. Assess. 74, 104186 (2025).

Hasan, A., Moin, S., Karim, A. & Shamshirband, S. Machine learning-based sentiment analysis for Twitter accounts. Math. Comput. Appl. 23(1), 11 (2018).

Bo, G. et al. Optimum structure of a combined wind/photovoltaic/fuel cell-based on amended dragon fly optimization algorithm: A case study. Energy Sources Part A Recov. Util Environ. Effects 44(3), 7109–7131 (2022).

Jain, A. P. & Dandannavar, P. Application of machine learning techniques to sentiment analysis. In 2016 2nd International Conference on Applied and Theoretical Computing and Communication Technology (iCATccT), 628–632 (IEEE, 2016).

Agarwal, B., Mittal, N., Agarwal, B. & Mittal, N. Machine learning approach for sentiment analysis. In Prominent Feature Extraction for Sentiment Analysis, 21–45 (2016).

Appel, O., Chiclana, F., Carter, J. & Fujita, H. A hybrid approach to the sentiment analysis problem at the sentence level. Knowl. Based Syst. 108, 110–124 (2016).

Appel, O., Chiclana, F., Carter, J. & Fujita, H. Successes and challenges in developing a hybrid approach to sentiment analysis. Appl. Intell. 48, 1176–1188 (2018).

Fernández-Gavilanes, M., Álvarez-López, T., Juncal-Martínez, J., Costa-Montenegro, E. & González-Castaño, F. J. Unsupervised method for sentiment analysis in online texts. Expert Syst. Appl. 58, 57–75 (2016).

Iparraguirre-Villanueva, O., Guevara-Ponce, V. Sierra-Liñan, F., Beltozar-Clemente, S. & Cabanillas-Carbonel, M. Sentiment analysis of tweets using unsupervised learning techniques and the k-means algorithm (2022).

Yadav, A., Jha, C., Sharan, A. & Vaish, V. Sentiment analysis of financial news using unsupervised approach. Procedia Comput. Sci. 167, 589–598 (2020).

Can, E. F., Ezen-Can, A. & Can, F. Multilingual sentiment analysis: An RNN-based framework for limited data. arXiv preprint arXiv:1806.04511 (2018).

Mahajan, D. & Chaudhary, D. K. Sentiment analysis using RNN and Google translator. In 2018 8th International Conference on Cloud Computing, Data Science & Engineering (Confluence), 798–802 (IEEE, 2018).

Agarwal, A., Yadav, A. & Vishwakarma, D. K. Multimodal sentiment analysis via RNN variants. In 2019 IEEE International Conference on Big Data, Cloud Computing, Data Science & Engineering (BCD), 19–23 (IEEE, 2019).

Sharfuddin, A. A., Tihami, M. N. & Islam, M. S. A deep recurrent neural network with bilstm model for sentiment classification. In 2018 International conference on Bangla speech and language processing (ICBSLP), 1–4 (IEEE, 2018).

Borah, G., Nimje, D., JananiSri, G., Bharath, K. & Kumar, M. R. Sentiment analysis of text classification using RNN algorithm. In Proceedings of International Conference on Communication and Computational Technologies: ICCCT 2021, 561–571 (Springer, 2021).

Monika, R., Deivalakshmi, S. & Janet, B. Sentiment analysis of US airlines tweets using LSTM/RNN. In 2019 IEEE 9th International Conference on Advanced Computing (IACC), 92–95 (IEEE, 2019).

Liao, S., Wang, J., Yu, R., Sato, K. & Cheng, Z. CNN for situations understanding based on sentiment analysis of Twitter data. Procedia Comput. Sci. 111, 376–381 (2017).

Rhanoui, M., Mikram, M., Yousfi, S. & Barzali, S. A CNN-BiLSTM model for document-level sentiment analysis. Mach. Learn. Knowl. Extr. 1(3), 832–847 (2019).

Minaee, S., Azimi, E. & Abdolrashidi, A. Deep-sentiment: Sentiment analysis using ensemble of cnn and bi-lstm models. arXiv preprint arXiv:1904.04206 (2019).

Ouyang, X., Zhou, P., Li, C. H. & Liu, L. Sentiment analysis using convolutional neural network. In 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, 2359–2364 (IEEE, 2015).

Elbedwehy, S., Hassan, E., Saber, A. & Elmonier, R. Integrating neural networks with advanced optimization techniques for accurate kidney disease diagnosis. Sci. Rep. 14(1), 21740 (2024).

Hassan, E. Enhancing coffee bean classification: A comparative analysis of pre-trained deep learning models. Neural Comput. Appl. 36(16), 9023–9052 (2024).

Hassan, E., Saber, A. & Elbedwehy, S. Knowledge distillation model for acute lymphoblastic leukemia detection: Exploring the impact of nesterov-accelerated adaptive moment estimation optimizer. Biomed. Signal Process. Control 94, 106246 (2024).

Kaur, H., Ahsaan, S. U., Alankar, B. & Chang, V. A proposed sentiment analysis deep learning algorithm for analyzing COVID-19 tweets. Inf. Syst. Front. 23(6), 1417–1429 (2021).

Dashtipour, K., Gogate, M., Adeel, A., Larijani, H. & Hussain, A. Sentiment analysis of Persian movie reviews using deep learning. Entropy 23(5), 596 (2021).

Kaur, G. & Sharma, A. A deep learning-based model using hybrid feature extraction approach for consumer sentiment analysis. J. Big Data 10(1), 5 (2023).

Mutinda, J., Mwangi, W. & Okeyo, G. Sentiment analysis of text reviews using lexicon-enhanced bert embedding (LeBERT) model with convolutional neural network. Appl. Sci. 13(3), 1445 (2023).

Paulraj, D., Ezhumalai, P. & Prakash, M. A deep learning modified neural network (DLMNN) based proficient sentiment analysis technique on Twitter data. J. Exp. Theor. Artif. Intell. 36(3), 415–434 (2024).

Huang, Q., Ding, H. & Razmjooy, N. Oral cancer detection using convolutional neural network optimized by combined seagull optimization algorithm. Biomed. Signal Process. Control 87, 105546 (2024).

Arras, L., Montavon, G., Müller, K.-R. & Samek, W. Explaining recurrent neural network predictions in sentiment analysis. arXiv preprint arXiv:1706.07206 (2017).

Kurniasari, L. & Setyanto, A. Sentiment analysis using recurrent neural network. J. Phys. Conf. Ser. 1471(1), 012018 (2020).

Alferaidi, A. et al. Distributed deep CNN-LSTM model for intrusion detection method in IoT-based vehicles. Math. Probl. Eng. 2022, 3424819. https://doi.org/10.1155/2022/3424819 (2022).

Socher, R. et al. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, 1631–1642 (2013).

Maas, A., Daly, R. E., Pham, P. T., Huang, D., Ng, A. Y. & Potts, C. Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, 142–150 (2011).

Porter, M. F. An algorithm for suffix stripping. Program 14(3), 130–137 (1980).

Manning, C. D., Surdeanu, M., Bauer, J., Finkel, J. R., Bethard, S. & McClosky, D. The Stanford CoreNLP natural language processing toolkit. In Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, 55–60 (2014).

Zhao, W., Wang, L. & Zhang, Z. Supply-demand-based optimization: A novel economics-inspired algorithm for global optimization. IEEE Access 7, 73182–73206 (2019).

Razmjooy, N., Khalilpour, M. & Ramezani, M. A new meta-heuristic optimization algorithm inspired by FIFA world cup competitions: Theory and its application in PID designing for AVR system. J. Control Autom. Electr. Syst. 27(4), 419–440 (2016).

Faramarzi, A., Heidarinejad, M., Stephens, B. & Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl. Based Syst. 191, 105190 (2020).

Dehghani, M. & Trojovský, P. Teamwork optimization algorithm: A new optimization approach for function minimization/maximization. Sensors 21(13), 4567 (2021).

Zhao, W., Zhang, Z. & Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 87, 103300 (2020).

Acknowledgements

The research is supported by Humanities and Social Sciences of Ministry of Education Planning Fund (23YJAZH022).

Author information

Authors and Affiliations

Contributions

Haisheng Deng and Ahmed Alkhayyat wrote the main manuscript text and prepared figures. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions