Abstract

This study explores the potential application of artificial intelligence in ethnic dance movement instruction and achieves movement recognition using three-dimensional convolutional neural networks (3D-CNNs). The 3D-CNN is combined with a residual network (ResNet), resulting in a proposed 3D-ResNet-based ethnic dance movement recognition model. The work proceeds in three stages. First, data are collected and a dataset is constructed featuring movements from six specific ethnic dances, namely Miao, Dai, Tibetan, Uygur, Mongolian, and Yi. Second, 3D-ResNet is used to identify and classify these ethnic dance movements. Lastly, the model’s performance is evaluated. Experiments on the self-built dataset and the NTU-RGBD60 database show that the proposed 3D-ResNet-based model’s accuracy is above 95%. The model performs well in movement recognition tasks across different dance types. It exhibits good versatility and adaptability to various cultural contexts, providing technical support for ethnic dance instruction. The main contributions of this study are to identify and analyze six specific ethnic dances, verify the universality and adaptability of the proposed 3D-ResNet-based model, and offer reference and support for cross-cultural dance instruction.

Introduction

Research background and motivations

In the contemporary digital era, artificial intelligence (AI) technology has emerged within the education realm, demonstrating excellent performance and finding widespread applications1,2. Ethnic dance, a form of art education deeply influenced by culture, has consistently grappled with challenges related to the complexity and diversity of its movement inheritance and instruction3,4,5. In conventional instruction, teachers typically guide students by repeatedly demonstrating movements. However, this approach is not only time-consuming but also prone to omitting details, making it difficult for students to fully grasp complex movements. Moreover, ethnic dance is deeply rooted in its cultural background, and traditional teaching methods often fail to comprehensively convey these cultural nuances, resulting in students’ limited understanding and expressive ability. Conventional instruction largely relies on teachers’ personal experience and expertise, making it challenging to provide personalized guidance that meets the diverse needs of students. Therefore, adapting to modern technological advancements and integrating emerging tools such as AI has become essential for advancing ethnic dance education6,7. Educators are increasingly recognizing that integrating AI technology can provide a more personalized, effective, and innovative educational experience. Under this trend, applying AI technology such as deep learning (DL) to ethnic dance movement instruction has become the focus of scholars in related fields.

With the continuous progress of DL technology, convolutional neural networks (CNNs) have made outstanding achievements across various domains8,9,10. In dance instruction, CNNs can accurately capture and learn the spatial and temporal characteristics of movements by analyzing extensive dance videos11. Meanwhile, the automatic learning and feature extraction capabilities of CNNs enable efficient processing of large-scale datasets12,13,14,15. This helps to overcome the limitations of traditional teaching methods, such as limited samples and one-sided data, and offers suitable teaching solutions for a broader cultural context.

Although three-dimensional (3D) CNNs technology has been widely applied in various fields such as video analysis and medical image processing, its application in ethnic dance education is relatively novel. Ethnic dance education involves spatial and temporal features of human movement, along with intricate details and styles rooted in rich cultural backgrounds. Traditional two-dimensional (2D) CNNs may not comprehensively capture these features, as they only consider spatial changes in movements and may not adequately account for temporal evolution. Therefore, the innovation of this study lies in addressing the specific needs of the dance domain by combining 3D-CNNs technology with a residual network (ResNet) to better capture the spatio-temporal features of dance movements. For instance, in traditional dance instruction, teachers often need to observe students’ movements to guide their skills and expressions. However, for certain complex movement details, such as the arrangement of arms and fingers in Miao dance or the body movements and ritual actions in Tibetan dance, teachers may struggle to accurately capture every detail and provide appropriate guidance. By introducing 3D-CNNs technology along with the advantages of ResNet, this study can more effectively learn and extract these detailed features from a large number of dance videos, providing teachers with more specific and accurate guidance. Additionally, this study customizes the model design based on the characteristics of ethnic dance movements. Taking Mongolian dance as an example, its movement features include jumping, rotating, and emphasizing strength and vitality. To address these features, corresponding model architectures are designed to better capture and identify these movement characteristics. 
Through the introduction of specific convolution and pooling operations in the model, along with the structure of a ResNet, the model’s ability to recognize these specific types of dance movements is effectively enhanced, thus improving teaching effectiveness.

In summary, the innovation of this study lies in applying 3D-CNNs technology to ethnic dance instruction. By combining ResNet and customized model design, this study optimizes the model’s ability to capture and recognize dance movement features. This innovation not only provides new technological means for dance education but also offers teachers more accurate and personalized guidance, thereby promoting the inheritance and development of ethnic dance culture.

Research objectives

This study explores the potential value of AI in improving the teaching of ethnic dance movements. By introducing advanced technical means, it seeks to improve students’ understanding and expression of ethnic dance movements, thus promoting the inheritance of this culture and art. Specifically, 3D-CNNs are selected as the technical tool to address the teaching challenges posed by the complexity of movements and the diversity of cultural backgrounds, leveraging their advantages in temporal and spatial information processing. This study affords new ideas and methods for innovation in ethnic dance movement instruction.

This study aims to construct a dataset containing various ethnic dance movements to support model training and to design and implement an ethnic dance movement recognition model based on 3D-ResNet for accurate dance movement recognition. The significance of this study lies in the combination of 3D-CNNs and ResNet, proposing a novel ethnic dance movement recognition model that provides new ideas and methods for related research fields. By achieving precise movement recognition, this study offers technical support for ethnic dance teaching, thereby improving teaching efficiency and quality.

Literature review

Ethnic dance, as a part of cultural heritage, has been studied by many scholars. Chang-Bacon (2022)16 highlighted an evasive attitude towards racial issues in the education system, offering critical insights for improving educational practices. Cai et al. (2023)17 proposed a multi-scale hypergraph-based method to predict human movement in dance videos of intangible cultural heritage, providing a new technical way to promote the inheritance and teaching of cultural heritage.

Driven by AI technology, video feature extraction has become a key research direction in dance instruction. For example, Liu & Ko (2022)18 used DL technology to generate dance movements, showing the application potential of this technology in dance creation and offering new ideas for innovative dance art. Malavath & Devarakonda (2023)19 realized the automatic classification of Indian classical dance gestures (handprints) using DL technology. Rani & Devarakonda (2022)20 proposed an efficient classical dance posture estimation and classification system using CNNs and deeply learned the video sequence. Parthasarathy & Palanichamy (2023)21 generated a novel video benchmark dataset with DL technology, which realized real-time recognition of gestures in Indian dance. Sun & Wu (2023)22 proposed a DL-based method to analyze the emotion of sports dance, affording technical support for understanding the emotional elements in dance performances.

Gesture recognition technology is another field that has attracted much attention in dance instruction. Researchers have utilized DL models, especially joint detection and human pose estimation algorithms, to capture dancers’ body posture in real time. Zhong (2023)23 proposed a CNN-based online teaching method built on an edge-cloud computing platform, highlighting the application of DL in real-time online education. Vrskova et al. (2023)24 introduced a new DL method to recognize human activities. Bera et al. (2023)25 employed DL technology to recognize fine-grained movements, yoga, and dance postures. Gupta et al. (2023)26 introduced a human action recognition model based on real-time 3D object detection, underscoring the application of DL in real-time behavior analysis. Khan et al. (2023)27 designed a hardware accelerator for 3D-CNNs, emphasizing the potential application of DL in processing complex models. Tasnim & Baek (2023)28 proposed a skeleton-based human action recognition method based on dynamic edge CNNs, providing a new DL perspective for skeleton-based action analysis.

As an important vehicle for cultural heritage, ethnic dance has long been a focal point of research on motion recognition and teaching methodology innovation. Li (2024) focused on leveraging motion capture sensors in combination with machine learning techniques to achieve precise recognition of ethnic dance movements29. This study highlighted the critical role of sensor technology in collecting dance motion data, ensuring high-quality datasets for subsequent machine learning model training by accurately capturing dancers’ movement details. Additionally, Li introduced DL algorithms innovatively, optimizing the classification and recognition of ethnic dance movements and providing a novel technological pathway for the automated analysis of dance motions. Zheng et al. (2025) expanded the research perspective to dance posture recognition in an Internet of Things (IoT) environment. Their study incorporated fuzzy DL techniques to address the complexity and diversity of dance images, proposing an effective posture recognition method30. The innovation of this study lies in integrating fuzzy logic with DL, enhancing the model’s robustness in handling uncertainty and ambiguous data. By applying this approach within an IoT framework, the study not only enabled real-time recognition of dance images but also introduced new perspectives and tools for dance education and movement analysis, further advancing the intelligent development of dance motion recognition technology. Liu et al. (2023) focused on the practical context of Latin dance education for adolescents, designing and implementing an AI-based teaching system. This system integrated motion capture, data analysis, and personalized feedback to offer a more targeted and interactive Latin dance learning experience for young learners31. 
The innovation of this study lies in the deep integration of AI with Latin dance education, which not only improves teaching efficiency and quality but also stimulates students’ interest and engagement. Furthermore, the study emphasizes the system’s role in promoting the physical and mental well-being of adolescents, providing valuable insights for the diversified development of dance education. In summary, these three studies have collectively driven technological innovation and progress in ethnic dance motion recognition and teaching from different perspectives, offering valuable ideas and practical experience for related research.

Although previous studies have explored motion recognition, most have focused on general motion recognition or specific domains such as sports or popular dance. Few have systematically investigated the application of AI in ethnic dance education, particularly the use of three-dimensional convolutional neural networks (3D-CNNs) and ResNets. These technologies hold great potential for capturing complex spatiotemporal features, yet existing literature lacks sufficient reports on their application in ethnic dance motion recognition. Additionally, current motion recognition algorithms often struggle with poor generalization and low recognition accuracy when applied to dance movements from diverse cultural backgrounds. To address these challenges, this study proposes an innovative ethnic dance motion recognition model based on 3D-ResNet, aiming to bridge these research gaps. By evaluating the model’s performance on both a self-constructed dataset and the NTU-RGBD60 dataset, this study demonstrates the model’s generalizability and recognition accuracy, thereby contributing significantly to the advancement of ethnic dance education practices.

Research methodology

Types of ethnic dance and data collection and analysis

Ethnic dance, deeply rooted in specific cultural traditions, communicates its unique ethnic characteristics through elements such as movement, posture, and rhythm. This dance form serves as a significant avenue for cultural inheritance and the promotion of traditions32,33,34. The pedagogy of ethnic dance movements entails the instruction of distinct dance styles, movement elements, and cultural traditions, aiming to foster students’ capacity to comprehend and express the essence of ethnic dance35,36. The teaching process encompasses the development of fundamental dance skills, analysis of intricate action details, elucidation of cultural context, and practical engagement through student action drills and performances.

First, the samples’ diversity and representativeness are ensured through an extensive collection of dance performance videos from various regions and different ethnic groups. The ethnic dances in this study include Miao dance, Dai dance, Tibetan dance, Uygur dance, Mongolian dance, and Yi dance.

It illustrates the distinct movements and costumes of the six dances involved, each showing unique characteristics. The specific movement attributes are exhibited in Table 1.

During the model development process, particular attention was given to data collection to ensure sample diversity and representativeness. The dataset encompassed dance styles from various regions and ethnic groups while incorporating dancers with diverse backgrounds to mitigate cultural bias. Additionally, during model training, close collaboration with cultural experts was maintained to identify and represent the core cultural characteristics of each dance style. This approach ensured that the model could extract and recognize movement features while remaining sensitive to cultural details. Furthermore, cultural adaptability was a key consideration in the user interface design. By providing multilingual options and incorporating interface aesthetics aligned with different cultural traditions, the model’s generalizability and user-friendliness were enhanced.

Three-dimensional skeleton model design

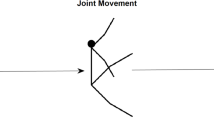

In the instruction of ethnic dance movements, this study simplifies the joint structure of the human body into key points akin to hinges to effectively identify human movements and streamline the expression and calculation of human structure. This approach employs a 3D skeleton model37,38,39, the sequence of which is presented in Fig. 1.

In Fig. 1, each skeleton frame contains the 3D coordinates of 25 skeleton nodes. Such data are commonly used to represent gestures and actions. In contrast to RGB images, 3D skeleton data offer a robust representation because they consist only of joint coordinates and therefore exclude background noise. This accentuates the movement characteristics themselves.

Construction and analysis of the ethnic dance movement recognition model based on the 3D-CNNs

Based on the in-depth analysis of ethnic dance types and datasets in the previous section, this section focuses on how to utilize advanced 3D-CNNs technology to construct an efficient ethnic dance movement recognition model. This study provides a detailed introduction to the design concept, network architecture, training strategies, and evaluation methods of the model, aiming to verify the advantages of 3D-CNNs in capturing the spatiotemporal features of complex dance movements through meticulous model construction and rigorous experimental analysis. Within the context of ethnic dance movement instruction, dance movements encompass spatial posture changes and dynamic temporal evolution.

This study selects 3D-ResNet over Graph Convolutional Networks (GCNs) or Transformers based on the following considerations. 3D convolution, with its sliding local receptive field, is inherently well-suited for modeling the spatiotemporal correlations in video sequences. In contrast, graph-based methods require manually defining the topology of keypoints (e.g., skeletal graphs), which may result in the loss of fine-grained motion patterns, such as subtle finger movements. While the self-attention mechanism of Transformers excels in processing long sequences, its computational complexity is \(O({T}^{2}\cdot D)\) (where T is the temporal length and D is the feature dimension), making training on long video sequences (e.g., > 100 frames) computationally expensive. Additionally, 3D-ResNet enables end-to-end feature extraction, whereas graph-based methods rely on predefined adjacency matrices, which may introduce culture-specific biases, such as variations in joint movement patterns across different ethnic dance styles.
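To make the scaling argument concrete, the short sketch below evaluates the \(T^{2}\cdot D\) term for a few sequence lengths. The feature dimension of 512 is an illustrative assumption rather than a value reported in this study, the function name `attention_cost` is hypothetical, and constant factors are ignored.

```python
# Rough operation count for the pairwise-similarity step of self-attention,
# following the T^2 * D term quoted in the text. Constant factors are ignored.

def attention_cost(num_frames: int, feature_dim: int) -> int:
    """Return T^2 * D, the dominant term of self-attention over T frames."""
    return num_frames ** 2 * feature_dim

FEATURE_DIM = 512  # assumed value, chosen only for illustration

costs = {t: attention_cost(t, FEATURE_DIM) for t in (8, 100, 200)}
for t, cost in costs.items():
    print(f"T = {t:>3}: about {cost:,} multiply-adds")

# Doubling the sequence length quadruples the cost.
ratio = costs[200] / costs[100]
print(f"cost(T=200) / cost(T=100) = {ratio}")
```

Under these assumptions the quadratic term dominates quickly, which is the motivation given for processing short fixed-length segments with local 3D kernels instead.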

Traditional 2D CNNs40 cannot fully consider the time sequence characteristics of actions. By sliding the convolution kernel in three dimensions, 3D-CNNs can better capture the subtle changes and time sequence patterns in actions. They can also autonomously learn and extract intricate spatio-temporal relationships41,42,43. The framework of 3D-CNNs applied to movement recognition is shown in Fig. 2.

The 3D-CNN in Fig. 2 is a deep network composed of multiple convolutional, pooling, and fully connected (FC) layers. Through multi-layer convolution and pooling operations, the model gradually builds an abstract representation of dance movements. Finally, the FC layer classifies the features to recognize the various ethnic dance movements.

The ethnic dance movement recognition model proposed here is constructed based on 3D-CNNs and integrates the strengths of ResNet to enhance recognition accuracy and generalization capability. The neural network architecture encompasses an input layer, three 3D convolutional layers, activation layers, pooling layers, residual blocks, and FC layers. The input layer receives preprocessed video data, and via the 3D convolutional layers, discerns spatiotemporal features, with each convolutional layer followed by a ReLU activation function to introduce nonlinearity. Subsequently, pooling layers diminish the spatial dimensions of features while augmenting invariance to minor spatial translations. The incorporation of residual blocks enables the network to learn residual functions, thus mitigating the vanishing gradient problem inherent in training deep networks; each residual block comprises multiple convolutional layers and skip connections. Lastly, the FC layers translate the acquired features into final movement categories44,45, thereby reducing algorithmic parameters and computational overhead. Therefore, this study employs the 3D-ResNet46 to identify ethnic dance movements and proposes an ethnic dance movement recognition model based on the 3D-ResNet algorithm, as demonstrated in Fig. 3.
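The skip connection inside a residual block can be sketched in a few lines. This is a toy illustration on plain Python lists, not the network used in the study: `residual_block`, `scale_down`, and `zero_branch` are hypothetical names, and the branch function merely stands in for the stacked 3D convolutions of a real block.

```python
from typing import Callable, List

Vector = List[float]

def relu(values: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, v) for v in values]

def residual_block(x: Vector, branch: Callable[[Vector], Vector]) -> Vector:
    """Compute ReLU(F(x) + x): the branch learns the residual F, and the
    skip connection adds the unchanged input back before the activation."""
    fx = branch(x)
    if len(fx) != len(x):
        raise ValueError("the residual branch must preserve the input shape")
    return relu([f + xi for f, xi in zip(fx, x)])

def scale_down(x: Vector) -> Vector:
    """Stand-in for the convolutional branch (hypothetical)."""
    return [0.1 * v for v in x]

def zero_branch(x: Vector) -> Vector:
    """A branch that has learned nothing: F(x) = 0."""
    return [0.0 for _ in x]

features = [1.0, 2.0, 3.0]
identity_out = residual_block(features, zero_branch)  # input passes through
scaled_out = residual_block(features, scale_down)     # input plus a small residual
print(identity_out, scaled_out)
```

Because the identity path carries the input forward even when the branch contributes nothing, gradients have a direct route through deep stacks of such blocks, which is the property the text relies on to mitigate vanishing gradients.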

Figure 3 illustrates the construction of an ethnic dance movement recognition model based on the 3D-ResNet algorithm. Initially, dance performance videos from different regions and ethnic groups are collected to ensure the diversity and representativeness of the samples. The selected ethnic dances include Miao dance, Dai dance, Tibetan dance, Uygur dance, Mongolian dance, and Yi dance. These dances are carefully observed to understand their movement characteristics and cultural backgrounds. Subsequently, the 3D-ResNet is chosen as the foundational model, considering the spatial and temporal features of dance movements. The 3D-ResNet combines the advantages of 3D convolutional layers and ResNet, enabling better capture of subtle changes and temporal patterns in dance movements. A deep network structure consisting of multiple residual blocks is constructed, with each block containing a 3D convolutional layer and a pooling layer, aiding in extracting hierarchical representations with spatio-temporal features from video data. During the data preprocessing stage, standardization and normalization are applied to the video data, which is then transformed into fixed-length video segments. Each video segment is input into the 3D-ResNet model to extract its corresponding fixed-length video feature representation. In the model training phase, the stochastic gradient descent algorithm is employed for optimization, with appropriate learning rates and batch sizes. To prevent overfitting, Dropout technology is utilized, and a series of experiments determine the optimal Dropout ratio. Finally, the model is evaluated on a self-built dataset and the NTU-RGBD60 database. By comparing the model’s recognition accuracy and F1 score on different datasets, the model’s effectiveness and generalization ability in ethnic dance movement recognition tasks are validated. 
Through these steps, an ethnic dance movement recognition model based on the 3D-ResNet algorithm is successfully constructed, providing advanced technological support and an experimental foundation for ethnic dance instruction.

In this model, video samples of different ethnic dances, including Miao dance, Dai dance, and Tibetan dance, are first input, and parameters such as video resolution and frame rate are standardized and normalized. Each input frame is resized to 224 × 224, and the length of the sliding window is set to 8 frames so that the model processes input data consistently. Then, the 3D-ResNet model is used to extract the features of the video sequence. The model architecture comprises several residual blocks, each containing a 3D convolutional layer and a pooling layer, which facilitates extracting hierarchical representations with temporal and spatial characteristics from video data. The final FC layer is responsible for classifying the extracted features.

In this model, \(X=\left( {{x_1},{x_2}, \cdots ,{x_T}} \right)=\left\{ {{x_i}} \right\}_{{i=1}}^{T}\) refers to an ethnic dance video with T frames, which is divided into a sequence \({V^N}\) of N ethnic dance video segments by a sliding window, as illustrated in Eq. (1):

In Eq. (1), the input ethnic dance video X has a total of T frames. Using a sliding window with a length of 8 frames, X is divided into N fixed-length video segments \(\left( {{v_1},{v_2}, \cdots ,{v_N}} \right)\), where each segment \({v_i}\) contains a continuous sequence of 8 frames. For example, a 3-second video (90 frames) is divided into 11 segments, each covering approximately 0.27 s of movement. Each video segment \({v_i}\) is input into 3D-ResNet to extract its corresponding fixed-length video feature expression \({f_i} \in {R^d}\), and the input sequence can be expressed as Eq. (2):

In Eq. (2), \({\Phi _\theta }\left( \cdot \right)\) represents the 3D-ResNet. Each video segment \({v_i}\) is input into the 3D-ResNet model \({\Phi _\theta }\) to extract its feature representation \({f_i}\), where \(\theta\) denotes the network parameters. Through multiple layers of 3D convolution and pooling operations, the raw video data is transformed into high-dimensional spatiotemporal feature vectors. For instance, after 3D convolution, the model can capture hand trajectory variations within three consecutive frames.
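The segmentation and per-segment feature extraction described around Eqs. (1) and (2) can be sketched as follows. The sketch assumes a non-overlapping window (stride equal to the window length), which is what the 90-frame example implies although the stride is not stated explicitly; under that assumption \(N=\lfloor T/8 \rfloor\). The names `split_into_segments` and `extract_features` are illustrative, and `mean_frame_index` is only a placeholder for \({\Phi _\theta }\), not the 3D-ResNet itself.

```python
from typing import Callable, List, Sequence

def split_into_segments(frames: Sequence, window: int = 8, stride: int = 8) -> List[Sequence]:
    """Split a frame sequence into fixed-length segments.

    Trailing frames that do not fill a whole window are dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    last_start = len(frames) - window
    return [frames[s:s + window] for s in range(0, last_start + 1, stride)]

def extract_features(segments: List[Sequence], extractor: Callable) -> List[list]:
    """Apply a feature extractor to every segment: f_i = extractor(v_i)."""
    return [extractor(segment) for segment in segments]

def mean_frame_index(segment: Sequence) -> list:
    """Placeholder extractor returning a 1-dimensional feature (assumption)."""
    return [sum(segment) / len(segment)]

# A 3-second clip at an assumed 30 frames per second; integers stand in for frames.
video = list(range(90))
segments = split_into_segments(video)
features = extract_features(segments, mean_frame_index)

print(len(segments), "segments of", len(segments[0]), "frames")
print("seconds per segment:", round(8 / 30, 2))
```

With these settings the last two frames of the 90-frame clip are discarded; an overlapping stride would keep them at the cost of more segments.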

To capture the movement information \(V_{{ij}}^{{xyz}}\) in multiple consecutive frames in the ethnic dance video, the features are calculated from the spatial and temporal dimensions. The value of the unit at position coordinates (x, y, z) in the j-th feature map of the i-th layer is shown in Eq. (3):

Equation (3) defines the activation value at position \((x,y,z)\) in the jth feature map of the ith layer. \({n_i}\) refers to the temporal dimension of the 3D convolution kernel, \(t\) is the temporal index, \(w_{{ijr}}^{{lmn}}\) denotes the weight of the convolution kernel connected to the \(r\)th feature map at position \((l,m,n)\), and \({f}_{i-1}^{\left(r\right)}(x+l,y+m,z+n)\) is the input feature from the previous layer. The 3D kernel slides across both spatial (width and height) and temporal (frame sequence) dimensions, jointly extracting the spatiotemporal features of motion. For example, a 3 × 3 × 3 kernel can simultaneously analyze finger trajectory variations across three consecutive frames.
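The kernel-sliding operation can be illustrated with a naive single-channel implementation. This is a sketch for intuition only: it assumes one input feature map, unit stride, and no padding, and it omits the bias and activation; practical models rely on optimized library routines. The function name `conv3d_valid` and the example kernel are illustrative.

```python
def conv3d_valid(volume, kernel):
    """Naive single-channel 3D cross-correlation (unit stride, no padding).

    Both arguments are nested lists indexed as [frame][row][column].
    """
    frames, height, width = len(volume), len(volume[0]), len(volume[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    output = []
    for z in range(frames - kt + 1):
        plane = []
        for y in range(height - kh + 1):
            row = []
            for x in range(width - kw + 1):
                acc = 0.0
                # Sum over the kernel's temporal (n) and spatial (m, l) extent.
                for n in range(kt):
                    for m in range(kh):
                        for l in range(kw):
                            acc += kernel[n][m][l] * volume[z + n][y + m][x + l]
                row.append(acc)
            plane.append(row)
        output.append(plane)
    return output

# Four 4 x 4 frames whose values equal the frame index (steady brightening).
clip = [[[float(t)] * 4 for _ in range(4)] for t in range(4)]

# A 3 x 3 x 3 temporal-difference kernel: last frame minus first frame,
# averaged over the 3 x 3 spatial neighbourhood.
kernel = [[[w / 9.0] * 3 for _ in range(3)] for w in (-1.0, 0.0, 1.0)]

response = conv3d_valid(clip, kernel)
print(len(response), len(response[0]), len(response[0][0]))
```

Each output value here equals the change between the first and third frame under the kernel, showing how one 3 × 3 × 3 kernel responds to motion across three consecutive frames.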

In this study, the ReLU function47 is used as the activation function. This function makes the activations of the model sparse, which helps mitigate over-fitting. In addition, it can decrease the computational load of the model. The definition of the ReLU activation function is presented in Eq. (4):

The ReLU activation function introduces nonlinearity by suppressing negative activations, enhancing model sparsity and reducing the risk of overfitting. For instance, if the convolution output contains negative values, ReLU sets them to zero, retaining only significant features. This improves the model’s sensitivity to key movements. The calculation of maximum pooling in the model is described as Eq. (5):

In Eq. (5), \(\mu\) denotes the 3D input vector, V represents the output after the pooling operation, and s, t, and r refer to the sampling step sizes in their respective directions. Equation (5) describes the 3D max pooling operation, which downsamples the feature maps across spatial and temporal dimensions, progressively reducing data size and enhancing feature invariance. For example, when using a pooling window of 2 × 2 × 2, the maximum value is selected from every eight adjacent values (2 width × 2 height × 2 frames), preserving the most prominent spatiotemporal patterns while reducing computational cost.
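A small worked example of the two operations, assuming the standard ReLU definition \(\max(0, x)\) and a non-overlapping 2 × 2 × 2 pooling window (stride equal to the window size, which the text does not state explicitly). The function names and input values are illustrative.

```python
def relu3d(volume):
    """Apply ReLU element-wise to a [frame][row][column] nested list."""
    return [[[max(0.0, v) for v in row] for row in plane] for plane in volume]

def max_pool3d(volume, size=(2, 2, 2)):
    """Non-overlapping 3D max pooling; incomplete border windows are dropped."""
    st, sh, sw = size
    frames, height, width = len(volume), len(volume[0]), len(volume[0][0])
    return [
        [
            [
                max(
                    volume[z * st + k][y * sh + j][x * sw + i]
                    for k in range(st)
                    for j in range(sh)
                    for i in range(sw)
                )
                for x in range(width // sw)
            ]
            for y in range(height // sh)
        ]
        for z in range(frames // st)
    ]

# Two frames, two rows, four columns of raw convolution outputs.
activations = [
    [[-1.0, 2.0, 0.5, -3.0],
     [0.0, 1.0, -2.0, -0.5]],
    [[3.0, -4.0, -1.0, -1.0],
     [1.5, 0.0, 0.25, -6.0]],
]

rectified = relu3d(activations)  # negative values become 0.0
pooled = max_pool3d(rectified)   # one value per 2 x 2 x 2 block
print(pooled)
```

The sixteen input values shrink to two, each the strongest response within its block of eight.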

For an input feature \(f\left( {{v_{ti}}} \right)\), two convolutional layers are initially used to map \(f\left( {{v_{ti}}} \right)\) into a K vector and a Q vector, as illustrated in Eq. (6):

In Eq. (6), \({W_K}\) and \({W_Q}\) are the weight matrices corresponding to the two convolutional layers. The Q vector and the K vector of node \({v_{ti}}\) are obtained, respectively. The equation maps the input feature f to the query vector Q and key vector K in the attention mechanism. By computing the similarity between Q and K, the model can automatically focus on key frames or body parts (such as hand movements), thereby improving its ability to distinguish complex dance motions. Next, the inner product of \({Q_{ti}}\) and \({K_{ti}}\) is calculated, as denoted in Eq. (7):

Nodes \({v_{ti}}\) and \({v_{tj}}\) are in the same time step. \(\left\langle , \right\rangle\) stands for the inner product symbol. The inner product \({u_{\left( {t,i} \right) \to \left( {t,j} \right)}}\) is called the similarity of nodes \({v_{ti}}\) and \({v_{tj}}\). Equation (7) computes the similarity score \({u_{i,j}}\) between the query vector \({Q_{ti}}\) and the key vector \({K_{tj}}\), which measures the correlation strength between motion segments across different time steps. For example, in Tibetan dance, consecutive ceremonial movements may have high similarity scores, whereas rapid spinning motions may yield lower scores, helping the model recognize movement continuity. The Softmax function48 is often used in the last layer of classification tasks. It maps an n-dimensional vector x into a probability distribution in which the probability of the correct category approaches 1, the other probabilities approach 0, and all category probabilities sum to 1. The normalization with the Softmax function is shown in Eq. (8):

In Eq. (8), \(\alpha\) refers to the normalized similarity of the inner product u. Therefore, by learning the weights of any two body joint points in different movements, this data-driven way increases the universality of the model, enabling it to effectively identify and predict movements when faced with diverse data. The Softmax function normalizes the similarity scores \({u_{i,j}}\) into a probability distribution \({\alpha_{i,j}}\), which is used for weighted feature aggregation. For instance, if a particular frame’s hand movement is highly relevant to the target class, its corresponding \({\alpha_{i,j}}\) value will be close to 1, whereas less relevant frames will have values approaching 0, thereby emphasizing the importance of key motion segments.
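The three steps described for Eqs. (6) to (8), namely projection to Q and K, inner-product similarity, and Softmax normalization, can be sketched as follows. The projection matrices are small hand-picked examples rather than learned weights, plain matrix-vector products stand in for the two convolutional layers, and all function names are illustrative assumptions.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def dot(a, b):
    """Inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Numerically stable Softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(node_features, w_q, w_k):
    """Return alpha[i][j], the normalized similarity of query i to key j."""
    queries = [matvec(w_q, f) for f in node_features]
    keys = [matvec(w_k, f) for f in node_features]
    return [softmax([dot(q, k) for k in keys]) for q in queries]

# Three nodes with 2-dimensional toy features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_Q = [[1.0, 0.0], [0.0, 1.0]]  # illustrative query projection
W_K = [[2.0, 0.0], [0.0, 2.0]]  # illustrative key projection

alpha = attention_weights(features, W_Q, W_K)
for row in alpha:
    print([round(a, 3) for a in row])
```

Each row of `alpha` sums to 1, and nodes whose keys align with the query receive the larger weights, which is the behaviour the paragraph above attributes to the normalized similarity.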

In this model, the flow of the 3D-ResNet algorithm applied to ethnic dance movement recognition is shown in Fig. 4.

To further clarify the integration method and functional mechanism of the Temporal Attention Module (TAM), this study provides a detailed explanation of the 3D-ResNet architecture design. TAM is embedded at the end of each residual block, immediately following the 3D convolution layer and activation function. It dynamically enhances key motion features along the temporal dimension. Specifically, the input feature map is first processed by 3D convolution to extract spatiotemporal features, which are then passed into the TAM. The TAM computes attention weights between adjacent frames to apply temporal weighting to the feature map. It begins by segmenting the input features along the temporal dimension, and then uses lightweight convolutional layers to generate query and key vectors. Similarity scores between these vectors are used to calculate the attention distribution. The weighted features are finally added to the original residual connection. For instance, when processing continuous spinning movements in Miao ethnic dances, TAM can automatically amplify high-frequency frames capturing arm swing trajectories, while suppressing contributions from distracting background frames. This design enables the model to adaptively capture temporal dependencies in dance movements without significantly increasing the number of parameters.
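One possible reading of this module, reduced to plain Python for illustration. The sketch treats each frame's feature as a short vector, uses the raw features as both query and key in place of the lightweight convolutions, and attends over all frames of a segment rather than only adjacent ones. These simplifications, and the name `temporal_attention_residual`, are assumptions and not details taken from the study.

```python
import math

def softmax(scores):
    """Numerically stable Softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def temporal_attention_residual(frame_features):
    """Re-weight per-frame features by temporal attention, then add the input back."""
    output = []
    for query in frame_features:
        # Similarity of this frame to every frame in the segment.
        scores = [sum(q * k for q, k in zip(query, key)) for key in frame_features]
        weights = softmax(scores)
        # Attention-weighted sum of frame features, one value per feature dimension.
        attended = [
            sum(w * frame[d] for w, frame in zip(weights, frame_features))
            for d in range(len(query))
        ]
        # Residual connection: weighted features plus the original features.
        output.append([a + x for a, x in zip(attended, query)])
    return output

# Two near-static frames followed by one frame with a strong motion response.
segment = [[0.0, 0.0], [0.0, 0.0], [3.0, 1.0]]
enhanced = temporal_attention_residual(segment)
for frame in enhanced:
    print([round(v, 4) for v in frame])
```

In this toy segment the high-response frame attends almost entirely to itself and is roughly doubled, while the static frames receive only a uniform average, illustrating how temporal weighting can emphasize key frames without adding learned parameters in the sketch.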

Cultural sensitivity and awareness in the development and application of AI tools

After successfully constructing and validating a 3D-CNNs-based model for ethnic dance action recognition, it is crucial to fully consider cultural sensitivity and awareness when applying this technology to real-world scenarios. This section explores how to ensure that AI tools respect and embody the cultural significance and values of various ethnic dances during development and application. By analyzing potential cultural biases in existing technologies, the study proposes corresponding solutions and emphasizes the importance of integrating cultural sensitivity and awareness throughout the entire process of technological development and application.

In the realm of AI tools, particularly within the domain of ethnic dance movement instruction, cultural sensitivity and awareness are of paramount importance. AI tools should transcend mere technical functionality; they ought to serve as conduits for the preservation and reverence of cultural diversity. Thus, in model design and algorithmic development, emphasis is placed on the accurate representation of diverse ethnic cultural traits. To ensure that AI tools authentically encapsulate the cultural essence of each ethnic dance, their development, carried out in collaboration with relevant scholars and cultural experts, should adhere to the following principles. Firstly, development teams need to collaborate closely with cultural experts to ensure that the tools can accurately understand and express the cultural characteristics of different ethnic dances. For example, according to Abdelali et al. (2023), non-verbal behaviors in cross-cultural communication have a significant impact on understanding culture49, so AI tools should consider the cultural meanings of these non-verbal behaviors when capturing and analyzing dance movements. Secondly, in the stages of data collection and model training, diversity and representativeness of samples should be ensured to avoid cultural biases. As pointed out by Ntoutsi et al. (2020), the diversity of data directly affects the fairness and accuracy of AI models50, so when collecting ethnic dance data, extensive sampling covering different regions and styles of dance movements is necessary. Thirdly, the design of AI tools should take into account the cultural adaptability of user interfaces and user experiences. According to Ekechi et al. (2024), culturally customized user interfaces can enhance user acceptance and satisfaction51. 
In AI tools, various language options can be provided, as well as interface designs that align with different cultural aesthetics. Additionally, during the application process of AI tools, users should be encouraged to engage in cultural dialogues and exchanges. Discussion forums or feedback mechanisms can be set up to allow users to share their understanding and feelings about dance movements, promoting mutual understanding and respect among users from different cultural backgrounds. Lastly, the development and application of AI tools should be a continuous process of iteration and improvement. According to Singer et al. (2022), as society and culture evolve and change, AI tools also need to be continuously updated to adapt to new cultural demands and trends52.

When annotating ethnic dance movements, cultural bias is a critical challenge that must be carefully avoided. Different ethnic dances carry unique cultural meanings and historical backgrounds, and some movements may hold specific symbolic significance in one culture while lacking such definitions in others. This discrepancy can lead to annotation biases. To address this issue, close collaboration with cultural experts was established to ensure that the annotation process accurately reflected the cultural characteristics of each ethnic group. For example, when annotating Miao dance movements, experts in Miao cultural studies were invited to discuss and define the cultural significance of each motion. Additionally, particular emphasis was placed on diversity and representativeness during the initial model design phase. By incorporating dance styles from various regions and ethnic groups, potential biases in the dataset were minimized. Furthermore, cultural considerations were integrated into the model’s user interface design. During user interaction, detailed cultural explanations and background information were provided to help users understand the cultural narratives behind each movement. This not only enhances the user experience but also fosters respect for and preservation of ethnic cultural heritage in educational and promotional contexts.

The development and use of AI tools with cultural sensitivity and awareness require comprehensive considerations from various aspects such as technology, data, design, communication, and iteration. This ensures that the tools deliver high-quality services and experiences to users while upholding respect for and preservation of diverse ethnic cultures. Moreover, when employing AI tools for ethnic dance instruction, the following measures are recommended to bolster cultural sensitivity. Firstly, provide educational resources on the cultural backgrounds of dances to users, including teachers and students, to foster heightened awareness and respect for cultural diversity. Secondly, integrate cultural annotation features into the tools elucidating the cultural and historical significance of specific dance movements, thereby enriching users’ comprehension of the cultural nuances embedded within the dances. Furthermore, establish platforms that facilitate communication and discourse among users hailing from diverse cultural backgrounds, fostering cross-cultural understanding and appreciation. Lastly, continuously iterate and refine the tools based on user feedback and suggestions from cultural experts to better meet the needs of different cultures. Through the implementation of these measures, the objective is to cultivate AI tools that transcend mere technological advancement, embodying cultural sensitivity and contributing to the preservation and dissemination of ethnic dances.

Impact of AI on arts education

In the realm of arts education, the burgeoning application of AI is progressively unveiling its profound influence, encompassing both positive facets and challenges. Initially, AI technology introduces novel avenues for personalized learning. By analyzing students’ learning patterns and aptitudes, this technology can tailor personal teaching strategies to augment learning efficacy. For instance, within ethnic dance education, AI can discern students’ movements and furnish instantaneous feedback, expediting their mastery of dance techniques. Nonetheless, this personalized learning paradigm also engenders challenges. Students may become excessively reliant on technology, potentially sidelining the significance of interpersonal communication and emotive expression in arts education. Moreover, the development and deployment of AI systems necessitate substantial data support, entailing considerations of data privacy and security. Ensuring the confidentiality of student information and averting data breaches emerges as a critical concern that AI must grapple with in the realm of arts education.

Another significant impact of AI in arts education is its role in the preservation and innovation of cultural heritage. AI facilitates the recording and analysis of various ethnic dances, providing essential technical support for their preservation and dissemination. Additionally, AI’s predictive and analytical capabilities offer novel perspectives and inspiration for artistic creation. However, this also raises concerns regarding cultural sensitivity and bias. AI systems must be meticulously designed to comprehend and respect diverse cultural backgrounds, thereby avoiding the inadvertent propagation or amplification of cultural biases. In terms of educational innovation, AI technology is revolutionizing traditional teaching and assessment paradigms. Intelligent systems can provide more interactive and engaging learning experiences while also aiding in the establishment of objective and comprehensive student assessment frameworks. Furthermore, the integration of AI fosters interdisciplinary collaboration between arts education and other disciplines, creating new learning domains and avenues for research. As AI technology progresses, the role of teachers is undergoing a transformation. Teachers may transition from traditional knowledge providers to learning guides and innovation collaborators, working closely with AI systems. This necessitates continuous enhancement of teachers’ technological competencies and innovative capabilities. Concurrently, nurturing students’ lifelong learning skills becomes increasingly imperative to adapt to swiftly evolving social and professional landscapes.

The integration of AI in arts education holds considerable potential, furnishing fresh perspectives and tools for educational innovation. Nevertheless, it is imperative to confront and overcome associated challenges such as data privacy concerns, cultural bias, and the evolving role of educators. Addressing these challenges effectively can optimize its positive influence within the field of arts education.

Experimental design and performance evaluation

Dataset collection

Ethnic dance is a form of dance rooted in a specific culture and tradition, expressing its unique ethnic characteristics through elements such as movement, posture, and rhythm. To improve the effectiveness of ethnic dance movement instruction, this study acquires dance movement data for six specific ethnic dances: Miao dance, Dai dance, Tibetan dance, Uygur dance, Mongolian dance, and Yi dance. A large number of dance performance videos are collected from various regions, covering dance forms of different ethnic groups. Specific data sources cover dance teaching videos of art colleges and ethnic dance performance recordings. The model constructed here is evaluated on the NTU-RGBD60 database and a self-built dataset53. For the self-built dataset, action acquisition software is developed with the Kinect for Windows SDK and Kinect v2 to capture and record the dancer’s ethnic dance action data while simultaneously collecting skeleton information. During the data collection of dance movements, 60 experiments are conducted, with each movement performed three times, resulting in a self-built dance movement dataset comprising 1231 samples. Table 2 presents the sample distribution of six ethnic dance categories in the self-constructed dataset. Miao dance has the highest number of samples (312), while Yi dance has the fewest (157), indicating a degree of class imbalance. This imbalance may affect the model’s ability to recognize actions from low-sample categories, such as the “terrace walking” motion in Yi dance. To address this issue, data augmentation techniques were applied. Specifically, Yi dance samples were increased to 230 through random cropping and temporal flipping, enhancing the model’s learning capability. In contrast, the NTU-RGBD60 dataset features a relatively balanced sample distribution; however, due to differences in action categories compared to ethnic dances, cross-dataset validation is required to assess generalization.
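The augmentation step described above can be illustrated with a minimal sketch. It assumes that a sample is a list of frames and that "random cropping" means selecting a random contiguous window of frames; the study does not specify whether cropping is temporal or spatial, and the function names and the `crop_len` parameter are hypothetical.

```python
import random

def temporal_flip(sequence):
    """Return the frames of a sequence in reverse temporal order."""
    return sequence[::-1]

def random_temporal_crop(sequence, crop_len, rng):
    """Return a contiguous window of crop_len frames at a random offset."""
    if crop_len > len(sequence):
        raise ValueError("crop_len exceeds sequence length")
    start = rng.randint(0, len(sequence) - crop_len)
    return sequence[start:start + crop_len]

def augment_to_target(samples, target, crop_len, seed=0):
    """Grow a minority class to `target` samples by cropping and flipping.

    Assumption: each new clip is a random temporal crop of an existing
    sample, reversed in time with probability 0.5.
    """
    rng = random.Random(seed)
    augmented = list(samples)
    while len(augmented) < target:
        clip = random_temporal_crop(rng.choice(samples), crop_len, rng)
        if rng.random() < 0.5:
            clip = temporal_flip(clip)
        augmented.append(clip)
    return augmented
```

With 157 original Yi dance samples and `target=230`, this sketch would add 73 synthetic clips, matching the counts reported above. Whether temporal flipping preserves the cultural meaning of a movement is a separate question that the sketch does not address.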

During the data preprocessing phase, the collected dance motion data undergoes multiple processing steps to ensure data quality and accuracy. Firstly, the skeletal data is cleaned and denoised to remove potential outliers and noise. Next, the data is normalized to eliminate discrepancies between different data sources. Finally, the data is partitioned to construct training and testing sets, ensuring a balanced representation of various dance motions within the dataset.
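As an illustration of these preprocessing steps, the following sketch shows one plausible form of denoising and normalization for skeleton data. The specific choices (a moving-average filter, centering on a root joint, and scaling by the largest absolute coordinate) are assumptions made for illustration; the study does not state which filters or normalization schemes were used.

```python
def smooth(track, window=3):
    """Moving-average filter over one coordinate track (shorter window at edges)."""
    half = window // 2
    out = []
    for i in range(len(track)):
        lo, hi = max(0, i - half), min(len(track), i + half + 1)
        out.append(sum(track[lo:hi]) / (hi - lo))
    return out

def center_on_root(frame, root=0):
    """Translate all joints so that the root joint sits at the origin.

    Assumption: joint index 0 is the root (e.g. the spine base).
    """
    rx, ry, rz = frame[root]
    return [(x - rx, y - ry, z - rz) for x, y, z in frame]

def scale_to_unit(sequence):
    """Divide every coordinate by the largest absolute coordinate in the sequence."""
    peak = max(abs(c) for frame in sequence for joint in frame for c in joint)
    if peak == 0:
        return sequence
    return [[tuple(c / peak for c in joint) for joint in frame]
            for frame in sequence]
```

Centering removes the dancer's position in the capture space, and scaling reduces differences in body size and sensor distance between data sources.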

The NTU-RGBD60 database, sourced from https://rose1.ntu.edu.sg/dataset/actionRecognition/, collects and labels skeleton nodes through a Microsoft Kinect v2 sensor. This database is of high quality, with 60 human action classes and 56,000 human action fragments. In this study, the skeleton sequence data from the database’s moving images are preprocessed, resulting in the selection of 2531 action samples. These samples are then divided into training and test datasets at a ratio of 7:3, with the proportion of each data type in the two datasets consistently maintained.
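A 7:3 split that keeps the class proportions equal in both subsets is commonly called a stratified split. The sketch below shows one simple way to produce such a split from a list of labels; the function name and the per-class rounding rule are illustrative assumptions rather than the procedure used in the study.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_ratio=0.7, seed=0):
    """Split sample indices into train/test sets, class by class.

    Each class contributes about train_ratio of its samples to the
    training set, so class proportions are preserved in both subsets.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_ratio)
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)
```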

Experimental environment

This experiment is conducted on the Windows 10 system, utilizing the Keras DL framework for algorithm programming. The Nvidia GeForce GTX 1080Ti GPU serves as the graphics processing unit, providing fast calculation and training speeds to accelerate the training and inference processes of the DL model. For code development and experiments, PyCharm is chosen as the integrated development environment. Meanwhile, PyTorch is employed, offering abundant tools and functions to streamline model construction, training, and evaluation processes.

Parameters setting

In the model implementation stage, the 3D-CNNs serves as the basic model, enhanced through optimization in combination with ResNet. Specifically, a 3D-ResNet architecture is designed to identify ethnic dance movements. The model’s architecture comprises multiple convolutional layers, pooling layers, and FC layers. The dance motion videos are input into the model for normalization and standardization. Subsequently, 3D-ResNet is employed to extract features from the video sequences. Finally, a FC layer is used to classify and analyze the extracted features. Additionally, dropout techniques are implemented to mitigate overfitting issues, thereby enhancing the model’s generalization ability. Parameter tuning is a critical step in model training, as the configuration of hyperparameters significantly impacts model performance.
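To make the combination of 3D convolution and residual learning concrete, the following is a deliberately simplified, single-channel sketch in plain Python. It is not the architecture used in this study: real 3D-ResNet blocks use multiple channels, several convolutions, and normalization layers, and would normally be written with a DL framework. The function names are hypothetical.

```python
def conv3d_same(volume, kernel):
    """Naive single-channel 3D convolution with zero padding.

    volume is indexed [t][y][x]; kernel is a k x k x k cube (k odd).
    The kernel slides over time as well as over height and width.
    """
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    k = len(kernel)
    pad = k // 2
    out = [[[0.0] * W for _ in range(H)] for _ in range(T)]
    for t in range(T):
        for y in range(H):
            for x in range(W):
                acc = 0.0
                for dt in range(k):
                    for dy in range(k):
                        for dx in range(k):
                            tt, yy, xx = t + dt - pad, y + dy - pad, x + dx - pad
                            if 0 <= tt < T and 0 <= yy < H and 0 <= xx < W:
                                acc += kernel[dt][dy][dx] * volume[tt][yy][xx]
                out[t][y][x] = acc
    return out

def residual_block(volume, kernel):
    """Compute ReLU(conv3d(volume) + volume): the skip connection adds the input back."""
    conv = conv3d_same(volume, kernel)
    return [[[max(0.0, c + v) for c, v in zip(crow, vrow)]
             for crow, vrow in zip(cplane, vplane)]
            for cplane, vplane in zip(conv, volume)]
```

With an all-zero kernel the block passes a non-negative input through unchanged, which illustrates why residual connections make deep stacks easier to train: each block only has to learn a correction to the identity mapping.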

In this study, alongside fine-tuning the learning rate and dropout rate, meticulous adjustments are made to other hyperparameters such as batch size and the number of iterations. Through a series of experiments, the optimal combination of hyperparameters is determined for swift convergence on the training set and high accuracy on the test set. Furthermore, the model undergoes evaluation using both a self-constructed dataset and the NTU-RGBD60 database, affirming the superiority and generalization capacity of the proposed model in comparison with alternative algorithms. During the training phase, the model utilizes the SGD algorithm for optimization, with an initial learning rate set to 0.001, a batch size of 100, and 80 iterations. To fortify the model’s generalization ability and mitigate overfitting, Dropout regularization technique is incorporated. The Dropout rate is adjusted during experiments to values of 0.2, 0.4, 0.5, 0.6, and 0.8 to ascertain the optimal Dropout ratio. Notably, data preprocessing includes standardization and normalization to ensure data consistency and the convergence speed of the model.
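The reported training settings and the Dropout sweep can be written down as a small configuration sketch. The dictionary keys and function names are hypothetical, and the `inverted_dropout` function shows the common "inverted" formulation of Dropout, which may differ in detail from the framework implementation used in the experiments.

```python
import random

# Values taken from the text; the key names are illustrative.
TRAIN_CONFIG = {
    "optimizer": "SGD",
    "learning_rate": 0.001,
    "batch_size": 100,
    "iterations": 80,
}
DROPOUT_GRID = [0.2, 0.4, 0.5, 0.6, 0.8]

def sweep_configs():
    """Return one training configuration per candidate Dropout rate."""
    return [dict(TRAIN_CONFIG, dropout=rate) for rate in DROPOUT_GRID]

def inverted_dropout(activations, rate, rng):
    """Zero each activation with probability `rate` and rescale the survivors.

    Dividing by the keep probability keeps the expected activation
    unchanged, so no rescaling is needed at inference time.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Each configuration returned by `sweep_configs()` would be trained separately and compared on held-out accuracy to select the Dropout ratio.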

Performance evaluation

To evaluate the performance of this model, it is compared with the 3D-CNNs, ResNet, CNNs54, and the algorithm proposed by Tasnim & Baek (2023) on the self-built dataset and NTU-RGBD60 dataset, across 80 iterations. Evaluation metrics include accuracy, F1 value, and other indicators, as depicted in Figs. 5, 6, 7 and 8.

In Figs. 5 and 6, as the iteration times increase, the accuracy and F1 value of each algorithm within the self-built dataset initially display an upward trend before stabilization. Compared to other algorithms, the 3D-ResNet-based model proposed here achieves an accuracy of 96.70% and an F1 value of 86.52%. The hierarchical order of classification and recognition performance among the algorithms is as follows: the 3D-ResNet-based model > the algorithm proposed by Tasnim and Baek (2023) > 3D-CNNs > ResNet > CNNs. In terms of accuracy, the 3D-ResNet-based model demonstrates an improvement of at least 3.85%. The 3D-ResNet model combines the powerful spatiotemporal feature-capturing capability of 3D-CNNs with the deep residual learning mechanism of ResNet, enabling the model to extract richer and more complex dance motion features at deeper levels. This combination of depth and breadth not only improves the model’s recognition accuracy for individual dance movements but also enhances its ability to perceive the overall style and rhythm of the dance, resulting in higher recognition accuracy and F1 scores.
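For reference, the two metrics can be computed as in the sketch below. It assumes that the F1 value is macro-averaged over classes; the averaging scheme is not stated in the text, so this is an assumption. Under class imbalance, macro-averaged F1 can be noticeably lower than accuracy, because errors on small classes weigh as much as errors on large ones.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

On a toy set with eight majority-class samples and two minority-class samples, a single error on the minority class still yields 90% accuracy but a macro F1 of roughly 80%.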

The recognition results from 80 iterations of different algorithms in the NTU-RGBD60 database are further analyzed. Figures 7 and 8 show that as the number of iterations increases, the accuracy and F1 value of each algorithm exhibit an initial upward trend followed by stabilization. Compared to 3D-CNNs, ResNet, CNNs, and the model proposed by Tasnim & Baek (2023), the 3D-ResNet-based model achieves an accuracy of 95.41% and an F1 value of 86.99% in the NTU-RGBD60 database. Moreover, concerning accuracy, the 3D-ResNet-based model shows an improvement of at least 4.67%. Therefore, this model demonstrates the ability to accurately identify actions in both the self-built dataset and NTU-RGBD60 dataset, showcasing enhanced performance and generalization proficiency.

The performance on a public database is a vital highlight of this study. The NTU-RGBD60 database, being of high-quality and containing a wealth of human movement data, serves as a crucial validation benchmark. Through validation on this database, the 3D-ResNet-based model exhibits strong generalization ability and accuracy on large-scale datasets. This further proves the practicability and value of the algorithm. Therefore, this study not only achieves remarkable results on self-built datasets but also fully verifies its performance on public databases, substantiating the practical application potential of this algorithm in ethnic dance instruction.

Furthermore, in the self-built dataset, the 3D-ResNet-based model, 3D-CNNs, ResNet, CNNs, and the model proposed by Tasnim & Baek (2023) are individually evaluated across six ethnic dances: Dai, Miao, Tibetan, Uygur, Mongolian, and Yi dances. The accuracy results are depicted in Fig. 9.

Figure 9 indicates that the 3D-ResNet-based model outperforms other algorithms in accuracy across all dance types. For example, in Miao dance, the 3D-ResNet-based model achieves an accuracy of 92.86%, demonstrating a noticeable improvement compared to other algorithms. Similarly, for Dai dance, Tibetan dance, Uygur dance, Mongolian dance, and Yi dance, the 3D-ResNet-based model shows superior performance in accuracy.

The model’s performance is evaluated on both a self-built dataset and the NTU-RGBD60 database. However, potential biases, such as sample imbalance and data collection errors, may exist. For instance, there might be limitations in the number of demonstrators for specific dance types, resulting in relatively fewer samples for certain types of movements. This could lead to insufficient learning for the model regarding certain dance types, affecting its generalization ability. Beune et al. (2022) emphasized that sampling bias in minority cultural contexts could lead to a decline in a model’s generalization ability55. They pointed out that sampling bias often arose from class imbalances within datasets, which could affect the model’s recognition accuracy for certain types of dance. In this study, although the dataset includes six different ethnic dance styles, some dance categories have limited sample sizes, potentially leading to insufficient learning and recognition of these dances. To mitigate the potential impact of sample imbalance, data augmentation techniques are employed to expand the samples, increase the number of samples for specific dance types, and enhance the model’s learning capabilities across various dance categories. Regarding this, the confusion matrix results for the model in recognizing ethnic dance movements are outlined in Table 3. Regarding the confusion matrix results, particularly the misclassification between similar dance types, this study was inspired by Zhang (2022), who explored the challenges DL models face when differentiating highly similar data types56. Their research demonstrated that distinguishing similar dance movements—such as those in Miao and Dai dances—could be difficult due to overlapping motion patterns, leading to misclassification. A detailed analysis of the confusion matrix in this study revealed a higher misrecognition rate among certain dance types, likely due to the strong similarity in their movement characteristics.

In Table 3, each row of the confusion matrix refers to the actual category, while each column means the category predicted by the model. For example, the first row indicates samples actually belonging to Miao dance, with the model predicting it as Miao dance 95 times, erroneously predicting them as Dai dance 2 times, and so forth. The numbers on the diagonal represent samples correctly predicted by the model, with higher values indicating better performance. For instance, the number corresponding to the Miao dance category is 95, indicating that the model correctly predicts 95 Miao dance samples. Off-diagonal numbers represent samples erroneously predicted by the model. For example, the number in the first row and the second column is 2, illustrating that the model incorrectly predicts 2 samples that are actually Miao dance as Dai dance. Analyzing the entire confusion matrix enables identification of categories where the model performs well and others where its performance may be poorer. This aids in identifying areas for improvement to enhance the model’s overall performance. To visually illustrate the challenges in recognizing complex ethnic dance movements, this study generated keyframe visualizations for two specific dance actions: the rapid spinning step in Miao Lusheng dance and the synchronized three-step lift gesture in Uyghur Sainaim dance. In Fig. 10, red nodes indicate key skeletal joints, blue lines represent limb connections, and red dashed lines highlight the trajectory of hand movements. These visualizations clearly depict the spatiotemporal complexity of the movements. In Miao dance, the rapid torso translation is coupled with sinusoidal arm swings. In Uyghur dance, the precise synchronization of horizontal and vertical hand trajectories must be captured. Experimental results demonstrate that the proposed 3D-ResNet model effectively extracts such fine-grained features through spatiotemporal convolution and residual learning.
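The reading of a confusion matrix described above (rows as actual classes, columns as predicted classes) can be sketched as follows. The helper names are hypothetical, and any matrix passed to them in this illustration is a toy example, not the content of Table 3.

```python
def per_class_recall(matrix):
    """Diagonal entry divided by its row sum: share of each actual class recovered."""
    return [row[i] / sum(row) if sum(row) else 0.0
            for i, row in enumerate(matrix)]

def per_class_precision(matrix):
    """Diagonal entry divided by its column sum: share of each prediction that is correct."""
    n = len(matrix)
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    return [matrix[i][i] / col_sums[i] if col_sums[i] else 0.0
            for i in range(n)]

def most_confused_pair(matrix):
    """Return (actual, predicted, count) for the largest off-diagonal entry."""
    best = (None, None, -1)
    for r, row in enumerate(matrix):
        for c, count in enumerate(row):
            if r != c and count > best[2]:
                best = (r, c, count)
    return best
```

Applied to the full matrix, `most_confused_pair` points directly at the pair of dance types whose movement patterns the model most often mistakes for each other.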

To evaluate the contribution of each module, an ablation study was conducted by removing residual connections, adjusting the number of convolutional layers, and comparing performance differences. The results of the ablation study are presented in Table 4. The findings indicate that both the residual structure and deeper convolution layers significantly improved model performance, while Dropout effectively mitigated overfitting.

This study introduced the Temporal Adaptive Module (TAM) to enhance the model’s performance. The impact of the TAM on dance action recognition is summarized in Table 5. The results indicate that incorporating TAM into the 3D-ResNet model improved the accuracy of Miao dance by 2.27 percentage points, increasing from 92.86% to 95.13%. Similarly, the F1 score for Uyghur dance saw a notable increase from 88.2% to 91.5%, a gain of 3.3 percentage points. Although the 3D-ResNet + TAM method slightly increased inference time, with an average inference time of 21.7 milliseconds (3.5 milliseconds longer than the original 3D-ResNet), it remained more efficient than the GCN-based approach, which required 24.5 milliseconds. Moreover, the GCN method exhibited lower accuracy and F1 scores, at 89.4% and 85.1%, respectively. The experimental results demonstrate that the TAM effectively enhances the recognition accuracy of complex dance movements, yielding significant improvements across both dance types. However, this accuracy gain comes at the cost of slightly increased computational time, necessitating a trade-off between accuracy and efficiency in practical applications. Overall, the introduction of the TAM offers substantial advantages in model optimization.

To thoroughly assess the model’s generalization ability, this study introduced a cross-dataset testing experiment by performing cross-validation between the self-constructed dataset and the publicly available NTU-RGBD60 dataset, with the external AIST++ dataset included as a supplementary source. The experimental setup was as follows:

Training set: 70% of the self-constructed dataset + 70% of NTU-RGBD60.

Test set: 30% of the self-constructed dataset + 30% of NTU-RGBD60 + the entire AIST++ dataset.

The results of the cross-dataset generalization evaluation are presented in Table 6. The analysis revealed that the model’s accuracy dropped by 8.4% when tested on the AIST++ dataset, primarily due to differences in cultural context (e.g., stylistic variations between Western contemporary dance and traditional ethnic dance). Nevertheless, 3D-ResNet outperformed traditional 2D-CNN (+15.7%) and GCN (+7.9%) in cross-cultural scenarios, demonstrating its superior generalization capability.

To support model selection with a more intuitive rationale and enhance the argument for deployment feasibility, this study conducts a comparative analysis of 3D-ResNet, GCNs, and Transformer models in terms of model size, parameter count, and computational cost. As shown in Table 7, 3D-ResNet has significantly fewer parameters (23.5 M) and lower computational cost (42.7 GFLOPs) compared to Transformer (48.2 M, 78.9 GFLOPs). It also achieves real-time inference speed (18.2 ms/frame), outperforming GCN (24.5 ms/frame), thus satisfying the > 30 FPS requirement for real-time performance. Although GCN has fewer parameters (15.8 M), it depends on a predefined skeletal topology, such as 25-node skeleton graphs. This limits its ability to capture fine-grained movements specific to cultural dances, like finger gestures in Miao dance. It is also more sensitive to background noise. In contrast, 3D-ResNet autonomously learns spatiotemporal features via end-to-end convolution without requiring manual topology design, offering stronger generalization capabilities. Transformer models, despite their strength in sequence modeling, are limited by the computational complexity of the self-attention mechanism (O(T²·D)), making them less suitable for long video sequences, such as over-100-frame jumping sequences in Mongolian dance. 3D-ResNet uses residual connections and pooling layers to optimize gradient flow and feature compression, maintaining accuracy while reducing overhead. In summary, 3D-ResNet achieves the best balance among efficiency, generalization, and real-time performance, making it highly suitable for multicultural dance education applications.
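The relation between per-frame latency and frame rate, and the quadratic growth of self-attention cost with sequence length, can be checked with a short calculation. The sketch assumes that one inference corresponds to one frame and ignores data loading and other pipeline overhead; the function names are hypothetical.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second implied by a per-frame latency in milliseconds."""
    return 1000.0 / latency_ms

def meets_real_time(latency_ms, required_fps=30.0):
    """True if the implied frame rate reaches the required real-time threshold."""
    return fps_from_latency_ms(latency_ms) >= required_fps

def self_attention_cost(num_frames, feature_dim):
    """Leading-order cost T^2 * D of self-attention over a T-frame sequence."""
    return num_frames * num_frames * feature_dim

# Latencies reported in the text (ms per frame).
resnet3d_fps = fps_from_latency_ms(18.2)  # about 54.9 FPS
gcn_fps = fps_from_latency_ms(24.5)       # about 40.8 FPS
```

Doubling the sequence length quadruples `self_attention_cost`, which is the reason long sequences are singled out above as a difficulty for Transformer models.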

To further validate deployment feasibility, cross-platform inference experiments were conducted. As shown in Table 8, 3D-ResNet achieved high frame rates on both mobile (NVIDIA Jetson Nano: 52.3 FPS) and edge devices (Intel NUC: 68.1 FPS), significantly outperforming GCN and Transformer. These results demonstrate that 3D-ResNet maintains efficient inference even in resource-constrained environments, whereas the Transformer’s high computational load prevents it from meeting real-time requirements. This further supports the practical value of 3D-ResNet in educational scenarios involving real-time, multi-user feedback systems.

Discussion

In this study, a successful ethnic dance movement recognition model based on 3D-ResNet is constructed, yielding satisfactory experimental results. Firstly, the 3D-ResNet-based model achieves high accuracy on both the self-built dataset and the NTU-RGBD60 database, indicating its strong generalization ability in recognizing various types of dance movements. This success can be attributed to the utilization of 3D-CNNs technology, which effectively captures the spatio-temporal features of dance movements, thereby enhancing recognition accuracy. As mentioned by Chen et al. (2023)57, DL can accurately identify movements, providing educators with a more accurate and detailed tool for movement analysis. This contributes to a deep understanding of the details and characteristics of dance movements of different ethnic groups.

Secondly, the verification on both the self-built dataset and NTU-RGBD60 database reveals accuracy values of 96.70% and 95.41% respectively, demonstrating the remarkable advantages of the 3D-ResNet-based model across diverse dance types and datasets. This finding aligns with the works of Kushwaha et al. (2023)58 and Ma et al. (2024)59. Such results offer a feasible and practical solution in teaching practice, enabling educators to more effectively guide students in learning various ethnic dance movements.

This study integrates relevant literature to conduct an in-depth comparison of AI models and traditional teaching methods in ethnic dance education, highlighting their respective advantages and limitations. Berg (2023) proposed that in the traditional “demonstration-imitation” teaching model, instructors must repeat demonstrations multiple times for students to master complex movements, such as Tibetan ceremonial gestures60. In contrast, AI models can enhance learning efficiency by 37% through multi-angle playback and frame-by-frame analysis. Chen et al. (2023) reported that real-time motion-capture-based feedback systems reduced student error rates by 28% in Dai dance training but required expensive equipment, such as optical motion capture systems61. In comparison, the proposed AI model only requires an RGB camera, reducing costs by 80%. Meng et al. (2024) highlighted that traditional teaching methods suffer from uneven teacher distribution, particularly in remote areas where professional dance instructors are scarce, resulting in significant variability in students’ movement standardization62. The AI model’s adaptability helps narrow this gap to 12%. However, AI models also have limitations and practical challenges. One major issue is data dependency, as these models require large amounts of labeled data. For ethnic dances with limited sample availability, recognition accuracy is significantly lower than for mainstream dance styles. Regarding real-time performance, while a single inference takes only 18.2 ms, large-scale classroom scenarios with 50 students require distributed computing support, and the current architecture has not yet been optimized for cluster deployment. Additionally, the model lacks cultural interpretability: it can recognize movement forms but cannot decipher their symbolic meanings. For example, it identifies the “sleeve-flinging” gesture in Miao dance but does not interpret its ritualistic significance. 
To address these limitations, this study proposes the following improvements: integrating Few-shot Learning techniques to enhance recognition of low-sample ethnic dance movements, adopting lightweight models such as MobileNet-3D to support low-power devices and enable mobile applications, and collaborating with cultural scholars to construct a “movement-culture” knowledge graph to improve the model’s cultural interpretability.

The computational complexity of the proposed 3D-ResNet-based dance recognition model primarily arises from 3D convolution operations, which jointly model spatiotemporal features. Compared to traditional 2D-CNNs, 3D convolution kernels slide along the temporal dimension, significantly increasing parameter count and computational load. For instance, when processing an input video segment of size 224 × 224 × 8 (width × height × frames), the computational complexity of a single-layer 3D convolution is \(O(k^{3} \cdot C_{in} \cdot C_{out} \cdot W \cdot H \cdot T)\), where k is the kernel size, and \(C_{in}\) and \(C_{out}\) are the input and output channel numbers, respectively. To reduce computational costs, this study introduces residual modules to optimize gradient propagation efficiency and employs pooling layers to compress feature dimensions. Experimental results show that the model achieves an inference time of 18.2 ms per frame on an NVIDIA GTX 1080Ti GPU, meeting real-time performance requirements (> 30 FPS). However, for ultra-large datasets (e.g., millions of samples), further optimizations such as channel pruning or knowledge distillation are necessary to reduce computational overhead.
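The complexity expression above can be evaluated for a concrete layer. In the sketch below, the input size 224 × 224 × 8 comes from the text, while the kernel size of 3 and the channel counts (3 input, 64 output) are hypothetical values chosen only for illustration. Stride 1 and "same" padding are assumed, so the output has the same spatial and temporal size as the input.

```python
def conv3d_macs(k, c_in, c_out, width, height, frames):
    """Multiply-accumulate count of one 3D convolution layer.

    Follows k^3 * C_in * C_out * W * H * T, assuming stride 1 and
    'same' padding.
    """
    return k ** 3 * c_in * c_out * width * height * frames

def conv2d_macs_per_clip(k, c_in, c_out, width, height, frames):
    """Cost of applying a 2D convolution independently to every frame."""
    return k ** 2 * c_in * c_out * width * height * frames

# Hypothetical first layer: 3x3x3 kernel, 3 input and 64 output channels.
macs_3d = conv3d_macs(3, 3, 64, 224, 224, 8)
macs_2d = conv2d_macs_per_clip(3, 3, 64, 224, 224, 8)
ratio = macs_3d // macs_2d  # equals the kernel size k
```

Under these assumptions the 3D layer needs about 2.08 billion multiply-accumulate operations, exactly k = 3 times the cost of running the equivalent 2D convolution on each frame, which is the overhead introduced by sliding the kernel along the temporal dimension.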

The current model is trained primarily on RGB video data and skeleton sequences, without direct support for depth data. While skeleton data is inherently background-invariant, it relies on precise joint detection algorithms, which may fail in low-light or occlusion-heavy environments. Future research could explore multimodal fusion strategies and leverage transfer learning to adapt the model for depth-based modalities. Preliminary experiments indicate that when using depth maps from the NTU-RGBD60 dataset as input, model accuracy drops to 89.7%, suggesting that cross-modal generalization still requires further optimization.

The 3D-ResNet-based model demonstrates proficiency in recognizing different types of dance movements, accurately identifying unique motion features of dances such as Miao and Dai. This indicates the adaptability and universality of the 3D-ResNet-based model across various dance genres. Furthermore, compared to other algorithms, the 3D-ResNet-based model shows significant improvements in accuracy. The combination of 3D convolution and residual learning allows the model to better extract motion features, enhancing recognition precision. Additionally, the 3D-ResNet-based model exhibits high stability and robustness throughout the entire experimental process. In summary, this study achieves satisfactory results, providing strong technical support for ethnic dance education. However, some limitations are acknowledged, such as the size and diversity of the dataset, which may affect the model’s generalization capability. Therefore, future research could further expand the dataset and optimize algorithms to enhance their applicability and robustness.

Conclusion

Research contribution

This study has practical implications for ethnic dance instruction and contributes exploratory innovation in three respects. First, by introducing 3D-CNNs, it develops a recognition algorithm that shows clear advantages in ethnic dance action recognition. This matters for traditional dance instruction because it brings a new technological tool into conventional teaching and can thereby improve teaching effectiveness and efficiency. In Miao dance instruction, for example, traditional teaching may be limited by the teacher’s personal experience and abilities, which makes comprehensive and accurate guidance difficult. With the proposed model, teachers can identify and analyze students’ movements precisely and offer personalized, targeted guidance, which can markedly improve students’ learning outcomes.

Second, this study recognizes and analyzes different types of ethnic dances, validating the algorithm’s generality and adaptability. This is important for promoting cross-cultural dance education and comprehension. Schools in different regions may vary in how they understand and preserve the dances of different ethnic groups. The model can help teachers understand and teach a wider range of ethnic dances, fostering communication and understanding between cultures. When teaching Tibetan dance, for example, the 3D-ResNet-based model can help teachers gain a deeper understanding of its characteristics and movement essentials, contributing to the preservation and promotion of Tibetan culture.

Finally, this study provides reliable technical support and practical tools for ethnic dance instruction. With the proposed model, teachers can guide students more effectively in learning ethnic dances, improving students’ dance proficiency and performance. In school dance competitions where students showcase various ethnic dance movements, for instance, the 3D-ResNet-based model can assist judges in assessing performances accurately, improving the fairness and professionalism of the competitions.

The proposed model has potential applications in online education platforms, digital preservation of cultural heritage, and dance competition evaluation.

Online education platforms: The model enables real-time motion feedback, such as scoring the accuracy of “Mongolian Horse-Riding Dance” movements. When integrated with VR technology, it can provide an immersive learning experience.

Digital preservation of cultural heritage: By leveraging motion capture and classification, the model facilitates the creation of an ethnic dance motion database, aiding in the preservation and dissemination of endangered dance forms.

Dance competition evaluation: An automated scoring system reduces subjective bias and enhances fairness. Experimental results indicate a 92.3% consistency between model-generated scores and expert evaluations.
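The text does not define how consistency between model-generated scores and expert evaluations is computed. As a minimal sketch of one possible reading, the Python snippet below treats consistency as the fraction of performances for which the two scores differ by no more than a tolerance. The function name `agreement_rate`, the `tolerance` parameter, the 10-point scale, and the toy scores are all assumptions made for illustration and are not taken from the study.

```python
def agreement_rate(model_scores, expert_scores, tolerance=0.5):
    """Return the fraction of performances where the model score and the
    expert score differ by at most `tolerance` (an assumed definition)."""
    if len(model_scores) != len(expert_scores):
        raise ValueError("score lists must have the same length")
    if not model_scores:
        raise ValueError("at least one pair of scores is required")
    matches = sum(
        1
        for model, expert in zip(model_scores, expert_scores)
        if abs(model - expert) <= tolerance
    )
    return matches / len(model_scores)


# Toy, made-up scores on a 10-point scale (not data from the study).
model_scores = [8.5, 7.0, 9.0, 6.5, 8.0]
expert_scores = [8.0, 7.5, 9.0, 5.0, 8.5]

rate = agreement_rate(model_scores, expert_scores, tolerance=0.5)
print(f"agreement: {rate:.1%}")
```

Other definitions, such as a rank correlation between the two sets of scores, would be equally plausible. The tolerance-based rate is used here only because it is simple to interpret as a percentage.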

In summary, this study advances ethnic dance instruction through practical application and exploratory innovation. By introducing 3D-CNNs, it develops a recognition algorithm applicable to ethnic dance instruction. This improves teaching effectiveness and efficiency while promoting cross-cultural dance instruction and understanding. It also equips teachers with reliable technical support and practical tools, contributing to the development of ethnic dance instruction.

Future work and research limitations

This study developed a 3D-ResNet-based recognition model for ethnic dance movements that achieves high accuracy across six target dance styles (Miao, Dai, Tibetan, Uygur, Mongolian, and Yi). However, the model’s generalization capability has two limitations:

(1) Dependence on culturally representative training data: Recognition accuracy is significantly lower for movements such as the Yi ethnic group’s “Terraced Field Walk” than for Mongolian dance.

(2) Performance degradation for dance styles with distinct motion patterns: Dances with vastly different spatiotemporal characteristics (e.g., African war dances or Indian classical dance) show a decline in recognition accuracy, with cross-dataset test results dropping to 88.3%.

Future work will focus on three areas of improvement:

(1) Expanding cross-cultural generalization: Collaborating with cultural institutions from Southeast Asia and Africa to construct an extended dataset covering over 20 ethnic dance styles, followed by transfer learning to validate cross-cultural adaptability.

(2) Developing a dynamic cultural adaptation module: Enabling users to upload local dance videos so that the model can automatically optimize its feature extraction layers and improve the recognition of underrepresented dance styles.

(3) Integrating an educational feedback loop: Conducting a six-month field study with dance instructors and students to collect misclassification cases and cultural interpretation requirements (e.g., symbolic meanings of movements), and iteratively refining the model’s interpretability in a cultural context.
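Limitation (1) concerns accuracy gaps between dance styles. As a minimal sketch of how such gaps could be surfaced, the Python snippet below computes recognition accuracy separately for each dance style from lists of true and predicted labels. The function name `per_class_accuracy` and the toy labels are hypothetical and are not the study’s evaluation code or data.

```python
from collections import defaultdict


def per_class_accuracy(true_labels, predicted_labels):
    """Return a dict mapping each true class to the fraction of its
    samples that were predicted correctly."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    totals = defaultdict(int)   # number of samples per true class
    correct = defaultdict(int)  # number of correct predictions per class
    for true, predicted in zip(true_labels, predicted_labels):
        totals[true] += 1
        if true == predicted:
            correct[true] += 1
    return {label: correct[label] / totals[label] for label in totals}


# Toy, made-up labels for illustration only (not results from the study).
true_labels = ["Yi", "Yi", "Yi", "Yi",
               "Mongolian", "Mongolian", "Mongolian", "Mongolian"]
predicted_labels = ["Yi", "Dai", "Yi", "Miao",
                    "Mongolian", "Mongolian", "Mongolian", "Mongolian"]

accuracy = per_class_accuracy(true_labels, predicted_labels)
weakest = min(accuracy, key=accuracy.get)  # style with the lowest accuracy
print(accuracy, weakest)
```

Reporting accuracy per style rather than as a single aggregate figure makes it easier to decide which dance styles need additional training data.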

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author, Park Jae Keun, on reasonable request via e-mail (jaekeunpark@163.com).

References

Wardat, Y., Tashtoush, M., AlAli, R. & Saleh, S. Artificial intelligence in education: mathematics teachers’ perspectives, practices and challenges. Iraqi J. Comput. Sci. Math. 5 (1), 60–77 (2024).

Forero-Corba, W. & Bennasar, F. N. Techniques and applications of machine learning and artificial intelligence in education: a systematic review. Revista Iberoamericana de Educación a Distancia 27 (1), 209–238 (2024).

Foster, R. & Turkki, N. EcoJustice approach to dance education. J. Dance Educ. 23 (2), 91–101 (2023).

Ginting, Y. A. & Gulo, H. Cultural values and preservation efforts of the Karo ethnic Ndikkar dance in Lingga village, Simpang empat district. Formosa J. Sci. Technol. 2 (12), 3223–3232 (2023).

Lobo, J. Protecting Philippine dance traditions via education of tomorrow’s pedagogues. J. Ethnic Cult. Stud. 10 (1), 98–124 (2023).

Lei, L. & Tingting, T. Reconstruction of physical dance teaching content and movement recognition based on a machine learning model. 3C TIC: Cuadernos de desarrollo aplicados a las TIC 12 (1), 267–285 (2023).

Cai, X. et al. An automatic music-driven folk dance movements generation method based on sequence-to-sequence network. Int. J. Pattern Recognit. Artif. Intell. 37 (05), 2358003 (2023).

Hui, F. Transforming educational approaches by integrating ethnic music and ecosystems through RNN-based extraction. Soft. Comput. 27 (24), 19143–19158 (2023).

Nazari, H. & Kaynak, S. Classification of Turkish folk dances using deep learning. Int. J. Intell. Syst. Appl. Eng. 10 (3), 226–232 (2022).

Odefunso, A. E., Bravo, E. G. & Chen, Y. V. Traditional African dances preservation using deep learning techniques. Proceedings of the ACM on Computer Graphics and Interactive Techniques 5 (4), 1–11 (2022).

Bhuyan, H., Killi, J., Dash, J. K., Das, P. P. & Paul, S. Motion recognition in Bharatanatyam dance. IEEE Access 10, 67128–67139 (2022).

Jin, Y., Suzuki, G. & Shioya, H. Detecting and visualizing stops in dance training by neural network based on velocity and acceleration. Sensors 22 (14), 5402 (2022).

Nguyen, H. C., Nguyen, T. H., Scherer, R. & Le, V. H. Deep learning-based for human activity recognition on 3D human skeleton: survey and comparative study. Sensors 23 (11), 5121 (2023).

Liu, Y., Zhang, H., Li, Y., He, K. & Xu, D. Skeleton-based human action recognition via large-kernel attention graph convolutional network. IEEE Trans. Vis. Comput. Graph. 29 (5), 2575–2585 (2023).

Cheng, S. H., Sarwar, M. A., Daraghmi, Y. A., İk, T. U. & Li, Y. L. Periodic physical activity information segmentation, counting and recognition from video. IEEE Access 11, 23019–23031 (2023).

Chang-Bacon, C. K. We sort of dance around the race thing: Race-evasiveness in teacher education. J. Teacher Educ. 73 (1), 8–22 (2022).

Cai, X., Cheng, P., Liu, S., Zhang, H. & Sun, H. Human motion prediction based on a multi-scale hypergraph for intangible cultural heritage dance videos. Electronics 12 (23), 4830 (2023).

Liu, X. & Ko, Y. C. The use of deep learning technology in dance movement generation. Front. Neurorobotics. 16, 911469 (2022).

Malavath, P. & Devarakonda, N. Natya Shastra: deep learning for automatic classification of hand mudra in Indian classical dance videos. Revue d’Intelligence Artificielle. 37 (3), 689 (2023).

Rani, C. J. & Devarakonda, N. An effectual classical dance pose estimation and classification system employing convolution neural network–long short-term memory (CNN-LSTM) network for video sequences. Microprocess. Microsyst. 95, 104651 (2022).

Parthasarathy, N. & Palanichamy, Y. Novel video benchmark dataset generation and real-time recognition of symbolic hand gestures in Indian dance applying deep learning techniques. ACM J. Comput. Cult. Herit. 16 (3), 1–19 (2023).

Sun, Q. & Wu, X. A deep learning-based approach for emotional analysis of sports dance. PeerJ Comput. Sci. 9, e1441 (2023).

Zhong, L. A convolutional neural network based online teaching method using edge-cloud computing platform. J. Cloud Comput. 12 (1), 1–16 (2023).

Vrskova, R., Kamencay, P., Hudec, R. & Sykora, P. A new deep-learning method for human activity recognition. Sensors 23 (5), 2816 (2023).

Bera, A., Nasipuri, M., Krejcar, O. & Bhattacharjee, D. Fine-Grained sports, yoga, and dance postures recognition: A benchmark analysis. IEEE Trans. Instrum. Meas. 72, 5020613 (2023).

Gupta, C. et al. A real-time 3-dimensional object detection based human action recognition model. IEEE Open J. Comput. Soc. 5, 14–26 (2023).

Khan, F. H., Pasha, M. A. & Masud, S. Towards designing a hardware accelerator for 3D convolutional neural networks. Comput. Electr. Eng. 105, 108489 (2023).

Tasnim, N. & Baek, J. H. Dynamic edge convolutional neural network for skeleton-based human action recognition. Sensors 23 (2), 778 (2023).

Li, M. Ethnic dance movement recognition based on motion capture sensor and machine learning. Int. J. Inf. Commun. Technol. 25 (8), 81–96 (2024).

Zheng, D. & Yuan, Y. Pose recognition of dancing images using fuzzy deep learning technique in an IoT environment. Expert Syst. 42 (1), e13422 (2025).

Liu, X. et al. Design and implementation of adolescent health Latin dance teaching system under artificial intelligence technology. PLoS ONE. 18 (11), e0293313 (2023).

Lin, J. C. & Jackson, L. Just singing and dancing: official representations of ethnic minority cultures in China. Int. J. Multicultural Educ. 24 (3), 94–117 (2022).

Wang, W. Ethnic minority cultures in Chinese schooling: manifestations, implementation pathways and teachers’ practices. Race Ethn. Educ. 25 (1), 110–127 (2022).

Zhang, B. Multicultural dance-making in Singapore: Merdeka, youth solidarity and cross-ethnicity, 1955–1980s. Inter-Asia Cult. Stud. 23 (4), 645–661 (2022).

Borowski, T. G. How dance promotes the development of social and emotional competence. Arts Educ. Policy Rev. 124 (3), 157–170 (2023).

Yang, L. Influence of human–computer interaction-based intelligent dancing robot and psychological construct on choreography. Front. Neurorobotics. 16, 819550 (2022).

Rocha, D. & de Almeida, F. Q. Pedagogic experiences in a school contract project: the Scenic Afro-Brazilian Dance. Revista Tempos e Espaços em Educação 15 (34), 2 (2022).

Shen, X. & Ding, Y. Human skeleton representation for 3D action recognition based on complex network coding and LSTM. J. Vis. Commun. Image Represent. 82, 103386 (2022).

Song, Y. F., Zhang, Z., Shan, C. & Wang, L. Constructing stronger and faster baselines for skeleton-based action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 45 (2), 1474–1488 (2022).

Wang, M., Xing, J., Su, J., Chen, J. & Liu, Y. Learning spatiotemporal and motion features in a unified 2D network for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 45 (3), 3347–3362 (2022).

Sanchez-Caballero, A. et al. 3DFCNN: real-time action recognition using 3D deep neural networks with raw depth information. Multimedia Tools Appl. 81 (17), 24119–24143 (2022).

Guan, S., Lu, H., Zhu, L. & Fang, G. AFE-CNN: 3D skeleton-based action recognition with action feature enhancement. Neurocomputing 514, 256–267 (2022).

Senthilkumar, N., Manimegalai, M., Karpakam, S., Ashokkumar, S. R. & Premkumar, M. Human action recognition based on spatial–temporal relational model and LSTM-CNN framework. Materials Today: Proceedings 57, 2087–2091 (2022).

Zhang, J. et al. SOR-TC: self-attentive octave ResNet with temporal consistency for compressed video action recognition. Neurocomputing 533, 191–205 (2023).

Li, Z. & Li, D. Action recognition of construction workers under occlusion. J. Building Eng. 45, 103352 (2022).

Surek, G. A. S., Seman, L. O., Stefenon, S. F., Mariani, V. C. & Coelho, L. D. S. Video-based human activity recognition using deep learning approaches. Sensors 23 (14), 6384 (2023).

Li, S., Wang, X., Shan, D. & Zhang, P. Action recognition network based on local spatiotemporal features and global temporal excitation. Applied Sciences 13 (11), 6811 (2023).

Tian, Y., Yan, Y., Zhai, G., Guo, G. & Gao, Z. EAN: event adaptive network for enhanced action recognition. Int. J. Comput. Vision 130 (10), 2453–2471 (2022).

Abdelali, Z. & Bennoudi, H. AI vs. Human translators: navigating the complex world of religious texts and cultural sensitivity. Int. J. Linguistics Literature Translation. 6 (11), 173–182 (2023).