Abstract

Retinal diseases recognition is still a challenging task. Many deep learning classification methods and their modifications have been developed for medical imaging. Recently, Vision Transformers (ViT) have been applied for classification of retinal diseases with great success. Therefore, in this study a novel method was proposed, the Residual Self-Attention Vision Transformer (RS-A ViT), for automatic detection of acquired vitelliform lesions (AVL), macular drusen as well as distinguishing them from healthy cases. The Residual Self-Attention module instead of Self-Attention was applied in order to improve model’s performance. The new tool outperforms the classical deep learning methods, like EfficientNet, InceptionV3, ResNet50 and VGG16. The RS-A ViT method also exceeds the ViT algorithm, reaching 96.62%. For the purpose of this research a new dataset was created that combines AVL data gathered from two research centers and drusen as well as normal cases from the OCT dataset. The augmentation methods were applied in order to enlarge the samples. The Grad-CAM interpretability method indicated that this model analyses the appropriate areas in optical coherence tomography images in order to detect retinal diseases. The results proved that the presented RS-A ViT model has a great potential in classification retinal disorders with high accuracy and thus may be applied as a supportive tool for ophthalmologists.

Similar content being viewed by others

Introduction

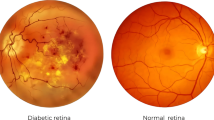

Age-related macular degeneration (AMD) is an ocular condition that presents significant challenges to global eye health1. Small yellow deposits beneath the retina, known as drusen, are hallmark features of dry AMD2. Vitelliform lesions are associated with a large spectrum of retinal diseases. In addition to Best disease, which is associated with hereditary inheritance and occurs in younger patients, there are acquired vitelliform lesions (AVL) often present in the elderly, as AMD.

AVL refers to yellowish subretinal vitelliform material accumulated in the macula, which progresses over time and eventually transforms to an atrophic lesion3. The incidence of AVL is believed to be 3 times higher than Best vitelliform macular dystrophy4 which has genetic background. The vitelliform lesion represent an accumulation of lipofuscin, melanosomes, melanolipofuscin and outer segment debris in the subretinal space, between the photoreceptors layer and retinal pigment epithelium (RPE)5. In the presence of drusen the material accumulates below the level of the RPE, which is well recognized using Spectral Domain Optical Coherence Tomography.

The relationship between AMD and vitelliform lesions was first noted by Gass. The natural course of AVLs could be further complicated by choroidal neovascularization or foveal atrophy5, as in AMD. Lesions in AVL appear in the same demographic as AMD6 and are often associated with drusen7 while in AVL the lipofuscin accumulation is within the subretinal space,8 AMD it accumulates below the RPE and still these two entities can still be easily confused.

Enormous development in computer vision and machine learning (ML) techniques has resulted in creating innovatory models for image-based automatic detection, indicating the location of various retina diseases or detecting them. This ability to identify patterns has revolutionized healthcare. These modern solutions have great potential in imaging that is extremely important for ophthalmology for detecting various retina disorders9,10. The sophisticated machine learning algorithms provide accurate diagnosis in all stages of the diseases. They also open new perspectives for ophthalmologists and patients for detecting diseases at an early stage and preventing them from vision loss. Many ML models have been applied with great success for recognition and classification of various AMD biomarkers utilizing different image modalities. These solutions support ophthalmologists in clinical decisions or determining the progression of the diseases. They decrease time needed for making a right diagnosis by indicating the proper distortions. They may have a decisive impact in cases where it is difficult to determine a specific disease, especially in its initial stage11.

The motivation for this study is to address the diagnostic challenge of distinguishing acquired vitelliform lesions from drusen in age-related macular degeneration, as both appear similarly on Optical Coherence Tomography (OCT) scans and can lead to delayed treatment if misdiagnosed. While many deep learning approaches focus on AMD, few target AVL specifically. According to the authors’ knowledge, this study is among the first to leverage Vision Transformers for AVL classification. By integrating OCT scans of AVL, drusen, and healthy eyes, the proposed Residual Self-Attention Vision Transformer aims to bridge a critical clinical gap. Early, accurate AVL recognition assists ophthalmologists in timely decision-making, reducing vision loss risk and ultimately improving patient outcomes.

The aim of the study is to automatically classify OCT images of eyes with Adult-onset vitelliform macular dystrophy eyes using machine-learning technology to distinguish between AVL and drusen in the course of AMD. The main motivation of this study covers the development of an automatic tool, using Residual Vit Transformer, for detection retinal eye diseases, such as AVL and AMD Drusen, as well distinguishing them from healthy cases. This novel approach has a potential to make far-reaching changes in ophthalmic care. Early recognition of these disorders will benefit an earlier diagnosis, which will allow for treatment to be established and protection against rapid progression of the disease. Moreover, this model may be treated as a supportive tool for quick determination of the analysed distortions.

The leading contributions of this paper are as follows:

-

Proposing a novel automatic method for AVL, macular drusen and normal cases detection from OCT imagining utilizing the Residual Self-Attention Vision Transformer. This model has a great potential in classification retinal diseases with high accuracy and thus in applying as a supportive tool.

-

Revealing the most ML models for retinal diseases, including CNN and ViT approaches. The ViT methods stated to outperform previously proposed ML solutions.

-

Applying the Residual Self-Attention module instead of Self-Attention one and prove to be effective model for retinal eye distortions.

-

Verifying the proposed model and prove its enormous potential for retinal eye diseases detection, regarding accuracy, precision, recall and F1-score metrics.

-

Applying Grad-CAM in order to assess the interpretability of the RS-A ViT.

Related works

Among all ML architectures, Convolutional Neural Networks (CNNs) models have been widely applied in retinal disease detection, for AMD detection12,13. They have ability to automatically extract features that are crucial for classification purposes. Their architecture consists of convolutional layers allowing for extracting hierarchical patterns from images, regardless their orientation, position, translation or scales. One example is the Deep CNN-GRU for classification of early-stage and end-stages AMD diseases, including Diabetic Macular Edema (DME), drusen, Choroidal neovascularization (CNV) and healthy cases was proposed in14. This high-level tool reached up to 98.90% accuracy, which proved to be a successive supporting tool for ophthalmologists.

Another example of CNN approach, hybrid Retinal Fine Tuned Convolutional Neural Network (R-FTCNN), achieved great success in recognition of diabetic macular edema, drusen, and choroidal neovascularization from OCT images15. This model obtained remarkable results, up to 100% accuracy for the Duke dataset and up to 99.70% for the n University of California San Diego dataset.

The pre-tuned algorithms on ImageNet dataset appeared to be excellent tool to recognize diabetic AMD, DME and normal cases16,17. In study16 the classification was performed using CNN and Bilinear Convolutional Neural Network (BCNN) methods. Among InceptionV3, VGG16, VGG19 and BCNN solutions, the customized CNN also achieved very high accuracy, exceeding 90%. Based on labelled OCTimagining ten various retina diseases including AMD, were recognized with great success17. The binary classification utilizing five pre-trained models was applied, such as: AlexNet, DenseNet, InceptionV3, ResNet50, and VGG16. The ensemble model was created which proved also to achieve very high accuracy, equal to 98.5%. Additionally, Gradient-weighted Class Activation Mapping (Grad-CAM) was applied for visualization how the classification analyzed the images. Early and intermediate stages of AMD were distinguished from normal cases with high performance using InceptionV3, ResNet50, InceptionresNet50 models, pre-trained on ImageNet dataset, based OCT scans18. The following biomarkers were investigated as AMD pathology: reticular pseudodrusen, intraretinal hyperrefective foci and hypofective foci.

The combination of CNN architecture, ML methods, explainable artificial intelligence and image preprocessing technique was proposed for choroidal neovascularization, diabetic macular edema and drusen detection with great success19. The fully dense fusion included neural network utilized pre-trained AlexNet architecture. The OCT images were preprocessed utilizing gaussian, anisotropic difusion, and bilateral flters in order to remove noise and improve edges. The extracted features by fusion network were then retrained with the deep SVM (D-SVM) and deep KNN classifers (D-KNN). This hybrid system with deep SVM reached up to 99.60% accuracy. Another CNN approach with ensemble learning and activation maps as extracting feature method was proposed for choroidal neovascularization, diabetic macular edema and drusen detection20. The model utilized the pre-trained AlexNet architecture, (called the Fine-Tuned AlexNet, FT-CNN). This network achieved very high performance, up to 99.70% accuracy.

ResNet34-Classifier was applied for AMD and normal cases detection based on biomarkers, such as drusen and pigmentary disorders21. Diabetic macular edema, drusen, CNV (wet AMD) together with normal cases were recognized. The transfer learning, applied with success, was verified using three executions: random initialization, ImageNet and OCT. Deep learning framework utilizing a 3D InceptionV1 model, detecting drusen or pseudodrusen from gradable scans, also obtained high performance22. Attention modules have been also applied with CNN to improve feature extraction and thus enhance the classification performance. Pseudodrusen were detected using multimodal, multitask, multiattention (M3) model using three various image types: fundus photography, fundus autofluorescence and the combination of these two with great success23. The deep learning approach consisted of InceptionV3, self and cross-modality attention and fully-connected layers. The study confirmed that adding attention models improves the network performance. Multi attention modules were gathered into highly effective Residual Attention Network for Optisk disk drusen and normal cases detection24. Each attention module consisted of the mask branch and the trunk branch, which allowed for extraction essential features. The examination was performed on the OCT datasets gathered from three research centers together with Kaggle dataset. This solutions obtained accuracy exceeding 97%.

Vision Transformer (ViT) has been one of the most popular ML model applied for image processing, recently. It presents a different approach to image processing. The input image is converted to flattened 2D patches which are further convert to a constant latency vector. Utilizing self-attention mechanism both local and global features are extracted. This groundbreaking technique has been applied for retinal eye detection with remarkable success, outperforming classification utilizing CNN methods11,25,26,27,28. Also in case of segmentation ViT models were stated to outperform CNN models29. Drusen, CNV, DME together with normal cases were detected in OCT imagining using ViT, Token-To-Token Vision Transformer (T2T-ViT) and Mobile Vision Transformer (Mobile-ViT) models11. The proposed solutions achieved very high accuracy results. The Mobile-ViT was stated as the most appropriate model. Medical ViT with fine-tuning utilizing stitchable neural networks for drusen, CNV and normal cases detection also achieved very high performance25. Two datasets were used for pre-training: NEH dataset and University of California San Diego (UCSD) dataset. The lightweight hybrid solution combining CNN with ViT models for DME, CNV, drusen and normal cases detection using OCT imaging were applied providing superior performance30. Another hybrid architecture, Conv-ViT, was applied with great success for CNV, DME, drusen together with normal cases31. This fusion model allowed for shape-based texture by integrating advantages of CNN and ViT approaches. The pre-trained InceptionV3 and ResNet50 architectures were used. DeepDrAMD architecture is one more example of high performance model, based on vision transformer, for dry AMD, wet AMD, type-I, type-II AMD and normal cases detection based on fundus photograph26. This approach integrated SwinTransformer together with augmentation technique. The model achieved performance not lower than 90%. The Swin Transformers tool obtained also high-level performance for macular derma, for multi diabetic retinopathy detection32. The deep learning (DL) approaches consisted of encoder and classification parts for AMD versus normal cases detection using three OCT dataset were studied in33. Pre-trained ResNet18 and ViT models were stated to be the most efficient for this type classification, exceeding 98%.

Subjective interpretation and inter-observer variability of the OCT images highlight the need for standardized diagnostic approaches in macular diseases. While modern multimodal imaging remains helpful, there is not a single diagnostic test or imaging modality that can provide a definitive diagnosis of AVL and AMD.

Methods

The Vision Transformer (ViT) represents a significant advancement in computer vision by adapting the transformer architecture - originally developed for natural language processing-to image recognition tasks. This adaptation involves reimagining images as sequences of patch embeddings and processing them through Transformer layers that utilize Multi-Head Self-Attention (MHSA) mechanisms and Feed-Forward Networks. This article provides an in-depth exposition of the Vision Transformer layer, including detailed mathematical formulations, comprehensive explanations of each component, and insights into how these components collectively contribute to the overall performance of the architecture.The whole flow diagram of proposed methodology is presented in Fig. 1, while the RS-A ViT processing is visually represented in Fig. 3 and transformer details are presented in Table 2.

In this study, several hyperparameters were taken into account during the design and training of the Residual Self-Attention Vision Transformer. The number of encoder layers, the dimensions of query-key-value vectors in each attention head, the feed-forward network size, and the patch size for dividing OCT images were considered. To achieve an optimal balance between model capacity and generalization, these parameters were varied, and validation accuracy was monitored at each training iteration. The search process was conducted using both random sampling of candidate configurations and a Bayesian optimization procedure, in which a probabilistic model of the search space was updated based on previous trials. This approach enabled the most promising sets of hyperparameters to be explored more thoroughly, while less effective configurations were quickly discarded. Through careful tuning of factors such as patch size and dropout rate, a final setup was reached that minimized overfitting and consistently improved classification metrics across the dataset.

Dataset

The structure of the OCT dataset, prepared for the purpose of this study, is depicted in Fig. 2. The AVL OCT subset from the same retinal imaging modality (AngioVue OCT-A system - Optovue Inc., Fremont, CA, USA) were gathered from two ophthalmic research centers: the Eye Clinic, Department of Public Health, Federico II University in Italy (ORC1) and the Chair and Department of General and Pediatric Ophthalmology at the Medical University of Lublin in Poland (ORC2). The collected AVL subset has been thoroughly verified. The images that were blurred or illegible have been excluded from the study. Totally, 125 images have been qualified for the study.

All OCT images were initially examined for motion artifacts and overall quality to remove scans with excessive blur or poor contrast. Each retained image was then processed with a gaussian and median filter to reduce speckle noise while preserving key structural boundaries. Following this denoising step, a rectangular region encompassing the macula was manually selected, in case of AVL images, ensuring that peripheral areas not relevant to disease recognition were excluded. The resulting images were resized to a fixed resolution of 224\(\times\)224 pixels to match the input layer requirements of the classification networks. To normalize pixel intensities and reduce variability across different imaging sessions, the intensities in each image were scaled to a [0, 1] range based on individual minimum and maximum values. The drusen and normal cases subsets were collected from the Labeled Optical Coherence Tomography34. Thus, ORC1/ORC2 are specialize ophthalmology research centers focusing primarily on advanced AMD (or a particular variant such as AVL), they have limited collection of either normal eyes or mild-to-moderate AMD (drusen) images. For this reason, it was decided to include two other classes from public data sets in the conducted studies. Using a publicly available UCSD dataset ensures that the drusen and normal classes are adequately represented. Incorporating a well-known dataset add credibility and reproducibility to the study. Finally, the whole dataset consisted of 125 AVL images, 577 DRUSEN images and 578 images of NORMAL cases. The entire dataset was divided into three subsets for training, validation, and testing purposes. The distribution follows a ratio of 70%, 15%, and 15%, respectively, maintaining proportions between individual classes. Division and data details can be found in Table 1.

All studies were carried in accordance with the relevant guidelines and regulations, as well as, the Helsinki Declaration. Their scope and method of conducted study were approved by the Ethics Committee of the Medical University of Lublin (no. KE-0254/260/12/2022). In addition, informed consent was obtained from all study participants or their legal guardians. All data available as part of the conducted studies were anonymized to prevent obtaining personal patient data.

The dataset structure. The AVL subset was gathered from ORC1 and ORC2. The DRUSEN and NORMAL subsets were collected fron OCT dataset34. ORC1 stands for Eye Clinic, Department of Public Health, Federico II University in Italy, ORC2 corresponds to Chair and Department of General and Pediatric Ophthalmology at the Medical University of Lublin in Poland.

Data augmentation

Data augmentation is a one of the often applied techniques for image classification to enhance the performance and generalization capabilities of machine learning models35. This process involves extending an existing dataset by creating new training samples by applying different transformations to the initial dataset. Data augmentation process increases the diversity of the samples and reduces the risk of overfitting. These image operations help in training models by taking into account common features such as changes in illumination, scale, orientation, and others. Moreover, data augmentation enables the enlargement of the dataset without the need for additional obtaining the samples qualified for a given criterion. It also improves the model’s ability to generalize new data, previously unused in the training process. Thus, data augmentation plays a pivotal role in DL models training by adapting it to the diverse real word images36. The following data augmentation method were utilized36,37:

-

Rotation: images are rotated by a certain angle to obtain various perspectives and orientations.

-

Scaling: images are resized while maintaining their aspect ratio to gain different distances or sizes.

-

Crop and resize: randomly cropping an image and then resizing it allows the model to learn features from various focal points and scales within an image.

-

Color jittering: lighting conditions and color variations are modified by changing brightness, saturation, contrast, as well as hue of image.

-

Gaussian noise: small distortions to images are added using random noise in order to reduce the model’s sensitivity.

-

Elastic deformation: local distortions utilizing elastic transformations are performed to enhance the model’s tolerance for deformations of the objects.

-

Random erasing: randomly selected parts of the image are erased utilizing a solid color or noise so that the model may focus on relevant features.

-

Cutout: the image is randomly masked out by square regions which improve the model’s robustness while the model focuses on various image parts during training process.

-

Horizontal and vertical flipping: these operations simulate various image orientation and perspective which improve training process, especially for symmetrical features.

Due to the small number of dataset, the above-mentioned were applied to enhance the model’s performance. The final structure of dataset in shown in Table 1.

Vision transformer layer

The traditional Vision Transformer (ViT) follows the common structure outlined in38, incorporating a sequence of MLP and attention modules that are stacked interchangeably within the network. Among various forms of attention mechanisms, the widely employed scaled dot-product self-attention allows the model to uncover intricate connections between the elements in the input data sequence, dynamically assigning different levels of importance to each element based on the learned relationships.

The Vision Transformer layer is composed of several key components: input embeddings, Multi-Head Self-Attention, Feed-Forward Networks, Layer Normalization, and Residual Connections. Each plays a vital role in transforming and processing the input data, enabling the ViT to learn complex patterns within images.

In this study, the foundational ViT architecture is utilized. The model specifications, which include 12 Encoder blocks, are outlined in Table 2. A ViT model configured is designed to handle images with 3 RGB channels and dimensions of 224 x 224 pixels. Initially, the Conv2D layer divides the input image into smaller fragments known as “patches”, each representing a distinct part of the image. These patches are subjected to a 16x16 filter within the Conv2D layer. Subsequently, ViT applies a transformation to each patch through the Conv2D layer, converting these visual input fragments into representations as a vectors, which are then processed within the encoder blocks of the ViT model.

This article progresses by utilizing an attention layer that takes tokens as its input. Initially, tokens represent patches in the input image. As the transformer model goes deeper, however, the attention layers compute attention scores based on tokens modified by the preceding layers. Thereby diminishing the straightforwardness of the representation.

The attention module in Vision Transformers is a key component that enables these models to capture and process visual information effectively. Vision Transformers represent a shift from traditional convolutional neural networks for image recognition tasks, leveraging the power of the transformer architecture, which was originally designed for natural language processing. The central component of the attention module in ViTs is the self-attention mechanism, enabling the model to assess the significance of various parts of the input data when making predictions39. This mechanism computes attention scores that determine how much each part of the input should contribute to the final representation. To capture different aspects of the input data, ViTs use multi-head attention. This involves running multiple self-attention operations in parallel, each with different learned projections. The outputs of these attention heads are then concatenated and linearly transformed to form the final output. Another attention mechanism applied in ViT is residual attention modules. They are inspired by residual networks (ResNets) and they incorporate residual connections into the attention layers to improve learning dynamics and model performance. The output of the MHSA mechanism is combined with the input through residual connections. This residual attention mechanism ensures that the model can learn to focus on important features while still retaining the context of the original input.

Input embeddings

The Vision Transformer extends the Transformer to image recognition tasks by directly applying it to sequences of image patches. A crucial component of this adaptation is the method by which images are converted into input embeddings suitable for the Transformer encoder.

In traditional Transformers used for natural language processing, the input tokens are words or subword units, each represented by a fixed-dimensional embedding vector. Similarly, in ViT, an image \({\bf{x}} \in \mathbb {R}^{H \times W \times C}\) is reshaped into a sequence of flattened patches \({\bf{x}}_p\), where (H, W) are the height and width of the original image, and C is the number of channels.

The image is divided into \(N = \frac{HW}{P^2}\) patches of size \((P \times P)\), Each patch is flattened into a vector and projected linearly to obtain the patch embeddings. Formally, the process can be described as:

where \({\bf{x}}^n \in \mathbb {R}^{P \times P \times C}\) represents the n-th image patch, \(\text {vec}(\cdot )\) denotes the vectorization operation that flattens the patch into a vector of size \(P^2 C\), and \({\bf{E}} \in \mathbb {R}^{D \times P^2 C}\) is a learnable linear projection (embedding) matrix that maps the flattened patch to a D-dimensional embedding space.

To retain positional information - which is crucial for capturing spatial relationships in images - ViT adds learnable positional embeddings \({\bf{E}}_{\text {pos}} \in \mathbb {R}^{(N+1) \times D}\) to the patch embeddings. Additionally, a special classification token \({\bf{x}}_{\text {class}} \in \mathbb {R}^{D}\) is prepended to the sequence. The final input embeddings \(\textbf{Z}_0\) fed to the Transformer encoder are given by (2):

where \([\cdot ]\) denotes concatenation along the sequence dimension, and \({\bf{E}}_{\text {pos}}^n\) is the positional embedding corresponding to the n-th patch. The inclusion of the positional embeddings allows the model to maintain spatial relationships between patches, which is essential for vision tasks. Unlike CNNs that inherently capture locality through convolutional kernels, the Transformer architecture relies on positional information to model the data effectively.

Multi-head self attention

The self-attention mechanism is unique in its ability to incorporate the context of input sequences to improve attention. This concept is groundbreaking as it successfully captured intricate details in images. This mechanism is successfully implemented in combined structure - MHSA (Fig. 4). This is a foundational component in ViTs that facilitates the modeling of complex interactions between different parts of an image. In ViTs, images are partitioned into a series of patches, which are then flattened and projected into an embedding space via a linear transformation. To maintain the positional information inherent in the spatial structure of images, to patch embeddings, positional embeddings are added.

Let \(X \in \mathbb {R}^{Nxd}\) represent the sequence of embedded image patches, where N denotes the number of patches and d is the dimensionality of the embeddings. The MHSA mechanism operates by linearly projecting X to multiple sets of Q, K, V, which are queries, keys and values, respectively, applying distinct learned projection matrices: \(Q_i = XW_i^Q\), \(K_i=XW_i^K\), \(V_i=XW_i^V\), \(\text {for i} = 1, \ldots , h\) where \(W_i^Q\), \(W_i^K\), \(W_i^V \in \mathbb {R}^{dxd_k}\) are the projection matrices for the i-th attention head, h is the total number of heads, and \(d_k\) is the dimensionality of the queries and keys in each head. Each attention head computes attention weights using the scaled dot-product attention mechanism (3) (4):

The scaling factor \(\sqrt{d_k}\) is critical as it counteracts the effect of large dot-product values, which can lead to small gradients and slow convergence during training.

Despite their exceptional ability to model token relationships, standard self-attention mechanisms can be computationally expensive when handling a large number of image patches or high-dimensional features. Instead of processing the full-dimensional Q, K, V directly, MHSA splits them into h smaller subspaces called heads, each with a reduced dimension of \(\frac{d_k}{h}\). This facilitates the simultaneous consideration of different aspects of the information input, which enables the model to have a more focused approach.

Formally, for a specific head h and layer i, the attention features for position p, denoted as \(\text {Attention}(Q,K,V)_{p,h}\), can be computed as (5)24:

where h represents the head index, \(Q_{p,h}\) is the query vector for position p in head h, and \(K_{h}\) and \(V_{h}\) are the key and value matrices for head h, respectively. By incorporating several attention heads, the model can simultaneously focus on different representation subspaces, allowing it to capture a variety of informational aspects. The outputs from each head are then concatenated to provide a comprehensive attention result that incorporates insights from each individual subspace. Formally, according to the definition of MHSA, the combined attention output at position p is (6)24:

where \(\text {Concat}(\cdot )\) denotes concatenation along the head dimension, and \(W^{0}\) is the output projection matrix.

The MLP module, on the other hand, extracts features independently from each position and is usually constructed by stacking two linear layers with weights \(W^0\) and \(W^1\), with a non-linear activation function \(\sigma\) in between. This module represents the last processing step of the transformer block, taking as input \(\text {Attention}(Q,K,V)_{i}\) and producing the output features of layer i, denoted as \(X_{i+1}\), which serve as the input features for layer \(i + 1\). Formally, it can be represented as (7)40:

where \(W^0\) and \(W^1\) are the weights of the two linear layers composing the MLP of layer i.

To prevent the vanishing gradient problem (as noted in38), in each attention head, the model employs scaled dot-product attention to compute weighted combinations of the value vectors \(V_i\). These weights are calculated based on the scaled similarity scores between the corresponding queries \(Q_i\) and keys \(K_i\), effectively measuring how relevant each key is to a given query. This attention mechanism allows the model to prioritize the most pertinent image patches during the encoding process, allowing it to effectively capture both short-range and long-range dependencies within the image data. By synthesizing information from diverse regions of the image, Multi-Head Self Attention enhances the model’s capacity to discern complex visual patterns, which significantly improves the performance of Vision Transformers.

Feature collapsing is a phenomenon commonly observed in ViTs architectures. It refers to the tendency of features extracted from different image patches to become less distinctive and increasingly similar as the network depth increases. This effect primarily arises due to the nature of the attention mechanism used in ViTs, which progressively aggregates information between image patches as it traverses through the network layers.

Mathematically, this process can be described by expressing the output of the attention mechanism as a weighted summation of feature patches41:

Here, \(Attention(Q,K,V)_{i,h}^{m}\) denotes the feature vector for patch m at layer i and head h, representing a weighted average of features from all patches j. The weights \(Attention(Q,K,V)_{i,p,h}^{m,j}\) are derived from the attention map and satisfy the normalization condition \(\sum _{j=1}^{M} Attention(Q,K,V)_{i,p,h}^{m,j} = 1\). The vectors \(V_{i,h}^{m,j}\) are the value vectors corresponding to each patch.

Although the attention mechanism is designed to capture global relationships between different feature patches38, it can inadvertently lead to a loss of feature diversity, resulting in feature collapsing. Empirical studies have visualized this phenomenon by constructing feature similarity matrices using cosine similarity between distinct feature patches extracted by the ViT model. These visualizations reveal a noticeable trend: as features progress from the shallower layers to the deeper layers, they become increasingly similar.

One strategy to mitigate feature collapsing is the use of residual connections, which establish pathways between features across different layers while bypassing the attention mechanism. Formally, the residual connection combined with the \(\text {MHSA}\) operation is expressed as41:

In Eq. (9), \(X^{i}\) is the input to layer i, and the identity mapping \(X^{i}\) runs in parallel to the \(\text {MHSA}\) operation. Intuitively, since the features in \(X^{i}\) exhibit greater diversity before passing through \(\text {MHSA}\), adding them to the output helps preserve the characteristics from the previous layer. Consequently, the output retains more diverse features. However, the residual connection alone may not be sufficient to fully prevent feature collapsing, as empirical evidence suggests.

Residual self-attention

While customizing ViT models, it is common to modify components such as the Multi-Head Self-Attention mechanism, normalization layers, the Multilayer Perceptron (MLP) module, or the residual connections between features. These modifications aim to maintain a specific flow of information at the feature level between adjacent transformer blocks39,42.

To enhance this information flow and increase feature diversity, a new skip connection between consecutive MHSA layers is introduced (Fig. 5). This innovation allows attention information from earlier (shallower) layers to propagate and accumulate in deeper layers. This process is referred to as residual attention and complements the existing residual connections typically found at the end of each transformer block. Unlike traditional skip connections that bypass the MHSA layer to forward low-level features, residual attention propagates the query Q and key K matrices. These matrices define the relationships between patches used for feature extraction, enabling the model to consider previously learned relationships while discovering new ones. Formally, the proposed attention mechanism modifies the computation of the attention scores in the MHSA. Instead of computing the attention for each layer independently, the attention scores are aggregated with those from the previous MHSA layers. Specifically, for the ith layer, the attention scores are computed as follows40:

For i=0 (the first layer):

\(\text {Attention}_0 = \frac{Q_0 K_0^\top }{\sqrt{d_k}}\)

For \(i>0\)

\(\text {Attention}_i = \xi \left( \frac{Q_l K_l^\top }{\sqrt{d_k}} \right) + (1 - \xi ) \, \text {Attention}_{i-1}\)

where Q and K are the queries and keys at layer i, \(d_k\) is the dimensionality of the queries and keys, \(\xi \in [0, 1]\) is a learnable gating parameter that balances the contribution of the current layer’s attention and the accumulated attention from previous layers. The scaling factor \(\sqrt{d_k}\) is critical, as it mitigates the effect of large dot-product values, which can lead to vanishing gradients and slow convergence during training.

Attention globality refers to the capacity of the attention mechanism in ViTs to encompass and consider information from all patches across an image, rather than being restricted to local or nearby elements. In other words, it represents the attention’s global receptive field. This capability becomes particularly pronounced in the deeper layers of a ViT, as these layers extract high-level representations that account for long-range relationships between patches. To quantify the globality of the attention mechanism across both heads and layers, we utilize the non-locality metric introduced by Cordonnier et al.43. This metric calculates, for each query patch m the relative positional distances to all key patches j weighted by their attention scores \(A_{m,j}^{i,h}\). The resulting sum is then averaged over all patches to derive the non-locality metric for a specific head h. Averaging over all heads yields the non-locality metric for the entire layer. Mathematically, the non-locality metric is defined as44:

where \(\Vert \delta _{m,j} \Vert\) represents the relative positional distance between query patch m and key patch j, M is the total number of patches, H is the number of heads, \(D_{i,h}\) denotes the non-locality metric for layer i and head h, and \(D_{i}\) is the average non-locality metric for layer i. Since \(\Vert \delta _{i,j} \Vert\) remains constant throughout the network (as the relative positions of patches do not change), the value of \(D_{i,h}\) depends entirely on the attention scores \(A_{m,j}^{i,h}\) . Considering the residual attention mechanism, which combines attention scores from both the current layer i and the previous layer \(i-1\), the attention scores are updated as44:

where \(\xi \in [0,1]\) is a weighting factor controlling the contribution from the current and previous layers. Consequently, the globality of layer i in at a given head h, can be represented as44:

Since the attention scores from earlier layers generally tend to be more locally focused compared to those of the current layer, the weighted combination of these sets of scores is expected to result in an attention matrix that exhibits less globality than if only the scores from the current layer were used. In other words, combining the attention scores of the current layer i with those of previous layers through residual attention at each head h effectively slows down the globalization process.

Evaluation metrics

Four evaluatiom metrics, Accuracy (14), Precisson (15), Recall (16) F1-score (17), and Specifictiy (18) widely used in classification purposes, have been applied to assess the models’ performance.

where TP stands for number of true positive samples, TN - number of true negative samples, FP - number of false positive samples, FN - number of false negative samples

Grad-CAM

Grad-CAM is an interpretability technique that identifies the regions of an image most relevant to a chosen class prediction. By computing the gradient of the target class score with respect to the final convolutional layer’s feature maps, Grad-CAM quantifies each map’s contribution to the decision. These gradients are averaged to derive a weight \(\alpha _i\) for the i-th feature map, as shown in Eq. (19)45:

where \(\partial y^c\) denotes the score for class c, \(\partial A_{kj}^i\) is the activation at spatial location (k, j), and N is the total number of pixels. Weighted feature maps are then summed and passed through a ReLU function to highlight positively influential areas, yielding a heatmap according to Eq. (20)45:

This heatmap, which assigns higher intensities to crucial regions, is resized and overlaid on the original image to illustrate the model’s focus. In doing so, Grad-CAM provides a visually interpretable account of where a network is “looking,” helping to assess whether the model bases its predictions on pertinent features or spurious artifacts. By revealing these focus areas, practitioners can further verify the model’s reliability or diagnose potential shortcomings under varied conditions.45

Results and discussion

A sequence of trials was conducted, considering the random partitioning of raw data into training, validation, and test elements, with proportions of 70%, 15%, and 15%, respectively. The subsets were selected to include instances of all types of classes in the proportions mentioned earlier. In each partitioning scenario, 5 separate tests were performed autonomously. The validation was aimed at determining the details values of the hyperparameters. The validation set served also as a quick checkpoint to verify whether the model is stable and whether it is worth improving it further - to stop the training early.

The comprehensive analysis of six models and their ability to classify retinal diseases are presented in Figs. 6, 7, 8 and 8 and Table 3. This study involves well-known DL networks, such as: EfficientNet, InceptionV3, ResNet50, and Vision Transformer. The obtained results were compared to the proposed new solution, namely Residual Self-Attention Vision Transformer. All the analysed models, except VGG16, achieved very high percentage of AVL recognition − 93.33% (Fig. 6). The VGG16 reached 80%. In case of drusen, the best recognition performance was obtained for the ViT model compared to the EfficientNet, InceptionV3 and ResNet50. Regardless, the highest classification performance was achieved by the RS-A ViT, outperforming other methods up to 5.27%. Although all analysed networks recognized normal cases with very high accuracy, the RS-A ViT achieved the highest result, 100%.

Precision and recall metrics play a pivotal role in investigating the model’s accuracy as well as determining the imbalanced problem. Precision indicates the model’s ability to correct classify the positive samples, while recall measures how well the model is able to identify the correct samples. These two metrics together evaluate the model’s ability to identify the positive representatives of a given dataset. All the models, involved in this study, achieved high and very high precision and recall results (Table 3) for the separated classes. However, only in case of the proposed tool, these metrics are on high and similar level, between 93.33% and 100%. In the case of the developed classifier, the difference between recall and precision results is the smallest, not exceeding 3.45%. The F1-score is the harmonic mean of the precision and recall results, which informs about the balance between these two measures. Although the InceptionV3 achieved the highest F1-score for AVL disease, the RS-A ViT outperformed all other models included in the study for drusen detection as well as for indicating healthy cases. The presented study analysis proved that the RS-A ViT is suitable for AVL, drusen and

The model’s accuracy and loss functions for all networks included in this study are depicted in Figs. 7 and 8. The classical models, like EfficientNet, InceptionV3 and ResNet, needed 80 epochs to reach optimal state. The ViT and the RS-A ViT models obtained their performance with only 50 epochs. Despite temporary drops, in each case accuracy increased gradually. The smoothest accuracy function was obtained for the proposed classifier, which means the greatest model stability during the learning process. In all models the loss gradually decreases, which informs that the models improve their predictions. The loss function contains the least fluctuations that proved the model is correct classification tool for retinal eye diseases. The overall results confirm that the proposed Residual Self-Attention Vision Transformer is a suitable model for retinal diseases detection, including AVL, drusen, as well as distinguish them from normal cases.

In Fig. 9, each Grad-CAM visualization highlights the regions the model considers most important for classifying an OCT scan with AVL, yet the heatmaps differ somewhat between CNN-based architectures (EfficientNet, InceptionV3, ResNet50, VGG16) and the transformer-based models (Vision Transformer, Residual Self-Attention Vision Transformer). The CNNs generally produce broader activation zones, often extending beyond the immediate lesion area and into adjacent retinal layers. This can be seen with EfficientNet and InceptionV3, which concentrate strongly on the central lesion but also show moderate attention in surrounding regions. In contrast, ResNet50 and VGG16 have a slightly more localized focus, though they still include portions of the outer retinal layers. By comparison, the Vision Transformer and the Residual Self-Attention Vision Transformer tend to emphasize the core AVL region more precisely. Both transformers’ heatmaps appear more contained around the lesion, indicating a somewhat tighter localization of features relevant to classification. The Residual Self-Attention model further refines this focus, showing a pronounced intensity almost exclusively over the vitelliform deposit. These distinctions suggest that while CNNs rely on hierarchical convolutional filters that can capture context from a wider area, transformers with self-attention can zero in more specifically on the lesion, which may help reduce the influence of background features.

In Fig. 10, all the networks highlight the drusen area, but the extent of the highlighted region varies by architecture. EfficientNet and InceptionV3 produce somewhat broader activation patterns, stretching into peripheral retinal zones and showing a more diffuse distribution. ResNet50 and VGG16 also focus intensely on the drusen, yet each shows a slight spillover into the neighboring layers, reflecting how convolutional filters capture contextual features beyond the lesion boundary. By contrast, the Vision Transformer narrows its attention more tightly onto the deposit area. Its reliance on patch-based attention appears to filter out much of the surrounding tissue. The Residual Self-Attention Vision Transformer further refines this localization, predominantly emphasizing the horizontal band of drusen with minimal activation outside that zone. Consequently, although both CNNs and ViT-based models identify the correct lesion site, the transformers generally confine their attention more precisely to the drusen and their immediate vicinity.

ViT algorithms have huge potentials in recognition of retinal diseases (Table 4). Most of the studies were performed on well-known and well-balanced dataset, the OCT2017, which consists of 84,484 samples.The models trained using this data reached very high performance, exceeding 91%, depending on the architecture. While examining the state-of-the-art ViT algorithms, gathered in Table 4, it can be seen that the new RS-A ViT method is among these with the highest performance. It obtained better results than ViT, T2T-ViT, HCTNet, Conv-ViT, models. It should be noted that solutions based on deep learning are also effective in detecting retinal diseases. The FD-CNN, D-KNN, D-SVM, FT-CNN, and R-FTCNN obtained very high accuracy results using the UCSD and Duke datasets15,19,20. The model performance, apart from the appropriate structure, is also influenced by the number of dataset elements, as well as dataset balance52. The conducted studies clearly indicate that the adopted the RS-A ViT model is a suitable solution for detecting retinal AVL and drusen and distinguishing them from the healthy cases.

In medical imaging, especially for rare conditions such as AVL, gathering tens of thousands of labeled samples can be a challenging task. Consequently, many published studies rely on large, publicly available datasets (e.g., OCT2017) containing common diseases like diabetic macular edema or generic AMD. The efficient of the RS-A ViT relay on several issues.

First, achieving high accuracy with fewer images. Despite having fewer training images (only 1280), which typically makes classification more difficult, the proposed approach still achieves 96.92% mean accuracy. This suggests that the RS-A ViT effectively learns from smaller, more specialized datasets and can generalize to new images, which is critical in clinical settings where data collection is often limited.

Second, this study targets AVL, a relatively rare macular lesion often confused with drusen on OCT scans. Many existing ViT-based methods, while reporting high accuracy, focus on more abundant pathologies (e.g., “wet” vs. “dry” AMD) or large collections of diabetic retinopathy images. In contrast, identifying AVL is a more nuanced classification task. Achieving near-97% accuracy under these circumstances’ points to the robustness and adaptability of the RS-A ViT.

Third, through Grad-CAM heatmaps, it has been shown that the RS-A ViT focuses more precisely on the areas of interest. Finally, by improving how the model retains and reuses intermediate attention maps, the RS-A ViT addresses potential shortcomings of standard ViTs (e.g., losing fine-grained details in deeper layers). This change contributes to its strong performance on a smaller dataset.

To put it concisely, while models trained on massive datasets may achieve comparable accuracies, the real efficiency of the RS-A ViT lies in its ability to handle fewer, more specialized clinical images and still deliver highly accurate, interpretable results.

In order to compare how each of the utilized models in this study interprets images containing retinal diseases, the Grad-CAM visualization technique was applied. It allows one to verify which areas of the image were analyzed by the network in the greatest extend53. This indicates which elements are the most crucial for retinal diseases detection. Grad-CAM is obtained utilizing gradient information from the last convolutional layer to specify importance weights to each neuron for a particular decision of interest53. The warmer color obtained in the heatmap, the most relevant area is for models’ determination. The interpretability of the utilized models are gathered in Fig. 9 for AVL distortions and in Fig. 10 for drusen diseases.

Moreover, to examine the impact of data augmentation, additional tests were conducted covering only raw data. The process of dividing the data into training, test and validation sets was conducted in a manner analogous to that for the augmented data. Similarly, in this case, 5 independent tests were also conducted. The collected results are presented in Table 5, Figs. 11 and 12.

Comparing the results obtained without the augmentation process (Table 5) and after data augmentation (Table 3), an increase in classification quality indicators is noticeable for all models. The most spectacular improvement after data augmentation was observed in the EfficientNet and VGG16 models, where Accuracy and F1-score increased significantly (Fig. 12). This confirmed the importance of the data augmentation process in the context of training deep learning models on limited data sets.

Analysis of the confusion matrix (Fig. 11) confirms that RS-A ViT is definitely the least likely to confuse classes, especially between the DRUSEN and NORMAL classes, which was the most common source of errors for the other models. It is also worth noting that despite a similar level of overall accuracy, clear differences are visible in the precision and sensitivity values for the AVL class, which is the least represented. This indicates that RS-A ViT also copes best with the problem of imbalanced datasets.

Conclusions

Despite the huge technological development, there is still a need for automatic tools for disease recognition in medicine54. New models and algorithms provide increasingly accurate data classification. Therefore, in this study, we propose a new, automatic model, Residual Self-Attention Vision Transformer, for retinal diseases detection. It obtained very high results in recognition of AVL, drusen, as well as distinguishing them from healthy cases. The RS-A ViT proved to have a great potential in classification retinal diseases, reaching 96.92% mean accuracy. It outperforms the classical ML methods, like EfficientNet, InceptionV3, ResNet and VGG16. The performance of the proposed solution is also higher than ViT algorithm. The results show that changing the Self-Attention mechanism into the Residual Self-Attention module improves the model’s performance. Thus, it can be applied as a supportive tool for ophthalmologists. The study was performed utilizing dataset created for the purpose of this study. It combines AVL data from two research centers and drusen and normal cases from a well-known OCT dataset. Although the dataset consists of 1,280 samples, the RS-A ViT obtained very high performance. Data augmentation methods were applied in order to enlarge the data. Grad-CAM as an interpretability method was used to display heat maps to visualize which areas are analyzed by the classifier and to what extent. The proposed classifier focuses the most on the region where both AVL and drusen changes occur.

While the standard ViT architecture has indeed shown impressive performance in medical image analysis, its main limitation lies in how the self-attention mechanism can cause certain features to collapse or become less discriminative in deeper layers. The proposed RS-A module addresses this limitation by introducing an additional “residual attention” pathway that propagates attention information from shallower layers into deeper layers.

Deeper layers in a plain ViT may gradually pool or mix features in such a way that local distinctions blur out, leading to slightly lower accuracy for subtle conditions like AVL or small drusen regions.

Residual Self-Attention retains earlier attention maps (queries, keys) and fuses them with attention from current layers. This helps preserve crucial local details while still capturing global context. As a result, the RS-A ViT better highlights the pathology regions, evidenced by the Grad-CAM visualizations focusing more narrowly on actual lesions rather than diffuse surrounding tissue.

Because ophthalmic OCT scans often contain fine-grained, subtle differences, preserving discriminative details throughout the network is crucial. Hence, the additional residual attention connections in proposed method make the final model more sensitive to those nuances, explaining why the RS-A ViT slightly outperforms a standard ViT approach on this particular classification task.

Despite the great successful of the RS-A ViT model, some limitations may be encountered. One of them is the number of data containing OCT images with AVL and drusen diseases. The problem of small-size dataset was partially overcome by applying augmentation methods. However, in the future studies more sophisticated techniques may be utilized, like Generative Adversarial Network that are able to produce completely new samples. This kind of attitude may additionally improve the robustness and generalization of the network. In the future research the created model will be verified utilizing other datasets containing retinal diseases.

It is still possible to improve the RS-A ViT in order to obtain higher performance by adjusting its parameters and examining how they influence on the image feature extraction and final accuracy.

Data availability

The datasets used and analysed during the current study available from the corresponding author on reasonable request.

References

Li, F. et al. Deep learning-based automated detection of retinal diseases using optical coherence tomography images. Biomed. Opt. Exp. 10, 6204–6226 (2019).

Gehrs, K. M., Anderson, D. H., Johnson, L. V. & Hageman, G. S. Age-related macular degeneration-emerging pathogenetic and therapeutic concepts. Ann. Med. 38, 450–471 (2006).

Querques, G., Forte, R., Querques, L., Massamba, N. & Souied, E. H. Natural course of adult-onset foveomacular vitelliform dystrophy: A spectral-domain optical coherence tomography analysis. Am. J. Ophthalmol. 152, 304–313 (2011).

Dalvin, L. A., Pulido, J. S. & Marmorstein, A. D. Vitelliform dystrophies: Prevalence in Olmsted county, Minnesota, United States. Ophthalm. Genet. 38, 143–147 (2017).

Balaratnasingam, C. et al. Clinical characteristics, choroidal neovascularization, and predictors of visual outcomes in acquired vitelliform lesions. Am. J. Ophthalmol. 172, 28–38 (2016).

Freund, K. B. et al. Acquired vitelliform lesions: Correlation of clinical findings and multiple imaging analyses. Retina 31, 13–25 (2011).

Lima, L. H., Laud, K., Freund, K. B., Yannuzzi, L. A. & Spaide, R. F. Acquired vitelliform lesion associated with large drusen. Retina 32, 647–651 (2012).

Arnold, J., Sarks, J., Killingsworth, M., Kettle, E. & Sarks, S. Adult vitelliform macular degeneration: A clinicopathological study. Eye 17, 717–726 (2003).

Crincoli, E., Sacconi, R., Querques, L. & Querques, G. Artificial intelligence in age-related macular degeneration: State of the art and recent updates. BMC Ophthalmol. 24, 121 (2024).

Leng, X. et al. Deep learning for detection of age-related macular degeneration: A systematic review and meta-analysis of diagnostic test accuracy studies. Plos one 18, e0284060 (2023).

Akça, S., Garip, Z., Ekinci, E. & Atban, F. Automated classification of choroidal neovascularization, diabetic macular edema, and drusen from retinal oct images using vision transformers: a comparative study. Lasers Med. Sci. 39, 140 (2024).

Thakoor, K. A. et al. A multimodal deep learning system to distinguish late stages of AMD and to compare expert vs. AI ocular biomarkers. Sci. Rep. 12, 2585 (2022).

Skublewska-Paszkowska, M., Powroznik, P., Rejdak, R. & Nowomiejska, K. Application of convolutional gated recurrent units u-net for distinguishing between retinitis pigmentosa and cone–rod dystrophy. Acta Mech. Autom. 18 (2024).

Powroznik, P., Skublewska-Paszkowska, M., Rejdak, R. & Nowomiejska, K. Automatic method of macular diseases detection using deep CNN-GRU network in oct images. Mech. Autom. 18, 197–206 (2024).

Kayadibi, İ, Güraksın, G. E. & Köse, U. A hybrid r-ftcnn based on principal component analysis for retinal disease detection from oct images. Expert Syst. Appl. 230, 120617 (2023).

Gueddena, Y. et al. A new intelligent system based deep learning to detect DME and AMD in oct images. Int. Ophthalmol. 44, 191 (2024).

Chen, X. et al. Deep learning-based system for disease screening and pathologic region detection from optical coherence tomography images. Transl. Vis. Sci. Technol. 12, 29–29 (2023).

Saha, S. et al. Automated detection and classification of early AMD biomarkers using deep learning. Sci. Rep. 9, 10990 (2019).

Kayadibi, İ & Güraksın, G. E. An explainable fully dense fusion neural network with deep support vector machine for retinal disease determination. Int. J. Comput. Intell. Syst. 16, 28 (2023).

Kayadibi, I. & Güraksın, G. E. An early retinal disease diagnosis system using oct images via CNN-based stacking ensemble learning. Int. J. Multiscale Comput. Eng. 21 (2023).

Yildirim, K. et al. U-net-based segmentation of current imaging biomarkers in oct-scans of patients with age related macular degeneration. In Caring is Sharing—Exploiting the Value in Data for Health and Innovation. 947–951 (IOS Press, 2023).

Schwartz, R. et al. A deep learning framework for the detection and quantification of reticular pseudodrusen and drusen on optical coherence tomography. Transl. Vis. Sci. Technol. 11, 3–3 (2022).

Chen, Q. et al. Multimodal, multitask, multiattention (m3) deep learning detection of reticular pseudodrusen: Toward automated and accessible classification of age-related macular degeneration. J. Am. Med. Inform. Assoc. 28, 1135–1148 (2021).

Nowomiejska, K. et al. Residual attention network for distinction between visible optic disc drusen and healthy optic discs. Opt. Lasers Eng. 176, 108056 (2024).

Azizi, M. M., Abhari, S. & Sajedi, H. Stitched vision transformer for age-related macular degeneration detection using retinal optical coherence tomography images. Plos one 19, e0304943 (2024).

Xu, K. et al. Automatic detection and differential diagnosis of age-related macular degeneration from color fundus photographs using deep learning with hierarchical vision transformer. Comput. Biol. Med. 167, 107616 (2023).

Jiang, Z. et al. Computer-aided diagnosis of retinopathy based on vision transformer. J. Innov. Opt. Health Sci. 15, 2250009 (2022).

Zhou, Z. et al. Diagnosis of retinal diseases using the vision transformer model based on optical coherence tomography images. In SPIE-CLP Conference on Advanced Photonics 2022. Vol. 12601. 1260102 (SPIE, 2023).

Kihara, Y. et al. Detection of nonexudative macular neovascularization on structural oct images using vision transformers. Ophthalmol. Sci. 2, 100197 (2022).

Singh, D., Ammar, M., Varshney, K. & Khan, Y. U. Optical coherence tomography image classification using light-weight hybrid transformers. In 2023 International Conference on Recent Advances in Electrical, Electronics & Digital Healthcare Technologies (REEDCON). 185–189 (IEEE, 2023).

Dutta, P., Sathi, K. A., Hossain, M. A. & Dewan, M. A. A. Conv-vit: A convolution and vision transformer-based hybrid feature extraction method for retinal disease detection. J. Imaging 9, 140 (2023).

Yao, Z. et al. Funswin: A deep learning method to analysis diabetic retinopathy grade and macular edema risk based on fundus images. Front. Physiol. 13, 961386 (2022).

Gholami, S. et al. Federated learning for diagnosis of age-related macular degeneration. Front. Med. 10, 1259017 (2023).

Kermany, D. S. et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172, 1122–1131 (2018).

Powroznik, P. et al. Deep convolutional generative adversarial networks in retinitis pigmentosa disease images augmentation and detection. Adv. Sci. Technol. Res. J. 19, 321–340 (2025).

Liang, W., Liang, Y. & Jia, J. Miamix: Enhancing image classification through a multi-stage augmented mixed sample data augmentation method. Processes 11, 3284 (2023).

Chlap, P. et al. A review of medical image data augmentation techniques for deep learning applications. J. Med. Imaging Radiat. Oncol. 65, 545–563 (2021).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Diko, A., Avola, D., Cascio, M. & Cinque, L. Revit: Enhancing vision transformers feature diversity with attention residual connections. Pattern Recognit. 156, 110853 (2024).

Wahid, A. et al. Multi-path residual attention network for cancer diagnosis robust to a small number of training data of microscopic hyperspectral pathological images. Eng. Appl. Artif. Intell. 133, 108288 (2024).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 10012–10022 (2021).

Cordonnier, J.-B., Loukas, A. & Jaggi, M. Multi-head attention: Collaborate instead of concatenate. arXiv preprint arXiv:2006.16362 (2020).

Hameed, M., Zameer, A., Khan, S. H. & Raja, M. A. Z. Arivit: Attention-based residual-integrated vision transformer for noisy brain medical image classification. Eur. Phys. J. Plus 139, 440 (2024).

Kumaran S, Y., Jeya, J. J., Khan, S. B., Alzahrani, S. & Alojail, M. Explainable lung cancer classification with ensemble transfer learning of vgg16, resnet50 and inceptionv3 using grad-cam. BMC Med. Imaging 24, 176 (2024).

Kermany, D. et al. Labeled optical coherence tomography (oct) and chest X-ray images for classification. Mendeley Data 2, 651 (2018).

Hemalakshmi, G., Murugappan, M., Sikkandar, M. Y., Begum, S. S. & Prakash, N. Automated retinal disease classification using hybrid transformer model (svit) using optical coherence tomography images. Neural Comput. Appl. 1–18 (2024).

Nejati Manzari, O., Ahmadabadi, H., Kashiani, H., Shokouhi, S. B. & Ayatollahi, A. Medvit: A robust vision transformer for generalized medical image classification. arXiv e-prints arXiv-2302 (2023).

Ma, Z. et al. Hctnet: A hybrid convnet-transformer network for retinal optical coherence tomography image classification. Biosensors 12, 542 (2022).

Ai, Z. et al. Fn-oct: Disease detection algorithm for retinal optical coherence tomography based on a fusion network. Front. Neuroinform. 16, 876927 (2022).

He, J. et al. An interpretable transformer network for the retinal disease classification using optical coherence tomography. Sci. Rep. 13, 3637 (2023).

Althnian, A. et al. Impact of dataset size on classification performance: An empirical evaluation in the medical domain. Appl. Sci. 11, 796 (2021).

Selvaraju, R. R. et al. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision. 618–626 (2017).

Kayadibi, İ, Köse, U., Güraksın, G. E. & Çetin, B. An AI-assisted explainable mtmcnn architecture for detection of mandibular third molar presence from panoramic radiography. Int. J. Med. Inform. 195, 105724 (2025).

Acknowledgements

The study was carried out as a part of the project “Lubelska Unia Cyfrowa - Wykorzystanie rozwia̧zań cyfrowych i sztucznej inteligencji w medycynie - projekt badawczy”, no. MEiN/2023/DPI/2194.

Author information

Authors and Affiliations

Contributions

P.P.: Writing - original draft, Methodology, Experiments, Formal analysis and results, Data curation, Conceptualization. M.SP.: Writing - original draft, Methodology, Experiments, Formal analysis and results, Data curation, Conceptualization. K.N.: Writing - original draft, Methodology, Formal analysis and results, Data curation, Conceptualization, Supervision. B.GD.: Data curation. M.B.: Data curation. M.C.: Data curation. M.D.T.: Data curation. R.R.: Supervision.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Powroznik, P., Skublewska-Paszkowska, M., Nowomiejska, K. et al. Residual self-attention vision transformer for detecting acquired vitelliform lesions and age-related macular drusen. Sci Rep 15, 17107 (2025). https://doi.org/10.1038/s41598-025-02299-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-02299-y