Abstract

Personalized recommendation systems are vital for enhancing user satisfaction and reducing information overload, especially in data-sparse environments like e-commerce platforms. This paper introduces a novel hybrid framework that combines Long Short-Term Memory (LSTM) with a modified Split-Convolution (SC) neural network (LSTM-SC) and an advanced sampling technique—Self-Inspected Adaptive SMOTE (SASMOTE). Unlike traditional SMOTE, SASMOTE adaptively selects “visible” nearest neighbors and incorporates a self-inspection strategy to filter out uncertain synthetic samples, ensuring high-quality data generation. Additionally, Quokka Swarm Optimization (QSO) and Hybrid Mutation-based White Shark Optimizer (HMWSO) are employed for optimizing sampling rates and hyperparameters, respectively. Experiments conducted on the goodbooks-10k and Amazon review datasets demonstrate significant improvements in RMSE, MAE, and R² metrics, proving the superiority of the proposed model over existing deep learning and collaborative filtering techniques. The framework is scalable, interpretable, and applicable across diverse domains, particularly in e-commerce and electronic publishing.

Introduction

The term “e-commerce” refers to the practice of conducting business through the Internet and other electronic mediums1. The development of e-commerce has great social and economic significance, as it provides customers with more choices and convenience while offering businesses increased opportunities and challenges. According to recent statistics, the global volume of online purchases reached $29.4 trillion in 2023, a 19.9% increase from the previous year. Projections indicate that this figure will surpass $40 trillion by 20252. However, the rapid expansion of e-commerce and the Internet has led to common issues such as information overload and decision-making difficulties. Users face the daunting task of sorting through an overwhelming variety of products and services3.

At the core of e-commerce shopping recommendation systems are data mining and machine learning techniques, which simplify the buying process by sifting through vast amounts of data to identify products and services tailored to individual preferences. These systems effectively reduce information overload, enhancing user happiness, loyalty, conversion rates, and platform revenue4. Shopping recommendation systems have become a critical area of study in artificial intelligence, serving as the backbone of the e-commerce industry. They not only drive innovation in AI development but also enable platforms to offer smarter, more user-centric services, boosting transaction growth and improving user experience5,6.

However, the rapid expansion of e-commerce and the Internet has introduced several specific challenges in building effective recommendation systems. These include:

- Data sparsity, where limited user-item interactions hinder the model’s ability to learn preferences.
- Cold-start problems, especially for new users or products with insufficient historical data.
- Low diversity and overfitting in content-based models that repeatedly recommend similar items.
- Scalability and computational inefficiency, as models must process massive, ever-growing datasets.
- Real-time adaptability, where systems must update recommendations as user behavior evolves dynamically.

These issues are particularly pronounced in domains like e-book and digital product recommendations, where metadata is often sparse, and reviews may be highly subjective or linguistically complex. Addressing these targeted issues is crucial for enhancing user engagement and improving platform performance.

Recommendation systems can be classified based on their methodologies:

- Content-Based Recommendation: This method heavily relies on user preference data across dimensions such as categories, tags, and brands. It provides personalized recommendations by matching user interests with catalog items7. However, it struggles with limitations like high content matching, which can result in low diversity in recommendations, and challenges in catering to new users8.
- Collaborative Filtering: This approach uses collective intelligence to suggest items based on shared preferences of similar users. It includes neighborhood-based methods, which use existing data for prediction, and model-based methods, which train on data to make forecasts9,10.
- Knowledge Graph-Based Approach: This technique constructs a graph model to represent complex relationships between users, products, and other entities. While it handles multidimensional data effectively, challenges like data quality and knowledge representation persist11,12.
- Deep Learning-Based Approach: By employing deep neural networks, this method extracts abstract features from high-dimensional, unstructured data. Despite its ability to address dynamic and complex datasets, it demands significant computational resources and careful network design13.

While these methodologies have achieved notable successes, challenges such as information overload, data sparsity, scalability, and real-time data processing remain significant hurdles. Information overload complicates users’ decision-making processes, while data sparsity reduces the system’s ability to accurately interpret user preferences14,15. Additionally, the dynamic nature of e-commerce categories requires scalable systems that adapt to new data and algorithms. Real-time data processing is essential for keeping recommendations relevant and up-to-date16.

To address these issues, research has focused on collaborative filtering, social network analysis, and deep learning-based solutions. Recent advancements include frameworks based on convolutional neural networks, sequential recommendation improvements, and utility-based approaches17. However, these methods often fall short in handling sentiment analysis, aspect extraction, and semantic comprehension, particularly with user-generated data18.

The e-commerce market’s continuous growth parallels the increasing adoption of electronic books (e-books). E-books are widely used by students and general readers, and their popularity is expected to rise due to their convenience and effectiveness in enhancing learning outcomes19. To remain competitive, electronic publishing companies require tailored recommendation systems to boost sales. Deep learning models, such as multilayer perceptrons, have demonstrated superior performance in various domains, including recommendation systems20. These models interpret user and product data, transforming it into feature vectors for effective similarity computations. However, existing models often demand extensive computational resources and time due to their reliance on diverse data sources, including user, product, and creator information.

To address the above challenges, this study employs two key innovations. First, the Self-Inspected Adaptive SMOTE (SASMOTE) technique enhances data quality by selecting “visible” nearest neighbors for oversampling and eliminates low-quality synthetic samples through a self-inspection mechanism. This significantly improves class balance in sparse datasets. Second, a Long Short-Term Memory (LSTM) network is integrated with a modified Split-Convolution (SC) module to extract both sequential and spatial features from user-item interaction data. These components work synergistically within a hybrid framework, optimized through bio-inspired algorithms, to deliver robust and scalable recommendations.

This study proposes using deep collaborative filtering combined with feature extraction to address these challenges in e-book recommendation systems. The introduction of a novel Self-Inspected Adaptive SMOTE (SASMOTE) model allows for the generation of high-quality minority class samples by identifying “visible” nearest neighbors. Additionally, the SASMOTE model incorporates an uncertainty inspection strategy to eliminate low-quality synthetic data. The proposed hybrid framework integrates Long Short-Term Memory (LSTM) and a modified Split-Convolution (SC) neural network to capture sequence-dependent and spatial features. Moreover, hyperparameter tuning is enhanced using the Hybrid Mutation-based White Shark Optimizer (HMWSO), improving classification accuracy.

The main contributions of this manuscript are as follows:

- Hybrid LSTM-SC Network: Developed a hybrid model combining Long Short-Term Memory (LSTM) and Split-Convolution (SC) networks to extract both sequence-dependent and hierarchical spatial features effectively.
- Self-Inspected Adaptive SMOTE (SASMOTE): Proposed a novel sampling technique that leverages adaptive nearest neighbor selection and uncertainty elimination to generate high-quality minority samples.
- Quokka Swarm Optimization (QSO): Integrated QSO to optimize the sampling rate, enhancing the quality of synthetic data and reducing biases caused by data imbalance.
- Efficient Hyperparameter Tuning: Utilized a Hybrid Mutation-based White Shark Optimizer (HMWSO) to fine-tune hyperparameters, achieving superior classification accuracy and generalizability.

The remainder of this paper is structured as follows: Sect. “Related work” provides a review of relevant literature, Sect. “Background of e‑commerce database” presents an overview of the e-commerce database, Sect. “Proposed methodology” details the proposed methodology, Sect. “Results and Discussion” discusses the results, and Sect. “Conclusion” concludes the study.

Related work

Xu et al.21 compared the internal mechanisms of personalized recommendation schemes to conventional online commodity categorization systems. Their study highlights the role of personalized recommendation systems in media, content information, and e-commerce industries. The research also identifies challenges such as data privacy concerns, algorithmic bias, scalability, and the cold start problem. To address these challenges, the authors proposed a customized recommendation system utilizing the BERT model and a nearest neighbor method. The system’s scalability and effectiveness were validated through practical applications and manual assessments, providing structured output suggestions.

For sentiment analysis (SA) on online product evaluations, Rasappan et al.22 proposed an Enhanced Golden Jackal Optimizer-based Long Short-Term Memory (EGJO-LSTM) model. Their approach consists of four steps: data collection, pre-processing, term weighting, and sentiment classification. Product reviews were gathered via web scraping, pre-processed to refine the data, and weighted using IGWO and LF-MICF algorithms. The EGJO-LSTM model, trained with this data, classified customer reviews into positive, neutral, or negative sentiments. Experimental results on Amazon’s cloud dataset showed that the model outperformed state-of-the-art methods, with 25% and 32% higher accuracy and precision compared to conventional and hybrid approaches.

Jain and Roy23 introduced a hybrid Long Short-Term Memory encoder-decoder model focused on generating a single emotion score. The study employed text normalization techniques to handle noisy sentences with improper syntax, acronyms, and typographical errors. Their model effectively standardized e-commerce reviews, revealing hidden insights and producing a sentiment score for products.

Suvarna and Balakrishna24 proposed a deep ensemble classifier leveraging probabilities from five pre-trained models, including MobileNet, DenseNet, Xception, and two VGG variants. Their method predicts product classifications using cosine similarity metrics. Testing on the Fashion product dataset and the Shoe dataset demonstrated that their approach achieved a 96% accuracy rate, outperforming state-of-the-art models and showcasing the potential of ensemble and transfer learning methods in improving fashion recommendation systems.

Rane et al.25 explored ensemble learning methods, focusing on developing smarter algorithms and using explainable AI (XAI) frameworks to enhance transparency and user trust. They highlighted technologies like federated learning and quantum computing as future directions for scalable, real-time solutions. Their study provided a comprehensive overview of ensemble learning’s challenges and opportunities, outlining its potential to address real-world issues in data processing and decision-making.

Xu et al.26 discussed the integration of cloud computing with advanced recommendation systems, emphasizing its impact on improving user experiences and operational efficiencies across industries like media and e-commerce. They compared conventional recommendation models with modern approaches, such as UniLLMRec, using news and e-commerce datasets. Their findings demonstrated the transformative role of cloud computing in facilitating efficient and scalable data processing, enhancing system performance.

Nawoya et al.27 reviewed the application of computer vision (CV) and deep learning (DL) in insect production for food and feed. Their study illuminated challenges and opportunities in this emerging field, showcasing advancements supported by machine learning. They concluded with insights into how CV and DL could improve production efficiency and suggested areas for further research.

Latha and Rao28 implemented a deep learning-based recommendation mechanism using data pre-processing techniques like stemming, lemmatization, and stop word removal. Their enhanced CNN model employed Glove embeddings for improved sentiment analysis of Amazon product reviews. Compared to the standard CNN, their model achieved higher precision (93.64%), recall (94.80%), and accuracy (96.72%).

Zhao et al.29 introduced BERTFusionDNN, a framework combining BERT for textual feature extraction with a deep neural network for numerical feature integration. Tested on a women’s clothing e-commerce dataset, their method effectively handled numerical complexity and linguistic subtleties, improving recommendations and laying a foundation for future enhancements in customer experience.

Chen et al.30 explored leveraging customer attention through multimodal reviews to enhance sales predictions. They proposed a deep learning approach incorporating timeliness, semantic variety, voting awareness, and multimodal interactions. Empirical evaluations on hotel occupancy data demonstrated the effectiveness of their methodology in predicting sales.

Sharma et al.31 tackled product recommendation challenges using natural language processing (NLP) and CNNs on the Amazon Apparel dataset. By extracting feature vectors from product images and titles using the VGG-16 architecture, their approach identified the closest matching products, improving recommendation accuracy.

Choudhary et al.32 developed an ensemble-based deep learning approach combining sentiment analysis and reviews to predict product recommendations. Their system, trained on both ratings and reviews, employed backpropagation neural networks for enhanced learning. Although their method demonstrated potential, they identified areas for improvement, including explainability and reliability.

Pham et al.33 proposed FuzzyRec, a hierarchical fused neural network based on fuzzy-driven heterogeneous network embedding. Their system reduced feature ambiguity and noise, outperforming existing recommendation models across multiple metrics.

Liu34 investigated machine learning techniques for advertising, focusing on Gaussian processes and anomaly detection for monitoring ad campaigns. Their approach effectively tracked campaign performance, ensuring transparency and efficiency.

Finally, Solairaj et al.35 presented EESNN-SA-OPR, a sentiment analysis framework using collaborative filtering and Elman spike networks for online product recommendations. Tested on Amazon data, their model achieved significantly higher accuracy, recall, and precision compared to existing techniques.

Research gap

Despite significant advancements in personalized recommendation systems, challenges remain in addressing data sparsity, computational efficiency, and real-time adaptability. Current approaches, such as collaborative filtering, content-based methods, and deep learning-based models, often fail to balance scalability with accuracy when faced with sparse datasets. Techniques like sentiment analysis and feature extraction enhance recommendations but are limited by their inability to handle complex data relationships and linguistic nuances effectively. Additionally, existing sampling methods, including SMOTE, often generate low-quality synthetic data, which negatively impacts model performance. The lack of adaptive mechanisms to select optimal neighbors and eliminate uncertainties in resampled data further compounds this issue.

Moreover, while deep neural networks and hybrid models demonstrate potential, their resource-intensive nature and reliance on diverse datasets pose challenges for practical implementation in dynamic environments like e-commerce and electronic publishing. Hyperparameter tuning methods also lack efficiency and robustness, limiting the generalizability of current systems. Addressing these gaps requires an innovative, adaptive framework capable of leveraging advanced feature extraction, high-quality sampling, and efficient optimization techniques to improve accuracy and scalability in sparse data environments. This study aims to bridge these gaps through a novel hybrid approach integrating self-inspected adaptive SMOTE with advanced neural network architectures.

Background of e‑commerce database

An e-commerce system’s intricate database structure includes various components such as categories, products, inventory, customers, employees, suppliers, manufacturers, news, promotions, reviews, orders, shipments, order details, and more. Figure 1 illustrates a section of the database architecture commonly used in online stores. To facilitate statistical processing, reporting, and data analysis, the database must accurately record all transaction details. This article focuses on a subset of the e-commerce database architecture, specifically the following primary data tables: Warehouse, Product, Product_Category_Mapping, Customer, ProductReview, Order, Shipment, and OrderItem.

The Category table enables the organization of products into various categories, making it easier to locate products within the main catalog. A product can belong to multiple categories, and the Product_Category_Mapping table manages these relationships. The Order table stores all customer transactions, enabling the analysis of purchasing behaviors. This analysis helps identify patterns, enhance product recommendations, and improve the overall understanding of the platform’s operations.

By leveraging these tables, the e-commerce database provides a robust framework for managing and analyzing complex data relationships, ultimately supporting better decision-making and user experiences.

The Shipment table provides shippers with information about which warehouse fulfilled specific product orders, facilitating the timely delivery of orders to customers. To extract customer ratings for each product over time, three tables in the database structure depicted in Fig. 1 are particularly important: Product, Customer, and ProductReview.

The experiment uses three attributes from the Product table, namely ProductID, ProductName, and UnitPrice, which store key product information. The Customer table contains essential details about customers, with two critical columns: CustomerID and CustomerName. Lastly, the ProductReview table includes CustomerID, ProductID, Rating, and RatingTime. The RatingTime attribute is especially important for the model’s operation because it provides the temporal data required for training. To train the model, data from the Customer, Product, and ProductReview tables are extracted and formatted as JSON. The machine learning recommendation model relies solely on these three tables; the other database tables have distinct but complementary roles within the overall system.
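The extraction step described above can be illustrated with a minimal sketch. It assumes a relational store reachable through Python's `sqlite3` module and uses an in-memory database with made-up rows; only the table and column names come from the text, while the schema types, the sample data, and the helper name `export_ratings` are hypothetical.

```python
import json
import sqlite3

# In-memory stand-in for the three tables named in the text.
# Column types and the sample rows are assumptions for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customer (CustomerID INTEGER PRIMARY KEY, CustomerName TEXT);
CREATE TABLE Product (ProductID INTEGER PRIMARY KEY, ProductName TEXT, UnitPrice REAL);
CREATE TABLE ProductReview (CustomerID INTEGER, ProductID INTEGER,
                            Rating INTEGER, RatingTime TEXT);
INSERT INTO Customer VALUES (1, 'Alice'), (2, 'Bob');
INSERT INTO Product VALUES (10, 'E-book A', 9.99), (11, 'E-book B', 4.50);
INSERT INTO ProductReview VALUES
    (1, 10, 5, '2023-01-05T10:00:00'),
    (2, 10, 3, '2023-01-07T12:30:00'),
    (1, 11, 4, '2023-02-01T09:15:00');
""")


def export_ratings(connection):
    """Join the three tables and return rating records ordered by RatingTime."""
    connection.row_factory = sqlite3.Row  # rows become name-addressable
    rows = connection.execute("""
        SELECT r.CustomerID, c.CustomerName, r.ProductID, p.ProductName,
               p.UnitPrice, r.Rating, r.RatingTime
        FROM ProductReview AS r
        JOIN Customer AS c ON c.CustomerID = r.CustomerID
        JOIN Product AS p ON p.ProductID = r.ProductID
        ORDER BY r.RatingTime
    """).fetchall()
    return [dict(row) for row in rows]


records = export_ratings(conn)
ratings_json = json.dumps(records, indent=2)  # JSON payload for model training
```

Ordering by RatingTime keeps the temporal sequence of each customer's ratings intact, which is the property a sequence model such as an LSTM would depend on.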

Proposed methodology

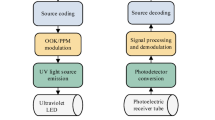

This section describes the deep learning techniques used to personalize recommendations for e-commerce products. The overall workflow is shown in Fig. 2.

Balancing the dataset using SASMOTE

Traditional SMOTE creates synthetic samples by randomly interpolating between a minority sample and its nearest neighbors. However, this can produce poor-quality samples if the neighbors are far away or belong to different classes. SASMOTE (Self-Inspected Adaptive SMOTE) improves on this by selecting only “visible” neighbors, that is, neighbors that are not separated from the target sample by any other neighbor, which yields more reliable synthetic data. It then uses a panel of classifiers (called inspectors) to evaluate the quality of each generated sample. Samples predicted with high disagreement or uncertainty are discarded, so that only trustworthy synthetic data augment the training set. The sampling rate is further optimized using a bio-inspired algorithm, Quokka Swarm Optimization (QSO), to achieve better data balance.

To resample data with the traditional SMOTE method, one first finds the k-nearest neighbours (KNNs) of every minority sample and then randomly generates resampled data along the line segments connecting each minority sample to its KNNs. A major drawback is that the resampled data may be of poor quality if minority data points are too far from their KNNs or if the neighbours belong to different classes. In contrast to the standard KNN approach employed by SMOTE, the proposed SASMOTE technique uses an adaptive neighbourhood selection algorithm to determine which neighbours are included for resampling, which results in higher-quality data. The selection algorithm relies on the notion of “visible” neighbours to avoid long edge connections between neighbours. Let KNN(x) denote the KNNs of sample x. A sample y in KNN(x) is a “visible” neighbour of x if no other neighbour in KNN(x) separates y from x. Put simply, for every neighbour z in KNN(x), the angle between the edges zx and zy is not obtuse. The set of “visible” neighbours of x, a subset of KNN(x), is denoted VN(x).

A sample y is a visible neighbour of x if and only if no other neighbour z separates y from x, which is expressed as \(\langle x - z,\ y - z\rangle \ge 0,\ \forall z \in KNN(x)\). The set of visible neighbours of x is therefore defined as

\(VN(x) = \{\, y \in KNN(x) \mid \langle x - z,\ y - z\rangle \ge 0,\ \forall z \in KNN(x) \,\}\) (1)

Figure 3(A) shows a sample dataset with five samples, where A and B are the visible KNNs of a minority sample P, while C and D are invisible KNNs. Using the definition of “visible neighbors,” A and B are identified as appropriate for generating resampled data, which helps prevent errors caused by long-edge connections to invisible neighbors. Figure 3(B) illustrates a nonlinear decision boundary in a binary classification problem. It highlights how samples generated using invisible neighbors (red dashed lines) can lead to incorrect decision boundaries, while samples generated using visible neighbors (blue dashed lines) maintain correct class separation.

Figure 3 (A) Demonstration of visible (green dots) and invisible (red dots) neighbors for a minority sample. (B) Impact of synthetic samples generated between visible and invisible neighbors on classification boundaries.

The nonconvex set of minority samples, shown by the blue dots, lies outside the decision boundary. By definition, A and B are visible neighbours of sample P, whereas C and D are not. If data points generated arbitrarily between P and its invisible neighbours (red dashed lines) fall in the opposite class, they can mislead the classification model into learning an erroneous decision boundary. Data generated between P and its “visible” neighbours (blue dashed lines), by contrast, tend to remain in the minority class. Thus, the resampled data are generated only along the lines between P and its “visible” neighbours.
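As an illustration of the visibility test, the following sketch filters a list of nearest neighbours with the inner-product condition \(\langle x - z, y - z\rangle \ge 0\) and then interpolates a synthetic point toward one visible neighbour. The function names, the toy coordinates, and the uniform interpolation weight are assumptions made for this example, and the k-nearest-neighbour search itself is taken as already done.

```python
import random


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    """Element-wise difference u - v."""
    return [a - b for a, b in zip(u, v)]


def visible_neighbours(x, knn):
    """Keep each y in knn with <x - z, y - z> >= 0 for every z in knn."""
    return [y for y in knn
            if all(dot(sub(x, z), sub(y, z)) >= 0 for z in knn)]


def synthesize(x, neighbour, rng):
    """SMOTE-style interpolation: a random point on the segment x -> neighbour."""
    lam = rng.random()  # interpolation weight in [0, 1)
    return [a + lam * (b - a) for a, b in zip(x, neighbour)]


# Toy example: C lies behind A as seen from P, so A hides C.
P = [0.0, 0.0]
A, B, C = [1.0, 0.0], [0.0, 1.0], [2.0, 0.0]
visible = visible_neighbours(P, [A, B, C])  # C is filtered out

rng = random.Random(0)
sample = synthesize(P, rng.choice(visible), rng)
```

In this toy case the neighbour C is rejected because the angle at A between the edges to P and to C is obtuse, which is exactly the long-edge situation the method is meant to avoid.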

Uncertainty via self‑inspection

The performance of classification models heavily depends on the quality of the training data. Various statistical measures have been developed to assess the accuracy of classification data concerning its uncertainty. These include methods such as uncertainty via classification, Bayesian approaches, and direct uncertainty prediction36.

The uncertainty via classification technique assigns a scalar uncertainty score to each sample, reflecting the degree of disagreement among experts regarding the label of that sample. A method is proposed to assess uncertainty in the resampling schema, considering the likelihood of the resampled data belonging to the majority or an incorrect class. An increased likelihood indicates higher uncertainty in the resampled data.

The uncertainty score of each resampled data point can be approximated by using a trained collection of inspectors (classifiers) to predict its label. The score is then determined by the number of inspectors that misclassify the resampled data point, that is, assign it to the majority class.

Formally, given a sample \(x_i\) produced by the adaptive selection procedure and M inspectors \(Rf_1, \ldots, Rf_M\), the uncertainty score of the sample is defined by Eq. (2), where \(I(\cdot)\) is an indicator function. SASMOTE discards resampled data as low quality if its uncertainty score exceeds a predetermined threshold. The inspectors are trained on batched training samples: the majority class is divided into M subgroups, and each subgroup is combined with the minority class to form one batch. The number of inspectors therefore controls the size of the majority class in each batch. M is set from the ratio of majority to minority samples in the training data, so that each batch is approximately balanced. Each inspector is trained using Quokka Swarm Optimization (QSO), and the uncertainty-score threshold, a tuning parameter, is set to 0.7 by default. The final classification model is trained on the original training data together with the resampled data that pass inspection.
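The self-inspection step can be sketched as follows. Because the exact form of the uncertainty score is not reproduced here, the sketch assumes one plausible normalization: the fraction of the M inspectors that do not predict the minority label. The label constants, the helper names, and the threshold-rule inspectors are hypothetical stand-ins; in the paper the inspectors are trained classifiers.

```python
MINORITY, MAJORITY = 1, 0  # label encoding assumed for this example


def make_batches(majority, minority, n_inspectors):
    """Split the majority class into n_inspectors subgroups and pair each
    subgroup with the full minority class (one batch per inspector)."""
    return [(majority[m::n_inspectors], minority) for m in range(n_inspectors)]


def uncertainty_score(sample, inspectors):
    """Assumed form: share of inspectors that do not predict MINORITY."""
    wrong = sum(1 for inspect in inspectors if inspect(sample) != MINORITY)
    return wrong / len(inspectors)


def self_inspect(candidates, inspectors, threshold=0.7):
    """Keep synthetic samples whose uncertainty does not exceed the threshold."""
    return [s for s in candidates
            if uncertainty_score(s, inspectors) <= threshold]


# Stand-in inspectors: simple cut-off rules on the first feature.
inspectors = [
    (lambda s, cut=cut: MINORITY if s[0] > cut else MAJORITY)
    for cut in (0.2, 0.4, 0.6, 0.8)
]

candidates = [[0.9], [0.5], [0.1]]
scores = [uncertainty_score(s, inspectors) for s in candidates]
kept = self_inspect(candidates, inspectors)

# Six majority and two minority samples give M = 6 // 2 = 3 balanced batches.
batches = make_batches(list(range(6)), ["m1", "m2"], n_inspectors=6 // 2)
```

With the default threshold of 0.7, the third candidate is dropped because every stand-in inspector assigns it to the majority class.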

Mathematical explanation of Quokka swarm optimization (QSO)

QSO is a nature-inspired optimization algorithm modeled on the social behavior of quokkas, which are known for their cooperative foraging strategies. In this work, QSO is used to automatically find the optimal sampling rate for SASMOTE and to train the inspectors used in the self-inspection process. Its ability to balance exploration (searching for diverse solutions) and exploitation (refining the best-known solutions) helps ensure that the resampling process neither overfits nor underrepresents the minority class. By adapting its parameters to environmental cues (such as humidity and food availability), QSO improves the diversity and quality of synthetic samples, resulting in better generalization of the recommendation model. The following quokka behaviors inspired the algorithm:

- Interactions with Others: Quokkas are well-known for their sociability and ability to live in groups. By studying how quokkas interact within communities, we modeled our algorithm on their social behaviors and cooperative tendencies.
- Adaptability: Quokkas have demonstrated remarkable adaptability to various environments, showcasing resilience and resourcefulness in their foraging and survival strategies.
- Foraging and Exploration: Quokkas are recognized for their inquisitive and adventurous nature, often venturing into uncharted territories to gather food and resources. This behavior inspired the development of a novel swarm optimization algorithm that effectively balances exploration and exploitation to find optimal solutions.

A) Position update.

The following equations describe how the best quokka position in a group influences the update of each quokka’s position in that group:

where \(D^{\text{old}}\) denotes the drought, with a value in \([0, 1]\); T is the temperature ratio, which lies in the range 0.2 to 0.44; and H is the humidity ratio, which lies in the range 0.3 to 0.65. These ranges were chosen because they correspond to the temperature and humidity levels that quokkas can survive. rand is a random number between 0 and 1, \(\Delta w\) is the difference in weight between the leader and quokka \(i\), and \(\Delta X\) is the position difference for quokka \(i\). The quokka’s new position is denoted by \(X^{\text{new}}\) and its old position by \(X^{\text{old}}\). N is the nitrogen ratio, which takes a value between 0 and 1; it is included because quokkas require a certain quantity of nitrogen. As this value approaches 0, the quokka’s dehydration rate increases, which is harmful, whereas a value closer to 1 is beneficial. Because quokkas cannot withstand high heat and high humidity at the same time, the first equation requires that the sum of T and H not exceed 0.8.

B) Quokka optimization algorithm.

The QSO algorithm emulates the behavior of quokkas, and its pseudo-code can be summarized as follows. In exploratory mode, the algorithm first generates solutions randomly and evaluates their fitness. The values for nitrogen, humidity, and temperature are then initialized. When the algorithm detects that the global optimum is nearby, it switches from exploration to local exploitation, focusing on promising regions and promoting the fittest quokka to the role of leader. The leader represents the best solution found for the optimization problem. The search agents then return to the exploration phase before starting another exploitation phase. Each quokka’s humidity and location are updated according to Eqs. (3) and (4). After the leader’s fitness is evaluated, the fitness values of all other quokkas are updated accordingly. The algorithm iterates until the stopping condition is met and then returns the leader as the best estimate of the solution.
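The control flow described above (random initialization, leader selection, repeated position updates, and return of the leader) can be sketched as a generic leader-following loop. This is only a skeleton under stated assumptions: the position update below is a placeholder and is not Eqs. (3) and (4), and the function names, swarm size, iteration count, and test objective are hypothetical. Only the parameter ranges for T, H, and N and the constraint T + H ≤ 0.8 are taken from the text.

```python
import random


def sample_ratios(rng):
    """Draw temperature T, humidity H and nitrogen N within the stated ranges,
    rejecting draws where T + H exceeds 0.8."""
    while True:
        t = rng.uniform(0.2, 0.44)
        h = rng.uniform(0.3, 0.65)
        if t + h <= 0.8:
            return t, h, rng.random()


def qso_sketch(fitness, dim, lower, upper, n_quokkas=12, n_iter=60, seed=0):
    """Minimise `fitness` with a leader-following swarm (placeholder update)."""
    rng = random.Random(seed)
    swarm = [[rng.uniform(lower, upper) for _ in range(dim)]
             for _ in range(n_quokkas)]
    leader = list(min(swarm, key=fitness))  # fittest quokka becomes leader
    history = [fitness(leader)]
    for _ in range(n_iter):
        t, h, n = sample_ratios(rng)
        for i, x in enumerate(swarm):
            pull = (t + h) * rng.random()               # placeholder: step toward leader
            jitter = (1.0 - n) * 0.1 * (upper - lower)  # placeholder: exploration noise
            swarm[i] = [
                min(upper, max(lower,
                               xi + pull * (li - xi) + rng.uniform(-jitter, jitter)))
                for xi, li in zip(x, leader)
            ]
        best = min(swarm, key=fitness)
        if fitness(best) < fitness(leader):  # promote a fitter quokka to leader
            leader = list(best)
        history.append(fitness(leader))
    return leader, history


def sphere(x):
    """Toy objective used only to exercise the loop."""
    return sum(v * v for v in x)


leader, history = qso_sketch(sphere, dim=2, lower=-5.0, upper=5.0)
```

For the use described in this section, the objective would instead score a candidate sampling rate, for example by the validation error of a model trained on the resampled data.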

Classification using hybrid algorithm

Load forecasting is the primary function of the model. The hybrid model consists of two Long Short-Term Memory (LSTM) layers, two Split-Convolution (SC) modules, and three skip connections. The LSTM layers extract load-related temporal characteristics, with each LSTM layer containing 48 units. Two modified SC modules are employed to process these features. To extract both local and global characteristics, each SC module uses three parallel data paths with different combinations of Convolutional Neural Network (CNN) layers, each with a different filter width.

Two of the three skip connections concatenate the second LSTM layer’s output with the outputs of the first and second SC modules. However, this increases the number of hidden-layer parameters and causes an imbalance between the SC-derived and LSTM-derived features. To address this, a 1 × 1 convolutional block with 64 filters is applied on both of these connections. The third skip connection links the output of the first SC module to that of the second SC module.

After being flattened, the combined features pass through an activation layer with tanh activation. This is followed by a dense layer with a sigmoid activation function and a number of neurons proportional to the forecasting interval, which is succeeded by a dropout layer. It should be noted that the network extensively utilizes convolutional layers; as shown in Fig. 4A, each convolutional block consists of a batch normalization layer, a selu activation function, and a convolutional layer.

Architectural components of the proposed model: (a) convolutional block, (b) SC unit37.

Furthermore, every convolution layer employs L2 regularization with k = 0.0005. As illustrated in Fig. 4B, the SC module is structured with three parallel routes. The first route uses a single convolution layer with a kernel size of 1 to capture local feature information. The second route contains two convolution layers: the first uses a kernel size of 1 to reduce dimensionality, while the second uses a kernel size of 3. The third route mirrors the structure of the second, with a first layer of kernel size 1 and a second layer of kernel size 5. Table 1 outlines the hyperparameters of the model’s two SC modules, while Fig. 5 illustrates the complete design.
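The three-route layout of the SC module can be illustrated with a minimal sketch in plain Python. It assumes zero "same" padding so that every route keeps the sequence length, assumes the route outputs are concatenated channel-wise (as in inception-style modules), uses random placeholder weights, and omits the batch normalization, selu activation, and L2 regularization of each convolutional block. The function names and the filter count of 8 are hypothetical and are not taken from the paper.

```python
import random

def conv1d_same(x, kernels):
    """1-D convolution with zero 'same' padding.

    x: list of T time steps, each a list of C_in floats.
    kernels: list of C_out filters; each filter is a list of K taps,
             and each tap is a list of C_in weights.
    Returns a list of T time steps, each holding C_out values.
    """
    steps, width = len(x), len(kernels[0])
    pad = width // 2
    out = []
    for t in range(steps):
        row = []
        for filt in kernels:
            acc = 0.0
            for j, tap in enumerate(filt):
                src = t + j - pad
                if 0 <= src < steps:  # positions outside the sequence count as zero
                    acc += sum(w * v for w, v in zip(tap, x[src]))
            row.append(acc)
        out.append(row)
    return out

def random_kernels(c_out, width, c_in, rng):
    """Placeholder weights; a trained model would learn these."""
    return [[[rng.uniform(-0.1, 0.1) for _ in range(c_in)]
             for _ in range(width)] for _ in range(c_out)]

def sc_module(x, filters, rng):
    """Three parallel routes: k=1; k=1 then k=3; k=1 then k=5."""
    c_in = len(x[0])
    route1 = conv1d_same(x, random_kernels(filters, 1, c_in, rng))
    route2 = conv1d_same(
        conv1d_same(x, random_kernels(filters, 1, c_in, rng)),
        random_kernels(filters, 3, filters, rng))
    route3 = conv1d_same(
        conv1d_same(x, random_kernels(filters, 1, c_in, rng)),
        random_kernels(filters, 5, filters, rng))
    # Assumed merge: concatenate the three routes along the channel axis.
    return [a + b + c for a, b, c in zip(route1, route2, route3)]

rng = random.Random(0)
sequence = [[rng.random() for _ in range(4)] for _ in range(10)]
features = sc_module(sequence, filters=8, rng=rng)
print(len(features), len(features[0]))
```

With 8 filters per route, a 10-step input yields a 10 × 24 feature map under these assumptions, because the three route outputs are stacked along the channel axis.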

Hyper-parameter tuning using WSOA

If the data contains superfluous features, the model may learn to ignore them, resulting in reduced classification accuracy. This issue can only be addressed by extracting optimal solutions from the processed dataset. To achieve this, a hybrid mutation technique combined with the White Shark Optimizer (WSO) is employed to select the parameters.

The WSO simulates the foraging behavior of white sharks38. While swimming, white sharks locate their prey by exploiting ocean currents and other underwater phenomena. White sharks exhibit three key behaviors during prey capture: (1) quickly capturing prey, (2) identifying the most abundant food source, and (3) attracting other sharks to swim toward the optimal food source when they are near it. The initial population of white sharks is represented as follows.
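Using the symbols defined in the next paragraph, and assuming the uniform random initialization of the original WSO formulation38, the initial position can be written as:

\[ {W}_{q}^{p} = {lb}_{q} + r \times \left({up}_{q} - {lb}_{q}\right) \]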

where \({W}_{q}^{p}\) is the initial position of the pth white shark in the qth dimension, \({up}_{q}\) and \({lb}_{q}\) are the upper and lower boundaries of the qth dimension, respectively, and r is a random number in the range [0, 1].

The velocity with which a white shark tracks its prey, following the changing motion of the ocean waves, is given by:
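A form consistent with the symbol definitions that follow and with the original WSO formulation38 is sketched here. The force symbols \(F_{1}\) and \(F_{2}\) are names assumed for illustration, since the text does not name them:

\[ {vl}_{s+1}^{p} = \mu \left[ {vl}_{s}^{p} + F_{1} \left( {W}_{{gbest}_{s}} - {W}_{s}^{p} \right) \times {C}_{1} + F_{2} \left( {W}_{best}^{{vl}_{s}^{p}} - {W}_{s}^{p} \right) \times {C}_{2} \right] \]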

where \(p = 1, 2, \cdots, m\) indexes the white sharks in a population of size m. \({vl}_{s+1}^{p}\) is the new velocity of the pth shark at step (s + 1), and \({vl}_{s}^{p}\) is its current velocity at step s. \({W}_{{gbest}_{s}}\) is the best position reached by any shark up to step s, and \({W}_{s}^{p}\) is the current position of the pth shark at step s. \({W}_{best}^{{vl}_{s}^{p}}\) is the best known position of the pth shark, and \({vc}^{\text{i}}\) is the index vector of the sharks attaining the best position. \({C}_{1}\) and \({C}_{2}\) are random numbers drawn from [0, 1]. The forces of the shark control the influence of \({W}_{{gbest}_{s}}\) and \({W}_{best}^{{vl}_{s}^{p}}\) on \({W}_{s}^{p}\), and \(\mu\) is the constriction factor used to analyze the convergence behavior of the sharks. The index vector of the white sharks is defined as:

where \(rand(1, t)\) is a vector of random numbers drawn uniformly from the interval [0, 1]. The forces that control the influence of the global-best and personal-best positions are given by:
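In the original WSO formulation38 the two forces decay with the iteration count. Written with the symbols introduced in the next paragraph, and with \(F_{1}\) and \(F_{2}\) as assumed names for the two forces, they take the form:

\[ F_{1} = F_{max} + \left( F_{max} - F_{min} \right) \times e^{-\left( 4u/U \right)^{2}}, \qquad F_{2} = F_{min} + \left( F_{max} - F_{min} \right) \times e^{-\left( 4u/U \right)^{2}} \]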

where u and U denote the current and maximum iteration numbers, and \({F}_{min}\) and \({F}_{max}\) denote the initial and subordinate velocities of the white shark. The constriction (convergence) factor is given by:
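Following the original WSO formulation38, and using the acceleration coefficient τ defined in the next paragraph, the factor can be written as:

\[ \mu = \frac{2}{\left| 2 - \tau - \sqrt{\tau^{2} - 4\tau} \right|} \]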

where τ is a specified acceleration coefficient. The position of each white shark is updated as follows.
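A reconstruction consistent with the symbols defined in the next paragraph and with the original WSO formulation38 is given here. It assumes that rand is a uniform random number in [0, 1] and that MV is the movement force introduced later in this section:

\[ {W}_{s+1}^{p} = \begin{cases} {W}_{s}^{p} \cdot \daleth \oplus {W}_{o} + up \cdot c + lo \cdot d, & rand < MV \\ {W}_{s}^{p} + {vl}_{s}^{p} / fr, & rand \ge MV \end{cases} \]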

where \({W}_{s+1}^{p}\) is the new position of the pth shark at iteration (s + 1), \(\daleth\) denotes the negation operator, and c and d are binary vectors. The lower and upper boundaries of the search space are represented by \(lo\) and \(up\), respectively. \({W}_{o}\) is a logical vector, and \(fr\) is the frequency of the shark’s movement. The binary and logical vectors are defined as follows:
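In the original WSO formulation38 these vectors mark the components of a position that leave the search space. Assuming the same construction applies here, they can be written as:

\[ c = \operatorname{sgn}\left( {W}_{s}^{p} - up \right) > 0, \qquad d = \operatorname{sgn}\left( {W}_{s}^{p} - lo \right) < 0, \qquad {W}_{o} = \oplus \left( c, d \right) \]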

The frequency of the shark’s wavy motion is given by:
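With the frequency bounds named in the next paragraph, the form used in the original WSO formulation38 is:

\[ fr = {fr}_{min} + \frac{{fr}_{max} - {fr}_{min}}{{fr}_{max} + {fr}_{min}} \]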

where \({fr}_{max}\) and \({fr}_{min}\) are the maximum and minimum frequencies. The movement force, which increases at every iteration, is given by:

where MV characterizes the weight of terms in document.

The movement toward the best optimal solution is given by:
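A form consistent with the definitions in the next paragraph and with the original WSO formulation38 is shown here, assuming that \(r_{1}\), \(r_{2}\), and \(r_{3}\) are random numbers in [0, 1] (the text mentions only \(r_{2}\) explicitly):

\[ {W}_{s+1}^{{\prime}p} = {W}_{{gbest}_{s}} + r_{1} \, {\stackrel{-}{Dis}}_{W} \, \operatorname{sgn}\left( r_{2} - 0.5 \right), \qquad r_{3} < {Str}_{sns} \]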

where \({W}_{s+1}^{{\prime}p}\) is the updated position of the pth shark relative to the food source, and sgn(r2 − 0.5) returns 1 or −1 to change the search direction. \({\stackrel{-}{Dis}}_{W}\) is the distance between the shark and the food source, and \({Str}_{sns}\) is the strength of the white shark’s senses when it follows other sharks toward the prey. These quantities are expressed as follows:

The two best solutions found so far are retained, and the positions of all other sharks are updated relative to them. The fish-school behaviour of the sharks is defined as follows.
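In the original WSO formulation38 this schooling step averages a shark's current position with its position relative to the food source. Assuming rand is a uniform random number in [0, 1], it reads:

\[ {W}_{s+1}^{p} = \frac{{W}_{s}^{p} + {W}_{s+1}^{{\prime}p}}{2 \times rand} \]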

The weight factor \({p}_{we}\) is represented as:

where \({q}_{fit}\) is the fitness of every term used in the text. The expanded form of the equation is:

To accelerate convergence, a hybrid mutation (HM) operator is applied to the WSO. The resulting hybrid mutation is defined as:

where \({G}_{a}\left(\mu,\sigma\right)\) and \({C}_{a}\left(\mu{\prime},\sigma{\prime}\right)\) are random numbers drawn from the Gaussian and Cauchy distributions, respectively; (µ, σ) and (µ′, σ′) are the mean and variance parameters of the Gaussian and Cauchy distributions; and D1 and D2 are the coefficients of the Gaussian \({t+1}_{GM}\) and Cauchy \({t+1}_{CM}\) mutations. A new candidate solution, denoted x′, is generated by applying these two mutation operators.

where,

where \({p}_{we}\) is the weight vector and PS is the population size. The features selected from the extracted features are denoted \(Sel(p = 1, 2, \cdots, m)\). The WSO output is \(\left(sel\right)=\{se{l}^{1}, se{l}^{2}, \cdots, se{l}^{m}\}\), which constitutes a new set of terms within the database, where m is the new number of distinct features. Finally, the parameter selection stage provides the best features for a dataset document.
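The hybrid mutation step described above can be sketched as follows. Because the mutation equation itself is not reproduced in the text, the additive combination x′ = x + D1·G + D2·C is an assumption made for illustration, as are the function names and the default parameter values. The Cauchy distribution has no finite mean or variance, so (µ′, σ′) are treated here as its location and scale.

```python
import math
import random

def cauchy_sample(rng, location, scale):
    """Draw one Cauchy-distributed value via the inverse CDF."""
    return location + scale * math.tan(math.pi * (rng.random() - 0.5))

def hybrid_mutation(x, d1, d2, rng, mu=0.0, sigma=1.0, mu_c=0.0, scale_c=1.0):
    """Return a mutated copy x' of candidate x.

    Assumed form: each component receives a Gaussian perturbation
    weighted by d1 plus a Cauchy perturbation weighted by d2.
    """
    return [
        xi + d1 * rng.gauss(mu, sigma) + d2 * cauchy_sample(rng, mu_c, scale_c)
        for xi in x
    ]

rng = random.Random(42)
candidate = [0.2, 0.5, 0.8]
mutated = hybrid_mutation(candidate, d1=0.1, d2=0.05, rng=rng)
print(mutated)
```

The Gaussian term produces small local moves, while the heavy-tailed Cauchy term occasionally produces large jumps, which is the usual motivation for combining the two.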

Results and discussion

This section describes the experimental analysis, including the implementation setup and the datasets.

Hardware environment

The experiments were run on a server with an AMD Ryzen 9 5950X CPU @ 3.40 GHz and 1 TB of RAM, equipped with four 24 GB Nvidia GeForce RTX 3080 GPUs39. This configuration offered ample computing and storage capacity for deep learning training and inference workloads40,41.

Dataset description

The goodbooks-10k open user-book dataset was used. The dataset, made public by Zygmunt, is available on GitHub (https://github.com/zygmuntz/goodbooks-10k)42. It contains 5,976,479 ratings given by 53,424 distinct users to 10,000 distinct books. Ratings take values between 1 and 5. No further processing of the data was carried out. The dataset elements shown in Table 2 comprise user IDs, book IDs, and ratings.
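The sparsity of this dataset follows directly from the counts above. The short calculation below treats every user-book pair as a possible rating, which is an assumption about how sparsity is measured rather than a figure reported in the paper.

```python
users = 53_424
books = 10_000
ratings = 5_976_479

# Fraction of the user-book matrix that holds an observed rating.
density = ratings / (users * books)
sparsity = 1.0 - density

print(f"density:  {density:.4%}")
print(f"sparsity: {sparsity:.4%}")
```

Only about 1.1% of the possible user-book pairs carry a rating, so nearly 99% of the interaction matrix is empty.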

The second dataset comprises 3.5 million product reviews collected from Amazon43. For each review, details such as the reviewer’s name, ID, posting date, number of favorable votes, review title, and rating were logged. However, for this analysis, only two attributes, the review text and the rating, were used. The ratings were based on a scale from 1 to 5.

Three separate experiments were conducted in this study. In the first experiment, the proposed model was used to predict five classes, with ratings ranging from 1 to 5. In the second experiment, the model was used to forecast three groups: negative (class 0, ratings 1 and 2), neutral (class 1, rating 3), and positive (class 2, ratings 4 and 5). In the third experiment, the model was employed to predict these three classes.
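The three-class grouping used in the second experiment can be expressed as a small mapping. The function name is hypothetical; the class boundaries are those stated above.

```python
def rating_to_sentiment_class(rating):
    """Map a 1-5 star rating to negative (0), neutral (1), or positive (2)."""
    if rating not in (1, 2, 3, 4, 5):
        raise ValueError(f"rating must be an integer from 1 to 5, got {rating!r}")
    if rating <= 2:
        return 0  # negative: ratings 1 and 2
    if rating == 3:
        return 1  # neutral: rating 3
    return 2      # positive: ratings 4 and 5

labels = [rating_to_sentiment_class(r) for r in range(1, 6)]
print(labels)
```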

Figure 6 visualizes the relationship between the number of reviews and ratings in the dataset, showing a significant imbalance in the distribution of reviews across rating values. Table 3 provides a description of the second dataset.

Analysis of proposed methodology

Table 4 presents the performance of the proposed model on the two datasets across different metrics.

The performance evaluation of the proposed model was conducted on Dataset 1 and Dataset 2 using RMSE, MAE, MAPE (%), and R² metrics for both the training and test sets.
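The paper does not spell out how these metrics are computed, so the sketch below uses their standard definitions. It assumes that no true value is zero, which holds for ratings on a 1 to 5 scale and is required for MAPE. The function name and the sample values are illustrative only.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE, MAPE (in percent), and R² for paired values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)            # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    return {
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
        "mape": 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n,
        "r2": 1.0 - ss_res / ss_tot,
    }

# Illustrative values only, not data from the experiments.
scores = regression_metrics([1, 2, 3, 4, 5], [1, 2, 3, 4, 4])
print(scores)
```

RMSE penalizes large errors more heavily than MAE, so for any set of predictions RMSE is at least as large as MAE.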

For Dataset 1, the training set achieves an RMSE of 1.447, MAE of 1.083, MAPE of 2.134%, and R² of 0.976, indicating high accuracy. The test set also performs well, with an RMSE of 1.595, MAE of 0.312, MAPE of 2.469%, and R² of 0.973, demonstrating reliable generalization.

For Dataset 2, the training set outperforms Dataset 1, achieving an RMSE of 0.354, MAE of 0.271, MAPE of 0.528%, and an excellent R² of 0.999, showcasing exceptional accuracy. Similarly, the test set maintains high performance, with an RMSE of 0.508, MAE of 0.080, MAPE of 0.653%, and R² of 0.997.

This analysis highlights that the proposed model performs consistently across both datasets, with particularly outstanding results on Dataset 2, reflecting its robustness and reliability.

Validation study of proposed model with existing techniques

The existing techniques utilize different datasets; therefore, this research considered the basic techniques and implemented them on the selected datasets. The results were averaged, and the outcomes are presented in Tables 5, 6 and 7.

A comparative study of the proposed model and three other classifiers—EGJO-LSTM22, Modified CNN28, and FuzzyRec33—on Dataset 1 was conducted using R², RMSE, and MAE for both training and validation datasets. EGJO-LSTM22 achieved the best training performance (R²: 0.95, RMSE: 20.35, MAE: 3.54) and decent validation results (R²: 0.62, RMSE: 45.00, MAE: 37.59). Modified CNN28 delivered moderate results during training (R²: 0.74, RMSE: 40.55, MAE: 25.99) and better validation metrics (R²: 0.68, RMSE: 42.04, MAE: 36.53). FuzzyRec33 showed slightly weaker training performance (R²: 0.72, RMSE: 43.80, MAE: 31.57) and poorer validation results (R²: 0.58, RMSE: 47.39, MAE: 37.31).

The proposed model exhibited consistent performance across the training and validation sets, with an R² of 0.70, RMSE of 44.32, and MAE of 30.88 during training, and an R² of 0.70, RMSE of 46.71, and MAE of 39.81 during validation.

A comparative analysis of the proposed model with EGJO-LSTM22, Modified CNN28, and FuzzyRec33 was conducted on Dataset 2, using metrics R², RMSE, and MAE for both training and validation datasets. EGJO-LSTM22 achieves the highest training performance, with an R² of 0.95, RMSE of 26.48, and MAE of 5.91. However, its validation performance is the weakest, with R² dropping to 0.12, RMSE rising to 65.43, and MAE increasing to 58.20, indicating poor generalization. Modified CNN28 demonstrates moderate training performance (R²: 0.51, RMSE: 60.89, MAE: 44.35) but degrades during validation (R²: 0.16, RMSE: 64.04, MAE: 52.86). FuzzyRec33 exhibits similar training results to Modified CNN, with an R² of 0.55, RMSE of 57.17, and MAE of 43.48. Its validation performance is also low, with an R² of 0.13, RMSE of 66.34, and MAE of 52.76.

The proposed model records the weakest training metrics, with an R² of 0.37, RMSE of 70.74, and MAE of 56.84, but achieves a slightly better validation R² (0.23) and MAE (51.98) than the others, with a validation RMSE of 64.67.

The performance of the proposed model was compared with EGJO-LSTM22, Modified CNN28, and FuzzyRec33 on a combined dataset using R², RMSE, and MAE metrics for both training and validation datasets.

In the Training Dataset, EGJO-LSTM22 performs the best, achieving an R² of 0.97, RMSE of 19.41, and MAE of 2.96, indicating strong predictive accuracy. Modified CNN28 and FuzzyRec33 deliver moderate results, with R² values of 0.86 and 0.87, respectively, along with relatively higher RMSE and MAE. The proposed model has an R² of 0.80, RMSE of 44.48, and MAE of 29.82, reflecting weaker training performance compared to the other models.

In the Validation Dataset, EGJO-LSTM22 suffers a sharp decline in performance, with R² dropping to 0.62, along with high RMSE (43.13) and MAE (35.83). Modified CNN28 performs better in validation than in training, achieving an R² of 0.73 and improved RMSE (38.68) and MAE (32.28). FuzzyRec33 exhibits the lowest validation performance, with an R² of 0.44, RMSE of 64.33, and MAE of 53.74. The proposed model balances training and validation performance, with an R² of 0.69, RMSE of 40.11, and MAE of 33.69, indicating better generalization than EGJO-LSTM and FuzzyRec.

Overall, the proposed model demonstrates a stable trade-off between training and validation performance, achieving better generalization compared to its counterparts.

Discussion

The results of this study demonstrate the robustness and generalizability of the proposed model in comparison to existing methods such as EGJO-LSTM, Modified CNN, and FuzzyRec. While EGJO-LSTM excels in training performance, its significant drop in validation metrics highlights poor generalization capabilities. Modified CNN achieves a moderate balance between training and validation but lacks the consistency required for high-stakes applications. FuzzyRec, despite stable training results, underperforms significantly during validation, indicating limitations in handling diverse datasets.

In contrast, the proposed model shows a stable trade-off between training and validation performance, achieving superior generalization compared to EGJO-LSTM and FuzzyRec. Its ability to maintain balanced metrics across multiple datasets underscores its effectiveness in addressing challenges such as overfitting and data imbalance. Additionally, the model’s use of hybrid optimization techniques contributes to its improved performance, particularly in sparse and noisy data scenarios.

The findings highlight the need for models that prioritize generalization alongside training accuracy, especially in real-world applications where validation performance is critical. Future research could explore further enhancements to the proposed framework, including advanced feature extraction and optimization strategies, to improve performance and scalability across diverse datasets and domains.

Limitations

Despite its promising performance, the proposed model has several limitations. First, the evaluation is limited to the goodbooks-10k and Amazon review datasets, which, while representative of e-commerce applications, may not capture the full spectrum of recommendation scenarios. This may restrict the model’s generalizability to other domains such as healthcare, education, or entertainment. Future work will involve testing the framework on a broader range of datasets to assess its cross-domain adaptability and scalability.

Second, the model’s hybrid architecture—integrating LSTM, SC modules, and multiple optimization techniques—incurs greater computational overhead compared to simpler models like Modified CNN and FuzzyRec. This makes the model less suitable for real-time systems or environments with constrained resources. In future work, we plan to explore model compression techniques such as pruning, knowledge distillation, and quantization to reduce computational demands while preserving accuracy.

Conclusion

This study presents an innovative deep learning framework for improving e-publication service recommendations, addressing limitations in traditional collaborative filtering methods. By combining feature extraction with a hybrid approach involving LSTM and split-convolution networks, the proposed model effectively captures both local and global features. The incorporation of SASMOTE, a self-inspected adaptive SMOTE algorithm, addresses challenges in handling imbalanced data by generating high-quality, representative samples through adaptive neighborhood selection and uncertainty inspection. The experimental results demonstrate that the proposed model outperforms existing techniques in terms of prediction accuracy and other performance metrics, proving its robustness and reliability across large datasets. Despite its advantages, the model has certain limitations. The absence of precise user visit timestamps in datasets like Amazon reviews may introduce skewed results. Additionally, the model’s ability to handle complex linguistic challenges such as subjective reviews, sarcasm, and irony remains constrained. The opaqueness of deep learning models, often criticized for their lack of interpretability, also poses challenges in deriving traceable conclusions.

Future work will address current limitations by validating the model across diverse datasets from domains such as education, entertainment, and healthcare to ensure scalability and adaptability. Advanced NLP techniques—including sentiment analysis, emotion recognition, and sarcasm detection—will be integrated to better interpret complex user inputs. To enhance transparency, explainable AI methods like attention mechanisms, SHAP, and LIME will be employed, making model predictions more interpretable and trustworthy.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Buzzetto-Hollywood, N. A. & Thomas-Banks, L. Impact of an unlimited e-book subscription service and digital learning solution in management education at a minority-serving university. J. Inform. Technol. Education: Res. 21, 597–622 (2022).

Kim, J. Y. & Lim, C. K. Feature extracted deep neural collaborative filtering for e-book service recommendations. Appl. Sci. 13 (11), 6833 (2023).

Merkle, A. C., Ferrell, L. K., Ferrell, O. C. & Hair, J. F. Jr Evaluating e-book effectiveness and the impact on student engagement. J. Mark. Educ. 44 (1), 54–71 (2022).

Rong, Y. et al. Du-Bus: a realtime bus waiting time estimation system based on multi-source data. IEEE Trans. Intell. Transp. Syst. 23 (12), 24524–24539. https://doi.org/10.1109/TITS.2022.3210170 (2022).

Stejskal, J. & Hajek, P. The impact of COVID-19 on e-book reading behavior: the case of the municipal library of Prague. Libr. Q. 92 (4), 388–404 (2022).

Sun, G., Li, Y., Liao, D. & Chang, V. Service function chain orchestration across multiple domains: a full mesh aggregation approach. IEEE Trans. Netw. Serv. Manage. 15 (3), 1175–1191. https://doi.org/10.1109/TNSM.2018.2861717 (2018).

Vahidy Rodpysh, K., Mirabedini, S. J. & Banirostam, T. Hybrid method of recommender system to decrement cold start and sparse data issues. J. Electr. Comput. Eng. Innovations. 9 (2), 249–263 (2021).

Maloney-Newton, S., Hickey, M. & Brant, J. M. (eds) Mosby’s Oncology Nursing Advisor-E-Book: A Comprehensive Guide to Clinical Practice (Elsevier Health Sciences, 2023).

Xu, Y., Zhuang, F., Wang, E., Li, C. & Wu, J. Learning without missing-at-random prior propensity: a generative approach for recommender systems. IEEE Trans. Knowl. Data Eng. 37 (2), 754–765. https://doi.org/10.1109/TKDE.2024.3490593 (2025).

Ko, H., Lee, S., Park, Y. & Choi, A. A survey of recommendation systems: recommendation models, techniques, and application fields. Electronics 11 (1), 141 (2022).

Ngaffo, A. N., Ayeb, W. E. & Choukair, Z. Service recommendation driven by a matrix factorization model and time series forecasting. Appl. Intell., 1–16 (2022).

Hao, J., Chen, P., Chen, J. & Li, X. Effectively detecting and diagnosing distributed multivariate time series anomalies via unsupervised federated hypernetwork. Inf. Process. Manag. 62 (4), 104107 (2025).

Agarwal, N., Sikka, G. & Awasthi, L. K. A systematic literature review on web service clustering approaches to enhance service discovery, selection and recommendation. Comput. Sci. Rev. 45, 100498 (2022).

Zhou, Q. et al. Hybrid collaborative filtering model for consumer dynamic service recommendation based on mobile cloud information system. Inf. Process. Manag. 59 (2), 102871 (2022).

Cao, B. et al. Web service recommendation via combining bilinear graph representation and xDeepFM quality prediction. IEEE Trans. Netw. Serv. Manage. 20 (2), 1078–1092 (2023).

Chen, H. et al. Graph cross-correlated network for recommendation. IEEE Trans. Knowl. Data Eng. 37 (2), 710–723. https://doi.org/10.1109/TKDE.2024.3491778 (2025).

Wang, G. et al. Motif-based graph attentional neural network for web service recommendation. Knowl. Based Syst. 269, 110512 (2023).

Zhang, Y., Zhao, M., Chen, Y., Lu, Y. & Cheung, Y. Learning unified distance metric for heterogeneous attribute data clustering. Expert Syst. Appl. 273, 126738 (2025).

Shi, L. L., Liu, L., Jiang, L., Zhu, R. & Panneerselvam, J. QoS prediction for smart service management and recommendation based on the location of mobile users. Neurocomputing 471, 12–20 (2022).

Zhang, S., Zhang, D., Wu, Y. & Zhong, H. Service recommendation model based on trust and QoS for social internet of things. IEEE Trans. Serv. Comput. 16 (5), 3736–3750 (2023).

Xu, K., Zhou, H., Zheng, H., Zhu, M. & Xin, Q. Intelligent classification and personalized recommendation of e-commerce products based on machine learning. ArXiv Preprint. arXiv:2403.19345 (2024).

Rasappan, P., Premkumar, M., Sinha, G. & Chandrasekaran, K. Transforming sentiment analysis for e-commerce product reviews: hybrid deep learning model with an innovative term weighting and feature selection. Inf. Process. Manag. 61 (3), 103654 (2024).

Jain, S. & Roy, P. K. E-commerce review sentiment score prediction considering misspelled words: a deep learning approach. Electron. Commer. Res. 24 (3), 1737–1761 (2024).

Suvarna, B. & Balakrishna, S. Enhanced content-based fashion recommendation system through deep ensemble classifier with transfer learning. Fashion Textiles. 11 (1), 24 (2024).

Rane, N., Choudhary, S. P. & Rane, J. Ensemble deep learning and machine learning: applications, opportunities, challenges, and future directions. Stud. Med. Health Sci. 1 (2), 18–41 (2024).

Xu, K., Zheng, H., Zhan, X., Zhou, S. & Niu, K. Evaluation and optimization of intelligent recommendation system performance with cloud resource automation compatibility. Appl. Comput. Eng. 87, 228–233 (2024).

Nawoya, S. et al. Computer vision and deep learning in insects for food and feed production: a review. Comput. Electron. Agric. 216, 108503 (2024).

Latha, Y. M. & Rao, B. S. Product recommendation using enhanced convolutional neural network for e-commerce platform. Cluster Comput. 27 (2), 1639–1653 (2024).

Zhao, Z. et al. Enhancing e-commerce recommendations: unveiling insights from customer reviews with BERTFusionDNN. J. Theory Pract. Eng. Sci. 4 (2), 38–44 (2024).

Chen, G., Huang, L., Xiao, S., Zhang, C. & Zhao, H. Attending to customer attention: a novel deep learning method for leveraging multimodal online reviews to enhance sales prediction. Inform. Syst. Res. 35 (2), 829–849 (2024).

Sharma, A. K. et al. An efficient approach of product recommendation system using NLP technique. Mater. Today: Proc. 80, 3730–3743 (2023).

Choudhary, C., Singh, I. & Kumar, M. SARWAS: deep ensemble learning techniques for sentiment-based recommendation system. Expert Syst. Appl. 216, 119420 (2023).

Pham, P., Nguyen, L. T., Nguyen, N. T., Kozma, R. & Vo, B. A hierarchical fused fuzzy deep neural network with heterogeneous network embedding for recommendation. Inf. Sci. 620, 105–124 (2023).

Liu, B. Intelligent advertising recommendation and abnormal advertising monitoring system in the field of machine learning. Int. J. Comput. Sci. Inform. Technol. 1 (1), 17–23 (2023).

Solairaj, A., Sugitha, G. & Kavitha, G. Enhanced Elman Spike neural network-based sentiment analysis of online product recommendation. Appl. Soft Comput. 132, 109789 (2023).

Su, Q. et al. Attention transfer reinforcement learning for test case prioritization in continuous integration. Appl. Sci. 15 (4), 2243. https://doi.org/10.3390/app15042243 (2025).

Ullah, I. et al. Multi-horizon short-term load forecasting using hybrid of LSTM and modified split Convolution. PeerJ Comput. Sci. 9, e1487 (2023).

Braik, M. et al. White shark optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl. Based Syst. 243, 108457 (2022).

Aluvalu, R. et al. Komodo Dragon Mlipir Algorithm-based CNN model for detection of illegal tree cutting in smart IoT forest areas. Recent. Adv. Comput. Sci. Commun. 17 (6), 1–12 (2024).

Song, L., Chen, S., Meng, Z., Sun, M. & Shang, X. A Fine-Grained multimodal sentiment analysis dataset based on stock comment videos. IEEE Trans. Multimedia. 26, 7294–7306. https://doi.org/10.1109/TMM.2024.3363641 (2024).

Wang, P. et al. Server-Initiated federated unlearning to eliminate impacts of Low-Quality data. IEEE Trans. Serv. Comput. 17 (3), 1196–1211. https://doi.org/10.1109/TSC.2024.3355188 (2024).

Goodbooks-10k. Available online: https://github.com/zygmuntz/goodbooks-10k

King, E. Amazon customer reviews (2016). Available: https://www.kaggle.com/datasets/vivekprajapati2048/amazoncustomer-reviews?datasetId=1470538

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

All authors contributed equally.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

The submitted work is original and has not been published elsewhere in any form or language.

Research involving human participants and/or animals

NA.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Vatambeti, R., Gandikota, H.P., Siri, D. et al. Enhancing sparse data recommendations with self-inspected adaptive SMOTE and hybrid neural networks. Sci Rep 15, 17229 (2025). https://doi.org/10.1038/s41598-025-02593-9

DOI: https://doi.org/10.1038/s41598-025-02593-9