Abstract

Federated learning provides an effective solution to the data privacy issue in distributed machine learning. However, distributed federated learning systems are inherently susceptible to data poisoning attacks and data heterogeneity. Under conditions of high data heterogeneity, the gradient conflict problem in federated learning becomes more pronounced, making traditional defense mechanisms against poisoning attacks less adaptable between scenarios with and without attacks. To address this challenge, we design a two-stage federated learning framework for defending against poisoning attacks—FedCVG. During implementation, FedCVG first removes malicious clients using a reputation-based clustering method, and then optimizes communication overhead through a virtual aggregation mechanism. Extensive experimental results show that, compared to other baseline methods, FedCVG improves average accuracy by 4.2% and reduces communication overhead by approximately 50% while defending against poisoning attacks.

Similar content being viewed by others

Introduction

With the growing demand for data privacy protection, traditional centralized machine learning methods have gradually exposed their limitations in the face of data privacy risks. In many practical applications, data involves a large amount of sensitive information, such as medical records, financial data, etc. Directly aggregating this data on a server for processing not only poses significant privacy risks but may also violate relevant laws and regulations.

To achieve training without moving data, federated learning1 was introduced. Federated learning is a new distributed learning paradigm, and its core idea is to decentralize the data processing process to various data-holding ends (i.e., clients), avoiding the need to upload raw data to a server. Specifically, clients train models locally and only upload model parameter updates instead of raw data, effectively protecting user privacy. This mechanism enables federated learning to ensure data privacy while achieving large-scale collaborative learning, providing feasible solutions for processing privacy-sensitive data in fields such as smartphones, medical systems, and financial platforms.

The basic process of federated learning typically includes the following key steps: First, the server initializes a global model and distributes it to the clients. Each client trains the model based on local data and calculates the local model parameter updates. Then, the clients send these model updates to the server, rather than uploading local data. The server collects and aggregates the model updates from each client, obtaining the global model update through an aggregation algorithm (e.g., weighted average). Finally, the updated global model is returned to the clients to begin a new round of training iterations, until the model reaches the predetermined convergence criterion.

Although federated learning offers significant advantages in data privacy protection, it still faces numerous challenges in practical applications. First, data heterogeneity2 is a major challenge in federated learning. Due to substantial differences in the local data distributions across clients, traditional model aggregation methods often struggle to effectively address this heterogeneity, resulting in suboptimal convergence of the global model and even compromising final predictive performance. Second, poisoning attacks3 pose a serious security threat to federated learning. Malicious clients may upload tampered model updates, leading to a significant decline in the performance of the global model and even disrupting the normal operation of the system. Therefore, how to address these issues while ensuring privacy protection remains a key challenge in the development of federated learning technology. The following sections will systematically review the latest advances in related research from the perspectives of optimization algorithms and defenses against poisoning attacks.

Federated learning optimization algorithms aim to efficiently train models on decentralized devices while addressing challenges such as high communication costs, data distribution heterogeneity, and system heterogeneity. McMahan et al. (2017) proposed Federated Averaging (FedAvg), which updates the global model by taking the weighted average of local model updates. This method has become the foundation of federated learning optimization4. However, FedAvg may converge slowly in scenarios with strong data heterogeneity, especially when the client data is non-independent and identically distributed (Non-IID).To address heterogeneity, Li et al. (2018) introduced FedProx, which adds a proximal term to the local objective function to constrain the deviation of the client model from the global model, thus improving stability5. Karimireddy et al. (2020) introduced SCAFFOLD, which uses control variables to estimate the direction of global model updates and correct client drift, thereby accelerating convergence6. Reddi et al. (2020) explored adaptive optimization methods such as FedAdam and FedYogi, which adjust learning rates based on historical gradients, similar to adaptive optimizers in centralized settings, improving federated learning performance7. Additionally, federated learning for different scenarios has also been continuously developing. FedNova normalizes the number of local update steps to balance the contributions of different clients8, making federated learning more adaptable to scenarios with varying client computational capabilities. FedOpt provides a universal federated optimization framework that supports flexible combinations of various optimization strategies9.

Due to its distributed nature, federated learning is vulnerable to poisoning attacks. Sagar et al. (2023) provided a classification of poisoning attacks and defenses in “Poisoning Attacks and Defenses in Federated Learning: A Survey.” Existing defense strategies for poisoning attacks are mainly based on Byzantine-robust aggregation, validation-based methods, and Model Analysis10. Byzantine-robust aggregation methods, such as Krum (Blanchard et al., 2017), select updates closest to the majority11, and trimmed mean (Yin et al., 2018) averages values after removing outliers12. These methods can mitigate the impact of outliers to some extent, but their robustness is limited in the case of non-IID data and multi-party coordinated attacks. Fung et al. (2018) proposed FoolsGold, which identifies Sybil attackers by analyzing the diversity of client updates, but this method assumes that malicious clients’ updates are more similar13. Model Analysis-based methods, such as Euclidean distance14, K-Means clustering15, joint similarity16, and disparity statistics17, assume that two clients sufficiently close belong to the same category, but they assume that attackers are in the minority. For validation-based methods18, proposed a federated learning framework that evaluates client reputation through the global model’s performance, but it uses real data from clients, while Han et al.‘s method19 set up a clean test set on the client side to evaluate client loss to identify anomaly stages. Similarly, these methods undermine federated learning’s privacy protection to some extent.

Some traditional algorithms for defending against poisoning attacks are shown in Table 1.

Although substantial progress has been made in both optimization algorithms and defense against poisoning attacks in federated learning, research that integrates these two aspects remains relatively limited. As data heterogeneity increases, gradient conflicts among clients become more pronounced, which not only complicates model training but also poses greater challenges for identifying malicious nodes22. Recently proposed methods such as MSGuard23 and SecDefender24 have provided new perspectives for addressing complex defense scenarios in federated learning. The core of MSGuard lies in integrating multiple anomaly detection features—including sign statistics, cosine similarity, and spectral anomaly scores—and employing clustering algorithms to adaptively identify trustworthy clients. SecDefender, on the other hand, addresses practical issues such as multi-domain applications, data heterogeneity, label-flipping attacks, and low-quality models. Nevertheless, comprehensive studies targeting such complex scenarios remain relatively scarce overall.

To address the above issues, this paper proposes a two-stage federated learning framework for defending against poisoning attacks. The first stage is the malicious node identification process based on a reputation mechanism and K-means clustering, aimed at identifying and removing malicious clients performing poisoning under data heterogeneity. The second stage decouples feature extraction and classifier training processes, and implements “virtual aggregation” of clients by recording historical gradients.

Our contributions are as follows:

-

1.

We propose a two-stage federated learning framework that reduces the likelihood of poisoning attacks while maintaining high accuracy.

-

2.

We design a decoupled feature extraction and classifier training process, and implement a “virtual aggregation” mechanism for clients by recording historical gradients, reducing the risk of model inversion attacks.

-

3.

Compared to traditional algorithms, our algorithm achieves better defense against poisoning attacks, improves accuracy, reduces communication overhead, and minimizes the risk of privacy leakage.

The structure of this paper is as follows: “Problem overview” Section will introduce the issues currently faced by federated learning; “Methods” Section will present the details and process of the FedCVG algorithm; “Results” Section will provide experimental results and analysis; “Conclusion” Section will summarize the paper and propose future research directions.

Problem overview

Federated learning

In a typical federated learning setup, there are \(\:n\) clients, each client \(\:{\:C}_{i}\) has its own local dataset \(\:{D}_{i}\:\left(i\in\:\left[1,n\right]\right)\). In each communication round, \(\:k\) clients\(\:\:\left(k\le\:n\right)\:\)are selected to participate in training the global model. All clients share a global model \(\:{W}_{t}\), and each client updates its local model based on its local data. Assume the local update rule for client\(\:{\:\:C}_{i}\) is:

where \(\:{W}_{i}^{t}\) is the global model at round \(\:t\), \(\:\eta\:\) is the learning rate, and \(\:L\left({w}_{t},{D}_{i}\right)\) is the loss function computed on the dataset \(\:{\:D}_{i}\) of client \(\:{\:C}_{i\:}\).

After calculating the local model \(\:{w}_{i}^{t+1}\), each client uploads it to the server. The server aggregates the local updates from all clients using the averaging mechanism to update the global model:

Poisoning attacks

The Gaussian attack is a method of disrupting model training by adding noise to the gradient updates. Specifically, the attacker adds Gaussian-distributed noise to the gradients or model updates uploaded by each client, thereby disturbing the global model update. The goal of this attack is to make global model training unstable or mislead the convergence direction of the global model.

The Gaussian attack disturbs the training process by adding noise to the gradients. Let the gradient calculated by client \(\:{\:C}_{i}\)be \(\:\nabla\:L\left({w}_{t},{D}_{i}\right)\), and let \(\:g\) represent the gradient after the attack. The attacker adds Gaussian noise \(\:N\left(0,{\sigma\:}^{2}I\right)\) to the gradient, and the updated gradient becomes:

A sign flip attack refers to an attack where the attacker flips the sign of the model’s gradient to influence the update of the global model. In each iteration, the attacker reverses the sign of the computed gradient, causing the global model to update in the wrong direction. This type of attack is simple but effective, as it does not require modifying the magnitude of the gradient.

Under normal circumstances, client \(\:{\:C}_{i}\) would upload its computed gradient \(\:\nabla\:L\left({w}_{t},{D}_{i}\right)\). The attacker flips the sign of the gradient, and the update formula becomes:

A targeted attack is a more complex form of attack where the attacker aims not only to disrupt the model’s training but also to steer the model toward a specific goal. The attacker may manipulate the client’s gradient to bias the global model output toward a specific target (e.g., misclassifying a particular class label). This attack is more specific and aims to influence particular predictions rather than merely degrade the global model’s performance.

Let the local gradient computed by client \(\:{\:C}_{i}\) be \(\:\:\nabla\:L\left({w}_{t},{D}_{i}\right)\). The targeted attack aims to adjust the gradient to make the model converge toward a specific target \(\:w\). The attacker adds an additional gradient correction term, making the gradient update bias toward the target model:

Non-IID

Non-IID (Non-Independent and Identically Distributed) refers to differences in the data distribution across clients. Compared to IID (Independent and Identically Distributed) data, Non-IID data is more complex and brings issues such as instability in the training process, slower convergence, and poorer performance of the global model. Data heterogeneity can be further divided into label skew and feature skew.

-

Label Skew: The data categories are unevenly distributed across clients. Some clients may mainly have data from certain categories, while other categories may be underrepresented.

-

Feature Skew: Each client may have a different feature space. For example, in image classification, some clients may only have specific types of images, while other clients may have completely different types.

Data heterogeneity and poisoning attacks combined

When data heterogeneity and poisoning attacks combine, it introduces a series of new challenges for federated learning. From the federated optimization perspective, data heterogeneity itself causes differences in the training data distribution between clients, leading to unstable convergence and slower training speed. If an attacker injects malicious data or gradient updates into certain clients, the impact of the attack is amplified. From the defense perspective, under Non-IID data, each client’s training data may be imbalanced or belong to a specific category. This means that poisoning attackers can exploit the natural data distribution differences to conceal their malicious behavior.

Methods

We propose that FedCVG consists of two stages, as shown in Fig. 1. Due to the presence of poisoning attacks under data heterogeneity, we enhance the traditional clustering algorithm by adding a reputation mechanism to introduce fault tolerance, forming the first stage of the FedCVG algorithm. The stability of the model is ensured during the second stage, where focused training is carried out. In the second stage, we decouple the model’s feature extraction layer and classifier layer, freeze the feature extraction layer, and focus on training the classifier layer. Additionally, a “virtual aggregation” strategy based on historical gradients is employed to improve model accuracy.

Stage 1: malicious node filtering

The first stage of the FedCVG algorithm combines traditional clustering algorithms with a reputation mechanism to address poisoning attacks under data heterogeneity. The main goal of this stage is to enhance the algorithm’s fault tolerance, ensuring that the clustering and update processes can proceed effectively, even in the presence of malicious clients. The following describes the algorithm according to the federated learning training process. The process of stage 1 is shown in Fig. 2:

Local update: We first adopt SCAFFOLD, which has a fast convergence rate, as the base aggregation algorithm. SCAFFOLD (Stochastic Controlled Averaging for Federated Learning) is a fast-converging federated learning algorithm that introduces control variables between clients and servers to reduce the slow training speed caused by data heterogeneity and inconsistencies in client gradient updates.

Each client \(\:i\) updates its control variable based on the global model parameters and control variables from the previous round:

where\(\:{\:w}_{t}\) is the local model of client\(\:\:i\:\)at round \(\:t\), and \(\:{w}_{t}\:\) is the global model of the server. The server aggregates all client control variables and updates its own control variable:

where \(\:N\) is the total number of clients, and \(\:{b}_{t}\:\)is the server’s control variable.

When the client performs local updates, the gradient computation is modified to ensure that the control variable’s impact is considered, reducing inconsistencies. The update formula for client \(\:i\) is:

Aggregation mechanism: We introduce clustering and reputation mechanisms to defend against poisoning attacks. Each client’s model update can be represented as a high-dimensional vector, denoted as the client’s update parameter set.

where \(\:{w}_{i}\) is the update vector of client \(\:i\). Upon receiving all client updates, the server performs K-Means clustering on these updates, calculating inter-cluster distances to identify malicious clients. The goal is to separate benign clients from malicious ones. The cluster center \(\:{M}_{k}\) is defined as:

where \(\:\left|{S}_{k}\right|\) is the number of clients in the \(\:k\) cluster, and\(\:{\:M}_{k}\:\) is the average of all client updates in that cluster. The distance between clusters can be calculated by the Euclidean distance between their centers:

where \(\:{M}_{ik\:}\)and \(\:{M}_{jk}\) are the k-th dimensional coordinates of cluster centers i and j, respectively.

The size of each cluster and the distances between clusters are calculated. If the number of clients in a cluster \(\:{S}_{i}\) is less than a preset threshold \(\:S\), and its distance from other clusters exceeds a distance threshold \(\:D\)—that is, if a cluster is both “small in scale and far from others”—it is marked as a malicious cluster. Updates from clients in malicious clusters are excluded from aggregation, and their reputation scores are penalized. If a client’s reputation \(\:{r}_{i}\) falls below a reputation threshold R, it is isolated. As with clustering, In scenarios with strong data heterogeneity, it is necessary to enhance robustness by appropriately increasing the distance threshold \(\:D\) and decreasing the cluster size threshold \(\:S\) to prevent false positives. Conversely, if data heterogeneity is low, \(\:D\) can be reduced and \(\:S\) increased to improve the model’s sensitivity and strengthen the identification of attacks. The choice of reputation threshold \(\:R\) also involves communication overhead considerations—a higher \(\:R\) may cause false positives and reduce data diversity, while a lower \(\:R\) may fail to promptly exclude malicious or underperforming nodes. In summary, the selection of \(\:D\:\), \(\:S\), and \(\:R\) depends on both the environment and communication cost, and requires manual tuning.

Finally, the server integrates updates from trusted clusters according to Eqs. (1) and (2), and completes global model updating and evaluation using a test set on the server. It should be noted that, in practical federated learning tasks, no data is stored on the server. In our experiments, the server’s test set is not involved in training; it is only used for model validation.

The pseudocode for stage 1 of the algorithm is shown in Table 2:

Stage 2: focused learning

In the second stage of FedCVG, our goal is to decouple the model’s feature extraction and classification layers, and adopt a “virtual aggregation” strategy based on historical gradients to focus on training the classification layer. The purpose of this approach is to minimize the model’s communication overhead while ensuring the model’s performance.

Decoupling: In the second stage, the model’s feature extraction layers (such as convolutional or embedding layers) are frozen based on the state achieved after the first stage of training, and their parameters remain unchanged; only the classification layer is updated. For a model \(\:{f}_{\theta\:\text{}}\) (where \(\:\theta\:\:\)denotes all model parameters), we split \(\:\theta\:\:\)into feature extractor parameters \(\:{\theta\:\text{}}_{f}\) and classifier parameters \(\:{\theta\:\text{}}_{c}\), with the following update rules

where \(\:\mathcal{L}\) is the classification loss function, \(\:\widehat{y\:}\) is the predicted output, \(\:y\) is the true label, \(\:\eta\:\) is the learning rate, and \(\:{\theta\:}_{c}^{\left(t\right)}\) are the model’s classification layer parameters.

Virtual aggregation strategy: To improve the training performance in the second stage, we introduce the virtual aggregation strategy by utilizing historical gradients to guide the server-side aggregation process. Specifically, in the second stage, the gradients of the selected clients are retained. If a client is not selected during a particular update, the historical gradients of that client are used to perform a weighted average of the client’s update.

From a security perspective, the most sensitive part of the proxy information is the gradient data. From the attacker’s viewpoint, if the endpoint device’s security is compromised, directly accessing client data is much easier and more straightforward than inferring raw data from the database. Therefore, the risk of gradient leakage mainly arises during communication. In contrast, since the training is split into two stages and only classification layer gradients are uploaded in the second stage, this structure actually reduces the likelihood of attackers reconstructing original data via inversion attacks, thereby enhancing overall security, as illustrated in Fig. 1.

Each client’s update can be represented as the difference between the current model and the previous update, i.e., the gradient \(\:\:{g}_{i}^{t}\) of the client. To reduce the influence of storing historical gradients for a long time, we add a time-decay parameter\(\:\:\alpha\:\), which controls the impact of historical gradients on the aggregation result. The historical gradient decays based on the time from the current round. If a client was selected in round \(\:{\:t}_{i}\) for aggregation, and if the client is not selected again until round \(\:t\), the client’s virtual aggregation gradient \(\:g\) is:

The aggregation process on the server considers both the historical gradients and the current client updates. Suppose the global model at the server in round \(\:t\) is \(\:{\:w}_{t}\), then each client’s update (virtual update) is weighted and aggregated as follows:

Meanwhile, the server combines reputation information from the first stage and aggregates only the classifier parameters from trusted clients to complete global model updating and evaluation.

The pseudocode for the second stage of the algorithm is shown in Table 3:

Results

The experiment consists of six parts. Sections 1–4 are introductory, covering the dataset and model setup, poisoning attacks and Non-IID environments, baseline algorithm introduction, and experimental environment and parameter setup. Section 5 contains the experimental section, which includes three experiments: experiments in different Non-IID environments, experiments in three poisoning attack environments, and communication overhead experiments. Finally, Section 6 summarizes the experimental content.

Dataset and model setup

In this experiment, we used two classic deep learning models for federated learning training: LeNet and ResNet34. These two models represent small convolutional neural networks and complex residual network architectures, respectively, and are able to demonstrate the performance of models with different complexities in federated learning.

We selected three commonly used datasets for our experiments: MNIST, FashionMNIST, and CIFAR-10. MNIST is a handwritten digit recognition dataset with 60,000 training images and 10,000 test images, each sized 28 × 28 pixels. It is suitable for evaluating model performance on simple tasks. FashionMNIST contains 10 categories of clothing images, with 7,000 images per category. The image format is the same as MNIST, but the content is more complex, making it useful for assessing model capability on tasks of moderate complexity. CIFAR-10 consists of 10 categories of object images, with 6,000 images per category and each image sized 32 × 32 pixels. It is well-suited for testing model performance on diverse image classification tasks.

Poisoning attacks and non-IID environments

Non-IID data distribution

In federated learning experiments, data is typically distributed across multiple clients, and the local data of these clients often has different characteristics and biases. To simulate data distribution in real-world environments, we adopted a Non-IID (Non-Independent and Identically Distributed) data distribution. Non-IID data not only differs in samples but also shows imbalance or bias in category distribution. This is closer to the actual data characteristics in distributed systems, where certain categories of data may be concentrated in a few clients, while other categories may be sparse.

In the experiment, we controlled the degree of Non-IID by adjusting the alpha value. We used the Dirichlet distribution to simulate the data distribution, simultaneously applying label Non-IID and quantity Non-IID. Smaller alpha values (e.g. \(\:\alpha\:\)<0.1) lead to stronger class imbalance, with data from certain classes concentrated in a few clients, while larger alpha values (e.g. α close to 1 or larger) make the data distribution more uniform, approaching IID. The impact of Non-IID on data heterogeneity is shown in Fig. 3:

Poisoning attacks

To evaluate the impact of malicious clients on the performance of federated learning systems, we employ three common poisoning attack modes:

Gaussian Attack: Malicious clients inject Gaussian noise during local training to disrupt gradient updates. This causes the client’s update to be inconsistent with the global model, potentially leading to unstable convergence or trapping the model in a local optimum.

Sign Flip Attack: Malicious clients interfere with training by flipping the sign of the gradients (e.g., converting positive gradients to negative), which causes the model parameters to be updated in the wrong direction.

Targeted Attack: Malicious clients intentionally shift the gradient in a specific direction to influence global model training, usually degrading performance on specific tasks or target labels.

Baseline algorithm introduction

In our experiments, we adopt two types of algorithms: federated learning optimization algorithms and poisoning attack defense algorithms.

Federated learning optimization algorithms mainly focus on improving the efficiency and performance of federated learning, but are not specifically designed to defend against malicious clients. Representative methods such as FedAvg, SCAFFOLD, and FedProx can enhance training effectiveness and stability, but still lack effective defenses when facing poisoning attacks.

Poisoning attack defense algorithms improve system robustness through various mechanisms. Traditional methods like Trimmed Mean mitigate the impact of abnormal clients by removing extreme values, while Auror combines robust aggregation with a dynamic trust mechanism to significantly enhance resistance to poisoning attacks. Recent approaches such as MSGuard employ multi-strategy fusion to automatically select trustworthy gradients, and SecDefender dynamically removes underperforming client models using an auxiliary validation set, further strengthening defense capabilities.

Experimental environment and parameter setup

Experimental Environment: All experiments in this paper were conducted on a machine with the following specifications: 16 vCPUs, AMD EPYC 9654 96-Core Processor, vGPU-32GB (32GB), and 60GB of memory.

Experimental Parameters: For each training round, 10 clients are involved by default, with a participation rate of 50%. The total number of training rounds is set to 100, with 3 local training rounds per client. The batch size is 64, the learning rate is 0.01, the momentum coefficient is 0.9, and the weight decay coefficient is 1e-5. For the FedCVG algorithm, the number of training rounds in the first stage is 40, and in the second stage, it is 60 rounds, the three clustering thresholds are \(\:D\) = 1, \(\:S\) = 0.45, and \(\:R\) = 0.25, respectively.

Experiments

Experiment 1: results in different non-IID environments

In this experiment, the federated learning system divides the dataset into a training set and a test set. The training set is distributed among different clients (using either IID or non-IID settings), while the test set is retained on the server for subsequent performance evaluation. In each training round, selected clients train the model using their local data and upload the updated model parameters to the server. The server aggregates the model parameters from all clients using the federated averaging algorithm to generate a new global model. Subsequently, the server evaluates the global model using the independent test set, calculating metrics such as accuracy and F1 score to assess the model’s generalization ability.

We focus on validating the performance of the FedCVG algorithm under different datasets and baseline models (such as FedAvg, Krum, FedProx, SCAFFOLD, etc.). To do this, we set up different Non-IID environments by adjusting the alpha value to simulate data imbalance, and conducted experiments on the CIFAR-10, FashionMNIST, and MNIST datasets. Figure 4 shows the in accuracy of different algorithms on the server-side test set as the number of training rounds increases.

From the experimental results, it can be seen that as the α value increases (i.e., the data distribution becomes more uniform), the model accuracy of all algorithms improves significantly, further confirming that data heterogeneity poses a considerable challenge to federated learning model training. Focusing on different algorithms, in environments without attacks, optimization-based algorithms generally perform well, with higher accuracy and faster convergence. Among them, FedCVG performs only slightly below SCAFFOLD on complex datasets such as CIFAR-10, and its fluctuations are smaller than those of SCAFFOLD; on the FashionMNIST dataset, FedCVG’s performance is close to that of SCAFFOLD and demonstrates even greater stability; on simpler datasets like MNIST, FedCVG shows more significant performance improvements compared to other optimization algorithms. In contrast, robust aggregation algorithms designed to defend against poisoning attacks have certain advantages in handling abnormal updates, but their accuracy is noticeably lower than that of optimization-based algorithms in clean or complex data distribution scenarios. Algorithms such as Auror also exhibit greater fluctuations and lower final performance in some experiments. Specifically, in environments without poisoning attacks, FedCVG achieves accuracy improvements of 0.42%, 1.25%, and 1.51% on the CIFAR-10, FashionMNIST, and MNIST datasets, respectively. Overall, FedCVG is able to ensure both convergence speed and model performance, while also maintaining a certain degree of stability and adaptability to data heterogeneity.

Experiment 2: experiments in three poisoning attack environments

In this experiment, we evaluate the performance of the FedCVG algorithm on the FashionMNIST dataset under three common poisoning attack modes: Gaussian noise attack, sign flip attack, and targeted attack. The main goal of this experiment is to compare the robustness of the FedCVG algorithm with several other baseline methods (such as Auror, Median, TrimmedMean, MSGuard, SecDefender etc.) when faced with malicious clients.

To simulate an environment with both poisoning attacks and data heterogeneity, we set different alpha values (0.1, 0.5, and 1.0) to test the algorithm’s performance. We also used the results from the last 50 rounds, removing the highest and lowest values, and averaging the remaining rounds to represent the model’s average accuracy after convergence. The results are shown in Table 4.

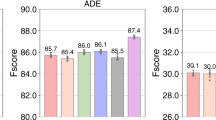

According to the results in the table, FedCVG achieves the highest or near-highest accuracy in most attack types and attack intensities (α values), especially under medium and high-intensity Sign_flip and Targeted attacks. It is worth noting that MSGuard fails to produce valid results under Sign_flip attacks, which may be due to the fact that after gradient sign flipping, the malicious clients’ model parameters significantly impact the global model. After several rounds of training, the global model becomes highly unstable, causing the parameter norms of all clients (including benign ones) to become abnormal and exceed the threshold range set by MSGuard’s norm-based filtering. SecDefender did not perform as expected, mainly because its filtering mechanism is relatively strict. When facing complex or diverse attacks, it may mistakenly filter out many benign clients, which reduces the robustness and generalization ability of the global model. Other algorithms are competitive in some scenarios, but overall, their stability is lower than that of FedCVG. We present the results from the table as bar charts, as shown in Fig. 5.

From the Fig. 5, it can be observed that under the Gaussian noise attack, as the alpha value decreases, the accuracy of all algorithms declines. However, FedCVG only falling below Auror when α = 0.1. This result indicates that FedCVG demonstrates stability and interference resistance when dealing with malicious clients that add noise.

In sign flip and targeted attack environments, FedCVG is also able to maintain high performance. This is because, compared to traditional robust aggregation algorithms, FedCVG, although it involves three threshold parameters, demonstrates stronger adaptability to different environments than the parameters of typical robust algorithms. Compared to algorithms that integrate multiple attack detection mechanisms, FedCVG maximizes the preservation of client data diversity. This is due to the clustering-based reputation mechanism in the first stage of FedCVG, which effectively filters out malicious clients, and the focused training mechanism introduced in the second stage, which enables the model to concentrate on learning from legitimate clients’ data, thereby effectively maintaining model performance.

Communication overhead experiment

In this experiment, we compared three federated learning algorithms: Auror, FedAvg, and FedCVG, which respectively represent federated learning optimization algorithms and algorithms for defending against poisoning attacks, under the CIFAR-10 dataset and ResNet34 model conditions, with a focus on evaluating the balance between communication overhead and accuracy. The experiment recorded the communication overhead (in MB) during each training round and the model accuracy, showcasing the performance differences of different algorithms when facing the same dataset and network model. The results are shown in Fig. 6:

From the experimental charts of Auror and FedAvg, it can be seen that as the number of training rounds increases, the communication overhead (orange curve) continues to rise, while the accuracy (blue curve) shows a gradual upward trend. Although Auror achieves stable accuracy improvement on the CIFAR-10 dataset, its communication overhead is very high. As training progresses, the cumulative communication cost of Auror (right Y-axis) continues to increase, indicating that it consumes a large amount of bandwidth resources when transmitting model updates, especially during larger training rounds.

Compared to Auror and FedAvg, the FedCVG chart shows a different trend. While the accuracy of FedCVG (blue curve) gradually increases and stabilizes, which is similar to both the Auror and FedAvg algorithms, its cumulative communication overhead (orange curve) is much lower than the other two. It can be seen that in the early stages of training, FedCVG’s communication overhead remains at the same level as Auror and FedAvg. However, after reaching the second stage, its communication overhead does not significantly increase. This is due to our mechanism of decoupling feature extraction from the classification task and freezing the feature extraction layer while focusing on training the classification layer. This shows that, compared to traditional federated optimization algorithms and robust aggregation algorithms, FedCVG optimizes communication efficiency without the need for communication compression, achieving a better balance between model performance and communication overhead.

Experimental summary

In the experimental section, we conducted experiments in different Non-IID environments, under three types of data poisoning attack scenarios, and compared the communication overhead of different algorithms using CIFAR-10 and ResNet34. Thanks to the two-stage federated learning mechanism, FedCVG achieved an average accuracy improvement of 1.06% over other algorithms in heterogeneous data environments, an average improvement of 4.2% in defending against poisoning attacks, and reduced communication overhead by approximately 50%.

Conclusion

In this paper, we proposed a two-stage federated learning framework for defending against poisoning attacks, FedCVG. In the first stage, the framework uses a reputation mechanism and clustering algorithm to filter out malicious nodes. In the second stage, the feature extraction and classification processes are decoupled, and the focus is placed on training the classification layer of the model. Experiments conducted in three different environments demonstrate that our defense algorithm achieves comparable performance to federated learning optimization algorithms. Compared to other baseline methods, FedCVG improves average accuracy by 4.2% and reduces communication overhead by approximately 50% while defending against poisoning attacks.

In future work, we will further explore the integration of FedCVG with privacy technologies such as differential privacy and homomorphic encryption to enhance defense against inversion attacks. Additionally, we will attempt to apply methods such as knowledge distillation and weight compression to further reduce the model’s communication overhead.

Data availability

You can visit https://github.com/zhangsanry/FedCVG to obtain the data resources and further details about the project.

References

Konečný, J., McMahan, H. B., Ramage, D. & Richtárik, P. Federated optimization: Distributed machine learning for on-device intelligence. arXiv. arXiv:1610.02527 (2016).

Ye, M., Fang, X., Du, B., Yuen, P. C. & Tao, D. Heterogeneous federated learning: State-of-the-art and research challenges. arXiv. arXiv:2307.10616 (2023).

Feng, Y. et al. A survey of security threats in federated learning. Complex. Intell. Syst. 11(2), 165. https://doi.org/10.1007/s40747-024-01664-0 (2025).

McMahan, B., Moore, E., Ramage, D., Hampson, S. & Arcas, B. A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, 1273–1282. https://proceedings.mlr.press/v54/mcmahan17a.html (PMLR, 2017).

Li, T. et al. Federated optimization in heterogeneous networks. arXiv. arXiv:1812.06127.

Karimireddy, S. P. et al. SCAFFOLD: Stochastic controlled averaging for federated learning. In Proceedings of the 37th International Conference on Machine Learning, 5132–5143.https://proceedings.mlr.press/v119/karimireddy20a.html (PMLR, 2020).

Reddi, S. et al. Adaptive federated optimization. arXiv. arXiv:2003.00295 (2021).

Wang, J., Liu, Q., Liang, H., Joshi, G. & Poor, H. V. Tackling the objective inconsistency problem in heterogeneous federated optimization. Adv. Neural. Inf. Process. Syst. 33, 7611–7623. https://proceedings.neurips.cc/paper_files/paper/2020/file/564127c03caab942e503ee6f810f54fd-Paper.pdf (2020).

Asad, M., Moustafa, A. & Ito, T. FedOpt: Towards communication efficiency and privacy preservation in federated learning. Appl. Sci. 10(8), 2864. https://doi.org/10.3390/app10082864 (2020).

Xia, G., Chen, J., Yu, C. &Ma, J. Poisoning attacks in federated learning: A survey. IEEE Access 11, 10708–10722. https://doi.org/10.1109/ACCESS.2023.3238823 (2023).

Blanchard, P., El Mhamdi, E. M., Guerraoui, R. & Stainer, J. Machine learning with adversaries: Byzantine tolerant gradient descent. Adv. Neural Inf. Process. Syst. 30 (2017).

Yin, D., Chen, Y., Kannan, R. & Bartlett, P. Byzantine-robust distributed learning: Towards optimal statistical rates. In Proceedings of the 35th International Conference on Machine Learning, 5650–5659. https://proceedings.mlr.press/v80/yin18a.html (PMLR, 2018).

Fung, C., Yoon, C. J. M. & Beschastnikh, I. Mitigating sybils in federated learning poisoning. arXiv. arXiv:1808.04866 (2020).

Aloran, I. & Samet, S. A secure federated learning approach: Preventing model poisoning attacks via optimal clustering. In 2024 International Conference on Data Science and Its Applications (ICoDSA), 140–145. https://doi.org/10.1109/ICoDSA62899.2024.10651973 (2024).

Shen, S., Tople, S. & Saxena, P. AUROR: Defending against poisoning attacks in collaborative deep learning systems. In 32nd Annual Computer Security Applications Conference (ACSAC 2016), 508–519. https://doi.org/10.1145/291079.2991125 (2016).

Deng, J., Liu, S. & Li, C. Detecting Diverse poisoning attacks in federated learning based on joint similarity. In 2024 16th International Conference on Wireless Communications and Signal Processing (WCSP), 133–138. https://doi.org/10.1109/WCSP62071.2024.10827693 (2024).

Colosimo, F. & De Rango, F. Performance evaluation of distance-statistical based byzantine-robust algorithms in federated learning. In 2024 IEEE Wireless Communications and Networking Conference (WCNC), 1–6. https://doi.org/10.1109/WCNC57260.2024.10570891 (2024).

Assumpcao, N. R. G. & Villas, L. Fast, private, and protected: Safeguarding data privacy and defending against model poisoning attacks in federated learning. In 2024 IEEE Symposium on Computers and Communications (ISCC), 1–6. https://doi.org/10.1109/ISCC61673.2024.10733713 (2024).

Han, F., Zhang, Y. & Zhao, M. Defending poisoning attacks in federated learning via loss value normal distribution. In 2023 26th International Conference on Computer Supported Cooperative Work in Design (CSCWD), 1644–1649. https://doi.org/10.1109/CSCWD57460.2023.10152846 (2023).

Guerraoui, R. & Rouault, S. The hidden vulnerability of distributed learning in byzantium. In Proceedings of the 35th International Conference on Machine Learning (ICML) 80, 3521–3530 (2018).

Cao, X., Fang, M., Liu, J. & Gong, N. Z. Fltrust: Byzantine-robust federated learning via trust bootstrapping. arXiv preprint. arXiv:2012.13995 (2022).

Zhang, X., Sun, W. & Chen, Y. Tackling the Non-IID issue in heterogeneous federated learning by gradient harmonization. IEEE. Signal. Process. Lett. 31, 2595–2599. https://doi.org/10.1109/LSP.2024.3430042 (2024).

Li Yang, Y. et al. Enhanced model poisoning attack and Multi-Strategy defense in federated learning. IEEE Trans. Inf. Forensics Secur. 20, 3877–3892 (2025).

Sameera, K. M. et al. SecDefender: Detecting low-quality models in multidomain federated learning systems. Future Gener. Comput. Syst. 164, 107587 (2025).

Acknowledgements

This research was funded by the Postdoctoral Fellowship Program of CPSF, grant number GZC20240925, the China Postdoctoral Science Foundation, grant number 2024M751855, the Shandong Postdoctora1 Science Foundation, grant number SDCXRS-202400018, the Qingdao Postdoctoral Project, project number QDBSH20240102189.

Author information

Authors and Affiliations

Contributions

R.Z.: Methodology, Writing – original draft; Y.Z.: Software, Investigation, Data curation, Writing – review & editing; B.J.: Visualization, Formal analysis; W.L.: Writing – review & editing, Supervision.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, R., Zhang, Y., Zhao, Y. et al. FedCVG: a two-stage robust federated learning optimization algorithm. Sci Rep 15, 18357 (2025). https://doi.org/10.1038/s41598-025-02722-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-02722-4