Abstract

Knee-related disorders represent a major global health concern and are a leading cause of pain and mobility impairment, particularly in older adults. In clinical medicine, the precise identification and classification of knee joint diseases are essential for early diagnosis and effective treatment. This study presents a novel approach for identifying infrapatellar fat pad (IFP) lesions using the K-Nearest Neighbor (KNN) algorithm in combination with multimodal Magnetic Resonance Imaging (MRI) techniques, specifically mDixon-Quant (mDQ) and T2 mapping (T2m). These imaging methods provide quantitative parameters such as fat fraction (FF), T2*, and T2 values. A set of derived features was constructed through feature engineering to better capture variations within the IFP. These features were used to train the KNN model for classifying knee joint conditions. The proposed method achieved classification accuracies of 94.736% and 92.857% on the training and testing datasets, respectively, outperforming the CNN-Class8 benchmark. This technique holds substantial clinical potential for the early detection of knee joint pathologies, monitoring disease progression, and evaluating post-surgical outcomes.


Introduction

Knee joint diseases are a prevalent global health issue that severely affects the quality of life of thousands of people1,2,3. According to the World Health Organization, these diseases cause impaired mobility in the elderly4,5. Among them, infrapatellar fat pad (IFP) lesions stand out because they are complex to diagnose and treat. These lesions typically result from direct impact or prolonged repetitive stress and are common in athletes and manual laborers. Given the anatomical and functional characteristics of the IFP, such injuries can cause knee pain and limited function and, in severe cases, may require surgical intervention6. Consequently, developing an early and accurate diagnostic method for IFP lesions holds significant clinical and societal value.

Magnetic Resonance Imaging (MRI) is the gold standard for diagnosing knee joint diseases, especially soft tissue injuries like IFP lesions. However, traditional MRI techniques still have limitations in some cases, such as insufficient resolution and sensitivity to early pathological changes7,8. Additionally, the high cost and complexity of MRI operations restrict its widespread use in low-resource settings9. In diagnosing IFP lesions, accurately identifying the extent and type of damaged tissue is crucial for devising treatment plans10, but existing technologies often fail to provide sufficient information, thus impacting treatment outcomes and patient recovery.

Conventional machine learning methods, such as K-nearest neighbors (KNN) and support vector machines (SVM), show notable limitations in feature representation for knee joint imaging. These methods rely heavily on hand-crafted features, making them insufficient to capture the deep pathological associations in multimodal MRI data (e.g., mDixon-Quant and T2 mapping)11. Additionally, under small sample conditions (n < 5,000), their generalization performance is often compromised by data heterogeneity (Cohen’s d > 0.8), leading to poor diagnostic stability in clinical settings12,13.

To address these issues, recent studies have introduced artificial intelligence (AI) techniques into medical image analysis, particularly in automated segmentation tasks for the knee joint. Local Correlation Models (LCM) and Convolutional Neural Networks (CNN) are among the most widely used approaches14. LCMs model local pixel-to-neighborhood relationships and have been applied in cartilage segmentation15. However, their performance is limited when dealing with irregular lesions such as fractures or degenerative changes16.

CNNs have shown strong performance in segmenting and classifying CT, MRI, and X-ray images of the knee. However, they require large, well-annotated datasets and are prone to overfitting or instability when samples are scarce, in which case they can perform worse than traditional models17.

Considering the trade-offs in data demands, model complexity, and generalizability, this study adopts the KNN algorithm for classification due to its interpretability and robustness. KNN is an instance-based method that predicts class labels by evaluating the distance between a test sample and its nearest neighbors. With a simple structure and efficient computation, KNN has been widely applied in medical image classification tasks18,19,20.

This study integrated a novel multimodal MRI protocol combining mDixon-Quant and T2 mapping, which capture fat distribution and cartilage biochemical properties, respectively. Feature engineering was employed to construct a representative feature set from these complex imaging modalities, enabling a more comprehensive assessment of IFP pathology. This approach improved classification accuracy and provided data-driven insights into the underlying mechanisms of IFP lesions. Furthermore, the KNN model was optimized for better computational efficiency, supporting rapid diagnostic feedback in large datasets. The method is highly suitable for early clinical intervention and individualized treatment planning.

The primary objective of this research was to develop a method that integrates multimodal MRI with the KNN algorithm to enhance the accuracy and early identification of IFP lesions in the knee joint. Conventional diagnosis primarily depends on the manual interpretation of MRI, which is limited by image complexity and subjective variability. The proposed approach combines MRI data from mDixon-Quant and T2 mapping with feature engineering to generate new imaging parameters that improve lesion classification. The method achieved classification accuracies of 94.736% and 92.857% on the training and testing sets, respectively, surpassing the performance of the CNN-Class8 model. These findings support its potential for automated lesion recognition and individualized treatment planning. The approach is also adaptable to other joint soft tissue assessments, offering a promising direction for medical image analysis.

Materials and methods

Study subjects

From January to December 2023, patients with knee joint degeneration or knee joint trauma and healthy volunteers were recruited at the Affiliated Hospital of Guizhou Medical University. All participants joined the study voluntarily, had no contraindications to MRI, and signed informed consent forms. Individuals not meeting the inclusion criteria or having MRI contraindications were excluded. This study was approved by the Clinical Ethics Committee of the Affiliated Hospital of Guizhou Medical University (No. 2024-004) and was performed in accordance with the Declaration of Helsinki. Written informed consent was obtained from all participants. All methods were performed in accordance with the relevant guidelines and regulations.

Inclusion Criteria for the Knee Joint Degeneration Group: (1) Clinical Presentation: Knee pain or restricted mobility. (2) Diagnostic Basis: Clinical signs of osteoarthritis, such as knee joint tenderness, joint deformity, crepitus, or muscle atrophy, identified by two physicians with over five years of clinical experience. (3) Radiological Standards: Osteoarthritis confirmed on MRI by two radiologists with intermediate or higher professional titles. (4) Age Criteria: Participants aged between 21 and 70 years. (5) Medical History Requirements: No clear history of trauma within the last three years; no prior knee surgery; no severe diseases affecting the knee joint, such as septic arthritis or osteomyelitis. (6) No MRI Contraindications: No metallic foreign bodies in the body and no early pregnancy.

Inclusion Criteria for the Knee Joint Trauma Group: (1) Medical History Requirements: A clear history of trauma accompanied by pain in the affected knee joint and swelling of the surrounding soft tissue. (2) Radiological Diagnosis: Bone contusion with a clearly defined lesion, diagnosed by two radiologists with over five years of experience in musculoskeletal imaging. (3) Examination Timeframe: The time between injury and completion of the MRI examination did not exceed three months, with no surgical treatment performed during this period. (4) Age Criteria: Participants aged between 21 and 70 years. (5) Medical History Requirements: No prior knee joint surgery; no severe diseases such as septic arthritis or osteomyelitis. (6) No MRI Contraindications: No metallic foreign bodies in the body and no early pregnancy.

Inclusion Criteria for the Normal Control Group: (1) Health Standards: No subjective symptoms of knee pain, swelling, or restricted mobility; normal physical examination results. (2) Imaging Examination: X-ray, CT, or MRI results, as interpreted by two radiologists with intermediate or higher professional titles, confirm no significant abnormalities. (3) Medical History Requirements: No clear history of trauma within the past three years; no prior knee surgery; no severe diseases affecting knee joint function. (4) Age Criteria: Participants aged between 19 and 24 years. (5) No MRI Contraindications: No metallic foreign bodies in the body and no early pregnancy.

Exclusion Criteria for Study Participants: (1) Individuals not meeting the inclusion criteria. (2) Presence of MRI contraindications: metallic foreign bodies, early pregnancy, severe claustrophobia, or epilepsy. (3) Severe diseases affecting knee joint function, such as septic arthritis, osteomyelitis, lower limb soft tissue infection, gout, malignancy, or autoimmune diseases. (4) Participants who underwent surgical treatment during the study period. (5) Individuals engaged in long-term high-intensity weight-bearing or physical training.

Sample composition

To ensure diversity in the sample data, we selected 109 volunteer patients of varying ages, genders, and regions, as detailed in Figure S1. All participants voluntarily joined the study and provided informed consent. Before scanning, subjects were asked to lie supine to ensure that the apex of the patella was centered in the scan field. The subjects were further divided into three categories: (1) 37 cases of knee joint degeneration, aged 39–75 years (26 females and 11 males), with an average age of 60.5 years; (2) 38 cases of knee joint trauma, aged 15–64 years (16 females and 22 males), with an average age of 34.7 years; and (3) 34 normal cases, aged 16–34 years (22 females and 12 males), with an average age of 23.4 years. The multimodal MRI data from these patients were used as the raw sample data for training and testing the KNN algorithm-based method for identifying IFP injuries in the knee joint.

Dataset composition and statistical information

The dataset used in this study was sourced from FracAtlas and Roboflow Universe, encompassing three imaging modalities: X-ray, CT, and MRI. All images were collected from clinical cases across hospitals and public medical imaging databases. After rigorous quality control, low-quality or incomplete images were excluded to ensure consistency and reliability for analysis. In total, the dataset included 3,500 knee joint images: 51.4% X-ray (1,800 images), 30.0% CT (1,050 images), and 18.6% MRI (650 images). The patient cohort consisted of 1,960 males (56.0%) and 1,540 females (44.0%), with an average age of 52.3 ± 12.8 years (range: 18–80). Based on injury severity observed in the images, 34.3% (1,200 cases) were normal, 27.1% (950) showed mild injury, 22.9% (800) had moderate injury, and 15.7% (550) had severe injury. Image sources included public hospitals (65.7%), private hospitals (22.9%), and open-access databases (11.4%), ensuring diversity and representativeness to support model generalizability.
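The percentages above follow directly from the stated image counts. The short check below recomputes each breakdown from those counts; it only verifies the arithmetic and assumes nothing about the underlying images.

```python
# Counts reported for the 3,500-image dataset (taken from the text above).
modality = {"X-ray": 1800, "CT": 1050, "MRI": 650}
severity = {"normal": 1200, "mild": 950, "moderate": 800, "severe": 550}
sex = {"male": 1960, "female": 1540}

def shares(counts):
    """Return each category's share of the total, in percent (one decimal)."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}

for label, counts in [("modality", modality), ("severity", severity), ("sex", sex)]:
    assert sum(counts.values()) == 3500  # each breakdown covers every image
    print(label, shares(counts))
```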

All images were preprocessed with resizing, augmentation, and normalization to standardize inputs and enhance model robustness. Images were resized to 224 × 224 pixels to ensure uniform input dimensions. Data augmentation techniques such as random rotation (± 15°), brightness adjustment, and Gaussian noise were applied to simulate varied imaging conditions and increase data diversity. Finally, min-max normalization was performed to reduce intensity variability across imaging devices and harmonize data distribution. This dataset’s inclusion of multiple imaging modalities, a wide age range, and varying injury severities contributes to the model’s ability to accurately classify knee joint conditions and improves its generalizability in clinical applications.
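As an illustration of these preprocessing steps, the sketch below implements resizing to 224 × 224, random rotation within ±15°, brightness scaling, additive Gaussian noise, and min-max normalization using NumPy only. It is a minimal sketch under stated assumptions, not the authors' pipeline: nearest-neighbour interpolation, the ±10% brightness range, and the noise level are hypothetical choices, because the text does not specify them.

```python
import numpy as np

def resize_nearest(img, size=(224, 224)):
    """Nearest-neighbour resize of a 2-D image to `size` (rows, cols)."""
    rows = np.linspace(0, img.shape[0] - 1, size[0]).round().astype(int)
    cols = np.linspace(0, img.shape[1] - 1, size[1]).round().astype(int)
    return img[np.ix_(rows, cols)]

def rotate_nearest(img, angle_deg):
    """Rotate a 2-D image about its centre (nearest neighbour, zero fill)."""
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2, (w - 1) / 2
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, locate its source pixel
    src_x = np.cos(theta) * (xx - cx) + np.sin(theta) * (yy - cy) + cx
    src_y = -np.sin(theta) * (xx - cx) + np.cos(theta) * (yy - cy) + cy
    src_x, src_y = np.round(src_x).astype(int), np.round(src_y).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out

def min_max(img, eps=1e-8):
    """Scale intensities to [0, 1]."""
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo + eps)

def preprocess(img, rng, train=True):
    """Resize, optionally augment (training only), then min-max normalize."""
    img = resize_nearest(img.astype(np.float64))
    if train:
        img = rotate_nearest(img, rng.uniform(-15, 15))  # random rotation within ±15°
        img = img * rng.uniform(0.9, 1.1)                # brightness (assumed ±10%)
        sigma = 0.01 * img.std() + 1e-8                  # noise level (assumed)
        img = img + rng.normal(0.0, sigma, img.shape)    # additive Gaussian noise
    return min_max(img)

rng = np.random.default_rng(0)
raw = rng.random((300, 280))  # placeholder array, not real image data
x = preprocess(raw, rng, train=True)
print(x.shape)  # (224, 224)
```

Applying augmentation only when `train=True` mirrors the split strategy described later, in which augmentation is applied to the training set only.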

MRI equipment and parameters

A Philips Edition 3.0T MRI scanner equipped with a specialized knee joint coil was used to obtain multimodal MRI data for the selected samples. All participants were scanned in a supine position with feet first, and the scan field covered the entire knee joint, centered on the inferior border of the patella. The device employed mDQ and T2m technologies for sagittal knee joint imaging. The corresponding imaging parameters, including TR, TE, NEX, MAT, FOV, and TIC, are detailed in Table 1.

Multimodal MRI image analysis

Following image acquisition, T2-weighted and pseudo-color images were generated using mDQ and T2m techniques. The pseudo-color images displayed the biochemical characteristic changes of the IFP under different knee joint conditions, including the distribution of fat fraction (FF), T2*, and T2 values. To ensure data comparability, all images underwent standardization. The process included image preprocessing steps such as denoising and correction, followed by data normalization to minimize bias during subsequent feature extraction and analysis. The results were presented through three-dimensional visualizations, comparing biochemical differences more precisely across the various groups.

Data preprocessing

Given the relatively small size of the dataset, which includes 3,500 knee joint images with infrapatellar fat pad lesions, multiple preprocessing strategies were applied to reduce the risk of overfitting and to improve model robustness and generalization. During the training phase, various data augmentation techniques were introduced, including random rotation (within 15 degrees), brightness adjustment, image translation, and Gaussian noise addition. These methods were used to simulate variability under different imaging conditions and to enhance the model’s adaptability to diverse inputs.

L2 regularization was used to constrain the magnitude of model weights to prevent over-reliance on local features. Additionally, Dropout with a probability of 0.5 was implemented within the convolutional neural network to randomly deactivate neurons and improve network stability. A five-fold cross-validation approach was employed to evaluate model performance. The dataset was divided into five subsets, with each iteration using four subsets for training and one for validation. The final performance metrics were averaged across all folds. A multi-center validation strategy was conducted to further verify the model’s applicability in clinical practice, ensuring its adaptability across diverse data sources.
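The five-fold procedure can be sketched as follows. The helper names (`k_fold_indices`, `cross_validate`) and the 1-nearest-neighbour stand-in classifier are hypothetical; any model exposing the same `fit_predict(X_train, y_train, X_val)` signature could be plugged in. L2 regularization and dropout apply only to the CNN and are not modelled here.

```python
import numpy as np

def k_fold_indices(n_samples, n_folds=5, seed=0):
    """Shuffle sample indices and split them into n_folds nearly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), n_folds)

def cross_validate(X, y, fit_predict, n_folds=5, seed=0):
    """Mean accuracy over folds; each fold serves once as the validation set.

    fit_predict(X_train, y_train, X_val) must return predicted labels for X_val.
    """
    folds = k_fold_indices(len(y), n_folds, seed)
    scores = []
    for i, val_idx in enumerate(folds):
        # The remaining folds form the training data for this iteration
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        y_pred = fit_predict(X[train_idx], y[train_idx], X[val_idx])
        scores.append(float(np.mean(y_pred == y[val_idx])))
    return float(np.mean(scores)), scores

def one_nn(X_train, y_train, X_val):
    """Hypothetical stand-in classifier: 1-nearest neighbour (Euclidean)."""
    d = np.linalg.norm(X_val[:, None, :] - X_train[None, :, :], axis=2)
    return y_train[np.argmin(d, axis=1)]

# Hypothetical synthetic data: three well-separated 3-D clusters (not study data)
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0, 0.0], [10.0, 10.0, 10.0], [20.0, 0.0, 10.0]])
y = np.repeat([0, 1, 2], 20)
X = centers[y] + rng.normal(size=(60, 3))
mean_acc, fold_scores = cross_validate(X, y, one_nn)
print(mean_acc, fold_scores)
```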

KNN classification model construction and analysis

Differentiation between knee joint conditions was achieved using a classification model based on the KNN algorithm21. First, the Euclidean distance between the sample to be classified and the existing samples was calculated, and the samples were ranked by distance. The K nearest samples were then selected. The class frequencies of these K samples were analyzed, and the predicted class for the new sample was determined based on the most frequent class. Model performance was evaluated using cross-validation. This process involved dividing the dataset into training and testing sets, incrementally adjusting the K value, observing changes in model accuracy, and plotting accuracy curves. The optimal K value was determined by comparing performance across different K values to ensure the model’s generalization capability and classification accuracy.
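A minimal NumPy sketch of these classification steps (distance computation, ranking, selection of the K nearest samples, and a majority vote) might look like this. The function name `knn_predict` is hypothetical, and ties in the vote are resolved in favour of the class encountered first among the nearest neighbours, a detail the text does not specify.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, X_test, k=5):
    """Hypothetical helper: majority-vote KNN with Euclidean distance.

    X_train: (n_train, n_features) normalized features
    y_train: (n_train,) class labels
    X_test:  (n_test, n_features) samples to classify
    """
    predictions = []
    for x in X_test:
        # Euclidean distance from x to every training case
        dist = np.sqrt(((X_train - x) ** 2).sum(axis=1))
        # Rank training cases by distance and keep the k closest
        nearest = np.argsort(dist, kind="stable")[:k]
        # Class frequencies among the k neighbours (closest inserted first)
        votes = Counter(y_train[nearest].tolist())
        # Most frequent class wins; equal counts keep first-encountered order
        predictions.append(votes.most_common(1)[0][0])
    return np.array(predictions)

# Toy usage with two well-separated groups (labels 0 and 1)
X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
y_train = np.array([0, 0, 0, 1, 1, 1])
X_test = np.array([[0.2, 0.3], [5.5, 5.2]])
print(knn_predict(X_train, y_train, X_test, k=3).tolist())  # [0, 1]
```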

Specifically, to effectively establish the relationship between FF, T2*, and T2 values and the conditions of knee joint degeneration, trauma, and normal function, we expanded the feature set of FF, T2*, and T2 values to meet the classification model’s requirements and enhance its robustness. In addition to the existing three feature values, new feature sets were constructed using feature engineering techniques, including:

(a) Composite features, where f1, f2, and f3 represent the FF, T2*, and T2 values, respectively;

(b) Polynomial features;

(c) Logarithmic features;

(d) Combined features.

Using these feature engineering techniques, we constructed 15 new feature clusters, resulting in 18 feature clusters.
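The explicit formulas for the four feature families are not preserved in this version of the text, so the sketch below does not reproduce the authors' 15 derived features. It only illustrates, with hypothetical transformations (ratios and a product as composite features, squares as polynomial features, `log1p` as logarithmic features, and a log-product as a combined feature), how the three measurements f1, f2, and f3 can be expanded into a larger feature matrix.

```python
import numpy as np

def expand_features(ff, t2star, t2):
    """Expand f1 = FF, f2 = T2*, f3 = T2 into a larger feature matrix.

    Every derived feature here is a hypothetical example of its family,
    not one of the authors' formulas.
    """
    f1, f2, f3 = (np.asarray(v, dtype=float) for v in (ff, t2star, t2))
    eps = 1e-8  # guards the ratios against division by zero
    features = {
        "f1": f1, "f2": f2, "f3": f3,                        # original measurements
        "f1/f2": f1 / (f2 + eps), "f3/f2": f3 / (f2 + eps),  # composite: ratios
        "f1*f3": f1 * f3,                                    # composite: product
        "f1^2": f1 ** 2, "f2^2": f2 ** 2, "f3^2": f3 ** 2,   # polynomial
        "log f2": np.log1p(f2), "log f3": np.log1p(f3),      # logarithmic
        "f1*log f3": f1 * np.log1p(f3),                      # combined
    }
    names = list(features)
    return np.column_stack([features[name] for name in names]), names

# Two hypothetical participants (illustrative values only)
X, names = expand_features([0.8, 0.6], [25.0, 30.0], [160.0, 180.0])
print(X.shape, names)
```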

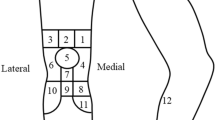

The entire dataset, containing these 18 feature sets, was then divided into a training set and a testing set of 95 and 14 samples, respectively, which were used to train the KNN model and evaluate its generalization capability. Because the input features have different units and numerical ranges, features with larger variance would dominate the distance computation and could substantially distort the final classification accuracy. The input features were therefore first min-max normalized:

\(X_j=\frac{F_j-F_{\min}}{F_{\max}-F_{\min}}\) (5)

In this context, X_j represents the normalized feature, while F_min and F_max denote the minimum and maximum values of the set {F_j}, respectively.
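The min-max normalization can be sketched as a fit/apply pair. Estimating F_min and F_max on the training set only and reusing them for the test set is an assumption made here to avoid information leakage; the text defines them over the set {F_j} without stating which samples it contains. Test values outside the training range then map slightly outside [0, 1].

```python
import numpy as np

def fit_min_max(F_train):
    """Per-feature minimum and maximum, estimated on the training set only."""
    return F_train.min(axis=0), F_train.max(axis=0)

def apply_min_max(F, f_min, f_max, eps=1e-12):
    """X_j = (F_j - F_min) / (F_max - F_min), applied column by column."""
    return (F - f_min) / np.maximum(f_max - f_min, eps)  # eps avoids 0/0 for constant features

# Hypothetical FF, T2*, T2 values for two training cases
F_train = np.array([[0.8, 20.0, 150.0],
                    [0.6, 30.0, 190.0]])
f_min, f_max = fit_min_max(F_train)
print(apply_min_max(F_train, f_min, f_max).tolist())  # [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
```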

The Euclidean distance L between the test patient H = [x1, x2, …, x18]T and the i-th knee joint case H(i) = [X1(i), X2(i), …, X18(i)]T is given by:

\(L\left(H,H^{(i)}\right)=\sqrt{\sum_{j=1}^{18}\left(x_j-X_j^{(i)}\right)^2}\) (6)

The K knee joint cases closest to the test patient H are identified based on the calculated distance L, and the category Y of H is determined from N_K(H) using the majority-vote decision rule:

\(Y=\arg\max_{c_j}\sum_{H^{(k)}\in N_K(H)} I\left(y_k=c_j\right),\quad j=1,2,3\) (7)

Here, argmax returns the category c_j that maximizes the sum, N_K(H) denotes the neighborhood containing the K cases nearest to H, and y_k ∈ {c1, c2, c3} is the category of the k-th neighbor (traumatic c1, degenerative c2, and normal c3). I is the indicator function: I(y_k = c_j) equals 1 when y_k = c_j and 0 otherwise.

Dataset splitting and allocation strategy

A standard dataset split was applied to improve model stability and generalizability while preventing data leakage. The original dataset of 3,500 images was divided into training (2,800 images), validation (350 images), and testing (350 images) sets in an 8:1:1 ratio. The training set was used for model learning, with data augmentation (random rotation within 15 degrees, brightness adjustment, and Gaussian noise) applied to enhance robustness. The validation set was used for hyperparameter tuning and early stopping to monitor performance and prevent overfitting. The testing set was reserved for the final evaluation of unseen data to ensure objective and clinically transferable results. Stratified sampling was employed during splitting to mitigate potential bias from class imbalance. It ensured consistent distributions of imaging modalities (X-ray, CT, MRI) and injury severity levels (normal, mild, moderate, severe) across all subsets, maintaining task complexity and comparability throughout training, validation, and testing.
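A stratified 8:1:1 split along these lines can be sketched as below. Each stratum is assumed to be a combined label such as "MRI|mild" (imaging modality plus severity); the helper name and the per-stratum rounding are hypothetical choices, so subset sizes can differ by a few images from an exact 8:1:1 when stratum sizes are not multiples of 10.

```python
import numpy as np

def stratified_split(labels, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split sample indices into train/validation/test index arrays so that
    every stratum keeps roughly the same proportions in each subset."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    parts = ([], [], [])
    for stratum in np.unique(labels):
        # Shuffle the members of this stratum, then cut it 8:1:1
        idx = rng.permutation(np.flatnonzero(labels == stratum))
        n_train = int(round(ratios[0] * len(idx)))
        n_val = int(round(ratios[1] * len(idx)))
        parts[0].extend(idx[:n_train])
        parts[1].extend(idx[n_train:n_train + n_val])
        parts[2].extend(idx[n_train + n_val:])
    return tuple(np.sort(np.array(p, dtype=int)) for p in parts)

# Hypothetical strata: modality|severity combinations
labels = ["X-ray|normal"] * 100 + ["CT|mild"] * 50 + ["MRI|severe"] * 30
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))  # 144 18 18
```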

Evaluation metrics

To comprehensively evaluate the performance of the KNN model in identifying IFP lesions of the knee, multiple classification metrics were used, including accuracy, precision, recall, F1-score, and the area under the receiver operating characteristic curve (ROC-AUC). These metrics were selected to enhance the evaluation’s scientific rigor and clinical relevance. Accuracy reflects the overall correctness of predictions and serves as a basic performance indicator; however, it may obscure the model’s bias toward minority classes under class imbalance conditions22. Accuracy is defined as:

\(\text{Accuracy}=\frac{1}{n}\sum_{i=1}^{n} I\left(Y^{(i)}=y^{(i)}\right)\) (8)

Here, Y(i) represents the predicted category of the i-th patient, and y(i) is the true category of the i-th knee joint case. The total number of knee joint patients in the testing set T is denoted by n. The indicator function I equals 1 when Y(i) = y(i), and 0 otherwise.

Precision measures the proportion of true positive cases among all samples predicted as positive. In medical image analysis, higher precision indicates a lower false positive rate, reducing the risk of misdiagnosing healthy individuals23. The formula is as follows:

\(\text{Precision}=\frac{TP}{TP+FP}\) (9)

where TP is the number of true positives, and FP is the number of false positives.

Recall indicates the model’s ability to identify all actual positive cases. This metric is particularly important in medical applications, where missed diagnoses are often more critical than false positives. A higher recall indicates better sensitivity in detecting pathological cases. The formula is as follows:

\(\text{Recall}=\frac{TP}{TP+FN}\) (10)

where TP is the number of true positives, and FN is the number of false negatives.

F1-score is the harmonic mean of precision and recall, providing a balanced measure between the two, especially in scenarios with imbalanced class distributions. A higher F1-score indicates that the model both identifies positive cases and keeps false classifications low24. The formula is as follows:

\(\text{F1-score}=2\times\frac{\text{Precision}\times\text{Recall}}{\text{Precision}+\text{Recall}}\) (11)
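The metrics above can all be computed from a confusion matrix, as in the following sketch. Class indices 0, 1, and 2 are assumed to stand for traumatic, degenerative, and normal cases; precision, recall, and F1 are computed per class in a one-vs-rest fashion, and how the authors averaged them across classes is not stated.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i, j] counts samples of true class i predicted as class j."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def classification_metrics(y_true, y_pred, n_classes):
    """Accuracy plus per-class (one-vs-rest) precision, recall and F1."""
    cm = confusion_matrix(y_true, y_pred, n_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class c but truly another class
    fn = cm.sum(axis=1) - tp  # truly class c but predicted as another class
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1

# Hypothetical labels: 0 = traumatic, 1 = degenerative, 2 = normal
acc, prec, rec, f1 = classification_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], 3)
print(acc, prec, rec, f1, f1.mean())
```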

Data processing and statistical analysis

All data processing and statistical analyses were performed in the Python programming language (Python Software Foundation, USA). Differences between groups were assessed using two-sample independent t-tests, with the significance level set at p < 0.05. Significance levels for all statistical results are denoted as * for p < 0.05, ** for p < 0.01, and *** for p < 0.001. Data standardization included mean normalization and variance scaling to ensure comparability across the different MRI parameters. KNN classification accuracy was computed with cross-validation for both the training set and the testing set, and its variation with the K value was plotted as curves.
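For reference, a two-sample Student's t statistic with pooled variance can be computed from group means, standard deviations, and sizes. The sketch below applies it to the FF comparison reported in the Results (0.85 ± 0.23 for normal vs. 0.68 ± 0.13 for trauma), assuming one FF value per participant, so that n = 34 and n = 38 from the sample composition, and assuming the ± values are sample standard deviations. A p-value additionally requires the t distribution, for example via SciPy's `scipy.stats.ttest_ind_from_stats`.

```python
import math

def student_t_from_stats(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample (independent) Student's t statistic with pooled variance."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    return (mean1 - mean2) / se, df

# FF: normal group vs. trauma group (group sizes assumed from the sample composition)
t, df = student_t_from_stats(0.85, 0.23, 34, 0.68, 0.13, 38)
print(round(t, 2), df)  # 3.91 70
```

With these inputs the statistic is about 3.91 on 70 degrees of freedom, above the two-sided 0.001 critical value of roughly 3.4, which is consistent with the reported significance.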

Results

Multimodal MRI analysis reveals characteristic differences in knee joint pathologies

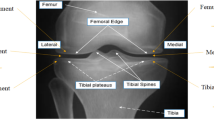

Multimodal MRI analysis revealed clear differences in the biochemical and structural characteristics of the IFP among individuals with normal knees, acute trauma, and degenerative knee joint pathology. T2-weighted MRI provided detailed anatomical visualization of the IFP, with evident morphological changes observed in patients with acute knee joint injury (Fig. 1A-a). However, T2-weighted images alone did not capture the biochemical variation across groups.

Multimodal MRI Images of the Knee Joint and Pseudo-Color Images of FF, T2*, and T2 Values in Different Pathological States. Note: (A) T2-weighted image and pseudo-color images generated using mDQ and T2m techniques for a patient with acute knee joint trauma, including T2-weighted image (a), FF pseudo-color image (b), T2* pseudo-color image (c), and T2 pseudo-color image (d). (B) FF pseudo-color images in different knee joint states: normal (a), acute trauma (b), and degenerative pathology (c). (C) T2* pseudo-color images in different knee joint states: normal (a), acute trauma (b), and degenerative pathology (c). (D) T2 pseudo-color images in different knee joint states: normal (a), acute trauma (b), and degenerative pathology (c). All images were obtained using a Philips Edition 3.0T MRI scanner with a specialized knee joint coil. Statistical differences between groups were analyzed using appropriate statistical methods, with specific significance markers indicated in the figures.

To address this limitation, pseudo-color imaging techniques were applied using mDixon-Quant (mDQ) and T2 mapping (T2m), which generated multimodal images representing FF, T2*, and T2 values (Fig. 1A-b to A-d). In the FF images, normal participants exhibited a uniform fat signal, while participants with acute trauma showed a marked reduction in FF. In the degenerative group, FF distribution was irregular, with pronounced heterogeneity (Fig. 1B).

T2* pseudo-color images showed that the normal group had consistently low signal intensity, whereas both acute trauma and degenerative groups exhibited elevated T2* values. The degenerative group demonstrated the most significant increase (Fig. 1C). In T2 pseudo-color images, the normal group presented with low and evenly distributed T2 values, while the trauma and degenerative groups showed elevated T2 signals with increased spatial heterogeneity, particularly in the degenerative condition (Fig. 1D).

Quantitative comparisons revealed statistically significant differences across all three groups. Compared with the normal group, the trauma group had significantly reduced FF (0.68 ± 0.13 vs. 0.85 ± 0.23, p < 0.001), elevated T2* (21.75 ± 4.61 vs. 30.10 ± 5.53, p < 0.001), and elevated T2 values (154.21 ± 22.53 vs. 183.19 ± 7.97, p < 0.001), as shown in Table 2 and Figure S2A. Similar differences were observed in the degenerative group, with significantly lower FF (0.67 ± 0.11 vs. 0.85 ± 0.23, p < 0.001), higher T2* (22.21 ± 3.68 vs. 30.10 ± 5.53, p < 0.001), and higher T2 values (163.96 ± 13.40 vs. 183.19 ± 7.97, p < 0.001) compared to the normal control group, as summarized in Table 3 and Figure S2B.

These findings demonstrate that the FF, T2*, and T2 parameters derived from multimodal MRI imaging vary significantly between normal and pathological knees and can reflect the biochemical and structural changes associated with acute and degenerative knee joint conditions.

Visualization of 3D feature distribution and KNN classification results for knee joint pathologies

Following the analysis of multimodal MRI data, the three-dimensional distribution of FF, T2*, and T2 values revealed distinct biochemical patterns of the infrapatellar fat pad (IFP) under different pathological conditions (Fig. 2A). The normal group (pink) was predominantly distributed in the region characterized by high FF, low T2*, and low T2 values. In contrast, the acute trauma group (red) clustered in the low FF, high T2*, and high T2 region. The degenerative group (blue) was positioned between the other two groups but still exhibited relatively elevated T2 values. These spatial patterns suggest significant differences in the biochemical composition of the IFP across pathological states.

3D Feature Distribution of Knee Joint Pathologies and Visualization of the KNN Classification Process. Note: (A) 3D feature distribution of FF, T2*, and T2 values in different pathological states of the knee joint. Each point represents a sample from different pathology groups: red for the acute trauma group, blue for the degenerative group, and pink for the normal group. (B) Schematic representation of the KNN algorithm for knee joint pathology classification. Xu represents the sample to be classified, and W1, W2, and W3 represent the cluster regions for acute trauma, degenerative pathology, and normal samples, respectively. All data were acquired through multimodal MRI, standardized, and analyzed using 3D visualization and classification techniques.

The discriminative capacity of these parameters was further supported by classification results using the KNN algorithm (Fig. 2B). As illustrated, samples with similar biochemical profiles clustered near their respective group centers. In the representative example shown in Fig. 2B, the sample point Xu was assigned to the acute trauma category (W1) because most of its nearest neighbors in the 3D feature space belong to that category. This result demonstrates the ability of FF, T2*, and T2 values to distinguish between normal, traumatic, and degenerative changes in the knee joint.

Classification performance evaluation and feature optimization of the KNN model in knee joint pathology identification

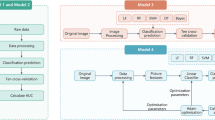

After completing the three-dimensional feature distribution analysis based on multimodal MRI data, the classification performance of the KNN algorithm was further evaluated. The overall classification workflow is illustrated in Fig. 3A. Cross-validation was performed under different K values to investigate the influence of parameter selection. As shown in Fig. 3B, the classification accuracy on the training set gradually decreased as the K value increased, while the testing set accuracy remained relatively stable, indicating that the K value plays a critical role in balancing model fitting and generalization.
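The K sweep behind a plot like Fig. 3B can be sketched as follows. It evaluates a majority-vote KNN on the training set itself and on a held-out test set for each candidate K; the helper names and the synthetic data are hypothetical. With K = 1 each training sample is its own nearest neighbour, so training accuracy starts at 1.0 (barring duplicate points) and typically falls as K grows, which matches the trend described above.

```python
import numpy as np

def knn_accuracy(X_train, y_train, X_eval, y_eval, k):
    """Accuracy of majority-vote KNN (Euclidean distance) on an evaluation set.
    Labels are assumed to be integers 0..C-1."""
    d = np.linalg.norm(X_eval[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1, kind="stable")[:, :k]  # indices of the k closest
    neighbour_labels = y_train[nearest]                     # shape (n_eval, k)
    n_classes = int(y_train.max()) + 1
    votes = np.stack([(neighbour_labels == c).sum(axis=1)
                      for c in range(n_classes)], axis=1)
    y_pred = votes.argmax(axis=1)                           # ties go to the lowest label
    return float(np.mean(y_pred == y_eval))

def sweep_k(X_train, y_train, X_test, y_test, k_values):
    """Return (k, training accuracy, test accuracy) for each candidate k."""
    return [(k,
             knn_accuracy(X_train, y_train, X_train, y_train, k),
             knn_accuracy(X_train, y_train, X_test, y_test, k))
            for k in k_values]

# Hypothetical synthetic data: three overlapping 3-D clusters (not study data)
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0, 0.0], [2.0, 2.0, 2.0], [4.0, 0.0, 2.0]])
y_tr = np.repeat([0, 1, 2], 30)
X_tr = centers[y_tr] + rng.normal(size=(90, 3))
y_te = np.repeat([0, 1, 2], 5)
X_te = centers[y_te] + rng.normal(size=(15, 3))
for k, train_acc, test_acc in sweep_k(X_tr, y_tr, X_te, y_te, range(1, 16, 2)):
    print(f"K={k:2d}  train={train_acc:.3f}  test={test_acc:.3f}")
```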

KNN Algorithm-Based Knee Joint Pathology Classification Process and Performance Evaluation Across Different K Values. Note: (A) Flowchart of the KNN algorithm classification process, illustrating the complete sequence from calculating Euclidean distances to determining the final classification results. (B) Classification accuracy curves for the training set and testing set at different K values, with the blue line representing the training set and the pink line representing the testing set. (C) Comparison of classification predictions in the training set (a) and testing set (b), where the x-axis represents the knee joint patient samples and the y-axis represents the predicted categories. Red crosses denote the original categories, and blue circles denote the predicted categories. All data were standardized, and the classification results were based on feature extraction and analysis from multimodal MRI data.

Figure 3C presents the classification results on both the training and testing sets. In the training set (Fig. 3C-a), predicted labels were highly consistent with ground truth, especially among earlier samples. Although the sample size was limited in the testing set (Fig. 3C-b), the model maintained good classification performance, with only a few misclassifications observed, suggesting strong adaptability in small-sample scenarios.

Further evaluation of the model’s overall performance is shown in Fig. 4. The confusion matrix (Fig. 4A) demonstrates that the KNN model achieved high classification accuracy across 356 test samples. Misclassifications were primarily concentrated between the traumatic and degenerative groups, accounting for 62.8% of total errors (27/43). These cases typically involved patients with injury onset within 48–72 h or presenting with mild bone marrow edema that did not meet the imaging criteria for acute trauma. Figure 4B shows the multi-class ROC curves, with class-wise AUC values of 0.96 for the normal group, 0.92 for the traumatic group, and 0.87 for the degenerative group. The overall micro-average AUC reached 0.951, indicating the strong discriminative ability of the model. The proposed method was validated by comparing the KNN model with the CNN-Class8 model under the same classification task. On the test set, the KNN model outperformed CNN in classification accuracy (92.86% vs. 87.53%), recall (92.51% vs. 86.21%), F1-score (92.12% vs. 85.94%), and AUC (0.951 vs. 0.912), demonstrating superior classification capacity and model stability. The detailed comparison metrics are summarized in Table 4.
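Per-class and micro-average AUC values of this kind can be computed without extra libraries using the Mann-Whitney formulation of ROC-AUC, as in the sketch below. How the authors derived continuous scores from KNN is not stated; here the score for class c is assumed to be something like the fraction of the K neighbours voting for c, and the helper names are hypothetical.

```python
import numpy as np

def binary_auc(y_true, scores):
    """ROC-AUC via the Mann-Whitney U statistic (ties count as 0.5)."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    # Compare every positive score with every negative score
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))

def multiclass_auc(y_true, proba):
    """Per-class one-vs-rest AUCs and the micro-average AUC.

    proba[i, c] is the score of sample i for class c."""
    y_true = np.asarray(y_true)
    proba = np.asarray(proba, dtype=float)
    onehot = np.eye(proba.shape[1], dtype=bool)[y_true]
    per_class = [binary_auc(onehot[:, c], proba[:, c]) for c in range(proba.shape[1])]
    # Micro-average: pool every (sample, class) pair into one binary problem
    micro = binary_auc(onehot.ravel(), proba.ravel())
    return per_class, micro

# Hypothetical vote fractions for four test cases (K = 5 would give multiples of 0.2)
y_true = [0, 1, 2, 0]
proba = [[0.8, 0.1, 0.1], [0.2, 0.6, 0.2], [0.2, 0.2, 0.6], [0.6, 0.4, 0.0]]
per_class, micro = multiclass_auc(y_true, proba)
print(per_class, micro)
```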

Classification Performance of the KNN Model in Infrapatellar Fat Pad Injury Classification of the Knee Joint. Note: (A) Confusion matrix of the model on the test set, showing classification accuracy and error distribution among different categories (traumatic, degenerative, and normal). (B) Multiclass ROC curves of the KNN model along with the corresponding AUC values.

Additionally, to evaluate the impact of feature engineering on model performance, four input feature strategies were designed and compared on the test set. As shown in Table 5, the baseline model without feature engineering achieved an accuracy of 85.42% and an AUC of 0.892. Feature normalization and selection improved accuracy to 90.28% and AUC to 0.938. Finally, integrating multimodal features (mDixon-Quant and T2 mapping) through feature fusion led to the best performance, with an accuracy of 92.86% and an AUC of 0.951, highlighting the critical role of feature optimization in improving classification accuracy and generalization ability.

Discussion

IFP lesions are common injuries that affect the health and mobility of both athletes and the general population. With the rapid advancement of medical imaging technologies in recent years, multimodal MRI has become an important tool for diagnosing such injuries25. However, conventional image interpretation relies on radiologists’ experience and subjective judgment, which can lead to variability in diagnostic outcomes26. To address this issue, this study proposes a novel method that combines multimodal MRI with the KNN algorithm to identify knee joint injuries automatically and accurately. Quantitative techniques such as mDQ and T2m provide more detailed physiological and pathological information, including FF, T2*, and T2 values, thereby improving diagnostic accuracy and efficiency.

This study employed multimodal MRI techniques, including mDixon-Quant and T2 mapping, to comprehensively assess pathological changes in the IFP. Compared with conventional single-sequence imaging approaches27, integrating multiple imaging modalities provided richer structural and functional information, improving lesion characterization28 and supporting more informative feature extraction for downstream classification. Based on these data, a KNN classification model was developed and optimized to identify knee joint pathologies. KNN, known for its simplicity and interpretability, has been widely used in medical image classification29. In this study, its performance was enhanced through normalization, feature selection, weighting strategies, data augmentation, and multimodal feature fusion. The optimized KNN model achieved classification accuracies of 94.736% on the training set and 92.857% on the test set, outperforming the CNN-Class8 model.
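One common way to add weighting to KNN is to scale each feature’s contribution to the distance and to weight each neighbor’s vote by its inverse distance. The study lists weighting strategies without specifying their form, so the sketch below is a hypothetical example of this pattern, not the authors’ implementation; `feature_weights`, `k`, and `eps` are illustrative parameters.

```python
import math
from collections import defaultdict


def weighted_knn_predict(train_X, train_y, x, k=5, feature_weights=None, eps=1e-9):
    """Classify x by inverse-distance-weighted voting among its k nearest training samples.

    feature_weights scales each feature's squared difference in the Euclidean distance
    (a hypothetical weighting scheme chosen for illustration).
    """
    w = feature_weights or [1.0] * len(x)
    dists = []
    for xi, yi in zip(train_X, train_y):
        dist = math.sqrt(sum(wj * (a - b) ** 2 for wj, a, b in zip(w, xi, x)))
        dists.append((dist, yi))
    dists.sort(key=lambda t: t[0])
    votes = defaultdict(float)
    for dist, yi in dists[:k]:
        votes[yi] += 1.0 / (dist + eps)  # closer neighbors contribute more
    return max(votes, key=votes.get)


# Toy, already-normalized features (hypothetical).
train_X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = ["normal"] * 3 + ["degenerative"] * 3
print(weighted_knn_predict(train_X, train_y, [0.2, 0.2], k=3))
```

There is no fitting step: the "model" is the stored training set, and all computation happens at query time.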

Although KNN is a traditional algorithm, the innovation of this study lies in integrating it with high-dimensional multimodal MRI features and advanced preprocessing strategies. To our knowledge, this is the first application of KNN to the classification of IFP-related knee pathologies using mDixon-Quant and T2 mapping. These enhancements substantially improved generalization and mitigated the computational inefficiency typical of standard KNN in high-dimensional settings. Compared with CNNs, KNN showed better adaptability in small-sample scenarios: CNNs often require large amounts of annotated data and are prone to overfitting when data are limited or noisy30. In contrast, KNN requires no iterative model training, because it classifies new cases by comparing them directly with stored training samples, and it can achieve high classification performance with optimized input features at lower computational cost31. This makes it well suited to clinical applications in which interpretability and simple deployment are important.
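Because KNN has essentially no training step, the main choice in a small-sample setting is the number of neighbors k, which can be tuned with leave-one-out cross-validation (LOOCV) so that no scarce data are set aside for a separate validation split. The sketch below assumes plain majority voting and Euclidean distance; the candidate k values and toy data are hypothetical, and the study does not state how k was chosen.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k training samples nearest to x (Euclidean distance)."""
    nearest = sorted(zip(train_X, train_y), key=lambda p: math.dist(p[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def loocv_accuracy(X, y, k):
    """Hold out each sample in turn and predict it from all remaining samples."""
    hits = 0
    for i in range(len(X)):
        tr_X = X[:i] + X[i + 1:]
        tr_y = y[:i] + y[i + 1:]
        hits += knn_predict(tr_X, tr_y, X[i], k) == y[i]
    return hits / len(X)


def choose_k(X, y, candidates=(1, 3, 5, 7)):
    """Return the candidate k with the best LOOCV accuracy (first one wins ties), plus all scores."""
    scores = {k: loocv_accuracy(X, y, k) for k in candidates if k < len(X)}
    return max(scores, key=scores.get), scores


# Hypothetical toy data with two well-separated groups.
X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y = ["normal"] * 3 + ["degenerative"] * 3
best_k, scores = choose_k(X, y, candidates=(1, 3, 5))
print(best_k, scores)
```

In a full pipeline, normalization and feature selection should be refit inside each fold so that the held-out sample does not influence preprocessing.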

In handling high-dimensional medical image data, KNN also showed advantages over conventional models such as support vector machines (SVM) and random forests (RF). SVM relies on kernel-based nonlinear mapping, which becomes computationally intensive as the feature dimension increases, especially in multimodal MRI datasets exceeding 10⁴ features32. RF, although robust in many tasks, tends to overfit under high-dimensional, small-sample conditions33. In preliminary experiments, RF performance declined markedly (the F1-score decreased by 12.3%) when the feature dimension exceeded 5000. In contrast, KNN’s locality-based classification is well suited to identifying fine-grained patterns such as tissue boundaries and textural changes11. Feature clustering analysis in this study revealed strong local aggregation of MRI-derived features (e.g., ADC and T2WI texture), with a silhouette coefficient of 0.71, further supporting the use of KNN.
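The silhouette coefficient cited above compares, for each sample, its mean distance a to the other members of its own group with its mean distance b to the nearest other group, s = (b − a) / max(a, b), and averages s over all samples; values near 1 indicate tight, well-separated groups. A minimal sketch follows, assuming Euclidean distance and using class labels as the groups (the text does not say how the clusters were defined); the toy data are hypothetical.

```python
import math


def silhouette(X, labels):
    """Mean silhouette coefficient over all samples (Euclidean distance)."""
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two groups")
    scores = []
    for i, (xi, li) in enumerate(zip(X, labels)):
        same = [math.dist(xi, xj) for j, (xj, lj) in enumerate(zip(X, labels)) if lj == li and j != i]
        if not same:  # singleton group: s(i) = 0 by convention
            scores.append(0.0)
            continue
        a = sum(same) / len(same)  # mean intra-group distance
        b = min(  # mean distance to the nearest other group
            sum(math.dist(xi, xj) for xj, lj in zip(X, labels) if lj == c)
            / sum(1 for lj in labels if lj == c)
            for c in clusters if c != li
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


# Two tight, well-separated one-dimensional groups (hypothetical).
X = [[0.0], [1.0], [10.0], [11.0]]
labels = [0, 0, 1, 1]
s = silhouette(X, labels)
print(round(s, 4))
```

A high silhouette value means that a sample’s nearest neighbors tend to share its label, which is the situation in which a local, distance-based classifier such as KNN is expected to work well.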

The KNN model combined with multimodal MRI feature optimization showed excellent classification performance, good generalization to the test set, and low computational complexity. Without requiring extensive model training, it enables efficient and accurate classification in high-dimensional, small-sample imaging settings, offering a promising approach to the intelligent diagnosis of knee joint pathologies. Despite these promising results, some limitations should be acknowledged. First, because KNN compares each new case with the stored training samples, its computational load grows with dataset size, which may limit inference efficiency in large-scale applications. Future studies may explore acceleration strategies such as KD-trees or approximate nearest neighbor (ANN) search. Second, this study focused exclusively on IFP-related pathologies; the model’s generalizability to other joint or soft-tissue conditions remains to be validated.
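As an illustration of the KD-tree strategy mentioned above, the sketch below builds a minimal k-d tree and answers exact k-nearest-neighbor queries, pruning any subtree whose splitting plane lies farther away than the current k-th best distance. It is a simplified teaching example (the class and method names are hypothetical), not a substitute for a tested library implementation.

```python
import heapq
import math


class KDTree:
    """Minimal k-d tree for exact k-nearest-neighbor queries (Euclidean distance)."""

    def __init__(self, points):
        self.points = [tuple(p) for p in points]
        self.root = self._build(list(range(len(self.points))), depth=0)

    def _build(self, idx, depth):
        if not idx:
            return None
        axis = depth % len(self.points[0])  # cycle through the coordinate axes
        idx.sort(key=lambda i: self.points[i][axis])
        mid = len(idx) // 2  # median point becomes the splitting node
        return (idx[mid], axis,
                self._build(idx[:mid], depth + 1),
                self._build(idx[mid + 1:], depth + 1))

    def query(self, x, k=1):
        """Return [(distance, index), ...] for the k nearest points, closest first."""
        heap = []  # max-heap via negated distances; holds at most k entries

        def visit(node):
            if node is None:
                return
            i, axis, left, right = node
            d = math.dist(self.points[i], x)
            if len(heap) < k:
                heapq.heappush(heap, (-d, i))
            elif d < -heap[0][0]:
                heapq.heapreplace(heap, (-d, i))  # drop the current worst of the k best
            diff = x[axis] - self.points[i][axis]
            near, far = (left, right) if diff < 0 else (right, left)
            visit(near)
            # The far side can only hold a closer point if the splitting plane is nearer
            # than the current k-th best distance.
            if len(heap) < k or abs(diff) < -heap[0][0]:
                visit(far)

        visit(self.root)
        return sorted((-nd, i) for nd, i in heap)


pts = [(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)]
tree = KDTree(pts)
print(tree.query((9, 2), k=2))
```

Pruning is most effective in low dimensions; with many features, a k-d tree tends to visit most nodes and approaches brute-force cost, which is one reason approximate nearest neighbor methods are also worth evaluating for high-dimensional MRI feature sets.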

Future research may further expand the sample size and incorporate multi-center data to enhance the robustness and generalizability of the model. A systematic comparison between KNN and mainstream machine learning and deep learning algorithms, such as support vector machines, random forests, and convolutional neural networks, should be conducted to clarify their respective strengths and limitations. By introducing transfer learning and automatic feature extraction, end-to-end deep learning frameworks can be developed to improve feature representation and classification accuracy. Additionally, integrating the interpretability of KNN with the expressive power of deep models through hybrid learning strategies may further boost model performance. To facilitate clinical translation, efforts should also focus on optimizing user workflows, reducing computational costs, and improving the efficiency of large-scale MRI data processing and interpretation, thereby accelerating the deployment of intelligent recognition methods in real-world diagnostics.

Conclusion

This study developed a method for identifying IFP lesions in the knee joint using the KNN algorithm combined with multimodal MRI. With feature engineering optimization, the method accurately classified knee joint conditions as degenerative, traumatic, or normal. In the experiments, it achieved accuracies of 94.736% on the training set and 92.857% on the test set, outperforming the CNN-Class8 model (Fig. 5). These findings suggest that integrating advanced image processing techniques with machine learning models can substantially improve the diagnostic accuracy for knee joint diseases. To strengthen the scientific rigor and clinical applicability of this method, future studies should use larger datasets, compare alternative machine learning models, and incorporate deep learning techniques to develop a more robust and generalizable diagnostic system.

Data availability

The datasets used or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- FF: Fat Fraction
- IFP: Infrapatellar Fat Pad
- KNN: K-Nearest Neighbor
- mDQ: mDixon-Quant
- MRI: Magnetic Resonance Imaging
- T2m: T2 Mapping

References

1. Dieter, V., Janssen, P. & Krauss, I. Efficacy of the mHealth-Based Exercise Intervention re.flex for Patients With Knee Osteoarthritis: Pilot Randomized Controlled Trial. JMIR mHealth uHealth 12, e54356 (2024).

2. Vitaloni, M. et al. Global management of patients with knee osteoarthritis begins with quality of life assessment: a systematic review. BMC Musculoskelet. Disord. 20 (2019).

3. Zhang, Y. et al. Associations of Dietary Macroelements with Knee Joint Structures, Symptoms, Quality of Life, and Comorbid Conditions in People with Symptomatic Knee Osteoarthritis. Nutrients 14, 3576 (2022).

4. Chen, H. et al. The effects of a home-based exercise intervention on elderly patients with knee osteoarthritis: a quasi-experimental study. BMC Musculoskelet. Disord. 20 (2019).

5. Jormand, H., Mohammadi, N., Khani Jeihooni, A. & Afzali Harsini, P. Self-care behaviors in older adults suffering from knee osteoarthritis: application of theory of planned behavior. Front. Public Health 10 (2022).

6. Nemec, A. & Caldwell, N. Knee joint degeneration in 22-year-old collegiate soccer player. Int. J. Exerc. Sci.: Conf. Proc. 2, 139 (2024).

7. Nacey, N. C., Geeslin, M. G., Miller, G. W. & Pierce, J. L. Magnetic resonance imaging of the knee: an overview and update of conventional and state of the art imaging. J. Magn. Reson. Imaging 45, 1257–1275 (2017).

8. Wang, X., Oo, W. M. & Linklater, J. M. What is the role of imaging in the clinical diagnosis of osteoarthritis and disease management? Rheumatology 57, iv51–iv60 (2018).

9. Jerban, S., Chang, E. Y. & Du, J. Magnetic resonance imaging (MRI) studies of knee joint under mechanical loading: review. Magn. Reson. Imaging 65, 27–36 (2020).

10. Li, J. et al. MRI-based texture analysis of infrapatellar fat pad to predict knee osteoarthritis incidence. Radiology 304, 611–621 (2022).

11. Litjens, G. et al. A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88 (2017).

12. Che, C. et al. Improved deep learning-based macromolecules structure classification from electron cryo-tomograms. Mach. Vis. Appl. 29, 1227–1236 (2018).

13. Chartrand, G. et al. Deep learning: A primer for radiologists. RadioGraphics 37, 2113–2131 (2017).

14. Chen, L. C. et al. Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40, 834–848 (2018).

15. Zhou, Z., Zhao, G., Kijowski, R. & Liu, F. Deep convolutional neural network for segmentation of knee joint anatomy. Magn. Reson. Med. 80, 2759–2770 (2018).

16. Kimura, H. et al. Risk factors for cage migration following lateral lumbar interbody fusion combined with posterior instrumentation: A multicenter retrospective study of 983 cases. Spine https://doi.org/10.1097/brs.0000000000004602 (2023).

17. Guida, C., Zhang, M. & Shan, J. Knee Osteoarthritis Classification Using 3D CNN and MRI. Appl. Sci. 11, 5196 (2021).

18. Ratna, H. V. K. et al. Machine learning and deep neural network-based learning in osteoarthritis knee. World J. Methodol. 13, 419–425 (2023).

19. Kinger, S. Deep learning for automatic knee osteoarthritis severity grading and classification. Indian J. Orthop. 58, 1458–1473 (2024).

20. Wu, W. et al. Self-Supervised multimodal fusion network for knee osteoarthritis severity grading. 2023 Int. Conf. Digit. Image Computing: Techniques Appl. (DICTA) 8, 57–64 (2023).

21. Xiao, Q., Zhong, X. & Zhong, C. Application research of KNN algorithm based on clustering in big data talent demand information classification. Int. J. Pattern Recognit. Artif. Intell. 34, 2050015 (2019).

22. Ozdemir, C. & Dogan, Y. Advancing early diagnosis of Alzheimer’s disease with next-generation deep learning methods. Biomed. Signal Process. Control 96, 106614 (2024).

23. Ozdemir, C. & Dogan, Y. Advancing brain tumor classification through MTAP model: an innovative approach in medical diagnostics. Med. Biol. Eng. Comput. 62, 2165–2176 (2024).

24. Ozdemir, C. Adapting transfer learning models to dataset through pruning and Avg-TopK pooling. Neural Comput. Appl. 36, 6257–6270 (2024).

25. Larbi, A. et al. Hoffa’s disease: A report on 5 cases. Diagn. Interv. Imaging 95, 1079–1084 (2014).

26. Howell, G. Patellar fat pad abnormalities. MRI Web Clinic (September 2008). https://radsource.us/patellar-fat-pad-abnormalities/

27. Ye, Q. et al. Quantitative evaluation of the infrapatellar fat pad in knee osteoarthritis: MRI-based radiomic signature. BMC Musculoskelet. Disord. 24 (2023).

28. Yu, K. et al. Prediction model for knee osteoarthritis using magnetic resonance–based radiomic features from the infrapatellar fat pad: data from the osteoarthritis initiative. Quant. Imaging Med. Surg. 13, 352–369 (2023).

29. Xuan, A. et al. The application of machine learning in early diagnosis of osteoarthritis: a narrative review. Ther. Adv. Musculoskelet. Dis. 15 (2023).

30. Hsieh, Y. M. et al. Convolutional neural networks for automatic virtual metrology. IEEE Rob. Autom. Lett. 6, 5720–5727 (2021).

31. Demir, F., Siddique, K., Alswaitti, M., Demir, K. & Sengur, A. A simple and effective approach based on a multi-level feature selection for automated Parkinson’s disease detection. J. Pers. Med. 12, 55 (2022).

32. Noaman Kadhim, M., Al-Shammary, D. & Sufi, F. A novel voice classification based on Gower distance for Parkinson disease detection. Int. J. Med. Inform. 191, 105583 (2024).

33. Becker, T., Rousseau, A. J., Geubbelmans, M., Burzykowski, T. & Valkenborg, D. Decision trees and random forests. Am. J. Orthod. Dentofacial Orthop. 164, 894–897 (2023).

Funding

This study was supported by the National Natural Science Foundation of China (No. 52265066) and the Guizhou Provincial Department of Education Youth Science and Technology Talent Cultivation Program (Qianjiaohe [2022] 138).

Author information


Contributions

Peng Zhou and Lingling Song conceptualized and designed the study. Zhenyan Liu, Jiang Dai, and Ming Yang were responsible for data collection and preprocessing. He Sui and Zhaoshu Huang contributed to MRI protocol optimization and imaging analysis. Yu Li conducted the algorithm implementation and statistical analysis. Lingling Song supervised the entire project and finalized the manuscript. All authors contributed to manuscript drafting, reviewed the final version, and approved it for submission.


Ethics declarations

Ethics approval and consent to participate

This study was approved by the Clinical Ethics Committee of the Affiliated Hospital of Guizhou Medical University (No. 2024-004). Written informed consent was obtained from the patients included in this study.

Competing interests

The authors declare no competing interests.


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhou, P., Liu, Z., Dai, J. et al. KNN algorithm for accurate identification of IFP lesions in the knee joint: a multimodal MRI study. Sci Rep 15, 18163 (2025). https://doi.org/10.1038/s41598-025-02786-2


DOI: https://doi.org/10.1038/s41598-025-02786-2