Abstract

In order to face the uncertainty and semantic complexity of speech signals in real-time interactive scenes and achieve more efficient and accurate speech recognition results, this study proposes a Dynamic Adaptive Transformer for Real-Time Speech Recognition (DATR-SR) model. The study is based on public datasets such as Aishell-1, HKUST, LibriSpeech, CommonVoice, and China TV series datasets covering various contexts, and extensive experiments and analysis are carried out. The results show that DATR-SR has excellent adaptability and robust performance in different language environments and dynamic scenes. With the increase of data volume, the character error rate decreases from 5.2 to 2.7%, the reasoning delay is always kept within 15ms, and the resource utilization rate reaches more than 75%, showing efficient computing ability. On the two kinds of datasets, the word error rate is as low as 4.3%, and the accuracy rate is over 91%. Especially in complex contexts, the semantic coherence rate is 92.3% and the speech event recall rate is 91.3%. Compared with other cutting-edge models, DATR-SR is significantly improved in diverse speech event recognition and dynamic scene switching response. This study aims to provide an efficient speech recognition solution for technical developers and service providers in real-time interactive fields such as emotional socialization, online education and intelligent customer service to enhance the user experience and help the intelligent development of industrial applications.

Similar content being viewed by others

Introduction

Research background and motivations

With the rapid development of artificial intelligence (AI) technology, speech recognition, as one of the core technologies of human-computer interaction, has been widely used in real-time interactive scenes such as emotional socialization, online education and intelligent customer service1,2. However, the speech signals in these scenes are highly continuous, uncertain and complex context-dependent, and the traditional speech recognition model shows obvious limitations in dynamic scene adaptability, semantic coherence and processing efficiency. Especially in dealing with multi-language, multi-accent and complex context-changing tasks, there are often problems of information loss and semantic deviation3,4,5. At the same time, the demand for real-time processing further aggravates the bottleneck of computing resources, making it a key challenge to build a speech recognition model with high efficiency and robustness6,7. Therefore, the combination of innovative optimization based on Transformer architecture and multi-modal feature fusion technology provides an important technical direction and theoretical basis for solving existing problems.

Research objectives

Aiming at the key challenges of speech recognition in real-time interactive scenes, this study proposes an end-to-end model that can dynamically adapt to complex contexts and efficiently process diverse speech signals. The specific objectives include: First, by optimizing the Transformer architecture, the dynamic coding and multi-scale feature modeling of speech signals are realized, thus enhancing the semantic consistency and context capture ability of the model in complex scenes. Second, semantic optimization generator and context-aware decoding mechanism are introduced to improve the information retention rate and event recognition accuracy in the process of speech-to-text conversion. Finally, a real-time speech recognition system with high adaptability and robustness in multi-language and multi-scene conditions is constructed, which lays the foundation for improving the real-time and interactivity of speech technology.

Literature review

Application and optimization of transformer in End-to-End speech recognition

In recent years, with its powerful feature modeling capabilities, the Transformer architecture has gradually become the mainstream technology in end-to-end automatic speech recognition (E2E-ASR). Shahamiri et al.8 designed the Dysarthric Speech-Transformer (DS-Transformer) model in response to the complexity and scarcity of dysarthric speech. Through the neural freezing strategy and pre-training with healthy speech data, they achieved a performance improvement of up to 23%. Dong et al.9 proposed a soft beam pruning algorithm combined with a prefix module, which achieved a dynamic balance between accuracy and efficiency when optimizing the decoding paths of speech recognition in specific domains. Vitale et al.10 studied acoustic syllable boundary detection through the encoding layer of E2E-ASR, revealing the potential advantages of the Transformer in capturing the rhythmic features of syllables. Rybicka et al.11 effectively dealt with the complexity of multi-speaker recording scenarios through the attractor refinement module and the k-means clustering algorithm. Their performance improvement in real data reached 15%, demonstrating the adaptability of the Transformer in flexibly handling complex speech tasks. Regarding the speech synthesis of rare languages, Lu et al.12 introduced cross-lingual context encoding features and Conformer blocks into the FastSpeech2 model. Combined with the token-average mechanism, they optimized the generation quality in scenarios with scarce data, successfully achieving a significant decrease in the character error rate and providing a breakthrough solution for the speech synthesis of minority languages.

Verification of speech recognition effect of transformer end-to-end model in specific dataset scene

The performance of the Transformer architecture in speech recognition for specific scenarios and datasets has also been widely verified. Tang13 designed the Denoising and Mandarin Recognition Supplement-Transformer (DMRS-Transformer) network, which integrated a denoising module and a Mandarin recognition supplement mechanism. It achieved a reduction in the character error rate of 0.8% and 1.5% on the Aishell-1 and HKUST datasets respectively. Hadwan et al.14 proposed the Acoustic Feature Strategy-Transformer (AFS-Transformer) model. By embedding speaker information in acoustic features and optimizing the processing strategy for silent frames, it effectively improved the model’s adaptability in scenarios with two speakers. Aiming at the bottleneck problems of high parameter quantity and low deployment efficiency, Ben-Letaifa and Rouas (2023) proposed a variable-rate-based pruning algorithm to dynamically optimize the parameter distribution of the feed-forward layer of the Transformer, achieving an optimized balance between performance and resource utilization15. Loubser et al.16 combined Convolutional Neural Network (CNN) with a lightweight Transformer architecture, which significantly reduced the computational cost while maintaining a low Word Error Rate (WER). Based on Squeezeformer, Guo et al.17 designed a multimodal pronunciation error detection model. Experiments on the PSC-Reading Mandarin dataset showed that the proposed model significantly improved the F1 score and diagnostic accuracy, further verifying the practical value of the Transformer architecture in speech error diagnosis. Pondel-Sycz et al.18 conducted a systematic analysis of five Transformer-based models in multilingual datasets (Mozilla Common Voice, LibriSpeech, and VoxPopuli). The results showed that these models demonstrated excellent performance and adaptability in both clean audio and degraded signal scenarios.

Existing research and analysis

In conclusion, the current research on Transformer-based end-to-end speech recognition mainly focuses on three aspects. Firstly, improving the robustness and accuracy of the model for complex speech signals through structural optimization. Secondly, controlling the model scale in scenarios with limited resources to reduce the burden of training and inference. Thirdly, enhancing the perception ability for specific contexts and rare expressions. However, despite the positive progress made in the technical approach of existing achievements, when facing real-time interaction tasks, the existing models generally still have insufficient responses in terms of high dynamics, cross-scenario adaptability, and decoding efficiency. Especially in scenarios such as multilingual alternation, dialect interference, and complex context switching, problems like semantic breaks and recognition lags are likely to occur in the models. At the same time, the architecture with a large number of parameters and the complex decoding paths limit its application in edge devices or resource-sensitive systems19,20,21. Therefore, starting from the dual dimensions of real-time performance and interactivity, this study aims to propose an end-to-end model that can dynamically adapt to context changes and has high computational efficiency. Through structural reconstruction and the optimization of the semantic guidance mechanism, the practicality and performance boundaries of the Transformer in real-time speech recognition scenarios will be improved.

Research model

Method of model design

The proposed Dynamic Adaptive Transformer for Real-Time Speech Recognition (DATR-SR) model is based on E2E-ASR architecture, and the improved transformer is embedded to realize efficient speech signal processing. Its core design includes four key processes: adaptive coding module, multi-scale feature extraction module, context-aware decoding module and semantic optimization generator module.

Aiming at the continuity, uncertainty and context dependence of speech signal in real-time interactive scene, DATR-SR adopts dynamic hierarchical adaptive encoder to dynamically allocate computing resources according to signal complexity to avoid redundant operations. Multi-scale feature extraction module captures local and global features to enhance the adaptability to continuous speech22,23. In the decoding stage, the context-aware event-driven mechanism is introduced, the decoding path is adjusted in real time, and the semantic association is optimized by combining with Graph Neural Network (GNN)24. Semantic optimization generator provides context guidance through semantic prediction, improves the dynamic adaptation ability of the model in real-time speech recognition, and realizes efficient processing and real-time output of diversified requirements.

The improvement principle of DATR-SR model in two stages

Coding stage

In the coding stage, DATR-SR employs a dynamic hierarchical adaptation mechanism to adjust the number of encoding layers and the allocation of computational resources based on the complexity of the speech signal. This avoids over-modeling of low-complexity signals, which is common in traditional architectures, and enhances the processing efficiency for high-complexity signals25,26,27. At the same time, the multi-scale feature extraction module uses a hierarchical attention mechanism to model local and global features separately. This effectively handles long segments of continuous speech and alleviates the issues of feature loss and redundant calculations. It achieves a balance between resource allocation and representation efficiency28.

The core calculation in the coding stage can be expressed as:

-

(1)

Signal complexity evaluation

\(\:{x}_{t}\) represents the feature vector of the input speech signal. \(\:\text{V}\text{a}\text{r}\), \(\:\text{E}\text{n}\text{t}\text{r}\text{o}\text{p}\text{y}\) and \(\:\text{S}\text{p}\text{a}\text{r}\text{s}\text{i}\text{t}\text{y}\) are the variance, entropy and sparsity of the feature respectively. \(\:\alpha\:\), \(\:\beta\:\) and \(\:\gamma\:\) are the adjustment parameters.

-

(2)

Dynamic computing resource allocation

\(\:{L}_{max}\) is the maximum number of layers. \(\:{L}_{\text{t}\text{o}\text{t}\text{a}\text{l}}\) is the total number of layers. \(\:\tau\:\) is the complexity threshold.

-

(3)

Calculate the weight distribution layer by layer

\(\:{h}_{l}\) represents the output of the \(\:l\)-th layer. \(\:\sigma\:\) is the activation function. \(\:{W}_{l}\) and \(\:{b}_{l}\) are the weights and offsets.

-

(4)

Multi-head attention mechanism

\(\:Q\), \(\:K\) and \(\:V\) are the matrix of query, key and value respectively, and \(\:{d}_{k}\) is the dimension of key vector.

-

(5)

Multi-scale polymerization

\(\:{H}_{i}\) represents the feature representation of the \(\:i\)-th scale, and \(\:{\alpha\:}_{i}\) is the scale weight.

Decoding stage

In the decoding stage, DATR-SR adopts context-aware event-driven mechanism and semantic optimization strategy to monitor key events (such as pause and speech rate change) in real time and dynamically adjust the decoding path to adapt to multi-scene changes. Combined with GNN, the semantic association in decoding path is optimized to reduce decoding isolation29. The semantic optimization generator generates prior semantic information through context prediction, guides the decoder to choose the best path, reduces the invalid search space, and comprehensively improves semantic coherence and real-time response ability.

The core calculation in the decoding stage can be expressed as:

-

(1)

Speech event detection

\(\:\varDelta\:{h}_{t}\) is the characteristic of time step difference. \(\:\text{E}\text{n}\text{e}\text{r}\text{g}\text{y}\) and \(\:\text{D}\text{u}\text{r}\text{a}\text{t}\text{i}\text{o}\text{n}\) are the energy and duration of speech signal respectively. \(\:\delta\:\), \(\:ϵ\) and \(\:\eta\:\) are parameters.

-

(2)

Dynamic path adjustment

\(\:{z}_{t}\) represents the current decoding state. \(\:{f}_{adjust}\) is the dynamic path adjustment function.

-

(3)

Transcendental semantic generation

\(\:{s}_{t}\) is the generated prior semantic information. \(\:{W}_{s}\) and \(\:{b}_{s}\) are the weight and bias.

-

(4)

GNN optimization path

\(\:\mathcal{V}\) is the set of graph nodes. \(\:\mathcal{N}\left(v\right)\) is the neighborhood of node \(\:v\). \(\:{W}_{edge}\) and \(\:{b}_{edge}\) are edge weight and offset.

-

(5)

Decoding path update

\(\:{f}_{decode}\) is the decoding path update function.

-

(6)

Final decoding output

\(\:{y}_{t}\) is the decoded output of time step \(\:t\).

In this process, the related parameters and implementation of GNN are shown in Table 1:

Experimental design and performance evaluation

Datasets collection

In order to meet the diversity requirements of speech recognition in real-time interactive scenes, this study uses two kinds of datasets for training and testing. The first kind of dataset includes Aishell-1, HKUST, LibriSpeech and CommonVoice, covering multilingual environment and pronunciation variants, and enhancing the adaptability of the model to multiple languages and accents. The second kind of dataset selects ten Chinese TV series which have been popular in recent years, and shows rich scene and language diversity by crawling audio content, while avoiding privacy issues.

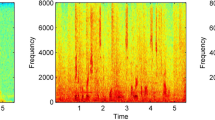

The second kind of dataset uses FFmpeg tools to extract audio with high precision, and combines with short-time Fourier transform for feature decomposition. In order to meet the optimization requirements of speech recognition for the length of speech segments, according to the distribution characteristics of speech duration, the audio is cut into sub-data packets ranging from 1 min to 5 min, and a total of 88,125 sub-data items are generated. In addition, in order to reduce the influence of environmental noise and the deviation of scene characteristics, 76,232 pieces of data that meet the standard are preserved through the processing of mean return to zero and variance normalization. There are 174,966 pieces of data in the two types of datasets, 80% of which are used for training and 20% for testing.

The sorting results of the first kind of dataset are shown in Table 2:

The sorting results of the second kind of dataset are shown in Table 3:

Experimental environment

In this study, the performance of DATR-SR model is tested from two aspects: one is to evaluate its robustness in a large number of data environments, and the other is to test its speech recognition effect, and compare it with the cutting-edge model to verify its superiority. The specific analysis is as follows:

-

(1)

Robustness analysis of DATR-SR in mass data environment.

Using the first kind of public data set, the data are divided into six proportions: 10%, 20%, 40%, 50%, 80% and 100%, and the DATR-SR is tested in stages. The Character Error Rate (CER) and training convergence time are analyzed, and the influence of data amount on model performance is evaluated30. At the same time, the reasoning delay error and the utilization rate of computing resources are monitored to verify the computational efficiency and hardware adaptability of the model in large-scale data processing31.

-

(2)

Analysis of speech recognition effect of DATR-SR on two kinds of datasets.

Based on the first kind of public dataset and the second kind of TV series dataset, the speech recognition performance of DATR-SR is evaluated, and the core recognition ability of DATR-SR in multi-language and multi-scene conditions is tested by calculating the WER, F1 index of pronunciation error detection and recognition accuracy on each dataset.

-

(3)

Comparative analysis of DATR-SR and frontier speech recognition model.

In order to objectively compare the advantages and disadvantages of DATR-SR with other cutting-edge speech recognition models, a unified comparative experiment was designed on two kinds of datasets, and the same training and testing process is adopted to ensure fairness. It is divided into three dimensions, as shown in Table 4:

In Table 4 above, the calculation equation of CCR is:

\(\:N\) is the total number of test speech samples. \(\:{M}_{i}\) is the total number of sentences in sample \(\:i\). \(\:{w}_{i,j}\) is the \(\:j\)-th sentence in sample \(\:i\). \(\:{f}_{coherence}\) is the coherence scoring function of sentences \(\:{w}_{i,j}\) and \(\:{w}_{i,j+1}\) based on the included angle of semantic vectors.

The calculation equation of MSD is:

\(\:{K}_{i}\) is the total number of words in sample \(\:i\). \(\:{V}_{input}\left({w}_{i,k}\right)\) is the semantic embedding vector of the input speech. \(\:{V}_{output}\left({w}_{i,k}\right)\) is the semantic embedding vector of the model output.

The calculation equation of IRR is:

\(\:{T}_{i}\) is the total time step of sample \(\:i\). \(\:{\delta\:}_{t}\) is the marking function of whether the information is correctly retained in time step \(\:t\) (1 means correct, 0 means error). \(\:P({w}_{i,t}\mid\:{x}_{i,t})\) is the probability that the input signal \(\:{x}_{i,t}\) is transcribed into the word \(\:{w}_{i,t}\) at time step \(\:t\).

The calculation equation of STRT is:

\(\:{S}_{i}\) is the total number of scene changes in sample \(\:i\). \(\:{t}_{start}\left({x}_{i,s}\right)\) is the switching start time of voice signal in scene \(\:s\). \(\:{t}_{end}\left({x}_{i,s}\right)\) is the end time of voice signal switching in scene \(\:s\).

The calculation equation of SAR is:

\(\:{M}_{i}\) is the total number of scenes in sample \(\:i\). \(\:{e}_{i,m}\) is the error frame number of the model in scene \(\:m\). \(\:{T}_{i,m}\) is the total number of frames of scene \(\:m\).

Parameters setting

It is necessary to compare DATR-SR with the most advanced speech recognition models in the past two years. First, the study sorts out the implementation methods of the advanced models, and then tests them at a unified data level to reduce errors. The research refers to the previous literature review, and counts seven most advanced speech recognition models at this stage. The sorting results are shown in Table 5:

The software and hardware environment of the study is arranged as shown in Table 6:

Performance evaluation

Robustness analysis

The robustness analysis results of DATR-SR model are shown in Fig. 1:

In Fig. 1, DATR-SR shows strongly demonstrates robustness and adaptability under different datasets and data volume ratios. With the increase of data volume, CER decreases from the highest 5.2% to the lowest 2.7%. Especially on the CommonVoice dataset with complex context, and keeps a low semantic error (2.8-3.1%), which reflects its recognition accuracy for multi-language and multi-scenes. The training convergence time is controlled in the range of 74–90 s with 100% data, which shows high efficiency for large-scale training. In the aspect of reasoning delay, all datasets are kept within 15ms, and the resource utilization rate is above 75%. It further proves the efficiency and hardware adaptability of the model in real-time interactive scenes. This shows that DATR-SR can effectively balance complexity and efficiency, and meet the real-time requirements of diverse speech recognition tasks.

Analysis of speech recognition effect

The analysis result of speech recognition effect of DATR-SR model is shown in Fig. 2:

In Fig. 2, DATR-SR shows high speech recognition ability and cross-scene adaptability on two kinds of datasets. On the first kind of dataset, the WER is maintained at 4.3-6.2%, and the accuracy is over 91%, which reflects the stable performance of DATR-SR in multi-language and multi-accent environment. The F1 index is as high as 0.91, which verifies the accurate capture of semantic information. On the second kind of dataset, DATR-SR faces TV drama scenes with complex contexts, and the WER fluctuates slightly, but the accuracy rate is always above 90%, and the F1 index reaches 0.91. It proves that DATR-SR can effectively adapt to different scenes and language styles. These results further confirm that DATR-SR not only has cross-domain speech recognition ability in real-time interactive scenes, but also shows excellent dynamic adaptability in complex semantic conversion tasks.

Comparative analysis with Cutting-Edge speech recognition model

The comparative analysis results of DATR-SR model and frontier speech recognition model are shown in Fig. 3:

In Fig. 3, DATR-SR shows excellent performance advantages in all three dimensions. In the context consistency analysis, the CCR of DATR-SR is as high as 92.3%, which is significantly better than other models, and the MSD is the lowest, only 4.2%, which reflects its semantic coherence and accuracy in complex dialogue contexts. In the analysis of event recognition ability, the ERR reaches 91.3%, and the EER is controlled at 4.2%, which shows the ability to accurately detect diverse voice events. In the dynamic scene adaptation analysis, STRT is only 485ms, far below the standard of 500ms, SAR reaches 91.8%, and SSER remains at 4.2%, which proves its efficiency and stability in real-time interactive scenes.

In-depth, DATR-SR achieves accurate capture of phonetic continuity and semantic consistency by optimizing context modeling and semantic mapping. The event detection module performs well in complex scenes, effectively reducing semantic ambiguity. The rapid response mechanism significantly enhances the adaptability and robustness of the model in multi-scene switching, and indicates wide potential its wide application potential in real-time interactive speech recognition tasks.

Discussion

From the experimental results, the advantages of DATR-SR in speech recognition tasks are multi-dimensional optimization and efficient dynamic adaptability. The model can dynamically adjust the computing resources according to the complexity of the signal, and realize efficient processing of semantic consistency and information retention through multi-scale feature extraction and context-aware decoding. Different from the traditional fixed computing framework, DATR-SR flexibly responds to input changes, and avoids redundant computation while improving efficiency. In addition, its high recall rate and low error rate in high-load and diverse voice events verify the adaptability and stability of multi-task parallel processing. GNN is introduced to further optimize the semantic path and strengthen the depth of language understanding and the accuracy of information transmission. This design provides a new idea for technical new perspective in the field of speech recognition, and lays a theoretical foundation for complex multimodal fusion and the construction of real-time speech processing system.

Conclusion

Research contribution

In this study, the DATR-SR model is proposed, which achieves a double new perspective in theory and practice in the field of end-to-end speech recognition. The model innovatively combines the dynamic hierarchical adaptive encoder and the context-aware decoding module. Through the real-time evaluation of signal complexity and the optimal allocation of computing resources, the processing efficiency and semantic consistency of speech signals are significantly improved. At the same time, the study further introduces multi-scale feature extraction and semantic optimization generation mechanism, and realizes the synchronous optimization of semantic coherence rate and information retention ability in complex context. Through extensive tests on open datasets and diverse scenes, the robustness and dynamic adaptability of the model in cross-language and cross-scene tasks are verified, which provides theoretical support and innovative path for speech recognition technology in real-time interactive scenes and provides efficient technical solutions for industry development.

Future works and research limitations

The future research can further explore the scope of application and the performance of deep optimization of the model. Although DATR-SR shows strongly demonstrates adaptability and stability in multi-language and multi-scene, the performance of the model in more complex multi-modal data fusion and high noise environment has not been fully verified, which provides an important direction for future optimization research. In addition, in order to further enhance the universality and expansibility of the model, the research can explore the combination of DATR-SR with pre-training language model and other deep learning frameworks to enhance its ability of multitasking. At the same time, with the continuous improvement of hardware performance, how to further reduce the computational complexity and energy consumption in the resource-constrained environment is also a problem worthy of in-depth discussion. These efforts will open a broader space for the development of intelligent voice technology and promote its popularization and application in practical scenes.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author Can Yang on reasonable request via e-mail yangcan826@163.com.

References

Liu, Y. et al. SFA: Searching Faster Architectures for end-to-end Automatic Speech Recognition Models 81101500 (Computer Speech & Language, 2023). 3.

Andayani, F. et al. Hybrid LSTM-transformer model for emotion recognition from speech audio files. IEEE Access. 10(16), 36018–36027 (2022).

Zheng, H. et al. Transformer encoder-based multilevel representations with fusion feature input for speech emotion recognition. J. Southeast Univ. (English Ed.). 39(1), 252 (2023).

Deng, K. & Woodland, P. C. Label-synchronous neural transducer for adaptable online E2E speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2(14), 27 (2024).

Yecchuri, S. & Vanambathina, S. D. Sub-convolutional U-Net with transformer attention network for end-to-end single-channel speech enhancement. EURASIP J. Audio Speech Music Process. 2024(1), 8 (2024).

Li, X. et al. Transformer-Based End-to-End speech translation with rotary position embedding. IEEE. Signal. Process. Lett. 31(5), 371–375 (2024).

Kheddar, H., Hemis, M. & Himeur, Y. Automatic speech recognition using advanced deep learning approaches: A survey. Inform. Fusion. 15(2), 102422 (2024).

Shahamiri, S. R., Lal, V. & Shah, D. Dysarthric speech transformer: A sequence-to-sequence dysarthric speech recognition system. IEEE Trans. Neural Syst. Rehabil. Eng. 31(7), 3407–3416 (2023).

Dong, F. et al. A Transformer-Based End-to-End automatic speech recognition algorithm. IEEE. Signal. Process. Lett. 30(12), 1592–1596 (2023).

Vitale, V. N. et al. Exploring emergent syllables in end-to-end automatic speech recognizers through model explainability technique. Neural Comput. Appl. 36(12), 6875–6901 (2024).

Rybicka, M. et al. End-to-End neural speaker diarization with Non-Autoregressive attractors. IEEE/ACM Trans. Audio Speech Lang. Process. 32(4), 3960–3973 (2024).

Lu, K. et al. Optimizing Uyghur speech synthesis by combining pretrained Cross-Lingual model. ACM Trans. Asian Low-Resource Lang. Inform. Process. 23(9), 1–11 (2024).

Tang, L. A transformer-based network for speech recognition. Int. J. Speech Technol. 26(2), 531–539 (2023).

Hadwan, M., Alsayadi, H. A. & AL-Hagree, S. An End-to-End Transformer-Based automatic speech recognition for Qur’an reciters. Computers Mater. Continua. 74(2), 138–140 (2023).

Ben-Letaifa, L. & Rouas, J. L. Variable scale pruning for transformer model compression in end-to-end speech recognition. Algorithms 16(9), 398 (2023).

Loubser, A., De-Villiers, P. & De-Freitas, A. End-to-end automated speech recognition using a character based small scale transformer architecture. Expert Syst. Appl. 252(12), 124119 (2024).

Guo, S. et al. Multi-Feature and Multi-Modal mispronunciation detection and diagnosis method based on the squeezeformer encoder. IEEE Access. 11(13), 66245–66256 (2023).

Pondel-Sycz, K., Pietrzak, A. P. & Szymla, J. End-To-End deep neural models for automatic speech recognition for Polish Language. Int. J. Electron. Telecommun.. 70(2), 315–321 (2024).

Unnisa, N. et al. Int. J. Inform. Technol. Comput. Eng., 12(2), 783–790 (2024).

Liu, Y. et al. Speech emotion recognition using cascaded attention network with joint loss for discrimination of confusions. Mach. Intell. Res. 20(4), 595–604 (2023).

Taşar, D. E., Koruyan, K. & Çılgın, C. Transformer-Based Turkish automatic speech recognition. Acta Infologica. 8(1), 1–10 (2024).

Yang, D. et al. Contextual and cross-modal interaction for multi-modal speech emotion recognition. IEEE. Signal. Process. Lett. 29(5), 2093–2097 (2022).

Xiong, Y. et al. Fuzzy speech emotion recognition considering semantic awareness. J. Intell. Fuzzy Syst. 6(2), 1–11 (2024).

Zhao, X. et al. Regularizing cross-attention learning for end-to-end speech translation with ASR and MT attention matrices. Expert Syst. Appl. 247(12), 123241 (2024).

Zhang, J. et al. Nonlinear regularization decoding method for speech recognition. Sensors 24(12), 3846 (2024).

Sameer, M. et al. Arabic speech recognition based on Encoder-Decoder architecture of transformer. J. Techniques. 5(1), 176–183 (2023).

Zheng, Y. E-learning and speech dynamic recognition based on network transmission in music interactive teaching experience. Entertain. Comput. 50(6), 100716 (2024).

Zahran, A. I. et al. Fine-tuning self-supervised learning models for end-to-end pronunciation scoring. IEEE Access. 11(21), 112650–112663 (2023).

Chowdhury, S. A., Durrani, N. & Ali, A. What Do end-to-end Speech Models Learn about Speaker, Language and Channel Information?? A layer-wise and neuron-level Analysis 83101539 (Computer Speech & Language, 2024). 6.

Fan, R. et al. A Ctc alignment-based non-autoregressive transformer for end-to-end automatic speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 31(12), 1436–1448 (2023).

Hemant, P. & Narvekar, M. Development of a code-switched Hindi-Marathi dataset and transformer-based architecture for enhanced speech recognition using dynamic switching algorithms. Appl. Acoust. 230(1), 110408 (2025).

Acknowledgements

The work was supported in part by Scientific Research Project of Hunan Provincial Department of Education(Grant No.23A0659); Research on the Talent Cultivation Model of Industry Education Integration in Software Engineering under the Background of Employment Education(Grant No.2023122958032); Case Resource Construction Based on Bulk Grain Transportation Control System - Design and Practice of Intelligent Upper Computer Solution(Grant No.231101913303102).

Author information

Authors and Affiliations

Contributions

Ping Li: Conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation Can Yang: writing—review and editing, visualization, supervision, project administration, funding acquisitionLei Mao: methodology, software, validation, formal analysis.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors. All methods were performed in accordance with relevant guidelines and regulations.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, P., Yang, C. & Mao, L. The analysis of transformer end-to-end model in Real-time interactive scene based on speech recognition technology. Sci Rep 15, 17950 (2025). https://doi.org/10.1038/s41598-025-02904-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-02904-0