Abstract

Debris flow is a type of non-homogeneous fluid with a high concentration that is created by melting snow and ice and heavy rainfall. Its formation and movement are intricate processes. The investigation of debris flow susceptibility assessment is crucial for disaster warning and mitigation. Since it is challenging to predict debris flows with precision using traditional methods, machine learning algorithms have been used more and more in this field in recent years. In this paper, a debris flow susceptibility assessment model is constructed based on RF (Random Forest) and XGBoost (Extreme Gradient Boosting) models with Stacking ensmble learning method, and SPY technique is introduced to optimize the negative sample selection. The outcomes demonstrate that the SPY-RF model with AUC value of 0.93 outperforms the original RF model, which had an AUC value of 0.82, by a significant margin, and performs well in all risk levels, particularly in the very high susceptibility zone with the highest debris flow density. Furthermore, the SPY-XGBoost model’s AUC value of 0.87 is superior to the original XGBoost model’s 0.72. This suggests that the SPY technique is able to improve the prediction accuracy and reliability of the model, especially effective in reducing the misclassification of non-prone areas. On the other hand, the high correlation of base-learner features prevented the Stacking-RF and Stacking-XGBoost models from improving the prediction performance any further, with AUC values of 0.80 and 0.71, respectively. The results of the factor contribution analysis indicate that the main factors influencing the susceptibility of debris flow are SPI, rainfall, curvature, and area. Of these, SPI contributes the most, indicating the critical role that water flow intensity plays in the formation of debris flow. This paper presents a study that demonstrates the benefits of integrating SPY technology with ensemble learning. Additionally, it investigates the shortcomings of the Stacking model in debris flow prediction, offering a valuable avenue for future research on optimizing model diversity and enhancing prediction performance.

Similar content being viewed by others

Introduction

With a material composition gradation spanning six orders of magnitude, debris flow is primarily a high-concentration, wide-graded, multi-phase, inhomogeneous fluid that is created by heavy rainfall or snowmelt. Its formation and movement process are extremely complex, frequently involving rapid mobility, strong impact, and sudden erosion of mountainous environments, which has a strong destructive effect and is challenging to manage1. Thus, it is crucial for early warning systems and debris flow disaster prevention to predict the spatial probability of debris flow occurrence through debris flow susceptibility evaluation studies. This is also a challenging and popular research topic. With the intensification of extreme weather in recent years2, the situation of debris flow disaster in China, which is already severe, has become more and more serious. It is also more challenging to precisely assess and evaluate debris flow susceptibility using conventional techniques, so knowing how to appropriately characterize debris flow susceptibility is crucial in this situation.

The expert scoring method, hierarchical analysis method, fuzzy comprehensive judgment method, weighted information entropy method, and multiple regression method are some of the traditional techniques frequently used in the evaluation of debris flow susceptibility3,4,5,6,7,8,9,10,11. Factor weights can be determined in two ways: first, using the expertise of the factors to allocate weights; second, using the expertise in conjunction with mathematical and statistical analysis techniques to derive factor weights. The fundamental idea behind both approaches is to evaluate the susceptibility of debris flows by calculating the factor weights. Nevertheless, due to the limitations of expertise, these approaches have limited applicability and are challenging to implement broadly in various geographic contexts12. For a considerable amount of time, this approach has been crucial, particularly in situations where data are scarce and technological resources are inadequate. However, because extreme weather events occur frequently and debris flows suddenly and quickly, traditional methods are frequently slow to react to disasters in real time and do not offer enough timely and accurate predictions. In recent times, machine learning algorithms have also become more popular for use in determining debris flow susceptibility. Convolutional neural networks, decision trees, random forests, artificial neural networks, and support vector machines have all been effectively used in the analysis of geologic hazards13,14,15,16,17,18. Both traditional assessment methods and disaster prediction accuracy can be greatly enhanced by machine learning models19. A single machine learning algorithm might not be able to sufficiently capture the best-fit function of the sample set in the hypothesis space or the real distribution due to the limited and extremely complex training data for the debris flow prediction problem, which would affect the prediction accuracy20. Consequently, by combining the benefits of several models, ensemble learning enhances prediction performance even more21. The primary techniques used in ensemble learning are Bagging (e.g., Random Forest), which trains several models of the same kind and averages or votes their outputs to lower the variance and overfitting risk of a model, and Boosting (e.g., Extreme Gradient Boosting), which builds upon the accuracy and stability of a model through iterative training to target the inaccurate predictions of the preceding model cycle; Stacking, in contrast, integrates various base model types and leverages a meta-model to learn from these base models’ outputs in order to achieve a more robust integrated prediction capability22. Ensemble learning improves the robustness and interpretability of geohazard prediction while also reducing bias and variance and handling intricate interactions between various features.

The training set for ensemble learning techniques typically includes a sizable percentage of both non-mudslide events (negative samples) and mudslide events (positive samples)23. When it comes to debris flow susceptibility assessment, researchers typically pay little attention to the negative samples that won’t occur in a disaster and instead concentrate only on the positive samples that have occurred or will occur in various investigations24. The negative samples needed for modeling were frequently selected at random from unlabeled samples in earlier research25,26. The irrational negative sample acquisition strategy is one of the primary sources of noise in the dataset due to this approach’s treatment of high-quality positive samples equally with negative samples from potentially noisy data27,28. This frequently results in issues like model overfitting. As a result, one of the main areas of focus for machine learning research is how to optimize the sample pool to enhance model performance. 2019 Zhu et al. presented a buffer zone control-based sampling technique that allows negative samples to be taken from outside the buffer zone. This technique is based on the fundamental idea that the area surrounding a disaster site is similar to the geoenvironmental conditions that caused the disaster29. In 2021, Liang et al. suggested a different sampling strategy based on buffer zone control, in which negative samples can be drawn from outside the buffer zone by first creating an initial susceptibility map, and in order to enhance the performance of the model, negative samples can then be chosen from the samples with the lowest susceptibility rank30. The interference of noisy data on the model can be greatly decreased by enhancing the negative sample selection strategy, which will further enhance the use of machine learning in the assessment of debris flow susceptibility. This enhances the model’s usefulness in practice and offers a wider outlook for debris flow hazard prediction.

Active seismic zones frequently affect the complex natural geologic structure of the upper Minjiang River region, and the area frequently experiences mudslide disasters due to the mountainous area’s delicate ecological balance. In order to assess the debris flow susceptibility in the study area, this paper takes into account the complex natural geographic conditions of the area and combines with the actual situation. It then determines 14 evaluation factors, including area, gully density, roundness, Melton ratio, lithology, distance from road, distance from fault, aspect, NDVI, SPI, TWI, rainfall, land use type, and curvature. Finally, it uses the Spy Technique to construct dependable non - debris flow samples and adopts the ensemble learning model to assess the susceptibility of debris flow in the upper Minjiang River basin based on the watershed unit as the evaluation unit. The evaluation results can serve as a basis for future debris flow disaster prevention and control in the area. In addition, the integration of the SPY technology and the integrated learning method in this paper has opened up a new path for similar studies in complex geological regions. In the future, continuous exploration will be carried out focusing on the optimization of the model structure and the improvement of prediction accuracy, which will contribute to the development of the technology for evaluating debris flow disasters.

Study area

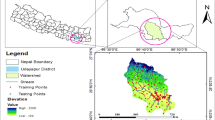

The coordinates of the Upper Minjiang River basin are 30°45’37 “N − 33°69’35 “N and 103°33’46 ‘E − 104°10’36 “E. In the Aba Tibetan and Qiang Autonomous Prefecture of Sichuan Province, the basin includes the counties of Wenchuan, Mao, and Songpan. The Minjiang River’s upper reaches are situated in an alpine canyon zone that is typical of the Sichuan Basin and the eastern edge of the Tibetan Plateau. The elevation changes sharply from 750 m to 5200 m over a 40–50 km horizontal range, with notable variations in surface relief. This area is mainly exposed in Paleozoic and Mesozoic clastic and carbonate rocks, mainly composed of sandstone slate, schist, shale, dry sandstone, marble and metamorphic rocks, which are extremely broken. The region is also in the western Sichuan trench area, which has seen numerous tectonic movements that have left behind fractured geological formations and shaky foundations. Additionally, summers are frequently marked by heavy rainfall due to the influence of the monsoon climate. This uneven distribution of precipitation increases the risk of natural disasters like debris flow. The Minjiang River, a significant tributary of the Yangtze River’s upper reaches, serves as the study area’s principal river. With a watershed area of 7326 km2, the upper reaches of the Minjiang River in the study area span approximately 295 km. The main stream can be separated into the northern, middle, and southern sections from the upper reaches to the lower reaches based on the longitudinal profile characteristics of the main stream channel (Fig. 1).

Geographic location of the upper Minjiang River region and the distribution of debris flow basins. Created by ArcGIS Pro 3.0.1 https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview.

Data and research methodology

Data

Debris flow dataset

The debris flow susceptibility evaluation model is built using the debris flow dataset, which aids in locating the occurrence of debris flow and determining the correlation between debris flow occurrence and indicator factors. Remote sensing images clearly show the geomorphological characteristics of debris flow, which can be specifically identified as the debris flow formation area, circulation area, and accumulation area31. This paper therefore makes use of the upstream 2021 Gaofen-2 satellite imagery of the Minjiang River, field research, and other methods. The base map of the map is combined with the DEM data obtained from the Chinese Academy of Sciences’ Resource and Environmental Science and Data Center (https://www.resdc.cn/) with a resolution of 12.5 m x 12.5 m. A total of 113 debris flow events were interpreted within the study area.

Evaluation factor

In accordance with the circumstances surrounding the formation of debris flow in the sub-watershed, fourteen evaluation factors were chosen from five categories of topography and geomorphology, rainfall, geology, vegetation cover, and human activities. (1) Topographic and geomorphologic data, based on the DEM data with a spatial resolution of 12.5 m in the study area, were extracted by Arcgis10.8 to obtain the watershed area, gully density, roundness, Melton ratio, slope direction, curvature, and hydrological processing to obtain the streamflow power index (SPI) and topographic wetness Index (TWI) of the watersheds; (2) Rainfall data, which were obtained through Arcgis10.8 cropping of the average annual rainfall in Sichuan Province (National Meteorological Science Data Center) to get the average annual rainfall vector data in the study area; (3) geomorphology: from the Chinese Academy of Sciences Resources and Environment Science and Data Center (http://www.resdc.cn/) to obtain the study area faults and stratigraphic rock body data, through the coordinate transformation to get the distance from the faults, the stratigraphic rock body data; (4) Vegetation cover data, the Landsat8 near-infrared and far-infrared bands with a resolution of 30 m in August 2024 were selected to obtain the normalized difference vegetation index (NDVI); the vector data of land use types in the study area were obtained by cropping the global 30-meter surface cover data (http://www.globeland30.org/home/background.aspx); (5) human activity, the 2021 Gaofen-2 satellite image was used to extract information about the study area’s roads and as well as the distance from each road.

Methodology

Evaluation units

A suitable choice of evaluation units, which typically includes raster units, slope units, special condition units, and watershed units, is essential for geohazard susceptibility modeling32,33,34,35. The watershed unit is capable of encapsulating the geometric properties of debris flows and elucidating their correlation with associated indicators, among other things36. Thus, the chosen watershed unit serves as the primary research foundation for this work, and the 12.5 m DEM data of the research unit is delineated using the hydrological processing method of ArcGIS10.8. In hydrological analysis, the selection of thresholds is essential for the delineation of watershed units. Thresholds are typically used in hydrological processing methods to identify accumulation areas within a watershed. By setting an appropriate threshold, the accumulation areas can be accurately delineated, ensuring the avoidance of excessive subdivision or oversimplification of watershed units. The study’s findings indicate that the ideal threshold is 5000 through threshold selection. The final study area was defined in 226 sub-watershed units using the Gaofen-2 satellite images. As shown in Fig. 1.

A total of fourteen index factors were discretized based on the watershed unit using the regional statistics function of the ArcGIS 10.8 software. The values of the corresponding factors in the watershed unit were averaged for area, gully density, roundness, Melton ratio, distance to road, distance to fault, NDVI, SPI, TWI, rainfall, and curvature. The most frequent values within the watershed unit were used to determine the values of stratigraphic rocks and land use types. As illustrated in Fig. 2, the spatial resolution was unified to 12.5 m×12.5 m after all raster layers were reclassified using the natural discontinuity method.

Ensemble learning

Multiple base learners are employed in ensemble learning, and a unifying approach is used to aggregate their findings for regression or classification tasks. Generally, ensemble learning exhibits better overall performance in terms of generalization than individual learners. Ensemble learning can be divided into three main categories: serial-sequence methods, where the base learners exhibit significant interdependencies, such as Boosting, exemplified by XGBoost; parallel methods, where there are no substantial dependencies between the base learners, like Bagging, symbolized by Random Forest; and Stacking, which combines the outputs of diverse types of base learners37.

The Random Forest (RF) algorithm builds multiple classifiers and combines their predictions to increase overall prediction accuracy. A subset of the available features is randomly selected during the construction of each decision tree, thereby assisting in the reduction of overfitting. Several subsets are randomly selected from the original dataset to serve as training sets, and for each training set, a decision tree is built using a random subset of features38. The training set is used to train each decision tree until the stopping condition is met. Voting or averaging is used to determine the classification or regression results of the final predicted values when predictions are made for new data. The training results of each decision tree are combined based on the Bagging integration concept39.

Based on Gradient Boosting Decision Tree (GBDT), Extreme Gradient Boosting Tree (XGBoost) is an enhanced optimization algorithm40. XGBoost and GBDT are similar in that they are both serial serialization algorithms based on the concept of Boosting integration. Based on the residuals between the previous decision tree and the actual value of the prediction results for the decision tree’s training, they fit the residuals through a number of iterations to continuously approximate the actual value until they reach the set value or the number of iterations after the training ends. The final prediction of this sample in the entire integrated model is determined after the weighted and summed individual decision tree results are forecast. The distinction is that, in solving the loss function, XGBoost employs Taylor’s second-order expansion to streamline the calculation and adds regular terms, like L1 and L2, to the objective value to manage the model’s complexity and avoid overfitting41. The XGBoost algorithm has greater computational efficiency and the ability to prevent overfitting when compared to the conventional GBDT algorithm42.

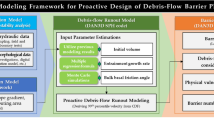

By combining multiple learning models and using their predictions as inputs, the stacking principle aims to train a meta-learner to make final predictions. The fundamental idea of stacking, in contrast to bagging and boosting, is to use a new model to learn how to efficiently integrate predictions from other models. The basic steps are as follows: first, train multiple base models (e.g., Random Forest, Extreme Gradient Boosting Tree, and Linear Regression) on the original dataset; second, create a new dataset using the predictions of these base models as new features; and third, train a meta-model on the new dataset to produce the final prediction. By using this method, stacking can improve overall prediction performance and generalization ability by fully utilizing the benefits of each base model43,44. Figure 3 illustrates the stacking modeling procedure used in this work.

Spy technology

A negative sample acquisition strategy based on the SPY technique is as follows: First, a predetermined percentage (15% in this paper based on prior research) of debris flow samples are chosen and fed into the unlabeled samples. These samples will be treated as spy samples along with the unlabeled samples in order to train a classifier to predict all the samples. The lowest probability of the spy samples is used as the value, and the probability value is lower than the samples with the threshold value are considered as reliable negative samples45. The main premise of this approach is that reliable negative samples can be filtered out by looking at the probability threshold of the spy samples, and that spy samples and potential debris flow samples in unlabeled samples will behave similarly in the classifier’s prediction.

Model accuracy evaluation

(1) Accuracy, precision, recall and F1 score.

The process of assessing debris flow susceptibility involves validating and evaluating the model’s performance. A confusion matrix is typically used to evaluate a binary classification model’s performance46. Four parameters comprise the confusion matrix: True Positive (TP) represents the number of debris flow that the model predicts to be mudslides and that are actually mudslides; False Negative (FN) represents the number of non-mudslides that the model predicts to be mudslides but that are actually mudslides; False Positive (FP) represents the number of mudslides that the model predicts to be mudslides but are actually non-mudslides; True Negative (TN) is the number of mudflows that the model predicts to be non- mudslides but are in fact mudslides. Based on this, four statistical metrics (accuracy, precision, recall, and F1 score) were used to assess each model’s performance. Each metric’s description is displayed in Table 1.

The True Positive Rate (TPR) and False Positive Rate (FPR), which show how well the model performs at various classification thresholds, are represented on the vertical and horizontal axes, respectively, of the ROC curve. The percentage of samples that are wrongly predicted to be positive is known as the False Positive Rate, while the percentage of samples that are correctly predicted to be positive is known as the True Positive Rate. The model performs better the closer the ROC curve is to the upper left corner47. The Area Under the Curve (AUC) value, which offers a thorough evaluation of the model’s performance, is the area under the ROC curve. When the AUC value is 0, the model’s predictions represent perfect misclassification, meaning the predictions are completely opposite to the actual outcomes. In such a case, reversing the predictions would result in a perfect model. In contrast, an AUC value of 0.5 indicates random guesswork, where the model cannot distinguish between positive and negative samples. On the other hand, an AUC value of 1 reflects perfect discrimination between positive and negative samples. The closer the AUC value is to 1, the better the model performs in terms of prediction.

Research process

Figure 4 shows a schematic representation of the technical route flow for this study.

Debris flow susceptibility analyses

Correlation analysis of indicators for evaluating susceptibility to debris flow

In order to select the evaluation factors with the most predictive ability and to improve the accuracy of model prediction, correlation analysis was performed on the 14 factors initially selected. The matrix of correlation coefficients between the 14 influencing factors can be obtained and visualized to create Fig. 5 using the Correlation Plot plug-in of Origin plotting software. In the graph, negative correlations are displayed in blue, and positive correlations are displayed in red. The image’s size and the correlation coefficient’s magnitude are directly correlated. The figure illustrates that all evaluation factors have correlation coefficients that are less than 0.54. The weaker correlation coefficients suggest that factor interactions are smaller and the evaluation factors that were chosen are more rational.

Multi-Collinearity test

In a regression model, multicollinearity is defined as a strong linear relationship between independent variables. In addition to making it challenging to distinguish between the various effects on the dependent variable, this circumstance may raise standard errors and cast doubt on the regression coefficients. Typically, the variance inflation factor (VIF) and tolerance (TOL) are used to evaluate the covariance between variables. A high correlation or lack of precision between the variables is indicated when the VIF is less than 5 and the TOL is greater than 0.2, respectively; the opposite suggests that an issue exists. The chosen evaluation factors for multiple covariance are analyzed using SPSS 27 software in this paper (Table 2). The minimum value of TOL in the indicator system is 0.304, and the maximum value of VIF is 3.290, and the results demonstrate that there is no covariance issue in the indicator factor system, further confirming the rationality of the evaluation factors that were chosen.

Model construction

The debris flow susceptibility assessment problem became a dichotomous problem when watersheds experiencing debris flow events were labeled as ' 1 ' and watersheds not experiencing debris flow events were labeled as ' 0 ‘, in accordance with the classification requirements of the ensemble learning model48. A sample dataset with an equal number of positive and negative samples was created by taking the 113 watersheds that had experienced debris flow out of the 226 sub-watershed units in the study area and another 113 watersheds that had not. In the process of constructing the sample dataset into a training set and a test set, a reasonable allocation of the division ratio is crucial, because an unreasonable division ratio may significantly affect the precision and accuracy of the model. As a result, the base dataset is split with reference to the studies in related literature, with 70% going toward the training set and 30% going toward the test set. This division strategy not only maintains sample balance but also gives the ensemble learning model for debris flow susceptibility assessment a solid foundation for training and testing, enhancing the model’s capacity for generalization and prediction accuracy49.

In accordance with the research methodology for ensemble learning suggested in 2.2.2, in this paper, we select RF model based on Bagging integration idea, XGBoost model based on Boosting integration idea, and construct Stacking-RF and Stacking-XGBoost models based on Stacking integration idea with RF and XGBoost as the base model and RF and XGBoost as the meta-model respectively.

Following the suggested SPY debris flow negative sample collection strategy outlined earlier, the process of obtaining trustworthy negative samples for our base dataset can be broken down into two stages: (1) Designate 15% of the debris flow samples as spy samples and non-mudflow samples to create a negative sample pool. This collection contains 129 samples in our analysis, with the remaining 85% of the samples serving as debris flow positive samples (consisting of 97 samples). By integrating RF, XGBoost, Stacking-RF, and Stacking-XGBoost models, we assign a probability value to each sample within the study area. (2) Identify the lowest probability associated with the spy samples, and all samples with probabilities lower than this threshold are deemed reliable negative samples. The probability thresholds derived from the RF, XGBoost, Stacking-RF, and Stacking-XGBoost models for spy sample prediction are 0.294, 0.228, 0.23, and 0.05, respectively. Consequently, all samples in the study area below these probability thresholds are recognized as reliable negative samples. To maintain balance, an equal number of positive samples are randomly selected from the pool of mudflow positive samples, resulting in the creation of the base dataset for SPY-RF, SPY-XGBoost, SPY-Stacking-RF, and SPY-Stacking-XGBoost models in equal proportions, as shown in Table 3. Ultimately, this base dataset is split into a 70% training set and a 30% test set, which are utilized for the training and testing of the constructed debris flow susceptibility assessment ensemble learning models.

Debris flow susceptibility mapping

Following the development of the ensemble learning principle-based debris flow susceptibility model, the model was used to determine the debris flow susceptibility index for each watershed unit in the study area. As illustrated in Fig. 6, the debris flow susceptibility index was divided into five grades based on the natural breakpoint method in ArcGIS 10.8 software. These grades are very low, low, moderate, high, and very high in that order. The debris flow susceptibility grading map in the study area demonstrates that the range of very high susceptibility zones that actually occur and the mudslide susceptibility zones derived based on the SPY-RF model are roughly consistent. This outcome offers a crucial foundation for managing the risk of debris flow and further validates the model’s validity.

The evaluation results of debris flow susceptibility can also be analyzed using statistical methods. Table 4 analyzes the number of watershed units in each model’s debris flow susceptibility level, the area occupied by each level, and the corresponding debris flow events. It calculates the ratio between the proportion of debris flow events in each susceptibility level and the corresponding area proportion. Debris flow density is defined as the ratio of the number of debris flow events in each susceptibility level to the corresponding area. Figure 7 presents the statistical results of debris flow susceptibility for each model. From Table 4; Fig. 7, the distribution characteristics and density differences of debris flows within each susceptibility level can be observed.

For the RF model, the area of high susceptibility regions is 2135.76 km², with a debris flow density of 2.20; for very high susceptibility regions, the area is 1829.57 km², with a density of 2.40. The SPY-RF model shows a high susceptibility area of 1374.51 km² and a density of 1.38; the very high susceptibility area is 2893.50 km², with a density of 2.49. For the Stacking-RF model, the high susceptibility area is 1029.02 km², with a density of 1.26; the very high susceptibility area is 4251.15 km², with a density of 2.02. The SPY-Stacking-RF model has a high susceptibility area of 574.69 km², with a density of 1.22; the very high susceptibility area is 3519.44 km², with a density of 2.02. The XGBoost model shows a high susceptibility area of 3641.39 km², with a density of 2.11; the very high susceptibility area is 781.48 km², with a density of 2.30. The SPY-XGBoost model has a high susceptibility area of 765.18 km², with a density of 1.96; the very high susceptibility area is 4832.82 km², with a density of 1.61. For the Stacking-XGBoost model, the high susceptibility area is 444.00 km², with a density of 1.35; the very high susceptibility area is 4804.30 km², with a density of 1.96. Finally, the SPY-Stacking-XGBoost model has a high susceptibility area of 66.51 km², with a density of 3.01; the very high susceptibility area is 3568.33 km², with a density of 2.21.

Overall, the SPY-RF model performs better across different risk levels and exhibits the highest debris flow density in extremely high susceptibility areas. This model can more comprehensively reflect the debris flow risk across various susceptibility levels, making it suitable for a wider range of application scenarios. It is not only adept at precise predictions focused on high-risk areas but also demonstrates higher sensitivity and predictive capability in low-risk regions.

Model accuracy verification

In accordance with the prior research methodology, this study builds 70% of the training dataset and 30% of the testing dataset using a 7:3 sample dataset. As demonstrated in Table 5 and Figure.8, the model performance metrics accuracy, precision, recall, and F1 score were computed based on the counts of TP, FN, FP, and TN, respectively.

In the model accuracy validation, the overall performance of SPY-RF surpasses that of other models. While RF excels in recall, the high accuracy, precision, and F1 score of SPY-RF make it more advantageous for practical applications. Although RF can identify all susceptibility areas, the comprehensive advantages of SPY-RF indicate that it can provide more balanced and reliable evaluation results in real-world applications.

The ROC curve of the debris flow susceptibility evaluation model based on ensemble learning is shown in Fig. 9. The AUC value of the SPY-RF model is 0.93, slightly higher than that of the SPY-XGBoost model, which is 0.92. Considering the performance of the aforementioned ensemble learning models in debris flow susceptibility evaluation, the SPY-RF model is the best. Therefore, the subsequent analysis will utilize the evaluation results of the SPY-RF model to examine debris flow susceptibility and the contribution rates of the evaluation factors.

Analysis of the contribution rates of debris flow evaluation indicators

Based on the Random Forest model, the contribution rates of different factors influencing debris flow susceptibility were generated using the feature_importances function in Python software. The calculation is expressed in Eq. (1):

In the equation: \(\:I{P}_{i}\)—— Contribution rate of the \(\:i\)th factor;

The analysis results of the contribution rates of debris flow evaluation indicators in the study area indicate that, as shown in Fig. 10, the four factors with the highest contribution rates to debris flow susceptibility are SPI, rainfall, curvature, and watershed area, with contribution rates of 0.21, 0.18, 0.09, and 0.09, respectively. In contrast, factors with lower contribution rates include land use type, slope aspect, distance from faults, and rock mass.

Discussion

This study selects the RF model based on Bagging ensemble ideas, the XGBoost model based on Boosting ensemble ideas, and constructs Stacking models using RF and XGBoost as base models, namely Stacking-RF and Stacking-XGBoost, for mapping debris flow susceptibility in the upper Minjiang River Basin. The results indicate that high susceptibility areas are consistent with previous studies16;50;51. A comparison of model accuracy shows that the SPY-RF model performs better across different risk levels and exhibits the highest debris flow density in very high susceptibility areas. This model can more comprehensively reflect the debris flow risk across various susceptibility levels, making it suitable for a wider range of application scenarios. Previous methods for studying debris flow susceptibility largely relied on traditional statistical analyses or single machine learning algorithms. However, given the suddenness and rapidity of debris flow events, these methods often appear lagging in responding to emergencies, lacking sufficient accuracy and timeliness52,53,54. Additionally, due to data scarcity and complexity in debris flow predictions, single machine learning models often fail to capture the underlying patterns in the data, affecting prediction outcomes. In contrast, ensemble learning effectively integrates multiple algorithms, addressing complex interactions between features, reducing model bias and variance, thereby enhancing robustness and interpretability. By combining the predictions of multiple base models, ensemble learning provides a more reliable basis for decision-making. Thus, ensemble learning plays a crucial role in debris flow prediction, offering significant support for the early warning and prevention of geological disasters.

In the research on debris flow susceptibility evaluation, researchers typically focus on positive samples that have occurred or are likely to occur, while paying less attention to negative samples where disasters are not expected55,56,57. This study employs SPY technology to select reliable negative samples and constructs a foundational dataset for debris flow susceptibility evaluation. As shown in Table 4; Fig. 7, the SPY-XGBoost model can more accurately identify debris flow events in very high susceptibility areas compared to the XGBoost model, significantly reducing the likelihood of non-susceptible areas being misclassified as susceptible, thereby enhancing the reliability of risk assessment. Moreover, the model based on SPY technology outperforms the original model in terms of accuracy, precision, recall, and AUC value. This performance improvement is attributed to the advantages of SPY technology in negative sample selection and feature extraction, allowing the model to more precisely distinguish between susceptible and non-susceptible areas. Additionally, SPY technology enhances the representativeness of the dataset, improving the model’s learning capability, which further increases overall predictive reliability. These results further validate the effectiveness of using SPY technology for debris flow susceptibility assessment, providing important theoretical support for future research.

Stacking is an ensemble learning method that improves overall model performance by combining the predictions of multiple base learners. The fundamental idea is to train different types of models on the same dataset, and then use the outputs of these models as new features input into a higher-level model for final predictions58. In this study, Stacking models were constructed based on RF and XGBoost as base models, with RF and XGBoost serving as meta-models for Stacking-RF and Stacking-XGBoost, respectively. The results indicate that both Stacking-RF and Stacking-XGBoost models do not perform well. Several reasons may contribute to this finding: the output features of the base learners may exhibit high correlation, preventing the meta-model from extracting new information. The meta-model requires a sufficiently diverse set of features to learn effectively. If the output features of the base learners are similar, the learning effectiveness of the meta-model may be limited. Additionally, the insufficient number of debris flow data samples results in a small training dataset, making it challenging for the meta-model to learn effective combination patterns, which leads to a decline in performance. Therefore, in future research, it is important to select multiple base models based on feature and data types, considering the strengths and weaknesses of different algorithms, such as logistic regression, support vector machines, and KNN. Alternatively, more complex meta-models, such as neural networks, could be employed to ensure diversity among the models, aiming for improved predictive performance.

In the analysis of the contribution rates of debris flow evaluation indicators in this study, the SPI has the highest contribution rate, indicating that water flow intensity plays a crucial role in the occurrence of debris flows, as strong water flow can exacerbate soil erosion and the formation of debris flows. The contribution rate of rainfall follows closely, demonstrating the direct impact of rainfall on debris flow occurrence. Curvature and watershed area also significantly influence debris flow susceptibility, particularly as curvature reflects the effect of terrain undulation on debris flows, while watershed area affects the scale of water flow convergence. However, factors with lower contribution rates include land use type, slope aspect, distance from faults, and rock mass. The low contributions of land use type and slope aspect may relate to the relative uniformity of these factors in the study area or the prominence of other factors influencing debris flow occurrence. The contribution rates of distance from faults and rock mass are the lowest, suggesting that in this specific study area, the spatial distribution of faults and rock mass may not have formed significant patterns of debris flow susceptibility. If the occurrence of debris flows in the study area is primarily influenced by factors such as rainfall and topography, and the distribution of faults and rock mass does not exhibit clear impact characteristics, their contribution rates are naturally low. This analytical result provides important evidence for the selection and application of influencing factors in future research.

Conclusion

This study uses watershed units as evaluation units and proposes SPY technology to construct reliable non-debris flow samples. It employs the Random Forest (RF) model based on Bagging ensemble ideas, the XGBoost model based on Boosting ensemble ideas, and constructs Stacking models using RF and XGBoost as base models, with RF and XGBoost serving as meta-models for Stacking-RF and Stacking-XGBoost, respectively. The evaluation of debris flow susceptibility in the upper Minjiang River Basin, combined with watershed evaluation factors, yielded the following results:

-

(1)

This study establishes a debris flow susceptibility evaluation model for the Upper Minjiang River Basin, incorporating hyperparameter tuning and negative sample optimization. The AUC values for the RF, SPY-RF, XGBoost, SPY-XGBoost, Stacking-RF, SPY-Stacking-RF, Stacking-XGBoost, and SPY-Stacking-XGBoost models were 0.82, 0.93, 0.72, 0.87, 0.80, 0.92, 0.71, and 0.90, respectively. The SPY-RF model outperforms others across various risk levels, providing a more comprehensive representation of debris flow risk. Models utilizing SPY technology consistently perform better than their original counterparts, indicating that SPY significantly enhances the quality of negative samples, improving prediction accuracy and reliability.

-

(2)

Although the Stacking-RF and Stacking-XGBoost models were designed to improve predictive performance, the high correlation between the base learner outputs hindered the meta-model from extracting new information, leading to poor performance. Furthermore, the limited number of debris flow data samples restricted the model’s learning capacity, affecting the effective learning of combination patterns. Future research should focus on model diversity by selecting base models from various algorithms (such as logistic regression, support vector machines, and KNN) or by adopting more complex meta-models (such as neural networks) to ensure model diversity and enhance predictive performance and robustness.

-

(3)

The contribution analysis of debris flow evaluation indicators revealed that the top four contributing factors are SPI, rainfall, curvature, and area, while the bottom four are land use type, slope aspect, distance to faults, and rock mass. For debris flow risk management in the Upper Minjiang River Basin, attention should be focused on rainfall and topography, as these primary factors significantly influence debris flow occurrence, while other factors play a lesser role. Therefore, targeted optimization of rainfall management and terrain modification measures will be crucial for improving debris flow prevention and control in the watershed.

Data availability

The datasets used and analysed during the current study available from the corresponding author on reasonable request.

Change history

04 December 2025

The original online version of this Article was revised: The Funding section in the original version of this Article was omitted. The Funding section now reads: “The Open Fund of the State Key Laboratory of Geological Hazard Prevention and Control and Geological Environmental Protection (SKLGP2023K010); the Natural Science Foundation of Sichuan Province (2023NSFSC0809).” The original article has been corrected.

References

Cui, P. et al. Landslide-Dammed lake at Tangjiashan, Sichuan Province, China (Triggered by the Wenchuan earthquake, May 12, 2008): risk assessment, mitigation strategy, and lessons learned. Environ. Earth Sci. 65, 1055–1065 (2012).

Tran, T., Lakshmi, V. & Enhancing Human Resilience Against Climate Change. Assessment of hydroclimatic extremes and sea level rise impacts on the Eastern shore of Virginia, united States. Sci. Total Environ. 947, 174289 (2024).

Gang, L., Xinsheng, Z., Lihua, F. & Shichuan, L. Application of fuzzy mathematics in geological disaster prevention and treatment research for debris flow in Qinghe County. Chin. J. Geol. Hazard. Control. 25, 18–23 (2014).

Jiang, W., Rao, P., Cao, R., Tang, Z. & Chen, K. Comparative evaluation of geological disaster susceptibility using Multi-Regression methods and Spatial accuracy validation. J. Geogr. Sci. 27, 439–462 (2017).

Miao, Y., Huige, X. & Shiyu, H. Debris flow susceptibility assessment based on information value andlogistic regression coupled model:case of Shimian County,Sichuan Province. Yangtze River. 52, 107–114 (2021).

Nianqing, W. & Yong, Y. Method of Debris-Flow proneness evaluation based on fuzzy mathematics and Least-Square method. Journal Catastrophology 5–9 (2008).

Quanfu, N., Ming, L., Yuefeng, L. & Zunbin, F. Hazard assessment of debris flow in Lanzhou City of Gansu Province based on methods of grey relation and rough dependence. Chin. J. Geol. Hazard. Control. 30, 48–56 (2019).

Yonghong, Z., Taotao, G., Wei, T. & Guanghao, X. Evaluation of susceptibility to debris flow hazards based on geological big data. J. Comput. Appl. 38, 3319–3325 (2018).

Zhefeng, C. & Chaoxu, G. Sensitivity analysis of terrain factors of debris flow vulnerability based on fuzzy analytic hierarchy process. J. Inst. Disaster Prev. 25, 21–30 (2023).

Zhichao, C., Junhao, W., Chuanfeng, C. & Guoying, P. Evaluation of the susceptibility of debris flow in Badan gully of Dongxiang County of Gansu based on Ahp and fuzzy mathematics. Chin. J. Geol. Hazard. Control. 31, 44–50 (2020).

Haili, Z. District of Haidong City evaluation on susceptibility of debris flow of Miaogou gully in Ledu. Soil Water Conserv. China 55–58 (2015).

Yanqin, X., Shuying, B. & Yongming, X. Comparative analysis of debris flow susceptibility assessment based Ontwo methods in Panxi district. Res. Soil. Water Conserv. 25, 285–291 (2018).

Li, Y., Xu, L., Shang, Y. & Chen, S. Debris flow susceptibility evaluation in meizoseismal region: A case study in Jiuzhaigou, China. J. Earth Sci. 35, 263–279 (2024).

Li, Y. et al. Debris flow susceptibility mapping in alpine Canyon region: A case study of Nujiang Prefecture. Bull Eng. Geol. Environ 83, (2024).

Pengning, G., Huige, X., Congjiang, L. & Yuxing, W. Methods for evaluating debris flow susceptibility based on Ood generalization verification and deep fully connected neural networks. Adv. Eng. Sci. 56, 182–193 (2024).

Xiong, K. et al. Comparison of different machine learning methods for debris flow susceptibility mapping: A case study in the Sichuan Province, China. Remote Sens 12, (2020).

Zhang, Y., Ge, T., Tian, W. & Liou, Y. Debris flow susceptibility mapping using Machine-Learning techniques in Shigatse area, China. Remote Sens 11, (2019).

Zhi, L., Ningsheng, C., Runing, H. & Mingyang, W. Susceptibility assessment of debris flow disaster based on machine learning models in the loess area along Yili Valley. Chin. J. Geol. Hazard. Control. 35, 129–140 (2024).

Marshall, S. R. O., Tran, T., Tapas, M. R. & Nguyen, B. Q. And Integrating artificial intelligence and machine learning in hydrological modeling for sustainable resource management. Int J. River Basin Manag 1–17 (2025).

Merghadi, A. et al. Machine learning methods for landslide susceptibility studies: A comparative overview of algorithm performance. Earth-Sci Rev 207, (2020).

Dou, J. et al. Improved landslide assessment using support vector machine with bagging, boosting, and stacking ensemble machine learning framework in a mountainous watershed. Japan Landslides. 17, 641–658 (2020).

Zeng, T. et al. Ensemble learning framework for landslide susceptibility mapping: different basic classifier and ensemble strategy. Geosci Front 14, (2023).

Haoyuan, H., Desheng, W. & Axin, Z. A new training data sampling method for machine Learning-Baseclandslide susceptibility mapping. Acta Geogr. Sin. 79, 1718–1736 (2024).

Guodong, L., Shengwu, Q., Fanqi, M. & Feng, G. Applieation of geographie information similarity based Absenee sampling method to debris flow susceptibility mapping. J. Eng. Geol. 31, 526–537 (2023).

Xiaolong, L., Guohu, S., Lingzhi, X. & Liang, L. Hazard analysis of debris flows based on different evaluation units and disaster entropy:a case study in Wudu section of the Bailong river basin. Chin. J. Geol. Hazard. Control. 32, 107–115 (2021).

Yongan, X., Yujie, W., Jingcong, Z. & Haochen, L. Study of slope geological hazard susceptibility valuation with Smal sample based on Cf and Svm. J. Taiyuan Univ. Technol. 53, 672–681 (2022).

Ke, T. et al. in Building High-Performance Classifiers Using Positive and Unlabeled Examples for Text Classification. 187–195 (eds Wang, J., Yen, G. G. & Polycarpou, M. M.) (Springer Berlin Heidelberg, 2012).

Lee, W. S. & Liu, B. Learning with Positive and Unlabeled Examples Using Weighted Logistic Regression. International Conference on Machine Learning, (2003).

Zhu, A. et al. A Similarity-Based approach to sampling absence data for landslide susceptibility mapping using data-Driven methods. Catena 183, 104188 (2019).

Liang, Z. et al. A Hybrid Model Consisting of Supervised and Unsupervised Learning for Landslide Susceptibility Mapping. Remote Sensing, (2021).

Chao, Z., Zhenyu, C., Fenghuan, S. & Zhen, Z. A. Dataset of High-Precision aerial imagery and interpretation of landslide and debris flow disaster in Sichuan and surrounding areas between 2008 and 2020. China Sci. Data. 7, 195–205 (2022).

Palamakumbure, D., Flentje, P. & Stirling, D. Consideration of optimal pixel resolution in deriving landslide susceptibility zoning within the Sydney basin, new South Wales, Australia. Comput. Geosci. 82, 13–22 (2015).

Rossi, M., Guzzetti, F., Reichenbach, P., Mondini, A. C. & Peruccacci, S. Optimal landslide susceptibility zonation based on multiple forecasts. Geomorphology 114, 129–142 (2010).

Shanjun, L., Shiyao, L., Lianhuan, W. & Dongling, L. Debris flow susceptibility and hazard assessment in Fushun City based on hydrological response units. J. Northeastern University(Natural Science). 45, 713–720 (2024).

You, T., Hai, H., Bo, G. & Long, C. Susceptibility evaluation for debris flow in different watershed unitsconsidering Freeze-Thaw Erosion type sources: taking Gonjo area of easterntibet as an example. J. Glaciology Geocryology. 46, 40–51 (2024).

Runing, H., Zhi, L., Ningsheng, C. & Shufeng, T. Modeling of debris flow susceptibility assessment in Tianshan based on watershed unit and stacking ensemble algorithm. Earth Sci. 48, 1892–1907 (2023).

Hong, H. Assessing landslide susceptibility based on hybrid multilayer perceptron with ensemble learning. Bull Eng. Geol. Environ 82, (2023).

Shanshan, R. & Xiaopeng, L. Debris flow susceptibility evaluation of Liangshan Prefecture based on the Rsiv-Rf model. Bull. Geol. Sci. Technol. 43, 275–287 (2024).

Jiayi, Z., Shujun, T., Kai, L. & Pengpeng, H. Susceptibility assessment of debris flow in the upper reaches of the Minjiang river before and after the Wenchuan earthquake. Chin. J. Geol. Hazard. Control. 35, 51–59 (2024).

Cao, J. et al. Multi-Geohazards susceptibility mapping based on machine Learning-a case study in Jiuzhaigou, China. Nat. Hazards. 102, 851–871 (2020).

Qiu, C., Su, L., Pasuto, A., Bossi, G. & Geng, X. Economic risk assessment of future debris flows by machine learning method. Int. J. Disaster Risk Sci. 15, 149–164 (2024).

Zhang, J. et al. Insights into Geospatial heterogeneity of landslide susceptibility based on the Shap-Xgboost model. J Environ. Manage 332, (2023).

Arabameri, A. et al. Drought risk assessment: integrating meteorological, hydrological, agricultural and Socio-Economic factors using ensemble models and Geospatial techniques. Geocarto Int. 37, 6087–6115 (2022).

Gao, B. et al. Landslide risk evaluation in Shenzhen based on stacking ensemble learning and Insar. Ieee J. Sel. Top. Appl. Earth Observ Remote Sens. 16, 1–18 (2023).

Yao, J. et al. Application of a Two-Step sampling strategy based on deep neural network for landslide susceptibility mapping. Bull. Eng. Geol. Environ. 81, 148 (2022).

Kun, L., Junsan, Z., Yiling, L. & Ke, C. Assessment of debris flow susceptibility in Dongchuan based on Rf and Svm models. J. Yunnan University(Natural Sci. Edition). 44, 107–115 (2022).

Pinzeng, R., Ran, C. & Weiguo, J. Susceptibility evaluation of geological disasters in Yunnan Province based on geographically weighted regression model. J. Nat. Disasters. 26, 134–143 (2017).

Erener, A., Sivas, A. A., Selcuk-Kestel, A. S. & Düzgün, H. S. Analysis of training sample selection strategies for Regression-Based quantitative landslide susceptibility mapping methods. Comput. Geosci. 104, 62–74 (2017).

Ling, G., Hua, X. & Pengxiang, S. Susceptibility evaluation of debris flow in Gansu Province based on La-Graphcan. Bulletin Geol. Sci. Technology (2024).

Di, B. et al. Assessing susceptibility of debris flow in Southwest China using gradient boosting machine. Sci Rep 9, (2019).

Ling, S., Zhao, S., Huang, J. & Zhang, X. Landslide susceptibility assessment using statistical and machine learning techniques: A case study in the upper reaches of the Minjiang river, Southwestern China. Front Earth Sci 10, (2022).

Chang, M., Tang, C., Zhang, D. & Ma, G. Debris flow susceptibility assessment using a probabilistic approach: A case study in the Longchi area, Sichuan Province, China. J. Mt. Sci. 11, 1001–1014 (2014).

Dash, R. K., Falae, P. O. & Kanungo, D. P. Debris flow susceptibility zonation using statistical models in parts of Northwest Indian Himalayas-Implementation, validation, and comparative evaluation. Nat. Hazards. 111, 2011–2058 (2022).

Chen, J., Li, Y., Zhou, W., Iqbal, J. & Cui, Z. Debris-Flow susceptibility assessment model and its application in semiarid mountainous areas of the southeastern Tibetan plateau. Nat Hazards Rev 18, (2017).

Ji, F., Dai, Z. & Li, R. A. Multivariate statistical method for susceptibility analysis of debris flow in Southwestern China. Nat. Hazards Earth Syst. Sci. 20, 1321–1334 (2020).

Gao, R., Wang, C. & Liang, Z. Comparison of different sampling strategies for debris flow susceptibility mapping: A case study using the centroids of the scarp area, flowing area and accumulation area of debris flow watersheds. J. Mt. Sci. 18, 1476–1488 (2021).

Qin, S. et al. Establishing a Gis-Based evaluation method considering Spatial heterogeneity for debris flow susceptibility mapping at the regional scale. Nat. Hazards. 114, 2709–2738 (2022).

58. Yutao, C., Ning, L., Chang, M. & Xing, F. Study On Debris Flow Susceptibility Based On Spy-Rf Model-a Case Study of the Upper Minjiang River Basin. Chinese J. Geo. Hazard Control. 1–19 (2025).

Acknowledgements

A combination of patience and time has produced the desired results. I am incredibly appreciative of my brothers’ and teachers’ selfless assistance. Additionally, Su Lingxi deserves special recognition for her assistance, which was as kind and unreserved as family. Her support and encouragement have been a continual source of fortitude. In addition, I want to sincerely thank the funding support that made this study possible by providing the resources it needed.

Funding

The Open Fund of the State Key Laboratory of Geological Hazard Prevention and Control and Geological Environmental Protection (SKLGP2023K010); the Natural Science Foundation of Sichuan Province (2023NSFSC0809).

Author information

Authors and Affiliations

Contributions

Y.C. wrote the main manuscript text.N.L. processed the data. F.X. and H.X. checked and edited the manuscript. Z.C.provided the investigation. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, Y., Li, N., Xing, F. et al. Study on debris flow vulnerability of ensemble learning model based on spy technology A case study of upper Minjiang river basin. Sci Rep 15, 22480 (2025). https://doi.org/10.1038/s41598-025-03479-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-03479-6

Keywords

This article is cited by

-

An efficient ensemble learning model for time-dependent scour depth estimation

The Journal of Supercomputing (2025)

-

Comparative multi-criteria decision-making approaches for landslide susceptibility mapping in Khagrachhari district of southeastern Bangladesh

Discover Geoscience (2025)