Abstract

The exponential growth of Artificial Intelligence (AI) has led to the emergence of cutting-edge methods and a plethora of new tools for media editing. These tools have also facilitated the spread of false information, propaganda, and harassment through the creation of sophisticated fake video and audio content, commonly referred to as deepfakes. Although several methods exist for identifying deepfake videos, social media videos have received less attention. This paper presents the 59-layer Fake Dense Inception Network (FDINet59), designed to detect deepfake content. A dataset of face crops generated by Multi-Task Cascaded Convolutional Networks (MTCNN) was used to train the system, and its ability to spot deepfakes was then evaluated. On the training dataset, FDINet59 achieves a maximum accuracy of 70.02% with a log loss of 0.688. We also evaluated the ability of FDINet59 to detect deepfake content generated by auto-encoders and Generative Adversarial Networks (GANs), the techniques commonly used to create deepfake videos; in this evaluation, FDINet59 is 94.95% accurate with a log loss of 0.205. The proposed model can play an important role in preventing the spread of deceptive deepfake videos on social media. The development of more sophisticated deepfake detection algorithms is crucial to counter the negative impacts of this technology on society.


Introduction

With the global proliferation of internet access, the dissemination of misinformation has also increased. The general populace believes that propaganda can be disseminated through online media. As digital content is increasingly modified with sophisticated trained AI models, distinguishing fact from fiction by mere observation may become challenging. The reliability of information is a foremost necessity worldwide at present. An escalating volume of counterfeit recordings and images now proliferates online, making it increasingly difficult to discern authenticity by visual inspection alone; this phenomenon is referred to as deepfakes. The media have designated such false news as deepfakes1. Because mere exposure is no longer sufficient to establish justified confidence in what is seen, deepfakes have undermined consumers’ trust in the media. They may inflict misery and suffering on targeted individuals, exacerbate the dissemination of misinformation and incitement to hatred, and potentially ignite political strife, public unrest, violence, or even warfare. This is particularly critical at present, as deepfake technology is increasingly accessible and social media sites can swiftly propagate fraudulent content. AI-based deepfake technologies can generate images that appear to have been captured by humans. In recent years, the proliferation of digital media has led to the widespread online dissemination of numerous deepfake videos. Deepfakes are produced with deep learning techniques, namely GANs and auto-encoders, which amalgamate and overlay various media types (videos and pictures)2,3.

Although deepfake technology offers certain benefits, it also presents significant drawbacks, including the production of non-consensual explicit content as a form of retribution, the distribution of misleading videos to damage a celebrity’s reputation, the fostering of political bias, the spread of false propaganda, and other unethical practices. The first documented deepfake video emerged in 2017, when a Reddit user4 replaced celebrity faces in pornographic content, prompting the introduction of various algorithms for detecting such videos. Certain strategies employ convolutional networks to detect visual anomalies within individual frames, whereas others use recurrent networks to discern temporal inconsistencies among distinct facial frames in videos.

During the COVID-19 pandemic, in 2021, Cadbury India created a deepfake video5 of a famous Indian movie actor wishing everyone a Happy Diwali. According to the Cadbury website, the firm worked with the platform rephrase.ai, which specialises in producing commercials with lifelike detail and studio-quality narration using AI techniques and synthetic voices. In exchange for providing some basic information about their business, members of the ad’s target audience could create their own personalised ads using AI by visiting a dedicated website. Using AI and machine learning, Cadbury claims, the actor was digitally re-created to make it appear as though he was announcing the name of a local business or brand. To counter such harmful uses of deepfakes, several scientific approaches and algorithms have been proposed, such as MesoNet, GAN-based methods, and phoneme-viseme mismatch detection. These tools focus primarily on the subject’s face to detect facial manipulation and determine whether the video has been altered. They mainly extract key facial features, such as eye blinking, facial expressions, and eye movements, compare these features, and, based on the result, predict whether the video is manipulated. For recognizing deepfakes across different datasets, transformer-based designs are more effective than CNN-based models; when identifying deepfakes from various sources, transformer-based models tend to be more accurate and generalizable. This paper discusses deepfake detection techniques. Deepfake videos are typically generated using GAN and auto-encoder models. The proposed model enables effective differentiation between manipulated or distorted videos and authentic video clips.

The rest of the paper is structured as follows. Section 2 reviews related work in the field. Section 3 gives a detailed description of deepfakes. Deepfake creation and detection are discussed in Sect. 4. The proposed model is presented in Sect. 5. Section 6 presents the results and discussion of the proposed work. The last section concludes the paper and outlines future directions.

Related work

Technically speaking, it is not easy to spot fake or tampered content. The field of media forensics, which focuses on uncovering image and video fraud, has come a long way in the past twenty years. Proposed solutions for video forensics frequently address elementary alterations such as copy-move6, frame deletion or insertion7, and shifting interpolation8. In contrast, auto-encoders and GANs have greatly enhanced the quality of fabricated media, and such fake videos are often difficult to spot even with standard detection tools. Researchers have tested stacked auto-encoders, convolutional neural networks, long short-term memory networks, and GANs in their models for identifying video manipulation.

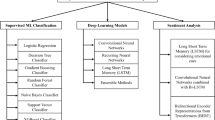

The authors of9 show how to use a one-class variational auto-encoder to classify deepfakes. Their model needs only real-world examples for training. The FaceForensics dataset is used in this instance, and face detection and alignment with MTCNN (Multi-task Cascaded CNN, a face-detection tool) are applied to subsets of the dataset’s frames. The authors of10 demonstrated image feature detectors for deepfake videos and classified the videos with a Support Vector Machine (SVM) and regression. Their 98-video archive contains both fabricated and authentic clips, each an MP4 file lasting 30 s. To train the model to identify fake videos, feature points extracted from the videos with conventional edge feature detectors are used as inputs. The author of11 presents a deep learning method for identifying fake content made with the Deepfake and Face2Face algorithms, analysing it at a mesoscopic level with few layers. To achieve high generalisation performance, the Meso-4 network uses a classifier with a single hidden layer and ReLU activation; its first four layers are sequential convolutions with pooling. In the MesoInception-4 network, the first two convolutional layers are constructed using a modified version of the inception module. A recent paper12 uses a convolutional long short-term memory network to identify deepfake videos by analysing visual forgeries. Deepfake videos with high levels of compression have been detected with the help of a triplet architecture13. Recent work14 has employed sharp multiple instance learning to identify partial face attacks in deepfake videos. Detector performance has also been enhanced by grouping face and non-face images and then eliminating the latter15.
Some deep learning approaches rely on visual artefacts, while others use temporal features. Visual artefact-based works analyse videos frame by frame: since each frame is unique, the manipulated region of an image looks different from frame to frame to varying degrees. Because CNN models can identify these artefacts, such characteristics are first extracted and then fed into a deep learning classifier such as Inception V316, ResNet15217, VGG1618, or DenseNet, among others. Detection methods based on eye-blinking rate19, on differences in head pose between original and fake videos20, and on artefacts of the eyes, teeth, and face21 have all been built upon earlier research. Another recent study22 likewise uses the blinking pattern to draw conclusions about the human brain. A comprehensive method based on capsule networks has been created to identify faked photos and videos23, in which a VGG19 network extracts latent features that a capsule network uses to detect various spoofs, replay attacks, and so on. In24, researchers investigate the use of two inception modules, two max-pooling layers, and two regular convolution layers, a method best described as operating at the mesoscopic level. Emotion embeddings have been used to identify fake videos by analysing the audio and visual components of a clip25. In25, the authors also assessed pre-existing methods and conducted a comparative study of several methodologies. Many more important contributions to the deepfake area are presented in26,27.

The FDINet59 architecture is specifically designed for the detection of deepfakes and has been shown to outperform other state-of-the-art deepfake detection methods. FDINet59 is effective mainly because it has been trained on a dataset of real and deepfake faces cropped with the MTCNN algorithm, a popular face detection and alignment tool in computer vision. In28, the authors examined how the psychological factors of abstinence self-efficacy, locus of control, and social support interact in substance use disorder recovery, revealing their combined impact on rehabilitation success. In neurodiagnostics, the authors of29 proposed a multi-view deep learning model using EEG signals to enhance schizophrenia detection accuracy. In agriculture, the authors of30 systematically reviewed AI-based methods for diagnosing rice crop diseases and nutrient deficiencies, emphasizing the benefits for precision farming. Additionally, the authors of31 improved depression detection from electronic health records (EHRs) through optimal feature selection, enhancing model performance and interpretability32. Table 1 provides a concise overview of the relevant literature.

Overview of deepfake

The term deepfake, a combination of “deep learning” and “fake”, denotes synthetic media, especially videos, created by AI methods to alter or construct an individual’s appearance, voice, or behavior. Deepfakes often entail overlaying a target individual’s face onto another person’s body in a source video, resulting in a convincingly realistic but wholly fictitious depiction42. These technologies use deep learning frameworks, such as auto-encoders and GANs, to create persuasive alterations of audiovisual content43. Deepfakes can be classified into several categories, such as face swapping, lip syncing, and manikin face deepfakes. In lip-sync deepfakes, the subject’s lip movements are artificially aligned with unrelated audio, producing the illusion that they are conveying a certain message44. The manikin face variant animates a passive subject to replicate the facial expressions, eye movements, and head gestures of an active performer, producing a synthesized yet ostensibly lifelike video. Whereas earlier methods of video manipulation depended on conventional visual effects or Computer Generated Imagery (CGI), the emergence of deep learning has markedly improved the realism and accessibility of deepfake production. GAN-based models learn to mimic facial geometry and expressions through training on extensive datasets of video frames and photos45. Public figures, such as politicians, celebrities, and news presenters, have a significant online presence, which makes them more susceptible to such manipulations. The ramifications of deepfakes transcend individual harm and extend into national and international security. For example, manipulated footage of political figures making incendiary comments might skew public opinion and undermine diplomatic ties46.
In military and intelligence applications, counterfeit satellite imagery, such as a fabricated strategic bridge or other infrastructure, can deceive decision-makers and potentially lead to tactical errors. Moreover, deepfakes have been employed to disseminate misinformation, influence financial markets, and disrupt political processes, thereby presenting an escalating threat to democratic institutions and public confidence. Given the swift progress and potential abuse of deepfake technology, the development of effective detection methods and the associated ethical considerations have emerged as crucial research topics in computer vision, cybersecurity, and media forensics29.

Scope of deepfake

Deepfakes are computer-generated media that can be used to create realistic and often deceptive videos, images, and audio recordings. Potential uses of deepfakes include education and training, blackmail, politics, art, acting, movies, social media, and advertising and marketing.

Associated challenges with deepfake

Deepfakes pose dangers in numerous ways, such as the use of deepfake audio for financial extortion, fraudsters targeting celebrities and government officials to spread fabricated statements, and the creation of non-consensual pornography. The spread of deepfakes began with fake pornographic videos, and numerous female celebrities fell victim to deepfake pornography, among them Daisy Ridley, Jennifer Lawrence, Emma Watson, and Gal Gadot47. The phenomenon also affected women associated with heads of state, such as Michelle Obama, Ivanka Trump, and Kate Middleton. In 2019, a desktop application called DeepNude was released that could digitally remove clothing from images of women. It was soon taken down, but copies of the application can still be found circulating on the internet. The next group affected by deepfakes is politicians48. There were videos of President Obama insulting President Trump. In another video, Nancy Pelosi’s speech was manipulated to make the audience believe that she was intoxicated. In yet another video, President Trump was shown taunting Belgium for its membership in the Paris Climate Agreement.

Deepfake creation and detection

Owing to the high quality of manipulated videos and the accessibility of the corresponding software, deepfakes have grown in popularity. To distinguish between real and fraudulent videos, deepfake detection typically relies on classifiers. This section discusses in detail how deepfake videos are created and how they can be detected.

Deepfake creation

The majority of deepfakes employ a hybrid machine learning approach consisting of auto-encoders and GANs. A GAN uses a generator network and a discriminator network that compete continually with each other, which allows both to improve rapidly. The generator attempts to produce a realistic image, and the discriminator attempts to decide whether that image is a deepfake. If the generator fools the discriminator, the discriminator uses the accumulated data to become a better judge. Similarly, if the discriminator identifies the generator’s image as fake, the generator learns to produce a more convincing fake. This cycle continues indefinitely.

Deep auto-encoders, a type of deep network with this capability, have found widespread use in dimensionality reduction and image compression. A Reddit user produced FakeApp, the first deepfake project, by pairing an encoder with a decoder. In this method, the encoder extracts latent features from face images, while the decoder reconstructs those images. Swapping faces between source and target images requires two encoder-decoder pairs, each trained on a different set of face images, with the encoder’s parameters shared between the two pairs; in other words, both pairs use the same encoder network. This strategy allows the common encoder to discover and learn the similarity between the two sets of face images, which is relatively unchallenging because most human faces share similar features, such as the positions of the eyes, nose, and mouth.
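The shared-encoder idea can be illustrated with a toy sketch in pure Python. It assumes tiny linear "networks" with random placeholder weights and omits training entirely; the names `shared_encoder`, `decoder_a`, and `decoder_b` are hypothetical. The point is only the data flow: both identities pass through one encoder, and a swap decodes person A's code with person B's decoder.

```python
import random


def make_linear(in_dim, out_dim, rng):
    """Random (out_dim x in_dim) weight matrix standing in for a trained layer."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(out_dim)]


def apply(weights, vec):
    """One linear layer (matrix-vector product, no bias or activation)."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


rng = random.Random(0)
FACE_DIM, CODE_DIM = 8, 3  # toy sizes; real inputs would be face images

shared_encoder = make_linear(FACE_DIM, CODE_DIM, rng)  # one encoder for both identities
decoder_a = make_linear(CODE_DIM, FACE_DIM, rng)       # would be trained on faces of person A
decoder_b = make_linear(CODE_DIM, FACE_DIM, rng)       # would be trained on faces of person B


def reconstruct_a(face_a):
    """Normal training path for identity A: shared encoder, then A's decoder."""
    return apply(decoder_a, apply(shared_encoder, face_a))


def swap_a_to_b(face_a):
    """Face swap: encode A's face with the shared encoder, decode with B's decoder."""
    return apply(decoder_b, apply(shared_encoder, face_a))


face_a = [rng.random() for _ in range(FACE_DIM)]
swapped = swap_a_to_b(face_a)
print(len(swapped))  # 8
```

Because the encoder is shared, the code captures identity-independent structure (pose, expression), and the choice of decoder determines whose face is rendered.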

Auto-encoders

Auto-encoders are a subset of neural networks that replicate their input as faithfully as possible by first compressing it into a meaningful representation and then decoding it. In this section, we look at common types of auto-encoders and describe various auto-encoder uses and implementations. First introduced as a neural architecture trained to reconstruct its own input, auto-encoders have since become increasingly popular. Their basic goal is to learn, without supervision, an informative representation of the data that can then be used for other purposes, such as clustering. The task is to learn the encoding and decoding functions.
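This learning task can be written compactly as follows. This is a sketch in standard notation: the encoder and decoder names \(A\) and \(B\) and the dimensions \(n\) and \(p\) are our own labels, while \(E_d\) and \(\phi\) are the symbols defined in the next paragraph.

\[
A:\mathbb{R}^{n}\rightarrow\mathbb{R}^{p},\qquad B:\mathbb{R}^{p}\rightarrow\mathbb{R}^{n},\qquad
\arg\min_{A,\,B}\; E_d\big[\phi\big(x,\; B(A(x))\big)\big]
\]

With the \(\ell_2\) choice, written here in the squared form used for MSE training, \(\phi(x,\hat{x}) = \lVert x-\hat{x}\rVert_2^2\).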

Here, \(E_d\) is the expectation over the entire distribution of \(x\), and \(\phi\) is the reconstruction loss function, which quantifies how far the output deviates from the input. Standard practice is to use the \(\ell_2\)-norm for the latter, as shown in Eq. 1. An auto-encoder can be thought of as having three parts: the encoder, the code, and the decoder. The encoder compresses the data into a code, which the decoder decodes to recover the original data. In modern auto-encoders, the encoder and decoder are feedforward neural networks. The code is a single layer of an Artificial Neural Network (ANN) with a dimensionality of our choice49. When setting up an auto-encoder, we adjust a hyperparameter called the code size, which determines how many nodes make up the code layer. The encoder, a fully connected ANN, receives the input and generates the code. The output is then generated from the code alone by a decoder with a similar ANN structure50. The goal is for the output to be identical to the input. The decoder’s structure is usually a mirror image of the encoder’s; this is not required, but it is common practice. The only constraint is that the input and output must have the same dimensions; anything in between can be chosen freely. Four hyperparameters need to be set before training an auto-encoder:
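To make Eq. 1 concrete, the following pure-Python sketch trains a linear auto-encoder (a fully connected encoder and decoder without activations) by gradient descent on the squared \(\ell_2\) reconstruction error. The toy data, code size, learning rate, and epoch count are assumptions chosen for illustration; a real face auto-encoder would use convolutional layers and a deep learning framework.

```python
import random


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def train_linear_autoencoder(data, code_size, lr=0.1, epochs=300, seed=0):
    """Full-batch gradient descent on the mean squared reconstruction error of
    a linear auto-encoder: code z = W_enc x, reconstruction x_hat = W_dec z."""
    rng = random.Random(seed)
    d, n = len(data[0]), len(data)
    w_enc = [[rng.gauss(0, 0.5) for _ in range(d)] for _ in range(code_size)]
    w_dec = [[rng.gauss(0, 0.5) for _ in range(code_size)] for _ in range(d)]
    history = []
    for _ in range(epochs):
        g_enc = [[0.0] * d for _ in range(code_size)]
        g_dec = [[0.0] * code_size for _ in range(d)]
        loss = 0.0
        for x in data:
            z = matvec(w_enc, x)       # encoder: input -> code
            x_hat = matvec(w_dec, z)   # decoder: code -> reconstruction
            err = [xh - xi for xh, xi in zip(x_hat, x)]
            loss += sum(e * e for e in err) / d
            # Backpropagation: dL/dz_j = (2/d) * sum_i W_dec[i][j] * err_i
            dz = [2 * sum(w_dec[i][j] * err[i] for i in range(d)) / d
                  for j in range(code_size)]
            for i in range(d):
                for j in range(code_size):
                    g_dec[i][j] += 2 * err[i] * z[j] / d
            for j in range(code_size):
                for k in range(d):
                    g_enc[j][k] += dz[j] * x[k]
        history.append(loss / n)  # loss before this epoch's update
        for i in range(d):
            for j in range(code_size):
                w_dec[i][j] -= lr * g_dec[i][j] / n
        for j in range(code_size):
            for k in range(d):
                w_enc[j][k] -= lr * g_enc[j][k] / n
    return w_enc, w_dec, history


# Toy data: 4-dimensional points lying in a 2-dimensional subspace,
# so a code size of 2 can in principle reconstruct them exactly.
rng = random.Random(1)
u, v = [1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -1.0]
data = []
for _ in range(20):
    a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    data.append([a * ui + b * vi for ui, vi in zip(u, v)])

w_enc, w_dec, history = train_linear_autoencoder(data, code_size=2)
print(f"MSE: start {history[0]:.4f}, end {history[-1]:.4f}")
```

The code layer here has 2 nodes for 4-dimensional inputs, so the network is forced to learn the subspace the data lives in rather than copying the input.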

Code size: the number of nodes in the middle (code) layer. A smaller code size results in more compression.

Number of layers: the auto-encoder can be as deep as we like. In our case, the encoder and decoder each consist of two layers, excluding the input and output. Because the layers are stacked progressively, the architecture we develop is a stacked auto-encoder51.

Number of nodes per layer: stacked auto-encoders typically take the form of a sandwich, in which the number of nodes per layer decreases with each successive encoder layer and increases again through the decoder. The encoder and decoder are usually symmetric in their layer structure, but this is not necessary, and we have complete control over these parameters.

Loss function: we use either the Mean Squared Error (MSE) or binary cross-entropy. Cross-entropy is the usual choice when the data values lie in the range [0, 1]; otherwise, the mean squared error is used. Auto-encoders are trained with backpropagation in the same manner as other ANNs52.
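The layer-size and loss-function choices can be sketched in a few lines. The 784, 256, 128, 32 sizes below are illustrative assumptions, not the authors' configuration; `stacked_layer_sizes` is a hypothetical helper that mirrors the encoder into the decoder to produce the sandwich shape, and the two losses follow their usual definitions.

```python
import math


def stacked_layer_sizes(input_dim, hidden_sizes, code_size):
    """Symmetric 'sandwich': the encoder shrinks toward the code, the decoder mirrors it."""
    encoder = [input_dim] + list(hidden_sizes) + [code_size]
    decoder = list(reversed(hidden_sizes)) + [input_dim]
    return encoder + decoder


def mse(targets, outputs):
    """Mean squared error; suitable for unbounded data."""
    return sum((t - o) ** 2 for t, o in zip(targets, outputs)) / len(targets)


def binary_cross_entropy(targets, outputs, eps=1e-12):
    """Mean binary cross-entropy for values in [0, 1]; eps guards against log(0)."""
    total = 0.0
    for t, o in zip(targets, outputs):
        o = min(max(o, eps), 1 - eps)
        total += -(t * math.log(o) + (1 - t) * math.log(1 - o))
    return total / len(targets)


print(stacked_layer_sizes(784, [256, 128], 32))
# [784, 256, 128, 32, 128, 256, 784]
```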

When a fake video is made with auto-encoders, certain inconsistencies are introduced into it, because the source and target videos were recorded in different settings using different equipment. During training, the encoder learns both the mean and the standard deviation of the latent space distribution. A latent vector is then sampled using the learned mean and standard deviation together with random noise, and the decoder attempts to reconstruct the original source image from it. The decoder will produce an image, but it will not be an exact replica of the original; small details such as the skin tone or the position of a glasses frame’s edge can be distorted in the fake image. Through meticulous data processing, we were able to exploit these variations in our model. As a result, the feature vector generated by the feature extractor is reliable.
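The sampling step described above corresponds to the reparameterization used in variational auto-encoders, \(z = \mu + \sigma \odot \epsilon\) with \(\epsilon \sim \mathcal{N}(0, I)\). A minimal sketch, assuming the encoder outputs a mean and a log-variance per latent dimension (a common parameterization, not one stated in the source); `sample_latent` is a hypothetical helper.

```python
import math
import random


def sample_latent(mu, log_var, rng=None, eps=None):
    """Reparameterization trick: z = mu + sigma * eps, sigma = exp(0.5 * log_var).
    eps is drawn from N(0, 1) unless supplied explicitly (useful for testing)."""
    if eps is None:
        rng = rng or random.Random()
        eps = [rng.gauss(0.0, 1.0) for _ in mu]
    return [m + math.exp(0.5 * lv) * e for m, lv, e in zip(mu, log_var, eps)]


# Two latent dimensions with sigma = 1 and sigma = 2, and fixed noise.
mu, log_var = [0.2, -1.0], [0.0, math.log(4.0)]
print(sample_latent(mu, log_var, eps=[1.0, 0.5]))
```

The random noise is what makes each decoded image differ slightly from the source, which is one origin of the small inconsistencies described above.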

GAN

A GAN is made up of two neural networks. The first, called the generator, produces new data points from random noise drawn from a uniform distribution53. Its objective is to generate fake outputs that cannot be distinguished from real data. The second network, the discriminator, is responsible for distinguishing real data from the fake data produced by the generator. A GAN is thus a deep learning system that simultaneously trains a generative model, denoted G, and a discriminative model, denoted D. The goal of G is to learn a target data distribution (for example, the distribution of pixel intensities in images)54. D guides the training of G by comparing the data generated by G with genuine data, thereby helping G learn the distribution that underlies the genuine data. In practice, a GAN is typically built from a pair of simple neural networks, although in principle the models can be any generative-discriminative pair55.

Deep Convolutional GANs (DCGANs) are a type of convolutional GAN that imposes architectural and topological constraints to stabilize training. In a DCGAN, CNNs serve as both the generator and the discriminator. In our work, the generator is a CNN that contains three downsampling layers, six residual layers, and three upsampling layers, and the discriminator is another CNN that contains four convolutional layers56.
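To see how the three downsampling and three upsampling layers interact with the input size, the spatial dimensions can be traced with the standard output-size formulas for strided and transposed convolutions. The kernel size 4, stride 2, and padding 1 are assumptions, since the source does not give them, and the six residual layers are assumed to preserve spatial size.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Spatial size after a strided convolution (floor division)."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, padding=1):
    """Spatial size after a transposed convolution with no output padding."""
    return (size - 1) * stride - 2 * padding + kernel


def trace_generator(size, n_down=3, n_res=6, n_up=3):
    """List of spatial sizes after each generator layer, starting with the input."""
    sizes = [size]
    for _ in range(n_down):
        sizes.append(conv_out(sizes[-1]))
    for _ in range(n_res):
        sizes.append(sizes[-1])  # residual blocks with 'same' padding keep the size
    for _ in range(n_up):
        sizes.append(deconv_out(sizes[-1]))
    return sizes


print(trace_generator(128)[-1])  # 128
print(trace_generator(150)[-1])  # 144
```

Under these assumptions a 128 × 128 input round-trips exactly, while a 150 × 150 input comes back as 144 × 144 because the floor division in the downsampling path discards pixels; an implementation would need cropping, padding, or output padding to compensate.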

GAN objective function

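As a binary classifier, the discriminator should output a low probability for fake data and a high probability for real data. We first fix some notation: let \(S\) denote a real data sample, \(K\) a random noise vector given to the generator, \(G(K)\) the resulting generated sample, and \(D(\cdot)\) the probability the discriminator assigns to its input being real.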

The scores \(D(S)\) and \(D(G(K))\) therefore range from 0 to 1. The goal is to build a discriminator that assigns high scores to authentic data and low scores to fabricated data, and a generator whose fabricated data receive scores as high as possible. These objectives are expressed by the following formulas, shown in Eqs. 2 and 3.

At Discriminator D,

The total cost is

At Generator G,

The total cost is

The two players, D and G, play a minimax game with the value function \(V(G, D)\), as shown in Eqs. 4 and 5. During training, D maximizes its probability of correctly labelling both the real examples provided to it and the samples generated by G. Concurrently, G is trained to minimize \(\log(1 - D(G(K)))\). D and G thus engage in the two-player minimax game based on the value function shown in Eq. 6.
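For reference, the standard GAN objectives of Goodfellow et al. can be written with the symbols used in this section; \(p_K\) (the noise prior), \(m\) (the minibatch size), and the sample indices are our own labels. The first two lines are minibatch estimates of the discriminator's and generator's objectives, and the third is the population value function:

\[
\max_{D}\; \frac{1}{m}\sum_{i=1}^{m}\Big[\log D\big(s^{(i)}\big) + \log\Big(1 - D\big(G(k^{(i)})\big)\Big)\Big]
\]

\[
\min_{G}\; \frac{1}{m}\sum_{i=1}^{m}\log\Big(1 - D\big(G(k^{(i)})\big)\Big)
\]

\[
\min_{G}\max_{D}\; V(G,D) = \mathbb{E}_{S\sim p_{\text{data}}}\big[\log D(S)\big] + \mathbb{E}_{K\sim p_K}\big[\log\big(1 - D(G(K))\big)\big]
\]

The real-data term does not depend on G, which is why only the second term appears in the generator's objective.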

Here, we describe a theoretical analysis of adversarial networks, which shows that the training criterion allows the data-generating distribution to be recovered, provided that G and D are given enough capacity, i.e., in the non-parametric limit57. In practice, the game must be implemented with an iterative, numerical approach. Optimizing D to completion in the inner loop of training is computationally prohibitive and, on small datasets, would result in overfitting. Instead, we alternate k steps of optimizing D with a single step of optimizing G29. As long as G changes slowly enough, D is maintained near its optimal configuration. This strategy is analogous to the way SML/PCD training maintains samples from a Markov chain from one learning step to the next, to avoid burning in a Markov chain as part of the inner loop of learning. With \(k \sim p_k\), the generator G implicitly defines a probability distribution \(p_g\) as the distribution of the samples \(G(k)\). Accordingly, we would like the algorithm to converge to a good estimator of \(p_{\text{data}}\), given enough capacity and training time. The results in this section are obtained in a non-parametric setting; that is, we represent a model with infinite capacity by studying convergence in the space of probability density functions. This minimax game has a global optimum at \(p_g = p_{\text{data}}\). The most compelling use of GANs is in conditional GANs for tasks that require the generation of new examples. Goodfellow highlights three fundamental examples, shown in Fig. 1.
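The value function and the optimality result mentioned above can be checked numerically. This sketch estimates \(V(G, D)\) from discriminator outputs on real and generated samples, and evaluates the known optimal discriminator for a fixed generator, \(D^*(x) = p_{\text{data}}(x) / (p_{\text{data}}(x) + p_g(x))\) (Goodfellow et al.); the function names are our own. At the global optimum \(p_g = p_{\text{data}}\), \(D^* = 1/2\) everywhere and V equals \(-\log 4\).

```python
import math


def value_function(d_real, d_fake):
    """Monte Carlo estimate of V(G, D).
    d_real: D(s) on real samples; d_fake: D(G(k)) on generated samples."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def optimal_discriminator(p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for a fixed generator."""
    return p_data / (p_data + p_g)


# When p_g equals p_data at a point, D* is 1/2 there.
d_star = optimal_discriminator(0.25, 0.25)
print(d_star)  # 0.5
print(value_function([d_star] * 4, [d_star] * 4), -math.log(4))
```

A discriminator that is confident and correct (for example, 0.9 on real and 0.1 on fake samples) yields a larger V than one stuck at 1/2, which is exactly what the inner maximization over D rewards.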

Image super-resolution is the ability to generate high-resolution versions of given images. Art generation is the ability to create highly original and imaginative images, illustrations, paintings, and much else. Image-to-image translation is the ability to translate photos across domains, for example from day to night or from summer to winter. The success of GANs is perhaps the most persuasive argument for their widespread consideration, development, and application: images created by GANs can be so lifelike that viewers have a hard time believing the subjects are CGI creations.

Deepfake detection

Deepfakes are far from flawless. In particular, facial occlusions can cause facial appearance extraction and reintegration to fail, leading to undesirable outcomes such as edges without facial re-enactment, large concealed regions, or an enlarged facial shape. Still, with improved networks, such errors can be effectively avoided. Moreover, as in other applications, auto-encoders typically reconstruct fine details poorly because the input is compressed into a limited encoding space, so the output frequently looks slightly blurry. A larger encoding space approximates fine details better, but the resulting face then resembles the input face too closely because information such as morphology is passed on to the decoder; this is an unintended consequence. Standard machine learning classifiers, deep neural networks, recurrent neural networks, long short-term memory (LSTM) networks, CNNs, and other models can all be used to spot GAN-generated deepfake images. The primary objective of this work is to distinguish deepfake images from genuine ones using a CNN architecture. Eight different CNN architectures (Meso-4, DenseNet121, MesoInception-4, DenseNet169, DenseNet201, VGG19, VGGFace, and ResNet50) can be used to identify deepfake images and audio.

Methodology

Dataset

The deepfake dataset is available on Kaggle64. It contains 112,000 images, generated by MTCNN face cropping, enlarged by 80 pixels, and resized to 150 × 150. The dataset consists of 50 folders and 50 meta-JSON files; each folder contains approximately 2,000+ images, both real and fake, and information about the images is stored in the meta-JSON files. We built the training set from the first 47 folders and the validation set from the last 3 folders, giving 76,903 training samples (64,773 fake and 12,130 real) and 7,366 validation samples (6,108 fake and 1,258 real). Because this dataset was unbalanced, we applied random selection to balance it. After balancing, the training set contains 12,130 fake and 12,130 real samples, and the validation set contains 1,258 fake and 1,258 real samples.

This work employed a publicly accessible deepfake image dataset from Kaggle, comprising roughly 112,000 facial photographs. Every image was pre-processed with the Multi-task Cascaded Convolutional Neural Network (MTCNN) to ensure precise face detection and alignment. Following detection, the face crops were enlarged by 80 pixels and uniformly resized to 150 × 150 pixels to maintain consistency throughout the dataset. The dataset structure is detailed below.
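The crop-enlargement step can be sketched with simple box arithmetic. The source does not say whether the 80 pixels are added per side or in total; this sketch assumes 80 pixels in total per axis (40 on each side), (x1, y1, x2, y2) pixel boxes, and clamping at the image border. Running MTCNN itself and the actual resampling to 150 × 150 are not shown; the hypothetical `resize_scale` only reports the scale factors that resizing would apply.

```python
def enlarge_box(box, margin, img_w, img_h):
    """Grow an (x1, y1, x2, y2) face box by `margin` pixels in total per axis,
    split evenly between the two sides, then clamp it to the image bounds."""
    x1, y1, x2, y2 = box
    half = margin // 2
    return (max(0, x1 - half), max(0, y1 - half),
            min(img_w, x2 + half), min(img_h, y2 + half))


def resize_scale(box, target=150):
    """Horizontal and vertical scale factors mapping the crop onto target x target."""
    x1, y1, x2, y2 = box
    return target / (x2 - x1), target / (y2 - y1)


crop = enlarge_box((300, 120, 420, 260), margin=80, img_w=1920, img_h=1080)
print(crop)                # (260, 80, 460, 300)
print(resize_scale(crop))  # different x and y factors: the crop is not square
```

Because the enlarged crop is generally not square, resizing it straight to 150 × 150 changes the aspect ratio slightly.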

Total Images: Approximately 112,000.

Folders: 50.

Meta-JSON Files: 50 (one for each folder), encompassing metadata such as image labels (authentic or fabricated), generating methodologies, and facial landmarks.

Images per Folder: Approximately 2,000+.

Picture Categories: Authentic and deepfake photos.

Strategy for Dataset Partitioning: The dataset was partitioned into training and validation sets according to folder indices:

Training Set: Obtained from the initial 47 folders.

Aggregate Samples: 76,903.

Counterfeit Images: 64,773.

Authentic Images: 12,130.

Validation Set: Obtained from the final three folders.

Aggregate Samples: 7,366.

Counterfeit Images: 6,108.

Authentic Images: 1,258.

Class Imbalance and Balancing Approach: The original dataset exhibited considerable imbalance, with markedly more deepfake images than authentic ones. To rectify this, random undersampling was employed to produce a balanced dataset:

Balanced Training Dataset:

Counterfeit Images: 12,130.

Authentic Images: 12,130.

Balanced Validation Set:

Counterfeit Images: 1,258.

Authentic Images: 1,258.

This balancing step was essential to avoid model bias and to ensure a fair performance assessment across both classes. Table 2 summarizes the numbers of genuine and deepfake videos in several widely used datasets.
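The folder-based split and the random undersampling can be sketched as follows. The metadata schema here (a JSON object mapping each file name to a record with a `"label"` field whose value is `"FAKE"` or `"REAL"`) is an assumption for illustration, not the documented format of the Kaggle files, and `records_from_meta`, `split_by_folder`, and `undersample` are hypothetical helpers. Folder indices 0 to 46 go to training and 47 to 49 to validation, and the majority class is randomly reduced to the minority count.

```python
import json
import random


def records_from_meta(folder_index, meta_json):
    """Turn one folder's (assumed) meta-JSON into (folder, filename, label) records."""
    meta = json.loads(meta_json)
    return [(folder_index, name, info["label"]) for name, info in meta.items()]


def split_by_folder(records, n_train_folders=47):
    """Folders 0..46 -> training set, folders 47..49 -> validation set."""
    train = [r for r in records if r[0] < n_train_folders]
    val = [r for r in records if r[0] >= n_train_folders]
    return train, val


def undersample(records, seed=0):
    """Randomly drop majority-class samples until both classes match the minority count."""
    rng = random.Random(seed)
    fake = [r for r in records if r[2] == "FAKE"]
    real = [r for r in records if r[2] == "REAL"]
    n = min(len(fake), len(real))
    balanced = rng.sample(fake, n) + rng.sample(real, n)
    rng.shuffle(balanced)
    return balanced


# Synthetic stand-in: 50 folders, each with 10 fake and 2 real images.
records = []
for folder in range(50):
    meta = {f"{folder}_{i}.jpg": {"label": "FAKE" if i < 10 else "REAL"} for i in range(12)}
    records += records_from_meta(folder, json.dumps(meta))

train, val = split_by_folder(records)
train_bal, val_bal = undersample(train), undersample(val)
print(len(train), len(train_bal), len(val), len(val_bal))  # 564 188 36 12
```

Splitting by folder rather than by image keeps all crops from one folder in the same partition, which limits leakage of near-duplicate frames between training and validation.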

Proposed model

First, each face image is passed through the pre-processing module to improve its quality; the improved image is then fed into the baseline network for training, and finally the detection model outputs a predicted probability for each frame. Figure 2 depicts the workflow of our proposed deepfake detection approach.

FDINet59 was chosen for its ability to capture the spatial and semantic characteristics of facial photographs accurately, a crucial criterion for deepfake detection. This design integrates the advantages of DenseNet and Inception modules and offers several significant benefits over conventional CNNs. Through its dense blocks, FDINet59 improves feature reuse, alleviates the vanishing gradient problem, and optimizes information flow between layers, which makes it especially effective at capturing the subtle facial alterations present in deepfakes. The Inception modules let the model process features at several receptive field sizes simultaneously, enabling the network to capture both fine-grained and global artifacts characteristic of deepfake images. The 59-layer depth strikes a balance between model complexity and computational efficiency: it surpasses shallower models such as MesoNet and is more practical to train than very deep networks such as DenseNet201.

The hybrid architecture of FDINet59 improves generalization across deepfake generation methods and hence its robustness across datasets. Experimental findings support this design decision: as shown in the evaluation section, FDINet59 surpasses other prominent architectures, such as VGG19, ResNet50, and MesoInception-4, in accuracy and precision.

FDINet59 consists of six Inception modules (Fig. 3). The first two Inception modules are connected directly to the input layer, and each of them feeds two further Inception modules. Each Inception module consists of seven Conv2D layers whose outputs are concatenated into a single module output. Two kernel sizes are used: 1 × 1 kernels in the first, second, fourth, and sixth layers of the module, and 3 × 3 kernels in the remaining layers. All layers use padding and the Exponential Linear Unit (ELU) activation. As Fig. 6 shows, each layer is connected to a Batch Normalization layer, which stabilizes learning and reduces the number of epochs needed to train the model, followed by a Max Pooling layer, which downsamples the feature map by summarizing the presence of features across patches. The resulting outputs are concatenated into two layers, and two separate convolutional layers, each padded and using ELU activation, are attached to each concatenated layer. The outputs of these four convolutional layers are then combined. Two layers are created with the same approach and merged into a single layer, and the same method is applied again to build a second layer, which is also combined into a single layer.
The output of this stage is passed to a Flatten layer, which converts it into a one-dimensional vector. This vector is fed to a dense layer of 16 neurons with the Rectified Linear Unit (ReLU) activation function, followed by a dropout layer with a rate of 20% to help prevent overfitting. The output layer of FDINet59 is a single neuron with a sigmoid activation that produces the binary prediction. Figures 4, 5, and 6 show the head, tail, and overall architecture of FDINet59, respectively.
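To make the description above more concrete, the following NumPy sketch runs one forward pass through a single Inception module and the classification head. The module has seven convolution layers with 1 × 1 kernels in layers 1, 2, 4, and 6 and 3 × 3 kernels in layers 3, 5, and 7, "same" padding, and ELU activations. Because the paper does not state how the seven layers are wired, the branch layout (one 1 × 1 branch plus three 1 × 1 → 3 × 3 branches, concatenated along the channel axis) is an assumption. Batch Normalization, max pooling, and the other five modules are omitted, all weights are random placeholders, inverted dropout is assumed, and every helper name is hypothetical, so the sketch shows data flow and tensor shapes only.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv2d_same(x, w):
    """Naive stride-1 convolution with zero 'same' padding.

    x: (H, W, C_in) feature map; w: (k, k, C_in, C_out) kernel.
    """
    k = w.shape[0]
    pad = k // 2
    h, wd, _ = x.shape
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros((h, wd, w.shape[3]))
    for i in range(k):
        for j in range(k):
            # Shifted view of the padded input times the (i, j) kernel slice.
            out += xp[i:i + h, j:j + wd, :] @ w[i, j]
    return out


def elu(x):
    """ELU with alpha = 1; expm1 on the clipped input avoids overflow."""
    return np.where(x > 0, x, np.expm1(np.minimum(x, 0)))


def conv_elu(x, k, filters):
    """k x k convolution with fresh random (untrained) weights, then ELU."""
    w = rng.normal(0.0, 0.1, size=(k, k, x.shape[-1], filters))
    return elu(conv2d_same(x, w))


def inception_module(x, filters):
    """Seven conv layers: 1x1 at layers 1, 2, 4, 6 and 3x3 at layers 3, 5, 7."""
    a = conv_elu(x, 1, filters)                          # layer 1
    b = conv_elu(conv_elu(x, 1, filters), 3, filters)    # layers 2-3
    c = conv_elu(conv_elu(x, 1, filters), 3, filters)    # layers 4-5
    d = conv_elu(conv_elu(x, 1, filters), 3, filters)    # layers 6-7
    return np.concatenate([a, b, c, d], axis=-1)         # 4 * filters channels


def init_head(n_in):
    """Random placeholder weights for Dense(16) and the single output neuron."""
    return {"w1": rng.normal(0.0, 0.01, size=(16, n_in)), "b1": np.zeros(16),
            "w2": rng.normal(0.0, 0.1, size=16), "b2": 0.0}


def head(features, params, training=False, drop_rate=0.2):
    """Flatten -> Dense(16, ReLU) -> Dropout(0.2) -> Dense(1, sigmoid)."""
    v = features.reshape(-1)                                  # Flatten
    hidden = np.maximum(params["w1"] @ v + params["b1"], 0.0)  # ReLU
    if training:
        # Inverted dropout: zero 20% of the units and rescale the rest.
        keep = rng.random(hidden.shape) >= drop_rate
        hidden = hidden * keep / (1.0 - drop_rate)
    logit = params["w2"] @ hidden + params["b2"]
    return float(1.0 / (1.0 + np.exp(-logit)))                # P(fake)


face = rng.random((32, 32, 3))           # stand-in for a small face crop
features = inception_module(face, filters=8)
params = init_head(features.size)
print(features.shape)                    # (32, 32, 32)
print(f"P(fake) = {head(features, params):.3f}")
```

Because "same" padding keeps the spatial size fixed, concatenation only adds channels: four branches of eight filters give 32 output channels.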

Balancing the dataset

Because the under-represented minority class matters in many applications, such as deepfake detection, medical diagnosis, and fraud detection, the process of balancing the dataset is described in more detail here.

Preparing the dataset

FDINet59 is designed to work with image and video datasets in which the faces have been cropped. Each sample carries a label: 0 for real and 1 for fake. Before the data are fed to the network, the dataset is partitioned into two classes:

- Class A: Real.
- Class B: Fake.

Use the random undersampling method

Random undersampling removes randomly selected samples from the majority class (fake) until its size matches that of the minority class (real).

Sample Python code:

from imblearn.under_sampling import RandomUnderSampler

# X = extracted features or frame representations
# y = corresponding labels (0: real, 1: fake)
# random_state (an assumed value) makes the random selection reproducible
undersampler = RandomUnderSampler(random_state=42)
X_balanced, y_balanced = undersampler.fit_resample(X, y)

For example, if the minority (real) class contains 5,000 samples, the fake class is also reduced to 5,000, giving 10,000 samples split evenly (50/50) between real and fake data. This ensures that FDINet59 is trained on a similar amount of data from each class, which improves its generalizability.
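For readers without `imbalanced-learn`, the same idea can be written directly in NumPy: keep every sample of the minority class and draw an equally sized random subset, without replacement, from each larger class. This is a sketch of the technique, not the library's implementation; `random_undersample` is a hypothetical helper, and the 5,000 real / 8,000 fake counts in the example are illustrative only.

```python
import numpy as np


def random_undersample(X, y, seed=0):
    """Randomly drop samples from larger classes until every class has the minority size."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    n_min = counts.min()
    keep = []
    for c in classes:
        idx = np.flatnonzero(y == c)
        # Sample without replacement so that no example is duplicated.
        keep.append(rng.choice(idx, size=n_min, replace=False))
    keep = np.sort(np.concatenate(keep))
    return X[keep], y[keep]


# Illustrative imbalance: 5,000 real (label 0) vs 8,000 fake (label 1) samples.
y = np.array([0] * 5000 + [1] * 8000)
X = np.arange(y.size).reshape(-1, 1)     # stand-in feature vectors
X_bal, y_bal = random_undersample(X, y)
print(np.bincount(y_bal))                # [5000 5000]
```

Undersampling discards data, so it suits cases like this one where the minority class is still large; with very small minority classes, oversampling or class weighting is usually preferred.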

Algorithm FDINet59_deepfake_detection

The algorithm for the proposed FDINet59 architecture for deepfake detection is given below.

Input: Dataset of face images or video frames (Real and Deepfake).
Output: Predicted labels (Real or Deepfake).

Preprocessing: for each image in the dataset:
    a. Detect and crop the face region.
    b. Align the face using facial landmarks.
    c. Resize the image to 224 × 224.
    d. Normalize pixel values to [0, 1].
    e. Store the result in processed_dataset.

Define FDINet-59 Network:
    a. Define InceptionBlock(input):
        branch1 = Conv1 × 1(input)
        branch2 = Conv1 × 1(input) → Conv3 × 3
        branch3 = Conv1 × 1(input) → Conv5 × 5
        branch4 = MaxPool3 × 3(input) → Conv1 × 1
        output = Concatenate(branch1, branch2, branch3, branch4)
        Return output
    b. Define DenseBlock(L, input):
        Initialize list features = [input]
        For i = 1 to L:
            x = InceptionBlock(Concatenate(features))
            Append x to features
        Return Concatenate(features)
    c. Define TransitionLayer(input):
        x = Conv1 × 1(input)
        x = AvgPool2 × 2(x)
        Return x

    d. Define FDINet-59(input_image):
        x = Conv7 × 7(input_image), stride = 2
        x = MaxPool3 × 3(x), stride = 2
        x = DenseBlock(6, x)
        x = TransitionLayer(x)
        x = DenseBlock(10, x)
        x = TransitionLayer(x)
        x = DenseBlock(12, x)
        x = TransitionLayer(x)
        x = DenseBlock(16, x)
        x = TransitionLayer(x)
        x = DenseBlock(15, x)   // Total 59 layers (6 + 10 + 12 + 16 + 15)
        x = GlobalAvgPool(x)
        x = FullyConnected(x, output_size = 2)
        Return Softmax(x)

Training:
    Initialize model = FDINet-59
    Define loss_function = CrossEntropyLoss
    Define optimizer = Adam(model.parameters)
    For epoch = 1 to N:
        For each (image, label) in training_set:
            a. prediction = model(image)
            b. loss = loss_function(prediction, label)
            c. optimizer.zero_grad()
            d. loss.backward()
            e. optimizer.step()

Testing / Inference: for each test_image in test_set:
    a. output = model(test_image)
    b. predicted_label = ArgMax(output)
    c. Store predicted_label

Evaluation:
    a. Compute accuracy, precision, recall, and F1-score.
    b. Generate the confusion matrix and ROC curve.
    c. Analyze performance and adjust if needed.

End Algorithm.
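The DenseBlock and TransitionLayer steps of the algorithm above can be illustrated with a small NumPy sketch that shows how dense connectivity grows the channel count. Each InceptionBlock follows the four listed branches (1 × 1; 1 × 1 → 3 × 3; 1 × 1 → 5 × 5; 3 × 3 max-pool → 1 × 1). The per-branch filter count, the ReLU activations, the transition filter count, and the helper names are assumptions; weights are fresh random values drawn on every call and batch normalization is omitted, so the sketch demonstrates data flow and tensor shapes only.

```python
import numpy as np


def conv2d_same(x, w):
    """Naive stride-1 convolution with zero 'same' padding; x: (H, W, C_in)."""
    k = w.shape[0]
    pad = k // 2
    h, wd, _ = x.shape
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros((h, wd, w.shape[3]))
    for i in range(k):
        for j in range(k):
            out += xp[i:i + h, j:j + wd, :] @ w[i, j]
    return out


def conv(x, k, filters, rng):
    """k x k convolution with random weights, then ReLU (assumed activation)."""
    w = rng.normal(0.0, 0.1, size=(k, k, x.shape[-1], filters))
    return np.maximum(conv2d_same(x, w), 0.0)


def maxpool3_same(x):
    """3 x 3 max pooling, stride 1, padded with -inf so the size is kept."""
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)), constant_values=-np.inf)
    views = [xp[i:i + h, j:j + wd, :] for i in range(3) for j in range(3)]
    return np.max(views, axis=0)


def inception_block(x, f, rng):
    b1 = conv(x, 1, f, rng)
    b2 = conv(conv(x, 1, f, rng), 3, f, rng)
    b3 = conv(conv(x, 1, f, rng), 5, f, rng)
    b4 = conv(maxpool3_same(x), 1, f, rng)
    return np.concatenate([b1, b2, b3, b4], axis=-1)    # 4 * f channels


def dense_block(num_layers, x, f, rng):
    features = [x]
    for _ in range(num_layers):
        # Each block sees the concatenation of all earlier feature maps.
        out = inception_block(np.concatenate(features, axis=-1), f, rng)
        features.append(out)
    return np.concatenate(features, axis=-1)


def transition_layer(x, filters, rng):
    x = conv(x, 1, filters, rng)
    h, wd, c = x.shape
    x = x[: h - h % 2, : wd - wd % 2, :]                # crop to an even size
    return x.reshape(h // 2, 2, wd // 2, 2, c).mean(axis=(1, 3))  # AvgPool 2x2


rng = np.random.default_rng(0)
x = rng.random((16, 16, 8))
x = dense_block(3, x, f=4, rng=rng)
print(x.shape)                           # (16, 16, 56)
x = transition_layer(x, filters=28, rng=rng)
print(x.shape)                           # (8, 8, 28)
```

With 8 input channels and 4 filters per branch, every InceptionBlock adds 16 channels, so three blocks yield 8 + 3 × 16 = 56 channels; the transition layer then compresses the channels and halves the spatial size, which keeps the next dense block affordable.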

Core libraries, framework and evaluation metrics

This section describes the core libraries, framework, and evaluation metrics used to implement and evaluate the proposed FDINet59 architecture. Table 3 lists the core libraries and framework, and Table 4 summarizes the evaluation metrics.

Results and discussion

The dataset contains 1,120,000 photos. It was produced with MTCNN face cropping, and the crops were then scaled up by 80 pixels to a final size of 150 × 150 pixels.
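The cropping and resizing step can be sketched as follows, given a face bounding box from any detector such as MTCNN. The paper does not say exactly how the 80-pixel enlargement is applied, so this sketch assumes it is a margin split evenly around the detected box before resizing to 150 × 150. The `(x, y, w, h)` box format, nearest-neighbour resizing, the [0, 1] scaling (taken from the algorithm's preprocessing step), and the helper name `crop_face` are all assumptions.

```python
import numpy as np


def crop_face(image, box, margin=80, out_size=150):
    """Crop a face box enlarged by `margin` pixels, resize it, and scale to [0, 1].

    image: (H, W, 3) uint8 array; box: (x, y, w, h) from a face detector.
    The margin is split evenly between opposite sides (an assumption).
    """
    x, y, w, h = box
    half = margin // 2
    # Enlarge the box and clamp it to the image borders.
    x0, y0 = max(x - half, 0), max(y - half, 0)
    x1 = min(x + w + half, image.shape[1])
    y1 = min(y + h + half, image.shape[0])
    face = image[y0:y1, x0:x1]
    # Nearest-neighbour resize to out_size x out_size.
    rows = np.arange(out_size) * face.shape[0] // out_size
    cols = np.arange(out_size) * face.shape[1] // out_size
    resized = face[rows[:, None], cols[None, :]]
    return resized.astype(np.float32) / 255.0


rng = np.random.default_rng(1)
frame = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
face = crop_face(frame, box=(250, 120, 110, 130))
print(face.shape, face.min() >= 0.0, face.max() <= 1.0)
```

In practice a library resize with interpolation (for example bilinear) would normally replace the nearest-neighbour step, which is used here only to keep the sketch dependency-free.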

The dataset contains 50 folders, each with its metadata JSON file, and each folder holds more than 2,000 images. Both real and fake images are included. To implement the proposed model, we split the dataset into training and testing sets in an 85:15 ratio. This section presents the results of the experiments performed with the proposed FDINet-59 model for deepfake detection.
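An 85:15 train/test split such as the one described above can be produced by shuffling the sample indices once and cutting the permutation. The helper name, the fixed seed, the 10,000-sample example, and the choice of a simple (non-stratified) random split are assumptions for illustration; the paper does not specify how the split was drawn.

```python
import numpy as np


def train_test_split_indices(n_samples, test_fraction=0.15, seed=0):
    """Return shuffled (train_idx, test_idx); 85:15 by default."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return perm[n_test:], perm[:n_test]


train_idx, test_idx = train_test_split_indices(10_000)
print(len(train_idx), len(test_idx))    # 8500 1500
```

When several frames come from the same video, splitting by video identifier rather than by frame keeps near-identical frames from appearing in both sets.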

FDINet59 was trained on the training dataset for 50 epochs using 2-fold cross-validation, the Adam optimizer, and a custom learning-rate schedule in which the initial learning rate of 0.0001 decreased gradually with each epoch. The model reached a training accuracy of 94.95% and a validation accuracy of 70.02%. Figures 7 and 8 show the accuracy over the course of training and validation, respectively.
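The paper states only that the learning rate started at 0.0001 and decreased gradually each epoch. One common way to realize this is an exponential per-epoch decay, sketched below; the decay factor of 0.95 is an assumed value, not one reported by the authors. In Keras, a function of this form could be passed to `tf.keras.callbacks.LearningRateScheduler`.

```python
def lr_schedule(epoch, initial_lr=1e-4, decay=0.95):
    """Exponentially decayed learning rate for a 0-based epoch index."""
    return initial_lr * decay ** epoch


rates = [lr_schedule(e) for e in range(50)]
print(f"epoch 1: {rates[0]:.2e}, epoch 50: {rates[-1]:.2e}")
```

A smaller `decay` shrinks the step size faster, which can stabilize late training but may stop learning too early if the initial rate is already small.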

Figures 9 and 10 show the loss during training and validation, respectively. The training loss started at 0.725 and decreased steadily, reaching 0.205 after 50 epochs. The validation loss started at 0.688 and decreased to 0.575 by the end of the 50th epoch.
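The losses reported here are log-loss (binary cross-entropy) values. The sketch below shows how this quantity is computed from labels and predicted probabilities; the clipping constant `eps` is an assumption added to avoid taking log(0). For reference, a classifier that outputs 0.5 for every sample scores ln 2 ≈ 0.693.

```python
import numpy as np


def log_loss(y_true, p_pred, eps=1e-7):
    """Mean binary cross-entropy (natural log); p_pred = predicted P(fake)."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


print(round(log_loss([1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5]), 4))   # 0.6931
print(round(log_loss([1, 0, 1, 0], [0.9, 0.2, 0.7, 0.1]), 4))
```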

Figure 11 presents the ROC curve, which plots the true positive rate (TPR) against the false positive rate (FPR) as the decision threshold varies. The top-left point (TPR = 1, FPR = 0) represents a perfect classifier. Performance is usually summarized by the area under the ROC curve (AUC-ROC), with higher values indicating better performance. On the validation set, FDINet59 achieves an AUC of 0.72. The dashed red line represents the cut-off point.
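The AUC equals the probability that a randomly chosen fake sample receives a higher score than a randomly chosen real one, with ties counted as one half. The sketch below computes it directly from this pairwise definition, which is exact but quadratic in the number of samples, and also computes a single (FPR, TPR) point of the curve. The helper names are hypothetical; libraries such as scikit-learn (`sklearn.metrics.roc_auc_score`) provide faster implementations.

```python
import numpy as np


def roc_auc(y_true, scores):
    """AUC as the fraction of (fake, real) pairs ranked correctly; ties count 0.5."""
    y = np.asarray(y_true)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[y == 1], s[y == 0]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need both classes to compute AUC")
    diff = pos[:, None] - neg[None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


def roc_point(y_true, scores, threshold):
    """(FPR, TPR) obtained by predicting 'fake' for scores >= threshold."""
    y = np.asarray(y_true)
    pred = np.asarray(scores) >= threshold
    tpr = np.mean(pred[y == 1])
    fpr = np.mean(pred[y == 0])
    return float(fpr), float(tpr)


y = [0, 0, 1, 1]
s = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y, s))            # 0.75
print(roc_point(y, s, 0.5))     # (0.0, 0.5)
```

Sweeping the threshold over all distinct scores and plotting the resulting points traces the full ROC curve; an AUC of 0.5 corresponds to the chance-level diagonal.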

We visualize the confusion matrix as a heat map that compares the actual labels with the predicted ones. A confusion matrix has four outcomes: true negatives, true positives, false negatives, and false positives; false positives and false negatives correspond to Type I and Type II errors, respectively. Figure 12 illustrates the metrics derived from this confusion matrix, and Table 4 lists the calculated values. Table 5 and Fig. 13 compare the CNN designs side by side on the four main performance metrics: accuracy, precision, recall, and F1 score. FDINet59 consistently outperforms the other models on all metrics, highlighting its ability to detect deepfake images. These empirical results support the hypothesis: on a large-scale dataset, FDINet59 outperforms previous networks in stability and detection accuracy, making it a dependable model for deepfake detection.
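The confusion-matrix counts and the four metrics compared in Table 5 follow from simple formulas, sketched below with fake (label 1) as the positive class. The labels in the example are made up for illustration and are not the paper's results.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """Return (TN, FP, FN, TP) for binary labels with 1 = fake."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))   # Type I error
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))   # Type II error
    return tn, fp, fn, tp


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 from the confusion counts."""
    tn, fp, fn, tp = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 0, 0, 1, 0]
print(confusion_counts(y_true, y_pred))   # (4, 1, 1, 4)
print(binary_metrics(y_true, y_pred))
```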

Conclusion and future scope

The proliferation of deepfakes is eroding people’s trust in the veracity of what they read or see on television. Such threats can provoke political conflict, public outrage, bloodshed, and even war, and can cause distress and harm to the people targeted. Because deepfake tools are easy to access and social media spreads fake content rapidly, the problem is especially pressing today.

Since the model is intended for evaluating social media videos, it was crucial to assess FDINet59’s resilience in noisy and compressed settings, which are common on platforms such as Instagram, TikTok, and WhatsApp. Social media content generally undergoes heavy compression, frame-rate reduction, and quality degradation during upload and transmission. To replicate these conditions, supplementary experiments were performed with compressed and artifact-laden deepfake samples that better mirror real-world use. FDINet59 showed considerable resilience to compression distortions, maintaining high accuracy and precision relative to the other models. This resilience is attributed to two properties. First, the multi-scale feature extraction of the Inception modules allows artifacts to be identified at varying levels of granularity. Second, the densely connected structure ensures that even degraded features contribute to classification, enabling the model to detect tampering despite visual noise. FDINet59 is therefore well suited to real-time detection systems, browser extensions, and social media monitoring tools where video quality is suboptimal.

This article describes a deep learning-based method for efficiently identifying deepfake videos shared on social media platforms, with the end goal of developing a system for identifying deepfake images and videos. The main contributions of this paper are as follows:

- This paper introduces the work done and the methods applied to develop FDINet59, a Fake Dense Inception Network with 59 layers.
- FDINet59 can detect deepfake media created with autoencoders and GANs.
- On the testing dataset, FDINet59 detects deepfake media with an accuracy of up to 70.02% and a log loss of 0.688; its training accuracy is 94.95% with a log loss of 0.205.

The proposed model could therefore be deployed in a mobile app that lets users verify the authenticity of a piece of media before trusting it, restricting the use of manipulated digital material as a means of disseminating false information. Although FDINet59 demonstrated robust deepfake image detection, several limitations should be recognized. The dataset consisted of MTCNN-cropped facial images, which may not fully represent real-world conditions such as occlusion, varying lighting, or motion blur. The model was tested only on image-based deepfakes, which limits its demonstrated applicability to video or audio deepfakes. The training data may not cover the most recent deepfake generation techniques, potentially affecting generalizability. The evaluation did not measure computational efficiency or real-time performance, and no cross-dataset validation was performed to assess robustness across data sources. Future work should address these points to improve practical applicability in real-world scenarios.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Change history

28 September 2025

The original online version of this Article was revised: In the original version of this Article, Nafees Akhter Farooqui was incorrectly affiliated with ‘Department of CSE, Government Engineering College, West Champaran, Bettiah, Bihar, India’. The correct affiliation for Nafees Akhter Farooqui is ‘Department of CSE, Koneru Lakshmiah Education Foundation Vaddeshwaram, Guntur, Andhra Pradesh, India’. In addition, Rafeeq Ahmed was incorrectly affiliated with ‘Department of CSE, Koneru Lakshmiah Education Foundation Vaddeshwaram, Guntur, Andhra Pradesh, India’. The correct affiliation for Rafeeq Ahmed is ‘Department of CSE, Government Engineering College, West Champaran, Bettiah, Bihar, India’.

References

Karandikar, A. et al. Deepfake Video Detection Using Convolutional Neural Network (2020).

Baldi, P. et al. Autoencoders, unsupervised learning, and deep architectures. In Proceedings of ICML Workshop on Unsupervised and Transfer Learning, Proceedings of Machine Learning Research (eds. Guyon, I. et al.), vol. 27, 37–49 (PMLR, 2012).

Charitidis, P. et al. Investigating the Impact of Pre-processing and Prediction Aggregation on the Deepfake Detection Task (2020).

Mirsky, Y. & Lee, W. The creation and detection of deepfakes: a survey. ACM Comput. Surveys. 54 (1), 1–41. https://doi.org/10.1145/3395046 (2021).

Ogilvy. Shah Rukh Khan-My Ad. https://www.ogilvy.com/work/shah-rukh-khan-my-ad (2021).

Afchar, D. et al. MesoNet: a compact facial video forgery detection network. In 2018 IEEE International Workshop on Information Forensics and Security (WIFS) (IEEE, 2018).

D’Amiano, L., Cozzolino, D., Poggi, G. & Verdoliva, L. A PatchMatch-based dense-field algorithm for video copy-move detection and localization. IEEE Trans. Circuits Syst. Video Technol. 29 (3), 669–682 (2019).

Afchar, D. et al. MesoNet: A Compact Facial Video Forgery Detection Network. https://arxiv.org/abs/1809.00888 (2018).

Li, S. Deepfake Dataset. https://www.kaggle.com/unkownhihi/deepfake.

Ding, X. et al. Identification of motion-compensated frame rate up-conversion based on residual signals. IEEE Trans. Circuits Syst. Video Technol. 28 (7), 1 (2018).

Sabir, E. et al. Recurrent convolutional strategies for face manipulation detection in videos. Proc. IEEE Conf. Comput. Vis. Pattern Recogn. Worksh. 3 (1), 80–87 (2019).

Kharbat, F. et al. Image feature detectors for deepfake video detection. In 2019 IEEE/ACS 16th International Conference on Computer Systems and Applications (AICCSA), Abu Dhabi, United Arab Emirates 1–4. https://doi.org/10.1109/AICCSA47632.2019.9035360 (2019).

Kharbat, F. F. et al. Image feature detectors for deepfake video detection. In 2019 IEEE/ACS 16th International Conference on Computer Systems and Applications (AICCSA) 1–4 (IEEE, 2019).

Gironi, A. et al. A video forensic technique for detecting frame deletion and insertion. In Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 6226–6230 (2014).

Goodfellow, I. J. et al. Generative Adversarial Nets. NIPS 2014 (2014).

Güera, D. & Delp, E. J. Deepfake video detection using recurrent neural networks. In Proceedings of 15th IEEE International Conference on Advanced Video and Signal-Based Surveillance 1–6 (2018).

Khalid, H. & Woo, S. S. OC-FakeDect: classifying deepfakes using one-class variational autoencoder. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops 656–657 (2020).

Jung, T., Kim, S. & Kim, K. DeepVision: deepfakes detection using human eye blinking pattern. IEEE Access. 8, 83144–83154 (2020).

Hashmi, M. F. et al. An exploratory analysis on visual counterfeits using conv-lstm hybrid architecture. IEEE Access. 8, 101293–101308 (2020).

Kaliyar, R. K., Goswami, A. & Narang, P. Deepfake: improving fake news detection using tensor decomposition-based deep neural network. J. Supercomput. 1, 1. https://doi.org/10.1007/s11227-020-03294-y (2020).

Nayak, J. P., Anitha, K., Parameshachari, B. D., Banu, R. & Rashmi, P. PCB fault detection using image processing. IOP Conf. Ser. Mater. Sci. Eng. 1, 225 (2017).

Korshunov, P. & Marcel, S. Deepfakes: A New Threat to Face Recognition? Assessment and Detection (2018).

Chi Maduakor, H. U. U. & Alo Williams, R. E. Integrating deepfake detection into cybersecurity curriculum. In Proc. Future Technol. Conf. (FTC) (Advances in Intelligent Systems and Computing) (eds Arai, K. et al.), vol. 1288, 588–598. https://doi.org/10.1007/978-3-030-63128-4_45 (Springer, 2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 770–778 (2016).

Kumar, A., Bhavsar, A. & Verma, R. Detecting deepfakes with metric learning. In 2020 8th International Workshop on Biometrics and Forensics (IWBF) 1–6 (2020).

Li, Y. et al. Celeb-DF: A Large-Scale Challenging Dataset for Deepfake Forensics. http://arXiv.org/1909.12962 (2019).

Li, Y. et al. Celeb-df: a large-scale challenging dataset for deepfake forensics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 3207–3216 (2020).

Das, P. R., Talukdar, R. R. & Kumar, C. J. Exploring the interplay of abstinence self-efficacy, locus of control, and perceived social support in substance use disorder recovery. Curr. Med. Res. Opin. 1, 1 (2024).

Bhadra, S., Kumar, C. J. & Bhattacharyya, D. K. Multiview EEG signal analysis for diagnosis of schizophrenia: an optimized deep learning approach (accepted). Multimedia Tools Appl. 1, 1 (2024).

Sharma, M., Kumar, C. J. & Bhattacharyya, D. K. Machine/deep learning techniques for disease and nutrient deficiency disorder diagnosis in rice crops: A systematic review. Biosyst. Eng. 1, 1 (2024).

Bhadra, S. & Kumar, C. J. Enhancing the efficacy of depression detection system using optimal feature selection from HER. Comput. Methods Biomech. Biomed. Eng. 1, 1 (2023).

Dataset on Kaggle. https://www.kaggle.com/datasets/tusharpadhy/deepfake-dataset/data (Accessed January 2024).

Jiang, L. et al. DeeperForensics-1.0: A Large-Scale Dataset for Real-World Face Forgery Detection. http://arXiv.org/2001.03024 (2020).

Verdoliva, L. Media Forensics and DeepFakes: An Overview (2020).

Li, X. et al. Sharp multiple instance learning for deepfake video detection. In Proceedings of the 28th ACM International Conference on Multimedia 1864–1872 (2020).

Li, Y., Chang, M. & Lyu, S. In Ictu Oculi: Exposing AI created fake videos by detecting eye blinking. In Proceedings of IEEE International Workshop on Information Forensics and Security 1–7 (2018).

Li, Y. & Lyu, S. Exposing deepfake videos by detecting face warping artifacts. CoRR. https://github.com/deepfakes/faceswap (Accessed April 2024) (2018).

Beigzadeh, M. & Vafadoost, M. Detection of Face and Facial Features in Digital Images and Video Frames (2009).

Matern, F., Riess, C. & Stamminger, M. Exploiting visual artifacts to expose deepfakes and face manipulations. In Proceedings of IEEE Winter Applications of Computer Vision Workshops 83–92 (2019).

Mittal, T. et al. Emotions don’t lie: an audio-visual deepfake detection method using affective cues. In Proceedings of the 28th ACM International Conference on Multimedia 2823–2832 (2020).

Waqas, N., Safie, S. I., Kadir, K. A., Khan, S. & Kaka Khel, M. H. DEEPFAKE image synthesis for data augmentation. IEEE Access. 10, 80847–80857. https://doi.org/10.1109/ACCESS.2022.3193668 (2022).

Nguyen, H. H., Yamagishi, J. & Echizen, I. Capsule-forensics: Using capsule networks to detect forged images and videos. In Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2307–2311 (2019).

Dufour, N. et al. Deepfakes Detection Dataset by Google & Jigsaw.

Korshunov, P. & Marcel, S. DeepFakes: A New Threat to Face Recognition? Assessment and Detection. http://arXiv.org/1812.08685 (2018).

Sabir, E. et al. Recurrent convolutional strategies for face manipulation detection in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 80–87 (2019).

Agarwal, S. et al. Detecting deep-fake videos from appearance and behavior. In IEEE International Workshop on Information Forensics and Security (WIFS) 1–6 (IEEE, 2020).

Simonyan, K. & Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. http://arXiv.org/1409.1556 (2024).

Fernandes, S. et al. Detecting deepfake videos using attribution-based confidence metric. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops 308–309 (2020).

Nguyen, T. T. et al. Deep Learning for Deepfakes Creation and Detection: A Survey, 1909.11573_v2 (2019).

Yang, X., Li, Y. & Lyu, S. Exposing deep fakes using inconsistent head poses. In Proc. IEEE Int. Conf. Acoust., Speech Signal Process 8261–8265. https://doi.org/10.1109/ICASSP.2019.8683164 (ICASSP, 2019).

Chesney, R. & Citron, D. K. Deep fakes: A looming challenge for privacy, democracy, and National security. Calif. Law Rev. 107 (6), 1753–1820. https://doi.org/10.2139/ssrn.3213954 (2019).

Goodfellow, I. et al. Generative adversarial Nets. Adv. Neural. Inf. Process. Syst. 27, 1 (2014).

Harwell, D. Fake-porn videos are being weaponized to harass and humiliate women: everybody is a potential target. The Washington Post. https://www.washingtonpost.com/ (2018).

Kumar, R., Agarwal, A. & Singh, M. Lip-sync deepfakes: detection and challenges. J. Vis. Commun. Image Represent. 78, 103152. https://doi.org/10.1016/j.jvcir.2021.103152 (2021).

Tolosana, R., Vera-Rodriguez, R., Fierrez, J., Morales, A. & Ortega-Garcia, J. Deepfakes and beyond: A survey of face manipulation and fake detection. Inf. Fusion 64, 131–148 (2020).

Vaccari, C. & Chadwick, A. Deepfakes and disinformation: Exploring the impact of synthetic political video on deception, uncertainty, and trust in news. Soc. Media Soc. 6, 1. https://doi.org/10.1177/2056305120903408 (2020).

Verdoliva, L. Media forensics and deepfakes: an overview. IEEE J. Selec. Topics Signal Process. 14 (5), 910–932. https://doi.org/10.1109/JSTSP.2020.2992340 (2020).

Szegedy, C. et al. Rethinking the Inception Architecture for Computer Vision. http://arXiv.org/1512.00567 (2015).

Yang, X., Li, Y. & Lyu, S. Exposing deep fakes using inconsistent head poses. In Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 8261–8265 (2019).

Liu, Z. et al. Deep learning face attributes in the wild. In Proc. IEEE Int. Conf. Comput. Vis. (ICCV) 3730–3738. https://doi.org/10.1109/ICCV.2015.425 (2015).

Tan, Z., Yang, Z., Miao, C. & Guo, G. Transformer-based feature compensation and aggregation for deepfake detection. IEEE. Signal. Process. Lett. 29, 2183–2187. https://doi.org/10.1109/LSP.2022.3214768 (2022).

Wang, Z., Guo, Y. & Zuo, W. Deepfake forensics via an adversarial game. IEEE Trans. Image Process. 31, 3541–3552. https://doi.org/10.1109/TIP.2022.3172845 (2022).

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-67).

Funding

This research was funded by Taif University, Saudi Arabia, project No. (TU-DSPP-2024-67).

Author information


Contributions

Conceptualization, Abdullah Alharbi and Wael Alosaimi; methodology, Mohd Nadeem; software, Raees Ahmad Khan; validation, Bader Alouffi, Wael Alosaimi and Nafees Akhter Farooqui; formal analysis, Nafees Akhter Farooqui; investigation, Raees Ahmad Khan; resources, Hashem Alyami; data curation, Mohd Nadeem and Wael Alosaimi; writing (original draft preparation), Nafees Akhter Farooqui; writing (review and editing), Rafeeq Ahmed; visualization, Ahmed Almulihi; supervision, Rafeeq Ahmed; project administration, Raees Ahmad Khan; funding acquisition, Abdullah Alharbi, Wael Alosaimi, Hashem Alyami, Bader Alouffi, and Ahmed Almulihi.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alharbi, A., Alosaimi, W., Nadeem, M. et al. Novel 59-layer dense inception network for robust deepfake identification. Sci Rep 15, 24159 (2025). https://doi.org/10.1038/s41598-025-03889-6


DOI: https://doi.org/10.1038/s41598-025-03889-6
