Abstract

Concurrent developments in robotic design and natural language processing (NLP) have enabled the production of humanoid chatbots that can operate in commercial and community settings. Though still novel, the presence of physically embodied social robots is growing and will soon be commonplace. Our study is set at this point of emergence, investigating people’s first impressions of a humanoid chatbot in a public venue. Specifically, we introduced “Pepper” to attendees at an innovation festival. Pepper is a humanoid robot outfitted with ChatGPT. Attendees engaged with Pepper in a bounded interaction and provided feedback about their experience (n = 88). Qualitative analyses reveal participants’ mixed emotional resonance, reactions to Pepper’s embodied form and movements, expectations about interpersonal connection and rituals of interaction, and attentiveness to issues of diversity and social inclusion. Findings document live responses to a humanoid chatbot, highlight the affective, social, and material forces that shape human–robot interaction, and underscore the value of “in the wild” studies, creating space and scope for user-publics to express their perspectives and concerns. Such insights are acutely relevant as we enter this next era of social robotics.


Introduction

First impressions have formative effects on the ways relationships take shape and develop1,2,3. These impressions may change over time through the course of subsequent and ongoing interactions, but the initial encounter holds disproportionate weight. This weightiness of first impressions applies to individuals and social groups, along with a range of non-human entities such as organizations, environments, activities, and objects4,5,6, including robots7,8. This is why, for example, people dress up for a first date and companies put special care into their initial launch. The significance of first impressions is at least partially a function of their rarity: by definition, they only happen once. First impressions are thus sociologically rich, yet difficult to observe and analyse. Laboratory studies can contrive initial encounters, but these are filtered by the controlled sterility of that setting. “Real world” first impressions are much harder to capture unless the opportunity organically arises. The rollout of ChatGPT9 and its coincidence with commercially available humanoid robots creates one such opportunity, which we draw upon in the present work. Specifically, we explore people’s reactions to humanoid chatbots—embodied robotic forms that have natural language capabilities. We focus in particular on lay publics, whose lived expertise will be vital to the design and deployment of robotic systems that integrate into the spheres of daily life.

Generative AI that runs on large language models (LLMs) entered the mainstream in late 2022 with ChatGPT as the predominant force. ChatGPT uses LLMs to transform mass troves of textual data into conversational outputs that respond to prompts in ways that mimic “natural” human speech patterns. The public rollout of ChatGPT and other LLM products has overlapped with an increasing availability of humanoid robots, such as SoftBank’s “Pepper”10, built for human–robot interactions across various personal and commercial sites. Our team integrated ChatGPT with a Pepper robot, creating something new from these two existing technologies. We introduced Pepper to attendees at an innovation festival in Australia in mid-2023. The festival was free and open to the public, attracting a diverse cross-section of the community.

To our knowledge, no other embodied robots equipped with natural language processing were accessible to the public in Australia at that time, creating the conditions for a novel encounter. This type of robotic implementation is likely to be utilised across many domains going forward. Because of its newness, however, most festival attendees would not have previously interacted with a humanoid chatbot, allowing us to gather first impressions in a live and dynamic environment. We arranged interactive meetings between attendees at the festival and our chat-enabled Pepper bot. We then asked participants for their “thoughts, comments, and questions” after engaging with Pepper in a semi-structured interaction. Our study draws out the themes that arose from participants’ experiential accounts. In total, we collected qualitative data from 88 participants.

Literature

Humanoid robots are rapidly emerging from industrial and lab-based contexts to play a role in everyday scenarios such as healthcare, commerce, care work, and education11. Concurrently, LLMs and their use in natural language processing have experienced an explosion of activity, public interest, and investment12 as they scale13. Together, these twinned sites of development—physical robotics and conversational agents that use LLMs—have accelerated the already active field of human–robot interaction (HRI). Researchers are beginning to address these integrations between robotic hardware and LLMs, though much of that work is highly technical and laboratory based. For example, existing studies report on the integration of LLMs for task planning and execution14,15,16, as applied in both humanoid and non-humanoid robots17,18,19,20. With the advent of vision-language models, further advancements can be expected in multi-modal robot interaction capabilities with heightened contextual awareness21 and improved data alignment22.

Less common are studies about the social experience of interacting with humanoid robots that have natural language capabilities, even as these cyber-physical forms come to populate domains of daily life. Exceptions to this tend to be small-scale and confined to the laboratory. For example, Billing, Rosén and Lamb23 used ChatGPT to enable a chatbot-type verbal interface with both SoftBank’s Pepper and Nao robots. Their lab-based study (n = 10) using ChatGPT embedded in Pepper examined impression formation over time, identifying mixed and often negative affect among participants, expectations of human interaction patterns, and the substantial impact of technical factors7. These technical and lab-based research outputs offer a useful starting point from which we build, working to inform the development of flexible, resilient, and grounded robotic systems.

In this study we sought to assess impression formation towards an LLM-embodied agent outside of a lab-based setting, in a public site occupied by everyday publics. Such research focuses specifically on establishing ecologically valid data and is known as studying robots “in the wild”24,25. This approach supports gathering the experiences of (typically) untrained users in human-centric settings. Rather than seeking to control chaotic human elements during the research, in the wild studies embrace and indeed explore those additional elements, which could include new interlocutors joining and then exiting the interaction, cross-conversation about other tasks, and the presence of audio and visual distractions. Contexts used for robotic in the wild studies have included shopping malls, trade shows, museums and hotels26,27,28,29. There have been growing calls for more in the wild studies of human–robot interaction to enable a realistic understanding of robots in complex social contexts, to support the generalisation of lab-based research outcomes, and to align product development more closely with user experiences25.

Concordant with the commitments of in the wild robotics research, our study is based on a broad and human-facing question, ascertaining the public’s first impressions of LLM-embodied agents (i.e., humanoid chatbots) and exploring how people experience this hybridized form in an ecologically valid environment. Participants’ responses to “first contact” with such a system will provide key insights that can inform the direction of social robotics moving forward. The objective of the study was to gain a qualitative understanding of the initial impressions of the LLM-embodied agent by members of the general public. Previous research suggests responses may reflect factors such as novelty20 and excitement, frustration and confusion, and recognition of gaps between expectation and reality30,31; however, the research design remained open to collating and interpreting a broad range of themes.

Technical implementation

Because ChatGPT is a quickly evolving technology, embodying it convincingly proved technically and aesthetically challenging. Figure 1 depicts the integration pipeline we adopted. The system is divided into two main components: the back-end, which performs the natural language processing tasks, and the front-end, which comprises the necessary hardware components embodied within Pepper (microphones, speakers, and actuators for gestural display).

Schematic diagram of the system architecture. The Pepper robot captures participants’ audio responses using its internal microphones and transmits them to the PythonAnywhere web interface for transcription. The transcribed text is then processed by ChatGPT, which generates a textual response. This response is sent back to the robot’s text-to-speech engine, enabling the robot to vocalize it.

Server back-end

The initial prototype relied on a deprecated version of Google Cloud Platform (GCP) speech recognition with a limited quota, together with a directional microphone to enhance audio quality. However, this required additional hardware (a Raspberry Pi) for processing, and Pepper could not physically hold the microphone. This made the installation aesthetically unappealing and raised concerns that an external microphone pointed at participants would disrupt the natural flow of interaction. We therefore excluded this option and relied on the embedded microphones in the Pepper robot, ensuring through pre-tests that audio quality was adequate. With the reworked speech recognition Python module, Pepper could listen to people, process their speech, and generate transcriptions without the risk of GCP service interruptions; we used the paid version of this service to optimise performance. For the installation, we integrated the most advanced version of ChatGPT available at the time, GPT-3.5-Turbo.

Due to limitations in Pepper’s software, the robot could not directly access the GCP or OpenAI services, or use other modern APIs that require access to web endpoints, so this code could not run on Pepper’s CPU. To address this issue, we developed a Flask server32 that integrated speech recognition (GCP) and ChatGPT (OpenAI) API calls. We adapted the Python code to build the Flask server, incorporating the required libraries, credentials, and function calls for the new APIs. This server was hosted online using PythonAnywhere, which kept the code running continuously so that Pepper could access it on demand.
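The relay role of such a server can be illustrated with a minimal Flask sketch, assuming that Pepper posts each recorded utterance as raw audio bytes and receives JSON back. The route names (`/converse`, `/reset`), the JSON fields, and the injected `transcribe` and `chat` callables are hypothetical and are not taken from the study's code. In a live deployment, `transcribe` would wrap a GCP speech-to-text call (for example `speech.SpeechClient().recognize(config=..., audio=...)` in the `google-cloud-speech` library) and `chat` would wrap an OpenAI chat completion request; injecting them keeps the sketch runnable without credentials.

```python
# Minimal relay-server sketch (Python 3, Flask). Route names, JSON fields,
# and the injected transcribe/chat callables are hypothetical.
from typing import Callable, Dict, List

from flask import Flask, jsonify, request

Message = Dict[str, str]


def create_app(
    transcribe: Callable[[bytes], str],
    chat: Callable[[List[Message]], str],
    system_prompt: str,
) -> Flask:
    """Build an app that turns one recorded utterance into one chatbot reply."""
    app = Flask(__name__)
    # Shared conversation state: the system prompt followed by alternating
    # user/assistant turns. Suits one conversation at a time.
    history: List[Message] = [{"role": "system", "content": system_prompt}]

    @app.route("/converse", methods=["POST"])
    def converse():
        audio = request.get_data()  # raw audio bytes posted by the robot
        if not audio:
            return jsonify(error="empty audio payload"), 400
        text = transcribe(audio)  # speech-to-text step (e.g. GCP)
        history.append({"role": "user", "content": text})
        reply = chat(history)  # chat-completion step (e.g. OpenAI)
        history.append({"role": "assistant", "content": reply})
        return jsonify(transcript=text, reply=reply)

    @app.route("/reset", methods=["POST"])
    def reset():
        del history[1:]  # drop all turns but keep the system prompt
        return jsonify(ok=True)

    return app
```

Keeping the network calls on a hosted server in this way means the robot only needs to make one plain HTTP request per turn. A WSGI host such as PythonAnywhere would serve the object returned by `create_app`; because the sketch keeps a single shared history, it assumes one conversation (one participant or group) at a time.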

Prompt “engineering”

The chosen OpenAI model was designed for chatbot applications that hold coherent dialogues: because the full conversation history is sent with each request, the model can refer back to earlier turns, effectively remembering previous interactions. This model also introduced message “roles”: system (rules defined for ChatGPT), assistant (ChatGPT’s responses), and user (user prompts). With this updated ChatGPT Python module, Pepper could follow predefined rules, assume different personalities, and engage in specific contexts, enabling it to interact with human-like responses. For instance, we wanted Pepper to infer autonomously when a conversation was coming to an end and to avoid the typical ChatGPT answer of “As a language model, I cannot…”, so we fed ChatGPT the following prompt rule: "Pretend you are Pepper the robot, you have a friendly and bubbly personality. You are speaking with people at the … Festival held in …. The answers must not exceed two sentences. Identify when the user wants to end the conversation and start your response with '(END)|', e.g. '(END)|Goodbye! It was great talking with you. Enjoy the rest of the festival and have a great time'. You can make up information you don’t know." For purposes of comparison across the sample, we programmed Pepper with this single prompt and related personality profile (“friendly and bubbly”) so that all participants were responding to a consistent stimulus.
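Because the Chat Completions API does not store conversations between requests, “remembering” earlier turns amounts to resending the growing list of role-tagged messages each time. The sketch below illustrates this with a hypothetical `Conversation` class; the `complete` callable stands in for the actual API call so that the example runs offline. With version 1.x of the `openai` Python library, `complete` could be `lambda msgs: client.chat.completions.create(model="gpt-3.5-turbo", messages=msgs).choices[0].message.content`; the 2023-era library exposed the older `openai.ChatCompletion.create` instead. The abbreviated `PROMPT` is illustrative only.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class Conversation:
    """Keeps the role-tagged history that is resent on every request."""

    system_prompt: str
    complete: Callable[[List[Message]], str]  # stand-in for the API call
    messages: List[Message] = field(init=False)

    def __post_init__(self) -> None:
        # The system message carries the persona and rules for every turn.
        self.messages = [{"role": "system", "content": self.system_prompt}]

    def ask(self, user_text: str) -> str:
        """Add a user turn, send the whole history, and record the reply."""
        self.messages.append({"role": "user", "content": user_text})
        reply = self.complete(list(self.messages))  # copy: full history each call
        self.messages.append({"role": "assistant", "content": reply})
        return reply


# Illustrative, abbreviated system prompt (the festival details stay elided).
PROMPT = (
    "Pretend you are Pepper the robot, you have a friendly and bubbly "
    "personality. The answers must not exceed two sentences."
)
```

One consequence of this design is that each request grows with every turn, so long conversations consume more tokens and may eventually need truncating to fit the model's context window.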

Pepper front-end

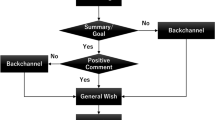

We used the Choregraphe33 software to program Pepper’s social behaviour. The Choregraphe file encompasses the logic the robot needs to converse with participants: recognizing when a participant says, "Hi Pepper," responding with a greeting, recording the person’s speech, and sending the audio to the back-end server for processing. We observed that over an uncongested and optimised network, the round trip from an utterance heard by the robot to its spoken response took 4 to 5 s on average. To obscure the server delay during processing, a head-scratching motion was programmed into Pepper as a non-verbal backchanneling effect. The robot’s text-to-speech module articulated each response, and the program determined whether the conversation had ended by checking whether the returned ChatGPT response started with “(END)|”. As with the back-end programming, Pepper was programmed for a consistent front-end interaction style with all participants.
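The turn-handling logic described above can be sketched in plain Python 3. This is not Choregraphe or NAOqi code (Pepper's NAOqi SDK runs Python 2.7, and Choregraphe behaviours are built from boxes); `send_audio`, `play_filler_gesture`, and `say` are hypothetical stand-ins for the server request, the head-scratching animation, and the text-to-speech call. In this sketch the server request runs in a background thread while the filler gesture repeats, and the `(END)|` prefix is stripped before the reply is spoken so that the marker is never read aloud.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Tuple

END_MARKER = "(END)|"


def split_end_marker(reply: str) -> Tuple[str, bool]:
    """Return (text to speak, whether the reply signals the end of the chat)."""
    if reply.startswith(END_MARKER):
        return reply[len(END_MARKER):].lstrip(), True
    return reply, False


def take_turn(
    send_audio: Callable[[bytes], str],
    play_filler_gesture: Callable[[], None],
    say: Callable[[str], None],
    audio: bytes,
) -> bool:
    """Handle one exchange; return True if the conversation should end.

    All three callables are hypothetical stand-ins for robot/server functions.
    """
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(send_audio, audio)  # server round trip
        # Repeat the filler gesture (e.g. head scratch) until the reply arrives.
        while not pending.done():
            play_filler_gesture()
        reply = pending.result()  # re-raises any error from the request
    text, ended = split_end_marker(reply)
    say(text)
    return ended
```

On the robot, each call to the filler gesture would take a second or two, so the loop would typically run only a few times during the 4 to 5 s wait.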

Results

Our analyses distil four interrelated themes that threaded throughout participant responses. We separate them here for analytic clarity, while at times highlighting the porous boundaries and overlaps between them. Themes include participants’ attention to their own emotions, Pepper’s embodiment, interpersonal elements of the interaction, and recommendations for improving the human–robot encounter. In presenting themes, we use illustrative examples from the data, noting that the selected examples are backed by a critical mass of similar content. Where necessary, we lightly edited text to correct typos and grammar.

Emotions

We open the Results section with emotions because these loomed large throughout participant responses in ways that coloured the other thematic content. Participants expressed a variety of emotions when commenting on their interactions with Pepper, including interest, excitement, frustration, disappointment, and surprise. These emotions appear directly in the form of specific words, and also indirectly, through the context of participants’ comments. Contextual emotional expressions were discussed and categorized as part of the inductive coding process described under “Analytic Approach”.

Some participants expressed a single and summative emotion, for example, stating that the interaction was “fun” or “interesting”. Others expanded, indicating not just what they felt, but also why. For example:

Pepper was not reliable in understanding certain words I spoke, which was frustrating.

It was interesting to engage with Pepper, and I found myself exploring some ideas that I hadn’t expected to, with some interesting outcomes—the back and forth stimulated alternative ideas. I think the process of interrogation is facilitated by the humanoid presentation.

These more expansive statements revealed multiple meanings associated with a single emotion descriptor and gave a sense of the ways various emotions operate together. In the second excerpt, for instance, “interest” was an end in itself (the participant felt intrigued) but also prompted a feeling of surprise as the interaction proceeded in ways that the participant had not expected.

With the multiplicity of emotional expressions, there were at times contradictions within a single account. These contradictions highlight the complexities and tensions of the social encounter, as participants learn about, experience for the first time, and make sense of a new interactive form. For example, the participant below found the interaction at once “entertaining” and “fun”, but also implicitly disappointing due to limitations in Pepper’s technical capacities:

It was entertaining but not much different from talking with Google Nest AI. I thought that Pepper would be able to recognise my emotion through facial recognition but they couldn’t. It was a fun experience.

Figure 2 presents a visualization of participants’ emotional expressions, demonstrating both the range of emotions and their relative prevalence, including ambiguous terms such as “interesting” that qualify and mediate varied emotional responses.

Word cloud of emotion terms. This word cloud visualizes the emotional expressions used by participants, illustrating both the variety of emotions conveyed and their relative frequency. Larger words represent emotions mentioned more frequently, while smaller words indicate less commonly expressed emotions. This visualization provides insight into the dominant emotional themes present in participants’ responses, including both direct expressions and mediating terms that qualify longer emotive accounts (e.g., “interesting”) (https://www.freewordcloudgenerator.com).

Embodiment

Integrating conversational AI with a humanoid robot was the core target of exploration in this study. The material form of the robot was not missed by participants, who commented on Pepper’s embodiment, with mixed evaluations. Some found the interaction eased by Pepper’s form and movement, stating, for example, that "…The robot’s body language helped in between questions” or that “The eye contact [and] head movements made Pepper feel a lot more personal”. Others, however, were underwhelmed by Pepper’s physicality and apparent discordance between language processing and bodily movements.

There was no link between words said by the robot and its body language- to the point there was no point in there being a physical robot in the interaction at all, I should have just ‘talked’ with chatGPT. To continue this line of disappointment a little further, I asked the robot to shake my hand (it said it couldn’t), asked it to wave its left hand (again could not) I even strongly simplified my sentences and asked it to wave its arm and it still could not do that.

Note the emotional resonance in the above quote, in which disappointment and frustration follow, directly and indirectly, from Pepper’s embodied (in)capacities. By contrast, the embodied form seems to evoke positive affect and comfort among participants who commented favourably on Pepper’s body language and facial expressions.

Beyond evaluations, participants also actively anthropomorphized Pepper as an embodied interlocutor. One way they did so was with gendered pronouns. These were most often feminine, though masculine pronouns also appeared, alongside one gender-neutral plural in which the respondent referred to Pepper as “they”. The tendency to anthropomorphize through gender-specific pronouns, and feminine pronouns in particular, may reflect the introduction of Pepper by name (rather than as ‘the robot’) and culturally specific understandings of that name as gendered. However, the disproportionate feminization of Pepper through pronouns may also be a generalized function of feminized chatbots and other automated systems situated across home and commercial settings, such that new encounters with a robot default to feminized attributions34. Interrogating these gendering practices is beyond the scope of the present work but indicates an avenue for future research. Participants also commented on Pepper’s agentic choices and apparent needs, for instance lamenting that Pepper agreed to sing a song but then decided not to, and suggesting that Pepper “perhaps needs a holiday”.

Expectations about Interpersonal Interaction

Participants seemed to hold expectations for Pepper that resemble interpersonal interactions with other people. These expectations were wrapped up in both the robotic embodied form and the use of natural language processing. Although some participants commented that Pepper’s speech, movements, and facial expressions enriched the encounter, most commented on the ways the encounters fell short. Filtered through the lens of social psychology, we can categorize user dissatisfactions with the interpersonal encounter into two elements: role-taking and turn-taking. These represent norms about interpersonal connection and interaction rituals, respectively.

Role-taking

Role-taking is the process and practice of putting the self in the proverbial shoes of another or others. This is a hallmark of selfhood and lynchpin of community social life, foundational to social connection35,36. Role-taking involves both cognition and affect, perceiving what another is thinking and feeling (perspective taking) and being moved by another’s emotions (empathy)37. Role-taking is both an imperative and an expectation in social interaction. People are compelled to role-take with others in the course of interaction and expect others to role-take with them in return. Participants expressed dismay when they found Pepper difficult to read (i.e., it was hard to role-take with Pepper) and when Pepper seemed unable to understand them (i.e., Pepper was ineffective at role-taking with the participant). As demonstrated in the following excerpts, these comments related both to Pepper’s physicality and conversational programming.

…[It] would be nice if it also had facial expressions on Pepper.

…I realised that Pepper did not comment about her personal feelings.

…It did not at all seem to be getting any body language clues from me.

…Would be nice if she could perceive expressions and react accordingly.

Turn-taking

Social interactions are never entirely unique, but instead follow from shared rituals in which all parties collaborate to achieve a successful exchange38,39. A key element of social interaction is turn-taking between participants in a conversation40. During their sessions with Pepper, some participants found turn-taking to be inadequate. In particular, they felt that Pepper talked too much and invited too little, breaching the norms of polite conversation. For example:

Pepper was repetitive. We could not stop Pepper from talking. Pepper did not respond when I raised my hand or said excuse me. Pepper didn’t ask me any questions except ‘what can I help you with?’.

The robot talks too much. I can’t interrupt it or anything.

Pepper did not follow its own instructions. we tried to interrupt it, but it would not stop.

Like perceived deficiencies of role-taking, these missteps in Pepper’s interactional rituals seemed to draw not only complaints from participants but also a sense of frustration that Pepper would not uphold expected social decorum.

Participant recommendations

Participants were forthcoming with recommendations about how to improve and enhance Pepper as an interaction partner. This indicates participants’ understanding of Pepper (and humanoid chatbots in general) as novel and emergent, with potential to change and further develop. Participants made recommendations that were both technical and social. On a technical level, participants had suggestions about timing, the physical interface, and Pepper’s processing capacities. For example:

The response time of 4 secs made it hard to interact.

I think it might be better if Pepper can talk and process new information at the same time, and respond accordingly.

Why did you decide to use chatGPT as the basis for Pepper? Is there any alternatives? Because currently, Pepper refuses to answer many of the questions because of ChatGPT’s limitations. Is there a way to make her more human-like and personable?

I would suggest a caption stream in the screen when Pepper speaks.

In addition to these technical recommendations, participants focused on issues of inclusivity, commenting on accent and language, age, and an apparent default Whiteness.

Pepper had some issues when I addressed it in Spanish, though I suppose annunciation is important. This could however be something to look into in the future.

I think understanding different accents is important.

Elderly people might struggle with the short time within which to ask a question before Pepper starts processing.

I am Aboriginal and Torres Strait Islander and I found that Pepper couldn’t understand me. We also noticed that the discussion was more robotic rather than engaging. The breakdown of the kinship system was more a generic response rather than an Indigenous response, and it made me wonder if the programming was input by a Caucasian person.

This feedback from participants, which draws attention to (in)accessibility and relevant issues of social diversity and inclusion, was likely bolstered by the naturalistic setting in which our study was conducted, especially given the demographic heterogeneity of attendees at the festival. This kind of astute and valuable feedback thus serves as a reminder that “real world” studies in HRI provide not only ecological validity, but also a diversity of perspectives that may not materialize in the lab.

Discussion

The findings of the current study identified key perceptions and experiences of participants who interacted with a humanoid chatbot in a public setting. Results suggest that participants desire a human-like verbal exchange, including role- and turn-taking, and are frustrated when this does not happen, mirroring previous research7. We also found that embodiment of the chatbot was associated with additional expectations of human-like behaviours by Pepper, such as the ability to “read” emotions in facial expressions and body movement, and that the robot’s physical gestures would reflect the content of its speech. Participants also raised issues of accessibility and inclusion, illustrating the importance of “in the wild” research, garnering grounded insights for an emergent field. We summarize these findings and the insights they generate in Table 1, documenting the tensions, overlaps, and patterns that derived from participants’ first impressions of an LLM-embodied agent. The themes constitute issues of import for participants as members of diverse lay-publics, representing salient variables that should be attended to when considering the design and deployment of embodied agents.

Methods

Sample and setting

We collected data over a 10-day period in July 2023 during an innovation festival at a mid-sized university in Australia. The festival was free to attend and open to the public, attracting a broad and diverse cross-section of the community. The festival offered a range of exhibits, lectures, demos, and interactive events which attendees moved in and out of as suited their interests. This setting was ideal for an “in the wild” study of people’s first impressions of a novel robotic form, allowing for observation and data collection within a live environment oriented towards new technological encounters41. Under these conditions, we accessed a socially and contextually situated sample with the time and inclination to focus on and respond to their interaction with Pepper. See Table 2 for participant demographics.

Each encounter took place within a small transparent dome situated in an open area of festival exhibitions. The dome provided noise-dampening from the rest of the festival while remaining within the wider social setting (Fig. 3). This context was chosen because it provided a mechanism for recruiting members of the general public and supported a wide variety of social permutations, including interactions between the robot and individuals, family groups, and friends, all of which reflect how technology is used in real-world environments. Although the dome added an element of artificiality and separation, its thin and transparent material allowed both noise and visual stimulation to permeate the interaction. This sustained participants’ presence within the festival even as they were cordoned off for a conversation with Pepper. In this way, the practicalities of monitored interactions and data collection were balanced against our intellectual commitment to ecological validity in the study design.

Procedures

In the weeks preceding the festival, the research team posted flyers around the community inviting people to sign up to meet Pepper. Those interested could register online and reserve a 30-min timeslot. Participants could also walk up at the festival and interact with Pepper. Participating in the study was not a condition of engaging with Pepper, and data were collected only from participants who were over the age of 18 and opted in to data collection. Those who wanted to interact with Pepper but did not want to be part of the study were allowed to do so and had a similar experience to study participants, except that no data were collected.

Two research assistants staffed the Pepper exhibit. They greeted walk-up attendees, answered questions, and either facilitated an interaction with Pepper or scheduled an interaction for a later time during the festival. Those who wished to participate in the study filled out a consent form approved by the University Ethics Committee and registered their demographic information. This was all done via electronic tablet and stored on a secure drive.

Once participants finished filling out forms, they were brought inside the small transparent dome in which Pepper was situated along with two chairs. The research assistants greeted Pepper and made an introduction, before leaving the participants alone inside the dome with Pepper. Pepper then started the conversation by asking the participant about their experience at the festival. From there, the conversation proceeded organically and lasted for as long as the participant wished, or until the next time slot was scheduled. Interactions ranged from 3 to 41 min, with a median interaction length of 11 min. Once the interaction was complete, the research assistants gave participants the tablet once again and asked them to record any “thoughts, comments, or questions” through an open response prompt. These open responses, considered here as “first impressions”, are the core source of data in the present work.

Ethical considerations

The project was approved by the Human Research Ethics Committee of the University of Canberra (HREC—13352), and all methods were carried out according to relevant guidelines and regulations. All participants provided informed consent.

Our procedures were informed throughout by ethical considerations, with particular attention to inclusivity, meaningful consent, and the content generated by ChatGPT. The festival was open to the whole community. Many attendees came with family and friends. We allowed anyone who wished to interact with Pepper to do so, regardless of whether they wanted to be part of the study or were eligible for it. For instance, we invited children and families to participate together even though our Ethics approval for study participation and data collection applied only to those aged 18 years or older. We also sustained meaningful and ongoing consent. People who signed up for the study in advance or on site were given a full information sheet, signed their consent prior to participating, had opportunities to ask questions of the research team, and were allowed to rescind consent at any point without need for explanation. Finally, we were aware and cautious of the risks involved with generative AI. A defining trait of generative AI is that its responses to prompts are unpredictable, giving the technology a “natural” conversational character. Media accounts are rife with examples of ChatGPT veering into offensive, aggressive, or otherwise unacceptable speech-acts. To avoid this, we placed careful restrictions on tone and language and pre-tested extensively to ensure a respectful and safe interaction for festival attendees, as described in the section on technical implementation.

Analytic approach

Our approach to data collection was intentionally broad and open, asking participants for their “thoughts, comments, and questions”. This created space for participants to express their first impressions of Pepper and of the interaction in their own terms, rather than fitting their impressions to a predetermined structure defined by the research team. As such, our analytic approach followed an inductive method, distilling themes from the ground up. Thematic analysis of this sort focuses on identifying general dimensions or focal points in participant responses, drawing out the elements that participants found most relevant42,43. Recognizing that inductive analyses are necessarily subjective and partial, we nonetheless worked to achieve “trustworthiness” through a rigorous process of thematic development42,43,44. Specifically, three members of the research team (DH, JB, JD) read full responses from participants and individually constructed initial themes that reflected patterns in the data. The team came together to identify and discuss points of overlap and disagreement, merging and refining themes as they related to example excerpts from participant responses. We continued this process iteratively until all data fit into one or more of the existing themes. Themes were fluid, interconnected, and had porous boundaries between them. As such, single excerpts could be categorized under more than one theme, and elements of a single excerpt often spanned multiple thematic categories.

Given that the theme of emotions emerged strongly, the research team also searched the text input to identify both specific emotional terms (e.g., surprising) and longer comments that reflected emotional states (see quotes above). We convey these results visually through a word cloud and analyse them textually in the thematic section. Our analysis of emotion was grounded in a sociology of emotions approach, based on Thoits’ definition of emotion as a construct composed of four interrelated parts: (a) appraisals of a situational stimulus or context, (b) changes in physiological or bodily sensations, (c) the free or inhibited display of expressive gestures, and (d) cultural labels applied to specific constellations of one or more of the first three components45. Because our data consisted of written responses, we could observe only the emotive labels (component d) that participants applied to their experiences. We documented these labels and contextualized them through a holistic analysis of how each label fit within the broader response in which it appeared.
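The search for emotional terms can be approximated with a simple lexicon count, whose tallies could also serve as word-cloud weights. This is a hypothetical sketch: `EMOTION_TERMS` is an illustrative placeholder list (only “surprising” comes from the text), and the tokenizer is a basic regular expression rather than whatever procedure the team used. Consistent with the Thoits framework, the counts capture only the labels participants wrote; the helper `responses_with` returns the full responses containing a term so that each label can be read in context.

```python
import re
from collections import Counter

# Illustrative placeholder lexicon; only "surprising" appears in the text.
# The study's actual list of emotional terms is not reproduced here.
EMOTION_TERMS = {"surprising", "surprised", "fun", "impressed", "uncomfortable"}


def emotion_label_counts(responses, lexicon=EMOTION_TERMS):
    """Tally lexicon terms across free-text responses (usable as word-cloud weights)."""
    counts = Counter()
    for text in responses:
        # Lowercase and split into alphabetic tokens (apostrophes kept).
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(tok for tok in tokens if tok in lexicon)
    return counts


def responses_with(responses, term):
    """Full responses containing a whole-word match for term, for reading in context."""
    pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
    return [text for text in responses if pattern.search(text)]
```

Matching here is exact, so inflections such as “surprised” and “surprising” are tallied separately; merging them (for example, by lemmatization) would be a further analytic choice.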

Data availability

Anonymised data are available from the corresponding author upon request (damith.herath@canberra.edu.au).

References

Asch, S. E. Forming impressions of personality. J. Abnorm. Soc. Psychol. 41, 258–290. https://doi.org/10.1037/h0055756 (1946).

Kelley, H. H. The warm-cold variable in first impressions of persons. J. Pers. 18, 431–439 (1950).

Ambady, N. & Skowronski, J. J. First Impressions. (Guilford Press, 2008).

Swider, B. W., Harris, T. B. & Gong, Q. First impression effects in organizational psychology. J. Appl. Psychol. 107, 346–369. https://doi.org/10.1037/apl0000921 (2022).

Ornstein, S. First impressions of the symbolic meanings connoted by reception area design. Environ. Behav. 24, 85–110. https://doi.org/10.1177/00139165922410 (1992).

Xia, H., Pan, X., Zhou, Y. & Zhang, Z. J. Creating the best first impression: Designing online product photos to increase sales. Decis. Support Syst. 131, 113235. https://doi.org/10.1016/j.dss.2019.113235 (2020).

Rosén, J., Billing, E. & Lindblom, J. Applying the social robot expectation gap evaluation framework. in International Conference on Human-Computer Interaction 169–188 (Springer).

Paetzel, M., Perugia, G. & Castellano, G. The persistence of first impressions: The effect of repeated interactions on the perception of a social robot. in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction. 73–82.

Ray, P. P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things Cyber-Phys. Syst. 3, 121–154. https://doi.org/10.1016/j.iotcps.2023.04.003 (2023).

Pandey, A. K. & Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 25, 40–48. https://doi.org/10.1109/MRA.2018.2833157 (2018).

Vianello, L. et al. Human–humanoid interaction and cooperation: A review. Curr. Robot. Rep. 2, 441–454 (2021).

Teubner, T., Flath, C. M., Weinhardt, C., van der Aalst, W. & Hinz, O. Welcome to the era of ChatGPT et al.: The prospects of large language models. Bus. Inf. Syst. Eng. 65, 95–101 (2023).

Wei, J. et al. Emergent abilities of large language models. arXiv preprint arXiv:2206.07682 (2022).

Brohan, A. et al. Do as I can, not as I say: Grounding language in robotic affordances. in Conference on Robot Learning. 287–318 (PMLR).

Chen, W., Hu, S., Talak, R. & Carlone, L. Leveraging large language models for robot 3D scene understanding. arXiv preprint arXiv:2209.05629 (2022).

Singh, I. et al. ProgPrompt: Generating situated robot task plans using large language models. in 2023 IEEE International Conference on Robotics and Automation (ICRA). 11523–11530 (IEEE).

Joublin, F. et al. CoPAL: Corrective planning of robot actions with large language models. arXiv preprint arXiv:2310.07263 (2023).

Kumar, K. N., Essa, I. & Ha, S. Words into action: Learning diverse humanoid robot behaviors using language guided iterative motion refinement. arXiv preprint arXiv:2310.06226 (2023).

Wu, J. et al. TidyBot: Personalized robot assistance with large language models. arXiv preprint arXiv:2305.05658 (2023).

Rosén, J., Lindblom, J., Lamb, M. & Billing, E. Previous experience matters: An in-person investigation of expectations in human-robot interaction. Int. J. Soc. Robot. 16, 447–460. https://doi.org/10.1007/s12369-024-01107-3 (2024).

Jiang, C., Dehghan, M. & Jagersand, M. Understanding contexts inside robot and human manipulation tasks through vision-language model and ontology system in video streams. in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 8366–8372.

Chen, C. W., Chuang, Y. H., Yang, C. H. & Lee, M. F. R. Multimodal robotic manipulation learning. in 2024 International Automatic Control Conference (CACS). 1–4.

Billing, E., Rosén, J. & Lamb, M. Language models for human-robot interaction. in ACM/IEEE International Conference on Human-Robot Interaction, March 13–16, 2023, Stockholm, Sweden. 905–906 (ACM Digital Library).

Sabanovic, S., Michalowski, M. P. & Simmons, R. Robots in the wild: observing human-robot social interaction outside the lab. in 9th IEEE International Workshop on Advanced Motion Control, 2006. 596–601.

Jung, M. & Hinds, P. Robots in the wild: A time for more robust theories of human-robot interaction. ACM Trans. Hum. Robot Interact. (THRI) 7, 1–5 (2018).

Simone, G. D., Saggese, A. & Vento, M. Empowering human interaction: A socially assistive robot for support in trade shows. in 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). 901–908.

Aaltonen, I., Arvola, A., Heikkilä, P. & Lammi, H. in Proceedings of the companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction 53–54 (Association for Computing Machinery, Vienna, Austria, 2017).

Steinhaeusser, S. C., Lein, M., Donnermann, M. & Lugrin, B. Designing social robots’ speech in the hotel context—A series of online studies. in 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). 163–170.

Herath, D. C., Binks, N. & Grant, J. B. To Embody or not: A cross human-robot and human-computer interaction (HRI/HCI) study on the efficacy of physical embodiment. in 2020 16th International Conference on Control, Automation, Robotics and Vision (ICARCV). 848–853.

Albayram, Y. et al. in Proceedings of the 8th International Conference on Human-Agent Interaction 6–14 (Association for Computing Machinery, Virtual Event, USA, 2020).

Tolmeijer, S. et al. in Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction 3–12 (Association for Computing Machinery, Cambridge, United Kingdom, 2020).

Relan, K. in Building REST APIs with Flask: Create Python Web Services with MySQL 1–26 (Apress, 2019).

Pot, E., Monceaux, J., Gelin, R. & Maisonnier, B. Choregraphe: A graphical tool for humanoid robot programming. in RO-MAN 2009—The 18th IEEE International Symposium on Robot and Human Interactive Communication. 46–51.

Strengers, Y. & Kennedy, J. The Smart Wife: Why Siri, Alexa, and Other Smart Home Devices Need a Feminist Reboot. (MIT Press, 2021).

Mead, G. H. Mind, Self, and Society. (University of Chicago Press, 1934).

Miller, D. L. The meaning of role-taking. Symb. Interact. 4, 167–175 (1981).

Davis, J. L. & Love, T. P. in Advances in Group Processes 151–174 (Emerald Publishing Limited, 2017).

Goffman, E. The Presentation of Self in Everyday Life. (Doubleday, 1959).

Collins, R. Interaction Ritual Chains. (Princeton University Press, 2004).

Wiemann, J. M. & Knapp, M. L. in Communication Theory (ed C. David Mortensen) 226–245 (Transaction Publishers, 2017).

Hansen, A. K., Nilsson, J., Jochum, E. A. & Herath, D. On the importance of posture and the interaction environment: Exploring agency, animacy and presence in the lab vs wild using mixed-methods. in Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction. 227–229.

Guest, G., MacQueen, K. M. & Namey, E. E. Applied Thematic Analysis. (Sage, 2011).

Clarke, V., Braun, V. & Hayfield, N. Thematic analysis. Qual. Psychol. Pract. Guide Res. Methods 3, 222–248 (2015).

Guba, E. G. Criteria for assessing the trustworthiness of naturalistic inquiries. Educ. Tech. Res. Dev. 29, 75–91 (1981).

Thoits, P. A. The sociology of emotions. Annu. Rev. Sociol. 15, 317–342 (1989).

Funding

This study was supported by funding from the Australian Capital Territory Government. The funding source had no role in the study design, data collection, data analysis, data interpretation, writing of the manuscript or its submission for publication.

Author information

Contributions

DH, JB, and JLD conceived and designed the study and drafted the manuscript. DH served as the principal investigator and supervised the study. AR developed the core software components used in the study and contributed to the Technical Implementation section of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Herath, D., Busby Grant, J., Rodriguez, A. et al. First impressions of a humanoid social robot with natural language capabilities. Sci Rep 15, 19715 (2025). https://doi.org/10.1038/s41598-025-04274-z

DOI: https://doi.org/10.1038/s41598-025-04274-z